1. Introduction

Autonomous mobility is undergoing development across various platforms, catering to specific use cases that enhance user convenience and operational efficiency. These platforms encompass domains such as smart factories, smart farms, automobiles, and healthcare facilities. Within the realm of autonomous mobility, sensors, including cameras, lidars, and radars, play a pivotal role in obstacle detection, path tracking, and environmental perception. Additionally, the strategic placement of these sensors is a crucial consideration for effective autonomous operation. However, the diversification of autonomous mobility platforms introduces heightened complexity and uncertainty in mathematical modeling. The implications of such uncertainty can exert detrimental effects on control mechanisms. Hence, the development of perception, decision, and control techniques that account for disturbances and uncertainties is of paramount significance.

Zhang et al. used camera-based lane detection and lateral error calculation to propose a path-tracking algorithm for intelligent electric vehicles. The algorithm combined a linear quadratic regulator based on error dynamics with sliding mode control [1]. Muthalagu et al. presented an algorithm that uses camera data to detect straight lines, curves, and steep lanes through a combination of edge detection, polynomial regression, perspective transformation, and histogram analysis [2]. Jiao et al. introduced a lane detection approach that incorporated a multi-lane following Kalman filter. Their algorithm centered on lane voting vanishing points derived from camera-based original images for simplified grid-based noise filtering [3]. Miyamoto et al. devised a road-following technique that combines image processing with semantic segmentation. The practicality of this method was confirmed through a 500 m test drive employing three webcams and a robot [4]. De Morais et al. outlined a hybrid control architecture merging deep reinforcement learning with a robust linear quadratic regulator. This architecture used RGB information from camera images to keep an autonomous vehicle at the lane center, even amid uncertainties and disturbances [5]. Khan et al. proposed an efficient convolutional neural network approach with few parameters and a lightweight structural model, which can be implemented on embedded devices for accurate lane detection using camera images [6].

Recent research on human-following mobile robots has focused on object tracking algorithms using techniques such as histogram of oriented gradients, support vector machines, color histogram comparison [7], and correlation filters using RGB and pixel data [8]. Path tracking for mobile robots is also being investigated, both with sliding mode control, which is robust to the uncertainties and disturbances inherent in mathematical models and parameters [9,10], and with model predictive control, which reflects the physical characteristics of the robot [11,12]. Additionally, some studies are exploring path-following strategies for mobile robots that use GPS and camera sensors [13,14].

The particle filter, which is suitable for nonlinear and non-Gaussian filtering, is applied across diverse signal processing fields and mathematical models. It is widely used for camera-based visual tracking and localization. Camera-based visual tracking serves applications ranging from automated surveillance to object tracking and medical care. Because the surrounding environment changes continuously as an object moves, studies are being conducted on object tracking that remains robust to changes in lighting, background clutter, and scale [15,16,17,18]. In autonomous driving and vehicle navigation, researchers are refining location estimation for autonomous vehicles and robots through particle filter-based approaches. The aim is accurate localization in indoor environments, mitigating the uncertainties associated with GPS sensors [19,20,21]. For path-following autonomous vehicles and robots, the performance of a controller based on a mathematical model may degrade because of uncertainties in parameters and models, as well as disturbances in various driving situations. Studies are currently being conducted to improve path-tracking control performance by compensating for these uncertainties and disturbances using neural networks and deep reinforcement learning [22,23,24,25,26], as well as adaptive rules [27,28].

Previous research has explored lane and object detection using pixel and RGB data from camera images, particle filters, and image processing techniques. Ongoing investigations have also produced robust path-tracking control algorithms based on artificial intelligence and adaptive principles, designed to compensate for uncertainties and disturbances inherent in mathematical models and parameters. This body of work has led to diverse forms of autonomous mobility tailored to distinct use cases, such as human following and path tracking, to enhance worker safety and operational efficiency. However, path-tracking algorithms based on artificial intelligence require extensive training on reliable and sufficient data that represent normal path-tracking conditions. Additionally, because the results are derived from learned data, it is difficult to determine and quantify path misjudgment, especially when obstacles are present in the path.

Therefore, the present study proposes an adaptive steering control algorithm for autonomous mobility systems. The central objective is to enhance worker convenience and operational efficiency by tracking color-coded paths in initial infrastructure settings, such as smart farms and factories, with adaptive control algorithms that do not depend on the parameters of complex mathematical models. Additionally, using a particle filter to detect the target path makes it feasible to develop a functional safety algorithm that identifies path misjudgment in real time using a distance index. The control errors used for autonomous path tracking were derived through a multi-particle filter-based approach using camera RGB data. The adaptive steering control algorithm uses the control error together with the coefficients of simplified error dynamics estimated via recursive least squares (RLS). The cost function incorporates the sliding mode approach and weighted injection while considering the control error. The weighted injection ensures swift convergence of control errors to zero, as it adjusts the injection magnitude based on the control error magnitude while also satisfying the finite-time convergence condition. To evaluate the performance of the adaptive steering control algorithm, tests were conducted on S-curved and elliptical paths using autonomous mobility with a single steering and driving module.

The remainder of this paper is structured as follows. Section 2 introduces the derivation of control errors through a multi-particle filter and describes the adaptive steering control algorithm. Section 3 discusses the performance of the proposed algorithm in tests conducted in working environments using the developed autonomous mobility. Finally, Section 4 and Section 5 present the discussion, the conclusions, and future avenues of research.

2. Adaptive Path Tracking Algorithm of Autonomous Mobility

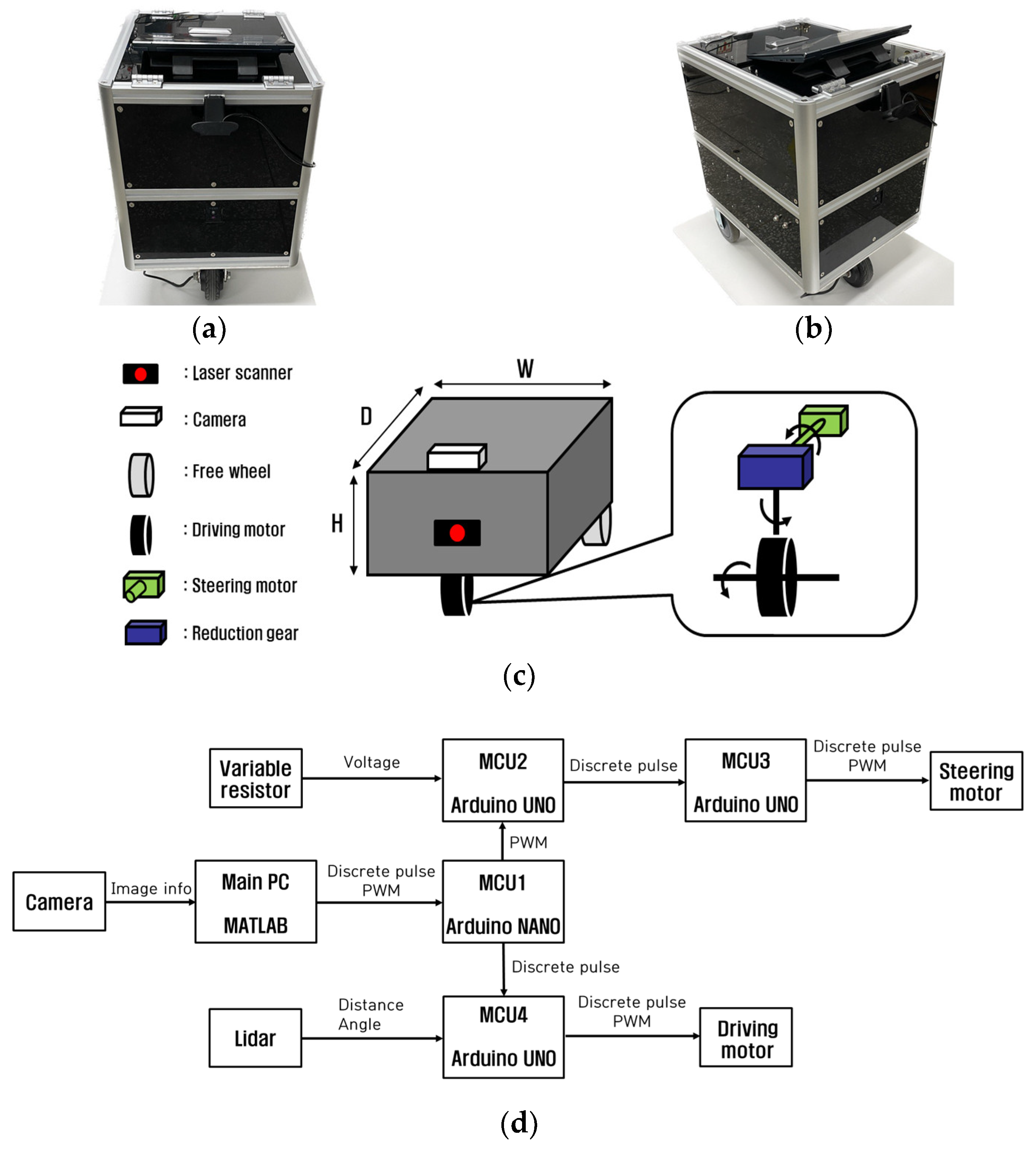

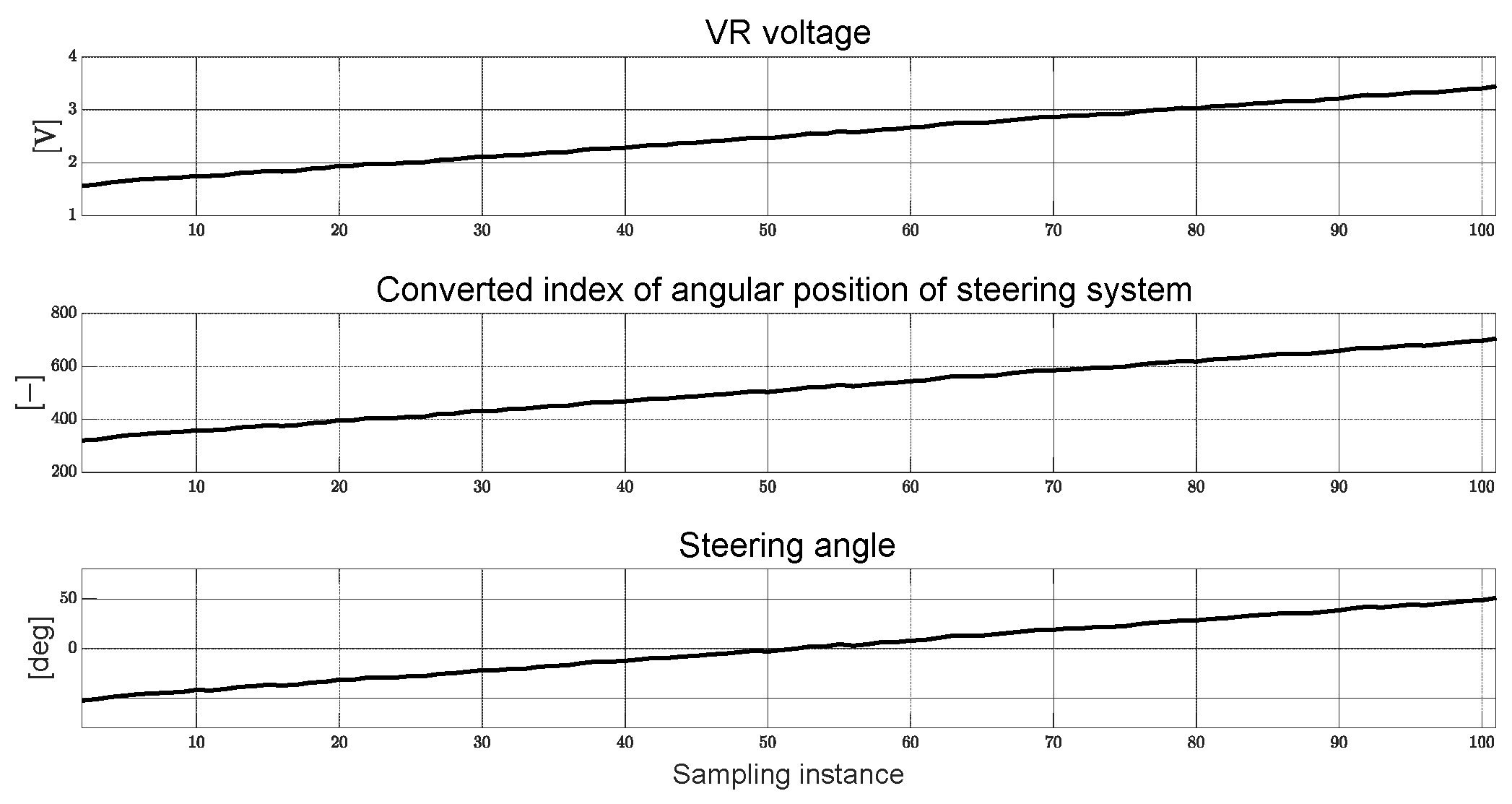

Figure 1 illustrates the overall model schematic of the autonomous mobility path tracking algorithm. In the perception section, control errors and RGB errors were calculated using a camera based on a multi-particle filter. The current steering angle was measured using a variable resistor (VR), while distance and angle data were measured using lidar to detect obstacles in the surrounding environment. In the decision section, the steering angle error was calculated using the control errors obtained from the multi-particle filter and the target steering angle derived from the adaptive steering control algorithm. Moreover, path recognition and obstacle detection were determined based on the RGB errors and the lidar-measured distance and angle data. In the control section, a pulse signal was generated to set the rotation direction of the steering motor based on the steering angle error, and a PWM signal was generated to drive the steering angle error to zero. These signals were then applied to the steering motor. The driving motor was controlled by a driving signal that passed through a functional safety algorithm for driving. A constant PWM signal was applied so that the mobility traveled at a constant velocity.

Section 2.1 describes a method for deriving control errors using a camera based on a multi-particle filter. Section 2.2 describes an adaptive steering control algorithm designed with a sliding mode approach and weighted injection, using the control errors and the coefficients of simplified error dynamics estimated by RLS.

2.1. Multi-Particle Filter-Based Control Errors Derivation

The particle filter converges towards the RGB value that best matches the camera image by iteratively executing particle update, likelihood calculation, and resampling steps. Equation (1) outlines the procedure for updating the generated particles. It predicts the current particles’ position and speed based on the preceding state value.

In Equation (1), the current particles’ positions and velocities are forecast by adding the system noise sequence to the discrete-time particles from the previous step. This noise sequence is the product of the standard deviation of position and velocity and the disturbance [29].
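The prediction step described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes a constant-velocity motion model, a particle state of `(x, y, vx, vy)` in pixel units, and zero-mean unit-variance Gaussian disturbances. The function name `predict_particles` and the parameters `dt`, `sigma_pos`, and `sigma_vel` are hypothetical.

```python
import random


def predict_particles(particles, dt, sigma_pos, sigma_vel, rng):
    """Propagate each particle one step and add system noise.

    Assumption: constant-velocity model; the noise on each component is
    its standard deviation multiplied by a unit-variance disturbance.
    """
    predicted = []
    for x, y, vx, vy in particles:
        noise_x = sigma_pos * rng.gauss(0.0, 1.0)
        noise_y = sigma_pos * rng.gauss(0.0, 1.0)
        noise_vx = sigma_vel * rng.gauss(0.0, 1.0)
        noise_vy = sigma_vel * rng.gauss(0.0, 1.0)
        predicted.append(
            (x + vx * dt + noise_x, y + vy * dt + noise_y,
             vx + noise_vx, vy + noise_vy)
        )
    return predicted


# Hypothetical usage: five identical particles spread out after one step.
rng = random.Random(0)
particles = [(100.0, 50.0, 1.0, 0.0)] * 5
moved = predict_particles(particles, dt=1.0, sigma_pos=2.0, sigma_vel=0.5, rng=rng)
```

Setting both standard deviations to zero reduces the step to a pure deterministic shift, which is a convenient way to check the motion model in isolation.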

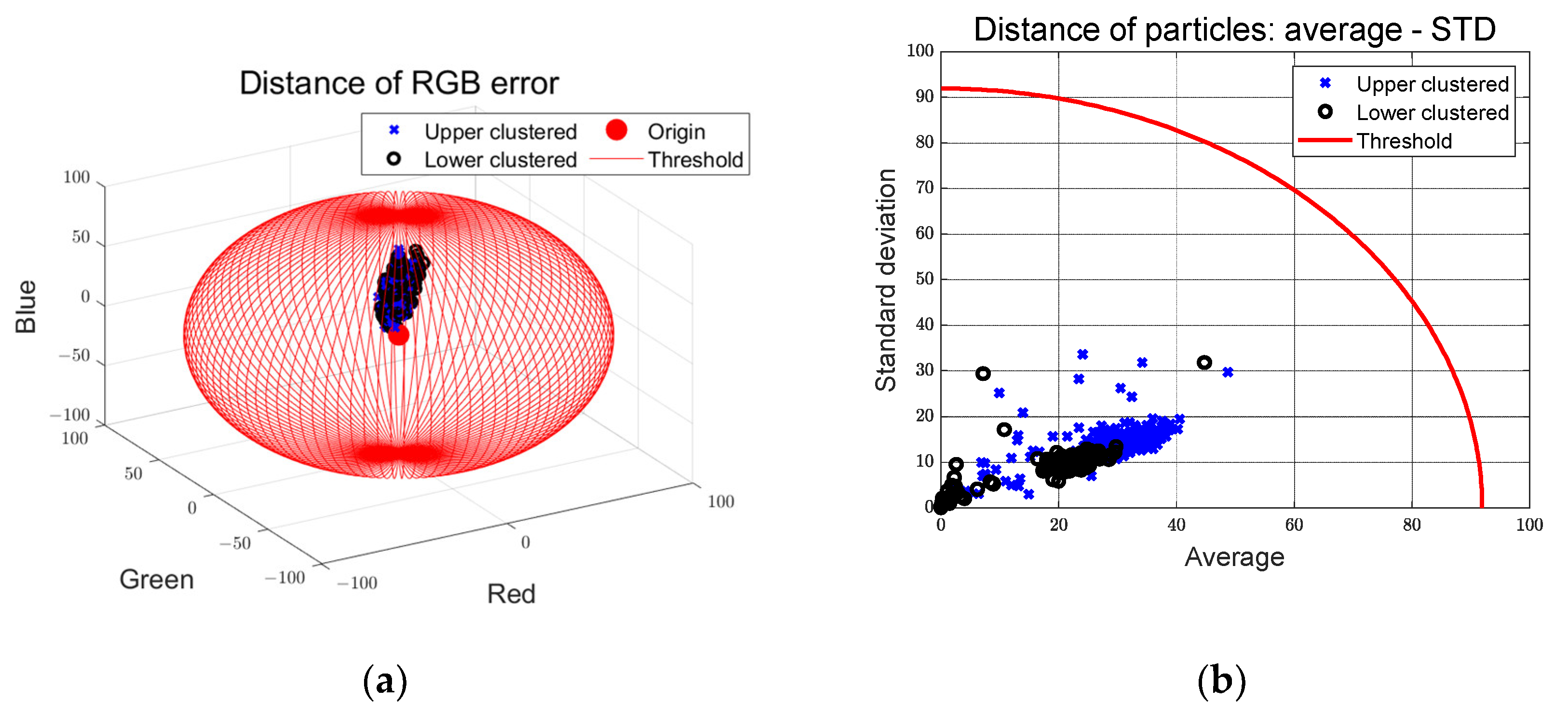

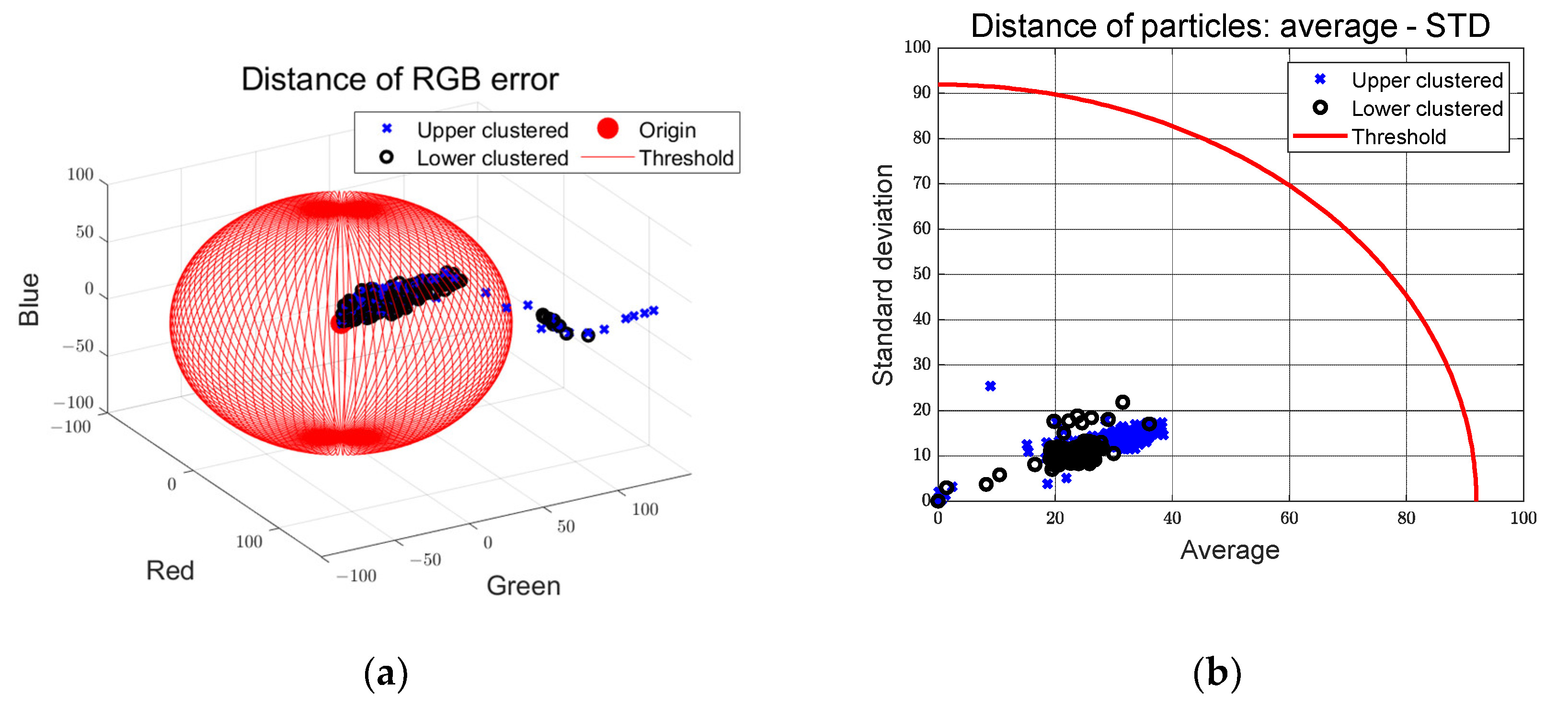

As shown in Equations (2) and (3), the likelihood is calculated from the error with respect to the target RGB value and the RGB standard deviation, as part of a multinomial resampling scheme. Through the weight calculation in Equation (4), particles with low weight are replaced by particles with high weight. This determines the predicted location that most closely corresponds to the target RGB, as given in Equation (5). Repeating the particle update, likelihood calculation, weight update, and resampling steps progressively refines the predicted location.
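The likelihood, weighting, and resampling steps can be illustrated with a short sketch. It assumes a Gaussian likelihood on the squared RGB error and uses a weighted mean as the predicted location; both are common choices rather than forms confirmed by Equations (2)–(5). All function names and the sample data are hypothetical.

```python
import math
import random


def rgb_likelihood(pixel_rgb, target_rgb, sigma_rgb):
    """Gaussian likelihood of a sampled pixel colour given the target colour."""
    squared_error = sum((p - t) ** 2 for p, t in zip(pixel_rgb, target_rgb))
    return math.exp(-squared_error / (2.0 * sigma_rgb ** 2))


def normalize(weights):
    """Scale weights to sum to one; fall back to uniform if all are zero."""
    total = sum(weights)
    if total == 0.0:
        return [1.0 / len(weights)] * len(weights)
    return [w / total for w in weights]


def multinomial_resample(particles, weights, rng):
    """Draw len(particles) particles with probability proportional to weight."""
    return rng.choices(particles, weights=weights, k=len(particles))


def weighted_mean(particles, weights):
    """Weighted mean (x, y) position, used here as the predicted location."""
    x = sum(w * p[0] for p, w in zip(particles, weights))
    y = sum(w * p[1] for p, w in zip(particles, weights))
    return x, y


# Hypothetical data: particle positions and the colour sampled under each one.
target_rgb = (255, 0, 0)
particles = [(10.0, 5.0), (12.0, 5.0), (40.0, 5.0)]
sampled_rgb = [(250, 5, 5), (240, 10, 0), (30, 30, 30)]

weights = normalize([rgb_likelihood(c, target_rgb, 20.0) for c in sampled_rgb])
estimate = weighted_mean(particles, weights)
resampled = multinomial_resample(particles, weights, random.Random(0))
```

In this example the third particle sits on a dark pixel far from the target colour, so its weight is negligible and resampling replaces it with copies of the two well-matched particles.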

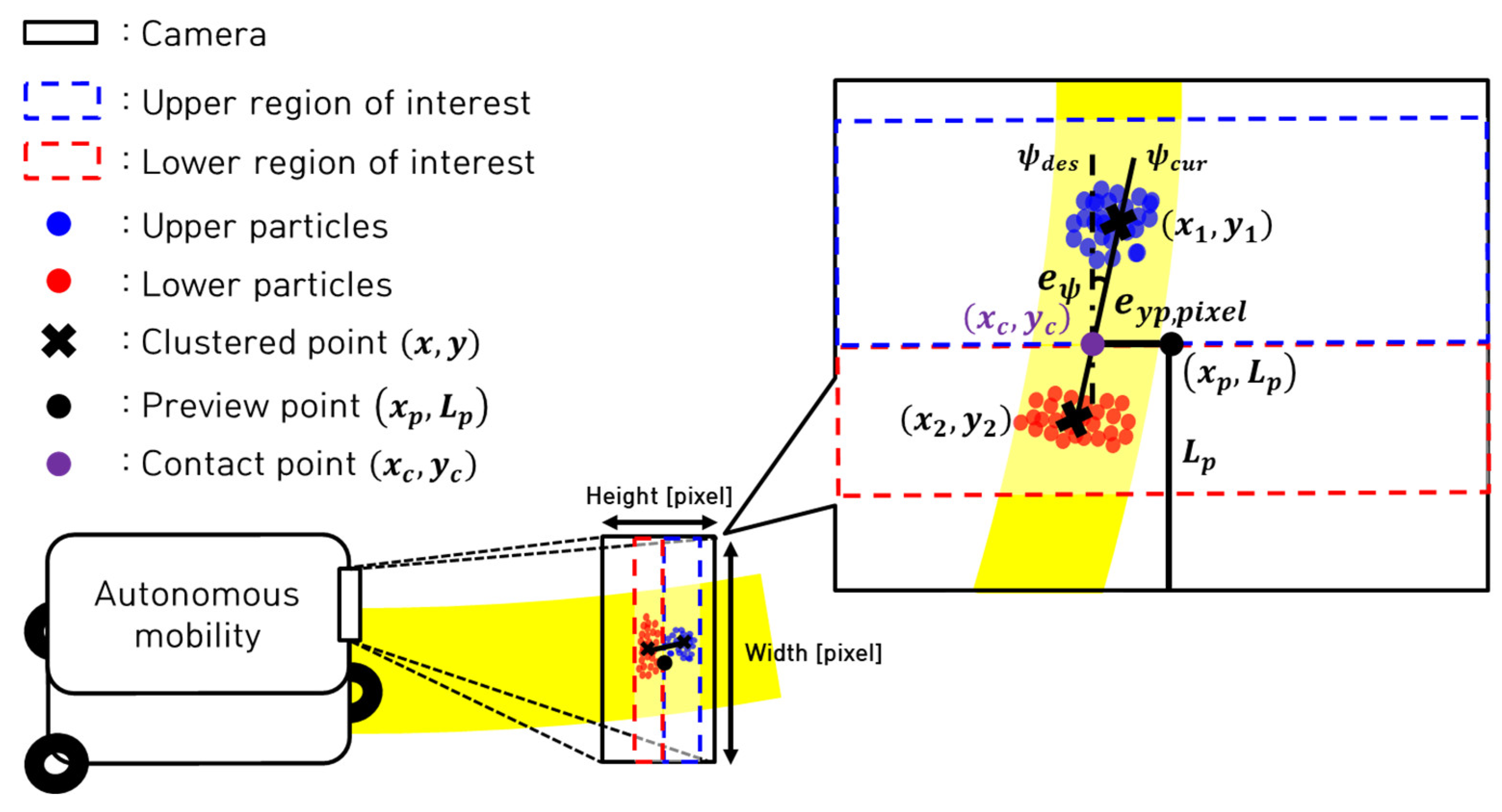

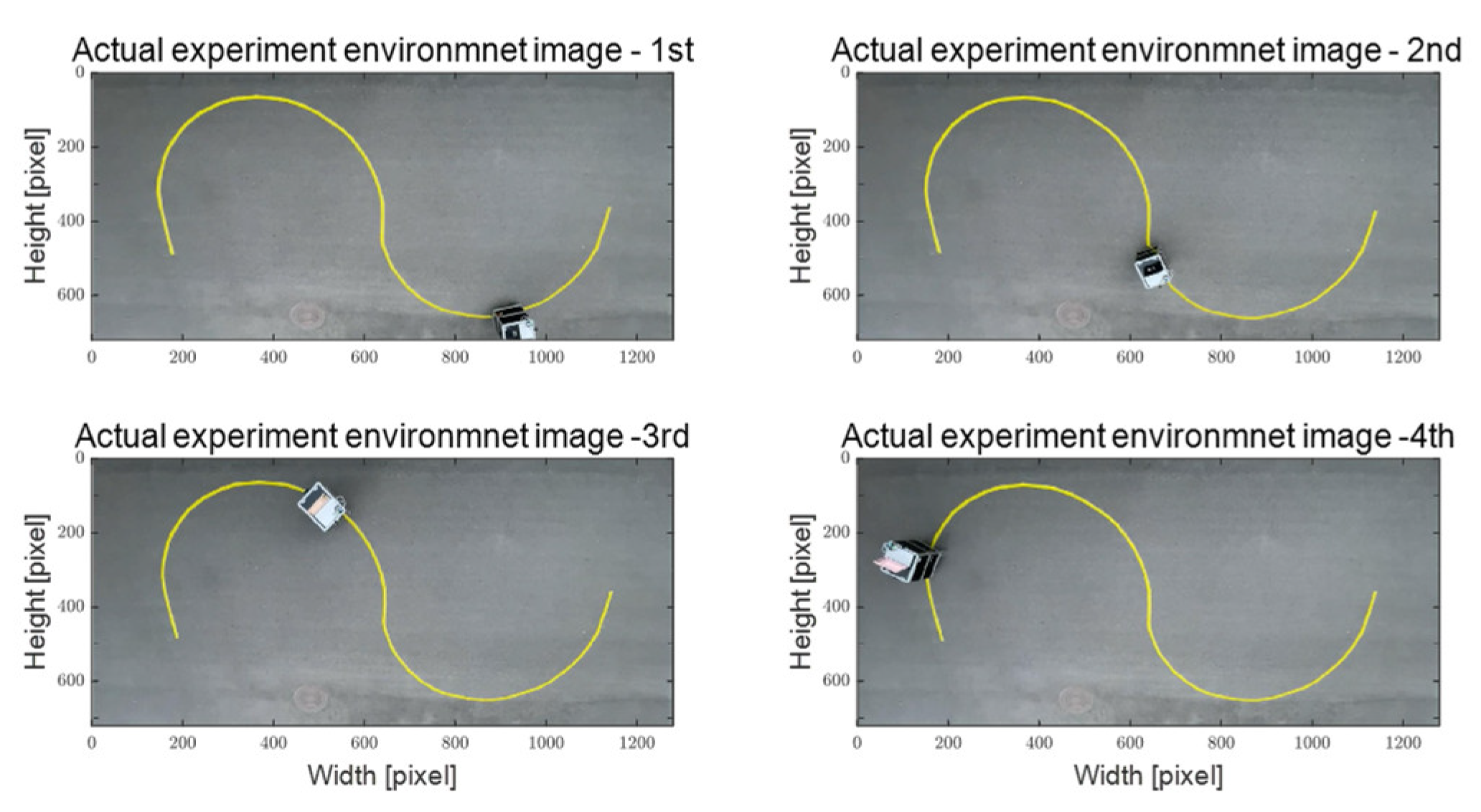

Figure 2 presents a schematic illustration of the control error derivation using a multi-particle filter.

Particles are generated within two regions of interest (ROIs), denoted upper and lower. Clustering these particles yields two cluster points at the locations most similar to the target path RGB. Using the straight line through the two cluster points and the contact point on that line perpendicular to the preview point, the pixel-based lateral preview error and the current yaw angle error are derived (Equations (6)–(8)).

The preview point is placed at the midpoint of the camera image width, and the preview distance and ROI range are predetermined design parameters. To accommodate camera distortion and perspective, the upper and lower ROIs are set at a 2:1 ratio. The desired yaw angle, determined experimentally with the multi-particle filter on a straight path, is close to zero: the derived target value is approximately −0.04 rad.
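The geometry of this derivation can be sketched in a few lines. The sketch assumes image coordinates with x to the right and y downward, takes the contact point to be the foot of the perpendicular from the preview point onto the line through the two cluster points, and measures yaw relative to the image's vertical axis. The sign conventions and the function name `control_errors` are assumptions, not taken from Equations (6)–(8).

```python
import math


def control_errors(upper, lower, preview, desired_yaw=0.0):
    """Return (lateral preview error [px], yaw angle error [rad], contact point).

    upper, lower: cluster points (x, y) from the upper and lower ROIs.
    preview: preview point (x, y) at the image width midpoint.
    """
    (xu, yu), (xl, yl), (xp, yp) = upper, lower, preview
    dx, dy = xu - xl, yu - yl
    length = math.hypot(dx, dy)
    if length == 0.0:
        raise ValueError("cluster points coincide; path direction undefined")
    # Foot of the perpendicular from the preview point onto the path line.
    t = ((xp - xl) * dx + (yp - yl) * dy) / length ** 2
    contact = (xl + t * dx, yl + t * dy)
    # Signed perpendicular distance; positive when the preview point lies
    # to the right of the path in the image (assumed convention).
    lateral_error = (dx * (yp - yl) - dy * (xp - xl)) / length
    # Path heading relative to the image's upward direction (-y).
    yaw = math.atan2(dx, -dy)
    return lateral_error, yaw - desired_yaw, contact


# Hypothetical example: a vertical path 20 px to the left of the preview point.
lateral, yaw_error, contact = control_errors(
    upper=(300, 200), lower=(300, 400), preview=(320, 300)
)
```

For this vertical path the yaw error is zero and the lateral error equals the horizontal offset of 20 px, which matches the intuition that only a lateral correction is needed.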

2.2. Adaptive Steering Control Algorithm

Figure 3 presents the schematic model of the proposed adaptive steering control algorithm. Multi-particle filter-based control errors are derived using the RGB data from the camera image. The coefficients of the simplified error dynamics are estimated by RLS from the control errors and their rates of change, the latter obtained through a Kalman filter. The adaptive steering control algorithm, designed with the sliding mode approach and weighted injection, determines the desired steering angle using both the estimated coefficients and the control error.

Equation (9) encapsulates the integrated error achieved by assigning weights to the lateral preview and yaw angle errors, both of which stem from the multi-particle filter. Building upon this foundation, a simplified error dynamics is formulated, as depicted in Equation (10).

To estimate the coefficients of the error dynamics in Equation (10), RLS with multiple forgetting factors is employed. This method defines the output, regressor, and estimate, as outlined in Equations (11) and (12).

Equation (13) presents a cost function for RLS with multiple forgetting factors, each of which ranges from zero to one. As a forgetting factor approaches one, past data are given greater weight, so the parameter estimates change more gradually.

Using the regressor in Equation (12), the coefficients of the simplified error dynamics that minimize the RLS cost function in Equation (13) are computed as in Equation (14) [30].

In Equation (14), the estimation gain and the covariance, which remains positive, are updated at each sampling instant, as outlined in Equation (15).
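The gain, estimate, and covariance updates can be illustrated with a standard RLS recursion. Note the simplification: this sketch uses a single forgetting factor shared by all parameters, whereas the paper uses multiple forgetting factors, and the regressor contents below are placeholders rather than the signals of Equations (11) and (12). The class name and its parameters are hypothetical.

```python
import math


class RecursiveLeastSquares:
    """Standard RLS with one forgetting factor (simplified illustration)."""

    def __init__(self, n_params, forgetting=0.98, initial_covariance=1000.0):
        self.forgetting = forgetting
        self.theta = [0.0] * n_params
        self.P = [
            [initial_covariance if i == j else 0.0 for j in range(n_params)]
            for i in range(n_params)
        ]

    def update(self, phi, y):
        """Update the estimate from regressor phi and measured output y."""
        n = len(phi)
        P_phi = [sum(self.P[i][j] * phi[j] for j in range(n)) for i in range(n)]
        denom = self.forgetting + sum(phi[i] * P_phi[i] for i in range(n))
        gain = [value / denom for value in P_phi]
        error = y - sum(phi[i] * self.theta[i] for i in range(n))
        self.theta = [self.theta[i] + gain[i] * error for i in range(n)]
        # P is symmetric, so phi^T P equals (P phi)^T.
        self.P = [
            [(self.P[i][j] - gain[i] * P_phi[j]) / self.forgetting
             for j in range(n)]
            for i in range(n)
        ]
        return self.theta


# Hypothetical noise-free example: recover two constant coefficients.
true_theta = [2.0, -0.5]
rls = RecursiveLeastSquares(n_params=2, forgetting=0.98)
for k in range(200):
    phi = [math.sin(0.1 * k), math.cos(0.3 * k)]
    y = sum(p * t for p, t in zip(phi, true_theta))
    estimate = rls.update(phi, y)
```

The initial covariance plays the role of the initial value setting that the discussion section describes as sensitive: a large value lets the estimate move quickly at start-up, at the cost of larger early transients when the data are noisy.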

To assess the stability of the control algorithm and design the control input, a Lyapunov candidate function based on the integrated error is introduced, as shown in Equation (16). Equation (17) gives two conditions on the Lyapunov candidate function and its derivative that ensure the integrated error converges to zero within a finite time.
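For orientation, a common textbook form of such a candidate function and finite-time reaching condition in sliding mode design is sketched below. The symbols $s$ (integrated error) and $\eta$ (a positive reaching constant) are assumptions for illustration; Equations (16) and (17) may use different notation or a different condition.

```latex
V = \tfrac{1}{2}s^{2}, \qquad
\dot{V} = s\,\dot{s} \le -\eta\,|s|, \quad \eta > 0
\;\;\Longrightarrow\;\;
|s(t)| \le |s(0)| - \eta\,t, \qquad
t_{\mathrm{reach}} \le \frac{|s(0)|}{\eta}.
```

Under this condition the magnitude of $s$ decreases at a rate of at least $\eta$ until it reaches zero, so a larger reaching constant shortens the bound on the convergence time.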

To determine the magnitude of the injection term required for the integrated error to converge to zero within a finite time, the rate of change of the integrated error, defined in Equation (10), is substituted into the time derivative of the Lyapunov candidate function, which gives Equation (18).

The control input, devised to minimize errors and ensure control stability, is defined in Equation (19). Within this equation, coefficients of the simplified error dynamics are estimated using RLS, and the injection term is established using integrated error, as denoted in Equation (20).

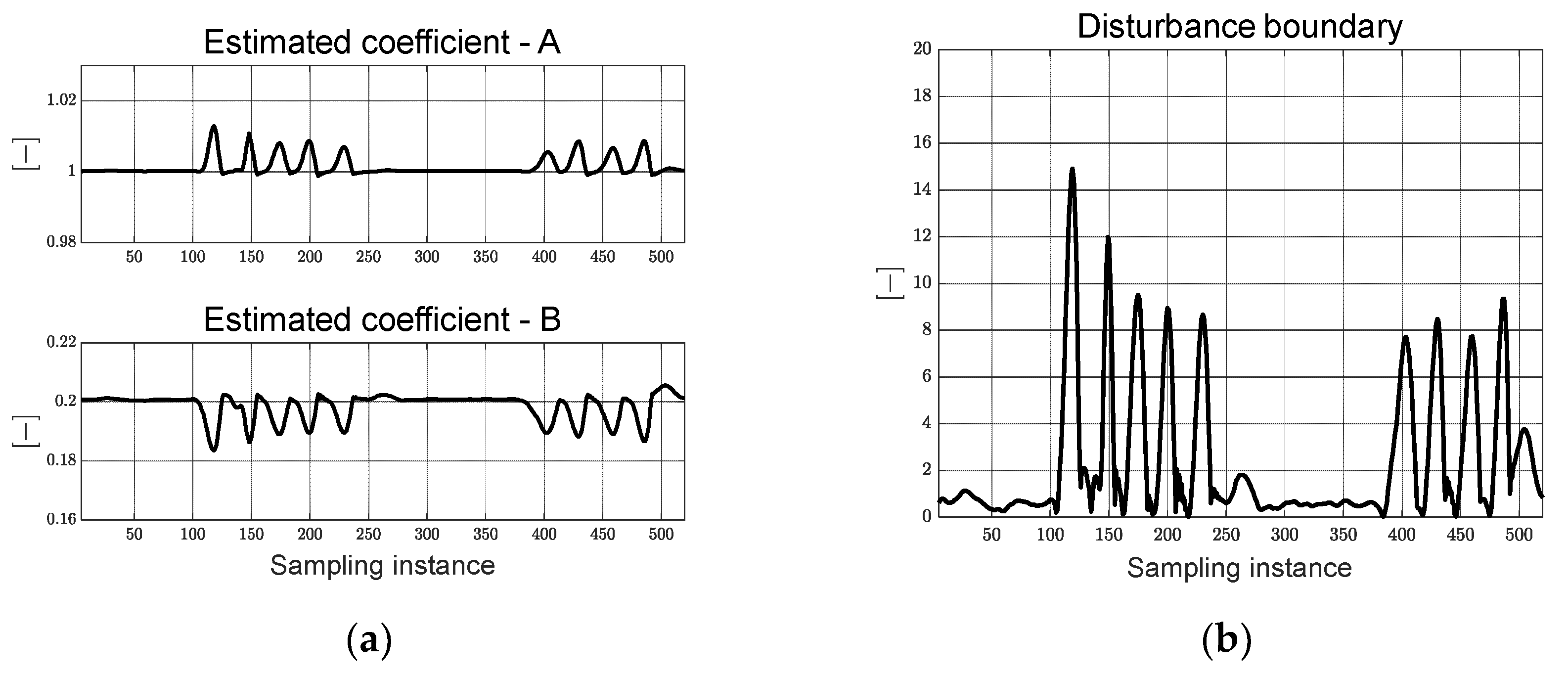

Substituting Equations (19) and (20) into the derivative of the Lyapunov candidate function results in the formulation depicted in Equation (21). The disparity between the actual system and the estimated value is represented as demonstrated in Equation (22). The scope of the disturbance boundary can be characterized using the reachability factor, as expounded upon in Equations (23) and (24).

By substituting the disturbance boundary satisfying Equation (24) into Equation (21), Equation (25) is obtained. Furthermore, applying finite-time convergence condition 1 gives Equation (26).

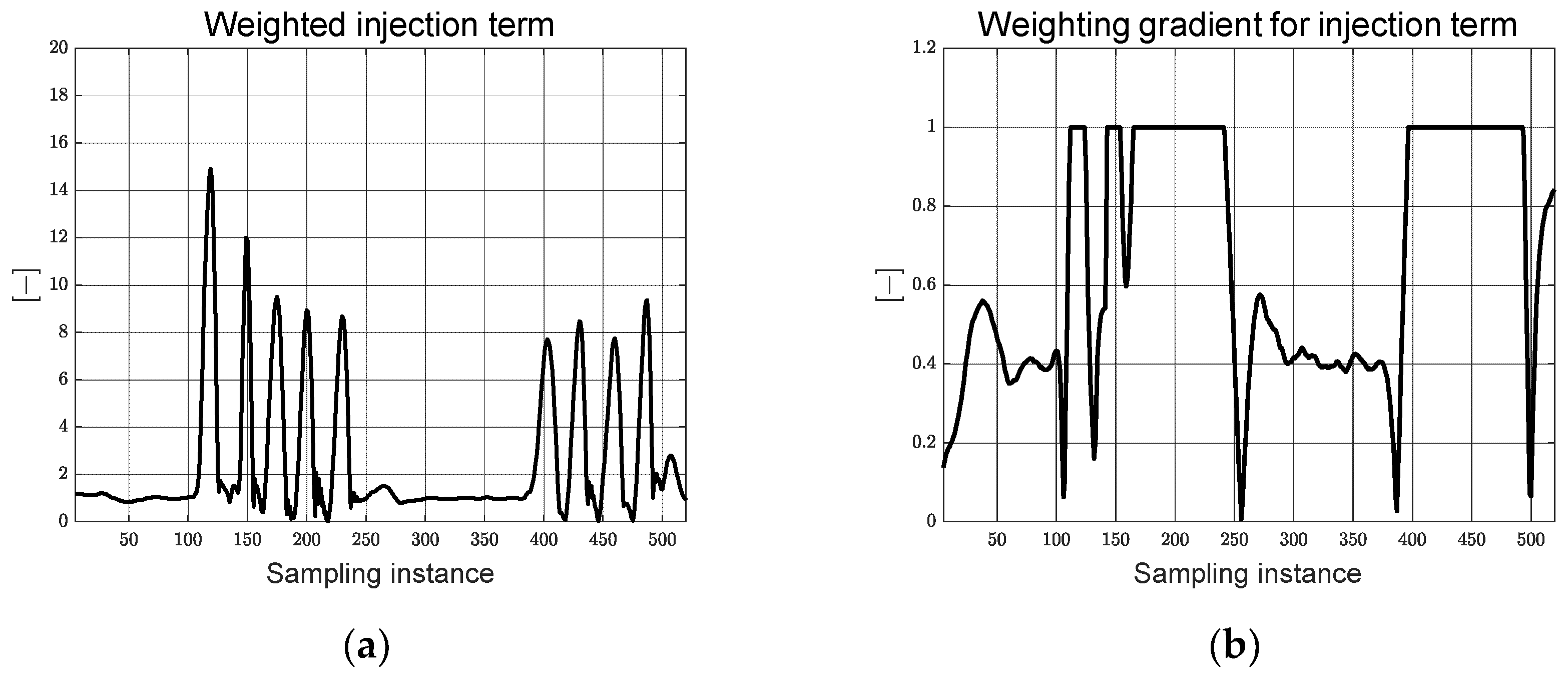

When the right-hand sides of Equations (25) and (26) are equated, the injection term’s magnitude can be determined using Equation (27).

The magnitude of the weighted injection term is defined in Equation (28), with the weighting factor ranging from zero to one. The weighting factor is designed using the absolute value of the integrated error and a threshold for the integrated error, as given in Equation (29), where the threshold is a design parameter that adjusts the gradient of the weighting factor. When the absolute value of the integrated error is smaller than the threshold, the weighting factor is computed by a linear function with a value less than one, which significantly affects the finite-time convergence condition. Conversely, when the absolute value of the integrated error is larger than the threshold, the weighting factor is set to one. This ensures swift convergence to zero when the control error is large, by modulating the magnitude of the injection term according to the error magnitude while adhering to the finite-time convergence condition.
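The piecewise behavior of the weighting factor can be sketched as below. The linear segment is assumed to pass through zero, so the factor is the ratio of the absolute integrated error to the threshold; the exact linear function in Equation (29) may differ. The function names and the numeric values are hypothetical.

```python
def weighting_factor(integrated_error, threshold):
    """Weight in [0, 1]: linear below the threshold, saturated at one above it.

    Assumption: the linear segment is |error| / threshold.
    """
    if threshold <= 0.0:
        raise ValueError("threshold must be positive")
    return min(abs(integrated_error) / threshold, 1.0)


def weighted_injection(integrated_error, threshold, injection_magnitude):
    """Scale the injection magnitude by the weighting factor."""
    return weighting_factor(integrated_error, threshold) * injection_magnitude


# Hypothetical values: threshold of 4.0 and a nominal injection magnitude of 2.0.
small_error_injection = weighted_injection(1.0, 4.0, 2.0)
large_error_injection = weighted_injection(-10.0, 4.0, 2.0)
```

A smaller threshold steepens the linear segment, so the full injection magnitude is applied over a wider range of errors; a larger threshold softens the control action near zero error.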

By constructing a Lyapunov candidate function based on a sliding mode approach and weighted injection, an adaptive steering control input is derived. Equation (30) gives the control input in terms of the control errors and the coefficients of the simplified error dynamics estimated through RLS. To mitigate the chattering phenomenon induced by the sign function, a sigmoid function is employed. Substituting Equation (30) into Equation (18), which represents the derivative of the Lyapunov function, yields Equation (31). This shows that the Lyapunov stability of the algorithm is established by the designed weighted injection term.
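The effect of replacing the sign function with a sigmoid can be seen in a small sketch. The paper does not state which sigmoid is used, so the hyperbolic tangent with a boundary-layer width is assumed here as one common choice; the names `smooth_sign` and `width` are hypothetical.

```python
import math


def hard_sign(s):
    """Discontinuous sign function used in ideal sliding mode control."""
    if s > 0.0:
        return 1.0
    if s < 0.0:
        return -1.0
    return 0.0


def smooth_sign(s, width):
    """Sigmoid approximation of sign(s); assumed form tanh(s / width)."""
    return math.tanh(s / width)


# Near zero the hard sign flips between -1 and +1 for tiny errors,
# while the smooth version changes gradually.
errors = [-0.02, -0.01, 0.0, 0.01, 0.02]
hard_values = [hard_sign(e) for e in errors]
smooth_values = [smooth_sign(e, width=0.1) for e in errors]
```

Far from zero both functions agree to within numerical precision, so the smoothing mainly changes the control action inside a thin band around zero error, which is where chattering would otherwise occur.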

The next section presents the performance evaluation of the adaptive steering control algorithm on an actual autonomous mobility platform.

4. Discussion

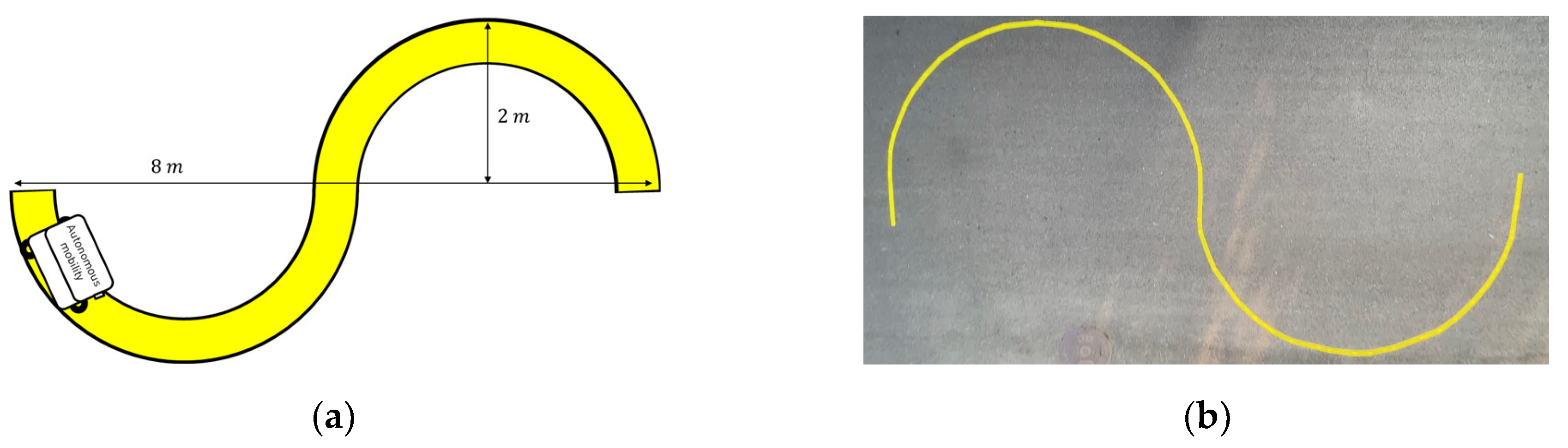

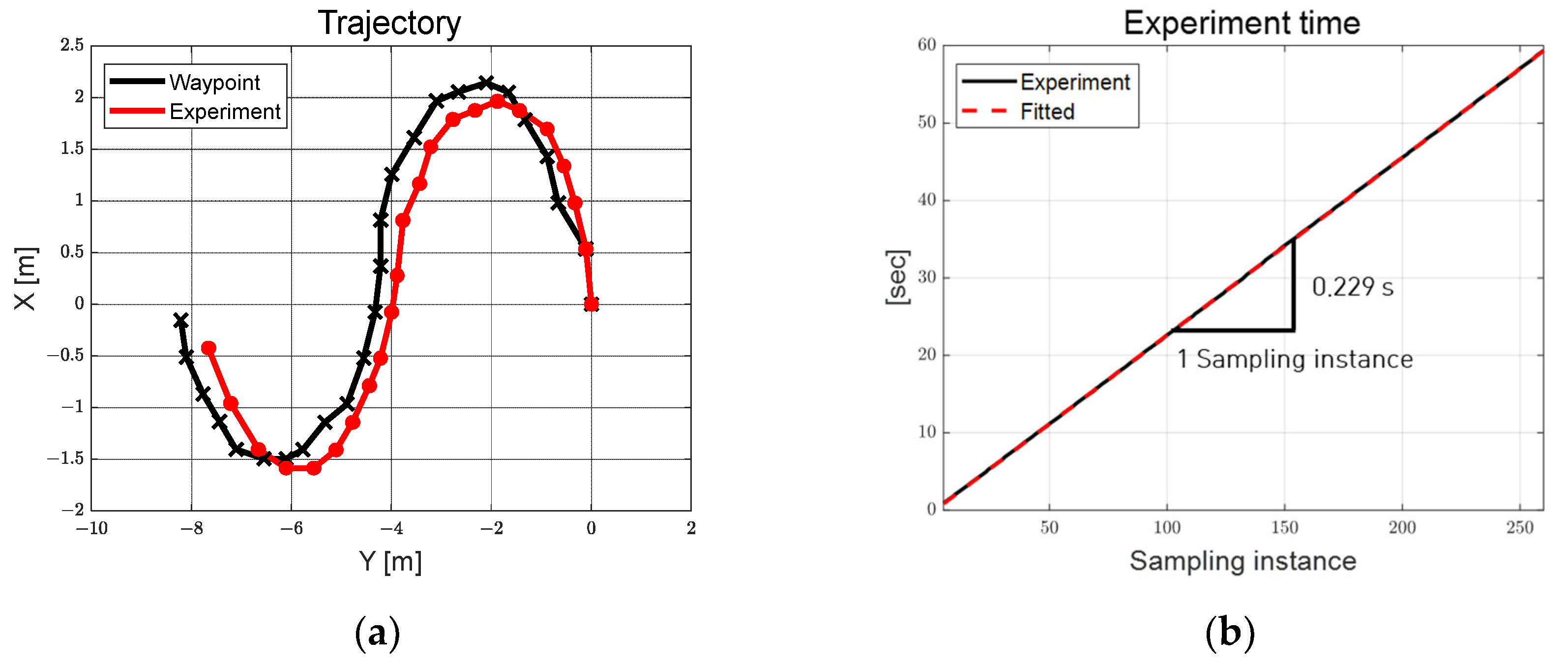

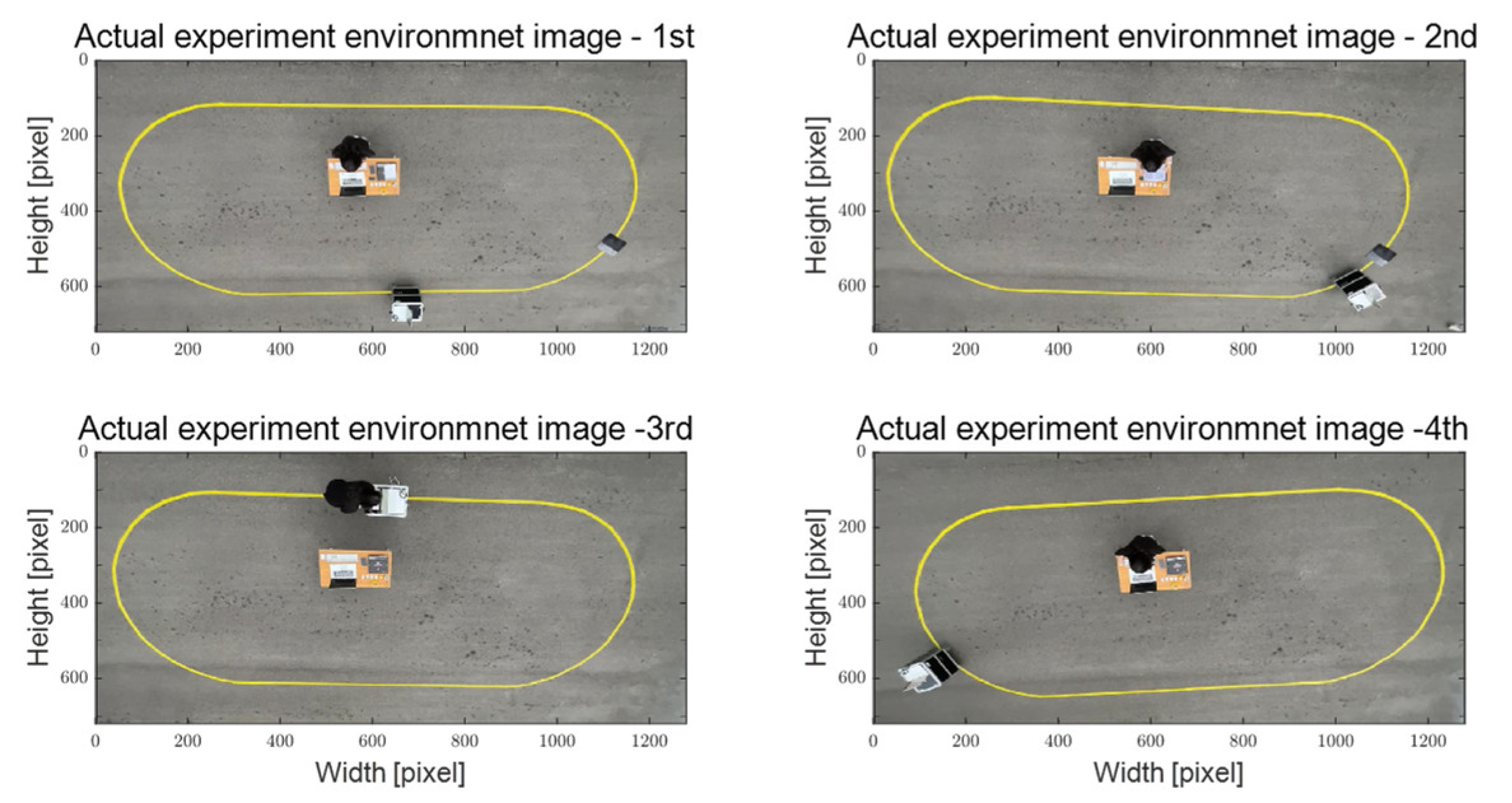

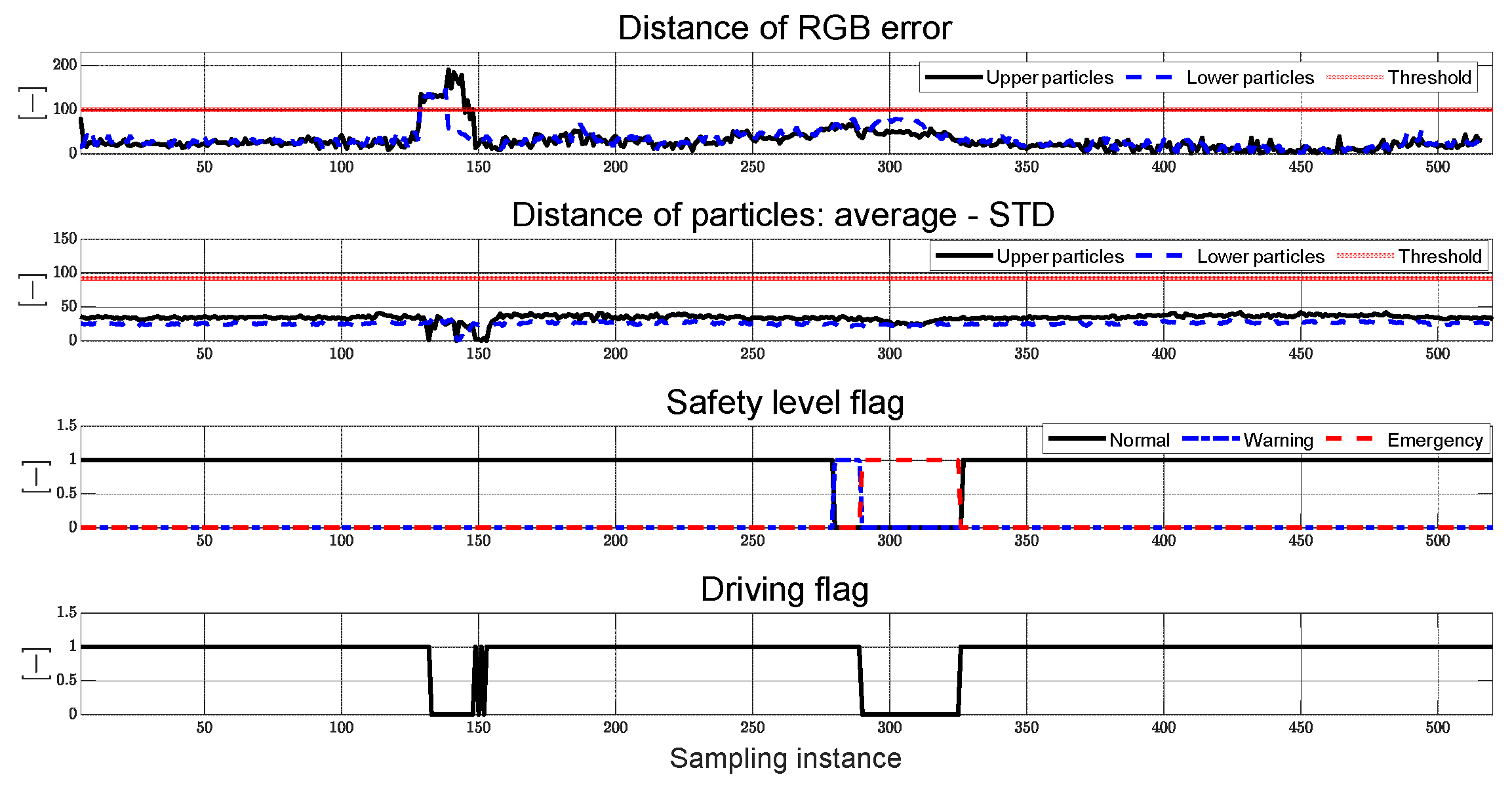

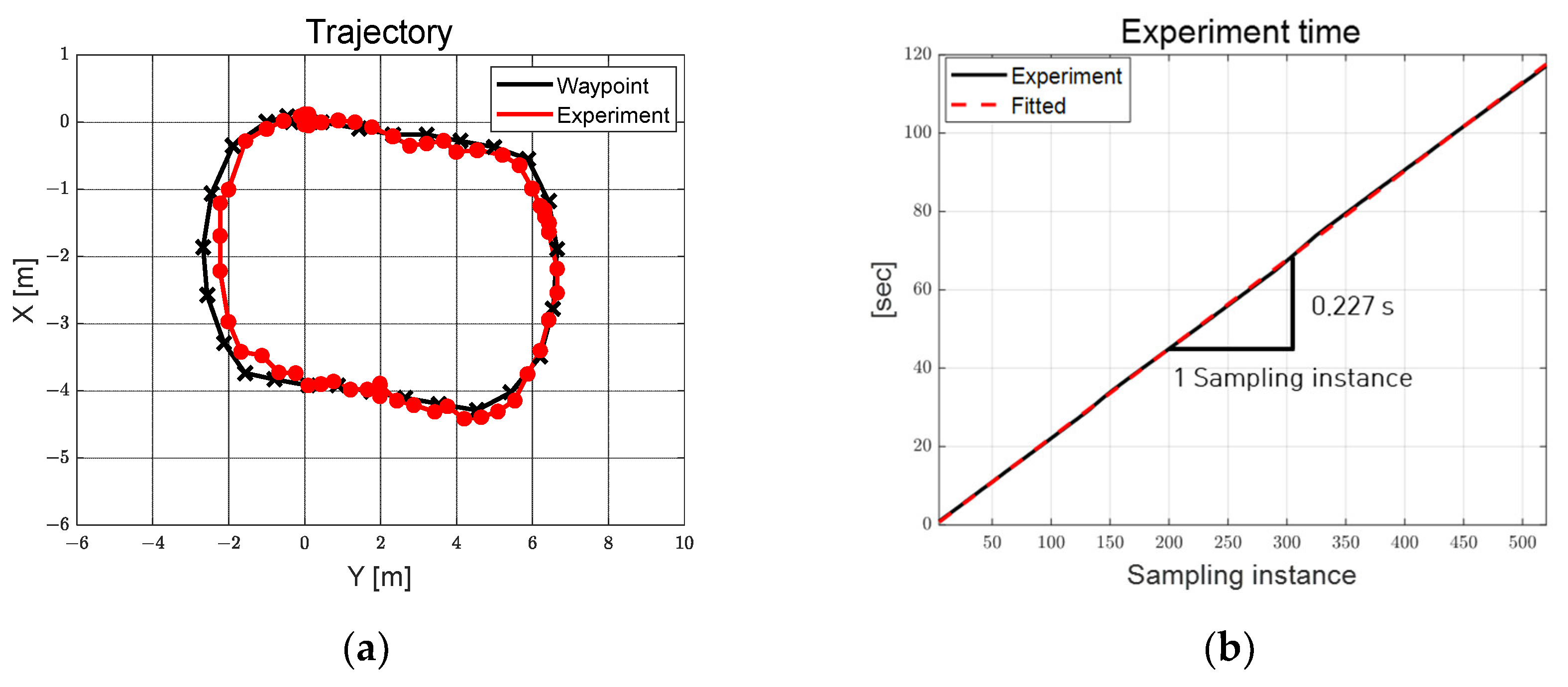

The performance of the adaptive steering control algorithm proposed in this study was assessed using autonomous mobility with a single steering and driving system. The evaluation scenarios cover two environments: an S-curved path free of obstacles and workers, and an elliptical path with obstacles and workers. These scenarios are designed to represent the use of autonomous platforms to improve work efficiency and worker convenience across sectors such as smart farms and smart factories. The evaluation results confirm reasonable path-tracking performance of the adaptive steering control input, derived from the multi-particle filter-based control error and the coefficients estimated in real time through RLS.

Because RLS-based coefficient estimation is sensitive to the initial parameter settings, these were determined by trial and error. Additionally, oscillation was observed in the control error derivation during perception and in the steering control process. This is presumed to result from particle motion in the particle filtering process, the discrete rotation-direction signal (zero or one) applied to the steering motor, and the signal processing between the four MCUs and the main PC. The autonomous mobility used in this study traveled at a low speed of approximately 0.25 m/s, with a constant PWM applied to the driving motor. With its relatively low mass of approximately 38.6 kg, the mobility exhibited reasonable path tracking even when experiencing oscillations. Nevertheless, such oscillations might degrade path tracking performance during high-speed operation. Addressing this concern is a goal for future work, potentially involving filtering techniques such as RLS and the Kalman filter to compute control errors, as well as smoother and faster signal processing between the MCUs and the main PC.

In addition, because the algorithm proposed in this paper relies on RGB images, changes in lighting conditions may affect control performance. Since the control error is derived by tracking the RGB values of the target path using a particle filter, any sudden change in lighting requires modification of the particle filter parameters. Accordingly, the performance evaluation of the proposed algorithm was conducted between 6 and 8 PM, when sunlight has little influence. The average RGB values for scenarios 1 and 2 are shown in Table 3. In the future, we plan to enhance the algorithm by incorporating average RGB values to robustly detect the target path, even in varying lighting conditions.

5. Conclusions

In this paper, we proposed an adaptive steering control algorithm employing a sliding mode approach and a weighted injection term. The multi-particle filter-based control error was derived using a camera, and simplified error dynamics based on the integrated error were defined by applying weighting factors to the control errors. The coefficients of these error dynamics were estimated using RLS with multiple forgetting factors. The adaptive steering control input was then formulated by designing a cost function that incorporates the weighted injection term and the sliding mode approach, using the control errors and estimated coefficients.

The objective of this study is to achieve path tracking steering control for autonomous mobility platforms while overcoming the uncertainty that model complexity introduces in the development of diverse autonomous systems. The adaptive steering control input can be determined using the control error derived from pixel data via a multi-particle filter, avoiding reliance on mathematical model parameters. This is achieved by estimating the coefficients of the simplified error dynamics using real-time RLS. Furthermore, by incorporating a weighted injection term that adjusts according to the magnitude of the control error, the adaptive control input can rapidly reduce the control error to zero while adhering to the finite-time convergence conditions.

The multi-particle filter and RLS-based adaptive path tracking algorithm was implemented in the MATLAB environment and evaluated using actual autonomous mobility. The evaluation confirmed that the proposed adaptive steering control algorithm demonstrated reasonable path tracking performance on an S-curved path without obstacles and an elliptical path with obstacles. On average, the algorithm took 0.228 s per sampling instance. Furthermore, by implementing a functional safety algorithm, we confirmed the potential for autonomous mobility that enhances work safety, efficiency, and worker convenience in diverse industries. However, an oscillation was observed as a result of the particle filtering process used to derive the control error, the signal processing between the four MCUs and the main PC, the discrete pulse signals applied to the steering motor, and the steering control process.

In the future, we intend to assess scenarios at varying speeds and on varying paths, and to improve data and signal processing through software and hardware enhancements in order to minimize oscillation in the derivation of control errors and in the steering control process. With these software and hardware advancements, it is anticipated that the proposed perception and control algorithms can be effectively applied to path tracking of autonomous mobility platforms in various other applications, such as autonomous vehicles and robots, for active collaboration with human workers.