Abstract

Display color line defect detection is an important step in production quality inspection. To improve the detection accuracy of low-contrast line defects, we propose a display line defect detection method based on color feature fusion. First, the color saliency channels are selected from the RG|GR and BY|YB opponent channels using the maximum relative entropy criterion. Then, RG|GR is combined with the a channel and BY|YB with the b channel to calculate the red-green and blue-yellow color fusion maps, which are in turn fused into a single color saliency map. Finally, a segmentation threshold is calculated from the mean and standard deviation of the fused color saliency map, and the map is binarized to obtain a binary map of the color line defects. The experimental results show that for line defects on multiple backgrounds, the detection accuracy of the proposed algorithm exceeds 90%, whereas other mainstream methods fail to detect these defects. Compared with state-of-the-art saliency detection algorithms, our method achieves real-time detection of low-contrast line defects.

1. Introduction

The quality inspection of display products plays an important role in the production process. Existing display quality inspection uses Automated Optical Inspection (AOI) equipment, in which panels are fed manually and defects are then detected automatically. The detection accuracy of AOI equipment depends on the underlying algorithm, which limits the application of AOI technology [1]. Industrial production still relies on manual inspection, which has low efficiency and unstable accuracy because it is influenced by the subjective perception of the inspectors [2]. Low-contrast color defects are particularly difficult for human inspectors to detect. Therefore, achieving display defect detection with machine vision and digital image processing has become an urgent problem.

Display defect detection technology has made great progress. Existing display defect detection methods fall into three main categories: methods based on image registration [3,4], background reconstruction [5,6,7,8,9,10] and deep learning [11,12,13,14,15,16,17]. Zhang et al. [4] used PatMax and affine-transformation-based image correction to solve the problem of incompletely aligned pixel edges when the template image is registered with the test image. This method improves defect detection accuracy, but it cannot extract registration features from a uniform background. Ma et al. [7] proposed using a Gabor filter to remove noise and background from display images. To address low defect contrast, the method exploits optical properties from printing and obtains the image with the smallest contrast by blurring, thereby reconstructing the background. This method can detect low-contrast defects, but it imposes strict requirements on the display background and cannot be used with multiple display backgrounds. Lin et al. [11] proposed a deep channel attention-based classification network (DCAnet) as an image feature extractor, followed by an adversarial training algorithm based on convolutional neural networks (CNN) to detect Mura defects. Zhu et al. [12] proposed a hierarchical multi-frequency channel attention network that uses the attention mechanism to handle the large variation in the aspect ratio of scratch defects. Zhu et al. [13] proposed a defect detection method for AOI equipment that uses YOLOv3 [18] to detect display point defects and abnormal display defects, and it can detect defects on multiple backgrounds simultaneously. Chang et al. [14] combined image preprocessing with a CNN for display defect detection and proposed a training method to address the sample imbalance caused by small defect areas and differing backgrounds. However, deep learning methods require a large amount of sample data for effective detection, so they perform poorly when only small sample sets are available.

Before defect detection, the screen must be powered on to display a test image. Commonly used test images include solid color images (gray, white), grayscale transition images, checkerboard images and cross test images. Line defects appear as colored or dark lines in these test images. Multiple display backgrounds make it difficult to extract line defect features. In a grayscale transition image, the color of a line defect changes with the grayscale transition background, and the defect also shows brightness variation and reduced contrast, which makes it hard to detect line defects accurately across multiple backgrounds.

Existing methods cannot effectively detect colored line defects on multiple backgrounds. Inspired by salient object detection theory, we propose a display line defect detection method based on color feature fusion. To overcome the difficulty of extracting defect features under multiple display backgrounds, we use salient color features and adaptively select the color saliency channel based on the maximum relative entropy criterion. To achieve accurate detection of low-contrast line defects, we fuse the salient color features.

Our contributions are as follows:

- (1) A novel display line defect detection method is proposed for the stable detection of line defects under multiple backgrounds.
- (2) A salient color feature fusion method is proposed to suppress the background and enhance the target for low-contrast defects.
- (3) A salient color channel selection method is proposed, which selects the salient color channel under multiple backgrounds.

2. Related Works

In recent years, display defect detection technology has made significant progress, and low-contrast defects in particular have drawn significant attention. Ngo et al. [5] applied low-pass filtering background reconstruction, polynomial fitting and the discrete cosine transform, respectively, to the input image to obtain defect difference maps, and then detected the defects by threshold segmentation of the difference image. Yang et al. [6] proposed an anomalous region detection method that uses a gradient-based level set to accurately segment defects in candidate regions. Jin et al. [19] proposed a double-segment exponential transform to enhance defects, solving the problem that defect values are close to the background in the difference image; Otsu's method was then used to segment the defects accurately. Cui et al. [20] proposed an Otsu-based method to select defect candidate regions, and then used variance and meshing to detect Mura and edge defects.

Salient object detection methods mainly focus on the regions of natural scenes that attract the most human attention. Commonly used methods include frequency domain-based methods such as the spectral residual (SR) method [21], the frequency-tuned (FT) method [22], the phase spectrum of quaternion Fourier transform (PQFT) [23] and the hypercomplex Fourier transform (HFT) [24]. The FT method assumes that the salient region occupies a certain frequency range of the spectrum and therefore uses difference-of-Gaussian filtering to detect it. The PQFT method assumes that the salient region is represented by the phase spectrum, so the salient region can be obtained by directly inverse-transforming the phase spectrum. The HFT method convolves the frequency-domain amplitude spectrum with a Gaussian kernel to obtain the salient region, which requires determining the optimal Gaussian kernel. These methods have been applied to practical defect detection [25,26]. The advantage of frequency domain-based methods is that periodic signals can be removed by frequency-domain analysis, so they can process images with many periodic textures. The histogram-based contrast (HC) [27] and local histogram-based contrast (LC) [28] methods use color information, mainly quantizing the colors of natural scenes to obtain salient targets, but they are not effective for target detection in monotonous scenes. Saliency methods mainly fuse multiple features to detect salient objects [29] and rarely rely on a single feature.
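The phase-spectrum idea behind PQFT can be illustrated with its grayscale, phase-only simplification: set the amplitude of every Fourier coefficient to 1, inverse-transform, square the magnitude and smooth with a Gaussian. The sketch below is not the full quaternion method of [23], which stacks color (and motion) channels into a quaternion image; the function names and the smoothing width `sigma` are hypothetical choices for illustration.

```python
import numpy as np


def gaussian_smooth(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur with zero padding.

    Assumes each image side is at least as long as the kernel (2*round(3*sigma)+1).
    """
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    kernel = np.exp(-(x ** 2) / (2 * sigma ** 2))
    kernel /= kernel.sum()
    rows = np.apply_along_axis(lambda r: np.convolve(r, kernel, mode="same"), 1, img)
    return np.apply_along_axis(lambda c: np.convolve(c, kernel, mode="same"), 0, rows)


def phase_only_saliency(gray: np.ndarray, sigma: float = 3.0) -> np.ndarray:
    """Grayscale phase-spectrum saliency: discard the amplitude, keep the phase."""
    spectrum = np.fft.fft2(gray.astype(float))
    unit_spectrum = np.exp(1j * np.angle(spectrum))  # amplitude set to 1 everywhere
    recon = np.fft.ifft2(unit_spectrum)
    sal = gaussian_smooth(np.abs(recon) ** 2, sigma)
    sal -= sal.min()
    peak = sal.max()
    return sal / peak if peak > 0 else sal  # normalize to [0, 1]
```

Because the amplitude spectrum is flattened, large uniform regions contribute little after reconstruction, while abrupt structures such as edges and thin lines remain prominent. This also explains why such maps can highlight background edges and noise, as observed for PQFT in Section 4.3.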

3. Methodology

3.1. Algorithm Architecture

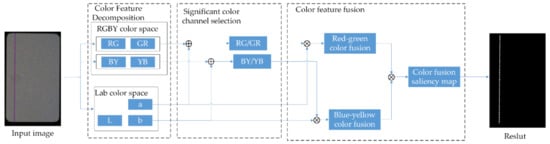

Figure 1 shows the architecture of the proposed display color line defect detection method based on color feature fusion.

Figure 1. Algorithm architecture.

First, the original image is decomposed into color features using the RGBY and Lab color spaces. Second, in the RG|GR-BY|YB opponent channels, the color saliency channels are selected according to the maximum relative entropy criterion. Third, the red-green color fusion map is calculated by combining RG|GR with the a channel, and the blue-yellow color fusion map by combining BY|YB with the b channel. Color feature fusion is then applied to these two maps to obtain the fused color saliency map. Finally, the fused color saliency map is binarized with a segmentation threshold calculated from its mean and standard deviation, yielding a binary map of the color line defects. This pipeline enables accurate detection of display line defects under multiple backgrounds.

3.2. Color Feature Extraction

Two color spaces are commonly used in salient object detection. The first is RGBY, in which a saliency-oriented color feature was proposed [30]; it is a wide-amplitude color space that separates color from luminance. The second is the Lab color space, the most commonly used color space in salient object detection. Because Lab was designed according to human visual perception, its color characteristics are also consistent with human perception [31].
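The text does not state which RGB-to-Lab conversion is used. A minimal sketch, assuming sRGB input scaled to [0, 1] and the standard sRGB/D65 formulas (gamma linearization, linear RGB to XYZ, then the CIE L*a*b* nonlinearity); the function name `srgb_to_lab` is hypothetical.

```python
import numpy as np

# Linear sRGB (D65) -> CIE XYZ matrix and the D65 reference white
_RGB2XYZ = np.array([[0.4124564, 0.3575761, 0.1804375],
                     [0.2126729, 0.7151522, 0.0721750],
                     [0.0193339, 0.1191920, 0.9503041]])
_WHITE_D65 = np.array([0.95047, 1.00000, 1.08883])


def srgb_to_lab(rgb: np.ndarray) -> np.ndarray:
    """Convert sRGB values in [0, 1] with shape (..., 3) to stacked (L, a, b)."""
    rgb = np.asarray(rgb, dtype=float)
    # Undo the sRGB transfer curve to get linear intensities
    linear = np.where(rgb <= 0.04045, rgb / 12.92, ((rgb + 0.055) / 1.055) ** 2.4)
    # Linear RGB -> XYZ, normalized by the reference white
    xyz = linear @ _RGB2XYZ.T / _WHITE_D65
    delta = 6 / 29
    f = np.where(xyz > delta ** 3, np.cbrt(xyz), xyz / (3 * delta ** 2) + 4 / 29)
    L = 116 * f[..., 1] - 16
    a = 500 * (f[..., 0] - f[..., 1])  # red (+) vs. green (-)
    b = 200 * (f[..., 1] - f[..., 2])  # yellow (+) vs. blue (-)
    return np.stack([L, a, b], axis=-1)
```

White maps to approximately (100, 0, 0), red has a positive a value and blue a negative b value, which is the red-green and blue-yellow opponency that the a and b channels provide to the later selection and fusion steps.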

The RGBY color space is calculated as:

where $r$, $g$ and $b$ are the three channels of the original image. The RGBY color space decomposes the RGB input image into two parts: the color features and the brightness $I$. Because color information is the main feature of line defects, we keep only the color features of the RGBY space.
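For reference, the broadly tuned color channels of Itti et al. [30] can be sketched as below. This assumes the paper follows the standard Itti definitions (R = r − (g + b)/2, G = g − (r + b)/2, B = b − (r + g)/2, Y = (r + g)/2 − |r − g|/2 − b, I = (r + g + b)/3) with negative responses clipped to zero; the intensity normalization step of the original model is omitted here, and the function name is hypothetical.

```python
import numpy as np


def itti_rgby(rgb: np.ndarray):
    """Broadly tuned R, G, B, Y channels and intensity I for an (..., 3) float image."""
    r, g, b = (np.asarray(rgb, dtype=float)[..., i] for i in range(3))
    R = r - (g + b) / 2
    G = g - (r + b) / 2
    B = b - (r + g) / 2
    Y = (r + g) / 2 - np.abs(r - g) / 2 - b
    I = (r + g + b) / 3  # brightness; discarded by the method, returned for completeness
    # Negative color responses are set to zero, as in Itti et al.
    R, G, B, Y = (np.maximum(c, 0.0) for c in (R, G, B, Y))
    return R, G, B, Y, I
```

A neutral gray pixel produces zero in all four color channels, which is why uniform gray and white backgrounds largely vanish from the color features while colored line defects remain.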

In [32], it was pointed out that the human eye's attention to color in the RGBYI space mainly focuses on red and blue, thus forming an opponent color space. In defect detection, however, a salient color in any channel is useful. Therefore, we extend the RGBY opponent space to form the RG|GR-BY|YB opponent color space, which is calculated as:
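A minimal sketch of the RG|GR-BY|YB decomposition, assuming RG = R − G and BY = B − Y as in Itti et al. [30], with each sign of the difference kept as a separate channel and negative values clipped to zero. The clipping is an assumption, chosen because it matches the property stated in Section 3.3 that a defect appears in at most one channel of each opposite pair; the function name is hypothetical.

```python
import numpy as np


def opponent_pairs(R: np.ndarray, G: np.ndarray, B: np.ndarray, Y: np.ndarray) -> dict:
    """Rectified RG|GR and BY|YB opponent channels (hypothetical formulation)."""
    rg = R - G
    by = B - Y
    return {
        "RG": np.maximum(rg, 0.0),   # red stronger than green
        "GR": np.maximum(-rg, 0.0),  # green stronger than red
        "BY": np.maximum(by, 0.0),   # blue stronger than yellow
        "YB": np.maximum(-by, 0.0),  # yellow stronger than blue
    }
```

Under this formulation, the element-wise product of RG and GR (and of BY and YB) is zero everywhere, so each pixel responds in at most one channel of a pair.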

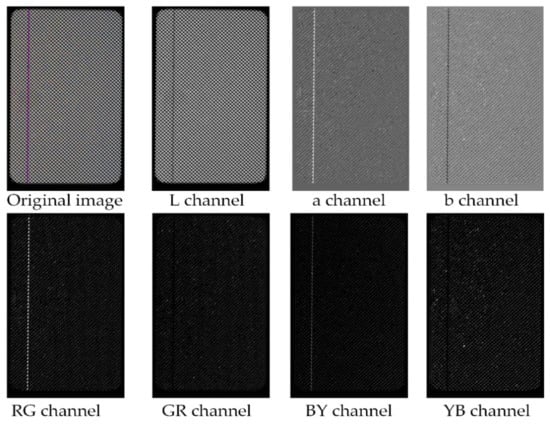

Figure 2 shows the color decomposition of an original image.

Figure 2. Decomposition of the original image using the Lab and RGBY color spaces.

As shown in Figure 2, the Lab color space separates the original image into a luminance part and a chromatic part. The brightness of the L channel is almost the same as that of the original image. In the a channel, the purple line defect is highlighted while the background is converted to smaller values; in the b channel, the position of the purple line is converted to values close to 0. In the RG|GR-BY|YB space, the original image is mapped into four channels.

3.3. Significant Color Channel Selection

We start from the design principles of the RGBY and Lab color spaces. The a channel of the Lab color space represents the color feature from red to green, and the b channel represents the color feature from blue to yellow, which corresponds to the RG|GR-BY|YB color space we use. Because of their opponent property, a defect always appears in exactly one of the RG and GR channels, or in neither, so it is necessary to choose between the opposite color channels. The same holds for the BY and YB channels. We use the a and b channels, respectively, to select the target channel containing the defect.

In the RG|GR-BY|YB opponent channels, most of the background information has been eliminated while the salient information of the defect is preserved. We use relative entropy to compare the similarity of RG|GR to the a channel and of BY|YB to the b channel.

Relative entropy is calculated as:

$$D_{KL}(P \,\|\, Q) = \sum_{x} P(x) \log \frac{P(x)}{Q(x)}$$

The relative entropy measures the difference between the grayscale distribution $P(x)$ of one input feature and the grayscale distribution $Q(x)$ of the other input feature. $D_{KL}(P \,\|\, Q) = 0$ when the two distributions are identical and is positive otherwise, so it effectively measures the distance between the feature distributions.

In this way, we obtain the RG|GR-BY|YB color channels that contain only the target.
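The selection step can be sketched as follows, under assumptions the text leaves open: gray-level distributions are 64-bin histograms of min-max-normalized channels, a small epsilon avoids log(0), the divergence is computed as D(candidate ‖ reference) with the a (or b) channel as the reference, and the candidate with the largest divergence is kept, following the maximum relative entropy criterion. All names and the bin count are hypothetical.

```python
import numpy as np


def gray_histogram(x: np.ndarray, bins: int = 64, eps: float = 1e-12) -> np.ndarray:
    """Normalized gray-level histogram after min-max scaling to [0, 1]."""
    x = np.asarray(x, dtype=float).ravel()
    span = x.max() - x.min()
    x = (x - x.min()) / span if span > 0 else np.zeros_like(x)
    hist, _ = np.histogram(x, bins=bins, range=(0.0, 1.0))
    p = hist.astype(float) + eps  # eps keeps every bin positive for the log ratio
    return p / p.sum()


def relative_entropy(p: np.ndarray, q: np.ndarray) -> float:
    """D_KL(P || Q) = sum_x P(x) * log(P(x) / Q(x))."""
    return float(np.sum(p * np.log(p / q)))


def select_channel(candidates: dict, reference: np.ndarray, bins: int = 64):
    """Return the candidate name with maximum divergence from the reference, plus all scores."""
    q = gray_histogram(reference, bins)
    scores = {name: relative_entropy(gray_histogram(c, bins), q)
              for name, c in candidates.items()}
    return max(scores, key=scores.get), scores
```

For the red-green pair, this would be called as `select_channel({"RG": rg, "GR": gr}, a_channel)`, and analogously for BY and YB against the b channel.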

3.4. Color Feature Fusion

To separate the background from the target effectively, we use feature fusion to enhance the target and suppress the background. After color channel selection in the RG|GR-BY|YB space, we fuse the selected RG|GR-BY|YB channels with the a and b channels of the Lab color space. The selected RG|GR-BY|YB channels contain only target information and a small amount of background noise, whereas the a and b channels contain a large amount of background information in addition to the target. Fusing the corresponding color channels enhances the target in the a and b channels while suppressing the background.

where the first output represents the red-green fusion feature map, the second represents the blue-yellow fusion feature map, and ⨂ denotes the fusion operation. The red-green and blue-yellow fusion feature maps are then fused further to obtain the fused color saliency map:

where the result is the color saliency map, |∙| denotes the modulus, and the smoothing kernel is a Gaussian template.
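A minimal sketch of the two fusion stages, under assumptions that the text does not fix: ⨂ is taken as an element-wise product, the a and b channels enter through their absolute values, the modulus |∙| is taken as the Euclidean magnitude of the two fused maps, and the Gaussian template is a separable Gaussian with a hypothetical σ = 2. The function names are also hypothetical.

```python
import numpy as np


def gaussian_smooth(img: np.ndarray, sigma: float = 2.0) -> np.ndarray:
    """Separable Gaussian blur with zero padding (sides must be >= kernel length)."""
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    k /= k.sum()
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="same"), 1, img)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="same"), 0, rows)


def fuse(rg_sel: np.ndarray, a: np.ndarray, by_sel: np.ndarray, b: np.ndarray,
         sigma: float = 2.0) -> np.ndarray:
    """Hypothetical two-stage color feature fusion."""
    f_rg = rg_sel * np.abs(a)   # stage 1: red-green fusion map
    f_by = by_sel * np.abs(b)   # stage 1: blue-yellow fusion map
    # stage 2: per-pixel magnitude of the two maps, then Gaussian smoothing
    return gaussian_smooth(np.hypot(f_rg, f_by), sigma)
```

The product keeps a response only where the selected opponent channel and the Lab chroma channel agree, which is one way to realize the stated goal of enhancing the target while suppressing the background that dominates the a and b channels.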

We perform feature fusion twice, which effectively separates the color features from background noise. Finally, the target is separated from the background using an adaptive threshold.

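$$T = \mu + k\sigma, \qquad \mu = \frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N} S(i,j), \qquad \sigma = \sqrt{\frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\left(S(i,j)-\mu\right)^{2}}$$

where $T$ is the segmentation threshold, $k$ is a parameter, $\mu$ is the mean of the color saliency map $S$, $\sigma$ is its standard deviation, and $M$ and $N$ are the length and width of the input image, respectively.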
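The adaptive thresholding step can be sketched as below, assuming the threshold takes the form T = μ + kσ with μ and σ computed over all M × N pixels of the saliency map; the default `k = 3.0` is a hypothetical value, not one reported in the paper.

```python
import numpy as np


def segment(saliency: np.ndarray, k: float = 3.0):
    """Binarize a saliency map with T = mu + k * sigma (k is a hypothetical default)."""
    s = np.asarray(saliency, dtype=float)
    mu = s.mean()    # (1 / MN) * sum of S(i, j)
    sigma = s.std()  # population standard deviation, also normalized by MN
    T = mu + k * sigma
    return (s > T).astype(np.uint8), T
```

Because μ and σ are recomputed for every image, the threshold follows the overall level and spread of each saliency map rather than a fixed gray value, which suits maps whose background has already been suppressed to near zero.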

4. Experimental Results

4.1. Experimental Setup

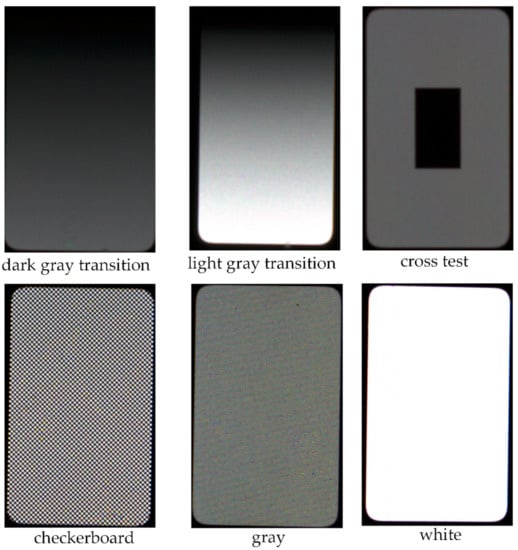

As shown in Figure 3, we used dark grayscale transition, light grayscale transition, cross test, checkerboard, gray and white backgrounds containing dark and colored line defects of various contrasts. The image size is 951 × 1566 pixels. Every image has a corresponding ground-truth (GT) map in which defective pixels are set to 1 and non-defective pixels to 0. All experiments were run in MATLAB R2018b on an Intel Core i7-7700 CPU with 16 GB of memory and a 64-bit operating system.

Figure 3. Typical display backgrounds.

4.2. Line Defect Detection Results

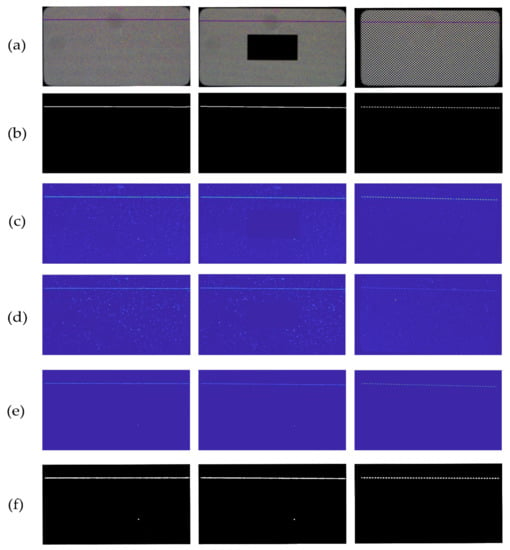

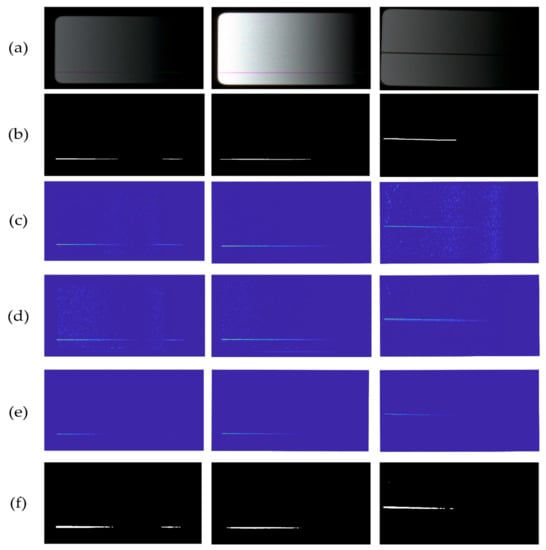

To show the detection results of the proposed method, we divided line defects into stable and changing ones according to their characteristics. Stable line defects appear on any screen, as shown in Figure 4. For checkerboard images, the proposed method detects defects directly despite the complicated background texture, and for grayscale transition images, it effectively overcomes the influence of the transition background. The red-green (row c) and blue-yellow (row d) color feature maps differ slightly, but both describe the defect characteristics well.

Figure 4. Stable feature defect detection results: (a) original image; (b) GT map; (c) red-green fusion feature map; (d) blue-yellow fusion feature map; (e) color fusion saliency map; (f) threshold segmentation result map.

Figure 5 demonstrates the ability of our method to detect changing line defects. Because of the grayscale transition background, the color of these line defects is not obvious to the human eye, and they are difficult to find by directly observing the original image. The proposed method detects both low-contrast and dark line defects. As shown in the color saliency map in Figure 5e, the influence of the grayscale transition background is effectively suppressed while the defects are retained.

Figure 5. Changing feature defect detection results: (a) original image; (b) GT map; (c) red-green fusion feature map; (d) blue-yellow fusion feature map; (e) color fusion saliency map; (f) threshold segmentation result map.

TDR and FDR metrics are used to evaluate the defect detection results of our method.

where S is the threshold segmentation binary image, and GT is the ground truth image.
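The TDR and FDR computation can be sketched at the pixel level. This assumes TDR = |S ∩ GT| / |GT| (the fraction of defect pixels that are detected) and FDR = |S \ GT| / |S| (the fraction of detected pixels that lie outside the defect). These are one common choice and not necessarily the exact formulas used in the paper; the function name is hypothetical.

```python
import numpy as np


def tdr_fdr(S: np.ndarray, GT: np.ndarray):
    """Pixel-level detection rates under hypothetical TDR/FDR definitions."""
    S = np.asarray(S, dtype=bool)
    GT = np.asarray(GT, dtype=bool)
    tp = np.logical_and(S, GT).sum()    # detected defect pixels
    fp = np.logical_and(S, ~GT).sum()   # detected background pixels
    tdr = tp / GT.sum() if GT.any() else float("nan")
    fdr = fp / S.sum() if S.any() else 0.0
    return float(tdr), float(fdr)
```

For example, a 10-pixel line defect of which 9 pixels are found, plus 1 spurious background pixel, gives TDR = 0.9 and FDR = 0.1.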

As shown in Table 1, the proposed method achieves a TDR above 90% for both stable and changing line defects. This shows that our method can effectively and stably detect color line defects on multiple backgrounds and accurately detect low-contrast line defects.

Table 1. Defect detection capabilities of our method.

4.3. Comparison of Different Methods

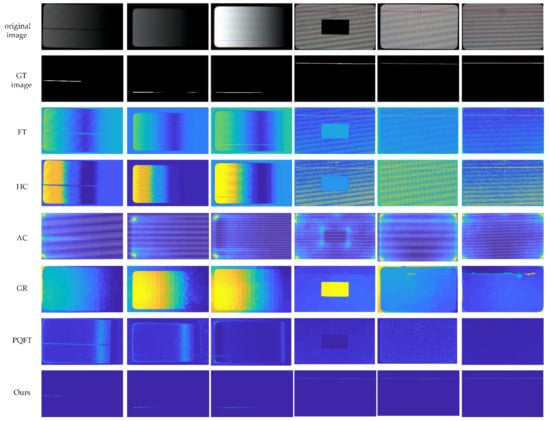

We compare our method with saliency detection methods used in object detection. Figure 6 compares the saliency maps of FT, HC, context-aware saliency detection (CA) [33], graph-regularized saliency detection (GR) [34] and PQFT with those of our method. The CA method describes salient regions using local and global feature information and uses prior constraints to obtain them. The GR method detects salient regions using superpixels, determining them from the superpixel ensemble in a data-driven manner.

Figure 6. Comparison of saliency maps of different methods.

As shown in Figure 6, in the grayscale transition images, the FT method treats bright areas as salient when the background brightness changes, which makes it difficult to separate the defects from the background.

Overall, the FT method is ineffective at background suppression, which makes it difficult to separate the background from the defects, especially under the cross test pattern. The HC method performs poorly on grayscale transition images: because it selects salient objects from the histogram, brighter regions are more easily regarded as salient, and it cannot separate the background from the target on the checkerboard. The CA method tends to focus on the edges and corners of the image, so it can only roughly locate the defect and cannot segment it accurately. The saliency maps of the GR method show that it cannot be used to detect line defects. The PQFT method detects some line defects, but its saliency map is relatively unstable: the purple line is not detected in the cross test and gray images, and considerable noise is highlighted in the grayscale transition images, which makes defect separation more difficult. In contrast, our method preserves only the line defects in the saliency maps while suppressing the complicated backgrounds, which makes threshold segmentation straightforward.

We use the NSS [35] and AUC metrics to evaluate the quality of the saliency maps. NSS measures how strongly the saliency map highlights the target; the larger the NSS value, the better the saliency map. AUC reflects how well the saliency map discriminates defect pixels from background pixels: a value close to 1 indicates the best discrimination, whereas a value close to 0.5 indicates chance-level performance, meaning the method is ineffective. Because defect detection must run in real time, we also compared the time consumption of the different methods, as shown in Table 2.
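Both metrics can be sketched with the GT map treated as the set of "fixation" (positive) pixels. NSS follows the usual definition surveyed in [35]: z-score the saliency map and average it over the positive pixels. For AUC, the sketch uses a plain pixel-level ROC area computed with the Mann-Whitney rank statistic, with tied scores given average ranks; which AUC variant the paper uses is not stated, so this choice is an assumption, and the function names are hypothetical.

```python
import numpy as np


def nss(saliency: np.ndarray, gt: np.ndarray) -> float:
    """Normalized scanpath saliency: mean z-scored saliency over ground-truth pixels."""
    s = np.asarray(saliency, dtype=float)
    std = s.std()
    if std == 0:
        return 0.0  # a constant map carries no information
    z = (s - s.mean()) / std
    return float(z[np.asarray(gt, dtype=bool)].mean())


def auc(saliency: np.ndarray, gt: np.ndarray) -> float:
    """ROC AUC with defect pixels as positives, via the Mann-Whitney rank-sum statistic."""
    s = np.asarray(saliency, dtype=float).ravel()
    pos = np.asarray(gt, dtype=bool).ravel()
    # Average 1-based ranks, so tied scores share the same rank
    _, inverse, counts = np.unique(s, return_inverse=True, return_counts=True)
    ends = np.cumsum(counts)
    ranks = ((ends - counts + 1 + ends) / 2.0)[inverse]
    n_pos, n_neg = pos.sum(), (~pos).sum()
    return float((ranks[pos].sum() - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg))
```

A map that ranks every defect pixel above every background pixel gives AUC = 1, while a constant map gives exactly 0.5, matching the chance-level interpretation above.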

Table 2. Performance comparison of different saliency methods.

As shown in Figure 6 and Table 2, the PQFT method has the worst detection ability for dynamically changing images, with the smallest average NSS value. The NSS values of the FT method are relatively stable but small, at around 1 across the display backgrounds, whereas those of the CA, HC and GR methods fluctuate greatly as the background changes. In contrast, the proposed method achieves an average NSS value of 8 and is stable across different backgrounds. Table 2 also shows that the HC method is completely ineffective for changing defects, with AUC values all close to 0.5. Our method achieves an AUC value of 0.99, the highest among the compared methods. In terms of time consumption, the PQFT method is the fastest, while the CA method takes much longer than the others. Our method and the PQFT method both take about 0.7 s on average, which meets the needs of actual production.

5. Conclusions

In this paper, we propose a color line defect detection method for display screens based on color feature fusion. A salient color feature fusion method effectively extracts and enhances line defect features on multiple backgrounds. In the experiments, we compared several methods, and the results show that the proposed method outperforms the existing methods. The method will soon be deployed on a real display production line for defect detection. We have also tested the proposed method on more defect types, and the results show that it can detect weak color features regardless of the shape of the color regions. In particular, our method can extract color Mura defects, which will be the next direction of our research.

Author Contributions

Formal analysis, W.X., H.C., Z.W., B.L., L.S.; investigation, W.X., H.C.; methodology, W.X., H.C.; software, W.X.; validation, W.X., H.C.; resources, Z.W.; writing—original draft preparation, W.X.; writing—review and editing, W.X., H.C., B.L.; visualization, W.X.; supervision, H.C.; project administration, H.C., Z.W. All authors have read and agreed to the published version of the manuscript.

Funding

This paper is supported by the “Yang Fan” major project in Guangdong Province, China, No. [2020]05.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Abd Al Rahman, M.; Mousavi, A. A review and analysis of automatic optical inspection and quality monitoring methods in electronics industry. IEEE Access 2020, 8, 183192–183271.

- Ming, W.; Shen, F.; Li, X.; Zhang, Z.; Du, J.; Chen, Z.; Cao, Y. A comprehensive review of defect detection in 3C glass components. Measurement 2020, 158, 107722.

- Li, C.; Zhang, X.; Huang, Y.; Tang, C.; Fatikow, S. A novel algorithm for defect extraction and classification of mobile phone screen based on machine vision. Comput. Ind. Eng. 2020, 146, 106530.

- Zhang, J.; Li, Y.; Zuo, C.; Xing, M. Defect detection of mobile phone screen based on improved difference image method. In Proceedings of the 2019 International Conference on Intelligent Informatics and Biomedical Sciences (ICIIBMS), Shanghai, China, 21–24 November 2019.

- Ngo, C.; Park, Y.J.; Jung, J.; Hassan, R.U.; Seok, J. A new algorithm on the automatic TFT-LCD mura defects inspection based on an effective background reconstruction. J. Soc. Inf. Disp. 2017, 25, 737–752.

- Yang, H.; Song, K.; Mei, S.; Yin, Z. An accurate mura defect vision inspection method using outlier-prejudging-based image background construction and region-gradient-based level set. IEEE Trans. Autom. Sci. Eng. 2018, 15, 1704–1721.

- Ma, Z.; Gong, J. An automatic detection method of Mura defects for liquid crystal display. In Proceedings of the 2019 Chinese Control Conference (CCC), Guangzhou, China, 27–30 July 2019.

- Chen, L.-C.; Kuo, C.-C. Automatic TFT-LCD mura defect inspection using discrete cosine transform-based background filtering and ‘just noticeable difference’ quantification strategies. Meas. Sci. Technol. 2007, 19, 015507.

- Sun, Y.; Li, X.; Xiao, J. A cascaded Mura defect detection method based on mean shift and level set algorithm for active-matrix OLED display panel. J. Soc. Inf. Disp. 2019, 27, 13–20.

- Sun, Y.; Xiao, J. A Region-Scalable Fitting Model Algorithm Combining Gray Level Difference of Sub-image for AMOLED Defect Detection. In Proceedings of the 2018 IEEE International Conference on Computer and Communication Engineering Technology (CCET), Beijing, China, 18–20 August 2018.

- Lin, G.; Kong, L.; Liu, T.; Qiu, L.; Chen, X. An antagonistic training algorithm for TFT-LCD module mura defect detection. Signal Process. Image Commun. 2022, 107, 116791.

- Zhu, Y.; Ding, R.; Huang, W.; Wei, P.; Yang, G.; Wang, Y. HMFCA-Net: Hierarchical multi-frequency based Channel attention net for mobile phone surface defect detection. Pattern Recognit. Lett. 2022, 153, 118–125.

- Zhu, H.; Huang, J.; Liu, H.; Zhou, Q.; Zhu, J.; Li, B. Deep-Learning-Enabled Automatic Optical Inspection for Module-Level Defects in LCD. IEEE Internet Things J. 2021, 9, 1122–1135.

- Chang, Y.-C.; Chang, K.-H.; Meng, H.-M.; Chiu, H.-C. A Novel Multicategory Defect Detection Method Based on the Convolutional Neural Network Method for TFT-LCD Panels. Math. Probl. Eng. 2022, 2022, 6505372.

- Ming, W.; Cao, C.; Zhang, G.; Zhang, H.; Zhang, F.; Jiang, Z.; Yuan, J. Application of Convolutional Neural Network in Defect Detection of 3C Products. IEEE Access 2021, 9, 135657–135674.

- Li, Z.; Li, J.; Dai, W. A two-stage multiscale residual attention network for light guide plate defect detection. IEEE Access 2020, 9, 2780–2792.

- Pan, J.; Zeng, D.; Tan, Q.; Wu, Z.; Ren, Z. EU-Net: A novel semantic segmentation architecture for surface defect detection of mobile phone screens. IET Image Process. 2022, 2022, 6505372.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Jin, S.; Ji, C.; Yan, C.; Xing, J. TFT-LCD mura defect detection using DCT and the dual-γ piecewise exponential transform. Precis. Eng. 2018, 54, 371–378.

- Cui, Y.; Wang, S.; Wu, H.; Xiong, B.; Pan, Y. Liquid crystal display defects in multiple backgrounds with visual real-time detection. J. Soc. Inf. Disp. 2021, 29, 547–560.

- Hou, X.; Zhang, L. Saliency detection: A spectral residual approach. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007.

- Achanta, R.; Hemami, S.; Estrada, F.; Susstrunk, S. Frequency-tuned salient region detection. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009.

- Guo, C.; Ma, Q.; Zhang, L. Spatio-temporal saliency detection using phase spectrum of quaternion Fourier transform. In Proceedings of the 2008 IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008.

- Li, J.; Levine, M.D.; An, X.; Xu, X.; He, H. Visual saliency based on scale-space analysis in the frequency domain. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 996–1010.

- Liu, G.; Zheng, X. Fabric defect detection based on information entropy and frequency domain saliency. Vis. Comput. 2021, 37, 515–528.

- Zhang, F.; Li, Y.; Zeng, Q.; Lu, L. Application of printing defects detection based on visual saliency. In Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2021.

- Cheng, M.-M.; Mitra, N.J.; Huang, X.; Torr, P.H.; Hu, S.-M. Global contrast based salient region detection. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 569–582.

- Zhai, Y.; Shah, M. Visual attention detection in video sequences using spatiotemporal cues. In Proceedings of the 14th ACM International Conference on Multimedia, Santa Barbara, CA, USA, 23–27 October 2006.

- Guan, S.; Shi, H. Fabric defect detection based on the saliency map construction of target-driven feature. J. Text. Inst. 2018, 109, 1133–1142.

- Itti, L.; Koch, C.; Niebur, E. A model of saliency-based visual attention for rapid scene analysis. IEEE Trans. Pattern Anal. Mach. Intell. 1998, 20, 1254–1259.

- Shangwang, L.; Ming, L.; Wentao, M.; Guoqi, L. Improved HFT model for saliency detection. Comput. Eng. Des. 2015, 36, 2167–2173.

- Engel, S.; Zhang, X.; Wandell, B. Colour tuning in human visual cortex measured with functional magnetic resonance imaging. Nature 1997, 388, 68–71.

- Goferman, S.; Zelnik-Manor, L.; Tal, A. Context-Aware Saliency Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 1915–1926.

- Yang, C.; Zhang, L.; Lu, H. Graph-regularized saliency detection with convex-hull-based center prior. IEEE Signal Process. Lett. 2013, 20, 637–640.

- Bylinskii, Z.; Judd, T.; Oliva, A.; Torralba, A.; Durand, F. What do different evaluation metrics tell us about saliency models? IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 740–757.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).