Abstract

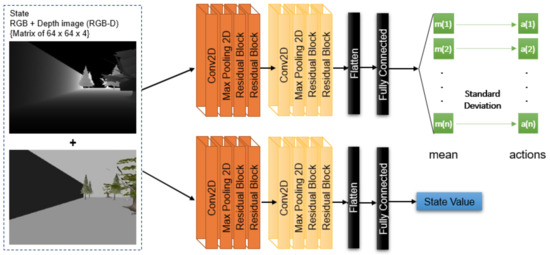

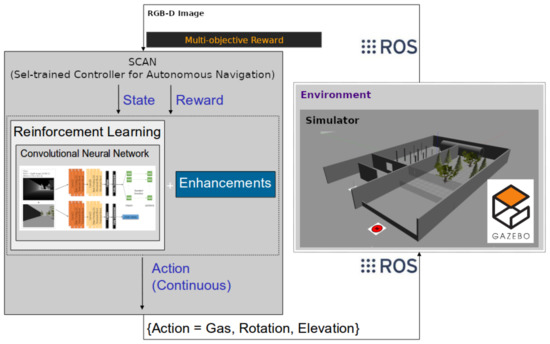

In this paper, we introduce a self-trained controller for autonomous navigation in challenging static and dynamic environments (containing trees, nets, windows, and a pipe, with moving walls and nets in the dynamic case) using deep reinforcement learning trained simultaneously on multiple rewards, that is, in a multi-objective way. Our algorithm learns to generate continuous actions for controlling the UAV: it generates waypoints so that the UAV reaches a goal area (shown by an RGB image) while avoiding static and dynamic obstacles. The algorithm takes the RGB-D image as input and learns to control the UAV in 3-DoF (x, y, and z). We train our robot in environments simulated in Gazebo, and we use the Robot Operating System (ROS) for communication between our algorithm and the simulated environments. Finally, we visualize the trajectories generated by our trained algorithm using several methods and present results that show our algorithm’s capability in learning to maximize the defined multi-objective reward.

1. Introduction

By reflecting on nature, one might see a variety of intelligent animals, each with some degree of intelligence, where all of them learn by experience to convert their potential capabilities into some skill. This fact inspired the machine learning community to devise and develop machine learning approaches inspired by nature, within the field of bio-inspired artificial intelligence [1]. Further, it motivated us to use the idea of learning by reinforcement signal in this paper. Reinforcement signals (such as positive rewards and punishments) are some of the primary sources of learning in intelligent creatures. This type of learning is implemented and applied by the reinforcement learning family of algorithms in machine learning.

While learning can be considered a type of intelligence, it can only manifest itself when applied to an agent or when used to control one. An agent could be any actual or simulated machine, robot, or application with sensors and actuators. Among the several categories of robots, Unmanned Aerial Vehicles (UAVs) are among the most critical agents to be controlled because they can fly, which means they can reach locations and positions that are not accessible to Unmanned Ground Vehicles (UGVs) and Unmanned Surface Vehicles (USVs). One of the essential considerations in controlling a UAV is its navigation and control algorithm. Several kinds of methods are used for UAV navigation: some depend on an external guiding system (such as GPS), and others are based on rule-based controllers, which are unlike a living creature.

In this paper, we introduce a self-trained controller for autonomous navigation (SCAN) (Algorithm 1) in static and dynamic challenging environments using a deep reinforcement learning-based algorithm trained by Depth and RGB images as input, and multiple rewards. We control the UAV using continuous actions (a sample generated trajectory by our algorithm is shown in Figure 1) and train our RL algorithm in a multi-objective way in order to be able to reach a defined goal area while learning to avoid static and dynamic obstacles. We train our robot in a simulated environment using Gazebo sim., and for the communication between the algorithm and simulated agent, we use Robot Operating System (ROS).

In the rest of this text, we first discuss the related literature and then explain our main work with a detailed explanation for each part of our proposed algorithm, such as enhancements, neural networks, obstacle detection method, reward calculation, and trajectory visualization (In this research, to visualize the 3D trajectory in a 3D environment, we used 3D-SLAM. However, SLAM is used only for visualization purposes, and it is not used in any way for training, playing, or control of the UAV). In the next step, we present our results and discuss some crucial aspects of our work before the conclusion. Before starting our literature review, we want to emphasize the contributions of our paper here:

- First, we proposed a new RL-based self-learning algorithm for controlling a multi-rotor drone in 3-DoF (x, y, and z) in highly challenging environments;

- Second, our proposed algorithm simultaneously trains the policy and value networks using several rewards to create a multi-objective agent capable of learning to traverse through an environment to reach a goal location while avoiding the obstacles;

- Third, we verified our algorithm using two highly challenging environments, including static and dynamic obstacles (such as moving walls and nets);

- Fourth, our algorithm is an end-to-end self-learning controller in which the RGB-D image is the input to the policy network, and three-dimensional continuous action (gas (moving forward), rotation (to left or right), and elevation) are the output of our algorithm;

- Fifth, we used an onboard method (that is, defined within our algorithm, not received from external sources such as simulator) to calculate the reward related to obstacle avoidance and prediction of collisions, which makes our method capable of further learning after deployment on a real UAV.
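The three-dimensional action named in the fourth contribution (gas, rotation, and elevation) can be pictured with a small example. This is a minimal sketch, assuming a normalized action that is applied in the UAV's heading frame, with the turn applied before the forward step; the names `Pose` and `apply_action` and the step sizes `max_step`, `max_turn`, and `max_climb` are hypothetical and are not taken from the paper.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float
    y: float
    z: float
    yaw: float  # heading in radians, 0 means facing along +x


def clip(value, low, high):
    """Clamp value to the closed interval [low, high]."""
    return min(max(value, low), high)


def apply_action(pose, gas, rotation, elevation,
                 max_step=0.5, max_turn=math.pi / 6, max_climb=0.3):
    """Map a normalized action to the next waypoint (illustrative only).

    gas is clipped to [0, 1]; rotation and elevation are clipped to [-1, 1].
    The scaling constants are assumed values chosen for this example.
    """
    gas = clip(gas, 0.0, 1.0)
    rotation = clip(rotation, -1.0, 1.0)
    elevation = clip(elevation, -1.0, 1.0)

    yaw = pose.yaw + rotation * max_turn  # turn first, then move forward
    return Pose(
        x=pose.x + gas * max_step * math.cos(yaw),
        y=pose.y + gas * max_step * math.sin(yaw),
        z=pose.z + elevation * max_climb,
        yaw=yaw,
    )
```

Under these assumptions, a high-level controller would hand each returned `Pose` to the low-level flight controller as the next waypoint.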

2. Related Work

In this section, we first review works that used a rule-based controller such as PID, SMD, MPC, or LQR for control of the robot (UAV), then focus on works that used deep learning, or deep reinforcement learning, or reinforcement learning for low-level and high-level control of the UAV.

Looking at the literature, there are a couple of approaches that used rule-based methods for autonomous navigation. For example, the authors of [2] proposed a path planning algorithm using an ant colony clustering algorithm. A method for generating a navigation path that considers energy efficiency is proposed in [3]. In [4], Singh et al. proposed an approach for obstacle avoidance in challenging environments. Mina et al. [5] proposed an approach for navigation and guidance capable of multi-robot control and obstacle avoidance. Another approach for swarm robot control in terms of guidance and navigation is proposed in [6].

In addition, reinforcement learning and deep RL are used in several works to create intelligent autonomous robots by acting as the low-level and high-level flight controllers in UAVs. In the high-level case, the controller generates the waypoints or trajectories and passes them to the low-level controller; in the low-level case, the controller receives the waypoints or trajectories and executes them on the quadcopter, either by sending speeds to the motors or by generating the proper forces. In terms of low-level flight controllers, Ng et al. [7] captured the flight data of a helicopter and used it to create a transition model for training low-level flight controllers for helicopters. Linear quadratic regulator (LQR) and differential dynamic programming (DDP) were used in [8] to develop an RL-based flight controller. Hwangbo et al. [9] proposed a low-level flight controller capable of generating motor speeds using reinforcement learning and a proportional-derivative (PD) controller. Molchanov et al. [10] proposed an approach focused on developing a low-level RL-based flight controller that automatically learns to reduce the gap between the simulation and actual quadcopters. The authors of [11] developed a method combining a PD controller and a reinforcement learning-based controller for controlling a quadcopter in faulty conditions, and used LSTM networks to detect the fault in different conditions.

In terms of high-level control using reinforcement learning, [12] proposed a method to control the drone using Deep RL for exploration and obstacle avoidance. In [13] by Zhang et al., guided policy search (GPS) is used after training in a supervised learning approach using data provided by the model predictive controller (MPC) and Vicon system for accurate position and attitude data. Liu et al. [14] used virtual potential field to develop a multi-step reinforcement learning algorithm. In [15], deep RL is used for obstacle avoidance in challenging environments. Long et al. [16] used a deep neural network in an end-to-end approach for efficient distributed multi-agent navigation, and their algorithm is capable of obstacle avoidance during navigation. In [17], deep RL is used to create a controller capable of path following while interacting with the environment. Zhou et al. [18] analyzed deep RL in challenging environments for formation path planning and obstacle avoidance. Liu et al. [19] used RL and probability map to create a search algorithm and improve the detection ability of the algorithm. Finally, deep RL is used for local motion planning in an unknown environment in [20], and for trajectory tracking and altitude control in [21].

In contrast to many rule-based and traditional methods that perform poorly in complex and dynamic environments and high-dimensional state spaces, deep reinforcement learning algorithms can control the system by learning to generate the best action given the defined reward, using high-dimensional sensor data as their state. Further, RL-based algorithms have the advantage of learning autonomously from their own experience, making them highly flexible and capable of learning to control, a feature that is unavailable or limited in rule-based control algorithms.

In a nutshell, our work differs from rule-based algorithms and methods mainly because our algorithm learns to control, whereas in a rule-based control algorithm everything is designed and calculated beforehand. Further, it differs from a number of Deep Learning and Deep RL works in that it learns from a high-dimensional state space to control the UAV by generating waypoints along the X, Y, and Z axes. Many of the works reviewed in the literature either use only two dimensions for the control or do not use the high-dimensional state space of RGB-D as we do. For example, the authors of [15] focused on obstacle avoidance from an RGB image, while our algorithm addresses both obstacle avoidance and reaching the goal location from an RGB-D image. Moreover, while some works such as [17] focused on following a path, our algorithm generates a path. Further, compared to works such as [18], where a Deep Q-Network capable of generating discrete actions is used, our work uses a policy gradient-based reinforcement learning algorithm capable of generating continuous actions. Compared to another similar work [20], we use RGB-D data as the input to our Deep RL and generate 3D actions, whereas they use 2D lidar data and generate 2D actions. Further, our goal is an image, whereas their goal is a point fed to the algorithm. In addition, our method calculates the distance to obstacles and predicts collisions internally, making it possible for our algorithm to continue training even after deployment on an actual drone.

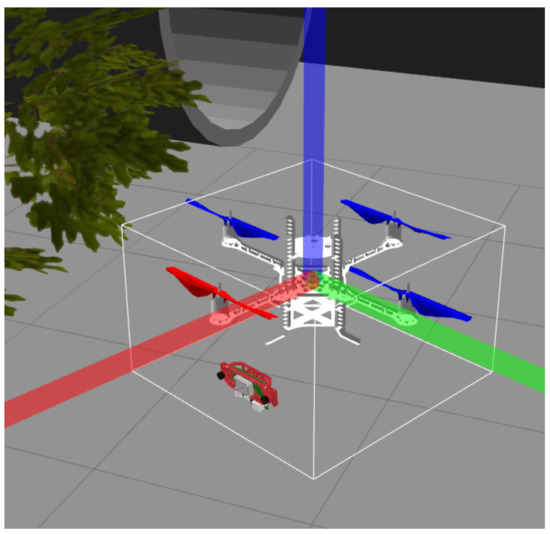

4. Simulation Environment

The Gazebo simulator [28] is used in this work for training and evaluation of our models. We use the package provided for the AscTec Pelican. The benefit of using Gazebo with the provided package is that we can easily connect it to our algorithm using ROS (Robot Operating System), which gives us the advantage of mobility; that is, we can seamlessly move our algorithm to other platforms. We send the actions through our wrapper environment to the Gazebo simulator via the topics provided by the RotorS package [29]. Further, we receive the RGB-D data from the provided topics as well.

Furthermore, we gather the RGB-D data and action results, plus the accumulated reward of the agent throughout the training process, to support our hypothesis in the results section.

4.1. Static and Dynamic Environments

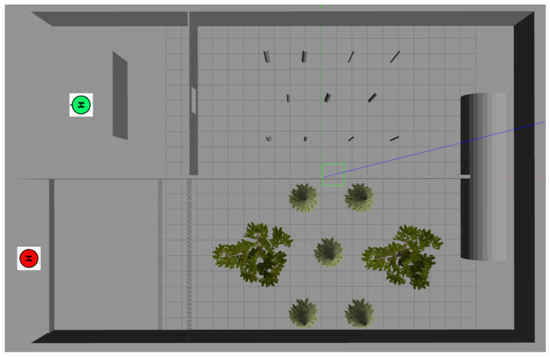

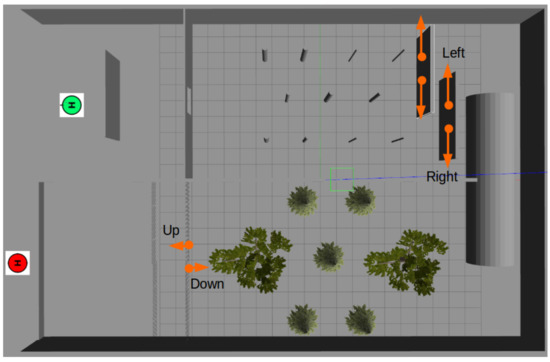

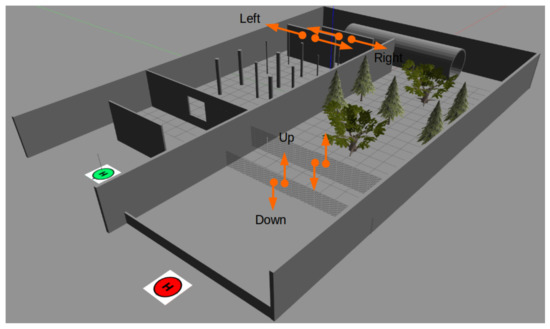

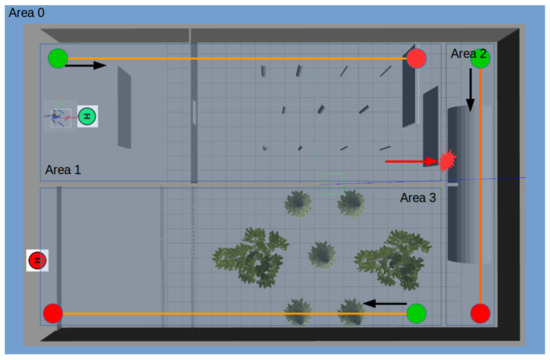

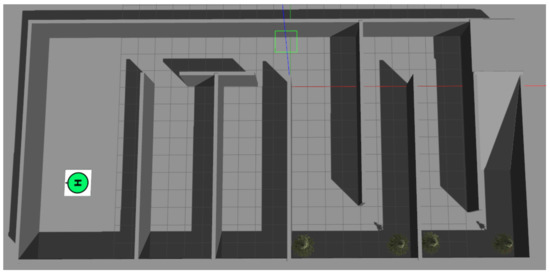

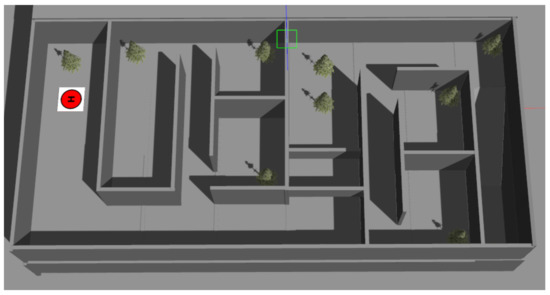

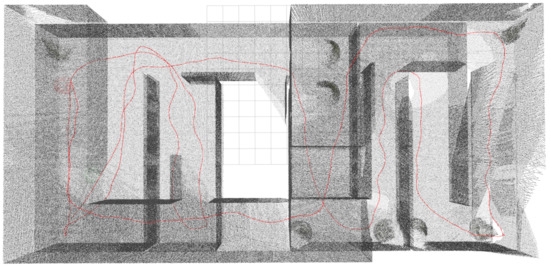

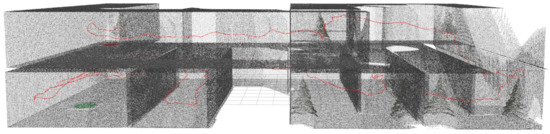

In this work, as can be seen in Figure 5 and Figure 6, we defined a static environment, and as can be seen in Figure 7 and Figure 8, we added moving parts to the previous environment (two moving walls and moving nets) to create a dynamic environment. The dynamic obstacles move in one direction until they reach their limit: the opposite wall in the case of the walls, and the ground or the maximum height (3.0 m) in the case of the nets. Further, we set them to move slightly slower than the UAV.

Figure 5.

Training environment (3D view).

Figure 6.

Training environment (top view).

Figure 7.

Dynamic training environment (top view).

Figure 8.

Dynamic training environment (3D view).

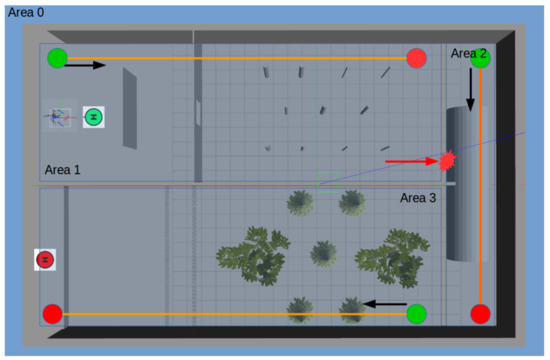

We used these environments to train our agent. Further, as can be seen in Figure 9 and Figure 10, we divided the environment into three virtual areas, which are used for tracing the agent and for calculating the several rewards used in training.

Figure 9.

This diagram shows the top view of the environment and the sections of the environment used for generating reward and resetting the UAV.

Figure 10.

This diagram shows the top view of the dynamic environment and the sections of the environment.

4.2. A New Unknown Environment

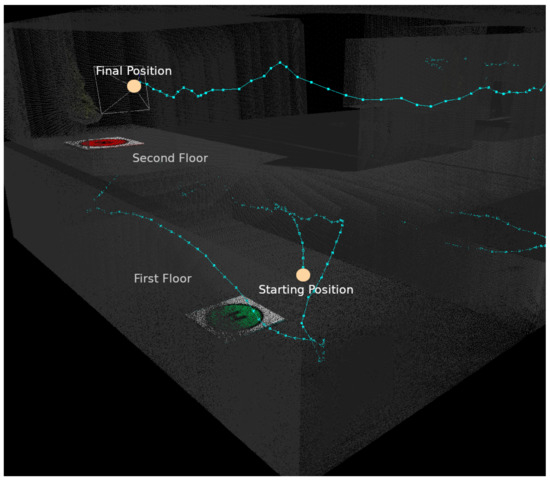

After training our agent in the static and dynamic environments described in the previous section, we test it without any further training in a new, unknown environment. This environment consists of many rooms and has two floors, and the only way to move between the two floors is a staircase.

4.3. Navigation Visualization

Considering that our algorithm is a high-level controller that generates the proper sequence of the waypoints to control the drone in an environment with obstacles, the main point would be the trajectory of the waypoints. As a result, we implement some methods to visualize the sequence (trajectory) of waypoints generated by our algorithm in the top-view map of the environments and the XZ, XY, and YZ planes.
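The plane views mentioned above amount to dropping one coordinate from each waypoint. The following is a minimal sketch of that projection, assuming the trajectory is available as a list of (x, y, z) tuples; the function names `project_trajectory` and `path_length` are hypothetical helpers introduced for illustration.

```python
import math


def project_trajectory(waypoints):
    """Split (x, y, z) waypoints into their XY, XZ, and YZ projections."""
    return {
        "XY": [(x, y) for x, y, _ in waypoints],
        "XZ": [(x, z) for x, _, z in waypoints],
        "YZ": [(y, z) for _, y, z in waypoints],
    }


def path_length(points):
    """Sum of straight-line distances between consecutive points."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))
```

Each projected list can then be passed to any 2D plotting routine, and `path_length` gives a simple number for comparing trajectories in a given plane.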

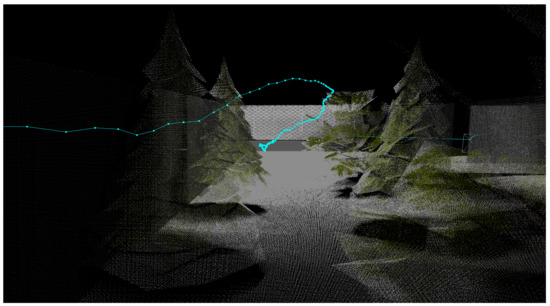

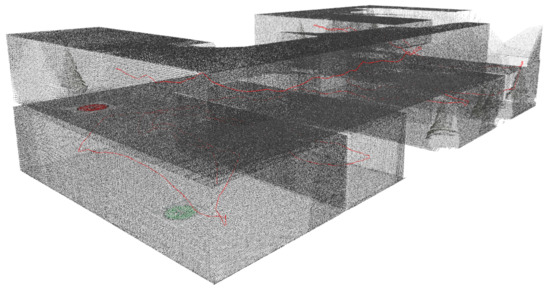

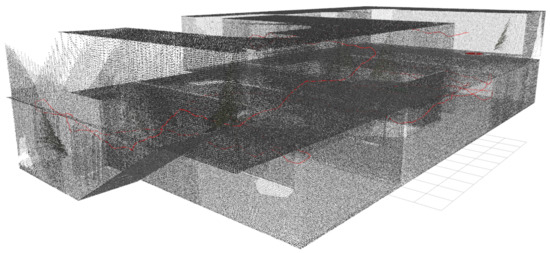

4.3.1. SLAM for Visualizing the Trajectory in 3D Map

To clearly illustrate the trajectory of the UAV controlled by our proposed algorithm, and considering that our algorithm controls the quadcopter in three dimensions, the best solution from our point of view was to use SLAM. SLAM localizes the agent in an environment and maps the environment at the same time; as a result, we can see the trajectory of the agent's movement in the environment. The images generated by the SLAM we used in this paper are focused on localization and not on mapping. In other words, the map seen in our SLAM images is the result of moving along the shown trajectory from its starting point to its ending point; that is, we did not focus on the mapping part to generate a perfect map. Finally, we again emphasize that SLAM is used only for visualization purposes, and the result of the SLAM algorithm is not used in any way in the training, playing, or control of our algorithm and the agent shown in this paper. We used a SLAM package called RTAB-Map [30] and set it to use the RGB-D image and odometry data coming from the simulated agent in Gazebo to generate the mapping and localization information.

5. Experimental Result

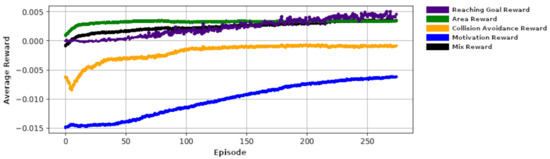

5.1. Learning Diagrams

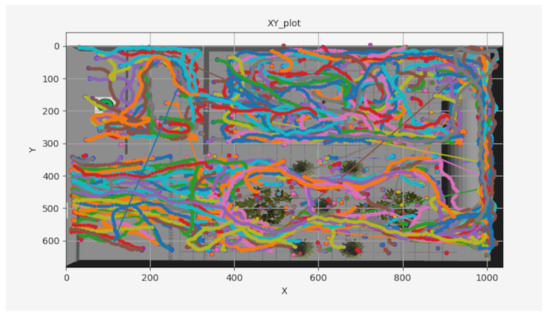

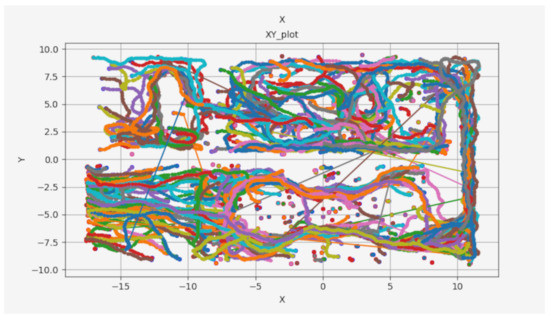

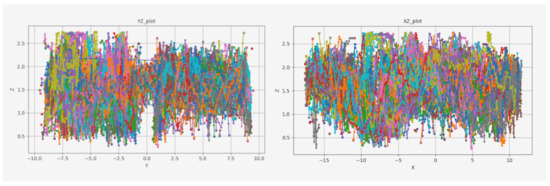

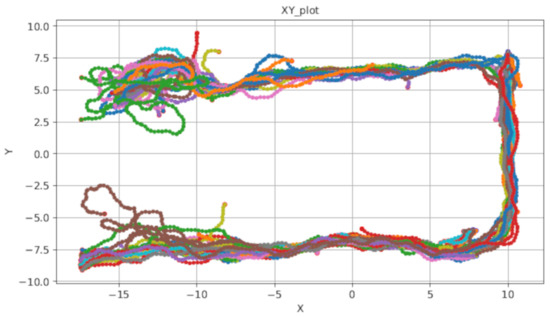

Comparing our navigation results in Figure 11, Figure 12 and Figure 13 (untrained algorithm) with those in Figure 14, Figure 15 and Figure 16 (trained algorithm) shows that our algorithm learns the shortest path for reaching the goal position while avoiding the obstacles. The figures also show the capability of our algorithm to control the UAV in the Z dimension. For example, the UAV is held at a height of about 1.5 m in the pipe area to pass through it, whereas our algorithm raises and lowers the UAV several times in the area with several trees. Further, to pass the nets (while they are moving up and down), the UAV must be capable of accurate control over the Z dimension. Finally, Table 1 shows the Success Rate of our trained algorithm, where a success means reaching the goal location when starting from the beginning of the environment.
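As a small illustration of how a success rate of this kind can be tallied, the sketch below counts episode outcomes and converts them to fractions. It assumes each evaluation episode is logged with a single outcome label; the function name `episode_rates` and the labels `"goal"` and `"collision"` are hypothetical, and the list in the usage note is made-up example data, not a result from the paper.

```python
from collections import Counter


def episode_rates(outcomes):
    """Return the fraction of episodes that ended with each outcome label."""
    if not outcomes:
        raise ValueError("need at least one episode")
    counts = Counter(outcomes)
    total = len(outcomes)
    return {label: count / total for label, count in counts.items()}
```

For instance, `episode_rates(["goal", "goal", "collision", "goal"])` assigns 0.75 to `"goal"` and 0.25 to `"collision"`.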

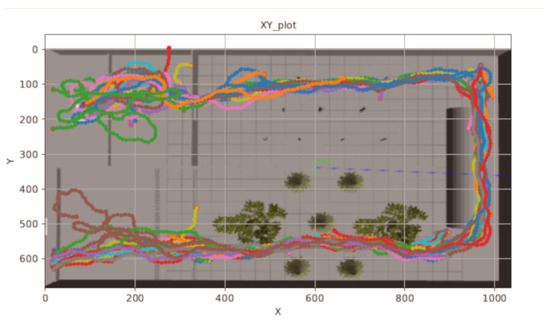

Figure 11.

This diagram illustrates the navigation of UAV using untrained SCAN in the challenging environment. The top left image shows the top-view navigation trajectory of UAV projected on the top-view of the environment map.

Figure 12.

This diagram illustrates the navigation of UAV using untrained SCAN in the challenging environment (XY plane).

Figure 13.

These diagrams illustrate the navigation of UAV using untrained SCAN in the challenging environment. The left image shows the trajectory of UAV navigation in the YZ plane. The right image shows the trajectory of UAV navigation in the XZ plane.

Figure 14.

This diagram illustrates the navigation of UAV using trained SCAN in the challenging environment. The top left image shows the top-view navigation trajectory of UAV projected on the top view of the environment map.

Figure 15.

This diagram illustrates the navigation of UAV using trained SCAN in the challenging environment (XY plane).

Figure 16.

These diagrams illustrate the navigation of UAV using trained SCAN in the challenging environment. The left image shows the trajectory of UAV navigation in the YZ plane. The right image shows the trajectory of UAV navigation in the XZ plane.

Table 1.

Success and Collision Rates.

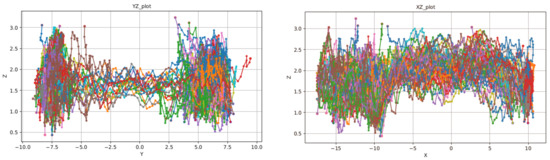

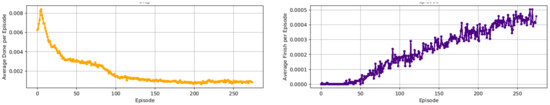

Besides that, as our results in Figure 17 and Figure 18 show, our algorithm can learn to maximize the several defined rewards while also avoiding the obstacles. Further, it can learn to reach the goal position, which is marked by a JPG image (red H) at the end of the environment.

Figure 17.

This figure shows that our algorithm is able to learn to maximize several rewards (multi-objective) in the first 250 episodes.

Figure 18.

This figure shows the number of collisions and the number of times the agent reaches the goal position; the agent decreases the former and increases the latter over time.

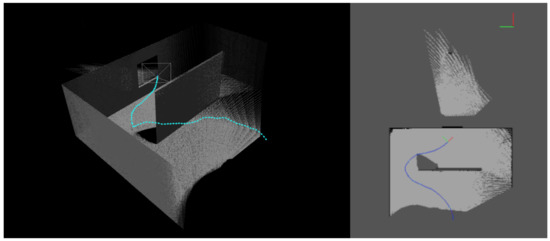

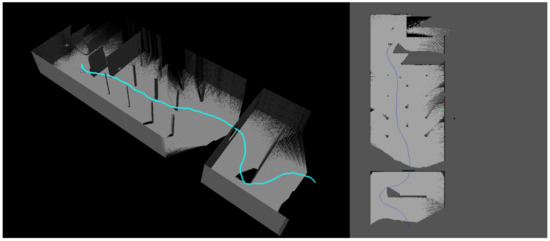

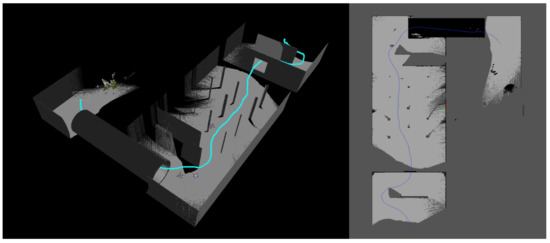

5.2. Trajectory of the UAV Controlled by SCAN

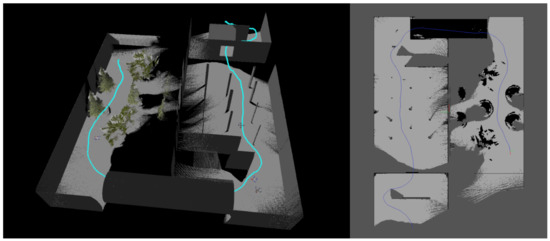

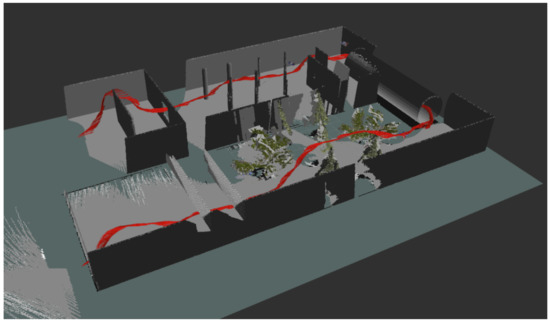

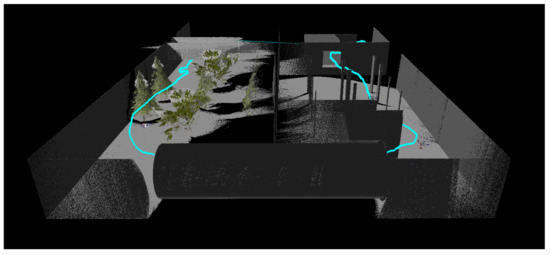

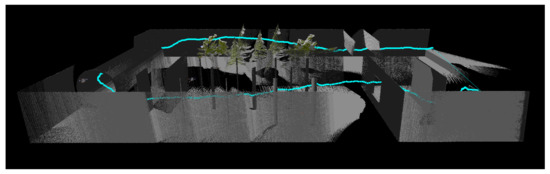

Figure 19 shows the trajectory of the UAV at the beginning of Area 1. Figure 20, Figure 21 and Figure 22 clearly show that the SCAN algorithm can learn to control the trajectory of the UAV to pass through Areas 1, 2, and 3 and maximize the defined rewards while avoiding obstacles. Further, Figure 23 and Figure 24 illustrate that the agent has learned to reach the final goal at the end of the challenging environment. Figure 25 shows the capability of the algorithm in controlling the UAV on the Z-axis, and Figure 26 shows that the algorithm is able to control the UAV inside the narrow and curved area of a pipe.

Figure 19.

Using SLAM for visualizing the trajectory of UAV controlled by the SCAN algorithm (Beginning of Area 1). In this paper, SLAM is used only for visualization purposes.

Figure 20.

Visualization of the trajectory of UAV controlled by the SCAN algorithm (Area 1). The left image is 3D-SLAM and the right image is 2D SLAM.

Figure 21.

Visualization of the trajectory of UAV controlled by the SCAN algorithm (Area 1 and Area 3).

Figure 22.

Visualization of the trajectory of UAV controlled by the SCAN algorithm (Area 1, Area 2, and Area 3).

Figure 23.

Visualization of the trajectory of UAV controlled by the SCAN algorithm (Area 1, Area 2, and Area 3) in rviz, 3D view.

Figure 24.

Visualization of the trajectory of UAV controlled by the SCAN algorithm (Area 1, Area 2, and Area 3) in rviz, top view.

Figure 25.

Visualization of the trajectory of UAV controlled by the SCAN algorithm (Area 1, Area 2, and Area 3), side view.

Figure 26.

Visualization of the trajectory of UAV controlled by the SCAN algorithm (Area 1, Area 2, and Area 3), side view.

6. Discussion

The algorithm we describe in this work is a high-level controller. That is, it calculates the desired waypoints and sends them to the low-level controller of the UAV. As a result, we focus here on the issues related to the high-level controller and assume that if the drone can move from point (a) to point (b) in the simulator, the actual UAV will behave in the same way when given the same command.

As mentioned earlier, our algorithm, as a high-level controller, uses the RGB and depth sensors. Further, we use these data to generate the collision signal and calculate the UAV’s distance from the obstacle facing it. While this method slightly increases the calculation time in each simulation frame, it makes online training possible on an actual UAV. If we used the simulator’s physics engine to detect the obstacles and collisions, we would be unable to detect obstacles on a real drone and use them as new signals for onboard training after deploying the model trained in the simulator.
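To make the idea of an onboard collision signal concrete, the following is a minimal sketch of one way to derive an obstacle distance and a collision flag from a depth image alone. It is not the paper's method: it assumes the depth image is a list of rows of distances in metres, that only a central window of the image matters, and that a fixed threshold decides a predicted collision. The names `obstacle_distance` and `collision_predicted` and the values `window=0.5` and `threshold=0.4` are hypothetical.

```python
import math


def obstacle_distance(depth, window=0.5):
    """Smallest valid depth (metres) inside the central window of the image.

    depth is a list of rows of floats. Non-finite and non-positive readings
    are treated as missing and ignored. Returns math.inf if nothing is valid.
    """
    if not depth or not depth[0]:
        return math.inf
    rows, cols = len(depth), len(depth[0])
    r0 = int(rows * (1 - window) / 2)
    c0 = int(cols * (1 - window) / 2)
    valid = [
        d
        for row in depth[r0:rows - r0]
        for d in row[c0:cols - c0]
        if math.isfinite(d) and d > 0.0
    ]
    return min(valid) if valid else math.inf


def collision_predicted(depth, threshold=0.4):
    """Flag a predicted collision when the nearest obstacle is too close."""
    return obstacle_distance(depth) < threshold
```

Because both functions depend only on sensor data, the same signal would be available on a real drone, which is the property the paragraph above relies on.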

In this paper, because we focused on the capability of the algorithm to be multi-objective and to learn to avoid obstacles while finishing the environments and reaching the goal area, we did not discuss the computational time of our algorithm in detail. However, our algorithm is a model-free Deep RL algorithm, a class that is among the fastest and lightest, considering that going from the RGB-D image to continuous action commands is just a forward pass through the DNN. Further, it does not use localization methods such as SLAM or methods based on point clouds, which are known to be computationally heavy. Finally, our method’s computational load also depends on how many frames per second we need to generate new actions or waypoints, which depends on the UAV application. In this research, we used Python and TensorFlow for creating and running our algorithm and generating the results, on a computer with an i9 CPU and an Nvidia 1080 Ti GPU. This setup takes about 40 ms to generate a new waypoint from the RGB-D image, which corresponds to about 25 frames per second. Nonetheless, at deployment time, the Deep Neural Network weights should be extracted and loaded in C/C++ code, where the computation is much faster, which compensates for the lower computational power of an onboard system (such as an Nvidia TX2 or Nvidia Xavier NX for regular-size drones) in a UAV.

Compared to many famous algorithms for planning or obstacle avoidance, where the RGB-D image should be passed through several steps to be converted to useful data such as trees and graphs, our algorithm is an end-to-end algorithm that receives the raw RGB-D image and directly generates the optimal actions or next waypoint. Further, as mentioned earlier, it learns to generate the optimal action by itself; in other words, our algorithm is an autonomous learner agent.

Finally, regarding the dynamic obstacles, they are moving at a constant speed. For every three moves of the UAV, dynamic obstacles move one step. Every time the UAV hits an obstacle or reaches the goal position, obstacles will be re-positioned in a random position along the direction of movement.
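The obstacle schedule described above can be sketched as a small class. This is an illustration under stated assumptions rather than the simulation code used in this work: the obstacle is modeled along a single axis, it reverses direction on reaching a limit, and the class name `DynamicObstacle`, the step size, and the method names are hypothetical.

```python
import random


class DynamicObstacle:
    """A moving obstacle along one axis (illustrative sketch)."""

    def __init__(self, low, high, step=0.1, period=3, rng=None):
        self.low, self.high = low, high
        self.step = step          # distance moved per obstacle step (assumed)
        self.period = period      # UAV moves per obstacle step
        self.rng = rng or random.Random()
        self.direction = 1
        self.reset()

    def reset(self):
        """Re-position randomly along the axis after a collision or a goal."""
        self.position = self.rng.uniform(self.low, self.high)
        self.uav_moves = 0

    def on_uav_move(self):
        """Advance one step for every `period` UAV moves; reverse at limits."""
        self.uav_moves += 1
        if self.uav_moves % self.period != 0:
            return self.position
        nxt = self.position + self.direction * self.step
        if nxt > self.high or nxt < self.low:
            self.direction = -self.direction
            nxt = self.position + self.direction * self.step
        self.position = min(max(nxt, self.low), self.high)
        return self.position
```

A net that moves between the ground and the 3.0 m maximum height could be created as `DynamicObstacle(0.0, 3.0)`, with `on_uav_move()` called once per UAV move and `reset()` called whenever the UAV collides or reaches the goal.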

6.1. UAV Trajectory in the Unknown Environment

The second, unknown environment consists of many rooms and trees and comprises two floors, where the only way to reach the second floor is through the staircase at the end of the first floor (Figure 27 and Figure 28). In the trajectory illustrated in Figure 29, Figure 30, Figure 31, Figure 32 and Figure 33, the UAV visits one of the rooms on the first floor, returns to the starting location, continues through the corridors to the staircase, climbs the staircase, moves through the corridors, passes through the trees, passes an obstacle similar to the nets, and flies to the target location. To explain the behavior of the UAV in the unknown environment, we believe the model trained in the static and dynamic challenging environments learned a couple of things. It learned to pass through the window and pipe, and perhaps this best explains why the UAV prefers to move through the corridors, which have a similar depth image. Further, it learned to ascend and descend when it wanted to pass the static and dynamic nets, which explains why it is easy for it to climb the staircase. One question could be why the UAV chooses to go to the first room on the first floor instead of choosing the corridor; we believe this is due to the Gaussian noise we used to generate the final actions, which adds a degree of randomness to the controller-generated actions.
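The effect of Gaussian noise on the final actions can be illustrated with a short example. This is a minimal sketch, assuming zero-mean noise is added independently to each action component and the result is clipped to a normalized range; the function name `noisy_action`, the standard deviation `sigma`, and the bounds are hypothetical and are not values reported in this paper.

```python
import random


def noisy_action(mean_action, sigma=0.1, low=-1.0, high=1.0, rng=None):
    """Add zero-mean Gaussian noise to each action component, then clip."""
    rng = rng or random
    return [
        min(max(a + rng.gauss(0.0, sigma), low), high)
        for a in mean_action
    ]
```

With a nonzero `sigma`, two runs from the same state can produce slightly different actions, which is consistent with the occasional detour discussed above.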

Figure 27.

Unknown environment (top view of first floor). The green H is the starting location, similar to the previous environments.

Figure 28.

Unknown environment (top view of second floor). The red H is the destination location, similar to the previous environments.

Figure 29.

This figure illustrates the trajectory of the UAV from the starting location to the target location in the unknown environment from the starting location side (3D view).

Figure 30.

This figure illustrates the trajectory of the UAV from the starting location to the target location in the unknown environment from the staircase side (3D view).

Figure 31.

This figure illustrates the trajectory of the UAV from the starting location to the target location in the unknown environment (top view).

Figure 32.

This figure illustrates the trajectory of the UAV from the starting location to the target location in the unknown environment (side view).

Figure 33.

This figure illustrates parts of the trajectory of the UAV related to the start and end of the unknown environment.

6.2. Simulation to Real World Gap

The main issue that can present some difficulties for implementing this algorithm (the model trained in the simulator) on an actual UAV (quadcopter) is related to RGB-D camera noise.

To ameliorate this issue, it is possible to: (1) add artificial noise to the simulated camera in Gazebo so that it resembles an actual RGB-D sensor as closely as possible; and (2) train a Variational Auto-encoder (VAE) network that converts the simulated data to realistic data before they are used for training the SCAN algorithm.
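Option (1) can be sketched outside the simulator as a post-processing step on the depth image. The noise model below is an assumption made for illustration, not a calibrated model of any particular sensor: Gaussian noise whose standard deviation grows with the square of the distance, plus random pixel dropout to mimic missing returns. The function name `add_depth_noise` and the parameters `sigma_rel` and `dropout` are hypothetical.

```python
import random


def add_depth_noise(depth, sigma_rel=0.01, dropout=0.02, rng=None):
    """Return a noisy copy of a depth image given as rows of metres.

    Each reading receives Gaussian noise with standard deviation
    sigma_rel * d**2 (assumed model), and a fraction `dropout` of the
    pixels is set to 0.0 to imitate missing depth returns.
    """
    rng = rng or random.Random()
    noisy = []
    for row in depth:
        out = []
        for d in row:
            if rng.random() < dropout:
                out.append(0.0)  # simulated missing reading
            else:
                out.append(max(0.0, d + rng.gauss(0.0, sigma_rel * d * d)))
        noisy.append(out)
    return noisy
```

Training on images passed through a function of this kind is one way to expose the policy to imperfect depth data before moving to a real sensor.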

7. Conclusions

In this paper, we introduced a self-trained controller for autonomous navigation (SCAN) in challenging static and dynamic environments (containing trees, nets, windows, and a pipe, with moving walls and nets in the dynamic case) using deep reinforcement learning trained on depth and RGB images. We trained our algorithm in the Gazebo simulator in dynamic and static environments, and our results showed that the algorithm is capable of learning an optimal policy for high-level control of a UAV quadcopter. In this work, we trained our UAV using several rewards, including the Obstacle Avoidance reward, Motivation reward, Reaching Goal reward, and Area reward, in a multi-objective fashion with two primary purposes: (1) avoiding the obstacles (static and dynamic); and (2) learning to reach the desired goal position, that is, where the red sign is shown in the environment. Further, we used an onboard method to calculate the distance to obstacles and predict collisions without using the simulator physics engine.

In this work, we designed and trained our algorithm for controlling the 3 DoF to have a practical algorithm for autonomous navigation of UAVs. Further, we defined our algorithm to use ROS for the communication, which gives the algorithm the advantage of mobility; that is, it can be moved to an actual drone with minimum changes and effort.

Our results indicate that Deep RL is capable of learning to control a UAV and navigate through challenging environments to reach a goal that is shown as a photo and not a single point. Further, it is possible to train the Deep RL algorithm to avoid the obstacles simultaneously in a multi-objective approach. While other algorithms that are not based on machine learning methods are also able to achieve the same goals, many of them are based on localization methods, such as SLAM or point clouds, which are known to be computationally heavier than deep RL.

In future work, it is possible to develop the algorithm further to control multiple agents at the same time, with the agents maintaining a reasonable formation while following the leader.

Author Contributions

Writing—original draft, A.R.D. and D.-J.L.; Writing—review and editing, A.R.D. and D.-J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by Unmanned Vehicles Core Technology Research and Development Program through the National Research Foundation of Korea (NRF), Unmanned Vehicle Advanced Research Center (UVARC) funded by the Ministry of Science and ICT, the Republic of Korea (2020M3C1C1A01082375) (2020M3C1C1A02084772), and also was supported by DNA+Drone Technology Development Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Science and ICT (No. NRF-2020M3C1C2A01080819), and also was supported by the Technology Innovation Program (or Industrial Strategic Technology Development Program-Development and demonstration of automatic driving collaboration platform to overcome the dangerous environment of databased commercial special vehicles) (20018904, Development and field test of mobile edge for safety of special purpose vehicles in hazardous environment) funded By the Ministry of Trade, Industry & Energy (MOTIE, Korea).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| DRL | Deep Reinforcement Learning |
| ANN | Artificial Neural Network |
| SLAM | Simultaneous Localization And Mapping |
| ROS | Robot Operating System |
| SCAN | Self-trained Controller for Autonomous Navigation |

References

- Floreano, D.; Mattiussi, C. Bio-Inspired Artificial Intelligence: Theories, Methods, and Technologies; The MIT Press: Cambridge, MA, USA, 2008.

- Liu, X.; Li, Y.; Zhang, J.; Zheng, J.; Yang, C. Self-Adaptive Dynamic Obstacle Avoidance and Path Planning for USV Under Complex Maritime Environment. IEEE Access 2019, 7, 114945–114954.

- Niu, H.; Lu, Y.; Savvaris, A.; Tsourdos, A. An energy-efficient path planning algorithm for unmanned surface vehicles. Ocean Eng. 2018, 161, 308–321.

- Singh, Y.; Sharma, S.K.; Sutton, R.; Hatton, D.; Khan, A. A constrained A* approach towards optimal path planning for an unmanned surface vehicle in a maritime environment containing dynamic obstacles and ocean currents. Ocean Eng. 2018, 169, 187–201.

- Mina, T.; Singh, Y.; Min, B.C. Maneuvering Ability-Based Weighted Potential Field Framework for Multi-USV Navigation, Guidance, and Control. Mar. Technol. Soc. J. 2020, 54, 40–58.

- Singh, Y.; Bibuli, M.; Zereik, E.; Sharma, S.; Khan, A.; Sutton, R. A Novel Double Layered Hybrid Multi-Robot Framework for Guidance and Navigation of Unmanned Surface Vehicles in a Practical Maritime Environment. J. Mar. Sci. Eng. 2020, 8, 624.

- Ng, A.Y.; Coates, A.; Diel, M.; Ganapathi, V.; Schulte, J.; Tse, B.; Berger, E.; Liang, E. Autonomous Inverted Helicopter Flight via Reinforcement Learning. In Proceedings of the Experimental Robotics IX, Singapore, 18–21 June 2006; Ang, M.H., Khatib, O., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; pp. 363–372.

- Abbeel, P.; Coates, A.; Quigley, M.; Ng, A.Y. An Application of Reinforcement Learning to Aerobatic Helicopter Flight. In Proceedings of the 19th International Conference on Neural Information Processing Systems, NIPS’06, Vancouver, BC, Canada, 4–7 December 2006; MIT Press: Cambridge, MA, USA, 2006; pp. 1–8.

- Hwangbo, J.; Sa, I.; Siegwart, R.; Hutter, M. Control of a Quadrotor With Reinforcement Learning. IEEE Robot. Autom. Lett. 2017, 2, 2096–2103.

- Molchanov, A.; Chen, T.; Hönig, W.; Preiss, J.A.; Ayanian, N.; Sukhatme, G.S. Sim-to-(Multi)-Real: Transfer of Low-Level Robust Control Policies to Multiple Quadrotors. arXiv 2019, arXiv:1903.04628.

- Arasanipalai, R.; Agrawal, A.; Ghose, D. Mid-flight Propeller Failure Detection and Control of Propeller-deficient Quadcopter using Reinforcement Learning. arXiv 2020, arXiv:2002.11564.

- Sadeghi, F.; Levine, S. (CAD)²RL: Real Single-Image Flight without a Single Real Image. arXiv 2016, arXiv:1611.04201.

- Zhang, T.; Kahn, G.; Levine, S.; Abbeel, P. Learning Deep Control Policies for Autonomous Aerial Vehicles with MPC-Guided Policy Search. arXiv 2015, arXiv:1509.06791.

- Liu, J.; Qi, W.; Lu, X. Multi-step reinforcement learning algorithm of mobile robot path planning based on virtual potential field. In Proceedings of the International Conference of Pioneering Computer Scientists, Engineers and Educators, Taiyuan, China, 17–20 September 2021; Springer: Berlin/Heidelberg, Germany, 2017; pp. 528–538.

- Wang, W.; Luo, X.; Li, Y.; Xie, S. Unmanned surface vessel obstacle avoidance with prior knowledge-based reward shaping. Concurr. Comput. Pract. Exp. 2021, 33, e6110.

- Long, P.; Liu, W.; Pan, J. Deep-learned collision avoidance policy for distributed multiagent navigation. IEEE Robot. Autom. Lett. 2017, 2, 656–663.

- Woo, J.; Yu, C.; Kim, N. Deep reinforcement learning-based controller for path following of an unmanned surface vehicle. Ocean Eng. 2019, 183, 155–166.

- Zhou, X.; Wu, P.; Zhang, H.; Guo, W.; Liu, Y. Learn to navigate: Cooperative path planning for unmanned surface vehicles using deep reinforcement learning. IEEE Access 2019, 7, 165262–165278.

- Liu, Y.; Peng, Y.; Wang, M.; Xie, J.; Zhou, R. Multi-USV system cooperative underwater target search based on reinforcement learning and probability map. Math. Probl. Eng. 2020, 2020, 7842768.

- Doukhi, O.; Lee, D.J. Deep Reinforcement Learning for End-to-End Local Motion Planning of Autonomous Aerial Robots in Unknown Outdoor Environments: Real-Time Flight Experiments. Sensors 2021, 21, 2534.

- Barzegar, A.; Lee, D.J. Deep Reinforcement Learning-Based Adaptive Controller for Trajectory Tracking and Altitude Control of an Aerial Robot. Appl. Sci. 2022, 12, 4764.

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal Policy Optimization Algorithms. arXiv 2017, arXiv:1707.06347.

- Schulman, J.; Levine, S.; Moritz, P.; Jordan, M.I.; Abbeel, P. Trust Region Policy Optimization. arXiv 2015, arXiv:1502.05477.

- Kakade, S.; Langford, J. Approximately Optimal Approximate Reinforcement Learning. In Proceedings of the Nineteenth International Conference on Machine Learning, ICML’02, Sydney, Australia, 8–12 July 2002; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 2002; pp. 267–274.

- Mnih, V.; Badia, A.P.; Mirza, M.; Graves, A.; Lillicrap, T.; Harley, T.; Silver, D.; Kavukcuoglu, K. Asynchronous Methods for Deep Reinforcement Learning. In Proceedings of the 33rd International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; Balcan, M.F., Weinberger, K.Q., Eds.; PMLR: New York, NY, USA, 2016; Volume 48, pp. 1928–1937.

- Dooraki, A.R.; Lee, D. Multi-rotor Robot Learning to Fly in a Bio-inspired Way Using Reinforcement Learning. In Proceedings of the 2019 16th International Conference on Ubiquitous Robots (UR), Jeju, Korea, 24–27 June 2019; pp. 118–123.

- Dooraki, A.R.; Hooshyar, D.; Yousefi, M. Innovative algorithm for easing VIP’s navigation by avoiding obstacles and finding safe routes. In Proceedings of the 2015 International Conference on Science in Information Technology (ICSITech), Yogyakarta, Indonesia, 27–28 October 2015; pp. 357–362.

- Gazebo Simulator. Available online: http://gazebosim.org/ (accessed on 16 March 2017).

- Furrer, F.; Burri, M.; Achtelik, M.; Siegwart, R. RotorS: A Modular Gazebo MAV Simulator Framework. In Robot Operating System (ROS): The Complete Reference (Volume 1); Springer International Publishing: Cham, Switzerland, 2016; pp. 595–625.

- Labbé, M.; Michaud, F. RTAB-Map as an open-source lidar and visual simultaneous localization and mapping library for large-scale and long-term online operation. J. Field Robot. 2019, 36, 416–446.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).