A Neural Network Structure with Attention Mechanism and Additional Feature Fusion Layer for Tomato Flowering Phase Detection in Pollination Robots

Abstract

1. Introduction

2. Materials and Methods

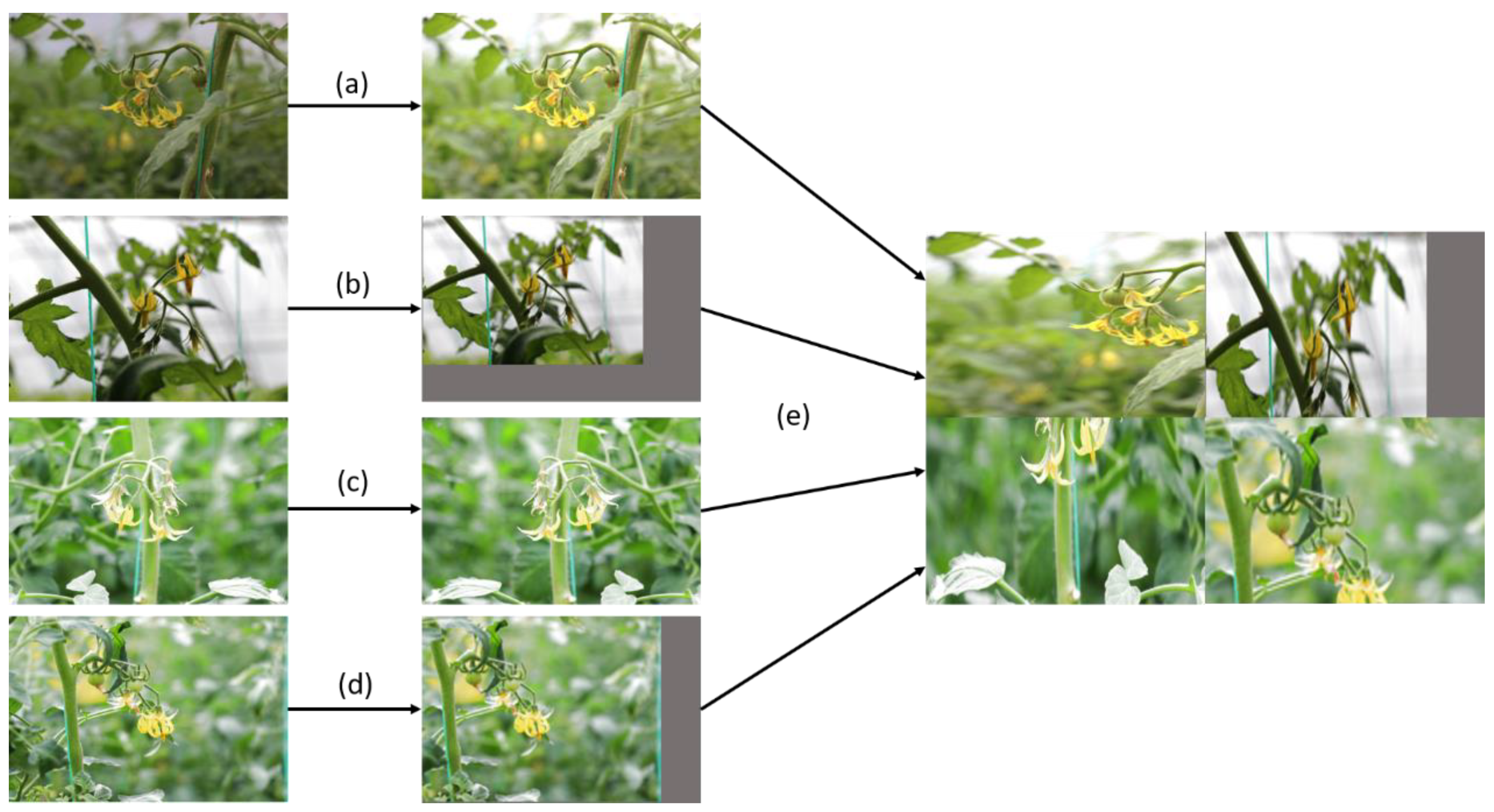

2.1. Data Collection and Augmentation

2.1.1. Data Collection

2.1.2. Data Augmentation

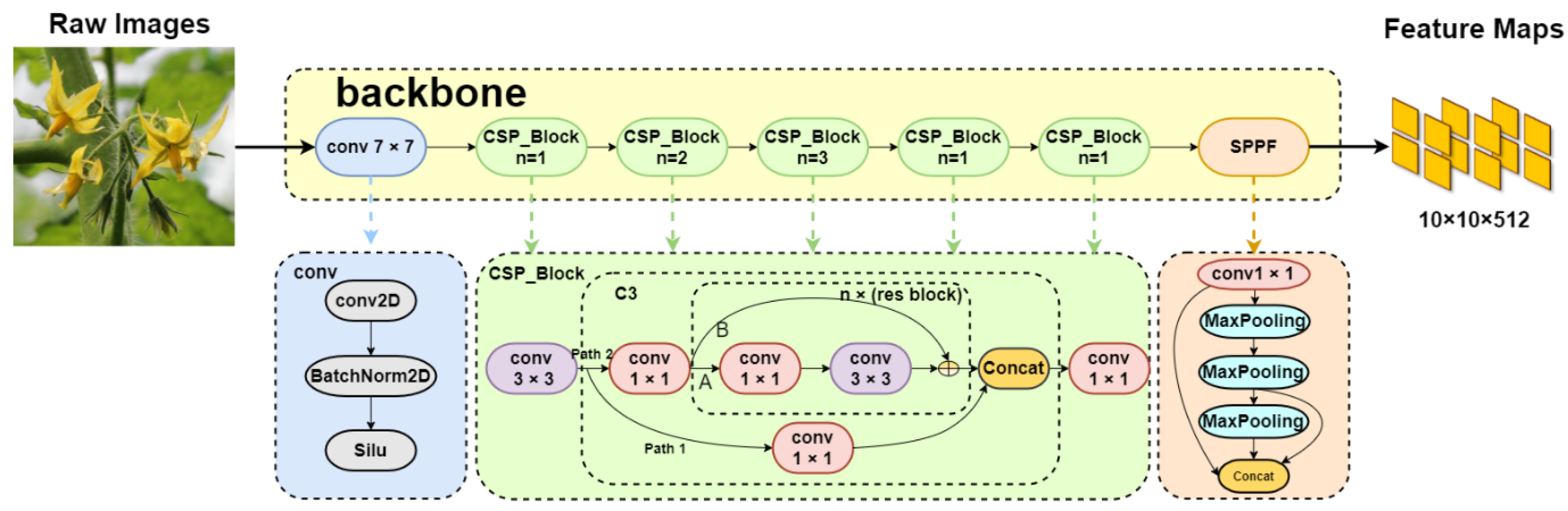

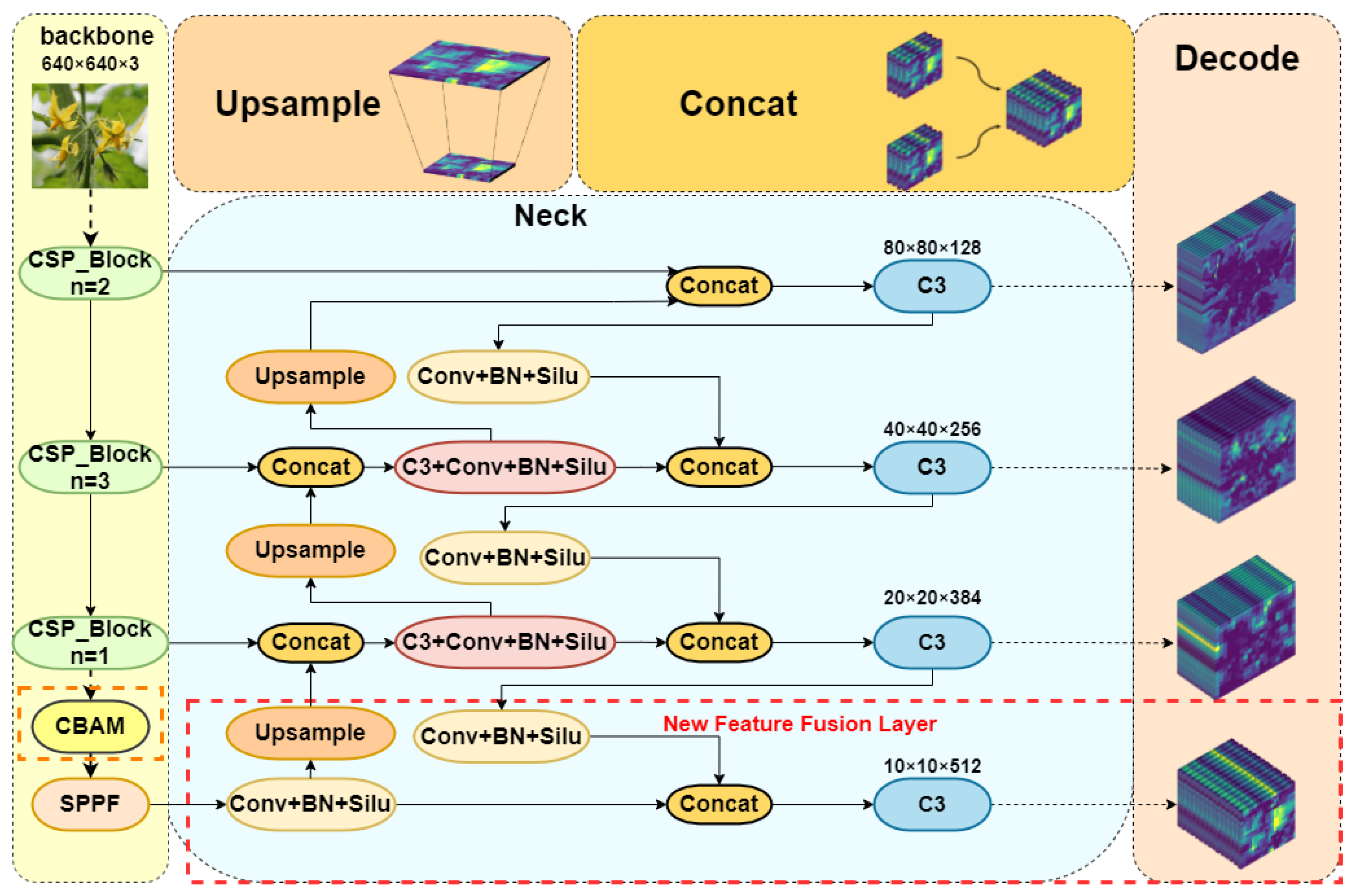

2.2. FlowerYolov5

2.2.1. Backbone of Yolov5

2.2.2. Improvements to the Model

- (1) Design the novel feature fusion layer

- (2)

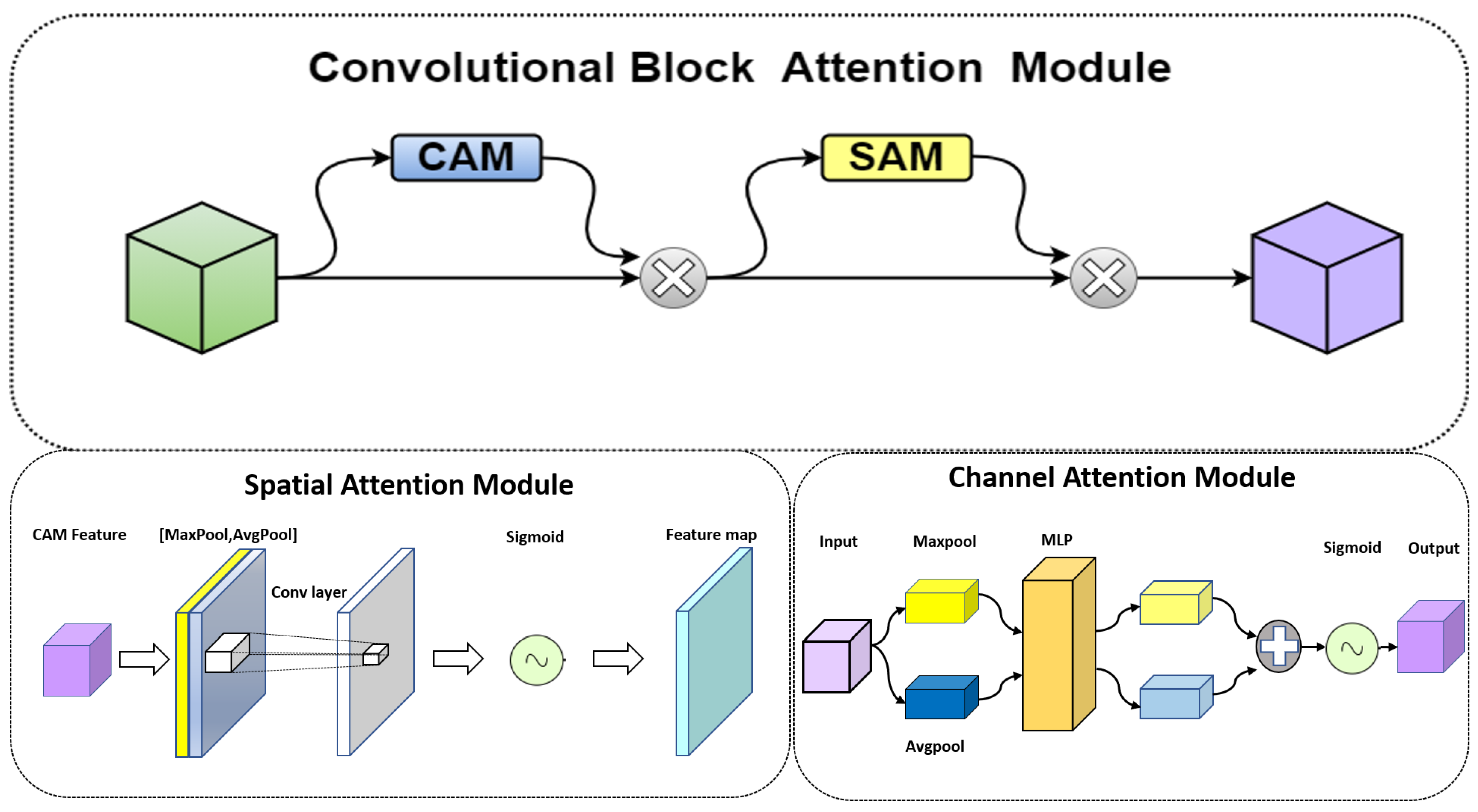

- Insert the Attention Mechanism Module

2.3. Bounding Box Regression and Loss Function
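
As general background for bounding box regression, losses for this task are commonly built on the intersection over union (IoU) between a predicted box and a ground-truth box. The following is a minimal sketch of plain IoU only, assuming boxes given as `(x1, y1, x2, y2)` corner coordinates; the function name `iou` and the box format are illustrative assumptions, and this is not necessarily the loss used by FlowerYolov5.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes.

    Boxes are (x1, y1, x2, y2) with x1 <= x2 and y1 <= y2.
    The format is an assumption made for this illustration.
    """
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlap region (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter

    # Guard against degenerate boxes with zero area.
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    print(iou((0, 0, 2, 2), (1, 1, 3, 3)))
```

A regression loss is then often taken as `1 - iou(pred, target)`, possibly with extra penalty terms, so that better-aligned boxes give a lower loss.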

3. Results

3.1. Evaluation Indicators

3.2. Test Training Platform

3.3. Experimental Comparison

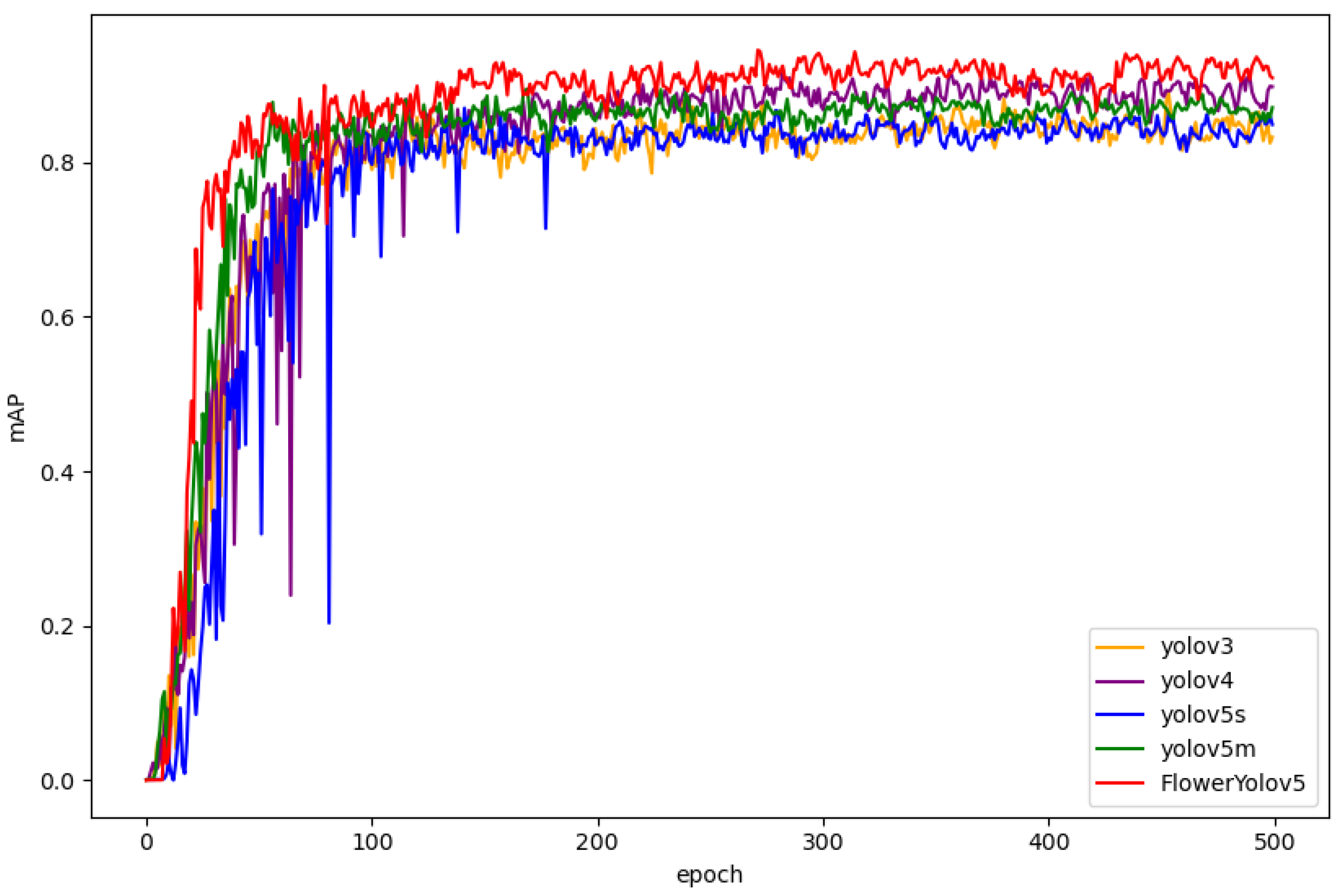

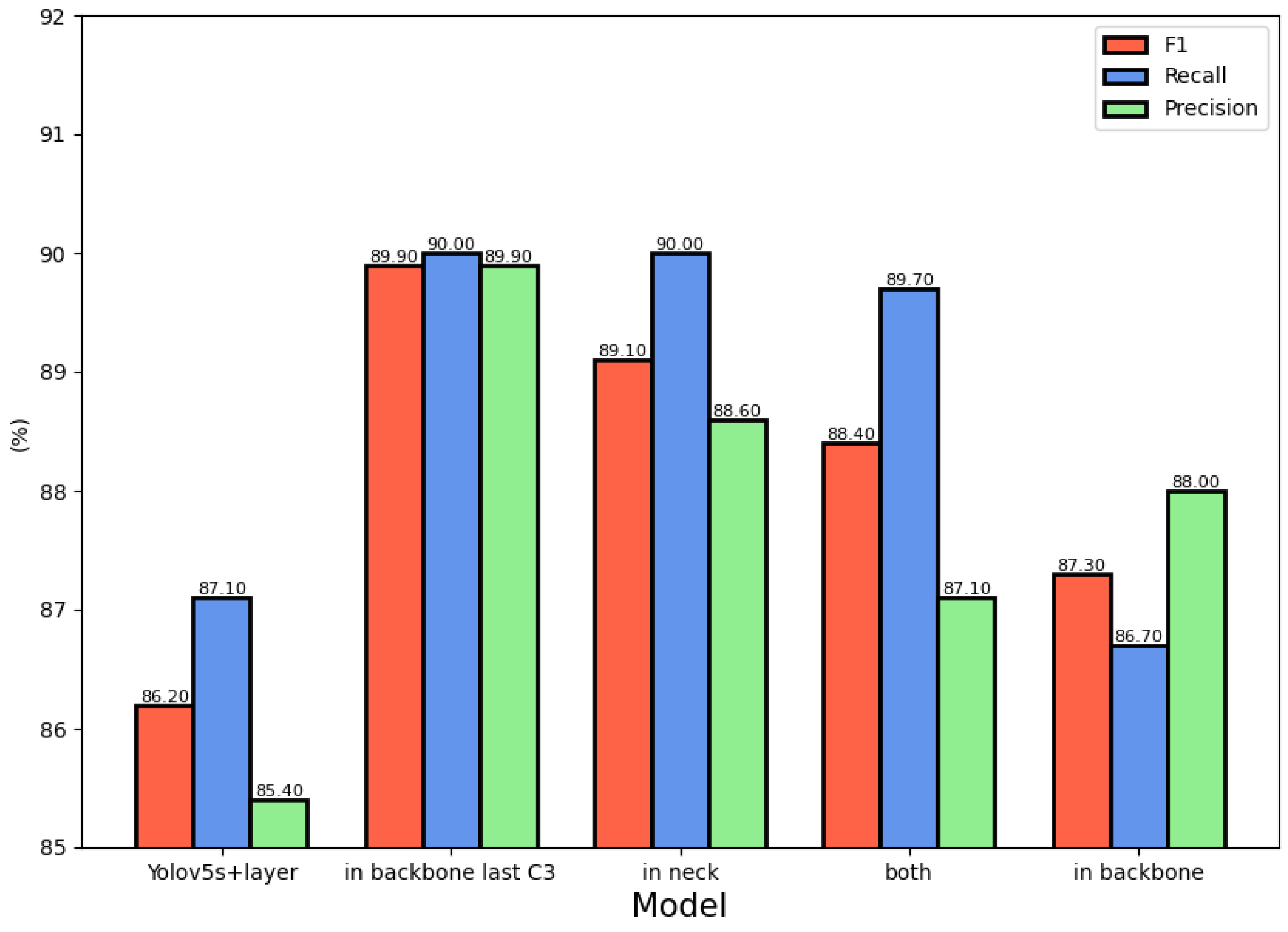

4. Discussion

5. Conclusions

- (1) The model performance verification experiment showed that FlowerYolov5 achieved an AP of 90.5% for the bud phase, 97.7% for the bloom phase, and 94.9% for the early fruit phase. Overall, the mean average precision reached 94.2%, which is 7.6% higher than that of the original Yolov5 network. FlowerYolov5 can therefore identify and classify the different flowering phases more accurately, and it provides a technical reference for precise identification by pollination robots.

- (2) A comparison of the detection results showed that FlowerYolov5 generally performed better than the other Yolo series networks. The earlier problem of undetected flowers was alleviated.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Model | Backbone | Precision | Recall | F1 | mAP | Size (MB) |
|---|---|---|---|---|---|---|
| Yolov3 | Darknet-53 | 0.894 | 0.798 | 0.843 | 0.874 | 63.5 |
| Yolov4 | CSPDarknet-53 | 0.895 | 0.838 | 0.866 | 0.89 | 65.5 |
| Yolov5s | CSPDarknet-53 | 0.802 | 0.867 | 0.833 | 0.864 | 13.7 |
| Yolov5m | CSPDarknet-53 | 0.880 | 0.847 | 0.863 | 0.891 | 40.2 |
| Yolov5l | CSPDarknet-53 | 0.833 | 0.910 | 0.869 | 0.911 | 88.5 |
| FlowerYolov5 | CSPDarknet-53 | 0.899 | 0.900 | 0.899 | 0.942 | 23.9 |
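
The F1 column in the comparison table above can be related to the precision and recall columns through the standard definition, F1 = 2PR / (P + R). The sketch below recomputes F1 from the tabulated precision and recall; the dictionary `rows` and the helper `f1_score` are names introduced here for illustration, and the inputs are the rounded table values rather than the authors' raw results.

```python
# Precision, recall, and reported F1, copied from the comparison table.
rows = {
    "Yolov3": (0.894, 0.798, 0.843),
    "Yolov4": (0.895, 0.838, 0.866),
    "Yolov5s": (0.802, 0.867, 0.833),
    "Yolov5m": (0.880, 0.847, 0.863),
    "Yolov5l": (0.833, 0.910, 0.869),
    "FlowerYolov5": (0.899, 0.900, 0.899),
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    for model, (p, r, reported) in rows.items():
        computed = f1_score(p, r)
        print(f"{model:13s} computed F1 = {computed:.4f}, reported F1 = {reported:.3f}")
```

Because the inputs are already rounded to three decimals, the recomputed values agree with the reported column only to within about 0.001.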

| Model | Bud Phase (AP) | Bloom Phase (AP) | Early Fruit Phase (AP) |
|---|---|---|---|
| Yolov5s | 79.5% | 96.6% | 83.1% |
| Yolov5s + fusion layer | 81.5% | 94.8% | 94.8% |
| Yolov5s + CBAM | 84.9% | 97.7% | 85.3% |
| FlowerYolov5 | 90.5% | 97.7% | 94.9% |
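
To read the ablation table above more easily, the per-phase AP of each variant can be expressed as a change in percentage points relative to the Yolov5s baseline. The sketch below does only this subtraction on the tabulated values; the names `ap`, `PHASES`, and `gains_over_baseline` are introduced here for illustration.

```python
PHASES = ("bud", "bloom", "early fruit")

# Per-phase AP in percent, copied from the ablation table.
ap = {
    "Yolov5s": (79.5, 96.6, 83.1),
    "Yolov5s + fusion layer": (81.5, 94.8, 94.8),
    "Yolov5s + CBAM": (84.9, 97.7, 85.3),
    "FlowerYolov5": (90.5, 97.7, 94.9),
}


def gains_over_baseline(table, baseline="Yolov5s"):
    """Return per-phase AP change (percentage points) versus the baseline."""
    base = table[baseline]
    return {
        model: tuple(round(value - ref, 1) for value, ref in zip(values, base))
        for model, values in table.items()
        if model != baseline
    }


if __name__ == "__main__":
    for model, deltas in gains_over_baseline(ap).items():
        parts = ", ".join(f"{phase}: {d:+.1f}" for phase, d in zip(PHASES, deltas))
        print(f"{model:24s} {parts}")
```

On the tabulated values, the fusion layer alone contributes most of the early fruit phase gain, CBAM alone contributes more of the bud phase gain, and the combined model improves the bud and early fruit phases by about 11 to 12 percentage points.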

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xu, T.; Qi, X.; Lin, S.; Zhang, Y.; Ge, Y.; Li, Z.; Dong, J.; Yang, X. A Neural Network Structure with Attention Mechanism and Additional Feature Fusion Layer for Tomato Flowering Phase Detection in Pollination Robots. Machines 2022, 10, 1076. https://doi.org/10.3390/machines10111076
