1. Introduction

The evolution of logistics is bringing inevitable and significant changes to traditional warehouses, also known as distribution centers. Fulfillment and distribution approaches are changing, a trend further fueled by shifts in consumer expectations, order characteristics, and service requirements. This tendency is driving the development of a new type of warehouse that is highly adaptable, extendable, and responsive, and that optimizes the capabilities of humans and machines in a new symbiotic relationship. In addition, warehousing is benefiting from technological advances, notably in the physical/mechanical sector. Collaborative robots, augmented reality, autonomous vehicles, sensor technologies, and the Internet of things are coming together to create a new type of warehouse: the smart warehouse. Specifically, a well-designed warehouse with a lean approach across its supply chain, a so-called warehouse 4.0, can run efficiently. Furthermore, standard operating procedures that prioritize warehouse performance metrics are needed, so that they can first be assessed in simulation before being evaluated and confirmed in real-world situations [1].

At the same time, automated delivery in urban areas is a cost-effective way to keep up with the growing demand for e-commerce. In essence, fleets of mobile delivery robots dispatched from a node (e.g., a truck parked at the border of a car-free area) and in charge of delivering goods, mail, etc. over the last mile are an innovative form of automated logistics that can further boost the supply chain alongside warehouse 4.0 developments. According to the literature [2,3], several solutions are possible in principle, ranging from fully automated mobile delivery robots to fleets of remotely operated minirobots. Whilst fully automated robots have the lowest operational costs (no human operator is needed), they are harder to design and build, as they must be able to operate in crowded, highly dynamic, and unstructured environments [4]. Such capabilities require a potentially expensive suite of onboard sensors and sophisticated perception, cognition, and control algorithms. Finally, the lack of human supervision in complex (urban) environments poses serious liability issues: the designer has to spend extra effort to ensure that the robot operates safely and does not harm the surrounding crowd. Fleets of remotely operated robots therefore strike a balance between reduced operating costs and flexibility of service. Nevertheless, the remote operation of mobile systems has to rely on wireless, long-distance connectivity [2].

This position paper analyzes the last-mile driverless delivery problem and proposes solutions to overcome its limitations and challenges. Specifically, the main goal of our approach is to provide a complete road map towards driverless last-mile delivery. The main contributions of our work are summarized as follows:

To summarize the most recent and relevant literature in the domain of driverless vehicles.

To highlight and stress the opportunities of introducing autonomous driving in last-mile delivery.

To reveal the technological challenges and barriers of introducing such solutions in this domain.

To suggest, where possible, some conceptual alterations that should take place in order to realize true autonomous driving in urban environments.

To link the automated logistics section with the last-mile delivery needs.

The rest of this paper is structured as follows. In Section 2, we discuss representative related works in the field of warehouse 4.0 and the last mile of supply chain management, and we present the problem formulation. Section 3 describes the challenges of autonomous transportation. In Section 4, we analyze robotic process automation in logistics, while in the last section (Section 5), we draw conclusions and make suggestions for future work.

2. Related Work and Problem Formulation

Owing to the growth of e-commerce and similar commercial activities, along with changes in consumer habits evidenced especially during the last two years of the COVID-19 pandemic, the volume of goods, transportation, and the freight fleet operating in cities have also increased [3]. One promising way to relieve city roads of freight traffic is to optimize the flows of both vehicles and merchandise. This can be done by ensuring that transportation vehicles are fully loaded in both directions, which can only be realized if innovative logistics centers are established on the periphery of urban areas [5]. The transit of goods from such a center to their ultimate destination is called the last mile. In urban areas, the starting point is usually a city hub and the end points are many urban delivery points following the door-to-door paradigm (Figure 1).

City logistics faces many difficulties, as the last mile tends to be the least efficient stage of the supply chain [6]. These difficulties can be attributed to:

The increased volume of e-commerce shipments, in terms of both parcels and deliveries [7].

The increasingly demanding typical end consumer, who expects ever faster deliveries [8].

The customization of merchandise flows, which leads to smaller dispatches with timing constraints [9].

The tougher environmental regulations in urban areas [10].

The traffic conditions and regulations [11].

The economic pressure from distributors on the cost of delivery [12].

The congestion of city streets, which directly affects logistics operations and shows no sign of easing over time [13,14].

These difficulties go beyond what an optimized sorting system alone can address [6]. Moreover, last-mile delivery depends solely on human resources and is therefore normally carried out during standard working hours [15].

The standard way to overcome such issues is automation. To this end, autonomous vehicles (AVs) can provide solutions, e.g., to efficiently deliver services and goods to urban dwellers. Autonomous robots have been proposed for last-mile parcel delivery in urban areas [16]. Such novelties can improve efficiency in terms of delivery times, cost, and environmental footprint (reduced CO₂ emissions and efficient energy profiles) [17]. Simulations considering platoons of robots have indicated that mixed fleets are suitable for energy-efficient last-mile logistics [18]. Furthermore, robots are ideal agents for last-mile delivery, as they can conduct contactless deliveries, which is especially useful in pandemic situations [19]. Shared autonomous vehicles (SAVs) [20,21] are capable of conveying both people and goods to their final destination. Therefore, they are appropriate for last-mile delivery services, and it has been shown that mixed people-and-goods missions tend to be, on average, about 11% more efficient than single-function ones [20]. Moreover, the truck-based scheduling problem for last-mile delivery has been modeled by optimizing goods deliveries to customers while accounting for late deliveries [15], with promising findings in cases of heavy congestion [22]. The authors in [23] studied the application of platooning in urban delivery and used a microsimulation to discuss waiting times at a transfer point and the number of platoons. In [24], the authors discussed a system model for autonomous road freight transportation, highlighting a structural model and an operational model that focuses on system dynamics.

3. Challenges for Autonomous Transportation

The typical operational scenarios that AVs must address in the context of last-mile delivery introduce major challenges, which in turn call for advanced robotic perception and cognition methods that are robust and efficient enough to be deployed in the relevant real environments. The concept of autonomous vehicles for logistics tasks is not a new one. Consider, for example, the retailer JD.com, which recently deployed a fleet of autonomous bots to deliver goods in a structured environment. Although these vehicles fulfilled their task effectively, they operated in a territory where geofencing was needed along with human supervision. Such a solution is closer to level four autonomous driving and remains far from level five autonomy. Moreover, beyond typical autonomous driving solutions, which so far mainly deal with navigation on well-structured streets with clearly visible lanes and traffic signs, last-mile delivery also requires the vehicle to move in additional spaces, namely where it picks up the goods and where it delivers them. As such, last-mile scenarios also require AVs to navigate within unstructured, possibly congested spaces that may be crowded with pedestrians, where the vehicle may also need to perform complex maneuvers in order to park and unpark on its own. Such spaces and navigation scenarios are rather complex and require highly robust and efficient methods for scene segmentation, understanding, and prediction, as well as for robot behavior and path planning, as further explained below.

3.1. Perception of Vehicles

Operating an autonomous vehicle in a fully automated manner under all circumstances, the so-called level five of driving automation, requires a reliable and detailed perception of the surrounding scene. Currently, most open-source software stacks and autonomous driving companies focus on urban environments, where consistent road markings, traffic signs, or even detailed high-definition maps are a prerequisite. However, in order to increase the number of driverless vehicles in logistics applications, it is necessary to overcome these requirements and ensure continuous operation in any kind of environment and under any environmental conditions [25]. The coexistence of nonautonomous and autonomous vehicles in a common space introduces many challenges and increases the importance of risk assessment modules. This leads to the need for an autonomous system that imitates a normal driver’s behavior [26], maximizes comfort and reduces risky situations during driving [27], and generally boosts public acceptance of autonomous platforms [28].

The requirements for the perception system derive from the localization and navigation systems that control the overall motion of the autonomous vehicle. Driverless vehicles used in logistics applications must be able to continuously localize themselves with high accuracy, detect the drivable and traversable area [29], decide on optimal paths over structured and unstructured areas, and finally detect the delivery space in order to complete their mission. Beyond that, smooth interaction with humans is required through advanced human–machine interaction interfaces.

Autonomous robotic systems are equipped with a plethora of different sensors, each contributing in a unique way to the overall perception system. Cost, data format, and hardware constraints are the main factors limiting the perception system. Each type of sensor must be analyzed separately; however, how their combination can improve overall performance is of great interest [30].

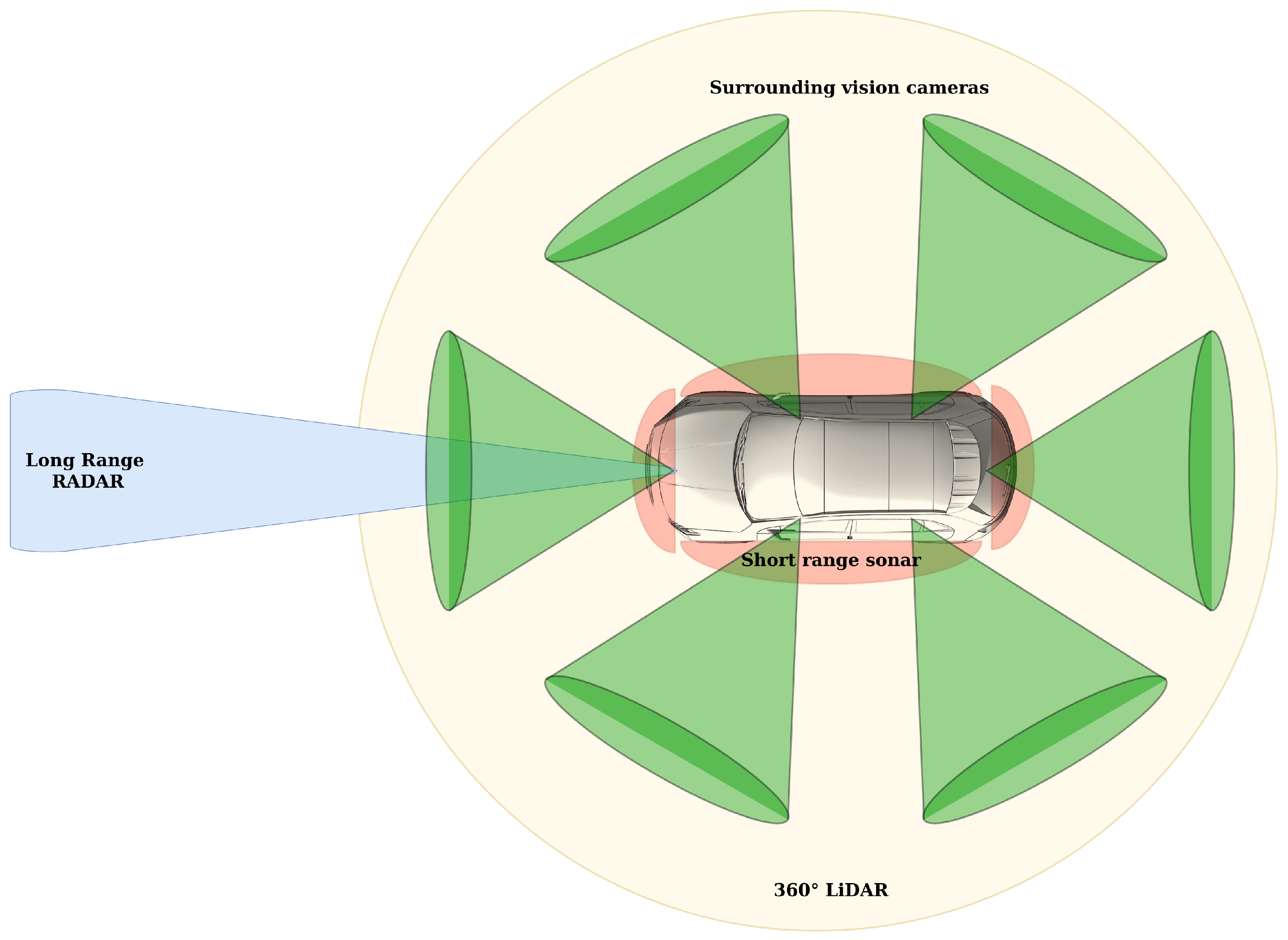

The key sensors in autonomous vehicles are currently: (i) vision cameras, (ii) LiDAR, (iii) radar, (iv) sonar, and (v) inertial measurement units (IMUs). Cameras are found in virtually all driverless vehicles, providing direct visualization of the surrounding environment through high-quality image data at a high frame rate, imitating human eyesight. They vary in their optical field of view (narrow or wide) and image quality, and they are commonly placed around the vehicle to provide a 360° view. Color information is essential for interpreting traffic signs, detecting lane markings, and performing detailed semantic segmentation of the surrounding scene [31,32]. However, their main disadvantages are degraded operation under changing illumination conditions and the inability to provide accurate depth measurements. Figure 2 illustrates a classic arrangement of the sensors around an autonomous vehicle.

LiDAR sensors provide a 3D representation of the surrounding environment by emitting infrared laser beams. The resulting data are a point cloud: a set of 3D coordinates along with surface reflectivity (intensity) information. This kind of sensor can provide centimeter-level accuracy for long-range depth data, a great contribution to perception algorithms, and its operation is independent of ambient lighting [33], although heavy rain, fog, or snow can degrade its measurements. The high cost of spinning LiDAR sensors was an important issue for the automotive industry, hampering the mass production of AVs and data collection [34]. However, solid-state scanning systems introduced in recent years have led to significantly lower costs and improved accuracy and robustness [35].

Radar sensors are a key component of a driverless vehicle’s sensor suite [36], due to their low cost in mass production and their ability to provide accurate depth measurements over long distances. This enables high-speed operation with improved collision avoidance systems, largely independently of weather conditions. However, they cannot provide the information-rich data needed for other perception tasks, e.g., semantic segmentation. Their operating principle is based on emitting radio waves and measuring the time it takes for them to return, in order to estimate the distance and angle of detected obstacles, while the obstacles' velocity is obtained from the Doppler shift of the returned signal.
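The ranging principle just described reduces to two textbook relations: the range follows from the round-trip time of the emitted wave, R = c·t/2, and the radial velocity from the Doppler shift, v = f_d·c/(2·f_c). The sketch below assumes a 77 GHz carrier, which is typical of automotive radar; the function and constant names are illustrative only.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s
CARRIER_HZ = 77e9               # assumed automotive radar carrier frequency

def radar_range(round_trip_time_s: float) -> float:
    """Distance to the reflector; the wave travels there and back, hence the factor 1/2."""
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0

def radial_velocity(doppler_shift_hz: float, carrier_hz: float = CARRIER_HZ) -> float:
    """Radial speed from the Doppler shift, v = f_d * c / (2 * f_c).
    A positive shift corresponds to an approaching target."""
    return doppler_shift_hz * SPEED_OF_LIGHT / (2.0 * carrier_hz)

if __name__ == "__main__":
    echo_time = 2 * 100.0 / SPEED_OF_LIGHT           # echo from a target 100 m away
    shift = 2 * 10.0 * CARRIER_HZ / SPEED_OF_LIGHT   # shift for a 10 m/s closing speed
    print(f"range: {radar_range(echo_time):.1f} m")   # range: 100.0 m
    print(f"speed: {radial_velocity(shift):.1f} m/s") # speed: 10.0 m/s
```

For scale, a 10 m/s closing speed at 77 GHz corresponds to a Doppler shift of only about 5.1 kHz, which is why velocity can be read directly from the frequency of the echo rather than by differentiating successive range measurements.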

For low-speed tasks of autonomous vehicles, such as parking and maneuvering in narrow areas, sonar sensors are suitable for estimating the surrounding free space. They can reliably detect large objects made of solid materials at short distances by emitting sound pulses and reading the echoes, while keeping costs low.

Inertial measurement units are an integral part of modern autonomous vehicles. They comprise accelerometers and gyroscopes, whose measurements can be fused with GNSS data when available. Through continuous six-DOF motion information, the vehicle can estimate its position and orientation at all times, even in GPS-denied environments or when other localization modules fail. The main drawbacks of IMUs are their limited accuracy and the error that accumulates over time due to sensor drift. Such problems are mostly tackled by filtering the measurements with estimation algorithms (e.g., the Kalman filter).
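To make the IMU/GNSS fusion concrete, the following is a minimal one-dimensional Kalman filter sketch, assuming a constant-velocity model along a single axis, IMU acceleration as the control input, and GNSS position fixes as occasional measurements. The noise levels and the class name `KalmanFilter1D` are hypothetical; a real vehicle would estimate full 3D pose and orientation, typically with an extended or error-state variant.

```python
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class KalmanFilter1D:
    """Constant-velocity Kalman filter along one axis (hypothetical illustration).
    State: position p [m] and velocity v [m/s].
    IMU acceleration drives the prediction; GNSS position, when available, corrects it."""
    accel_noise: float = 0.5   # std. dev. of IMU acceleration noise [m/s^2] (assumed)
    gnss_noise: float = 2.0    # std. dev. of a GNSS position fix [m] (assumed)
    x: List[float] = field(default_factory=lambda: [0.0, 0.0])
    P: List[List[float]] = field(default_factory=lambda: [[10.0, 0.0], [0.0, 1.0]])

    def predict(self, accel: float, dt: float) -> None:
        p, v = self.x
        self.x = [p + v * dt + 0.5 * accel * dt * dt, v + accel * dt]
        (a, b), (c, d) = self.P
        # P <- F P F^T with F = [[1, dt], [0, 1]]
        a, b, c, d = a + dt * (b + c) + dt * dt * d, b + dt * d, c + dt * d, d
        q = self.accel_noise ** 2
        # Add process noise Q = q * G G^T with G = [dt^2 / 2, dt]
        self.P = [[a + q * dt ** 4 / 4, b + q * dt ** 3 / 2],
                  [c + q * dt ** 3 / 2, d + q * dt ** 2]]

    def update(self, gnss_position: float) -> None:
        (a, b), (c, d) = self.P
        s = a + self.gnss_noise ** 2          # innovation variance, H = [1, 0]
        k0, k1 = a / s, c / s                 # Kalman gain
        y = gnss_position - self.x[0]         # innovation
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        self.P = [[(1 - k0) * a, (1 - k0) * b],
                  [c - k1 * a, d - k1 * b]]

    def step(self, accel: float, dt: float, gnss: Optional[float] = None) -> None:
        self.predict(accel, dt)
        if gnss is not None:                  # during GNSS outages, only the IMU prediction runs
            self.update(gnss)

if __name__ == "__main__":
    kf = KalmanFilter1D()
    for k in range(30):
        fix = None if 10 <= k < 20 else 2.0 * (k + 1)  # vehicle at 2 m/s, GNSS lost for 10 s
        kf.step(accel=0.0, dt=1.0, gnss=fix)
        print(k, [round(s, 2) for s in kf.x], round(kf.P[0][0], 2))
```

Running the example shows the position variance `P[0][0]` growing steadily during the simulated outage (drift accumulates) and dropping again as soon as GNSS fixes return.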

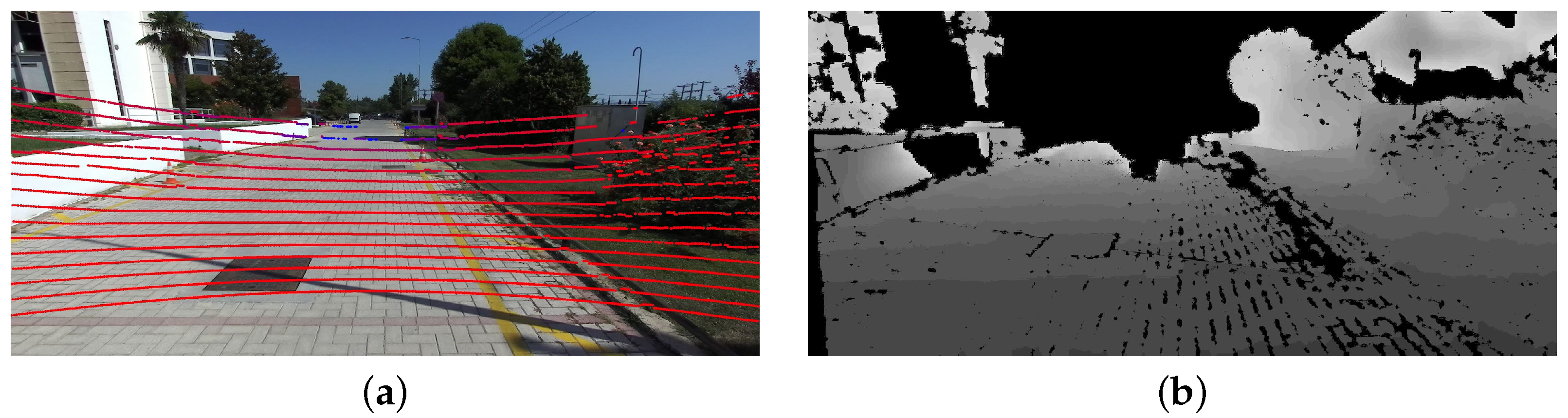

The aforementioned sensors typically have overlapping fields of view (FOVs), allowing a better understanding of the environment through different data representations. The sensor fusion module thus becomes an essential component of such vehicles, and it relies on accurate knowledge of the relative placement (extrinsic calibration) of the sensors. Online calibration must be available to ensure that the sensors always report accurate data [37]. Sample data from multiple sensors with a common FOV are shown in Figure 3.
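One place where the extrinsic calibration is used directly is when LiDAR points are overlaid on a camera image, as in multi-sensor visualizations of a common FOV. The sketch below assumes a pinhole camera with hypothetical intrinsics (fx, fy, cx, cy) and a hypothetical LiDAR-to-camera rotation R and translation t; lens distortion is ignored.

```python
from typing import Optional, Sequence, Tuple

Vector3 = Tuple[float, float, float]

def transform(point: Vector3, R: Sequence[Sequence[float]], t: Vector3) -> Vector3:
    """Apply the LiDAR-to-camera extrinsic calibration: p_cam = R * p_lidar + t."""
    return tuple(sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3))

def project(point_cam: Vector3, fx: float, fy: float,
            cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Pinhole projection to pixel coordinates (u, v); None if the point is behind the camera."""
    x, y, z = point_cam
    if z <= 0.0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)

if __name__ == "__main__":
    # LiDAR frame: x forward, y left, z up. Camera frame: x right, y down, z forward.
    R = [[0.0, -1.0, 0.0],
         [0.0, 0.0, -1.0],
         [1.0, 0.0, 0.0]]
    t = (0.0, -0.3, 0.0)  # assumed: LiDAR mounted 0.3 m above the camera
    for p in [(10.0, 0.0, 0.0), (10.0, 2.0, 0.5), (-5.0, 0.0, 0.0)]:
        print(p, "->", project(transform(p, R, t), 1000.0, 1000.0, 640.0, 360.0))
```

If the calibration drifts, for example after the sensor mount is knocked slightly, the projected points no longer land on the corresponding image pixels, which is why online calibration matters for fusion.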

3.2. Cognition of Vehicles

To enable autonomous last-mile delivery for logistics tasks, AVs will also need advanced cognitive capabilities that regulate their navigation behavior [38], their adaptation to unexpected situations, and their safe operation in urban, human-populated environments. Remote human operators who can intervene and handle dangerous situations are currently required for automated vehicles that participate in last-mile delivery services as well as in other logistics tasks (e.g., the Hail robotaxi [39]). Ideally, there would be no severe consequences if an AGV completed its mission through remote control, apart from a minor time delay. However, coexistence with surrounding traffic actors, pedestrians, and vehicles requires that any malfunction be resolved immediately without affecting the traffic flow. Moreover, companies aim to reduce the remote-operator-to-fleet size ratio as much as possible. This indicates that driverless vehicles undertaking delivery tasks should achieve level five autonomous driving, at which the vehicle can operate on any road network and under any weather conditions [40].

However, existing vehicles are only equipped with advanced driver-assistance systems (ADAS) that reach up to level three autonomy, involving capabilities such as collision avoidance, emergency braking, lane departure warning, and lane-keeping assistance, all supervised by the actual driver. To reach level five autonomy, significant barriers must be overcome concerning not only perception limitations but also the cognitive functionalities that future autonomous vehicles should possess. According to the Society of Automotive Engineers [41], level five AVs will be a new type of vehicle with autonomous driving capabilities that can handle different scenarios and are equipped with self-configuration and self-healing capabilities [42], while enabling social interaction with their users.

The cognitive challenges that autonomous vehicles must tackle to reach level five autonomy can be better highlighted by juxtaposing them with a normal driver’s cognitive behavior. A normal driver is capable of navigating and routing in a congested urban environment given a map (typically provided by an application integrated into the vehicle’s infotainment system) and approximate GPS measurements to locate the vehicle on this map. However, this is only high-level information; the driver’s perception and cognitive functionalities close the loop of efficient driving by addressing hundreds of peculiarities that involve vehicle maneuvering and local navigation behaviors that comply with the highway code as well as ethical and societal rules. The highway code comprises the set of information, regulations, and guidelines for all road users, intended to promote road safety.

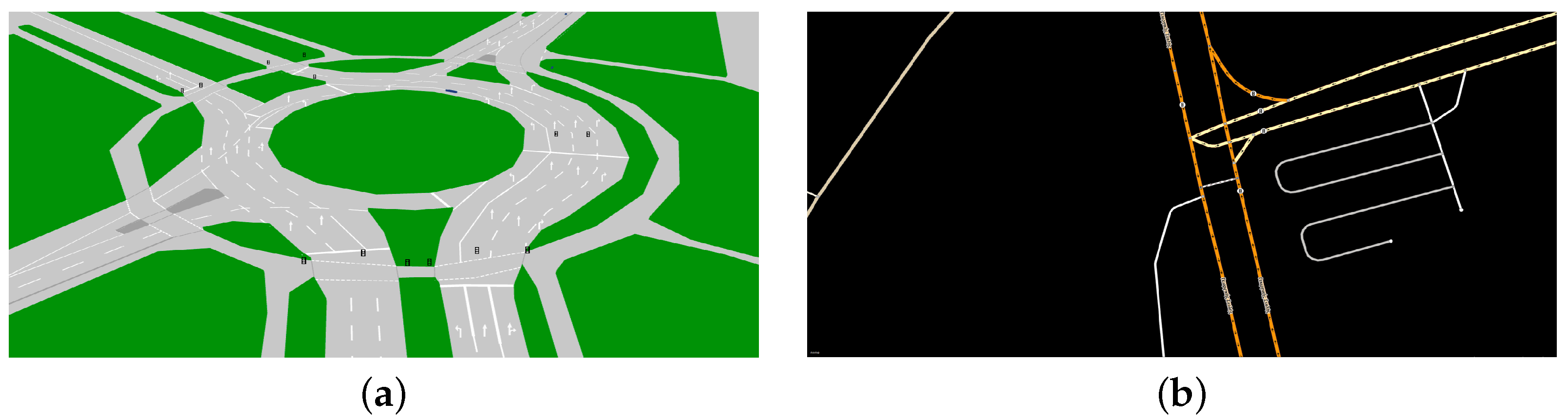

3.2.1. Availability of High-Definition Maps

For autonomous vehicles, such maps are typically called high-definition (HD) maps [43]; they were introduced together with autonomous vehicles as a necessary infrastructure component for reliable navigation. They describe a predefined area with high fidelity, including all traffic markings, available lanes, and barriers (see Figure 4). Using this information, a vehicle is able to localize and navigate safely even in high-traffic areas, but this dependence introduces further limitations. The selected area must already have been mapped by previous vehicles, and any changes in the environment must be reflected in the HD map in real time. In order to deploy autonomous vehicles anywhere, new and innovative approaches that do not depend on predetermined maps must be introduced.

The high-level behavior planning and navigation of autonomous systems rely on GPS sensors for rough position estimation within such maps. Imitating a real driver who uses Google Maps to reach an unknown destination, autonomous vehicles use online map applications to obtain a route estimate given their initial GPS location and destination. Obviously, this information is not sufficient, and the perception and local path-planning modules are responsible for executing the route [44].
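How an online map service computes its route is internal to that service, but a classic way to obtain such a route estimate on a road graph is Dijkstra's shortest-path algorithm. The sketch below assumes a toy road graph with hypothetical node names and edge lengths in metres; production route planners work on far larger graphs and rely on speed-up techniques such as A* or contraction hierarchies.

```python
import heapq
from typing import Dict, List, Tuple

Graph = Dict[str, List[Tuple[str, float]]]  # node -> [(neighbor, road length in m)]

def shortest_route(graph: Graph, start: str, goal: str) -> Tuple[float, List[str]]:
    """Dijkstra's algorithm. Returns (total length, node sequence),
    or (inf, []) if the goal is unreachable."""
    dist = {start: 0.0}
    previous: Dict[str, str] = {}
    queue = [(0.0, start)]
    visited = set()
    while queue:
        d, node = heapq.heappop(queue)
        if node in visited:
            continue                      # stale queue entry
        visited.add(node)
        if node == goal:                  # reconstruct the path backwards
            path = [goal]
            while path[-1] != start:
                path.append(previous[path[-1]])
            return d, path[::-1]
        for neighbor, length in graph.get(node, []):
            nd = d + length
            if nd < dist.get(neighbor, float("inf")):
                dist[neighbor] = nd
                previous[neighbor] = node
                heapq.heappush(queue, (nd, neighbor))
    return float("inf"), []

if __name__ == "__main__":
    roads: Graph = {
        "hub": [("A", 400.0), ("B", 250.0)],
        "A": [("customer", 300.0)],
        "B": [("A", 100.0), ("customer", 600.0)],
    }
    print(shortest_route(roads, "hub", "customer"))  # (650.0, ['hub', 'B', 'A', 'customer'])
```

Replanning around a blocked road amounts to removing or re-weighting the affected edge and running the search again; with the B to A link removed, the example above falls back to hub, A, customer (700 m).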

3.2.2. Limitations of GPS-Based Localization

GPS-based solutions with precise point positioning corrections cannot guarantee the stability of autonomous systems [45]. Underground passageways and other GPS-denied environments cannot be supported by GNSS solutions. Even with the best localization accuracy such solutions can achieve, perception systems must always have a higher priority in the vehicle’s final decision-making, due to the unprecedented situations that arise in real-world environments and the sparse GPS reception in specific areas. In other words, GPS-based localization on high-definition maps can be used for approximate vehicle positioning and as part of global trajectory planning. To address this challenge, closed-loop perception systems should operate constantly to improve the vehicle’s local positioning.
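One common way to treat GNSS as approximate while giving priority to local localization is to accept a GNSS fix only if it is statistically consistent with the locally estimated position. The sketch below uses a normalized innovation squared (chi-square) test, assuming 2D positions with diagonal covariances and a 95% gate for two degrees of freedom; the function name is hypothetical and this is one possible gating scheme, not the method of any specific stack.

```python
from typing import Sequence

CHI2_95_2DOF = 5.991  # 95% quantile of the chi-square distribution, 2 degrees of freedom

def gnss_fix_is_consistent(predicted_xy: Sequence[float], predicted_var: Sequence[float],
                           gnss_xy: Sequence[float], gnss_var: Sequence[float],
                           threshold: float = CHI2_95_2DOF) -> bool:
    """Normalized innovation squared (NIS) test with diagonal covariances.
    predicted_*: position and variance from local odometry / perception-based localization.
    gnss_*: the incoming GNSS fix and its reported variance.
    True: the fix is consistent and may be fused. False: reject it (e.g., multipath
    near tall buildings) and keep relying on local localization."""
    nis = sum((z - p) ** 2 / (vp + vz)
              for p, vp, z, vz in zip(predicted_xy, predicted_var, gnss_xy, gnss_var))
    return nis <= threshold

if __name__ == "__main__":
    local, local_var = (100.0, 50.0), (0.25, 0.25)
    print(gnss_fix_is_consistent(local, local_var, (101.0, 50.5), (4.0, 4.0)))  # True
    print(gnss_fix_is_consistent(local, local_var, (112.0, 58.0), (4.0, 4.0)))  # False
```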

3.2.3. Challenges in High-Level Mission Planning and Decision-Making

High-level mission planning for autonomous vehicles dedicated to last-mile delivery involves several functionalities, among others: parking in a predetermined position for merchandise pick-up, global path planning that efficiently covers multiple delivery targets by solving the routing problem, arrival at the delivery point, and even communication through advanced human–machine interaction interfaces for handing over the package [46]. Such cognitive functionalities should adapt to dynamic changes in the environment (blocked roads, extreme traffic conditions, etc.), enabling the driverless vehicle to self-learn and reorganize. HD maps and GPS are used for global path planning and replanning, while optimization methods attempt to connect all subordinate routes and tasks given uncertain perception input. In this respect, state-of-the-art perception modules of autonomous vehicles rely on learning-based methods whose behavior is closely tied to the data used during training. Even though many publicly available datasets containing a great diversity of road scenes have been introduced lately, and autonomous driving companies continuously update their data through testing in real-world environments, there is no guarantee that a previously unseen scenario will not arise during the operation of driverless vehicles [47]. Consequently, the generalization of deep learning methods is a high priority in the development of such systems, as is the option of remote support and/or teleoperation by certified personnel until fully safe operation is guaranteed. In addition, recognizing revisited areas, i.e., so-called loop-closure detection [48], can further improve the vehicle’s localization on the map, reinforce its knowledge of its surroundings, and support future routing based on past experience, thus further improving last-mile delivery services.
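In its simplest form, the routing problem over multiple delivery targets is a travelling salesman problem: one vehicle leaves the hub, visits every stop once, and returns. A common lightweight baseline, shown here as an illustration rather than the method proposed in this paper, is a nearest-neighbour construction followed by 2-opt improvement. The sketch assumes straight-line distances and ignores time windows and capacity; real last-mile routing would use road distances and a dedicated vehicle routing solver.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]

def tour_length(points: List[Point], order: List[int]) -> float:
    """Length of the closed tour hub -> stops -> hub (index 0 is the hub)."""
    return sum(math.dist(points[order[i]], points[order[(i + 1) % len(order)]])
               for i in range(len(order)))

def nearest_neighbor_tour(points: List[Point]) -> List[int]:
    """Greedy construction: from the hub, always drive to the closest unvisited stop."""
    order, remaining = [0], set(range(1, len(points)))
    while remaining:
        last = points[order[-1]]
        nxt = min(remaining, key=lambda j: math.dist(last, points[j]))
        order.append(nxt)
        remaining.remove(nxt)
    return order

def two_opt(points: List[Point], order: List[int]) -> List[int]:
    """Local improvement: reverse a segment whenever that shortens the tour (hub stays first)."""
    best = order[:]
    improved = True
    while improved:
        improved = False
        for i in range(1, len(best) - 1):
            for k in range(i + 1, len(best)):
                candidate = best[:i] + best[i:k + 1][::-1] + best[k + 1:]
                if tour_length(points, candidate) < tour_length(points, best) - 1e-9:
                    best, improved = candidate, True
    return best

if __name__ == "__main__":
    stops = [(0.0, 0.0), (2.0, 0.0), (2.0, 2.0), (0.0, 2.0), (1.0, 0.1)]  # hub first
    tour = two_opt(stops, nearest_neighbor_tour(stops))
    print(tour, round(tour_length(stops, tour), 2))
```

When a road is blocked or a new parcel is added, the same two functions can simply be rerun on the updated stop list, which fits the replanning requirement described above.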

3.2.4. The Complexity of Behavior Planning for Autonomous Vehicles

Behavior planning concerns the vehicle’s real-time reaction to the spatiotemporal input obtained from its perception system. This involves real-time obstacle avoidance while respecting the highway code; detecting pedestrians and assessing the situation in order to avoid them safely or give them priority; unparking and parking in predetermined spots; and responding safely in congested situations. Several vehicles are already equipped with autonomous valet parking, enabling them to park and unpark safely in structured areas such as parking lots [49]. However, these methods rely on visual input, which, even when fused with sonar measurements, is prone to errors under dynamic illumination conditions, so they operate well only in indoor parking lots. Autonomous vehicles in last-mile delivery should be able to detect and park in their dedicated delivery spot; where the delivery spot is blocked or not well defined, the vehicle’s behavior adaptation system should compensate and infer a new, safe, and acceptable parking area in order to deliver the package.

Regarding obstacle avoidance, a plethora of methods address this challenge according to [50]. Most motion planning approaches consider the obstacles for a given state at a specific moment [51] without considering the past and future states of the surrounding dynamic objects. This leads to an open-loop system that receives no feedback from the other traffic actors and operates at discrete time instants, regardless of previous motion planning estimates. Other methods employ the state lattice planner approach, which takes parameters from the dynamic environment into consideration [52], yet their increased complexity when loaded with thousands of observations can make them unsuitable for real-time inference. Pedestrian detection, motion intention estimation, and reaction have been studied extensively in recent decades for the safe navigation of autonomous vehicles [53].
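The difference between a snapshot-based check and one that accounts for the future states of dynamic objects can be illustrated with a simple constant-velocity prediction: the ego vehicle and the obstacles are rolled forward over a short horizon and checked against a safety radius. The motion model, horizon, time step, and radius below are illustrative assumptions; this is neither a state lattice planner nor a learned intention model, only the basic prediction-and-check idea.

```python
import math
from typing import List, Tuple

State = Tuple[float, float, float, float]  # x [m], y [m], vx [m/s], vy [m/s]

def predicted_conflict(ego: State, obstacles: List[State], horizon_s: float = 3.0,
                       dt: float = 0.1, safety_radius_m: float = 1.5) -> Tuple[bool, float]:
    """Roll the ego vehicle and obstacles forward with a constant-velocity model.
    Returns (True, t) for the first time t at which any obstacle comes closer than
    safety_radius_m, or (False, inf) if no conflict occurs within the horizon."""
    steps = int(round(horizon_s / dt))
    for n in range(steps + 1):
        t = n * dt
        ex, ey = ego[0] + ego[2] * t, ego[1] + ego[3] * t
        for ox, oy, ovx, ovy in obstacles:
            if math.hypot(ox + ovx * t - ex, oy + ovy * t - ey) < safety_radius_m:
                return True, t
    return False, math.inf

if __name__ == "__main__":
    ego = (0.0, 0.0, 2.0, 0.0)          # delivery vehicle driving at 2 m/s along x
    pedestrian = (6.0, -3.0, 0.0, 1.0)  # pedestrian about to cross its path at 1 m/s
    print(predicted_conflict(ego, [pedestrian], horizon_s=0.0))  # snapshot only: no conflict
    print(predicted_conflict(ego, [pedestrian]))                 # conflict predicted near t = 2.4 s
```

At the current instant the pedestrian is about 6.7 m away, so a purely snapshot-based check sees no danger, whereas the short-horizon prediction reveals that both paths meet in about 2.4 s.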

Machine-learning-based methods have been applied to LiDAR [54] as well as image data [55], providing accurate measurements in real time and supporting the detection of multiple pedestrians in a single frame. However, such approaches are computationally very expensive. Considering that this module must run constantly alongside other vital systems of the vehicle (e.g., localization, control, map update, object detection), performance can drop in some cases, or the required computational unit can dramatically increase the cost of such solutions. An alternative could be multitask neural networks, which extract multiple inference results using a common network architecture [56]. Such approaches commonly use shared blocks for feature extraction and separate decoders for each desired output. The advantages of these techniques are reduced computational cost and inference time, due to the shared weights, along with end-to-end training of multiple tasks in a single model. One limitation is the absence of complete, publicly available datasets that contain multimodal and multipurpose contextual information, while recording and annotating such large datasets is time-consuming and laborious. Self-supervised networks have been introduced to remove the need for labeled data while achieving the same efficiency [57], but current methods still require significant advances to meet the needs of driverless vehicles.
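The saving from shared weights can be made concrete by counting parameters. The sketch below uses small fully connected layers with hypothetical sizes as a stand-in for the convolutional or transformer blocks of real perception networks; the same accounting applies to per-frame compute, since the shared encoder is evaluated only once per input.

```python
from typing import Dict, List

def dense_params(layer_sizes: List[int]) -> int:
    """Number of weights plus biases in a stack of fully connected layers."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

# Hypothetical layer sizes, chosen only for illustration
ENCODER: List[int] = [1024, 512, 256]            # shared feature extractor
HEADS: Dict[str, List[int]] = {                  # task-specific decoders
    "pedestrian_detection": [256, 128, 4],
    "drivable_area": [256, 128, 2],
}

# One complete network per task: the encoder is duplicated for every task
single_task_total = sum(dense_params(ENCODER) + dense_params(head) for head in HEADS.values())
# One multitask network: the encoder is stored and evaluated once, heads stay separate
multitask_total = dense_params(ENCODER) + sum(dense_params(head) for head in HEADS.values())

if __name__ == "__main__":
    print(f"separate networks: {single_task_total:,} parameters")   # 1,378,822
    print(f"multitask network: {multitask_total:,} parameters")     # 722,694
```

With two tasks, the multitask model needs roughly half the parameters, and the saving grows with every additional head that reuses the same encoder.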

3.2.5. Delivery with Distributed Autonomous Vehicles

The existence of multiple driverless systems in the same environment has given rise to the term connected vehicle technology [58]. Vehicle-to-vehicle (V2V), vehicle-to-infrastructure (V2I), and vehicle-to-everything (V2X) are the most common such technologies in modern vehicles, allowing information such as traffic congestion and traffic light status to be exchanged with other connected vehicles and infrastructure sensors. The development of these technologies brings great improvements to the entire traffic network as well as to each individual vehicle [59]. Vehicles’ routes can be optimized in real time to reduce traffic congestion [60], vehicles can be notified of dangerous weather conditions, and the total energy consumption of each vehicle can be further reduced through smoother, synchronized driving.
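A well-known example of how V2I traffic light information enables smoother driving is green light optimal speed advisory (GLOSA): given the distance to the stop line and the timing of the next green phase, the vehicle picks a constant speed that arrives during green instead of accelerating towards a red light and then braking. The sketch below assumes the green window is already available as seconds from now and uses hypothetical speed bounds (13.9 m/s is about 50 km/h).

```python
from typing import Optional

def advisory_speed(distance_m: float, green_start_s: float, green_end_s: float,
                   v_min: float = 2.0, v_max: float = 13.9) -> Optional[float]:
    """Highest constant speed [m/s] within [v_min, v_max] that reaches the stop line
    while the light is green, or None if no such speed exists (plan to stop instead).
    green_start_s / green_end_s: start and end of the next green phase, in seconds
    from now, as received from the infrastructure (assumed input)."""
    if green_end_s <= 0.0:
        return None                                   # the green window is already over
    # Arrival time distance_m / v must lie in [green_start_s, green_end_s]
    fastest = v_max if green_start_s <= 0.0 else min(v_max, distance_m / green_start_s)
    slowest = max(v_min, distance_m / green_end_s)
    return fastest if fastest >= slowest else None

if __name__ == "__main__":
    print(advisory_speed(200.0, 20.0, 35.0))  # 10.0: arrive just as the light turns green
    print(advisory_speed(200.0, 0.0, 10.0))   # None: the current green cannot be reached
```

Choosing the highest feasible speed keeps delivery times short; a more energy-oriented variant could instead pick the speed closest to the current one within the feasible range.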

4. Robotic Process Automation in Logistics

As described in Section 2, last-mile delivery refers to the transportation of goods from a warehouse or distribution center to their final destination. Hence, several processes in an intelligent warehouse or distribution center should be automated to address autonomous goods transportation across the entire supply chain. Warehouse automation spans a range of complexity levels: basic automation employs planning, machinery, and transportation to avoid repetitive operations, while advanced automation uses artificial intelligence and robotic systems. The types of warehouse automation are as follows [61]:

Simple warehouse automation: using basic technology to aid employees with tasks that would need more manual work otherwise (for example, a conveyor or carousel can transport products between two warehouse locations).

Warehouse system automation: activities and procedures are automated using advanced AI methods.

Many distinct types of warehouse automation exist, owing to the wide range of available warehouse capabilities. Warehouse automation aims to reduce human labor and speed up processes from receipt to shipping. Goods-to-person (GTP) fulfillment is one of the most common ways to improve efficiency and reduce congestion; this category ranges from conveyors to carousels to vertical lift systems. When implemented effectively, GTP systems can double or triple warehouse picking speed. Moreover, automated storage and retrieval systems (AS/RS) are systems and equipment for automatically storing and retrieving materials or items, such as material-carrying vehicles, tote shuttles, and miniloaders; they are frequently used in high-volume warehouse applications with limited space. Automated guided vehicles (AGVs) use magnetic strips, cables, or sensors to follow a predetermined path across the warehouse. With this navigation scheme, AGVs are suited only to large, straightforward warehouse layouts and are a poor fit for complex warehouses with heavy human traffic and limited space. Autonomous mobile robots (AMRs), in contrast, determine efficient routes within the warehouse on their own, which makes them more versatile than AGVs; because they use onboard laser scanners to identify obstructions, they can safely handle dynamic settings with heavy human traffic. Furthermore, voice-directed warehousing, also known as pick-by-voice, is carried out using speech recognition software and mobile headsets. The technology generates efficient pick routes and instructs warehouse staff where to pick up or put away a product. This solution eliminates the need for handheld tools such as RF scanners, allowing pickers to concentrate on their work more safely and productively [61].
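The routing logic of commercial pick-by-voice systems is not described here, but one classic and simple heuristic from the order-picking literature, S-shape (traversal) routing, illustrates what generating a pick route means: the picker enters every aisle that contains at least one pick, walks it completely, and alternates direction from aisle to aisle. The sketch below assumes a single-block layout with parallel aisles and pick locations given as (aisle number, distance along the aisle); the names are hypothetical.

```python
from itertools import groupby
from typing import List, Tuple

Pick = Tuple[int, float]  # (aisle number, distance from the front cross-aisle in m)

def s_shape_sequence(picks: List[Pick]) -> List[Pick]:
    """S-shape routing: visit the aisles that contain picks in increasing order,
    traverse each one completely, and alternate the walking direction."""
    ordered: List[Pick] = []
    for visit, (_, group) in enumerate(groupby(sorted(picks), key=lambda p: p[0])):
        in_aisle = list(group)  # already sorted front-to-back
        ordered.extend(in_aisle if visit % 2 == 0 else in_aisle[::-1])
    return ordered

if __name__ == "__main__":
    order_lines = [(3, 12.0), (1, 5.0), (1, 20.0), (3, 2.0), (4, 8.0)]
    for aisle, position in s_shape_sequence(order_lines):
        print(f"go to aisle {aisle}, {position:.0f} m")  # one voice instruction per pick
```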

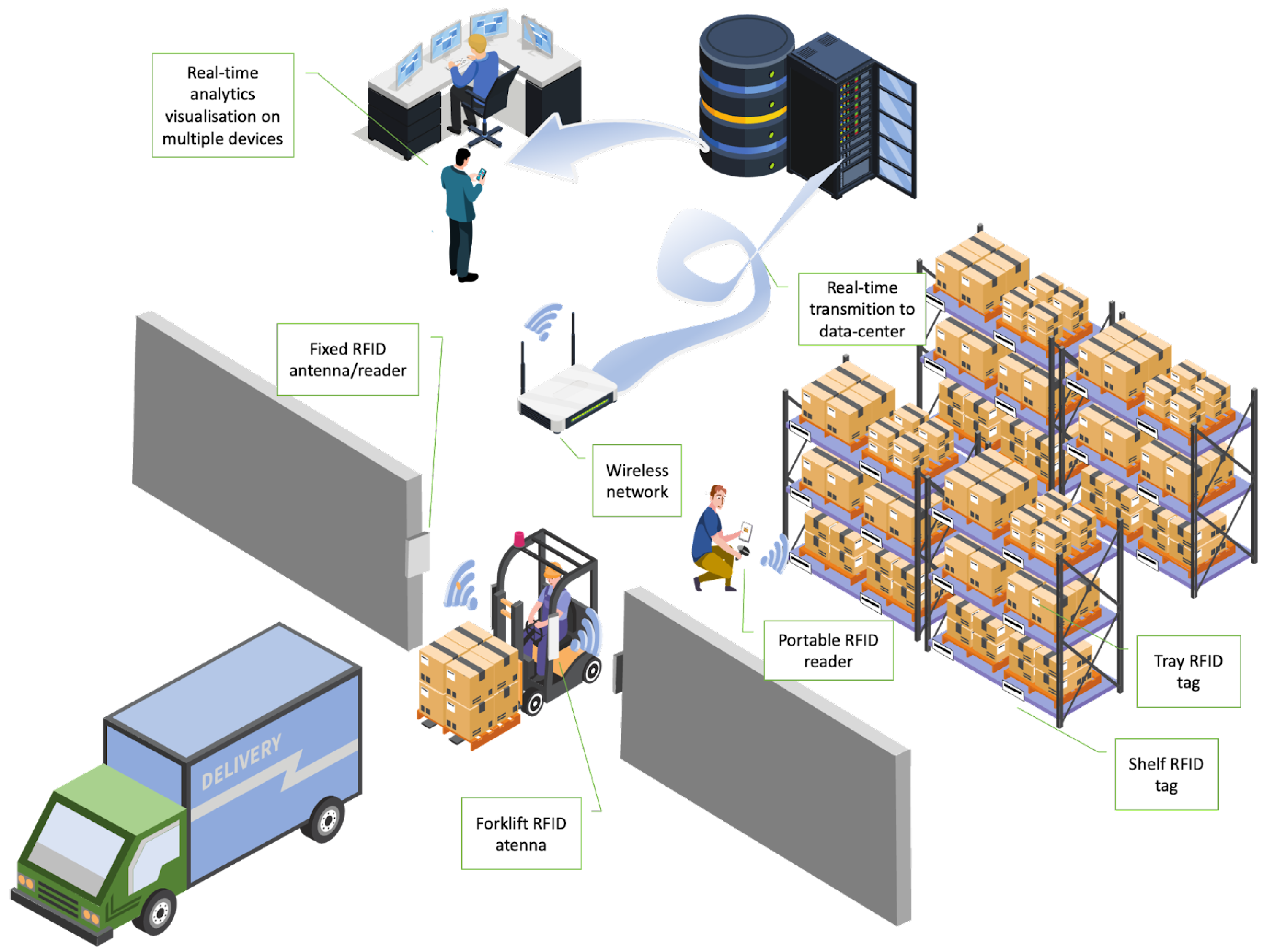

Furthermore, many companies use automated sortation systems for receiving, picking, packaging, and delivering orders during order fulfillment, supported, for example, by radio-frequency identification (RFID), as depicted in Figure 5. In this type of warehouse automation, robotic equipment and systems assist people with warehouse tasks and operations. For instance, self-driving mobile shelf-loader robots lift product racks and bring them to human pickers for retrieval and sorting. Finally, advanced warehouse automation uses warehouse robots and automation technologies to replace labor-intensive manual techniques. In this setting, a fleet of robotic forklifts navigates the warehouse using advanced AI, cameras, and sensors, and each forklift transmits its location [62].

The development and adoption of automated intelligent systems in a modern warehouse management system (WMS) is crucial for monitoring and tracking goods within the supply chain, including their transition between different storage nodes and distribution points. The industrial Internet of things (IoT) contributes to the automation of processes throughout the supply chain. In this regard, RFID-IoT plays a critical role in helping supply chains collect, locate, improve, and optimize processes in dynamic environments. RFID-IoT also enables interconnection between devices and an interface between the environment and humans. The advent of IoT technology was aided by the improved identification offered by RFID and the pervasive computing offered by wireless sensor networks (WSNs). The primary goal of the IoT is to create a large network that combines numerous sensing devices, such as RFID and global navigation satellite system (GNSS) receivers, to enable global information exchange. RFID and WSNs are the IoT’s essential technologies: RFID converts physical things and the environment into digital data, while WSNs provide distributed and pervasive wireless systems. Combining RFID’s superior sensing capability with the ubiquity of WSNs yields globally networked objects that can be discovered and exploited as resources in an IoT network (RFID-IoT). RFID-IoT has improved device interoperability across a wide range of applications, and it is driving the industrial revolution, particularly in supply chain management.
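As a minimal sketch of how RFID reads can support tracking goods across storage nodes and distribution points, the example below turns raw read events from fixed readers into a location history per tagged item. The reader identifiers, the reader-to-location mapping, and the event fields are hypothetical; real deployments typically receive such events through reader middleware and store them in the WMS database.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

@dataclass(frozen=True)
class ReadEvent:
    tag_id: str       # unique identifier stored on the tag
    reader_id: str    # fixed reader (antenna) that detected the tag
    timestamp: float  # seconds

# Hypothetical mapping from reader to logical location in the supply chain
READER_LOCATION: Dict[str, str] = {
    "R-DOCK-IN": "inbound dock",
    "R-RACK-07": "storage rack 7",
    "R-DOCK-OUT": "outbound dock",
    "R-HUB-01": "city hub",
}

def track(events: List[ReadEvent]) -> Dict[str, List[Tuple[str, float]]]:
    """Build each item's location history, recording only changes of location
    (a fixed reader reports the same tag repeatedly while it stays in range)."""
    history: Dict[str, List[Tuple[str, float]]] = {}
    for ev in sorted(events, key=lambda e: e.timestamp):
        location = READER_LOCATION.get(ev.reader_id, "unknown")
        trail = history.setdefault(ev.tag_id, [])
        if not trail or trail[-1][0] != location:
            trail.append((location, ev.timestamp))
    return history

if __name__ == "__main__":
    reads = [
        ReadEvent("TAG1", "R-DOCK-IN", 0.0),
        ReadEvent("TAG1", "R-DOCK-IN", 1.0),      # duplicate read, ignored
        ReadEvent("TAG1", "R-RACK-07", 60.0),
        ReadEvent("TAG2", "R-DOCK-IN", 5.0),
        ReadEvent("TAG1", "R-HUB-01", 3600.0),
    ]
    for tag, trail in track(reads).items():
        print(tag, "->", trail)
```

The last entry of each trail is the item's current known location, which is the kind of information a WMS can hand over to the last-mile stage once the goods reach the city hub.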

Figure 5 graphically illustrates the concept of the RFID-based warehouse management system. Automating the processes from the stage at which goods are stored within the warehouse or the city hub therefore contributes to addressing the last-mile challenge. Hence, several processes in intelligent warehouses should be automated to enable autonomous goods transportation across the entire supply chain. Moreover, many technologies that automate processes within warehouses are shared with the autonomous vehicles that perform delivery to the final point, for example, the autonomous navigation of AGVs or AS/RS, or human–robot interaction.

5. Discussion

Level five autonomous vehicles should be capable of driving on any mapped road without requiring a human driver. Once a destination has been set, the vehicle should be able to travel to it and deliver any passengers or goods on board. The vehicle will be self-managing, in the sense that it should handle any scenario by its own means and perform the whole driving task without human intervention; i.e., it should respond competently to any unexpected situation on its own. Despite the hype surrounding fully autonomous cars, level five autonomy is still some time away from entering our cities. The coexistence of automated vehicles with pedestrians and other vehicles (autonomous or not) can cause severe conflicts in the normal flow of urban traffic. Even escalating an incident to a remote operator may introduce a delay that inconveniences everyone else involved in the scenario. For instance, an AGV crossing an intersection interacts with multiple traffic actors, and any malfunction that is not resolved immediately affects everyone, not only the transport of goods. For these reasons, current automated delivery vehicles still rely on remote operators who can intervene and cope with dangerous or unexpected situations. At the same time, companies that deploy such vehicles aim to minimize the ratio of remote operators to fleet size, ideally reducing it to zero, in order to maximize their profits.
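The remote-operator-to-fleet-size ratio can be estimated with a back-of-the-envelope calculation. The sketch below is a simple load-based rule of thumb, not a model from this paper: it assumes a hypothetical average intervention rate per vehicle-hour, a mean handling time per intervention, and a target operator utilization kept below 100% so that new incidents can be picked up quickly. A more careful sizing would use a queueing model such as Erlang C, and all parameter values shown are illustrative.

```python
import math


def remote_operators_needed(fleet_size: int,
                            interventions_per_vehicle_hour: float,
                            mean_handling_minutes: float,
                            target_utilization: float = 0.7) -> int:
    """Rough number of remote operators needed to supervise a delivery fleet.

    All inputs are assumptions supplied by the planner; none come from field data.
    """
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    # Offered load in Erlangs: average number of interventions in progress at any moment.
    load = fleet_size * interventions_per_vehicle_hour * mean_handling_minutes / 60.0
    # Staff so that each operator is busy at most target_utilization of the time.
    return math.ceil(load / target_utilization)


# Hypothetical fleet: 100 robots, one intervention every two hours, 3 minutes each.
operators = remote_operators_needed(100, 0.5, 3.0)
print(operators, operators / 100)  # operators and operator-to-fleet ratio
```

The formula makes the argument of the text explicit: the ratio only reaches zero when the intervention rate does, which is what full (level five) autonomy would provide.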

These reasons create the need for level five autonomous systems: although such systems cannot be deployed at this time, they will become a reality for last-mile delivery services once the issues mentioned above have been solved. However, their deployment requires accompanying technologies to be developed. The benefits of level five autonomy include the continuous optimization of each vehicle's route, conditioned on its current state and its environment. To accomplish this, a real-time expert system for monitoring traffic and weather conditions is essential. Such a system should include predictive models of how traffic will evolve in the areas the AGV is about to traverse. Intelligent decision-making systems will then enable the vehicle to reroute dynamically while completing the last mile, thus saving operating time and fuel. In turn, such continuous optimization will not only improve enterprises' revenues but also reduce congestion on city streets and, consequently, the overall CO2 footprint.
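Dynamic rerouting based on predicted traffic can be illustrated with a shortest-path search whose edge costs are updated from a forecast. The sketch below assumes a hypothetical road graph with free-flow travel times in minutes and a dictionary of predicted congestion multipliers per road segment (a stand-in for the output of the predictive traffic models described above). It uses Dijkstra's algorithm; re-running it whenever the forecast changes yields the new fastest route. The graph, node names, and multipliers are illustrative only.

```python
import heapq
import math
from typing import Dict, List, Tuple

Graph = Dict[str, List[Tuple[str, float]]]  # node -> [(neighbor, free-flow minutes)]


def fastest_route(graph: Graph, source: str, target: str,
                  congestion: Dict[Tuple[str, str], float]) -> Tuple[float, List[str]]:
    """Dijkstra's algorithm with edge cost = free-flow time * predicted congestion factor.

    Segments missing from `congestion` are assumed to flow freely (factor 1.0).
    Returns (travel_minutes, path), or (math.inf, []) if the target is unreachable.
    """
    dist = {source: 0.0}
    previous: Dict[str, str] = {}
    heap = [(0.0, source)]
    visited = set()
    while heap:
        d, u = heapq.heappop(heap)
        if u in visited:
            continue  # stale heap entry; a shorter distance was already settled
        visited.add(u)
        if u == target:
            break
        for v, free_flow in graph.get(u, []):
            cost = d + free_flow * congestion.get((u, v), 1.0)
            if cost < dist.get(v, math.inf):
                dist[v] = cost
                previous[v] = u
                heapq.heappush(heap, (cost, v))
    if target not in dist:
        return math.inf, []
    path = [target]
    while path[-1] != source:
        path.append(previous[path[-1]])
    return dist[target], path[::-1]


roads: Graph = {"hub": [("A", 5.0), ("B", 7.0)], "A": [("customer", 5.0)], "B": [("customer", 4.0)]}
print(fastest_route(roads, "hub", "customer", {}))                        # free flow: via A
print(fastest_route(roads, "hub", "customer", {("A", "customer"): 2.0}))  # forecast jam: via B
```

In a deployed system the congestion factors would be refreshed continuously, and the vehicle would replan from its current position rather than from the hub.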

6. Conclusions

Urban logistics has become more prevalent in recent years due to market digitization and the subsequent increase in online sales. However, last-mile logistics entails a number of externalities, and researchers and businesses are constantly looking for ways to increase its efficiency, both by relying on conventional approaches and by turning to recently developed methodologies connected to artificial intelligence. This paper presented a conceptual approach to the challenges and solutions of driverless last-mile delivery. Notably, it summarized the key scientific approaches formulated by researchers in recent years to improve the performance of urban logistics, covering both established operational research techniques and state-of-the-art machine learning methodologies. Further advancement of the perception and cognition capabilities of autonomous vehicles, enabling them to operate in urban environments and cope with the challenges they encounter, is essential to increasing automation in last-mile delivery. Smart data processing and the handling of the AVs' perception systems should be combined with efficient machine learning strategies, e.g., multi-task learning algorithms that relieve the computational burden on AVs and enable efficient reuse of learned knowledge with fewer resources. In the cognition domain, synergistic robotic and behavioral planning should be applied so that autonomous agents can rapidly exchange and share information and thus cope with complex navigation and delivery missions in urban and unstructured environments with heavy traffic, pedestrian crowds, and unexpected situations; 5G technology is also anticipated to contribute to this information exchange. Particular attention should be paid to safe, reliable, and ubiquitous connectivity across these technological domains.
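The computational argument for multi-task learning can be shown with simple arithmetic. Under hard parameter sharing, one backbone network extracts features once per frame and several lightweight task heads (for example, object detection, drivable-area segmentation, and lane detection) reuse them, instead of running a separate full network per task. The sketch below counts multiply-accumulate operations (MACs) for both designs; the backbone and head costs are hypothetical round numbers chosen only to illustrate the comparison, not measurements of any specific model.

```python
def inference_macs(backbone_macs: int, head_macs: int, n_tasks: int,
                   shared_backbone: bool) -> int:
    """Multiply-accumulate operations needed to process one input frame.

    shared_backbone=True: hard parameter sharing; the backbone runs once and each
    task adds only its own head.
    shared_backbone=False: one independent network (backbone + head) per task.
    """
    if shared_backbone:
        return backbone_macs + n_tasks * head_macs
    return n_tasks * (backbone_macs + head_macs)


# Hypothetical costs: 8 GMAC backbone, 0.5 GMAC per task head, three perception tasks.
shared = inference_macs(8_000_000_000, 500_000_000, 3, shared_backbone=True)
separate = inference_macs(8_000_000_000, 500_000_000, 3, shared_backbone=False)
print(shared, separate, round(shared / separate, 2))
```

With these assumed numbers, the shared design needs roughly a third of the computation, and the savings grow with the number of tasks because the backbone cost is paid only once.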
Finally, another challenge is interconnecting current robotic process automation, which has already reached a significant technological level, with autonomous vehicles such as trucks and robots. This interconnection would enable the seamless integration of these technologies in logistics and would improve the robustness and performance of the supply chain by ensuring the prompt transportation and delivery of goods. In conclusion, the intended impact of this work is to focus the attention, and hence the research efforts, of scholars on the weaknesses of robotic perception, cognition, behavioral navigation, and supply chain organization, where major flaws and limitations exist, so as to expedite the introduction of level five driverless vehicles in unconstrained urban and rural environments.

Author Contributions

Conceptualization, V.B., K.T., D.G., I.K., D.F. and A.G.; methodology, V.B., K.T., D.G. and I.K.; validation, A.G. and D.T.; writing—original draft preparation, V.B., K.T., D.F. and I.K.; writing—review and editing, V.B., K.T. and I.K.; visualization, V.B., K.T. and A.G.; supervision, A.G. and D.T.; project administration, A.G. and D.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AGVs | Automated guided vehicles |
| AS/RS | Automated storage and retrieval systems |
| AVs | Autonomous vehicles |
| GNSS | Global navigation satellite system |
| GTP | Goods-to-person |
| IMU | Inertial measurement unit |
| IoT | Internet of things |
| RFID | Radiofrequency identification |
| SAV | Shared autonomous vehicles |
| V2E | Vehicle-to-everything |
| V2I | Vehicle-to-infrastructure |
| V2V | Vehicle-to-vehicle |
| WMS | Warehouse management system |
| WSN | Wireless sensor network |

References

- Boonsothonsatit, G.; Hankla, N.; Choowitsakunlert, S. Strategic Design for Warehouse 4.0 Readiness in Thailand. In Proceedings of the 2020 2nd International Conference on Management Science and Industrial Engineering, Osaka, Japan, 7–9 April 2020; pp. 214–218.

- Marsden, N.; Bernecker, T.; Zöllner, R.; Sußmann, N.; Kapser, S. BUGA:log – A real-world laboratory approach to designing an automated transport system for goods in urban areas. In Proceedings of the 2018 IEEE International Conference on Engineering, Technology and Innovation (ICE/ITMC), Stuttgart, Germany, 17–20 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–9.

- Taniguchi, E. City logistics for sustainable and liveable cities. In Green Logistics and Transportation; Springer: Berlin/Heidelberg, Germany, 2015; pp. 49–60.

- Faisal, A.; Kamruzzaman, M.; Yigitcanlar, T.; Currie, G. Understanding autonomous vehicles. J. Transp. Land Use 2019, 12, 45–72.

- Impact of Self-Driving Vehicles on Freight Transportation and City Logistics. Available online: https://www.ebp.ch/en/projects/impact-self-driving-vehicles-freight-transportation-and-city-logistics (accessed on 30 June 2022).

- Ranieri, L.; Digiesi, S.; Silvestri, B.; Roccotelli, M. A review of last mile logistics innovations in an externalities cost reduction vision. Sustainability 2018, 10, 782.

- Assmann, T.; Bobeth, S.; Fischer, E. A conceptual framework for planning transhipment facilities for cargo bikes in last mile logistics. In Proceedings of the Conference on Sustainable Urban Mobility, Skiathos Island, Greece, 24–25 May 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 575–582.

- Joerss, M.; Schröder, J.; Neuhaus, F.; Klink, C.; Mann, F. Parcel Delivery. The Future of Last Mile; McKinsey & Company: New York, NY, USA, 2016.

- Ruppik, D. Innovationen auf der letzten Meile. Int. Verkehrswesen 2021, 72, 32–33.

- Hu, W.; Dong, J.; Hwang, B.G.; Ren, R.; Chen, Z. A scientometrics review on city logistics literature: Research trends, advanced theory and practice. Sustainability 2019, 11, 2724.

- Behiri, W.; Belmokhtar-Berraf, S.; Chu, C. Urban freight transport using passenger rail network: Scientific issues and quantitative analysis. Transp. Res. Part E Logist. Transp. Rev. 2018, 115, 227–245.

- Park, H.; Park, D.; Jeong, I.J. An effects analysis of logistics collaboration in last-mile networks for CEP delivery services. Transp. Policy 2016, 50, 115–125.

- Arvianto, A.; Sopha, B.M.; Asih, A.M.S.; Imron, M.A. City logistics challenges and innovative solutions in developed and developing economies: A systematic literature review. Int. J. Eng. Bus. Manag. 2021, 13, 18479790211039723.

- He, Z. The challenges in sustainability of urban freight network design and distribution innovations: A systematic literature review. Int. J. Phys. Distrib. Logist. Manag. 2020, 50, 601–640.

- Boysen, N.; Schwerdfeger, S.; Weidinger, F. Scheduling last-mile deliveries with truck-based autonomous robots. Eur. J. Oper. Res. 2018, 271, 1085–1099.

- Poeting, M.; Schaudt, S.; Clausen, U. A comprehensive case study in last-mile delivery concepts for parcel robots. In Proceedings of the 2019 Winter Simulation Conference (WSC), Fort Washington, MD, USA, 8–11 December 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1779–1788.

- Figliozzi, M.; Jennings, D. Autonomous delivery robots and their potential impacts on urban freight energy consumption and emissions. Transp. Res. Procedia 2020, 46, 21–28.

- Scherr, Y.O.; Saavedra, B.A.N.; Hewitt, M.; Mattfeld, D.C. Service network design with mixed autonomous fleets. Transp. Res. Part E Logist. Transp. Rev. 2019, 124, 40–55.

- Chen, C.; Demir, E.; Huang, Y.; Qiu, R. The adoption of self-driving delivery robots in last mile logistics. Transp. Res. Part E Logist. Transp. Rev. 2021, 146, 102214.

- Beirigo, B.A.; Schulte, F.; Negenborn, R.R. Integrating people and freight transportation using shared autonomous vehicles with compartments. IFAC-PapersOnLine 2018, 51, 392–397.

- Tholen, M.v.d.; Beirigo, B.A.; Jovanova, J.; Schulte, F. The Share-A-Ride Problem with Integrated Routing and Design Decisions: The Case of Mixed-Purpose Shared Autonomous Vehicles. In Proceedings of the International Conference on Computational Logistics, Enschede, The Netherlands, 27–29 September 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 347–361.

- Simoni, M.D.; Kutanoglu, E.; Claudel, C.G. Optimization and analysis of a robot-assisted last mile delivery system. Transp. Res. Part E Logist. Transp. Rev. 2020, 142, 102049.

- Haas, I.; Friedrich, B. Developing a micro-simulation tool for autonomous connected vehicle platoons used in city logistics. Transp. Res. Procedia 2017, 27, 1203–1210.

- Csiszár, C.; Földes, D. System model for autonomous road freight transportation. Promet Traffic Transp. 2018, 30, 93–103.

- Onozuka, Y.; Matsumi, R.; Shino, M. Autonomous Mobile Robot Navigation Independent of Road Boundary Using Driving Recommendation Map. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 4501–4508.

- Cascetta, E.; Carteni, A.; Di Francesco, L. Do autonomous vehicles drive like humans? A Turing approach and an application to SAE automation Level 2 cars. Transp. Res. Part C Emerg. Technol. 2022, 134, 103499.

- Brell, T.; Philipsen, R.; Ziefle, M. sCARy! Risk perceptions in autonomous driving: The influence of experience on perceived benefits and barriers. Risk Anal. 2019, 39, 342–357.

- Nastjuk, I.; Herrenkind, B.; Marrone, M.; Brendel, A.B.; Kolbe, L.M. What drives the acceptance of autonomous driving? An investigation of acceptance factors from an end-user's perspective. Technol. Forecast. Soc. Chang. 2020, 161, 120319.

- Tsiakas, K.; Kostavelis, I.; Giakoumis, D.; Tzovaras, D. Road tracking in semi-structured environments using spatial distribution of lidar data. In Proceedings of the International Conference on Pattern Recognition, Virtual, 17–19 March 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 432–445.

- Kocić, J.; Jovičić, N.; Drndarević, V. Sensors and sensor fusion in autonomous vehicles. In Proceedings of the 2018 26th Telecommunications Forum (TELFOR), Belgrade, Serbia, 20–21 November 2018; pp. 420–425.

- Balaska, V.; Bampis, L.; Boudourides, M.; Gasteratos, A. Unsupervised semantic clustering and localization for mobile robotics tasks. Robot. Auton. Syst. 2020, 131, 103567.

- Balaska, V.; Bampis, L.; Kansizoglou, I.; Gasteratos, A. Enhancing satellite semantic maps with ground-level imagery. Robot. Auton. Syst. 2021, 139, 103760.

- Li, Y.; Ibanez-Guzman, J. Lidar for autonomous driving: The principles, challenges, and trends for automotive lidar and perception systems. IEEE Signal Process. Mag. 2020, 37, 50–61.

- Liu, L.; Lu, S.; Zhong, R.; Wu, B.; Yao, Y.; Zhang, Q.; Shi, W. Computing Systems for Autonomous Driving: State of the Art and Challenges. IEEE Internet Things J. 2021, 8, 6469–6486.

- Hecht, J. Lidar for self-driving cars. Opt. Photonics News 2018, 29, 26–33.

- Bilik, I.; Longman, O.; Villeval, S.; Tabrikian, J. The rise of radar for autonomous vehicles: Signal processing solutions and future research directions. IEEE Signal Process. Mag. 2019, 36, 20–31.

- Feng, D.; Haase-Schütz, C.; Rosenbaum, L.; Hertlein, H.; Glaeser, C.; Timm, F.; Wiesbeck, W.; Dietmayer, K. Deep multi-modal object detection and semantic segmentation for autonomous driving: Datasets, methods, and challenges. IEEE Trans. Intell. Transp. Syst. 2020, 22, 1341–1360.

- Kostavelis, I.; Gasteratos, A. Semantic mapping for mobile robotics tasks: A survey. Robot. Auton. Syst. 2015, 66, 86–103.

- Ackerman, E. Hail, robo-taxi! [Top Tech 2017]. IEEE Spectr. 2017, 54, 26–29.

- Shreyas, V.; Bharadwaj, S.N.; Srinidhi, S.; Ankith, K.; Rajendra, A. Self-driving cars: An overview of various autonomous driving systems. Adv. Data Inf. Sci. 2020, 94, 361–371.

- SAE On-Road Automated Vehicle Standards Committee. Taxonomy and definitions for terms related to on-road motor vehicle automated driving systems. SAE Stand. J. 2014, 3016, 1–16.

- Jurgen, R. Self-Configuration and Self-Healing in AUTOSAR (2007-01-3507). In Automotive Electronics Reliability; IEEE: Piscataway, NJ, USA, 2010; pp. 145–156.

- Mi, L.; Zhao, H.; Nash, C.; Jin, X.; Gao, J.; Sun, C.; Schmid, C.; Shavit, N.; Chai, Y.; Anguelov, D. HDMapGen: A hierarchical graph generative model of high definition maps. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 4227–4236.

- Tsiakas, K.; Kostavelis, I.; Gasteratos, A.; Tzovaras, D. Autonomous Vehicle Navigation in Semi-structured Environments Based on Sparse Waypoints and LiDAR Road-tracking. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1244–1250.

- Knoop, V.L.; De Bakker, P.F.; Tiberius, C.C.; Van Arem, B. Lane determination with GPS precise point positioning. IEEE Trans. Intell. Transp. Syst. 2017, 18, 2503–2513.

- Zhao, C.; Li, L.; Pei, X.; Li, Z.; Wang, F.Y.; Wu, X. A comparative study of state-of-the-art driving strategies for autonomous vehicles. Accid. Anal. Prev. 2021, 150, 105937.

- Balaska, V.; Bampis, L.; Gasteratos, A. Self-localization based on terrestrial and satellite semantics. Eng. Appl. Artif. Intell. 2022, 111, 104824.

- Wang, H.; Wang, C.; Xie, L. Intensity scan context: Coding intensity and geometry relations for loop closure detection. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 2095–2101.

- Qin, T.; Chen, T.; Chen, Y.; Su, Q. AVP-SLAM: Semantic visual mapping and localization for autonomous vehicles in the parking lot. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 20–25 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 5939–5945.

- González, D.; Pérez, J.; Milanés, V.; Nashashibi, F. A review of motion planning techniques for automated vehicles. IEEE Trans. Intell. Transp. Syst. 2015, 17, 1135–1145.

- Gu, T.; Dolan, J.M. On-road motion planning for autonomous vehicles. In Proceedings of the International Conference on Intelligent Robotics and Applications, Montreal, QC, Canada, 3–5 October 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 588–597.

- Zhang, C.; Chu, D.; Liu, S.; Deng, Z.; Wu, C.; Su, X. Trajectory planning and tracking for autonomous vehicle based on state lattice and model predictive control. IEEE Intell. Transp. Syst. Mag. 2019, 11, 29–40.

- Combs, T.S.; Sandt, L.S.; Clamann, M.P.; McDonald, N.C. Automated vehicles and pedestrian safety: Exploring the promise and limits of pedestrian detection. Am. J. Prev. Med. 2019, 56, 1–7.

- Navarro, P.J.; Fernandez, C.; Borraz, R.; Alonso, D. A machine learning approach to pedestrian detection for autonomous vehicles using high-definition 3D range data. Sensors 2016, 17, 18.

- Boukerche, A.; Sha, M. Design guidelines on deep learning–based pedestrian detection methods for supporting autonomous vehicles. ACM Comput. Surv. 2021, 54, 1–36.

- Wu, D.; Liao, M.; Zhang, W.; Wang, X. YOLOP: You Only Look Once for Panoptic Driving Perception. arXiv 2021, arXiv:2108.11250.

- Luo, C.; Yang, X.; Yuille, A. Self-supervised pillar motion learning for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 3183–3192.

- Jadaan, K.; Zeater, S.; Abukhalil, Y. Connected vehicles: An innovative transport technology. Procedia Eng. 2017, 187, 641–648.

- Abdulsattar, H.; Mostafizi, A.; Siam, M.R.; Wang, H. Measuring the impacts of connected vehicles on travel time reliability in a work zone environment: An agent-based approach. J. Intell. Transp. Syst. 2020, 24, 421–436.

- Al Mallah, R.; Quintero, A.; Farooq, B. Prediction of Traffic Flow via Connected Vehicles. IEEE Trans. Mob. Comput. 2020, 21, 264–277.

- Zaman, U.K.U.; Aqeel, A.B.; Naveed, K.; Asad, U.; Nawaz, H.; Gufran, M. Development of Automated Guided Vehicle for Warehouse Automation of a Textile Factory. In Proceedings of the 2021 International Conference on Robotics and Automation in Industry (ICRAI), Rawalpindi, Pakistan, 26–27 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–6.

- Cao, J.; Zhang, S. Research and Design of RFID-based Equipment Incident Management System for Industry 4.0. In Proceedings of the 2016 4th International Conference on Electrical & Electronics Engineering and Computer Science (ICEEECS 2016), Jinan, China, 15–16 October 2016; Atlantis Press: Paris, France, 2016; pp. 889–894.

Publisher's Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).