1. Introduction

Aero-engine accidents can cause casualties and serious, irreversible consequences. To prevent such accidents, the remaining useful life of aero-engines must be predicted in a timely and effective manner.

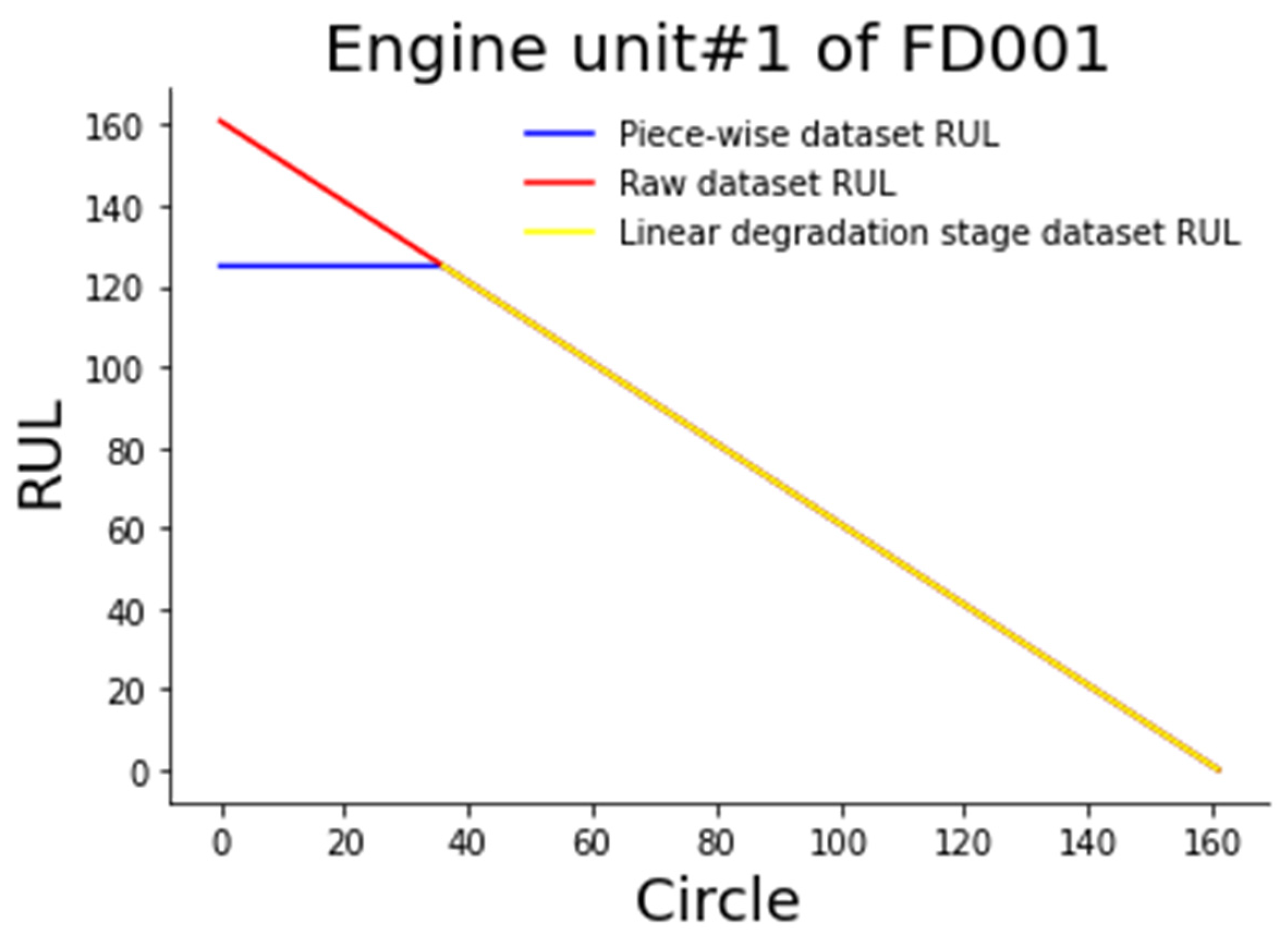

RUL (remaining useful life) prediction methods are generally divided into model-based methods, data-driven methods, and hybrid methods (combinations of the former two). For example, Jiao [1] first used two LSTM (long short-term memory) networks to extract features from monitoring data and maintenance data, respectively, then stacked the two features and fed them to a fully connected layer to obtain a health index, and finally built a state space model of the health index and obtained the RUL through extrapolation. PSW (phase space warping) describes the dynamic behavior of the tested bearing on the fast time scale. As a physics-based model, the Paris crack propagation model describes the defect propagation of the bearing on the slow time scale. Qian [2] completed the RUL prediction of the bearing by combining the enhanced PSW with the modified Paris crack propagation model, thereby using information from both the fast and the slow time scales. Because complex working conditions and internal mechanisms hinder the construction of physical models, model-based RUL prediction is difficult to implement experimentally. Since the data-driven method needs only historical monitoring data, it is receiving more and more attention. In recent years, with the rapid development of big data and computing power, artificial intelligence has been widely used in RUL prediction.

A variety of artificial intelligence methods have been applied to predict the remaining useful life. Manjurul Islam [3] defined a degree of defect (DD) metric in the frequency domain and inferred the health index of the bearing. Then, from the health index and a least squares support vector machine, the start time (TTS) point of RUL prediction was obtained, and the RUL of the bearing was predicted using recurrent least squares support vector regression (recurrent LSSVR). Yu [4] used multi-scale residual temporal convolutional networks (MSR-TCN) to extract information at multiple scales for a more comprehensive analysis of health status, and combined them with an attention mechanism to suppress the impact of low-correlation data when predicting engine RUL. Zhang [5] selected 14 sensor signals as the original signals and, through a multi-objective evolutionary ensemble learning method, evolved multiple DBNs (deep belief networks) simultaneously, taking accuracy and diversity as two conflicting objectives. The final diagnosis model was then obtained by combining the multiple DBNs and achieved better results than several other models.

Because the data used in RUL prediction are mostly time series and RUL itself is time dependent, RNNs (recurrent neural networks), which have stronger processing ability for time-series data, are widely used in RUL prediction. Zheng [6] obtained health factors through feature selection and PCA, and then fed the health factors and labels into an LSTM to predict the remaining useful life. Wu [7] selected sensor data using monotonicity and correlation, and then predicted the remaining useful life of the aero-engine with an LSTM optimized by the grid search algorithm. Peng [8] used a VAE-GAN (variational autoencoder-generative adversarial network) to generate the health index of the current state, used a BLSTM (bidirectional long short-term memory) network to generate future sensor data sequences, and then obtained the health index from the current and future states and extrapolated it to obtain the RUL.

A variety of methods have been adopted to improve the accuracy and speed of remaining useful life prediction. For example, some works improve accuracy and speed by changing the network structure [9,10,11]. However, it is not easy to tailor the network structure to a specific problem in order to improve performance. It is easier to improve the prediction accuracy by enriching the state information, and many methods take this simpler route. Approaches to enriching the state information include extracting multiple features [12,13,14,15,16], extracting multi-channel features [17,18,19,20], extracting both spatial and temporal features, extracting multi-scale features [21,22,23], and considering the temporal and spatial dependence of sensors [24].

Since different faults lead to different degradation patterns, fault features are important state information for the accuracy of remaining useful life prediction. Considering this, Xia [25] established a model on the state data under each fault state and then used the outputs of the models for multiple degradation modes to obtain the final result. Cheng [26] used two outputs of a transferable convolutional neural network (TCNN) to obtain the fault mode and RUL, respectively. Chen [27] proposed classifying the degradation pattern of bearings into slow degradation and fast degradation according to RMS, and then used a BLSTM and an attention mechanism for remaining useful life prediction. At present, engine remaining useful life prediction combined with fault information has not received enough attention. Moreover, the above methods do not directly extract the fault features and use them as independent information, so the information that distinguishes the degradation modes is not prominent, which reduces the diagnosis accuracy for different degradation modes.

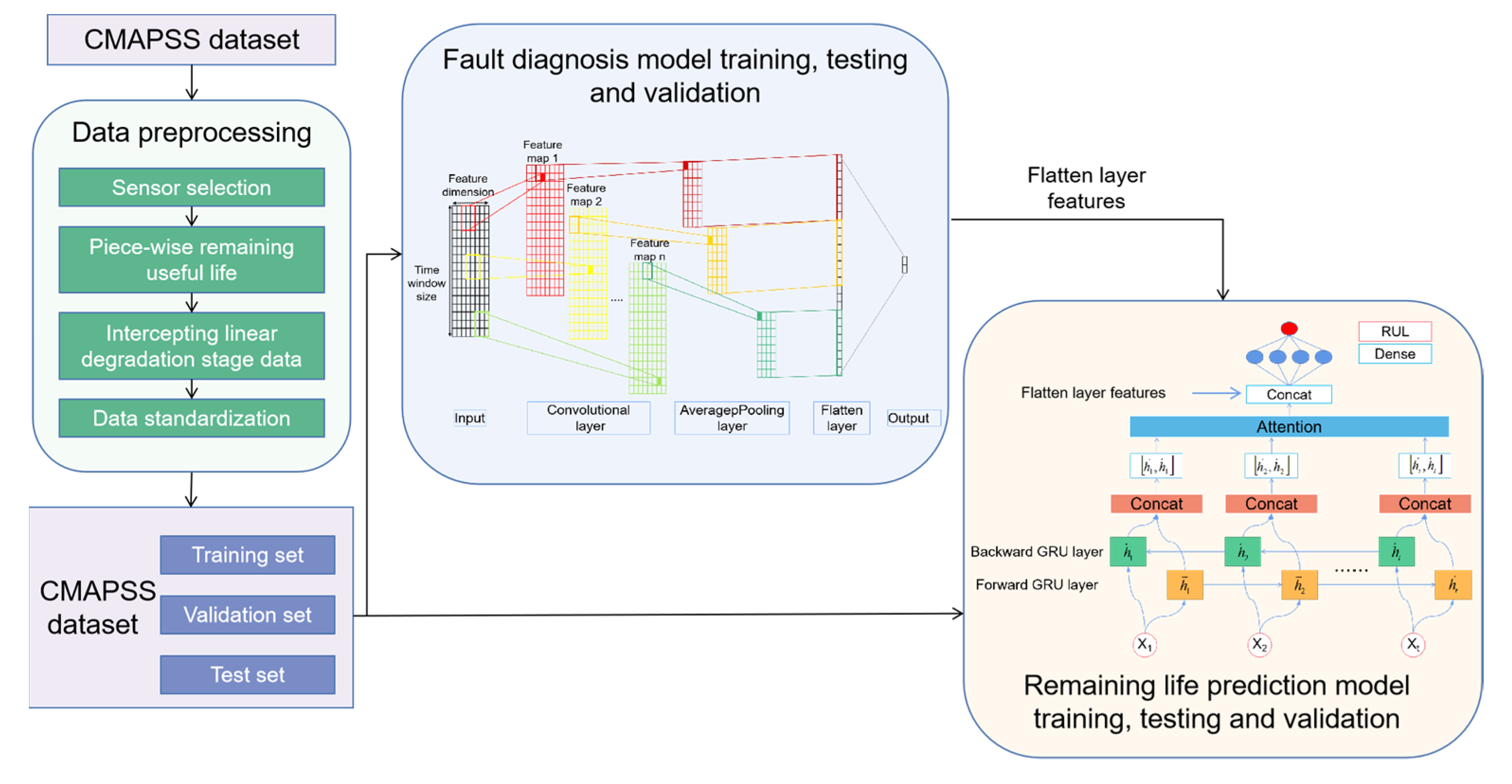

To involve the fault features as independent information in remaining useful life prediction, this paper first uses a CNN as a fault diagnosis network to classify faults and obtain fault features from it. Then, a remaining useful life prediction model based on BIGRU and the attention mechanism is developed and combined with the fault features for remaining useful life prediction.

4. Experimental Results and Analysis

4.1. Fault Diagnosis Model Results

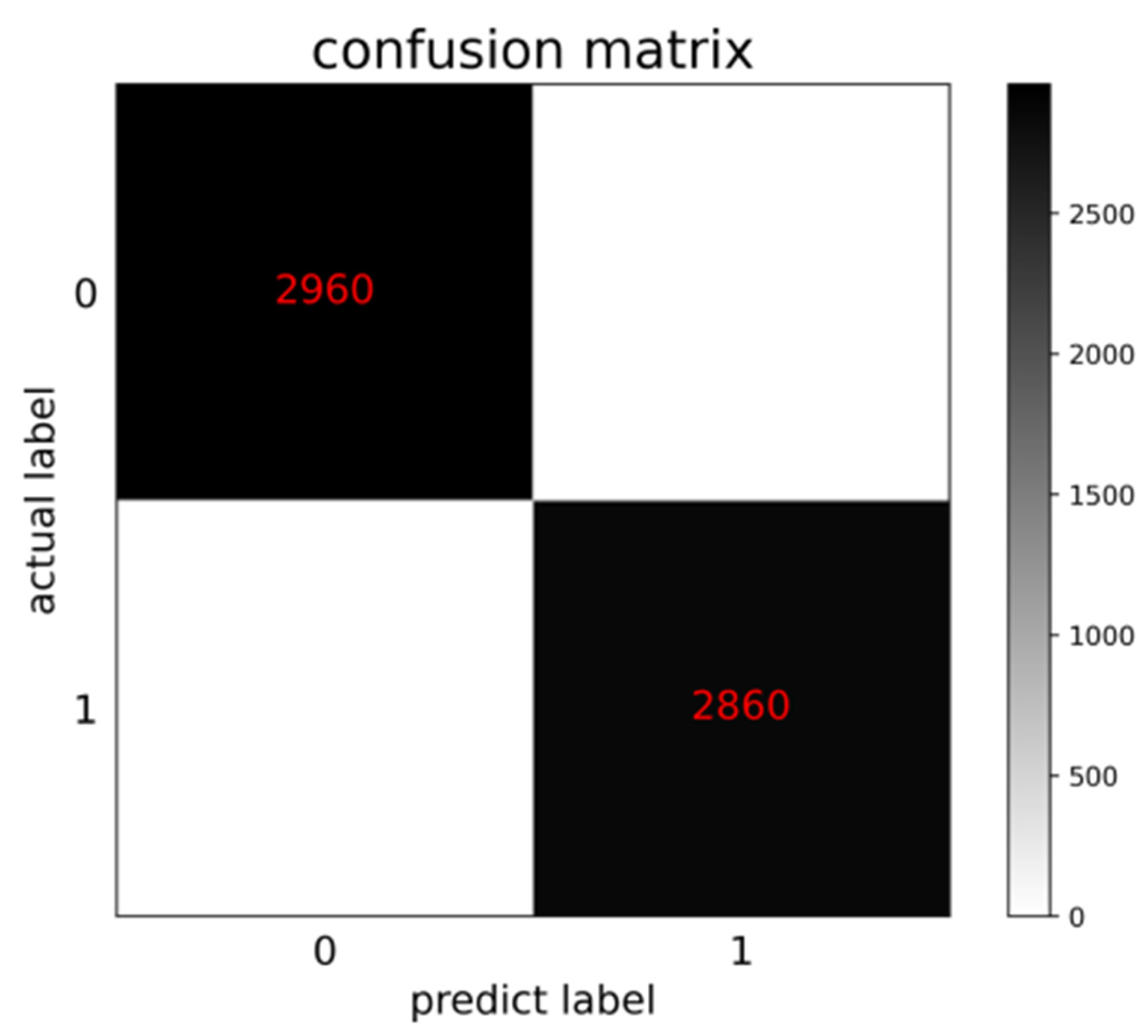

To extract fault information for remaining useful life prediction, the paper constructed a CNN-based fault diagnosis network. The output of the flatten layer of the fault diagnosis model was used as the fault information for the subsequent remaining useful life prediction.
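To make the idea of "flatten-layer output as fault features" concrete, the following is a minimal pure-Python sketch of one convolutional layer followed by ReLU and flattening. It is not the paper's network: the window values, the two kernels, and the function names are hypothetical, and a trained CNN would learn its kernels rather than use fixed ones.

```python
def conv1d_valid(signal, kernel):
    """Slide `kernel` over `signal` without padding (cross-correlation)."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def relu(values):
    """Element-wise ReLU activation."""
    return [max(0.0, v) for v in values]


def flatten_features(signal, kernels):
    """Apply every kernel, then ReLU, then concatenate all feature maps.

    The ordering here is kernel by kernel; a deep learning framework may
    order the flattened values differently.
    """
    feature_maps = [relu(conv1d_valid(signal, k)) for k in kernels]
    return [v for fmap in feature_maps for v in fmap]


# Hypothetical sensor window and kernels (illustrative values only).
window = [1.0, 2.0, 4.0, 3.0, 1.0]
kernels = [[1.0, -1.0], [0.5, 0.5]]
fault_features = flatten_features(window, kernels)
print(fault_features)
```

With more kernels, the flattened vector grows proportionally, which is one way to read the statement that more kernels yield a greater variety of extracted features.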

The parameters of the fault diagnosis model are shown in Table 3.

We validated the performance of the model using the test sets. The confusion matrix of the fault diagnosis model on the test sets is shown in Figure 5. The horizontal axis represents the predicted labels, and the vertical axis represents the true labels. The main diagonal gives the number of samples that the model predicted correctly. The test accuracy of the model reaches 100%, which verifies the generalization ability and performance of the model.
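As an illustration of how such a confusion matrix is read, the sketch below builds one with rows as true labels and columns as predicted labels and computes accuracy from the main diagonal. The two-class label lists are hypothetical and are not the paper's test data.

```python
def confusion_matrix(true_labels, predicted_labels, num_classes):
    """Rows index the true label, columns index the predicted label."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(true_labels, predicted_labels):
        matrix[t][p] += 1
    return matrix


def accuracy(matrix):
    """Fraction of samples on the main diagonal (correct predictions)."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


# Hypothetical labels: a perfect classifier and one with a single mistake.
y_true = [0, 0, 1, 1, 1, 0]
perfect = confusion_matrix(y_true, [0, 0, 1, 1, 1, 0], num_classes=2)
one_error = confusion_matrix(y_true, [0, 1, 1, 1, 1, 0], num_classes=2)

print(perfect, accuracy(perfect))
print(one_error, accuracy(one_error))
```

A 100% test accuracy corresponds to a matrix whose off-diagonal entries are all zero, as in the first case.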

4.2. Comparative Test and Analysis of Influencing Factors of Fault Diagnosis Model

4.2.1. Comparative Experimental Analysis of the Number of Convolution Kernels

To verify the reasonableness of the number of convolutional kernels, comparison experiments were conducted on four numbers of convolutional kernels, 2, 4, 8, and 16.

The results in Table 4 show that the highest testing accuracy was achieved when the number of convolutional kernels was 16. The data in the table also show that, as the number of convolutional kernels increases, both the variety of extracted features and the testing accuracy improve. The comparison shows that the number of convolutional kernels chosen in the paper is reasonable.

4.2.2. Comparative Experimental Analysis of Convolution Layers

To verify the reasonableness of the number of convolutional layers, comparison experiments were conducted with three layer counts: 1, 2, and 3.

The results in Table 5 show that the test accuracy of the fault diagnosis model is highest when the number of convolutional layers is 1. This verifies the reasonableness of the number of convolutional layers selected in the paper.

4.2.3. Comparative Experimental Analysis of Convolution Activation Function

To verify the rationality of the activation function of the convolutional layer, a comparison experiment between two activation functions, ‘tanh’ and ‘ReLU’, was conducted.

The results in Table 6 show that the highest testing accuracy was achieved when the activation function was ‘ReLU’. This verifies the reasonableness of the activation function selected in the paper.

4.3. Prediction Results of Remaining Useful Life

Since different fault states lead to different degradation patterns, the paper constructed a CNN-based fault diagnosis model and extracted fault information from it. Then, the remaining useful life prediction model based on BIGRU and the attention mechanism was combined with the fault information for remaining useful life prediction.
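The combination described above can be sketched as attention pooling over the recurrent hidden states followed by fusion with the fault feature vector. This section does not specify the attention form or the fusion operator, so the dot-product scoring, the fixed `query` vector, and fusion by concatenation are all assumptions made for illustration; all names and values are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden_states, query):
    """Score each time step against `query`, normalise, and take a weighted sum.

    In a trained model `query` would be learned; here it is a fixed assumption.
    """
    scores = [sum(h_i * q_i for h_i, q_i in zip(h, query)) for h in hidden_states]
    weights = softmax(scores)
    dim = len(hidden_states[0])
    context = [
        sum(w * h[d] for w, h in zip(weights, hidden_states)) for d in range(dim)
    ]
    return context, weights


def fuse(context, fault_features):
    """Assumed fusion: concatenate the context vector with the fault features."""
    return context + fault_features


# Hypothetical hidden states for two time steps and a hypothetical fault vector.
hidden_states = [[1.0, 0.0], [3.0, 2.0]]
context, weights = attention_pool(hidden_states, query=[0.0, 0.0])
fused = fuse(context, fault_features=[0.0, 1.0])
print(weights, context, fused)
```

With an all-zero query every time step receives the same weight, so the context reduces to the mean of the hidden states; a non-zero query shifts weight toward the time steps that align with it.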

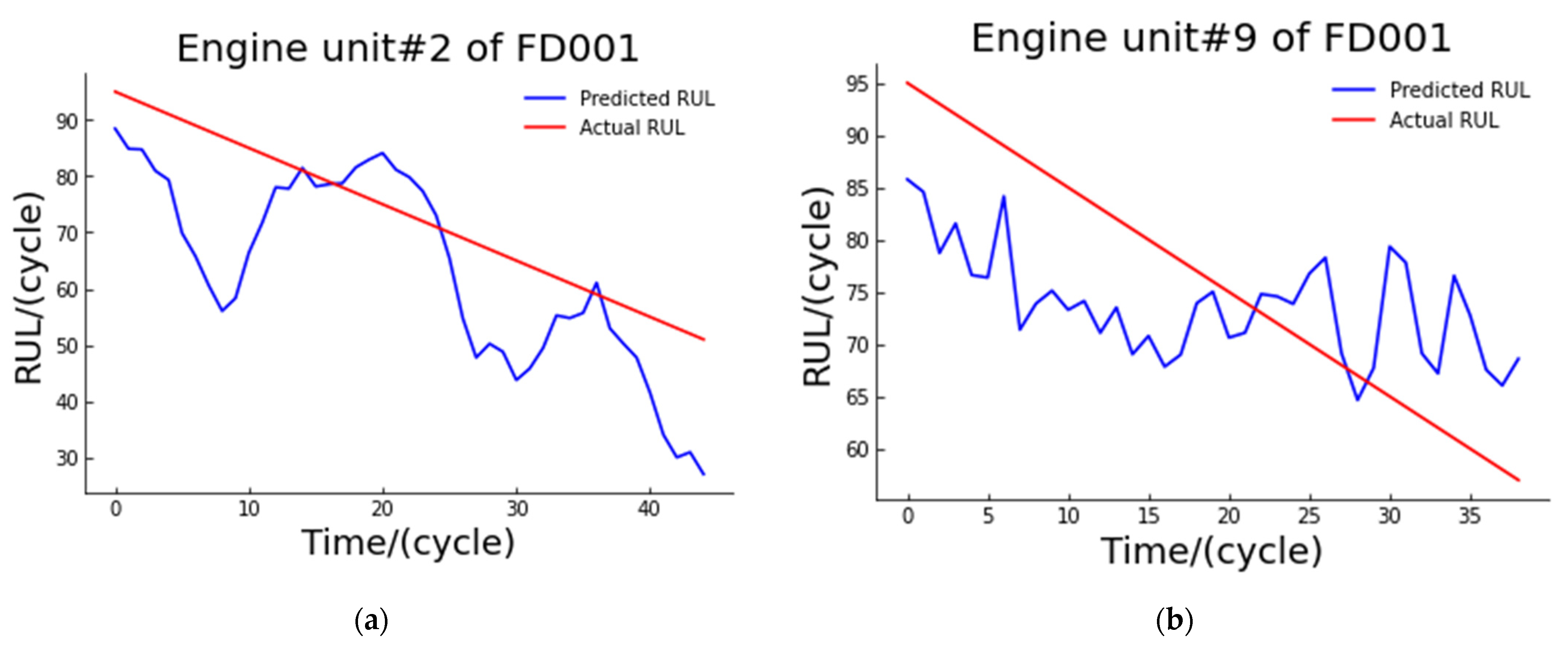

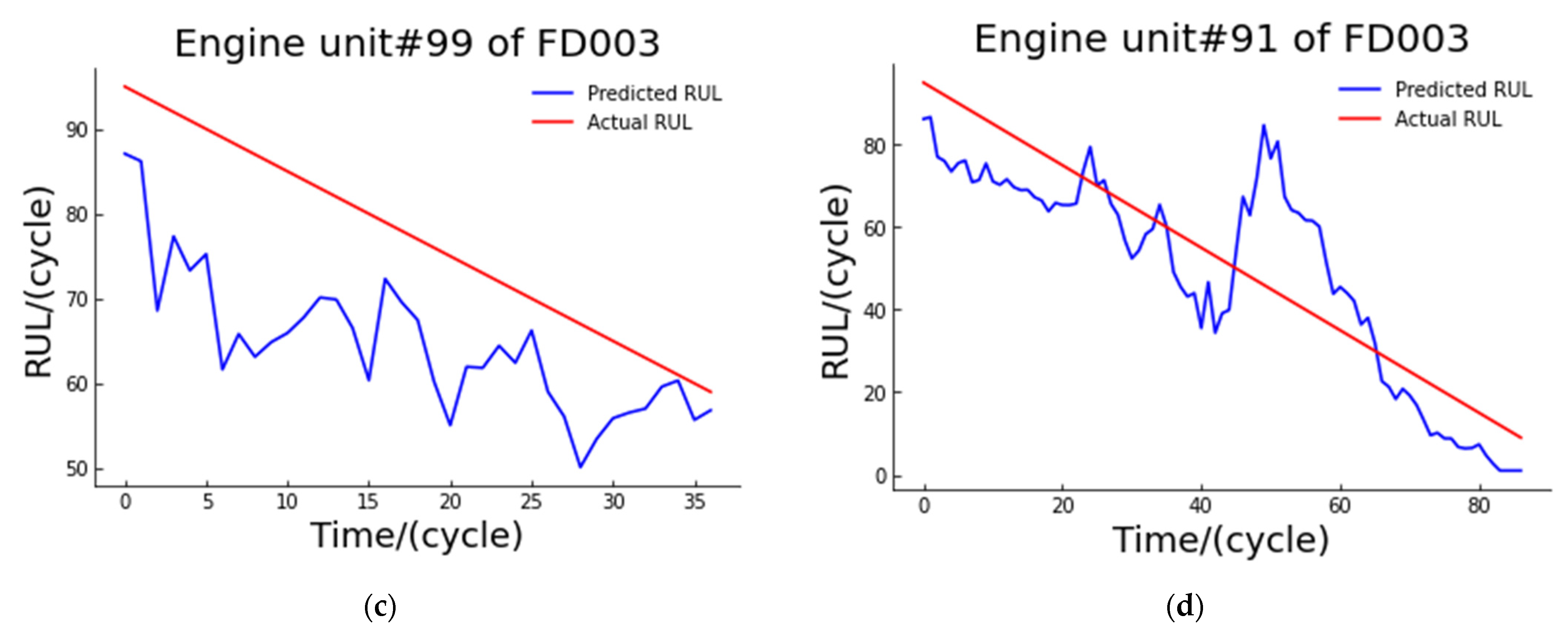

Figure 6 shows the actual degradation curves and the model-predicted degradation curves for the two engines selected from the test sets of FD001 and FD003, respectively.

The overall RMSE of the model on the dataset was 11.046, and the minimum MSE of the model reached 0.911.
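For reference, MSE and RMSE can be computed as in the short sketch below. The RUL values are hypothetical and chosen only so that the arithmetic is easy to follow; they are not taken from the paper's results.

```python
import math


def mse(actual, predicted):
    """Mean squared error between two equally long sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root mean squared error, in the same unit as the RUL (cycles)."""
    return math.sqrt(mse(actual, predicted))


# Hypothetical true and predicted RUL values; errors are 1, -7, 1, 7 cycles.
actual_rul = [112.0, 90.0, 60.0, 30.0]
predicted_rul = [111.0, 97.0, 59.0, 23.0]

print(mse(actual_rul, predicted_rul))   # (1 + 49 + 1 + 49) / 4
print(rmse(actual_rul, predicted_rul))
```

Because the errors are squared before averaging, the two 7-cycle errors dominate the result, which is why RMSE penalises large deviations more than small ones.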

4.4. Comparison Test and Analysis of Influencing Factors of Prediction Model

The parameters of the remaining useful life prediction model are shown in Table 7.

In this section, we investigated the effect of different factors (unidirectional versus bidirectional GRU, attention mechanism, number of hidden units, and fault information) by comparing different existing methods.

When analyzing a given factor, the other factors were set to their default values. See the table for default values. The experiments were run with Python 3.8.8 and TensorFlow 2.3.

4.4.1. Necessity Analysis of Bidirectional Network and Attention Mechanism

To verify the necessity of the bidirectional network and the attention mechanism for improving the accuracy of remaining useful life prediction, we conducted comparative experiments with A-GRU, A-BIGRU, and BIGRU (Table 8).

Comparing the RMSE results of A-GRU and A-BIGRU shows that the bidirectional model has a lower RMSE, meaning that its predictions are closer to the true values. When the network is bidirectional, the prediction model can combine information from both time directions to predict the remaining useful life of the engine more accurately. Comparing the RMSE results of A-BIGRU and BIGRU likewise shows that the prediction accuracy is higher when the attention mechanism is added. The percent improvement in the table is the improvement of the method proposed in the paper relative to the corresponding method. These comparisons indicate that both the bidirectional network and the attention mechanism are necessary to improve the accuracy of the model.
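How a bidirectional network combines both time directions can be sketched with a scalar GRU cell run once forward and once over the reversed sequence. This is a toy illustration under stated assumptions: the weights in `params_fwd` and `params_bwd` are hypothetical fixed values rather than trained ones, bias terms are omitted, and the update rule follows one common GRU convention (some implementations swap the roles of `z` and `1 - z`).

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gru_step(x, h, p):
    """One scalar GRU update with hypothetical fixed weights `p` (no biases)."""
    z = sigmoid(p["wz"] * x + p["uz"] * h)               # update gate
    r = sigmoid(p["wr"] * x + p["ur"] * h)               # reset gate
    h_cand = math.tanh(p["wh"] * x + p["uh"] * (r * h))  # candidate state
    return (1.0 - z) * h + z * h_cand


def run_gru(sequence, p):
    """Return the hidden state after every time step, starting from h = 0."""
    h, states = 0.0, []
    for x in sequence:
        h = gru_step(x, h, p)
        states.append(h)
    return states


def run_bigru(sequence, p_fwd, p_bwd):
    """Pair each step's forward state (past context) with its backward state (future context)."""
    forward = run_gru(sequence, p_fwd)
    backward = run_gru(sequence[::-1], p_bwd)[::-1]
    return list(zip(forward, backward))


# Hypothetical weights and a hypothetical normalised sensor sequence.
params_fwd = {"wz": 0.5, "uz": 0.5, "wr": 0.5, "ur": 0.5, "wh": 0.5, "uh": 0.5}
params_bwd = {"wz": 0.4, "uz": 0.6, "wr": 0.3, "ur": 0.7, "wh": 0.8, "uh": 0.2}
sequence = [0.2, 0.5, 0.9]
states = run_bigru(sequence, params_fwd, params_bwd)
print(states)
```

At the first time step the forward state has seen only the first input, while the backward state already summarises the whole remaining sequence, which is the extra information a unidirectional GRU lacks.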

4.4.2. Comparison of the Number of Hidden Units of A-BIGRU Network

To verify the rationality of the number of hidden units of the A-BIGRU network selected in this paper, we conducted a comparative experiment with four numbers of hidden units: 16, 32, 64, and 128.

Table 9 shows that the accuracy of the model is highest when the number of hidden units is 32. When the number of hidden units is too small, the network cannot provide rich enough information for model analysis. When the number of hidden units is too large, the redundant information is not conducive to network prediction. The experiments therefore support the choice of 32 hidden units.

4.4.3. Comparison Experiment of Fault Information Presence and Absence

To verify the necessity of fault information for improving the accuracy of remaining useful life prediction, this paper conducted a comparison experiment on eight different network structures, with the presence or absence of fault information as the independent variable.

The RMSE results of the remaining useful life prediction model are shown in Table 10. The accuracy of all eight network structures increased after the fault information was added, as shown most directly by the decrease in RMSE. The model used in the paper has the largest reduction in RMSE, 1.254, after combining the fault information, which indicates that the predicted remaining useful life is closer to the actual remaining useful life. The percent improvement in the table is the improvement of the method with fault information relative to the method without fault information. Therefore, it can be concluded that the fault information is important for remaining useful life prediction, which verifies the validity of the method in the paper.
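The percent improvement described above can be computed as the RMSE reduction divided by the baseline RMSE. The exact formula is not stated in this section, so this definition is an assumption, and the RMSE values below are hypothetical placeholders rather than entries from Table 10.

```python
def percent_improvement(baseline_rmse, improved_rmse):
    """Relative RMSE reduction in percent, measured against the baseline."""
    if baseline_rmse <= 0:
        raise ValueError("baseline RMSE must be positive")
    return (baseline_rmse - improved_rmse) / baseline_rmse * 100.0


# Hypothetical RMSE without and with fault information.
rmse_without_fault = 12.0
rmse_with_fault = 10.8

improvement = percent_improvement(rmse_without_fault, rmse_with_fault)
print(f"{improvement:.2f}%")
```

A positive value means the second model has the lower RMSE; a negative value would indicate that adding the information made the prediction worse.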

4.4.4. Comparison of Different Methods

To better show the advantages of the proposed method, a comparison with several current RUL prediction methods was performed. Since the dataset used in the paper was the collection of FD001 and FD003, the results of the comparison methods were taken as the average of their experimental results on FD001 and FD003. The comparison results are shown in Table 11.

The results in Table 11 show that the proposed method has advantages of varying degrees over the existing methods. The maximum and minimum RMSE improvement percentages reached 21.65% and 8.29%, respectively.

5. Conclusions

Predicting the remaining useful life of an aero-engine is particularly important to prevent and mitigate risks and improve the safety of life and property. Maximizing the accuracy of the prediction can also provide more reasonable opinions for engine health management and thus take more reasonable maintenance measures.

Different faults correspond to different degradation patterns. However, previous work on remaining useful life prediction of aero-engines did not sufficiently consider the fault information or involve it as independent information in the prediction. To solve this problem, the paper first classified the engine fault data with a CNN to obtain the fault features. Then, the remaining useful life prediction was performed by combining the fault features with the remaining useful life prediction model based on BIGRU and the attention mechanism. After that, comparative experiments on different network structures, parameters, and methods were carried out. The experimental results show that the accuracy of the model is higher and that the parameters used are reasonable. This demonstrates that fault information is necessary for predicting the remaining useful life of aero-engines.

The data used in the paper were experimental data under the same working conditions. We did not analyze transfer learning across different operating conditions. In the future, we will therefore work on remaining useful life prediction that involves fault information under different operating conditions.