Recognition of Human Face Regions under Adverse Conditions—Face Masks and Glasses—In Thermographic Sanitary Barriers through Learning Transfer from an Object Detector

Abstract

1. Introduction

2. Materials and Methods

2.1. Fever and Human Thermography

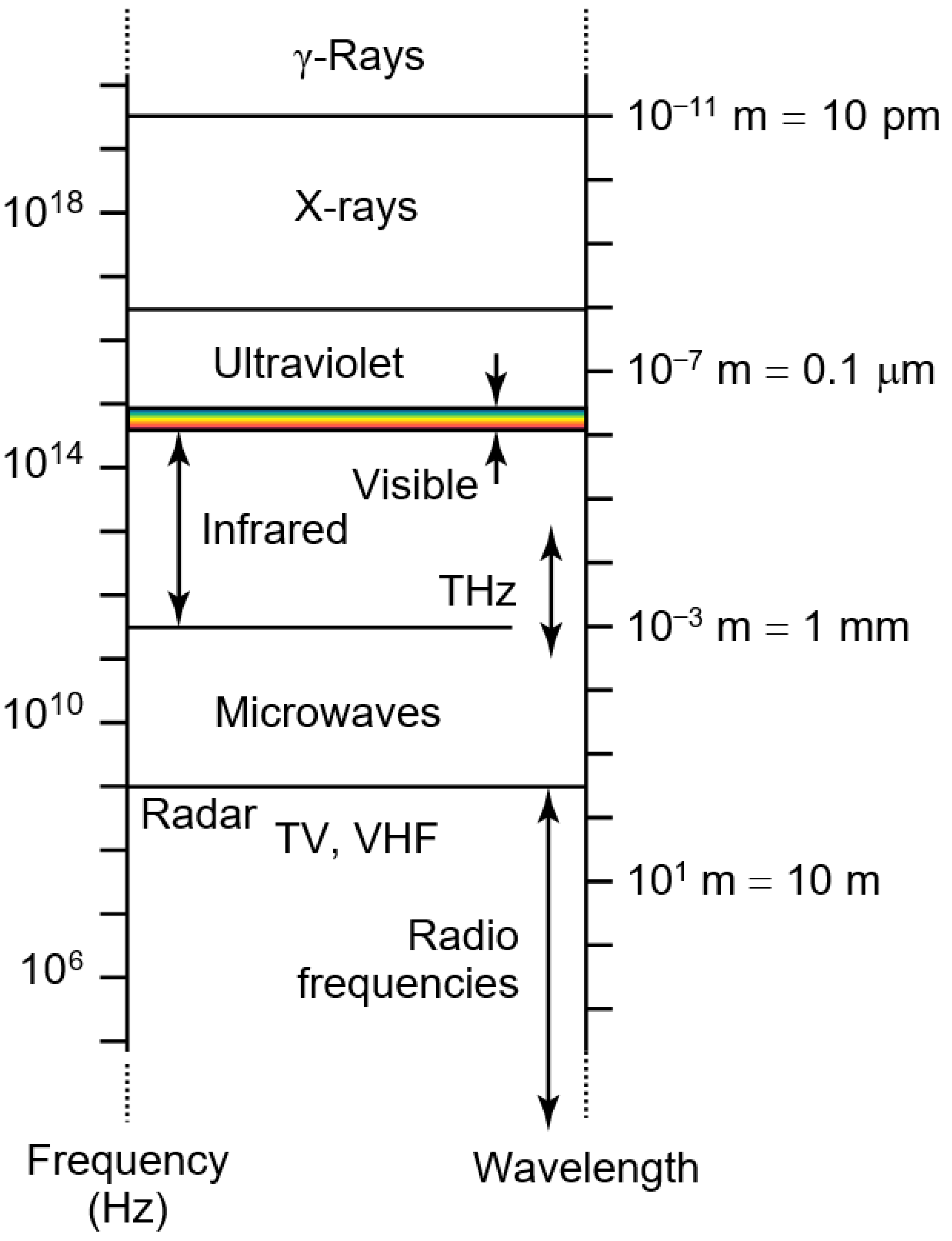

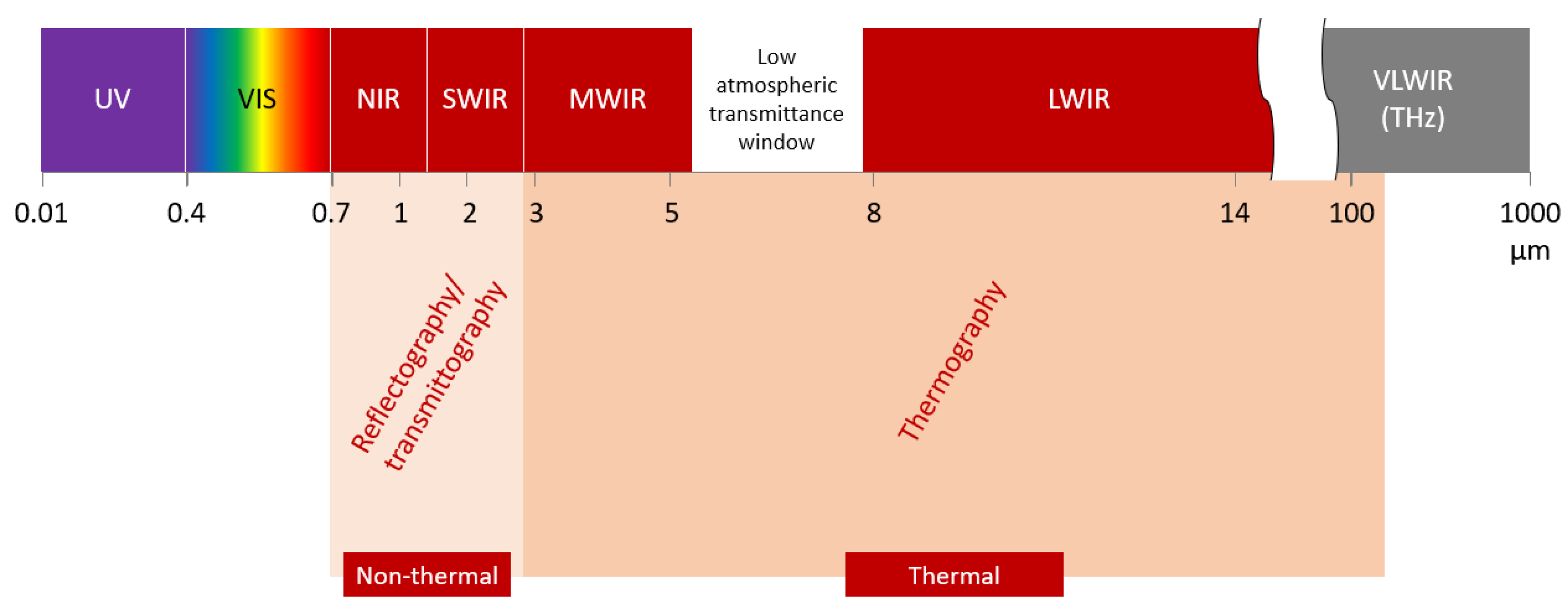

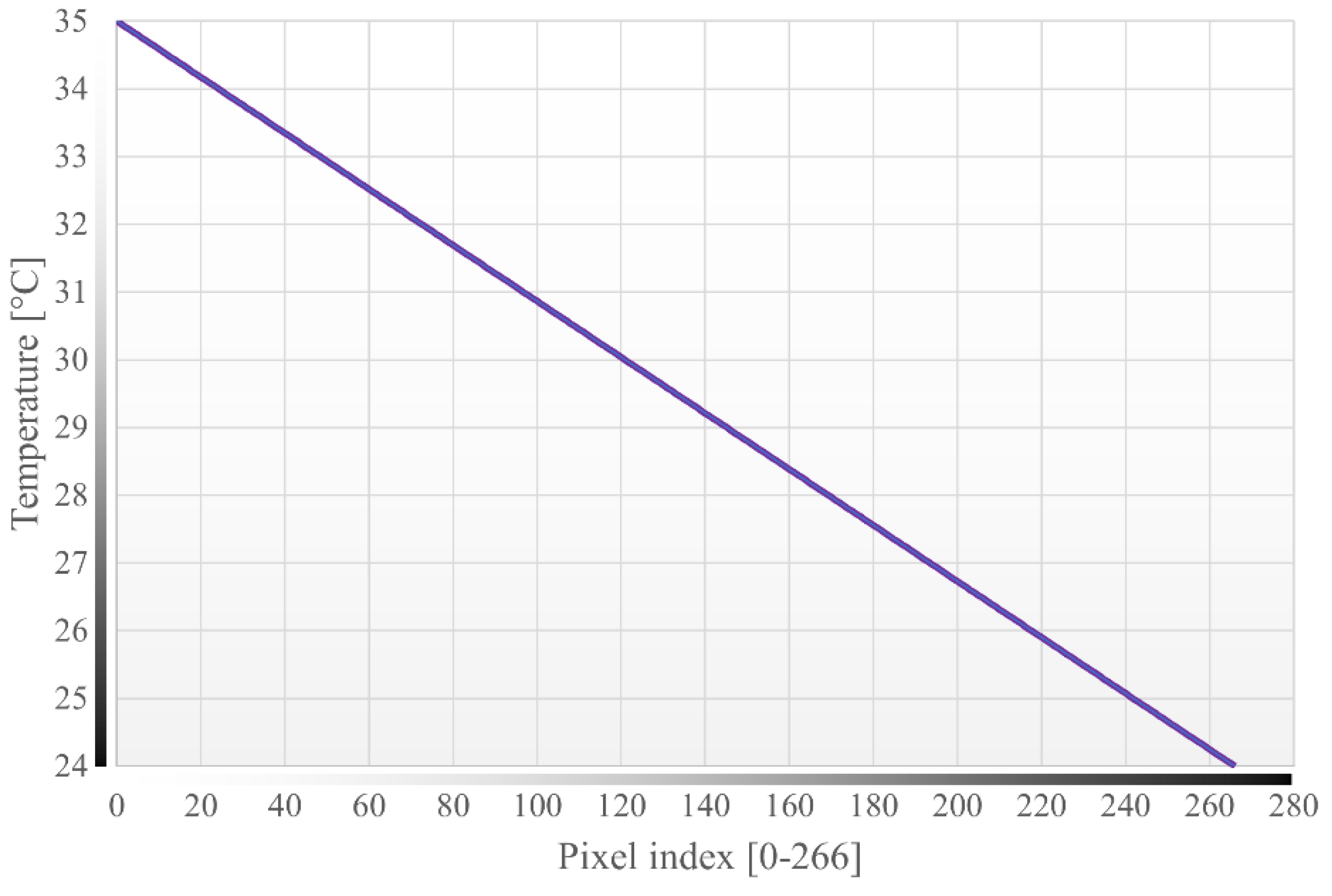

2.2. Infrared Thermography

2.3. Machine Learning

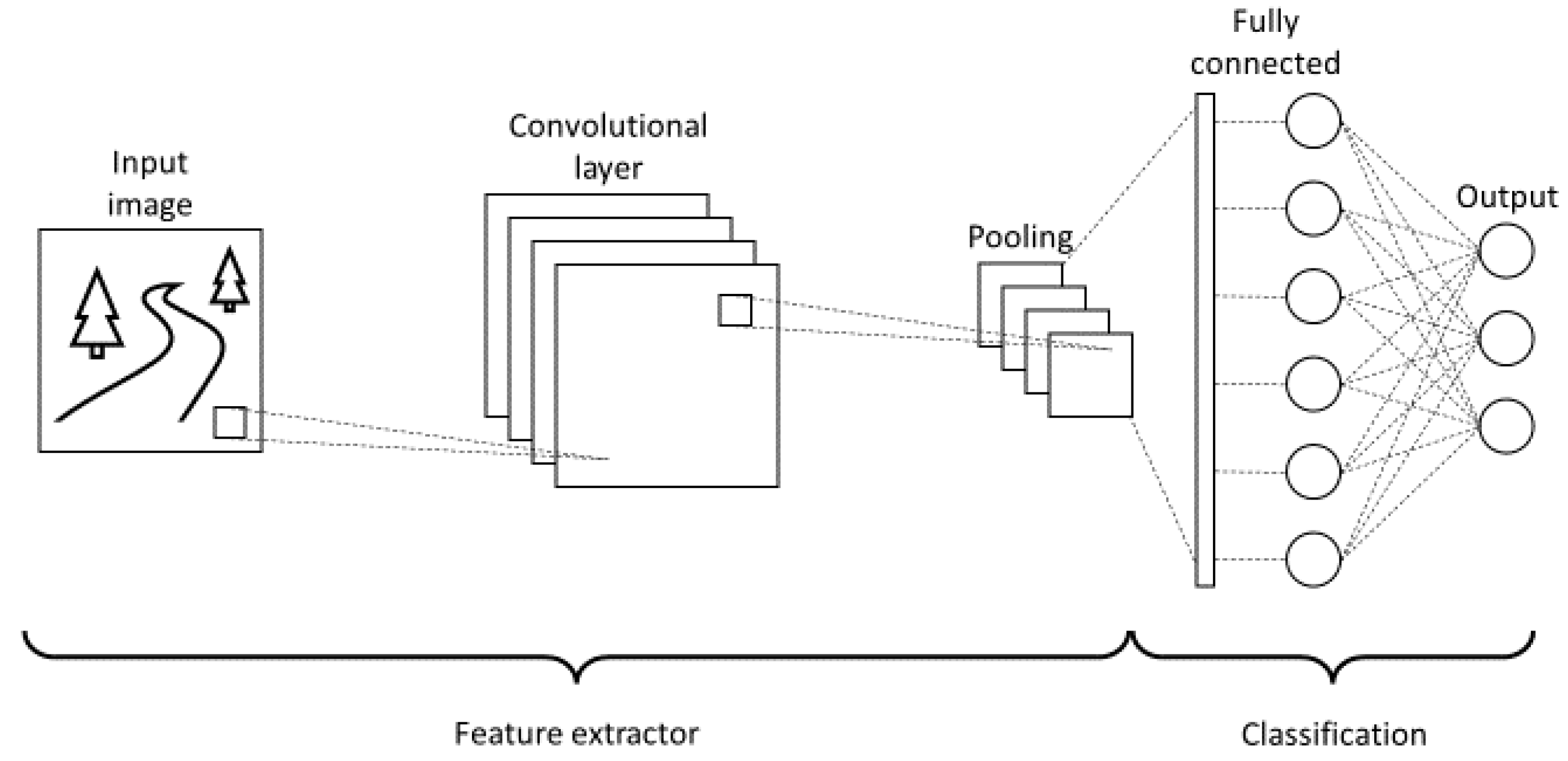

2.3.1. Convolutional Neural Networks

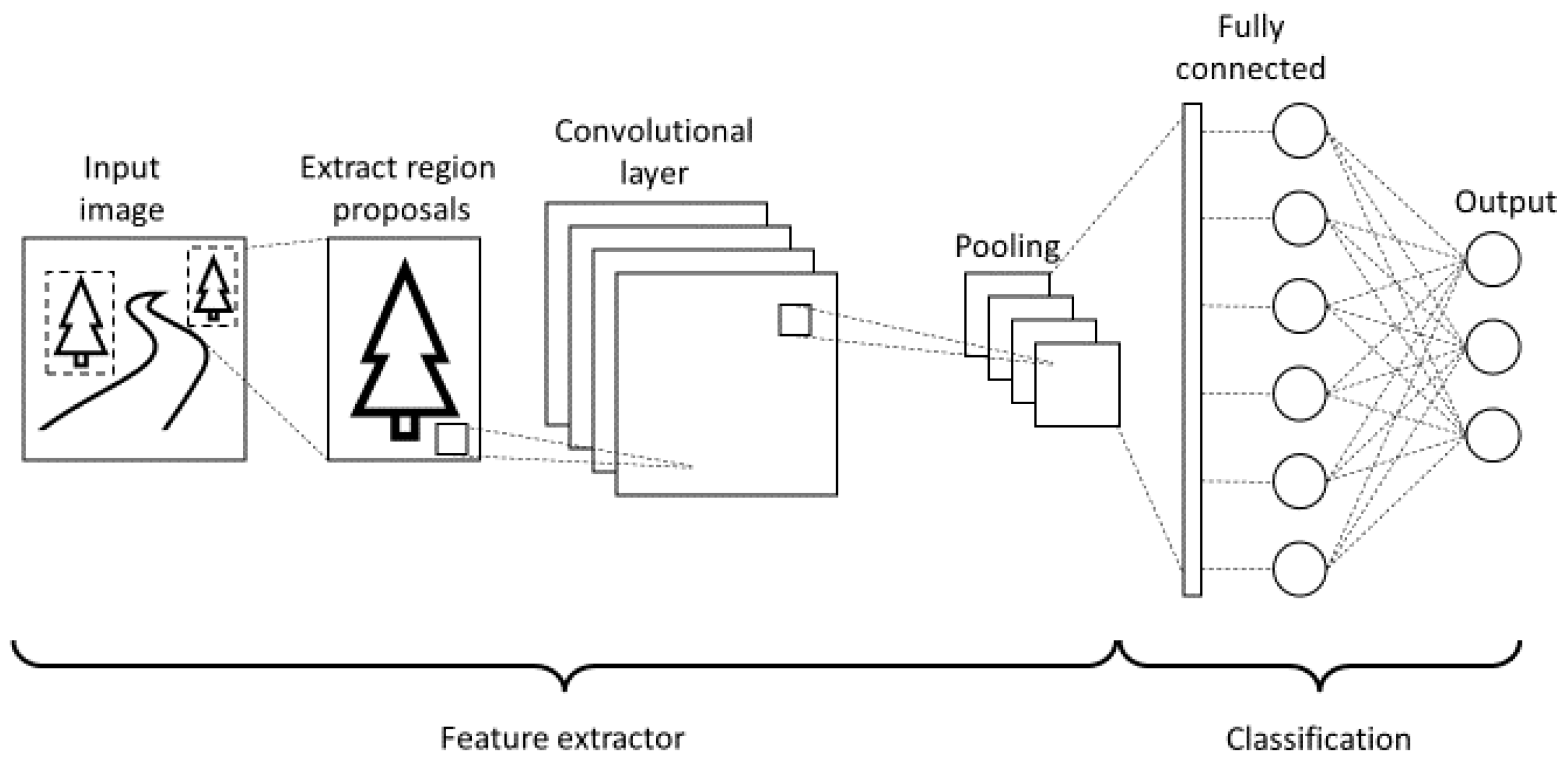

2.3.2. Region Based Convolutional Neural Networks

2.3.3. You Only Look Once Network

2.3.4. Optical Character Recognition

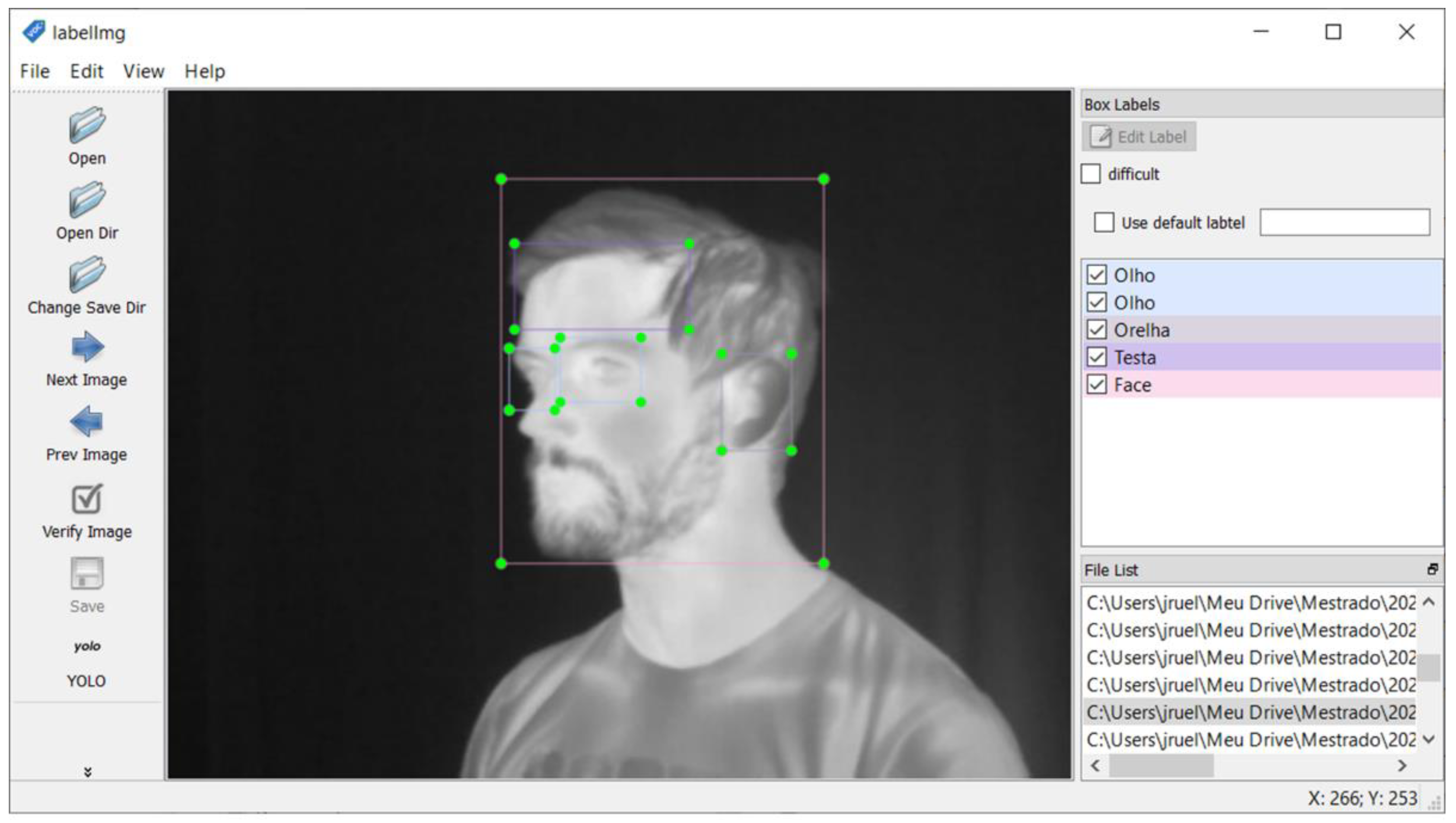

2.4. Dataset

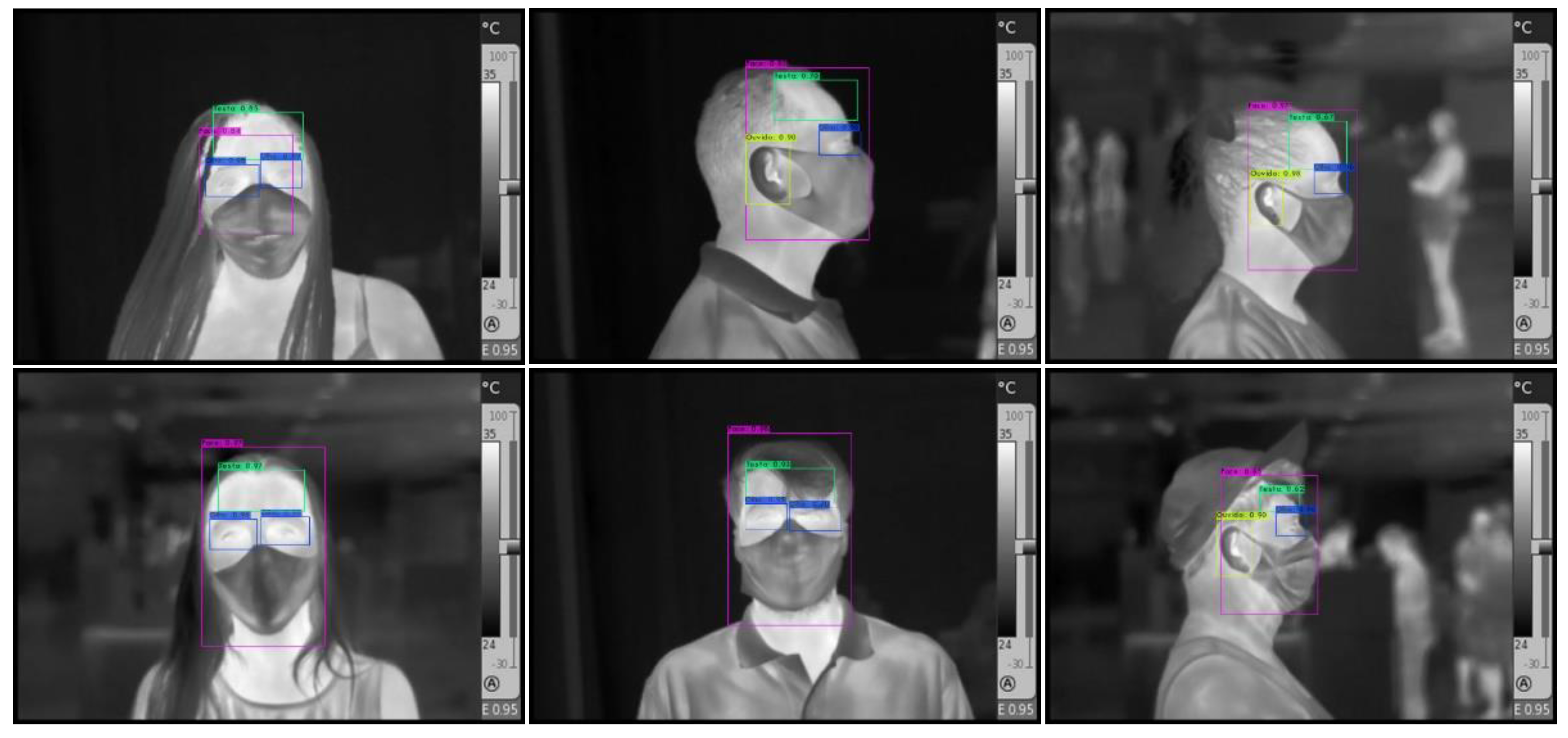

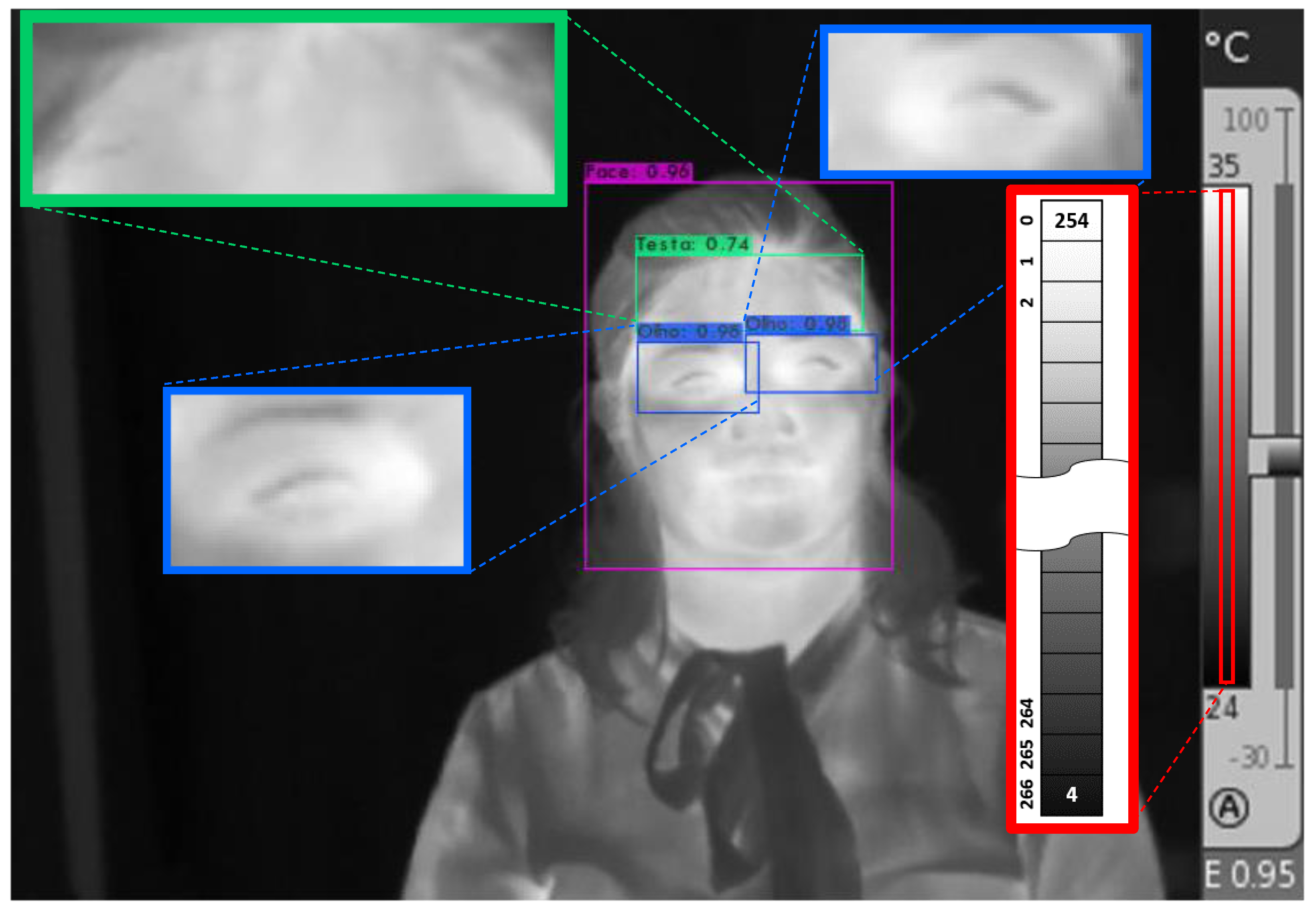

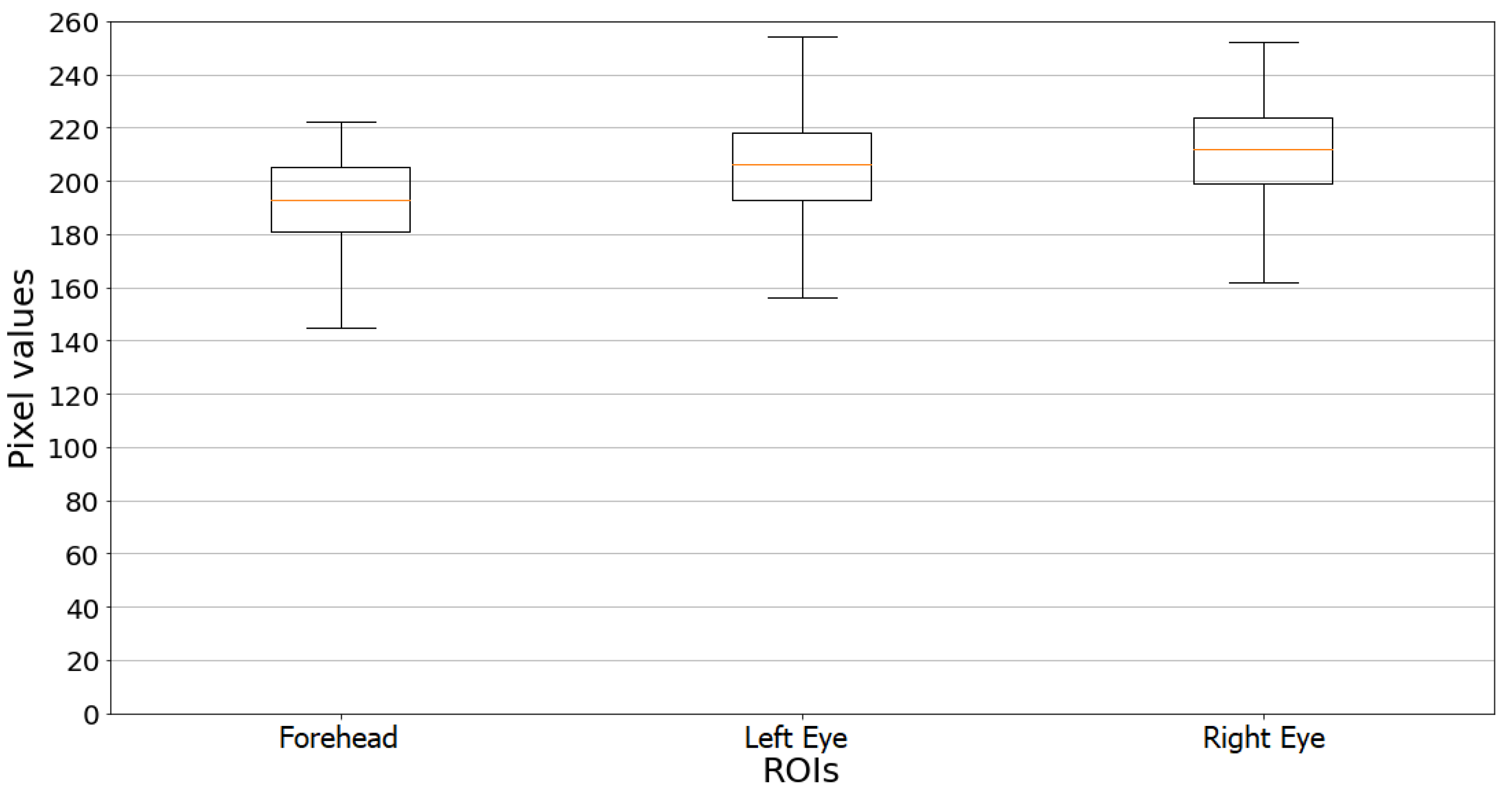

3. Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest


| Class Name | Precision (mAP) | True Positives (TP) | False Positives (FP) |
|---|---|---|---|
| Face | 97.38% | 120 | 15 |
| Eye | 98.61% | 161 | 15 |
| Forehead | 97.25% | 111 | 7 |
| Ear | 94.54% | 60 | 9 |
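As a rough cross-check of the counts in the table above, per-class precision can be computed directly as TP / (TP + FP). This is a minimal sketch, not the authors' evaluation code; the names `detections`, `precision`, and `per_class_precision` are illustrative assumptions. The resulting values are not expected to match the mAP column, because average precision is normally computed over the whole precision-recall curve rather than from a single pair of counts.

```python
# TP and FP counts per class, copied from the results table above.
detections = {
    "Face": (120, 15),
    "Eye": (161, 15),
    "Forehead": (111, 7),
    "Ear": (60, 9),
}


def precision(tp: int, fp: int) -> float:
    """Fraction of detections that were correct: TP / (TP + FP)."""
    return tp / (tp + fp)


# Hypothetical helper structure: class name -> simple precision.
per_class_precision = {
    name: precision(tp, fp) for name, (tp, fp) in detections.items()
}

for name, value in per_class_precision.items():
    print(f"{name}: {value:.2%}")
```

A simple ratio like this only summarises the detections at one confidence threshold, which is why it can sit well below a curve-based metric for the same class.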

| Volunteer | Right Eye [°C] | Left Eye [°C] | Forehead [°C] | Ear [°C] |
|---|---|---|---|---|
| Nonfebrile volunteers | | | | |
| 01 | 34.9 | 34.9 | 34.9 | - |
| 02 | 34.9 | 34.9 | 34.1 | - |
| 03 | - | 34.5 | 34.5 | 35.0 |
| 04 | 35.0 | 34.8 | 33.8 | - |
| 05 | 35.1 | - | 35.6 | 36.9 |
| 06 | - | - | - | 34.0 |
| 07 | 35.0 | 35.1 | 33.4 | - |
| 08 | 34.6 | 34.8 | 34.5 | - |
| 09 | - | 33.3 | 33.8 | 35.0 |
| 10 | - | 33.9 | 34.4 | 34.6 |
| 11 | 34.7 | 34.5 | 35.0 | - |
| 12 | - | - | 34.9 | - |
| 13 | 35.0 | 35.0 | 34.7 | - |
| 14 | 34.3 | - | 34.9 | - |
| 15 | 35.0 | 34.9 | 34.3 | - |
| 16 | 34.9 | 35.0 | 34.4 | - |
| 17 | 33.7 | - | - | 34.9 |
| 18 | - | 33.2 | 34.6 | 35.1 |
| 19 | - | 34.3 | 34.3 | 35.0 |
| 20 | 34.2 | - | 34.6 | - |
| Nonfebrile volunteers (Mean ± Std. Dev.) | 34.7 ± 0.4 | 34.5 ± 0.6 | 34.5 ± 0.5 | 35.1 ± 0.8 |
| Febrile volunteers | | | | |
| 21 | - | 37.2 | 37.1 | - |
| 22 | - | - | - | 37.9 |
| 23 | - | 39.7 | 39.2 | - |
| 24 | 37.2 | 37.3 | | |
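The summary row for the nonfebrile volunteers can be reproduced from the individual readings. The sketch below recomputes the mean and standard deviation for two of the columns using Python's standard `statistics` module. It assumes that missing readings (shown as "-") are simply excluded and that the sample standard deviation is used; the source does not state which estimator the authors applied, and the variable names are illustrative.

```python
from statistics import mean, stdev

# Nonfebrile readings in °C, copied from the table above.
# Entries shown as "-" are omitted (assumption: missing readings are excluded).
right_eye = [34.9, 34.9, 35.0, 35.1, 35.0, 34.6, 34.7,
             35.0, 34.3, 35.0, 34.9, 33.7, 34.2]
ear = [35.0, 36.9, 34.0, 35.0, 34.6, 34.9, 35.1, 35.0]


def summarize(readings: list[float]) -> tuple[float, float]:
    """Return (mean, sample standard deviation), each rounded to one decimal."""
    return round(mean(readings), 1), round(stdev(readings), 1)


right_eye_summary = summarize(right_eye)
ear_summary = summarize(ear)

print(f"Right eye: {right_eye_summary[0]} ± {right_eye_summary[1]} °C")
print(f"Ear: {ear_summary[0]} ± {ear_summary[1]} °C")
```

For these two columns the rounded results agree with the summary row (34.7 ± 0.4 and 35.1 ± 0.8).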

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


da Silva, J.R.; de Almeida, G.M.; Cuadros, M.A.d.S.L.; Campos, H.L.M.; Nunes, R.B.; Simão, J.; Muniz, P.R. Recognition of Human Face Regions under Adverse Conditions—Face Masks and Glasses—In Thermographic Sanitary Barriers through Learning Transfer from an Object Detector. Machines 2022, 10, 43. https://doi.org/10.3390/machines10010043
