Abstract

In some applications, one is interested in reconstructing a function f from its Fourier series coefficients. The problem is that the Fourier series converges slowly if the function is non-periodic or non-smooth. In this paper, we suggest a method for deriving high-order approximations to f using a Padé-like method. Namely, we fit some Fourier coefficients of the approximant to the given Fourier coefficients of f. Given the Fourier series coefficients of a function on a rectangular domain in $\mathbb{R}^d$, and assuming the function is piecewise smooth, we approximate the function by piecewise high-order spline functions. First, the singularity structure of the function is identified. For example, in the 2D case, we find high-accuracy approximations to the curves separating the smooth segments of f. Second, we simultaneously find the approximations of all the different segments of f. We start by developing and demonstrating a high-accuracy algorithm for the 1D case, and we use this algorithm to step up to the multidimensional case.

1. Introduction

Fourier series expansion is a useful tool for representing and approximating functions, with applications in many areas of applied mathematics. The quality of the approximation depends on the smoothness of the approximated function and on whether or not it is periodic. For functions that are not periodic, the convergence rate is slow near the boundaries and the approximation by partial sums exhibits the Gibbs phenomenon. Several approaches have been used to improve the convergence rate, mostly for the one-dimensional case. One approach is to filter out the oscillations, as discussed in several papers [1,2]. Another useful approach is to transform the Fourier series into an expansion in a different basis. For the univariate case, this approach was shown in [1] to be very efficient, using Gegenbauer polynomials with suitably chosen parameters. A further improvement of this approach is presented in [3] using Freud polynomials, achieving very good results for univariate functions with singularities.

An algebraic approach for reconstructing a piecewise smooth univariate function from its first N Fourier coefficients has been realized by Eckhoff in a series of papers [4,5,6]. There, the “jumps” are determined by a corresponding system of linear equations. A full analysis of this approach is presented by Batenkov [7]. Nersessian and Poghosyan [8] have used a rational Padé-type approximation strategy for approximating univariate non-periodic smooth functions. For multiple Fourier series of smooth non-periodic functions, a convergence acceleration approach was suggested by Levin and Sidi [9]. More challenging is the case of multivariate functions with discontinuities, i.e., functions that are piecewise smooth. Here again, the convergence rate is slow, and near the discontinuities, the approximation exhibits the Gibbs phenomenon. In this paper, we present a Padé-like approach consisting of finding a piecewise-defined spline whose Fourier coefficients match the given Fourier coefficients.

The main contribution of this paper is demonstrating that this approach can be successfully applied to the multivariate case. Namely, we present a strategy for approximating both non-periodic and non-smooth multivariate functions. We derive the numerical procedures involved and provide some interesting numerical results. We start by developing and demonstrating a high accuracy algorithm for the 1D case, and use this algorithm to step up to the multidimensional case.

2. The 1D Case

In this section, we present the main tools for function approximation using its Fourier series coefficients. We define the basis functions and describe the fitting strategy and develop the computation algorithm. After dealing with the smooth case we move on to approximate a piecewise smooth function with a jump singularity.

2.1. Reconstructing Smooth Non-Periodic Functions

Let , and assume we know the Fourier series expansion of f

The series converges pointwise for any $x \in (0,1)$; however, if f is not periodic, the convergence may be slow, and if $f(0) \neq f(1)$, the convergence is not uniform and the Gibbs phenomenon occurs near 0 and near 1. As discussed in [9,10], one can apply convergence acceleration techniques to improve the convergence rate of the series. Another convergence acceleration approach was suggested by Gottlieb and Shu [1] using Gegenbauer polynomials. Yet, in both approaches, the convergence rate is not much improved near 0 and near 1. We suggest an approach in the spirit of Padé approximation. A Padé approximant is a rational function whose power series agrees as much as possible with the given power series of f. Here we look for approximations to f whose Fourier coefficients agree with a subset of the given Fourier coefficients of f. The approximation space can be any favorable linear approximation space, such as polynomials or trigonometric functions.
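To make the slow convergence concrete, here is a minimal sketch for the non-periodic function f(x) = x on [0, 1]. It assumes the convention that the n-th coefficient is the integral of f(x)·exp(−2πinx) over [0, 1] (the source does not show its convention here), and the function names are ours. For this f the coefficients are 1/2 for n = 0 and i/(2πn) otherwise.

```python
import cmath
import math

def fourier_coeff_of_x(n):
    """Fourier coefficient of f(x) = x on [0, 1] (assumed convention:
    integral of x * exp(-2*pi*i*n*x) over [0, 1])."""
    return 0.5 + 0j if n == 0 else 1j / (2 * math.pi * n)

def partial_sum(coeff, N, x):
    """Symmetric partial sum S_N(x) of a real function, using only n >= 0.

    For real f the coefficients satisfy c_{-n} = conj(c_n), so the terms
    with indices n and -n combine into 2 * Re(c_n * exp(2*pi*i*n*x)).
    """
    total = coeff(0).real
    for n in range(1, N + 1):
        total += 2.0 * (coeff(n) * cmath.exp(2j * math.pi * n * x)).real
    return total

# Error at an interior point decays only like 1/N ...
interior_error = abs(partial_sum(fourier_coeff_of_x, 200, 0.25) - 0.25)
# ... while at the boundary the sum stays at the midpoint of the jump, 1/2.
boundary_value = partial_sum(fourier_coeff_of_x, 200, 0.0)
```

At x = 0 the partial sums equal 1/2 for every N, the average of f(0) = 0 and f(1) = 1, so no number of terms recovers the boundary value.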

We choose to build the approximation using kth order spline functions, represented in the B-spline basis:

is the B-spline of order k with equidistant knots , and is the number of B-splines whose shifts do not vanish in . The advantage of using spline functions is threefold:

- The locality of the B-spline basis functions.

- A closed form formula for their Fourier coefficients.

- Their approximation power, i.e., if , there exists a spline such that .
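As an illustration of the closed-form property, the sketch below checks a standard identity for a cardinal B-spline of order k with knot spacing d, centered at c, whose support lies entirely inside [0, 1]: its n-th Fourier coefficient is d·exp(−2πinc)·(sin(πnd)/(πnd))^k. The Fourier convention (integral of f(x)·exp(−2πinx) over [0, 1]) and all function names are assumptions of this sketch. B-splines truncated by the interval boundary need a separate treatment that is not shown.

```python
import cmath
import math

def cardinal_bspline(t, k):
    """Centered cardinal B-spline of order k (degree k - 1), unit knot spacing,
    evaluated through the truncated-power formula."""
    total = 0.0
    for j in range(k + 1):
        u = t + k / 2.0 - j
        if u > 0.0:
            total += (-1) ** j * math.comb(k, j) * u ** (k - 1)
    return total / math.factorial(k - 1)

def coeff_closed_form(n, k, d, c):
    """n-th Fourier coefficient of B((x - c) / d), support inside [0, 1]."""
    arg = math.pi * n * d
    sinc = 1.0 if n == 0 else math.sin(arg) / arg
    return d * cmath.exp(-2j * math.pi * n * c) * sinc ** k

def coeff_quadrature(n, k, d, c, cells=4000):
    """Same coefficient by the composite trapezoidal rule over the support."""
    lo, hi = c - k * d / 2.0, c + k * d / 2.0
    h = (hi - lo) / cells
    total = 0j
    for i in range(cells + 1):
        x = lo + i * h
        weight = 0.5 if i in (0, cells) else 1.0
        total += (weight * cardinal_bspline((x - c) / d, k)
                  * cmath.exp(-2j * math.pi * n * x))
    return total * h

# Cubic B-spline (order 4), knot spacing 0.1, centered at 0.5: support [0.3, 0.7].
gap = max(abs(coeff_closed_form(n, 4, 0.1, 0.5) - coeff_quadrature(n, 4, 0.1, 0.5))
          for n in range(6))
```

The closed form costs a handful of operations per coefficient, whereas the quadrature needs thousands of function evaluations for the same value.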

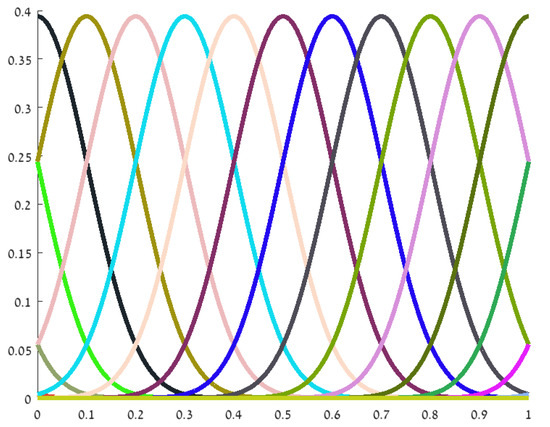

The B-splines basis functions used in the 1D case are shown in Figure 1. We denote by the restriction of to the interval . We find the coefficients by least-squares fitting, matching the first Fourier coefficients of S to the corresponding Fourier coefficients of f. That is,

Figure 1.

The B-splines used in Example 1.

Notice that it is enough to consider the Fourier coefficients with non-negative indices.

We denote by the restriction of to the interval , and by its Fourier coefficients. The normal equations for the least squares problem (3) induce the linear system for , where

and

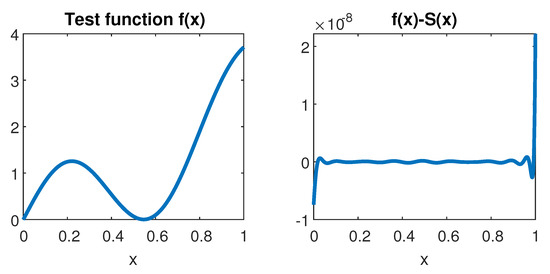
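The least-squares matching of Fourier coefficients can be sketched in a deliberately tiny setting. Instead of B-splines, this example uses the two-dimensional approximation space spanned by 1 and x, and solves the 2×2 real normal equations by Cramer's rule. The Fourier convention (integral of f(x)·exp(−2πinx) over [0, 1]) and the names `basis_coeffs` and `fit_linear` are assumptions of this sketch, not the paper's implementation.

```python
import math

def basis_coeffs(n):
    """Fourier coefficients on [0, 1] of the basis functions 1 and x."""
    one = 1.0 + 0j if n == 0 else 0j
    ex = 0.5 + 0j if n == 0 else 1j / (2 * math.pi * n)
    return one, ex

def fit_linear(f_hat):
    """Least-squares fit of a0 + a1 * x to the coefficients f_hat[0..M-1].

    Minimizes sum_n |a0 * one_n + a1 * ex_n - f_hat[n]|^2 over real a0, a1
    by forming and solving the real normal equations.
    """
    g11 = g12 = g22 = r1 = r2 = 0.0
    for n, fn in enumerate(f_hat):
        u, v = basis_coeffs(n)
        g11 += (u.conjugate() * u).real
        g12 += (u.conjugate() * v).real
        g22 += (v.conjugate() * v).real
        r1 += (u.conjugate() * fn).real
        r2 += (v.conjugate() * fn).real
    det = g11 * g22 - g12 * g12
    return (r1 * g22 - g12 * r2) / det, (g11 * r2 - g12 * r1) / det

# Six coefficients (n = 0..5) of the non-periodic function f(x) = 3 - 2x.
f_hat = [3 * u - 2 * v for u, v in (basis_coeffs(n) for n in range(6))]
a0, a1 = fit_linear(f_hat)   # recovers (3, -2) up to rounding
```

Because f lies in the approximation space, six coefficients determine it exactly, while the partial Fourier sum built from the same six coefficients would still show the Gibbs phenomenon near 0 and 1.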

Numerical Example—The Smooth 1D Case

We consider the test function , assuming only its Fourier coefficients are given. We have used only the 20 Fourier coefficients , and computed an approximation using 12th degree splines with equidistant knots’ distance . For this case, the matrix A is of size , and . We have employed an iterative refinement algorithm described below to obtain a high precision solution. The results are shown in the following two figures. In Figure 2 we see the test function on the left and the approximation error on the right. Figure 3 presents the graph of in blue and the graph of , showing eight orders of magnitude reduction in the Fourier coefficients. Notice the matching in the first Fourier coefficients reflected in the beginning of the red graph.

Remark 1.

The powerful iterative refinement method described in [11,12] is as follows:

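For solving a system $Ax = b$, we use some solver, e.g., the Matlab pinv function. We obtain the solution $x^{(0)}$. Next we compute the residual $r^{(0)} = b - Ax^{(0)}$. In case the condition number of $A$ is very large, the residual will be large. Now we solve the system again with $r^{(0)}$ on the right-hand side, and use the solution to correct $x^{(0)}$, to obtain

$$x^{(1)} = x^{(0)} + \mathrm{pinv}(A)\, r^{(0)}.$$

We repeat these correction steps a few times, i.e., $r^{(k)} = b - Ax^{(k)}$, and

$$x^{(k+1)} = x^{(k)} + \mathrm{pinv}(A)\, r^{(k)},$$

until the resulting residual is small enough.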
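A dependency-free sketch of this refinement loop follows. The paper's solver is Matlab's pinv; here the solver is passed in as a callable, and a deliberately crude diagonal solver stands in for it so that the effect of the correction steps is visible. The function and variable names are ours, and the 2×2 system is an arbitrary illustration.

```python
def iterative_refinement(matvec, approx_solve, b, tol=1e-12, max_iter=100):
    """Refine x so that A x ~= b, given an inexact solver for A.

    matvec(x)        returns A x
    approx_solve(r)  returns an approximate solution d of A d = r
    Returns the refined solution and the number of correction steps used.
    """
    x = approx_solve(b)
    for step in range(max_iter):
        # Residual of the current approximation: r = b - A x.
        r = [bi - yi for bi, yi in zip(b, matvec(x))]
        if max(abs(ri) for ri in r) <= tol:
            return x, step
        # Solve again with the residual on the right-hand side and correct x.
        d = approx_solve(r)
        x = [xi + di for xi, di in zip(x, d)]
    return x, max_iter

A = [[4.0, 1.0], [2.0, 3.0]]

def matvec(x):
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]

def crude_solve(r):
    # Stand-in for an inexact solver: uses only the diagonal of A.
    return [r[i] / A[i][i] for i in range(len(r))]

x, steps = iterative_refinement(matvec, crude_solve, [1.0, 2.0])
```

The loop converges whenever the inexact solver is close enough to a true inverse; with a much better solver such as a pseudo-inverse, a few steps typically suffice.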

Figure 2.

The test function (left) and the spline approximation error (right).

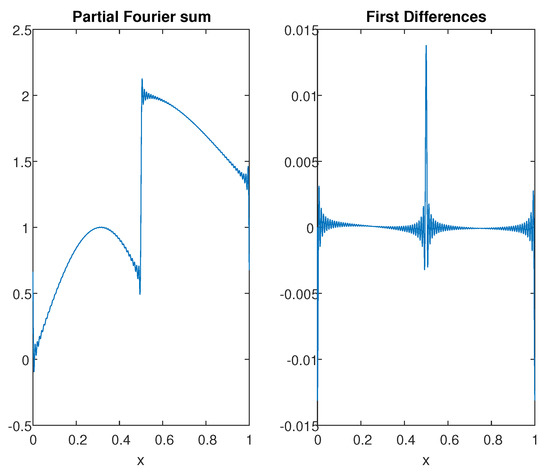

Figure 3.

of the given Fourier coefficients (blue), and of the Fourier coefficients of the approximation error (red).

2.2. Reconstructing Non-Smooth Univariate Functions

Let f be a piecewise smooth function on , defined by combining two pieces and , and assume that can be continuously extended to .

Here again, we assume that all we know about f is its Fourier series expansion. In particular, we do not know the position of the singularity of f. As in the case of a non-periodic function, the existence of a singularity in significantly influences the Fourier series coefficients and implies their slow decay. As we demonstrate below, good matching of the Fourier coefficients requires a good approximation of the singularity location. The approach we suggest here involves finding approximations to and simultaneously with a high precision identification of .

Let s be an approximation of the singularity location , and let us follow the algorithm suggested above for the smooth case. The difference here is that now we look for two separate spline approximations:

and

The combination S of and constitutes the approximation to f. Here again we aim at matching the first Fourier coefficients of f and of S. Here S depends on the coefficients of , the coefficients of and on s. Therefore, the minimization process solves for all these unknowns:

The minimization is non-linear with respect to s, and linear with respect to the other unknowns. Therefore, the minimization problem is actually a one parameter non-linear minimization problem, the parameter s. Using the approximation power of kth order splines (), and considering the value of the objective cost function for , we can deduce that the minimal value of is . We also observe that an deviation from implies a bounded deviation of the minimizing Fourier coefficients

As shown below, these observations can be used for finding a good approximation to .

We denote by the restriction of to the interval , and by the restriction of to the interval . We concatenate these two sequences of basis functions, and into one sequence , and denote their Fourier coefficients by For a given s, the normal equations for the least squares problem (9) induce the linear system for the splines’ coefficients , where:

and

Remark 2.

Due to the locality of the B-splines, some of the basis functions and may be identically zero. It thus seems better to use only the non-zero basis functions. From our experience, since we use the generalized inverse approach for solving the system of equations, using all the basis functions gives the same solution.

The generalized inverse approach computes the least-squares solution to a system of linear equations that lacks a unique solution. The generalized inverse is also called the Moore–Penrose inverse, and is computed by the Matlab pinv function.

The above construction can be carried over to the case of several singular points.

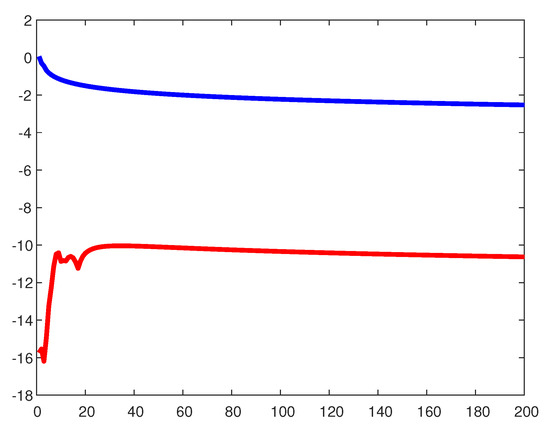

2.2.1. Finding

We present the strategy for finding together with a specific numerical example. We consider a test function on with a jump discontinuity at :

As expected, the Fourier series of f is slowly convergent, and it exhibits the Gibbs phenomenon near the ends of and near . In Figure 4, on the left, we present the sum of the first 200 terms of the Fourier series, computed at 20,000 points in . This sum is not acceptable as an approximation to f, and yet we can use it to obtain a good initial approximation to . On the right graph, we plot the first differences of the values in the left graph. The maximal difference is achieved at a distance of order from .

Figure 4.

A partial Fourier sum (left) and its first differences (right).
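The first-difference idea can be sketched as follows. The test function here is not the paper's: it is a hypothetical sawtooth, f(x) = x on [0, s*) and f(x) = x − 1 on [s*, 1], chosen because its coefficients are known in closed form and because it has no jump at the interval ends. The Fourier convention (integral of f(x)·exp(−2πinx) over [0, 1]), the grid size and the number of terms are likewise assumptions of this sketch.

```python
import cmath
import math

def sawtooth_coeff(n, s_star):
    """Fourier coefficient of f(x) = x on [0, s*), x - 1 on [s*, 1]."""
    if n == 0:
        return complex(s_star - 0.5)
    return 1j * cmath.exp(-2j * math.pi * n * s_star) / (2 * math.pi * n)

def locate_jump(coeffs, grid_size):
    """Midpoint of the grid cell with the largest first difference of the
    partial Fourier sum built from coeffs[0..N]."""
    def partial_sum(x):
        total = coeffs[0].real
        for n in range(1, len(coeffs)):
            total += 2.0 * (coeffs[n] * cmath.exp(2j * math.pi * n * x)).real
        return total

    values = [partial_sum(j / grid_size) for j in range(grid_size + 1)]
    diffs = [abs(values[j + 1] - values[j]) for j in range(grid_size)]
    j_max = max(range(grid_size), key=diffs.__getitem__)
    return (j_max + 0.5) / grid_size

S_STAR = 1.0 / math.pi                      # jump location, unknown to locate_jump
coeffs = [sawtooth_coeff(n, S_STAR) for n in range(101)]
s0 = locate_jump(coeffs, 2000)              # initial approximation to S_STAR
```

For a function that also jumps across the interval ends, the search would have to be restricted to the interior, since the partial sum is equally steep there.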

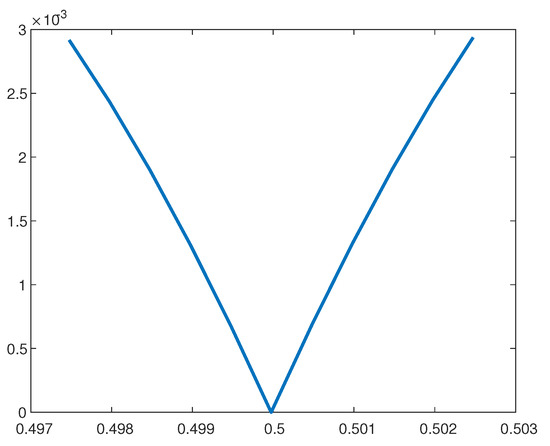

Having a good approximation is not enough for achieving a good approximation to f. However, can be used as a starting point for an iterative method leading to a high precision approximation to . To support this assertion we present the graph in Figure 5, depicting the maximum norm of the difference between 1000 of the given Fourier coefficients and the corresponding Fourier coefficients of the approximation S, as a function of s, near . This function is almost linear on each side of , and simple quasi-Newton iterations converge very fast to . After obtaining a high accuracy approximation to , we use it for deriving the piecewise spline approximation to f.

Figure 5.

The graph of the error as a function of s near .
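The one-parameter minimization over s can be sketched in a small setting. Everything specific below is an assumption of the sketch rather than the paper's setup: each piece is approximated by a linear polynomial instead of a spline, the mismatch is measured in the least-squares norm, the test function is a hypothetical piecewise-linear f with a jump at 0.4, and a golden-section search replaces the quasi-Newton iterations because it needs no derivatives of a function that has a kink at its minimum.

```python
import cmath
import math

def moment(p, n, lo, hi):
    """Integral of x**p * exp(-2*pi*i*n*x) over [lo, hi] (integration by parts)."""
    if n == 0:
        return complex((hi ** (p + 1) - lo ** (p + 1)) / (p + 1))
    a = 2j * math.pi * n
    boundary = (lo ** p * cmath.exp(-a * lo) - hi ** p * cmath.exp(-a * hi)) / a
    return boundary if p == 0 else boundary + (p / a) * moment(p - 1, n, lo, hi)

def solve(G, rhs):
    """Gaussian elimination with partial pivoting for a small real system."""
    n = len(rhs)
    M = [row[:] + [r] for row, r in zip(G, rhs)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def mismatch(s, f_hat, degree=1):
    """Least-squares mismatch of the Fourier coefficients for a break at s."""
    count = len(f_hat)
    # Basis: monomials restricted to [0, s], then monomials restricted to [s, 1].
    cols = [[moment(p, n, lo, hi) for n in range(count)]
            for lo, hi in ((0.0, s), (s, 1.0)) for p in range(degree + 1)]
    # Real normal equations for the real coefficient vector a.
    G = [[sum((u.conjugate() * v).real for u, v in zip(ci, cj)) for cj in cols]
         for ci in cols]
    rhs = [sum((u.conjugate() * f).real for u, f in zip(ci, f_hat)) for ci in cols]
    a = solve(G, rhs)
    residual = [sum(aj * cj[n] for aj, cj in zip(a, cols)) - f_hat[n]
                for n in range(count)]
    return math.sqrt(sum(abs(r) ** 2 for r in residual))

def golden_section(fun, lo, hi, tol=1e-10):
    """Derivative-free minimization of a unimodal function on [lo, hi]."""
    g = (math.sqrt(5.0) - 1.0) / 2.0
    x1, x2 = hi - g * (hi - lo), lo + g * (hi - lo)
    f1, f2 = fun(x1), fun(x2)
    while hi - lo > tol:
        if f1 < f2:
            hi, x2, f2 = x2, x1, f1
            x1 = hi - g * (hi - lo)
            f1 = fun(x1)
        else:
            lo, x1, f1 = x1, x2, f2
            x2 = lo + g * (hi - lo)
            f2 = fun(x2)
    return 0.5 * (lo + hi)

# Hypothetical test function: f = x on [0, 0.4), f = 2 - x on [0.4, 1].
S_STAR = 0.4
f_hat = [moment(1, n, 0.0, S_STAR) + 2 * moment(0, n, S_STAR, 1.0)
         - moment(1, n, S_STAR, 1.0) for n in range(6)]
# Bracket around an initial guess, e.g. one obtained from first differences.
s_hat = golden_section(lambda s: mismatch(s, f_hat), 0.395, 0.403)
```

The bracket is assumed small enough for the mismatch to be unimodal on it, which is what a good initial approximation is meant to provide.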

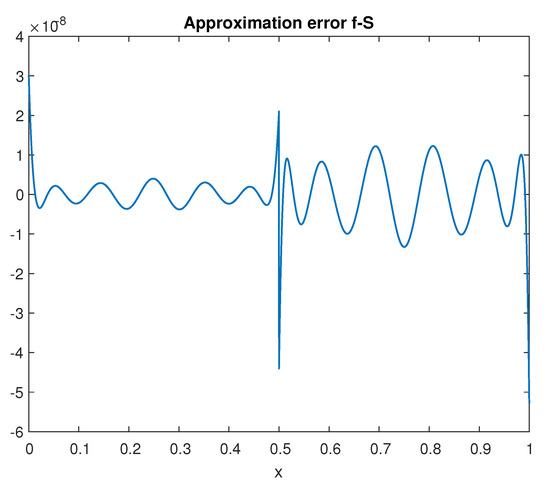

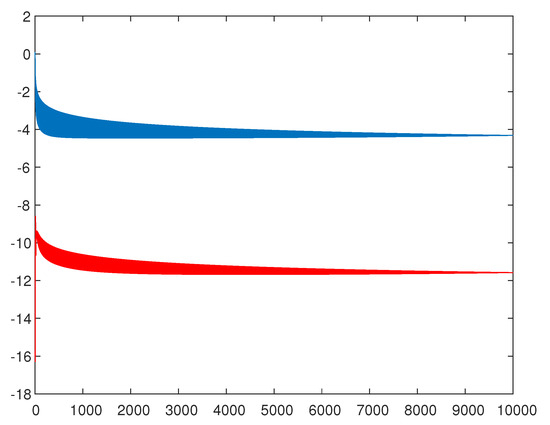

In the following, we present the numerical results obtained for the test function defined in (13). We have used only 20 Fourier coefficients of f, and the two approximating functions and are splines of order eight, with knots’ distance . Figure 6 depicts the approximation error, showing that , and that the Gibbs phenomenon is completely removed. Figure 7 shows of the absolute values of the given Fourier coefficients of f (in blue), and the corresponding values for the Fourier coefficients of (in red). The graph shows a reduction of ∼7 orders of magnitude. These results clearly demonstrate the high effectiveness of the proposed approach.

Figure 6.

The approximation error for the 1D non-smooth case.

Figure 7.

of the given Fourier coefficients (blue), and of the Fourier coefficients of the approximation error (red).

2.2.2. The 1D Approximation Procedure

Let us sum up the suggested approximation procedure:

- (1) Choose the approximation space for approximating and .

- (2) Define the number of Fourier coefficients to be used for building the approximation such that

- (3) Find a first approximation to : Compute a partial Fourier sum and locate the maximal first order difference.

- (4) Calculate the first Fourier coefficients of the basis functions of , truncated at .

- (5) Solve the linear system for the spline coefficients.

- (6) Update the approximation to , by performing quasi-Newton iterations to reduce the objective function in (9).

- (7) Go back to (4) to update the approximation.

3. The 2D Case—Non-Periodic and Non-Smooth

3.1. The Smooth 2D Case

Let , and assume we know its Fourier series expansion

Such series are obtained when solving PDEs using spectral methods. However, if the function is not periodic, or, as in the case of hyperbolic equations, the function has a jump discontinuity along some curve in , the convergence of the Fourier series is slow. Furthermore, the approximation of f by its partial sums suffers from the Gibbs phenomenon near the boundaries and near the singularity curve.

We deal with the case of smooth non-periodic 2D functions in the same manner as we did for the univariate case. We look for a bivariate spline function S whose Fourier coefficients match the Fourier coefficients of f. As in the univariate case, it is enough to match the coefficients of low frequency terms in the Fourier series. The technical difference in the 2D case is that we look for a tensor product spline approximation, using tensor product kth order B-spline basis functions.

The system of equations for the B-spline coefficients is the same as the system defined by (4) and (5) in the univariate case, only here we reshape the unknowns as a vector of unknowns.
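One practical consequence of the tensor product structure is worth writing out. The notation below is ours and assumes the convention that Fourier coefficients on the unit square are integrals against $e^{-2\pi i(mx+ny)}$: for a tensor product basis function that is not cut by any singularity curve, the bivariate Fourier coefficients factor into univariate ones, so the closed-form univariate coefficients can be reused.

```latex
\widehat{(B_i \otimes B_j)}(m,n)
  = \int_0^1\!\!\int_0^1 B_i(x)\,B_j(y)\,e^{-2\pi i (m x + n y)}\,dx\,dy
  = \left(\int_0^1 B_i(x)\,e^{-2\pi i m x}\,dx\right)
    \left(\int_0^1 B_j(y)\,e^{-2\pi i n y}\,dy\right)
  = \hat{B}_i(m)\,\hat{B}_j(n).
```

This factorization no longer holds once a basis function is restricted to one side of a curve, which is why the non-smooth 2D case is considerably more expensive.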

Numerical Example—The Smooth 2D Case

We consider the test function

assuming only its Fourier coefficients are given. We have used only 160 Fourier coefficients, and constructed an approximation using 10th degree tensor product splines with equidistant knots’ distance in each direction. For this case, the matrix A is of size , and . Again, we have employed the iterative refinement algorithm to obtain a high precision solution (relative error ). The computation time was ∼18 s.

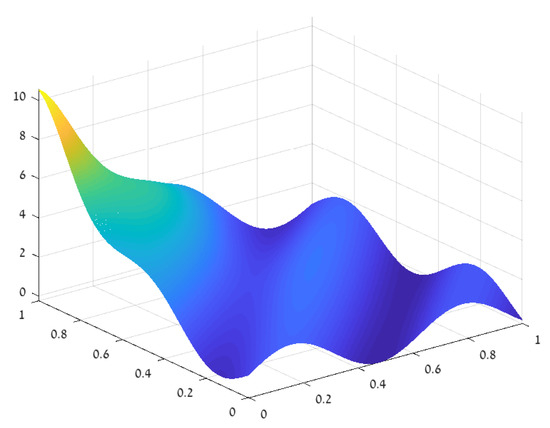

In Figure 8 we plot the test function on . Note that it has high derivatives near .

Figure 8.

The test function for the smooth 2D case.

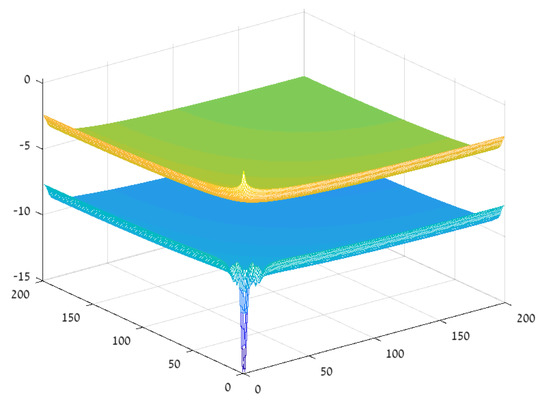

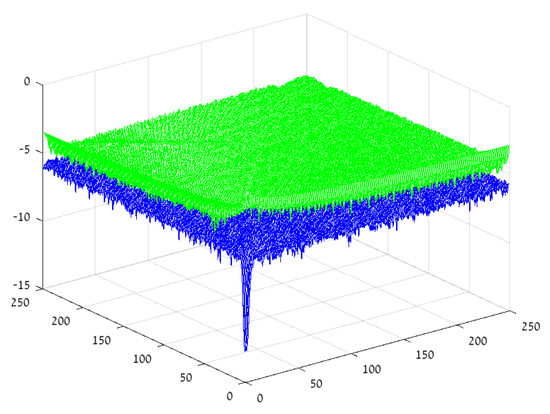

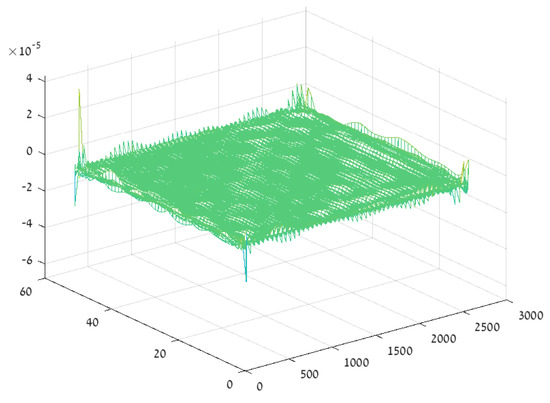

The approximation error is shown in Figure 9. To demonstrate the convergence acceleration of the Fourier series achieved by subtracting the approximation from f, we present in Figure 10 of the absolute values of the Fourier coefficients of f (in green) and of the Fourier coefficients of (in blue), for frequencies . The magnitude of the Fourier coefficients is reduced by a factor of , and even more so for the low frequencies due to the matching strategy used to derive the spline approximation.

Figure 9.

The approximation error .

Figure 10.

of the Fourier coefficients before (green), and after (blue).

3.2. The Non-Smooth 2D Case

Let be open, simply connected domains with the properties

Let be the curve separating the two domains,

and assume is a -smooth curve.

Let f be a piecewise smooth function on , defined by combining two pieces and , and assume that each can be continuously extended to a function in , . Here again, we assume that all we know about f is its Fourier expansion. In particular, we do not know the position of the dividing curve separating and . We denote this curve by , and we assume that it is a -smooth curve. As in the case of a non-periodic function, the existence of a singularity curve in significantly influences the Fourier series coefficients and implies their slow decay. In the case of a discontinuity of f across , partial sums of the Fourier series exhibit the Gibbs phenomenon near . As demonstrated below, a good matching of the Fourier coefficients requires a good approximation of the singularity location. As in the univariate non-smooth case, the computation algorithm involves finding approximations to and simultaneously with a high precision identification of .

Evidently, finding a high precision approximation of the singularity curve is more involved than finding a high precision approximation to the singularity point in the univariate case. Let be the signed-distance function corresponding to the curve :

In looking for an approximation to , we look for an approximation to . Here again we use tensor product spline approximants, from the same set of spline functions described in the previous section. Since the curve is , it can be shown that one can construct a spline function of order , with knots’ distance h, which approximates near so that the Hausdorff distance between the zero level set of and is .

Let be a spline approximation to , with spline coefficients :

For a given we define the approximation to f similarly to the construction in the univariate case by Equations (7)–(9). We look here for an approximation S to f which is a combination of two bivariate spline components:

such that Fourier coefficients of f and S are matched in the least-squares sense:

We denote by the restriction of to the domain defined by , and by the restriction of to the domain defined by . We concatenate these two sequences of basis functions, and into one sequence , denoting their Fourier coefficients by , and rearranging them (for each n) in vectors of length , . For a given , the normal equations for the least squares problem (21) induce the linear system for the splines’ coefficients , where:

and

For a given choice of , the coefficients are obtained by solving a linear system of equations, and properly rearranging the solution. However, finding the optimal is a non-linear problem that requires an iterative process and is much more expensive.

Remark 3.

Representing the singularity curve of the approximation S as the zero level set of the bivariate spline function is the way to achieve a smooth control over the approximation. As a result, the objective function in (21) varies smoothly with respect to the spline coefficients .

Remark 4.

In principle, the above framework is applicable to cases where f is composed of k functions defined on k disjoint subdomains of . The implementation, however, is more involved. The main challenge is to find a good first approximation to the curves separating the subdomains. In this context, for our case of two subdomains, we further assume for simplicity that the separating curve is bijective.

Here again we choose to demonstrate the whole approximation procedure alongside a specific numerical example.

3.2.1. The Approximation Procedure—A Numerical Example

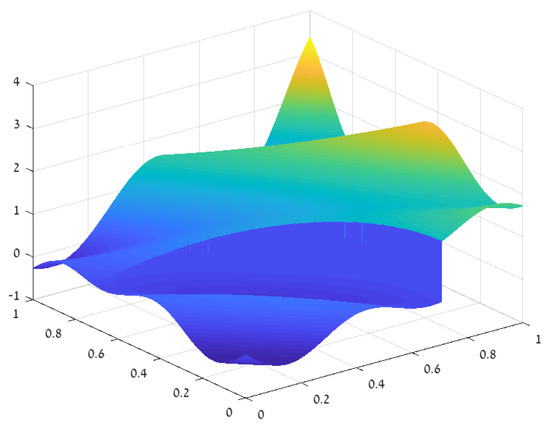

Consider a piecewise smooth function on with a jump singularity across the curve which is the quarter circle defined by . The test function is shown in Figure 11 and is defined as

Figure 11.

The test function for the 2D non-smooth case.

In the univariate case, in Section 2.2.1, we use the Gibbs phenomenon in order to find an initial approximation to the singularity location . The same idea, with some modifications to the 2D case, is applied here. The truncated Fourier sum

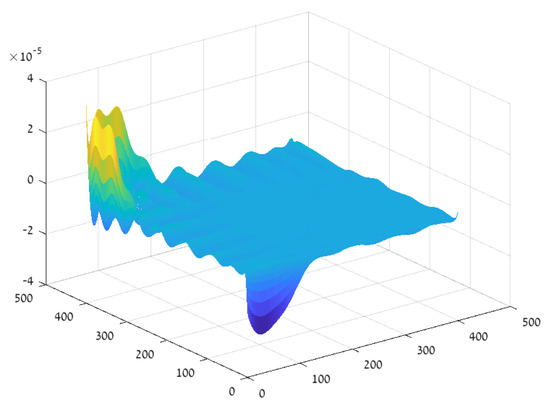

gives an approximation to f, but the approximation suffers from a Gibbs phenomenon near the boundaries of the domain and near the singularity curve . We evaluated on a mesh on , and enhanced the Gibbs effect by applying first order differences along the x-direction. The results are depicted in Figure 12. The locations of large x-direction differences and of large y-direction differences within indicate the location of .

Figure 12.

First order x-direction differences of a truncated Fourier sum—notice the relatively high values at the boundary and near the singularity curve.

Building the initial approximation

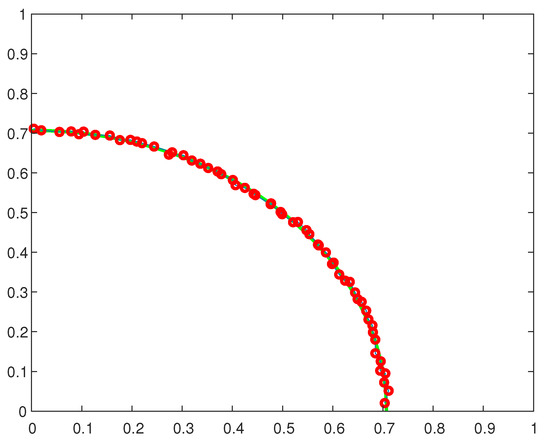

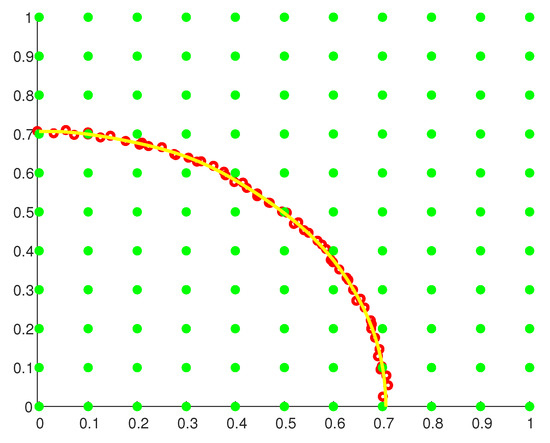

Searching along 50 horizontal lines (x-direction) for maximal x-direction differences, and along 50 vertical lines (y-direction) for maximal y direction differences, we have found 72 such maximum points, which we denote by . We display these points (in red) in Figure 13, on top of the curve (in blue). Now we use these points to construct the spline , whose zero level curve is taken as the initial approximation to . To construct we first overlay on a net of points, . These are the green points displayed in Figure 14.

Figure 13.

The singularity curve (blue) and points of maximal first differences of .

Figure 14.

The singularity curve (blue) and points of maximal first differences of .

To each point in we assign the value of its distance from the set , with a plus sign for points which are on the right or above , and a minus sign for the other points. To each point in we assign the value zero. The spline function is now defined by the least-squares approximation to the values at all the points . We have used here tensor product splines of order 10, on a uniform mesh with knots’ distance . We denote the level curve zero of the resulting as , and this curve is depicted in yellow in Figure 14. It seems that is already a good approximation to (in blue), and thus it is a good starting point for achieving the minimization target (21).
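The assignment of signed-distance values to the net points can be sketched as below. The detected points are replaced by a hypothetical stand-in (72 samples of a quarter circle of radius 0.5 centered at the origin; the radius is chosen only for illustration), the net is a plain 11×11 grid, and the sign rule is a simplification that is valid only for a curve whose normal points into the positive quadrant. The subsequent least-squares spline fit is not shown.

```python
import math

def signed_distance_values(net_points, curve_points):
    """For each net point, the distance to the nearest detected point, with a
    plus sign if the net point lies to the right of or above that point and a
    minus sign otherwise (simplified rule, see the assumptions above)."""
    values = []
    for qx, qy in net_points:
        px, py = min(curve_points,
                     key=lambda p: math.hypot(qx - p[0], qy - p[1]))
        dist = math.hypot(qx - px, qy - py)
        sign = 1.0 if (qx - px) + (qy - py) > 0.0 else -1.0
        values.append(sign * dist)
    return values

# Hypothetical stand-in for the detected maximum points.
RADIUS = 0.5
detected = [(RADIUS * math.cos(t), RADIUS * math.sin(t))
            for t in ((i + 0.5) * math.pi / 144 for i in range(72))]

# Net of points overlaid on the unit square; index of (i/10, j/10) is 11*i + j.
net = [(i / 10, j / 10) for i in range(11) for j in range(11)]
values = signed_distance_values(net, detected)

outside = values[11 * 9 + 9]   # net point (0.9, 0.9): positive value
inside = values[11 * 1 + 1]    # net point (0.1, 0.1): negative value
```

Together with zero values at the detected points themselves, these data would then be fitted by a tensor product spline whose zero level curve serves as the initial approximation to the singularity curve.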

Improving the approximation to , and building the two approximants

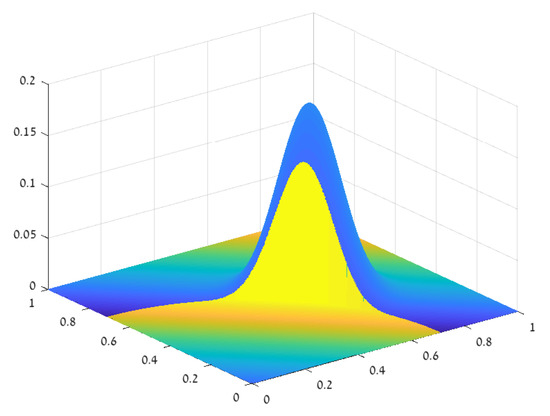

Starting from we use a quasi-Newton method for iterative improvement of the approximation to . The expensive ingredient in the computation procedure is the need to recompute the Fourier coefficients of the B-splines for any new set of coefficients of . We recall that we need of these coefficients for each B-spline, and we have B-splines. In the numerical example we have used and . To illustrate the issue we present in Figure 15 one of those B-splines whose support intersects the singularity curve. When the singularity curve is updated, the Fourier coefficients of this B-spline are recalculated.

Remark 5.

Calculating Fourier coefficients of the B-splines. Calculating the Fourier coefficients of the B-splines is the most costly step in the approximation procedure. For the univariate case the Fourier coefficients of the B-splines can be computed analytically. For a smooth d-variate function , with no singularity within the unit cube , piecewise Gauss quadrature may be used to compute the Fourier coefficients with high precision. The non-smooth multivariate case is more difficult, and more expensive. However, we noticed that low precision approximations of the Fourier coefficients of the B-splines are sufficient. For instance, in the above example, we have employed a simple numerical quadrature combined with the fast Fourier transform, and we obtained the Fourier coefficients with a relative error ∼. Yet the resulting approximation error is small , as seen in Figure 18.

Figure 15.

One of the tensor product B-splines used for the approximation of f, chopped off by the singularity curve.

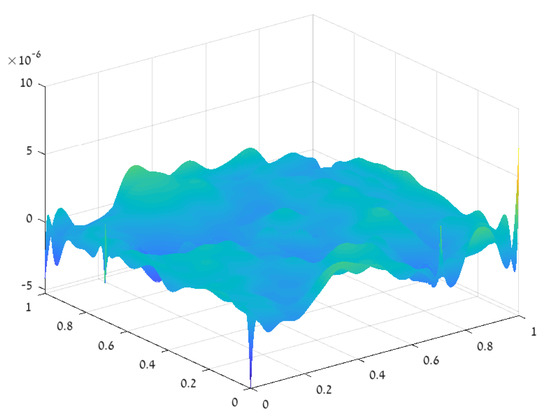

Using one quasi-Newton step we obtained new spline coefficients and an improved approximation to as the zero level set of . Stopping the procedure at this point yields approximation results as shown in the figures below. Figure 16 shows the approximation error on , where U is a small neighborhood of . Figure 17 shows, in green, of the magnitude of the given Fourier coefficients and, in blue, of the Fourier coefficients of the difference . We observe a reduction of three orders of magnitude between the two.

Figure 16.

The approximation error with .

Figure 17.

The magnitude reduction of the Fourier coefficients with .

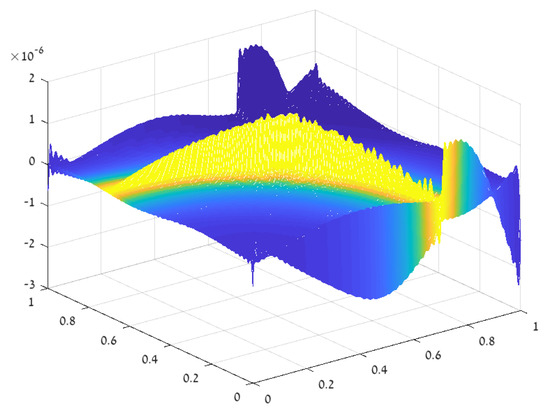

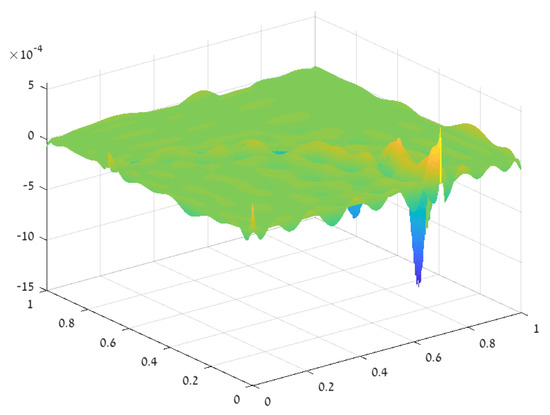

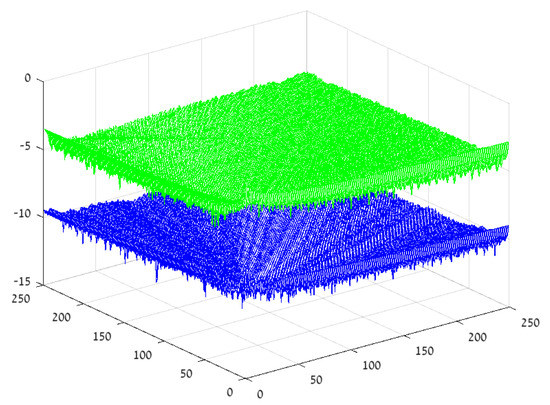

Applying four quasi-Newton iterations took ∼24 min execution time. The approximation of by the zero level set of is now with an error of . The consequent approximation error to f is reduced as shown in Figure 18, and the Fourier coefficients of the error are reduced by 5 orders of magnitude, as shown in Figure 19.

Figure 18.

The approximation error with .

Figure 19.

The magnitude reduction of the Fourier coefficients with .

3.2.2. The 2D Approximation Procedure

Let us sum up the suggested approximation procedure:

- (1) Choose the approximation space for approximating and and the approximation space for approximating .

- (2) Define the number of Fourier coefficients to be used for building the approximation such that

- (3) Find a first approximation to :

  - (a) Compute a partial Fourier sum and locate maximal first order differences along horizontal and vertical lines to find points near , with assigned values 0.

  - (b) Overlay a net of points as in Figure 14, with assigned signed-distance values.

  - (c) Compute the least-squares approximation from to the values at , denote it .

- (4) Calculate the first Fourier coefficients of the basis functions of , truncated with respect to the zero level curve of .

- (5) Solve the linear system for the spline coefficients, using the generalized inverse.

- (6) Update to improve the approximation to , by performing quasi-Newton iterations to reduce the objective function in (21).

- (7) Go back to (4) to update the approximation.

3.2.3. Lower Order Singularities

Let us assume that is a continuous function, and that is discontinuous across the singularity curve . In this case we cannot use the Gibbs phenomenon effect to approximate the singularity curve. However, the Fourier coefficients

represent a function g that has discontinuity across , and the above procedure for approximating can be applied.

3.3. Error Analysis

We consider the non-smooth bivariate case, where f is a combination of two smooth parts, on and on , separated by a smooth curve . Throughout the paper we approximate f using spline functions. In this section we consider approximations by general approximation spaces. Let be the approximation space for approximating the smooth pieces constituting f, and let be the approximation space used for approximating the singularity curve. The following assumptions characterize and quantify the properties of the function f and its singularity curve in terms of the ability to approximate them using the approximation spaces .

Assumption 1.

We assume that and are finite dimensional spaces of dimensions and respectively.

Assumption 2.

We assume that and are smoothly extendable to and

Assumption 3.

For , let us denote by the zero level curve of p within . We assume there exists such that

where denotes the Hausdorff distance.

We look for an approximation S to f which is a combination of two components, in and in , separated by , , such that Fourier coefficients of f and S are matched in the least-squares sense:

Assumption 4.

Consider the above function S constructed by a triple , , . We assume that there is a Lipschitz continuous inverse mapping from the Fourier coefficients of S to the triple :

Remark 6.

To enable the above property we choose M so that

The topology in the space of triples is in terms of the maximum norm for the first two components and the Hausdorff distance for the third component.

Proposition 1.

Let , , , and satisfy Assumptions 1, 2, 3 and 4. Then the triple minimizing (27) provides the following approximation bounds:

and

where and are separated by .

Proof.

By Assumptions 2, 3 it follows that there exists an approximation S defined as above by a triple , such that

and

Building an approximation to f as above by a triple , we can estimate its Fourier coefficients using the above bounds, and it follows that

Therefore,

Let

The approximation to f is the combination of the two components, in and in , where and are separated by .

Using the bound in (37) it follows that

Validity of the Approximation Assumptions

Let us check the validity of Assumptions 1, 2, 3 and 4 for the approximation tools suggested in Section 3.2 and used in the above numerical tests.

We assume that , and that is a curve. To construct the approximation to and we use the space of kth degree tensor-product splines with equidistant knots’ distance d in each direction, . The approximation to is obtained using the approximation space of ℓth degree tensor product splines with equidistant knots’ distance h in each direction, . , , and for both spaces we use the B-spline basis functions. Assumptions 2 and 3 are fulfilled with and .

Assumption 4 is more challenging. To define the mapping

we use the same procedure as in Section 3.2.2 for defining the approximation to f:

We represent p and using the B-spline basis functions as in (18), (19) and (20), respectively. Each triple defines a piecewise spline approximation , and we look for the approximation T(x,y) such that the Fourier coefficients of T match the Fourier coefficients in the least-squares sense:

Out of all the possible solutions of the above problem we look for the one with minimal coefficients’ norm, i.e., minimizing

Following the procedure of Section 3.2.2, we observe that every step in the procedure is smooth with respect to its input. Possible non-uniqueness in solving the linear system of equations on step (5) is resolved by using the generalized inverse. Therefore, the composition of all the steps is also a smooth function of the input, which implies the validity of Assumption 4.

4. The 3D Case

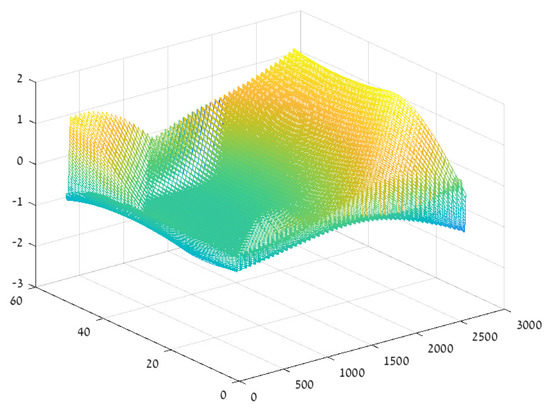

Numerical Example—The Smooth 3D Case

We consider the test function

assuming only its Fourier coefficients are given. We have used only Fourier coefficients and constructed an approximation using 5th-degree tensor product splines with equidistant knots’ distance in each direction. For this case, the matrix A is of size , and . Again, we have employed the iterative refinement algorithm to obtain a high precision solution. The test function is shown in Figure 20. The error in the resulting approximation is displayed in Figure 21.

Figure 20.

The 3D test function reshaped into 2D.

Figure 21.

The approximation error graph, reshaped into 2D.

5. Concluding Remarks

The crucial assumption behind the presented Fourier acceleration strategy is that the underlying function is piecewise ‘nice’. That is, on each piece, the function can be well approximated by a suitable finite set of basis functions. The Fourier series of the function may be given to us as a result of the computational method dictated by the structure of the mathematical problem at hand. In itself, the Fourier series may not be the best tool for approximating the desired solution, and yet it contains all the information about the requested function. Utilizing this information we can derive high accuracy piecewise approximations to that function. The simple idea is to make the approximation match the coefficients of the given Fourier series. The suggested method is simple to implement for the approximation of smooth non-periodic functions in any dimension. The case of non-smooth functions is more challenging, and a special strategy is suggested and demonstrated for the univariate and bivariate cases. The paper contains a descriptive graphical presentation of the approximation procedure, together with a fundamental error analysis.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Gottlieb, D.; Shu, C.W. On the Gibbs phenomenon and its resolution. SIAM Rev. 1997, 39, 644–668.

- Tadmor, E. Filters, mollifiers and the computation of the Gibbs phenomenon. Acta Numer. 2007, 16, 305–378.

- Gelb, A.; Tanner, J. Robust reprojection methods for the resolution of the Gibbs phenomenon. Appl. Comput. Harmon. Anal. 2006, 20, 3–25.

- Eckhoff, K.S. Accurate and efficient reconstruction of discontinuous functions from truncated series expansions. Math. Comput. 1993, 61, 745–763.

- Eckhoff, K.S. Accurate reconstructions of functions of finite regularity from truncated Fourier series expansions. Math. Comput. 1995, 64, 671–690.

- Eckhoff, K.S. On a high order numerical method for functions with singularities. Math. Comput. 1998, 67, 1063–1087.

- Batenkov, D. Complete algebraic reconstruction of piecewise-smooth functions from Fourier data. Math. Comput. 2015, 84, 2329–2350.

- Nersessian, A.; Poghosyan, A. On a rational linear approximation of Fourier series for smooth functions. J. Sci. Comput. 2006, 26, 111–125.

- Levin, D.; Sidi, A. Extrapolation methods for infinite multiple series and integrals. J. Comput. Methods Sci. Eng. 2001, 1, 167–184.

- Sidi, A. Acceleration of convergence of (generalized) Fourier series by the d-transformation. Ann. Numer. Math. 1995, 2, 381–406.

- Wilkinson, J.H. Rounding Errors in Algebraic Processes; Prentice-Hall: Englewood Cliffs, NJ, USA, 1963.

- Moler, C.B. Iterative refinement in floating point. J. ACM 1967, 14, 316–321.

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).