1. Introduction

In time series prediction problems, the main objective is to obtain results that approximate the real data as closely as possible with minimum error. Intelligent systems are widely used for time series problems [1,2]. For example, Zhou et al. [3] used a dendritic mechanism in a neural model and phase space reconstruction (PSR) for the prediction of a time series, and Hrasko et al. [4] presented a prediction of a time series with a Gaussian-Bernoulli restricted Boltzmann machine hybridized with the backpropagation algorithm.

For optimization problems, the principal objective is to search a space of potential solutions for an optimal choice. In many cases, the search space is very large, so obtaining an optimal solution takes a long time. Recent work has focused on computational intelligence methods for solving optimization and search problems more efficiently [5,6,7]. These methods can achieve competitive results, but not necessarily the best solutions. Furthermore, heuristic algorithms can be used as alternative methods. Although these algorithms are not guaranteed to find the best solution, they are able to obtain a good solution in a reasonable time.

The main contribution is the dynamic adaptation of the parameters for particle swarm optimization (PSO) used to optimize parameters for a neural network with interval type-2 fuzzy numbers weights with different T-norms and S-norms, proposed in Gaxiola et al. [8] and used to perform the prediction of financial time series [9,10,11].

The adaptation of parameters is performed with a Type-2 fuzzy inference system (T2-FIS) to adjust the $c_1$ and $c_2$ parameters for the particle swarm optimization. This adaptation is based on the work of Olivas et al. [12], who used interval type-2 fuzzy logic to improve the convergence and diversity of PSO when finding the minimum of several mathematical functions. In this work, control of the $c_1$ and $c_2$ parameters is performed using fuzzy rules to achieve a good dynamic adaptation [13,14]. The use of type-2 fuzzy logic gives a better performance in our work, and this is justified by recent research from Wu and Tan [15] and Sepulveda et al. [16], who demonstrated that interval type-2 fuzzy systems perform better than type-1 fuzzy systems.

The neural network with interval type-2 fuzzy numbers weights optimized with the proposed approach is different from other works using the adjustment of weights in neural networks [17,18], such as James and Donald [19,20].

This paper compares the performance of the traditional neural network with that of the fuzzy neural network with interval type-2 fuzzy numbers weights optimized with the proposed approach. A prediction for a financial time series is used to verify the efficiency of the proposed method.

The use of T-norms and S-norms of sum-product can be seen in Hamacher [21,22]. Olivas [23] developed a new method of parameter adaptation for PSO using fuzzy logic. In their work, the authors improved the performance of the traditional PSO. We decided to use parameter adaptation because, in previous works, we have had good results with other metaheuristic methods. For instance, in [24], we proposed a gravitational search algorithm (GSA) with parameter adaptation to improve the original GSA metaheuristic algorithm.

The next section states the theoretical approach to the work in the paper. Section 3 presents the background research performed to adjust the parameters in PSO, as well as research performed in the neural network area with fuzzy numbers. Section 4 explains the proposed approach and a description of the problem to be solved in the paper. Section 5 presents the simulation results for the proposed approach. Section 6 presents a discussion of the results of the experiments. Finally, in Section 7, conclusions are shown.

3. Antecedents Development

The PSO bio-inspired algorithm has received many improvements to its performance from many researchers. The most important modifications made to PSO aim to increase the diversity of the swarm and to enhance the convergence [39,40].

Olivas et al. [12] proposed a method to dynamically adjust the parameters of particle swarm optimization using type-2 and type-1 fuzzy logic.

Muthukaruppan and Er [41] presented a hybrid algorithm of particle swarm optimization using a fuzzy expert system in the diagnosis of coronary artery disease.

Taher et al. [42] developed a fuzzy system to perform an adjustment of the parameters $c_1$, $c_2$, and $w$ (inertia weight) for the particle swarm optimization.

Wang et al. [43] presented a PSO variant utilizing a fuzzy system to implement changes in the velocity of the particle according to the distance between all particles. If the distance is small, the velocity of some particles is modified drastically.

Hongbo and Abraham [44] proposed adding a new parameter, called the minimum velocity threshold, to the equation used to calculate the velocity of the particle. The new parameter controls the velocity of the particles, and fuzzy logic is applied to make it dynamically adaptive.

Shi and Eberhart [45] presented a dynamic adaptive PSO to adjust the inertia weight utilizing a fuzzy system. The fuzzy system works with two input variables, the current inertia weight and the current best performance evaluation, and the change of the inertia weight is the output variable.

In neural networks, the use of fuzzy logic theory to obtain hybrid algorithms has improved the results for several problems [46,47,48]. In this area, some significant works with fuzzy numbers are the following [49,50]:

Dunyak et al. [51] proposed a fuzzy neural network that works with fuzzy numbers to obtain new weights (inputs and outputs) in the training step. Coroianu et al. [52] presented the use of the inverse F-transform to obtain the optimal fuzzy numbers, preserving the support and the convergence of the core.

Li Z. et al. [53] proposed a fuzzy neural network that works with two fuzzy numbers to calculate the results with operations such as subtraction, addition, division, and multiplication. Fard et al. [54] presented a fuzzy neural network utilizing sum and product operations for two interval type-2 triangular fuzzy numbers in combination with the Stone-Weierstrass theorem.

Molinari [55] proposed a comparison of generalized triangular fuzzy numbers with other fuzzy numbers. Asady [56] presented a comparison of a new method for approximating trapezoidal fuzzy numbers with approximation methods in the literature.

Figueroa-García et al. [57] presented a comparison for different interval type-2 fuzzy numbers performing distance measurement. Requena et al. [58] proposed a fuzzy neural network working with trapezoidal fuzzy numbers to obtain the distance between the numbers and utilized a proposed decision personal index (DPI).

Valdez et al. [59] proposed a novel approach applied to PSO and ACO algorithms. They used fuzzy systems to dynamically update the parameters for the two algorithms. In addition, as another important work, we can mention Fang et al. [60], who proposed a hybridized model of a phase space reconstruction (PSR) algorithm with the bi-square kernel (BSK) regression model for short-term load forecasting. Also, it is worth mentioning the work of Dong et al. [61], who proposed a hybridization method using the support vector regression (SVR) model with a cuckoo search (CS) algorithm for short-term electric load forecasting.

4. Proposed Method and Problem Description

In this work, the optimization of interval type-2 fuzzy number weights neural networks (IT2FNWNN) using particle swarm optimization (PSO) is proposed. The PSO is dynamically adapted utilizing interval type-2 fuzzy systems. A comparison of the traditional neural network and the IT2FNWNN optimized with PSO is performed. The operations in the neurons are obtained by calculating the T-norms and S-norms of the sum-product, Hamacher, and Frank [62,63,64].

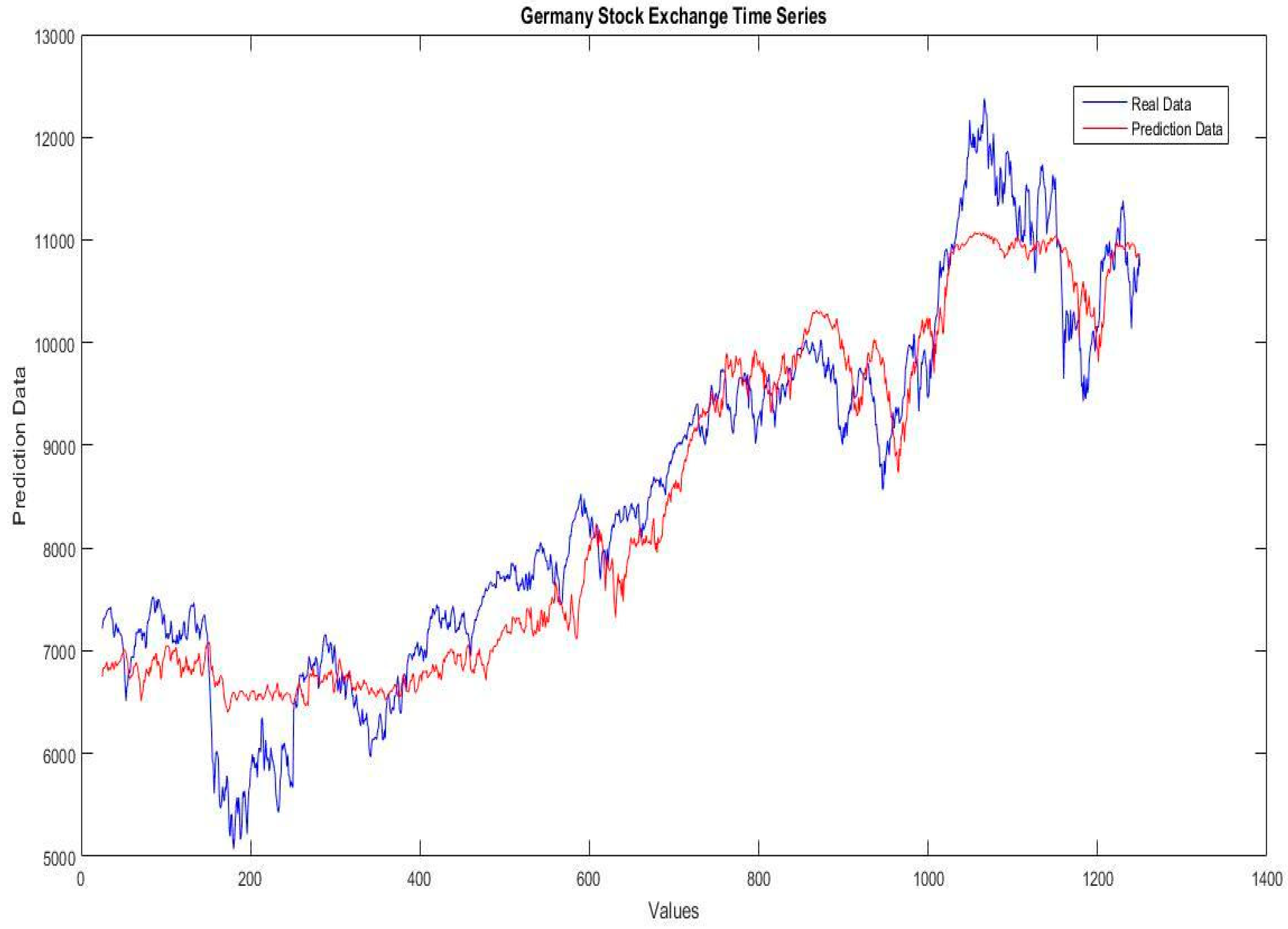

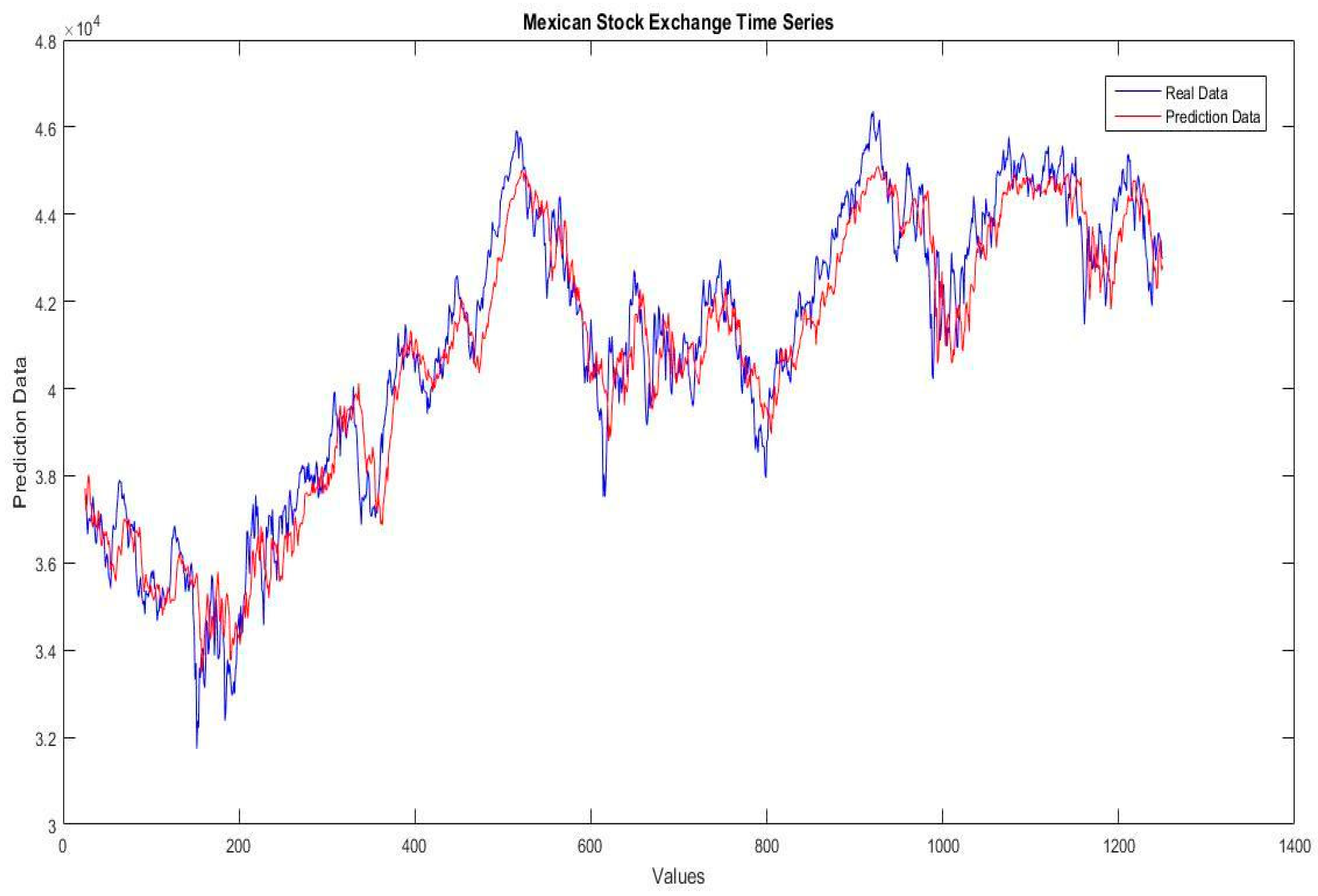

For testing the proposed method, the prediction of the financial time series of the stock exchange markets of Germany, Mexico, Dow-Jones, London, Nasdaq, Shanghai, and Taiwan is performed. The databases consisted of 1250 data points for each time series (2011 to 2015).

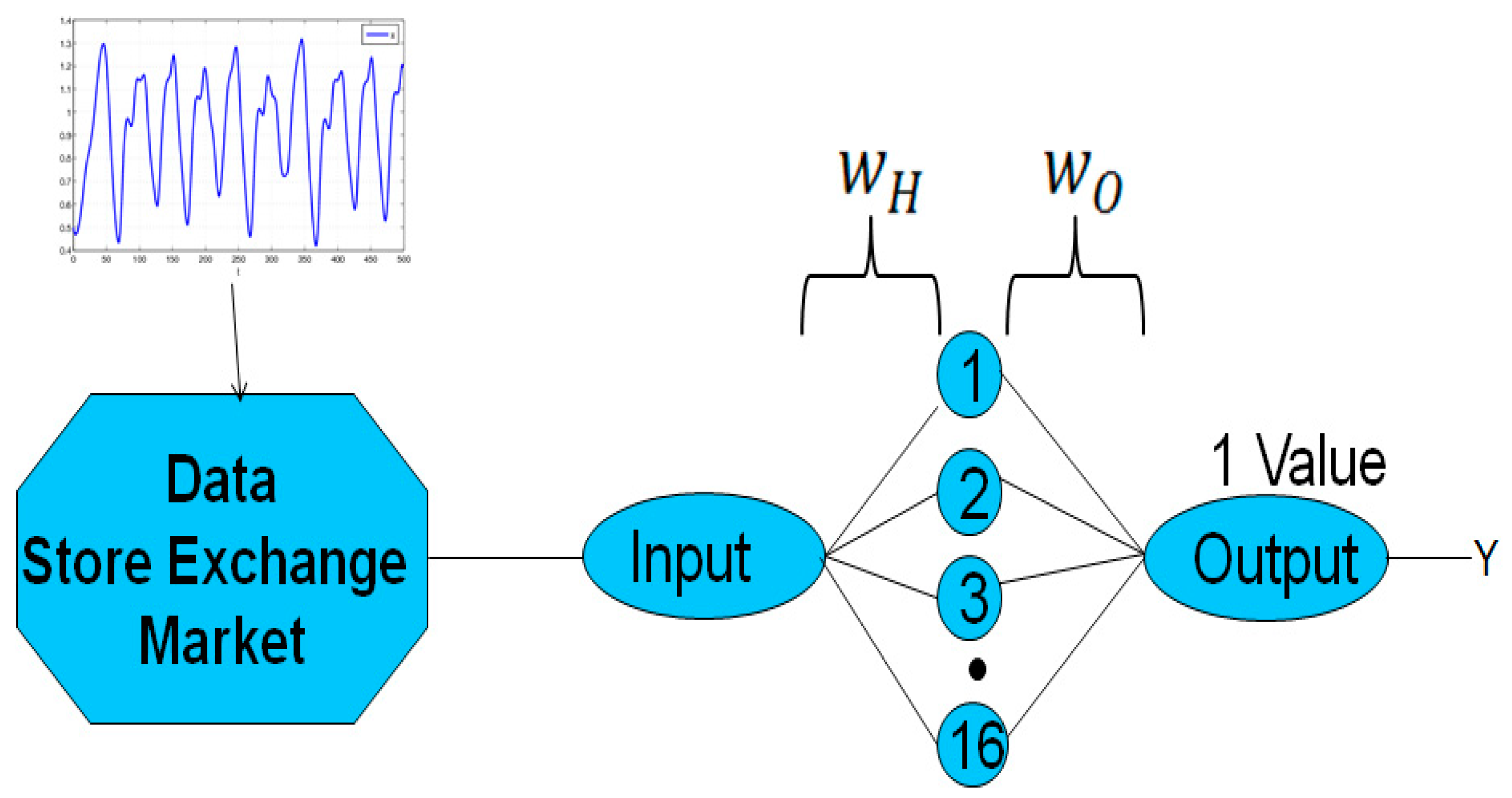

The architecture of the traditional neural network used in this paper (see Figure 2) consists of 16 neurons for the hidden layer and one neuron for the output layer.

The architecture of the interval type-2 fuzzy number weight neural network (IT2FNWNN) has the same structure as the traditional neural network, with 16, 39, and 19 neurons in the hidden layer for the sum-product, Hamacher, and Frank, respectively, and one neuron for the output layer (see Figure 3) [8].

The IT2FNWNN works with fuzzy numbers weights in the connections between the layers, and these weights are represented as follows [8]:

where

and

are obtained using the Nguyen-Widrow algorithm [65] in the initial execution of the network.
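A minimal sketch of the standard Nguyen-Widrow initialization for one hidden layer is given below. It assumes the textbook form of the algorithm, with scale factor β = 0.7·H^(1/n) for H hidden neurons and n inputs. The function name `nguyen_widrow_init`, the `seed` argument, and the layer sizes in the usage note are illustrative assumptions; how the interval bounds of each fuzzy weight are derived from these initial values follows [8] and is not shown here.

```python
import math
import random


def nguyen_widrow_init(n_inputs, n_hidden, seed=None):
    """Textbook Nguyen-Widrow initialization (illustrative sketch).

    Returns (weights, biases, beta), where weights has one row per
    hidden neuron and every row has Euclidean norm beta.
    """
    rng = random.Random(seed)
    # Scale factor of the standard algorithm.
    beta = 0.7 * n_hidden ** (1.0 / n_inputs)
    weights, biases = [], []
    for _ in range(n_hidden):
        # Start from small random weights in [-0.5, 0.5].
        row = [rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
        # Guard against the (practically impossible) all-zero row.
        norm = math.sqrt(sum(w * w for w in row)) or 1.0
        # Rescale so that the weight vector of this neuron has norm beta.
        weights.append([beta * w / norm for w in row])
        # Biases are drawn uniformly from [-beta, beta].
        biases.append(rng.uniform(-beta, beta))
    return weights, biases, beta
```

For instance, `nguyen_widrow_init(3, 16, seed=0)` initializes a hidden layer of 16 neurons for a hypothetical network with three inputs.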

A linear activation function is applied for the output neuron and the hyperbolic secant for the hidden neurons. The S-norm and T-norm are applied to obtain the lower and upper outputs of the neurons, respectively. The T-norms and S-norms used in this work are described as follows:
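As a minimal sketch, the textbook forms of the three operator families on [0, 1] can be written in Python as follows. The function names are illustrative, each S-norm is computed as the dual of its T-norm, S(a, b) = 1 − T(1 − a, 1 − b), and the parameters `gamma` and `s` are assumed to correspond to the Hamacher and Frank parameters γ and s. The way the operators are applied to the interval outputs of the neurons follows [8] and is not reproduced here.

```python
import math


def t_product(a, b):
    """Algebraic product T-norm (the 'product' of sum-product)."""
    return a * b


def s_sum(a, b):
    """Probabilistic (algebraic) sum S-norm, dual of the product."""
    return a + b - a * b


def t_hamacher(a, b, gamma):
    """Hamacher T-norm with parameter gamma >= 0."""
    denom = gamma + (1.0 - gamma) * (a + b - a * b)
    # The denominator vanishes only for gamma = 0 and a = b = 0.
    return 0.0 if denom == 0.0 else a * b / denom


def s_hamacher(a, b, gamma):
    """Hamacher S-norm obtained by duality from the T-norm."""
    return 1.0 - t_hamacher(1.0 - a, 1.0 - b, gamma)


def t_frank(a, b, s):
    """Frank T-norm with parameter s > 0, s != 1."""
    if s <= 0.0 or s == 1.0:
        raise ValueError("s must be positive and different from 1")
    inner = 1.0 + (s ** a - 1.0) * (s ** b - 1.0) / (s - 1.0)
    return math.log(inner, s)


def s_frank(a, b, s):
    """Frank S-norm obtained by duality from the T-norm."""
    return 1.0 - t_frank(1.0 - a, 1.0 - b, s)
```

With `gamma = 1`, the Hamacher pair reduces to the product and the probabilistic sum, which is a quick consistency check for an implementation.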

The back-propagation algorithm using gradient descent and an adaptive learning rate is implemented for the neural networks.

A modification of the back-propagation algorithm that allows operations with interval type-2 fuzzy numbers to obtain new weights for the forthcoming epochs of the fuzzy neural network is proposed [8].

The particle swarm optimization (PSO) is used to optimize the number of neurons for the IT2FNWNN for operations with S-norm and T-norm of Hamacher and Frank. Also, for Hamacher, the parameter, γ, is optimized, and for Frank, the parameter, s, is optimized.

The parameters used in the PSO to optimize the IT2FNWNN are presented in Table 1. The same parameters and design are applied to optimize all the IT2FNWNN for all the financial time series.

In the literature [27], the recommended values for the $c_1$ and $c_2$ parameters are in the interval from 0.5 to 2.5; also, changing these parameters over the iterations of the PSO can generate better results.

In the PSO used to optimize the IT2FNWNN, the parameters $c_1$ and $c_2$ from Equation (2) are selected for dynamic adjustment utilizing an interval type-2 fuzzy inference system (IT2FIS). These parameters are selected because they allow the particles to generate movements for performing the exploration or exploitation in the search space. The structure of the IT2FIS utilized in the PSO is presented in Figure 4.
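To illustrate how the two acceleration coefficients steer the movement of a particle, the following sketch implements the commonly used PSO velocity update. It is assumed here that Equation (2) has this standard form; the function name `update_velocity`, the optional inertia weight `w` (set to 1 by default so that it has no effect), and the `rng` argument are illustrative and not taken from the paper.

```python
import random


def update_velocity(v, x, pbest, gbest, c1, c2, w=1.0, rng=random):
    """Standard PSO velocity update for one particle (illustrative sketch).

    v, x   : current velocity and position (lists of floats)
    pbest  : best position found so far by this particle
    gbest  : best position found so far by the whole swarm
    c1, c2 : cognitive and social acceleration coefficients
    w      : inertia weight (assumption; 1.0 leaves the velocity unscaled)
    """
    new_v = []
    for vj, xj, pj, gj in zip(v, x, pbest, gbest):
        r1, r2 = rng.random(), rng.random()
        cognitive = c1 * r1 * (pj - xj)  # pull toward the particle's own best
        social = c2 * r2 * (gj - xj)     # pull toward the swarm's best
        new_v.append(w * vj + cognitive + social)
    return new_v
```

A large `c1` with a small `c2` keeps each particle near its own best position and favors exploration, whereas a small `c1` with a large `c2` draws the swarm toward the global best and favors exploitation.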

Based on the literature [12,66,67], the inputs selected for the IT2FIS are the diversity of the swarm and the percentage of iterations. Also, in [68], a problem of online adaptation of radial basis function (RBF) neural networks is presented. The authors present a new adaptive training method, which is able to modify both the structure of the network (the number of nodes in the hidden layer) and the output weights.

The diversity input is defined by Equation (11), in which the degree of dispersion of the swarm is calculated. This means that for low diversity, the particles are closer together, and for high diversity, the particles are more separated. The diversity can be taken as the average of the Euclidean distances between each particle and the best particle:

where:

For the diversity variable, normalization is performed based on Equation (12), taking values in the range of 0 to 1:

where:
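The diversity measure described above, the average Euclidean distance between each particle and the best particle, can be sketched as follows. The function names are illustrative, and the min-max form used for the normalization to the range 0 to 1 is an assumption about Equation (12), as is the choice of returning 0 when the minimum and maximum diversity coincide.

```python
import math


def swarm_diversity(positions, best):
    """Average Euclidean distance from each particle to the best particle."""
    total = 0.0
    for p in positions:
        total += math.sqrt(sum((pj - bj) ** 2 for pj, bj in zip(p, best)))
    return total / len(positions)


def normalize_diversity(current, min_div, max_div):
    """Min-max normalization to [0, 1] (assumed form of the normalization)."""
    if max_div == min_div:
        return 0.0  # assumption: no spread observed so far
    return (current - min_div) / (max_div - min_div)
```

For a swarm with particles at (0, 0) and (3, 4) and the best particle at (0, 0), the diversity is (0 + 5) / 2 = 2.5.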

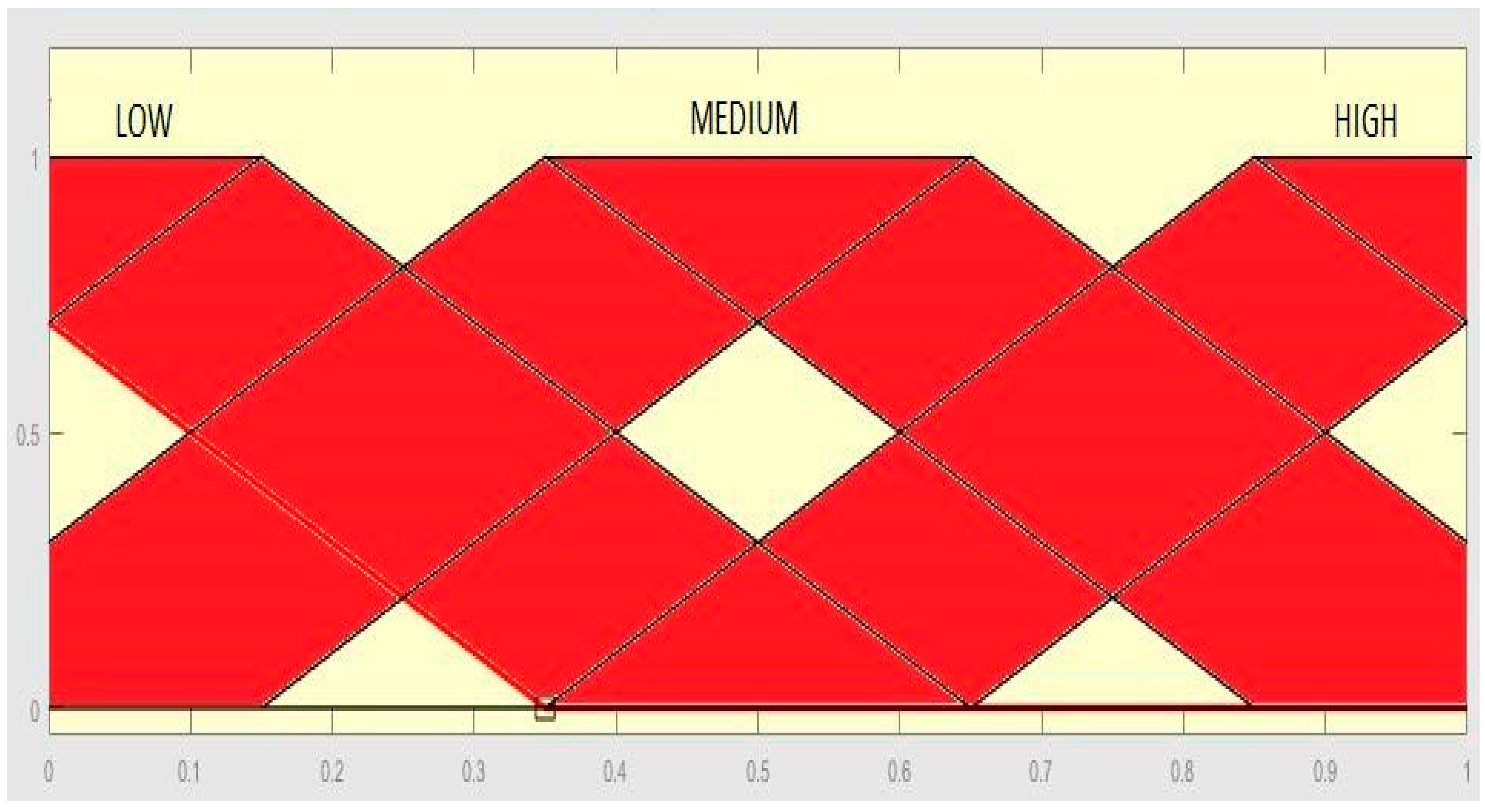

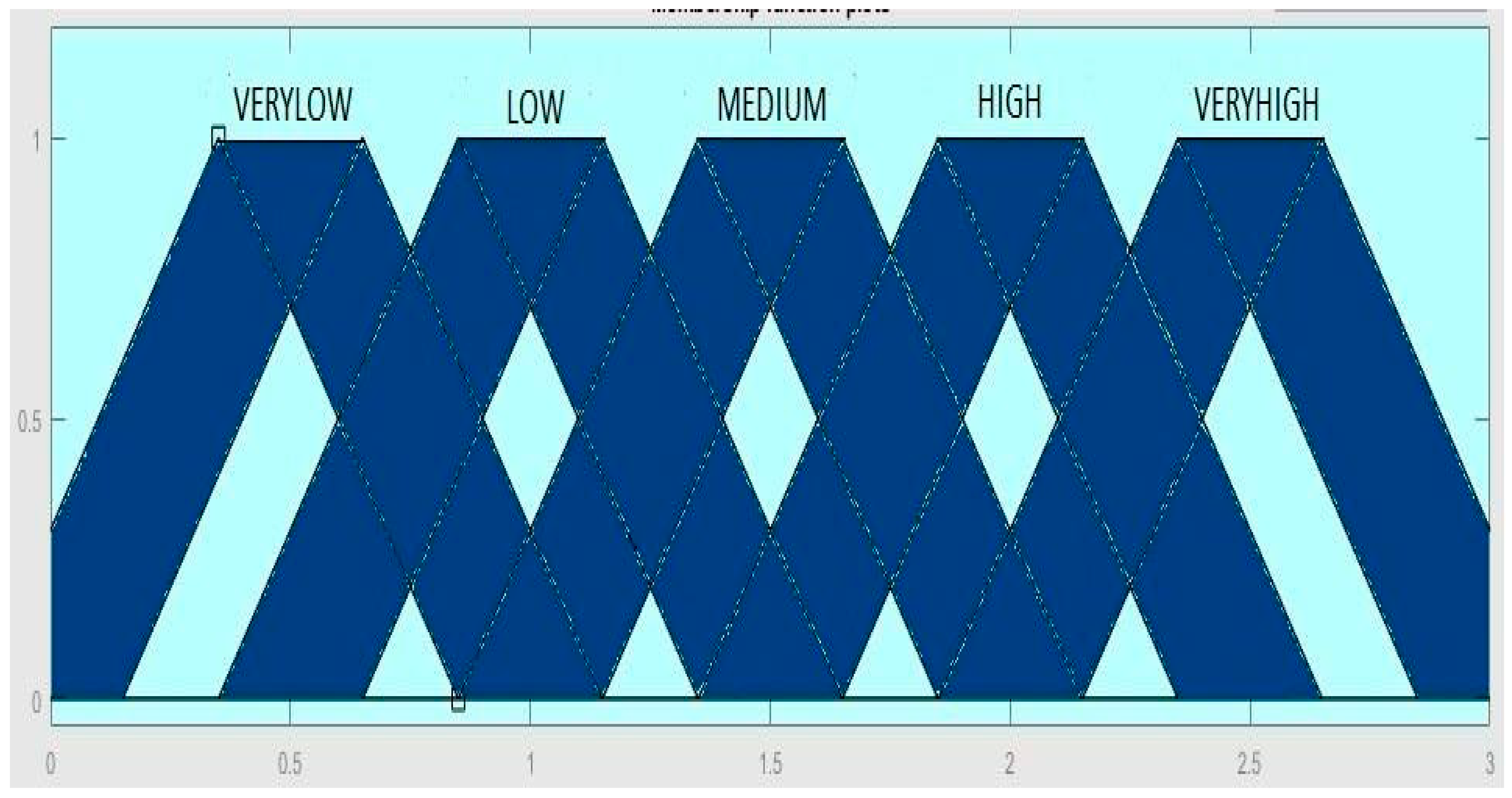

The membership functions for the input diversity are presented in Figure 5.

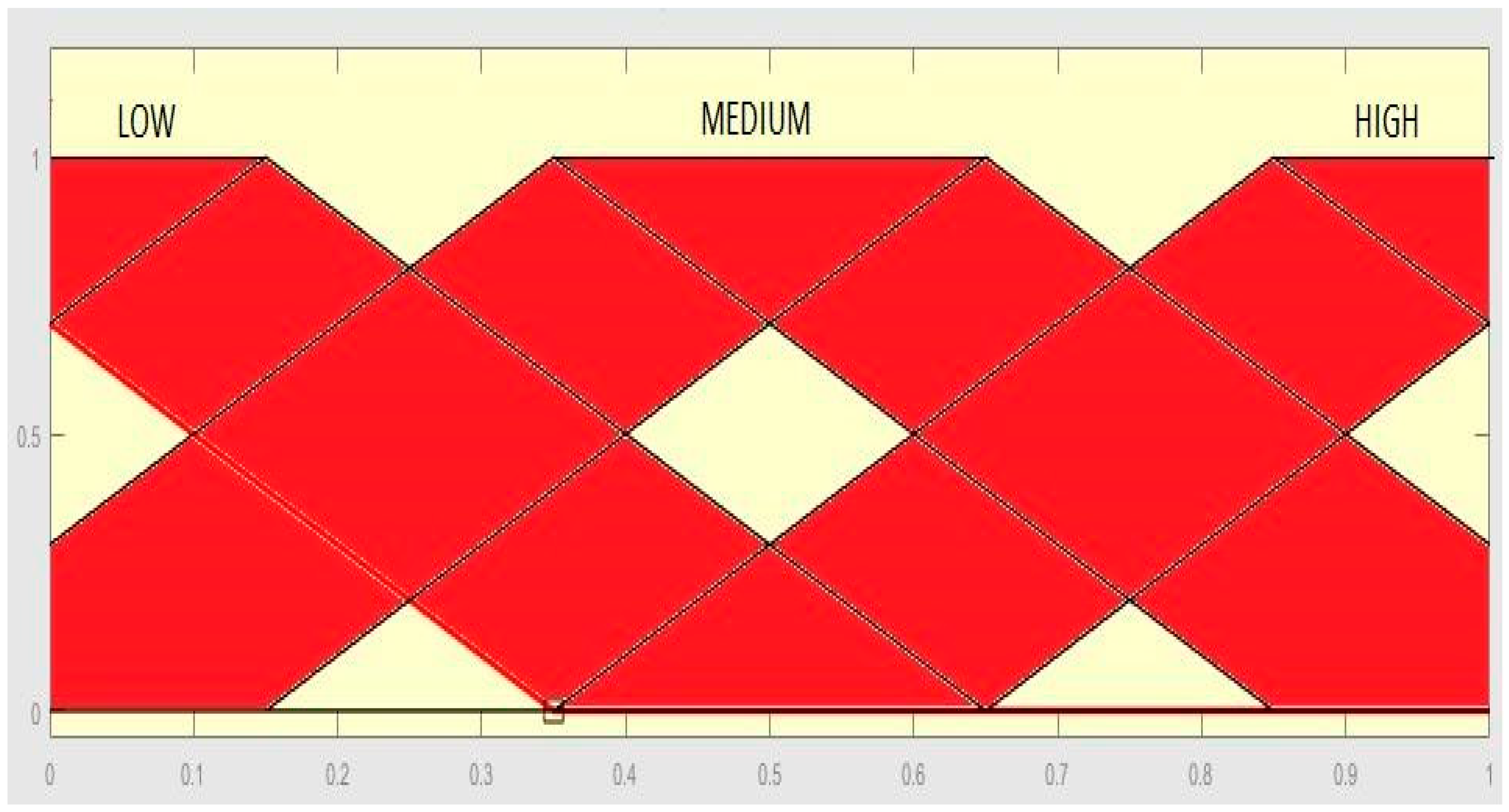

The iteration input is the percentage of iterations completed during the execution of the PSO; the values for this input are in the range from 0 to 1. At the start of the algorithm, the iteration is considered as 0% and is increased until it reaches 100% at the end of the execution. The values are obtained as follows:

The membership functions for the input iteration are presented in Figure 6.

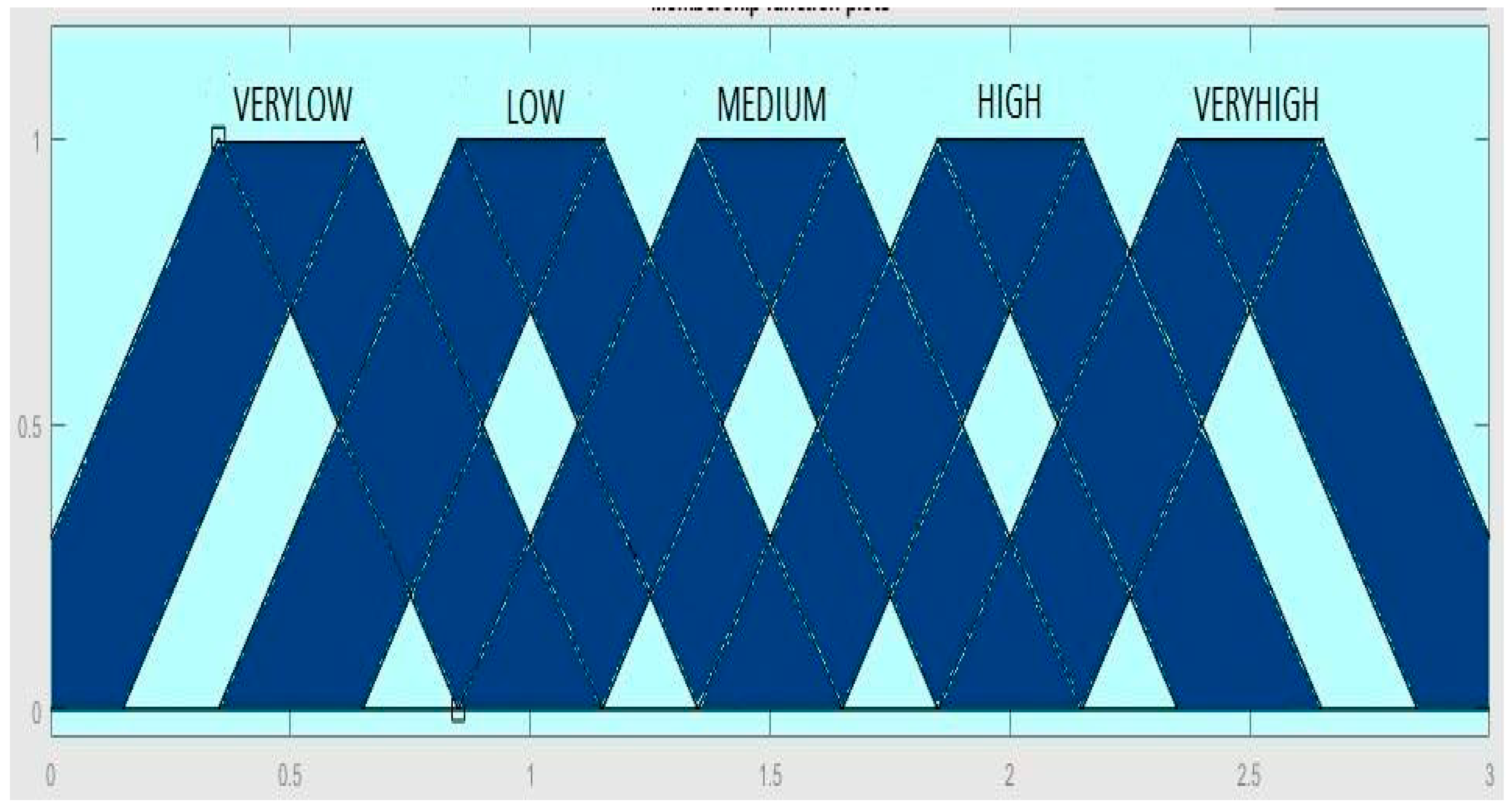

The membership functions for the outputs, $c_1$ and $c_2$, are presented in Figure 7 and Figure 8, respectively. The values for the two outputs are in the range from 0 to 3.

Based on the behavior of the $c_1$ and $c_2$ parameters described in the literature in Section 2, a set of nine fuzzy rules is designed for the dynamic adjustment of the $c_1$ and $c_2$ parameters in each iteration of the PSO, as follows:

If (Iteration is Low) and (Diversity is Low) then ($c_1$ is VeryHigh) ($c_2$ is VeryLow).

If (Iteration is Low) and (Diversity is Medium) then ($c_1$ is High) ($c_2$ is Medium).

If (Iteration is Low) and (Diversity is High) then ($c_1$ is High) ($c_2$ is Low).

If (Iteration is Medium) and (Diversity is Low) then ($c_1$ is High) ($c_2$ is Low).

If (Iteration is Medium) and (Diversity is Medium) then ($c_1$ is Medium) ($c_2$ is Medium).

If (Iteration is Medium) and (Diversity is High) then ($c_1$ is Low) ($c_2$ is High).

If (Iteration is High) and (Diversity is Low) then ($c_1$ is Medium) ($c_2$ is VeryHigh).

If (Iteration is High) and (Diversity is Medium) then ($c_1$ is Low) ($c_2$ is High).

If (Iteration is High) and (Diversity is High) then ($c_1$ is VeryLow) ($c_2$ is VeryHigh).
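The nine rules can be encoded as a lookup table, as in the sketch below. It assumes that the two consequents of each rule refer to c1 and c2, in that order. To keep the example short, it uses a simplified type-1 inference with triangular input memberships and singleton outputs; this is not the interval type-2 inference of the proposed method, and the membership shapes and the output centers in `CENTERS` are hypothetical values chosen only to fall within the 0 to 3 output range.

```python
# (Iteration label, Diversity label) -> (c1 label, c2 label)
RULES = {
    ("Low", "Low"): ("VeryHigh", "VeryLow"),
    ("Low", "Medium"): ("High", "Medium"),
    ("Low", "High"): ("High", "Low"),
    ("Medium", "Low"): ("High", "Low"),
    ("Medium", "Medium"): ("Medium", "Medium"),
    ("Medium", "High"): ("Low", "High"),
    ("High", "Low"): ("Medium", "VeryHigh"),
    ("High", "Medium"): ("Low", "High"),
    ("High", "High"): ("VeryLow", "VeryHigh"),
}

# Hypothetical singleton centers for the output labels (range 0 to 3).
CENTERS = {"VeryLow": 0.5, "Low": 1.0, "Medium": 1.5, "High": 2.0, "VeryHigh": 2.5}


def input_memberships(x):
    """Triangular Low/Medium/High memberships on [0, 1] (hypothetical shapes)."""
    x = min(max(x, 0.0), 1.0)
    return {
        "Low": max(0.0, 1.0 - 2.0 * x),
        "Medium": max(0.0, 1.0 - abs(2.0 * x - 1.0)),
        "High": max(0.0, 2.0 * x - 1.0),
    }


def adapt_c1_c2(iteration, diversity):
    """Return (c1, c2) for normalized iteration and diversity in [0, 1]."""
    mu_it = input_memberships(iteration)
    mu_div = input_memberships(diversity)
    num_c1 = num_c2 = den = 0.0
    for (it_label, div_label), (c1_label, c2_label) in RULES.items():
        # Firing strength of the rule: minimum as the AND operator.
        strength = min(mu_it[it_label], mu_div[div_label])
        num_c1 += strength * CENTERS[c1_label]
        num_c2 += strength * CENTERS[c2_label]
        den += strength
    # Weighted average of the singleton outputs; den > 0 because the
    # input memberships always overlap for inputs in [0, 1].
    return num_c1 / den, num_c2 / den
```

In a PSO loop, `iteration` would be the current iteration divided by the maximum number of iterations, and `diversity` the normalized diversity of the swarm. Early iterations with low diversity then yield a high c1 and a low c2 (exploration), and late iterations yield the opposite (exploitation).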

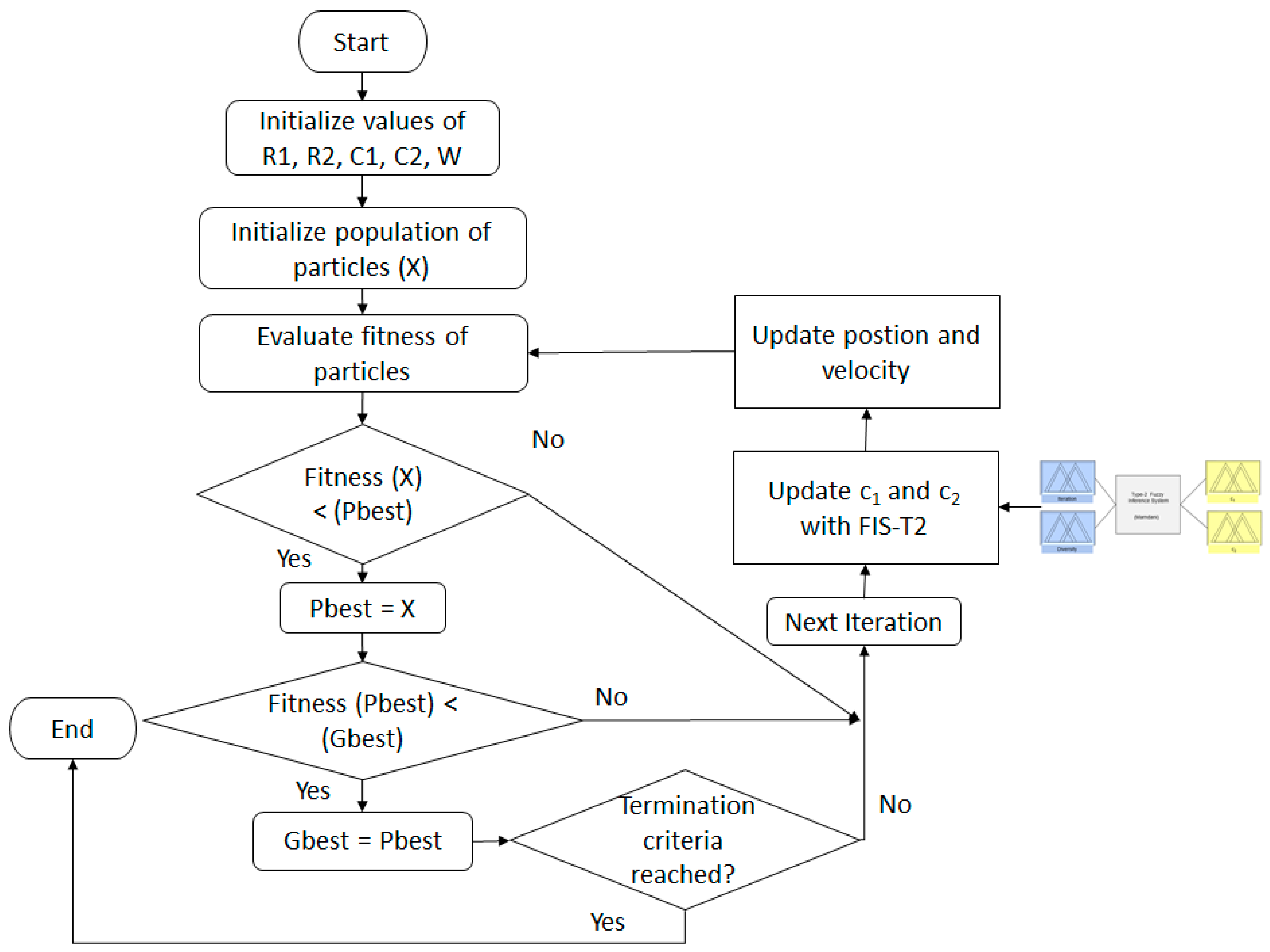

In Figure 9, a block diagram of the procedure for the proposed approach is presented.

5. Simulation Results

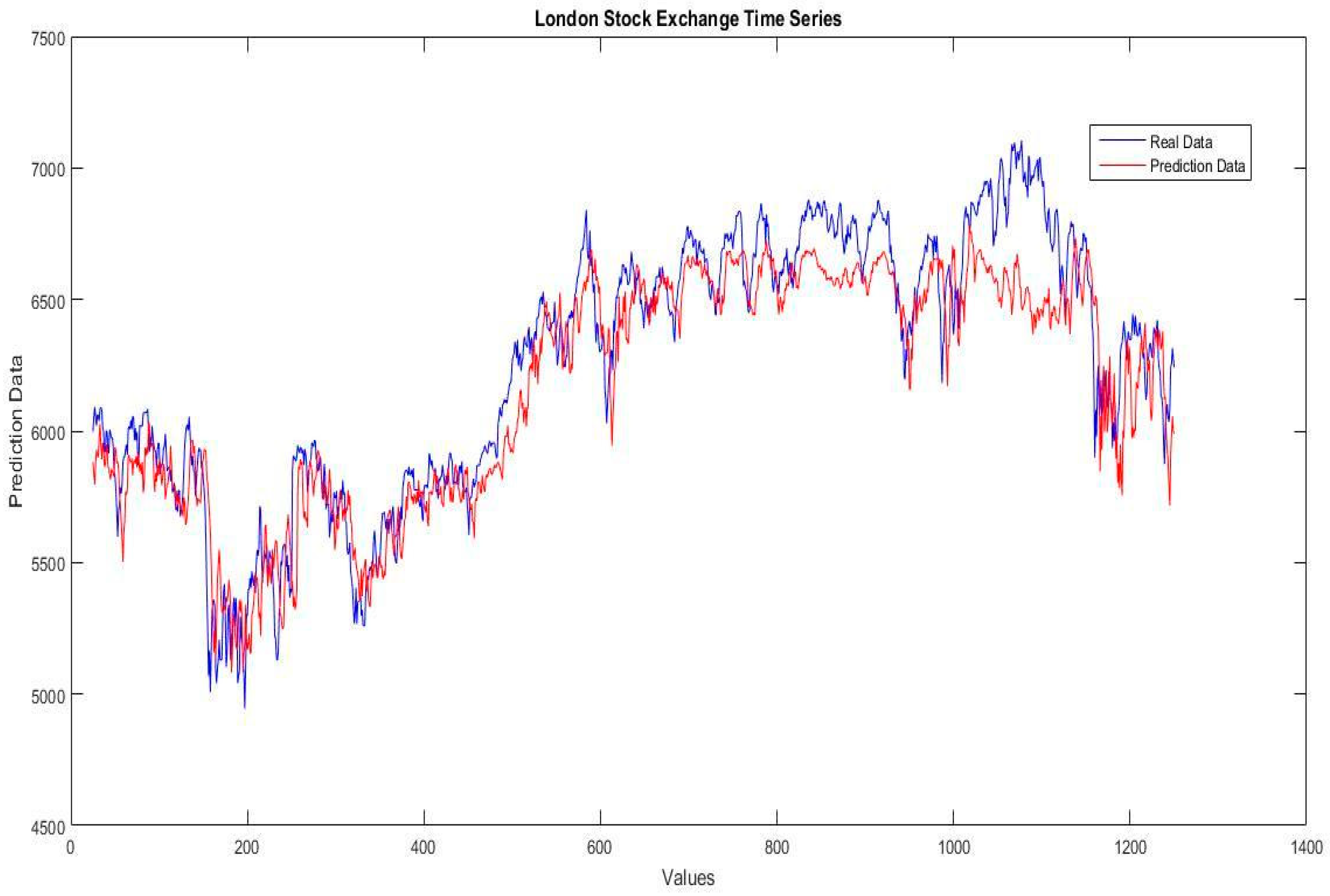

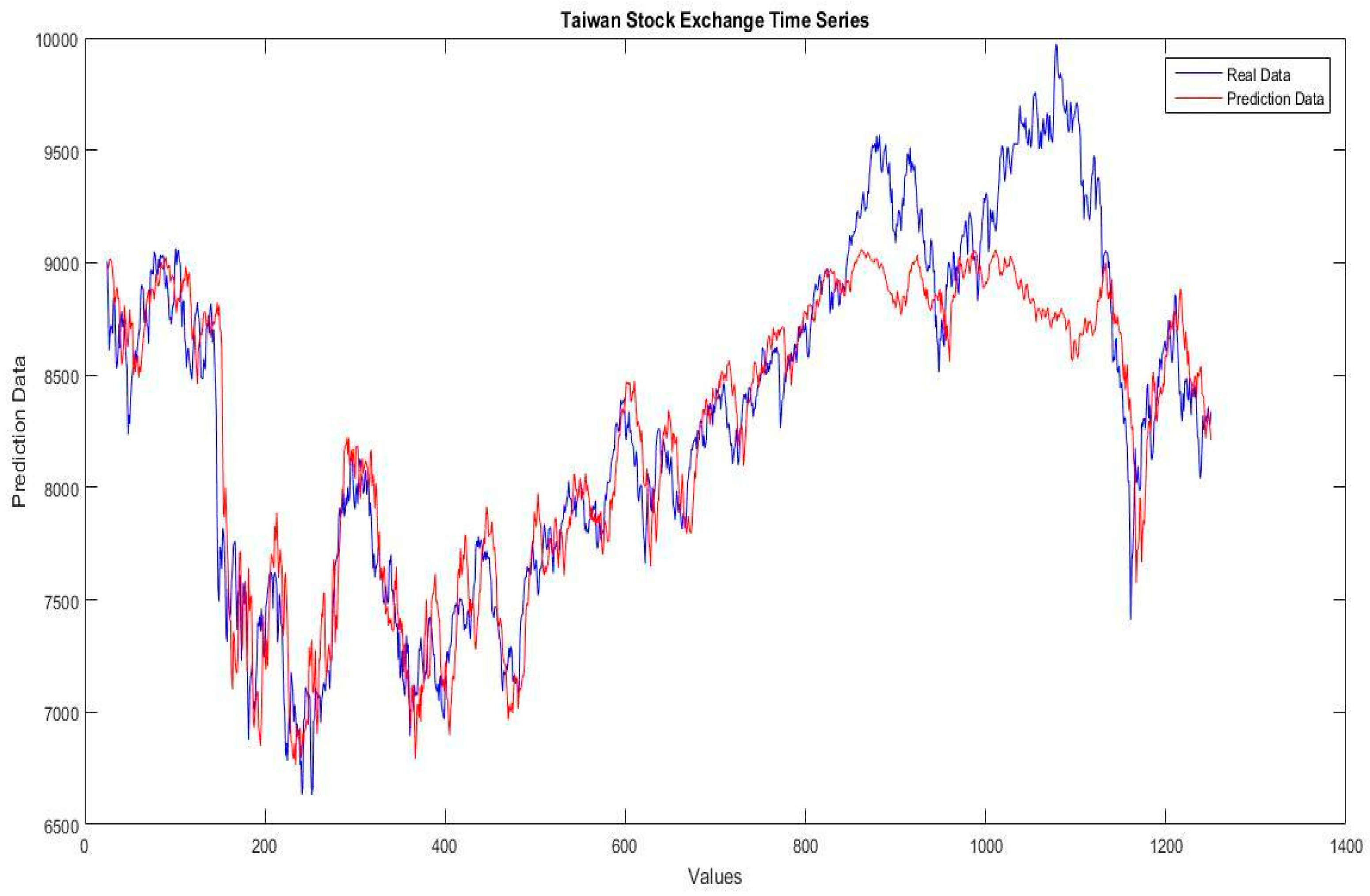

We performed the experiments for the financial time series of the stock exchange markets of Germany, Mexico, Dow-Jones, London, Nasdaq, Shanghai, and Taiwan, and for all experiments, we used 1250 data points. In this case, 750 data points are considered for the training stage and 500 data points for the testing stage.

In Table 2, the results of the experiments for the financial time series of stock exchange markets for the traditional neural network with 16 neurons in the hidden layer are presented.

The results of the experiments are evaluated with the mean absolute error (MAE) and root mean square error (RMSE). Thirty experiments with the same parameters and conditions were performed to obtain the average error, but only the best result and the average of the 30 experiments are presented.
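For reference, the two error measures can be computed as in the following sketch, which uses the standard definitions of MAE and RMSE; the function names are illustrative.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error between target and predicted values."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean square error between target and predicted values."""
    mse = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    return math.sqrt(mse)
```

Because RMSE squares each deviation before averaging, it penalizes large individual errors more strongly than MAE, so reporting both gives a fuller picture of the prediction quality.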

In Table 3, the results of the experiments for the financial time series of the stock exchange for the IT2FNWNN with 16 neurons in the hidden layer for the S-norm and the T-norm of the sum of the product are presented (IT2FNWNNSp).

In Table 4, the results of the experiments for the financial time series of the stock exchange markets for the IT2FNWNN with 39 neurons in the hidden layer for the S-norm and T-norm of Hamacher are presented (IT2FNWNNH). The value for the parameter γ in the Hamacher IT2FNWNN without optimization is 1.

In Table 5, the results of the experiments for the financial time series of the stock exchange for the IT2FNWNN with 19 neurons in the hidden layer for the S-norm and T-norm of Frank are presented (IT2FNWNNF). The value for the parameter s in the Frank IT2FNWNN without optimization is 2.8.

We performed the optimization of the Hamacher IT2FNWNN and Frank IT2FNWNN for the number of neurons in the hidden layer and the parameters γ and s, respectively, with PSO using the dynamic adjustment for the $c_1$ and $c_2$ parameters of Equation (2), applying the interval type-2 fuzzy inference system of Figure 4 and the parameters of Table 1.

In Table 6, the optimization for each financial time series for the Hamacher IT2FNWNN is presented. We present only the best result of the five experiments performed.

In Table 7, the results of the experiments for the financial time series of the stock exchange for the IT2FNWNN for the Hamacher S-norm and T-norm optimized with dynamic PSO are presented (IT2FNWNNH-PSO). The values for the number of neurons in the hidden layer and the parameter γ in the Hamacher IT2FNWNN are taken from Table 6.

In Table 8, the optimization for each financial time series for the Frank IT2FNWNN is presented. We present only the best result of the five experiments performed.

In Table 9, the results of the experiments for the financial time series of the stock exchange for the IT2FNWNN for the Frank S-norm and T-norm optimized with dynamic PSO are presented (IT2FNWNNF-PSO). The values for the number of neurons in the hidden layer and the parameter s in the Frank IT2FNWNN are taken from Table 8.

In Table 10, a comparison of the best results for the prediction for all financial time series of the stock exchange markets with the traditional neural network (TNN), the interval type-2 fuzzy numbers weights neural network with the sum of the product (IT2FNWNNSp), Hamacher (IT2FNWNNH) and Frank (IT2FNWNNF) without optimization, and Hamacher (IT2FNWNNH-PSO) and Frank (IT2FNWNNF-PSO) optimized with dynamic PSO is presented.

A Student's t-test is applied to verify the accuracy of the results. In Table 11, the best results for each financial time series are compared with the TNN. The null hypothesis is H1 = H2 and the alternative hypothesis is H1 > H2 (AVG = average, SD = standard deviation, SEM = standard error of the mean, ED = estimation of the difference, LLD 95% = lower limit 95% of the difference, GL = degrees of freedom) [69].
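The test statistic of a two-sample t-test can be computed as in the sketch below. It assumes the unequal-variance (Welch) form, since the exact variant used for Table 11 is not stated here; the function name `welch_t` is illustrative, and the first sample is taken to be the group with the hypothetically larger mean so that it matches the one-sided alternative H1 > H2.

```python
import math
import statistics


def welch_t(sample1, sample2):
    """Welch two-sample t statistic and degrees of freedom (sketch).

    Returns (t, df) for the difference mean(sample1) - mean(sample2).
    """
    n1, n2 = len(sample1), len(sample2)
    m1, m2 = statistics.mean(sample1), statistics.mean(sample2)
    # Sample variances (n - 1 in the denominator).
    v1, v2 = statistics.variance(sample1), statistics.variance(sample2)
    se2 = v1 / n1 + v2 / n2  # squared standard error of the difference
    if se2 == 0.0:
        raise ValueError("both samples have zero variance")
    t = (m1 - m2) / math.sqrt(se2)
    # Welch-Satterthwaite approximation of the degrees of freedom.
    df = se2 ** 2 / ((v1 / n1) ** 2 / (n1 - 1) + (v2 / n2) ** 2 / (n2 - 1))
    return t, df
```

The null hypothesis is rejected in favor of H1 > H2 when `t` exceeds the one-sided critical value of the t distribution with `df` degrees of freedom at the chosen significance level.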

7. Conclusions

Based on the experiments, we have reached the conclusion that the interval type-2 fuzzy numbers weights neural network with the Hamacher (IT2FNWNNH) and Frank (IT2FNWNNF) S-norms and T-norms, optimized using particle swarm optimization (PSO) with dynamic adjustment, achieves better results than the traditional neural network and the interval type-2 fuzzy numbers weights neural network without optimization in almost all the cases of study for the stock exchange time series used in this work. This assertion is based on the comparison of prediction errors for all stock exchange time series shown in Table 10 and the data of the Student's t-test for the best results against the traditional neural network (TNN).

The proposed approach of dynamic adjustment in PSO for optimization of interval type-2 fuzzy numbers weights neural network was compared with the traditional neural network to demonstrate the effectiveness of the proposed method.

The proposed optimization, which applies an interval type-2 fuzzy inference system to perform dynamic adjustment of the $c_1$ and $c_2$ parameters in PSO, allows the algorithm to find optimal results for the testing problems. In almost all the test cases, the results obtained with the optimized IT2FNWNN were the best; only for the London stock exchange time series did the IT2FNWNNH obtain better results.

These results are good considering that the number of iterations and the number of particles for the executions of the PSO are relatively small: only 50 iterations with 50 particles. We believe that performing experiments with more iterations and particles will allow the algorithm to find better results.