1. Introduction

In practice, the reliability evaluation of units or systems relies predominantly on lifetime data collected under various conditions and experimental schemes, and obtaining complete data through simple random sampling (SRS) is the traditional approach. Although SRS offers appealing advantages for statistical inference, such as unbiased population representation and a well-established theoretical framework, its implementation in reliability testing faces significant challenges in engineering contexts. For example, SRS frequently requires large sample sizes and incurs prohibitive cost and time burdens, particularly when dealing with high-value or low-failure-rate products. To ease these time and cost constraints, various alternative sampling frameworks have been developed, one of the most popular being ranked set sampling (RSS), which strategically incorporates expert judgment into the sampling process. RSS addresses several critical constraints of the traditional SRS approach to lifetime data collection. In particular, it achieves an estimation accuracy comparable to that of SRS with fewer experimental units and significantly reduces the testing costs for high-failure-cost products. Moreover, through cycle ranking and systematic selection, RSS inherently stratifies population heterogeneity, thereby enhancing the ability to detect latent failure patterns that SRS might overlook in complex systems.

The traditional RSS framework was introduced by McIntyre [1] to estimate mean pasture yield, and its high efficiency relative to traditional SRS has been demonstrated by various authors from different perspectives. For example, Bouza [2] used RSS and randomized response techniques to estimate the mean of a sensitive quantitative characteristic and found that RSS is highly beneficial, leading to estimators that are more precise than those based on SRS data. He et al. [3] investigated the maximum likelihood estimation of the log-logistic distribution under both SRS and RSS frameworks. The results revealed the superior performance of the RSS-based method over that of SRS, particularly under imperfect ranking conditions. Similar work has also been undertaken by Taconeli [4], Nawaz [5], Qian [6] and Dumbgen [7] (see also the references therein). Generally, the RSS framework can be described as follows. Suppose that there are $n^2$ identical units randomly selected from the population; then, $n$ groups of size $n$ are formed from these $n^2$ units. In each group, units are ranked according to a straightforward and inexpensive criterion, such as expert judgment, visual inspection, or auxiliary variables, without measuring them. The unit ranked lowest in the first group of $n$ units is chosen for actual quantification, the unit ranked second lowest is measured from the second group of $n$ units, and the process continues until the unit ranked highest is measured from the $n$-th group of size $n$. The data $X_{(1)1}, X_{(2)2}, \ldots, X_{(n)n}$ are called the RSS observation of size $n$, where the notation $X_{(j)s}$ denotes the $j$-th order statistic in the $s$-th set for $j, s = 1, 2, \ldots, n$. For a visual presentation, the traditional RSS framework is described as follows:

Although traditional RSS has demonstrated advantages in improving statistical efficiency and reducing costs compared to SRS, its reliance on balanced sample sizes and structured ranking assumptions may limit its applicability under certain conditions. To address these practical and theoretical limitations, numerous modified RSS strategies have been developed. These include extreme RSS [8], mean ranked sampling [9], quartile RSS [10], paired RSS [11], multistage RSS [12], and stratified pair RSS [13], among others. Each of these strategies offers unique advantages for different study designs and data characteristics. Among the different RSS frameworks, one popular modified RSS approach, called maximum ranked set sampling with unequal samples (MaxRSSU), introduced by Bhoj [14], has attracted much attention in the literature. It modifies the sampling procedure to account for unequal samples, leading to a significant improvement in sampling efficiency and estimation accuracy in certain scenarios. In the context of MaxRSSU, the procedure is structured as follows. There are $n(n+1)/2$ identical units selected from the population, which are randomly divided into $n$ groups of sizes $1, 2, \ldots, n$. Then, the highest-ranked unit is chosen for actual quantification from the $i$-th group of size $i$ with $i = 1, 2, \ldots, n$. Therefore, the sample $X_{(1)1}, X_{(2)2}, \ldots, X_{(n)n}$, where $X_{(i)i}$ is the largest of the $i$ units in the $i$-th group, is called the MaxRSSU observation of size $n$ in this scenario. For illustration, the MaxRSSU scheme is described as follows:

The MaxRSSU procedure has several advantages compared to traditional RSS. For example, the MaxRSSU approach accommodates unequal sample sizes across subgroups, allowing adaptive resource allocation. This is useful in field studies where logistical constraints or costs limit equal-sized sampling. Consequently, unequal sample sizes in this case also allow strategic allocation of measurement efforts, optimizing time and resource usage while maintaining precision. In addition, by prioritizing maximum ranks, MaxRSSU can better capture extreme values, making it ideal for environmental monitoring, epidemiology, or quality control where outliers are of primary interest. Due to its great flexibility and potential advantages, the MaxRSSU approach has received considerable attention and been discussed by many authors (see, for example, the contributions of Eskandarzadeh et al. [15], Qiu et al. [16], and Basikhasteh et al. [17], among others).
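To make the two single-cycle schemes concrete, the following minimal Python sketch draws one balanced RSS sample and one MaxRSSU sample. It is an illustration only and rests on assumptions not made in the text: ranking is perfect (units are ranked by their true values, whereas in practice ranking uses judgment or auxiliary information without measurement), and the function names `rss_sample` and `max_rssu_sample` as well as the exponential lifetime model are hypothetical.

```python
import random

def rss_sample(draw, n, rng):
    """Balanced RSS under perfect ranking (an assumption): the s-th set of
    n units contributes its s-th smallest value, so n**2 units are drawn
    and only n are measured."""
    return [sorted(draw(rng) for _ in range(n))[s] for s in range(n)]

def max_rssu_sample(draw, n, rng):
    """MaxRSSU under perfect ranking (an assumption): the i-th set holds
    i units and contributes its maximum, so n*(n+1)/2 units are drawn
    and only n are measured."""
    return [max(draw(rng) for _ in range(i)) for i in range(1, n + 1)]

# Hypothetical lifetime model, used only to have something to sample from.
def draw_lifetime(rng):
    return rng.expovariate(1.0)

rng = random.Random(1)
print(rss_sample(draw_lifetime, 4, rng))       # 4 measured out of 16 units
print(max_rssu_sample(draw_lifetime, 4, rng))  # 4 measured out of 10 units
```

With $n = 4$, RSS uses 16 units and MaxRSSU uses 10, while both measure only 4, which illustrates the reduction in the number of units needed by the unequal-set design.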

The above scenarios are called single-cycle RSS frameworks; however, in practical applications, such single-cycle RSS frameworks are often extended to multiple-cycle RSS to address complexities arising from population heterogeneity, resource constraints, or measurement limitations. This hierarchical approach involves iteratively applying ranking and selection procedures across sequential phases, thereby balancing statistical efficiency with operational feasibility. In addition, this approach is particularly valuable in large-scale surveys involving geographically dispersed populations. The multiple-cycle strategy not only reduces logistical burdens but also enhances the representativeness of the sample when subpopulations exhibit distinct characteristics. Under the MaxRSSU framework with a $k$-cycle design, the corresponding multiple-stage MaxRSSU scenario can be described as follows:

where the notation $X_{(j)s}^{(i)}$ denotes the $j$-th ranked observation in ascending order from the $s$-th group ($s = 1, 2, \ldots, n$) in the $i$-th MaxRSSU cycle with $i = 1, 2, \ldots, k$. To simplify notation, the MaxRSSU samples drawn from the $i$-th cycle are denoted as $X_{i1}, X_{i2}, \ldots, X_{in}$. It is noted that the multiple-cycle MaxRSSU framework is applied through sequential sampling phases in a MaxRSSU scenario. Similarly, there are also various cases where the multiple-cycle scenario has been adopted for other RSS schemes. For example, see Wolfe [18], Wang [19], Taconeli [4] and Akdeniz [20], as well as the references therein.

In practical experimental designs, multiple-cycle RSS is widely adopted for its efficiency in improving estimation accuracy by leveraging stratification within each cycle. However, when RSS is extended to multiple-cycle settings for longitudinal or large-scale product evaluations, differences between different cycles (DBDC) emerge as critical sources of bias due to inherent experimental limitations. Specifically, factors such as instrument drift over time, technician-dependent sorting inconsistencies, or shifts in operational protocols across cycles (e.g., modifications in subgroup ranking criteria or uneven allocation of sample units) can disrupt the comparability of rankings and measurements between cycles. These discrepancies directly violate the assumption of statistical homogeneity commonly imposed in multiple-cycle RSS analyses, making conventional methods, which often pool data across cycles as if they were interchangeable, prone to severe inaccuracies. For example, unaccounted-for drift in measurement tools might introduce spurious trends in ranked data, while protocol variations could distort comparisons of product performance between cycles. Such biases could lead to misleading inferential outcomes, such as overestimating treatment effects, inflating type I errors, or masking true variability due to the conflation of experimental noise and genuine product-related signals. Therefore, explicitly modeling the effects of DBDC in multiple-cycle RSS frameworks becomes imperative to preserve the integrity of statistical conclusions, ensure robust estimation, and enable valid comparisons across experimental cycles; ignoring inter-group variability may lead to biased and inaccurate results (see, for example, some recent contributions of Ahmadi et al. [21], Zhu [22], Kumari et al. [23], Wang et al. [24], as well as references therein). Motivated by these reasons and by the potential applications of the multiple-cycle MaxRSSU framework, this paper is devoted to statistical inference for multiple-cycle MaxRSSU data from the Burr Type-III (BIII) population when the DBDC is taken into account.

Given the potential benefits of incorporating cycle effects in improving the flexibility and design of experimental studies, this paper investigates the statistical inference challenges associated with using the BIII distribution for equipment lifetime analysis under MaxRSSU. The main contributions of this study are summarized as follows. Firstly, by applying maximum likelihood estimation (MLE) to the parameters of the multiple-cycle BIII model, we establish theoretical conditions for the existence and uniqueness of the resulting estimates. This provides a solid foundation for improving the numerical stability and computational performance of likelihood-based methods. Secondly, to capture the cycle effects more accurately, we propose an enhanced hierarchical Bayesian model for statistical inference. By integrating historical data or expert knowledge into the inference framework, the hierarchical Bayesian approach offers notable advantages over traditional frequentist methods in terms of both flexibility and estimation accuracy. Thirdly, this paper has practical value for engineering management. We provide engineers and technicians with an alternative multiple-cycle life testing scheme that offers greater flexibility, together with a more efficient statistical inference method that can be used to analyze the reliability of equipment or systems under limited cost and time, where sample sizes are relatively small.

The article is structured as follows. Section 2 provides a description of the population model and outlines multiple-cycle MaxRSSU data and the associated likelihood function. Section 3 presents the classical estimation of unknown parameters and the reliability indices. The hierarchical Bayesian model is described in Section 4. In Section 5, the likelihood ratio test is examined. Numerical studies and two real-life examples are explored in Section 6. Finally, Section 7 provides concluding remarks.

6. Numerical Illustration

In this section, Monte Carlo simulations are performed to compare the performance of the proposed maximum likelihood and Bayes estimation methods. Additionally, two real data examples are presented to illustrate the application of the proposed model.

6.1. Simulation Studies

In this part, extensive simulation studies are conducted to evaluate the performance of various methods. To compare the accuracy of the results, point estimates are assessed using the absolute bias (AB) and mean square error (MSE), while interval estimates are compared based on the average length (AL) because MLE and Bayes estimation have similar coverage rates.
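For concreteness, the three criteria can be computed from replicated estimates as in the short Python sketch below. The definitions are stated as assumptions, since the text does not give formulas: AB is taken here as the absolute difference between the average estimate and the true value (some authors instead average the absolute errors), MSE as the average squared error, and AL as the average interval width. The function names and the numbers are hypothetical.

```python
from statistics import mean

def absolute_bias(estimates, true_value):
    """Assumed definition: |average estimate - true value|."""
    return abs(mean(estimates) - true_value)

def mean_square_error(estimates, true_value):
    """Average squared deviation of the estimates from the true value."""
    return mean([(e - true_value) ** 2 for e in estimates])

def average_length(intervals):
    """Average width of (lower, upper) interval estimates."""
    return mean([upper - lower for lower, upper in intervals])

# Hypothetical replicated results, not taken from Tables 1-4.
estimates = [1.9, 2.1, 2.2]
intervals = [(1.5, 2.5), (1.6, 2.8)]
print(absolute_bias(estimates, 2.0))
print(mean_square_error(estimates, 2.0))
print(average_length(intervals))
```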

In this paper, various parameter values and sample sizes are used for simulation. Moreover, to account for the cycle effect among the $k$ cycles, random noise is assumed for the BIII data from the $i$-th test facility, with $i = 1, 2, \ldots, k$. This assumption helps to reflect the variability associated with each testing cycle. Moreover, another approach, called Algorithm 3, is also presented to generate multiple-cycle MaxRSSU data. For the calculation, the MLE of $c$ is obtained using a fixed-point iteration method. Meanwhile, hybrid MH sampling is performed, and the first 5000 samples are discarded as burn-in to eliminate sampling instability in the Gibbs sampling scenario. Consequently, the criteria quantities, including AB, MSE, and AL, are calculated for the parameters and the reliability indicators, with interval estimates taken at the 95% level. These results are presented in Table 1, Table 2, Table 3 and Table 4, where the mission time $t$ is specified for each of the cases under consideration.

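Algorithm 3: Generation of multiple-cycle MaxRSSU data under BIII distribution.

- Step 1: For each cycle $i = 1, 2, \ldots, k$, generate the random noise for that cycle.
- Step 2: For each group index $j$, generate $j$ iid samples from the BIII distribution of that cycle.
- Step 3: Arrange these $j$ data in ascending order, and let the largest value be the $j$-th MaxRSSU observation of cycle $i$. Therefore, the $k$-cycle MaxRSSU data are obtained for $i = 1, 2, \ldots, k$.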
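The steps of Algorithm 3 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the BIII distribution is taken in the parameterization $F(x) = (1 + x^{-c})^{-\alpha}$, $x > 0$, with a shape $c$ common to all cycles and a cycle-specific $\alpha_i$; the cycle noise is assumed to be log-normal and multiplicative on $\alpha$; and every cycle uses the same number of groups $n$. The names `rburr3` and `generate_max_rssu` are hypothetical.

```python
import math
import random

def rburr3(c, alpha, rng):
    """One draw by inversion from the assumed BIII CDF
    F(x) = (1 + x**(-c))**(-alpha), x > 0."""
    u = rng.random()
    while u == 0.0:                 # guard against log(0)
        u = rng.random()
    # u**(-1/alpha) - 1 computed stably as expm1(-log(u)/alpha)
    return math.expm1(-math.log(u) / alpha) ** (-1.0 / c)

def generate_max_rssu(c, alphas, n, rng):
    """Return one list per cycle; entry j-1 is the maximum of j iid draws."""
    data = []
    for alpha_i in alphas:          # Step 1 supplies one alpha_i per cycle
        cycle = []
        for j in range(1, n + 1):   # Step 2: j iid BIII draws
            group = sorted(rburr3(c, alpha_i, rng) for _ in range(j))
            cycle.append(group[-1]) # Step 3: keep the largest value
        data.append(cycle)
    return data

rng = random.Random(2024)
k, n, c, alpha = 3, 5, 1.5, 2.0     # hypothetical settings
# Assumption: multiplicative log-normal cycle noise on alpha.
alphas = [alpha * math.exp(rng.gauss(0.0, 0.1)) for _ in range(k)]
data = generate_max_rssu(c, alphas, n, rng)
for i, cycle in enumerate(data, start=1):
    print(i, [round(x, 3) for x in cycle])
```

Under this parameterization the maximum of $j$ iid BIII($c$, $\alpha$) variables is again BIII with parameters $(c, j\alpha)$, so Steps 2 and 3 could be replaced by a single draw from BIII($c$, $j\alpha_i$); the explicit version above is kept because it mirrors the algorithm as stated.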

As effective sample sizes increase, the AB and MSE for both MLE and Bayes estimates decrease. This trend indicates that the estimates derived from both classical likelihood and Bayesian approaches exhibit consistency and perform satisfactorily under the designed scenarios.

For fixed sample sizes, the Bayes estimates exhibit smaller AB and MSE than the MLEs in most cases.

For a fixed sample size, the AL of the Bayesian HPD intervals derived from the hierarchical model is typically shorter than that of the ACIs. This indicates superior performance of the HPD intervals in terms of interval length.

The AL of both the ACIs and the Bayesian HPD intervals decreases as the sample sizes increase.

There are partial overlaps in the ACIs or HPD intervals for the cycle-specific parameters. These intervals are not identical, which indicates inherent differences among the facilities and suggests that inter-group variability across different cycles during the experimental process is significant and should not be overlooked.

To summarize, the differences between different cycles in multiple-cycle testing are significant, and the Bayes estimation using hierarchical models exhibits superior performance compared to traditional classical likelihood estimation.

6.2. Data Analysis

In this section, two real-life datasets are utilized to demonstrate application of the proposed methods.

Example one (carbon fiber datasets). These datasets represent the strength data of carbon fibers with gauge lengths of 10 mm and 20 mm under tensile test. These data were originally provided by Badar and Priest [34] and further analyzed by Mead et al. [35]. Without loss of generality, we have taken a transformation of the actual data for better illustration; the transformed data are presented in Table 5 with sample sizes 63 and 69.

Before proceeding, assessment of goodness-of-fit is conducted to check if the BIII model can be used as a proper model to fit these data. The Kolmogorov–Smirnov (KS) distances and the associated

p-values (with brackets) are obtained as 0.0984 (0.5137) and 0.1040 (0.4824), respectively. The Anderson–Darling (AD) test statistic and the associated

p-values (with brackets) are 1.0411 (0.3612) and 1.3095 (0.3286), respectively. Therefore, these results indicate that the BIII distribution is a suitable model for these real-world datasets under different cases. In addition,

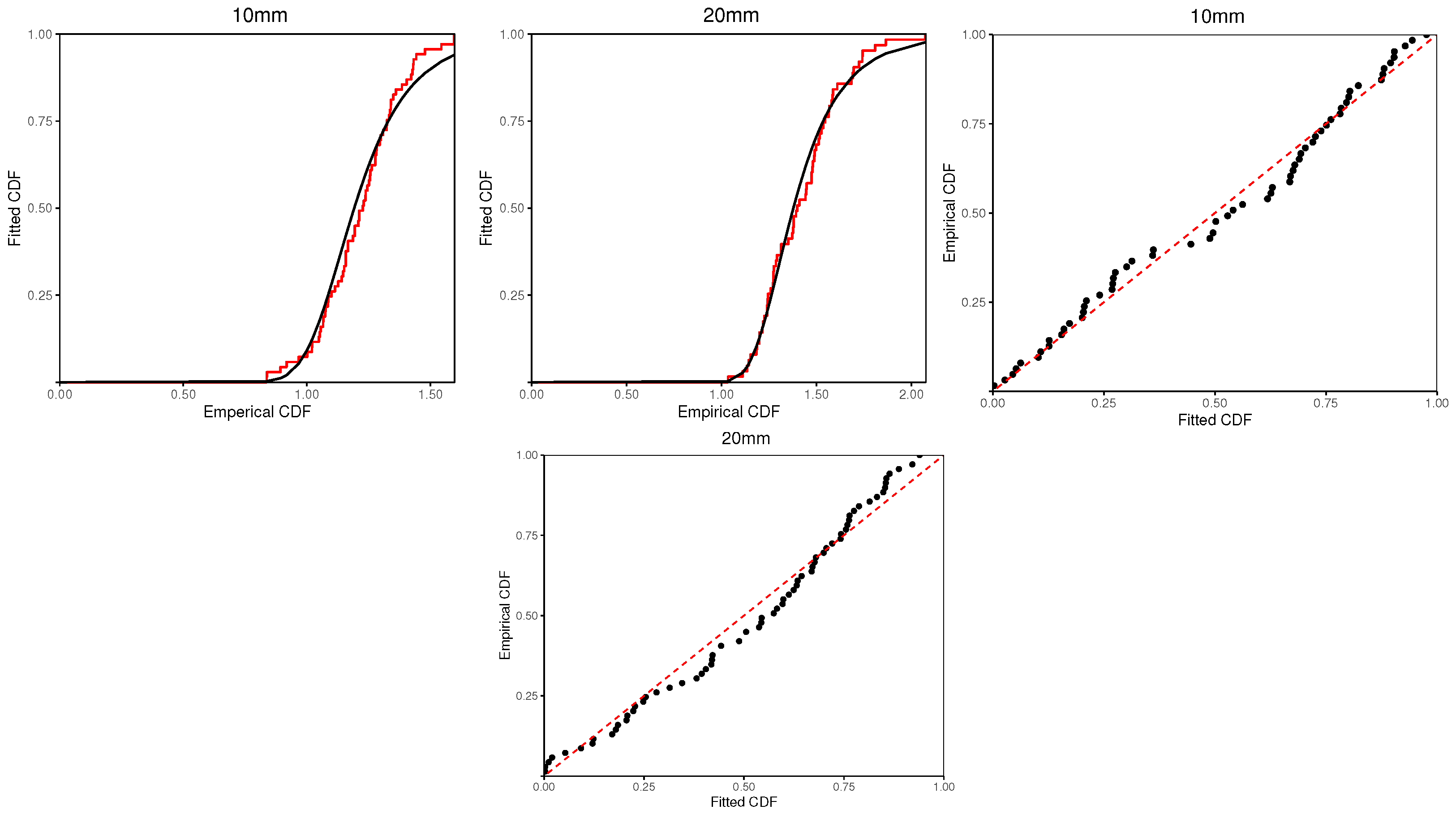

Figure 2 illustrates a comparison between the empirical cumulative distribution function and the fitted BIII distribution, complemented by Probability–Probability (P-P) plots. These plots also show that the BIII distribution can be used as a suitable model to fit the data visually.
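As a reminder of what the KS distance measures, the sketch below computes the largest gap between the empirical distribution function of a sample and a fully specified CDF. It is illustrative only: the BIII parameterization $F(x) = (1 + x^{-c})^{-\alpha}$ is an assumption, the parameter values and data are hypothetical rather than the fitted values or the datasets of this section, and no p-value is computed.

```python
def burr3_cdf(x, c, alpha):
    """Assumed BIII parameterization: F(x) = (1 + x**(-c))**(-alpha), x > 0."""
    return (1.0 + x ** (-c)) ** (-alpha)

def ks_distance(data, cdf):
    """Largest vertical gap between the empirical CDF of `data` and `cdf`,
    checked just before and at each order statistic."""
    xs = sorted(data)
    n = len(xs)
    gaps = []
    for i, x in enumerate(xs, start=1):
        f = cdf(x)
        gaps.append(max(i / n - f, f - (i - 1) / n))
    return max(gaps)

# Hypothetical values, not the carbon fiber or bank waiting time data.
toy = [0.7, 1.1, 1.4, 2.0, 3.2]
print(ks_distance(toy, lambda x: burr3_cdf(x, c=2.0, alpha=1.5)))
```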

In our example, the carbon fiber strength data for 10 mm and 20 mm are treated as two-cycle data to show the application of the proposed methods, i.e., the data for 10 mm and the data for 20 mm are each assumed to come from a BIII distribution. Further, the likelihood ratio test (LRT) of Section 5 is applied to these two cycles. Based on direct calculation, the value of the LRT statistic and the associated p-value are 0.7867 and 0.8039. Therefore, there is insufficient evidence to reject the null hypothesis, and these two datasets from 10 mm and 20 mm are assumed to follow the BIII populations specified under the null hypothesis.

Following the MaxRSSU sampling scenario, two sets of MaxRSSU data are generated from the original carbon fiber data for 10 mm and 20 mm, respectively. The detailed procedures are shown as follows:

and

Therefore, two sets of MaxRSSU data are obtained as follows:

Based on the above two-cycle MaxRSSU data, classical likelihood and Bayes estimates are computed using the proposed methods while accounting for the DBDC effect. The associated results are presented in Table 6, where the estimated standard errors (ESE) for the point estimates and the interval lengths are provided in square brackets, and the interval estimates are obtained for a given significance level. In addition, the Bayes estimates are obtained based on 10,000 repetitions, and the first 5000 samples are discarded to ensure convergence.
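The post-processing of a sampled chain described here (discarding the burn-in and summarizing the remaining draws) can be sketched as follows. This is a generic illustration, not the hybrid MH sampler itself: the HPD interval is approximated by the shortest interval covering the required fraction of the sorted draws, the Bayes estimate is assumed to be the posterior mean (squared error loss), and the names `hpd_interval` and `summarize` as well as the chain are hypothetical.

```python
import math
import random

def hpd_interval(draws, cred=0.95):
    """Shortest interval that contains a fraction `cred` of the sorted draws."""
    xs = sorted(draws)
    n = len(xs)
    m = max(1, math.ceil(cred * n))   # number of draws the interval must cover
    start = min(range(n - m + 1), key=lambda i: xs[i + m - 1] - xs[i])
    return xs[start], xs[start + m - 1]

def summarize(chain, burn_in=5000, cred=0.95):
    """Drop the burn-in draws, then return (posterior mean, HPD interval)."""
    kept = chain[burn_in:]
    return sum(kept) / len(kept), hpd_interval(kept, cred)

# Hypothetical chain standing in for 10,000 sampler outputs.
rng = random.Random(0)
chain = [rng.gammavariate(2.0, 1.0) for _ in range(10000)]
estimate, (lower, upper) = summarize(chain)
print(estimate, lower, upper)
```

For a skewed posterior, the interval found this way is shorter than the equal-tailed interval with the same coverage, which is the reason HPD intervals are compared by their length.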

Based on

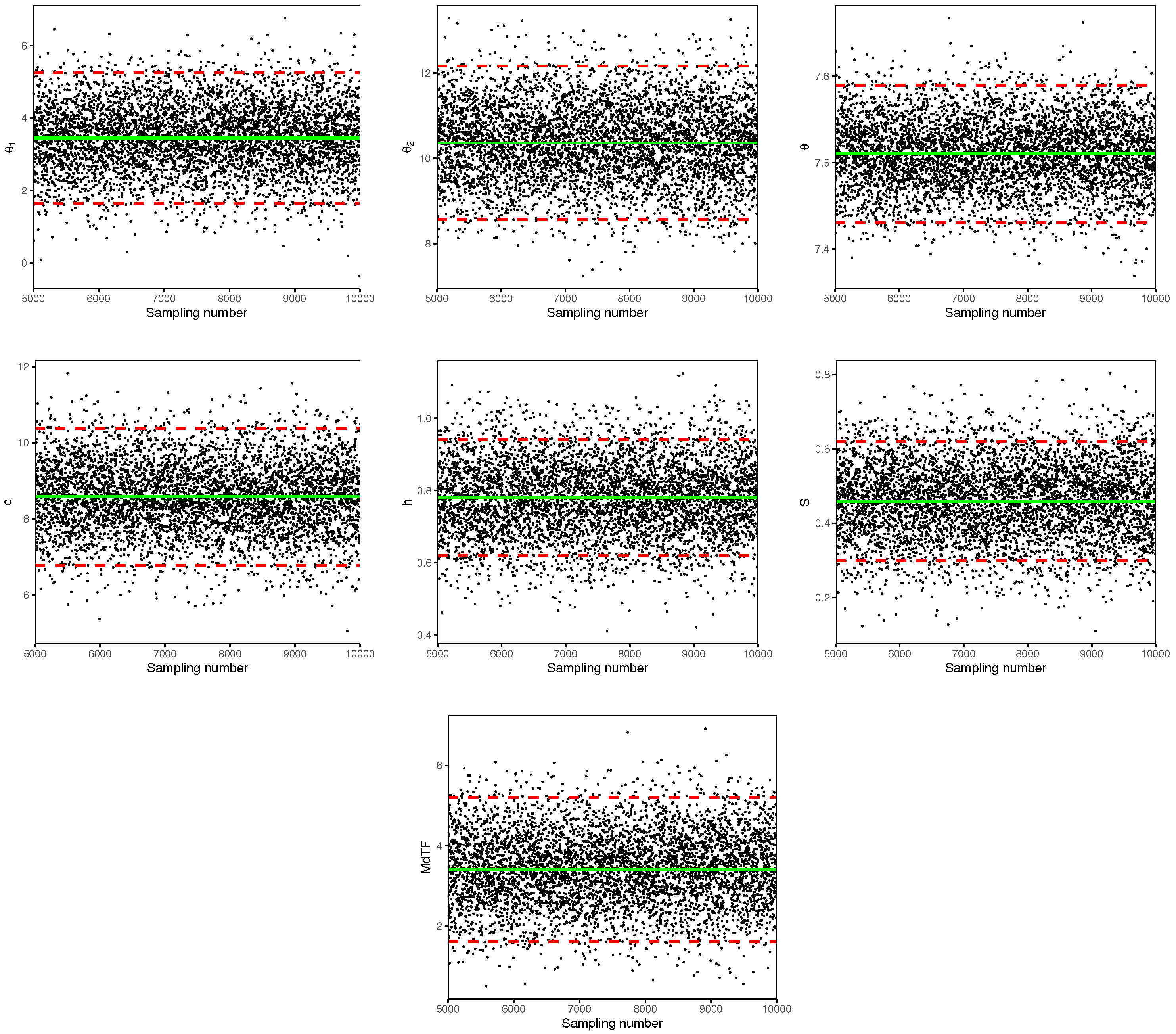

Table 6, it can be observed that, for different parameters and reliability indices, both the MLEs and the Bayes estimates perform satisfactorily. Moreover, in terms of the ESE, the Bayes estimates generally outperform the corresponding MLEs. Similarly, the HPD credible intervals tend to have shorter lengths compared to the ACIs, indicating the superior performance of the hierarchical Bayesian method. In addition, the partial overlapping among different interval estimates for the same parameters and reliability indices under classical and Bayesian approaches suggests that the cycle effects may not be ignorable. Furthermore, to investigate the performance of the Bayesian sampling method,

Figure 3 provides trace plots for parameters

, and reliability indices

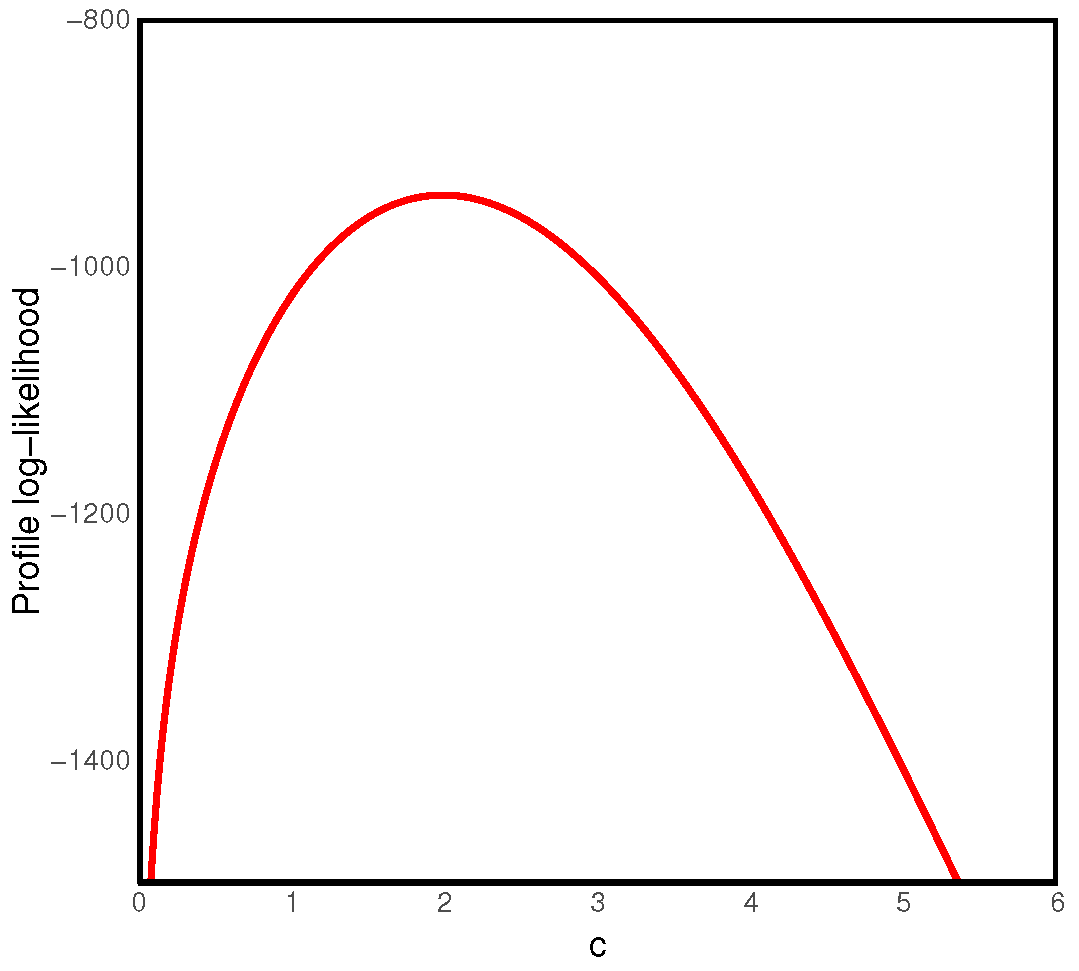

and MdTF, where a solid line denotes the associated Bayes estimate, and the dashed lines give the associated interval bounds. It is noted that there is good mixing performance of the proposed Bayes sampling approach. In addition, the plots of the profile log-likelihood function of the parameter

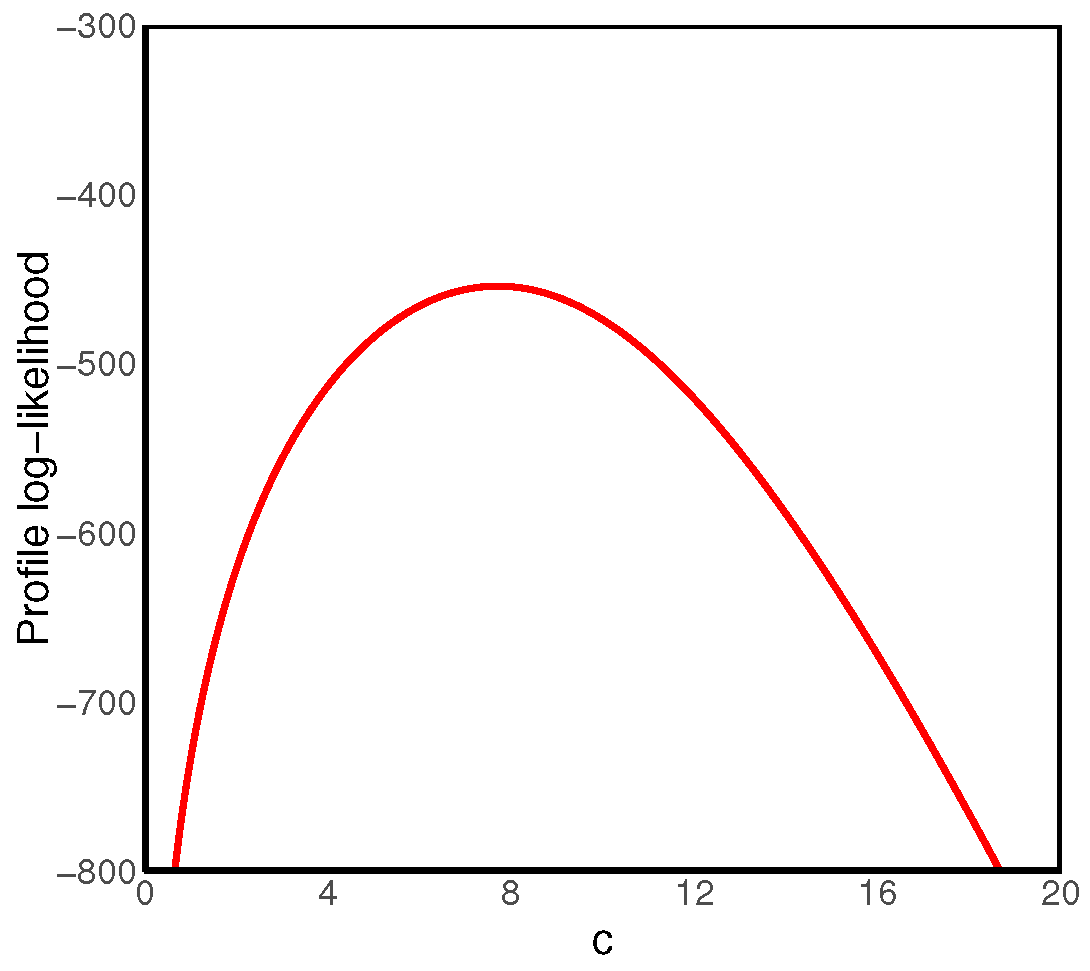

c are also presented in

Figure 4. It is also observed that the profile function is a unimodal function showing the existence and uniqueness of the MLE

that is consistent with Theorem 2.
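To illustrate how a profile log-likelihood in $c$ can arise for MaxRSSU data, the following Python sketch works under an assumed model that may differ from the one in Section 2: each cycle follows BIII with CDF $F(x) = (1 + x^{-c})^{-\alpha_i}$, with common $c$ and cycle-specific $\alpha_i$. The maximum of $j$ iid units then has density $j\alpha_i c\, x^{-c-1}(1 + x^{-c})^{-j\alpha_i - 1}$, and for fixed $c$ the maximizer in $\alpha_i$ has the closed form $\hat{\alpha}_i(c) = n / \sum_{j} j \log(1 + x_{ij}^{-c})$. A plain grid search is used here in place of the fixed-point iteration, which is not reproduced; all names and data are hypothetical.

```python
import math

def alpha_hat(c, x):
    """Closed-form maximizer in alpha for one cycle at fixed c (assumed model);
    x[j-1] is the maximum of a group of j units."""
    return len(x) / sum(j * math.log1p(xj ** (-c)) for j, xj in enumerate(x, start=1))

def loglik(c, alphas, cycles):
    """Log-likelihood of multiple-cycle MaxRSSU data under the assumed model."""
    total = 0.0
    for a, x in zip(alphas, cycles):
        for j, xj in enumerate(x, start=1):
            total += (math.log(j * a * c) - (c + 1.0) * math.log(xj)
                      - (j * a + 1.0) * math.log1p(xj ** (-c)))
    return total

def profile_loglik(c, cycles):
    """Log-likelihood in c with each alpha_i replaced by alpha_hat(c, cycle i)."""
    return loglik(c, [alpha_hat(c, x) for x in cycles], cycles)

def argmax_on_grid(cycles, lo=0.05, hi=10.0, steps=2000):
    """Grid search for the maximizer of the profile log-likelihood."""
    grid = [lo + (hi - lo) * t / steps for t in range(steps + 1)]
    return max(grid, key=lambda c: profile_loglik(c, cycles))

# Hypothetical two-cycle data, not the datasets analyzed in this section.
cycles = [[0.8, 1.3, 1.1, 2.4], [0.6, 1.0, 1.9, 1.7]]
c_hat = argmax_on_grid(cycles)
print(c_hat, [alpha_hat(c_hat, x) for x in cycles])
```

Plotting `profile_loglik` over the grid gives a curve of the kind shown in the profile log-likelihood figure, and a single interior peak is what unimodality looks like numerically.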

Example two (bank waiting times datasets). As another example, the waiting times (in minutes) before customer service in two different banks are discussed. The original data were provided in Ghitany et al. [36]. The detailed waiting time data are shown in Table 7.

Before proceeding further, the goodness-of-fit is assessed to determine whether the BIII distribution can serve as a reasonable model. Based on the complete data shown in Table 7, the KS distances and the p-values (in parentheses) for the original data from Bank A and Bank B are 0.1062 (0.3124) and 0.1337 (0.2946), respectively. The AD statistics and the associated p-values (in parentheses) are 2.0682 (0.2841) and 1.9037 (0.2593), respectively. These results suggest that the BIII model is appropriate for the bank waiting times data. Furthermore, Figure 5 shows the empirical cumulative distribution function, the fitted BIII distribution, and the P-P plots. These plots also show visually that the BIII distribution fits the data well.

In this example, the waiting times data from Bank A and Bank B are treated as two-cycle data to demonstrate the application of the proposed methods. Specifically, the data from Bank A and the data from Bank B are each assumed to follow a BIII distribution. Similarly to Example one, the LRT statistic and the p-value are calculated as 0.5867 and 0.2832. Therefore, the null hypothesis is not rejected, and the corresponding BIII models are used to fit the real-life Bank A and Bank B data, respectively.

Following the MaxRSSU scenario, two sets of MaxRSSU data, one from Bank A and one from Bank B, are obtained as follows:

Consequently, classical likelihood and Bayes estimates of the parameters and reliability indices are conducted for the bank MaxRSSU data with DBDC effect. The results are summarized in

Table 8 with a

significance level for the confidence level. Based on the results tabulated in

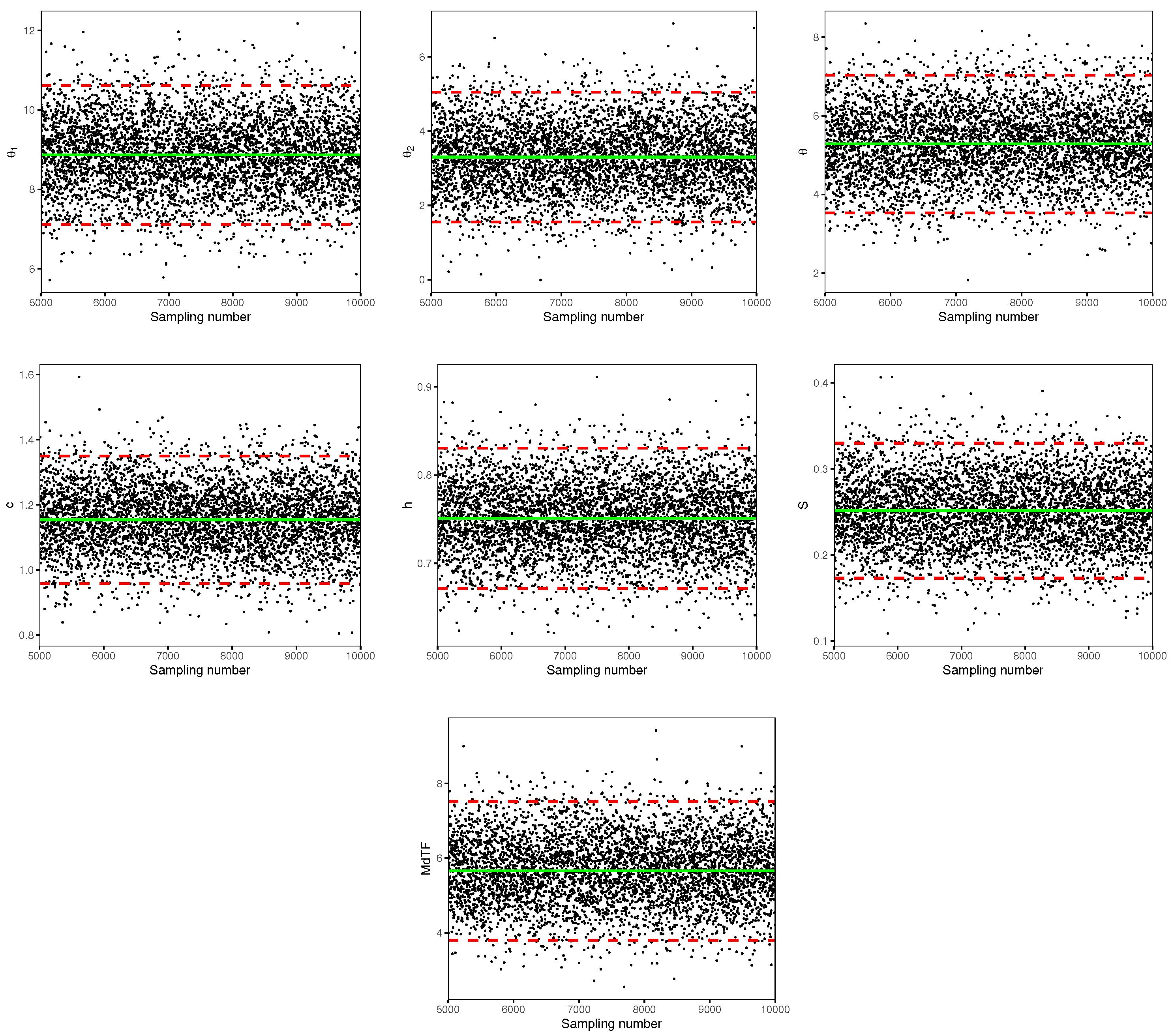

Table 8, it can also be observed that the hierarchical Bayesian method outperforms the traditional likelihood approach for both point and interval estimates in general. For completeness, the trace plots and the curve of the profile log-likelihood function of

c are also shown in

Figure 6 and

Figure 7 for illustration.