Improved Bounds for Integral Jensen’s Inequality Through Fifth-Order Differentiable Convex Functions and Applications

Abstract

1. Introduction

2. Main Results

3. Importance of Main Results

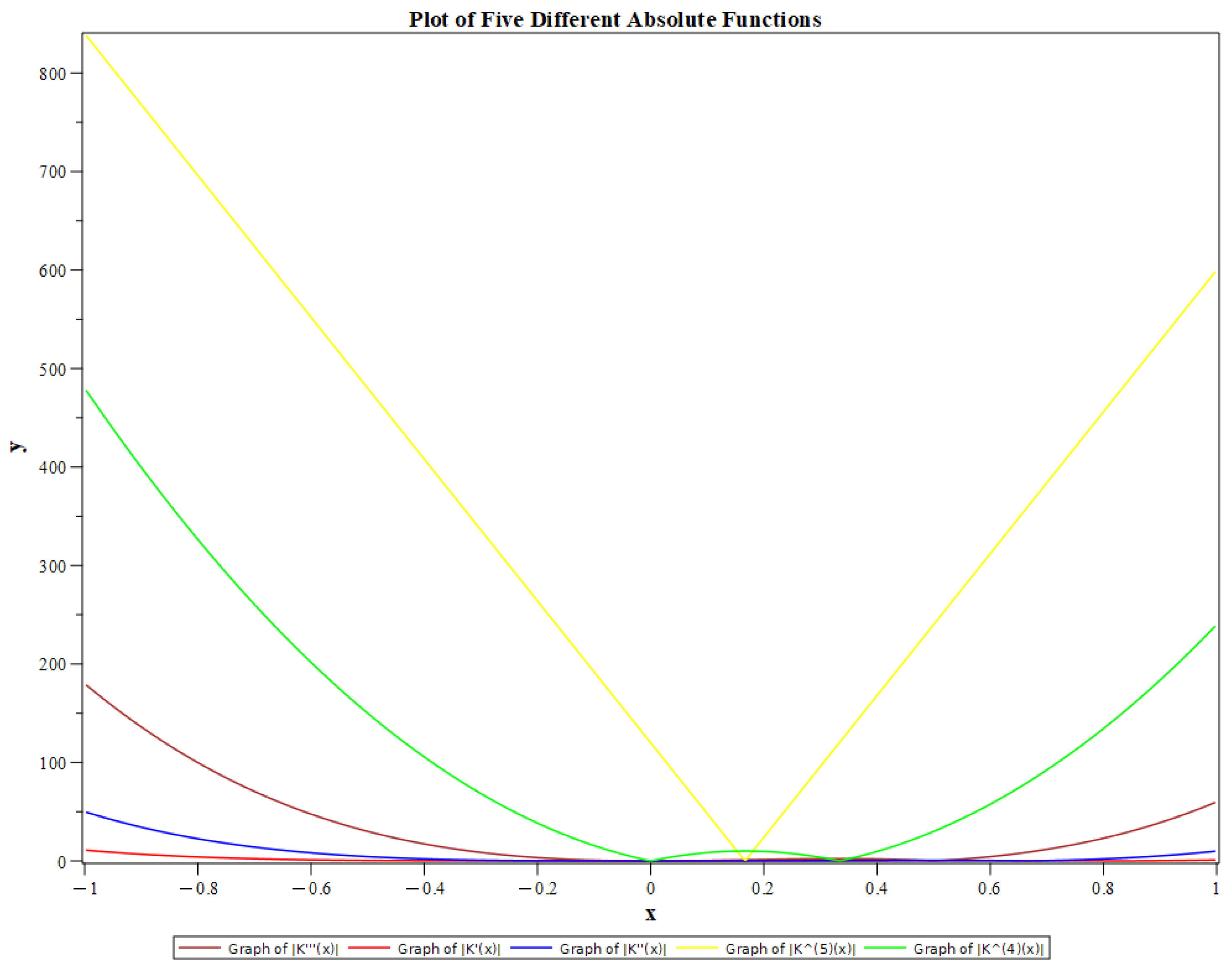

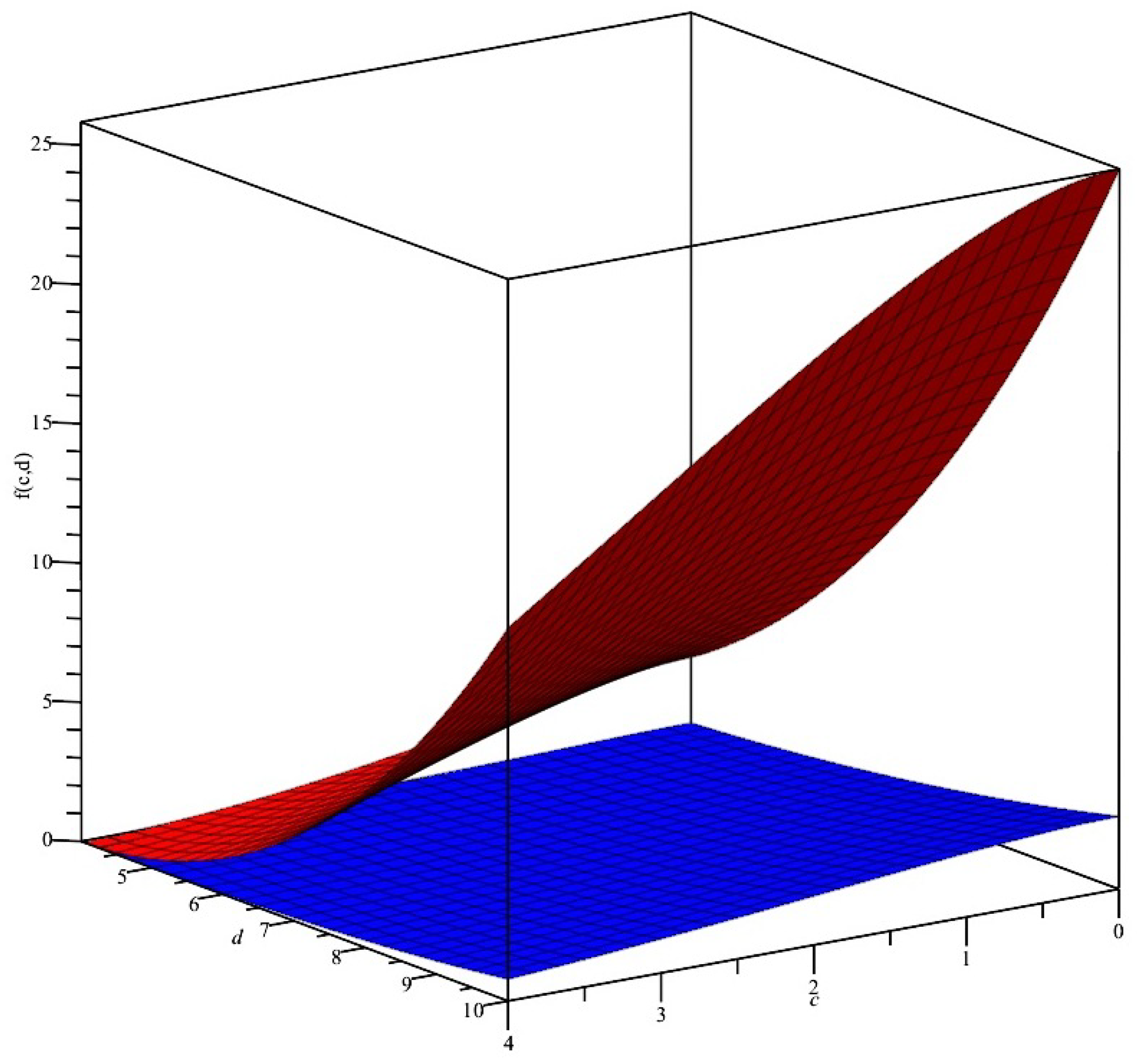

3.1. Functions That Fit the Criteria

3.2. Numerical Estimates for the Jensen Difference
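As a generic numerical sketch (not the refined fifth-order bounds derived in this paper), the integral Jensen difference J = (1/P) ∫_a^b p(x) f(g(x)) dx − f((1/P) ∫_a^b p(x) g(x) dx), with P = ∫_a^b p(x) dx, can be estimated by quadrature. The helper names `trapezoid` and `jensen_difference` are hypothetical, and the weight p is assumed nonnegative with P > 0.

```python
import math


def trapezoid(values, h):
    """Composite trapezoidal rule for samples taken with uniform step h."""
    return h * (sum(values) - 0.5 * (values[0] + values[-1]))


def jensen_difference(f, g, p, a, b, n=10_000):
    """Estimate the integral Jensen difference
        J = (1/P) * int_a^b p*f(g) dx - f((1/P) * int_a^b p*g dx),  P = int_a^b p dx,
    with the trapezoidal rule on n subintervals (hypothetical helper).
    Assumes p >= 0 and P > 0; J >= 0 whenever f is convex on the range of g."""
    h = (b - a) / n
    xs = [a + i * h for i in range(n + 1)]
    total_weight = trapezoid([p(x) for x in xs], h)
    mean_g = trapezoid([p(x) * g(x) for x in xs], h) / total_weight
    mean_f_of_g = trapezoid([p(x) * f(g(x)) for x in xs], h) / total_weight
    return mean_f_of_g - f(mean_g)


if __name__ == "__main__":
    # f(t) = exp(t), g(x) = x, p(x) = 1 on [0, 1]: exact J = (e - 1) - exp(1/2).
    estimate = jensen_difference(math.exp, lambda x: x, lambda x: 1.0, 0.0, 1.0)
    print(estimate, (math.e - 1) - math.exp(0.5))
```

Because the trapezoidal weights are positive, the returned estimate is itself a discrete weighted Jensen difference, so it stays nonnegative for convex f regardless of the step size; refining n only improves its agreement with the exact integral value.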

4. Applications to Hölder and Hermite–Hadamard Inequalities
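The classical Hermite–Hadamard inequality for a convex f on [a, b] reads f((a + b)/2) ≤ (1/(b − a)) ∫_a^b f(x) dx ≤ (f(a) + f(b))/2. A minimal sketch, assuming only that f is convex and using a hypothetical helper `hermite_hadamard_gaps`, computes the two gaps numerically; it illustrates the classical inequality, not the refinements obtained in this section.

```python
import math


def hermite_hadamard_gaps(f, a, b, n=10_000):
    """Return (left_gap, right_gap) for the Hermite-Hadamard inequality
        f((a + b) / 2) <= (1 / (b - a)) * int_a^b f(x) dx <= (f(a) + f(b)) / 2,
    approximating the integral mean with the composite midpoint rule on n cells.
    Both gaps are nonnegative when f is convex on [a, b]."""
    h = (b - a) / n
    integral_mean = sum(f(a + (i + 0.5) * h) for i in range(n)) / n
    left_gap = integral_mean - f((a + b) / 2)
    right_gap = (f(a) + f(b)) / 2 - integral_mean
    return left_gap, right_gap


if __name__ == "__main__":
    # f(x) = x^2 on [0, 1]: exact gaps are 1/3 - 1/4 = 1/12 and 1/2 - 1/3 = 1/6.
    print(hermite_hadamard_gaps(lambda x: x * x, 0.0, 1.0))
```

The midpoint rule is a deliberate choice here: its nodes average to (a + b)/2, so the computed left gap is a discrete Jensen difference and cannot be negative, and for convex f the rule underestimates the integral, so the computed right gap can only exceed the exact one.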

5. Applications to Power Means and Quasi-Arithmetic Means

- (i) If or or such that then

- (ii) If or or such that then (33) holds.

- (i) If with then

- (ii) If such that then (34) holds.
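For a positive g and a nonnegative weight p on [a, b], the weighted quasi-arithmetic mean is M_φ(g; p) = φ⁻¹((∫_a^b p φ(g) dx)/(∫_a^b p dx)) for a strictly monotone φ; the power mean M_r corresponds to φ(t) = t^r for r ≠ 0 and to φ(t) = log t (the geometric mean) for r = 0. For 0 < r < s, Jensen's inequality applied to the convex function t ↦ t^(s/r) gives M_r ≤ M_s. The sketch below, with hypothetical helpers `quasi_arithmetic_mean` and `power_mean` and midpoint-rule quadrature, lets one check this ordering numerically; it is a generic illustration of the classical power mean inequality, not a reproduction of the estimates stated above.

```python
import math


def _midpoint_nodes(a, b, n):
    """Midpoints of n equal cells of [a, b]."""
    h = (b - a) / n
    return [a + (i + 0.5) * h for i in range(n)]


def quasi_arithmetic_mean(phi, phi_inv, g, p, a, b, n=10_000):
    """M_phi(g; p) = phi_inv(int p*phi(g) dx / int p dx) for strictly monotone phi.
    Integrals use the composite midpoint rule; the common step h cancels."""
    xs = _midpoint_nodes(a, b, n)
    weights = [p(x) for x in xs]
    avg = sum(w * phi(g(x)) for w, x in zip(weights, xs)) / sum(weights)
    return phi_inv(avg)


def power_mean(g, p, a, b, r, n=10_000):
    """Weighted power mean M_r(g; p) for g > 0: phi(t) = t**r if r != 0, log(t) if r == 0."""
    if r == 0:
        return quasi_arithmetic_mean(math.log, math.exp, g, p, a, b, n)
    return quasi_arithmetic_mean(lambda t: t ** r, lambda s: s ** (1 / r), g, p, a, b, n)


if __name__ == "__main__":
    # g(x) = x, p(x) = 1 on [1, 2]; the printed means should be non-decreasing in r.
    for r in (-1, 0, 1, 2):
        print(r, power_mean(lambda x: x, lambda x: 1.0, 1.0, 2.0, r))
```

Since the midpoint weights are positive, the computed values are discrete weighted power means, so the ordering in r also holds for the approximations themselves (up to rounding).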

6. Application in Information Theory
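A standard route from Jensen's inequality to information theory is the Csiszár f-divergence D_f(p, q) = Σ_i q_i f(p_i/q_i) of probability vectors p and q with every q_i > 0: for convex f, Jensen's inequality with weights q_i gives D_f(p, q) ≥ f(Σ_i p_i) = f(1). The discrete sketch below, with hypothetical helper names, illustrates this bound and recovers the Kullback–Leibler divergence for f(t) = t log t; it is not necessarily the divergence or entropy treated in this section.

```python
import math


def csiszar_divergence(f, p, q):
    """Discrete Csiszar f-divergence D_f(p, q) = sum_i q_i * f(p_i / q_i).
    Assumes p and q are probability vectors of equal length with every q_i > 0.
    For convex f, Jensen's inequality gives D_f(p, q) >= f(1)."""
    if len(p) != len(q):
        raise ValueError("p and q must have the same length")
    if any(qi <= 0 for qi in q):
        raise ValueError("every q_i must be positive")
    return sum(qi * f(pi / qi) for pi, qi in zip(p, q))


def kl_generator(t):
    """f(t) = t * log(t) with the convention 0 * log(0) = 0; yields the KL divergence."""
    return t * math.log(t) if t > 0 else 0.0


if __name__ == "__main__":
    p, q = [0.9, 0.1], [0.5, 0.5]
    # Kullback-Leibler divergence KL(p || q) in nats.
    print(csiszar_divergence(kl_generator, p, q))
```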

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest



Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nisar, S.; Zafar, F.; Alamri, H. Improved Bounds for Integral Jensen’s Inequality Through Fifth-Order Differentiable Convex Functions and Applications. Axioms 2025, 14, 602. https://doi.org/10.3390/axioms14080602

Nisar S, Zafar F, Alamri H. Improved Bounds for Integral Jensen’s Inequality Through Fifth-Order Differentiable Convex Functions and Applications. Axioms. 2025; 14(8):602. https://doi.org/10.3390/axioms14080602

Chicago/Turabian Style: Nisar, Sidra, Fiza Zafar, and Hind Alamri. 2025. "Improved Bounds for Integral Jensen’s Inequality Through Fifth-Order Differentiable Convex Functions and Applications" Axioms 14, no. 8: 602. https://doi.org/10.3390/axioms14080602

APA Style: Nisar, S., Zafar, F., & Alamri, H. (2025). Improved Bounds for Integral Jensen’s Inequality Through Fifth-Order Differentiable Convex Functions and Applications. Axioms, 14(8), 602. https://doi.org/10.3390/axioms14080602