Abstract

Multiple cluster validity indices (CVIs) have been introduced for diverse applications. In practice, clusters exhibit varying shapes, sizes, and densities, and may have closely spaced centers, none of which is typically known beforehand. It is therefore desirable to develop a versatile CVI that performs well in general settings rather than one tailored to specific cases. This paper introduces a novel CVI inspired by density-based distances, building on the observation that cluster centers have higher densities than their neighbors and lie relatively far from points of higher density. The proposed CVI measures cluster compactness with a density-adjusted distance: instead of the Euclidean distance to the cluster center, it uses the minimum distance to a higher-density point, which accounts for cluster shape and density. Experimental results show that the proposed index outperforms conventional indices by 32 percentage points on synthetic datasets and maintains an accuracy lead of at least 23 percentage points on real-world datasets characterized by noise and heterogeneity. This consistency supports its generalizability across data types.

MSC:

62H30; 90C59

1. Introduction

Clustering is a fundamental task in unsupervised learning, aiming to partition data points into homogeneous groups for exploratory analysis across diverse domains, including astronomy, bioinformatics, bibliometrics, and pattern recognition [1]. Despite the proliferation of clustering algorithms—categorized into center-based, density-based, hierarchical, and rational methods [2]—a persistent challenge lies in determining the optimal number of clusters (k) a priori. This challenge arises from the absence of a universally applicable definition for “natural clusters” in complex datasets, which often exhibit irregular shapes, varying densities, and overlapping structures [3].

Cluster validity indices (CVIs) serve as critical tools to address this challenge by quantifying the quality of clustering partitions. Traditional CVIs, such as the Dunn index [4], partition coefficient (PC) [5], Xie–Beni (XB) index [6], and their variants [7,8], typically rely on intra-cluster compactness and inter-cluster separation metrics derived from Euclidean distances. However, these indices often falter under realistic scenarios where clusters exhibit non-convex geometries, density variations, or closely spaced centers. For instance, indices like XB and PC assume spherical cluster shapes and uniform densities, leading to inaccurate k estimation when these assumptions are violated [9].

Recent advancements in CVIs have attempted to address these limitations. The WL index [9] incorporates median center distances to enhance separation measurement, while the I index [10] employs the Jeffrey divergence to account for cluster size and density. The CVM index [11] introduces core-based density estimation for handling complex structures. Nevertheless, challenges remain in robustly identifying clusters with arbitrary shapes, automatically excluding outliers, and maintaining stability across varying dimensionalities.

In this work, we propose the Relative Higher Density (RHD) index, inspired by the concept that cluster centers exhibit higher density than their neighbors while being distant from points of even higher density [1]. The RHD index measures compactness using the minimum distance to higher-density points, enabling it to: (1) identify clusters of arbitrary shapes and dimensionalities; (2) automatically detect and exclude outliers without preprocessing; (3) maintain stability in the presence of closely spaced centers.

We benchmark the RHD index against state-of-the-art CVIs (WL, I, and CVM) to demonstrate its superiority in handling complex clustering patterns, including non-convex geometries, partial overlap, and density variations.

2. Related Work

2.1. Clustering Algorithms and the Challenge of Determining k

Clustering algorithms play a pivotal role in data analysis, with applications spanning scientific and engineering disciplines [1]. Depending on their underlying models, they are broadly classified into center-based (e.g., k-means), density-based (e.g., DBSCAN), hierarchical (e.g., agglomerative clustering), and rational clustering methods [2]. Comprehensive reviews of these algorithms can be found in [12,13].

A critical challenge in clustering is specifying the optimal number of clusters (k). This task is non-trivial due to the lack of a universally accepted definition of clusters, which may vary in shape, density, and scale across datasets [3]. To address this, researchers have developed various methods, including statistical approaches (e.g., Bayesian information criterion [14]), resampling techniques (e.g., gap statistic [15]), and nonparametric methods [3,16]. Among these, CVIs are widely used to evaluate clustering quality by balancing compactness and separation criteria.

2.2. Cluster Validity Indices (CVIs)

CVIs quantify the goodness of clustering partitions by combining measures of intra-cluster cohesion and inter-cluster dispersion. Early CVIs, such as the Dunn index [4], introduced ratio-based metrics to balance compactness and separation. Bezdek’s PC and PE indices [5,17] focused on membership values to assess compactness, while the XB index [6] incorporated inter-center distances for separation. Subsequent variants, like the R index [7], refined these measures by including overlap metrics.

However, traditional CVIs often assume convex, equally sized clusters with uniform densities, limiting their effectiveness in real-world scenarios. For example, the XB index struggles with non-spherical clusters, and the Dunn index is sensitive to noise and outliers. To address these limitations, recent CVIs have introduced more sophisticated measures:

- WL index [9]: Considers both minimum and median center distances to improve separation assessment.

- I index [10]: Uses the Jeffrey divergence to account for cluster size and density variations.

- CVM index [11]: Focuses on core-based density estimation, making it robust to complex structures.

Despite these advancements, challenges persist in handling datasets with arbitrary cluster shapes, closely spaced centers, and outliers. The RHD index proposed in this work addresses these gaps by leveraging density-based distances to capture intrinsic cluster structures, as detailed in the following sections.

The remainder of this paper is organized as follows. Section 3 briefly reviews several up-to-date CVIs commonly used in practice. Section 4 develops the proposed RHD index. Section 5 presents experimental studies based on synthetic and real datasets. Concluding remarks are given in the final section.

3. Traditional CVIs

Numerous CVIs have been proposed and extensively studied. For brevity, this section reviews only the up-to-date WL, I, and CVM indices, which represent significant improvements over earlier CVIs, for comparison with the proposed one. We use the following notation: $X = \{x_1, \ldots, x_n\}$ represents the dataset, where $x_i \in \mathbb{R}^d$ for $i = 1, \ldots, n$, $n$ is the total number of observations, and $d$ is the dimension of the observations; $C_j$ is the $j$-th cluster ($j = 1, \ldots, K$), $K$ is the number of clusters, and $v_j$ is the center of $C_j$; $\|\cdot\|$ denotes the Euclidean distance; $u_{ij}$ denotes the membership degree of observation $x_i$ to cluster $C_j$; and $\Sigma_j$ denotes the covariance matrix of the observations in cluster $C_j$. To maintain consistency with existing research, we keep the fuzziness parameter $m$ fixed at its conventional value.

3.1. The WL Index

Wu et al. [9] noted that traditional CVIs often assume highly compact, weakly interlinked clusters—an unrealistic premise in practice. To overcome this, they introduced the WL index, which defines separation via two components, representing a departure from traditional definitions.

In Equation (1), the first component is the minimum pairwise distance between cluster centers, and the second component is the median of all pairwise distances between cluster centers.

To define cluster compactness, the WL index incorporates both the membership value and the distance between an observation and its cluster center, defining it as:

where $u_{ij}$ is the membership value, with $\sum_{j=1}^{K} u_{ij} = 1$, which measures the probability of observation $x_i$ belonging to cluster $C_j$. The total compactness of all clusters can be expressed as:

The WL index is defined as the ratio of the compactness measure to the new separation measure:

A good partition is characterized by high separation and a small compactness measure. Thus, the optimal partition corresponds to the value of $K$ that minimizes the WL index.
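As an illustration of the ratio just described, the following is a minimal Python sketch of the WL index. It assumes the form commonly cited for [9] (squared memberships and squared point-to-center distances in the numerator, and the minimum plus the median of the squared center distances in the denominator); the exact exponents are an assumption here, and the function name `wl_index` is hypothetical.

```python
import numpy as np

def wl_index(X, U, V):
    """Hypothetical WL index sketch (smaller is better).

    X: (n, d) observations; U: (n, K) membership matrix u_ij;
    V: (K, d) cluster centers v_j. Squared distances are an assumption.
    """
    X, U, V = (np.asarray(a, dtype=float) for a in (X, U, V))
    # Squared distances ||x_i - v_j||^2, shape (n, K)
    sq = ((X[:, None, :] - V[None, :, :]) ** 2).sum(axis=2)
    # Per-cluster compactness: sum_i u_ij^2 ||x_i - v_j||^2 / sum_i u_ij
    comp = ((U ** 2) * sq).sum(axis=0) / U.sum(axis=0)
    # Squared distances between all distinct pairs of centers
    K = V.shape[0]
    iu = np.triu_indices(K, k=1)
    center_sq = ((V[:, None, :] - V[None, :, :]) ** 2).sum(axis=2)[iu]
    # Separation: minimum plus median of the pairwise center distances
    sep = center_sq.min() + np.median(center_sq)
    return comp.sum() / sep
```

With hard memberships (each row of `U` one-hot), the numerator reduces to the per-cluster mean squared distance to the center, which makes the behavior easy to check by hand.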

3.2. The I Index

The I index, proposed by Said et al. [10], addresses the problem of closely spaced cluster centers. Traditional Euclidean distance-based separation measures may fail to reflect the true separation, especially for clusters of varying sizes and densities. Said et al. therefore use the Jeffrey divergence as an alternative inter-cluster separation measure.

The Jeffrey divergence between clusters $C_j$ and $C_k$ is defined as:

where $d$ is the dimension of the data, and $v_j$ and $\Sigma_j$ are the mean vector (i.e., cluster center) and covariance matrix of all the data observations in cluster $C_j$, respectively.
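To see why the dimension $d$ enters the definition, the following worked derivation assumes, as is standard, that the Jeffrey divergence is the symmetrized Kullback–Leibler divergence between Gaussian models $\mathcal{N}_j = \mathcal{N}(v_j, \Sigma_j)$ and $\mathcal{N}_k = \mathcal{N}(v_k, \Sigma_k)$ of the two clusters. This is a sketch under that assumption; the scaling used in [10] may differ.

$$\mathrm{KL}(\mathcal{N}_j \,\|\, \mathcal{N}_k) = \frac{1}{2}\left[\operatorname{tr}\!\left(\Sigma_k^{-1}\Sigma_j\right) + (v_j - v_k)^\top \Sigma_k^{-1} (v_j - v_k) - d + \ln\frac{\det \Sigma_k}{\det \Sigma_j}\right]$$

Adding the two directions, the log-determinant terms cancel:

$$J(C_j, C_k) = \frac{1}{2}\operatorname{tr}\!\left(\Sigma_k^{-1}\Sigma_j + \Sigma_j^{-1}\Sigma_k\right) + \frac{1}{2}(v_j - v_k)^\top\left(\Sigma_j^{-1} + \Sigma_k^{-1}\right)(v_j - v_k) - d.$$

When the two Gaussians coincide, each trace equals $d$ and the quadratic term vanishes, so $J = 0$; the divergence grows both with the separation of the centers and with differences in cluster shape and spread.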

The new separation measure is defined as:

where

The compactness measure is the sum, over all clusters, of the maximum distance between an observation and its cluster center:

The I index is defined as the ratio of the compactness measure to the separation measure, namely,

A small value of index I is an indicator of a good partition.
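The two building blocks of the I index can be sketched in Python as follows. This is a minimal illustration, assuming Gaussian fits per cluster with the sample covariance and the symmetric-KL form of the Jeffrey divergence; the function names are hypothetical, and how [10] aggregates the pairwise divergences into the separation measure is not reproduced here.

```python
import numpy as np

def jeffrey_divergence(X_j, X_k):
    """Symmetric KL divergence between Gaussian fits of two clusters.

    Assumes each cluster has more observations than dimensions so that
    its sample covariance matrix is invertible.
    """
    X_j, X_k = np.asarray(X_j, float), np.asarray(X_k, float)
    d = X_j.shape[1]
    v_j, v_k = X_j.mean(axis=0), X_k.mean(axis=0)
    S_j = np.atleast_2d(np.cov(X_j, rowvar=False))
    S_k = np.atleast_2d(np.cov(X_k, rowvar=False))
    S_j_inv, S_k_inv = np.linalg.inv(S_j), np.linalg.inv(S_k)
    diff = v_j - v_k
    trace_term = 0.5 * np.trace(S_k_inv @ S_j + S_j_inv @ S_k)
    mean_term = 0.5 * diff @ (S_j_inv + S_k_inv) @ diff
    return trace_term + mean_term - d

def i_compactness(X, labels):
    """Sum over clusters of the maximum distance from a point to its center."""
    X, labels = np.asarray(X, float), np.asarray(labels)
    total = 0.0
    for c in np.unique(labels).tolist():
        pts = X[labels == c]
        total += np.linalg.norm(pts - pts.mean(axis=0), axis=1).max()
    return total
```

For two identically shaped clusters, only the center term survives, so the divergence behaves like a covariance-weighted squared center distance.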

3.3. The CVM Index

The CVM index, proposed by Morsier et al. [11], is tailored for complex datasets with overlapping and differently shaped clusters and outliers. It focuses on the cluster core, a homogeneous group of similar samples representing the cluster’s natural variability. The CVM index includes three key terms: core size, core separability, and core homogeneity.

The core size is defined by the maximal distance observed among the samples in the cluster core:

The core separability is the minimum distance from a core sample in one cluster $C_j$ to a core sample in another cluster $C_k$, defined as:

This distance quantifies the smallest separation between cluster $C_j$ and any other cluster. The core homogeneity is the ratio of the maximum to the average smallest inner distances within the cluster core, defined as:

where $n_j$ is the number of observations in cluster $C_j$. Hence, the larger the ratio, the more pronounced the heterogeneity of cluster $C_j$. A ratio close to 1 indicates a highly homogeneous core.
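The three core terms described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions: the core samples are supplied as a boolean mask (how [11] selects cores is not reproduced), the homogeneity average is taken over the core samples, each cluster has at least two core samples, and the function names are hypothetical. How the three terms are combined into the final CVM value is also not reproduced.

```python
import numpy as np

def _pairwise(A, B):
    """Euclidean distances between the rows of A and the rows of B."""
    return np.sqrt(((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=2))

def cvm_core_terms(X, labels, core_mask):
    """Per-cluster core size, core separability, and core homogeneity."""
    X = np.asarray(X, float)
    labels = np.asarray(labels)
    core_mask = np.asarray(core_mask, bool)
    clusters = np.unique(labels).tolist()
    cores = {c: X[(labels == c) & core_mask] for c in clusters}
    size, sep, homog = {}, {}, {}
    for c in clusters:
        P = cores[c]
        D = _pairwise(P, P)
        size[c] = D.max()  # maximal distance among core samples
        others = np.vstack([cores[o] for o in clusters if o != c])
        sep[c] = _pairwise(P, others).min()  # closest core sample elsewhere
        np.fill_diagonal(D, np.inf)  # ignore self-distances
        nn = D.min(axis=1)  # smallest inner distance of each core sample
        homog[c] = nn.max() / nn.mean()  # close to 1 for a homogeneous core
    return size, sep, homog
```

Because non-core samples are excluded by the mask, a distant outlier assigned to a cluster does not inflate its core size.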

The CVM index is based on:

which favors large cluster cores, rescaled by their core homogeneity, together with large core separability. A large value of the CVM index indicates a good partition. The CVM index is small when the cluster cores are too small, too inhomogeneous (for example, in the presence of outliers), or too close to each other. Therefore, the CVM index trades off cluster core size against outlier rejection.

4. The Proposed Index

4.1. Design Philosophy and Theoretical Motivation

The classical compactness measure relies on distances from observations to cluster centers, which significantly degrades CVI effectiveness when the data deviate from a Gaussian distribution. For example, Figure 1 shows two non-Gaussian clusters (black and red). For sample point 11, compactness relative to Cluster 1 is traditionally quantified by its Euclidean distance to the center of Cluster 1. Because this distance is large, it wrongly suggests poor compactness, even though the point is evidently close to other members of Cluster 1. To address this limitation, we extend the method of Rodriguez and Laio [1] and propose a compactness metric based on distances to higher-density points within the same cluster: the compactness of point 11 is redefined as its distance to its nearest higher-density neighbor.

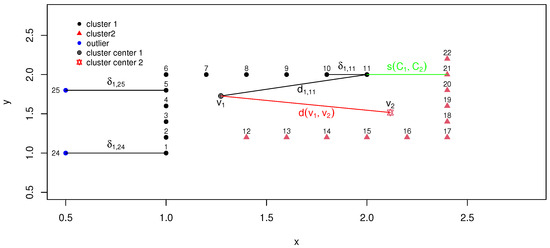

Figure 1.

Traditional and new calculation of compactness within a cluster and separation between clusters.

For the two outliers in Figure 1, the neighborhood densities are zero, so they have no meaningful nearest higher-density neighbor and their compactness cannot be defined in the same way. Instead, we associate each outlier with its nearest density-nonzero observation and use that distance as its compactness. The advantage of this metric is that it prevents outliers, which lie far from every cluster, from producing spurious local optima in the cluster assignment. As shown in Figure 1, the two outliers are closer to each other than to Cluster 1. With the classical neighborhood distance, they would likely be identified as a new cluster, because they are close to each other and far from all other clusters; this contradicts our expectation that outliers should not affect the estimated number of clusters. Admittedly, this approach increases the intra-cluster compactness value, that is, it makes clusters appear less compact. However, this issue is common to existing clustering algorithms unless outliers are discarded entirely, and we argue that a moderate increase in the compactness value is a more acceptable trade-off than distorting the true cluster structure.

Note that the neighborhood distance δ, computed from the neighborhood density as described above, is defined in Euclidean space. An interesting question is whether this definition remains robust when the Manhattan or Mahalanobis distance is used instead. To examine this, we designed a simple comparative experiment in R with two groups of data, Gaussian and non-convex distributions, each in two scenarios: with and without outliers. The neighborhood distance δ was computed using the Euclidean, Manhattan, and Mahalanobis distances, and the resulting boxplots are shown in Figure 2.

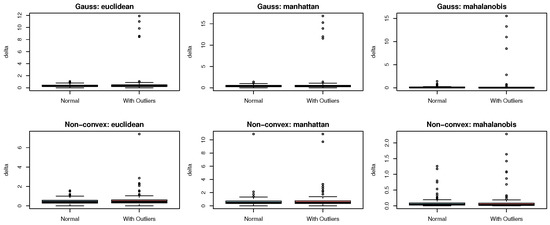

Figure 2.

Boxplots of the neighborhood distance based on the Euclidean, Mahalanobis, and Manhattan distances.

Figure 2 uses boxplots to compare the robustness of δ computed with the three distance metrics under both Gaussian and non-convex distributions. The Euclidean distance, which squares coordinate deviations and thereby amplifies large ones, is sensitive to outliers and shows weak robustness; in non-convex distributions, it is more visibly disturbed by both the distribution structure and the outliers. The Manhattan distance avoids this squaring, so it is more robust than the Euclidean distance, although it does not eliminate the interference completely. The Mahalanobis distance standardizes distances using the covariance and adapts to the distribution structure; regardless of the distribution type, it is the most robust to outliers. In summary, the Mahalanobis distance is the most robust, followed by the Manhattan distance, with the Euclidean distance the least robust. For stable calculation, the Mahalanobis distance is preferred, and for simpler calculation, the Manhattan distance can replace the Euclidean distance. However, to be consistent with mainstream research, the subsequent definitions and experiments use Euclidean space.
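The three pairwise distance matrices compared above can be computed as in the following Python sketch; δ is then obtained from whichever matrix is chosen exactly as in the Euclidean case. The function name `pairwise_distances` is hypothetical, and using the inverse covariance of the whole dataset for the Mahalanobis distance is an assumption, since the text does not state which covariance was used.

```python
import numpy as np

def pairwise_distances(X, metric="euclidean"):
    """Pairwise distance matrix under one of three metrics (sketch)."""
    X = np.asarray(X, float)
    diff = X[:, None, :] - X[None, :, :]  # shape (n, n, d)
    if metric == "euclidean":
        return np.sqrt((diff ** 2).sum(axis=2))
    if metric == "manhattan":
        return np.abs(diff).sum(axis=2)
    if metric == "mahalanobis":
        # Assumption: global sample covariance of the whole dataset
        VI = np.linalg.inv(np.cov(X, rowvar=False))
        q = np.einsum("ijk,kl,ijl->ij", diff, VI, diff)
        return np.sqrt(np.maximum(q, 0.0))  # guard tiny negative round-off
    raise ValueError(f"unknown metric: {metric}")
```

A useful property of the Mahalanobis option is that rescaling one coordinate leaves the distance matrix unchanged, which is one reason it adapts to the distribution structure.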

Traditional inter-cluster separation metrics, such as the distance between cluster centers ($\|v_1 - v_2\|$ in Figure 1), suffer from critical limitations in non-convex or density-heterogeneous scenarios. As illustrated in Figure 1, center-based separation exaggerates the perceived dissimilarity between Cluster 1 and Cluster 2 because of their irregular geometries. To address this, we redefine the inter-cluster separation as the minimum distance between density-nonzero samples from distinct clusters. For example, in Figure 1, the separation between Cluster 1 and Cluster 2 is measured by the distance between their closest density-nonzero points. This avoids centroid bias and more accurately reflects the true proximity of clusters with complex boundaries.

4.2. RHD Index

In Equation (14), the cutoff distance $d_c$ is a critical threshold parameter. In the original work [1], the authors treated $d_c$ as a hyperparameter, suggesting that it be chosen so that the number of neighbors for most points falls within 1–2% of the total number of points. The calculation of $d_c$ can be summarized in the following three steps:

Step 1: Compute Pairwise Distances. Calculate the Euclidean distances (default) or another distance metric between all pairs of sample points: $d_{ij} = \|x_i - x_j\|$ for $1 \le i < j \le n$.

Step 2: Sort Distances. Arrange all computed distances in ascending order, denoted as:

$$d_{(1)} \le d_{(2)} \le \cdots \le d_{(M)},$$

with $M = n(n-1)/2$.

Step 3: Determine Threshold. Select the cutoff distance $d_c$ such that approximately a fraction $p$ (typically 1–2%; here we set $p = 2\%$) of all pairwise distances are smaller than $d_c$. Mathematically, this can be expressed as:

$$d_c = d_{(\lfloor pM \rfloor)},$$

where $\lfloor x \rfloor$ denotes rounding $x$ down.
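The three steps can be sketched in Python as follows. This is a minimal illustration assuming Euclidean distances and 1-based indexing of the sorted list, with the index clamped to at least 1 so that very small datasets still yield a cutoff; the function name `cutoff_distance` is hypothetical.

```python
import numpy as np

def cutoff_distance(X, p=0.02):
    """Cutoff distance d_c following Steps 1-3 (sketch)."""
    X = np.asarray(X, float)
    n = X.shape[0]
    # Step 1: all pairwise Euclidean distances d_ij for i < j
    D = np.sqrt(((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2))
    iu = np.triu_indices(n, k=1)
    # Step 2: sort the M = n(n-1)/2 distances in ascending order
    d_sorted = np.sort(D[iu])
    M = d_sorted.size
    # Step 3: take the floor(p * M)-th smallest distance (1-based)
    idx = max(int(np.floor(p * M)), 1)
    return d_sorted[idx - 1]
```

For example, for the 51 equally spaced points 0, 1, ..., 50 there are $M = 1275$ distances, $\lfloor 0.02 \times 1275 \rfloor = 25$, and the 25th smallest distance is 1, so $d_c = 1$. Since only one order statistic is needed, `np.partition` could replace the full sort.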

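Basically, $\rho_i$ is equal to the number of points that are closer than $d_c$ to point $x_i$:

$$\rho_i = \sum_{j \neq i} \chi(d_{ij} - d_c), \qquad \chi(t) = \begin{cases} 1, & t < 0, \\ 0, & t \ge 0. \end{cases}$$

The minimum distance between the point $x_i$ and any other point with higher density is measured by:

$$\delta_i = \min_{j:\, \rho_j > \rho_i} d_{ij}.$$

For the observation with the highest density, we take $\delta_i = \max_j d_{ij}$; this observation will be recognized as one of the cluster centers.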
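Given a precomputed distance matrix and the cutoff $d_c$, the density $\rho_i$ and the distance $\delta_i$ can be sketched in Python as follows. The function name `density_and_delta` is hypothetical; with this plain definition, every point tied at the maximum density receives the maximum-distance convention.

```python
import numpy as np

def density_and_delta(D, d_c):
    """Cutoff-kernel density rho_i and distance delta_i (sketch)."""
    D = np.asarray(D, float)
    n = D.shape[0]
    close = D < d_c
    np.fill_diagonal(close, False)  # a point is not its own neighbor
    rho = close.sum(axis=1)  # number of points closer than d_c
    delta = np.empty(n)
    for i in range(n):
        higher = rho > rho[i]
        if higher.any():
            delta[i] = D[i, higher].min()  # nearest higher-density point
        else:
            delta[i] = D[i].max()  # highest-density point(s): max distance
    return rho, delta
```

For the one-dimensional points 0, 1, 2, 10 with $d_c = 1.5$, this gives $\rho = (1, 2, 1, 0)$ and $\delta = (1, 9, 1, 8)$: the middle point is the density peak, and the isolated point 10 has zero density but a large δ.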

The new compactness measure is the sum, over all clusters, of the average minimum distance from each observation to any other point with higher density in the same cluster:

$$\mathrm{Comp}(K) = \sum_{j=1}^{K} \frac{1}{n_j} \sum_{x_i \in C_j} \delta_i^{(j)},$$

where $n_j$ is the number of observations in cluster $C_j$, and $\delta_i^{(j)}$ is the local distance of observation $x_i$ within cluster $C_j$.

In Equation (20), we redefine cluster compactness to no longer rely on the average distance from all data points to the cluster centroid. Instead, it is defined as the average distance from each data point to its nearest higher-density neighbor within the cluster, extending Rodriguez and Laio’s density-peak concept [1]. This definition is characterized by reduced sensitivity to centroid drift and enhanced robustness against outlier-induced perturbations in global compactness evaluation.
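The compactness term can be sketched in Python as follows, taking a distance matrix, the densities $\rho_i$, and the cluster labels as inputs. Several details are assumptions not fixed by the text: density ties are broken by observation index so that each cluster has a single peak; a density-zero observation uses its distance to the nearest density-nonzero observation in the same cluster; and the cluster peak (or any point with no eligible neighbor) uses its maximum distance within the cluster, mirroring the convention for the highest-density point. The function name `rhd_compactness` is hypothetical.

```python
import numpy as np

def rhd_compactness(D, rho, labels):
    """Sum over clusters of the mean local distance delta_i^(j) (sketch)."""
    D = np.asarray(D, float)
    rho = np.asarray(rho)
    labels = np.asarray(labels)
    n = len(rho)
    # Rank by decreasing density, ties broken by index (assumption)
    order = np.lexsort((np.arange(n), -rho))
    rank = np.empty(n, dtype=int)
    rank[order] = np.arange(n)
    comp = 0.0
    for c in np.unique(labels).tolist():
        idx = np.flatnonzero(labels == c)
        local = np.empty(len(idx))
        for a, i in enumerate(idx):
            if rho[i] == 0:
                # Outlier: nearest density-nonzero point in the same cluster
                cand = idx[rho[idx] > 0]
            else:
                # Points ranked above x_i (higher density) in the same cluster
                cand = idx[rank[idx] < rank[i]]
            if cand.size:
                local[a] = D[i, cand].min()
            else:
                # Cluster peak or no eligible point: max distance in cluster
                local[a] = D[i, idx].max()
        comp += local.mean()
    return comp
```

In a small example with points 0, 1, 2 in one cluster and 10, 11, 12, 30 in another, the outlier at 30 contributes its distance to 12, so it raises the compactness value without creating a separate cluster, which matches the trade-off discussed for Figure 1.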

Instead of measuring separation via the distance between cluster centers, we use the minimum distance between two clusters to quantify their interconnection. The dissimilarity between clusters $C_j$ and $C_k$ is defined as:

$$d_{\min}(C_j, C_k) = \min \left\{ \|x_i - x_l\| : x_i \in C_j,\ x_l \in C_k,\ \rho_i > 0,\ \rho_l > 0 \right\},$$

where $\|x_i - x_l\|$ denotes the Euclidean distance between $x_i$ and $x_l$, and only density-nonzero points are considered. Observing that $d_{\min}(C_j, C_k) = d_{\min}(C_k, C_j)$, the total separation is expressed as:

The total separation based on $d_{\min}$ is expected to be less sensitive to centroid shifts because it does not depend on cluster centers. Using the compactness and separation defined above, the RHD index is formulated as:
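The pairwise cluster dissimilarity $d_{\min}(C_j, C_k)$ can be sketched in Python as follows. The sketch returns the symmetric matrix of pairwise dissimilarities; aggregating them into the total separation is not reproduced here. It assumes every cluster contains at least one density-nonzero point, and the function name `min_intercluster_distances` is hypothetical.

```python
import numpy as np

def min_intercluster_distances(D, rho, labels):
    """Symmetric matrix of d_min(C_j, C_k) over density-nonzero points."""
    D = np.asarray(D, float)
    rho = np.asarray(rho)
    labels = np.asarray(labels)
    clusters = np.unique(labels).tolist()
    K = len(clusters)
    S = np.zeros((K, K))
    for a in range(K):
        A = np.flatnonzero((labels == clusters[a]) & (rho > 0))
        for b in range(a + 1, K):
            B = np.flatnonzero((labels == clusters[b]) & (rho > 0))
            # Closest pair of density-nonzero points across the two clusters
            S[a, b] = S[b, a] = D[np.ix_(A, B)].min()
    return S
```

Each pair $(C_j, C_k)$ touches $n_j n_k$ entries of the distance matrix, which is the source of the quadratic cost of the separation term.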

A small value of RHD indicates a good partition.

4.3. Complexity of the RHD

The time complexity of the RHD index arises primarily from five components: (1) computing the Euclidean distance matrix, i.e., the pairwise distances between all data points, which costs $O(dn^2)$; (2) computing the local density of each data point, which costs $O(n^2)$; (3) determining the minimum neighborhood distance $\delta_i$, where each point is compared with up to $n - 1$ other points, giving $O(n^2)$ overall; (4) computing the compactness, which requires only a single pass over all data points, i.e., $O(n)$; and (5) evaluating the separation, which examines all $K(K-1)/2$ cluster pairs, where each pair $(C_j, C_k)$ requires $n_j n_k$ distance comparisons, so the total $\sum_{j<k} n_j n_k \le n^2/2$ gives $O(n^2)$. Obtaining the cutoff $d_c$ requires only the $\lfloor pM \rfloor$-th smallest of the $M$ pairwise distances, which a linear-time selection algorithm finds in $O(n^2)$, so this step does not raise the bound. Consequently, the total time complexity of the RHD index is:

$$O(dn^2) + O(n^2) + O(n^2) + O(n) + O(n^2) = O(dn^2).$$

To verify this analysis, we conducted simulation experiments in R 4.3.2, comparing the computation time of the proposed index with those of four other indices (CVM, I, WL, and XB) across several sample sizes $n$ at a fixed dimension $d$. Each experiment was repeated 10 times for each sample size, and the median elapsed time is reported in Figure 3. The experiments were run on a Dell personal computer with an Intel(R) Core(TM) i9-10920X CPU @ 3.50 GHz and 32 GB of installed RAM.

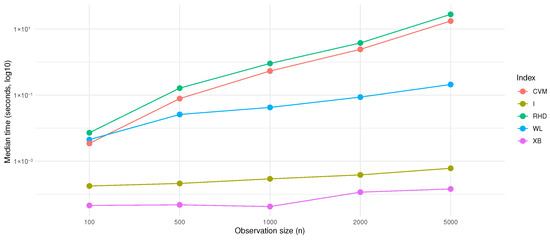

Figure 3.

Median running time of the CVM, I, RHD, WL, and XB indices versus sample size n.

Figure 3 compares the computation times of the five indices (CVM, I, RHD, WL, and XB) across sample sizes n. The computation time of the I index (yellow line) is very low and nearly constant over the tested range. Because the index still processes every observation, its cost cannot grow more slowly than linearly in n, so the flat curve most likely reflects small constant factors within the tested range. The XB index (purple line) is stable for small n and rises slowly for larger n, consistent with linear or low-order polynomial growth. The WL index (blue line) grows moderately and roughly linearly with n, consistent with a single linear pass over the samples. The CVM (red line) and RHD (green line) indices grow much faster, consistent with $O(n^2)$ complexity: both involve interactions between all pairs of samples (e.g., nested loops over pairwise distances), so the computational load grows quadratically with n. These trend-based observations are engineering inferences that help explain the efficiency differences among the indices; strict complexity bounds require analysis of each algorithm's source code or a mathematical derivation.

5. Experimental Studies

To evaluate the efficiency of the proposed CVI, we conducted experiments on synthetic and real datasets, using clustering algorithms to partition each dataset into $K$ clusters for a range of candidate values of $K$. We compared the CVI values across $K$ and took the optimum (minimum or maximum, depending on the index) as the best cluster count. The performance of the proposed RHD index in determining the true number of clusters was benchmarked against the WL, I, and CVM indices; lower values indicate a better partition for WL, I, and RHD, whereas higher values are preferable for CVM. All experiments were implemented in R (version 4.3.2).
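The selection rule described above amounts to an arg-min or arg-max over the candidate cluster numbers. A minimal Python sketch follows; the function name `best_k` is hypothetical and the CVI values in the usage note are made up for illustration.

```python
def best_k(cvi_values, larger_is_better=False):
    """Return the candidate K with the optimal CVI value.

    cvi_values: dict mapping each candidate K to its CVI value.
    Use larger_is_better=True for CVM and False for WL, I, and RHD.
    """
    pick = max if larger_is_better else min
    return pick(cvi_values, key=cvi_values.get)
```

For instance, with hypothetical values `{2: 0.8, 3: 0.3, 4: 0.5}`, a smaller-is-better index selects $K = 3$, whereas a larger-is-better index would select $K = 2$.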

To evaluate CVI efficiency, an appropriate clustering algorithm must be selected. Numerous methods exist, each with specialized advantages for particular data types. For instance, hierarchical clustering and fuzzy C-means (FCM) are highly effective for ellipsoidal distributions, while k-means variants scale well to large datasets because their time complexity per iteration is linear in the number of observations. More advanced approaches (e.g., spectral clustering, DBSCAN, and FSDP) handle non-convex datasets through kernel methods or density-reachability concepts. However, this study aims to systematically validate the universality of the proposed CVI across diverse scenarios, which requires an algorithm capable of handling ellipsoidal, non-convex, noisy or overlapping, and high-dimensional data structures.

To meet this requirement, the spectral clustering algorithm was selected as the baseline method. Spectral clustering has been validated as effective for both Gaussian-distributed and non-convex datasets [18], and demonstrates robust performance for small-scale high-dimensional data with sparse outliers [19], as well as overlapping datasets [20]. This algorithm enhances classical spectral clustering via Nyström approximation or random Fourier features, preserving its capacity for modeling arbitrarily shaped data while significantly boosting computational efficiency. Such comprehensive capability in handling multivariate complexities enables uniform evaluation of clustering validity indices across diverse data characteristics. Consequently, it provides a robust methodological foundation for constructing a universal evaluation framework.

5.1. Experiments on the Synthetic Datasets

The primary utility of CVIs lies in identifying the optimal number of clusters when the ground-truth cluster structure is unknown. Synthetic benchmark datasets with well-defined cluster characteristics are therefore invaluable for validating CVI performance. For visualization and geometric interpretability, we use two-dimensional synthetic datasets designed to pose diverse clustering challenges, with true numbers of clusters ranging from 2 to 10. This configuration allows a comprehensive assessment of how well CVIs discriminate across varying degrees of cluster separability, density heterogeneity, and structural complexity, which is relevant to real-world scenarios where cluster boundaries may be ambiguous or overlapping. The two-dimensional setting balances computational tractability with intuitive visualization of decision boundaries.

5.1.1. Well-Separated Datasets

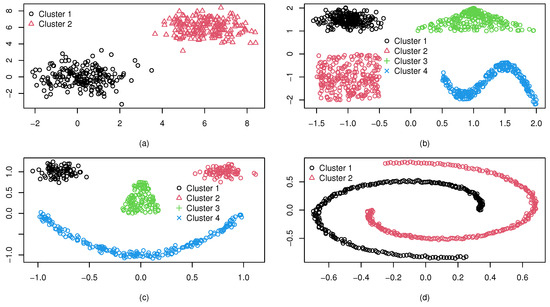

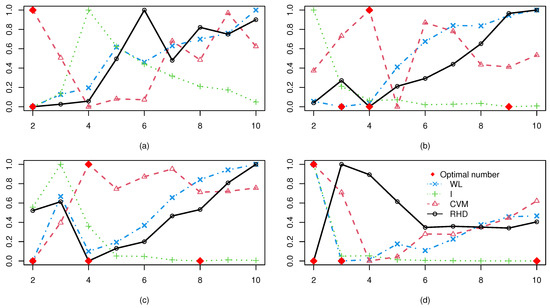

We consider four synthetic datasets whose cluster shapes differ from an ellipsoid. Dataset (a) consists of two isolated clusters, each with 200 observations generated from Gaussian distributions. Dataset (b) consists of four isolated clusters with different shapes: elliptical, triangular, rectangular, and wave-shaped. Dataset (c) also contains four clusters of different shapes, but, unlike in dataset (b), the ellipsoid boundaries are not fully isolated. Dataset (d) contains two spiral clusters of 250 observations each. All the synthetic datasets are two-dimensional and were randomly generated with the R package mlbench. Figure 4 shows the four datasets, whose true cluster numbers are 2, 4, 4, and 2, respectively.

Figure 4.

Four well-separated synthetic datasets: (a) two Gaussian clusters; (b) four clusters with isolated boundaries; (c) four clusters with ellipsoid boundaries that are not fully isolated; and (d) two spiral clusters.

Because the indices differ greatly in scale, we applied Min–Max normalization (Equation (25)), $x' = (x - x_{\min}) / (x_{\max} - x_{\min})$, to their outputs so that they can be displayed in a unified coordinate system. This transformation scales all index values into the interval $[0, 1]$ while preserving their ordering, so the optimal number of clusters selected by each index is unchanged. Normalization therefore allows direct comparison of indices with different magnitude ranges on a common scale.
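A minimal Python sketch of the Min–Max normalization follows. The function name `min_max` is hypothetical, and mapping a constant curve to all zeros is an assumption made here to avoid division by zero, a case the text does not address.

```python
import numpy as np

def min_max(values):
    """Scale values into [0, 1] while preserving their order."""
    v = np.asarray(values, float)
    span = v.max() - v.min()
    if span == 0:
        return np.zeros_like(v)  # constant curve: no ordering to preserve
    return (v - v.min()) / span
```

Because the map is strictly increasing, the position of the minimum (or maximum) of a CVI curve, and hence the selected number of clusters, is the same before and after normalization.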

To reduce the effect of clustering randomness, each experiment was repeated ten times and the results were averaged. Figure 5 displays the WL, I, CVM, and RHD index values obtained from spectral clustering with varying cluster numbers for datasets (a)–(d); the optimal cluster count for each CVI is marked by a red diamond. The RHD and CVM indices are consistently reliable across all datasets, identifying the true cluster numbers of 2, 4, 4, and 2 for (a)–(d), respectively, in agreement with the ground-truth structures. In contrast, the WL and I indices achieve comparable accuracy only for (a) and fail to determine the optimal partition for the other three datasets. These results highlight the superior adaptability of RHD and CVM to complex scenarios, particularly non-convex and irregularly shaped clusters, and suggest that the WL and I indices are suitable mainly for ellipsoidal clusters, even when the clusters are well separated.

Figure 5.

The WL, I, CVM, and RHD values with different number of clusters under the synthetic dataset: (a) Dataset (a); (b) Dataset (b); (c) Dataset (c); (d) Dataset (d).

Table 1 reports the classification accuracy (%) of the four CVIs (WL, I, CVM, and RHD) on datasets (a)–(d). CVM and RHD achieved 100% accuracy on all four datasets, demonstrating robust adaptability to diverse data characteristics. The other indices performed less consistently: all CVIs attained 100% accuracy on (a), which is ideally separable, but the remaining datasets exposed their limitations. WL accuracy followed a V-shaped pattern, declining from 75.00% on (b) to 50.00% on (c) before recovering to 86.80% on (d). The I index remained consistently low, ranging from 60.60% on (d) to 64.80% on (c), with 64.00% on (b). Notably, the combination of FSDP with CVM or RHD produced flawless classifications even in complex scenarios such as (c), where the accuracy of the WL and I indices dropped below 65%. This underscores the resilience of CVM and RHD to challenges such as non-convex boundaries and noise. Overall, these results highlight the critical role of CVI selection in ensuring reliable clustering.

Table 1.

Comparison of classification accuracy rate (%) for different CVIs under datasets (a)–(d).

5.1.2. Overlapped Datasets

The preceding section showed that both CVM and the proposed RHD index discriminate well between well-separated clusters. A more challenging question is whether RHD and CVM can still determine the optimal number of clusters when the clusters overlap. This question probes the robustness of the new index under non-ideal conditions, specifically whether it overcomes the limitations of conventional validity measures that fail to detect overlapping structures. Answering it is pivotal for validating the index's practical applicability to real-world data with compromised separability.

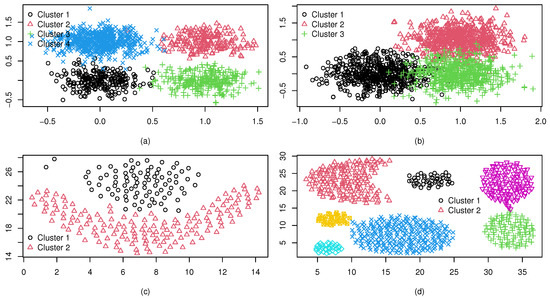

Figure 6 presents four synthetic datasets with varying degrees of cluster overlap, designated (a)–(d). Datasets (a) and (b) were generated by stochastic sampling to simulate controlled overlap: dataset (a) comprises four clusters with moderate pairwise overlaps (Figure 6a), while dataset (b) contains three clusters with highly complex inter-cluster boundaries and substantial overlap (Figure 6b). Datasets (c) and (d) were taken from established clustering benchmarks (https://github.com/milaan9/Clustering-Datasets, accessed on 15 June 2025) to introduce real-world complexity. Dataset (c) (Figure 6c) is the well-known Flame dataset, a flame-like bimodal distribution with ambiguous decision boundaries between two elongated clusters. Of particular interest is dataset (d) (Figure 6d), the Aggregation benchmark, which consists of seven clusters with heterogeneous geometries: a crescent moon, two balloon-shaped clusters, and four ellipsoidal clusters of varying sizes and densities. This configuration introduces substantial overlap between adjacent clusters while preserving distinct morphological features, creating a challenging validation scenario for CVIs.

Figure 6.

Four overlapping datasets: (a) Dataset (a); (b) Dataset (b); (c) Dataset (c); (d) Dataset (d).

Table 2 summarizes the cluster validity results for datasets (a)–(d), together with the ground-truth cluster count of each dataset. Boldfaced values indicate correctly identified optimal cluster counts. All experiments were repeated 10 times, and an entry pairing a cluster number a with a count b means that the index selected a as the optimal cluster number in b of the 10 independent trials. For example, one WL entry records that, across the 10 experiments, the WL index selected three clusters in six trials and two clusters in four trials.
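How each Table 2 entry is formed can be illustrated with a short sketch: record the cluster number selected in each of the 10 trials and count how often each value occurs. The helper name `tally_selected_k` is hypothetical, and the trial outcomes are made up to match the WL example in the text (three clusters in six trials, two in four); their order is arbitrary.

```python
from collections import Counter

def tally_selected_k(selected_ks):
    """Count how often each cluster number was selected across repeated trials.

    Returns (k, count) pairs sorted by decreasing count, then by increasing k.
    """
    counts = Counter(selected_ks)
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))

# Hypothetical outcomes of 10 trials: K = 3 chosen six times, K = 2 four times.
wl_trials = [3, 3, 2, 3, 2, 3, 3, 2, 3, 2]
print(tally_selected_k(wl_trials))  # [(3, 6), (2, 4)]
```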

Table 2.

The estimated number of clusters based on different CVIs for the four overlapping datasets (a)–(d).

The results in Table 2 show that, for dataset (a), both the I index and RHD correctly identified the true cluster count (four clusters) in all 10 trials. This demonstrates their discriminative ability for ellipsoidal clusters with mild overlap, where conventional metrics may struggle with boundary ambiguity. Dataset (b), with three highly overlapping clusters, posed significant challenges: RHD identified the correct count in 8 of 10 trials, while all other indices failed completely. This highlights RHD’s ability to resolve complex decision boundaries in densely overlapping structures, a critical advantage over existing metrics. For dataset (c) (Flame), the flame-shaped bimodal distribution with ambiguous boundaries was correctly resolved by WL and RHD (both 10/10), whereas I and CVM failed to detect the two-cluster structure, indicating their sensitivity to elongated, non-convex geometries where density gradients become critical. Dataset (d) (Aggregation), a heterogeneous benchmark with seven clusters, combines morphological diversity with varying overlaps. Here the I index achieved partial success (3/10), occasionally capturing the dominant density patterns; CVM succeeded only once (1/10), which suggests its susceptibility to noise in multi-scale cluster configurations; and RHD and WL failed completely. This dataset exposes the limitations of all the indices in highly heterogeneous settings.

As the accuracy comparison in Table 2 shows, the proposed RHD index achieves the highest overall accuracy, 70.00% (28/40 cases), a 37.5 percentage point improvement over the second-best I index (32.50%). The WL index ranked third (25.00%), while CVM, at only 2.50%, showed severe limitations in resolving non-linear manifold structures. This contrast underscores the vulnerability of CVM’s feature-space partitioning strategy to local optima in geometrically complex data.
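The overall accuracies quoted above can be checked from the per-dataset success counts reported for Table 2 (10 trials per dataset, 40 per index). In the sketch below, cases the text describes as complete failures are entered as 0, which agrees with the reported totals; the variable names are illustrative only.

```python
# Correct identifications out of 10 trials per dataset, as reported in the text for Table 2.
successes = {
    "WL":  {"a": 0,  "b": 0, "c": 10, "d": 0},
    "I":   {"a": 10, "b": 0, "c": 0,  "d": 3},
    "CVM": {"a": 0,  "b": 0, "c": 0,  "d": 1},
    "RHD": {"a": 10, "b": 8, "c": 10, "d": 0},
}
TRIALS_PER_DATASET = 10

def overall_accuracy(per_dataset, trials=TRIALS_PER_DATASET):
    """Percentage of all trials (over every dataset) in which the true K was selected."""
    total_correct = sum(per_dataset.values())
    return 100.0 * total_correct / (trials * len(per_dataset))

acc = {name: overall_accuracy(s) for name, s in successes.items()}
for name, value in sorted(acc.items(), key=lambda kv: -kv[1]):
    print(f"{name}: {value:.2f}%")
print(f"RHD lead over I: {acc['RHD'] - acc['I']:.1f} percentage points")
```

Run as written, this prints RHD 70.00%, I 32.50%, WL 25.00%, and CVM 2.50%, with a 37.5 percentage point lead for RHD.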

The experiments show RHD’s versatility across mild-to-severe cluster overlap and varied geometric complexity. Its performance on (b) and (c) indicates robustness to boundary ambiguity and non-convex structures. The I index shows strengths in specific morphologies ((a) and (d)), but its inconsistency across datasets contrasts with RHD’s more reliable behavior. These findings indicate RHD’s potential for real-world clustering problems with poor data separability, where it offers clear advantages over traditional validity indices.

Across the datasets in Table 2, the RHD index shows clear advantages in low-to-moderate overlap scenarios (e.g., 100% correct identification on (a)). Crucially, on the highly overlapping dataset (b), it maintains an 80% correct identification rate. Although the I index matches RHD on the simpler structure (a), its discriminative ability deteriorates markedly as data complexity increases ((b) and (c)). This contrast highlights RHD’s robustness to ambiguous inter-cluster boundaries, with its core advantage lying in the joint modeling of local density and global separation.

However, RHD has a key limitation on hybrid cluster structures: when a dataset contains both well-separated and overlapping clusters (e.g., the Aggregation dataset (d)), the index tends to over-merge the overlapping regions. This behavior stems from the definition of RHD’s inter-cluster separation measure, which takes high values for well-separated clusters but decreases significantly for overlapping ones. When both types coexist, the global mean of this measure is depressed by the low values from the overlapping regions, and because this mean enters the index as a denominator, a smaller mean inflates the index value. Consequently, the index wrongly treats merging the overlapping clusters as an improvement in clustering quality. CVM fails for a different reason: as the number of clusters increases, the distance term entering its compactness measure shrinks, so the compactness rises progressively and the CVM value increases. Since larger CVM values indicate better clustering, the number of clusters may be overestimated.

5.2. Experiments on Real Datasets

The preceding experiments evaluated the proposed RHD index against benchmark methods in two scenarios, well-separated classes and overlapping classes, and in both cases examined the combined effects of convex and non-convex data distributions. These combined effects influenced most test scenarios, and the RHD index gave the best cluster validity assessment overall. To complete the evaluation, this subsection turns to real-world datasets.

Because real-world data are often large-scale, high-dimensional, and multi-category, the following experiments use classical benchmark datasets that exhibit these characteristics and their interactions.

First, we considered two public benchmark datasets from the UCI Machine Learning Repository [21], Iris and Seeds. The Iris dataset comprises three classes of 50 instances each, one of which is linearly separable from the other two. The Seeds dataset contains 210 instances describing geometric properties of kernels from three wheat varieties, with each group approximately Gaussian-distributed.

Second, we evaluated the proposed index on three further real-world datasets with more complex characteristics. The Ionosphere dataset, collected by a phased-array radar system in Goose Bay, Labrador, comprises 351 instances with 34 features encoding ionospheric radar returns. The Landsat Satellite dataset contains 2000 instances, each described by 36 attributes: the values of four spectral bands (two visible, two near-infrared) over a 3 × 3 pixel neighborhood, capturing terrestrial surface characteristics. The Yeast dataset, widely used for predicting the cellular localization sites of proteins, contains 10 localization categories. All datasets are publicly accessible via the UCI Machine Learning Repository (https://archive.ics.uci.edu/datasets, accessed on 15 June 2025) and present distinct challenges: Ionosphere has moderate dimensionality with limited samples, while Landsat has higher-dimensional spectral measurements with inherent non-linear structure. These properties make them suitable benchmarks for validating cluster validity indices under real-world conditions.

Lastly, the Face dataset, publicly accessible via https://www.mcm.edu.cn/html_cn/block/8579f5fce999cdc896f78bca5d4f8237.html (accessed on 15 June 2025), contains 20 facial images of two individuals under varying lighting conditions. With 2016 dimensions, far exceeding the sample size, it is a high-dimensional dataset. Summary statistics for all the real datasets are provided in Table 3.

Table 3.

Characteristics of the real datasets.

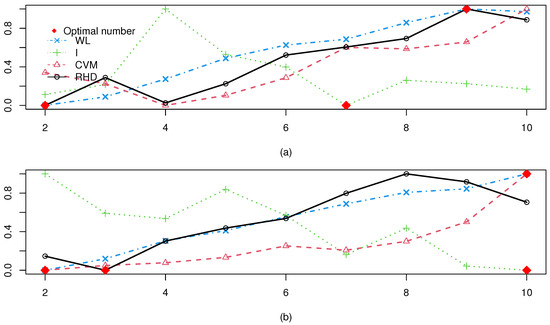

Figure 7 presents the WL, I, CVM, and RHD values for varying cluster numbers K on the two real datasets, Iris and Seeds, with the optimal value of each CVI marked by a red diamond. The RHD index correctly determined the cluster count for Seeds. For Iris, it selected two clusters instead of three, a result that is nonetheless close to the true count. In contrast, the other three CVIs failed on both datasets.
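The selection protocol behind Figure 7 (evaluate an index for every candidate K and keep the K at the index’s optimum) can be sketched as below. WL, I, CVM, and RHD are not reproduced here; as a stand-in, the sketch uses the Calinski–Harabasz index (larger is better) with a plain k-means, and the three-blob data are purely illustrative. All function names are hypothetical.

```python
import numpy as np

def kmeans(X, k, n_init=10, n_iter=100, seed=0):
    """Lloyd's k-means with random restarts; returns the labels of the lowest-SSE run."""
    rng = np.random.default_rng(seed)
    best_labels, best_sse = None, np.inf
    for _ in range(n_init):
        centers = X[rng.choice(len(X), size=k, replace=False)]
        for _ in range(n_iter):
            d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
            labels = d2.argmin(axis=1)
            # Recompute centers; keep the old center if a cluster becomes empty.
            new_centers = np.array([
                X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                for j in range(k)
            ])
            if np.allclose(new_centers, centers):
                break
            centers = new_centers
        sse = d2[np.arange(len(X)), labels].sum()
        if sse < best_sse:
            best_labels, best_sse = labels, sse
    return best_labels

def calinski_harabasz(X, labels):
    """Between- vs within-cluster dispersion ratio (stand-in CVI, larger is better)."""
    clusters = np.unique(labels)
    n, k = len(X), len(clusters)
    grand_mean = X.mean(axis=0)
    between = within = 0.0
    for j in clusters:
        members = X[labels == j]
        centroid = members.mean(axis=0)
        between += len(members) * ((centroid - grand_mean) ** 2).sum()
        within += ((members - centroid) ** 2).sum()
    return (between / (k - 1)) / (within / (n - k))

def select_k(X, k_range, index=calinski_harabasz):
    """Score every candidate K and return the K at the index's optimum plus all scores."""
    scores = {k: index(X, kmeans(X, k)) for k in k_range}
    return max(scores, key=scores.get), scores

# Illustrative data: three well-separated Gaussian blobs of 50 points each.
rng = np.random.default_rng(42)
means = np.array([[0.0, 0.0], [6.0, 0.0], [0.0, 6.0]])
X = np.vstack([m + rng.normal(scale=0.5, size=(50, 2)) for m in means])
best_k, scores = select_k(X, range(2, 8))
print(best_k, {k: round(v, 1) for k, v in scores.items()})
```

For a minimizing index, `max` would simply become `min`; plotting `scores` against K gives curves of the kind shown in Figure 7.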

Figure 7.

Test results of two real datasets: (a) Iris; (b) Seeds.

Tables 4–7 summarize the mean performance (over 10 repetitions) of the cluster validity indices on four benchmark datasets: Ionosphere, Landsat satellite imagery, Yeast, and Faces. Returning first to the Iris results in Figure 7, both the WL index and the proposed RHD index identified two clusters, whereas the I and CVM indices detected 10 clusters, overestimating the structural complexity. This discrepancy stems from the intrinsic properties of the Iris dataset: the Setosa class is well-separated in feature space, but Versicolor and Virginica overlap substantially, giving a partially overlapping cluster structure. The experiments on the synthetic Aggregation dataset (d), which mixes separated and overlapping clusters, likewise show that the RHD index tends to underestimate the true cluster count when distinct and overlapping clusters coexist.

Table 4.

The WL, CVM, and RHD values on the Ionosphere dataset.

Table 5.

The WL, CVM, and RHD values on the Landsat dataset.

Table 6.

The WL, CVM, and RHD values on the Yeast dataset.

Table 7.

The WL, CVM, and RHD values on the Faces dataset.

As shown in Table 5, only the RHD index recovered the six ground-truth land-cover classes in Landsat’s 36-dimensional feature space. On the facial image dataset (Table 7), both WL and RHD correctly identified the two distinct facial identities (each with multiple pose variations), demonstrating their effectiveness on complex image manifolds. In contrast, the CVM index consistently overestimated the number of clusters (predicting 10), highlighting its limitations in high-dimensional feature-space partitioning. The I index could not be computed because the covariance matrices were non-invertible, a known difficulty with ultra-high-dimensional data such as facial images: with only 20 samples in 2016 dimensions, a sample covariance matrix has rank at most 19 and is therefore singular.
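The covariance problem can be checked numerically: a sample covariance matrix estimated from n observations has rank at most n − 1, so with 20 samples in 2016 dimensions it is necessarily singular. The sketch below uses random Gaussian data of that shape as a stand-in for the Faces images (an assumption, not the actual data) and does not reproduce the I index itself.

```python
import numpy as np

# Stand-in for the Faces data: random values with the same shape (20 samples x 2016 features).
rng = np.random.default_rng(0)
n_samples, n_features = 20, 2016
X = rng.normal(size=(n_samples, n_features))

# 2016 x 2016 sample covariance; centering removes one degree of freedom.
cov = np.cov(X, rowvar=False)
# hermitian=True uses a symmetric eigen-solver, which is cheaper than a full SVD here.
rank = np.linalg.matrix_rank(cov, hermitian=True)
print(cov.shape, rank)  # (2016, 2016) 19
```

Because the rank (19) is far below the matrix size (2016), any index that needs the inverse covariance must regularize it or reduce the dimensionality first.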

On the Yeast dataset (Table 6), all four cluster validity indices consistently failed to detect the intrinsic functional modules, exposing the limitations of traditional evaluation paradigms on domain-specific structure. This suggests that current clustering assessment frameworks face critical challenges with biological systems, which are governed by emergent self-organization, functional redundancy, and multiscale invariance. To bridge this gap, future methods may need hybrid strategies that combine biologically informed constraints (e.g., gene ontology-driven feature embeddings) with geometric tools such as persistent homology from topological data analysis (TDA) to capture multiscale hierarchical structure.

As summarized in Table 8, the RHD index achieved the highest accuracy in cluster count estimation, 61.67% (37 correct identifications in 60 trials), which is 23.33 percentage points above the second-best WL index (38.33%). Its 100% accuracy on datasets with well-defined manifold structure (e.g., Landsat satellite imagery and Faces) supports the robustness of its density-based design. The traditional indices, in contrast, showed critical limitations: CVM performed poorly throughout (on the overlapping synthetic datasets it succeeded in only 1 of 40 trials), and the I index failed completely on the ultra-high-dimensional Faces dataset because of non-invertible covariance matrices. These failures suggest that Euclidean-distance-based indices are ill-suited to non-linear structures and to high-dimensional settings where the number of features exceeds the number of samples.
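The percentages above follow directly from the 60 trials. In the worked check below, WL’s 23 correct identifications are inferred from its reported 38.33% rather than stated in the text.

$$
\mathrm{Acc}_{\mathrm{RHD}} = \frac{37}{60} \approx 61.67\%,\qquad
\mathrm{Acc}_{\mathrm{WL}} = \frac{23}{60} \approx 38.33\%,\qquad
\mathrm{Acc}_{\mathrm{RHD}} - \mathrm{Acc}_{\mathrm{WL}} = \frac{37 - 23}{60} = \frac{14}{60} \approx 23.33 \text{ percentage points}.
$$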

Table 8.

The estimated number of clusters based on different CVIs for the real datasets over 10 experiments.

Notably, all four indices, including the proposed one, failed to identify the true number of clusters in the Yeast dataset. A likely reason is that the yeast data vary continuously, as biological quantities often do during processes such as the cell cycle or metabolic regulation, rather than forming discrete clusters, so there are no clear boundaries between groups. While the new index can effectively recognize clusters with low overlap, it still cannot accurately identify such continuously graded distributions. This is also a major challenge for biological images, gene data, and similar datasets, and warrants more in-depth study in future work.

6. Conclusions

Determining the optimal number of clusters remains a critical yet unresolved challenge in unsupervised learning, as prevailing cluster validity indices (CVIs) often exhibit context-dependent limitations. Despite extensive efforts to develop CVIs for specific clustering scenarios (e.g., spherical or linearly separable clusters), their generalizability to complex real-world data, characterized by unknown cluster geometries, density heterogeneity, and partial overlaps, remains questionable. To bridge this gap, we propose a novel density-aware CVI based on the density peak hypothesis [1], which mitigates the inherent limitations of Euclidean-based metrics regarding centroid instability under noise and outlier interference.

The proposed Robust Hierarchical Distance (RHD) index replaces conventional pairwise Euclidean distances with the minimal distance to a higher-density neighbor, decoupling compactness measurement from cluster morphology. This allows the RHD index to quantify intra-cluster cohesion adaptively while remaining robust to non-convex shapes and density fluctuations. Theoretical analysis confirms its resilience to proximal cluster centers and skewed distributions, overcoming the “centration bias” inherent in centroid-based CVIs.
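The two density-peak quantities [1] that this design builds on, a local density ρ_i and the distance δ_i from point i to its nearest higher-density point, can be computed as in the sketch below. It follows Rodriguez and Laio’s cutoff-kernel definition; the cutoff `d_c`, the index-based tie-breaking, and the function name are assumptions, and the sketch does not reproduce the full RHD index. The all-pairs distance matrix makes it O(n²) in time and memory, which echoes the complexity limitation noted below.

```python
import numpy as np

def density_peak_quantities(X, d_c):
    """Cutoff-kernel local density rho and delta, the distance to the nearest higher-density point."""
    D = np.sqrt(((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2))  # pairwise Euclidean distances
    n = len(X)
    # rho_i = number of other points closer than d_c (subtract 1 for the point itself).
    rho = (D < d_c).sum(axis=1) - 1
    # Strict "higher density" order: descending rho, ties broken by ascending index (assumption).
    order = np.lexsort((np.arange(n), -rho))
    rank = np.empty(n, dtype=int)
    rank[order] = np.arange(n)
    delta = np.empty(n)
    for i in range(n):
        higher = rank < rank[i]
        if higher.any():
            delta[i] = D[i, higher].min()
        else:
            delta[i] = D[i].max()  # convention for the global density peak
    return rho, delta

# Illustrative data: two compact blobs of 40 points each.
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0.0, 0.3, size=(40, 2)), rng.normal(4.0, 0.3, size=(40, 2))])
rho, delta = density_peak_quantities(X, d_c=0.5)
# Cluster centers combine high density with a large delta: take the top two of rho * delta.
centres = np.argsort(rho * delta)[-2:]
print(sorted(int(c) // 40 for c in centres))  # blob membership of the two selected centers
```

Points inside a cluster have a small δ because a denser point lies nearby, whereas each cluster’s density peak has a large δ, which is what makes the product ρ·δ a useful center score.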

Comprehensive experiments on synthetic and real datasets covered diverse scenarios, including overlapping clusters, irregular shapes, density variations, and proximal cluster centroids. The results show a 23–32 percentage point improvement in cluster count estimation accuracy over state-of-the-art cluster validity indices (CVIs).

Although the RHD index performs well in these scenarios, it has two key limitations. First, its computational cost is high because of the many local density comparisons and distance calculations it requires, especially on large datasets. Second, it performs poorly on clusters with indistinct density differences (e.g., the Yeast dataset) or continuous gradients, because it relies on distinct density variations for partitioning. Future work will optimize the algorithm (e.g., using approximate nearest neighbor search) to reduce complexity, and will improve the density measurement by incorporating mechanisms that capture continuous gradients, together with domain knowledge, to enhance adaptability to complex data.

Author Contributions

Conceptualization, B.Y.; methodology, B.Y. and Y.Y.; software, B.Y. and P.L.; validation, B.Y., Y.Y. and P.L.; formal analysis, Y.Y.; resources, P.L.; data curation, B.Y.; writing—original draft preparation, B.Y.; writing—review and editing, Y.Y. and P.L.; visualization, B.Y.; supervision, P.L.; funding acquisition, P.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (No. 12204540), the Excellent Youth Project of Hunan Provincial Education Department (No. 24B0865), and the Hunan Social Science Research Base Project (No. XJK22ZDJD35).

Data Availability Statement

The synthetic datasets are randomly generated by functions in the R package ‘mlbench’ https://cran.r-project.org/web/packages/mlbench/index.html, and the real datasets in the experiments are available in the UCI Machine Learning Repository https://archive.ics.uci.edu/, and the public Clustering Datasets https://github.com/milaan9/Clustering-Datasets. All links accessed on 15 June 2025.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Rodriguez, A.; Laio, A. Clustering by fast search and find of density peaks. Science 2014, 344, 1492–1496.

- Wu, G.; Zhao, X.; Luo, S.; Shi, H. Histological image segmentation using fast mean shift clustering method. Biomed. Eng. Online 2015, 14, 24.

- Fujita, A.; Takahashi, D.Y.; Patriota, A.G. A non-parametric method to estimate the number of clusters. Comput. Stat. Data Anal. 2014, 73, 27–39.

- Dunn, J.C. A fuzzy relative of the ISODATA process and its use in detecting compact well-separated clusters. J. Cybern. 1973, 3, 32–57.

- Bezdek, J.C. Cluster validity with fuzzy sets. J. Cybern. 1973, 3, 58–73.

- Xie, X.L.; Beni, G. A validity measure for fuzzy clustering. IEEE Trans. Pattern Anal. Mach. Intell. 1991, 13, 841–847.

- Gindy, N.; Ratchev, T.; Case, K. Component grouping for GT applications—A fuzzy clustering approach with validity measure. Int. J. Prod. Res. 1995, 33, 2493–2509.

- Pakhira, M.K.; Bandyopadhyay, S.; Maulik, U. Validity index for crisp and fuzzy clusters. Pattern Recognit. 2004, 37, 487–501.

- Wu, C.H.; Ouyang, C.S.; Chen, L.W.; Lu, L.W. A new fuzzy clustering validity index with a median factor for centroid-based clustering. IEEE Trans. Fuzzy Syst. 2015, 23, 701–718.

- Said, A.B.; Hadjidj, R.; Foufou, S. Cluster validity index based on Jeffrey divergence. Pattern Anal. Appl. 2017, 20, 21–31.

- Morsier, F.D.; Tuia, D.; Borgeaud, M.; Gass, V.; Thiran, J.P. Cluster validity measure and merging system for hierarchical clustering considering outliers. Pattern Recognit. 2015, 48, 1478–1489.

- Hruschka, E.R.; Campello, R.J.G.B.; Freitas, A.A. A survey of evolutionary algorithms for clustering. IEEE Trans. Syst. Man Cybern. Part C 2009, 39, 133–155.

- Everitt, B.S.; Landau, S.; Leese, M.; Stahl, D. Miscellaneous clustering methods. In Cluster Analysis, 5th ed.; John Wiley & Sons: Hoboken, NJ, USA, 2011; pp. 215–255.

- Celeux, G.; Govaert, G. A classification EM algorithm for clustering and two stochastic versions. Comput. Stat. Data Anal. 1992, 14, 315–332.

- Tibshirani, R.; Walther, G.; Hastie, T. Estimating the Number of Clusters in a Data Set via the Gap Statistic. J. R. Stat. Soc. Ser. B 2001, 63, 411–423.

- Fang, Y.; Wang, J. Selection of the number of clusters via the bootstrap method. Comput. Stat. Data Anal. 2012, 56, 468–477.

- Bezdek, J.C. Numerical taxonomy with fuzzy sets. J. Math. Biol. 1974, 1, 57–71.

- Xu, Y.; Srinivasan, A.; Xue, L. A selective overview of recent advances in spectral clustering and their applications. In Modern Statistical Methods for Health Research; Springer: Berlin/Heidelberg, Germany, 2021; pp. 247–277.

- Wu, S.; Feng, X.; Zhou, W. Spectral clustering of high-dimensional data exploiting sparse representation vectors. Neurocomputing 2014, 135, 229–239.

- Li, Y.; He, K.; Kloster, K.; Bindel, D.; Hopcroft, J. Local spectral clustering for overlapping community detection. ACM Trans. Knowl. Discov. Data (TKDD) 2018, 12, 1–27.

- Bache, K.; Lichman, M. UCI Machine Learning Repository. 2013. Available online: http://archive.ics.uci.edu/ml (accessed on 15 June 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).