Reinforcing Moving Linear Model Approach: Theoretical Assessment of Parameter Estimation and Outlier Detection

Abstract

1. Introduction

2. A Review of the ML and EML Model Approaches

2.1. The ML Model Approach

2.1.1. The Basic Model

2.1.2. State-Space Presentation of the ML Model

2.1.3. Method for Estimating the Parameters

2.2. The EML Model Approach for Outlier Detection

2.2.1. The Basic Model

2.2.2. Bayesian Approach to Outlier Estimation

2.2.3. Outlier Detection and Estimation

3. New Development to Reinforce Previous Findings

3.1. The Aims

3.2. Reinforcing the ML Model Approach

3.2.1. Variance-Preserving Adjustment of the Decomposed Components

3.2.2. Structural Examination of Variances for the Decomposed Components

3.2.3. Assessing the Structural Changes in Decomposed Components

3.2.4. Evaluation Metrics for Assessing Decomposition Stability

3.2.5. Bidirectional Processing and Recursive Decomposition Strategies

3.3. Reinforcing the EML Model Approach

3.3.1. Determining the Potential Locations of Outliers

3.3.2. Estimating Outliers

3.3.3. Updating the Locations and Determining the Number of Outliers

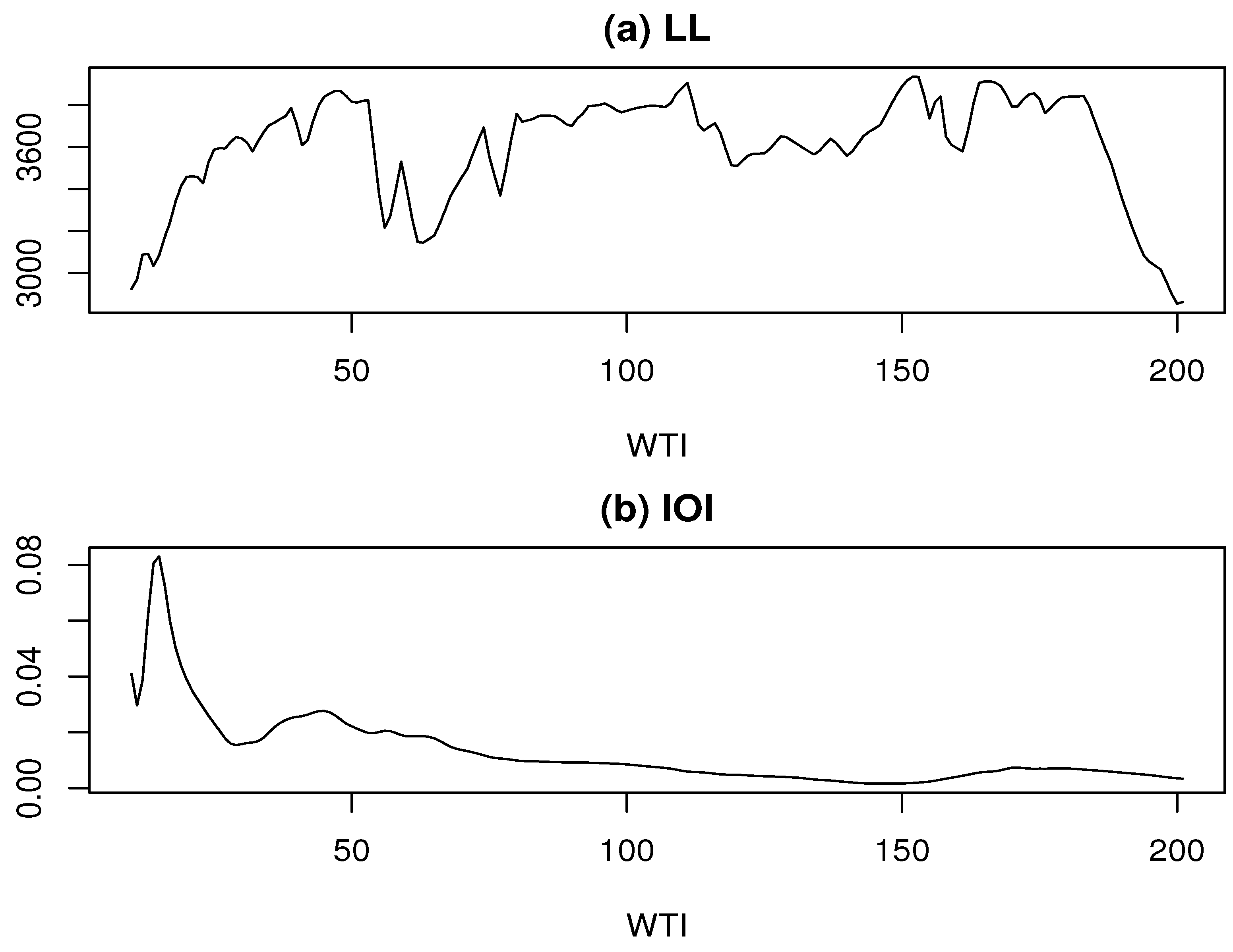

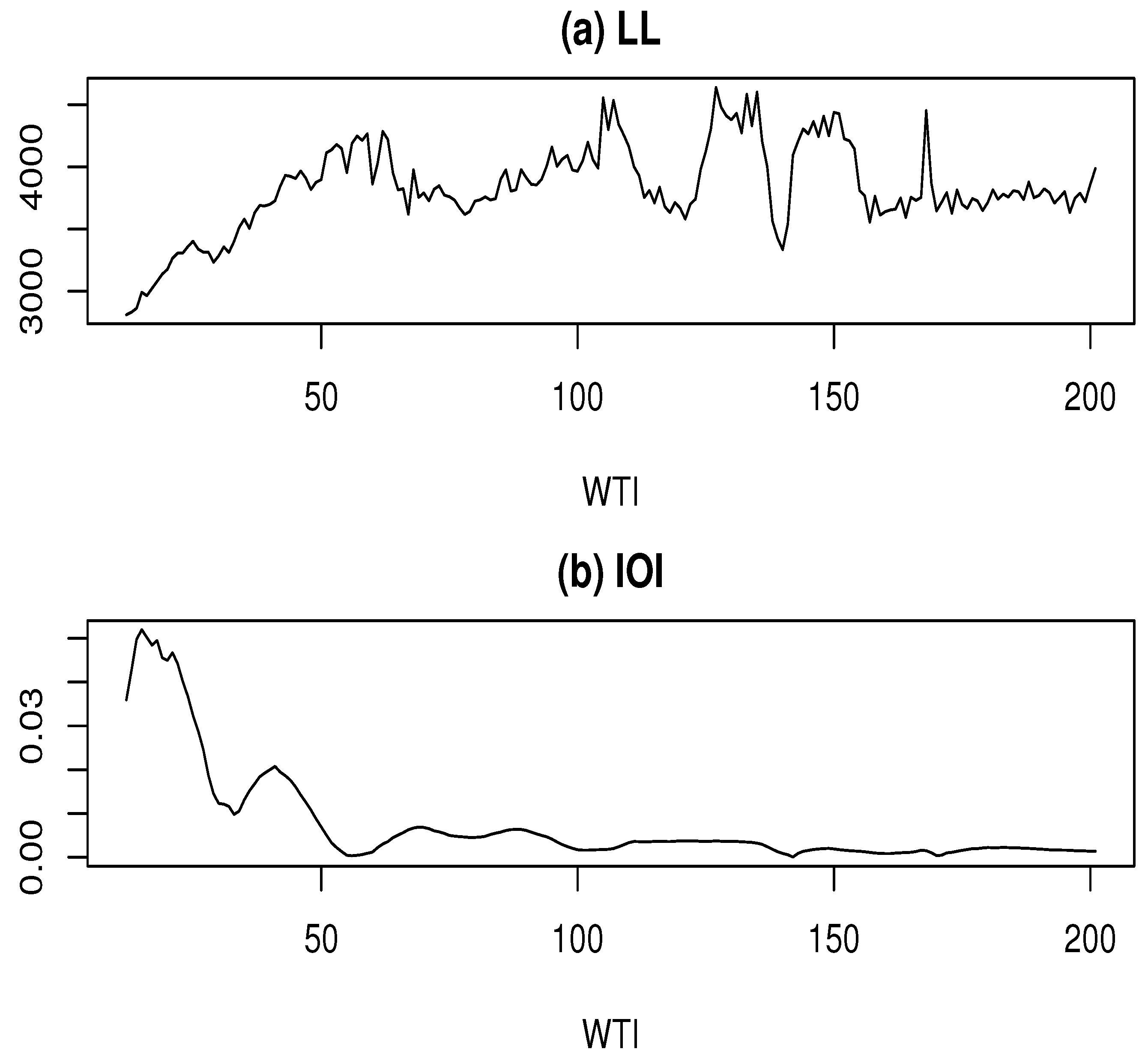

3.3.4. Handling WTI Determination in Outlier Detection and Estimation

- For each candidate value of k, estimate the outliers based on the potential outlier locations and calculate the corresponding AIC values. Use these results to update the AIC sequence in Equation (22).

- Generate the DAIC sequence in Equation (23) based on the AIC sequence and update the outlier locations. Then, identify the outlier estimates and their corresponding locations that yield the greatest AIC reduction according to the updated DAIC values.

- Recalculate the AIC values for all candidate values of k based on the estimated outliers and determine the final value of k according to the minimum AIC criterion.
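The three steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: Equations (22) and (23) are not reproduced here, so the sketch assumes that the AIC sequence for a given k is a list whose j-th entry is the AIC obtained with j outliers included, and that DAIC is the first difference of that sequence. The names `daic`, `best_outlier_count`, and `select_k` are hypothetical, and the numbers are toy values rather than results from the paper.

```python
from typing import Dict, List, Tuple


def daic(aic_seq: List[float]) -> List[float]:
    """First differences of an AIC sequence.

    ASSUMPTION: used here as a stand-in for the DAIC sequence of Equation (23).
    A negative entry means that adding one more outlier lowered the AIC.
    """
    return [b - a for a, b in zip(aic_seq, aic_seq[1:])]


def best_outlier_count(aic_seq: List[float]) -> Tuple[int, float]:
    """Return (number of outliers, AIC) at the minimum of the sequence."""
    j = min(range(len(aic_seq)), key=aic_seq.__getitem__)
    return j, aic_seq[j]


def select_k(aic_by_k: Dict[int, List[float]]) -> Tuple[int, int, float]:
    """Return (k, number of outliers, AIC) with the smallest AIC overall."""
    results = {k: best_outlier_count(seq) for k, seq in aic_by_k.items()}
    k = min(results, key=lambda c: results[c][1])
    return k, results[k][0], results[k][1]


# Toy AIC sequences for two hypothetical candidate values of k.
toy = {15: [100.0, 90.0, 85.0, 86.0], 25: [95.0, 80.0, 82.0]}

print(daic(toy[15]))   # [-10.0, -5.0, 1.0]
print(select_k(toy))   # (25, 1, 80.0)
```

In this toy case the AIC for k = 15 stops improving after two outliers, while k = 25 reaches a lower AIC with a single outlier, so the minimum AIC criterion picks k = 25.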

4. Empirical Examples

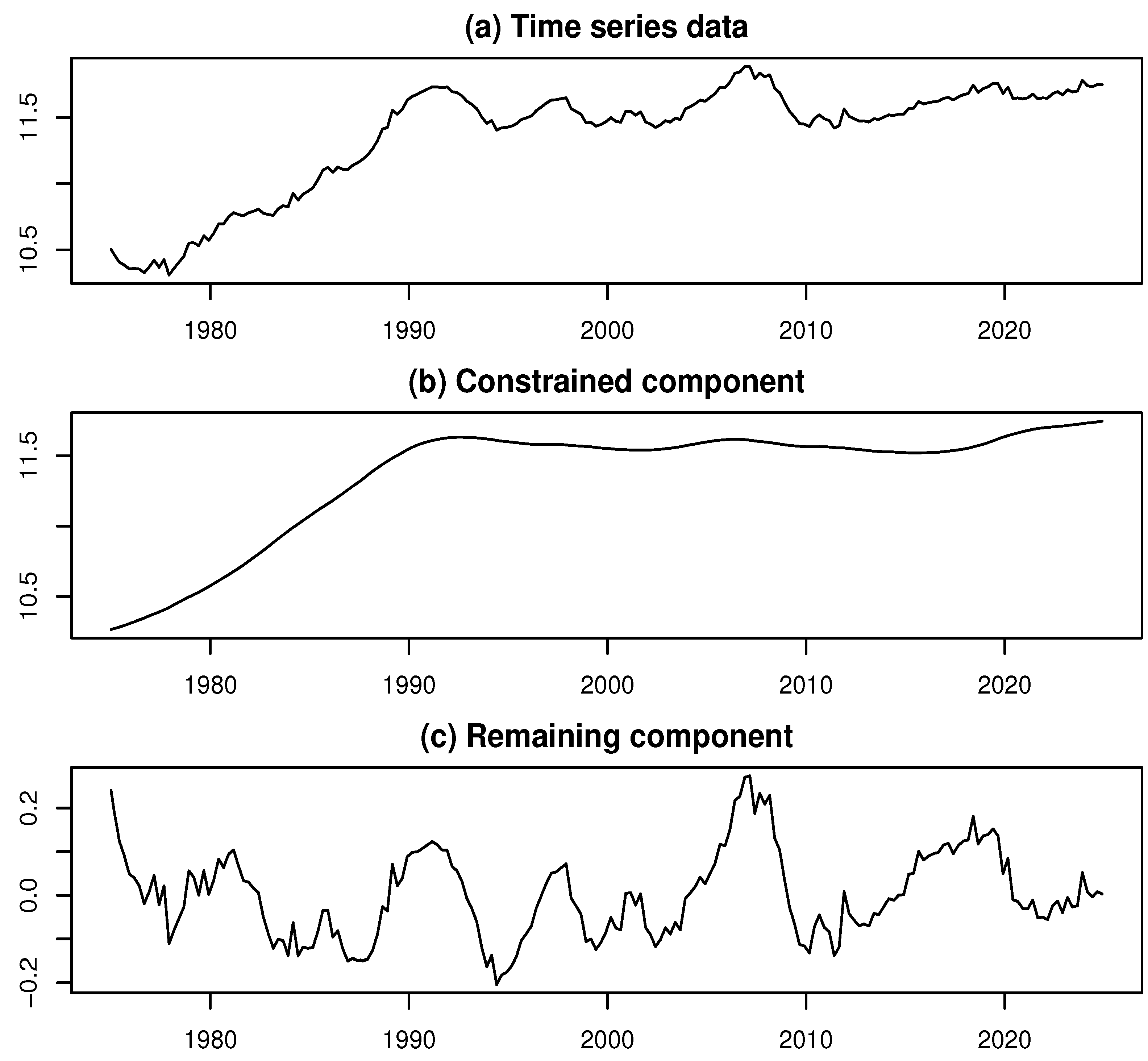

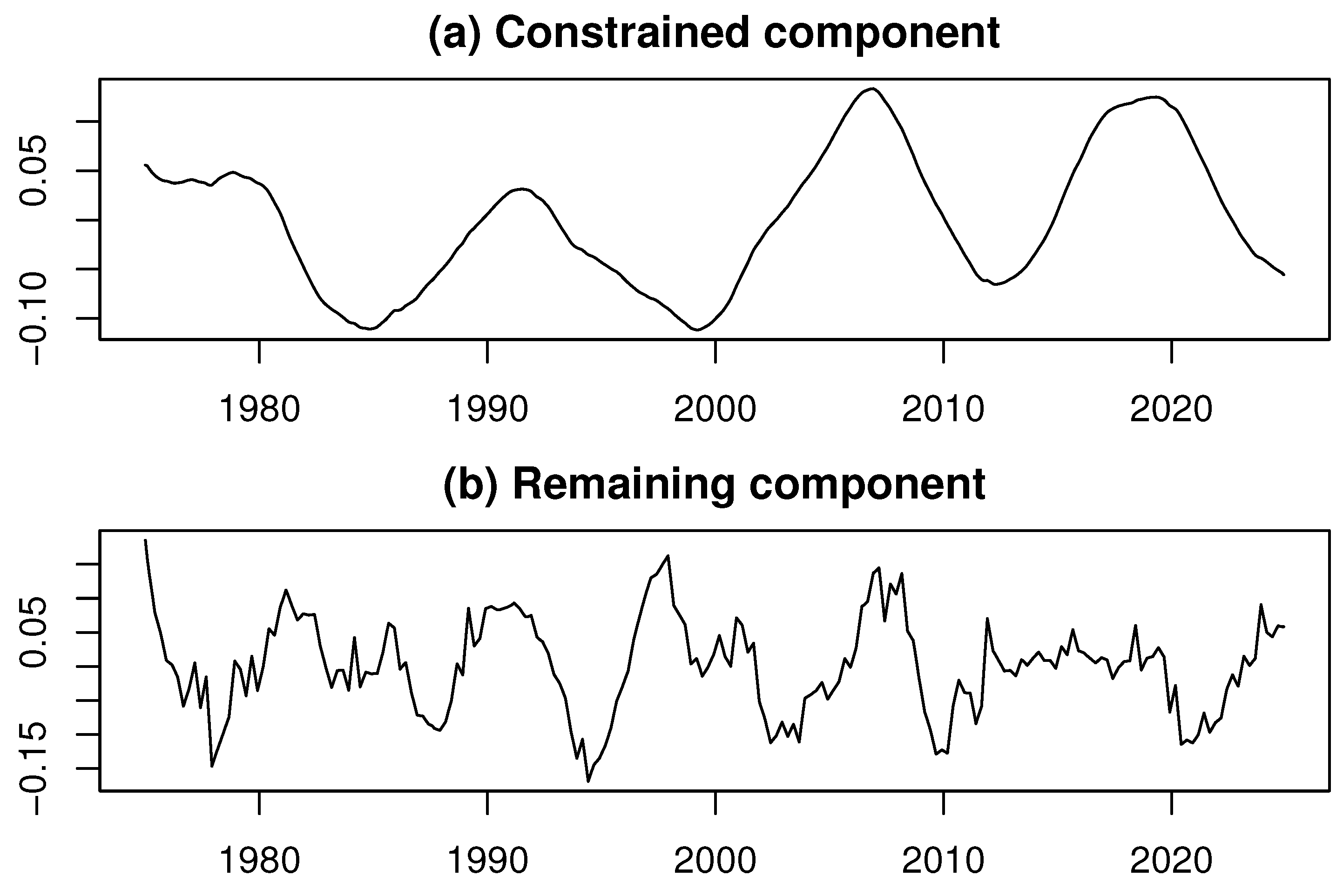

4.1. Empirical Analysis of Capital Investment in Japan

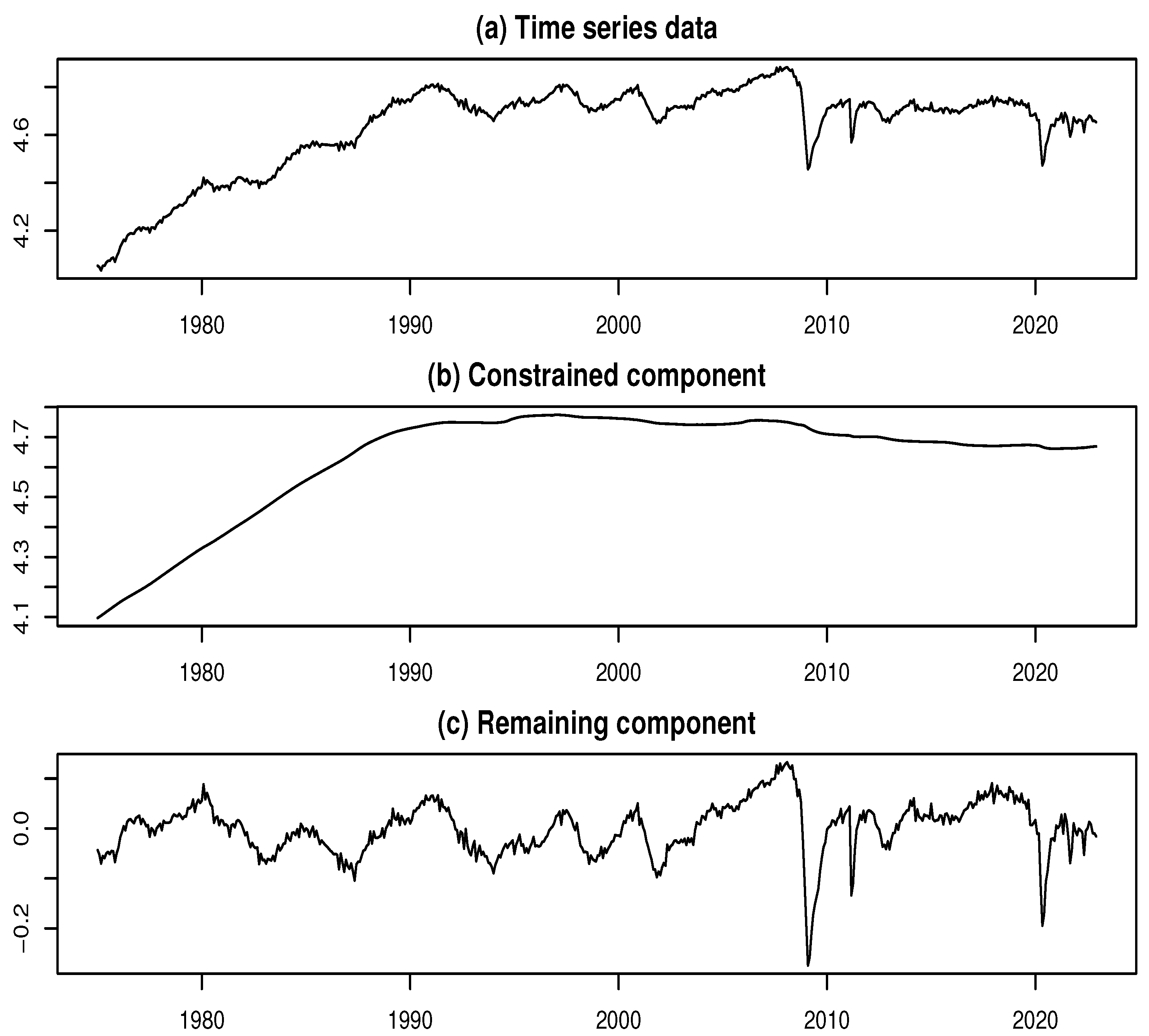

4.2. Empirical Analysis of Industrial Production in Japan

5. Summary and Discussion

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| WTI (k) | Estimated Number of Outliers | AIC Without Outliers | AIC With Outliers | Reduction in AIC |
|---|---|---|---|---|
| 15 | 16 | −5980.28 | −6350.23 | 369.94 |
| 25 | 16 | −6802.79 | −7218.42 | 415.63 |
| 43 | 24 | −7862.04 | −8165.75 | 303.71 |
| 62 | 25 | −8568.73 | −8803.30 | 234.57 |
| 105 | 9 | −9108.88 | −9127.49 | 18.61 |
| 127 | 15 | −9277.28 | −9304.63 | 27.35 |
| 150 | | −8875.54 | −8877.63 | 2.09 |
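As a quick consistency check on the table above, the reduction column can be recomputed as the AIC without outliers minus the AIC with outliers. The sketch below does this in Python. The helper name `aic_reduction` and the tolerance of 0.015 (chosen to allow for two-decimal rounding) are assumptions for illustration, and the values are copied from the table.

```python
# (k, AIC without outliers, AIC with outliers, reported reduction), from the table.
rows = [
    (15, -5980.28, -6350.23, 369.94),
    (25, -6802.79, -7218.42, 415.63),
    (43, -7862.04, -8165.75, 303.71),
    (62, -8568.73, -8803.30, 234.57),
    (105, -9108.88, -9127.49, 18.61),
    (127, -9277.28, -9304.63, 27.35),
    (150, -8875.54, -8877.63, 2.09),
]


def aic_reduction(aic_without: float, aic_with: float) -> float:
    """Reduction in AIC obtained by accounting for the estimated outliers."""
    return aic_without - aic_with


# Rows whose recomputed reduction differs from the reported one by more
# than a rounding tolerance (ASSUMPTION: 0.015 for two-decimal values).
mismatches = [
    k for k, a0, a1, reported in rows
    if abs(aic_reduction(a0, a1) - reported) > 0.015
]
print(mismatches)  # []
```

All seven rows agree with the reported reductions to within rounding.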

| k Value | 15 | 25 | 43 | 62 | 105 | 127 | 150 |
|---|---|---|---|---|---|---|---|
| AIC value | −6347.9 | −7218.4 | −8043.7 | −8685.9 | −8987.9 | −9162.2 | −8567.6 |
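Applying the minimum AIC criterion to the values in this table is a one-line selection. The Python sketch below assumes only that the listed AIC values are to be compared across the candidate k values; the variable names are illustrative.

```python
# Candidate k values and AIC values, copied from the table above.
k_values = [15, 25, 43, 62, 105, 127, 150]
aic_values = [-6347.9, -7218.4, -8043.7, -8685.9, -8987.9, -9162.2, -8567.6]

# Minimum AIC criterion: choose the k whose AIC is smallest.
best_k, best_aic = min(zip(k_values, aic_values), key=lambda pair: pair[1])
print(best_k, best_aic)  # 127 -9162.2
```

Among the listed candidates, k = 127 attains the smallest AIC value.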


© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kyo, K. Reinforcing Moving Linear Model Approach: Theoretical Assessment of Parameter Estimation and Outlier Detection. Axioms 2025, 14, 479. https://doi.org/10.3390/axioms14070479
