A Totally Relaxed, Self-Adaptive Tseng Extragradient Method for Monotone Variational Inequalities

Abstract

1. Introduction

- The combination of inertial and relaxation techniques to speed up the convergence of the iterative scheme.

- The presence of a simple self-adaptive stepsize, which is generated at each iteration by some simple computations.

- The algorithm does not require knowledge of the Lipschitz constant of the cost operator, which is commonly required by authors when solving the monotone variational inequality problem (MVIP).

- Strong convergence of the generated sequence to a minimum-norm solution of the problem.

- Computation of only one projection onto some half space.

2. Preliminaries

- (i)

- (ii)

- (iii)

- If with we have

- (i)

- or

- (ii)

- and there exist (depending on the point ) and such that where denotes the boundary of the set and is the convex hull of the set

3. Proposed Algorithm

- (1) The solution set is nonempty.

- (2) The mapping is monotone and -Lipschitz-continuous on .

- (3) For all , the family of functions satisfies the following conditions:

- (i) Any is convex on .

- (ii) Any is weakly lower semi-continuous on

- (iii) Any is Gâteaux-differentiable and is -Lipschitz-continuous on

- (iv) There exists a positive constant M such that for all the following holds: where is defined as in Lemma 3.

- (4) and are non-negative sequences satisfying the following conditions:

- (i)

- (ii)

- such that

- (iii)

- (iv)

- Let be a nonnegative sequence such that
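The convexity and Gâteaux-differentiability conditions in (3) are the usual ingredients of a half-space relaxation. The sketch below illustrates that idea under stated assumptions; it is not taken from the paper. It assumes the feasible set is a sub-level set {x : c(x) ≤ 0} of a convex differentiable function c, as in the totally relaxed framework of [31]. Because convexity gives c(x) ≥ c(w) + ⟨∇c(w), x − w⟩, the linearization at any point w defines a half-space that contains the feasible set, and projecting onto a half-space has a closed form. The names `relaxed_halfspace` and `project_halfspace`, and the unit-ball example, are hypothetical.

```python
import numpy as np

def relaxed_halfspace(c, grad_c, w):
    """Return (a, b) such that {x : <a, x> <= b} contains {x : c(x) <= 0}.

    The half-space is the linearization of the convex function c at w:
    c(w) + <grad_c(w), x - w> <= 0.
    """
    w = np.asarray(w, dtype=float)
    a = np.asarray(grad_c(w), dtype=float)
    b = a @ w - c(w)
    return a, b

def project_halfspace(x, a, b):
    """Closed-form projection of x onto {z : <a, z> <= b}."""
    x = np.asarray(x, dtype=float)
    excess = a @ x - b
    if excess <= 0.0 or not np.any(a):
        # x is already inside, or a = 0 (degenerate case, nothing to project onto).
        return x.copy()
    return x - (excess / (a @ a)) * a

# Hypothetical example: the unit ball written as c(x) = ||x||^2 - 1 <= 0.
c = lambda x: float(x @ x) - 1.0
grad_c = lambda x: 2.0 * x

w = np.array([2.0, 0.0])
a, b = relaxed_halfspace(c, grad_c, w)   # a = [4, 0], b = 5, i.e. x[0] <= 1.25
p = project_halfspace(w, a, b)           # [1.25, 0]
```

The projected point need not be feasible for the original set (here it lies outside the unit ball); the relaxation trades exact feasibility at each step for a projection that costs only a few inner products.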

| Algorithm 1: TRSTEM |

Initialization: Given Let be two initial points and set Given the and iterates, choose such that with defined by Iterative steps: Calculate the next iterate as follows: |

- Implementing our proposed algorithm does not require knowledge of the Lipschitz constant of the cost operator or of the Lipschitz constants of the Gâteaux differentials of the family of functions, which related methods often need (for instance, see [27]).

- Computation of only one projection onto some half space is another feature of our algorithm that makes it computationally efficient to implement.
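The two features above can be illustrated with a generic inertial Tseng-type iteration. This is a minimal sketch under stated assumptions and not the paper's Algorithm 1. The stepsize rule shown (shrink `lam` to `mu * ||w - y|| / ||A(w) - A(y)||` when that is smaller) is one commonly used self-adaptive rule and is assumed here for illustration. The parameter values `lam`, `mu`, `theta`, the function names, and the test problem are all hypothetical. The relaxation and control sequences of condition (4), which would drive strong convergence to a minimum-norm solution, are omitted.

```python
import numpy as np

def project_halfspace(x, a, b):
    """Closed-form projection onto {z : <a, z> <= b}, with a nonzero."""
    excess = a @ x - b
    return x if excess <= 0.0 else x - (excess / (a @ a)) * a

def inertial_tseng(A, project, x0, x1, lam=0.5, mu=0.5, theta=0.3,
                   tol=1e-8, max_iter=1000):
    """Generic inertial Tseng-type iteration with a self-adaptive stepsize.

    Illustrative only: this is not the paper's Algorithm 1.
    """
    x_prev = np.asarray(x0, dtype=float)
    x = np.asarray(x1, dtype=float)
    for k in range(1, max_iter + 1):
        w = x + theta * (x - x_prev)          # inertial extrapolation
        Aw = A(w)
        y = project(w - lam * Aw)             # the only projection in this iteration
        Ay = A(y)
        x_prev, x = x, y - lam * (Ay - Aw)    # Tseng correction, no second projection
        residual = np.linalg.norm(w - y)
        if residual <= tol:                   # w = y characterizes a solution
            return x, k
        gap = np.linalg.norm(Aw - Ay)
        if gap > 0.0:
            # Shrink lam using only computed quantities; no Lipschitz constant needed.
            lam = min(lam, mu * residual / gap)
    return x, max_iter

# Hypothetical test problem: A(x) = x - target over the half-space {x : x[0] <= 1}.
target = np.array([3.0, 4.0])
a, b = np.array([1.0, 0.0]), 1.0
x_star, iters = inertial_tseng(lambda x: x - target,
                               lambda z: project_halfspace(z, a, b),
                               x0=np.zeros(2), x1=np.zeros(2))
```

For this test problem the solution is the projection of `target` onto the half-space, so `x_star` should be close to `[1, 4]`. The stepsize sequence is non-increasing, and for a Lipschitz operator it cannot fall below the smaller of its initial value and `mu` divided by the Lipschitz constant, so the rule adapts without that constant being supplied.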

4. Convergence Analysis

- In the following theorem, we state and prove the strong convergence of our proposed algorithm.

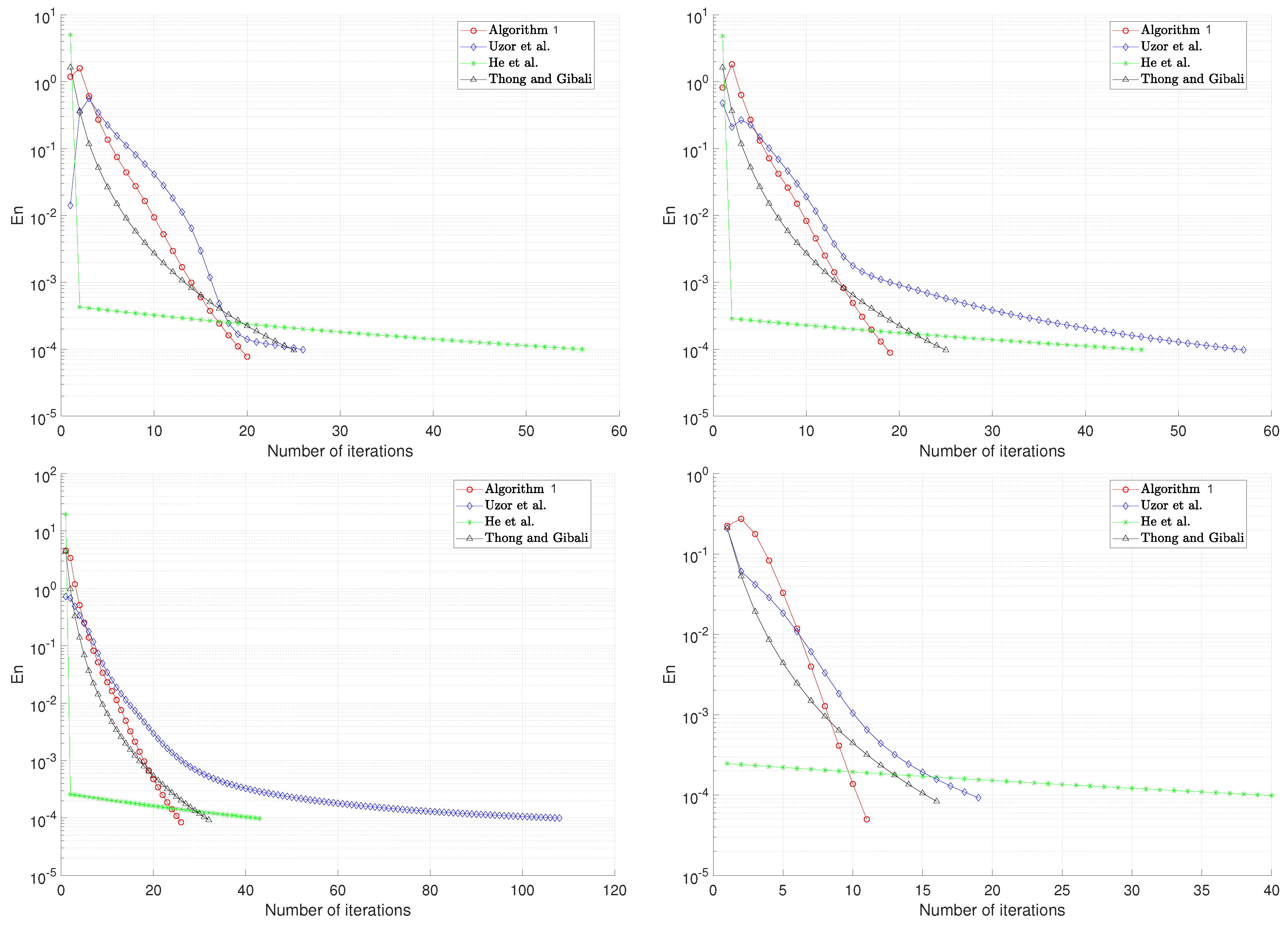

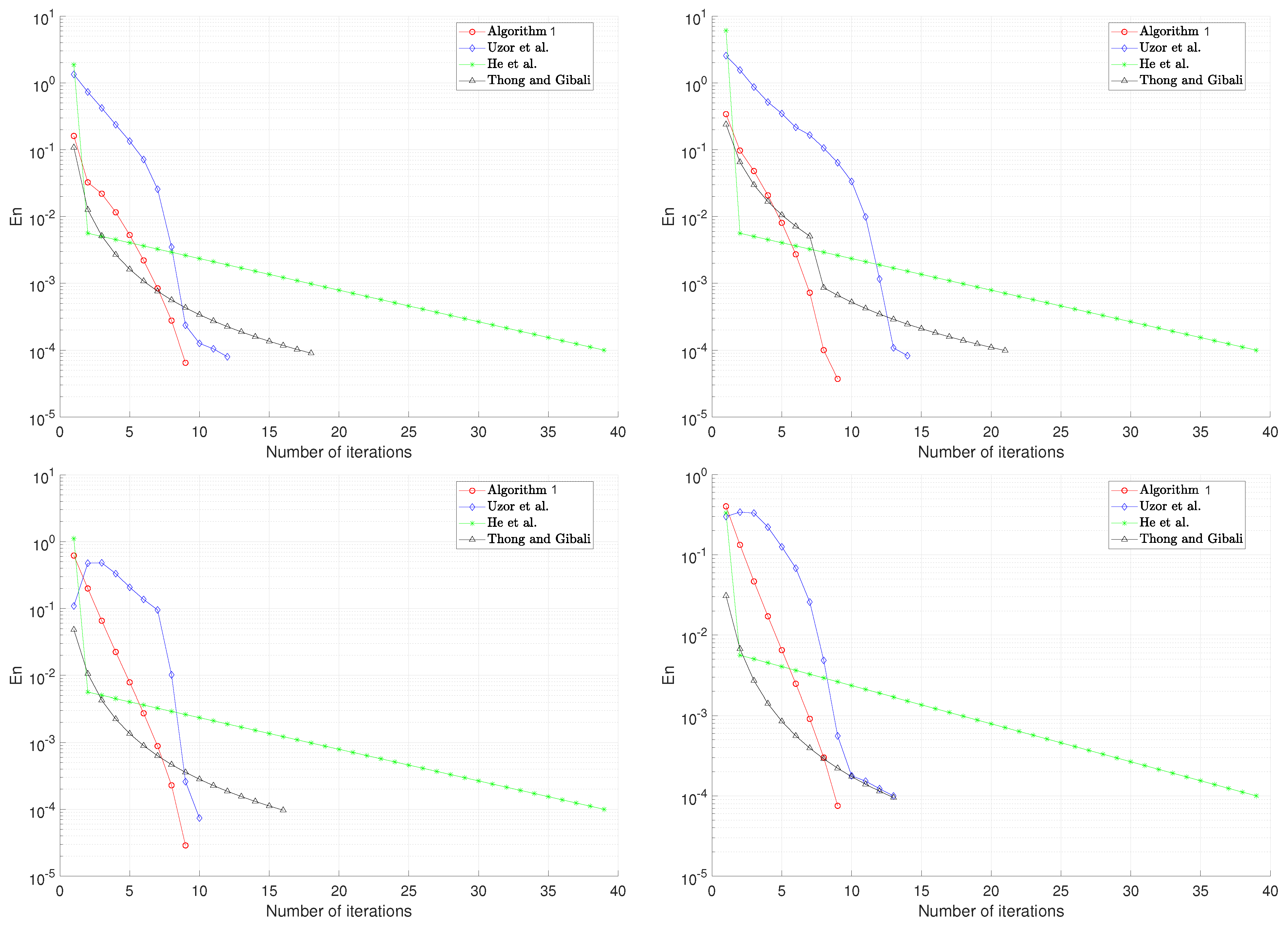

5. Numerical Example

- (Case 1):

- and

- (Case 2):

- and

- (Case 3):

- and

- (Case 4):

- and

- (Case i)

- and

- (Case ii)

- and

- (Case iii)

- and

- (Case iv)

- and

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Fichera, G. Sul problema elastostatico di Signorini con ambigue condizioni al contorno. Atti Accad. Naz. Lincei Cl Sci. Fis. Mat. Nat. 1963, 34, 138–142.

- Stampacchia, G. Variational Inequalities. In Theory and Applications of Monotone Operators, Proceedings of the NATO Advanced Study Institute, Venice, Italy, 17–30 June 1968; Edizioni Odersi: Gubbio, Italy, 1968; pp. 102–192.

- Censor, Y.; Gibali, A.; Reich, S. Extensions of Korpelevich’s extragradient method for the variational inequality problem in Euclidean space. Optimization 2012, 61, 1119–1132.

- Fukushima, M. A relaxed projection method for variational inequalities. Math. Program. 1986, 35, 58–70.

- Gu, Z.; Mani, G.; Gnanaprakasam, A.J.; Li, Y. Solving a System of Nonlinear Integral Equations via Common Fixed Point Theorems on Bicomplex Partial Metric Space. Mathematics 2021, 9, 1584.

- He, S.; Yang, C. Solving the variational inequality problem defined on intersection of finite level sets. Abstr. Appl. Anal. 2013, 2013, 942315.

- Korpelevich, G.M. An extragradient method for finding saddle points and for other problems. Ekon. Mat. Metody 1976, 12, 747–756.

- Nallaselli, G.; Baazeem, A.S.; Gnanaprakasam, A.J.; Mani, G.; Javed, K.; Ameer, E.; Mlaiki, N. Fixed Point Theorems via Orthogonal Convex Contraction in Orthogonal b-Metric Spaces and Applications. Axioms 2023, 12, 143.

- Beg, I.; Mani, G.; Gnanaprakasam, A.J. Best proximity point of generalized F-proximal non-self contractions. J. Fixed Point Theory Appl. 2021, 23, 49.

- Gnanaprakasam, A.J.; Nallaselli, G.; Haq, A.U.; Mani, G.; Baloch, I.A.; Nonlaopon, K. Common Fixed-Points Technique for the Existence of a Solution to Fractional Integro-Differential Equations via Orthogonal Branciari Metric Spaces. Symmetry 2022, 14, 1859.

- Ramaswamy, R.; Mani, G.; Gnanaprakasam, A.J.; Abdelnaby, O.A.A.; Stojiljković, V.; Radojevic, S.; Radenović, S. Fixed Points on Covariant and Contravariant Maps with an Application. Mathematics 2022, 10, 4385.

- Alakoya, T.O.; Taiwo, A.; Mewomo, O.T.; Cho, Y.J. An iterative algorithm for solving variational inequality generalized mixed equilibrium, convex minimization and zeros problems for a class of nonexpansive-type mappings. Ann. Univ. Ferrara Sez. VII Sci. Mat. 2021, 67, 1–31.

- Dong, Q.L.; Cho, Y.J.; Zhong, L.L.; Rassias, T.M. Inertial projection and contraction algorithms for variational inequalities. J. Glob. Optim. 2017, 70, 687–704.

- He, S.; Dong, Q.-L.; Tian, H. Relaxed projection and contraction methods for solving Lipschitz-continuous monotone variational inequalities. Rev. De La Real Acad. De Cienc. Exactas Fis. Y Nat. Ser. A Mat. 2019, 113, 2773–2791.

- Ogwo, G.N.; Izuchukwu, C.; Mewomo, O.T. A modified extragradient algorithm for a certain class of split pseudo-monotone variational inequality problem. Numer. Algebra Control Optim. 2022, 12, 373–393.

- Ceng, L.; Petrușel, A.; Qin, X.; Yao, J. A modified inertial subgradient extragradient method for solving pseudomonotone variational inequalities and common fixed point problems. Fixed Point Theory 2020, 21, 93–108.

- Iusem, A.N.; Nasri, M. Korpelevich’s method for variational inequality problems in Banach spaces. J. Glob. Optim. 2011, 50, 59–76.

- Ogwo, G.N.; Izuchukwu, C.; Mewomo, O.T. Inertial methods for finding minimum-norm solutions of the split variational inequality problem beyond monotonicity. Numer. Algorithms 2021, 88, 1419–1456.

- Tseng, P. A modified forward-backward splitting method for maximal monotone mappings. SIAM J. Control Optim. 2000, 38, 431–446.

- Yang, J.; Liu, H. Strong convergence result for solving monotone variational inequalities in Hilbert space. Numer. Algorithms 2019, 80, 741–752.

- Censor, Y.; Gibali, A.; Reich, S. The Split Variational Inequality Problem; The Technion-Israel Institute of Technology: Haifa, Israel, 2010.

- He, S. A class of projection and contraction methods for monotone variational inequalities. Appl. Math. Optim. 1997, 35, 69–76.

- He, B.; Yuan, X.; Zhang, J.J. Comparison of two kinds of prediction-correction methods for monotone variational inequalities. Comput. Optim. Appl. 2004, 27, 247–267.

- Solodov, M.V.; Tseng, P. Modified projection-type methods for monotone variational inequalities. SIAM J. Control Optim. 1996, 34, 1814–1830.

- Sun, D. A class of iterative methods for solving nonlinear projection equations. J. Optim. Theory Appl. 1996, 91, 123–140.

- Polyak, B. Some methods of speeding up the convergence of iteration methods. USSR Comput. Math. Math. Phys. 1964, 4, 1–17.

- Cao, Y.; Guo, K. On the convergence of inertial two-subgradient extragradient method for solving variational inequality problems. Optimization 2020, 69, 1237–1253.

- Alvarez, F. Weak convergence of a relaxed and inertial hybrid projection-proximal point algorithm for maximal monotone operators in Hilbert space. SIAM J. Optim. 2004, 14, 773–782.

- Attouch, H.; Cabot, A. Convergence of a relaxed inertial forward–backward algorithm for structured monotone inclusions. Appl. Math. Optim. 2019, 80, 547–598.

- Iutzeler, F.; Hendrickx, J.M. A generic online acceleration scheme for optimization algorithms via relaxation and inertia. Optim. Methods Softw. 2019, 34, 383–405.

- He, S.; Wu, T.; Gibali, A.; Dong, Q.-L. Totally relaxed, self-adaptive algorithm for solving variational inequalities over the intersection of sub-level sets. Optimization 2018, 67, 1487–1504.

- Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces, 2nd ed.; Springer: New York, NY, USA, 2017.

- Nguyen, H.Q.; Xu, H.K. The supporting hyperplane and an alternative to solutions of variational inequalities. J. Nonlinear Convex Anal. 2015, 16, 2323–2331.

- Saejung, S.; Yotkaew, P. Approximation of zeros of inverse strongly monotone operators in Banach spaces. Nonlinear Anal. Theory Methods Appl. 2012, 75, 742–750.

- Tan, K.K.; Xu, H.K. Approximating Fixed Points of Nonexpansive Mappings by the Ishikawa Iteration Process. J. Math. Anal. Appl. 1993, 178, 301–308.

- Thong, D.V.; Gibali, A. Two strong convergence subgradient extragradient methods for solving variational inequalities in Hilbert spaces. Jpn. J. Ind. Appl. Math. 2019, 36, 299–321.

- Uzor, V.A.; Mewomo, O.T.; Alakoya, T.O.; Gibali, A. Outer approximated projection and contraction method for solving variational inequalities. J. Inequalities Appl. 2023, 2023, 141.

| Algorithm | Case 1: Iter. | Case 1: CPU Time | Case 2: Iter. | Case 2: CPU Time | Case 3: Iter. | Case 3: CPU Time | Case 4: Iter. | Case 4: CPU Time |
|---|---|---|---|---|---|---|---|---|
| Algorithm 1 | 20 | 0.0073 | 19 | 0.0065 | 26 | 0.0061 | 11 | 0.0057 |
| He et al. [6] | 57 | 0.0118 | 47 | 0.0129 | 43 | 0.0108 | 40 | 0.0157 |
| Thong and Gibali [36] | 25 | 0.0094 | 25 | 0.090 | 32 | 0.0087 | 16 | 0.0085 |
| Uzor et al. [37] | 26 | 0.0217 | 57 | 0.0213 | 108 | 0.0202 | 19 | 0.0089 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ogunsola, O.J.; Oyewole, O.K.; Moshokoa, S.P.; Abass, H.A. A Totally Relaxed, Self-Adaptive Tseng Extragradient Method for Monotone Variational Inequalities. Axioms 2025, 14, 354. https://doi.org/10.3390/axioms14050354
