1. Introduction

Lyapunov functions are fundamental tools for analyzing the stability of nonlinear systems [1,2,3] and rest on energy-based reasoning: if a system dissipates energy everywhere except at the origin, it must eventually converge to that equilibrium point. Consequently, a Lyapunov function, which serves as an energy-like measure, must be positive definite, that is, zero at the equilibrium and strictly positive at every other state. This principle extends naturally to general nonlinear systems. Lyapunov’s direct method consists of constructing a scalar function of the state and analyzing its evolution along the system’s trajectories. However, identifying such a function remains a significant challenge. Moreover, failing to find a Lyapunov function does not imply that the system is unstable, which further complicates the task.

Numerous classical methods for identifying or approximating Lyapunov functions have been proposed, including the variable gradient method, Krasovskii’s method, and basis-function linearization; see [1,2,3,4,5,6] for a comprehensive overview. However, no universal technique exists, and constructing a Lyapunov function is generally as difficult as solving the system itself. In recent decades, advances in computational power have spurred research into numerical approaches such as sum-of-squares polynomials [7,8], piecewise affine functions [9], formal synthesis via satisfiability modulo theories (SMT) [10], and deep neural networks [11]. An in-depth survey of these methods can be found in [12], and studies on the stability of evolutionary systems are presented in [13,14].

This work introduces a numerical method, based on the genetic programming (GP) paradigm [15], for synthesizing polynomial Lyapunov functions for exponentially stable dynamical systems. GP is an evolutionary computation technique that extends genetic algorithms [16] by operating directly on symbolic expressions, which enables the automatic discovery and optimization of mathematical formulas. A key advantage of this approach is its ability to generate interpretable, symbolic representations of Lyapunov functions, facilitating further analysis of system dynamics.

Several prior works have explored related methodologies. Grosman and Lewin [17] applied GP techniques to the analysis of asymptotic stability. McGough et al. [18] utilized Grammatical Evolution, a specialized variant of GP. Feng et al. [19] combined deep neural networks with symbolic regression to synthesize Lyapunov functions; additional insights are provided in [20].

While these methods have made significant progress, many lack rigorous mathematical guarantees or general-purpose construction procedures. Our contributions address this gap: we establish an existence criterion for polynomial Lyapunov functions of exponentially stable systems, and we develop an algorithm for their automated synthesis. We validated the proposed method on four benchmark systems, for each of which it found a polynomial Lyapunov function satisfying the exponential stability conditions on the chosen domain.

2. Exponential Stability of Dynamical Systems

In this section, we recall several fundamental concepts of exponential stability in dynamical systems. For additional details, we refer the reader to [1,2,3].

We consider an autonomous system of ordinary differential equations of the form

$$\dot{x} = f(x), \qquad (1)$$

where $f: D \to \mathbb{R}^n$ is a locally Lipschitz continuous map defined on a domain $D \subset \mathbb{R}^n$.

We study exponentially stable equilibria in the sense of the following definition.

Definition 1. The equilibrium point $x = 0$ of (1) is exponentially stable if there exist positive constants $c$, $k$, and $\lambda$ such that

$$\|x(t)\| \le k\,\|x(0)\|\,e^{-\lambda t}, \qquad \forall\, t \ge 0,\ \forall\, \|x(0)\| < c,$$

and globally exponentially stable if this inequality is satisfied for any initial state $x(0) \in \mathbb{R}^n$.

The classical Lyapunov theorem states that if one can find a continuously differentiable function $V$ that satisfies specified bounding conditions, then the equilibrium at the origin is exponentially stable.

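Theorem 1 (Khalil [2]). Let $x = 0$ be an equilibrium point for the nonlinear system

$$\dot{x} = f(t, x),$$

where $f: [0, \infty) \times D \to \mathbb{R}^n$ is piecewise continuous in $t$ and locally Lipschitz in $x$ on $[0, \infty) \times D$, and $D \subset \mathbb{R}^n$ is a domain that contains the origin $x = 0$. Let $V: [0, \infty) \times D \to \mathbb{R}$ be a continuously differentiable function such that

$$k_1\|x\|^a \le V(t, x) \le k_2\|x\|^a, \qquad \frac{\partial V}{\partial t} + \frac{\partial V}{\partial x} f(t, x) \le -k_3\|x\|^a$$

for all $t \ge 0$ and $x \in D$, where $k_1$, $k_2$, $k_3$, and $a$ are positive constants. Then, $x = 0$ is exponentially stable. If the assumptions hold globally, then $x = 0$ is globally exponentially stable.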

For nonlinear systems, stability can also be analyzed via linearization. The following theorem relates exponential stability of the nonlinear system to the stability of its linearization using the Jacobian matrix.

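Theorem 2 (Khalil [2]). Let $x = 0$ be an equilibrium point for the nonlinear system

$$\dot{x} = f(t, x),$$

where $f: [0, \infty) \times D \to \mathbb{R}^n$ is continuously differentiable, $D = \{x \in \mathbb{R}^n : \|x\| < r\}$, and the Jacobian matrix $[\partial f / \partial x]$ is bounded and Lipschitz on $D$, uniformly in $t$. Let

$$A(t) = \left.\frac{\partial f}{\partial x}(t, x)\right|_{x = 0}.$$

Then, the origin is an exponentially stable equilibrium point for the nonlinear system if and only if it is an exponentially stable equilibrium point for the linear system $\dot{x} = A(t)\,x$.

For the autonomous system (1), the stability of $x = 0$ can therefore be determined by the eigenvalues of the constant linearization matrix.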

Definition 2. A square matrix $A$ is called a Hurwitz matrix if all its eigenvalues $\lambda_i$ satisfy $\operatorname{Re}\lambda_i < 0$.

Corollary 1. Let $x = 0$ be an equilibrium point for the autonomous nonlinear system (1), where $f$ is continuously differentiable in a neighborhood of $x = 0$. Let

$$A = \left.\frac{\partial f}{\partial x}(x)\right|_{x = 0}$$

be the linearization matrix of (1). Then, $x = 0$ is an exponentially stable equilibrium point for the nonlinear system if and only if $A$ is a Hurwitz matrix.
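As an illustration of Corollary 1, the sketch below checks the Hurwitz condition numerically in Python with NumPy. It approximates the linearization matrix by central differences, so it assumes only that the vector field is available as a callable; the damped pendulum with all physical parameters set to 1 is a hypothetical stand-in and not necessarily the system (13) used in the experiments.

```python
import numpy as np


def numerical_jacobian(f, x0, h=1e-6):
    """Central-difference approximation of the Jacobian of f at x0."""
    x0 = np.asarray(x0, dtype=float)
    n = x0.size
    J = np.zeros((n, n))
    for j in range(n):
        step = np.zeros(n)
        step[j] = h
        J[:, j] = (np.asarray(f(x0 + step)) - np.asarray(f(x0 - step))) / (2.0 * h)
    return J


def is_hurwitz(A):
    """True if every eigenvalue of A has a strictly negative real part."""
    return bool(np.all(np.linalg.eigvals(A).real < 0.0))


# Hypothetical damped pendulum with all physical parameters set to 1:
# x1' = x2,  x2' = -sin(x1) - x2.
def pendulum(x):
    return np.array([x[1], -np.sin(x[0]) - x[1]])


A = numerical_jacobian(pendulum, np.zeros(2))
print(np.round(A, 6))
print(is_hurwitz(A))  # True
```

A finite-difference Jacobian is convenient when $f$ is only available numerically; for symbolic vector fields an exact Jacobian is preferable.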

The following converse theorem presented by Peet [21] guarantees the existence of a polynomial Lyapunov function for an exponentially stable equilibrium under sufficient smoothness and boundedness conditions.

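Theorem 3 (Peet [21]). Consider the system defined by Equation (1), where $f$ satisfies the smoothness assumptions of [21]. Suppose there exist constants $k, \lambda, r > 0$ such that

$$\|x(t)\| \le k\,\|x(0)\|\,e^{-\lambda t}$$

for all $t \ge 0$ and $\|x(0)\| \le r$. Then, there exist a polynomial $V: \mathbb{R}^n \to \mathbb{R}$ and constants $\alpha, \beta, \gamma > 0$ such that

$$\alpha\|x\|^2 \le V(x) \le \beta\|x\|^2, \qquad \nabla V(x)^\top f(x) \le -\gamma\|x\|^2$$

for all $\|x\| \le r$.

3. Genetic Programming Method for Exponential Stability Certification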

In this section, we outline our proposed method, which leverages genetic programming to compute a polynomial Lyapunov function for a given dynamical system. We provide a comprehensive explanation of the underlying theoretical principles and describe the construction process of our approach.

3.1. Existence Criterion for a Polynomial Lyapunov Function

We now present a converse Lyapunov theorem, which provides the main criterion for the applicability of the proposed method.

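Theorem 4. Let $x = 0$ be an equilibrium for the nonlinear system (1), where $f$ satisfies the smoothness assumptions of Theorem 3, and let the linearization matrix $A$ of the system (1) be a Hurwitz matrix. Then, there exist $r > 0$, a polynomial $V: \mathbb{R}^n \to \mathbb{R}$, and constants $\alpha, \beta, \gamma > 0$ such that

$$\alpha\|x\|^2 \le V(x) \le \beta\|x\|^2, \qquad \nabla V(x)^\top f(x) \le -\gamma\|x\|^2$$

for all $\|x\| \le r$.

Proof of Theorem 4. By Corollary 1, the Hurwitz condition on the linearization implies that $x = 0$ is an exponentially stable equilibrium for the nonlinear system; that is, the estimate of Definition 1 holds with some constants $c, k, \lambda > 0$. Choosing any $r \in (0, c)$, the hypotheses of Theorem 3 are satisfied, so, under the assumed smoothness of $f$, there exists a polynomial Lyapunov function satisfying the required quadratic bounds and dissipation condition on the domain $\|x\| \le r$. □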
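In the simplest case, a polynomial of degree two already works locally: if $A$ is Hurwitz, the Lyapunov equation $A^\top P + P A = -Q$ with $Q$ positive definite has a unique positive definite solution $P$, and $V(x) = x^\top P x$ satisfies the bounds of Theorem 4 on a sufficiently small ball. This classical construction is not the GP method of this paper; the Python sketch below, which uses SciPy's `solve_continuous_lyapunov` and a hypothetical Hurwitz matrix, only illustrates the existence statement.

```python
import numpy as np
from scipy.linalg import solve_continuous_lyapunov

# Hypothetical Hurwitz linearization matrix (eigenvalues -0.5 +/- 0.866j).
A = np.array([[0.0, 1.0],
              [-1.0, -1.0]])
Q = np.eye(2)

# solve_continuous_lyapunov(a, q) solves a X + X a^H = q, so passing
# a = A^T and q = -Q yields A^T P + P A = -Q.
P = solve_continuous_lyapunov(A.T, -Q)

# Constants of the quadratic bounds alpha*|x|^2 <= x^T P x <= beta*|x|^2.
eigs = np.linalg.eigvalsh(P)
alpha, beta = eigs.min(), eigs.max()
print(P)
print(alpha > 0)  # True: P is positive definite
```

For this matrix, $P = \begin{pmatrix} 1.5 & 0.5 \\ 0.5 & 1 \end{pmatrix}$, so $V(x) = 1.5x_1^2 + x_1x_2 + x_2^2$; the GP search can find such quadratics as well as higher-degree polynomials valid on larger domains.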

3.2. The Lyapunov Fitness

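For the remainder of our work, we focus on dynamical systems that satisfy the conditions of Theorem 4, and our objective is to develop methods for constructing a polynomial Lyapunov function $V$ that meets the following criteria for exponential stability on a domain $\Omega \subset D$ containing the origin:

Quadratic Bounds: There exist constants $\alpha, \beta > 0$ such that

$$\alpha\|x\|^2 \le V(x) \le \beta\|x\|^2 \quad \text{for all } x \in \Omega.$$

Dissipation Condition: There exists $\gamma > 0$ such that the orbital derivative of $V$ satisfies

$$\dot{V}(x) = \nabla V(x)^\top f(x) \le -\gamma\|x\|^2 \quad \text{for all } x \in \Omega.$$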

Rather than strictly enforcing these conditions, we introduce a fitness measure that quantifies the degree of violation, and we formulate the search for a Lyapunov function as the minimax optimization problem

$$\min_{V \in \mathcal{V}} \; \max_{x \in \Omega} \; F(V, x), \qquad (2)$$

where $\mathcal{V}$ is a space of candidate functions (in our case, polynomials on $\Omega$) and $F$ is a fitness functional that penalizes deviations from the desired Lyapunov conditions.

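Definition 3. Let $\mathcal{V}$ be a chosen space of continuously differentiable functions $V: \Omega \to \mathbb{R}$, $\Omega \subset \mathbb{R}^n$. Define the Lyapunov fitness functional $F(V, x)$ as the sum of the following penalty terms:

- 1. a lower-bound penalty, which is zero where $V(x) \ge \alpha\|x\|^2$ and positive otherwise;
- 2. an upper-bound penalty, which is zero where $V(x) \le \beta\|x\|^2$ and positive otherwise;
- 3. a dissipation penalty, which is zero where $\nabla V(x)^\top f(x) \le -\gamma\|x\|^2$ and positive otherwise.

Here, $\alpha, \beta, \gamma$ are positive constants, $f$ is the vector field of the dynamical system, and the hyperbolic tangent function

$$\tanh(z) = \frac{e^{z} - e^{-z}}{e^{z} + e^{-z}}$$

serves to smooth and bound the penalty values.

Definition 4. For a candidate Lyapunov function $V$ and the vector field $f$, the Lyapunov fitness over the domain $\Omega$ is defined as

$$\mathcal{L}(V) = \int_{\Omega} F(V, x)\, dx. \qquad (3)$$

This integral measures the total violation of the Lyapunov conditions over the domain $\Omega$.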

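Definition 5. Given a probability distribution $\mathbb{P}$ on $\Omega$ and a set of sample points $x_1, \dots, x_N$ drawn independently according to $\mathbb{P}$, the empirical Lyapunov fitness is defined as

$$\hat{\mathcal{L}}_N(V) = \frac{1}{N}\sum_{i=1}^{N} F(V, x_i). \qquad (4)$$

By the law of large numbers, this discrete approximation converges almost surely to $\int_\Omega F(V, x)\, d\mathbb{P}(x)$ as $N \to \infty$; for the uniform distribution on $\Omega$, this limit equals $\mathcal{L}(V)/|\Omega|$, where $|\Omega|$ denotes the volume of $\Omega$.

It is straightforward to verify that if $V$ is a Lyapunov function satisfying the exponential stability conditions on $\Omega$, then $V$ is a global minimizer of both (3) and (4) as well as a solution to the minimax problem (2): all penalty terms vanish, so $F(V, x) = 0$ for every $x \in \Omega$, which is the smallest value that $F$ can take.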
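The fitness of Definitions 3–5 can be sketched in Python as follows. The exact form of the penalty terms is an assumption here: each term is taken to be the hyperbolic tangent of the positive part of the corresponding violation, so it is zero when the condition holds and bounded by one otherwise. The candidate `V`, its gradient `grad_V`, the vector field `f`, and the positive constants of Definition 3 are supplied by the caller; the linear system and quadratic candidate in the usage lines are hypothetical.

```python
import numpy as np


def lyapunov_penalties(V, grad_V, f, x, alpha, beta, gamma):
    """Pointwise penalty terms of Definition 3 at a single state x
    (assumed form: tanh of the positive part of each violation)."""
    x = np.asarray(x, dtype=float)
    r2 = float(x @ x)                           # |x|^2
    v = V(x)
    v_dot = float(np.dot(grad_V(x), f(x)))      # orbital derivative
    p1 = np.tanh(max(0.0, alpha * r2 - v))      # lower-bound violation
    p2 = np.tanh(max(0.0, v - beta * r2))       # upper-bound violation
    p3 = np.tanh(max(0.0, v_dot + gamma * r2))  # dissipation violation
    return p1, p2, p3


def empirical_fitness(V, grad_V, f, samples, alpha, beta, gamma):
    """Empirical Lyapunov fitness (4): mean of F(V, x_i) over the samples."""
    values = [sum(lyapunov_penalties(V, grad_V, f, x, alpha, beta, gamma))
              for x in samples]
    return float(np.mean(values))


# Hypothetical usage: x' = -x in R^2 with the candidate V(x) = |x|^2.
f = lambda x: -x
V = lambda x: float(x @ x)
grad_V = lambda x: 2.0 * x
rng = np.random.default_rng(0)
samples = rng.uniform(-1.0, 1.0, size=(500, 2))
print(empirical_fitness(V, grad_V, f, samples, alpha=0.5, beta=2.0, gamma=1.0))  # 0.0
```

Because $\dot V = -2\|x\|^2$ here, any $\gamma \le 2$ gives zero fitness, while a larger $\gamma$ produces a positive value; this is how the constants control how demanding the search is.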

3.3. Lyapunov Function Representation

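In our formulation, a candidate Lyapunov function is represented as a binary tree. Its leaves are terminal nodes drawn from the set

$$T = \{x_1, \dots, x_n, c_1, \dots, c_m\}, \qquad (5)$$

where $x_1, \dots, x_n$ denote the state variables and $c_1, \dots, c_m$ are constant coefficients. Its internal nodes are nonterminal nodes drawn from the set $N$ (6) of binary arithmetic operators.

Although the sets $T$ and $N$ can be expanded, they suffice for our purposes, as our focus is on constructing polynomial functions.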
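A minimal Python representation of such trees is sketched below. It assumes, for illustration only, that the nonterminal set consists of the binary operators `+`, `-`, and `*` (which keeps every tree a polynomial) and that a terminal holds either a state-variable index or a numeric constant; the class and field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Union

# Assumed nonterminal set: binary operators that keep every tree polynomial.
OPS = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
}


@dataclass
class Node:
    # Terminal: value is an int (state-variable index) or a float (constant).
    # Nonterminal: value is an operator symbol from OPS with two children.
    value: Union[int, float, str]
    left: Optional["Node"] = None
    right: Optional["Node"] = None

    def is_terminal(self) -> bool:
        return self.left is None and self.right is None

    def evaluate(self, x: Sequence[float]) -> float:
        if self.is_terminal():
            if isinstance(self.value, int):
                return float(x[self.value])  # state variable x_{i+1}
            return float(self.value)         # constant coefficient
        return OPS[self.value](self.left.evaluate(x), self.right.evaluate(x))

    def to_string(self) -> str:
        if self.is_terminal():
            if isinstance(self.value, int):
                return f"x{self.value + 1}"
            return repr(self.value)
        return f"({self.left.to_string()} {self.value} {self.right.to_string()})"


# V(x) = x1*x1 + 0.5*(x2*x2) as a binary tree.
tree = Node("+",
            Node("*", Node(0), Node(0)),
            Node("*", Node(0.5), Node("*", Node(1), Node(1))))
print(tree.to_string())           # ((x1 * x1) + (0.5 * (x2 * x2)))
print(tree.evaluate([1.0, 2.0]))  # 3.0
```

Distinguishing variables from constants by Python type keeps the sketch short; a real implementation would more likely use an explicit node-kind field.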

3.4. Evolution Strategy

Our GP algorithm employs the $(\mu + \lambda)$ evolution strategy ($(\mu + \lambda)$-ES). Here, $\mu$ denotes the number of individuals selected for the next generation, and $\lambda$ is the number of offspring generated at each iteration. In the $(\mu + \lambda)$-ES, both parents and offspring are retained in a combined selection pool of size $\mu + \lambda$. Unlike the alternative $(\mu, \lambda)$-ES, in which only offspring are eligible for selection, this elitist strategy guarantees that the best individual found so far is preserved across generations. Under suitable conditions, such elitist selection ensures the global convergence of the evolution strategy. For further theoretical details and an in-depth explanation of evolution strategies, we refer the reader to [22].

4. Step-by-Step Schema of the Algorithm

Our method implements the classic flow of a genetic algorithm. It begins with an Initialization phase that creates the initial population of individuals, followed by an Initial Fitness Evaluation that prepares this population for evolution. Next, the Evolutionary Cycle, the main body of the algorithm, allows candidates to evolve toward a solution. Then, a New Generation is formed by selecting the best individuals from the merged pool of parents and newly generated, evaluated offspring. Finally, a Termination Check determines whether a solution has been found, the generation budget has been exhausted, or the search has stalled in a local minimum.

- Step 1. Initialization.

Generate an initial population $P_0 = \{V_1, \dots, V_M\}$, where $M$ is the size of the initial population and each individual $V_i$ is a candidate solution represented as a binary tree. Each tree is constructed using two sets: the set of terminal nodes $T$ (5) and the set of nonterminal nodes $N$ (6).

The tree is constructed recursively. At a given depth $d$, the node type (terminal or nonterminal) is selected according to a probability that decreases with depth. Specifically, the probability $p_N(d)$ of choosing a nonterminal node at depth $d$ is a decreasing function of $d$ governed by a predetermined decay parameter. Consequently, the probability of selecting a terminal node at depth $d$ is

$$p_T(d) = 1 - p_N(d).$$

When a nonterminal node is selected, a node from the set $N$ is placed, and its children are recursively generated (for binary trees, two children), with the depth incremented by one at each recursive call. Conversely, if a terminal node is selected, a node from $T$ is placed, ending that branch of the recursion.

This strategy ensures that as the depth increases, the likelihood of selecting a terminal node increases, thereby regulating the overall tree size and preventing uncontrolled growth. Each individual tree in the initial population is constructed independently according to this scheme, resulting in a diverse set of candidate solutions for the algorithm.
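The recursive initialization can be sketched in Python as follows. Two choices are assumptions not taken from the text: the nonterminal probability is modeled as $p_N(d) = \rho^d$ with decay parameter $\rho \in (0, 1)$, and a hard depth cap `max_depth` is added as a safeguard. Trees are encoded as nested tuples, and the operator set, the 50/50 split between variables and constants, and the constant range are hypothetical.

```python
import random

OPERATORS = ("+", "-", "*")  # assumed nonterminal set N


def random_tree(n_vars, rho=0.6, depth=0, max_depth=8, rng=random):
    """Grow a random expression tree.

    At depth d a nonterminal is chosen with probability rho**d (assumed
    decay law), otherwise a terminal; max_depth forces a terminal as a guard.
    Nonterminals are (op, left, right); terminals are ("x", i) or ("c", value).
    """
    if depth < max_depth and rng.random() < rho ** depth:
        op = rng.choice(OPERATORS)
        left = random_tree(n_vars, rho, depth + 1, max_depth, rng)
        right = random_tree(n_vars, rho, depth + 1, max_depth, rng)
        return (op, left, right)
    # Terminal: a state variable x_i or a constant coefficient, 50/50.
    if rng.random() < 0.5:
        return ("x", rng.randrange(n_vars))
    return ("c", round(rng.uniform(-1.0, 1.0), 2))


def tree_depth(tree):
    """Depth of a tuple-encoded tree (a single terminal has depth 0)."""
    if tree[0] in OPERATORS:
        return 1 + max(tree_depth(tree[1]), tree_depth(tree[2]))
    return 0


rng = random.Random(42)
population = [random_tree(n_vars=2, rng=rng) for _ in range(20)]
print(max(tree_depth(t) for t in population))
```

With $\rho^0 = 1$ the root is always an operator, and each tree is generated independently, which gives the diverse initial population described above.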

- Step 2. Initial Fitness Evaluation.

The numerical evaluation of the fitness proceeds as follows:

Domain Sampling: Let $\Omega \subset \mathbb{R}^n$ be a predefined domain of interest. To calculate the fitness function, we generate a set of test points $X = \{x_1, \dots, x_N\}$ by sampling independently from the uniform distribution over $\Omega$. For sufficiently large $N$, these points cover the domain densely with high probability and serve as the basis for the numerical approximation of the fitness values.

Fitness Computation: For each individual $V_i$ in the population $P_0$, we evaluate its fitness value on the set of test points $X$ using the empirical Lyapunov fitness (4), which quantifies the degree to which $V_i$ violates the exponential stability conditions on the domain $\Omega$.

The computed fitness values determine the likelihood of each individual being selected for reproduction or mutation in subsequent generations. Individuals with lower fitness values (i.e., those that better satisfy the exponential stability conditions) have a greater chance of producing offspring, which guides the population toward better solutions over successive generations.
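Step 2 can be sketched as follows, assuming a box-shaped domain $\Omega = [a_1, b_1] \times \dots \times [a_n, b_n]$ and NumPy's random generator. The same test points are reused for every individual so that fitness values are comparable; the function names and the toy fitness in the usage lines (which only penalizes negative values of $V$) are hypothetical placeholders for the empirical Lyapunov fitness (4).

```python
import numpy as np


def sample_domain(lower, upper, n_points, seed=None):
    """Draw n_points i.i.d. from the uniform distribution on the box
    [lower_1, upper_1] x ... x [lower_n, upper_n] (assumed box-shaped domain)."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    return rng.uniform(lower, upper, size=(n_points, lower.size))


def evaluate_population(population, fitness, samples):
    """Return the fitness of every individual on the same set of test points."""
    return [fitness(individual, samples) for individual in population]


X = sample_domain([-1.0, -1.0], [1.0, 1.0], n_points=1000, seed=0)

# Toy fitness for illustration only: mean penalty where V(x) < 0.
toy_fitness = lambda V, pts: float(np.mean([np.tanh(max(0.0, -V(p))) for p in pts]))
candidates = [lambda p: p @ p, lambda p: p[0] * p[1]]
print(evaluate_population(candidates, toy_fitness, X))
```

The positive definite candidate $x_1^2 + x_2^2$ scores 0, while the indefinite $x_1 x_2$ is penalized on roughly half of the samples.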

- Step 3. Evolutionary Cycle.

The evolutionary cycle simulates Darwinian natural selection by iteratively applying selection, crossover, and mutation to evolve the population toward improved solutions. These genetic operations balance exploration (discovering new regions of the solution space) and exploitation (refining high-quality candidates).

The selection operator $S(P, \Phi)$ takes as input the set $P$ of individuals in the current generation and the set $\Phi$ of their corresponding fitness values, and returns a parent individual. We employ tournament selection [23] in our implementation. In each tournament, a fixed number $k$ of individuals is randomly sampled from the population, and the individual with the lowest fitness is selected for reproduction. This approach ensures that high-quality candidates have a higher chance of producing offspring while maintaining sufficient diversity to prevent premature convergence.
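A minimal tournament selection sketch in Python follows; it assumes that the $k$ contestants are drawn without replacement and that lower fitness is better, as in the text. The function name and the example labels are illustrative.

```python
import random


def tournament_select(population, fitness, k=3, rng=random):
    """Tournament selection: sample k distinct individuals uniformly at random
    and return the one with the lowest fitness (lower is better here)."""
    contenders = rng.sample(range(len(population)), k)
    best = min(contenders, key=lambda i: fitness[i])
    return population[best]


population = ["V1", "V2", "V3", "V4", "V5"]
fitness = [0.9, 0.1, 0.5, 0.7, 0.3]
rng = random.Random(1)
print(tournament_select(population, fitness, k=5, rng=rng))  # V2
```

The tournament size $k$ tunes selection pressure: $k = 1$ is uniform random selection, and $k$ equal to the population size always returns the current best.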

The crossover operator $C(V_a, V_b)$ acts on two parent individuals $V_a$ and $V_b$ chosen via selection, with a predefined crossover probability $p_c$. With probability $p_c$, a random node is selected in the tree of each parent, $V_a$ and $V_b$. The corresponding subtrees are swapped, generating two offspring that inherit genetic material from both parents and introduce new variations. If crossover is not performed (with probability $1 - p_c$), the parent individuals are passed forward unchanged.

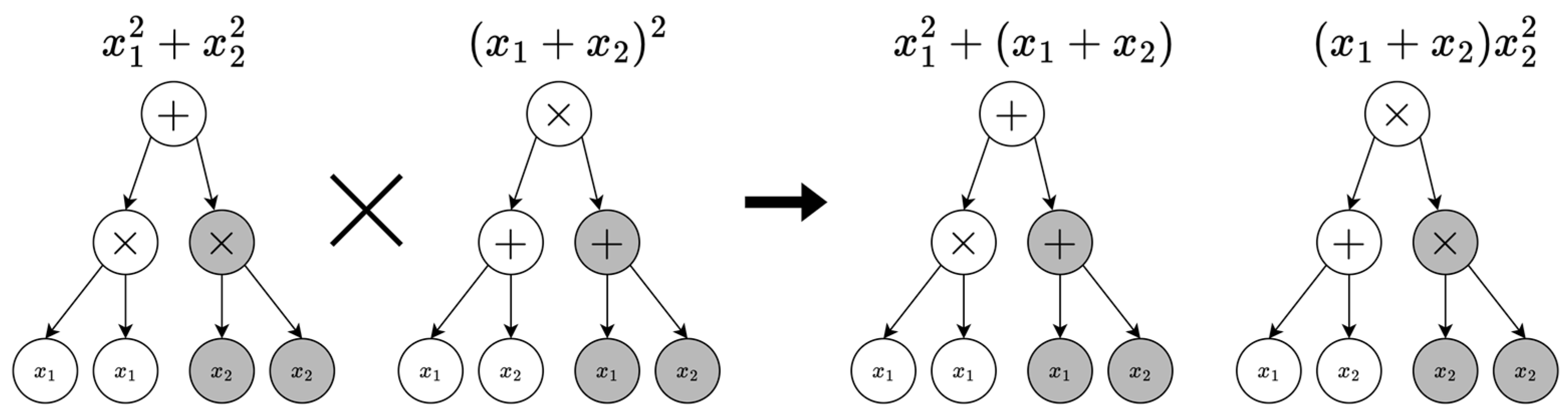

Figure 1 illustrates the crossover operation, where shaded nodes are selected to be swapped between parents.
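The subtree crossover described above can be sketched in Python as follows. Trees are encoded as nested tuples, `(op, left, right)` for nonterminals and `(tag, value)` for terminals; this encoding, the uniform choice of crossover points over all nodes, and the function names are assumptions made for illustration.

```python
import random


def node_paths(tree, path=()):
    """List the paths (tuples of child indices 1 or 2) to every node."""
    paths = [path]
    if len(tree) == 3:  # nonterminal: (op, left, right)
        paths += node_paths(tree[1], path + (1,))
        paths += node_paths(tree[2], path + (2,))
    return paths


def get_subtree(tree, path):
    for i in path:
        tree = tree[i]
    return tree


def replace_subtree(tree, path, new):
    """Return a copy of tree with the subtree at path replaced by new."""
    if not path:
        return new
    parts = list(tree)
    parts[path[0]] = replace_subtree(tree[path[0]], path[1:], new)
    return tuple(parts)


def subtree_crossover(parent_a, parent_b, p_c, rng=random):
    """With probability p_c, swap randomly chosen subtrees of the two parents;
    otherwise return the parents unchanged."""
    if rng.random() >= p_c:
        return parent_a, parent_b
    pa = rng.choice(node_paths(parent_a))
    pb = rng.choice(node_paths(parent_b))
    sa, sb = get_subtree(parent_a, pa), get_subtree(parent_b, pb)
    return replace_subtree(parent_a, pa, sb), replace_subtree(parent_b, pb, sa)


a = ("+", ("x", 0), ("*", ("x", 1), ("x", 1)))  # x1 + x2*x2
b = ("*", ("c", 2.0), ("x", 0))                 # 2.0*x1
child_a, child_b = subtree_crossover(a, b, p_c=1.0, rng=random.Random(3))
print(child_a)
print(child_b)
```

Because the tuples are immutable, the parents are never modified in place, so they remain available for the survivor selection of Step 4.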

The mutation operator $M(V)$ acts on an individual $V$ (either an offspring of crossover or a direct parent copy), with mutation probability $p_m$. With probability $p_m$, a random node in the tree representation of $V$ is replaced with a newly generated subtree constructed using the recursive initialization procedure. If no mutation occurs (with probability $1 - p_m$), then $V$ is retained in the offspring pool if it results from crossover; however, if $V$ is a direct copy of a parent, it is discarded to avoid stagnation and promote population diversity.

Figure 2 illustrates the mutation operation, where the shaded nodes of the individual are replaced by a randomly generated subtree.
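The mutation step, including the rule that an unmutated direct parent copy is discarded, might look as follows in Python. The nested-tuple encoding, the assumed operator set, and the decay law `rho ** depth` used to grow replacement subtrees are illustrative assumptions rather than the exact implementation.

```python
import random

OPERATORS = ("+", "-", "*")  # assumed nonterminal set


def grow(n_vars, rng, rho=0.5, depth=0, max_depth=4):
    """Small random subtree generator (assumed decay law rho**depth)."""
    if depth < max_depth and rng.random() < rho ** depth:
        return (rng.choice(OPERATORS),
                grow(n_vars, rng, rho, depth + 1, max_depth),
                grow(n_vars, rng, rho, depth + 1, max_depth))
    if rng.random() < 0.5:
        return ("x", rng.randrange(n_vars))
    return ("c", round(rng.uniform(-1.0, 1.0), 2))


def node_paths(tree, path=()):
    """Paths (tuples of child indices) to every node of a tuple-encoded tree."""
    paths = [path]
    if len(tree) == 3:
        paths += node_paths(tree[1], path + (1,)) + node_paths(tree[2], path + (2,))
    return paths


def replace_subtree(tree, path, new):
    if not path:
        return new
    parts = list(tree)
    parts[path[0]] = replace_subtree(tree[path[0]], path[1:], new)
    return tuple(parts)


def mutate(individual, from_crossover, p_m, n_vars, rng=random):
    """Subtree mutation with the keep/discard rule described in the text.

    Returns the mutated tree, the unchanged tree (if it came from crossover),
    or None (an unmutated direct parent copy is dropped from the offspring pool).
    """
    if rng.random() < p_m:
        path = rng.choice(node_paths(individual))
        return replace_subtree(individual, path, grow(n_vars, rng))
    return individual if from_crossover else None


parent = ("+", ("x", 0), ("c", 0.5))
print(mutate(parent, from_crossover=False, p_m=1.0, n_vars=2, rng=random.Random(7)))
```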

These operations are repeated until the offspring pool reaches the desired size $\lambda$. Once the offspring pool is fully populated, the fitness of each offspring is evaluated using the empirical Lyapunov fitness (4).

- Step 4. Forming a New Generation.

Following the evolutionary cycle, we merge the current generation with the offspring pool, forming a combined pool of size $\mu + \lambda$.

All individuals in this pool are ranked by fitness, and the top $\mu$ (i.e., those with the lowest fitness values) are selected to form the next generation. This elitist strategy ensures the survival of the best-performing individuals from the previous generation while allowing new variations to improve diversity. Because the best fitness value in the population can never increase from one generation to the next, this balance also stabilizes the evolutionary process.
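The elitist survivor selection of Step 4, that is, the $(\mu + \lambda)$ rule of Section 3.4, reduces to a sort of the merged pool. The Python sketch below is a hypothetical helper that assumes lower fitness is better; ties are broken by pool order because Python's sort is stable.

```python
def mu_plus_lambda(parents, parent_fitness, offspring, offspring_fitness, mu):
    """(mu + lambda) survivor selection: merge parents and offspring and keep
    the mu individuals with the lowest fitness (elitist)."""
    pool = list(zip(parents + offspring, parent_fitness + offspring_fitness))
    pool.sort(key=lambda pair: pair[1])  # stable sort: ties keep pool order
    survivors = pool[:mu]
    return [ind for ind, _ in survivors], [fit for _, fit in survivors]


parents, pf = ["P1", "P2", "P3"], [0.4, 0.2, 0.9]
offspring, of = ["O1", "O2", "O3", "O4"], [0.3, 0.05, 1.2, 0.2]
new_gen, new_fit = mu_plus_lambda(parents, pf, offspring, of, mu=3)
print(new_gen)  # ['O2', 'P2', 'O4']
```

Since parents compete with offspring, the best parent fitness (here 0.2) can only be matched or improved, which is the elitism property guaranteed by the $(\mu + \lambda)$-ES.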

- Step 5. Termination Check.

The algorithm continues to evolve until one of the following stopping criteria is met:

Maximum Generations Reached: The algorithm runs for a predefined number of generations $G_{\max}$, after which it terminates.

Early Stopping: An individual achieves the predefined target fitness value of 0.

No Improvement: The best fitness value remains unchanged for $G_{\text{stall}}$ consecutive generations; the algorithm is then considered converged, and execution stops.

These termination conditions ensure computational efficiency by preventing unnecessary iterations while still allowing the search to reach a solution.
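The three stopping criteria can be combined into a single check, sketched below in Python under the assumption that the best fitness of each generation is recorded in a list. `max_generations` and `patience` are hypothetical names for the predefined generation budget and the number of consecutive generations without improvement.

```python
def should_stop(best_history, max_generations, patience, target=0.0):
    """Return the reason for stopping, or None to continue.

    best_history[g] is the best fitness in generation g (lower is better).
    """
    if best_history and best_history[-1] <= target:
        return "target fitness reached"
    if len(best_history) >= max_generations:
        return "maximum generations reached"
    if len(best_history) > patience and best_history[-1] >= best_history[-1 - patience]:
        return "no improvement"
    return None


print(should_stop([0.5, 0.4, 0.4, 0.4], max_generations=100, patience=2))  # no improvement
```

With elitist selection the recorded best fitness is non-increasing, so comparing the latest value with the one `patience` generations earlier is enough to detect a plateau.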

5. Experiments

In this section, we validate the proposed algorithm by constructing polynomial Lyapunov functions for several nonlinear dynamical systems.

We implemented an application for the numerical experiments. The parameters of the workstation and software environment are as follows:

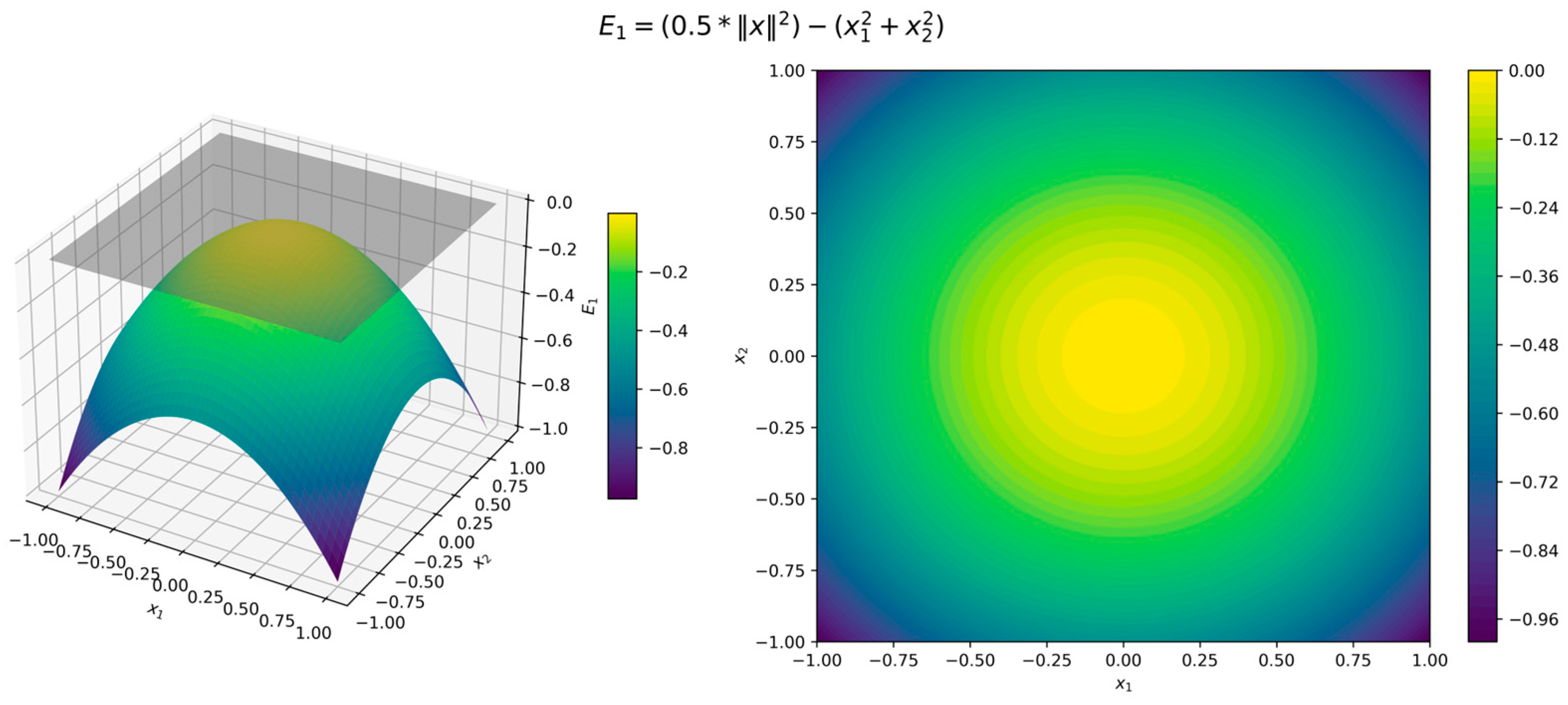

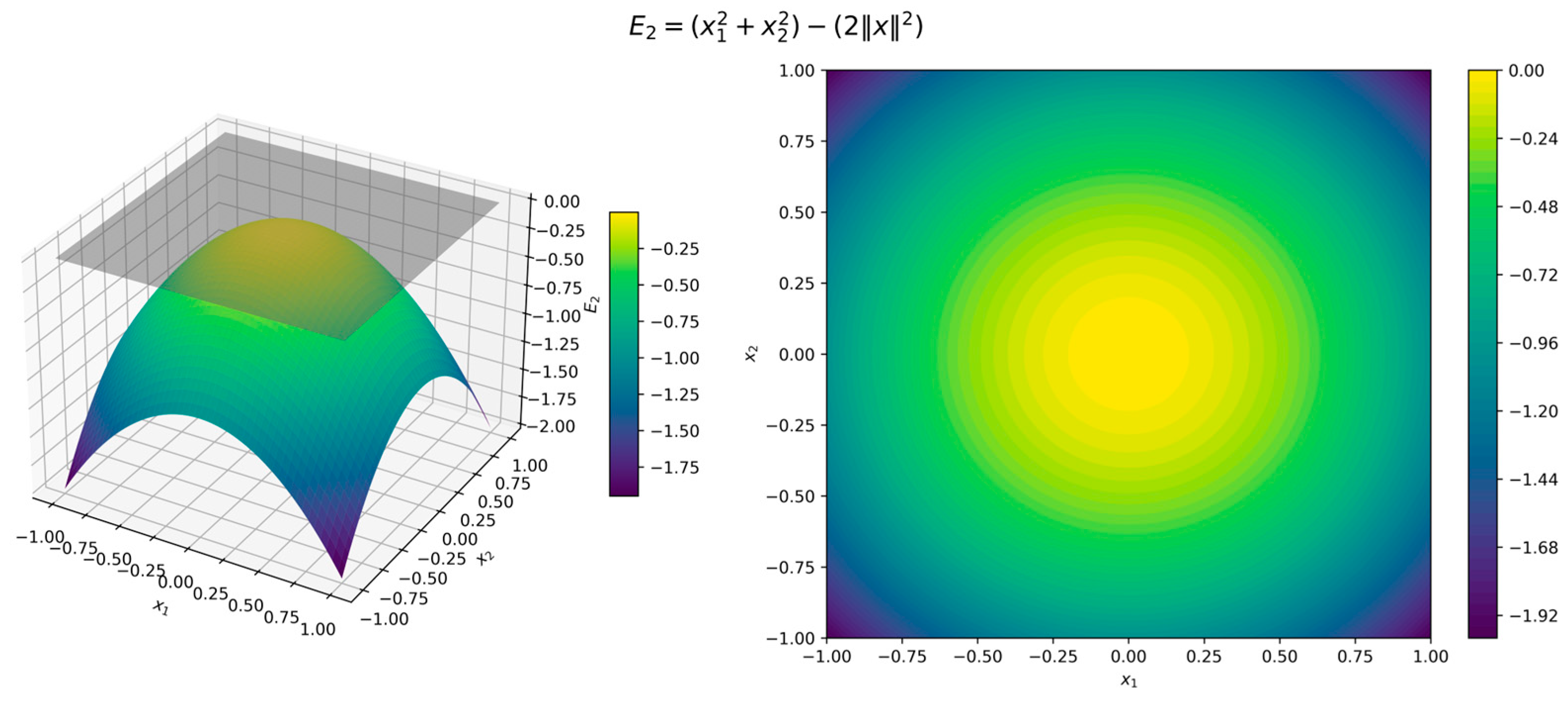

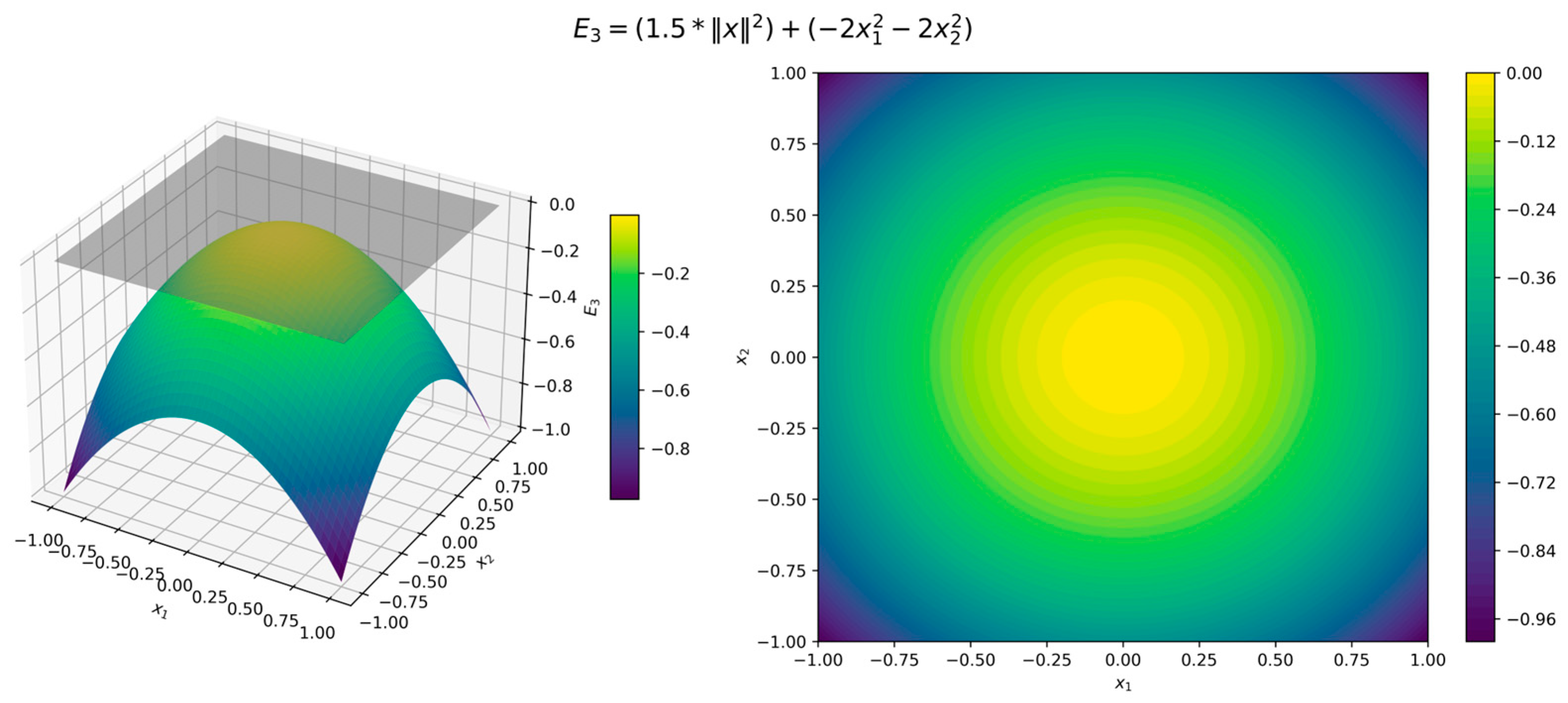

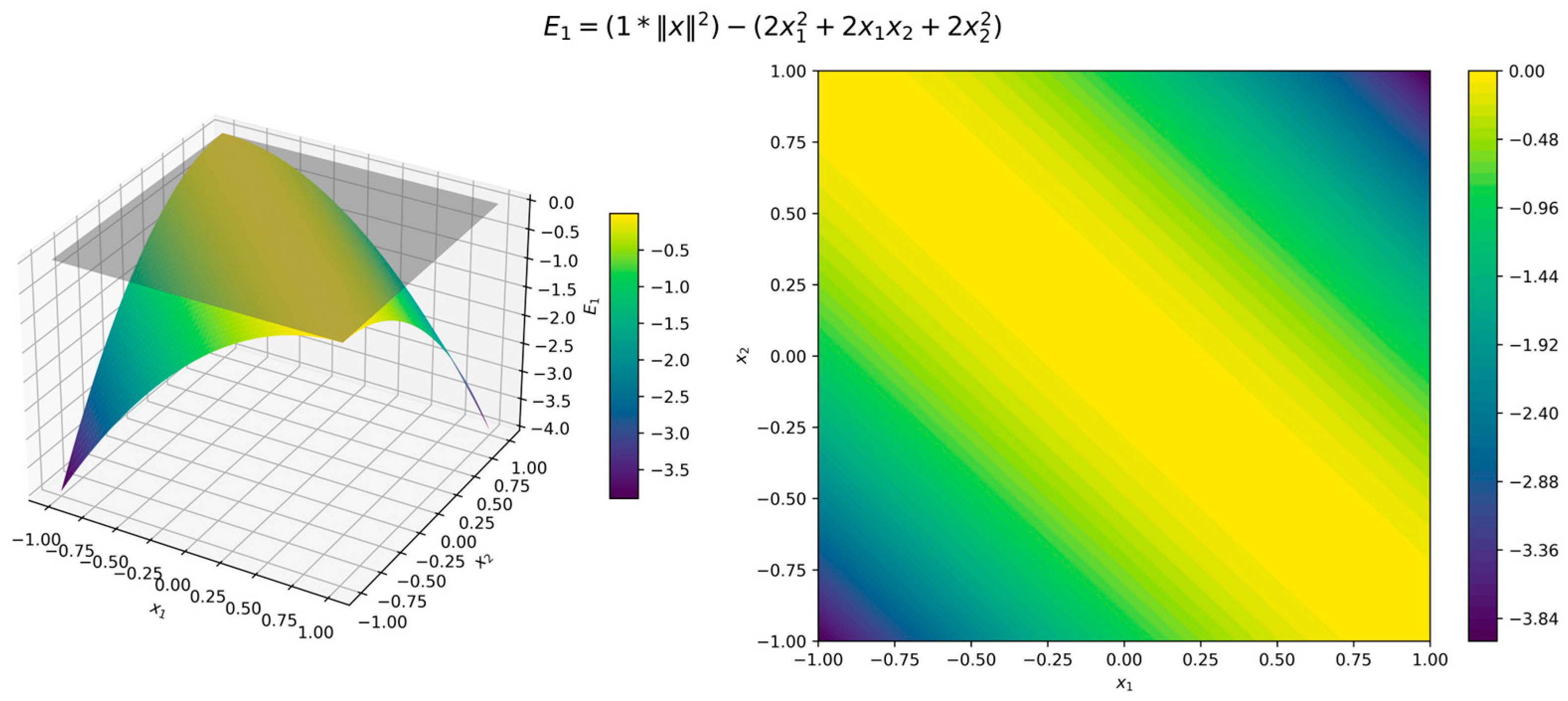

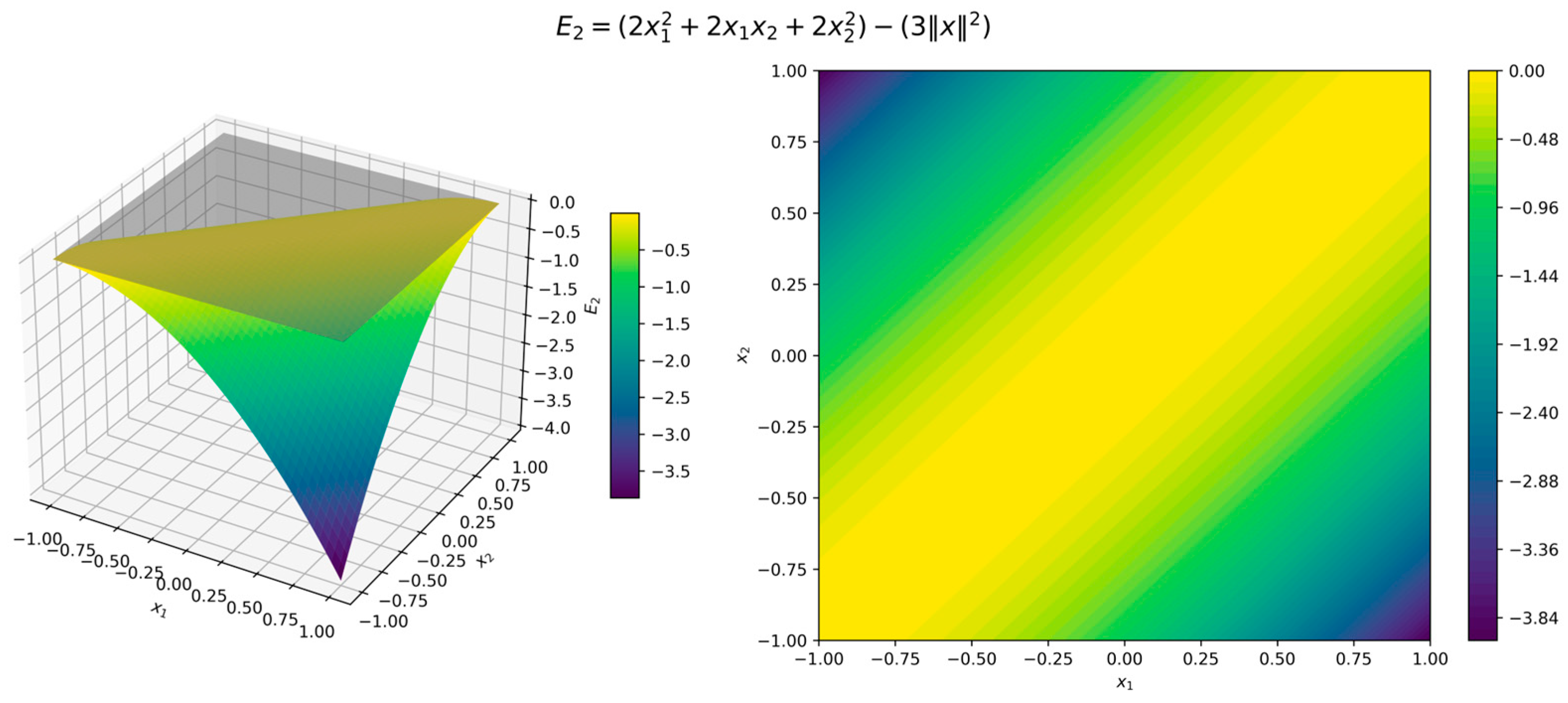

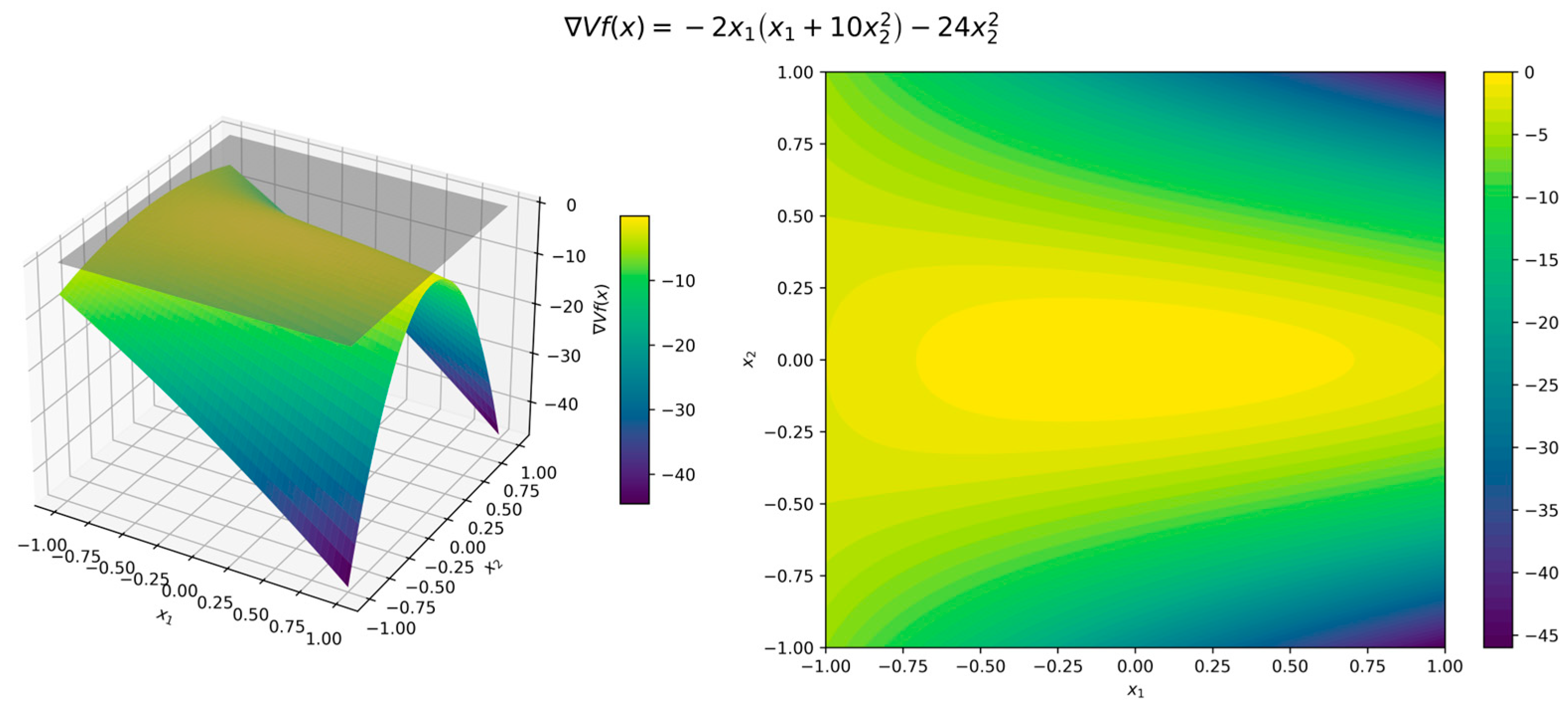

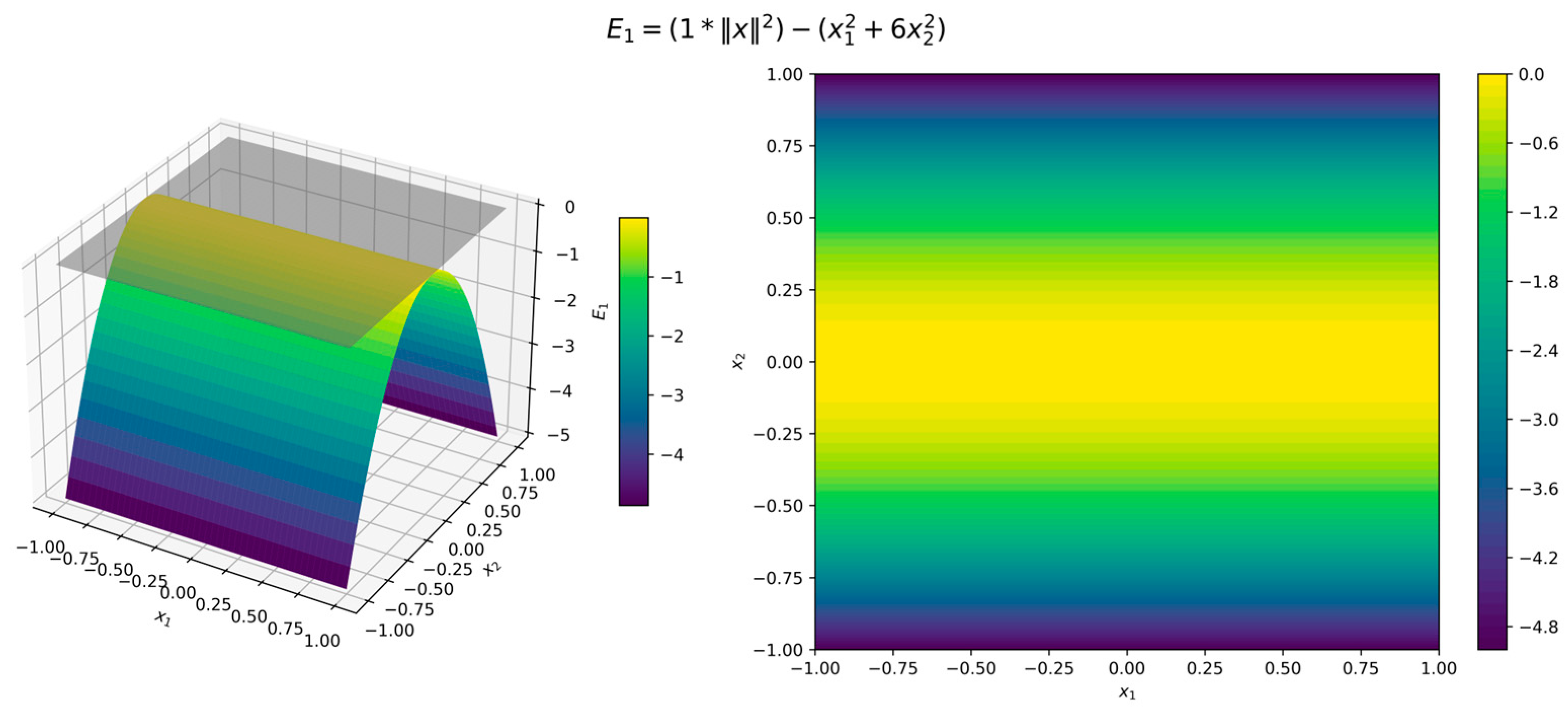

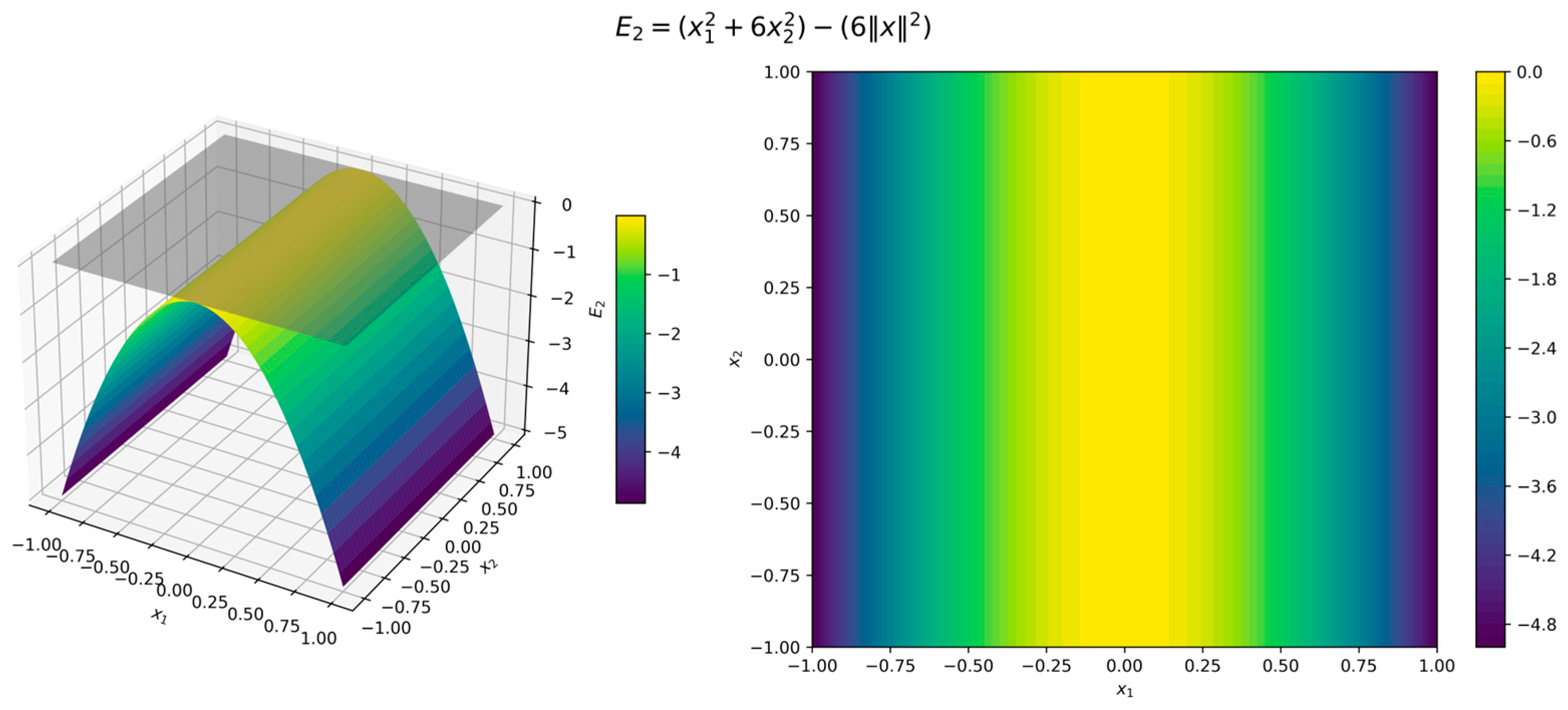

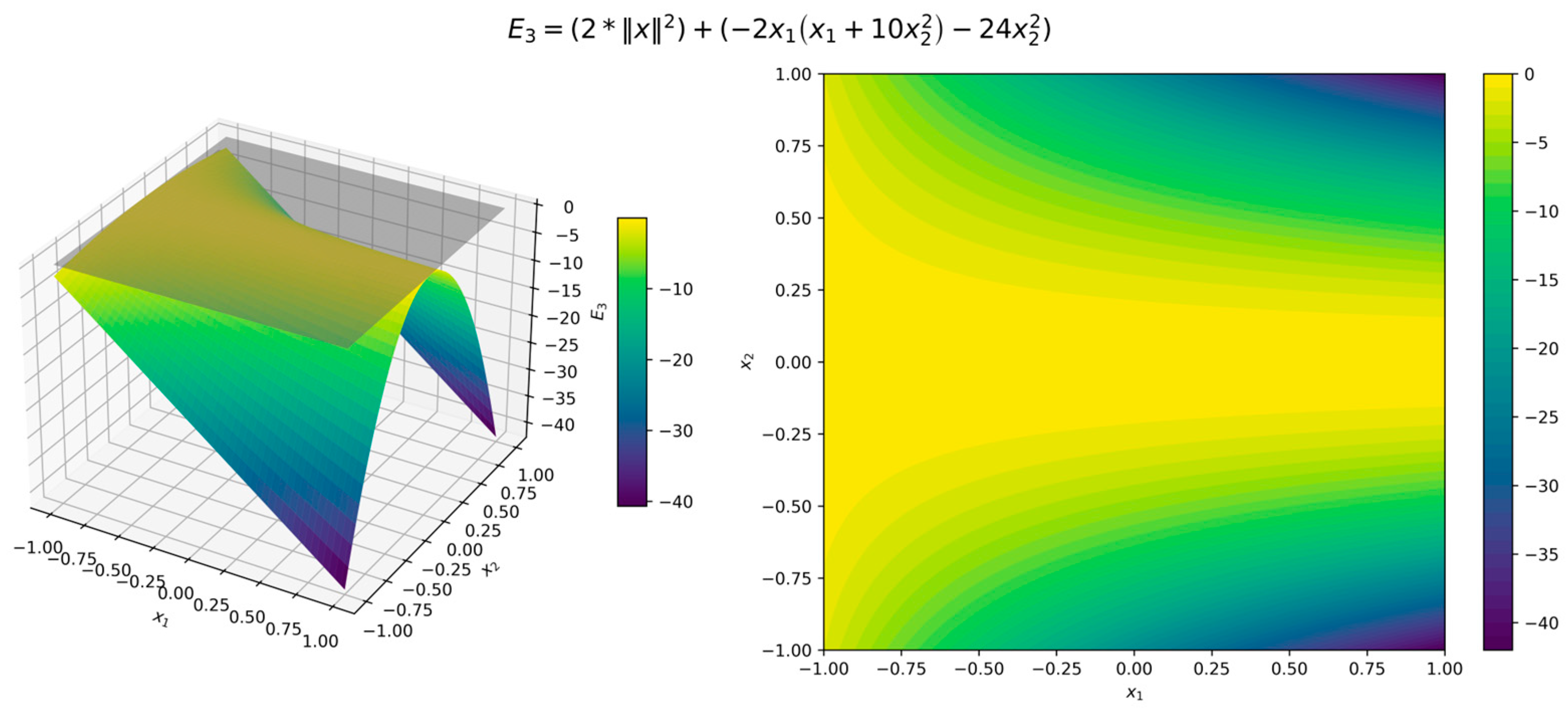

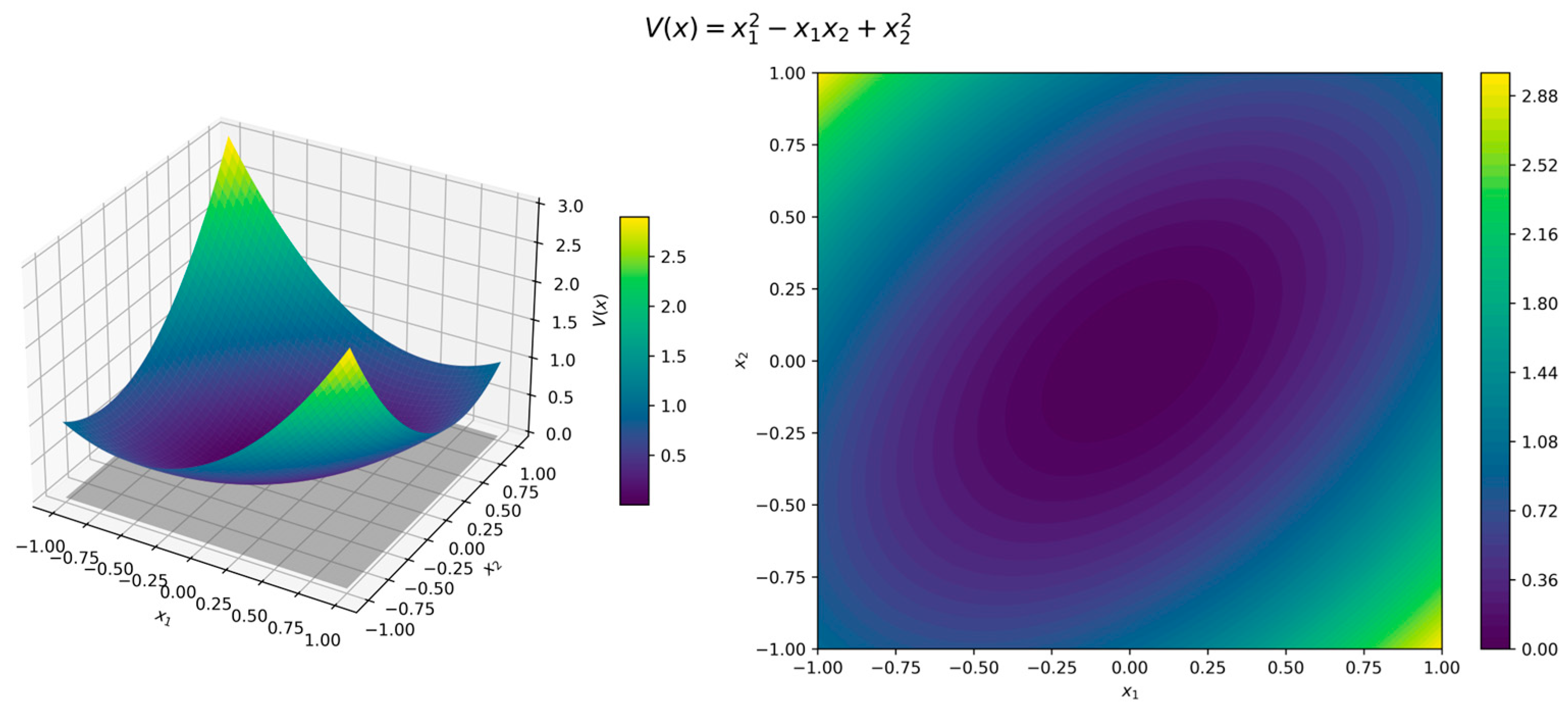

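For visualization purposes, we define three error functions:

$$E_1(x) = \alpha\|x\|^2 - V(x), \qquad (7)$$

$$E_2(x) = V(x) - \beta\|x\|^2, \qquad (8)$$

$$E_3(x) = \nabla V(x)^\top f(x) + \gamma\|x\|^2, \qquad (9)$$

which correspond to the lower-bound, upper-bound, and dissipation penalties, respectively. Each is expected to be non-positive on the chosen domain, which means that the exponential stability conditions are met and the minimax problem (2) attains its minimum value.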
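The error functions can be evaluated on a grid with NumPy, as in the Python sketch below, assuming they are the signed violations $E_1 = \alpha\|x\|^2 - V$, $E_2 = V - \beta\|x\|^2$, and $E_3 = \dot V + \gamma\|x\|^2$, consistent with their expected non-positivity. The system $\dot x = -x$, the candidate $V(x) = x_1^2 + x_2^2$, and the constants are hypothetical and not the values behind the figures.

```python
import numpy as np

# Hypothetical example: x' = -x in R^2 with candidate V(x) = x1^2 + x2^2.
alpha, beta, gamma = 0.5, 2.0, 1.0
x1, x2 = np.meshgrid(np.linspace(-1.0, 1.0, 101), np.linspace(-1.0, 1.0, 101))
r2 = x1**2 + x2**2
V = x1**2 + x2**2
f1, f2 = -x1, -x2
V_dot = 2.0 * x1 * f1 + 2.0 * x2 * f2  # orbital derivative grad(V) . f

E1 = alpha * r2 - V      # lower-bound error, should be <= 0
E2 = V - beta * r2       # upper-bound error, should be <= 0
E3 = V_dot + gamma * r2  # dissipation error, should be <= 0

for name, E in (("E1", E1), ("E2", E2), ("E3", E3)):
    print(name, "max:", E.max())
```

The grid arrays have the shape expected by contour-plotting routines, so they can be passed directly to a plotting library to produce surface and contour plots like those in the figures.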

Example 1: Simple Two-Dimensional Linear System

This system (10) describes two independent, exponentially stable modes, in which each state variable decays exponentially to the origin.

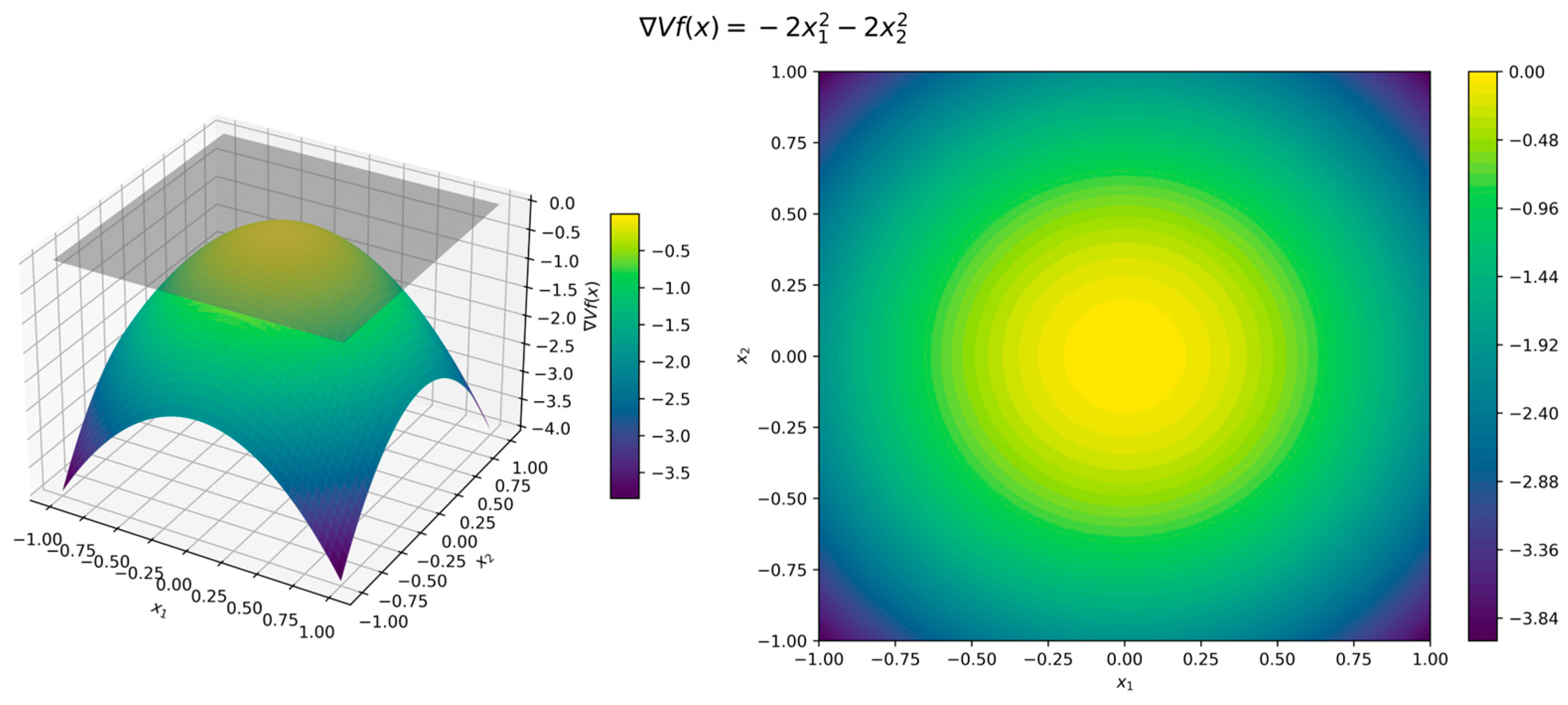

After two iterations, the algorithm identified the quadratic function (11), which is commonly used as an initial guess for a Lyapunov function. It is straightforward to verify that (11) is a valid Lyapunov function for this system; its orbital derivative is given by (12). Thus, the exponential stability conditions are satisfied on any neighborhood of the origin with suitable constants $\alpha$, $\beta$, and $\gamma$.

The surface and contour plots of the Lyapunov function (11) in Figure 3 illustrate its quadratic form, with level sets forming concentric circles centered at the origin, while the plot of the orbital derivative (12) in Figure 4 confirms that it is strictly negative away from the origin. Although the error functions (7)–(9) vanish identically for particular choices of the constants, we used different illustrative values of $\alpha$, $\beta$, and $\gamma$; Figure 5, Figure 6 and Figure 7 present the surface and contour plots of the resulting errors.

Example 2: The Damped Pendulum

This is a classic example of a nonlinear dynamical system. The system (13) satisfies the conditions of Theorem 4: its linearization matrix at the equilibrium point $x = 0$ is Hurwitz, which confirms the exponential stability of the origin.

In the ninth generation, the algorithm identified the quadratic Lyapunov function (14), whose orbital derivative is given by (15). This Lyapunov function guarantees the exponential stability of the damped pendulum system (13) on the chosen domain with the corresponding constants $\alpha$, $\beta$, and $\gamma$.

Figure 8 presents the surface and contour plots of the Lyapunov function (14), highlighting its quadratic form, with level sets forming concentric ellipses centered at the origin. The corresponding orbital derivative (15), shown in Figure 9, is strictly negative away from the origin. Additionally, Figure 10, Figure 11 and Figure 12 present the surface and contour plots of the errors $E_1$, $E_2$, and $E_3$, visually confirming that the exponential stability conditions of the origin are met.

Example 3: A Two-Dimensional Polynomial System

This example, presented in [11] in the context of compositional Lyapunov functions, examines a two-dimensional polynomial system (16). The system meets the criteria of Theorem 4: its linearization matrix at the equilibrium point $x = 0$ is Hurwitz, which confirms the exponential stability of the origin. The algorithm identified the Lyapunov function (17) after 16 iterations, with orbital derivative (18). The Lyapunov function (17) ensures that the polynomial system (16) is exponentially stable on the chosen domain with the corresponding constants $\alpha$, $\beta$, and $\gamma$.

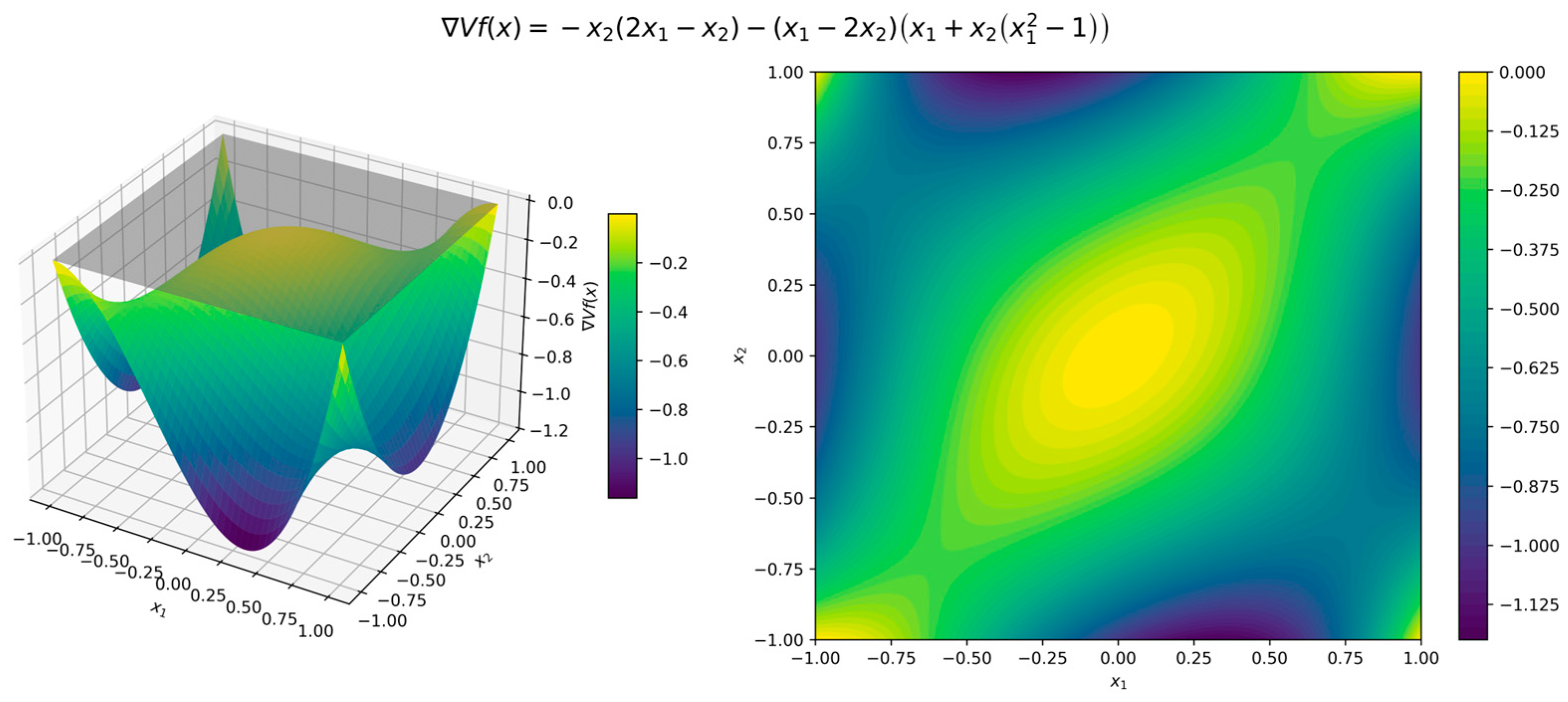

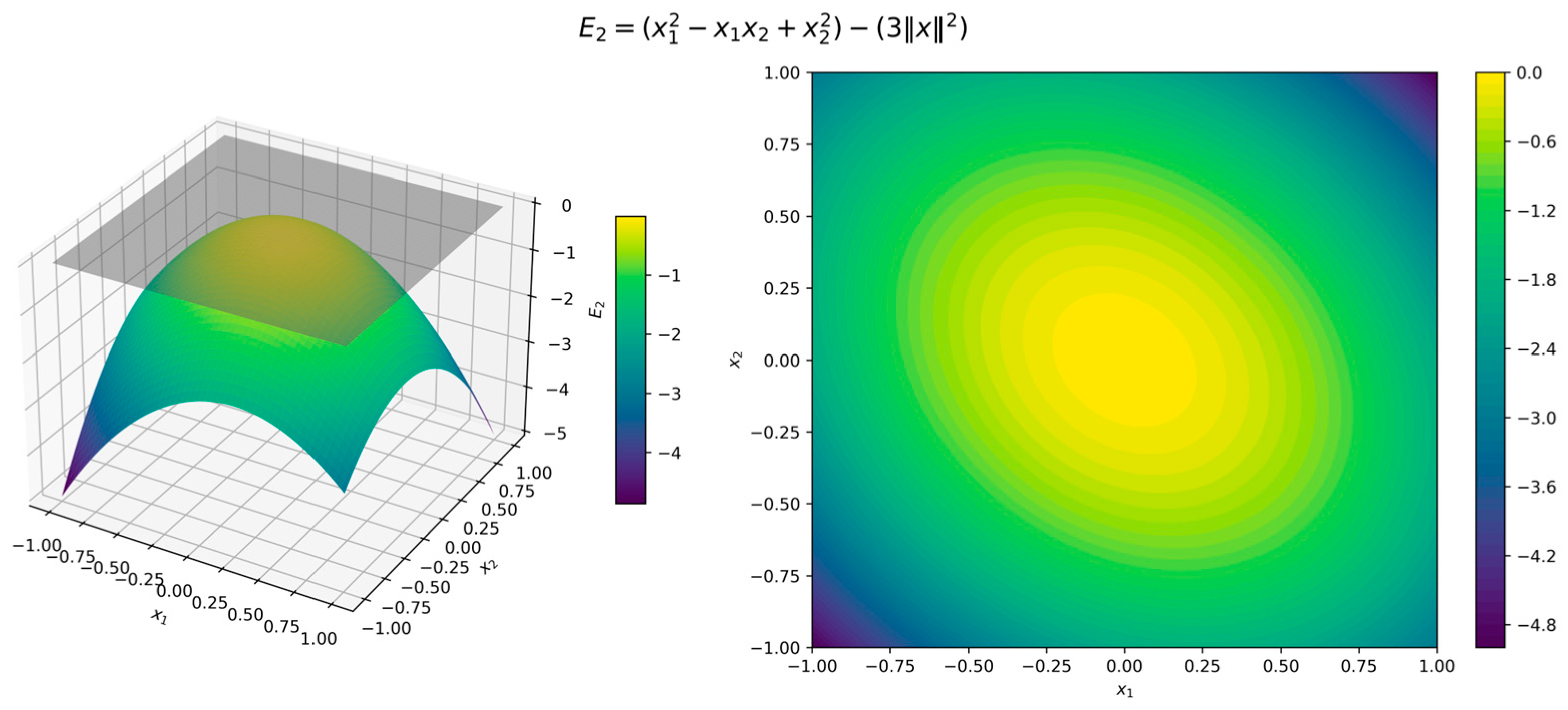

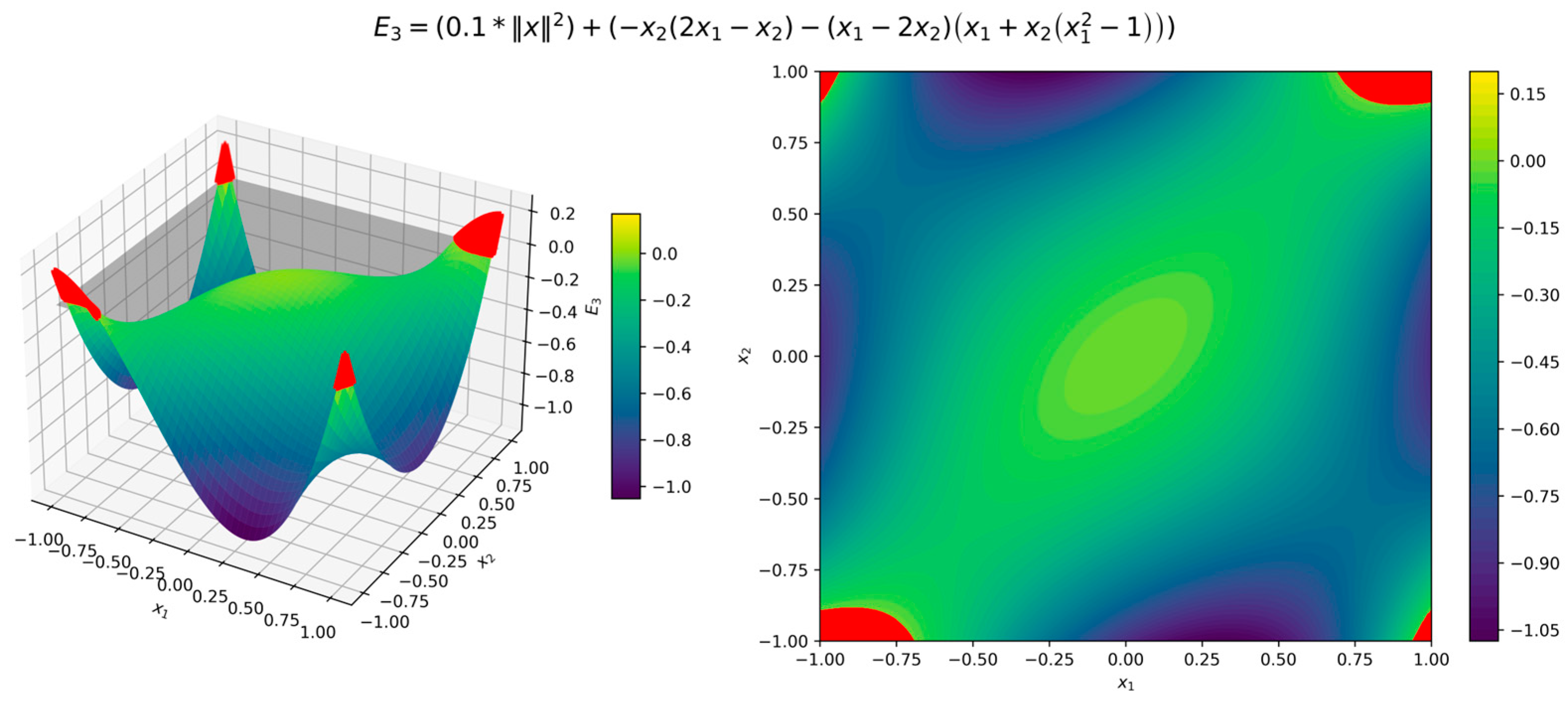

Figure 13 shows the surface and contour plots of function (17), visually confirming its properties. Figure 14 shows that the orbital derivative is negative over the considered domain. Figure 15, Figure 16 and Figure 17 display the surface and contour plots of the error functions $E_1$, $E_2$, and $E_3$, providing additional insight into how well (17) captures the stability characteristics of the polynomial system (16).

Example 4: The Van der Pol Oscillator

This system (19) provides another classic example of a nonlinear dynamical system: the Van der Pol equation in reverse time, that is, with $t$ replaced by $-t$. It fulfills the conditions of Theorem 4, since its linearization matrix at the equilibrium point $x = 0$ is Hurwitz, which verifies the exponential stability of the origin.
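For Example 4, the conditions of Theorem 4 can be checked symbolically with SymPy. The Python sketch below assumes the standard reversed-time form $\dot x_1 = -x_2$, $\dot x_2 = x_1 + (x_1^2 - 1)x_2$ with damping parameter 1 (the parameter value used in (19) may differ). The quadratic candidate is the classical one obtained from the Lyapunov equation for the linearization, used purely for illustration; it is not the function (20) found by GP.

```python
import sympy as sp

x1, x2 = sp.symbols("x1 x2", real=True)
x = sp.Matrix([x1, x2])
# Van der Pol in reverse time with the damping parameter set to 1 (assumed).
f = sp.Matrix([-x2, x1 + (x1**2 - 1) * x2])

# Linearization matrix at the origin and the real parts of its eigenvalues.
A = f.jacobian(x).subs({x1: 0, x2: 0})
eigs = list(A.eigenvals())
print(A, [sp.re(e) for e in eigs])

# Hypothetical quadratic candidate (not the function (20) found by GP).
V = sp.Rational(3, 2) * x1**2 - x1 * x2 + x2**2
V_dot = sp.expand((sp.Matrix([V]).jacobian(x) * f)[0])
print(V_dot)
```

The result $\dot V = -(x_1^2 + x_2^2) - x_1^3 x_2 + 2x_1^2 x_2^2$ is negative near the origin but not globally, because the quartic terms dominate far from it; this is why every certificate in this section is tied to a bounded domain.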

In the twelfth generation, the algorithm identified the Lyapunov function (20), with orbital derivative (21). This Lyapunov function guarantees the exponential stability of the Van der Pol system (19) on the chosen domain with the corresponding constants $\alpha$, $\beta$, and $\gamma$.

Figure 18 shows the surface and contour plots of function (20), visually confirming its properties. Figure 19 depicts the orbital derivative, revealing the intricate nonlinear dynamics of the system. Figure 20, Figure 21 and Figure 22 display the surface and contour plots of the error functions $E_1$, $E_2$, and $E_3$, offering further insight into how accurately function (20) represents the stability properties of the Van der Pol system (19). The red region in Figure 22 indicates where $E_3(x) > 0$, that is, where the dissipation condition is not met. However, this region lies outside the chosen domain.

6. Discussion

Our numerical experiments show that a GP-based search for polynomial Lyapunov functions can certify the exponential stability of nonlinear systems: in all four examples, it found a polynomial Lyapunov function that satisfies the stability conditions on the chosen domain. The fitness metric (4) employed in our method was specifically designed to penalize violations of both the quadratic bounds and the dissipation condition, so that an individual with zero fitness satisfies every Lyapunov criterion at all sample points. This metric effectively steered the evolutionary process toward valid polynomial solutions in our numerical tests. Additionally, the method is flexible: the terminal and nonterminal sets, the domain, and the fitness constants can be adapted to different problem configurations. However, further work is needed to scale the algorithm to higher-dimensional systems, as all our examples are two-dimensional.

Future research can build on this work in several promising directions:

Enlarging the domain of attraction: Refinements are required to systematically expand the region over which the Lyapunov function remains valid. By prioritizing domain extensions in GP, the resulting Lyapunov function could cover a larger portion of the state space, yielding stronger stability guarantees.

Enhanced constant selection and optimization: The approach can be strengthened further by developing advanced heuristics and adaptive strategies for tuning the numerical constants, including the terminal node constants and the Lyapunov fitness coefficients $\alpha$, $\beta$, and $\gamma$. These enhancements could reduce convergence to suboptimal solutions, lower the computational cost, and improve the method’s ability to find valid solutions.

Compositional analytical functions for high-dimensional systems: For larger-scale systems, adapting the algorithm to construct compositional (separable or modular) Lyapunov functions is promising. Such an adaptation could mitigate the curse of dimensionality while preserving solution interpretability in complex, real-world applications.

Additionally, our GP-based method can be extended to the control domain by generating control Lyapunov functions to facilitate the design of robust feedback controllers. Future work could also explore theoretical convergence guarantees, performance bounds, and the integration of these evolutionary methods with classical control techniques to develop a unified framework that combines the strengths of both data-driven and analytical approaches in stability analysis.

7. Conclusions

In this paper, we proposed a genetic programming-based method to construct polynomial Lyapunov functions for exponentially stable nonlinear systems. In Theorem 4, we established the existence criterion for these functions, which serves as the primary indicator of the method’s applicability. We also introduced the Lyapunov fitness (3) to quantify deviations from exponential stability conditions.

We validated the method through numerical experiments on several dynamical systems, including a simple linear system (10), a damped pendulum (13), a polynomial system (16), and the Van der Pol oscillator (19). These tests demonstrate that the proposed method effectively constructs Lyapunov functions and can be used as an automated tool for stability analysis.

The future directions outlined highlight the potential to further enhance and extend the proposed approach. This work offers a flexible, automated method for Lyapunov-based stability certification, bridging evolutionary computation with classical control theory.