1. Introduction

When the subject of a survey is sensitive (potentially embarrassing, shameful, or even illegal), respondents may not answer truthfully. Warner (1969) and Greenberg (1969) pioneered models that give respondents a means of hiding their true responses to such sensitive questions, thereby removing their incentive to lie [1, 2]. While these first models were binary in nature (that is, designed for questions with yes/no responses), some sensitive questions call for quantitative responses. While misrepresentation in responses to quantitative questions is less black and white, it is clear that social desirability bias (SDB) affects them too and, as Lanke (2017) described, causes respondents “to underreport socially undesirable attributes… and to over report more desirable attributes” [3]. Both Warner (1971) and Greenberg (1971) therefore followed up their models with new models applicable to sensitive questions with quantitative responses [4, 5].

Following Warner’s and Greenberg’s inventions, many new randomized response technique (RRT) models were introduced, generally adding ever greater complexity. For instance, Pollock and Bek (1976), Eichhorn and Hayre (1983), and Diana and Perri (2011) all proposed models that included additive and/or multiplicative scrambling [6, 7, 8]. Perri (2008) combined Warner and Greenberg features into a single blank card model [9].

All of this complexity was intended to foster greater scrambling and therefore to encourage more truthful RRT responses, but it came at a significant price: the potential for error. A flurry of research on measurement error (ME) followed. Blattman et al. (2016) developed a survey validation technique that uses qualitative work to check for measurement error in reports of potentially sensitive behaviors [10]. Sharma and Singh (2015) proposed using auxiliary information to improve efficiency when non-response and measurement error are present in both the study and auxiliary variables [11]. Khalil et al. (2018) proposed a generalized estimator in the presence of measurement error [12]. Makhdum et al. analyzed scenarios where non-response and measurement error are simultaneously present, and Singh and Vishwakarma (2019) proposed a method to measure the combined effect of measurement error and non-response when auxiliary information is used [13, 14]. Priyanka et al. (2023) investigated the impact of measurement error in RRT successive sampling [15]. Going beyond simply accounting for ME, Audu et al. (2020) proposed a class of estimators with superior efficiency when measurement errors are present [16].

In Section 2 of this study, we review the Mixture Optional Enhanced Trust (MOET) model proposed by Parker et al. (2024), as well as the ratio estimator for the MOET model proposed by Gupta et al. (2024); these serve as the basis of this study [17, 18]. Then, in Section 3, we derive basic and ratio estimators that reflect the impact of measurement error. In Section 4, we recognize that ME reduces efficiency and study (1) the circumstances under which the ME resulting from the collection of auxiliary information is so large that it undermines the benefit of collecting such information, and (2) the circumstances under which ME is so large that it undermines the RRT’s overall benefit (the elimination of bias).

In Section 5, we turn our attention to an aspect of auxiliary information that has not been adequately explored. It is well known that auxiliary information can improve efficiency. However, auxiliary information comes at a cost: its presence may reduce privacy. Indeed, if auxiliary information (represented by $X$) were perfectly correlated with the response to the sensitive question ($Y$), then knowledge of $X$ would lead directly to knowledge of $Y$. We explore this dynamic, and we also recognize that, while auxiliary information reduces privacy, measurement error inadvertently increases it. In Section 6, we provide simulations that validate the estimators developed in Section 3 and explore their behavior.

2. MOET Model (2024)

Here, we present the model that serves as the basis of our study. The Mixture Optional Enhanced Trust (MOET) model was first proposed by Parker et al. (2024) [17] and incorporates several recent RRT innovations: mixture, optionality, and enhanced trust.

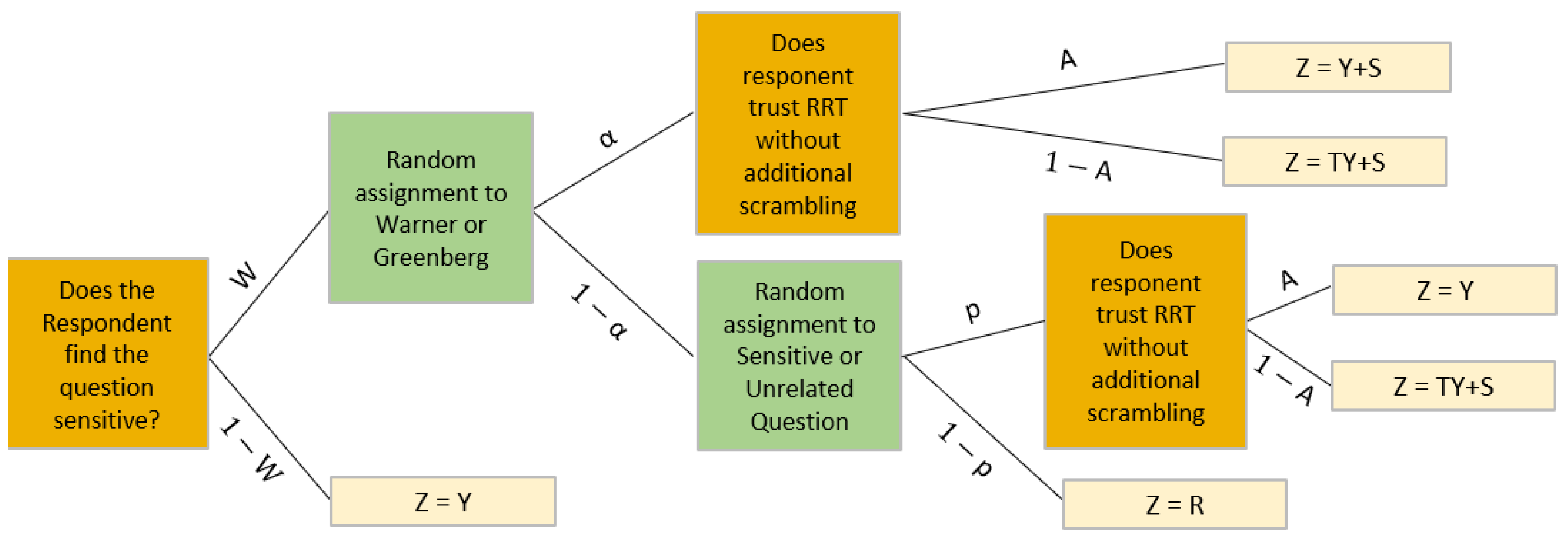

Figure 1 provides a diagram of the model. In this section, we discuss certain common RRT manipulations that could trigger measurement error (ME) in RRT models like this one. Then, we discuss the basic and ratio mean estimators for this model, as proposed in earlier papers.

In the MOET model decision tree, two of the decisions (those involving α and ) must be made by a random process whose outcome is unknown to the researcher; therefore, the respondent must, in some sense, perform the randomization, for example by shuffling a deck of cards and drawing one. We mention this because, although the process may be very simple, it nonetheless presents an opportunity for confusion and error. Note that ME includes any unintentional error (including confusion) that causes a recorded response to differ from the respondent’s intended response. Additionally, some respondents will need to scramble their true responses by addition and/or multiplication ( or ); again, these calculations must be performed by the respondent. There are many ways to keep these calculations simple (ensuring that and take on only positive whole-number values, providing a calculator or charts of computations to the respondent, etc.), but the fact that respondents must perform calculations nevertheless presents an opportunity for ME.

MOET Parker Model Review [17]

We now review the MOET model estimators. Below, we detail the basic mean estimator that Parker et al. (2024) proposed using a split-sample approach, together with an expression for its mean square error (MSE), which will serve as a basis for comparison later in this study [17]. To distinguish it from the ratio estimator (also considered in this study), we call this estimator the basic mean estimator (or simply the basic estimator); the basic estimator and its MSE are given in Equations (2) and (3).

where

In Equations (2)–(6), the following symbols are used:

The true response to the sensitive question. This random variable has mean and variance .

The response collected from the respondent in the ith sub-sample, . This random variable has mean and variance .

An additive scrambling variable with mean and variance .

A multiplicative scrambling variable with mean and variance . is independent of S and Y.

The response to the unrelated question, with mean and variance .

The sample size. In split sampling, is split into and , where .

The probability that an individual who has been assigned to the Greenberg sub-model within sub-sample is assigned the sensitive question, as opposed to the unrelated question.

The probability that a respondent will trust the RRT methodology without additional scrambling.

The sensitivity level of the sensitive question; that is, a proportion of the respondents do not consider the question sensitive and are willing to provide true responses without scrambling.

The auxiliary variable with known mean and known variance . This variable is known to have a strong positive correlation with .

The privacy provided by the MOET model is given by

where

Gupta et al. further proposed a ratio estimator for the MOET model, consistent with the standard ratio estimator formulation presented by Thompson (2012) [18, 19]. The estimator and an expression for its MSE are given in Equations (5) and (6). These can be used when high-quality auxiliary information is available.
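For readers less familiar with ratio estimation, the sketch below shows the textbook ratio estimator for a directly observed study variable, $\hat{\mu}_R = (\bar{y}/\bar{x})\,\mu_X$, together with its usual first-order MSE approximation, $(\sigma_Y^2 + R^2\sigma_X^2 - 2R\rho\sigma_X\sigma_Y)/n$ with $R = \mu_Y/\mu_X$, ignoring any finite-population correction. It is a simplified illustration under those assumptions and does not reproduce the MOET-specific forms in Equations (5) and (6); the function names are hypothetical.

```python
from statistics import fmean


def ratio_estimate(y, x, mu_x):
    """Textbook ratio estimator of the mean of y: (ybar / xbar) * mu_x,
    where mu_x is the known population mean of the auxiliary variable x."""
    return fmean(y) / fmean(x) * mu_x


def ratio_mse_first_order(n, mu_y, mu_x, sd_y, sd_x, rho):
    """First-order (large-n) MSE approximation of the ratio estimator,
    ignoring the finite-population correction; R = mu_y / mu_x."""
    r = mu_y / mu_x
    return (sd_y**2 + r**2 * sd_x**2 - 2 * r * rho * sd_x * sd_y) / n


def sample_mean_mse(n, sd_y):
    """Variance of the plain sample mean, used as the comparison baseline."""
    return sd_y**2 / n


# Means 10 and SDs 5 as in the Section 6 scenarios; n and rho are illustrative.
print(ratio_mse_first_order(n=100, mu_y=10, mu_x=10, sd_y=5, sd_x=5, rho=0.8))  # 0.1
print(sample_mean_mse(n=100, sd_y=5))  # 0.25
```

With equal coefficients of variation, the two approximations coincide at rho = 0.5, which is the classical break-even point for ratio estimation.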

4. Impacts of Measurement Error

We have noted that the administrative complexity of RRT can increase the incidence of measurement error. In this section, we study the size of the impact of measurement error on estimation in Section 4.1. Then, in Section 4.2, we determine how large ME would have to be to offset the value of using RRT in the first place.

4.1. Impact of Measurement Error on Estimator Efficiency

Measurement error will, of course, affect MSE more when it is large. In Figure 2, we consider a scenario that is consistent with other RRT papers (see, for example, the scenarios assumed in Section 6 of Parker et al. (2024) [17]), but with the addition of measurement error. We consider both the error in measuring

(represented by the random variable

) and in measuring

(represented by the random variable

).

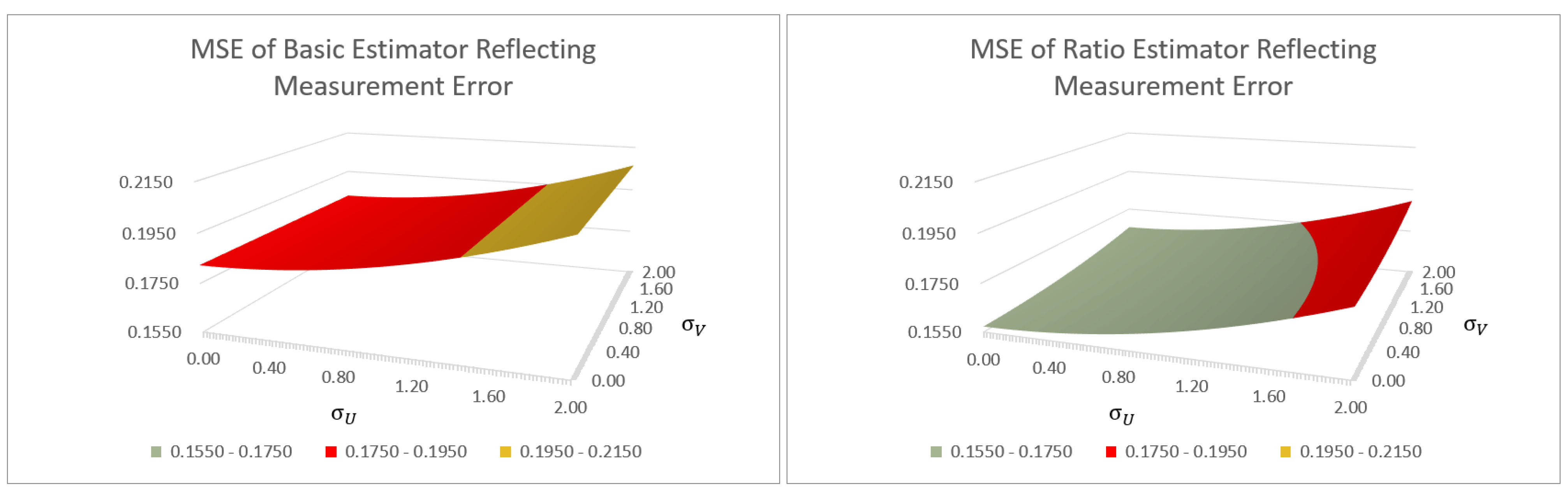

Figure 2 shows the MSE of the basic and ratio estimators across possible levels of measurement error, as represented by their standard deviations (each ranging from 0 to 2), in a standard scenario represented by the values listed immediately following the figure. Note that

is represented in the left-hand graph to enable direct visual comparison between the two graphs, even though the left-hand graph does not involve auxiliary information, so its MSE does not vary across the

dimension.

The value of 2 was chosen as the upper limit for the standard deviation (see Figure 2) to represent high measurement error: given the mean response of 10 in the standard scenario, a standard deviation of 2 equals 20% of the mean response value.

We make several observations. First, not surprisingly, as measurement error rises, estimator efficiency declines (MSE increases). We also see that MSE increases more steeply for the ratio estimator (which is subject to two kinds of measurement error) than for the basic estimator. The highest MSE shown for the basic estimator (corresponding to

) is 13.3% greater than the MSE observed when measurement error is not accounted for. This can be thought of as the extent to which MSE would be understated if measurement error were in fact at the

level but were not taken into account. In the right-hand graph of Figure 2, we see that for the ratio estimator, MSE rises both when

rises and when

rises; that is, MSE increases when there is significant error in the measurement of

and/or

. When there is high error in measuring both (corresponding to

), the MSE of the ratio estimator is 20.6% greater than the MSE observed when measurement error is not accounted for. But even with maximum measurement error, the MSE of the ratio estimator remains smaller than the MSE of the basic estimator in this scenario, where

. The gain in efficiency from using auxiliary data exceeds the loss in efficiency attributable to errors in measuring the data. While Figure 2 represents only one particular scenario, this key result can be generalized by solving the following inequality:

A comparison between Equations (13) and (22) makes it immediately clear that this inequality can be reduced to

Under the standard assumption that

, the expression can then be simplified and restated as follows:

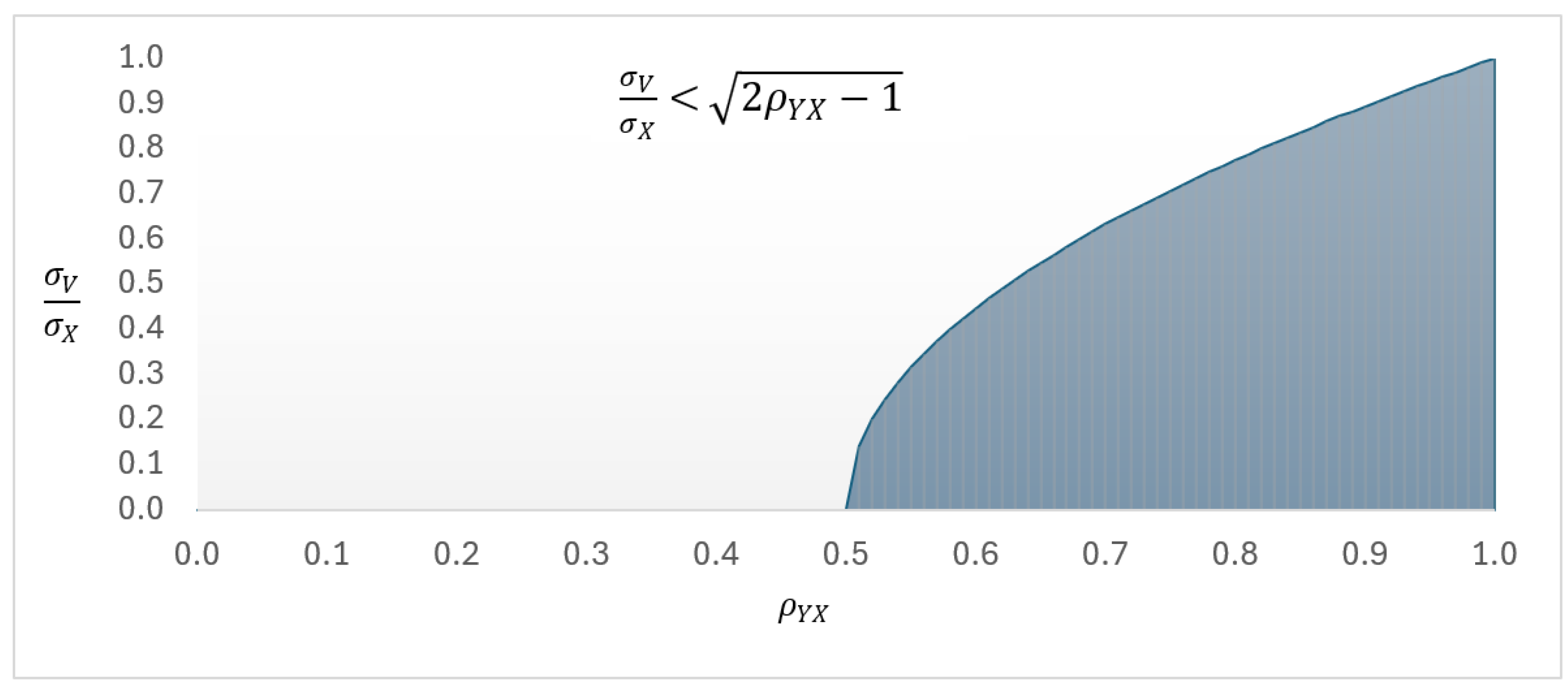

This condition is represented by the shaded region in Figure 3.

This figure shows, for example, that if , then estimation will benefit from auxiliary information provided that the standard deviation attributable to ME is less than ~45% of the standard deviation associated with the auxiliary variable. Equation (24) therefore does not impose a restrictive condition, as will generally be much larger than . We conclude that the specter of measurement error will rarely discourage the use of well-correlated auxiliary information.
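The flavor of this condition can be reproduced with the textbook first-order MSE approximation. As a simplification (not the MOET-specific Equations (13) and (22)), assume a directly observed study variable, independent zero-mean measurement errors on both variables, and the ratio estimator $(\bar{y}^*/\bar{x}^*)\mu_X$. Because the error on the study variable enters both estimators equally, the ratio estimator beats the sample mean when $R^2(\sigma_X^2 + \sigma_V^2) < 2R\rho\sigma_X\sigma_Y$, where $\sigma_V$ denotes the SD of the error in measuring the auxiliary variable (notation assumed here). Dividing by $R^2\sigma_X^2$ gives $\sigma_V/\sigma_X < \sqrt{2\rho C_Y/C_X - 1}$, with $C_X$ and $C_Y$ the coefficients of variation. The hypothetical helper below evaluates this bound.

```python
import math


def max_aux_me_ratio(rho: float, cv_x: float = 1.0, cv_y: float = 1.0) -> float:
    """Largest sigma_V / sigma_X for which the ratio estimator still beats the
    sample mean under the first-order MSE approximation.

    Condition: 1 + (sigma_V / sigma_X)**2 < 2 * rho * C_Y / C_X.
    A return value of 0.0 means auxiliary data cannot help even without
    measurement error (the classical requirement rho > C_X / (2 * C_Y) fails).
    """
    bound = 2.0 * rho * cv_y / cv_x - 1.0
    return math.sqrt(bound) if bound > 0.0 else 0.0


# Equal coefficients of variation (the defaults), across a few correlations.
for rho in (0.5, 0.6, 0.8, 0.95):
    print(f"rho = {rho:.2f}: sigma_V / sigma_X must stay below {max_aux_me_ratio(rho):.3f}")
```

Under these assumptions, rho = 0.6 allows an auxiliary-error SD of about 45% of the auxiliary SD, and rho = 0.8 about 77%, so only weak correlation combined with large auxiliary error tips the balance against collecting the data.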

4.2. Measurement Error Impact on RRT Objective: Inducing Truthfulness

In this subsection, we study whether the benefit of RRT (removal of lying bias) outweighs its cost (addition of measurement error). Because RRT methods often require the respondent to consider whether they want to answer the sensitive question directly, to scramble their true response, to answer an unrelated question, to opt for additional levels of scrambling, and so on, the administration of RRT can result in elevated levels of measurement error.

For this reason, we now turn our focus to an important question: is there a point at which measurement error becomes so significant that it nullifies the RRT model’s ability to secure truthful responses efficiently? We develop a model to study this issue. The model relies on several key assumptions. The reasonableness of these assumptions will be discussed at the end of this subsection.

Our model is constructed as follows:

We define a parameter (lying bias), which represents the extent to which a group’s mean response will be impacted by untruthfulness, without some means of mitigation like RRT. As some questions are more sensitive than others, we will consider sensitive questions with 1%, 5%, and 10% lying bias (LB). For example, if the mean group response to a sensitive question had a +5% lying bias, and the true mean response to the sensitive question was 10, then a direct survey that employed no tactics to reduce the bias would see an average response of 10.5.

We will model untruthfulness as a normal random variable with mean and standard deviation . We choose this value of because it fixes the range of lie-adjusted responses so that the lie-adjusted mean of is precisely two standard deviations from the true mean , and therefore approximately 97.7% of lie-adjusted responses will be shifted in the same direction as the assumed bias.
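As we read this specification, the untruthfulness term has mean $LB \cdot \mu_Y$ and standard deviation $LB \cdot \mu_Y / 2$, so its mean sits exactly two standard deviations from zero and the share of lies in the direction of the bias is $\Phi(2) \approx 0.977$. The small sketch below (function names hypothetical) computes these quantities and reproduces the example above of a +5% lying bias with a true mean of 10.

```python
import math


def untruthfulness_params(mu_y: float, lb: float) -> tuple[float, float]:
    """Mean and SD of the lying term L: mean LB * mu_Y, SD half the mean's magnitude."""
    mean_l = lb * mu_y
    return mean_l, abs(mean_l) / 2.0


def share_same_direction(k: float = 2.0) -> float:
    """P(L has the same sign as its mean) when the mean lies k SDs from zero,
    i.e. the standard normal CDF Phi(k)."""
    return 0.5 * (1.0 + math.erf(k / math.sqrt(2.0)))


mean_l, sd_l = untruthfulness_params(mu_y=10.0, lb=0.05)
print(mean_l, sd_l)                      # 0.5 0.25
print(10.0 + mean_l)                     # expected direct-survey mean: 10.5
print(round(share_same_direction(), 4))  # 0.9772
```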

We define as a random variable that reflects the responses that would be yielded by a survey soliciting direct responses to a sensitive question. Responses will equal truthful responses plus the impact of lying. Specifically,

In various scenarios representing different levels of measurement error and lying bias, we want to calculate the probability that our basic MOET estimator outperforms estimation based on a direct survey (that will yield responses with some level of untruthfulness, but will not face material measurement error). Specifically, we calculate the probability that the MOET estimator will result in an estimate closer to the true value than the direct survey estimate will:

Using basic inequality identities, expression (28) can be represented as

which can be rewritten as

To calculate the probabilities in the expression above, we need the distributions of

and

. Recalling that

and

, we can write

Before concerning ourselves with

and

, we find the distributions of

and

. As

and

are both estimates of the quantity

based on the same set of sampled

values, they are correlated. Nonetheless,

+/−

have normal distributions as represented below:

and

are normal and independent of each other and independent of both

and

. We can therefore find the distributions of

and

shown in Equations (31) and (32). We conclude that

Written in more simplified terms,

The pair of random variables

and

is bivariate normal, so

where

With this joint distribution specified, we can evaluate Equation (28), the probability that the basic estimator yields an estimate closer to the true value of than a direct survey would.
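The probability can be evaluated numerically once the joint distribution of the two estimation errors is fixed. The sketch below is one way to do it, not the authors' implementation: it assumes bivariate normal errors $A$ (MOET estimate minus $\mu_Y$) and $B$ (direct-survey estimate minus $\mu_Y$) with given means, variances, and covariance. It uses the identity $|A| < |B| \iff (A - B)(A + B) < 0$, so with $U = A - B$ and $V = A + B$ the target is $P(U<0) + P(V<0) - 2P(U<0, V<0)$; the joint term is integrated with the trapezoid rule. The function name and grid size are hypothetical choices.

```python
import math


def norm_cdf(x: float) -> float:
    """Standard normal CDF."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def prob_first_closer(mean_a, var_a, mean_b, var_b, cov_ab, grid=4000):
    """P(|A| < |B|) for a bivariate normal pair (A, B) with a non-singular
    covariance matrix. A and B are estimation errors (estimate minus truth)."""
    if var_a * var_b - cov_ab**2 <= 0.0:
        raise ValueError("(A, B) must have a non-singular covariance matrix")
    # |A| < |B|  <=>  (A - B)(A + B) < 0, i.e. U = A - B and V = A + B differ in sign.
    mu_u, mu_v = mean_a - mean_b, mean_a + mean_b
    sd_u = math.sqrt(var_a + var_b - 2.0 * cov_ab)
    sd_v = math.sqrt(var_a + var_b + 2.0 * cov_ab)
    rho_uv = (var_a - var_b) / (sd_u * sd_v)     # Cov(U, V) = var_a - var_b
    cond_sd = sd_v * math.sqrt(1.0 - rho_uv**2)  # SD of V given U = u

    # P(U < 0, V < 0) = integral over u < 0 of f_U(u) * P(V < 0 | U = u),
    # evaluated with the trapezoid rule over [mu_u - 10 sd_u, min(0, mu_u + 10 sd_u)].
    lo, hi = mu_u - 10.0 * sd_u, min(0.0, mu_u + 10.0 * sd_u)
    joint = 0.0
    if hi > lo:
        h = (hi - lo) / grid
        for k in range(grid + 1):
            u = lo + k * h
            dens = math.exp(-0.5 * ((u - mu_u) / sd_u) ** 2) / (sd_u * math.sqrt(2.0 * math.pi))
            cond_mean = mu_v + rho_uv * sd_v * (u - mu_u) / sd_u
            weight = 0.5 if k in (0, grid) else 1.0
            joint += weight * h * dens * norm_cdf(-cond_mean / cond_sd)

    # P(U and V have opposite signs) = P(U < 0) + P(V < 0) - 2 P(U < 0, V < 0)
    return norm_cdf(-mu_u / sd_u) + norm_cdf(-mu_v / sd_v) - 2.0 * joint


# Illustrative inputs only: an unbiased MOET error vs. a direct-survey error
# biased by 0.5, with a positive covariance from sharing the same sample.
print(round(prob_first_closer(0.0, 0.09, 0.5, 0.05, 0.03), 3))
```

Integrating the conditional probability along one axis keeps the example dependency-free; a library bivariate normal CDF would serve equally well.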

Note that a theoretical expression analogous to Equation (28), representing the probability that the ratio estimator would yield an estimate closer to the true value of than a direct survey would (i.e., ), could not be derived, because the key quantity is a ratio of two correlated normal variables, which does not follow a standard, tractable distribution.

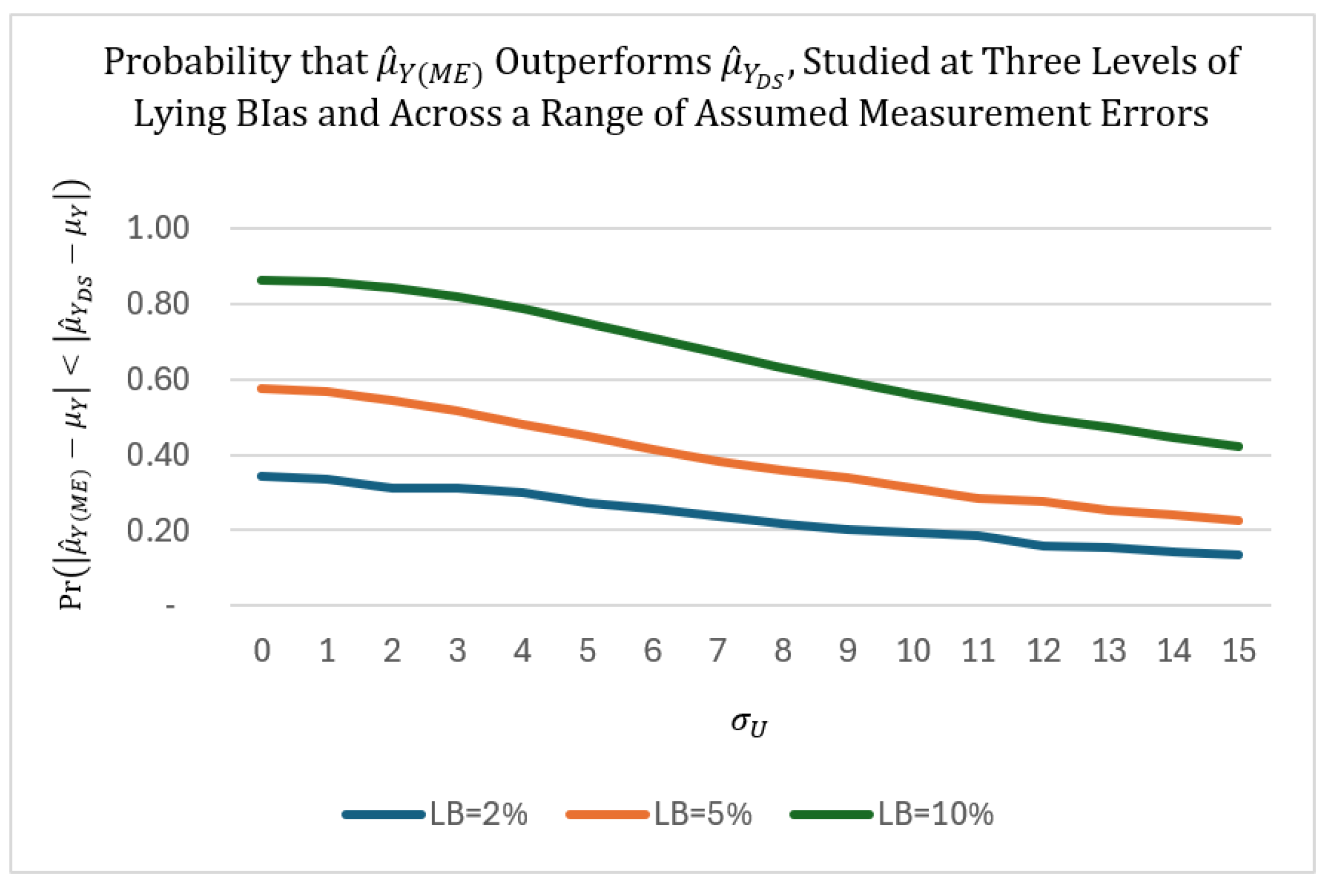

Figure 4 shows the probability that

will be closer to the true value

than

is, over a range of measurement errors (

) in a standard scenario represented by the values listed immediately following the figure. For illustrative purposes, we assume that the direct survey, which does not involve the administrative complexities inherent to RRT models, is not subject to measurement error. However, the direct survey will be impacted by untruthfulness (lying bias = LB). In contrast, the RRT model will eliminate untruthfulness but will be subject to measurement error. The probabilities represented in the figure are based on theoretical calculations that use empirical estimates for correlations; the calculated theoretical probabilities match empirical simulations closely.

As would be expected, when higher levels of untruthfulness are present and measurement error is low, the RRT-based estimate will be dramatically more reliable than the direct survey estimate . For example, when there is no measurement error at all and respondents overreport their true responses by 10% (LB = +10%), will yield an estimate closer to the true mean response than with 86% likelihood. Also, as expected, the superiority of declines as the measurement error increases. But the most important observation we make is that the probability of MOET superiority deteriorates only gradually as ME rises. Therefore, for a given level of LB and sample size, small levels of ME do not excessively undermine MOET estimation. For example, when , will continue to be superior to (closer to the true value with more than 50% likelihood) except in implausible scenarios where .

While our model is specific in both its assumptions and its scenario, its conclusions are supported by the simulations in Section 6. When LB is material and sample sizes are adequate, plausible levels of ME do not reduce MOET performance so drastically that its benefit (estimating truthful responses accurately) is thwarted.

5. Measurement Error Accentuates Privacy

Privacy is a critical element of all RRT methodologies. Lanke (1976) intimated that, in fact, the most important quality of an RRT model is the extent to which it “protect[s] the privacy of interviewees” [20]. Indeed, it is the fact that the respondent’s true response to a sensitive question is scrambled (as in Warner-type quantitative RRT models) or hidden among similar-looking answers to nonsensitive questions (as in Greenberg-type quantitative RRT models) that gives the respondent the confidence to respond truthfully, free from possible shame, embarrassment, or even legal repercussions. But the collection of auxiliary information may undermine privacy, unmasking the respondent’s identity and revealing their true response to the sensitive question, thereby undercutting the very foundations of RRT. Arnab and Dorffner (2006) grappled with this issue, noting that “most surveys are complex” and that usually “information of more than one character is collected at a time. Some of them are of a confidential nature while the others are not” [21]. The collection of additional auxiliary information can significantly undermine privacy; at the extreme, if auxiliary information were perfectly correlated with the response to the sensitive question, knowledge of $X$ would immediately lead to knowledge of $Y$.

Parker et al. (2024) pointed out that when auxiliary information (

) is collected, privacy can be represented as follows [17]:

because

is bound by 0 and

and

are defined as follows:

It follows that privacy loss due to auxiliary information is

Using Yan et al.’s (2008) definition of privacy

, privacy in the presence of measurement error is as follows [22]:

Recalling that

is uncorrelated with

or

, and that

= 0, this expression can be simplified to

and can be further reduced to

Equation (44) makes it clear that privacy, perhaps surprisingly, improves as a result of measurement error. This is because measurement error inadvertently adds extra scrambling to the response.
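The simplification can be seen in one line. Writing $Z$ for the scrambled response, $Y$ for the true response, and $U$ for an additive measurement error with $E(U) = 0$ that is uncorrelated with $Z$ and $Y$ (notation assumed here for illustration), and taking Yan et al.’s measure to be $\Delta = E[(Z - Y)^2]$, we obtain

$$
\begin{aligned}
\Delta_{\mathrm{ME}} &= E\big[(Z + U - Y)^2\big] \\
&= E\big[(Z - Y)^2\big] + 2\,E\big[U (Z - Y)\big] + E\big[U^2\big] \\
&= \Delta + 2\,E(U)\,E(Z - Y) + \sigma_U^2 \\
&= \Delta + \sigma_U^2 ,
\end{aligned}
$$

where the cross term vanishes because $U$ is uncorrelated with $Z - Y$ and has mean zero. Under these assumptions, privacy rises by exactly the measurement-error variance, whatever the underlying RRT design.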

When auxiliary information that reduces privacy is collected, privacy can be represented as follows:

Note that auxiliary information will reduce the privacy resulting from RRT perturbation, but not the privacy resulting from measurement error. With this in mind, the privacy loss that occurs when ME is present will be the same as when ME is not present:

For the MOET model, Parker et al. calculated privacy as

where the superscript

in the above expression reminds us that this measure has been adjusted to reflect the fact that optionality does not undermine privacy for the proportion of respondents

who do not consider the sensitive question to be sensitive, as per Gupta et al. (2002) [23].

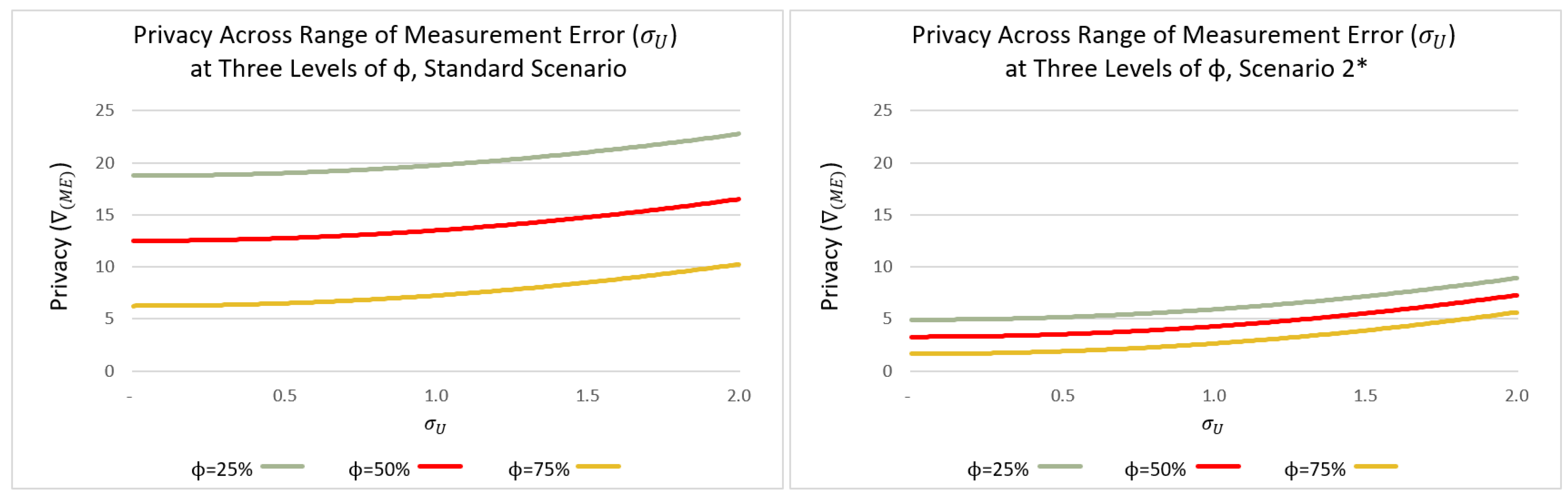

In Figure 5, we show the privacy that the MOET model provides when auxiliary information is collected, across a range of ME values and at three different levels of privacy reduction due to auxiliary information (

) in two reasonable scenarios, as represented by the values listed immediately following the figure.

The graph on the left represents the standard baseline scenario underlying the other graphs and figures throughout this study, with . In the standard scenario, privacy is higher by 21%, 32%, and 64% at than at at the three assumed levels of . The graph on the right represents another plausible scenario, in which the natural variability of is smaller: . We also chose in this scenario because it is standard to assume , and we set because the parameters of the unrelated question should be similar to the parameters of the sensitive question. In this scenario, privacy is higher by 81%, 122%, and 234% at than at at the three assumed levels of . All of this is to say that ME provides an additional source of privacy to RRT models, especially when privacy is low.

ME decreases efficiency (increases MSE), as per Equations (13) and (22), but we have seen that ME also inadvertently increases privacy, as per Equations (44) and (45); that is, the same phenomenon (measurement error) causes one aspect of model quality (efficiency) to deteriorate while causing the other (privacy) to improve. Singh et al. (2020) and other statisticians have faced this same problem of assessing overall model value when “level of [privacy] protection” and “efficient estimation” are competing considerations [24]. Gupta et al. (2018) offered a solution to this dilemma by proposing a unified measure (UM) that quantifies overall model quality, taking both efficiency and privacy into account [25]:

The superscript

indicates that this measure has been adjusted to reflect Gupta et al.’s (2002) assertion that optionality does not reduce privacy for the proportion of respondents

who do not consider the question to be sensitive [23]. Small values of UM reflect superior model performance. In Figure 6, we study UM across a range of ME values under three different privacy reduction assumptions in a standard scenario represented by the values listed immediately following the figure. We assume

across a range of 0 to 2; results are not sensitive to this assumption.

In our illustrative scenario, the ME impact on privacy (the denominator of UM) outweighs the ME impact on efficiency (the numerator), so UM improves modestly as ME rises. This result can be generalized by calculating the relative change in UM when ME is present:

But when

is large, Equation (50) can be reduced to

This expression makes it clear that the change in UM will be negative when ME is introduced. Since small UM values indicate superior performance, UM will in fact decrease (modestly) as ME grows, provided the sample size is large. This reduction will be most significant when the adjusted privacy () is small. The main conclusion of this analysis is not that we expect UM gains from ME, but simply that we do not expect material UM deterioration as a result of ME.
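To make the trade-off concrete, assume that UM is MSE divided by (adjusted) privacy, so that smaller is better, and that ME raises privacy additively by the measurement-error variance $\sigma_U^2$ (as the expansion of $E[(Z + U - Y)^2]$ suggests when $U$ has mean zero and is uncorrelated with the response). The relative change in UM is then $(\text{MSE}_{ME}/\text{MSE}_0)\cdot\Delta_0/(\Delta_0 + \sigma_U^2) - 1$, which is negative exactly when the proportional MSE inflation is smaller than $1 + \sigma_U^2/\Delta_0$. The hypothetical helpers below carry out that arithmetic; the inputs are illustrative, not values from this study.

```python
def um_relative_change(mse_no_me: float, mse_me: float,
                       privacy_no_me: float, var_me: float) -> float:
    """Relative change in UM = MSE / privacy when measurement error with
    variance var_me is introduced, assuming privacy rises by var_me."""
    um_before = mse_no_me / privacy_no_me
    um_after = mse_me / (privacy_no_me + var_me)
    return um_after / um_before - 1.0


def um_improves(mse_no_me: float, mse_me: float,
                privacy_no_me: float, var_me: float) -> bool:
    """True when the proportional MSE inflation is outweighed by the privacy gain."""
    return mse_me / mse_no_me < 1.0 + var_me / privacy_no_me


# Illustrative inputs: a 13% MSE inflation, baseline privacy 20, ME variance 4 (sigma_U = 2).
print(round(um_relative_change(1.0, 1.13, 20.0, 4.0), 4))  # -0.0583
print(um_improves(1.0, 1.13, 20.0, 4.0))                   # True
```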

6. Simulations

We present two tables of simulated values in this section. All tabulated values represent model output reflecting the impact of measurement error on the MOET quantitative RRT model, and all simulations assume the same base parameter values, with minor deviations as noted. So that the tabulated values can be reproduced independently, scenario values are listed immediately preceding the tables. In both tables, the means of

and

are set equal to 10 for convenience, as it is only their values relative to their standard deviations that matter. The standard deviations of

and

are set equal to half of their means (5), consistent with other recent RRT studies such as Parker et al. (2024) [17]. The mean and variance of

are set equal to the mean and variance of

because it is important that the unrelated question behave similarly to the sensitive question.

,

are standard choices for additive and multiplicative scrambling values, and the values of

are chosen because they lead to high model efficiency, as per Parker et al. (2024) [17]. When not otherwise specified,

,

, and

are set to the moderate values of

,

, and

, respectively. Each line of each table can be thought of as a unique scenario, based on a particular set of model parameters, and each scenario is run 10,000 times in R software version 4.3.1 to show the close match between simulated and theoretical values.
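The theory-versus-simulation check can be mimicked in a simplified setting. The sketch below does not simulate the MOET model; it assumes a directly observed study variable $y$ and auxiliary variable $x$ that are bivariate normal (means 10 and SDs 5, as in the scenarios above), adds independent zero-mean normal measurement errors with SDs `sd_u` and `sd_v`, and compares the Monte Carlo MSE of the ratio estimator with the first-order approximation $(\sigma_Y^2 + \sigma_U^2 + R^2(\sigma_X^2 + \sigma_V^2) - 2R\rho\sigma_X\sigma_Y)/n$, where $R = 1$ because the means are equal. The function names, correlation, sample size, and replication count are hypothetical choices.

```python
import numpy as np


def theoretical_mse(n, sd, rho, sd_u, sd_v):
    """First-order MSE of the ratio estimator when y and x share the same mean
    (so R = 1) and carry independent additive measurement errors."""
    return (sd**2 + sd_u**2 + (sd**2 + sd_v**2) - 2.0 * rho * sd * sd) / n


def simulated_mse(n, mu, sd, rho, sd_u, sd_v, reps=4_000, seed=2024):
    """Monte Carlo MSE of (ybar* / xbar*) * mu over `reps` replications."""
    rng = np.random.default_rng(seed)
    z1 = rng.standard_normal((reps, n))
    z2 = rng.standard_normal((reps, n))
    x = mu + sd * z1                                       # true auxiliary values
    y = mu + sd * (rho * z1 + np.sqrt(1.0 - rho**2) * z2)  # true responses, corr(x, y) = rho
    x_obs = x + rng.normal(0.0, sd_v, size=(reps, n))      # x recorded with error
    y_obs = y + rng.normal(0.0, sd_u, size=(reps, n))      # y recorded with error
    est = y_obs.mean(axis=1) / x_obs.mean(axis=1) * mu     # ratio estimator, mu_X = mu known
    return float(np.mean((est - mu) ** 2))


n, mu, sd, rho = 200, 10.0, 5.0, 0.8
for me in (0.0, 1.0, 2.0):
    print(me, round(theoretical_mse(n, sd, rho, me, me), 4),
          round(simulated_mse(n, mu, sd, rho, me, me), 4))
```

For these illustrative inputs the first-order MSE rises from 0.05 with no measurement error to 0.09 when both error SDs equal 2, so ignoring the error would badly understate MSE, the same qualitative message as Table 1.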

Table 1 serves two purposes. First, it exhibits the close match between theoretical and empirical values (denoted by subscripts T and E, respectively). Second, it shows the behavior of the basic estimator and the ratio estimator across a range of assumed measurement errors (

and

range from 0 to 2). We also show results across

values because we want to compare the performance of the ratio estimator with that of the basic estimator, and the ratio estimator’s performance depends heavily on the correlation between the auxiliary information ($X$) and the true response to the sensitive question ($Y$). We do not show results varying by

,

, and α because, as noted in Section 3.1 and Section 3.2 (see Equations (13) and (22)), measurement error does not depend on these quantities.

Table 1 is split into sections (A) and (B). Table 1A shows scenarios where the standard deviations of the measurement errors arising from the measurement of

and

(represented by

and

) rise in lockstep. Table 1B shows scenarios where

remains relatively low regardless of the value of

. This reflects the idea that the collection of RRT data, which is complex by nature, may produce more measurement error than the collection of auxiliary information.

It is clear throughout Table 1 that theoretical and empirical values match closely. For example, in the third row of the table, the basic estimator

= 10.0007 is close to the theoretical value of 10.0000. Similarly, the theoretical and empirical values of

for the basic estimator are 0.1884 and 0.1881. For the ratio estimator, the theoretical and empirical values of

are 10.0053 and 10.0051, and the theoretical and empirical values of

are 0.1454 and 0.1464. Across all scenarios, this agreement indicates that the simulations support the theoretical results.

We make several additional observations. As expected, when measurement error increases, MSE rises (i.e., efficiency declines). However, the rate of decline is greater for the ratio estimator than for the basic estimator, even in Table 1B, where

is held constant. But because the ratio estimator is naturally more efficient when auxiliary data are of high quality, it generally remains more efficient than the basic estimator, even when high levels of measurement error are encountered. The only exceptions to this rule occur when

. This consequence of low correlation was anticipated by Equation (25), as illustrated in Figure 3. In any case, when correlation is low, the ratio estimator should typically not be used.

For each grouping of simulations (for example, the first five rows of Table 1A), it is natural to think of successive lines as representing increasing levels of measurement error. However, it is more important to read this information in reverse. That is, if the true measurement error is in fact

(as in line 5) but is not recognized and accounted for (so that values are calculated based on

, as in line 1), then the true MSE will be significantly understated. For example, with the ratio estimator, the true MSE would be 23.5% higher than it would appear if measurement error were not taken into account (0.1696 versus 0.1373).

In Table 2 (below), we study whether the benefit of RRT (removal of lying bias) outweighs its cost (added measurement error). To do this, we simulate two surveys: a direct survey that does not implement RRT or any other SDB-reduction mechanism, and an MOET RRT-based survey. Without RRT, the direct survey will be subject to lying bias, but it will be simple to administer and will therefore provoke very little measurement error. In contrast, an RRT survey is administratively complex and will provoke significant measurement error but very little inaccuracy due to lying bias. Based on 10,000 iterations, we estimate the probability that the RRT survey yields a closer estimate of the mean response to the sensitive question as the proportion of iterations in which the RRT estimate is closer to the true value than the direct survey estimate. We compare results across three lying bias levels (2%, 5%, 10%), five levels of measurement error (0, 0.5, 1.0, 1.5, 2.0), and three sample sizes (50, 250, 500). Note that, as discussed in Section 4.2, the distributions of

and (

) are not known in closed form; therefore, no theoretical expression for

could be derived.
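The Monte Carlo procedure behind Table 2 can be sketched as follows, with one important substitution: in place of the MOET estimator, this hypothetical example uses a simple additive-scrambling RRT estimator (respondents report $Y + S + U$, where $S$ is a scrambling variable with known mean and $U$ is measurement error), while the direct survey records $Y + L$ with the lying term $L$ of Section 4.2 (mean $LB\cdot\mu_Y$, SD $LB\cdot\mu_Y/2$, as we read that section). Both surveys are drawn from the same true responses $Y$, so their estimates are correlated. Parameter defaults are illustrative, and the replication count is kept below 10,000 to limit memory use.

```python
import numpy as np


def prob_rrt_closer(n=250, reps=2_000, mu_y=10.0, sd_y=5.0, lb=0.05,
                    mu_s=10.0, sd_s=5.0, sd_u=1.0, seed=0):
    """Share of replications in which a stand-in additive-scrambling RRT
    estimate is closer to mu_y than a direct-survey estimate biased by lying."""
    rng = np.random.default_rng(seed)
    shape = (reps, n)
    y = rng.normal(mu_y, sd_y, shape)                       # true responses (shared)
    lie = rng.normal(lb * mu_y, abs(lb * mu_y) / 2, shape)  # untruthfulness term L
    direct_est = (y + lie).mean(axis=1)                     # biased, no measurement error
    s = rng.normal(mu_s, sd_s, shape)                       # additive scrambling, known mean
    u = rng.normal(0.0, sd_u, shape)                        # measurement error
    rrt_est = (y + s + u).mean(axis=1) - mu_s               # unbiased, but noisier
    closer = np.abs(rrt_est - mu_y) < np.abs(direct_est - mu_y)
    return float(closer.mean())


for lb in (0.02, 0.05, 0.10):
    probs = [round(prob_rrt_closer(lb=lb, sd_u=me), 3) for me in (0.0, 1.0, 2.0)]
    print(f"LB = {lb:.0%}: P(RRT closer) at ME SD 0, 1, 2 -> {probs}")
```

Because both estimates share the same draws of $Y$, the comparison reflects their correlation in the same way the bivariate normal treatment of Section 4.2 does.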

For the basic estimator, the probability that the MOET RRT model improves estimation () is calculated on both a theoretical and an empirical basis. Theoretical and empirical results match closely, as seen in the eighth and ninth columns of the table, which supports the theoretical probability expressions in Equations (28)–(30) and the assumption of bivariate normality represented in Equation (39). Because the distribution underlying the ratio estimator could not be identified, only empirical estimates of were calculated. As expected, in all cases the ratio estimator performed similarly to, but better than, the basic estimator.

Three factors represented in the table increase the probability that and will result in better estimates than : large sample sizes, high levels of lying bias, and low levels of measurement error. Indeed, when , , and , it is only 29% likely that will be closer to than . But when , , and , the probability that is closer to than is 95%. Importantly for this study, measurement error is the least influential of the three factors.

The tabular findings suggest that the MOET model should not be used when the sample size is small and LB is expected to be small. However, they also suggest that MOET should be used when the sample size is adequate and LB is expected to be significant (the exact circumstances for which RRT models were developed). The table further supports the important conclusion that, while measurement error reduces MOET performance, reasonable levels of measurement error should not affect a researcher’s decision about whether to use the model.

7. Conclusions

Measurement error is particularly important to RRT models because the administration of surveys based on these models is often complicated and can therefore lead to excess measurement error. In this study, we developed basic and ratio estimators that reflect measurement error for the MOET RRT model recently proposed by Parker et al. (2024) [17]. Using them, we identified circumstances in which a failure to reflect ME could cause MSE to be understated, with the true MSE more than 20% higher than it appears, confirming our expectation that accounting for measurement error can be important. The accuracy of our new estimators was validated through simulation. Researchers should take standard precautions to avoid excessive measurement error, such as providing simple, clear instructions to respondents, using only positive whole-number values as scrambling variables, and providing a calculator or charts of computations. Researchers would also benefit from using the revised estimators proposed in this study to better quantify the efficiency of their estimates.

We further considered two important questions involving measurement error. The first involved determining if and when measurement error associated with the collection of auxiliary data was so high that such data should not be collected. Equation (25) showed that only in unusual circumstances (such as when the correlation of auxiliary information with the sensitive question was low and the variance arising from collecting auxiliary information, i.e., measurement error, was high relative to the variance inherent in the auxiliary information) should auxiliary information not be collected. Of course, low correlation of auxiliary information with the sensitive question is a circumstance where ratio estimation is unlikely to be effective regardless of measurement error. Researchers should use Equation (25) to verify, according to a priori estimates of variance and correlation, that auxiliary information should be collected in their study.

Second, we considered whether measurement error might be so large that it undermined the MOET model’s ability to extract truthful answers to sensitive questions. Toward this objective, we derived relationships that allowed us to calculate the probability that an MOET estimate of the mean response to the sensitive question would be closer to the true response than an estimate based on a direct survey (which would be biased by untruthfulness). We concluded that the MOET model yielded superior estimates in spite of measurement error, provided that lying bias was significant and sample size was adequate. Finally, we recognized that while auxiliary information improves efficiency, it comes at a cost—it reduces privacy.