1. Introduction

Spherical distributions are of special importance when working with directional data [1]. They are used to represent the orientation of objects or individuals and to identify movement directions. Recently, spherical distributions have become particularly important in the modelling of directed motion in biology. Examples in ecology include the directed motion of wolves along seismic lines [2], the orientation of sea turtles along geomagnetic lines [3], hilltopping of butterflies [4], and migration of whales [5]. Examples of directed cell movement include the movement of melanoma cells [6], the spread of glioma in the brain [7], the oriented motion of cells on microfabricated structures [8], and the directed motion of immune cells [9].

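Mathematically, a spherical distribution is a probability distribution on the unit sphere $\mathbb{S}^{n-1}$ in $\mathbb{R}^n$, where $n$ denotes the space dimension. We usually denote it as $q(\theta)$, $\theta\in\mathbb{S}^{n-1}$, with the basic properties

$$q(\theta)\ge 0,\qquad \int_{\mathbb{S}^{n-1}} q(\theta)\,d\theta = 1. \tag{1}$$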

For application to glioma, three spherical distributions have been proposed (we give explicit definitions later): the von Mises distribution (5) (also called the von Mises–Fisher distribution in higher dimensions), the peanut distribution (6), and the orientation distribution function (ODF) (7) [7,10,11,12]. In [7], the von Mises distribution was used in a model fitted to data from 10 patients diagnosed with glioma. In [12], the ODF was used in a theoretical model of glioma growth that incorporates the effects of endothelial cells and of environmental acidity. Here, we focus on the peanut and von Mises distributions. We generalize these distributions to arbitrary space dimension $n$ and compute new formulas for their expectations and variance–covariance matrices. We also applied the method to the ODF, but we did not obtain an explicit formula for its second moment. Other important spherical distributions are the Bingham, Fisher–Bingham, and Kent distributions. These have not been used extensively in biological modelling; hence, we discuss them only briefly in the Discussion.

1.1. Anisotropic Transport Equations

The popular use of spherical distributions in ecology and cell biology relies on the modelling of movement data with transport equations. In this short subsection, we recall some of the essential steps of this framework, to set the stage for the following calculations. Among the above applications, let us choose the example of wolf movement along seismic lines. The arguments for the other applications (glioma, immune cells, sea turtles, whales) are very similar.

Seismic lines are clear-cut lines in the forests of Northern Canada, cut by oil exploration companies to search for oil and gas [13]. Wolves in these forests can use these lines to move further than normal and to increase their hunting success, which has a severe ecological impact. Using GPS data on wolf movement, McKenzie et al. [2,14] showed that the directional orientation of wolves near seismic lines follows a von Mises distribution. Let us call it $q(\theta)$, $\theta\in\mathbb{S}^{n-1}$.

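To formulate a mathematical model for the wolf movement, we introduce a wolf density $p(t,x,\theta)$ that depends on time $t$, location $x$, and orientation $\theta$. We then consider the anisotropic transport equation

$$p_t + s\,\theta\cdot\nabla p = -\mu p + \mu\, q(\theta)\int_{\mathbb{S}^{n-1}} p(t,x,\theta')\,d\theta'. \tag{2}$$

Here $\mu$ is a rate of change of direction and $s$ is an average speed. The arguments of $p$ have been suppressed in three of the terms.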

The analysis of these types of models is well established and many papers are available [15,16,17], so we do not recall any details here. However, we will discuss the important parabolic scaling. Parabolic scaling is a scaling transformation of the transport equation (2) into macroscopic time and space scales. It can be shown that, to leading order, the integrated particle density $\bar p(t,x)=\int_{\mathbb{S}^{n-1}}p(t,x,\theta)\,d\theta$ satisfies an advection–diffusion equation

$$\bar p_t + \nabla\cdot(a\,\bar p) = \nabla\nabla:(D\,\bar p), \tag{3}$$

where the drift velocity $a$ and the diffusion tensor $D$ are proportional to the first and second moments of $q$:

$$a = s\,\mathbb{E}(q),\qquad D = \frac{s^2}{\mu}\,\mathbb{V}(q). \tag{4}$$

Hence, knowledge of the first and second moments of $q$ is essential.
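The link between the moments of $q$ and the macroscopic coefficients can be checked with a small simulation. The sketch below is a minimal illustration under stated assumptions, not the method of the cited works.

It makes three assumptions:

- It simulates a two-dimensional velocity-jump process with speed $s$ and turning rate $\mu$.
- New headings are drawn from a bimodal von Mises distribution. This is an illustrative choice of $q$ with zero expectation.
- The function names and parameter values are hypothetical.

If the diffusion tensor is proportional to the second moment of $q$, the ratio of the spatial variances should match the ratio of the diagonal entries of that second moment.

```python
import math
import random


def simulate_velocity_jump(k, s=1.0, mu=1.0, T=30.0, n_particles=4000, seed=1):
    """Simulate a 2D velocity-jump process and return (Var x, Var y) at time T.

    Particles run straight at speed s; run times are exponential with rate mu.
    Each new heading is drawn from a bimodal von Mises law with modes 0 and pi.
    """
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n_particles):
        x = y = 0.0
        t_left = T
        while t_left > 0.0:
            phi = rng.vonmisesvariate(0.0, k)
            if rng.random() < 0.5:
                phi += math.pi  # switch to the opposite mode
            run = min(rng.expovariate(mu), t_left)  # truncate the last run at T
            x += s * run * math.cos(phi)
            y += s * run * math.sin(phi)
            t_left -= run
        xs.append(x)
        ys.append(y)

    def variance(v):
        mean = sum(v) / len(v)
        return sum((a - mean) ** 2 for a in v) / len(v)

    return variance(xs), variance(ys)


def second_moment_ratio(k, m=4000):
    """Ratio V_xx / V_yy of the bimodal von Mises second moment (midpoint rule)."""
    num = den = 0.0
    for j in range(m):
        phi = 2.0 * math.pi * (j + 0.5) / m
        w = math.cosh(k * math.cos(phi))  # unnormalized density; the constant cancels
        num += math.cos(phi) ** 2 * w
        den += math.sin(phi) ** 2 * w
    return num / den


var_x, var_y = simulate_velocity_jump(k=2.0)
print("simulated Var x / Var y:", var_x / var_y)
print("moment ratio V_xx / V_yy:", second_moment_ratio(2.0))
```

With the fixed seed, the run is reproducible, and the two ratios should agree up to a Monte Carlo error of a few percent. Each heading is drawn independently from a $q$ with zero mean, so the velocity correlation decays like $e^{-\mu t}$. As a result, the expected variance ratio equals the moment ratio at any time $T$, not only asymptotically.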

In the application to wolf data, the distribution $q$ encodes the directional network of seismic lines in the environment [2,14]. In the case of sea turtles, $q$ describes the orientation towards the target site [3]. Applied to glioma data, $q$ is informed by an MRI technique called diffusion tensor imaging (DTI) [7,12,18,19,20] (see also Section 5 for a simplified example).

Hillen et al. [10] calculated the explicit forms of the expectation and the variance–covariance matrix for the von Mises–Fisher distribution in two and three dimensions. The key to obtaining these explicit formulas appears to be the use of the divergence theorem on suitable domains.

1.2. Outline of the Paper

Here, we extend that methodology to higher dimensions. With explicit moment formulas, the expectation and variance–covariance matrix can be computed without evaluating the integrals numerically, which significantly reduces the computational cost in high dimensions. We derive explicit formulas for the $n$-dimensional von Mises–Fisher and peanut distributions which, to our knowledge, are new.

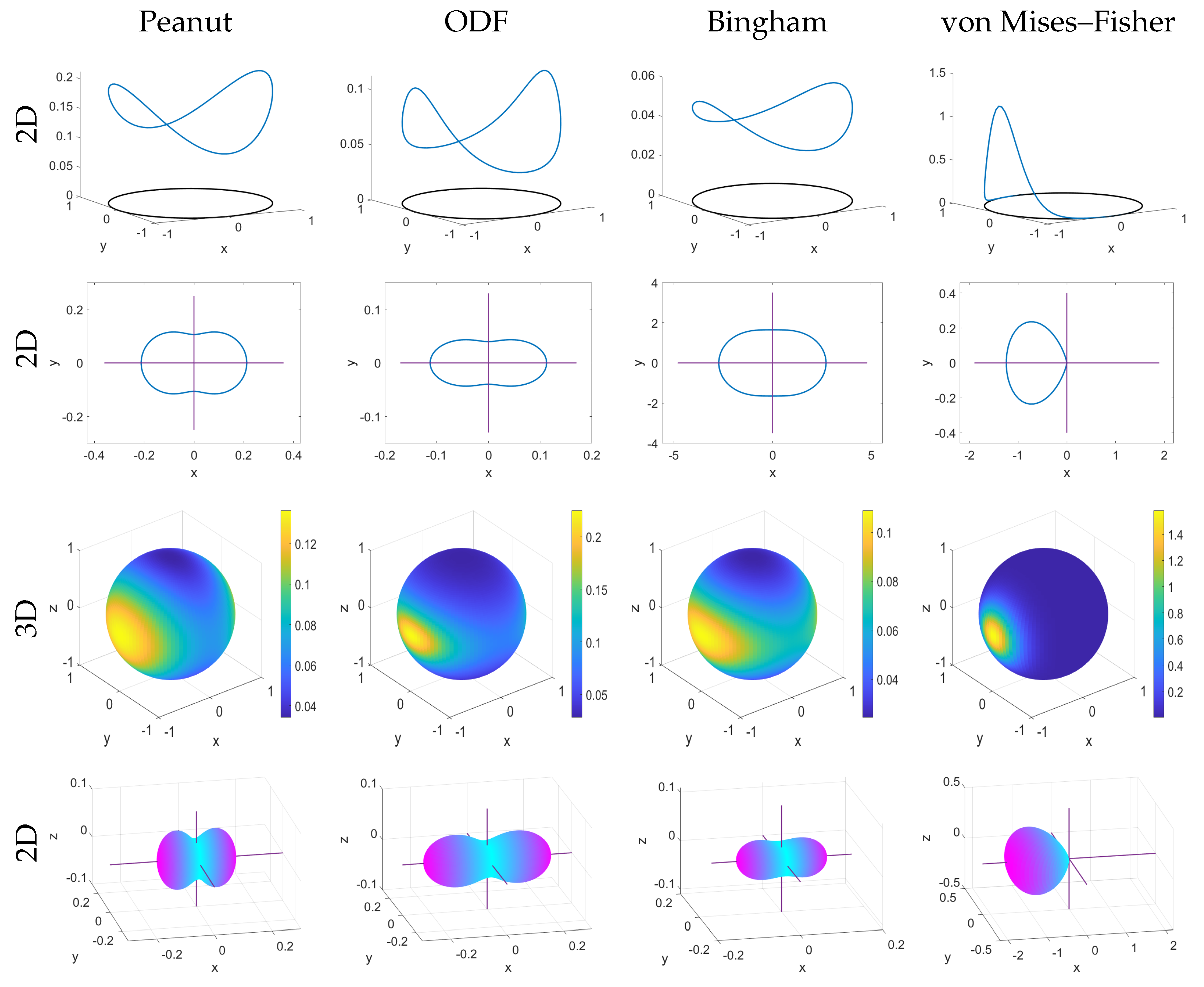

In addition, we consider the level of anisotropy that arises from these different formulations (peanut, ODF, von Mises–Fisher). The anisotropy is a measure of the distortion of the distribution in certain directions. It can be easily visualized by plotting level sets of the corresponding distributions $q$ (see Figure 1). To measure the anisotropy, we first compute the eigenvalues of the corresponding variance–covariance matrices. We then compute the fractional anisotropy ($FA$) and the maximal eigenvalue ratio $R$. We find that the peanut distribution is limited in how much anisotropy it can account for, whereas the von Mises–Fisher distribution has no such limitation. This makes the von Mises–Fisher distribution the better choice for capturing anisotropy. Please note that, here, we focus on theoretical results; specific applications are treated in the cited literature [2,3,7,14,17].

1.3. Definitions of Some Spherical Distributions

In this section, we give the explicit definitions of the spherical distributions that we will work with. We use the following given parameters:

- $k\ge 0$ is a concentration parameter;
- $u\in\mathbb{S}^{n-1}$ is a given vector;
- $A\in\mathbb{R}^{n\times n}$ is a given positive definite matrix.

In the case of glioma modelling, $A$ is estimated from the diffusion tensor data (see Section 5).

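The $n$-dimensional von Mises–Fisher distribution is defined as [10]

$$q(\theta) = \frac{k^{n/2-1}}{(2\pi)^{n/2}\,I_{n/2-1}(k)}\,e^{k\,u\cdot\theta}, \tag{5}$$

where $\theta\in\mathbb{S}^{n-1}$ and $u\in\mathbb{S}^{n-1}$. Here

$$I_p(k)=\sum_{m=0}^{\infty}\frac{1}{m!\,\Gamma(m+p+1)}\left(\frac{k}{2}\right)^{2m+p}$$

is the modified Bessel function of the first kind of order $p$, $k$ is the concentration parameter, and $u$ defines the likeliest direction of the distribution. Here, we employ a formulation that uses Bessel functions. An alternative definition uses the Gamma function and can be found in Appendix A.1.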

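The $n$-dimensional peanut distribution is defined as [11]

$$q(\theta) = \frac{n}{|\mathbb{S}^{n-1}|\,\mathrm{tr}(A)}\,\theta^T A\,\theta, \tag{6}$$

where $\mathrm{tr}(A)$ denotes the trace of $A$, $\theta\in\mathbb{S}^{n-1}$, and $|\mathbb{S}^{n-1}| = 2\pi^{n/2}/\Gamma(n/2)$ is the surface area of the $(n-1)$-dimensional unit sphere.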

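The $n$-dimensional ODF distribution is defined as [12,21]

$$q(\theta) = \frac{1}{|\mathbb{S}^{n-1}|\,|A|^{1/2}\,\left(\theta^T A^{-1}\theta\right)^{n/2}}, \tag{7}$$

where $|\mathbb{S}^{n-1}|$ and $A$ have the same meaning as in the peanut distribution defined above. In (7), $A^{-1}$ denotes the inverse of $A$ and $|A|=\det(A)$.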

We only briefly discuss the Bingham distribution [1,22], which is defined as

where

,

A,

hold the same definitions as above and

is the diffusion time.

The Fisher–Bingham distribution [1] is defined as

where

k is a concentration parameter and

u is the mean direction and

is a normalization constant. In the case of a diagonal matrix

$A$, the Fisher–Bingham distribution is also known as the Kent distribution [1,23].

We show the 2D and 3D versions of the peanut, ODF, Bingham, and von Mises–Fisher distributions in Figure 1.

1.4. Definitions of First and Second Moments

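We will calculate the first and second moments of the spherical distributions in $n$ dimensions to obtain the expectation and the variance–covariance matrix, so we provide the explicit definitions here. In general, the expectation [10] is defined as

$$\mathbb{E}(q) = \int_{\mathbb{S}^{n-1}} \theta\, q(\theta)\,d\theta,$$

and the variance–covariance matrix [10] is defined as

$$\mathbb{V}(q) = \int_{\mathbb{S}^{n-1}} (\theta-\mathbb{E}(q))\otimes(\theta-\mathbb{E}(q))\, q(\theta)\,d\theta = \int_{\mathbb{S}^{n-1}} \theta\otimes\theta\, q(\theta)\,d\theta - \mathbb{E}(q)\otimes\mathbb{E}(q). \tag{11}$$

Here, $a\otimes b = a\,b^T$ for $a,b\in\mathbb{R}^n$ denotes the tensor product of vectors. For simplicity of notation, we give names to the zeroth, first, and second moments:

$$m^0(q) = \int_{\mathbb{S}^{n-1}} q(\theta)\,d\theta,\qquad m^1(q) = \int_{\mathbb{S}^{n-1}} \theta\, q(\theta)\,d\theta,\qquad m^2(q) = \int_{\mathbb{S}^{n-1}} \theta\otimes\theta\, q(\theta)\,d\theta.$$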
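For a density on the unit circle ($n=2$), the definitions above can be evaluated numerically by writing $\theta=(\cos\varphi,\sin\varphi)$. The following sketch approximates the zeroth moment, the expectation, and the variance–covariance matrix. It is a minimal illustration: the function names and the midpoint rule in $\varphi$ are our choices, not taken from the cited works. It can serve as a reference against which closed-form results are checked.

```python
import math


def moments_on_circle(q, m=2000):
    """Zeroth moment, expectation and variance-covariance matrix of q on S^1.

    q is a function of a unit vector theta = (cos phi, sin phi); the integrals
    over the circle are approximated by the midpoint rule in the angle phi.
    """
    h = 2.0 * math.pi / m
    m0 = 0.0
    m1 = [0.0, 0.0]
    m2 = [[0.0, 0.0], [0.0, 0.0]]
    for j in range(m):
        phi = (j + 0.5) * h
        theta = (math.cos(phi), math.sin(phi))
        w = q(theta) * h
        m0 += w
        for a in range(2):
            m1[a] += theta[a] * w
            for b in range(2):
                m2[a][b] += theta[a] * theta[b] * w
    # variance-covariance matrix: second moment minus E (x) E
    V = [[m2[a][b] - m1[a] * m1[b] for b in range(2)] for a in range(2)]
    return m0, m1, V


def uniform_density(theta):
    """Isotropic distribution on the unit circle."""
    return 1.0 / (2.0 * math.pi)


m0, E, V = moments_on_circle(uniform_density)
print(m0, E, V)
```

For the isotropic density, the result should be close to $m^0=1$, $\mathbb{E}=0$, and $\mathbb{V}=\tfrac12\mathbb{I}$. Because the integrand is smooth and periodic, the midpoint rule converges very quickly.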

2. First and Second Moments of von Mises–Fisher Distributions

In this section, we calculate the first and second moments of the general $n$-dimensional von Mises–Fisher distribution (5). This gives general formulas for the expectation and the variance–covariance matrix of these distributions.

For simplicity of notation, sums over repeated indices are understood as in tensor calculus [10]:

$$a_i\,b_i := \sum_{i=1}^{n} a_i\,b_i. \tag{15}$$

We also recommend referring to Appendices A.2, A.4, and A.5 for the basic properties of Bessel functions, definitions of

and

, as well as formulas that are used in the following calculations.

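Theorem 1. Consider the von Mises–Fisher distribution (5) in any dimension $n$. The expectation and variance–covariance matrix are

$$\mathbb{E}(q) = \frac{I_{n/2}(k)}{I_{n/2-1}(k)}\,u,$$

$$\mathbb{V}(q) = \frac{I_{n/2}(k)}{k\,I_{n/2-1}(k)}\,\mathbb{I} + \left(\frac{I_{n/2+1}(k)}{I_{n/2-1}(k)} - \frac{I_{n/2}^2(k)}{I_{n/2-1}^2(k)}\right)u\otimes u,$$

where $\mathbb{I}$ denotes the $n\times n$ identity matrix.

Proof. Expectation: We abbreviate the normalization constant in (5) as

$$c := \frac{k^{n/2-1}}{(2\pi)^{n/2}\,I_{n/2-1}(k)}. \tag{18}$$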

Following a similar procedure as in [10], we calculate the expectation. Let $b\in\mathbb{R}^n$ be an arbitrary vector; then, using (5), we obtain

where we use the summation convention (15). Note that $\theta$ is a unit vector and also the outward normal vector at $\theta$ on $\mathbb{S}^{n-1}$. Hence, we apply the divergence theorem on the unit ball $B_1(0)$ and continue the calculation as follows

where, in the third equality, we divide the integral over the ball into radial and angular components, and in the last step we apply (1).

Making use of the Bessel identities (A5) and (A6), we rewrite the term within the integral, that is,

Using (21), we simplify further and apply the fundamental theorem of calculus to obtain

Therefore, we find that

After simplifying, and noting that $b$ is an arbitrary vector, it follows that

$$\mathbb{E}(q) = \frac{I_{n/2}(k)}{I_{n/2-1}(k)}\,u. \tag{23}$$

Note that, from this calculation, we also obtain the following useful formula

by equating the right-hand side of the fourth equality in (20) with the right-hand side of the last equality in (22).

Second moment: We now calculate the second moment as well as the variance–covariance matrix for the von Mises–Fisher distribution (5).

Since the expectation of (5) is nonzero, we first calculate the second term in the variance–covariance matrix formula (11). Using (23), it is simply

$$\mathbb{E}(q)\otimes\mathbb{E}(q) = \frac{I_{n/2}^2(k)}{I_{n/2-1}^2(k)}\,u\otimes u. \tag{25}$$

Now, we calculate the first term in the variance–covariance matrix formula (11), which is the second moment, by following the approach in [10].

Let $c$ be defined by (18), and let $a,b\in\mathbb{R}^n$ be arbitrary vectors. Again, we use the fact that $\theta$ is the outward normal vector at $\theta$ on $\mathbb{S}^{n-1}$ to apply the divergence theorem. Thus, we obtain

The first integral is solved in (24). We calculate the integral in the second term as

by applying (1) at the second equality.

By using the Bessel identities (A5) and (A6), we can rewrite the term in the integral as

Using (26) and (A5), we obtain

Thus,

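Using (22) and (28), and since $a,b\in\mathbb{R}^n$ are arbitrary, we find that

$$\int_{\mathbb{S}^{n-1}}\theta\otimes\theta\,q(\theta)\,d\theta = \frac{I_{n/2}(k)}{k\,I_{n/2-1}(k)}\,\mathbb{I} + \frac{I_{n/2+1}(k)}{I_{n/2-1}(k)}\,u\otimes u. \tag{29}$$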

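Substituting (25) and (29) into the variance formula (11), we obtain

$$\mathbb{V}(q) = \frac{I_{n/2}(k)}{k\,I_{n/2-1}(k)}\,\mathbb{I} + \left(\frac{I_{n/2+1}(k)}{I_{n/2-1}(k)} - \frac{I_{n/2}^2(k)}{I_{n/2-1}^2(k)}\right)u\otimes u.$$

□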
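The closed forms in Theorem 1 are cheap to evaluate. The sketch below assumes only the Python standard library. It evaluates $I_p(k)$ from its power series, summing the terms in log space to avoid overflow, and builds

$$\mathbb{E}(q)=\frac{I_{n/2}}{I_{n/2-1}}\,u,\qquad \mathbb{V}(q)=\frac{I_{n/2}}{k\,I_{n/2-1}}\,\mathbb{I}+\left(\frac{I_{n/2+1}}{I_{n/2-1}}-\frac{I_{n/2}^2}{I_{n/2-1}^2}\right)u\otimes u.$$

The helper names are hypothetical. In practice, a library routine such as `scipy.special.iv` could replace the hand-written series.

```python
import math


def bessel_i(p, k, terms=200):
    """Modified Bessel function I_p(k) for k > 0 via its power series.

    Each term (k/2)^(2m+p) / (m! Gamma(m+p+1)) is evaluated in log space.
    Adequate for moderate k (roughly k < 100) with the default number of terms.
    """
    log_half_k = math.log(k / 2.0)
    return sum(
        math.exp((2 * m + p) * log_half_k - math.lgamma(m + 1) - math.lgamma(m + p + 1))
        for m in range(terms)
    )


def vmf_moments(u, k):
    """Expectation and variance-covariance matrix of the n-dim von Mises-Fisher law.

    u is a unit vector (sequence of length n), k > 0 the concentration parameter.
    """
    n = len(u)
    i_low = bessel_i(n / 2 - 1, k)
    i_mid = bessel_i(n / 2, k)
    i_high = bessel_i(n / 2 + 1, k)
    mean_coef = i_mid / i_low                     # E(q) = mean_coef * u
    iso_coef = i_mid / (k * i_low)                # coefficient of the identity
    dir_coef = i_high / i_low - mean_coef ** 2    # coefficient of u (x) u
    E = [mean_coef * ui for ui in u]
    V = [[(iso_coef if r == c else 0.0) + dir_coef * u[r] * u[c] for c in range(n)]
         for r in range(n)]
    return E, V


E, V = vmf_moments([0.0, 0.0, 1.0], 2.5)
print(E)
print(V)
```

Two sanity checks follow from the recurrence $I_{\nu-1}-I_{\nu+1}=(2\nu/k)\,I_\nu$:

- $\mathrm{tr}\,\mathbb{V}(q)+|\mathbb{E}(q)|^2=1$, which is the trace of the second moment of any distribution on the sphere.
- For $n=3$, $|\mathbb{E}(q)|=\coth k-1/k$.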

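Corollary 1. Consider the bimodal von Mises–Fisher distribution

$$q(\theta) = \frac{k^{n/2-1}}{2\,(2\pi)^{n/2}\,I_{n/2-1}(k)}\left(e^{k\,u\cdot\theta} + e^{-k\,u\cdot\theta}\right). \tag{31}$$

The expectation and the variance–covariance matrix for (31) are given by

$$\mathbb{E}(q) = 0,\qquad \mathbb{V}(q) = \frac{I_{n/2}(k)}{k\,I_{n/2-1}(k)}\,\mathbb{I} + \frac{I_{n/2+1}(k)}{I_{n/2-1}(k)}\,u\otimes u.$$

Proof. We can rewrite (31) as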

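$$q(\theta) = \frac12\left(q_u(\theta) + q_{-u}(\theta)\right),$$

where $q_u$ is defined by (5) and $q_{-u}$ is also defined by (5), but with $u$ replaced by $-u$. Using this, we can calculate the expectation and the variance–covariance matrix from the results in Theorem 1. The expectation is

$$\mathbb{E}(q) = \frac12\left(\mathbb{E}(q_u)+\mathbb{E}(q_{-u})\right) = \frac12\,\frac{I_{n/2}(k)}{I_{n/2-1}(k)}\,(u - u) = 0.$$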

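Since the expectation is 0, the variance–covariance matrix equals the second moment. We apply (29) to both terms of (31) and note that $(-u)\otimes(-u) = u\otimes u$. This gives

$$\mathbb{V}(q) = \frac{I_{n/2}(k)}{k\,I_{n/2-1}(k)}\,\mathbb{I} + \frac{I_{n/2+1}(k)}{I_{n/2-1}(k)}\,u\otimes u.$$

□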
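Corollary 1 can be checked numerically in two dimensions, where all integrals reduce to integrals over a single angle. The sketch below compares the closed-form variance–covariance matrix with a midpoint-rule quadrature of $\theta\otimes\theta$ against the bimodal density $c\cosh(k\,u\cdot\theta)$. Here $c=1/(2\pi I_0(k))$ is the $n=2$ normalization constant of (5). The helper names are hypothetical, and only the standard library is used.

```python
import math


def bessel_i(p, k, terms=200):
    """Modified Bessel function I_p(k), k > 0, from its power series (log-space terms)."""
    log_half_k = math.log(k / 2.0)
    return sum(
        math.exp((2 * m + p) * log_half_k - math.lgamma(m + 1) - math.lgamma(m + p + 1))
        for m in range(terms)
    )


def bimodal_vmf_variance(u, k):
    """Closed-form variance-covariance matrix of the bimodal law in n dimensions."""
    n = len(u)
    i_low = bessel_i(n / 2 - 1, k)
    iso = bessel_i(n / 2, k) / (k * i_low)
    par = bessel_i(n / 2 + 1, k) / i_low
    return [[(iso if r == c else 0.0) + par * u[r] * u[c] for c in range(n)] for r in range(n)]


def bimodal_variance_2d_quadrature(u, k, m=4000):
    """Second moment of c*cosh(k u.theta) on the unit circle (equals V since E = 0)."""
    c = 1.0 / (2.0 * math.pi * bessel_i(0, k))
    h = 2.0 * math.pi / m
    V = [[0.0, 0.0], [0.0, 0.0]]
    for j in range(m):
        phi = (j + 0.5) * h
        th = (math.cos(phi), math.sin(phi))
        w = c * math.cosh(k * (u[0] * th[0] + u[1] * th[1])) * h
        for r in range(2):
            for s in range(2):
                V[r][s] += th[r] * th[s] * w
    return V


u = (math.cos(0.3), math.sin(0.3))
print(bimodal_vmf_variance(u, 3.0))
print(bimodal_variance_2d_quadrature(u, 3.0))
```

The two matrices should agree to many digits, because the midpoint rule is spectrally accurate for smooth periodic integrands.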

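Example for $n=2$: The von Mises–Fisher distribution in two dimensions is called the von Mises distribution. It has been used in the various applications mentioned above. Hence, here, we recall the result for the bimodal von Mises distribution in two dimensions [10]:

$$\mathbb{E}(q) = 0,\qquad \mathbb{V}(q) = \frac12\left(1-\frac{I_2(k)}{I_0(k)}\right)\mathbb{I} + \frac{I_2(k)}{I_0(k)}\,u\otimes u.$$

This agrees with Corollary 1 for $n=2$, since $I_1(k)/(k\,I_0(k)) = \frac12\left(1 - I_2(k)/I_0(k)\right)$.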

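Example for $n=3$: It is interesting to consider the important case of the von Mises–Fisher distribution (5) for $n=3$. In this case, Theorem 1 gives

$$\mathbb{E}(q) = \frac{I_{3/2}(k)}{I_{1/2}(k)}\,u,\qquad \mathbb{V}(q) = \frac{I_{3/2}(k)}{k\,I_{1/2}(k)}\,\mathbb{I} + \left(\frac{I_{5/2}(k)}{I_{1/2}(k)} - \frac{I_{3/2}^2(k)}{I_{1/2}^2(k)}\right)u\otimes u.$$

In [10], these terms were found as

$$\mathbb{E}(q) = \left(\coth k - \frac1k\right)u,\qquad \mathbb{V}(q) = \left(\frac{\coth k}{k} - \frac{1}{k^2}\right)\mathbb{I} + \left(1 - \frac{3\coth k}{k} + \frac{3}{k^2} - \left(\coth k - \frac1k\right)^2\right)u\otimes u.$$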

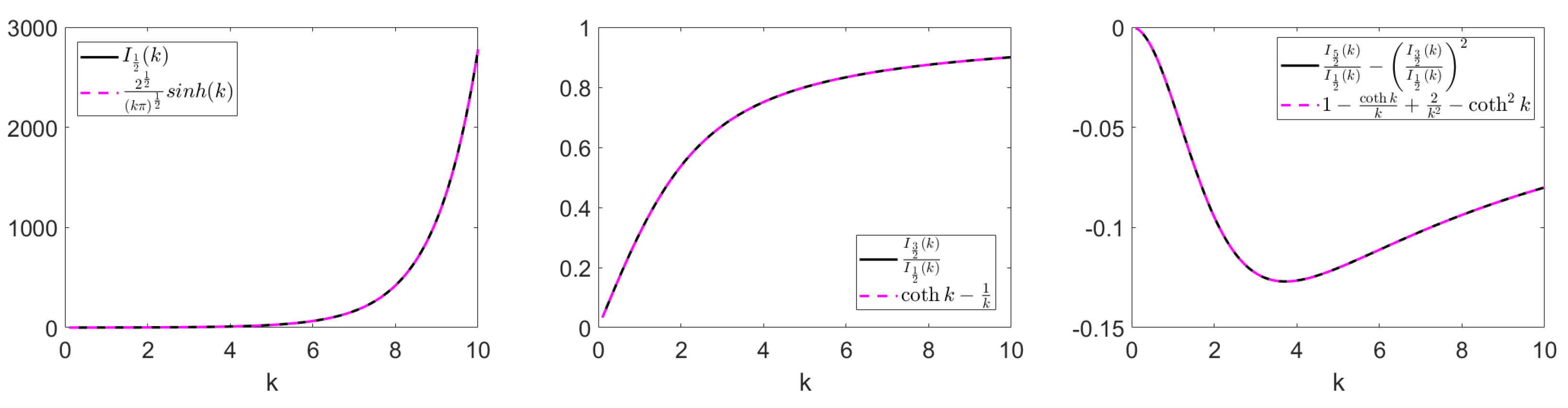

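Hence, we can compare the coefficients and find three new identities for Bessel functions:

$$\frac{I_{3/2}(k)}{I_{1/2}(k)} = \coth k - \frac1k, \tag{37}$$

$$\frac{I_{3/2}(k)}{k\,I_{1/2}(k)} = \frac{\coth k}{k} - \frac{1}{k^2}, \tag{38}$$

$$\frac{I_{5/2}(k)}{I_{1/2}(k)} = 1 - \frac{3\coth k}{k} + \frac{3}{k^2}. \tag{39}$$

Note that (37) is equivalent to (38) for $k\neq0$. These formulas can also be derived from the trigonometric forms of the Bessel functions $I_{1/2}$, $I_{3/2}$, and $I_{5/2}$ given in [24]:

$$I_{1/2}(k) = \sqrt{\frac{2}{\pi k}}\,\sinh k,\qquad I_{3/2}(k) = \sqrt{\frac{2}{\pi k}}\left(\cosh k - \frac{\sinh k}{k}\right),\qquad I_{5/2}(k) = \sqrt{\frac{2}{\pi k}}\left(\left(1+\frac{3}{k^2}\right)\sinh k - \frac{3\cosh k}{k}\right).$$

Figure 2 confirms numerically that the above identities hold.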
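The $n=3$ identities can also be checked directly. The sketch below compares series values of the half-integer Bessel ratios with the hyperbolic expressions $\coth k-1/k$ and $1-3\coth k/k+3/k^2$ over a range of $k$, and returns the largest absolute deviation. It uses only the standard library; `bessel_i` is a hypothetical series helper, not a library function.

```python
import math


def bessel_i(p, k, terms=200):
    """Modified Bessel function I_p(k), k > 0, from its power series (log-space terms)."""
    log_half_k = math.log(k / 2.0)
    return sum(
        math.exp((2 * m + p) * log_half_k - math.lgamma(m + 1) - math.lgamma(m + p + 1))
        for m in range(terms)
    )


def max_identity_error(ks):
    """Largest deviation in the three n = 3 identities over the given k values."""
    worst = 0.0
    for k in ks:
        coth = math.cosh(k) / math.sinh(k)
        r_mean = bessel_i(1.5, k) / bessel_i(0.5, k)   # I_{3/2} / I_{1/2}
        r_high = bessel_i(2.5, k) / bessel_i(0.5, k)   # I_{5/2} / I_{1/2}
        errors = (
            r_mean - (coth - 1.0 / k),
            r_mean / k - (coth / k - 1.0 / k ** 2),
            r_high - (1.0 - 3.0 * coth / k + 3.0 / k ** 2),
        )
        worst = max(worst, max(abs(e) for e in errors))
    return worst


print(max_identity_error([0.5, 1.0, 2.0, 5.0, 10.0, 20.0]))
```

The printed deviation should be at the level of floating-point rounding.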

In this section, we computed the first and second moments of the von Mises–Fisher distribution (5) in the proof of Theorem 1, which gives explicit forms of the expectation and variance–covariance matrix in $n$ dimensions. To our knowledge, these formulas for general dimension $n$ are new. Corollary 1 shows how to extend Theorem 1 to mixed-modal von Mises–Fisher distributions, giving analytical forms of their expectation and variance–covariance matrix. We used the bimodal von Mises–Fisher distribution as an example. As a side result, we also found new versions of Bessel function identities.

3. First and Second Moments of the Peanut Distribution

We first note that the peanut distribution (6), the ODF (7), and the Bingham distribution (8) are all of the form

$$q(\theta) = F\left(\theta^T A\,\theta\right)$$

with an appropriate function $F$, where $A$ is a given matrix. In the case of the ODF, we actually use $A^{-1}$, but the structure is the same. Due to the symmetry $q(-\theta) = q(\theta)$, the first moment equals zero:

Lemma 1. If $A$ is a given matrix and $q$ is of the form $q(\theta)=F(\theta^TA\theta)$, then $\mathbb{E}(q)=0$. Moreover, all odd moments vanish as well.

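Proof. Let $\theta_1$ denote the first coordinate of $\theta$ in $\mathbb{R}^n$. We split the sphere $\mathbb{S}^{n-1}$ according to the sign of the $\theta_1$ component, obtaining two hemispheres:

$$\mathbb{S}^{n-1}_{+} = \{\theta\in\mathbb{S}^{n-1}: \theta_1>0\},\qquad \mathbb{S}^{n-1}_{-} = \{\theta\in\mathbb{S}^{n-1}: \theta_1<0\}.$$

The map $\theta\mapsto-\theta$ sends $\mathbb{S}^{n-1}_{-}$ onto $\mathbb{S}^{n-1}_{+}$ and leaves $\theta^TA\theta$ unchanged, and the equator $\{\theta_1=0\}$ has measure zero. This allows us to exploit symmetry to get the following result:

$$\mathbb{E}(q) = \int_{\mathbb{S}^{n-1}_{+}}\theta\,F(\theta^TA\theta)\,d\theta + \int_{\mathbb{S}^{n-1}_{-}}\theta\,F(\theta^TA\theta)\,d\theta = \int_{\mathbb{S}^{n-1}_{+}}\theta\,F(\theta^TA\theta)\,d\theta - \int_{\mathbb{S}^{n-1}_{+}}\theta\,F(\theta^TA\theta)\,d\theta = 0.$$

The same argument applies to every odd moment, because $\theta\mapsto-\theta$ changes the sign of any product of an odd number of components of $\theta$.

□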
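Lemma 1 is easy to illustrate numerically in two dimensions. In the sketch below, the choice $F(x)=e^{x}$ and the matrix entries are arbitrary illustrations; normalization is irrelevant for the claim. On a midpoint grid with an even number of nodes, every node $\varphi$ is paired with $\varphi+\pi$. This mirrors the map $\theta\mapsto-\theta$ used in the proof, so the first moment and the third moment $\int\theta_1^2\theta_2\,F$ cancel up to rounding.

```python
import math


def odd_moments_2d(F, A, m=2000):
    """First moment and the third moment int theta_1^2 theta_2 F(theta^T A theta) on S^1."""
    h = 2.0 * math.pi / m
    first = [0.0, 0.0]
    third = 0.0
    for j in range(m):
        phi = (j + 0.5) * h
        c, s = math.cos(phi), math.sin(phi)
        quad = A[0][0] * c * c + (A[0][1] + A[1][0]) * c * s + A[1][1] * s * s
        w = F(quad) * h
        first[0] += c * w
        first[1] += s * w
        third += c * c * s * w
    return first, third


A = [[2.0, 0.7], [0.7, 0.5]]
first, third = odd_moments_2d(math.exp, A)
print(first, third)
```

All three printed values should be at the level of floating-point rounding, even though the density itself is clearly anisotropic.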

3.1. Second Moment of the Peanut Distribution

Here, we calculate the variance–covariance matrix for the peanut distribution (6). Since $\mathbb{E}(q)=0$, the variance–covariance matrix is simply the second moment of (6):

$$\mathbb{V}(q) = \int_{\mathbb{S}^{n-1}}\theta\otimes\theta\,q(\theta)\,d\theta.$$

With this, we can formulate our result, which, to our knowledge, is a new formula for the variance–covariance matrix of the peanut distribution (6) in $n$ dimensions.

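Theorem 2. Consider the peanut distribution (6). Then

$$\mathbb{E}(q) = 0,\qquad \mathbb{V}(q) = \frac{1}{n+2}\left(\mathbb{I} + \frac{2A}{\mathrm{tr}(A)}\right).$$

Proof. We have seen in Lemma 1 that $\mathbb{E}(q)=0$. For simplicity, we define the coefficient in (6) as

$$c := \frac{n}{|\mathbb{S}^{n-1}|\,\mathrm{tr}(A)}. \tag{44}$$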

To compute the second moment, we multiply $\theta\otimes\theta$ from the left and right by two arbitrary vectors $a,b\in\mathbb{R}^n$ to obtain a scalar value. Then, we use the fact that $\theta$ is the outward normal vector at $\theta$ on $\mathbb{S}^{n-1}$ to apply the divergence theorem. Using (6) and (44), we obtain the following result

where, in the third equality, the divergence theorem was applied and, in the last equality, the product rule was used.

Next, we simplify the final two terms in the above calculation. The first term simplifies as

since

, implying that

For the second term, we set

and simplify

In the above calculation, the divergence theorem was applied at the second equality, and the last equality made use of (A10).

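Therefore, since $a,b\in\mathbb{R}^n$ are arbitrary, it follows that

$$\mathbb{V}(q) = \frac{1}{n+2}\left(\mathbb{I} + \frac{2A}{\mathrm{tr}(A)}\right).$$

□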
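Theorem 2 can be checked by quadrature in three dimensions. The sketch below is an illustration only. It uses the fact that the surface measure on $\mathbb{S}^2$ is $dz\,d\varphi$ in cylindrical coordinates $(z,\varphi)$ and applies the midpoint rule in both variables. It then compares the resulting second moment of the peanut distribution with $\frac{1}{n+2}\big(\mathbb{I}+\frac{2A}{\mathrm{tr}(A)}\big)$ for an arbitrary, hypothetical positive definite $A$. The midpoint rule in $z$ is only second-order accurate, so agreement is expected to about $10^{-5}$.

```python
import math


def peanut_variance_formula(A):
    """(I + 2A / tr A) / (n + 2), the closed form of Theorem 2."""
    n = len(A)
    tr = sum(A[i][i] for i in range(n))
    return [[((1.0 if r == c else 0.0) + 2.0 * A[r][c] / tr) / (n + 2) for c in range(n)]
            for r in range(n)]


def peanut_variance_quadrature_3d(A, nz=400, nphi=64):
    """Second moment of q = 3/(4 pi tr A) theta^T A theta on S^2 by the midpoint rule."""
    tr = A[0][0] + A[1][1] + A[2][2]
    coef = 3.0 / (4.0 * math.pi * tr)  # n / (|S^2| tr A) with |S^2| = 4 pi
    hz, hphi = 2.0 / nz, 2.0 * math.pi / nphi
    V = [[0.0] * 3 for _ in range(3)]
    for iz in range(nz):
        z = -1.0 + (iz + 0.5) * hz
        rho = math.sqrt(1.0 - z * z)
        for ip in range(nphi):
            phi = (ip + 0.5) * hphi
            th = (rho * math.cos(phi), rho * math.sin(phi), z)
            q = coef * sum(th[a] * A[a][b] * th[b] for a in range(3) for b in range(3))
            w = q * hz * hphi
            for r in range(3):
                for c in range(3):
                    V[r][c] += th[r] * th[c] * w
    return V


A = [[3.0, 0.5, 0.2], [0.5, 1.0, 0.1], [0.2, 0.1, 0.5]]
print(peanut_variance_formula(A))
print(peanut_variance_quadrature_3d(A))
```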

In this section, we computed the first and second moments of the peanut distribution (6) to obtain explicit forms for the expectation and variance–covariance matrix in Theorem 2. To our knowledge, these analytical formulas for the peanut distribution are new. We also showed that spherical distributions of the form $q(\theta)=F(\theta^TA\theta)$ have $\mathbb{E}(q)=0$. We applied the same method to the ODF and the Bingham distributions, but, unfortunately, no closed-form expressions could be derived.

4. Eigenvalues and Anisotropy

When spherical distributions are used in diffusion models, the variance–covariance matrix describes the anisotropic movement of the random walkers [17]. The level of anisotropy can be measured by the eigenvalues of the diffusion tensor and by the fractional anisotropy ($FA$) [7]. The fractional anisotropy $FA$ is an index between 0 and 1: 0 corresponds to full radial symmetry (isotropy), and 1 to extreme anisotropic alignment with a particular direction. Another indicator of anisotropy is the ratio $R$ of the largest to the smallest eigenvalue of the diffusion tensor. This quantity ranges from 1 (isotropic) to $\infty$ (maximally anisotropic).

Note that the fractional anisotropy and the anisotropy ratio are only used for symmetric distributions, that is, distributions with $q(-\theta)=q(\theta)$. In this case, $\mathbb{E}(q)=0$ and

$$\mathbb{V}(q)=\int_{\mathbb{S}^{n-1}}\theta\otimes\theta\,q(\theta)\,d\theta.$$

Hence, the following applies to the peanut distribution and to the bimodal von Mises–Fisher distribution (31). The ODF and Bingham distributions are also symmetric, but we do not have explicit forms of their second moments. Therefore, we cannot compute their anisotropy values for general dimensions.

Recall that, in (4), we identified the diffusion tensor as

$$D=\frac{s^2}{\mu}\,\mathbb{V}(q),$$

where $s$ is the speed and $\mu$ is the turning rate of a cell or individual. Hence, the eigenvalues of $D$ are scaled versions of the eigenvalues of $\mathbb{V}(q)$.

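The fractional anisotropy ($FA$) formulas are defined separately for two and three dimensions; we summarize them in the following definition.

Definition 1.

1. The fractional anisotropy in two dimensions is given by
$$FA_2 = \sqrt{2}\,\frac{\sqrt{(\lambda_1-\bar\lambda)^2 + (\lambda_2-\bar\lambda)^2}}{\sqrt{\lambda_1^2+\lambda_2^2}}, \tag{48}$$
where $\lambda_1,\lambda_2$ are the eigenvalues of a two-dimensional tensor and $\bar\lambda$ is the average of the two eigenvalues [7].

2. The fractional anisotropy in three dimensions is given by
$$FA_3 = \sqrt{\frac32}\,\frac{\sqrt{(\lambda_1-\bar\lambda)^2 + (\lambda_2-\bar\lambda)^2 + (\lambda_3-\bar\lambda)^2}}{\sqrt{\lambda_1^2+\lambda_2^2+\lambda_3^2}}, \tag{49}$$
where $\lambda_1,\lambda_2,\lambda_3$ are the eigenvalues of a three-dimensional tensor and $\bar\lambda$ is the average of the three eigenvalues [7].

3. The anisotropy ratio $R$ is defined as
$$R = \frac{\lambda_{\max}}{\lambda_{\min}}. \tag{50}$$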
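The three indicators are straightforward to compute from eigenvalues. The sketch below assumes the common normalizations with prefactors $\sqrt2$ (two dimensions) and $\sqrt{3/2}$ (three dimensions), which place both fractional anisotropies in $[0,1]$. The function names are hypothetical.

```python
import math


def fa2(l1, l2):
    """Fractional anisotropy of a 2D tensor from its eigenvalues."""
    lbar = (l1 + l2) / 2.0
    dev = math.sqrt((l1 - lbar) ** 2 + (l2 - lbar) ** 2)
    return math.sqrt(2.0) * dev / math.sqrt(l1 ** 2 + l2 ** 2)


def fa3(l1, l2, l3):
    """Fractional anisotropy of a 3D tensor from its eigenvalues."""
    lbar = (l1 + l2 + l3) / 3.0
    dev = math.sqrt((l1 - lbar) ** 2 + (l2 - lbar) ** 2 + (l3 - lbar) ** 2)
    return math.sqrt(1.5) * dev / math.sqrt(l1 ** 2 + l2 ** 2 + l3 ** 2)


def anisotropy_ratio(eigenvalues):
    """Ratio R of the largest to the smallest eigenvalue."""
    return max(eigenvalues) / min(eigenvalues)


print(fa2(1.0, 1.0), fa2(1.0, 0.0))
print(fa3(1.0, 1.0, 1.0), fa3(1.0, 0.0, 0.0))
print(anisotropy_ratio([2.0, 1.0, 0.5]))
```

Equal eigenvalues give $FA=0$, and a single nonzero eigenvalue gives $FA=1$. The ratio $R$ requires a strictly positive smallest eigenvalue.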

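Theorem 3. Let $A$ be a symmetric, positive definite matrix with eigenvalue and eigenvector pairs $(\lambda_i, v_i)$, $i=1,\dots,n$. The diffusion tensor arising from the peanut distribution (6) is

$$D=\frac{s^2}{\mu\,(n+2)}\left(\mathbb{I}+\frac{2A}{\mathrm{tr}(A)}\right). \tag{51}$$

The diffusion tensor (51) has the eigenvalues

$$\lambda_i^D=\frac{s^2}{\mu\,(n+2)}\left(1+\frac{2\lambda_i}{\mathrm{tr}(A)}\right),\qquad i=1,\dots,n.$$

The fractional anisotropies and the ratio of eigenvalues are bounded:

$$FA_2<\frac{2}{\sqrt{10}}\approx0.63,\qquad FA_3<\frac{2}{\sqrt{11}}\approx0.60,\qquad R<3.$$

Proof. To compute the eigenvalues, we multiply both sides of (51) by the eigenvector $v_i$ of $A$ and obtain

$$D\,v_i=\frac{s^2}{\mu\,(n+2)}\left(v_i+\frac{2\lambda_i}{\mathrm{tr}(A)}\,v_i\right)=\lambda_i^D\,v_i.$$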

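The formulas for the fractional anisotropy follow directly by substituting the eigenvalues of (51) into (48) and (49), respectively. The upper bounds are approached in the limit where the largest eigenvalue of $A$ tends to $\mathrm{tr}(A)$, that is, where all other eigenvalues tend to 0. For the ratio $R$, we write

$$R=\frac{\mathrm{tr}(A)+2\lambda_{\max}}{\mathrm{tr}(A)+2\lambda_{\min}}=\frac{3\lambda_{\max}+\lambda_{\min}+\sum_j\lambda_j}{\lambda_{\max}+3\lambda_{\min}+\sum_j\lambda_j}<3.$$

Here, $\lambda_{\max}$ and $\lambda_{\min}$ denote the maximum and minimum eigenvalues of $A$, respectively. All other eigenvalues are called $\lambda_j$, and the trace becomes the sum of the eigenvalues. □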
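The bounds in Theorem 3 can be explored numerically. The sketch below is an illustration with hypothetical helper names, assuming $s^2/\mu=1$. It computes the eigenvalues $(\mathrm{tr}(A)+2\lambda_i)/((n+2)\,\mathrm{tr}(A))$ of the peanut diffusion tensor for randomly drawn positive eigenvalues of $A$ and records the largest ratio $R$ encountered. It also evaluates the two-dimensional fractional anisotropy (with the $\sqrt2$ normalization) close to the degenerate limit $\lambda_2\to0$, where it approaches $2/\sqrt{10}\approx0.632$.

```python
import math
import random


def peanut_eigenvalues(lams):
    """Eigenvalues of the peanut diffusion tensor (s^2/mu = 1) from those of A."""
    n = len(lams)
    tr = sum(lams)
    return [(tr + 2.0 * lam) / ((n + 2) * tr) for lam in lams]


def fa2(l1, l2):
    """Two-dimensional fractional anisotropy with the sqrt(2) normalization."""
    lbar = (l1 + l2) / 2.0
    dev = math.sqrt((l1 - lbar) ** 2 + (l2 - lbar) ** 2)
    return math.sqrt(2.0) * dev / math.sqrt(l1 ** 2 + l2 ** 2)


def largest_ratio(n, trials=10000, seed=0):
    """Largest R = max/min eigenvalue of D over random positive spectra of A."""
    rng = random.Random(seed)
    worst = 1.0
    for _ in range(trials):
        # log-uniform eigenvalues spanning ten orders of magnitude
        lams = [10.0 ** rng.uniform(-8.0, 2.0) for _ in range(n)]
        ev = peanut_eigenvalues(lams)
        worst = max(worst, max(ev) / min(ev))
    return worst


print(largest_ratio(2), largest_ratio(5))  # both stay below 3
print(fa2(*peanut_eigenvalues([1.0, 1e-9])), 2 / math.sqrt(10))
```

However extreme the spectrum of $A$, the ratio approaches but never reaches 3, in agreement with the theorem.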

It is surprising that the anisotropy ratio is bounded by 3 in any space dimension and that the fractional anisotropies are uniformly bounded by a value less than 1. This means that the peanut distribution does not allow for arbitrary anisotropy and, for that reason, is of limited use in the modelling of biological movement data.

Next, we consider the anisotropy of the bimodal von Mises–Fisher distribution (31).

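Theorem 4. Let $u\in\mathbb{S}^{n-1}$ be a given direction and $k$ the concentration parameter. The diffusion tensor arising from the bimodal von Mises–Fisher distribution (31) is of the form

$$D = \frac{s^2}{\mu}\left(\frac{I_{n/2}(k)}{k\,I_{n/2-1}(k)}\,\mathbb{I} + \frac{I_{n/2+1}(k)}{I_{n/2-1}(k)}\,u\otimes u\right). \tag{55}$$

The diffusion tensor (55) has eigenvalues

$$\lambda_1 = \frac{s^2}{\mu}\left(\frac{I_{n/2}(k)}{k\,I_{n/2-1}(k)} + \frac{I_{n/2+1}(k)}{I_{n/2-1}(k)}\right),\qquad \lambda_2=\dots=\lambda_n = \frac{s^2}{\mu}\,\frac{I_{n/2}(k)}{k\,I_{n/2-1}(k)}.$$

The anisotropies (for $n=2$ and $n=3$, respectively) and the ratio of the eigenvalues satisfy $0\le FA_2, FA_3<1$ and $1\le R<\infty$. Moreover, $FA_2, FA_3\to0$ and $R\to1$ as $k\to0$, and $FA_2, FA_3\to1$ and $R\to\infty$ as $k\to\infty$.

Proof. To compute the eigenvalues of (55), we first take the vector $u$ and show that it is indeed an eigenvector of (55), since

$$Du = \frac{s^2}{\mu}\left(\frac{I_{n/2}(k)}{k\,I_{n/2-1}(k)} + \frac{I_{n/2+1}(k)}{I_{n/2-1}(k)}\right)u.$$

Hence, $\lambda_1$ is the eigenvalue associated with $u$. Now, we take any $w\in\mathbb{R}^n\setminus\{0\}$ with $w\cdot u = 0$ and show that it is an eigenvector of (55) as well, since

$$Dw = \frac{s^2}{\mu}\,\frac{I_{n/2}(k)}{k\,I_{n/2-1}(k)}\,w.$$

Thus, $w$ is an eigenvector with eigenvalue $\lambda_2$. Since we can choose $n-1$ mutually perpendicular vectors orthogonal to $u$, we conclude that $\lambda_2=\dots=\lambda_n$.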

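The formulas for the anisotropies and for $R$ follow from substituting the determined eigenvalues into the definitions. To determine the bounds, we compute the limits of $\frac{I_{n/2}(k)}{k\,I_{n/2-1}(k)}$ and $\frac{I_{n/2+1}(k)}{I_{n/2-1}(k)}$. We start with the isotropic case $k\to0$. Applying the expansion of Bessel functions (A4), we obtain

$$\lim_{k\to0}\frac{I_{n/2}(k)}{k\,I_{n/2-1}(k)}=\frac{1}{n}\qquad\text{and}\qquad\lim_{k\to0}\frac{I_{n/2+1}(k)}{I_{n/2-1}(k)}=0.$$

To evaluate the limit as $k\to\infty$, we apply the expansion (A7) for large $k$ to obtain

$$\lim_{k\to\infty}\frac{I_{n/2}(k)}{k\,I_{n/2-1}(k)}=0\qquad\text{and}\qquad\lim_{k\to\infty}\frac{I_{n/2+1}(k)}{I_{n/2-1}(k)}=1.$$

Now, we compute the bounds for the ratio $R$, which is the eigenvalue along $u$ divided by the eigenvalue orthogonal to $u$:

$$R=1+\frac{k\,I_{n/2+1}(k)}{I_{n/2}(k)}.$$

By the above calculations, $R\to1$ as $k\to0$. Further, $R\to\infty$ as $k\to\infty$, since $I_{n/2+1}(k)/I_{n/2}(k)\to1$ by (A7). Finally, as $k\to0$ all eigenvalues tend to $s^2/(n\mu)$. As $k\to\infty$, the eigenvalue along $u$ tends to $s^2/\mu$ and all other eigenvalues tend to 0. So, $FA_2\to0$ and $FA_3\to0$ as $k\to0$, and $FA_2\to1$ and $FA_3\to1$ as $k\to\infty$. □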
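The contrast with the peanut distribution is visible numerically. The sketch below evaluates the two distinct eigenvalues of (55) as functions of $k$, assuming $s^2/\mu=1$ and using a series evaluation of $I_p$ with hypothetical helper names. Dividing the eigenvalue along $u$ by the eigenvalue orthogonal to $u$ gives $R=1+k\,I_{n/2+1}(k)/I_{n/2}(k)$. For small $k$, both eigenvalues are close to $1/n$. For large $k$, the eigenvalue along $u$ approaches 1 and $R$ grows without bound.

```python
import math


def bessel_i(p, k, terms=200):
    """Modified Bessel function I_p(k), k > 0, from its power series (log-space terms)."""
    log_half_k = math.log(k / 2.0)
    return sum(
        math.exp((2 * m + p) * log_half_k - math.lgamma(m + 1) - math.lgamma(m + p + 1))
        for m in range(terms)
    )


def bimodal_eigenvalues(n, k):
    """Eigenvalue along u and the (n-1)-fold eigenvalue orthogonal to u (s^2/mu = 1)."""
    i_low = bessel_i(n / 2 - 1, k)
    ortho = bessel_i(n / 2, k) / (k * i_low)
    along = ortho + bessel_i(n / 2 + 1, k) / i_low
    return along, ortho


for k in (0.01, 0.5, 2.0, 10.0, 40.0):
    along, ortho = bimodal_eigenvalues(3, k)
    print(f"k={k:5.2f}  along={along:.4f}  ortho={ortho:.4f}  R={along / ortho:.2f}")
```

Unlike the peanut case, $R$ keeps increasing with $k$, which is the behaviour plotted in Figure 3.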

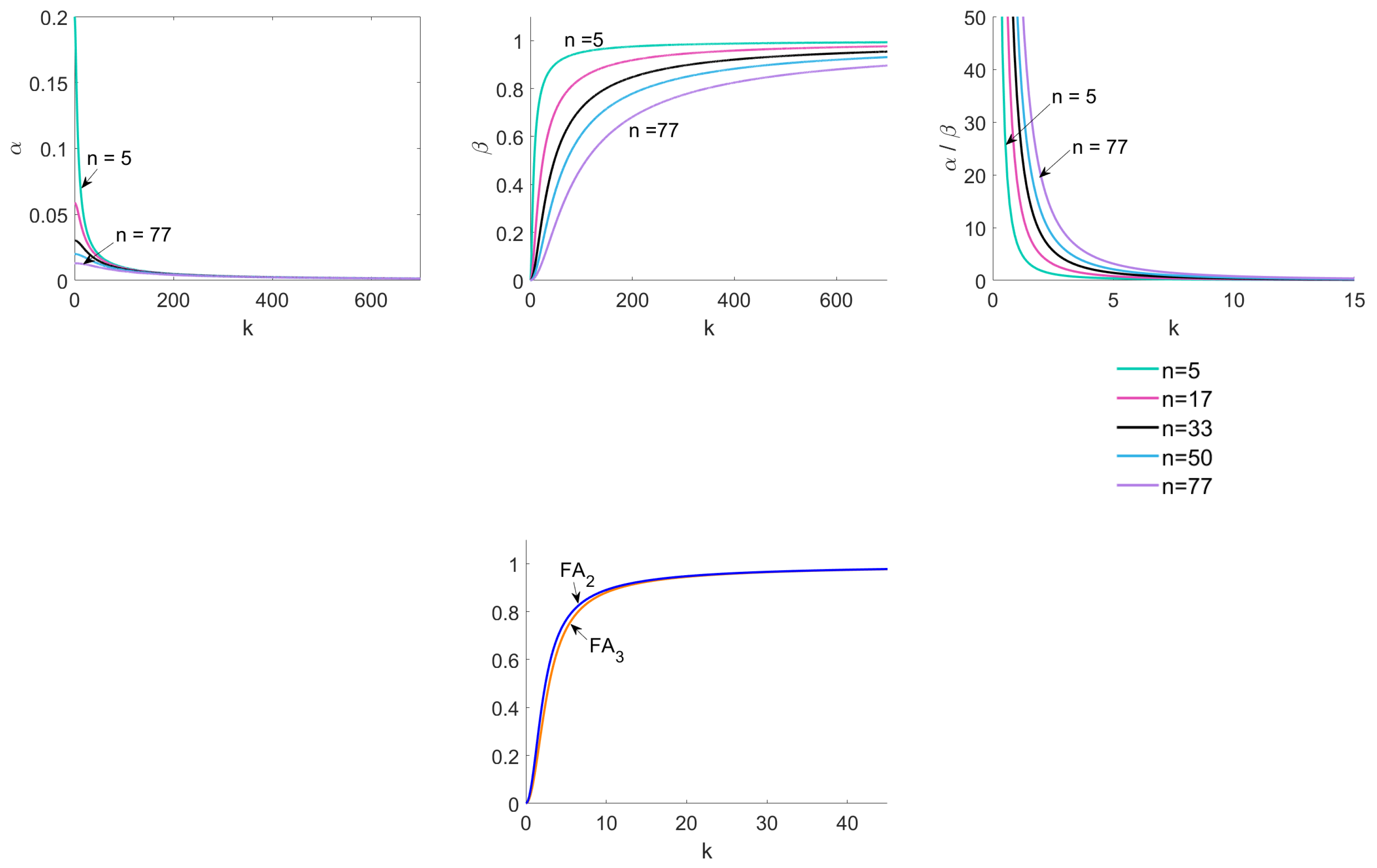

In Figure 3, we confirm our calculations numerically. We plot

,

,

,

,

with respect to

$k$ and illustrate that they converge to the limits computed above. Note that, for some panels in Figure 3, we truncated the intervals to show the functions more clearly.

Summary

In this section, we computed the eigenvalues of the diffusion tensors for the peanut (6) and bimodal von Mises–Fisher (31) distributions in Theorems 3 and 4. We also derived explicit versions of the $FA_2$ and $FA_3$ formulas as well as the ratio $R$ in Theorems 3 and 4. The $FA_2$, $FA_3$, and $R$ values for the peanut distribution have been shown to be bounded, unlike those for the von Mises–Fisher distribution.

5. An Illustrative Example of Glioma Spread

To illustrate the main results, we consider a glioma spread model in a simplified scenario. Glioma are highly aggressive brain tumors that invade healthy tissue guided by white matter fibre tracts [25]. This process has been successfully modelled using Fisher–KPP-type equations (see model (66) below) [7,26,27]. The underlying anisotropy of white matter tracts can be measured through diffusion tensor MRI (DTI) [18,28,29], and this is where we start our illustrative example. We consider a two-dimensional domain and assume that a diffusion tensor is measured as

This indicates a strong bias in the $x$ direction and much less movement in the $y$ direction. DTI measurements record the movement of water molecules [18,29], which we need to translate into a movement model for cancer cells. We do this with the transport equation approach mentioned in Section 1.1, using the peanut distribution and the bimodal von Mises distribution, respectively. Both methods give an effective diffusion tensor for the glioma cells.

We also assume, for simplicity, that the movement speed and turning rate are normalized such that $s^2/\mu = 1$.

Taking $A$ from above, with $n=2$, we compute the two-dimensional diffusion tensor of the peanut distribution (51) as

To compute the corresponding diffusion tensor for the bimodal von Mises distribution (55) for $n=2$, we need the dominant eigenvector $u$ and the concentration parameter $k$. In [7], the model was fitted to glioma patient data, and the concentration parameter $k$ was found to be about 3–5 times the fractional anisotropy of the patients' DTI data. Hence, here, we assume

The dominant direction is

. Then, from (36), we find

We see immediately that the von Mises tensor is more anisotropic than the peanut tensor above.

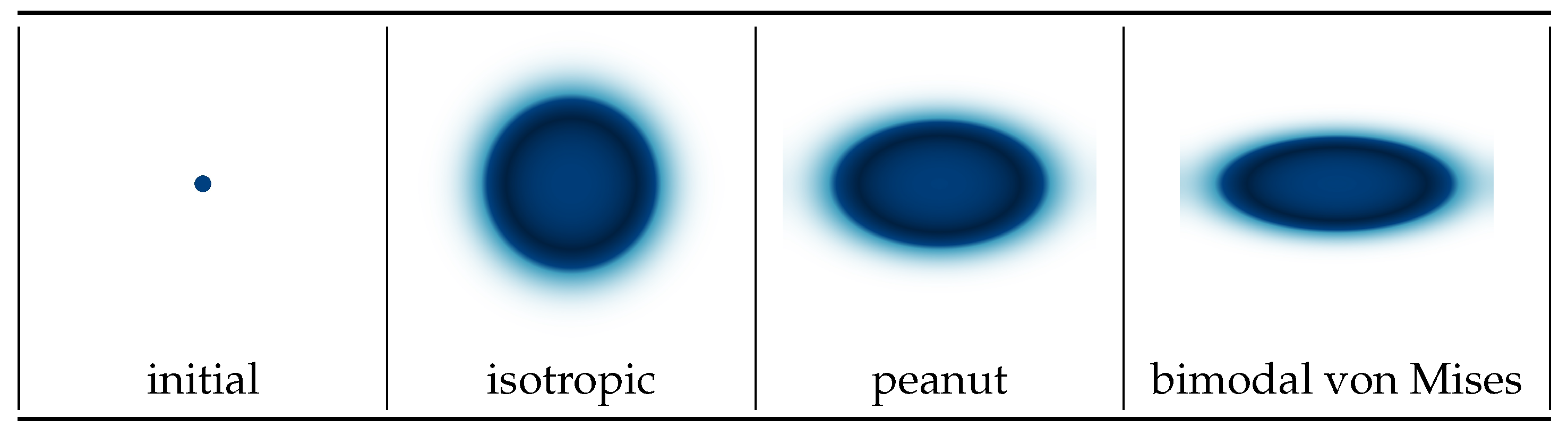

We now take these two diffusion tensors, (64) and (65), together with an isotropic tensor for comparison, and solve the corresponding anisotropic glioma model [7]

$$\rho_t=\nabla\nabla:(D\,\rho)+r\,\rho\,(1-\rho) \tag{66}$$

on a square domain with no-flux boundary conditions. Here, $\rho(t,x)$ describes the glioma cell density as a function of space and time, and $r$ is the maximal cancer growth rate. We choose

and we initialize the simulation with a small cancer seed in the middle of the domain. In Figure 4, we show the initial condition and the model results after 40 time units. We see that the von Mises distribution is able to describe a stronger directional bias than the peanut distribution. Note that, in this example, $A$ is constant in space. The example therefore applies to a small region of tissue, such as a short section of a white matter tract in the brain. For larger domains, like the entire brain, $A=A(x)$ depends on space, and more detailed modelling is needed. This was done, for example, in [7,11,20].
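A simulation of this kind can be sketched with an explicit finite-difference scheme. The code below is a minimal illustration under stated assumptions and does not reproduce Figure 4, since the measured tensor and the parameter values of the example are not reproduced here. It makes the following assumptions:

- The diffusion tensor is diagonal and constant, with hypothetical entries. In this case, the anisotropic diffusion term of the Fisher–KPP-type model (66) reduces to $D_{11}\partial_{xx}+D_{22}\partial_{yy}$ applied to the density.
- No-flux boundaries are imposed through mirrored ghost values.
- The time step is kept below the explicit stability limit $\Delta t\le h^2/(2(D_{11}+D_{22}))$.

```python
def simulate_glioma(d11, d22, r=1.0, length=20.0, nx=41, t_end=5.0):
    """Explicit scheme for rho_t = d11 rho_xx + d22 rho_yy + r rho (1 - rho) on a square.

    Hypothetical setup: diagonal constant diffusion tensor, unit seed in the
    centre cell, no-flux boundaries via mirrored ghost values.
    Returns the final grid rho[i][j] (i indexes x, j indexes y) and the spacing h.
    """
    h = length / (nx - 1)
    dt = 0.2 * h * h / max(d11, d22)  # below h^2 / (2 (d11 + d22))
    steps = int(round(t_end / dt))
    rho = [[0.0] * nx for _ in range(nx)]
    rho[nx // 2][nx // 2] = 1.0
    for _ in range(steps):
        new = [[0.0] * nx for _ in range(nx)]
        for i in range(nx):
            ip = i + 1 if i + 1 < nx else i - 1  # mirror at the boundary
            im = i - 1 if i > 0 else i + 1
            for j in range(nx):
                jp = j + 1 if j + 1 < nx else j - 1
                jm = j - 1 if j > 0 else j + 1
                rc = rho[i][j]
                rxx = (rho[ip][j] - 2.0 * rc + rho[im][j]) / (h * h)
                ryy = (rho[i][jp] - 2.0 * rc + rho[i][jm]) / (h * h)
                new[i][j] = rc + dt * (d11 * rxx + d22 * ryy + r * rc * (1.0 - rc))
        rho = new
    return rho, h


def spread(rho, h):
    """Second moments of the density about the centre in x and y."""
    nx = len(rho)
    c = nx // 2
    sx = sum(rho[i][j] * ((i - c) * h) ** 2 for i in range(nx) for j in range(nx))
    sy = sum(rho[i][j] * ((j - c) * h) ** 2 for i in range(nx) for j in range(nx))
    return sx, sy


rho_aniso, h = simulate_glioma(0.8, 0.2)   # hypothetical anisotropic tensor
rho_iso, _ = simulate_glioma(0.5, 0.5)     # isotropic comparison
print(spread(rho_aniso, h), spread(rho_iso, h))
```

With the time step above, the explicit scheme keeps the density between 0 and 1. The anisotropic run spreads visibly further in $x$ than in $y$, while the isotropic run spreads equally in both directions.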

6. Discussion

The standard way to compute moments of spherical distributions would be to use polar coordinates and engage in a series of trigonometric integrations. While this is often possible in two and three dimensions, it becomes tedious, or impossible, in higher dimensions. Here, we present an alternative method that can be used in any space dimension. This method was first used in [10] in two and three dimensions. It uses the divergence theorem on the unit ball to transform and simplify the integrals as much as possible. As a result, we obtain new explicit formulas for the variance–covariance matrices of the $n$-dimensional von Mises–Fisher distribution (Theorem 1) and the $n$-dimensional peanut distribution (Theorem 2). The formulas in Theorem 1 involve Bessel functions, which are standard functions in scientific software packages, and the formula in Theorem 2 involves only the matrix $A$ and its trace. Hence, the numerical implementation of these formulas is straightforward. We also tried to apply the method to compute the first and second moments of other spherical distributions, such as the orientation distribution function (ODF) (7) and the Bingham distribution (8), but this did not lead to closed-form expressions. However, because the Bingham and ODF distributions are symmetric, we do show that their expectation is zero. We also note that, to our knowledge, the Bingham and Kent distributions have not yet been used in a biological context. They seem to be more relevant in geophysical applications [30]. Hence, they are not the main focus of our paper.

Once the variance–covariance matrices were established, we considered levels of anisotropy as measured through the fractional anisotropies and the ratio of maximal to minimal eigenvalues. Surprisingly, the anisotropy of the peanut distribution is bounded away from 1 (Theorem 3), which makes it problematic to use in modelling. The anisotropy of the von Mises–Fisher distribution covers the entire range from isotropic to fully anisotropic (Theorem 4), and it seems to have no restriction when used for modelling.

The explicit formulas derived here can significantly decrease the computation time in problems with large amounts of data that require a higher-dimensional von Mises–Fisher distribution [31]. They can also decrease the computation time when simulating the transport equations (partial differential equations) that use biological movement data [3,4,7]. Moreover, the formulas derived for the general von Mises–Fisher distribution can easily be extended to mixed von Mises–Fisher distributions, giving their expectation and variance–covariance matrices by substituting the appropriate directional vectors and adding up the terms.

As we compute the higher dimensional integrals, we showcase a geometric interpretation of these integrals and the use of the divergence theorem. Hence, we combine the theory of distributions with calculus in a new way. Besides our explicit formulas, we believe our approach can open the door for further computations of explicit formulas for expectation, variance, and even higher moments of spherical distributions and enable further applications in biology.