1. Introduction

In this paper, we investigate the following mixed density model. The random vectors

are independent and identically distributed with a common density

satisfying

In the above equation,

is a compact subset of

, and

is a known mixture parameter,

. Both

and

are bounded densities. The model aims to recover the unknown density

using the observed sample

.
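As an illustration of the sampling scheme, the following minimal sketch draws observations from a two-component mixture. It assumes the mixture takes the form g = α f0 + (1 − α) f on [0, 1]; which component carries the weight α, the function name `sample_mixture`, and the particular component densities (uniform and Beta(2, 5)) are assumptions made here for illustration and are not taken from the model statement.

```python
import numpy as np

def sample_mixture(n, alpha, sample_f0, sample_f, rng):
    """Draw n points from g = alpha * f0 + (1 - alpha) * f (assumed form)."""
    from_f0 = rng.random(n) < alpha          # latent component labels
    n0 = int(from_f0.sum())
    x = np.empty(n)
    x[from_f0] = sample_f0(n0, rng)          # draws from the known component
    x[~from_f0] = sample_f(n - n0, rng)      # draws from the unknown component
    return x

rng = np.random.default_rng(0)
# Hypothetical components on [0, 1]: f0 uniform, f = Beta(2, 5).
x = sample_mixture(
    4096, 0.3,
    sample_f0=lambda m, r: r.random(m),
    sample_f=lambda m, r: r.beta(2.0, 5.0, size=m),
    rng=rng,
)
```

Only the pooled sample `x` is observed; the labels `from_f0` are discarded, which is what makes recovering the unknown component a nontrivial estimation problem.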

This mixed density model has many practical applications. In the contamination problem [

1,

2], the density function

standing for a reasonable assumed distribution is contaminated by an arbitrary distribution

. In addition, this model is also widely used in microarray analysis [

3,

4,

5], neuroimaging [

6] and other testing problems [

7,

8]. In multiple testing, Efron et al. [

9] used the above mixed model to estimate the local false discovery rate.

For the density estimation problem (

1), significant results have been established through various methods, including the kernel method, maximum likelihood estimation, and polynomial techniques. Olkin and Spiegelman [

10] estimated the mixture parameter

by the maximum likelihood method, and proposed a kernel density estimator of the true density function

. Priebe and Marchette [

11] proposed using parameter estimates as weights for kernel density estimation. James et al. [

12] constructed a semiparametric density estimator, and studied the almost sure convergence rate of this estimator under some mild conditions. Robin et al. [

13] estimated the unknown density function using a weighted kernel function with adaptively selected weights. The pointwise quadratic risk of this randomly weighted kernel estimator was derived in [

14], assuming that the unknown density function belongs to a Hölder class. The convergence rates and asymptotic behavior of the density estimator were derived in [

15], considering the cases of a known and an unknown mixture parameter, respectively. Deb et al. [

16] proposed likelihood-based methods for estimating the distribution function of the density function

, and discussed the statistical and computational properties of these methods. To the best of our knowledge, no existing paper focuses on wavelet methods for estimating the unknown density function

.

This paper focuses on developing wavelet-based methods to address the density estimation problem (

1). Wavelets have the important property of local time–frequency analysis. Owing to this property, a wavelet estimator can choose suitable scale parameters for estimating functions that behave differently on different intervals. Wavelet methods are now a staple tool in nonparametric statistics; see [

17,

18,

19,

20,

21,

22]. In this paper, a linear wavelet density estimator is first introduced via the wavelet projection approach. Notably, this linear estimator is unbiased. A convergence rate of the linear estimator under

-risk is proved in Besov spaces. Although it achieves the optimal convergence rate typical of nonparametric wavelet estimation, this linear estimator is not adaptive. Second, an adaptive nonlinear wavelet estimator is constructed using the hard thresholding method. Compared with the linear estimator, the nonlinear estimator achieves a superior convergence rate when

. Finally, a series of numerical experiments is provided to study the performance of the two wavelet estimators.

The rest of the paper proceeds as follows.

Section 2 provides the definitions of the two wavelet density estimators and their convergence rates under

-risk. The performance of the two wavelet estimators is studied through numerical experiments in

Section 3. The proofs of the main results and some auxiliary results are presented in

Section 4.

2. Wavelet Estimators and Main Results

This work investigates wavelet-based density estimation for mixed models within Besov spaces. Let us first review several fundamental concepts in wavelet theory. The orthogonal multiresolution analysis (MRA) [

23] defines a sequence of nested and closed linear subspaces

, which belong to the space of square-integrable functions

for any

,

- (i)

,

- (ii)

if and only if

- (iii)

There exists a function for which the set forms an orthonormal basis of .

Given an orthonormal scaling function

, the corresponding wavelets are denoted by

for

. Then

form an orthonormal basis of

. For every integer

, the function

can be represented by

S in the following wavelet series:

In this equation,

,

,

.

Let

denote the orthogonal projection operator from

onto the subspace

. Then, for any

,

For nonparametric density estimation based on wavelet methods, it is common to assume that the unknown density function belongs to a Besov space. Besov spaces are very general function spaces and can be characterized simply in terms of wavelet coefficients. Following [

23], we next define Besov spaces via their wavelet coefficient characterization.

Lemma 1. Suppose the scale function Φ is regular of order ω, , and let , . Then the following statements are equivalent:

- (i)

- (ii)

- (iii)

.

One can characterize the Besov norm of f as follows:

with .

We now introduce the linear wavelet estimator as follows:

In the above definition,

and

. In these definitions,

stands for a known mixture parameter of the model (

1). For the scale function

, this paper uses the Daubechies wavelets [

24]. It is well known that the simplest of these is the Haar wavelet. The corresponding scale functions of Daubechies wavelets can be obtained by an iterative scheme starting from the Haar scale function [

24,

25]. Next, we derive the convergence rate of the linear wavelet estimator, using the notation:

. There exists a constant

,

denotes

;

denotes

; and

denotes both

and

.

Theorem 1. Consider the model (1), , where , . Define the linear wavelet estimator by (4), taking where , then

Remark 1. When , the convergence rate of the linear wavelet estimator matches the optimal convergence rate [26] for standard nonparametric wavelet estimation problems. Compared with the optimal convergence rate

[

26], the linear wavelet estimator attains a slower convergence rate in the case of

. Moreover, the definition of the linear wavelet estimator

requires knowledge of the smoothness parameter

s of the unknown density

. However, the smoothness parameter

s of the density function is usually unknown in practical applications. As a result, this linear wavelet estimator is not adaptive. To overcome these shortcomings of the linear estimator, this paper employs a hard thresholding method to construct a nonlinear wavelet estimator.
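The idea behind the linear estimator can be sketched numerically as follows. This is a minimal illustration rather than the estimator (4) itself: it swaps in the Haar scale function in place of a general Daubechies scale function, restricts attention to data on [0, 1], and assumes the mixture has the form g = α f0 + (1 − α) f, so that subtracting the known component and rescaling by 1/(1 − α) gives an unbiased coefficient estimate. The names `haar_linear_estimate` and `f0_coeff` are hypothetical.

```python
import numpy as np

def haar_linear_estimate(x, j, alpha, f0_coeff, grid):
    """Haar projection estimate of f at level j on [0, 1].

    Assumes g = alpha * f0 + (1 - alpha) * f, so that
    E[phi_jk(X)] = alpha * <f0, phi_jk> + (1 - alpha) * <f, phi_jk>.
    """
    m = 2 ** j
    # Empirical mean of phi_jk(X_i), with phi_jk = 2^{j/2} 1{k <= 2^j x < k + 1}.
    k_of_x = np.clip(np.floor(x * m).astype(int), 0, m - 1)
    emp = np.bincount(k_of_x, minlength=m) / len(x) * 2 ** (j / 2)
    # Remove the known component and rescale (unbiased under the assumption).
    coeff = (emp - alpha * f0_coeff(j)) / (1.0 - alpha)
    # Evaluate sum_k coeff_k * phi_jk on the grid.
    k_of_grid = np.clip(np.floor(grid * m).astype(int), 0, m - 1)
    return coeff[k_of_grid] * 2 ** (j / 2)

rng = np.random.default_rng(1)
n, alpha = 4096, 0.3
# Hypothetical data: f0 uniform on [0, 1], f = Beta(2, 5).
x = np.where(rng.random(n) < alpha, rng.random(n), rng.beta(2.0, 5.0, size=n))
# For uniform f0, <f0, phi_jk> = 2^{-j/2} for every k.
uniform_coeff = lambda j: np.full(2 ** j, 2.0 ** (-j / 2))
grid = (np.arange(256) + 0.5) / 256
f_hat = haar_linear_estimate(x, 4, alpha, uniform_coeff, grid)
```

With the Haar scale function the estimate is piecewise constant on dyadic intervals of length 2^{-j}, which makes the role of the scale parameter easy to see: a larger j gives finer resolution but noisier coefficients.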

We define the following nonlinear wavelet estimator:

In this equation,

and

. Then, the wavelet function

can be constructed by the scaling relation and the scale function

. For more details, see [

24,

25]. Here, the function

is an indicator function on

G and

. The convergence rate of this nonlinear wavelet estimator is presented as follows.

Theorem 2. Consider the model (1), , where , . The nonlinear estimator is constructed by (6) together with , ; then

Remark 2. Note that the convergence rate of this nonlinear wavelet estimator matches the optimal convergence rate up to the factor.

Remark 3. Both the linear and the nonlinear estimators attain optimal rates for . However, the nonlinear wavelet estimator achieves a better convergence rate when . More importantly, the definition of the nonlinear estimator does not depend on the smoothness parameter of the unknown density function, which makes this wavelet estimator adaptive.
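The hard thresholding step can be illustrated with a short sketch. The code below is not the estimator (6): it uses Haar wavelets, data on [0, 1], the assumed mixture form g = α f0 + (1 − α) f with f0 uniform, and an assumed threshold of the common form κ·sqrt(ln n / n). The function names and the value of κ are hypothetical.

```python
import numpy as np

def haar_detail_coeffs(x, j, alpha):
    """Estimated Haar detail coefficients at level j for data in [0, 1].

    Assumes g = alpha * f0 + (1 - alpha) * f with f0 uniform on [0, 1],
    so <f0, psi_jk> = 0 and only the 1 / (1 - alpha) rescaling remains.
    """
    m = 2 ** j
    half = np.clip(np.floor(x * 2 * m).astype(int), 0, 2 * m - 1)
    counts = np.bincount(half, minlength=2 * m)
    # psi_jk = 2^{j/2} * (+1 on the left half of bin k, -1 on the right half).
    emp = (counts[0::2] - counts[1::2]) / len(x) * 2 ** (j / 2)
    return emp / (1.0 - alpha)

def hard_threshold(beta, kappa, n):
    """Keep beta_jk only where |beta_jk| >= kappa * sqrt(ln n / n)."""
    t_n = np.sqrt(np.log(n) / n)
    return np.where(np.abs(beta) >= kappa * t_n, beta, 0.0)

rng = np.random.default_rng(2)
n, alpha = 4096, 0.3
# Hypothetical data: f0 uniform on [0, 1], f = Beta(2, 5).
x = np.where(rng.random(n) < alpha, rng.random(n), rng.beta(2.0, 5.0, size=n))
beta = haar_detail_coeffs(x, 5, alpha)
kept = hard_threshold(beta, kappa=1.0, n=n)
```

The threshold depends only on the sample size and the constant κ, not on the smoothness of the unknown density, which is the sense in which a thresholded estimator of this kind is adaptive.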

Remark 4. According to the model (1), it is easy to see that the density function has a compact support Ω on . It should be pointed out that this compact support property plays an important role in the definitions of the two wavelet estimators and in the proofs of the two theorems. Specifically, in the definitions of the two wavelet estimators, and rely on the compact support property. Then the cardinalities of and satisfy and , respectively. These results are used in several steps of the proofs of the two theorems, such as (12), (13) and (27), among others. Hence, similar to the classical and important work of Donoho et al. [26], this paper considers the nonparametric estimation of a density function under a compact support condition. On the other hand, for nonparametric density estimation without the compact support assumption, some significant studies have been conducted in [27,28].

3. Numerical Experiments

This section presents numerical experiments using

R2024 software to examine the approximation performance of the linear and nonlinear wavelet estimators. In the experiments, the density function

is estimated from the observed data set

. Because, in the definitions of the two wavelet estimators, the scale parameters

and

are related to the sample size

n, we choose the sample size

in the following simulation studies. In the model (

1), the mixture parameter

is selected to be

. To assess the performance of both estimators, we adopt the mean square error (MSE) as the evaluation criterion, i.e.,

, which is a classical and effective evaluation criterion in nonparametric estimation.

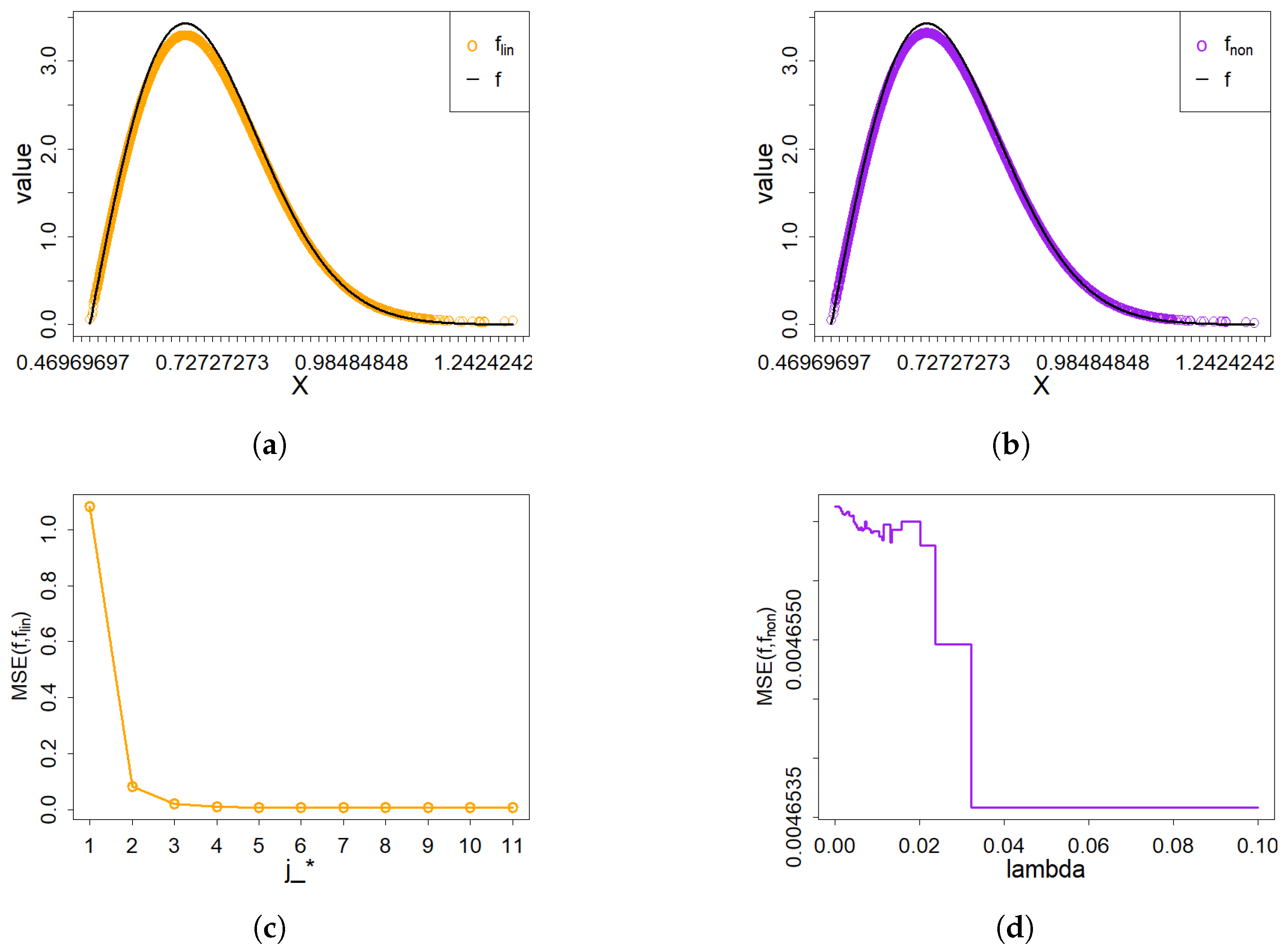

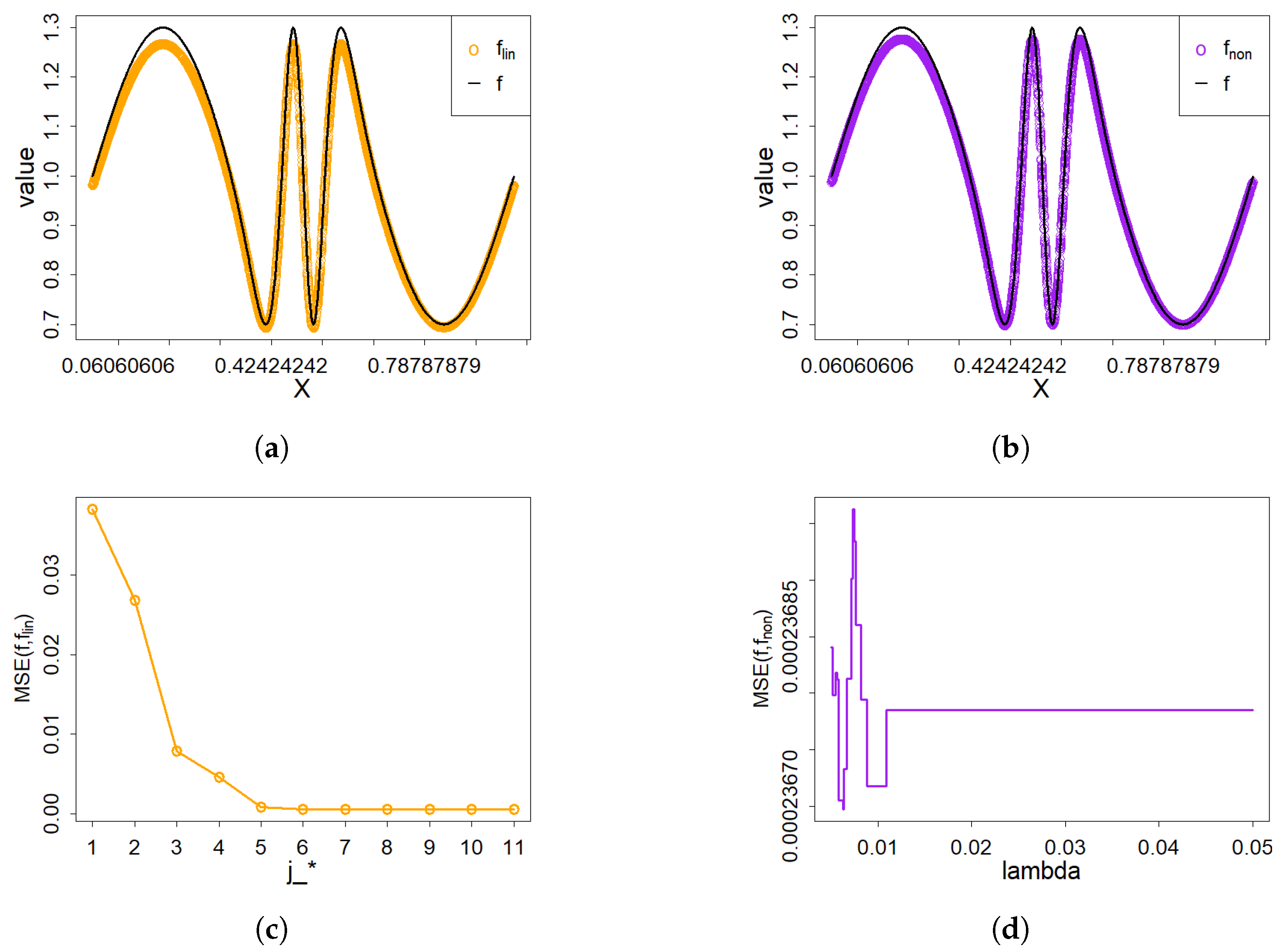

Based on the definition of the linear wavelet estimator, we construct a set of linear estimators

with different scales

. In the following simulation studies, we use

to denote the scale parameter

, which means that

. By minimizing the mean square error, we can obtain the best linear wavelet estimator with the optimal scale parameters

. For example, in Example 1, the MSE values of the linear wavelet estimator for different values of the scale parameter

are shown in

Figure 1c. The MSE of the linear wavelet estimator is smallest when the scale parameter

is 5, 6, 7, 8, 9 or 10. For simplicity and computational efficiency, we choose the smallest of these values, 5, i.e.,

.
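The selection rule described above (compute the MSE for each candidate scale, then take the smallest scale among those with essentially minimal MSE) can be sketched as follows. The MSE is assumed here to be the average squared difference between the estimate and the true density over a common evaluation grid; the function names, the tolerance `rtol`, and the numerical values in `example` are hypothetical and are not results from the experiments.

```python
import numpy as np

def mse(f_hat, f_true):
    """Mean square error over a common evaluation grid (assumed form)."""
    f_hat, f_true = np.asarray(f_hat), np.asarray(f_true)
    return float(np.mean((f_hat - f_true) ** 2))

def smallest_best_scale(mse_by_scale, rtol=1e-2):
    """Smallest scale whose MSE is within a factor (1 + rtol) of the minimum."""
    best = min(mse_by_scale.values())
    near_best = [j for j, v in mse_by_scale.items() if v <= best * (1 + rtol)]
    return min(near_best)

# Hypothetical MSE values that flatten out from scale 5 onward.
example = {3: 0.09, 4: 0.04, 5: 0.0100, 6: 0.0100, 7: 0.0101, 8: 0.0100}
chosen = smallest_best_scale(example)
```

Preferring the smallest near-optimal scale keeps the number of estimated coefficients, and hence the computational cost, as low as possible without sacrificing accuracy.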

The nonlinear estimator, according to the definition (

6), has two scale parameters,

and

. For the first scale parameter, we use the same optimal parameter

as for the linear estimator. The other scale parameter

is set at the maximum level permitted in the wavelet decomposition (i.e.,

with

). In addition, the optimal thresholding parameter

is selected by minimizing the mean square error of the nonlinear wavelet estimator. For example, in Example 1, the two scale parameters of the nonlinear wavelet estimator are

and

. The MSE values of the nonlinear wavelet estimator for different thresholding values are shown in

Figure 1d, from which we select the optimal thresholding parameter

. According to the model (

1), six different functions are selected as the density function

in the following simulation study.

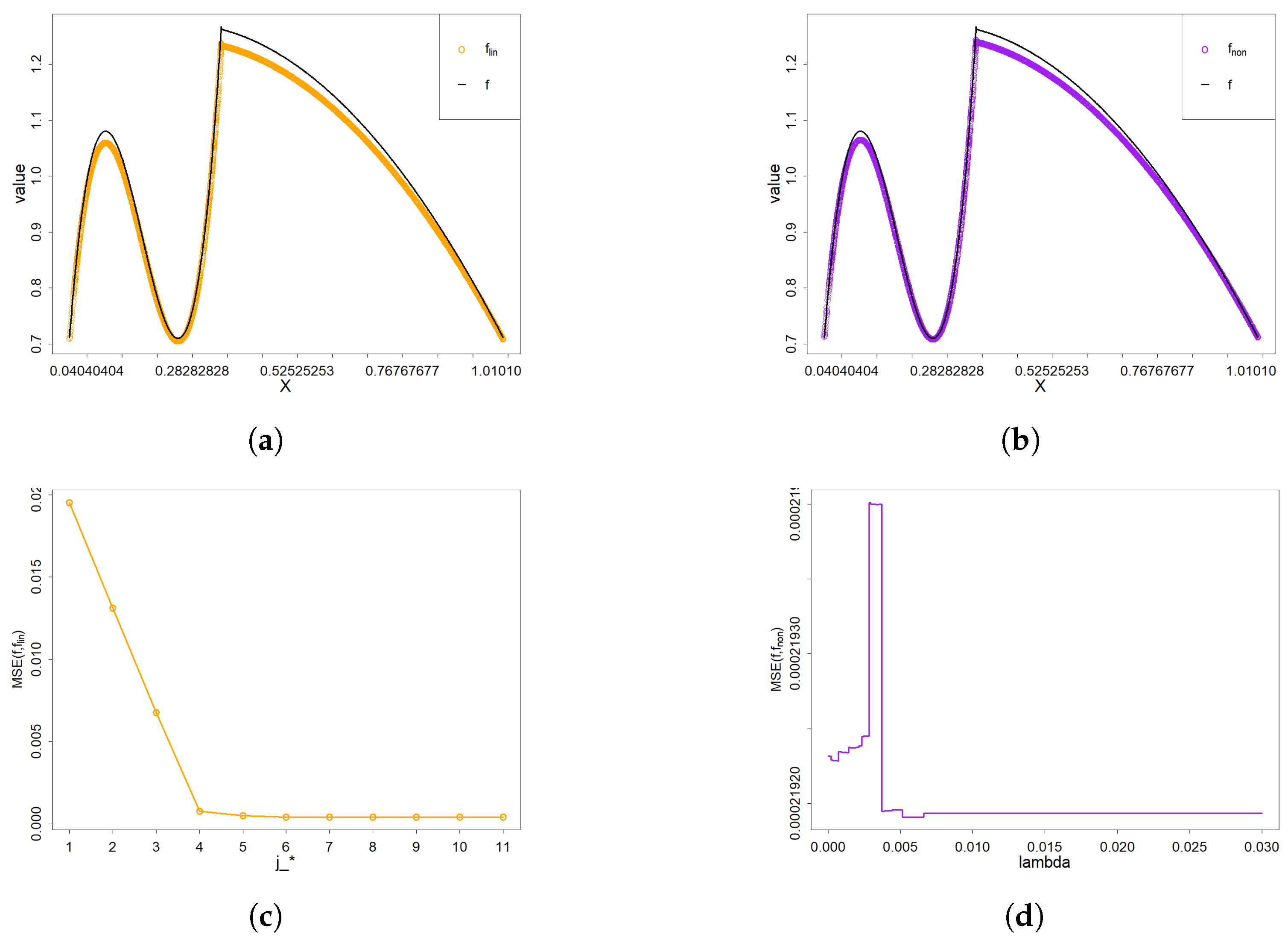

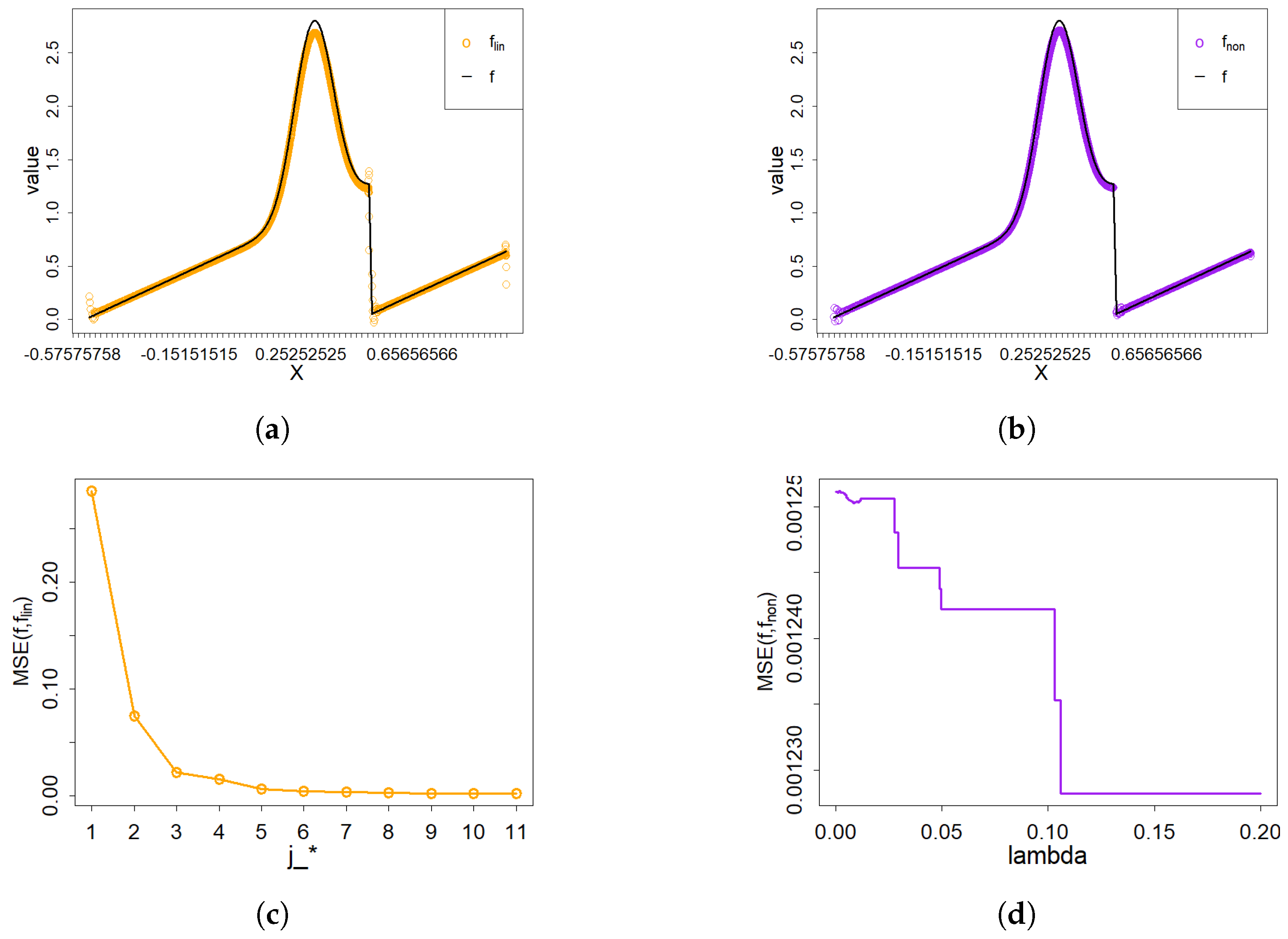

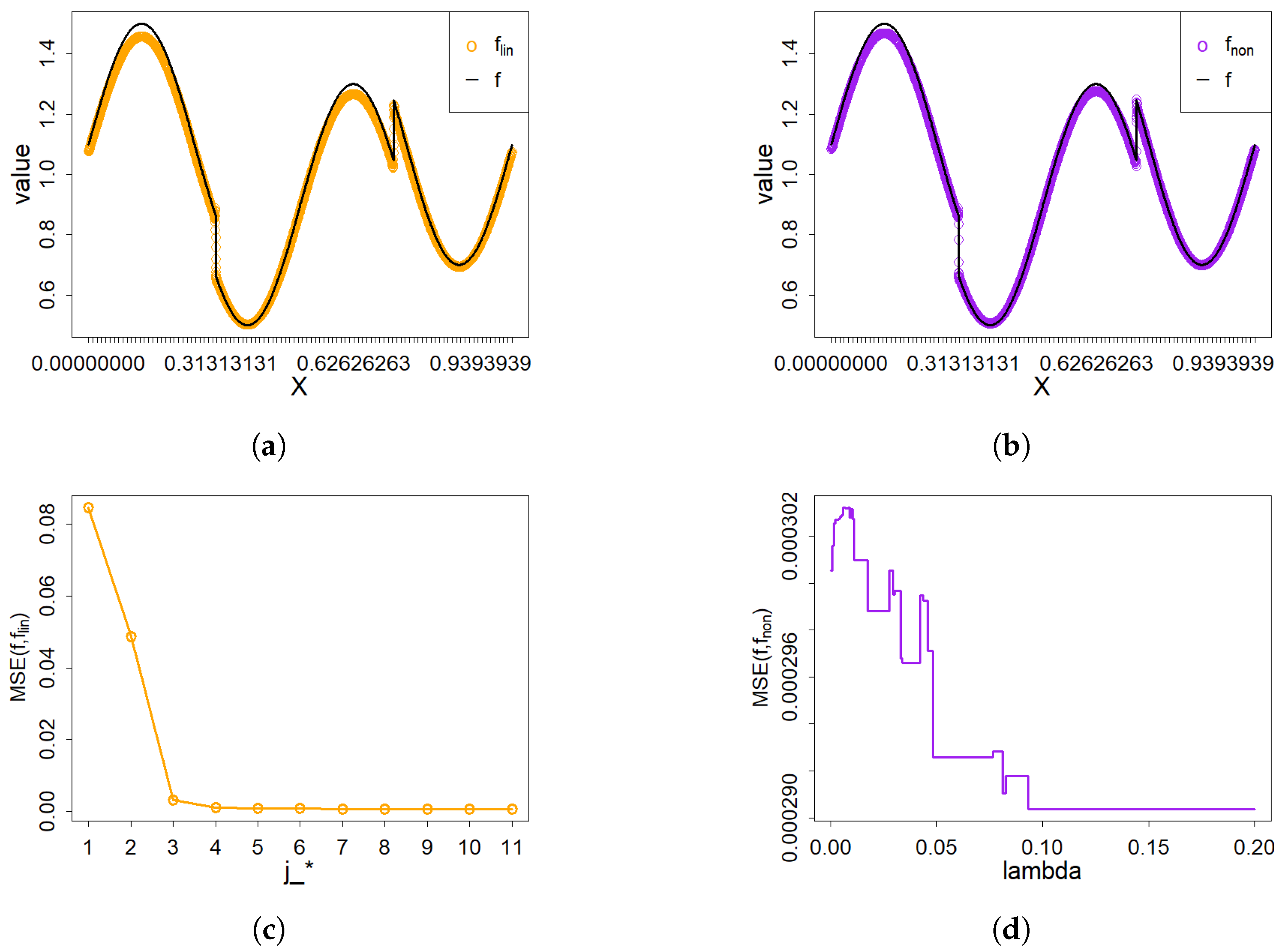

Example 1. In model (1), we take the density to be . The density function and . The performance of the linear and nonlinear wavelet estimators is illustrated in Figure 1a,b, respectively. As can be observed, each estimator provides an effective approximation of the density function . As clearly shown in Figure 1c, the optimal scale parameter is . The optimal threshold parameter is depicted in Figure 1d.

Example 2. In model (1), we set the unknown density to , and . The performance of the linear and nonlinear wavelet estimators is illustrated in Figure 2a,b, respectively. Based on Figure 2c,d, the optimal scale parameter and the optimal threshold parameter are obtained. From these results, it is evident that the nonlinear wavelet estimator outperforms the linear estimator, particularly in capturing sharp features.

Example 3. In model (1), we take on and set . The efficacy of the two wavelet estimators in approximating the density function is evidenced in Figure 3a,b. The corresponding best parameters of the two wavelet estimators are presented in Figure 3c,d.

Example 4. In model (1), the density function is specified as with and . The performance of the linear and nonlinear wavelet estimators is illustrated in Figure 4a,b, respectively. Both estimators effectively approximate the density function, but the nonlinear estimator demonstrates superior performance near discontinuities.

Example 5. For the experiment, we set the target density to , and . According to Figure 5c,d, the optimal scale parameter and the optimal thresholding parameter are obtained. Under these conditions, the density function can be estimated by the two wavelet estimators shown in Figure 5a,b.

Example 6. For the experiment, we select the “Time Shift Sine” as the density function [29]. In addition, and . Figure 6c,d show that the optimal scale parameter is and the optimal thresholding parameter is . From the following results, both wavelet estimators accurately estimate the unknown density.

For both the linear and nonlinear wavelet estimators, the best scale parameter, the optimal thresholding parameter and the MSE values are shown in

Table 1. Based on

Table 1 and the preceding results, both wavelet estimators effectively approximate the density function, with the nonlinear variant exhibiting superior performance. More importantly, according to the results of Examples 2, 4, 5 and 6, both wavelet estimators perform well in cases with discontinuities and spikes.