Abstract

In this paper, we derive new probabilistic bounds on the sensitivity of invariant subspaces, deflating subspaces and singular subspaces of matrices. The analysis exploits a unified method for deriving asymptotic perturbation bounds of the subspaces of interest and utilizes probabilistic approximations of the entries of random perturbation matrices obtained by means of the Markoff inequality. As a result of the analysis, we determine, with a prescribed probability, asymptotic perturbation bounds on the angles between the corresponding perturbed and unperturbed subspaces. It is shown that the proposed probabilistic asymptotic bounds are significantly less conservative than the corresponding deterministic perturbation bounds. The results obtained are illustrated by examples comparing the known deterministic perturbation bounds with the new probabilistic bounds.

Keywords:

perturbation analysis; probabilistic bounds; invariant subspaces; deflating subspaces; singular subspaces

MSC:

47A55; 15A18; 65F15; 65F25

1. Introduction

In this paper, we are concerned with the derivation of realistic perturbation bounds on the sensitivity of various important subspaces arising in matrix analysis. Such bounds are especially needed in the perturbation analysis of high-dimensional subspaces, where the known bounds may produce very pessimistic results. We show that much tighter bounds on the subspace sensitivity can be obtained by using a probabilistic approach based on the Markoff inequality.

The sensitivity of invariant, deflation and singular subspaces of matrices is considered in detail in the fundamental book of Stewart and Sun [1], as well as in the surveys of Bhatia [2] and Li [3]. In particular, perturbation analysis of the eigenvectors and invariant subspaces of matrices affected by deterministic and random perturbations is presented in several papers and books, see for instance [4,5,6,7,8,9,10,11,12]. Survey [13] is entirely devoted to the asymptotic (first-order) perturbation analysis of eigenvalues and eigenvectors. The algorithmic and software problems in computing invariant subspaces are discussed in [14]. The sensitivity of deflating subspaces arising in the generalized Schur decomposition is considered in [15,16,17,18], and numerical algorithms for analyzing this sensitivity are presented in [19]. Bounds on the sensitivity of singular values and singular spaces of matrices that are subject to random perturbations are derived in [20,21,22,23,24,25,26], to name a few. The stochastic matrix theory that can be used in case of stochastic perturbations is developed in the papers of Stewart [27], and Edelman and Rao [28].

In [29], the author proposed new componentwise perturbation bounds of unitary and orthogonal matrix decompositions based on probabilistic approximations of the entries of random perturbation matrices obtained by means of the Markoff inequality. It was shown that using such bounds it is possible to decrease significantly the asymptotic perturbation bounds of the corresponding similarity or equivalence transformation matrices. Based on the probabilistic asymptotic estimates of the entries of random perturbation matrices presented in [29], in this paper we derive new probabilistic bounds on the sensitivity of invariant subspaces, deflating subspaces and singular subspaces of matrices. The analysis and the examples given demonstrate that, in contrast to the known deterministic bounds, the probabilistic bounds are much tighter with a sufficiently high probability. The analysis exploits a unified method for deriving asymptotic perturbation bounds of the subspaces of interest, developed in [30,31,32,33,34], and utilizes probabilistic approximations of the entries of random perturbation matrices obtained by means of the Markoff inequality. As a result of the analysis, we determine, with a prescribed probability, asymptotic perturbation bounds on the angles between the perturbed and unperturbed subspaces. It is proved that the new probabilistic asymptotic bounds are significantly less conservative than the corresponding deterministic perturbation bounds. The results obtained are illustrated by examples comparing the deterministic perturbation bounds derived by Stewart [16,35] and Sun [11,18] with the probabilistic bounds derived in this paper.

The paper is structured as follows. In Section 2, we briefly present the main results concerning the derivation of lower magnitude bounds on the entries of a random matrix using only its Frobenius norm. In the next three sections, Section 3, Section 4 and Section 5, we apply this approach to derive probabilistic perturbation bounds for the invariant, deflating and singular subspaces of matrices, respectively. We illustrate the theoretical results by examples demonstrating that the probabilistic bounds for such subspaces are much tighter than the corresponding deterministic asymptotic bounds. We note that the known deterministic bounds for the invariant, deflating and singular subspaces are presented briefly as theorems without proof, solely for comparison with the new bounds.

All computations in the paper are performed with MATLAB® Version 9.9 (R2020b) [36] using IEEE double-precision arithmetic. M-files implementing the perturbation bounds described in the paper can be obtained from the author.

2. Probabilistic Bounds for Random Matrices

Consider an random matrix, , with uncorrelated elements. In the componentwise perturbation analysis of matrix decompositions, we have to use a matrix bound , so that , i.e.,

where and is some matrix norm. However, if for instance we use the Frobenius norm of , we have that and

which produces very pessimistic results for large m and n. To reduce , in [29] it is proposed to decrease the entries of , taking a bound with entries , where . Of course, in the general case, such a bound will not satisfy (1) for all i and j. However, we can allow some entries, , of the perturbation to exceed in magnitude, with some prescribed probability, the corresponding bound, . This probability can be determined by the Markoff inequality ([37], Section 5-4)

where is the probability that the random variable is greater than or equal to a given number, a, and is the average (or mean value) of . Note that this inequality is valid for an arbitrary distribution of , which makes it conservative for a specific probability distribution. Applying the Markoff inequality, with equal to the entry and a equal to the corresponding bound , we obtain the following result [29].

Theorem 1.

For an random perturbation, , and a desired probability , the estimate , where

and

satisfies the inequality

Theorem 1 allows the decrease of the mean value of the bound, , and hence the magnitude of its entries by the quantity , choosing the desired probability, , less than 1. The value corresponds to the case of the deterministic bound, , with entries , when it is fulfilled that all entries of are larger than or equal to the corresponding entries of the perturbation, . The value corresponds to , where . As mentioned above, the probability bound produced by the Markoff inequality is very conservative, with the actual results being much better than the results predicted by the probability, . This is due to the fact that the Markoff inequality is valid for the worst possible distribution of the random variable .

According to Theorem 1, the use of the scaling factor (3) guarantees that the inequality

holds for each i and j with a probability no less than . Since the entries of are uncorrelated, this means that, for sufficiently large m and n, the number also gives a lower bound on the relative number of the entries that satisfy the above inequality.
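As a rough numerical illustration of this statement, the following Python sketch draws a Gaussian perturbation and counts the entries that stay within the reduced entry bound. It assumes that the scaling factor in (3) has the form Ξ = (1 − P)·sqrt(mn), where P is the desired probability. This form follows from applying the Markoff inequality to the entry magnitudes, and it would reproduce the reduction factors of 10, 20 and 40 reported in Example 1 if the matrix there is of order 100, but it is an assumption here. The names `scaling_factor`, `fraction_within_bound` and `p_ref` are likewise hypothetical.

```python
import math
import random


def scaling_factor(m, n, p_ref):
    """Assumed form of the scaling factor: Xi = (1 - p_ref) * sqrt(m * n).

    p_ref must be strictly less than 1, otherwise Xi is zero.
    """
    return (1.0 - p_ref) * math.sqrt(m * n)


def fraction_within_bound(entries, m, n, p_ref):
    """Share of entries whose magnitude does not exceed ||dA||_F / Xi."""
    fro = math.sqrt(sum(e * e for e in entries))  # Frobenius norm of dA
    bound = fro / scaling_factor(m, n, p_ref)     # reduced entry bound
    inside = sum(1 for e in entries if abs(e) <= bound)
    return inside / len(entries)


random.seed(0)
m = n = 40
# Entries of an m-by-n perturbation, stored as a flat list.
dA = [random.gauss(0.0, 1.0) for _ in range(m * n)]

for p_ref in (0.9, 0.8, 0.5):
    xi = scaling_factor(m, n, p_ref)
    frac = fraction_within_bound(dA, m, n, p_ref)
    print(f"p_ref={p_ref:.1f}  Xi={xi:.1f}  fraction within bound={frac:.3f}")
```

Under the assumed form of Ξ, the observed fraction can never fall below the prescribed probability, and for Gaussian entries it is typically much larger, in line with the remark that the Markoff inequality is conservative.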

In some cases, for instance in the perturbation analysis of the Singular Value Decomposition, tighter perturbation bounds are obtained if instead of the norm we use the spectral norm . The following result is an analogue of Theorem 1 that allows us to use at the price of producing smaller values of .

Theorem 2.

For an random perturbation, , and a desired probability , the estimate , where

and

satisfies the inequality

This result follows directly from Theorem 1, replacing by its upper bound .

Since, frequently, is of the order of , for a large n, Theorem 2 may produce pessimistic results in the sense that the actual probability of fulfilling the inequality is much larger than the value predicted by (5).

In several instances of the perturbation analysis, we have to determine a bound on the elements of the vector

where M is a given matrix and is a random vector with a known probabilistic bound on the elements. In accordance with (6), we have that the following deterministic asymptotic (linear) componentwise bound is valid,

A probability bound on can be determined by the following theorem [29].

Theorem 3.

If the estimate of the parameter vector x is chosen as , where Ξ is determined according to

then

Since

the inequality (9) shows that the probability estimate of the component can be determined if in the linear estimate (7) we replace the perturbation norm by the probability estimate , where the scaling factor, , is taken as shown in (8) for a specified probability, . In this way, instead of the linear estimate, , we obtain the probabilistic estimate

3. Perturbation Bounds for Invariant Subspaces

3.1. Problem Statement

Let

be the Schur decomposition of the matrix , where contains a given group of the eigenvalues of A ([38], Section 2.3). The matrix, U, of the unitary similarity transformation can be partitioned as

where the columns of are the basis vectors of the invariant subspace, , associated with the eigenvalues of the block , and is the unitary complement of , . The invariant subspace satisfies the relation . Note that the eigenvalues of A can be reordered in the desired way on the diagonal of T (and hence of ) using unitary similarity transformations ([39], Chapter 7).

The invariant subspace, , is called simple if the matrices and have no eigenvalues in common.

If matrix A is subject to a perturbation, , then, instead of the decomposition (10), we have the decomposition

with a perturbed matrix of the unitary transformation

The columns of matrix are basis vectors of the perturbed invariant subspace, . We shall assume that matrix A has distinct eigenvalues, i.e., is a simple invariant subspace that ensures finite perturbations and for small perturbations of A.

Let and be two subspaces of dimension k, where . The distance between and can be characterized by the gap between these subspaces, defined as [11]

where and are the orthogonal projections onto and , respectively. Further on, we shall measure the sensitivity of an invariant subspace of dimension k by the canonical angles

between the perturbed and unperturbed subspaces ([35], Chapter 4). The maximum angle is related to the value of the gap between and by the relationship [40]

We note that the maximum angle between and can be computed efficiently from [41]
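The quantities introduced in this subsection can be evaluated numerically as in the following NumPy sketch. It relies on two standard facts rather than on the specific formula of [41]: the sines of the canonical angles between two k-dimensional subspaces are the singular values of the part of one orthonormal basis that is orthogonal to the other subspace, and the gap equals the 2-norm of the difference of the orthogonal projections. The function names `canonical_angles` and `gap` are illustrative.

```python
import numpy as np


def canonical_angles(X, Y):
    """Canonical angles (radians, largest first) between span(X) and span(Y).

    X and Y are n-by-k matrices of full column rank. The sine-based
    formula is accurate for small angles, which is the regime of
    interest in perturbation analysis.
    """
    Qx, _ = np.linalg.qr(X)
    Qy, _ = np.linalg.qr(Y)
    # Component of the basis Qy that is orthogonal to span(X).
    residual = Qy - Qx @ (Qx.conj().T @ Qy)
    sines = np.linalg.svd(residual, compute_uv=False)
    return np.arcsin(np.clip(sines, 0.0, 1.0))


def gap(X, Y):
    """Gap between span(X) and span(Y) as the norm of P_X - P_Y."""
    Qx, _ = np.linalg.qr(X)
    Qy, _ = np.linalg.qr(Y)
    Px = Qx @ Qx.conj().T
    Py = Qy @ Qy.conj().T
    return np.linalg.norm(Px - Py, 2)


t = 0.3
X = np.array([[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]])
Y = np.array([[1.0, 0.0], [0.0, np.cos(t)], [0.0, np.sin(t)]])

angles = canonical_angles(X, Y)
print("canonical angles:", angles)
print("gap:", gap(X, Y), " sin(max angle):", np.sin(angles[0]))
```

In this small example the two subspaces share one direction and differ by a rotation through t in the other, so the gap coincides with the sine of the maximum angle.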

3.2. -Based Global Bound

Define

and

where ⊗ denotes the Kronecker product ([42], Chapter 4). The norm of the matrix is closely related to the separation between two matrices. The separation between given matrices and characterizes the distance between the spectra and and is defined as

Note that , if and only if A and B have eigenvalues in common.
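For small matrices the separation can be evaluated directly from a Kronecker-product representation, as in the sketch below. It assumes the usual Frobenius-norm definition, sep(A, B) = min ‖AX − XB‖_F over ‖X‖_F = 1, which equals the smallest singular value of I ⊗ A − Bᵀ ⊗ I. The function name `sep` is illustrative, and the approach costs O((mn)³) operations, so it is meant only for checking small cases.

```python
import numpy as np


def sep(A, B):
    """Separation of square matrices A (m-by-m) and B (n-by-n).

    Uses vec(A X - X B) = (I_n kron A - B^T kron I_m) vec(X) with
    column-major vec, so sep is the smallest singular value of that matrix.
    """
    m, n = A.shape[0], B.shape[0]
    K = np.kron(np.eye(n), A) - np.kron(B.T, np.eye(m))
    return np.linalg.svd(K, compute_uv=False)[-1]


A = np.diag([1.0, 2.0])
B = np.array([[4.0]])
print(sep(A, B))   # distance between the spectra {1, 2} and {4}

C = np.array([[1.0]])
print(sep(C, C))   # common eigenvalue, so the separation vanishes
```

For normal matrices, as in the first call, the separation equals the minimum distance between the eigenvalues; for non-normal matrices it can be much smaller.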

In the given case, the separation

between the two blocks and can be determined from

which is equivalent to

Assume that the spectra of and are disjoint, so that . Then the following theorem gives an estimate of the sensitivity of an invariant subspace of A.

Theorem 4

If and

then there is a unique matrix, P, satisfying

such that the columns of span a right invariant subspace of .

It may be shown that the singular values of the matrix

are the sines of the canonical angles between the invariant subspaces and . That is why, if P has singular values , then the singular values of are

and

Hence

Thus, Theorem 4 bounds the tangents of the canonical angles between the perturbed, , and unperturbed, , invariant subspace of A. The maximum canonical angle fulfils
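The relation between the singular values of P and the canonical angles can be checked numerically. The sketch below assumes, for simplicity, that the unperturbed basis consists of the first k columns of the identity matrix, so that the perturbed subspace is spanned by the columns of the stacked matrix [I; P]. The variable names and the chosen sizes are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
n, k = 6, 2

# Small random matrix P playing the role of the matrix in Theorem 4.
P = 0.1 * rng.standard_normal((n - k, k))

# Basis [I; P] of the perturbed subspace; the unperturbed one is [I; 0].
Xp = np.vstack([np.eye(k), P])
Q, _ = np.linalg.qr(Xp)  # orthonormal basis of the perturbed subspace

# The lower block of Q is the part of the perturbed basis orthogonal to
# the unperturbed subspace; its singular values are the sines.
sines = np.linalg.svd(Q[k:, :], compute_uv=False)
tangents = np.linalg.svd(P, compute_uv=False)

angles_from_sines = np.arcsin(sines)
angles_from_P = np.arctan(tangents)
print(angles_from_sines)
print(angles_from_P)
```

Both computations return the same angles, so a bound on the norm of P translates directly into a bound on the tangent of the maximum canonical angle.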

3.3. Perturbation Expansion Bound

A global perturbation bound for invariant subspaces is derived by Sun [11,40] using the perturbation expansion method. The essence of this method is to expand the perturbed basis in infinite series in the powers of and then estimate the series sum.

Theorem 5

Then the simple invariant subspace, , of the matrix has the following qth-order perturbation estimation for any natural number, q:

Theorem 5 can be used to estimate the canonical angles between the perturbed and unperturbed invariant subspaces. Taking into account (13), we obtain the bound

The implementation of Theorem 5 to estimate the sensitivity of an invariant subspace shows that the bound (23) tends to overestimate severely the true value of the angle for and large n. In practice, it is possible to obtain reasonable results if we use only the first-order term () in the expansion (23), i.e., if we use the linear bound

3.4. Bound by the Splitting Operator Method

The essence of the splitting operator method for perturbation analysis of matrix problems [31] consists in deriving separately perturbation bounds on and . To this end, we introduce the perturbation parameter vector

where the components of x are the entries of the strictly lower triangular part of the matrix . This vector is then used to find bounds on the various elements of the Schur decomposition.

Let

and construct the vector

The equation for the perturbation parameters represents a linear system of equations [32]

where is a matrix whose elements are determined from the entries of T, and the components of the vector contain higher-order terms in the perturbations . Specifically, matrix M is determined by

where

Note that matrix M is non-singular although the matrix is not of full rank.

Equation (25) is independent of the equations that determine the perturbations of the elements of the Schur form T. This allows us first to solve (25) and estimate , and then to use the solution obtained to determine bounds on the elements of .

Neglecting the second-order term in (25), we obtain the first-order (linear) approximation of x,

Since , we have that

where

is the asymptotic bound on .

The matrix can be estimated as

where

is a first-order approximation of , and contains higher-order terms in x. Thus, an asymptotic (linear) approximation of the matrix can be determined as

Since

one has that

Equation (32) shows that the sensitivity of the invariant subspace, , of dimension k is connected to the values of the perturbation parameters . Consequently, if the perturbation parameters are known, it is possible to find at once sensitivity estimates for all invariant subspaces with dimension . More specifically, let

where * is an unspecified entry. Then we have that the maximum angle between the perturbed and unperturbed invariant subspaces of dimension k is

In this way, we obtain the following result.

Theorem 6.

The proof of Theorem 6 follows directly from (33), replacing the matrix by its linear approximation, , and substituting each by its approximation (29). Note that, as always in the case of perturbation bounds, the equality can be achieved only for specially constructed perturbation matrices.

Denote by

the changes of the diagonal elements of T, i.e., the perturbations of the eigenvalues of A. Then the first-order eigenvalue perturbations satisfy

where

and

The obtained linear bound (35) coincides numerically with the well-known asymptotic bounds from the literature [12,35,39]. The quantity is equal to the condition number of the eigenvalue .

3.5. Probabilistic Perturbation Bound

The idea of determining tighter perturbation bounds of matrix subspaces consists in replacing the Frobenius or 2-norm of the matrix perturbation by a much smaller probabilistic estimate of the perturbation entries, obtained using Theorems 1 and 3. This allows us to decrease, with a specified probability, the perturbation bounds for the different subspaces, achieving better results for higher dimensional problems. In simple terms, we replace in the corresponding asymptotic estimate by the ratio , thus decreasing the perturbation bound by the quantity , which is determined by the desired probability, . We shall illustrate this idea considering first the case of invariant subspaces.

Using Theorem 3, the probabilistic perturbation bounds of x and in the case of the Schur decomposition can be found from (29) and (31), respectively, replacing in (29) the perturbation norm by the quantity , where is determined according to (3) from the desired probability, , and the problem order, n. In this way, we obtain the probabilistic asymptotic estimate

of the maximum angle between the perturbed and unperturbed invariant subspaces of dimension k. In the same way, from (35), we obtain a probabilistic asymptotic estimate

of the eigenvalue perturbations.

3.6. Bound Comparison

In the next example, we compare the invariant subspace deterministic perturbation bounds, obtained by the -based approach, the perturbation expansion method and the splitting operator method, with the probabilistic bound obtained by using the Markoff inequality.

Example 1.

Consider a matrix A, taken as

where

and the matrix is constructed as [43]

where are elementary reflections, σ is taken equal to and . The eigenvalues of A,

are complex conjugated. The perturbation of A is taken as , where is a matrix with random entries with normal distribution and . The matrix M in (25) is of order and its inverse satisfies , which shows that the eigenvalue problem for A is ill-conditioned since the perturbations of A can be “amplified” times in x and, consequently, in and .

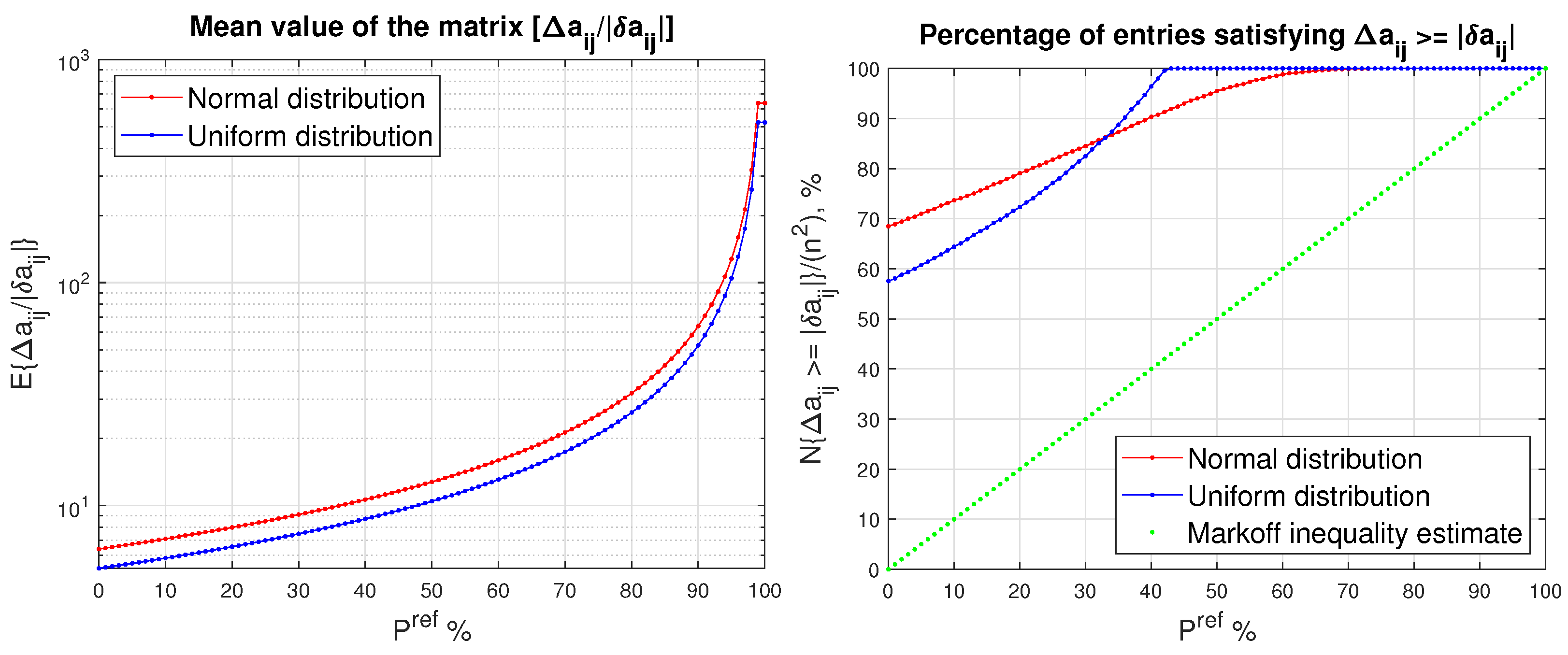

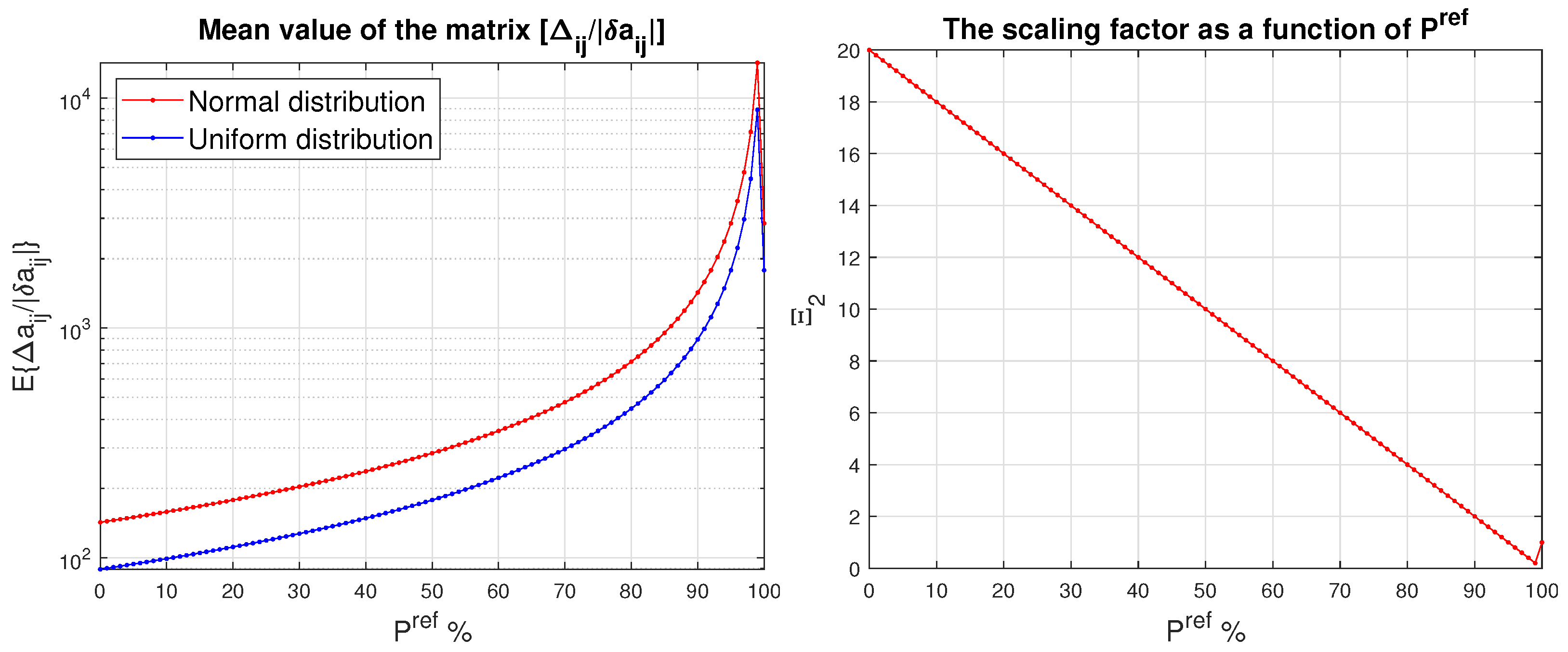

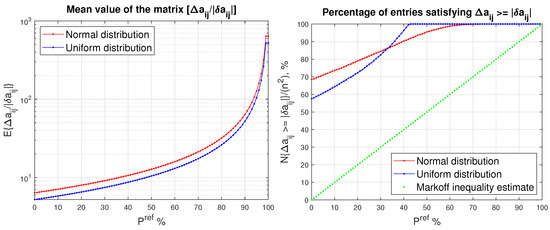

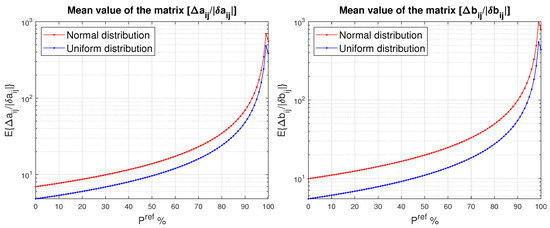

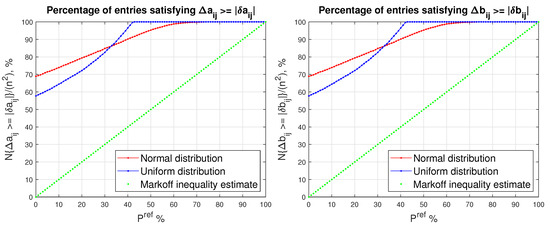

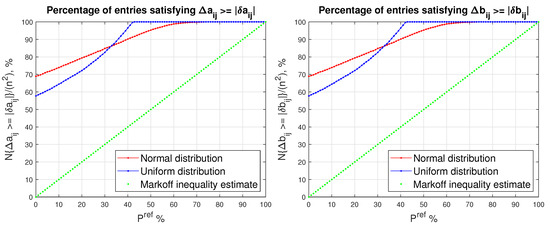

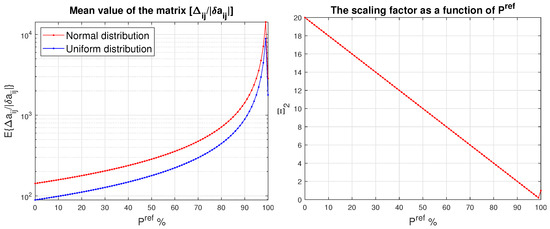

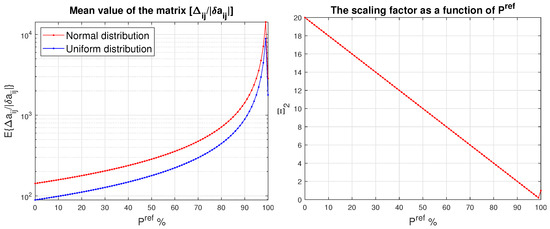

In Figure 1, we show the mean value of the matrix and the relative number of the entries of the matrix for which , obtained for normal and uniform distribution of the entries of and for different values of the desired probability, . For the case of normal distribution and , the size of the probability entry bound, , decreases 10 times in comparison with the size of the entry bound , which allows the decrease of the mean value of the ratio from to (Table 1). For , the probability bound, , is 60 times smaller than the bound , and even for this small desired probability the number of entries for which is still .

Figure 1.

Mean value of as a function of (left) and the mean value of (right) as a function of for two random distributions of the entries of a matrix.

Table 1.

The mean value of the ratios and the relative number of entries for which , obtained for five values of , .

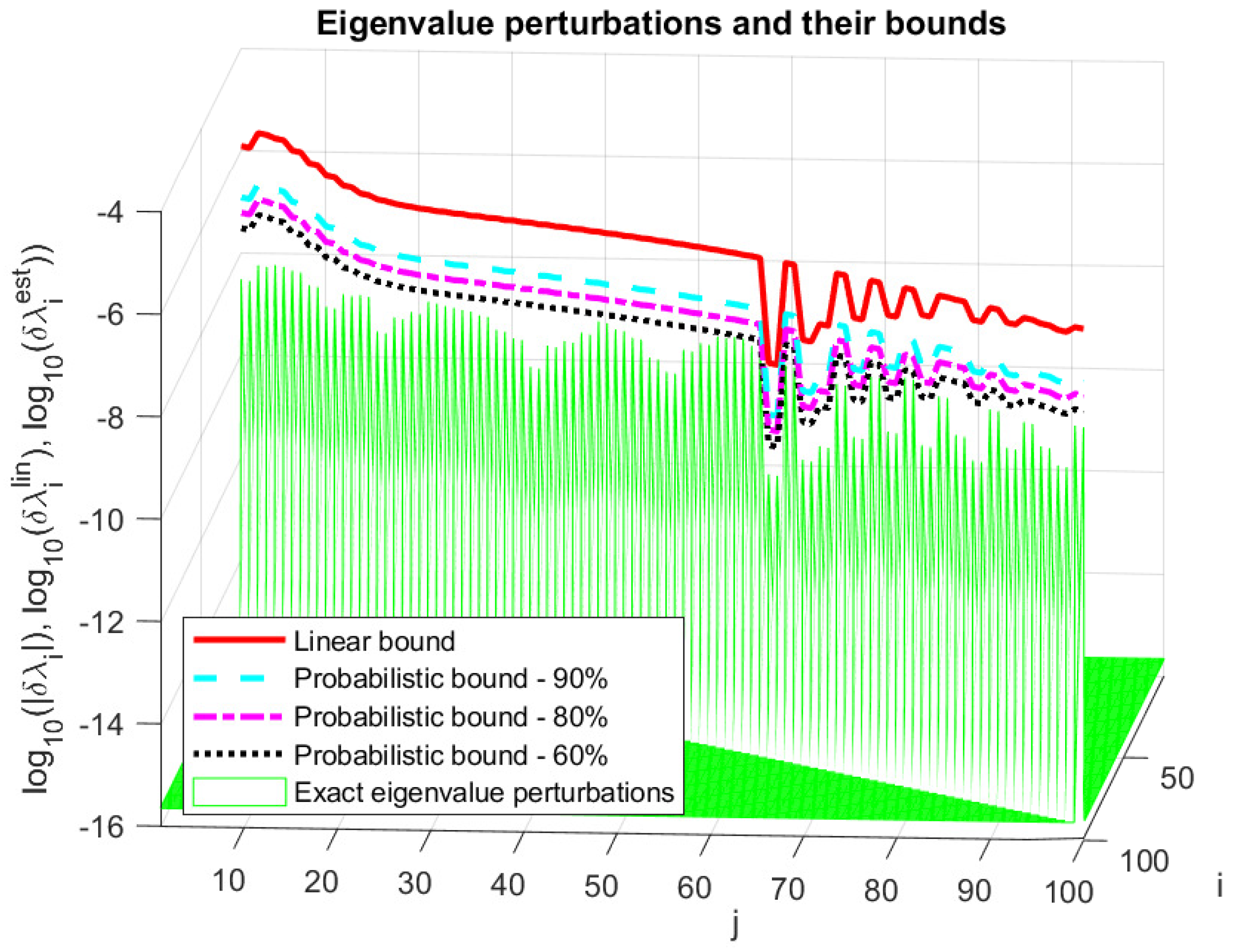

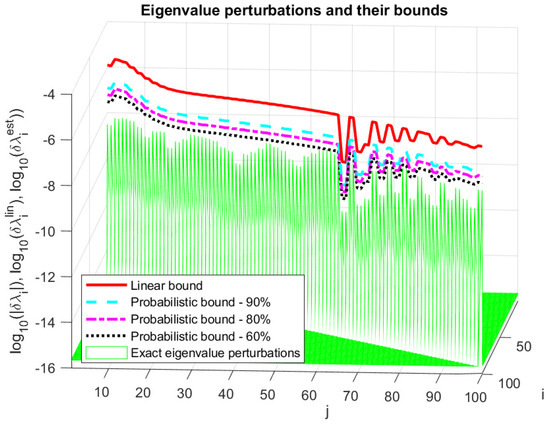

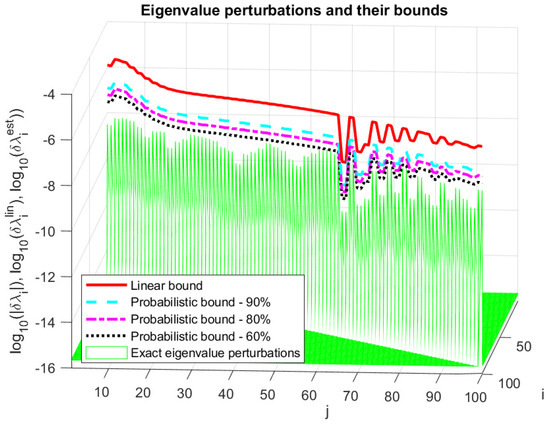

In Figure 2, we compare the asymptotic bound, , and the probabilistic estimate, , with the actual eigenvalue perturbations for normal distribution of perturbation entries and probabilities and . (For clarity, the perturbations of the superdiagonal elements of T are hidden). The probabilistic bound, , is much tighter than the linear bound, , and the inequality is satisfied for all eigenvalues and all chosen probabilities. In particular, the size of the estimate, , is 10 times smaller than the linear estimate, , for , 20 times for and 40 times for .

Figure 2.

Eigenvalue perturbations and their linear and probabilistic bounds.

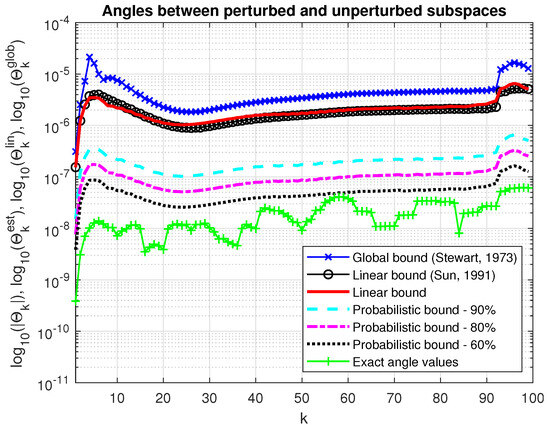

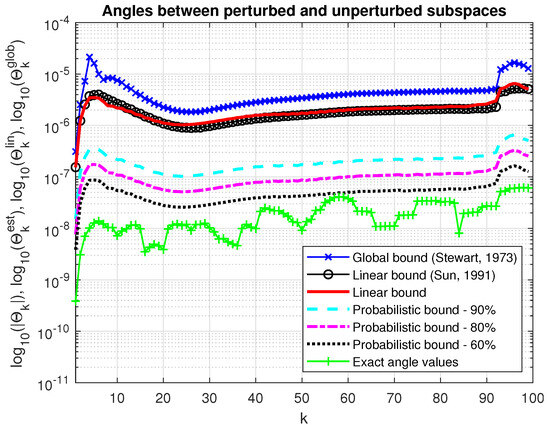

In Figure 3, we show the asymptotic bound, , and the probabilistic estimate, , along with the actual value of the maximum angle between the perturbed and unperturbed invariant subspace of dimensions for the same probabilities and . The probability estimate satisfies for all . For comparison, we give the global bound on the maximum angle between the perturbed and unperturbed invariant subspaces computed by (20) and the first-order bound determined by (24). (The computation of the -based estimate is performed by using the numerical algorithm, presented in [14].) The global bound is slightly larger than the asymptotic bounds, and the asymptotic bounds (24) and (34) coincide.

Figure 3.

Angles between the perturbed and unperturbed invariant subspaces and their bounds.

4. Perturbation Bounds for Deflating Subspaces

4.1. Problem Statement

Let be a square matrix pencil [35]. Then there exist unitary matrices U and V, such that and are both upper triangular. The pair constitutes the generalized Schur form of the pair . The eigenvalues of are then equal to , the ratios of the diagonal entries of T and R. Further on, we consider the case of regular pencils for which and, in addition, the matrix B is non-singular, i.e., the generalized eigenvalues are finite.

Suppose that the generalized Schur form of the pencil is reordered as

where contains k specified eigenvalues. Partitioning conformally the unitary matrices of the equivalence transformation as , we obtain orthonormal bases and of the left, , and right, , deflating subspace, respectively. The deflating subspaces satisfy the relations and .

Further on, we are interested in the sensitivity of the deflating subspaces corresponding to specified generalized eigenvalues of the pair . Suppose that the matrices of the pencil are perturbed as and . The upper triangular matrices in the generalized Schur decomposition are represented as

where

are the modified equivalence transformation matrices.

4.2. -Based Global Bound

Let the unperturbed left and right deflation subspaces corresponding to the first k eigenvalues be denoted by and , respectively, and their perturbed counterparts as and .

If and are small, we may expect that the spaces and will be close to the spaces and , respectively.

Define the matrices

and

Note that matrix M is invertible if and only if the pencils and have no eigenvalues in common.

Define the difference between the spectra of and as [15]

It is possible to prove the relationship

so that

Assume that the spectra of and are disjoint so that

The following theorem gives an estimate of the sensitivity of a deflating subspace of a regular pencil.

Theorem 7

If and

there are matrices P and Q satisfying

such that the columns of and span right and left deflating subspaces for . Note that

It is possible to show that Equation (39) bounds the tangents of the canonical angles between and or and , similarly to the ordinary eigenvalue problem. Specifically, we have that

Theorem 7 can be considered as a generalization of Theorem 4 for the ordinary eigenvalue problem.

4.3. Perturbation Expansion Bound

Global perturbation bounds for deflating subspaces that produce individual perturbation bounds for each subspace in a pair of deflating subspaces are presented in [18].

Theorem 8.

Let be an regular matrix pair represented as in (38), and let and . Moreover, let be defined by

where

and let

If

then there exists a pair and of k-dimensional deflating subspaces of such that

4.4. Bound by the Splitting Operator Method

The application of the splitting operator method to the perturbation analysis of the generalized Schur decomposition is performed in [34].

Consider again the generalized Schur decomposition (38). To derive perturbation bounds of the matrices and , we introduce the perturbation parameter vectors

and

where and . Let

and construct the vectors

Then, asymptotic bounds of x and y can be found by solving the linear system of equations

where

and

Hence, linear approximations of the elements of x and y can be determined from

where

The matrices and can be estimated as

where

and contain higher-order terms in . Thus, asymptotic approximation of the matrices and can be determined as

Let and be the orthonormal bases of the perturbed and unperturbed right deflation subspace, , of dimension k, and and be, respectively, the orthonormal bases of the perturbed and unperturbed left deflation subspace, , of the same dimension. Since

we have that

Using these expressions, it is possible to show that

Implementing the asymptotic approximations of the elements of the vectors x and y, we obtain the following result.

Theorem 9.

Let the pair be decomposed, as in (38), and assume that the Frobenius norms of the perturbations and are known. Set

Then, the following asymptotic bounds of the angles between the perturbed and unperturbed deflation subspaces of dimension are valid,

Consider the sensitivity of the generalized eigenvalues, i.e., the sensitivity of a simple finite generalized eigenvalue, , under perturbations of the matrices A and B. If the perturbed pencil is denoted by

we want to know how the difference between and depends on the size of the perturbation measured by the quantity

The distance between two generalized eigenvalues and is measured by the so-called chordal distance, defined as [15]

Substituting

we find that

or, in a first-order approximation,

The asymptotic approximations of the diagonal element perturbations of the matrices R and T satisfy

where the numbers and are determined from

Replacing the expressions for the perturbations and in (58), we find that

where the number

can be considered as a condition number of the generalized eigenvalue, .
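For reference, the chordal distance used in this subsection can be evaluated as in the following sketch. It assumes the standard definition of the chordal metric, written both for finite eigenvalues and for eigenvalues given as pairs of diagonal entries, which also covers infinite eigenvalues. The function names are illustrative.

```python
import math


def chordal_distance(lam, mu):
    """Chordal distance between two finite (possibly complex) numbers."""
    return abs(lam - mu) / (
        math.sqrt(1.0 + abs(lam) ** 2) * math.sqrt(1.0 + abs(mu) ** 2)
    )


def chordal_distance_pairs(alpha, beta, gamma, delta):
    """Chordal distance between lam = alpha/beta and mu = gamma/delta.

    Working with the pairs avoids the division, so beta = 0 or delta = 0
    (an infinite eigenvalue) is allowed.
    """
    num = abs(alpha * delta - beta * gamma)
    den = math.hypot(abs(alpha), abs(beta)) * math.hypot(abs(gamma), abs(delta))
    return num / den


print(chordal_distance(0.0, 1.0))
print(chordal_distance_pairs(1.0, 2.0, 3.0, 4.0), chordal_distance(0.5, 0.75))
print(chordal_distance_pairs(1.0, 0.0, 0.0, 1.0))  # infinite versus zero eigenvalue
```

The chordal distance never exceeds 1, which makes it convenient for comparing perturbations of eigenvalues of very different magnitudes.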

4.5. Probabilistic Perturbation Bound

Implementing again Theorem 3, the probabilistic perturbation bounds of the perturbation parameter vectors x and y can be found from (49) and (50), respectively, replacing the quantity by the ratio , where is determined according to (3) from the desired probability, , and the problem order, n. This means that the probabilistic asymptotic estimates of and can be obtained from (54) and (55), replacing the linear estimates , by

respectively. As a result, we obtain the probabilistic bounds on the angles between the perturbed and unperturbed deflating subspaces as

In the same way, we may obtain a probabilistic asymptotic estimate of the eigenvalue perturbations, replacing in (61) the expression by

. This yields

4.6. Bound Comparison

Example 2.

Consider a matrix pencil , where

the matrices and are constructed as in Example 1,

are elementary reflections, is taken equal to and is taken equal to .

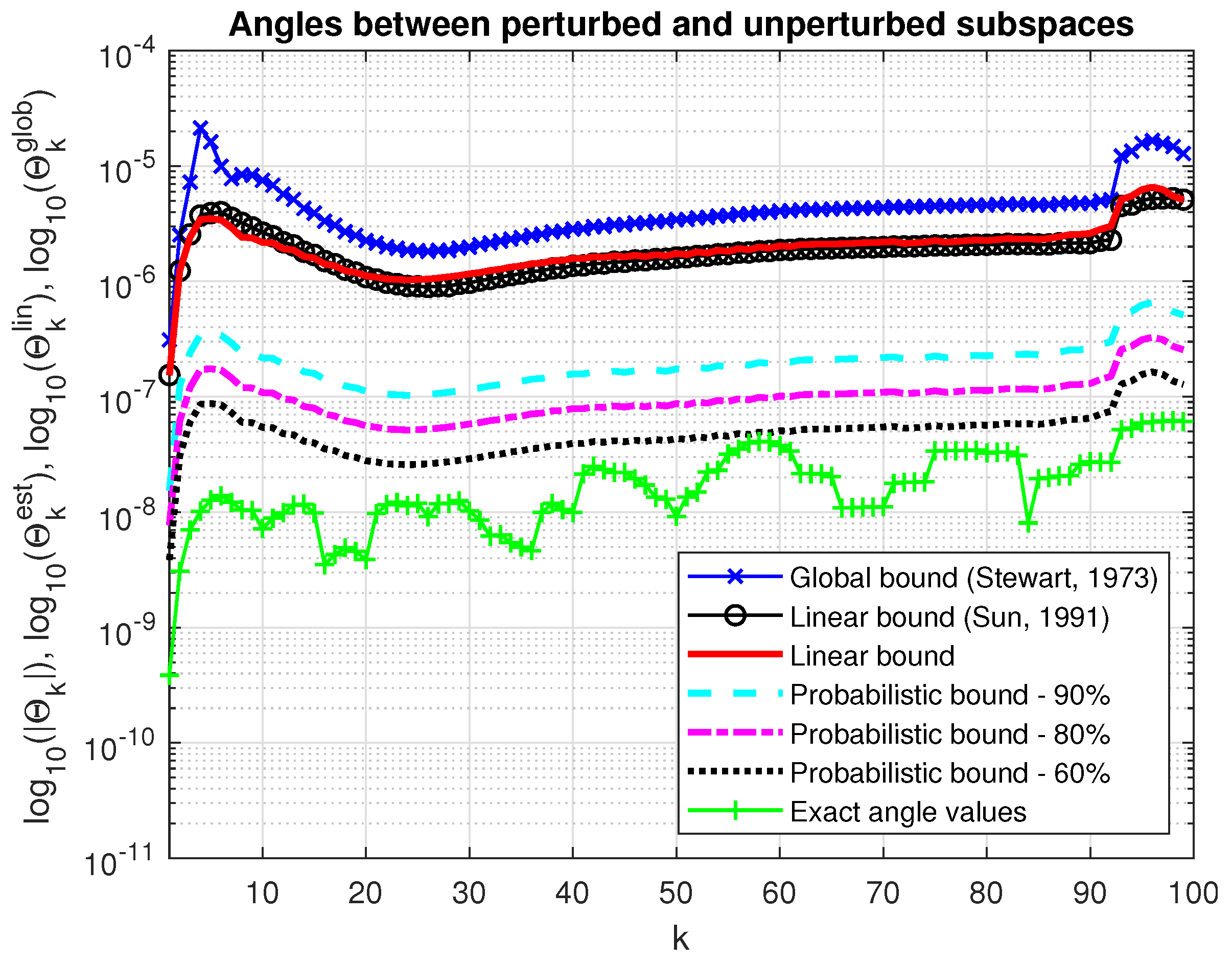

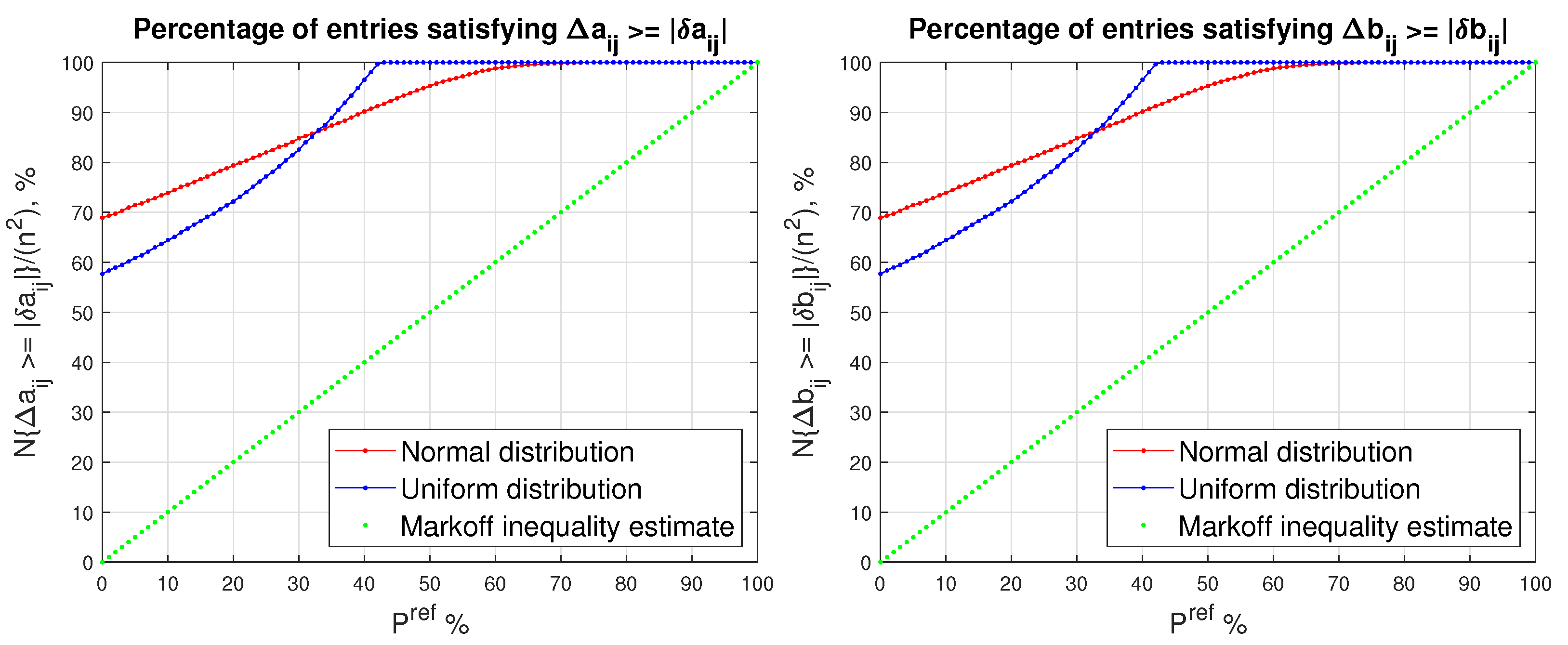

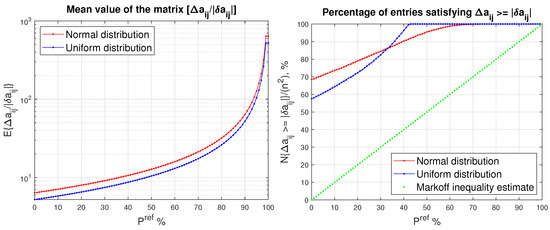

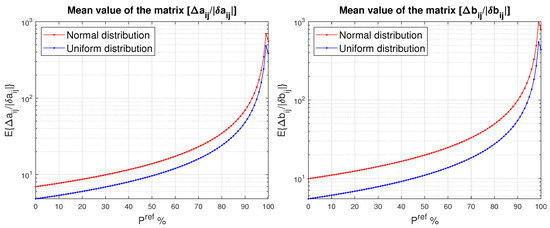

In Figure 4, we give the mean value of the ratios and obtained for two random distributions and different values of between 100% and 0%, where is the probabilistic bound of and is the probabilistic bound of . For each , the scaling factor, Ξ, is determined from (3). The same ratios are represented numerically in Table 2 for the perturbation with normal distribution and three values of . The results show that if, instead of the deterministic estimates of and , we use the corresponding probabilistic estimates and with %, then the mean values of and decrease by a factor of eight. A further reduction in to 80% decreases the mean values by a factor of 16.

Figure 4.

Mean values of and for two random distributions as functions of .

Table 2.

The mean value of the ratios and , obtained for three values of , .

In Figure 5, we show the relative numbers and of the entries for which and , respectively. In both cases, the relative number of entries for which and remains 100%, which shows that the estimates of and can be decreased safely.

Figure 5.

Mean values of and for two random distributions as functions of .

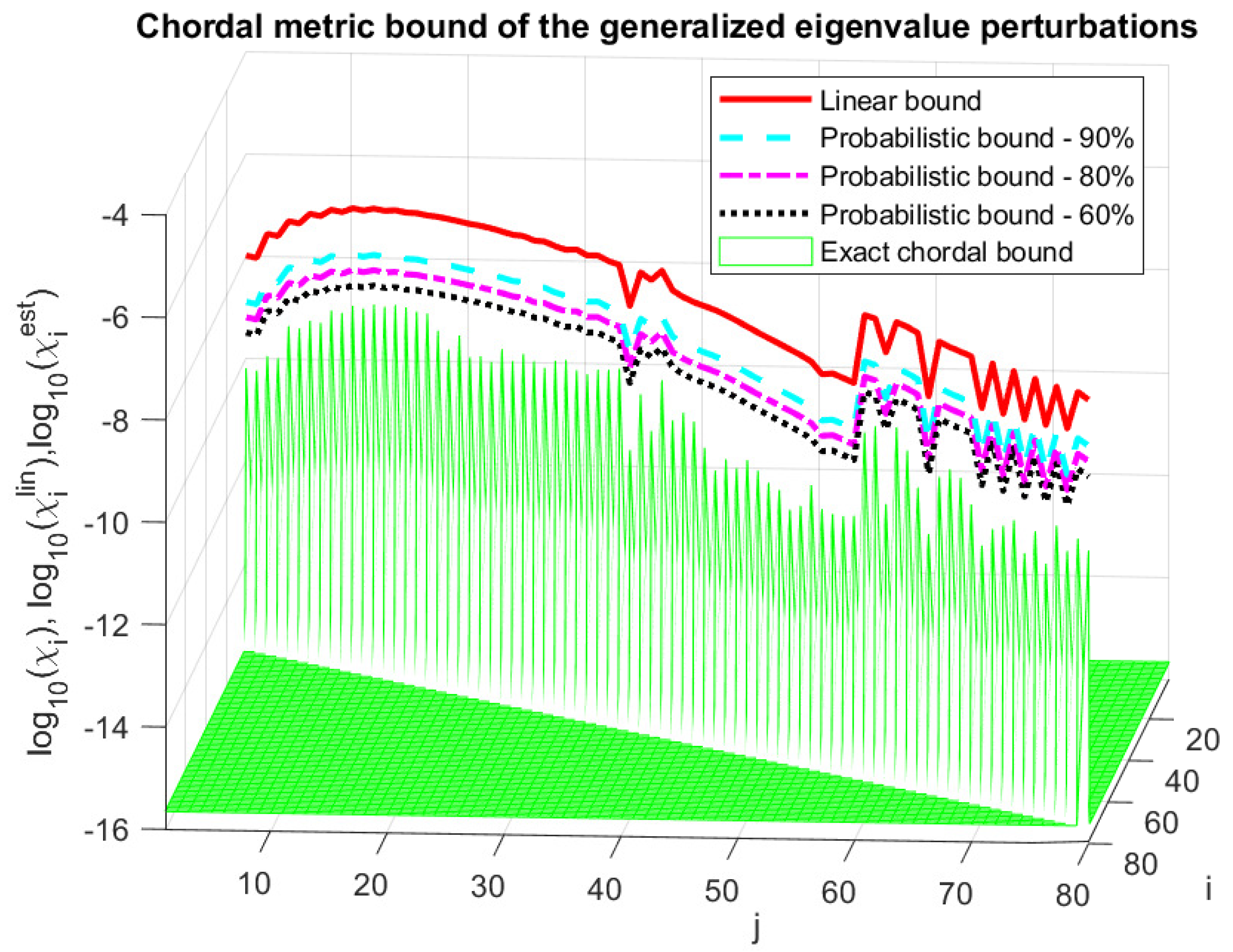

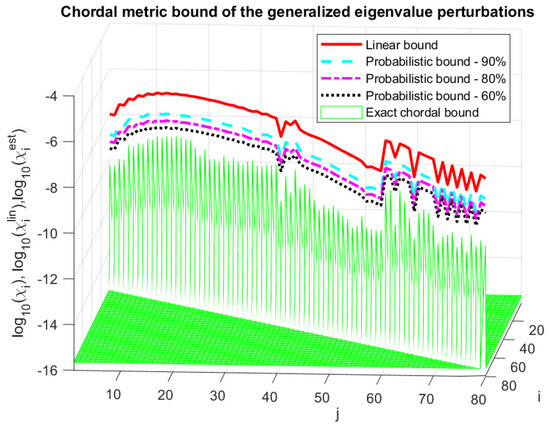

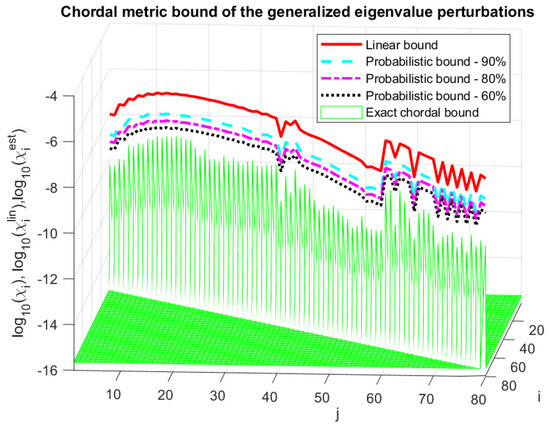

In Figure 6, we show the asymptotic chordal metric bound, , of the generalized eigenvalue perturbations along with the probabilistic estimates, , and the actual eigenvalue perturbations for normal distribution of perturbation entries and probabilities and . The probabilistic bound, , is much tighter than the linear bound, , and the inequality is satisfied for all eigenvalues. In particular, the size of the estimate, , is 8 times smaller than the linear estimate, , for , 16 times for and 32 times for .

Figure 6.

Chordal metric perturbation bounds of the generalized eigenvalues.

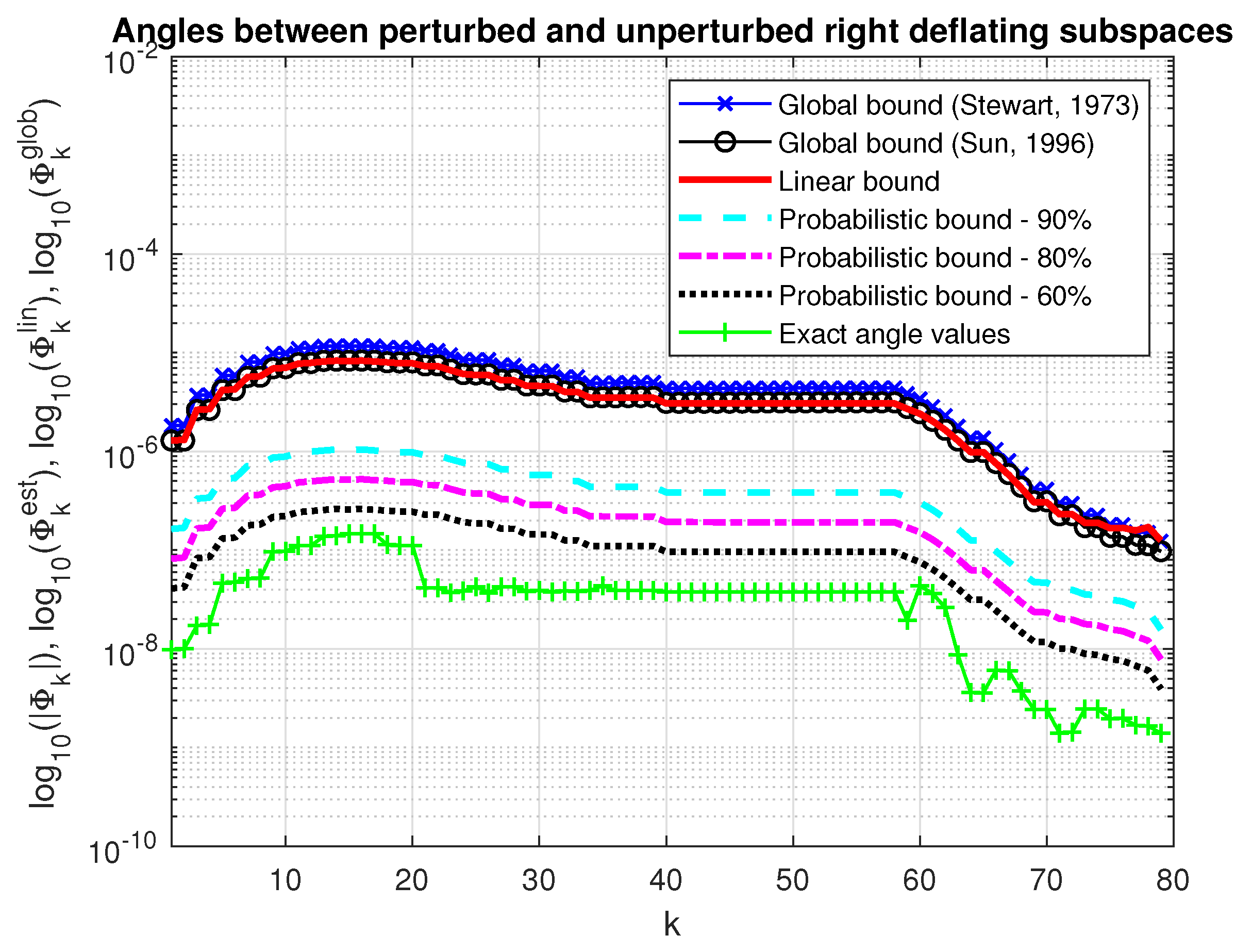

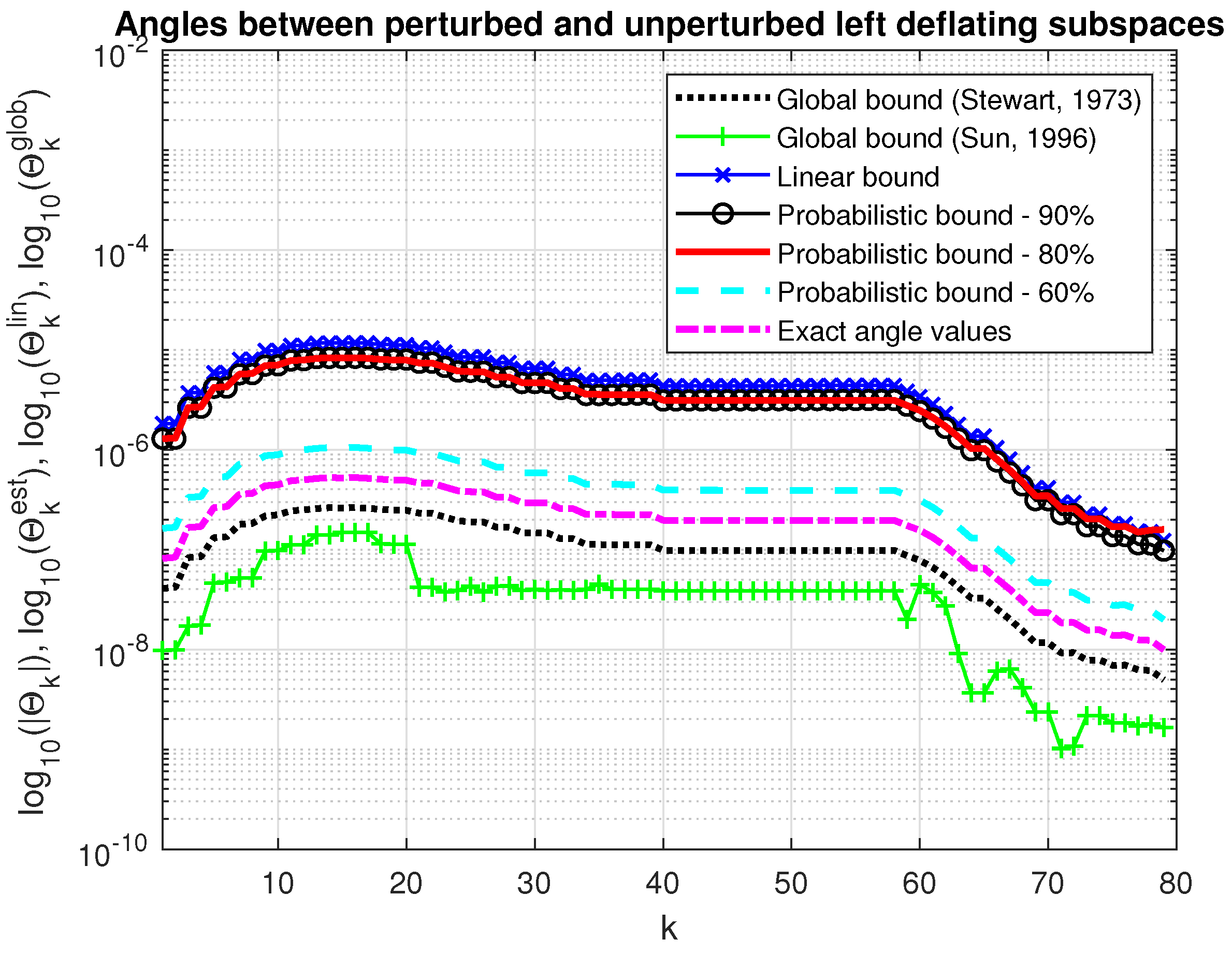

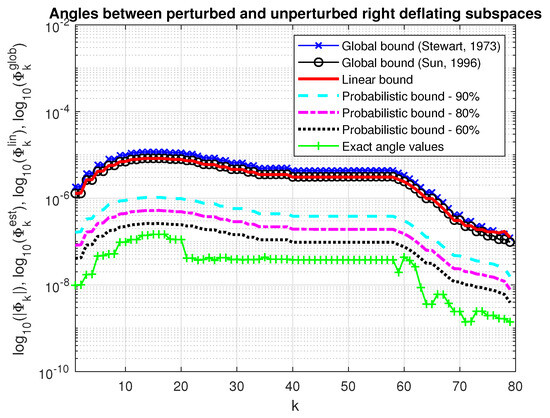

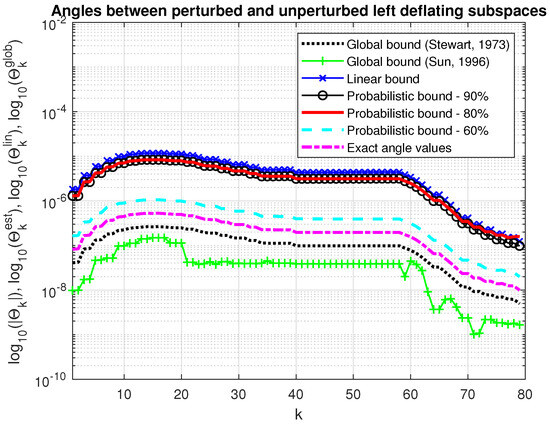

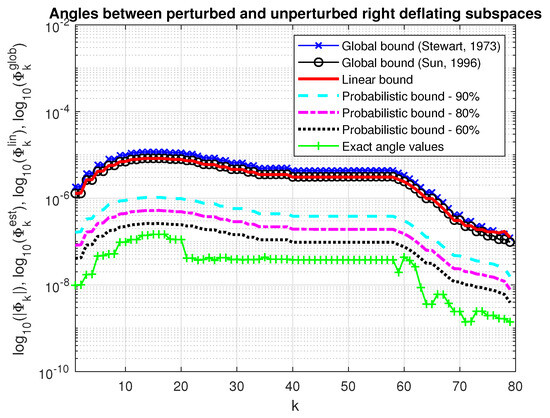

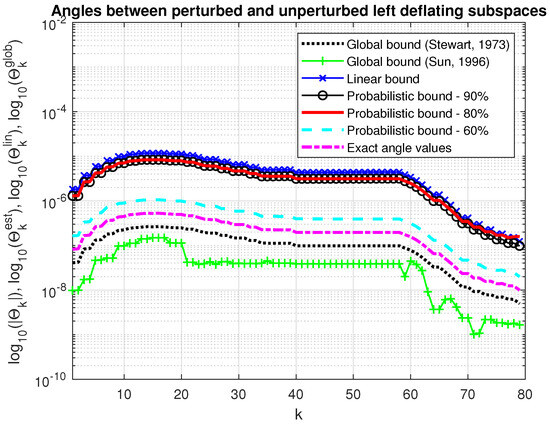

In Figure 7 and Figure 8, we show the asymptotic bounds, and , of the maximum angles between the perturbed and unperturbed deflating subspaces of dimension along with the probabilistic estimates, , , and the actual values of these angles for the same probabilities and . The probabilistic estimates satisfy , for all . For comparison, we also give the global bounds on the maximum angles between the perturbed and unperturbed deflating subspaces computed by (40) and (43), (44). Since the determination of the corresponding bounds requires us to know the norms of parts of the perturbations E and F, which are unknown, these norms are replaced by the Frobenius norms of the whole corresponding matrices. The global bounds practically coincide with the corresponding asymptotic bounds obtained by the splitting operator method.

Figure 7.

Angles between perturbed and unperturbed right deflating subspaces.

Figure 8.

Angles between perturbed and unperturbed left deflating subspaces.

5. Perturbation Bounds for Singular Subspaces

5.1. Problem Statement

Let . The factorization

where are orthogonal matrices and is a diagonal matrix, is called the singular value decomposition of A ([38], Section 2.6). If , matrix has the form

where the numbers are the singular values of A. If and are partitioned such that , then , form a pair of singular subspaces for A. These subspaces satisfy and .
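The defining relations of a pair of singular subspaces can be verified numerically with a short NumPy sketch. It assumes a real matrix and takes the subspaces associated with the k largest singular values; the sizes and variable names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(1)
m, n, k = 6, 4, 2
A = rng.standard_normal((m, n))

# Full SVD: U is m-by-m, Vt is n-by-n, s holds the singular values
# in non-increasing order.
U, s, Vt = np.linalg.svd(A)

U1 = U[:, :k]        # orthonormal basis of the left singular subspace
V1 = Vt[:k, :].T     # orthonormal basis of the right singular subspace
S1 = np.diag(s[:k])

# A maps span(V1) into span(U1), and A^T maps span(U1) into span(V1).
res_right = np.linalg.norm(A @ V1 - U1 @ S1)
res_left = np.linalg.norm(A.T @ U1 - V1 @ S1)
print(res_right, res_left)
```

Both residuals are at the level of rounding errors, which confirms that the two subspaces are mapped into each other by A and its transpose.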

If matrix A is subject to a perturbation, , then there exists another pair of orthogonal matrices, , , and a diagonal matrix, , such that

where

The aim of the perturbation analysis of singular subspaces consists in bounding the angles between the perturbed, , and unperturbed, , right singular subspaces, and the angles between the perturbed, , and unperturbed, , left singular subspaces.

5.2. Global Bound

Consider first the global perturbation bound on the singular subspaces derived by Stewart [16].

Theorem 10.

Let matrix A be decomposed, as in (65), and let and form a pair of singular subspaces for A. Let be given and partition conformingly with U and V as

Let

and let

where, if , Σ is understood to have zero singular values. Set

If

then there are matrices and satisfying

such that and form a pair of singular subspaces of .

Theorem 10 bounds the tangents of the angles between the perturbed, , and unperturbed, , right singular subspaces and the angles between the perturbed, , and unperturbed, , left singular subspaces of A. Thus, the maximum angles between perturbed and unperturbed singular subspaces fulfil

Note that Theorem 10 produces equal bounds on the maximum angles between the perturbed and unperturbed right and left singular subspaces.

5.3. Perturbation Expansion Bound

Global perturbation bounds for singular subspaces that produce individual perturbation bounds for each subspace in a pair of singular subspaces are presented in [18].

Theorem 11.

Let and let be orthogonal matrices with such that

Assume that for each j and the singular values of are different from the singular values of . Let and . For , let

Moreover, let

where we define if and let

If

then there exists a pair, and , of k-dimensional singular subspaces of , such that

5.4. Bound by the Splitting Operator Method

Similarly to the perturbation analysis of the generalized Schur decomposition, performed by using the splitting operator method, it is appropriate first to find bounds on the entries of the matrices and , where is a matrix that consists of the first n columns of U. The matrices and are related to the corresponding perturbations and by orthogonal transformations.

Let us define the vectors of the subdiagonal entries of the matrices and ,

We have that

where

It is fulfilled that

Further on the quantities and will be considered as perturbation parameters since they determine the perturbations and of the singular vectors.

Let us represent the matrix as

Define the vectors

where

It is possible to show that

Following the analysis performed in [30], it can be shown that the unknown vectors and y satisfy the system of linear equations

where

and contain higher-order terms in and .

In this way, determining the vectors and y reduces to solving the system of symmetric coupled equations (75) with diagonal matrices of size . The vector can be found from the separate equation

where contains higher-order terms in and , defined in (66).

Neglecting the higher-order terms in (75), we obtain

where, taking into account that and commute, we have that

The matrices and are diagonal matrices whose nontrivial entries are determined by the singular values .

Hence, the components of the vectors and y satisfy

Taking into account the diagonal form of , we obtain that

Since only one element of f and g participates in (79) and (80), these elements can be replaced by , and we find that the linear approximations of the vectors and y fulfil

where , .

An asymptotic estimate of the vector is obtained from (78), neglecting the higher-order term . Since each element of depends only on one element of , we have that

As a result of determining the linear estimates (82)–(84), we obtain an asymptotic approximation of the vector x as

where

The matrices and can be estimated as

where

and the matrices contain higher-order terms in . The matrices and are asymptotic approximations of the matrices and , respectively.

We have that

Assume that the singular value decomposition of A is reordered as

where and are orthogonal matrices with and , and contains the desired singular values. The matrices and are the orthonormal bases of the perturbed and unperturbed left singular subspace, , of dimension , and and are the orthonormal bases of the perturbed and unperturbed right singular subspace, , of the same dimension. We have that

Using the asymptotic approximations of the elements of the vectors x and y, we obtain the following result.

Theorem 12.

Let matrix A be decomposed, as in (92). Given the spectral norm of the perturbation , set the matrices and in (88), (89) using the linear estimates of the perturbation parameters x and y determined from (81)–(84). Then, the asymptotic bounds of the angles between the perturbed and unperturbed singular subspaces of dimension k are given by

The perturbed matrix of the singular values satisfies

where and contains higher-order terms in and . Neglecting the higher-order terms, we obtain for the singular value perturbation the asymptotic bound

Bounding each diagonal element by , we find the normwise estimate

which is in accordance with Weyl’s theorem ([44], Chapter 1). We have in a first-order approximation that
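The agreement with Weyl's theorem can be checked numerically on a small case. The sketch below is only an illustration and is not part of the analysis above: it uses the closed-form singular values of a 2×2 matrix so that no linear algebra library is needed, and the function names and test matrices are hypothetical.

```python
import math

def singular_values_2x2(a):
    """Singular values (largest first) of a 2x2 matrix given as nested lists."""
    (a11, a12), (a21, a22) = a
    s = a11 * a11 + a12 * a12 + a21 * a21 + a22 * a22  # ||A||_F^2 = s1^2 + s2^2
    d = a11 * a22 - a12 * a21                          # det(A), |d| = s1 * s2
    disc = math.sqrt(max(s * s - 4.0 * d * d, 0.0))    # equals s1^2 - s2^2
    return math.sqrt((s + disc) / 2.0), math.sqrt(max((s - disc) / 2.0, 0.0))

def weyl_check(a, da):
    """Return (max_i |sigma_i(A + dA) - sigma_i(A)|, ||dA||_2)."""
    a_pert = [[a[i][j] + da[i][j] for j in range(2)] for i in range(2)]
    s = singular_values_2x2(a)
    s_pert = singular_values_2x2(a_pert)
    shift = max(abs(sp - so) for sp, so in zip(s_pert, s))
    return shift, singular_values_2x2(da)[0]

# Hypothetical test data: a fixed matrix and a small perturbation.
A = [[3.0, 1.0], [0.0, 0.5]]
dA = [[1e-3, -2e-3], [4e-3, 5e-4]]
shift, norm_dA = weyl_check(A, dA)
print(shift, norm_dA, shift <= norm_dA)
```

The largest singular value shift never exceeds the spectral norm of the perturbation, which is the content of the normwise estimate.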

5.5. Probabilistic Perturbation Bound

Following a derivation similar to the one used in the proof of Theorem 3, the probabilistic estimates and of the parameter vectors x and y can be obtained from the deterministic estimates and . To this end, the value of in the expressions (82) and (83) for and , respectively, is replaced by the value of , where is determined from (4) for the specified value of . According to (85), the probabilistic perturbation bound of x fulfils

where the estimates and satisfy (81) and (84), respectively. The bound of y is found from

where satisfies (83).
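The probabilistic bounds rest on the Markoff inequality, P{|ξ| ≥ a} ≤ E{|ξ|}/a for a > 0. The sketch below is a generic illustration of that inequality on standard normal samples; it does not reproduce relation (4) or the scaling parameter used above, and all names in it are hypothetical.

```python
import math
import random

def markov_bound(mean_abs, a):
    """Markoff upper bound on P{|xi| >= a} for a > 0, capped at 1."""
    return min(1.0, mean_abs / a)

def empirical_tail(samples, a):
    """Fraction of samples whose absolute value is at least a."""
    return sum(1 for s in samples if abs(s) >= a) / len(samples)

rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [rng.gauss(0.0, 1.0) for _ in range(100_000)]
mean_abs = sum(abs(s) for s in samples) / len(samples)  # close to sqrt(2/pi)

for a in (1.0, 2.0, 4.0):
    # The empirical tail is always below the bound, usually by a wide margin.
    print(a, empirical_tail(samples, a), markov_bound(mean_abs, a))
```

The gap between the empirical tail and the bound grows quickly with the threshold, which is why estimates built on this inequality hold with a probability that is typically higher than the prescribed one.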

5.6. Bound Comparison

Example 3.

Consider a matrix, taken as

where

the matrices and are constructed as in Example 2,

and the matrices are elementary reflections. The condition numbers of and with respect to the inversion are controlled by the variables σ and τ and are equal to and , respectively. In the given case, , and , .

The perturbation of A is taken as , where is a matrix with random entries with normal distribution generated by the MATLAB® function randn. This perturbation satisfies . The linear estimates and , which are of size 19900, are found by using (49) and (50), respectively, computing in advance the diagonal matrices and . These matrices satisfy

which means that the perturbations in A can be increased nearly times in x and y.

In Figure 9, we show the mean value of the matrix and the scaling factor as a function of . Since in the given case we use instead of , the value of for a given probability is relatively small. For instance, if %, then we have that and the mean value of is equal to 285.23 (Table 3).

Figure 9.

Mean value of the matrix (left) and the scaling factor (right) as a function of .

Table 3.

The mean value of the ratios and the relative number of entries for which , obtained for five values of , .

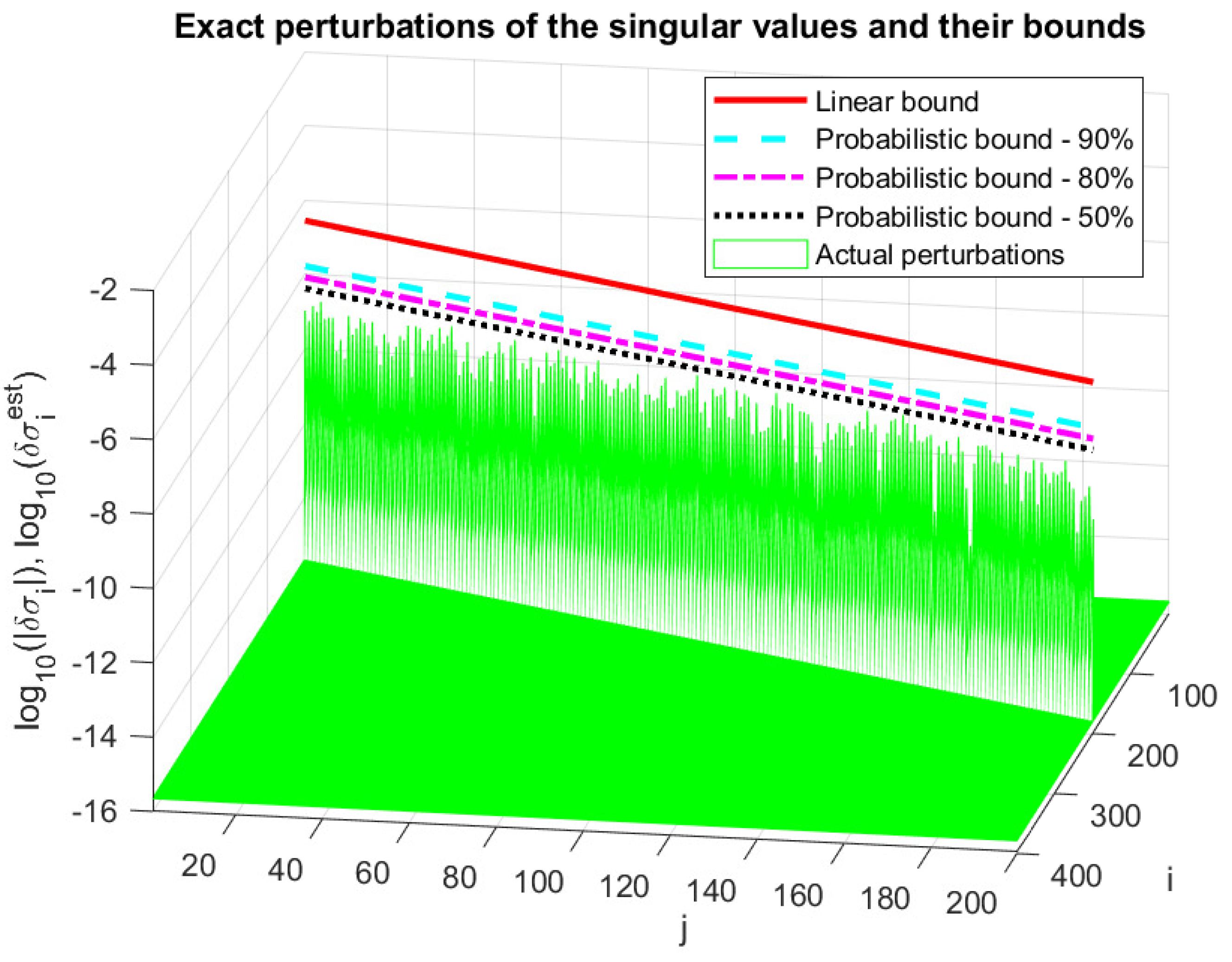

In Figure 10, we compare the actual perturbations, , of the singular values with the normwise bound (96) and the probabilistic bound (97) of the singular value perturbations for and . The probabilistic perturbation bound is tighter than the normwise bound . Specifically, is 2 times smaller than for , 4 times for and 10 times for . The inequality is satisfied for all singular values and probabilities due to the small values of . Note that tighter probability estimates can be obtained if instead of we use and the scaling parameter .

Figure 10.

Singular value perturbations and their bounds.

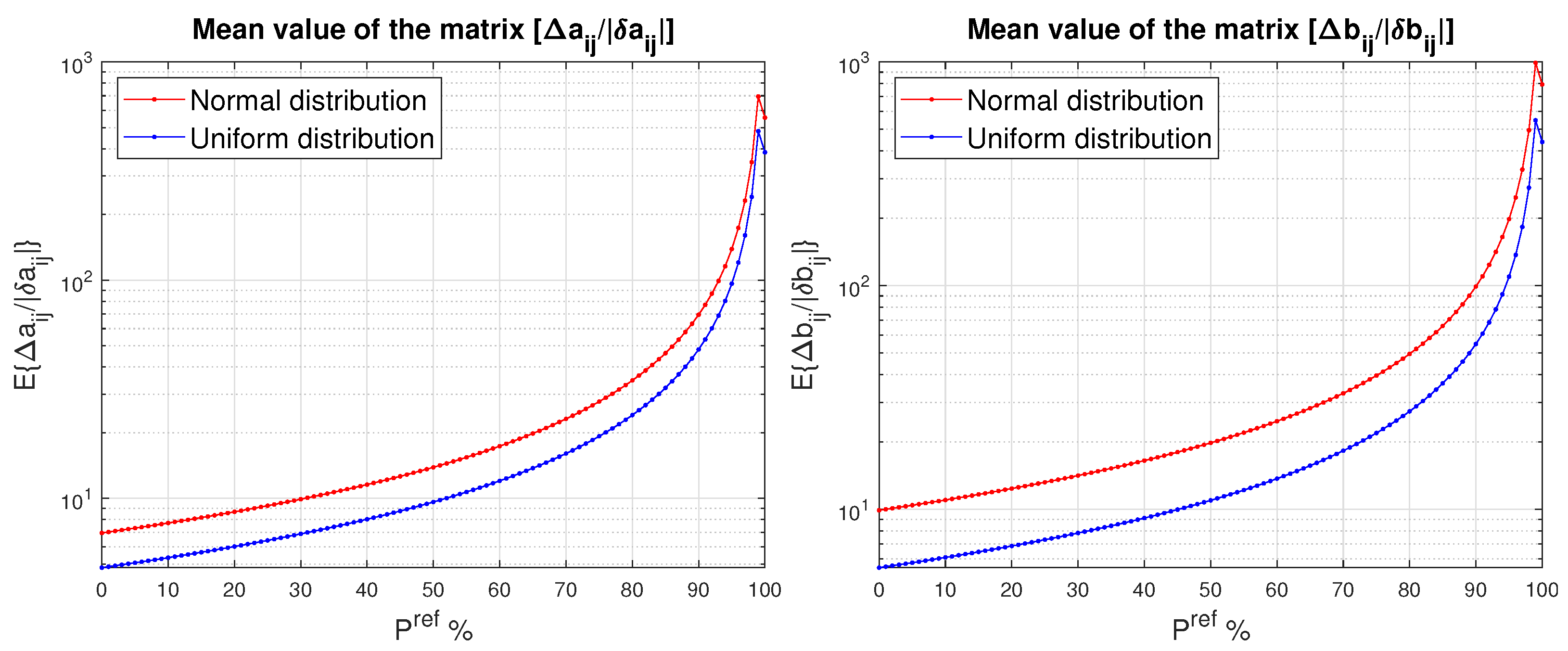

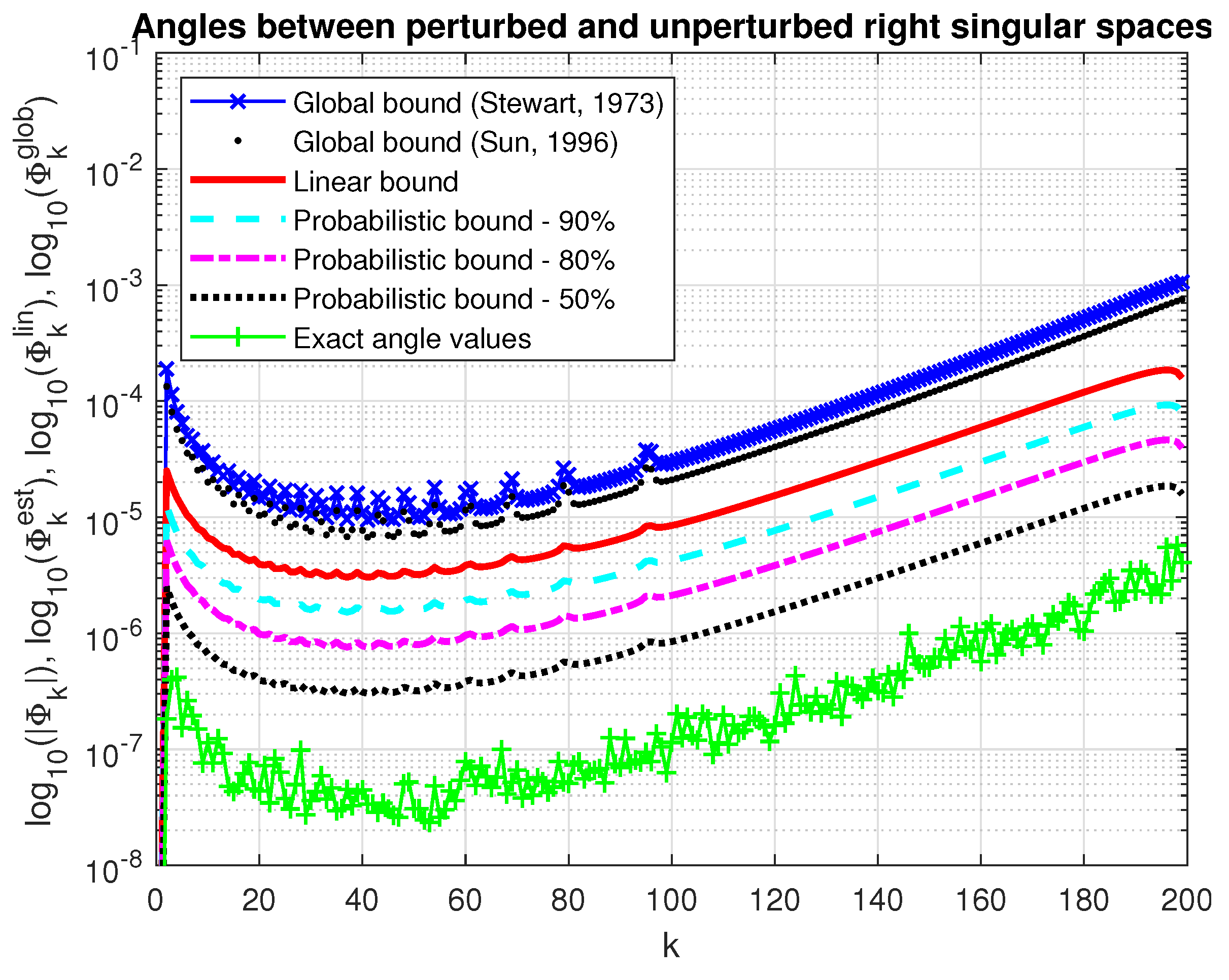

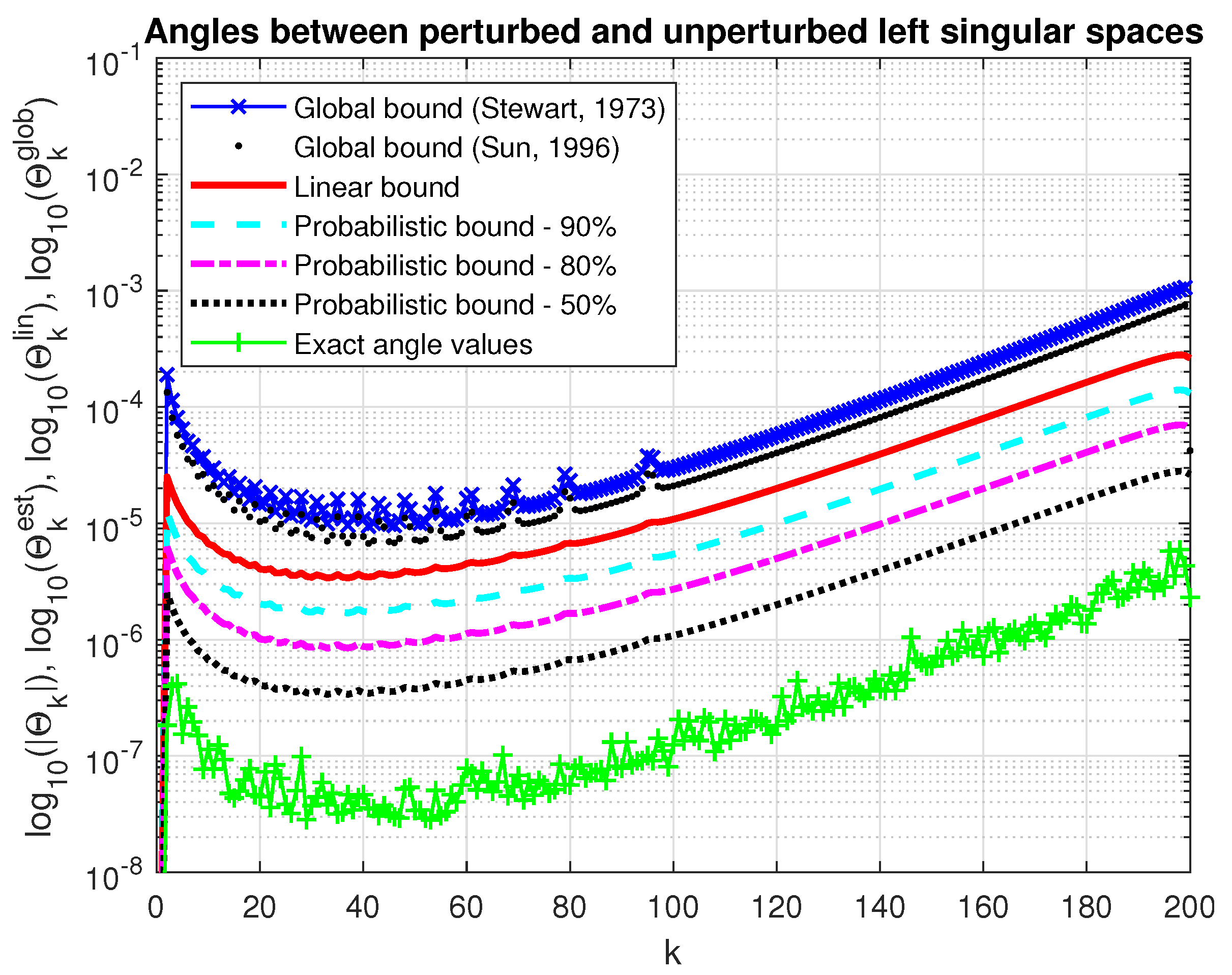

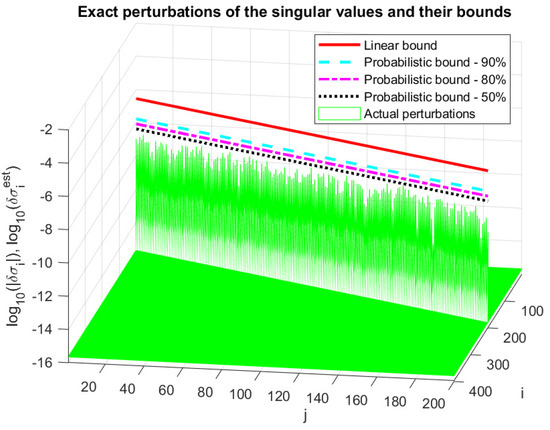

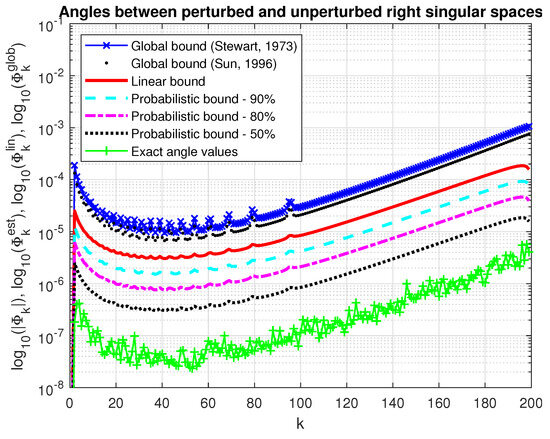

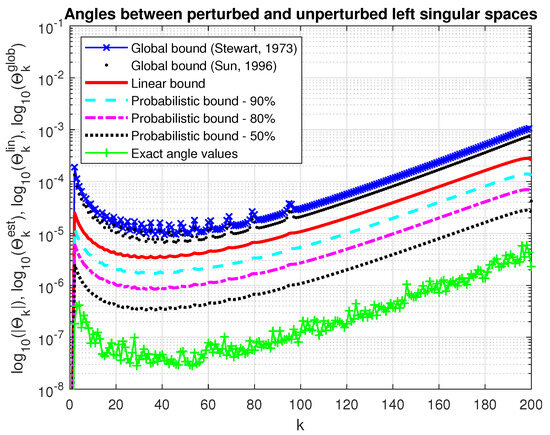

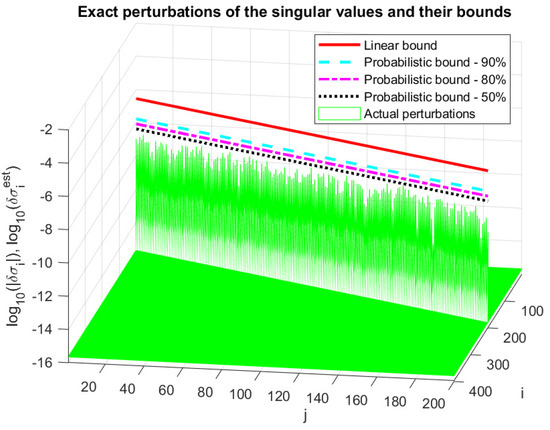

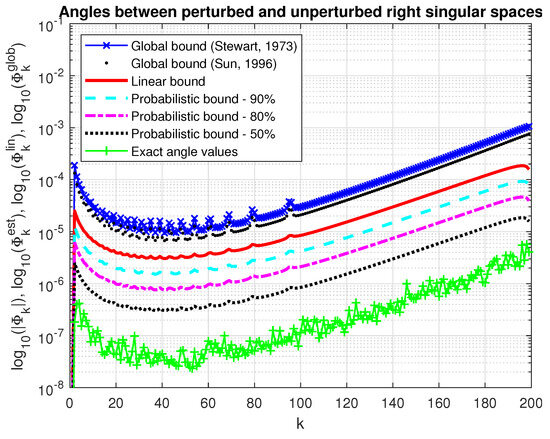

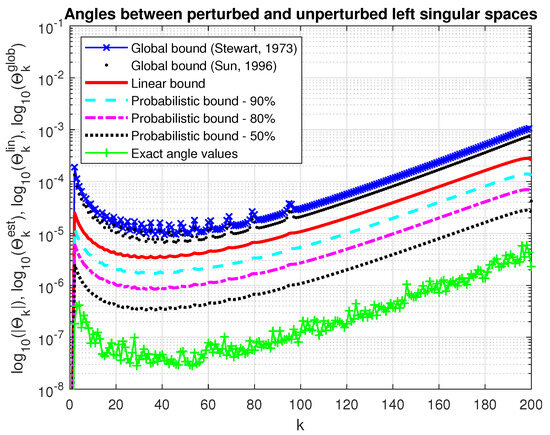

In Figure 11 and Figure 12, we show the actual values of the angles between the perturbed and unperturbed right and left singular subspaces, respectively, along with the corresponding linear bounds and probabilistic bounds. For comparison, we also give the global bounds (69), (70) and (73), (74). As in the case of determining the deflation subspace global bounds, since the norms of parts of the perturbation matrix E are unknown, these norms are approximated by the 2-norms of the whole corresponding matrices. Clearly, the probabilistic bounds outperform all deterministic bounds. For instance, if , the probabilistic bounds are 10 times smaller than the deterministic asymptotic bound, as predicted by the analysis.

Figure 11.

Angles between the perturbed and unperturbed right singular subspaces.

Figure 12.

Angles between the perturbed and unperturbed left singular subspaces.

6. Conclusions

The splitting operator approach used in this paper allows us to derive unified asymptotic perturbation bounds of invariant, deflation and singular matrix subspaces that are comparable with the known perturbation bounds. These unified bounds make it easy to find probabilistic perturbation estimates of the subspaces that are considerably less conservative than the corresponding deterministic bounds, especially for high-order problems.

The proposed probabilistic estimates have two disadvantages. First, they can be conservative due to the properties of the Markoff inequality, so that the actual probability of the results obtained can be much higher than that predicted by these estimates. Second, in the case of high-order problems, their computation requires much more memory than the known bounds due to the use of Kronecker products.
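A rough feel for the memory cost can be obtained from a back-of-the-envelope calculation. The sketch below rests on assumptions that are not stated in the paper: it infers the matrix order n from the estimate size 19900 quoted in Example 3 by solving n(n−1)/2 = 19900, and it assumes that a Kronecker product of two n×n matrices is stored densely in double precision. The function names are hypothetical.

```python
import math

def order_from_subdiagonal_count(p):
    """Solve n(n-1)/2 = p for the matrix order n; raise if no integer solution."""
    n = (1 + math.isqrt(1 + 8 * p)) // 2
    if n * (n - 1) // 2 != p:
        raise ValueError("p is not of the form n(n-1)/2")
    return n

def dense_kron_bytes(n, bytes_per_entry=8):
    """Bytes needed for a dense n^2 x n^2 Kronecker product of two n x n matrices."""
    return (n * n) ** 2 * bytes_per_entry

n = order_from_subdiagonal_count(19900)
print(n, dense_kron_bytes(n) / 1e9, "GB")
```

Under these assumptions, a single dense Kronecker product for a problem of this order already needs on the order of ten gigabytes, so exploiting the diagonal or sparse structure of the factors matters in practice.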

Funding

This research received no external funding.

Notation

| , | the set of complex numbers; |

| , | the imaginary unit; |

| , | the set of real numbers; |

| , | the space of complex matrices; |

| , | the space of real matrices; |

| , | a matrix with entries ; |

| , | the jth column of A; |

| , | the complex conjugate of ; |

| , | the ith row of an matrix A; |

| , | the part of matrix A from row to and from column to ; |

| , | the strictly lower triangular part of A; |

| , | the strictly upper triangular part of A; |

| , | the diagonal of A; |

| , | the square matrix with diagonal elements equal to . |

| , | the matrix of absolute values of the elements of A; |

| , | the transposed A; |

| , | the Hermitian conjugate of A; |

| , | the inverse of A; |

| , | the zero matrix; |

| , | the unit matrix; |

| , | the jth column of ; |

| , | the perturbation of A; |

| , | the determinant of A; |

| , | the ith singular value of A; |

| , | the spectral norm of A; |

| , | the Frobenius norm of A; |

| , | equal by definition; |

| ⪯, | relation of partial order. If , then means ; |

| , | the subspace spanned by the columns of X; |

| , | the unitary complement of U, ; |

| , | the Kronecker product of A and B; |

| , | the gap between the subspaces and ; |

| , | the separation between A and B; |

| , | the difference between the spectra of and ; |

| , | the vec mapping of . If A is partitioned columnwise as , then ; |

| , | the vec-permutation matrix. ; |

| , | the probability of the event ; |

| , | the average value or mean of the random variable ; |

| , | the number of the entries of A that are greater than or equal to the corresponding entries of B. |

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets generated during the current study are available from the author upon reasonable request.

Acknowledgments

The author is grateful to the anonymous reviewers whose remarks and suggestions helped to improve the manuscript.

Conflicts of Interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as potential conflicts of interest.

References

1. Stewart, G.W.; Sun, J.-G. Matrix Perturbation Theory; Academic Press: New York, NY, USA, 1990; ISBN 978-0126702309.

2. Bhatia, R. Matrix factorizations and their perturbations. Linear Algebra Appl. 1994, 197–198, 245–276.

3. Li, R. Matrix perturbation theory. In Handbook of Linear Algebra, 2nd ed.; Hogben, L., Ed.; CRC Press: Boca Raton, FL, USA, 2014; ISBN 978-1-4665-0729-6.

4. Adhikari, S.; Friswell, M.I. Random matrix eigenvalue problems in structural dynamics. Int. J. Numer. Methods Eng. 2006, 69, 562–591.

5. Benaych-Georges, F.; Enriquez, N.; Michail, A. Eigenvectors of a matrix under random perturbation. Random Matrices Theory Appl. 2021, 10, 2150023.

6. Benaych-Georges, F.; Nadakuditi, R.R. The eigenvalues and eigenvectors of finite, low rank perturbations of large random matrices. Adv. Math. 2011, 227, 494–521.

7. Cape, J.; Tang, M.; Priebe, C.E. Signal-plus-noise matrix models: Eigenvector deviations and fluctuations. Biometrika 2019, 106, 243–250.

8. Michaïl, A. Eigenvalues and Eigenvectors of Large Matrices under Random Perturbations. Ph.D. Thesis, Université Paris Descartes, Paris, France, 2018. Available online: https://theses.hal.science/tel-02468213 (accessed on 20 August 2024).

9. O’Rourke, S.; Vu, V.; Wang, K. Eigenvectors of random matrices: A survey. J. Combin. Theory Ser. A 2016, 144, 361–442.

10. O’Rourke, S.; Vu, V.; Wang, K. Optimal subspace perturbation bounds under Gaussian noise. In Proceedings of the 2023 IEEE International Symposium on Information Theory (ISIT), Taipei, Taiwan, 25–30 June 2023; pp. 2601–2606.

11. Sun, J.-G. Perturbation expansions for invariant subspaces. Linear Algebra Appl. 1991, 153, 85–97.

12. Wilkinson, J. The Algebraic Eigenvalue Problem; Clarendon Press: Oxford, UK, 1965; ISBN 978-0-19-853418-1.

13. Greenbaum, A.; Li, R.-C.; Overton, M.L. First-order perturbation theory for eigenvalues and eigenvectors. SIAM Rev. 2020, 62, 463–482.

14. Bai, Z.; Demmel, J.; Mckenney, A. On computing condition numbers for the nonsymmetric eigenproblem. ACM Trans. Math. Softw. 1993, 19, 202–223.

15. Stewart, G.W. On the sensitivity of the eigenvalue problem Ax=λBx. SIAM J. Numer. Anal. 1972, 9, 669–686.

16. Stewart, G.W. Error and perturbation bounds for subspaces associated with certain eigenvalue problems. SIAM Rev. 1973, 15, 727–764.

17. Sun, J.-G. Perturbation bounds for the generalized Schur decomposition. SIAM J. Matrix Anal. Appl. 1995, 16, 1328–1340.

18. Sun, J.-G. Perturbation analysis of singular subspaces and deflating subspaces. Numer. Math. 1996, 73, 235–263.

19. Kågström, B.; Poromaa, P. Computing eigenspaces with specified eigenvalues of a regular matrix pair (A,B) and condition estimation: Theory, algorithms and software. Numer. Algorithms 1996, 12, 369–407.

20. Benaych-Georges, F.; Nadakuditi, R.R. The singular values and vectors of low rank perturbations of large rectangular random matrices. J. Multivariate Anal. 2012, 111, 120–135.

21. Konstantinides, K.; Yao, K. Statistical analysis of effective singular values in matrix rank determination. IEEE Trans. Acoustic. Speech Signal Proc. 1988, 36, 757–763.

22. Liu, H.; Wang, R. An Exact sin Θ Formula for Matrix Perturbation Analysis and Its Applications; ArXiv e-prints in Statistics Theory [math.ST]; Cornell University Library: Ithaca, NY, USA, 2020; pp. 1–31.

23. O’Rourke, S.; Vu, V.; Wang, K. Random perturbation of low rank matrices: Improving classical bounds. Lin. Algebra Appl. 2018, 540, 26–59.

24. O’Rourke, S.; Vu, V.; Wang, K. Matrices with Gaussian noise: Optimal estimates for singular subspace perturbation. IEEE Trans. Inform. Theory 2024, 70, 1978–2002.

25. Wang, K. Analysis of Singular Subspaces under Random Perturbations; ArXiv e-Prints in Statistics Theory [math.ST]; Cornell University Library: Ithaca, NY, USA, 2024; pp. 1–68.

26. Wang, R. Singular vector perturbation under Gaussian noise. SIAM J. Matrix Anal. Appl. 2015, 36, 158–177.

27. Stewart, G.W. Stochastic perturbation theory. SIAM Rev. 1990, 32, 579–610.

28. Edelman, A.; Rao, N.R. Random matrix theory. Acta Numer. 2005, 14, 1–65.

29. Petkov, P. Probabilistic perturbation bounds of matrix decompositions. Numer Linear Alg. Appl. 2024.

30. Angelova, V.; Petkov, P. Componentwise perturbation analysis of the Singular Value Decomposition of a matrix. Appl. Sci. 2024, 14, 1417.

31. Konstantinov, M.; Petkov, P. Perturbation Methods in Matrix Analysis and Control; NOVA Science Publishers, Inc.: New York, NY, USA, 2020; Available online: https://novapublishers.com/shop/perturbation-methods-in-matrix-analysis-and-control (accessed on 20 August 2024).

32. Petkov, P. Componentwise perturbation analysis of the Schur decomposition of a matrix. SIAM J. Matrix Anal. Appl. 2021, 42, 108–133.

33. Petkov, P. Componentwise perturbation analysis of the QR decomposition of a matrix. Mathematics 2022, 10, 4687.

34. Zhang, G.; Li, H.; Wei, Y. Componentwise perturbation analysis for the generalized Schur decomposition. Calcolo 2022, 59.

35. Stewart, G.W. Matrix Algorithms; Vol. II: Eigensystems; SIAM: Philadelphia, PA, USA, 2001; ISBN 0-89871-503-2.

36. The MathWorks, Inc. MATLAB, Version 9.9.0.1538559 (R2020b); The MathWorks, Inc.: Natick, MA, USA, 2020; Available online: http://www.mathworks.com (accessed on 20 August 2024).

37. Papoulis, A. Probability, Random Variables and Stochastic Processes, 3rd ed.; McGraw Hill, Inc.: New York, NY, USA, 1991; ISBN 0-07-048477-5.

38. Horn, R.; Johnson, C. Matrix Analysis, 2nd ed.; Cambridge University Press: Cambridge, UK, 2013; ISBN 978-0-521-83940-2.

39. Golub, G.H.; Van Loan, C.F. Matrix Computations, 4th ed.; The Johns Hopkins University Press: Baltimore, MD, USA, 2013; ISBN 978-1-4214-0794-4.

40. Sun, J.-G. Stability and Accuracy. Perturbation Analysis of Algebraic Eigenproblems; Technical Report; Department of Computing Science, Umeå University: Umeå, Sweden, 1998; pp. 1–210.

41. Davis, C.; Kahan, W. The rotation of eigenvectors by a perturbation. III. SIAM J. Numer. Anal. 1970, 7, 1–46.

42. Horn, R.; Johnson, C. Topics in Matrix Analysis; Cambridge University Press: Cambridge, UK, 1991; ISBN 0-521-30587-X.

43. Bavely, C.A.; Stewart, G.W. An algorithm for computing reducing subspaces by block diagonalization. SIAM J. Numer. Anal. 1979, 16, 359–367.

44. Stewart, G.W. Matrix Algorithms; Vol. I: Basic Decompositions; SIAM: Philadelphia, PA, USA, 1998; ISBN 0-89871-414-1.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).