Abstract

Recently, the author developed a theory for computing the phase lag and the amplification factor of explicit and implicit multistep methods for first-order differential equations. In this paper, we investigate how the derivatives of the phase lag and of the amplification factor affect the efficiency of the newly developed methods. We also present the stability regions of these methods, together with numerical experiments and conclusions on the new methodologies.

Keywords:

numerical solution; initial value problems (IVPs); Adams–Bashforth methods; trigonometric fitting; multistep methods

MSC:

65L05; 65L06

1. Introduction

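We consider the initial value problem (IVP) for a first-order differential equation, or a system of such equations, of the form

$$y'(x) = f\bigl(x, y(x)\bigr), \qquad y(x_0) = y_0. \qquad (1)$$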

Such equations arise in several fields, such as astrophysics, chemistry, electronics, nanotechnology, materials science, and physics. Particular attention should be paid to the class of equations whose solutions are oscillatory or periodic (see [1,2]).

Over the last 20 years, considerable work has been devoted to the numerical solution of problem (1) (see, for example, [3,4,5,6,7] and the references therein). For a more detailed examination of the methods used to solve (1) when its solution exhibits oscillatory behavior, see [3,4,8] and the references therein, as well as Quinlan and Tremaine [5,9], among others. The numerical techniques for solving (1) found in the literature share certain similarities, the most notable being that they are either multistep or hybrid methods. Moreover, most of these methods were developed for the numerical solution of second-order differential equations. The main methodological frameworks, together with their respective references, are the following:

- Exponentially-fitted, trigonometrically-fitted, phase-fitted, and amplification-fitted Runge–Kutta and Runge–Kutta–Nyström methods and Runge–Kutta and Runge–Kutta–Nyström methods with minimal phase lag (see [10,11,12,13,14,15,16,17]);

- Exponentially-fitted, trigonometrically-fitted, phase-fitted, and amplification-fitted multistep methods, and multistep methods with minimal phase lag (see [18,19,20,21,22,23,24,25,26,27,28]).

The theory for the construction of multistep methods with minimal phase lag, or of phase-fitted multistep methods, for first-order IVPs was recently developed by Simos in [29,30]. More precisely, he developed a theory for estimating the phase lag and the amplification error of explicit [29] and implicit [30] multistep methods for first-order IVPs. Also recently, Saadat et al. [31] presented a theory for the development of backward differentiation formulae (BDF) for problems with oscillating solutions. It is known that BDF methods are implicit and are specially constructed for stiff problems. Phase-fitted and amplification-fitted methods, on the other hand, can be applied over long integration intervals. In [31], however, we did not find applications over long integration intervals, since all the examples use relatively short ones. It would be interesting to see how these methods behave over much longer intervals.

In this study, we will investigate how the derivatives of the phase lag and the amplification factor affect the effectiveness of multistep approaches for first-order IVPs.

This paper is structured as follows:

- In Section 2, we recall the theory for the derivatives of the phase lag and of the amplification factor of multistep methods for first-order IVPs.

- In Sections 3–9, we present methodologies for the vanishing of the following, and apply them to Adams–Bashforth methods:

  - The derivative of the phase lag;

  - The derivative of the amplification factor;

  - The derivative of the phase lag and the derivative of the amplification factor.

- In Section 10, we study the stability of the newly obtained algorithms.

- In Section 11, we present the numerical results and a conclusion on the role of the derivative of the phase lag and the derivative of the amplification factor.

2. The Theory

In order to study the phase lag of multistep methods for problem (1), the following scalar test equation is used:

The solution of the above equation is given by the following:

The numerical solution of Equation (2) is given by the following:

When one integrates problem (2), for one step h, we have the following:

Theoretical solution.

where .

Numerical solution

Definition 1.

Phase lag

Amplification factor

The order of the phase lag is q if and only if the phase lag is O(v^{q+1}) as v → 0. The order of the amplification factor is p if and only if the amplification factor is O(v^{p+1}) as v → 0.

Expanding formulae (7) and (8) in Taylor series about v = 0, we obtain the following:

where the expansion coefficients involve the n-th derivatives of the phase lag and of the amplification factor with respect to v.

Formulae (9) and (10) show that the more derivatives of the phase lag and of the amplification factor are eliminated, the more terms of these expansions vanish, and hence the smaller the phase lag and the amplification factor of the method become.
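To make these quantities concrete, the following minimal sketch evaluates them numerically for the classical fourth-order Adams–Bashforth method (not one of the new methods). It assumes the test equation y' = iωy with v = ωh, and the definitions phase lag = v − arg λ(v) and amplification factor = 1 − |λ(v)|, where λ(v) is the principal root of the characteristic equation; these conventions and all function names are illustrative assumptions, not formulae (7) and (8). The orders are estimated from log-log slopes between two small values of v.

```python
import numpy as np

# Classical 4-step, fourth-order Adams-Bashforth coefficients b_j in
# y_{n+1} = y_n + h * sum_j b_j f_{n-j}, j = 0..3.
AB4 = np.array([55.0, -59.0, 37.0, -9.0]) / 24.0


def principal_root(b, v):
    """Principal root of lam^k - lam^(k-1) - i*v*sum_j b_j lam^(k-1-j) = 0,
    the characteristic equation obtained by applying the explicit method
    with coefficients b to the assumed test equation y' = i*omega*y."""
    k = len(b)
    coeffs = np.zeros(k + 1, dtype=complex)
    coeffs[0] = 1.0
    coeffs[1] = -1.0
    coeffs[1:] -= 1j * v * np.asarray(b, dtype=float)
    roots = np.roots(coeffs)
    # The principal root is the one approximating the exact factor exp(i*v).
    return roots[np.argmin(np.abs(roots - np.exp(1j * v)))]


def phase_lag(b, v):
    """Assumed definition: phase lag = v - arg(lambda)."""
    return v - np.angle(principal_root(b, v))


def amplification_error(b, v):
    """Assumed definition: amplification factor = 1 - |lambda|."""
    return 1.0 - np.abs(principal_root(b, v))


def loglog_slope(func, b, v1, v2):
    """Exponent s in |func(b, v)| ~ const * v**s, estimated from v1 and v2."""
    return np.log(abs(func(b, v1)) / abs(func(b, v2))) / np.log(v1 / v2)


if __name__ == "__main__":
    for v in (0.2, 0.1, 0.05):
        print(f"v = {v:4.2f}: phase lag = {phase_lag(AB4, v):.3e}, "
              f"amplification factor = {amplification_error(AB4, v):.3e}")
    print("phase-lag slope:", loglog_slope(phase_lag, AB4, 0.04, 0.02))
    print("amplification slope:",
          loglog_slope(amplification_error, AB4, 0.04, 0.02))
```

For AB4 the two slopes come out close to 5 and 6, i.e., under these assumed definitions the phase lag behaves like a multiple of v^5 and the amplification factor like a multiple of v^6.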

Consider the multistep methods for the numerical solution of the above mentioned problem (1):

where are polynomials of and h is the step length of the integration.

The characteristic equation of the above difference equation is given by the following:

Based on the theory developed in [29], we have the following formulae:

This is the direct formula for the computation of the amplification factor of the multistep method (11) (for more information, see [29]).

In order to eliminate the derivatives of the phase lag and of the amplification factor, we proceed as follows:

- We differentiate formulae (17) and (18) (or, for higher derivatives, the derivative formulae obtained previously) with respect to v.

- We require the resulting expressions for the derivatives of the phase lag and of the amplification factor to be equal to zero.

In Appendix A, we present direct formulae for the calculation of the derivatives of the phase lag and of the amplification factor. These formulae are obtained by differentiating formulae (17) and (18) with respect to v.
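When the coefficients of a method do not depend on v, the differentiation step can be checked numerically. The sketch below is a hypothetical helper, not the Appendix A formulae: it approximates the derivative of the phase lag of the classical fourth-order Adams–Bashforth method with respect to v by central differences, under assumed conventions (test equation y' = iωy, v = ωh, phase lag = v − arg λ for the principal root λ). Under these conventions the phase lag of AB4 behaves like (251/720)v^5 for small v, so the computed derivative should approach 5(251/720)v^4.

```python
import numpy as np

# Classical fourth-order Adams-Bashforth coefficients (constant in v).
AB4 = np.array([55.0, -59.0, 37.0, -9.0]) / 24.0


def phase_lag(b, v):
    """Phase lag v - arg(lambda) of the principal root (assumed definition)
    for y_{n+1} = y_n + h*sum_j b_j f_{n-j} applied to y' = i*omega*y."""
    k = len(b)
    coeffs = np.zeros(k + 1, dtype=complex)
    coeffs[0], coeffs[1] = 1.0, -1.0
    coeffs[1:] -= 1j * v * np.asarray(b, dtype=float)
    roots = np.roots(coeffs)
    lam = roots[np.argmin(np.abs(roots - np.exp(1j * v)))]
    return v - np.angle(lam)


def derivative_wrt_v(func, v, dv=1e-4):
    """Second-order central-difference approximation of d(func)/dv at v."""
    return (func(v + dv) - func(v - dv)) / (2.0 * dv)


if __name__ == "__main__":
    v = 0.02
    numeric = derivative_wrt_v(lambda s: phase_lag(AB4, s), v)
    # Derivative of the leading term (251/720) v^5 of the phase lag of AB4.
    leading = 5.0 * (251.0 / 720.0) * v ** 4
    print("numerical derivative:", numeric, " leading-term value:", leading)
```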

3. Vanishing of the Derivatives of the Phase Lag and the Amplification Factor

In this paper, we will present methodologies for the elimination of the derivatives of the phase lag and the derivatives of the amplification factor.

More specifically, we will present methodologies on the following:

- The vanishing of the derivative of the phase lag;

- The vanishing of the derivative of the amplification factor;

- The vanishing of the derivative of the phase lag and the amplification factor.

A common characteristic of these new methodologies is that the methods to which they are applied are phase-fitted and amplification-fitted.

4. Phase-Fitted and Amplification-Fitted Adams–Bashforth Fourth-Order Method with the Vanished First Derivative of the Phase Lag

We will investigate the following Adams–Bashforth approach:

which, for , , and , achieves the fourth algebraic order:

where () is the local truncation error of the method.
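For comparison, the coefficients of the classical k-step Adams–Bashforth methods follow directly from the order conditions. The sketch below is our own illustrative helper and does not reproduce method (19), whose free coefficients are not shown here: writing the method as y_{n+1} = y_n + h Σ_j b_j f_{n−j}, it solves Σ_j b_j (−j)^m = 1/(m+1), m = 0, …, k − 1, in exact rational arithmetic.

```python
from fractions import Fraction


def adams_bashforth_coefficients(k):
    """Exact coefficients b_0..b_{k-1} of the classical k-step Adams-Bashforth
    method y_{n+1} = y_n + h*sum_j b_j f_{n-j}, from the order conditions
        sum_j b_j * (-j)**m = 1/(m+1),   m = 0..k-1,
    i.e. exact integration over [x_n, x_{n+1}] of polynomials of degree < k."""
    A = [[Fraction((-j) ** m) for j in range(k)] for m in range(k)]
    rhs = [Fraction(1, m + 1) for m in range(k)]
    # Gauss-Jordan elimination in exact rational arithmetic.
    for col in range(k):
        pivot = next(r for r in range(col, k) if A[r][col] != 0)
        A[col], A[pivot] = A[pivot], A[col]
        rhs[col], rhs[pivot] = rhs[pivot], rhs[col]
        for r in range(k):
            if r != col and A[r][col] != 0:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * p for a, p in zip(A[r], A[col])]
                rhs[r] -= factor * rhs[col]
    return [rhs[i] / A[i][i] for i in range(k)]


if __name__ == "__main__":
    for k in (4, 5, 6):
        print(k, [str(c) for c in adams_bashforth_coefficients(k)])
```

For k = 4 this gives (55, −59, 37, −9)/24, the coefficients of the classical fourth-order method.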

In order for the method (19) to be phase-fitted, the following relation must be true, according to the formula (17) described earlier:

For the method (19) to be amplification-fitted, the following relation must hold, according to formula (18) mentioned earlier:
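Taken together, phase fitting (zero phase lag) and amplification fitting (unit amplification) mean that e^{iv} is an exact root of the characteristic equation. For an explicit method y_{n+1} = y_n + h Σ_j b_j f_{n−j} applied to the assumed test equation y' = iωy, this yields two real linear conditions on the b_j. The sketch below applies this idea to a hypothetical five-step method, combining the two fitting conditions with the first three Adams order conditions; it illustrates the principle only and is not method (19). As v → 0 the two fitting conditions reduce to the order conditions for m = 3 and m = 4, so the coefficients tend to those of the classical fifth-order Adams–Bashforth method.

```python
import numpy as np

# Classical five-step, fifth-order Adams-Bashforth coefficients, for comparison.
AB5 = np.array([1901.0, -2774.0, 2616.0, -1274.0, 251.0]) / 720.0


def fitted_coefficients(v, k=5, n_order=3):
    """Coefficients b_0..b_{k-1} of a hypothetical explicit method
    y_{n+1} = y_n + h*sum_j b_j f_{n-j} satisfying the first n_order Adams
    order conditions plus exactness for y = exp(i*omega*x), v = omega*h."""
    if n_order + 2 != k:
        raise ValueError("need n_order + 2 == k for a square system")
    j = np.arange(k, dtype=float)
    rows, rhs = [], []
    for m in range(n_order):
        # Order condition: sum_j b_j (-j)^m = 1/(m+1).
        rows.append((-j) ** m)
        rhs.append(1.0 / (m + 1))
    # exp(iv) - 1 - i*v*sum_j b_j exp(-i*j*v) = 0, split into its real part ...
    rows.append(v * np.sin(j * v))
    rhs.append(np.cos(v) - 1.0)
    # ... and its imaginary part.
    rows.append(v * np.cos(j * v))
    rhs.append(np.sin(v))
    return np.linalg.solve(np.array(rows), np.array(rhs))


def characteristic_poly(b, v):
    """Coefficients (highest power first) of
    lam^k - lam^(k-1) - i*v*sum_j b_j lam^(k-1-j)."""
    k = len(b)
    coeffs = np.zeros(k + 1, dtype=complex)
    coeffs[0], coeffs[1] = 1.0, -1.0
    coeffs[1:] -= 1j * v * np.asarray(b, dtype=float)
    return coeffs


if __name__ == "__main__":
    v = 0.5
    b = fitted_coefficients(v)
    roots = np.roots(characteristic_poly(b, v))
    lam = roots[np.argmin(np.abs(roots - np.exp(1j * v)))]
    print("coefficients:", b)
    print("phase lag:", v - np.angle(lam), " amplification:", 1.0 - abs(lam))
```

Both printed quantities are at round-off level, since e^{iv} is a root of the characteristic equation by construction.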

In order to eliminate the derivative of the phase lag of the method, we proceed as follows:

Algorithm for the Elimination of the First Derivative of the Phase Lag

- We calculate the first derivative of the phase lag formula with respect to v.

- We require the derivative obtained in the previous step to be equal to zero.

Based on the above algorithm, we find that, for the first derivative of the phase lag of method (19) to vanish, the following relation must hold:

where

This novel algorithm has the following features:

The following are the formulae for the Taylor series expansion of the coefficients , , and :

5. Phase-Fitted and Amplification-Fitted Adams–Bashforth Fourth-Order Method with the Vanished First Derivative of the Amplification Factor

We will investigate the Adams–Bashforth approach (19).

Based on the formula (17) mentioned above, we know that in order for the method (19) to be phase-fitted, the relation (21) must hold.

Based on the formula (18) mentioned above, we know that in order for the method (19) to be amplification-fitted, the relation (22) must hold.

In order to eliminate the derivative of the amplification factor of the method, we proceed as follows:

Algorithm for the Elimination of the First Derivative of the Amplification Factor

- We calculate the first derivative of the amplification factor formula with respect to v.

- We require the derivative obtained in the previous step to be equal to zero.

Based on the above algorithm, we find that, for the first derivative of the amplification factor of method (19) to vanish, the following relation must hold:

where is given by (A5), which can be found in Appendix B.

This novel algorithm has the following features:

The following are the formulae for the Taylor series expansion of the coefficients , , and :

6. Phase-Fitted and Amplification-Fitted Adams–Bashforth Fifth-Order Method

We will investigate the following Adams–Bashforth approach:

which, for , , and , achieves the fifth algebraic order:

where () is the local truncation error of the method.

Let us consider the method (38) with and .

In order for the method (38) to be phase-fitted, the following relation must be true, according to the formula (17) that was described earlier:

where

Based on the formula (18) mentioned above, we know that in order for the method (38) to be amplification-fitted, the following relation must hold:

where

This novel algorithm has the following features:

The following are the formulae for the Taylor series expansion of the coefficients and :

7. Phase-Fitted and Amplification-Fitted Adams–Bashforth Fifth-Order Method with the Vanished First Derivative of the Phase Lag

We will investigate the Adams–Bashforth approach (38) with .

Based on the formula (17) mentioned above, we know that in order for the method (38) to be phase-fitted, the following relation must hold:

where

Based on the formula (18) mentioned above, we know that in order for the method (38) to be amplification-fitted, the following relation must hold:

where

Applying the algorithm of Section 4 to method (38), we find that, for the first derivative of the phase lag of method (38) to vanish, the following relation must hold:

where

Requiring Equations (49), (51), and (53) to be satisfied simultaneously, we obtain

where the formulae , , and are in Appendix C.

This novel algorithm has the following features:

The following are the formulae for the Taylor series expansion of the coefficients , , and :

8. Phase-Fitted and Amplification-Fitted Adams–Bashforth Fifth-Order Method with the Vanished First Derivative of the Amplification Factor

We will investigate the Adams–Bashforth approach (38) with .

Based on the formula (17) mentioned above, we know that in order for the method (38) to be phase-fitted, the relation (49) must hold.

Based on the formula (18) mentioned above, we know that in order for the method (38) to be amplification-fitted, the relation (51) must hold.

Applying the algorithm of Section 5 to method (38), we find that, for the first derivative of the amplification factor of method (38) to vanish, the following relation must hold:

where is given in Appendix D.

Requiring Equations (49), (51), and (60) to be satisfied simultaneously, we obtain

where the formulae , , and are in Appendix E.

This novel algorithm has the following features:

The following are the formulae for the Taylor series expansion of the coefficients , , and :

9. Phase-Fitted and Amplification-Fitted Adams–Bashforth Fifth-Order Method with the Vanished First Derivative of the Phase Lag and the First Derivative of the Amplification Factor

We will investigate the Adams–Bashforth approach (38).

Based on the formula (17) mentioned above, we know that in order for the method (38) to be phase-fitted, the following relation must hold:

where

Based on the formula (18) mentioned above, we know that in order for the method (38) to be amplification-fitted, the following relation must hold:

where

Applying the algorithm of Section 4 to method (38), we find that, for the first derivative of the phase lag of method (38) to vanish, the following relation must hold:

where

Applying the algorithm of Section 5 to method (38), we find that, for the first derivative of the amplification factor of method (38) to vanish, the following relation must hold:

where is given in Appendix F.

Requiring Equations (66), (68), (70), and (72) to be satisfied simultaneously, we obtain

where the formulae , , , and are in Appendix G.

This novel algorithm has the following features:

The following are the formulae for the Taylor series expansion of the coefficients , , , and :

10. Stability Analysis

10.1. Five-Step Adams–Bashforth Methods

The general five-step methods of Adams–Bashforth type have the following form:

where

The methods developed in Sections 4 and 5, i.e., methods (29) and (36), belong to the family (79).

Applying the scheme (79) to the scalar test equation

we obtain the following difference equation

where and

The characteristic equation of (81) is given by

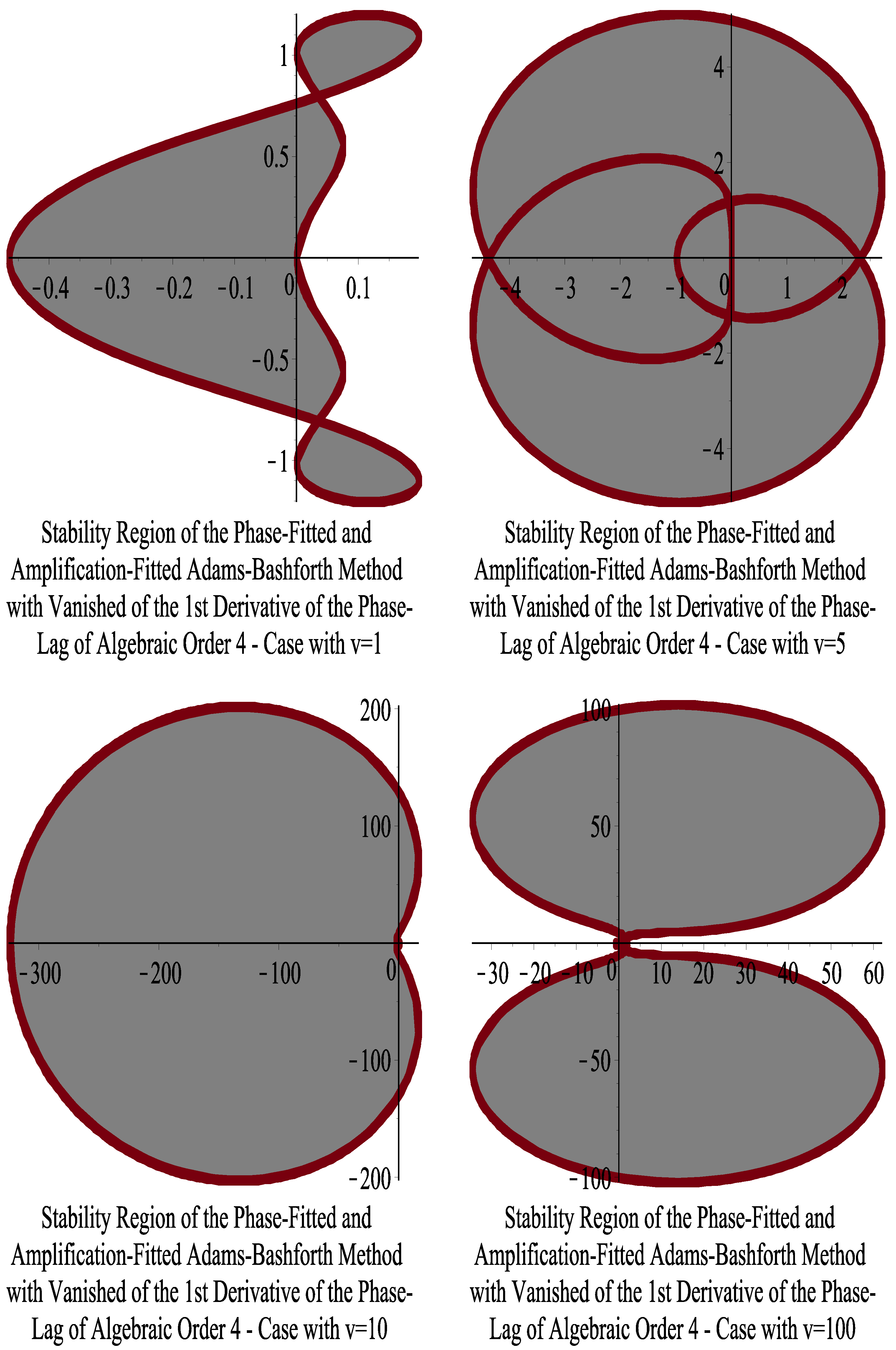

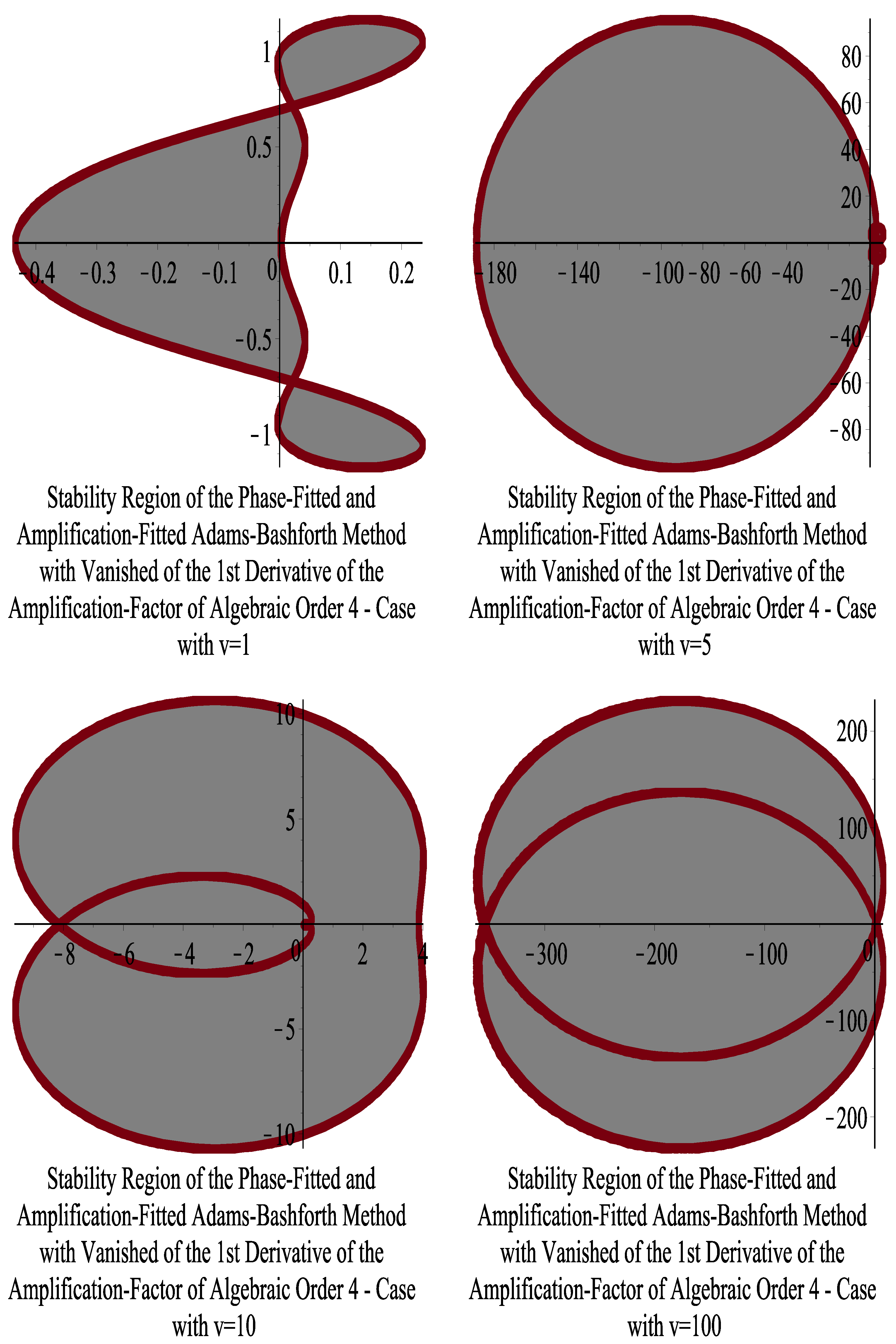

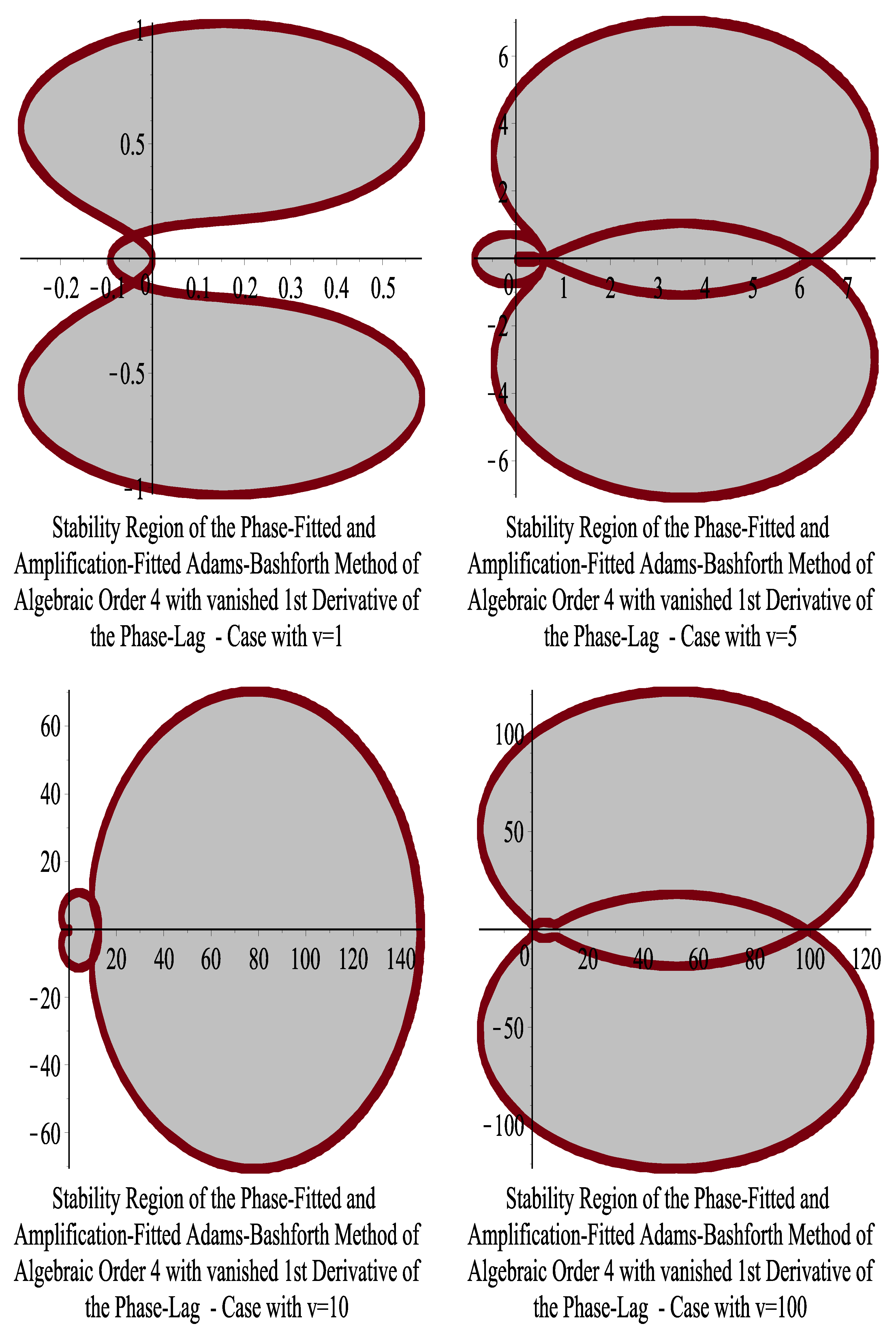

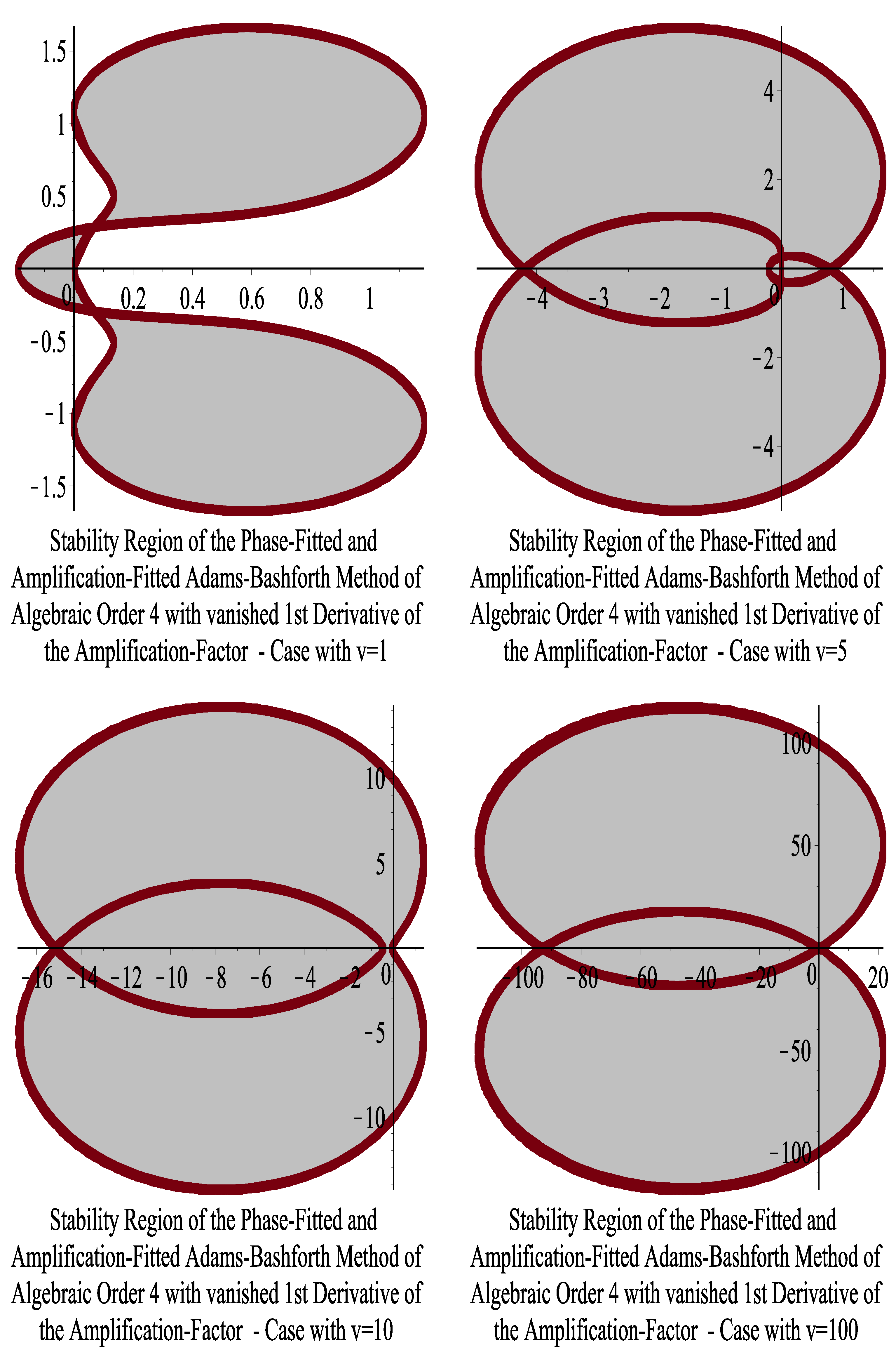

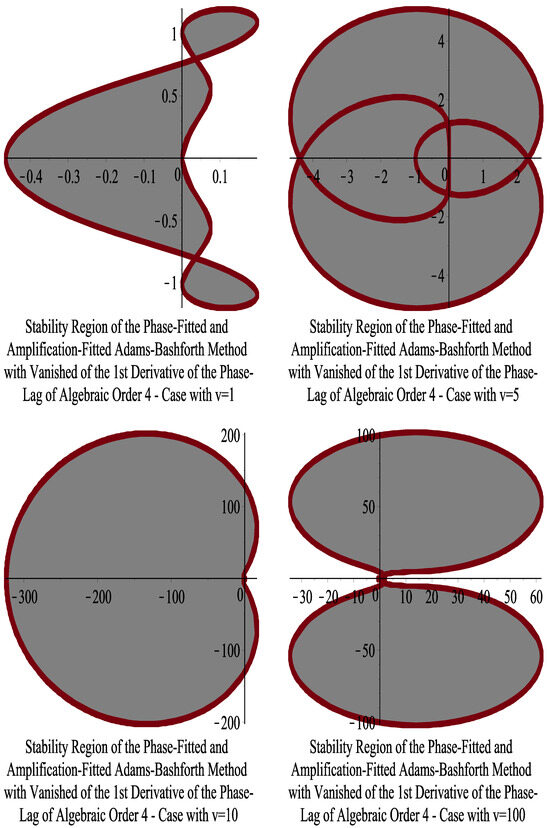

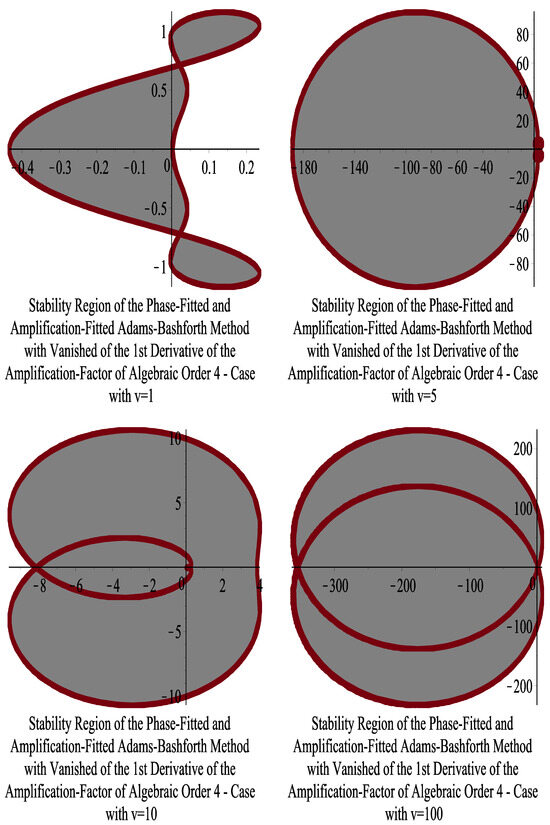

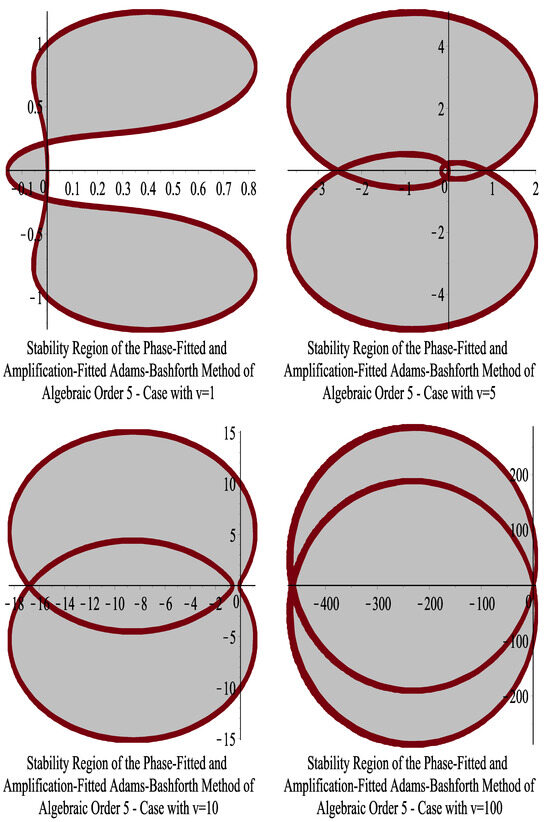

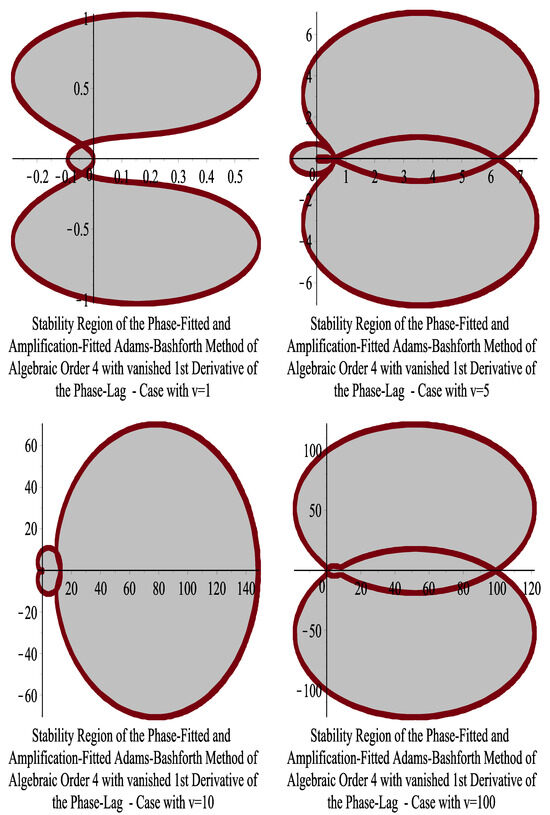

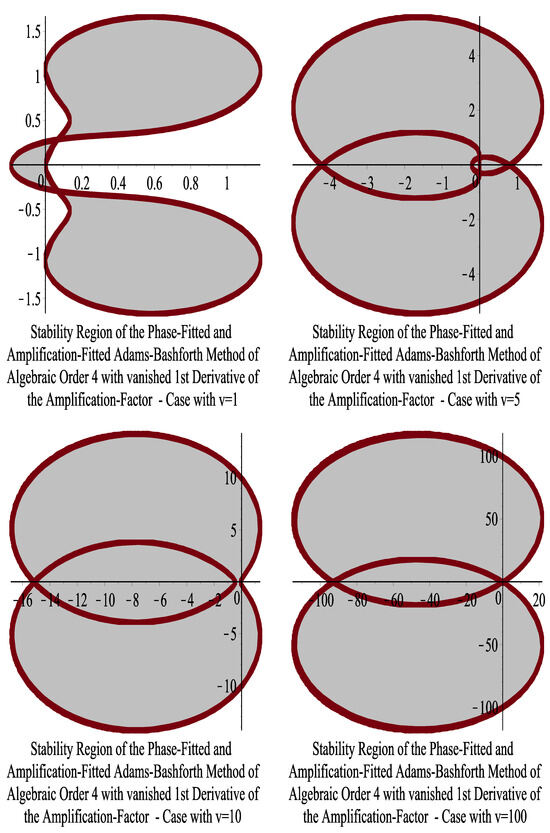

If we solve the above equation for V and replace with , we can draw the stability regions for . Figures 1 and 2 show the stability regions of the methods obtained in Sections 4 and 5. For these cases, we present the stability regions for , , , and .

Figure 1.

Stability region for the Adams–Bashforth method developed in Section 4.

Figure 2.

Stability region for the Adams–Bashforth method developed in Section 5.
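The boundary-locus construction described above can be sketched as follows for methods with constant coefficients, such as the classical Adams–Bashforth methods. The sketch assumes the test equation y' = λy with V = hλ, in which case the characteristic equation reads ρ(ζ) − Vσ(ζ) = 0 and the boundary of the stability region is traced by V = ρ(e^{iθ})/σ(e^{iθ}), θ ∈ [0, 2π]. For the new methods the coefficients depend on v, so the curve would have to be recomputed for each value of v. The function names are illustrative.

```python
import numpy as np

# Classical Adams-Bashforth coefficients b_j in y_{n+1} = y_n + h*sum_j b_j f_{n-j}.
AB2 = np.array([3.0, -1.0]) / 2.0
AB3 = np.array([23.0, -16.0, 5.0]) / 12.0
AB4 = np.array([55.0, -59.0, 37.0, -9.0]) / 24.0


def boundary_locus(b, theta):
    """Points V(theta) = rho(zeta)/sigma(zeta), zeta = exp(i*theta), where
    rho(zeta) = zeta^k - zeta^(k-1) and sigma(zeta) = sum_j b_j zeta^(k-1-j)
    come from applying the method to y' = lambda*y with V = h*lambda."""
    k = len(b)
    zeta = np.exp(1j * np.asarray(theta, dtype=float))
    rho = zeta ** k - zeta ** (k - 1)
    sigma = sum(b[j] * zeta ** (k - 1 - j) for j in range(k))
    return rho / sigma


def is_stable(b, V, tol=1e-12):
    """Root condition: every root of rho(zeta) - V*sigma(zeta) has |zeta| <= 1."""
    k = len(b)
    coeffs = np.zeros(k + 1, dtype=complex)
    coeffs[0], coeffs[1] = 1.0, -1.0
    coeffs[1:] -= V * np.asarray(b, dtype=float)
    return bool(np.all(np.abs(np.roots(coeffs)) <= 1.0 + tol))


if __name__ == "__main__":
    # Evaluating boundary_locus on np.linspace(0, 2*np.pi, 721) and plotting
    # the real part against the imaginary part draws the boundary curve.
    for name, b in (("AB2", AB2), ("AB3", AB3), ("AB4", AB4)):
        print(name, "V(pi) =", boundary_locus(b, np.pi).real)
```

At θ = π the locus gives V = −1, −6/11, and −3/10 for the two-, three-, and four-step methods, the familiar left endpoints of their real stability intervals; `is_stable` checks the root condition directly at a given V.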

10.2. Six-Step Adams–Bashforth Methods

The general six-step methods of Adams–Bashforth type have the following form:

where

The methods developed in Sections 6, 7, 8, and 9, i.e., methods (47), (58), (64), and (77), belong to the family (84).

Applying the scheme (84) to the scalar test Equation (80), we obtain the following difference equation:

where and

The characteristic equation of (85) is given by

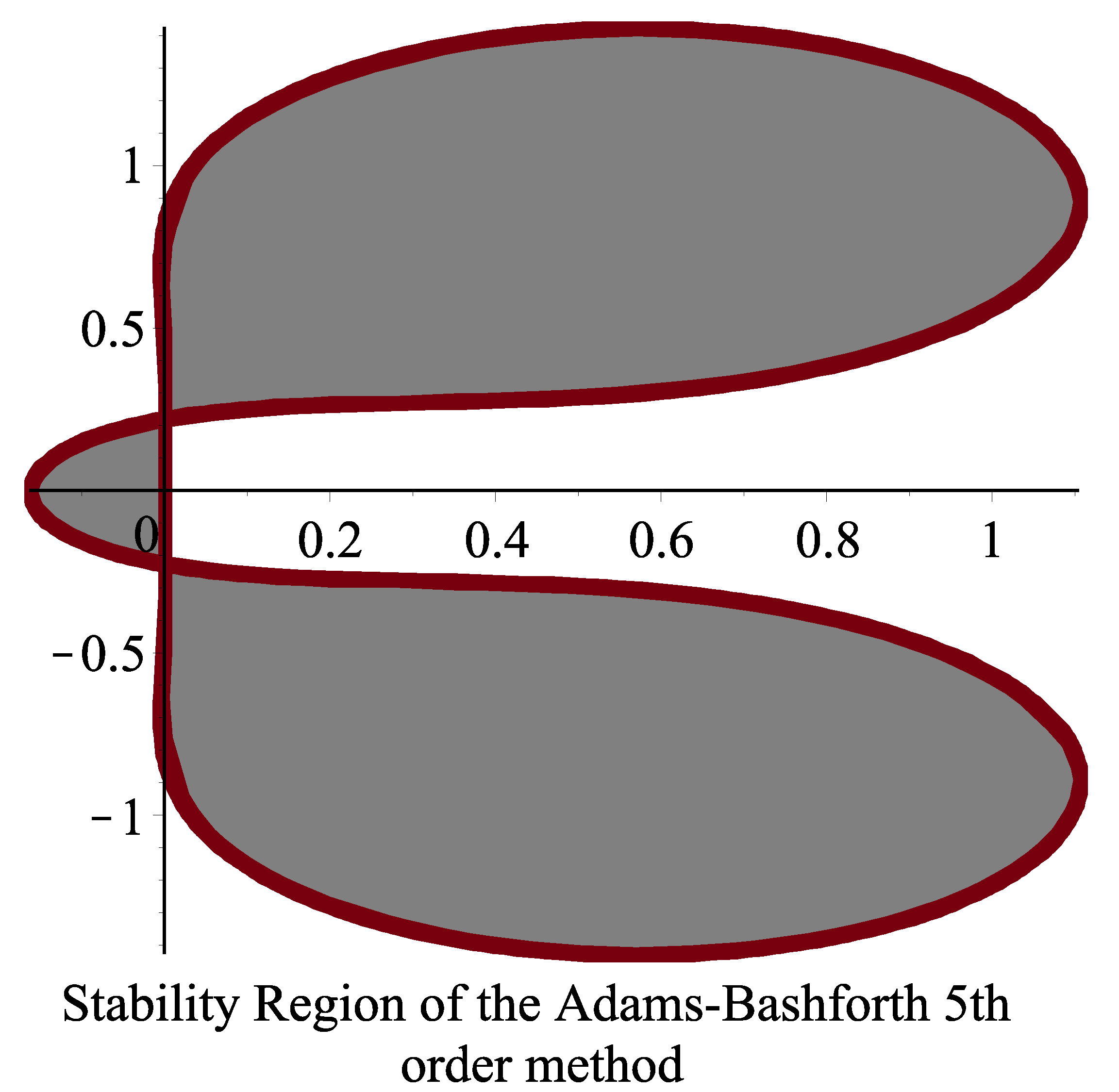

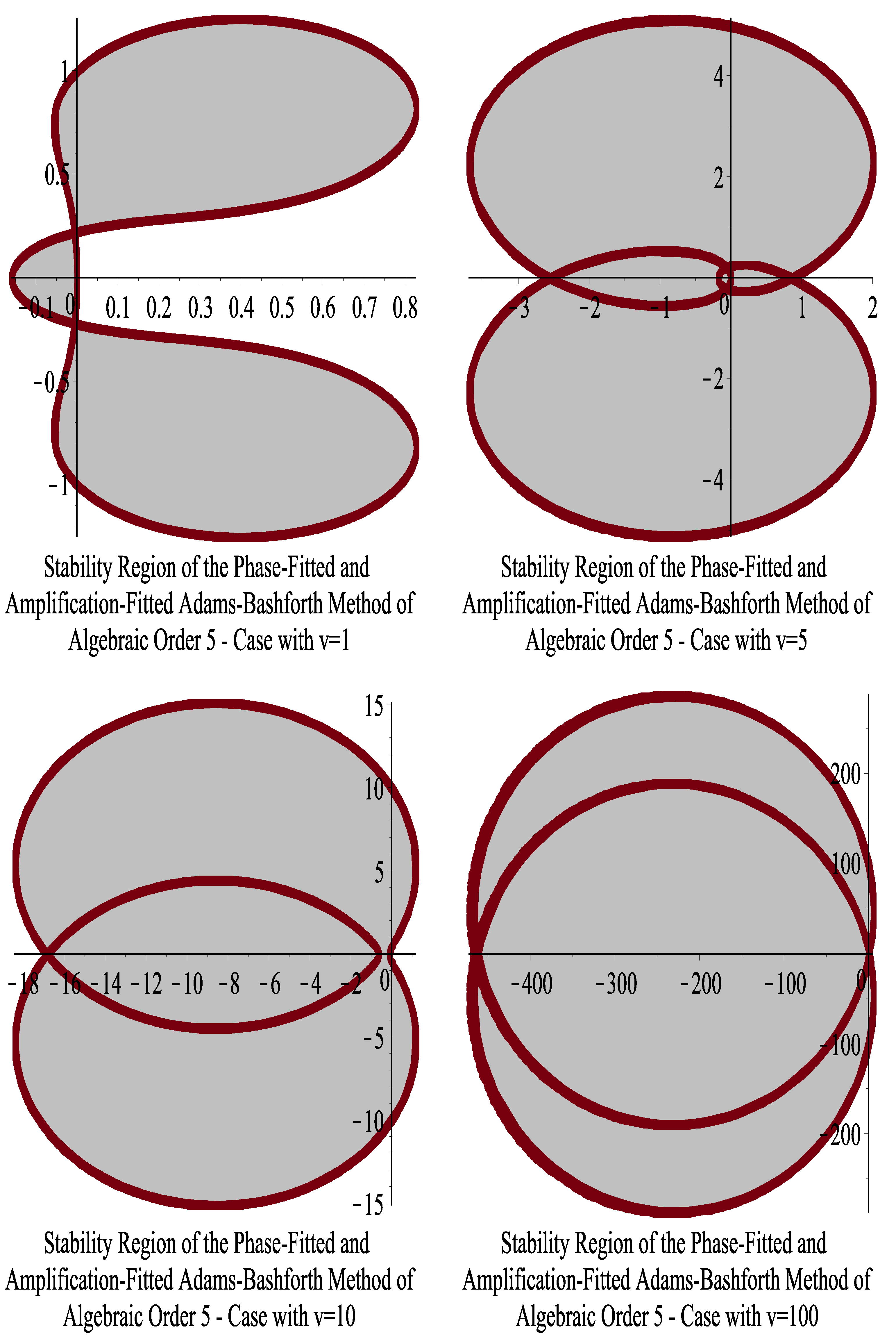

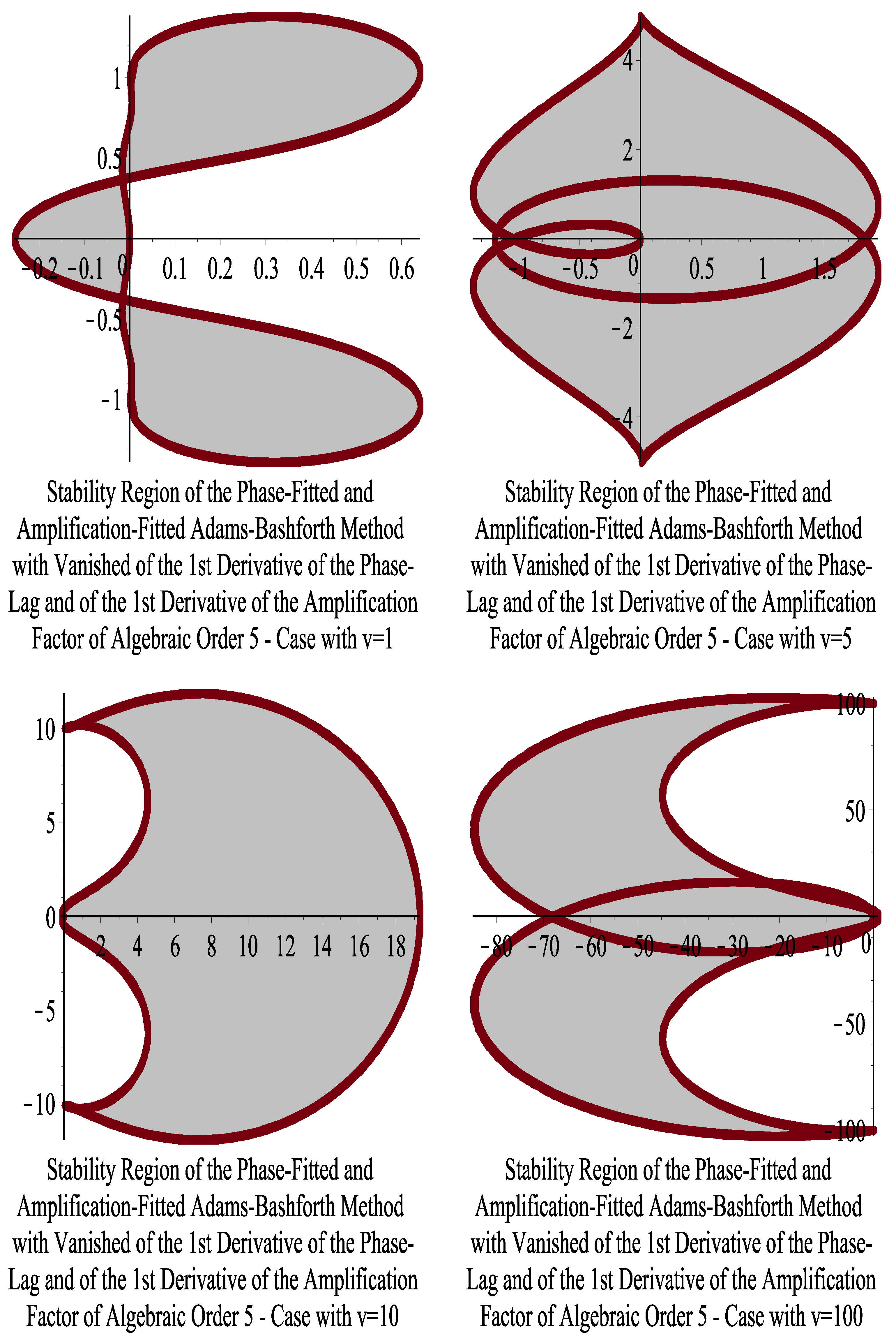

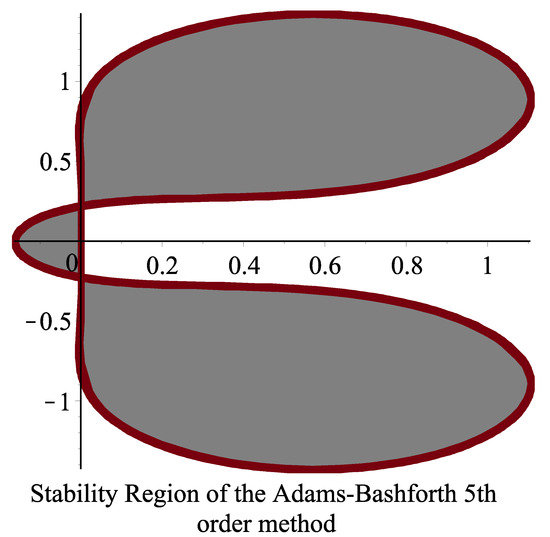

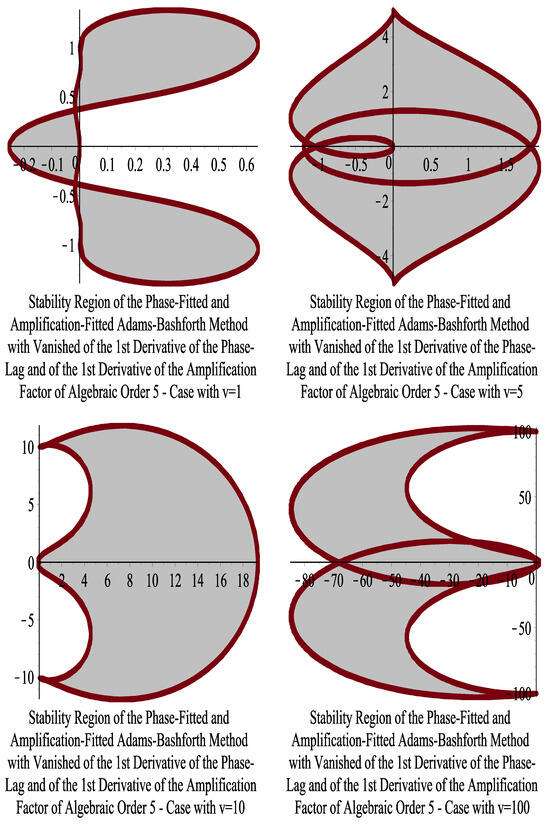

If we solve the above equation for V and replace with , we can draw the stability regions for . Figure 3 shows the stability region of the six-step Adams–Bashforth method with constant coefficients. Figures 4, 5, 6, and 7 show the stability regions of the methods obtained in Sections 6, 7, 8, and 9. For these cases, we present the stability regions for , , , and .

Figure 3.

Stability region for the classical fifth-order Adams–Bashforth method.

Figure 4.

Stability region for the Adams–Bashforth method developed in Section 6.

Figure 5.

Stability region for the Adams–Bashforth method developed in Section 7.

Figure 6.

Stability region for the Adams–Bashforth method developed in Section 8.

Figure 7.

Stability region for the Adams–Bashforth method developed in Section 9.

11. Numerical Results

11.1. Problem of Stiefel and Bettis

We consider the following nearly periodic orbit problem, studied by Stiefel and Bettis [32]:

The exact solution is

For this problem, we use .

Problem (88) has been solved for using the following methods:

- The classical fourth-order Adams–Bashforth method, referred to as Numeric. Proced. I;

- The classical fifth-order Adams–Bashforth method, referred to as Numeric. Proced. II;

- The classical sixth-order Adams–Bashforth method, referred to as Numeric. Proced. III;

- The fourth-order Runge–Kutta method of Dormand and Prince [16], referred to as Numeric. Proced. IV;

- The fifth-order Runge–Kutta method of Dormand and Prince [16], referred to as Numeric. Proced. V;

- The fourth-order Runge–Kutta–Fehlberg method [33], referred to as Numeric. Proced. VI;

- The fifth-order Runge–Kutta–Fehlberg method [33], referred to as Numeric. Proced. VII;

- The fifth-order Runge–Kutta method of Cash and Karp [34], referred to as Numeric. Proced. VIII;

- The phase-fitted and amplification-fitted fourth-order Adams–Bashforth method developed in Section 8 of [30], referred to as Numeric. Proced. IX;

- The phase-fitted and amplification-fitted fourth-order Adams–Bashforth method with the vanished first derivative of the phase lag, developed in Section 4, referred to as Numeric. Proced. X;

- The phase-fitted and amplification-fitted fourth-order Adams–Bashforth method with the vanished first derivative of the amplification factor, developed in Section 5, referred to as Numeric. Proced. XI;

- The phase-fitted and amplification-fitted fifth-order Adams–Bashforth method developed in Section 6, referred to as Numeric. Proced. XII;

- The phase-fitted and amplification-fitted fifth-order Adams–Bashforth method with the vanished first derivative of the phase lag, developed in Section 7, referred to as Numeric. Proced. XIII;

- The phase-fitted and amplification-fitted fifth-order Adams–Bashforth method with the vanished first derivative of the amplification factor, developed in Section 8, referred to as Numeric. Proced. XIV;

- The phase-fitted and amplification-fitted fifth-order Adams–Bashforth method with the vanished first derivative of the phase lag and the vanished first derivative of the amplification factor, developed in Section 9, referred to as Numeric. Proced. XV.
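Since the statement of problem (88) is not reproduced above, the sketch below assumes the form in which the Stiefel–Bettis problem is commonly stated: u'' + u = 0.001 cos x, u(0) = 1, u'(0) = 0, and w'' + w = 0.001 sin x, w(0) = 0, w'(0) = 0.9995, with exact solution u = cos x + 0.0005 x sin x, w = sin x − 0.0005 x cos x. It rewrites the problem as a first-order system and integrates it with the classical fourth-order Adams–Bashforth method (Numeric. Proced. I), using exact starting values, to illustrate how the maximum absolute error is measured. It is a minimal sketch with hypothetical helper names, not the code used to produce Figure 8.

```python
import numpy as np

# Classical fourth-order Adams-Bashforth coefficients (Numeric. Proced. I).
AB4 = np.array([55.0, -59.0, 37.0, -9.0]) / 24.0


def rhs(x, y):
    """First-order form, Y = [u, u', w, w'], of the assumed statement
    u'' + u = 0.001 cos x,  w'' + w = 0.001 sin x."""
    return np.array([y[1], -y[0] + 0.001 * np.cos(x),
                     y[3], -y[2] + 0.001 * np.sin(x)])


def exact(x):
    """Exact u = cos x + 0.0005 x sin x and w = sin x - 0.0005 x cos x,
    together with their first derivatives."""
    s, c = np.sin(x), np.cos(x)
    return np.array([c + 0.0005 * x * s,
                     -s + 0.0005 * s + 0.0005 * x * c,
                     s - 0.0005 * x * c,
                     c - 0.0005 * c + 0.0005 * x * s])


def max_error_ab4(h, x_end):
    """Integrate with AB4 from exact starting values and return the maximum
    absolute error in u and w over the grid points x_4, ..., x_end."""
    n = int(round(x_end / h))
    xs = h * np.arange(n + 1)
    ys = [exact(xs[i]) for i in range(4)]
    fs = [rhs(xs[i], ys[i]) for i in range(4)]
    max_err = 0.0
    for i in range(3, n):
        # y_{i+1} = y_i + h*(55 f_i - 59 f_{i-1} + 37 f_{i-2} - 9 f_{i-3})/24
        y_next = ys[i] + h * sum(AB4[j] * fs[i - j] for j in range(4))
        ys.append(y_next)
        fs.append(rhs(xs[i + 1], y_next))
        err = np.abs(y_next - exact(xs[i + 1]))[[0, 2]]
        max_err = max(max_err, float(err.max()))
    return max_err


if __name__ == "__main__":
    for h in (0.1, 0.05, 0.025):
        print(f"h = {h:5.3f}: max error = {max_error_ab4(h, 10.0):.3e}")
```

Halving h should reduce the maximum error by a factor of about 2^4 = 16, consistent with fourth algebraic order.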

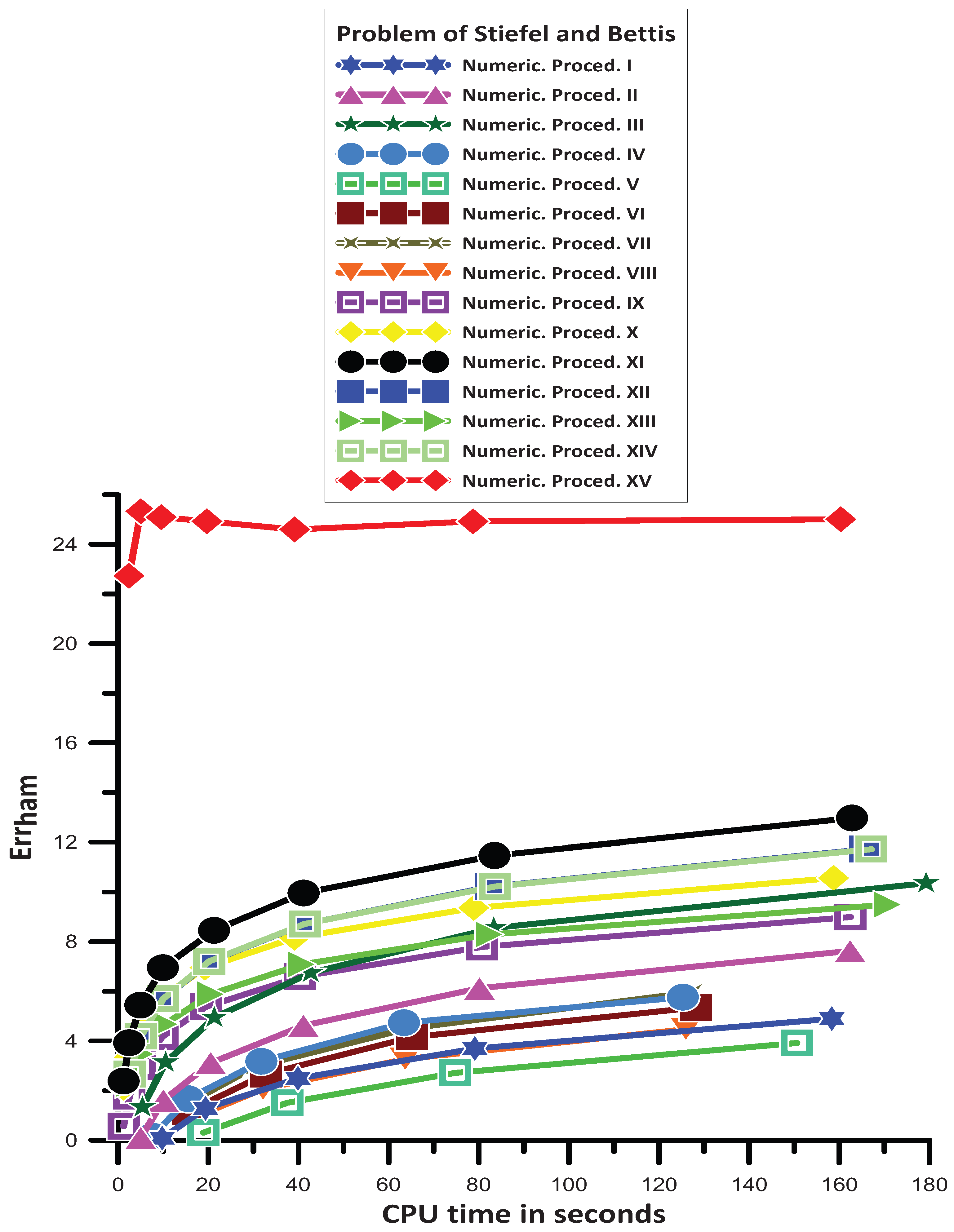

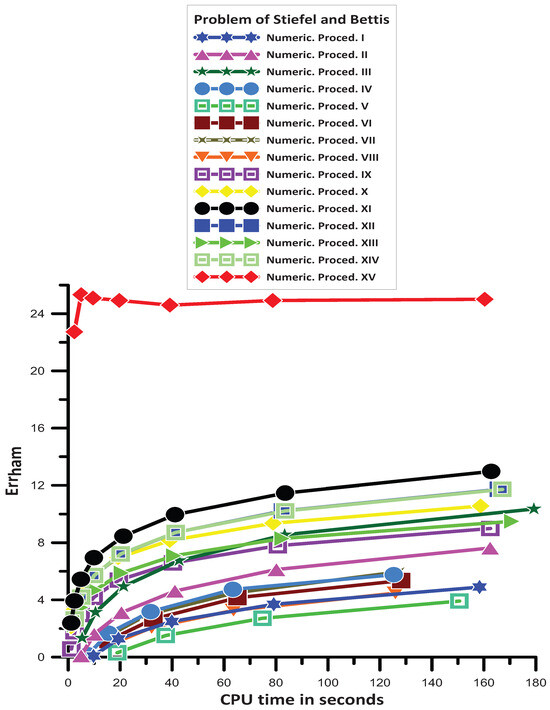

Figure 8 shows the maximum absolute error of the solution obtained by each of the methods listed above for the problem of Stiefel and Bettis [32].

Figure 8.

Numerical results for the problem of Stiefel and Bettis [32].

The information in Figure 8 allows us to see the following:

- Numeric. Proced. I is more efficient than Numeric. Proced. V;

- Numeric. Proced. I and Numeric. Proced. VIII give approximately the same accuracy;

- Numeric. Proced. VI is more efficient than Numeric. Proced. I;

- Numeric. Proced. IV is more efficient than Numeric. Proced. VI;

- Numeric. Proced. IV and Numeric. Proced. VII give approximately the same accuracy;

- Numeric. Proced. II is more efficient than Numeric. Proced. IV;

- Numeric. Proced. III is more efficient than Numeric. Proced. II;

- Numeric. Proced. IX gives mixed results: for large step sizes, it is more efficient than Numeric. Proced. III, while for small step sizes, it is less efficient than Numeric. Proced. III;

- Numeric. Proced. XIII is more efficient than Numeric. Proced. IX;

- Numeric. Proced. X is more efficient than Numeric. Proced. XIII;

- Numeric. Proced. XIV is more efficient than Numeric. Proced. X;

- Numeric. Proced. XII and Numeric. Proced. XIV give approximately the same accuracy;

- Numeric. Proced. XI is more efficient than Numeric. Proced. XIV;

- Finally, Numeric. Proced. XV is the most efficient one.

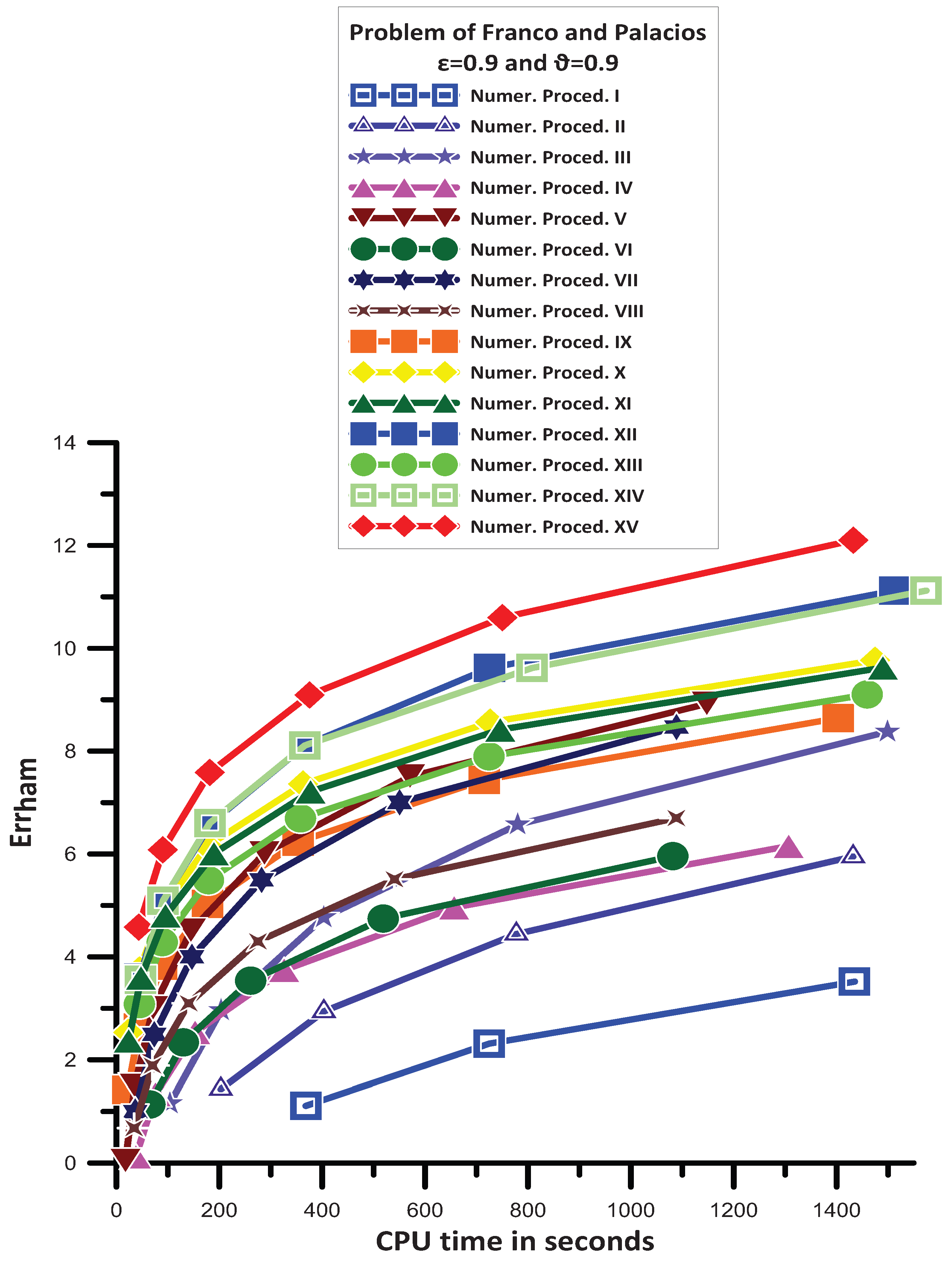

11.2. Problem of Franco and Palacios [35]

We consider the following problem, studied by Franco and Palacios [35]:

The exact solution is

where and . For this problem, we use .

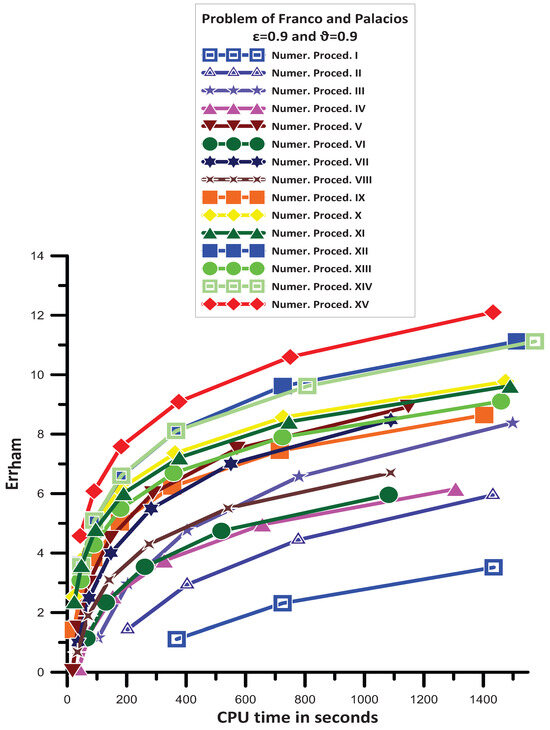

Using the techniques outlined in Section 11.1, the numerical solution to the system of Equations (90) has been found for .

The information in Figure 9 allows us to see the following:

Figure 9.

Numerical results for the problem of Franco and Palacios [35].

- Numeric. Proced. II is more efficient than Numeric. Proced. I;

- Numeric. Proced. IV is more efficient than Numeric. Proced. II;

- Numeric. Proced. IV and Numeric. Proced. VI give approximately the same accuracy;

- Numeric. Proced. III is more efficient than Numeric. Proced. IV;

- Numeric. Proced. VIII gives mixed results: for large step sizes, it is more efficient than Numeric. Proced. III, while for small step sizes, it is less efficient than Numeric. Proced. III;

- Numeric. Proced. VII is more efficient than Numeric. Proced. III and Numeric. Proced. VIII;

- Numeric. Proced. XIII is more efficient than Numeric. Proced. VII;

- Numeric. Proced. IX gives mixed results: for large step sizes, it is more efficient than Numeric. Proced. VII, while for small step sizes, it is less efficient than Numeric. Proced. VII;

- Numeric. Proced. V is more efficient than Numeric. Proced. IX;

- Numeric. Proced. XI is more efficient than Numeric. Proced. V;

- Numeric. Proced. X is more efficient than Numeric. Proced. XI;

- Numeric. Proced. XIV is more efficient than Numeric. Proced. X;

- Numeric. Proced. XII and Numeric. Proced. XIV give approximately the same accuracy;

- Finally, Numeric. Proced. XV is the most efficient one.

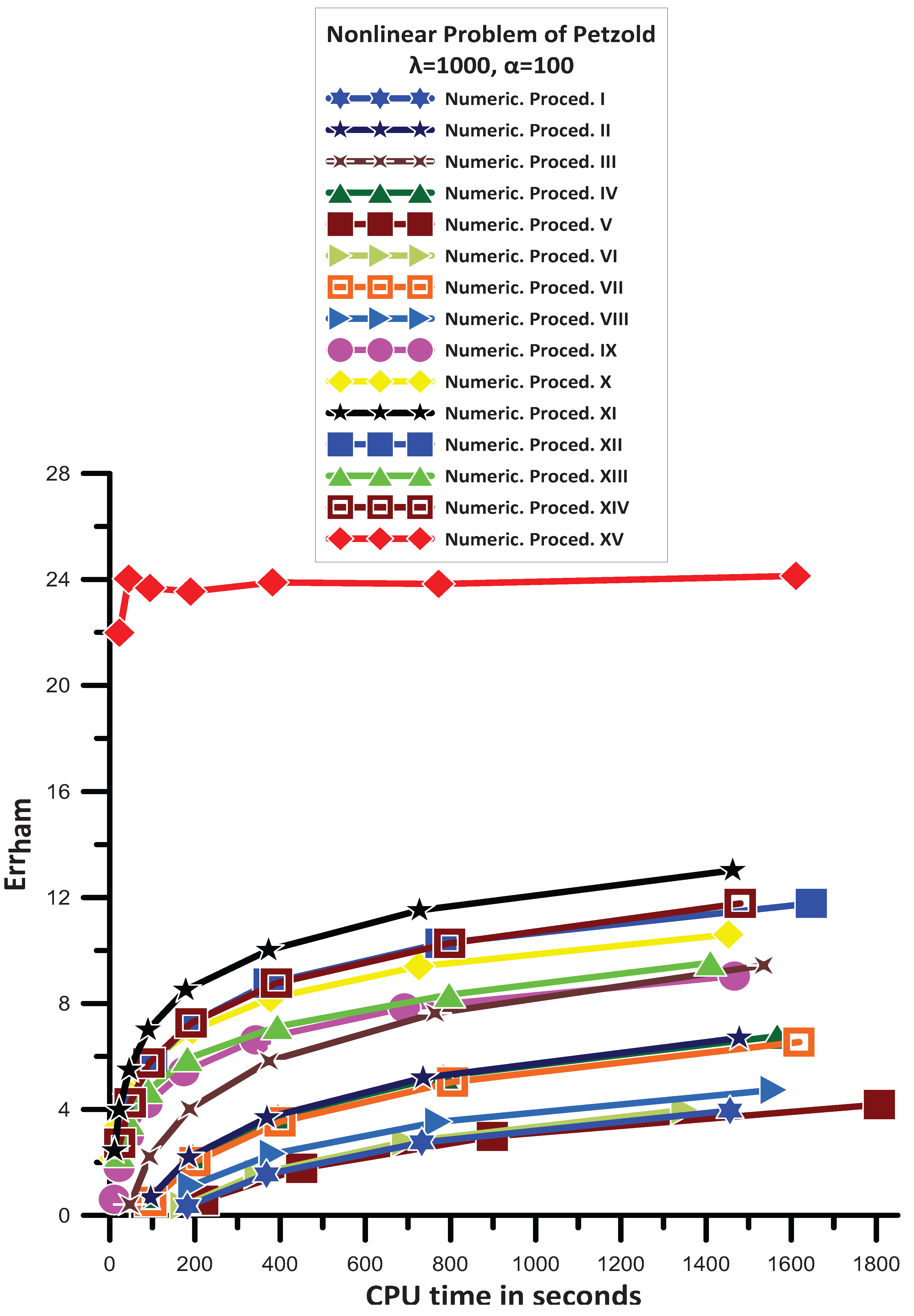

11.3. Nonlinear Problem of Petzold [36]

We consider the following problem, studied by Petzold [36]:

The exact solution is

where , . For this problem, we use .

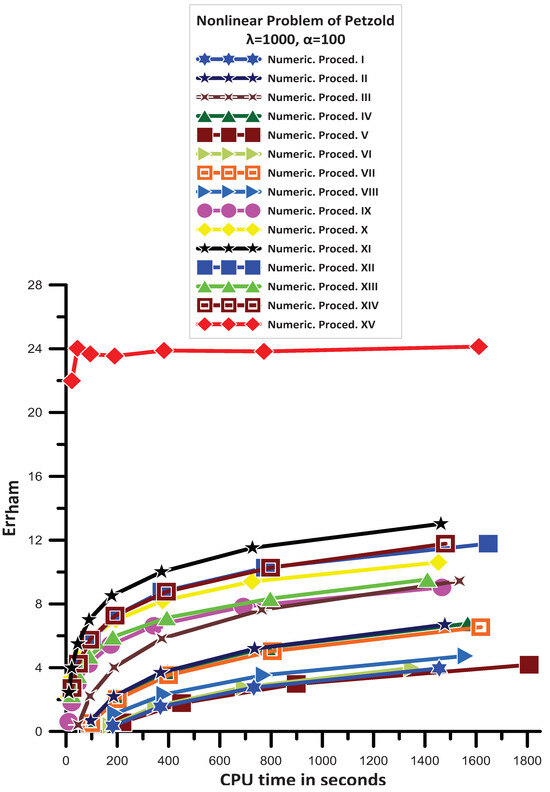

Using the techniques outlined in Section 11.1, the numerical solution to the system of Equation (92) has been found for .

The information in Figure 10 allows us to see the following:

Figure 10.

Numerical results for the nonlinear problem of [36].

- Numeric. Proced. I, Numeric. Proced. V and Numeric. Proced. VI give approximately the same accuracy;

- Numeric. Proced. VIII is more efficient than Numeric. Proced. VI;

- Numeric. Proced. VII is more efficient than Numeric. Proced. VIII;

- Numeric. Proced. II, Numeric. Proced. IV, and Numeric. Proced. VII give approximately the same accuracy;

- Numeric. Proced. III is more efficient than Numeric. Proced. II;

- Numeric. Proced. IX is more efficient than Numeric. Proced. III;

- Numeric. Proced. XIII is more efficient than Numeric. Proced. IX;

- Numeric. Proced. X is more efficient than Numeric. Proced. XIII;

- Numeric. Proced. XII is more efficient than Numeric. Proced. X;

- Numeric. Proced. XII and Numeric. Proced. XIV give approximately the same accuracy;

- Numeric. Proced. XI is more efficient than Numeric. Proced. XIV;

- Finally, Numeric. Proced. XV is the most efficient one.

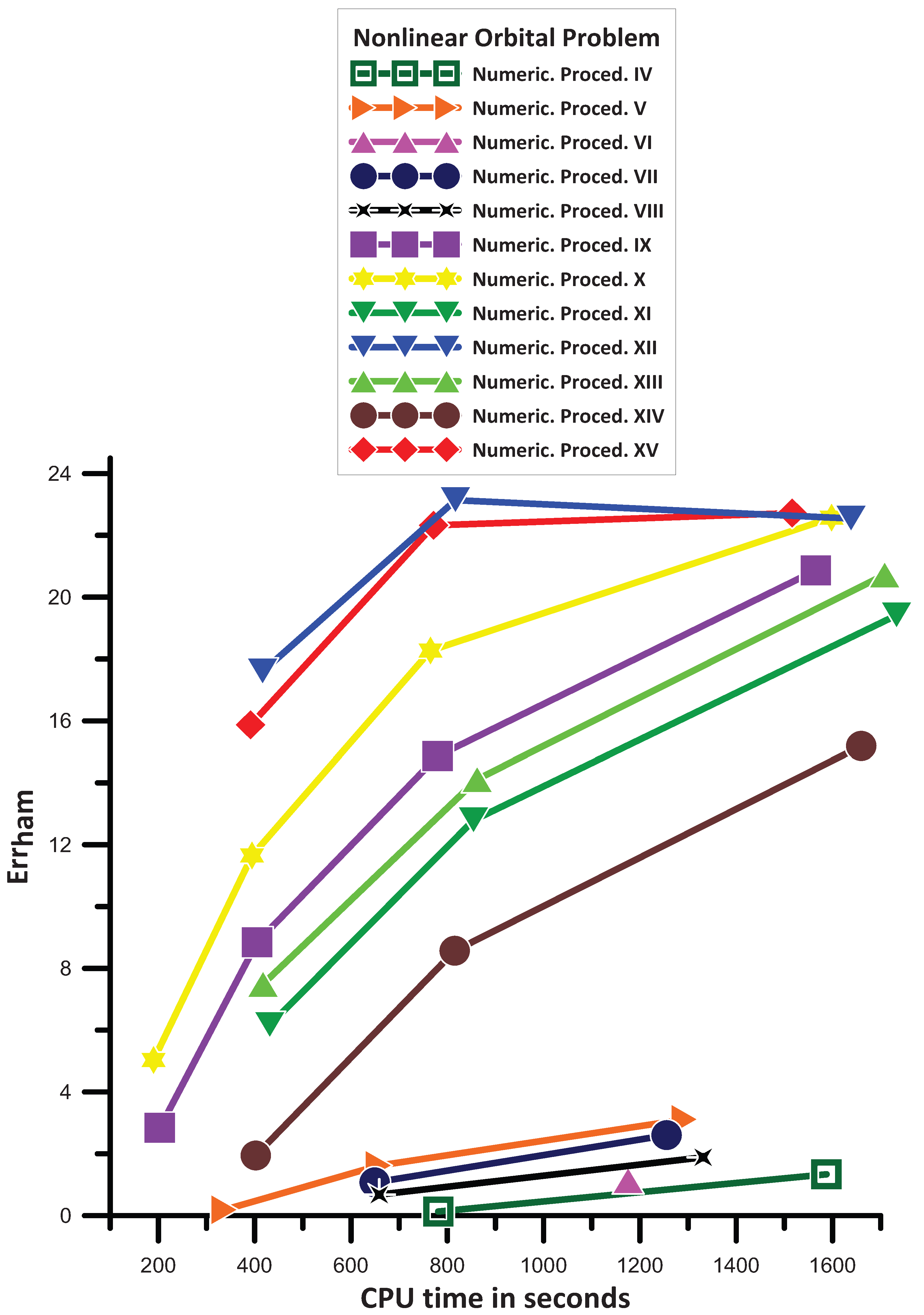

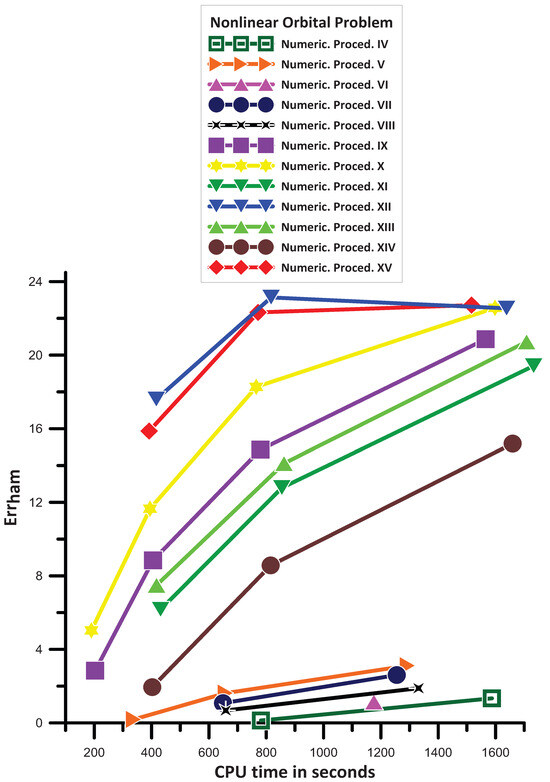

11.4. A Nonlinear Orbital Problem [37]

We consider the following nonlinear orbital problem, studied by Simos in [37]:

The exact solution is

where . For this problem, we use .

Using the techniques outlined in Section 11.1, the numerical solution to the system of Equations (94) has been found for .

The information in Figure 11 allows us to see the following:

Figure 11.

Numerical results for the Nonlinear Orbital problem of [37].

- Numeric. Proced. I, Numeric. Proced. II, and Numeric. Proced. III diverge for this problem;

- Numeric. Proced. VI converges for only one step size, at which it gives the same accuracy as Numeric. Proced. IV;

- Numeric. Proced. VIII is more efficient than Numeric. Proced. IV;

- Numeric. Proced. VII is more efficient than Numeric. Proced. VIII;

- Numeric. Proced. V is more efficient than Numeric. Proced. VII;

- Numeric. Proced. XIV is more efficient than Numeric. Proced. V;

- Numeric. Proced. XI is more efficient than Numeric. Proced. XIV;

- Numeric. Proced. XIII is more efficient than Numeric. Proced. XI;

- Numeric. Proced. IX is more efficient than Numeric. Proced. XIII;

- Numeric. Proced. X is more efficient than Numeric. Proced. IX;

- Numeric. Proced. XV and Numeric. Proced. XII give approximately the same accuracy;

- Finally, Numeric. Proced. XV and Numeric. Proced. XII are the most efficient.

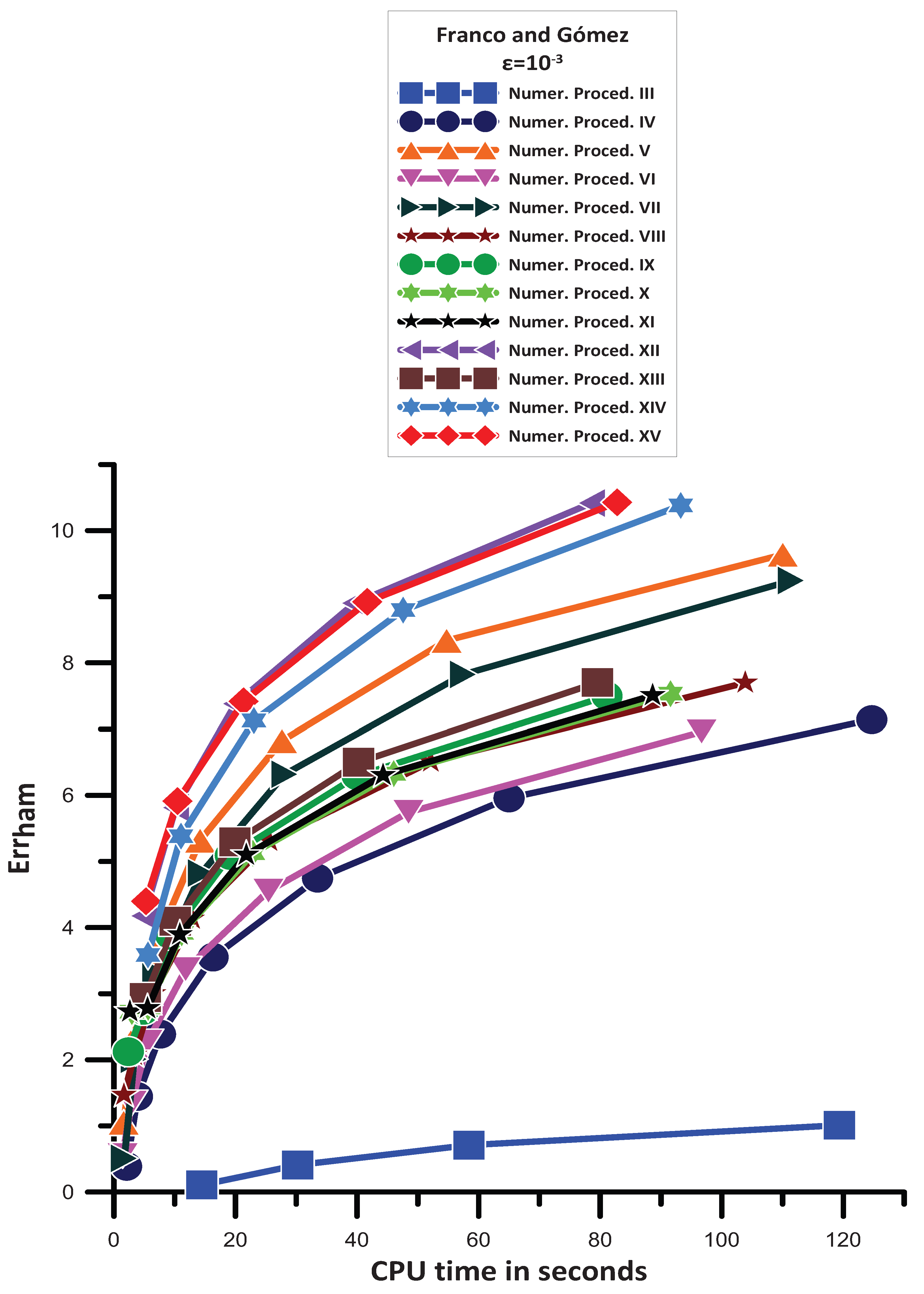

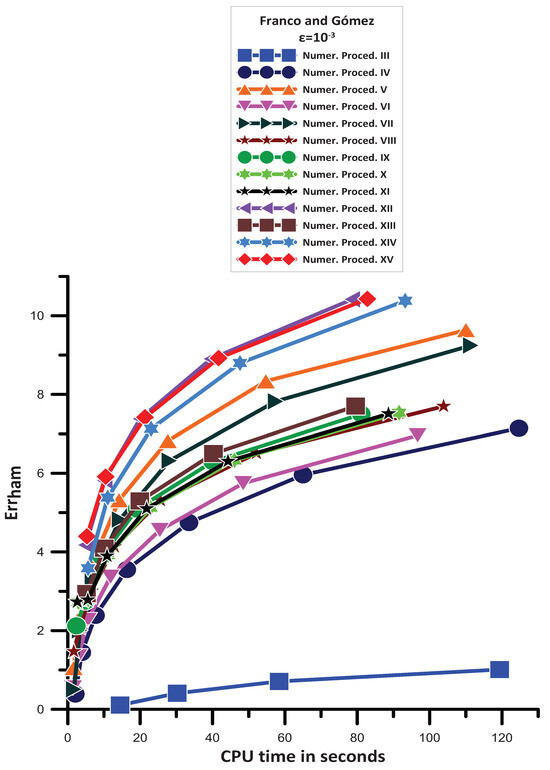

11.5. Problem of Franco and Gómez [38]

We consider the following problem, studied by Franco and Gómez [38]:

The exact solution is

where . For this problem, we use .

Using the techniques outlined in Section 11.1, the numerical solution to the system of Equations (96) has been found for .

The information in Figure 12 allows us to see the following:

Figure 12.

Numerical results for the semi-linear problem of Franco and Gómez [38].

- Numeric. Proced. I and Numeric. Proced. II diverge for this problem;

- Numeric. Proced. IV is more efficient than Numeric. Proced. III;

- Numeric. Proced. VI is more efficient than Numeric. Proced. IV;

- Numeric. Proced. VIII is more efficient than Numeric. Proced. VI;

- Numeric. Proced. VIII, Numeric. Proced. X, and Numeric. Proced. XI give approximately the same accuracy;

- Numeric. Proced. IX is more efficient than Numeric. Proced. XI;

- Numeric. Proced. XIII is more efficient than Numeric. Proced. IX;

- Numeric. Proced. VII is more efficient than Numeric. Proced. XIII;

- Numeric. Proced. V is more efficient than Numeric. Proced. VII;

- Numeric. Proced. XIV is more efficient than Numeric. Proced. V;

- Numeric. Proced. XV is more efficient than Numeric. Proced. XIV;

- Numeric. Proced. XII and Numeric. Proced. XV give approximately the same accuracy, and they are the most efficient.

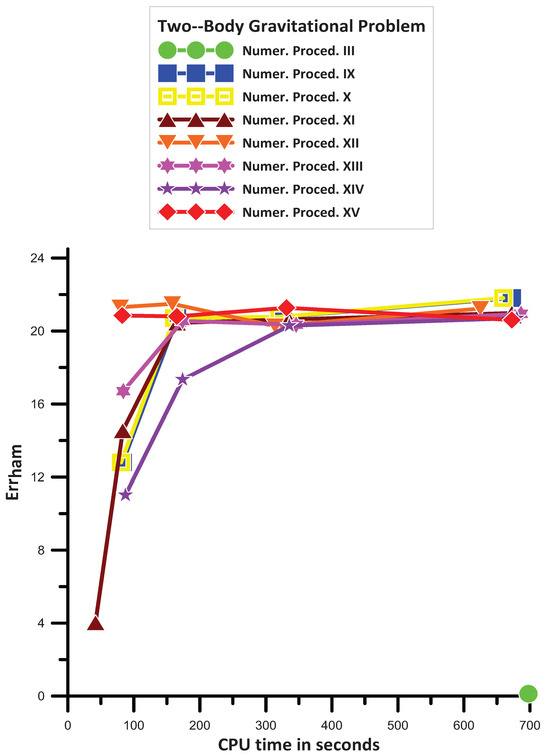

11.6. Two-Body Gravitational Problem

We consider the two-body gravitational problem:

The exact solution is

For this problem, we use .

Using the techniques outlined in Section 11.1, the numerical solution to the system of Equations (98) has been found for .

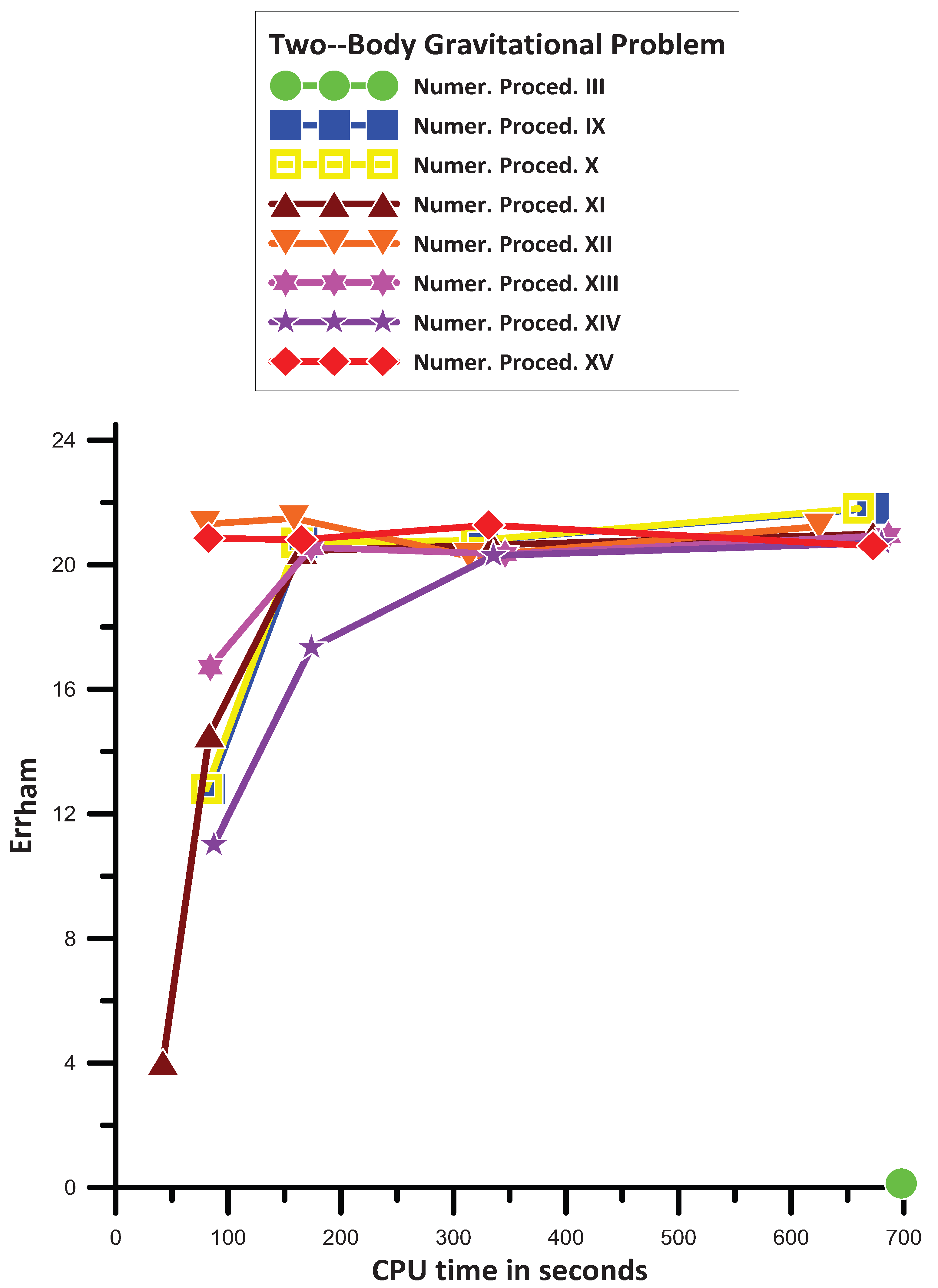

The information in Figure 13 allows us to see the following:

Figure 13.

Numerical results for two-body gravitational problem (Kepler’s plane problem).

- Numeric. Proced. I, Numeric. Proced. II, Numeric. Proced. IV, Numeric. Proced. V, Numeric. Proced. VI, Numeric. Proced. VII, and Numeric. Proced. VIII diverge for this problem.

- Numeric. Proced. III converges, but it gives only one result, of low accuracy.

- Numeric. Proced. IX, Numeric. Proced. X, Numeric. Proced. XI, Numeric. Proced. XII, Numeric. Proced. XIII, Numeric. Proced. XIV, and Numeric. Proced. XV give approximately the same accuracy for small step sizes. The accuracy given by these methods is high.

- For large step sizes, we have the following remarks:

  - Numeric. Proced. IX and Numeric. Proced. X are more efficient than Numeric. Proced. XIV;

  - Numeric. Proced. XI is more efficient than Numeric. Proced. IX and Numeric. Proced. X;

  - Numeric. Proced. XIII is more efficient than Numeric. Proced. XI;

  - Numeric. Proced. XV is more efficient than Numeric. Proced. XIII;

  - Numeric. Proced. XII is more efficient than Numeric. Proced. XV.

- Based on the above, Numeric. Proced. IX, Numeric. Proced. X, Numeric. Proced. XI, Numeric. Proced. XII, Numeric. Proced. XIII, Numeric. Proced. XIV, and Numeric. Proced. XV are the most efficient for small step sizes. For large step sizes, Numeric. Proced. XII is the most efficient.

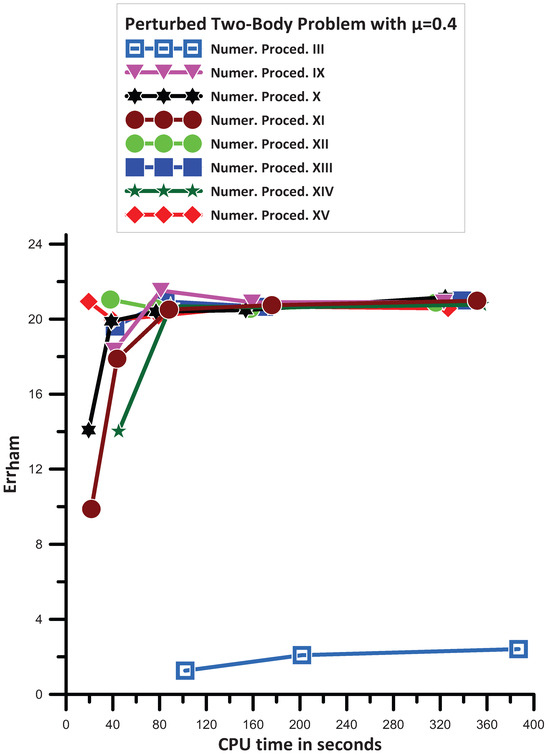

11.7. Perturbed Two-Body Gravitational Problem-Case

We consider the perturbed two-body Kepler problem:

The exact solution is

For this problem, we use .

Using the techniques outlined in Section 11.1, the numerical solution to the system of Equation (100) has been found for with

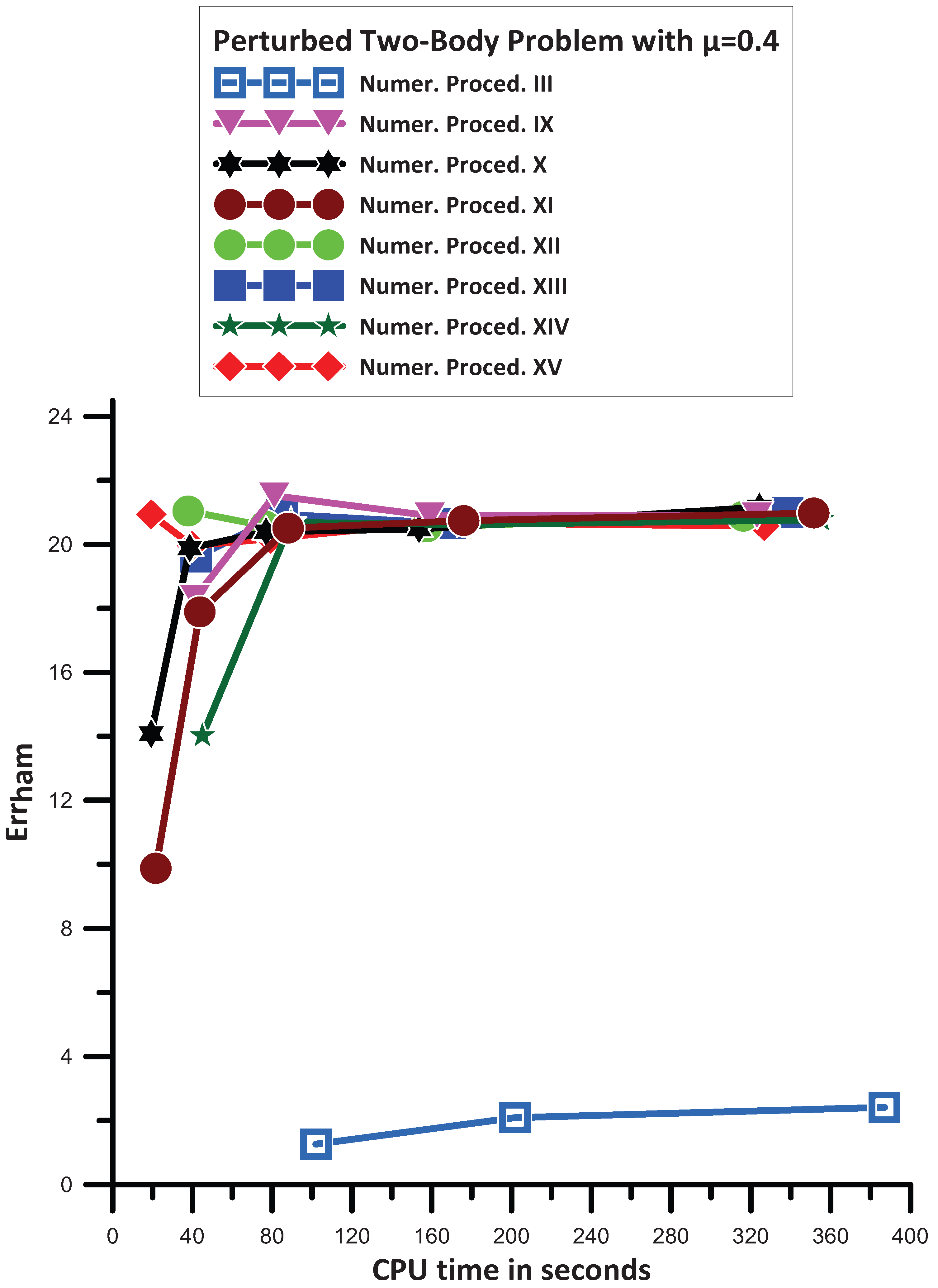

The information in Figure 14 allows us to see the following:

Figure 14.

Numerical results for perturbed two-body gravitational problem (perturbed Kepler’s problem) with .

- Numeric. Proced. I, Numeric. Proced. II, Numeric. Proced. IV, Numeric. Proced. V, Numeric. Proced. VI, Numeric. Proced. VII, and Numeric. Proced. VIII diverge for this problem;

- Numeric. Proced. III converges, but its results are of low accuracy;

- Numeric. Proced. IX, Numeric. Proced. X, Numeric. Proced. XI, Numeric. Proced. XII, Numeric. Proced. XIII, Numeric. Proced. XIV, and Numeric. Proced. XV give approximately the same accuracy. The accuracy given by these methods is high, even for large step sizes.

- For large step sizes, we have the following remarks:

  - Numeric. Proced. XI is more efficient than Numeric. Proced. XIV;

  - Numeric. Proced. IX is more efficient than Numeric. Proced. XI;

  - Numeric. Proced. X is more efficient than Numeric. Proced. IX;

  - Numeric. Proced. XIII and Numeric. Proced. X give approximately the same accuracy;

  - Numeric. Proced. XII is more efficient than Numeric. Proced. XIII;

  - Numeric. Proced. XV is more efficient than Numeric. Proced. XII.

- Based on the above, Numeric. Proced. IX, Numeric. Proced. X, Numeric. Proced. XI, Numeric. Proced. XII, Numeric. Proced. XIII, Numeric. Proced. XIV, and Numeric. Proced. XV are the most efficient for small step sizes. For large step sizes, Numeric. Proced. XV is the most efficient.

Taking all the results into consideration, the most efficient methodologies presented here are the following:

- The methodology of Section 9, which combines the vanishing of the phase lag and of the amplification factor with the elimination of their first derivatives.

- The methodology of Section 6, which is based on the vanishing of the phase lag and of the amplification factor (the phase-fitted and amplification-fitted method).

- The methodology of Section 7, which combines the vanishing of the phase lag and of the amplification factor (the phase-fitted and amplification-fitted method) with the elimination of the first derivative of the phase lag.

- The methodology of Section 8, which combines the vanishing of the phase lag and of the amplification factor (the phase-fitted and amplification-fitted method) with the elimination of the first derivative of the amplification factor.

The efficiency of frequency-dependent methods, such as the ones proposed here, depends on finding the optimal value of the parameter v. For many problems, this choice follows directly from the problem model. For cases in which it is not straightforward, methods for determining the parameter v have been proposed in the literature (see [39,40]).
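When the frequency is not known from the model, one crude heuristic, shown here only as an illustration and not as one of the methods of [39,40], is to estimate the dominant angular frequency ω from the spectrum of a sampled trial solution and then set v = ωh. The sketch assumes uniformly spaced samples; its accuracy is limited by the angular-frequency resolution 2π/(NΔx) of the discrete Fourier transform.

```python
import numpy as np


def dominant_angular_frequency(x, y):
    """Crude estimate of the dominant angular frequency omega of samples y
    taken on a uniform grid x: position of the largest non-zero FFT peak."""
    dx = x[1] - x[0]
    spectrum = np.abs(np.fft.rfft(y - np.mean(y)))
    freqs = np.fft.rfftfreq(len(y), d=dx)   # in cycles per unit of x
    peak = 1 + np.argmax(spectrum[1:])      # skip the zero-frequency bin
    return 2.0 * np.pi * freqs[peak]


if __name__ == "__main__":
    x = np.linspace(0.0, 20.0 * np.pi, 2000, endpoint=False)
    y = np.sin(3.0 * x) + 0.2 * np.sin(7.0 * x)
    omega = dominant_angular_frequency(x, y)
    h = 0.1  # illustrative step length
    print("omega ~", omega, " v = omega*h ~", omega * h)
```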

11.8. High-Order Ordinary Differential Equations and Partial Differential Equations

When the newly introduced techniques are applied to systems of higher-order ordinary differential equations, it is important to remember that there are well-established ways of reducing such systems to systems of first-order differential equations, for example by substituting variables or by introducing a new variable for each derivative (see [41] for more information).
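As a minimal sketch of the standard reduction (a hypothetical helper, not taken from [41]), an n-th order equation y^(n) = g(x, y, y', …, y^(n−1)) becomes the first-order system Y' = F(x, Y) with Y = [y, y', …, y^(n−1)], which a first-order multistep method could then integrate.

```python
import numpy as np


def to_first_order(g):
    """Turn y^(n) = g(x, y, y', ..., y^(n-1)) into Y' = F(x, Y) with
    Y = [y, y', ..., y^(n-1)]: the derivative of each component is the next
    component, and the derivative of the last component is given by g."""
    def F(x, Y):
        Y = np.asarray(Y, dtype=float)
        return np.append(Y[1:], g(x, *Y))
    return F


if __name__ == "__main__":
    # Illustrative oscillator y'' = -4 y, i.e. omega = 2.
    F = to_first_order(lambda x, y, yp: -4.0 * y)
    print(F(0.0, [1.0, 0.0]))
```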

Similarly, there are well-established methods for reducing a system of partial differential equations to a system of first-order ordinary differential equations, such as the method of characteristics (see [42]); the resulting systems can then be solved with the newly introduced techniques.

12. Conclusions

Our goal in this work was to examine how the derivatives of the phase lag and of the amplification factor affect the efficiency of numerical methods. To this end, we laid out several approaches to the construction of efficient methods based on the derivatives of the phase lag and/or of the amplification factor. In particular, we established procedures for the following:

- A methodology based on the elimination of the derivatives of the phase lag;

- A methodology based on the elimination of the derivatives of the amplification factor;

- A methodology based on the elimination of both the derivatives of the phase lag and the derivatives of the amplification factor.
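To make the quantities behind these methodologies concrete, the sketch below computes, for the classical (non-fitted) Adams–Bashforth methods, one common textbook form of the phase lag and the amplification error. The method is applied to the test equation y' = iωy with v = ωh; r1 is the principal root of the resulting characteristic polynomial, φ(v) = v - arg r1 and a(v) = 1 - |r1|. These definitions, the sign convention, and the central-difference derivative are assumptions for illustration; they are not the direct formulae of Appendix A, and all function names are hypothetical.

```python
import cmath

# Classical Adams-Bashforth weights for f_n, f_{n-1}, ... (factor h removed).
AB4 = [55/24, -59/24, 37/24, -9/24]
AB5 = [1901/720, -2774/720, 2616/720, -1274/720, 251/720]

def principal_root(beta, v, iters=50):
    """Principal root of p(r) = r^k - r^(k-1) - z * sum_j beta[j] * r^(k-1-j),
    z = i*v, i.e. the method applied to y' = i*omega*y with v = omega*h.
    Newton's method starts at exp(i*v), which the principal root approximates."""
    k = len(beta)
    z = 1j * v
    coeffs = [1.0] + [0.0] * k          # highest degree first
    coeffs[1] -= 1.0
    for j, b in enumerate(beta):
        coeffs[1 + j] -= z * b
    r = cmath.exp(z)
    for _ in range(iters):
        p = dp = 0
        for c in coeffs:                # Horner's scheme for p(r) and p'(r)
            dp = dp * r + p
            p = p * r + c
        step = p / dp
        r -= step
        if abs(step) < 1e-14:
            break
    return r

def phase_lag(beta, v):
    # Phase error of r1 relative to the exact one-step factor exp(i*v).
    return v - cmath.phase(principal_root(beta, v))

def amplification_error(beta, v):
    # Deviation of |r1| from 1; the exact solution has unit modulus.
    return 1.0 - abs(principal_root(beta, v))

def derivative(fn, beta, v, d=1e-4):
    # Central finite difference of phase_lag or amplification_error in v.
    return (fn(beta, v + d) - fn(beta, v - d)) / (2.0 * d)

for name, beta in (("AB4", AB4), ("AB5", AB5)):
    print(name, phase_lag(beta, 0.1), amplification_error(beta, 0.1),
          derivative(phase_lag, beta, 0.1))
```

For the classical methods these quantities shrink like powers of v but do not vanish; the methodologies listed above instead force the phase lag, the amplification factor and selected derivatives to vanish at the chosen v.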

Using these methodologies, we constructed several multistep methods, taking the fourth- and fifth-algebraic-order Adams–Bashforth methods as the base methods.
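For reference, the sketch below implements only the classical, constant-coefficient Adams–Bashforth methods of orders four and five that serve as base methods; the newly developed methods replace these weights with v-dependent ones, which are not reproduced here. It assumes exact starting values (in practice a one-step method would supply them) and uses the harmonic oscillator y'' = -ω²y, written as a first-order system, as an illustrative oscillatory test problem. All names are hypothetical.

```python
import math

# Classical Adams-Bashforth weights for f_n, f_{n-1}, ... (factor h removed).
AB4 = [55/24, -59/24, 37/24, -9/24]
AB5 = [1901/720, -2774/720, 2616/720, -1274/720, 251/720]

def adams_bashforth(f, t0, starts, h, n_steps, beta):
    """Integrate y' = f(t, y) with an explicit k-step Adams-Bashforth method.

    starts: the k starting vectors y_0, ..., y_{k-1}; beta: weights for
    f_n, f_{n-1}, ...  Returns y_{n_steps} (requires n_steps >= k - 1).
    """
    k = len(beta)
    ys = [list(y) for y in starts]
    fs = [f(t0 + i * h, ys[i]) for i in range(k)]  # f_0, ..., f_{k-1}
    y = ys[-1]
    for n in range(k - 1, n_steps):
        # y_{n+1} = y_n + h * sum_j beta_j * f_{n-j}
        y = [y[i] + h * sum(b * fs[-1 - j][i] for j, b in enumerate(beta))
             for i in range(len(y))]
        fs.append(f(t0 + (n + 1) * h, y))
        fs.pop(0)  # keep only the last k derivative values
    return y

def error_at(beta, h, t_end=10.0, omega=1.0):
    """Error at t_end for y1' = y2, y2' = -omega^2 * y1 with exact starts."""
    f = lambda t, y: [y[1], -omega**2 * y[0]]
    exact = lambda t: [math.cos(omega * t), -omega * math.sin(omega * t)]
    n = round(t_end / h)
    k = len(beta)
    y = adams_bashforth(f, 0.0, [exact(i * h) for i in range(k)], h, n, beta)
    return math.dist(y, exact(n * h))

print(error_at(AB4, 0.01), error_at(AB4, 0.005), error_at(AB5, 0.01))
```

Halving h should reduce the AB4 error by roughly 2^4 = 16 on this problem, reflecting fourth-order convergence, while AB5 is more accurate at the same step size.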

To evaluate the efficiency of these methodologies, we applied them to several problems with oscillating solutions.

All calculations conformed to IEEE Standard 754 and were carried out on a personal computer with an x compatible architecture, using a quadruple-precision arithmetic data type consisting of 64 bits.

Funding

This research received no external funding.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The author declares no conflicts of interest.

Appendix A. Direct Formulae for the Calculation of the Derivatives of the Phase Lag and the Amplification Factor

Appendix A.1. Direct Formula for the Derivative of the Phase Lag

Appendix A.2. Direct Formula for the Derivative of the Amplification Factor

Appendix B. Formula

Appendix C. Formulae , , and

Appendix D. Formula

Appendix E. Formulae , , and

Appendix F. Formula

Appendix G. Formulae , , , and

References

- Landau, L.D.; Lifshitz, E.M. Quantum Mechanics; Pergamon: New York, NY, USA, 1965.

- Prigogine, I.; Rice, S.A. (Eds.) Advances in Chemical Physics Vol. 93: New Methods in Computational Quantum Mechanics; John Wiley & Sons: Hoboken, NJ, USA, 1997.

- Simos, T.E. Numerical Solution of Ordinary Differential Equations with Periodical Solution. Ph.D. Dissertation, National Technical University of Athens, Athens, Greece, 1990. (In Greek).

- Ixaru, L.G. Numerical Methods for Differential Equations and Applications; Book Series: Mathematics and Its Applications; Springer: Dordrecht, The Netherlands, 1984; ISBN 978-90-277-1597-5.

- Quinlan, G.D.; Tremaine, S. Symmetric multistep methods for the numerical integration of planetary orbits. Astron. J. 1990, 100, 1694–1700.

- Lyche, T. Chebyshevian multistep methods for ordinary differential equations. Numer. Math. 1972, 10, 65–75.

- Konguetsof, A.; Simos, T.E. On the construction of Exponentially-Fitted Methods for the Numerical Solution of the Schrödinger Equation. J. Comput. Meth. Sci. Eng. 2001, 1, 143–165.

- Simos, T.E. Atomic Structure Computations in Chemical Modelling: Applications and Theory; Hinchliffe, A., UMIST, Eds.; The Royal Society of Chemistry: Washington, DC, USA, 2000; pp. 38–142.

- Simos, T.E.; Vigo-Aguiar, J. On the construction of efficient methods for second order IVPs with oscillating solution. Int. J. Mod. Phys. C 2001, 10, 1453–1476.

- Dormand, J.R.; El-Mikkawy, M.E.A.; Prince, P.J. Families of Runge-Kutta-Nyström formulae. IMA J. Numer. Anal. 1987, 7, 235–250.

- Franco, J.M.; Gomez, I. Some procedures for the construction of high-order exponentially fitted Runge-Kutta-Nyström Methods of explicit type. Comput. Phys. Commun. 2013, 184, 1310–1321.

- Franco, J.M.; Gomez, I. Accuracy and linear Stability of RKN Methods for solving second-order stiff problems. Appl. Numer. Math. 2009, 59, 959–975.

- Chien, L.K.; Senu, N.; Ahmadian, A.; Ibrahim, S.N.I. Efficient Frequency-Dependent Coefficients of Explicit Improved Two-Derivative Runge-Kutta Type Methods for Solving Third-Order IVPs. Pertanika J. Sci. Technol. 2023, 31, 843–873.

- Zhai, W.J.; Fu, S.H.; Zhou, T.C.; Xiu, C. Exponentially-fitted and trigonometrically-fitted implicit RKN methods for solving y'' = f(t, y). J. Appl. Math. Comput. 2022, 68, 1449–1466.

- Fang, Y.L.; Yang, Y.P.; You, X. An explicit trigonometrically fitted Runge-Kutta method for stiff and oscillatory problems with two frequencies. Int. J. Comput. Math. 2020, 97, 85–94.

- Dormand, J.R.; Prince, P.J. A family of embedded Runge-Kutta formulae. J. Comput. Appl. Math. 1980, 6, 19–26.

- Kalogiratou, Z.; Monovasilis, T.; Psihoyios, G.; Simos, T.E. Runge–Kutta type methods with special properties for the numerical integration of ordinary differential equations. Phys. Rep. 2014, 536, 75–146.

- Anastassi, Z.A.; Simos, T.E. Numerical multistep methods for the efficient solution of quantum mechanics and related problems. Phys. Rep. 2009, 482–483, 1–240.

- Chawla, M.M.; Rao, P.S. A Noumerov-Type Method with Minimal Phase-Lag for the Integration of 2nd Order Periodic Initial-Value Problems. J. Comput. Appl. Math. 1984, 11, 277–281.

- Ixaru, L.Gr.; Rizea, M. A Numerov-like scheme for the numerical solution of the Schrödinger equation in the deep continuum spectrum of energies. Comput. Phys. Commun. 1980, 19, 23–27.

- Raptis, A.D.; Allison, A.C. Exponential-fitting Methods for the numerical solution of the Schrödinger equation. Comput. Phys. Commun. 1978, 14, 1–5.

- Wang, Z.; Zhao, D.; Dai, Y.; Wu, D. An improved trigonometrically fitted P-stable Obrechkoff Method for periodic initial-value problems. Proc. R. Soc.-Math. Phys. Eng. Sci. 2005, 461, 1639–1658.

- Wang, C.; Wang, Z. A P-stable eighteenth-order six-Step Method for periodic initial value problems. Int. J. Mod. Phys. C 2007, 18, 419–431.

- Shokri, A.; Khalsaraei, M.M. A new family of explicit linear two-step singularly P-stable Obrechkoff methods for the numerical solution of second-order IVPs. Appl. Math. Comput. 2020, 376, 125116.

- Abdulganiy, R.I.; Ramos, H.; Okunuga, S.A.; Majid, Z.A. A trigonometrically fitted intra-step block Falkner method for the direct integration of second-order delay differential equations with oscillatory solutions. Afr. Mat. 2023, 34, 36.

- Lee, K.C.; Senu, N.; Ahmadian, A.; Ibrahim, S.N.I. High-order exponentially fitted and trigonometrically fitted explicit two-derivative Runge-Kutta-type methods for solving third-order oscillatory problems. Math. Sci. 2022, 16, 281–297.

- Fang, Y.L.; Huang, T.; You, X.; Zheng, J.; Wang, B. Two-frequency trigonometrically-fitted and symmetric linear multi-step methods for second-order oscillators. J. Comput. Appl. Math. 2021, 392, 113312.

- Chun, C.; Neta, B. Trigonometrically-Fitted Methods: A Review. Mathematics 2019, 7, 1197.

- Simos, T.E. A New Methodology for the Development of Efficient Multistep Methods for First–Order IVPs with Oscillating Solutions. Mathematics 2024, 12, 504.

- Simos, T.E. Efficient Multistep Algorithms for First–Order IVPs with Oscillating Solutions: II Implicit and Predictor–Corrector Algorithms. Symmetry 2024, 16, 508.

- Saadat, H.; Kiyadeh, S.H.H.; Karim, R.G.; Safaie, A. Family of phase fitted 3-step second-order BDF methods for solving periodic and orbital quantum chemistry problems. J. Math. Chem. 2024, 62, 1223–1250.

- Stiefel, E.; Bettis, D.G. Stabilization of Cowell’s method. Numer. Math. 1969, 13, 154–175.

- Fehlberg, E. Classical Fifth-, Sixth-, Seventh-, and Eighth-order Runge-Kutta Formulas with Stepsize Control. NASA Technical Report 287 (1968). Available online: https://ntrs.nasa.gov/api/citations/19680027281/downloads/19680027281.pdf (accessed on 12 December 2022).

- Cash, J.R.; Karp, A.H. A variable order Runge–Kutta method for initial value problems with rapidly varying right-hand sides. ACM Trans. Math. Softw. 1990, 16, 201–222.

- Franco, J.M.; Palacios, M. High-order P-stable multistep methods. J. Comput. Appl. Math. 1990, 30, 1–10.

- Petzold, L.R. An efficient numerical method for highly oscillatory ordinary differential equations. SIAM J. Numer. Anal. 1981, 18, 455–479.

- Simos, T.E. New Open Modified Newton Cotes Type Formulae as Multilayer Symplectic Integrators. Appl. Math. Model. 2013, 37, 1983–1991.

- Franco, J.; Gómez, I. Trigonometrically–fitted nonlinear two–step methods for solving second order oscillatory IVPs. Appl. Math. Comput. 2014, 232, 643–657.

- Ramos, H.; Vigo-Aguiar, J. On the frequency choice in trigonometrically fitted methods. Appl. Math. Lett. 2010, 23, 1378–1381.

- Ixaru, L.G.; Berghe, G.V.; De Meyer, H. Frequency evaluation in exponential fitting multistep algorithms for ODEs. J. Comput. Appl. Math. 2002, 140, 423–434.

- Boyce, W.E.; DiPrima, R.C.; Meade, D.B. Elementary Differential Equations and Boundary Value Problems, 11th ed.; John Wiley & Sons: Hoboken, NJ, USA, 2017.

- Evans, L.C. Partial Differential Equations, 2nd ed.; American Mathematical Society: Providence, RI, USA, 2010; Chapter 3; pp. 91–135.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).