2.1. 1D Convolutional Codes

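Definition 1. An $(n,k)$ convolutional code $\mathcal{C}$ is an $\mathbb{F}[z]$-submodule of $\mathbb{F}[z]^n$ of rank $k$, where $\mathbb{F}$ denotes the underlying finite field. A matrix $G(z) \in \mathbb{F}[z]^{n \times k}$ whose columns constitute a basis of $\mathcal{C}$ is called a generator matrix of $\mathcal{C}$, i.e.,

$$\mathcal{C} = \operatorname{Im}_{\mathbb{F}[z]} G(z) = \{ G(z)u(z) : u(z) \in \mathbb{F}[z]^k \}.$$

The vector $v(z) = G(z)u(z)$ is the codeword corresponding to the information sequence $u(z)$.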

Given a convolutional code $\mathcal{C}$, two generator matrices of this code, $G_1(z)$ and $G_2(z)$, are said to be equivalent. They differ by right multiplication by a unimodular matrix $U(z)$ (a $k \times k$ invertible polynomial matrix with a polynomial inverse or, equivalently, a $k \times k$ polynomial matrix with determinant in $\mathbb{F} \setminus \{0\}$), i.e., $G_2(z) = G_1(z)U(z)$.

A convolutional code is non-catastrophic when it admits a right prime generator matrix $G(z)$, i.e., if $G(z) = \bar{G}(z)X(z)$ with $\bar{G}(z) \in \mathbb{F}[z]^{n \times k}$ and $X(z) \in \mathbb{F}[z]^{k \times k}$, then $X(z)$ must be a unimodular matrix. A polynomial matrix is said to be left prime if its transpose is right prime. Note that, since two generator matrices of a convolutional code differ by right multiplication by a unimodular matrix, if a code admits a right prime generator matrix, then all its generator matrices are right prime, and the code is called non-catastrophic.

The degree $\delta$ of an $(n,k)$ convolutional code $\mathcal{C}$ is the maximum degree of the full-size minors of any generator matrix of $\mathcal{C}$. Note that the degree can be computed with any generator matrix since, as noted above, two generator matrices of a code differ by right multiplication by a unimodular matrix. A matrix $G(z) \in \mathbb{F}[z]^{n \times k}$ is said to be column-reduced if the sum of its column degrees is equal to the maximum degree of its full-size minors. A polynomial matrix is said to be row-reduced if its transpose is column-reduced. An $(n,k)$ convolutional code with degree $\delta$ is said to be an $(n,k,\delta)$ convolutional code.

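Another important matrix associated with some convolutional codes $\mathcal{C}$ is the (full row rank) $(n-k) \times n$ parity-check matrix, denoted by $H(z)$. This matrix is the generator matrix of the dual code of $\mathcal{C}$; therefore, we can generate $\mathcal{C}$ as its kernel as follows:

$$\mathcal{C} = \ker_{\mathbb{F}[z]} H(z) = \{ v(z) \in \mathbb{F}[z]^n : H(z)v(z) = 0 \}.$$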

The parity-check matrix plays a central role in the decoding process for block codes and convolutional codes. Although there is always a parity-check matrix for a block code, this is not the case for convolutional codes. In [11], it was shown that a convolutional code admits a parity-check matrix if and only if it is non-catastrophic. Any non-catastrophic convolutional code admits a left prime and row-reduced parity-check matrix [12].

When transmitting information through erasure channels, we may lose some parts of the information. Convolutional codes have been proven to be good for communication over these channels [13].

In [13], a decoding algorithm for these kinds of channels was presented; it is based on solving linear systems of equations. Consider that we receive the codeword

, and that

are correct (have no erasures) but the coefficients afterward may have erasures. Let

be a parity-check matrix of the code. We define the matrix as follows:

Then,

. By reordering these equations we can obtain a linear system of the form

where

and

are the columns of

and the coordinates of

that correspond with the erasures, respectively; and

, where

and

are analogously defined concerning the correctly received information. Therefore, we can correct the erasures by solving a linear system with as many unknowns as erasures in the received codeword. Note that we have considered only part of the coefficients of

, i.e., the coefficients

. We refer to this sequence as a

window, and we say that it is a window of

size.
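The recovery step described above, moving the correctly received symbols to the right-hand side and solving for the erased ones, can be sketched as follows. This is a minimal illustration under stated assumptions rather than the algorithm of [13]: the field is taken to be a prime field GF(p), the constant matrix `H` stands in for the matrix built from the coefficients of the parity-check matrix, and the names `solve_mod_p` and `recover_erasures` are hypothetical.

```python
def solve_mod_p(A, b, p):
    """Solve A x = b over GF(p), p prime, by Gauss-Jordan elimination.

    Returns the unique solution as a list, or None when the system is
    inconsistent or does not determine x uniquely.
    """
    rows, cols = len(A), len(A[0])
    # Augmented matrix [A | b], reduced modulo p.
    M = [[a % p for a in row] + [bi % p] for row, bi in zip(A, b)]
    pivot_row = 0
    for c in range(cols):
        pr = next((r for r in range(pivot_row, rows) if M[r][c]), None)
        if pr is None:
            return None  # no pivot in this column: solution is not unique
        M[pivot_row], M[pr] = M[pr], M[pivot_row]
        inv = pow(M[pivot_row][c], -1, p)  # modular inverse (Python 3.8+)
        M[pivot_row] = [(x * inv) % p for x in M[pivot_row]]
        for r in range(rows):
            if r != pivot_row and M[r][c]:
                f = M[r][c]
                M[r] = [(x - f * y) % p for x, y in zip(M[r], M[pivot_row])]
        pivot_row += 1
    if any(M[r][cols] for r in range(pivot_row, rows)):
        return None  # a leftover row reads 0 = nonzero: inconsistent
    return [M[i][cols] for i in range(cols)]


def recover_erasures(H, v, p):
    """Fill the erased entries (None) of v so that H v = 0 over GF(p).

    H is a constant matrix (list of rows); v is the received window.
    Returns the completed window, or None if the erasures are not
    uniquely determined by the known symbols.
    """
    erased = [i for i, x in enumerate(v) if x is None]
    known = [i for i, x in enumerate(v) if x is not None]
    if not erased:
        return list(v)
    # Columns of H at the erased positions form the coefficient matrix;
    # the known symbols are moved to the right-hand side.
    A = [[row[i] for i in erased] for row in H]
    b = [(-sum(row[i] * v[i] for i in known)) % p for row in H]
    x = solve_mod_p(A, b, p)
    if x is None:
        return None
    out = list(v)
    for i, xi in zip(erased, x):
        out[i] = xi
    return out
```

The system has as many unknowns as erasures, so recovery succeeds exactly when the columns of `H` at the erased positions are linearly independent; otherwise the sketch returns `None`.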

Notice that, in order to correct the erasures produced in a codeword, we need a preceding safe space where all coefficients have been correctly recovered. In the construction of the system (2), the

vectors,

—which were previous to

and the first coefficient of

with some erasure—conformed to this safe space.

Once we have a method for correcting the erasures produced by a channel, we may want to know the correction capability that a code can achieve. This capability is described in terms of the distances of the code. For convolutional codes, there exist two different distances: the free distance and the column distance. The Hamming weight of a vector $v(z) = \sum_{i} v_i z^i \in \mathbb{F}[z]^n$ is given by the expression

$$\operatorname{wt}(v(z)) = \sum_{i} \operatorname{wt}(v_i),$$

where $\operatorname{wt}(v_i)$ is the number of non-zero coordinates of $v_i$.
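As a small illustration of this weight, the sketch below counts the non-zero coordinates over all coefficient vectors. The representation of a polynomial vector as a list of its coefficient vectors and the name `hamming_weight` are assumptions made for the example.

```python
def hamming_weight(v):
    """Hamming weight of a polynomial vector.

    v is given as the list [v_0, v_1, ...] of its coefficient vectors;
    the weight is the total number of non-zero coordinates.
    """
    return sum(1 for coeff in v for x in coeff if x != 0)
```

For instance, a vector with coefficients `[1, 0, 2]`, `[0, 0, 0]`, and `[0, 3, 0]` has weight 3.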

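Definition 2. Let $\mathcal{C}$ be an $(n,k,\delta)$ convolutional code, then the free distance of $\mathcal{C}$ is

$$d_{\mathrm{free}}(\mathcal{C}) = \min \{ \operatorname{wt}(v(z)) : v(z) \in \mathcal{C},\ v(z) \neq 0 \}.$$

The next theorem establishes an upper bound on the free distance of a convolutional code, and it is called the generalized Singleton bound.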

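Theorem 1 ([14]). Let $\mathcal{C}$ be an $(n,k,\delta)$ convolutional code. Then,

$$d_{\mathrm{free}}(\mathcal{C}) \leq (n-k)\left(\left\lfloor \frac{\delta}{k} \right\rfloor + 1\right) + \delta + 1.$$

The free distance of a convolutional code determines its correction capability for errors and erasures once the whole codeword has been received.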
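The generalized Singleton bound of [14], $(n-k)(\lfloor \delta/k \rfloor + 1) + \delta + 1$, is easy to evaluate for given parameters. The helper below is only a convenience sketch; the function name is hypothetical.

```python
def generalized_singleton_bound(n, k, delta):
    """Upper bound on the free distance of an (n, k, delta) convolutional code."""
    return (n - k) * (delta // k + 1) + delta + 1
```

For $\delta = 0$ the expression reduces to $n - k + 1$, the Singleton bound for block codes.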

An advantage of convolutional codes over block codes is that they permit partial decoding, i.e., we can start recovering erasures even though we do not have the complete codeword. The capability of this kind of correction is measured by the column distances, which are defined for non-catastrophic convolutional codes [15].

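Given a vector $v(z) = \sum_{i} v_i z^i \in \mathbb{F}[z]^n$ and $j_1, j_2$ with $j_1 \leq j_2$, we define the truncation of $v(z)$ to the interval $[j_1, j_2]$ as $v_{[j_1,j_2]}(z) = v_{j_1} z^{j_1} + \cdots + v_{j_2} z^{j_2}$.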

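Definition 3. Let $\mathcal{C}$ be an $(n,k,\delta)$ non-catastrophic convolutional code, then the $j$-th column distance of $\mathcal{C}$ is defined by

$$d_j^c(\mathcal{C}) = \min \{ \operatorname{wt}(v_{[0,j]}(z)) : v(z) \in \mathcal{C},\ v_0 \neq 0 \}.$$

The following inequalities can be directly deduced from the previous definitions [15]:

$$d_0^c \leq d_1^c \leq \cdots \leq \lim_{j \to \infty} d_j^c = d_{\mathrm{free}}(\mathcal{C}) \leq (n-k)\left(\left\lfloor \frac{\delta}{k} \right\rfloor + 1\right) + \delta + 1. \tag{3}$$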

In addition to the free distance having a bound, the column distances also have a Singleton-like bound, which is given by the following theorem.

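Theorem 2 ([15]). Let $\mathcal{C}$ be an $(n,k,\delta)$ non-catastrophic convolutional code. Then, for $j \in \mathbb{N}_0$, we have

$$d_j^c(\mathcal{C}) \leq (n-k)(j+1) + 1.$$

Moreover, if $d_j^c(\mathcal{C}) = (n-k)(j+1) + 1$ for some $j \in \mathbb{N}_0$, then $d_i^c(\mathcal{C}) = (n-k)(i+1) + 1$ for $i \leq j$.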

As seen in (3), the column distances cannot be greater than the free distance. In fact, there exists an integer

for which

can be equal to

, i.e., the bound can be attained for

and

, as well as for

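[15]. An $(n,k,\delta)$ convolutional code with $d_j^c(\mathcal{C}) = (n-k)(j+1) + 1$ for $j = 0, \dots, L$, where $L = \lfloor \delta/k \rfloor + \lfloor \delta/(n-k) \rfloor$ (or equivalently with $d_L^c(\mathcal{C}) = (n-k)(L+1) + 1$), is called a maximum distance profile (MDP) code. Next, we detail a characterization of these codes in terms of their generator and parity-check matrices. For that, we need the definition of a superregular matrix.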
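To make the MDP property concrete, the following sketch lists the largest values the column distances can take, $(n-k)(j+1)+1$ for $j = 0, \dots, L$ with $L = \lfloor \delta/k \rfloor + \lfloor \delta/(n-k) \rfloor$, which is the profile an MDP code attains. The function name `mdp_profile` is hypothetical.

```python
def mdp_profile(n, k, delta):
    """Return (L, bounds), where bounds[j] = (n - k) * (j + 1) + 1 for j = 0..L.

    An (n, k, delta) code is MDP when its j-th column distance equals
    bounds[j] for every j up to L.
    """
    L = delta // k + delta // (n - k)
    bounds = [(n - k) * (j + 1) + 1 for j in range(L + 1)]
    return L, bounds
```

For a $(2,1,2)$ code this gives $L = 4$ and the profile $2, 3, 4, 5, 6$, whose last value coincides with the generalized Singleton bound for those parameters.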

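The determinant of a square matrix $A = (a_{ij}) \in \mathbb{F}^{m \times m}$ over a field $\mathbb{F}$ is given by

$$\det(A) = \sum_{\sigma \in S_m} \operatorname{sgn}(\sigma)\, a_{1\sigma(1)} \cdots a_{m\sigma(m)},$$

where $S_m$ is the symmetric group. We will refer to the addends of $\det(A)$, that is, to the products of the form $a_{1\sigma(1)} \cdots a_{m\sigma(m)}$, as terms, and to the factors of a term, i.e., the multiplicands of the form $a_{i\sigma(i)}$ for $i \in \{1, \dots, m\}$, as components. We will also say that a term is trivial if at least one of its components is zero, that is, if the term is zero. Now, let $A$ be a square submatrix of a matrix $B$; if all the terms of $\det(A)$ are trivial, then we say that $\det(A)$ is a trivially zero minor of $B$ or that it is a trivial minor of $B$.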
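The notion of a trivially zero minor can be checked directly from the definition by enumerating the terms of the determinant. The sketch below does exactly that for a small square matrix given as a list of rows; it is exponential in the matrix size and is meant only as an illustration. The name `is_trivially_zero` is hypothetical.

```python
from itertools import permutations


def is_trivially_zero(A):
    """Return True when every term of det(A) has at least one zero component.

    A is a square matrix given as a list of rows. Each permutation sigma
    yields the term A[0][sigma(0)] * ... * A[m-1][sigma(m-1)].
    """
    m = len(A)
    return all(
        any(A[i][sigma[i]] == 0 for i in range(m))
        for sigma in permutations(range(m))
    )
```

Note the difference between a minor that is zero and one that is trivially zero: the all-ones $2 \times 2$ matrix has determinant zero, but none of its terms is trivial, so `is_trivially_zero` returns `False` for it.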

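Definition 4 ([16,17]). Let $m, \ell \in \mathbb{N}$ and $B \in \mathbb{F}^{m \times \ell}$, then $B$ is superregular if all of its minors that are not trivially zero are non-zero.

Theorem 3 ([15]). Let $\mathcal{C}$ be a non-catastrophic $(n,k,\delta)$ convolutional code with a right prime and column-reduced generator matrix $G(z) = \sum_{i=0}^{\mu} G_i z^i$, as well as a left prime and row-reduced parity-check matrix $H(z) = \sum_{i=0}^{\nu} H_i z^i$, then the following statements are equivalent:

1. $\mathcal{C}$ is an MDP code.

2. The matrix
$$\mathcal{G}_L = \begin{pmatrix} G_0 & & & \\ G_1 & G_0 & & \\ \vdots & \vdots & \ddots & \\ G_L & G_{L-1} & \cdots & G_0 \end{pmatrix},$$
where $G_i = 0$ for $i > \mu$, has the property that every full-size minor that is not trivially zero is non-zero.

3. The matrix
$$\mathcal{H}_L = \begin{pmatrix} H_0 & & & \\ H_1 & H_0 & & \\ \vdots & \vdots & \ddots & \\ H_L & H_{L-1} & \cdots & H_0 \end{pmatrix},$$
where $H_i = 0$ for $i > \nu$, has the property that every full-size minor that is not trivially zero is non-zero.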

Lemma 1 ([13]). Let $\mathcal{C}$ be an $(n,k,\delta)$ MDP convolutional code. If in any sliding window of length $(j_0+1)n$ at most $(j_0+1)(n-k)$ erasures occur with $j_0 \in \{0, 1, \dots, L\}$, then we can completely recover the transmitted sequence.

This family of optimal codes requires a great deal of regularity in the sense of the previous theorem, and, as a consequence, constructions of MDP convolutional codes usually require large base fields. From the applied point of view, this is a concern. In the last few years, the efforts of the community have been directed to improving this situation by either giving different constructions [18,19,20,21] or finding bounds [22,23]. Research has also led to the development of MDP convolutional codes over finite rings [24].
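The sliding-window hypothesis of Lemma 1 can be tested on a given erasure pattern before decoding is attempted. The sketch below assumes the pattern is a flat list of symbols with 1 marking an erasure and 0 a received symbol, and checks that every window of $(j+1)n$ consecutive symbols contains at most $(j+1)(n-k)$ erasures. The function name and the pattern encoding are assumptions made for the example (note that Algorithm 1 uses the opposite convention, with 0 for a missing symbol).

```python
def window_condition_holds(erased, n, k, j):
    """Check that each window of (j+1)*n symbols has at most (j+1)*(n-k) erasures.

    erased: sequence of 0/1 flags, 1 meaning the symbol was erased.
    """
    length = (j + 1) * n
    limit = (j + 1) * (n - k)
    if len(erased) <= length:
        return sum(erased) <= limit
    count = sum(erased[:length])  # erasures in the first window
    if count > limit:
        return False
    for start in range(1, len(erased) - length + 1):
        # Slide by one symbol: add the entering flag, drop the leaving one.
        count += erased[start + length - 1] - erased[start - 1]
        if count > limit:
            return False
    return True
```

With $n = 2$, $k = 1$, and $j = 1$, the pattern `[1, 1, 0, 0, 1, 1, 0, 0]` satisfies the condition (every window of four symbols has two erasures), whereas `[1, 1, 1, 0, 0, 0, 0, 0]` does not.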

When MDP codes cannot perform a correction due to the accumulation of erasures, we have to consider some of the packets to be lost and continue until a safe space is found again. To address this situation, reverse-MDP convolutional codes were defined in [13,25,26,27]. While the usual MDP convolutional codes can only carry out a forward correction, i.e., their decoding direction is from left to right, the reverse-MDP codes also allow a backward correction, i.e., a correction from right to left.

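Proposition 1 ([26], Prop 2.9). Let $\mathcal{C}$ be an $(n,k,\delta)$ convolutional code with a right prime and column-reduced generator matrix $G(z)$. Let $\bar{G}(z)$ be the matrix obtained by replacing each entry $g(z)$ in the $i$-th column of $G(z)$ with $z^{\delta_i} g(z^{-1})$, where $\delta_i$ is the $i$-th column degree of $G(z)$. Then, $\bar{G}(z)$ is a right prime and column-reduced generator matrix of an $(n,k,\delta)$ convolutional code $\bar{\mathcal{C}}$ and

$$v_0 + v_1 z + \cdots + v_d z^d \in \mathcal{C} \iff v_d + v_{d-1} z + \cdots + v_0 z^d \in \bar{\mathcal{C}}.$$

We call $\bar{\mathcal{C}}$ the reverse code of $\mathcal{C}$. Similarly, we denote the parity-check matrix of $\bar{\mathcal{C}}$ by $\bar{H}(z)$.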
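The construction in Proposition 1 amounts to reversing the coefficients of each entry relative to the degree of its column. The sketch below assumes that polynomials are stored as coefficient lists with the constant term first and that the generator matrix is a list of rows; the names `degree` and `reverse_generator` are hypothetical, and no primality or reducedness check is performed.

```python
def degree(poly):
    """Degree of a coefficient list [c0, c1, ...]; -1 for the zero polynomial."""
    for d in range(len(poly) - 1, -1, -1):
        if poly[d] != 0:
            return d
    return -1


def reverse_generator(G):
    """Replace each entry g(z) of column j by z^(delta_j) * g(1/z).

    G is a list of n rows, each a list of k polynomials (coefficient lists,
    constant term first); delta_j is the largest degree found in column j.
    """
    n, k = len(G), len(G[0])
    col_deg = [max(degree(G[i][j]) for i in range(n)) for j in range(k)]
    reversed_G = []
    for i in range(n):
        row = []
        for j in range(k):
            width = col_deg[j] + 1
            # Trim or pad the entry to delta_j + 1 coefficients, then reverse.
            padded = list(G[i][j][:width]) + [0] * (width - len(G[i][j]))
            row.append(padded[::-1])
        reversed_G.append(row)
    return reversed_G
```

For the single-column matrix with entries $1 + 2z$ and $3$, the column degree is 1, so the entries become $2 + z$ and $3z$.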

Definition 5. Let $\mathcal{C}$ be an $(n,k,\delta)$ MDP convolutional code. We say that $\mathcal{C}$ is a reverse-MDP convolutional code if the reverse code $\bar{\mathcal{C}}$ is also an MDP code.

In [13], it was proven that reverse-MDP codes can perform a correction from right to left as efficiently as an MDP code does in the opposite direction. However, to perform a backward correction, a safe space is required on the right side of the erasures. This can be easily seen in the following example.

Example 1. Let us assume that we are communicating through an erasure channel. Suppose that we have recovered the information correctly up to an instant t and that we later receive the following pattern: where ★ indicates that the corresponding component has been erased and means that the component has been correctly received. If we use an MDP convolutional code, we cannot perform a correction due to the accumulation of erasures at the beginning of the sequence. Nevertheless, if we consider a reverse-MDP convolutional code and take the first 60 symbols of as a safe space, then we can correct the erasures in . We can repeat this method by taking the first 60 symbols in and recovering the section , that is, we build the linear system as in (2) by considering the following sets of symbols:

This example shows that reverse-MDP convolutional codes can perform better than MDP convolutional codes when transmitting over an erasure channel, since we can exploit their backward correction capability. However, this type of approach depends on the existence, somewhere in the communication, of a long enough sequence of correctly received symbols to play the role of a safe space for one of the decoding directions. The following codes are aimed at solving the situation in which a safe space cannot be found.

Definition 6 ([13]). Let $H(z) = \sum_{i=0}^{\nu} H_i z^i$ be a parity-check matrix of an $(n,k,\delta)$ convolutional code $\mathcal{C}$ and $L = \lfloor \delta/k \rfloor + \lfloor \delta/(n-k) \rfloor$, then we obtain the matrix

$$\mathcal{H} = \begin{pmatrix} H_\nu & \cdots & H_0 & & \\ & \ddots & & \ddots & \\ & & H_\nu & \cdots & H_0 \end{pmatrix} \in \mathbb{F}^{(L+1)(n-k) \times (\nu+L+1)n},$$

which is called a partial parity-check matrix of $\mathcal{C}$. Moreover, $\mathcal{C}$ is said to be a complete-MDP convolutional code if, for any of its parity-check matrices $H(z)$, every full-size minor of $\mathcal{H}$ that is not trivially zero is non-zero.

Complete-MDP convolutional codes have an extra feature on top of being able to perform a correction as an MDP or as a reverse-MDP convolutional code. If, in the process of decoding, we cannot accomplish the correction due to the accumulation of too many erasures, the decoder can compute a new safe space and continue with the process as soon as a correctable sequence of symbols is found.

Theorem 4 ([13], Theorem 6.6). Consider a code sequence from a complete-MDP convolutional code. If, in a window of size , there are not more than erasures, and if they are distributed in such a way that between position 1 and and between positions and , for , i.e., there are no more than erasures, then the full correction of all symbols in this interval will be possible. In particular, a new safe space can be computed.

For more constructions, examples, and further content on complete-MDP convolutional codes, we refer the reader to [12,13,17].

Example 2. Again, let us assume that we are using an erasure channel. In this case, we are not able to recover some of the previous symbols and we thus receive the following pattern: Note that, if we use an MDP or a reverse-MDP convolutional code, we require a safe space of 48 symbols to correct the erasures in either direction, which we cannot find.

Nevertheless, if we use a complete-MDP convolutional code, we still have one more feature to use. We can compute a new safe space by using Theorem 4, that is, find a window of size where not more than 25 erasures occur. In the received pattern of erasures we can find the following sequence: Once these erasures are recovered, we have a new safe space and we can perform the usual correction.

In [13], an algorithm to recover the information over an erasure channel by performing correction in both directions, i.e., forward and backward, is given.

We provide Algorithm 1, which is a new version of the abovementioned algorithm and includes the case in which the code can decode by using the complete-MDP property. We maintain the notation given in [13], that is, the value 0 means that a symbol or a sequence of symbols has not been received, and the value 1 means that it has been correctly recovered. The function findzeros returns a vector with the positions of the zeros in its argument, while forward, backward, and complete are the forward, backward, and complete recovering functions, respectively. Note that these functions use the parity-check matrices of

and

to recover the erasures that appear in

within a window of size

(when necessary).

2.2. 2D Convolutional Codes

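Definition 7 ([1]). A 2D finite support convolutional code $\mathcal{C}$ of rate $k/n$ is a free $\mathbb{F}[z_1,z_2]$-submodule of $\mathbb{F}[z_1,z_2]^n$ with rank $k$.

As for the 1D case, a full column rank polynomial matrix $G(z_1,z_2) \in \mathbb{F}[z_1,z_2]^{n \times k}$ whose columns form a basis for the code, so that we can express the code as

$$\mathcal{C} = \operatorname{Im}_{\mathbb{F}[z_1,z_2]} G(z_1,z_2) = \{ G(z_1,z_2)u(z_1,z_2) : u(z_1,z_2) \in \mathbb{F}[z_1,z_2]^k \},$$

is called a generator matrix of $\mathcal{C}$.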

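A polynomial matrix $G(z_1,z_2) \in \mathbb{F}[z_1,z_2]^{n \times k}$ is said to be right factor prime if, whenever $G(z_1,z_2) = \bar{G}(z_1,z_2)T(z_1,z_2)$ for some $\bar{G}(z_1,z_2) \in \mathbb{F}[z_1,z_2]^{n \times k}$ and $T(z_1,z_2) \in \mathbb{F}[z_1,z_2]^{k \times k}$, the matrix $T(z_1,z_2)$ is unimodular (i.e., $T(z_1,z_2)$ has a polynomial inverse). Again, similarly to the 1D case, if the code admits a right factor prime generator matrix, then it can be defined by using its full rank polynomial parity-check matrix $H(z_1,z_2) \in \mathbb{F}[z_1,z_2]^{(n-k) \times n}$ as follows:

$$\mathcal{C} = \ker_{\mathbb{F}[z_1,z_2]} H(z_1,z_2) = \{ v(z_1,z_2) \in \mathbb{F}[z_1,z_2]^n : H(z_1,z_2)v(z_1,z_2) = 0 \}.$$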

| Algorithm 1 Decoding algorithm for complete-MDP codes |

- Input:

the received sequence. - Output:

the corrected sequence. - 1:

- 2:

while

do - 3:

- 4:

- 5:

- 6:

if then - 7:

if then - 8:

- 9:

while and do - 10:

if length(findzeros()) then - 11:

≔forward - 12:

- 13:

- 14:

- 15:

if then - 16:

Aux=findzeros - 17:

length - 18:

while and do - 19:

if then - 20:

- 21:

while and do - 22:

if length(findzeros()) then - 23:

≔backward - 24:

- 25:

- 26:

- 27:

- 28:

if then - 29:

- 30:

while and do - 31:

- 32:

- 33:

if and for all then - 34:

≔

complete - 35:

- 36:

- 37:

- 38:

|

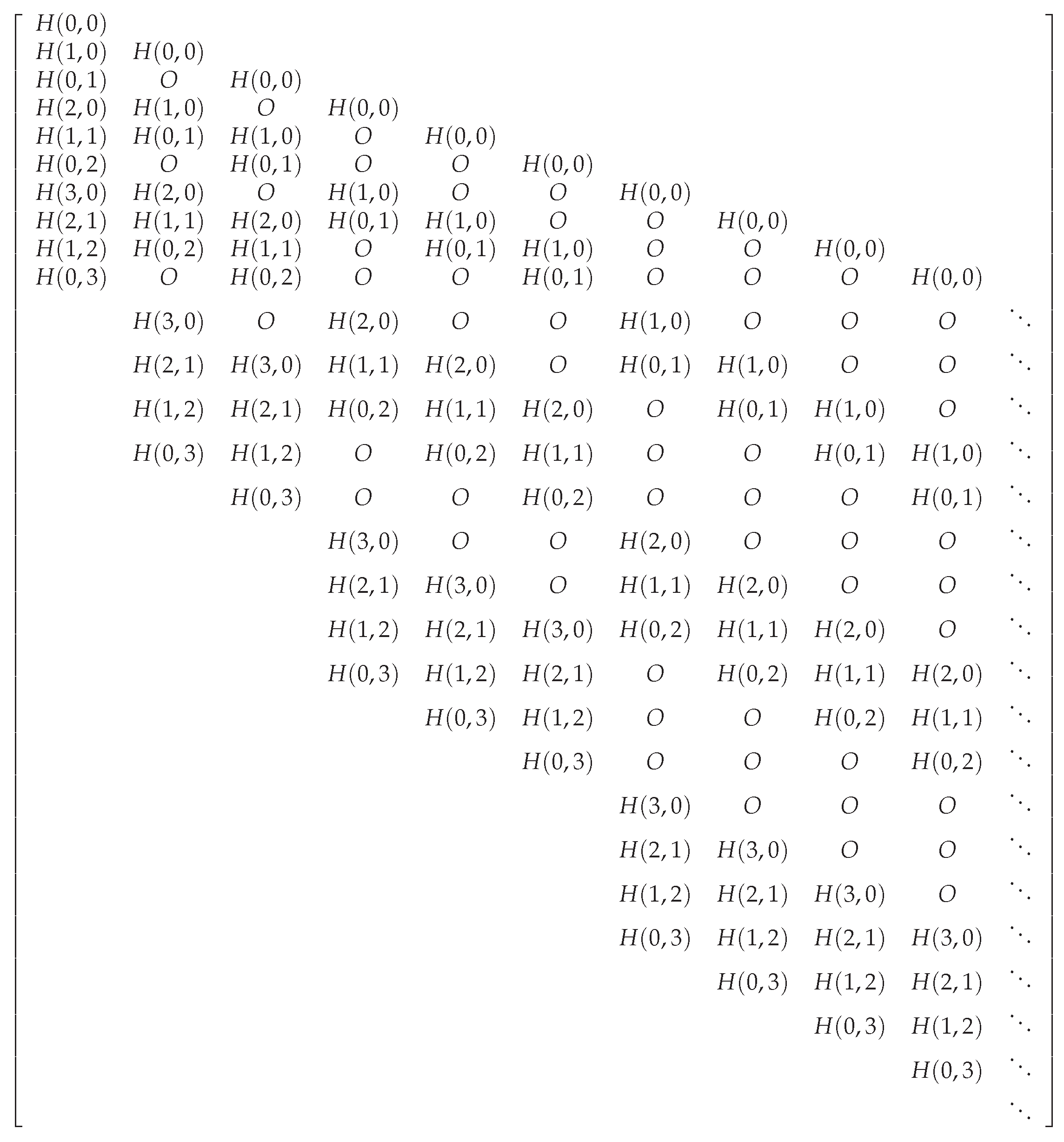

Since we are going to deal with a situation in which the elements of the codewords are distributed in the plane

, we will consider the order given by

The codeword

and the matrix

are represented with their coefficients in order, respectively, as follows:

and

Note that this depiction allows us to see the kernel representation in a more detailed manner as

where

if

or

. It is also possible to denote this product using constant matrices

where

is a vector in

, and

is a

matrix over

. An example of this matrix, corresponding to a parity-check matrix

is presented in Figure 1. It is easy to see that it does not follow the same pattern of construction as the partial parity-check matrix in the 1D case. Note that all the matrix coefficients

of

appear in all the columns following the previously established order ≺, with the particularity that, for

in the block columns with indices

, the coefficients

with

for

are separated from the matrices

with

by

t zero blocks.

Consider now that

has been transmitted over an erasure channel. We define the support of

as the set of indices of the coefficients of

that are non-zero, i.e.,

Let

be the set of indices of the support of

in which there are erasures in the corresponding coefficients of

and

, i.e., the set of indices of the support of

corresponding to the coefficients that were correctly received. For the sake of simplicity, and if the context allows it, we will denote

as

. In

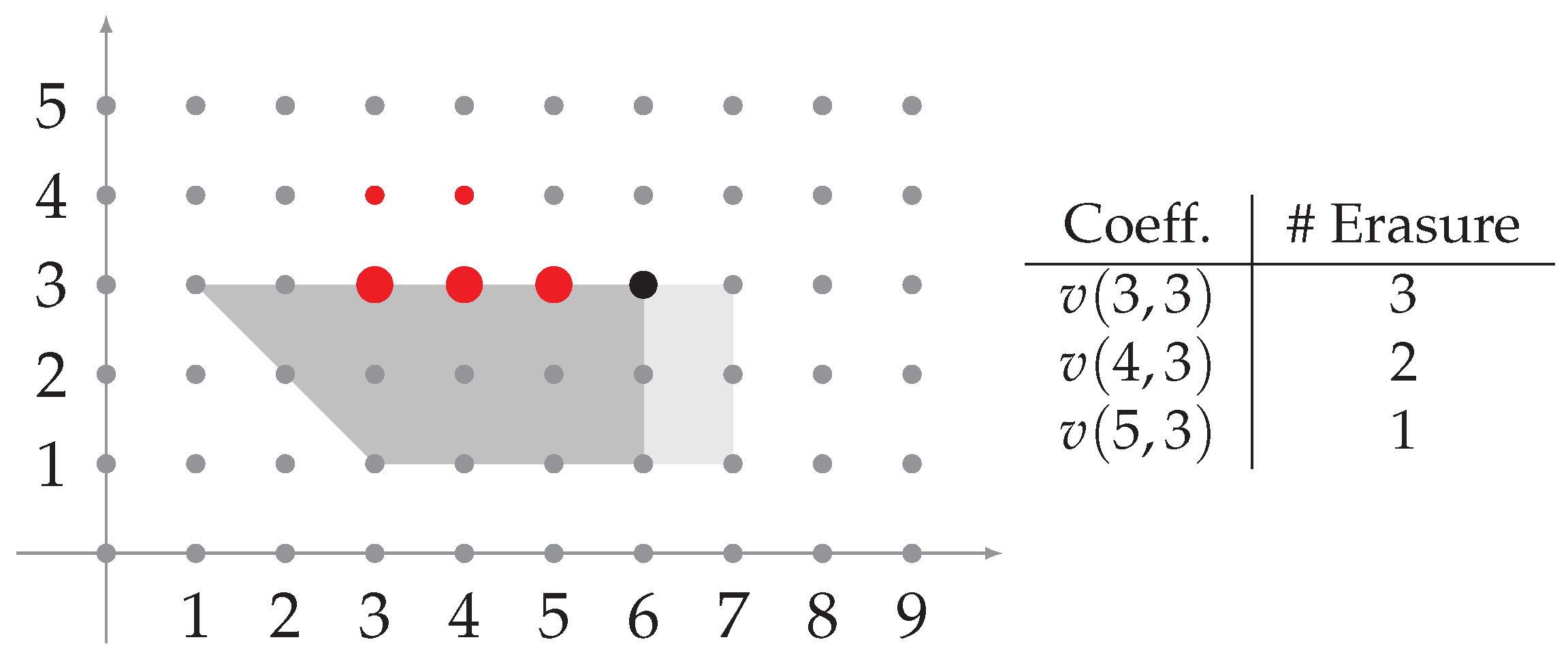

Figure 2 an example of erasures distributed in the plane is presented.

Since we have the kernel representation (4) of the code in a block fashion, we can consider the equivalent linear system

, where

and

denote the submatrices of

where block columns are indexed by

and

, respectively. Correspondingly,

and

refer to the subvectors of

, whose block rows are indexed by

and

, respectively. In order to recover the erasures, we solve the linear system

by considering the erased components of

as the unknowns. Note that

is known.

As pointed out in [9], this system is massive even when the parameters of the code are small. The authors of that paper developed an algorithm to deal with this situation and recover the information more efficiently. They proposed to decode by sets of lines, that is, to choose a set of erasures in a line

(i.e., horizontal (

), vertical (

), or diagonal (

)), as well as to define a neighborhood for this set of erasures and then use all the correct information within these related coefficients to construct and solve a linear system.

Next, we describe this method for the correction of erasures in horizontal lines; the methods for vertical and diagonal lines are analogous. Let us consider a subset of

,

, whose subindices lie on a horizontal line with the vertical coordinate

s, that is,

With this in mind, we can “rewrite” (4) as

where, similarly to the above,

and

indicate the submatrices of

that are indexed by

and

block-wise, respectively, as well as where

and

are defined analogously. (Note that

may contain erasures.)

Definition 8. Let be a horizontal window of length , i.e., for some . We then define the following neighborhood of as

Example 3. Let , which is represented in Figure 3 as big red dots. In addition, is depicted in Figure 3. Note that the neighborhood is constituted of an aligned set of triangles.

The main role of the neighborhoods defined above in the decoding process is described in the next set of results from [9].

Lemma 2 ([9]). Let with . Suppose that is a transmitted codeword, is the support of its coefficients with erasures, and is the support of the coefficients with erasures that are distributed on a horizontal line in in a window W of length such that . Consider and as in (4), as well as and as in (6). Then, define the vector by selecting the coefficients of with . Define as a submatrix of accordingly. Then, it holds that where is an matrix.

It is easy to see that the structure of the matrix in (8) is the same as that of the partial parity-check matrix of a 1D convolutional code. By taking into account this similarity, we define the 1D convolutional code

, which is associated with a 2D convolutional code

as follows:

where

with column distances

,

.

Lemma 3 ([9]). Let with . Suppose that is a transmitted codeword, is the support of its coefficients with erasures, and is the support of the coefficients with erasures distributed on a horizontal line in in a window W of length such that . If contains only indices in (and not in ), we have where is known and is as in (8). Moreover, consider the 1D convolutional code with a parity-check matrix as defined in (10) and with a column distance . If there exists such that at most erasures occur in any consecutive components of , then we can completely recover the vector .

The requirement for to contain just the erasures in plays the role of the safe space for the 1D convolutional codes.

Example 4. Let be a 2D convolutional code with a parity-check matrix as follows: We define the 1D convolutional codes , where Let us assume that we receive the pattern of erasures shown in Figure 4 (red dots), and let us consider the erasures on the first line (big red dots), i.e., and set . To correct , we use the code by Lemma 3. To do so, we build a system as in (3), where and

Lemma 3 states that one can conduct a partial correction in a line by using the column distances of the associated convolutional code. In Example 5, we can see how this partial recovery is performed.

Example 5. Let be a 2D convolutional code with a parity-check matrix . Let us assume that we receive the pattern of erasures shown in Figure 4 (red dots). As mentioned above, we will try to recover the horizontal lines from below to above; therefore, we will try to recover the erasures on the first line (big red dots), i.e., . Since we have some erasures on the top line, we will consider the code associated with the neighbor (i.e., the whole gray area). We assume that the 1D convolutional code has the column distances , , , and . Note that the total amount of erasures satisfies ; therefore, we have to consider a window of size (the big red dots and black dot). By following the proof of Lemma 3 in [9], we pick the first equations from the system and obtain the following system: As proven in [13], once we solve this system we are able to successfully recover the erasures in . We then shift the window and repeat the process until the recovery is fully completed.

As we mentioned at the beginning of this section, analogous methods can be described by taking lines of erasures in a vertical or diagonal fashion. In [9], it was said that, to decode a set of erasures in the grid

, one can carry out the horizontal decoding as explicitly conducted above, in the “from below to above” direction, until it is not possible to correct anymore. Afterward, one executes the algorithm by taking the vertical lines of erasures and correcting them in the “from left to right” direction until no more corrections can be made. Finally, one follows the same procedure with diagonal lines of erasures and then repeats this cycle until none of the three procedures is able to recover further information.
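The cycle described above (horizontal, then vertical, then diagonal passes, repeated until nothing more can be recovered) has a simple control structure, sketched below. This is only an outline of the loop under stated assumptions: the set-of-indices representation, the name `decode_by_lines`, and the interface of the passes (each pass is a callable that receives the current set of erased indices and returns the subset it managed to recover) are hypothetical, and the actual line decoders based on Lemma 3 are not implemented here.

```python
def decode_by_lines(erasures, passes):
    """Apply the decoding passes cyclically until none of them makes progress.

    erasures: iterable of (i, j) indices that are still erased.
    passes:   list of callables, e.g. [horizontal, vertical, diagonal];
              each takes the current set of erased indices and returns
              the set of indices it was able to recover.
    Returns the set of indices that remain erased.
    """
    remaining = set(erasures)
    progress = True
    while remaining and progress:
        progress = False
        for line_pass in passes:
            # Run one direction until it stalls, then move on to the next.
            while True:
                recovered = line_pass(remaining) & remaining
                if not recovered:
                    break
                remaining -= recovered
                progress = True
    return remaining
```

The loop terminates because every productive pass strictly shrinks the set of erasures, and a full cycle without progress ends the procedure.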