Abstract

This paper presents a comparative analysis of the performance of Equivariant Quantum Neural Networks (EQNNs) and Quantum Neural Networks (QNNs), juxtaposed against their classical counterparts: Equivariant Neural Networks (ENNs) and Deep Neural Networks (DNNs). We evaluate the performance of each network with three two-dimensional toy examples for a binary classification task, focusing on model complexity (measured by the number of parameters) and the size of the training dataset. Our results show that the EQNN and the QNN provide superior performance for smaller parameter sets and modest training data samples.

Keywords:

quantum computing; deep learning; quantum machine learning; equivariance; invariance; supervised learning; classification; particle physics; Large Hadron Collider

MSC:

81P68; 68Q12

1. Introduction

The rapidly evolving convergence of machine learning (ML) and high-energy physics (HEP) offers a range of opportunities and challenges for the HEP community. Beyond simply applying traditional ML methods to HEP issues, a fresh cohort of experts skilled in both areas is pioneering innovative and potentially groundbreaking approaches. ML methods based on symmetries play a crucial role in improving data analysis as well as expediting the discovery of new physics [1,2]. In particular, classical Equivariant Neural Networks (ENNs) exploit the underlying symmetry structure of the data, ensuring that the input and output transform consistently under the symmetry [3]. ENNs have been widely used in various applications including deep convolutional neural networks for computer vision [4], AlphaFold for protein structure prediction [5], Lorentz equivariant neural networks for particle physics [6], and many other HEP applications [7,8,9,10,11].

Meanwhile, the rise of readily available noisy intermediate-scale quantum computers [12] has sparked considerable interest in using quantum algorithms to tackle high-energy physics problems. Modern quantum computers boast impressive quantum volume and are capable of executing highly complex computations, driving a collaborative effort within the community [13,14] to explore their applications in quantum physics, particularly in addressing theoretical challenges in particle physics. Recent research on quantum algorithms for particle physics at the Large Hadron Collider (LHC) covers a range of tasks, including the evaluation of Feynman loop integrals [15], simulation of parton showers [16] and structure [17], development of quantum algorithms for helicity amplitude assessments [18], and simulation of quantum field theories [19,20,21,22,23,24].

An intriguing prospect in this realm is the emerging field of quantum machine learning (QML), which harnesses the computational capabilities of quantum devices for machine learning tasks. With classical machine learning algorithms already proving effective for various applications at the LHC, it is natural to explore whether QML can enhance these classical approaches [25,26,27,28,29,30,31,32,33,34]. In recent years, significant progress has also been made on the quantum counterparts of ENNs, Equivariant Quantum Neural Networks (EQNNs) [35,36,37,38,39].

In this paper, we benchmark the performance of EQNNs against various classical and/or non-equivariant alternatives for three two-dimensional toy datasets that exhibit a symmetry structure. Such patterns often appear in high-energy physics data, e.g., as kinematic boundaries in the high-dimensional phase space describing the final state [40,41]. By a clever choice of the kinematic variables for the analysis, these boundaries can be preserved in projections onto a lower-dimensional feature space [42,43,44,45,46]. For example, one can form various combinations of possible invariant masses for the generic decay chain considered in Ref. [44], $D \to j\,C \to j\,\ell_n\,B \to j\,\ell_n\,\ell_f\,A$, where Particles A, B, C, and D are hypothetical particles in new physics beyond the standard model with masses $m_A$, $m_B$, $m_C$, and $m_D$, while the corresponding standard model decay products consist of a jet $j$, a “near” lepton $\ell_n$, and a “far” lepton $\ell_f$. The two-dimensional (bivariate) distribution of such invariant masses shows patterns similar to those in Figure 1, Figure 2 and Figure 3, depending on the mass-squared ratios of the particles involved. Symmetric, anti-symmetric, or non-symmetric structures provide information about the particle masses involved in the cascade decays.

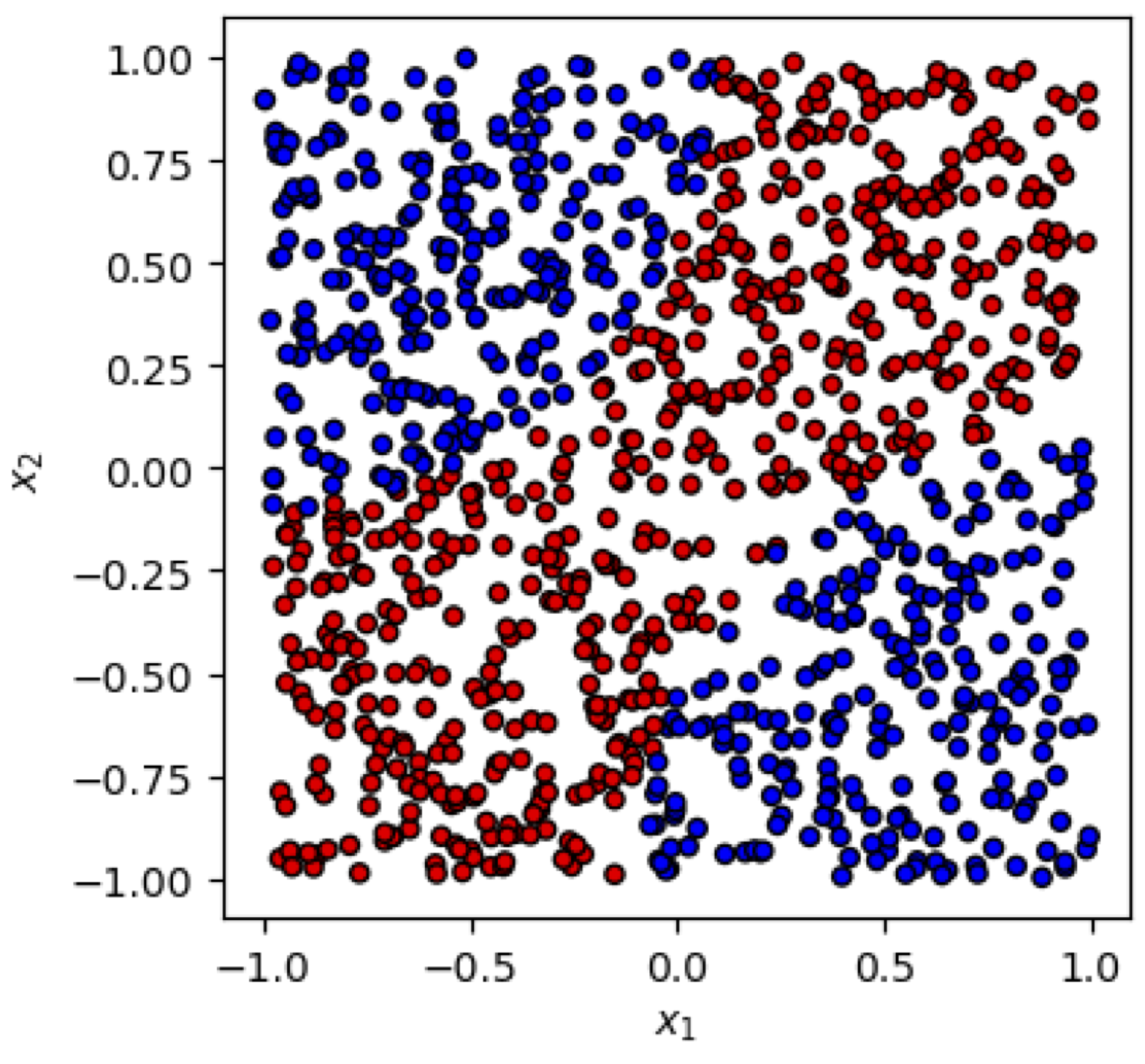

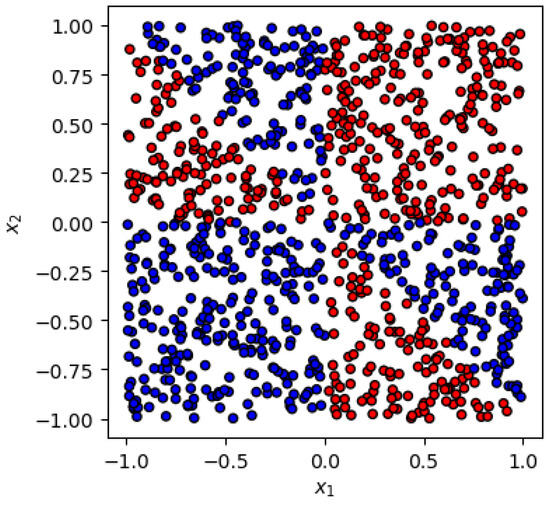

Figure 1.

Pictorial illustration of the first dataset used in this study—the symmetric case (1).

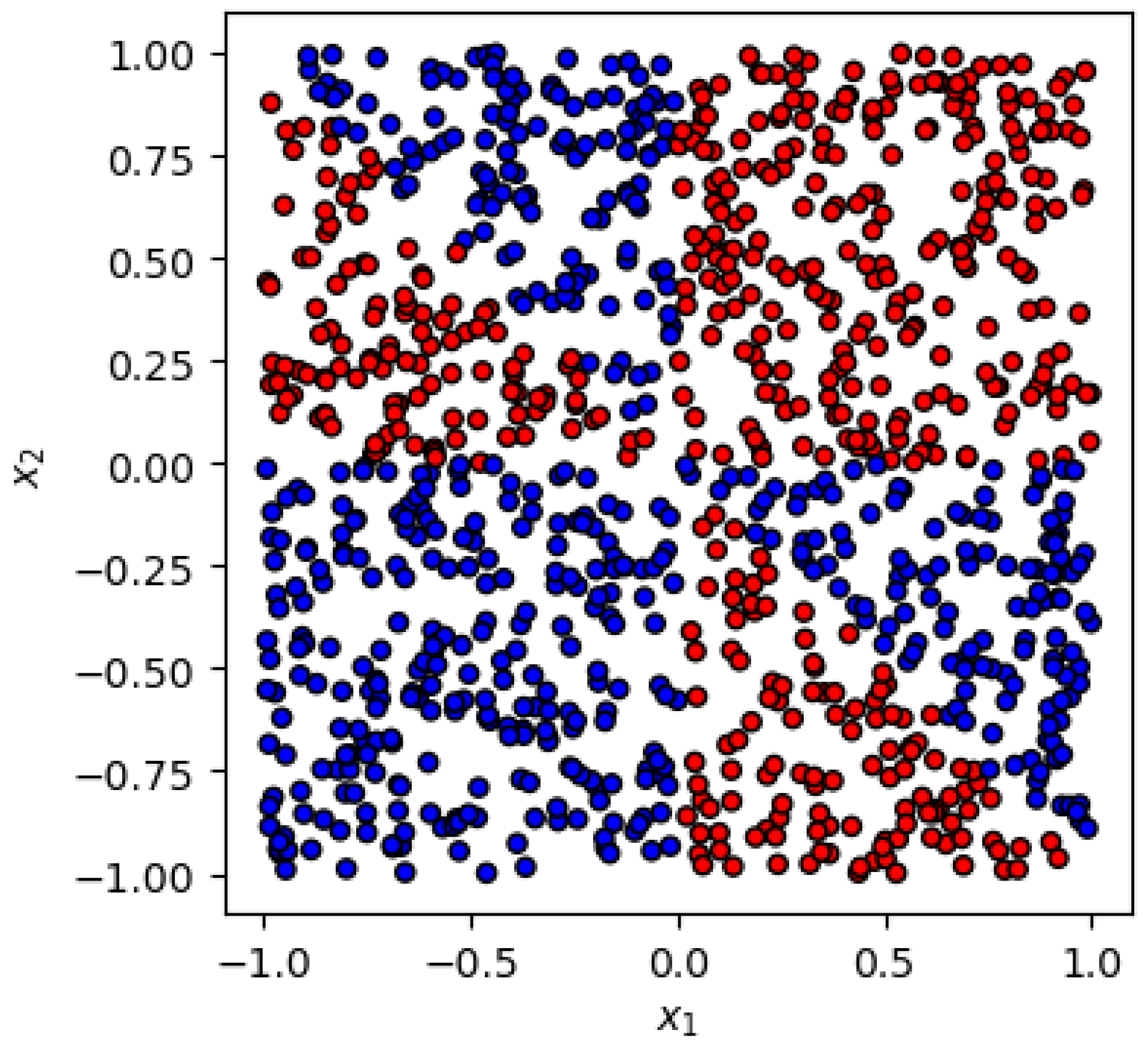

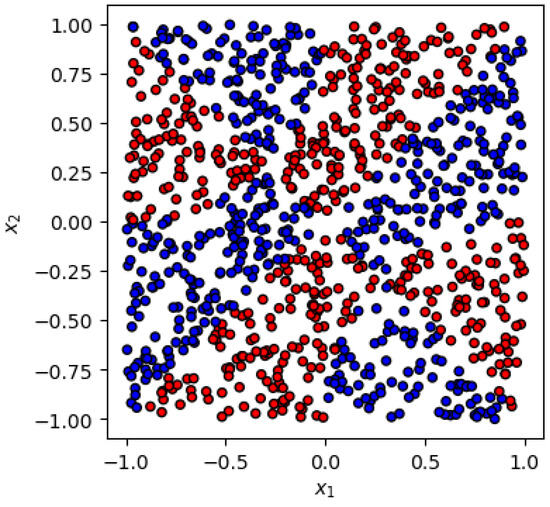

Figure 2.

Pictorial illustration of the second dataset used in this study—the anti-symmetric case (4).

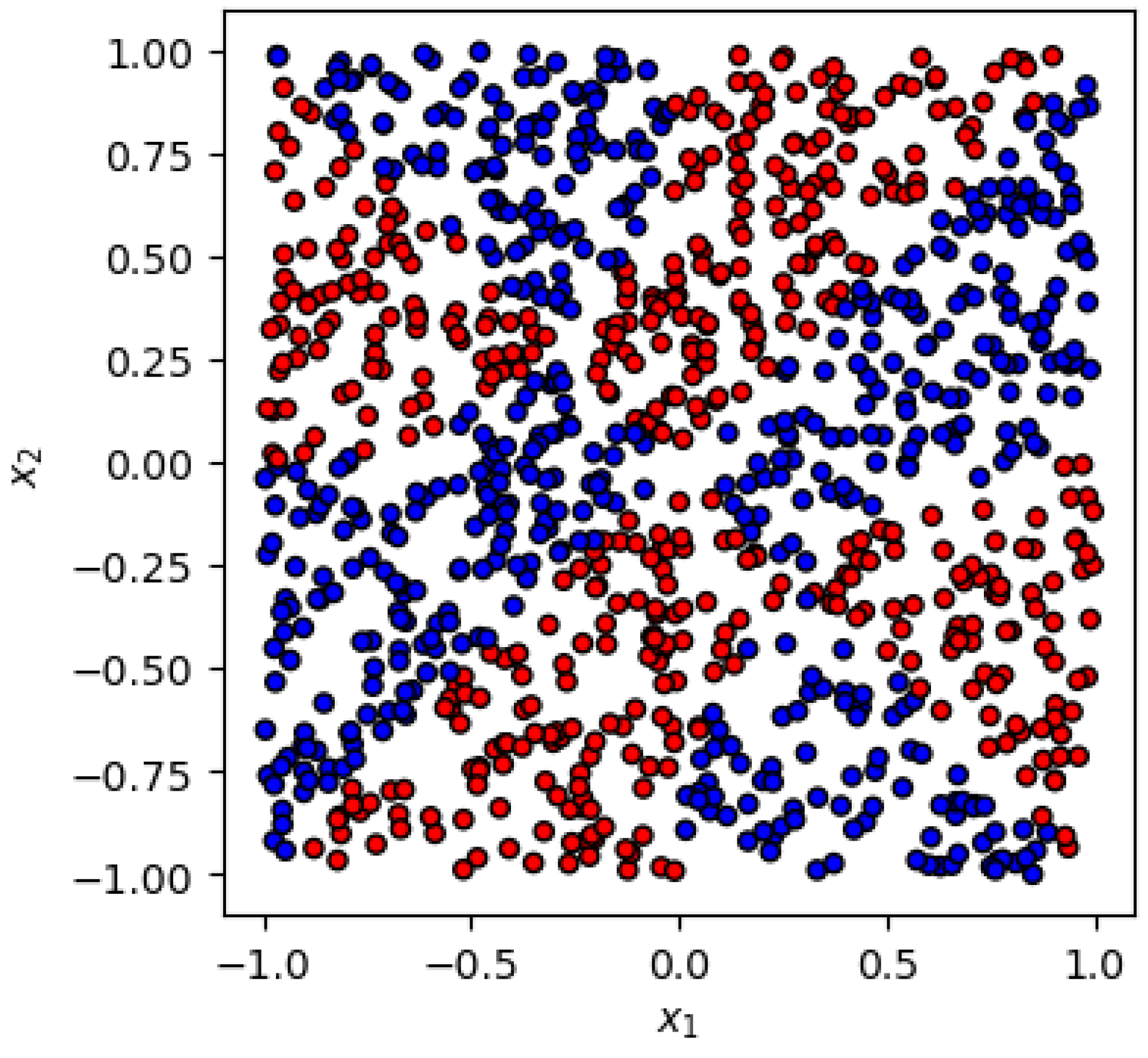

Figure 3.

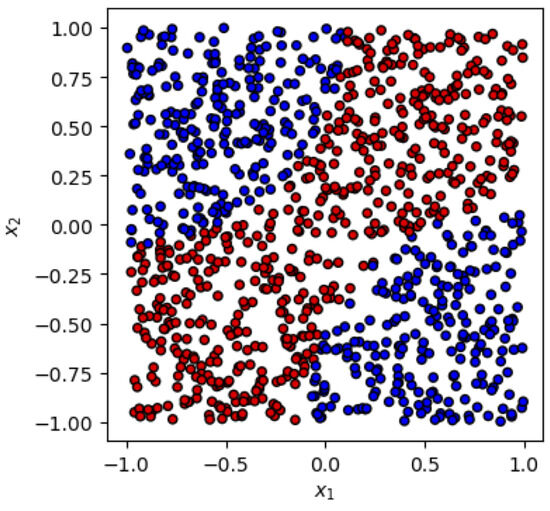

Pictorial illustration of the third dataset used in this study—the fully anti-symmetric case (6).

In this study, we consider simplified two-dimensional datasets that mimic the data arising in such projections. This setup allows us to focus on the comparison between different methods, avoiding unnecessary issues that may arise when dealing with actual particle physics simulation data, such as sampling statistics, parton distribution functions, an unknown particle mass spectrum, unknown widths, detector effects, etc. We explore EQNNs and benchmark them against classical neural network models. We find that the variational quantum circuits learn the data better than their classical counterparts when the number of parameters and the size of the training dataset are small.

2. Dataset Description

In all three examples, we consider two-dimensional data on the unit square. The data points belong to two classes, shown as blue points and red points in the figures.

- (i) Symmetric case: In the first example (Figure 1), the labels are generated by the function (1), which is built from the Heaviside step function with a parameter value fixed for definiteness. The function (1) respects a $\mathbb{Z}_2 \times \mathbb{Z}_2$ symmetry, where the first $\mathbb{Z}_2$ is given by a reflection about one diagonal of the square (2), while the second $\mathbb{Z}_2$ corresponds to a reflection about the other diagonal. This example was studied in Ref. [37], and we shall refer to it as the symmetric case since the label y is invariant under both reflections.

- (ii) Anti-symmetric case: The second example is illustrated in Figure 2. The labels are generated by the function (4). The first $\mathbb{Z}_2$ is still realized as in (2). However, this time, the labels are flipped under the reflection about the other diagonal, which is why we shall refer to this case as anti-symmetric.

- (iii) Fully anti-symmetric case: The last example is depicted in Figure 3. The labels are generated by the function (6), which is again built from the Heaviside step function with a parameter value fixed for definiteness. In this case, the labels are flipped under the reflections about both diagonals, which is why we shall refer to this case as fully anti-symmetric. As we will see later, it is straightforward to incorporate both symmetric and anti-symmetric properties in variational quantum circuits, while it is not obvious how to treat the anti-symmetric case in classical neural networks.
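The three symmetry patterns described above can be checked numerically. The following is a minimal sketch, assuming the unit square is $[0,1]^2$ and using hypothetical label functions (`y_symmetric`, `y_anti`, `y_fully_anti`) that are not the functions (1), (4), and (6) of this paper; they are chosen only because they reproduce the same behavior under the two diagonal reflections.

```python
import numpy as np

def heaviside(t):
    """Step function returning integer labels 0 or 1."""
    return (t > 0).astype(int)

# Hypothetical label functions (illustrative stand-ins, not the paper's).
def y_symmetric(x):
    d = np.abs(x[:, 0] - x[:, 1])
    s = np.abs(x[:, 0] + x[:, 1] - 1.0)
    return heaviside(d - s)

def y_anti(x):
    return heaviside(x[:, 0] + x[:, 1] - 1.0)

def y_fully_anti(x):
    return heaviside((x[:, 0] - x[:, 1]) * (x[:, 0] + x[:, 1] - 1.0))

def reflect_1(x):
    """Reflection about the diagonal x1 = x2: (x1, x2) -> (x2, x1)."""
    return x[:, ::-1]

def reflect_2(x):
    """Reflection about the diagonal x1 + x2 = 1: (x1, x2) -> (1 - x2, 1 - x1)."""
    return 1.0 - x[:, ::-1]

def parity(label_fn, reflect, x):
    """+1 if all labels are invariant, -1 if all labels flip, 0 otherwise."""
    y, y_ref = label_fn(x), label_fn(reflect(x))
    if np.all(y_ref == y):
        return +1
    if np.all(y_ref == 1 - y):
        return -1
    return 0

rng = np.random.default_rng(0)
x = rng.uniform(0.0, 1.0, size=(2000, 2))

# Drop points very close to a decision boundary to avoid rounding ties.
d = x[:, 0] - x[:, 1]
s = x[:, 0] + x[:, 1] - 1.0
margin = np.minimum.reduce([np.abs(d), np.abs(s), np.abs(np.abs(d) - np.abs(s))])
x = x[margin > 1e-9]

for name, fn in [("symmetric", y_symmetric),
                 ("anti-symmetric", y_anti),
                 ("fully anti-symmetric", y_fully_anti)]:
    print(name, parity(fn, reflect_1, x), parity(fn, reflect_2, x))
```

The pair of parities returned for each dataset is what distinguishes the three cases: both reflections preserve the labels, only the second flips them, or both flip them.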

3. Network Architectures

To assess the importance of embedding the symmetry in the network, and to compare the classical and quantum versions of the networks, we study the performance of the following four different architectures: (i) Deep Neural Network (DNN), (ii) Equivariant Neural Network (ENN), (iii) Quantum Neural Network (QNN), and (iv) Equivariant Quantum Neural Network (EQNN). In each case, we adjust the hyperparameters to ensure that the number of network parameters is roughly the same.

- (i) Deep Neural Networks: In our DNN, for the symmetric (anti-symmetric) case, we use one (two) hidden layer(s) with four neurons. For both types of classical networks, we use the softmax activation function, the Adam optimizer, and a fixed learning rate. We use the binary cross-entropy loss for both the DNN and ENN.

- (ii) Equivariant Neural Networks: A given map $f: X \to Y$ between an input space X and an output space Y is said to be equivariant under a group G if it satisfies the following relation:

$$f\bigl(g_{\mathrm{in}}(x)\bigr) = g_{\mathrm{out}}\bigl(f(x)\bigr), \quad \forall\, g \in G, \tag{7}$$

where $g_{\mathrm{in}}$ ($g_{\mathrm{out}}$) is a representation of a group element $g$ acting on the input (output) space. In the special case when $g_{\mathrm{out}}$ is the trivial representation, the map is called invariant under the group G, i.e., a symmetry transformation acting on the input data x does not change the output of the map. The goal of ENNs, or equivariant learning models in general, is to design a trainable map f that always satisfies Equation (7). In tasks where the symmetry is known, such equivariant models are believed to have an advantage in terms of the number of parameters and training complexity. Several studies in high-energy physics have attempted to use classical equivariant neural networks [6,47,48,49,50]. Our ENN model utilizes four symmetric copies of each data point, which are fed into the input layer, followed by one equivariant layer with three (two) neurons and one dense layer with four (four) neurons in the symmetric (anti-symmetric) case.

- (iii)

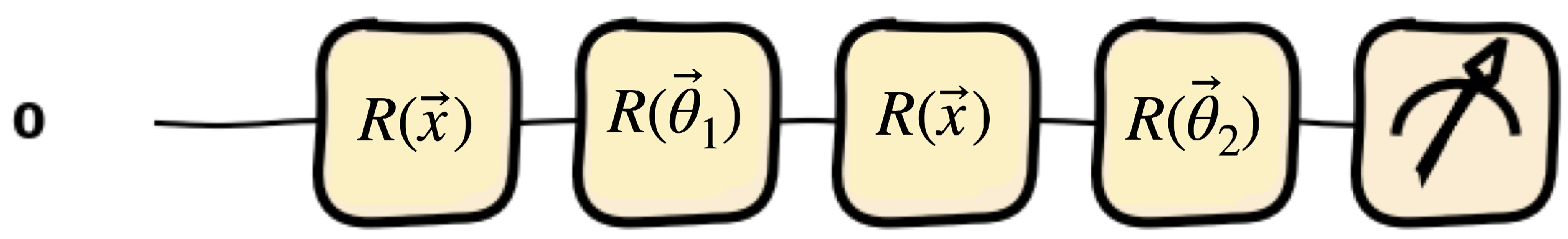

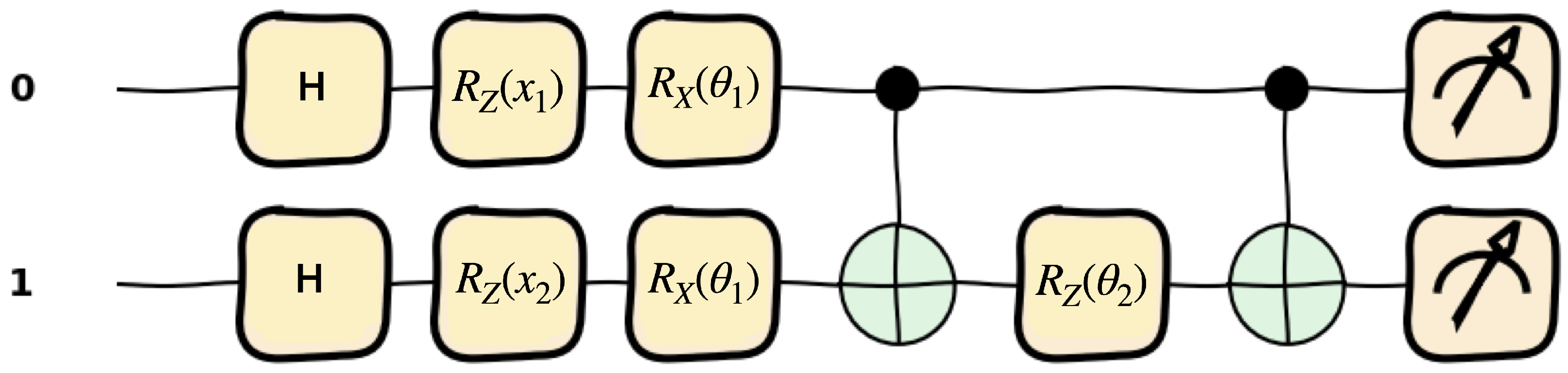

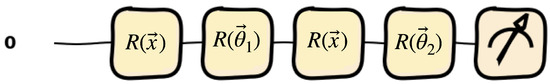

- Quantum Neural Networks:For the QNN, we utilize the one-qubit data-reuploading model [51], as shown in Figure 4, with depth four (eight) for the symmetric (anti-symmetric and fully anti-symmetric) case, using the angle embedding and three parameters at each depth. This choice leads to a similar number of parameters as in the classical networks. We use the Adam optimizer and the lossfor any choice of two orthogonal operators and (see Ref. [52] for more details.). In this paper, we usefor all three datasets considered in this paper.

Figure 4. Illustration of the quantum circuit used for QNN at depth 2. This circuit is repeated up to depth four (five) times with different parameters for the symmetric (anti-symmetric and fully anti-symmetric) case. The data points are loaded via angle embedding with rotation gates, followed by another rotation with arbitrary angle parameters.

Figure 4. Illustration of the quantum circuit used for QNN at depth 2. This circuit is repeated up to depth four (five) times with different parameters for the symmetric (anti-symmetric and fully anti-symmetric) case. The data points are loaded via angle embedding with rotation gates, followed by another rotation with arbitrary angle parameters. - (iv)

- Equivariant Quantum Neural Networks.In EQNN models, symmetry transformations acting on the embedding space of input features are realized as finite-dimensional unitary transformations , . Consider the simplest case where one trainable operator acts on a state : . If for a symmetry transformation , the conditionis satisfied, then the operator U is equivariant, i.e., the equivariant gate should commute with the symmetry. In general, the operators on the two sides of Equation (10) do not necessarily have to be in the same representation but are often assumed so for simplicity. The output of a QNN is the measurement of the expectation value of the state with respect to some observable O. If the gates are equivariant and we apply some symmetry transformation , then this is equivalent to measuring the observable . Hence, if O commutes with the symmetry , the model as a whole would be invariant under , which is the case in our symmetric example. Otherwise the model is equivariant, as in our anti-symmetric example.
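The one-qubit data-reuploading model of item (iii) can be sketched with a small NumPy state-vector simulation. This is only an illustration under stated assumptions: the embedding gates (`rx` followed by `ry`), the three-angle rotation `rot`, and the readout through the fidelities with the basis states are choices made here for concreteness and are not taken from the paper. The sketch only reproduces the structure "embed the data, apply a three-parameter rotation, repeat", so that the parameter count is three per depth.

```python
import numpy as np

def rx(a):
    c, s = np.cos(a / 2), np.sin(a / 2)
    return np.array([[c, -1j * s], [-1j * s, c]])

def ry(a):
    c, s = np.cos(a / 2), np.sin(a / 2)
    return np.array([[c, -s], [s, c]], dtype=complex)

def rz(a):
    return np.array([[np.exp(-1j * a / 2), 0], [0, np.exp(1j * a / 2)]])

def rot(phi, theta, omega):
    """General single-qubit rotation with three trainable angles."""
    return rz(omega) @ ry(theta) @ rz(phi)

def reupload_state(x, params):
    """Apply `depth` blocks of (data embedding, trainable rotation) to |0>.

    x      : array-like (x1, x2), the two input features
    params : array of shape (depth, 3), three angles per block
    """
    psi = np.array([1.0, 0.0], dtype=complex)
    for layer in params:
        psi = ry(x[1]) @ rx(x[0]) @ psi   # assumed angle embedding of (x1, x2)
        psi = rot(*layer) @ psi           # trainable rotation, 3 parameters
    return psi

def class_fidelities(psi):
    """Fidelity of the output state with |0> and |1> (one state per class)."""
    return np.abs(psi) ** 2

rng = np.random.default_rng(1)
params_sym = rng.uniform(0, 2 * np.pi, size=(4, 3))   # depth four
params_anti = rng.uniform(0, 2 * np.pi, size=(8, 3))  # depth eight

x = np.array([0.2, 0.7])
fid = class_fidelities(reupload_state(x, params_sym))
print(params_sym.size, params_anti.size, fid)
```

With three angles per block, depth four gives 12 parameters and depth eight gives 24, which is the sense in which the QNN sizes are comparable to the other networks.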

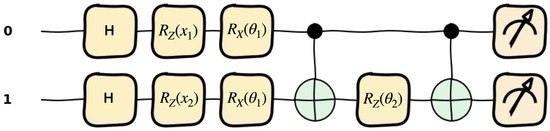

Our EQNN uses the two-qubit quantum circuit depicted in Figure 5 for depth 1. This circuit is repeated five (ten) times with different parameters for the symmetric (anti-symmetric and fully anti-symmetric) case. Two embedding gates encode the two input features. Of the trainable gates, one group shares a single parameter and the remaining gate uses a second parameter, so each repetition adds two parameters. The invariant model (for the symmetric case) uses the same observable O for both classes in the data. In the anti-symmetric case, we use two different observables, one for each label, which transform into one another under the label-flipping reflection.

Figure 5.

Illustration of the quantum circuit used for EQNN at depth 1. This circuit is repeated five (ten) times with different parameters for the symmetric (anti-symmetric and fully anti-symmetric) case. The data points are loaded via angle embedding with two gates, one per input feature. The remaining gates are parameterized by two trainable parameters.
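The requirement that an equivariant gate commute with the symmetry can be verified directly on small matrices. The sketch below assumes, purely for illustration, that the reflection exchanging the two features is represented by the two-qubit SWAP gate, that the shared-parameter gates are `rx` rotations, and that the second trainable gate is a ZZ rotation `rzz`; these specific gates are hypothetical and are not read off from Figure 5.

```python
import numpy as np

SWAP = np.array([[1, 0, 0, 0],
                 [0, 0, 1, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1]], dtype=complex)

def rx(a):
    c, s = np.cos(a / 2), np.sin(a / 2)
    return np.array([[c, -1j * s], [-1j * s, c]])

def rzz(a):
    """Two-qubit rotation exp(-i a/2 Z(x)Z), diagonal in the computational basis."""
    return np.diag(np.exp(-1j * a / 2 * np.array([1, -1, -1, 1])))

def commutes_with_swap(U):
    return np.allclose(U @ SWAP, SWAP @ U)

theta1, theta2 = 0.7, 1.9
shared = np.kron(rx(theta1), rx(theta1))   # same parameter on both qubits
entangler = rzz(theta2)                    # symmetric two-qubit gate
unshared = np.kron(rx(0.3), rx(1.1))       # different parameters per qubit

print(commutes_with_swap(shared))     # shared parameter: equivariant
print(commutes_with_swap(entangler))  # symmetric entangler: equivariant
print(commutes_with_swap(unshared))   # independent parameters: not equivariant

# Exchanging the features is the same as conjugating the embedding by SWAP.
x1, x2 = 0.25, 0.8
embed = np.kron(rx(x1), rx(x2))
embed_swapped = np.kron(rx(x2), rx(x1))
print(np.allclose(SWAP @ embed @ SWAP, embed_swapped))
```

Sharing one parameter between the two single-qubit gates is what makes the layer commute with the exchange of the qubits; giving each qubit its own parameter breaks the condition.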

In the symmetric case, we use the binary cross-entropy loss, assuming the true label y is either 0 or 1.
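For reference, the standard form of the binary cross-entropy for labels $y_i \in \{0, 1\}$ is written out below. The symbol $p_i$ is an assumption introduced here: it denotes the model's predicted probability that sample $i$ belongs to the class $y = 1$. How this probability is obtained from the measured expectation value of the observable is not specified in this sketch.

```latex
\begin{equation*}
\mathcal{L}_{\mathrm{BCE}}
  = -\frac{1}{N}\sum_{i=1}^{N}
    \Bigl[\, y_i \log p_i + (1 - y_i)\log\bigl(1 - p_i\bigr) \Bigr],
  \qquad y_i \in \{0, 1\}, \quad 0 < p_i < 1 .
\end{equation*}
```

The loss vanishes only when every sample is assigned probability one for its true class, and it grows without bound as a confident prediction becomes wrong.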

The observable O is chosen to commute with the unitary operators that represent the two reflections.

In the anti-symmetric and fully anti-symmetric cases, we use the same loss as in the QNN.

For the anti-symmetric case, each of the two observables corresponds to one of the two labels.

For the fully anti-symmetric case, we use another set of observables, chosen so that one transforms into the other under reflection along either of the two diagonals.

Since the dataset is anti-symmetric with respect to each of the diagonals, the labels are invariant if both reflections are applied. It is difficult to build a classical equivariant neural network using these anti-symmetries, since classical equivariant models are built on the assumption that the target is invariant under certain transformations. In the theory of classical equivariant machine learning, models that transform non-trivially under the symmetry group are often discussed mathematically but rarely implemented in code. For our classical model on partially anti-symmetric data, we only implemented the invariant part of the symmetry (the reflection under which the labels are invariant) and ignored the anti-symmetric part. While it may not be impossible to consider such asymmetric cases in classical neural networks, the implementation can be quite involved.
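A simple way to see how symmetric copies of a data point lead to an invariant classical model is orbit averaging. The sketch below is not the ENN architecture used in this paper (which uses an equivariant layer followed by a dense layer); it swaps in plain averaging of a small network over the four copies, assumes the unit square is $[0,1]^2$, and uses hypothetical names (`orbit`, `tiny_net`, `invariant_net`) and random weights.

```python
import numpy as np

def orbit(x):
    """Four Z2 x Z2 copies of x = (x1, x2) on the assumed square [0, 1]^2."""
    x1, x2 = x
    return np.array([[x1, x2],            # identity
                     [x2, x1],            # reflection about x1 = x2
                     [1 - x2, 1 - x1],    # reflection about x1 + x2 = 1
                     [1 - x1, 1 - x2]])   # both reflections combined

def tiny_net(x, w1, b1, w2, b2):
    """A small non-invariant network: one tanh hidden layer, scalar output per row."""
    h = np.tanh(x @ w1 + b1)
    return h @ w2 + b2

def invariant_net(x, params):
    """Average the network over the four copies; the result is invariant."""
    return tiny_net(orbit(x), *params).mean()

rng = np.random.default_rng(2)
params = (rng.normal(size=(2, 4)), rng.normal(size=4),
          rng.normal(size=4), rng.normal())

x = np.array([0.15, 0.6])
outputs = [invariant_net(g, params) for g in orbit(x)]
print(outputs)
```

Because each reflection only permutes the four copies, the averaged output is the same for every point of the orbit. Restricting `orbit` to the copies generated by a single reflection gives a model that is invariant under that reflection only.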

On the other hand, it is straightforward to build quantum equivariant models. For this purpose, we only need to exploit the transformation properties of the observables. If one observable transforms into the other under the transformation of interest (reflection along the diagonal in this case), then a measurement of one observable on the transformed input is equivalent to a measurement of the other observable on the original input.
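The statement about observables that transform into one another can be checked on a toy two-qubit model. The sketch below makes several assumptions for illustration only: the reflection is taken to be the exchange of the two features and is represented by SWAP, the circuit (`rx` embedding, shared `ry` rotations, `rzz` entangler) is a hypothetical equivariant circuit rather than the one in Figure 5, and the observables `O1` and `O2` are Pauli Z on the first and second qubit.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)
Z = np.diag([1.0, -1.0]).astype(complex)
SWAP = np.array([[1, 0, 0, 0],
                 [0, 0, 1, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1]], dtype=complex)

def rx(a):
    c, s = np.cos(a / 2), np.sin(a / 2)
    return np.array([[c, -1j * s], [-1j * s, c]])

def ry(a):
    c, s = np.cos(a / 2), np.sin(a / 2)
    return np.array([[c, -s], [s, c]], dtype=complex)

def rzz(a):
    return np.diag(np.exp(-1j * a / 2 * np.array([1, -1, -1, 1])))

def model_state(x, theta):
    """Embed (x1, x2) on two qubits, then apply a SWAP-equivariant trainable layer."""
    psi0 = np.zeros(4, dtype=complex)
    psi0[0] = 1.0                                   # |00>, invariant under SWAP
    embed = np.kron(rx(x[0]), rx(x[1]))
    train = rzz(theta[1]) @ np.kron(ry(theta[0]), ry(theta[0]))
    return train @ embed @ psi0

def expval(psi, obs):
    return float(np.real(np.vdot(psi, obs @ psi)))

O1 = np.kron(Z, I2)   # Z on the first qubit
O2 = np.kron(I2, Z)   # Z on the second qubit; SWAP maps O1 to O2

x = np.array([0.3, 1.2])
x_reflected = x[::-1]
theta = (0.7, 1.9)

lhs = expval(model_state(x_reflected, theta), O1)  # O1 on the transformed input
rhs = expval(model_state(x, theta), O2)            # O2 on the original input
print(lhs, rhs)
```

Assigning `O1` to one label and `O2` to the other therefore makes the predicted label flip under the reflection without any change to the trainable gates.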

We can consider equivariant quantum models with anti-symmetric transformations from the point of view of representation theory. The fully invariant (symmetric) case can be regarded as the model transforming under the trivial representation of the group, where none of the transformations defined by the group change the output of the model. The asymmetric (either anti-symmetric or fully anti-symmetric) cases that we considered here can be interpreted as the model transforming under some other (one-dimensional) representation of the group, where some transformations change the output of the model to its opposite value, while other transformations do not change the output.
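This interpretation can be summarized with the character table of $\mathbb{Z}_2 \times \mathbb{Z}_2$. The notation is introduced here for illustration: $\sigma_1$ and $\sigma_2$ denote the first and second reflections, the labels are taken to be $\pm 1$ so that a flip is multiplication by $-1$, and a model transforming under the representation $\chi$ satisfies $y(g \cdot x) = \chi(g)\, y(x)$.

```latex
\begin{equation*}
\begin{array}{c|cccc|l}
          & e  & \sigma_1 & \sigma_2 & \sigma_1\sigma_2 & \text{dataset} \\ \hline
\chi_{++} & +1 & +1 & +1 & +1 & \text{symmetric (invariant)} \\
\chi_{+-} & +1 & +1 & -1 & -1 & \text{anti-symmetric} \\
\chi_{--} & +1 & -1 & -1 & +1 & \text{fully anti-symmetric} \\
\chi_{-+} & +1 & -1 & +1 & -1 & \text{equivalent to } \chi_{+-} \text{ after exchanging the diagonals}
\end{array}
\end{equation*}
```

In the fully anti-symmetric row, the entry $+1$ under $\sigma_1\sigma_2$ expresses that the labels are unchanged when both reflections are applied.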

4. Results

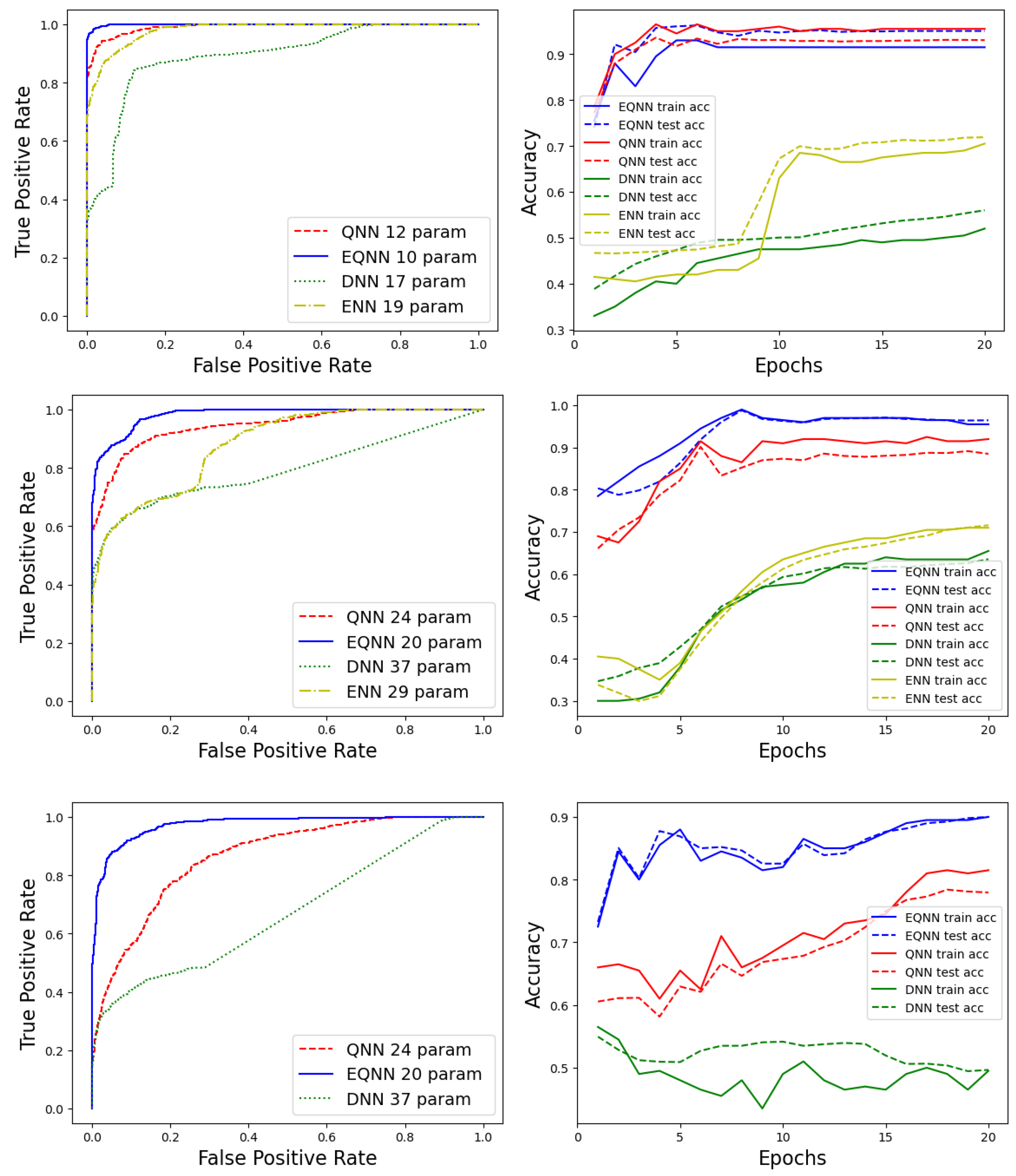

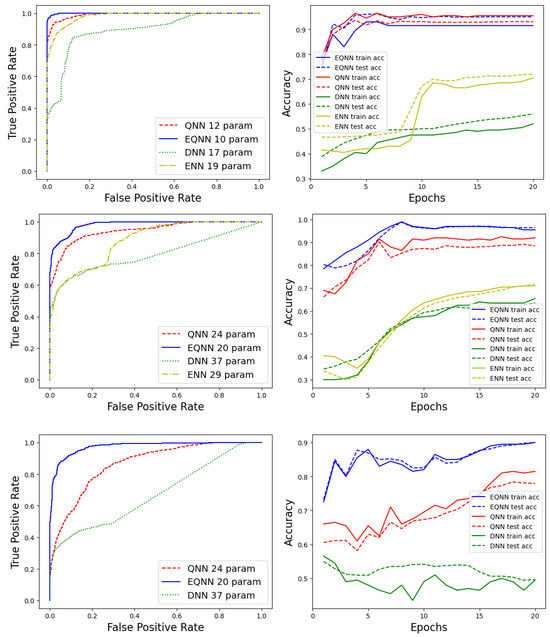

The left panels in Figure 6 show the receiver operating characteristic (ROC) curves for each network, at a fixed number of parameters and training samples, for the symmetric (top), anti-symmetric (middle), and fully anti-symmetric (bottom) datasets. The results for the DNN, ENN, QNN, and EQNN are shown as (green, dotted), (yellow, dot-dashed), (red, dashed), and (blue, solid) curves, respectively. As expected, the networks with an equivariance structure (EQNN and ENN) outperform the corresponding networks without the symmetry (QNN and DNN). We also observe that the quantum networks perform better than their classical analogs. In the legends, the numerical values next to the network acronyms give the number of parameters used for each network. For the symmetric example, the EQNN uses only 10 parameters; thus, for a fair comparison, we constructed the other networks with $\mathcal{O}(10)$ parameters as well. For the anti-symmetric example, we use 20 parameters for the EQNN.

Figure 6.

ROC (left) and accuracy (right) curves for the symmetric (top), anti-symmetric (middle), and fully anti-symmetric (bottom) example.

The evolution of the accuracy during training and testing is shown in the right panels of Figure 6. The accuracy converges faster (after only 5 epochs) for the QNN and EQNN than for their classical counterparts (10–20 epochs). The same color scheme is used, but this time, solid curves represent the training accuracy, while dashed curves show the test accuracy.

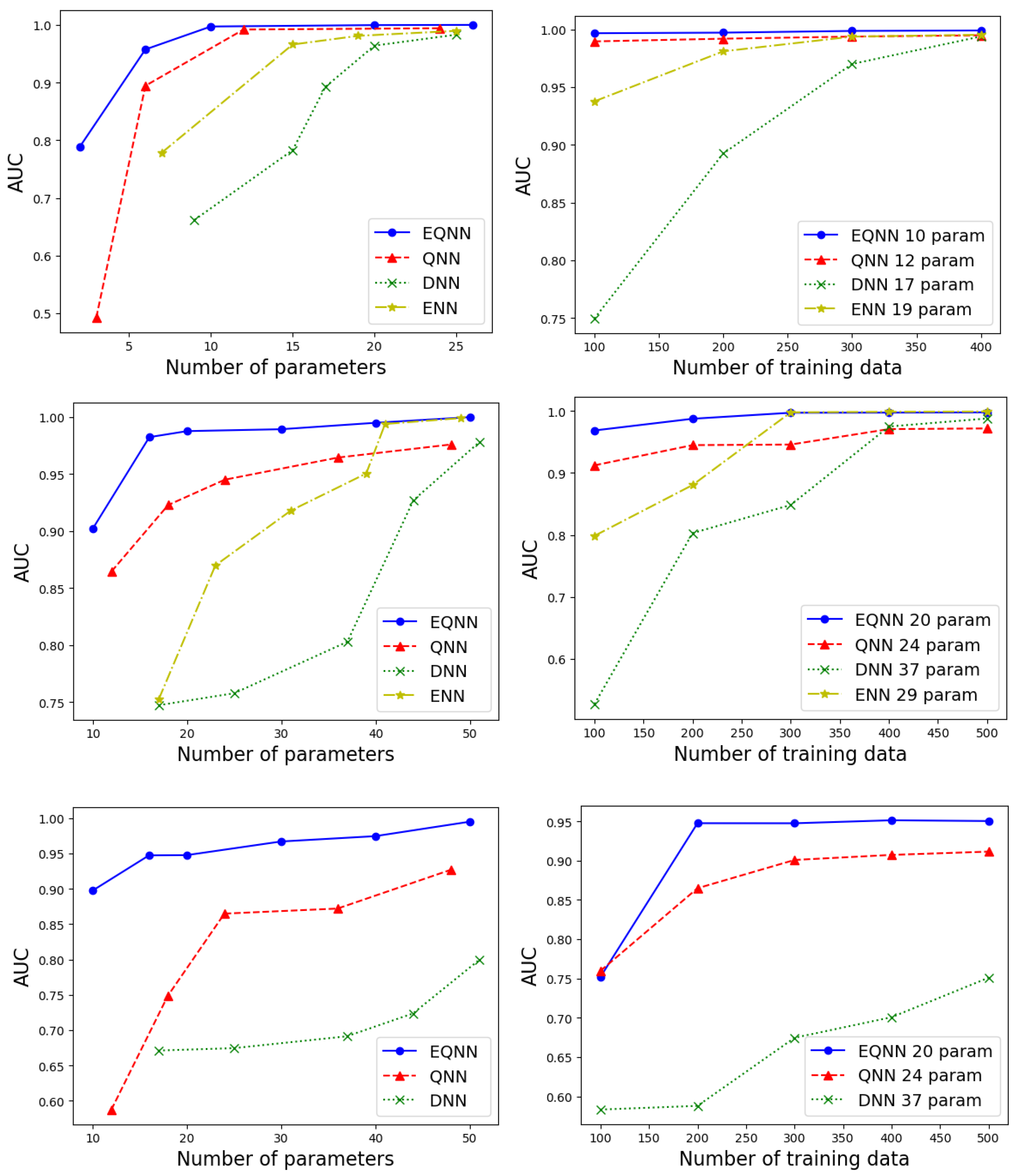

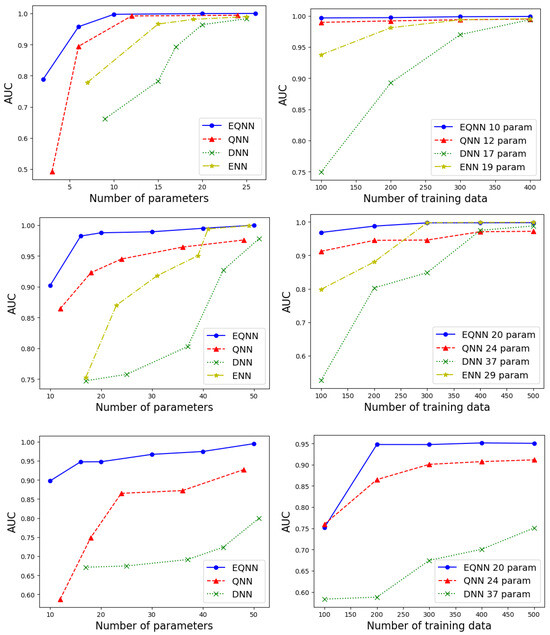

To further quantify the performance of our quantum networks, in Figure 7, we show the AUC (area under the ROC curve) as a function of the number of parameters (left panels) for a fixed size of the training data, and as a function of the number of training samples (right panels) for a fixed number of parameters. The top, middle, and bottom panels show results for the symmetric, anti-symmetric, and fully anti-symmetric datasets, respectively. As the number of parameters increases, the performance of all networks improves. All AUC values become similar once the number of parameters is sufficiently large, with separate thresholds for the symmetric and anti-symmetric cases. As shown in the right panels, the performances of all networks become comparable once the size of the training data reaches ∼400, except in the fully anti-symmetric case. We observe that from the top panels to the bottom panels, the relative improvement from the QNN to the EQNN grows, indicating the importance of implementing the symmetry in the network. A similar relative improvement exists from the DNN to the QNN, emphasizing the importance of quantum algorithms. Note that the ENN curves are missing in the bottom panels of both Figure 6 and Figure 7. This is due to the non-trivial implementation of the anti-symmetric property in classical ENNs.

Figure 7.

AUC as a function of the number of parameters (left) for a fixed number of training samples, and as a function of the number of training samples (right) with a fixed number of parameters as shown in the legend, for the symmetric (top), anti-symmetric (middle), and fully anti-symmetric (bottom) examples.
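For readers who wish to reproduce such curves, the AUC can be computed from the classifier scores without constructing the ROC curve explicitly: it equals the fraction of (positive, negative) pairs that the classifier ranks in the correct order. The function below is a minimal NumPy sketch of this pairwise definition; the name `roc_auc` and the toy inputs are illustrative and are not taken from the code used for this study.

```python
import numpy as np

def roc_auc(labels, scores):
    """Area under the ROC curve from binary labels (0/1) and real-valued scores.

    Counts the fraction of (positive, negative) pairs in which the positive
    sample receives the higher score; ties contribute one half.
    """
    labels = np.asarray(labels)
    scores = np.asarray(scores, dtype=float)
    pos = scores[labels == 1]
    neg = scores[labels == 0]
    diff = pos[:, None] - neg[None, :]
    return (np.sum(diff > 0) + 0.5 * np.sum(diff == 0)) / (pos.size * neg.size)

# Toy example: three of the four positive-negative pairs are ordered correctly.
auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
print(auc)  # 0.75
```

An AUC of 0.5 corresponds to random guessing and 1.0 to perfect separation of the two classes. The pairwise computation scales with the product of the class sizes, which is unproblematic for training sets of a few hundred points.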

Finally, Table 1 shows the accuracy of the DNN for the fully anti-symmetric dataset. The different rows and columns represent different choices of the number of parameters and the number of training samples, respectively. These numbers can be compared against those in the bottom-right panel of Figure 7. The EQNN achieves 0.95 accuracy with 20 parameters and 200 training samples, while the DNN requires more parameters and/or more training samples to reach a comparable accuracy.

Table 1.

Accuracy of DNN for the fully anti-symmetric dataset. The different rows (columns) represent different choices of the number of parameters (the number of training samples).

5. Conclusions

In this paper, we examined the performance of Equivariant Quantum Neural Networks and Quantum Neural Networks, compared against their classical counterparts, Equivariant Neural Networks and Deep Neural Networks, considering three toy examples for a binary classification task. Our study demonstrates that EQNNs and QNNs outperform their classical counterparts, particularly in scenarios with fewer parameters and smaller training datasets. This highlights the potential of quantum-inspired architectures in resource-constrained settings. This point was emphasized recently in a similar study, Ref. [35], which showed that an EQNN outperforms its non-equivariant counterpart in terms of generalization power, especially with a small training set. We note a more significant enhancement in the performance of the EQNN and QNN compared to the ENN and DNN, which is more evident in the anti-symmetric example than in the symmetric one. This underscores the robustness of quantum algorithms. The code used for this study is publicly available at https://github.com/ZhongtianD/EQNN/tree/main (accessed on 7 March 2024).

While our current study has primarily focused on an EQNN with discrete symmetries, it is crucial to acknowledge the significant role that continuous symmetries, such as Lorentz symmetry or gauge symmetries, play in particle physics. In our future research, we aim to compare an EQNN with continuous symmetries against classical neural networks. Exploring more complex datasets with high-dimensional features is another direction we plan to pursue. However, handling such examples would necessitate an increase in the number of network parameters, prompting an investigation into related issues like overparameterization, barren plateaus, and others.

Author Contributions

Conceptualization, Z.D.; methodology, M.C.C., G.R.D., Z.D., R.T.F., S.G., D.J., K.K., T.M., K.T.M., K.M. and E.B.U.; software, Z.D.; validation, M.C.C., G.R.D., Z.D., R.T.F., T.M. and E.B.U.; formal analysis, Z.D.; investigation, M.C.C., G.R.D., Z.D., R.T.F., T.M. and E.B.U.; resources, Z.D., K.T.M. and K.M.; data curation, G.R.D., S.G. and T.M.; writing—original draft preparation, Z.D.; writing—review and editing, S.G., D.J., K.K., K.T.M. and K.M.; visualization, Z.D.; supervision, S.G., D.J., K.K., K.T.M. and K.M.; project administration, S.G., D.J., K.K., K.T.M. and K.M.; funding acquisition, S.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research used resources of the National Energy Research Scientific Computing Center, a DOE Office of Science User Facility supported by the Office of Science of the U.S. Department of Energy under Contract No. DE-AC02-05CH11231 using NERSC award NERSC DDR-ERCAP0025759. SG is supported in part by the U.S. Department of Energy (DOE) under Award No. DE-SC0012447. KM is supported in part by the U.S. Department of Energy award number DE-SC0022148. KK is supported in part by the US DOE DE-SC0024407. CD is supported in part by the College of Liberal Arts and Sciences Research Fund at the University of Kansas. CD, RF, EU, MCC, and TM were participants in the 2023 Google Summer of Code.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| API | Application Programming Interface |
| AUC | Area Under the Curve |
| DNN | Deep Neural Network |
| ENN | Equivariant Neural Network |
| EQNN | Equivariant Quantum Neural Network |
| HEP | High-Energy Physics |
| LHC | Large Hadron Collider |
| MDPI | Multidisciplinary Digital Publishing Institute |
| ML | Machine Learning |
| NN | Neural Network |
| QML | Quantum Machine Learning |
| QNN | Quantum Neural Network |
| ROC | Receiver Operating Characteristic |

References

1. Shanahan, P.; Terao, K.; Whiteson, D. Snowmass 2021 Computational Frontier CompF03 Topical Group Report: Machine Learning. arXiv 2022, arXiv:2209.07559.

2. Feickert, M.; Nachman, B. A Living Review of Machine Learning for Particle Physics. arXiv 2021, arXiv:2102.02770.

3. Cohen, T.; Welling, M. Group Equivariant Convolutional Networks. In Proceedings of the 33rd International Conference on Machine Learning, New York, NY, USA, 20–22 June 2016; Balcan, M.F., Weinberger, K.Q., Eds.; Volume 48, pp. 2990–2999.

4. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; Pereira, F., Burges, C., Bottou, L., Weinberger, K., Eds.; Curran Associates, Inc.: Sydney, Australia, 2012; Volume 25.

5. Jumper, J.M.; Evans, R.; Pritzel, A.; Green, T.; Figurnov, M.; Ronneberger, O.; Tunyasuvunakool, K.; Bates, R.; Zídek, A.; Potapenko, A.; et al. Highly accurate protein structure prediction with AlphaFold. Nature 2021, 596, 583–589.

6. Bogatskiy, A.; Anderson, B.; Offermann, J.; Roussi, M.; Miller, D.; Kondor, R. Lorentz Group Equivariant Neural Network for Particle Physics. In Proceedings of the 37th International Conference on Machine Learning, Virtual, 13–18 July 2020; Volume 119, pp. 992–1002.

7. Cohen, T.S.; Weiler, M.; Kicanaoglu, B.; Welling, M. Gauge Equivariant Convolutional Networks and the Icosahedral CNN. arXiv 2019, arXiv:1902.04615.

8. Boyda, D.; Kanwar, G.; Racanière, S.; Rezende, D.J.; Albergo, M.S.; Cranmer, K.; Hackett, D.C.; Shanahan, P.E. Sampling using SU(N) gauge equivariant flows. Phys. Rev. D 2021, 103, 074504.

9. Favoni, M.; Ipp, A.; Müller, D.I.; Schuh, D. Lattice Gauge Equivariant Convolutional Neural Networks. Phys. Rev. Lett. 2022, 128, 032003.

10. Dolan, M.J.; Ore, A. Equivariant Energy Flow Networks for Jet Tagging. Phys. Rev. D 2021, 103, 074022.

11. Bulusu, S.; Favoni, M.; Ipp, A.; Müller, D.I.; Schuh, D. Generalization capabilities of translationally equivariant neural networks. Phys. Rev. D 2021, 104, 074504.

12. Preskill, J. Quantum Computing in the NISQ era and beyond. Quantum 2018, 2, 79.

13. Feynman, R.P. Simulating physics with computers. Int. J. Theor. Phys. 1982, 21, 467–488.

14. Georgescu, I.M.; Ashhab, S.; Nori, F. Quantum Simulation. Rev. Mod. Phys. 2014, 86, 153.

15. Ramírez-Uribe, S.; Rentería-Olivo, A.E.; Rodrigo, G.; Sborlini, G.F.R.; Vale Silva, L. Quantum algorithm for Feynman loop integrals. JHEP 2022, 5, 100.

16. Bepari, K.; Malik, S.; Spannowsky, M.; Williams, S. Quantum walk approach to simulating parton showers. Phys. Rev. D 2022, 106, 056002.

17. Li, T.; Guo, X.; Lai, W.K.; Liu, X.; Wang, E.; Xing, H.; Zhang, D.B.; Zhu, S.L. Partonic collinear structure by quantum computing. Phys. Rev. D 2022, 105, L111502.

18. Bepari, K.; Malik, S.; Spannowsky, M.; Williams, S. Towards a quantum computing algorithm for helicity amplitudes and parton showers. Phys. Rev. D 2021, 103, 076020.

19. Jordan, S.P.; Lee, K.S.M.; Preskill, J. Quantum Algorithms for Fermionic Quantum Field Theories. arXiv 2014, arXiv:1404.7115.

20. Preskill, J. Simulating quantum field theory with a quantum computer. PoS 2018, LATTICE2018, 024.

21. Bauer, C.W.; de Jong, W.A.; Nachman, B.; Provasoli, D. Quantum Algorithm for High Energy Physics Simulations. Phys. Rev. Lett. 2021, 126, 062001.

22. Abel, S.; Chancellor, N.; Spannowsky, M. Quantum computing for quantum tunneling. Phys. Rev. D 2021, 103, 016008.

23. Abel, S.; Spannowsky, M. Quantum-Field-Theoretic Simulation Platform for Observing the Fate of the False Vacuum. PRX Quantum 2021, 2, 010349.

24. Davoudi, Z.; Linke, N.M.; Pagano, G. Toward simulating quantum field theories with controlled phonon-ion dynamics: A hybrid analog-digital approach. Phys. Rev. Res. 2021, 3, 043072.

25. Mott, A.; Job, J.; Vlimant, J.R.; Lidar, D.; Spiropulu, M. Solving a Higgs optimization problem with quantum annealing for machine learning. Nature 2017, 550, 375–379.

26. Blance, A.; Spannowsky, M. Unsupervised event classification with graphs on classical and photonic quantum computers. JHEP 2020, 21, 170.

27. Wu, S.L.; Chan, J.; Guan, W.; Sun, S.; Wang, A.; Zhou, C.; Livny, M.; Carminati, F.; Di Meglio, A.; Li, A.C.; et al. Application of quantum machine learning using the quantum variational classifier method to high energy physics analysis at the LHC on IBM quantum computer simulator and hardware with 10 qubits. J. Phys. G 2021, 48, 125003.

28. Blance, A.; Spannowsky, M. Quantum Machine Learning for Particle Physics using a Variational Quantum Classifier. JHEP 2021, 2, 212.

29. Abel, S.; Blance, A.; Spannowsky, M. Quantum optimization of complex systems with a quantum annealer. Phys. Rev. A 2022, 106, 042607.

30. Wu, S.L.; Sun, S.; Guan, W.; Zhou, C.; Chan, J.; Cheng, C.L.; Pham, T.; Qian, Y.; Wang, A.Z.; Zhang, R.; et al. Application of quantum machine learning using the quantum kernel algorithm on high energy physics analysis at the LHC. Phys. Rev. Res. 2021, 3, 033221.

31. Chen, S.Y.C.; Wei, T.C.; Zhang, C.; Yu, H.; Yoo, S. Hybrid Quantum-Classical Graph Convolutional Network. arXiv 2021, arXiv:2101.06189.

32. Terashi, K.; Kaneda, M.; Kishimoto, T.; Saito, M.; Sawada, R.; Tanaka, J. Event Classification with Quantum Machine Learning in High-Energy Physics. Comput. Softw. Big Sci. 2021, 5, 2.

33. Araz, J.Y.; Spannowsky, M. Classical versus quantum: Comparing tensor-network-based quantum circuits on Large Hadron Collider data. Phys. Rev. A 2022, 106, 062423.

34. Ngairangbam, V.S.; Spannowsky, M.; Takeuchi, M. Anomaly detection in high-energy physics using a quantum autoencoder. Phys. Rev. D 2022, 105, 095004.

35. Chang, S.Y.; Grossi, M.; Saux, B.L.; Vallecorsa, S. Approximately Equivariant Quantum Neural Network for p4m Group Symmetries in Images. In Proceedings of the 2023 International Conference on Quantum Computing and Engineering, Bellevue, WA, USA, 17–22 September 2023.

36. Nguyen, Q.T.; Schatzki, L.; Braccia, P.; Ragone, M.; Coles, P.J.; Sauvage, F.; Larocca, M.; Cerezo, M. Theory for Equivariant Quantum Neural Networks. arXiv 2022, arXiv:2210.08566.

37. Meyer, J.J.; Mularski, M.; Gil-Fuster, E.; Mele, A.A.; Arzani, F.; Wilms, A.; Eisert, J. Exploiting Symmetry in Variational Quantum Machine Learning. PRX Quantum 2023, 4, 010328.

38. West, M.T.; Sevior, M.; Usman, M. Reflection equivariant quantum neural networks for enhanced image classification. Mach. Learn. Sci. Technol. 2023, 4, 035027.

39. Skolik, A.; Cattelan, M.; Yarkoni, S.; Bäck, T.; Dunjko, V. Equivariant quantum circuits for learning on weighted graphs. npj Quantum Inf. 2023, 9, 47.

40. Kim, I.W. Algebraic Singularity Method for Mass Measurement with Missing Energy. Phys. Rev. Lett. 2010, 104, 081601.

41. Franceschini, R.; Kim, D.; Kong, K.; Matchev, K.T.; Park, M.; Shyamsundar, P. Kinematic Variables and Feature Engineering for Particle Phenomenology. Rev. Mod. Phys. 2022, 95, 045004.

42. Kersting, N. On Measuring Split-SUSY Gaugino Masses at the LHC. Eur. Phys. J. C 2009, 63, 23–32.

43. Bisset, M.; Lu, R.; Kersting, N. Improving SUSY Spectrum Determinations at the LHC with Wedgebox Technique. JHEP 2011, 5, 095.

44. Burns, M.; Matchev, K.T.; Park, M. Using kinematic boundary lines for particle mass measurements and disambiguation in SUSY-like events with missing energy. JHEP 2009, 5, 094.

45. Debnath, D.; Gainer, J.S.; Kim, D.; Matchev, K.T. Edge Detecting New Physics the Voronoi Way. EPL 2016, 114, 41001.

46. Debnath, D.; Gainer, J.S.; Kilic, C.; Kim, D.; Matchev, K.T.; Yang, Y.P. Detecting kinematic boundary surfaces in phase space: Particle mass measurements in SUSY-like events. JHEP 2017, 6, 092.

47. Bogatskiy, A.; Hoffman, T.; Miller, D.W.; Offermann, J.T. PELICAN: Permutation Equivariant and Lorentz Invariant or Covariant Aggregator Network for Particle Physics. arXiv 2022, arXiv:2211.00454.

48. Hao, Z.; Kansal, R.; Duarte, J.; Chernyavskaya, N. Lorentz group equivariant autoencoders. Eur. Phys. J. C 2023, 83, 485.

49. Buhmann, E.; Kasieczka, G.; Thaler, J. EPiC-GAN: Equivariant point cloud generation for particle jets. SciPost Phys. 2023, 15, 130.

50. Batatia, I.; Geiger, M.; Munoz, J.; Smidt, T.; Silberman, L.; Ortner, C. A General Framework for Equivariant Neural Networks on Reductive Lie Groups. arXiv 2023, arXiv:2306.00091.

51. Pérez-Salinas, A.; Cervera-Lierta, A.; Gil-Fuster, E.; Latorre, J.I. Data re-uploading for a universal quantum classifier. Quantum 2020, 4, 226.

52. Ahmed, S. Data-Reuploading Classifier. Available online: https://pennylane.ai/qml/demos/tutorial_data_reuploading_classifier (accessed on 7 March 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).