Abstract

This paper introduces a novel parallel method for solving common variational inclusion and common fixed-point (CVI-CFP) problems. We establish a strong convergence theorem for the proposed algorithm under suitable conditions on the CVI-CFP problem. Numerical simulations on signal recovery problems involving various types of blurred filters demonstrate the algorithm’s efficacy and indicate its potential for practical applications in image processing and other fields.

Keywords: process innovation; common fixed-point problem; common variational inclusion problem; demicontractive mapping; parallel method; strong convergence

MSC: 65Y05; 68W10; 47H05; 47J25; 49M37

1. Introduction

Throughout this paper, let , and be the set of all positive integers, the set of real numbers and the set of positive real numbers, respectively. We also denote Let be a Hilbert space with the induced norm For , we denote . The following classes of mappings are often used in this article:

The problem to find , such that

for all and , is known as the common variational inclusion problem, also known as CVIP. In particular, when the CVIP (1) is simply the variational inclusion problem, and in this case, its solution set is denoted by Here, the set of all solutions of (1) is given by . In other words,

Problems of this type arise in real-world applications, including signal recovery and image recovery, which require finding solutions that satisfy a number of constraints formulated using nonlinear functional models [1,2]. These problems can be treated effectively by combining them with fixed-point theory. For a map , the set of all fixed points of Z is the set Let be a collection of mappings for all We define the set of all common fixed points of all as

Recently, the problem to find , such that

was introduced. It is called a common variational inclusion and common fixed-point problem (CVI-CFP problem), see [3]. As CVI-CFP problems encompass both (common) variational inclusion and (common) fixed-point problems as special cases, this allows for a more comprehensive approach to solving a wider range of problems.
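For orientation, the two problems can be written compactly in commonly used operator notation. The symbols below are assumptions made for illustration rather than the paper's own displays: $X_i$ stands for the single-valued operators, $Y_i$ for the set-valued operators, $Z_i$ for the fixed-point mappings, $N$ for the number of subproblems, and $\operatorname{Fix}(Z) = \{u \in H : Zu = u\}$.

```latex
\begin{align*}
\text{CVIP:} \quad & \text{find } u^{*} \in H \text{ such that } 0 \in X_i u^{*} + Y_i u^{*} \quad \text{for all } i = 1, \dots, N, \\
\text{CVI-CFP:} \quad & \text{find } u^{*} \in \Big( \bigcap_{i=1}^{N} (X_i + Y_i)^{-1}(0) \Big) \cap \Big( \bigcap_{i=1}^{N} \operatorname{Fix}(Z_i) \Big).
\end{align*}
```

Taking $N = 1$ and $Z_1 = I$ in this sketch reduces the CVI-CFP problem to a single variational inclusion, which illustrates how the special cases mentioned above arise.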

Over the past decades, various algorithms have been developed to solve such problems. These include the forward–backward splitting method [4,5] and the inertial proximal algorithm [6]. Tseng then proposed the forward–backward–forward method, also known as Tseng’s method, in [7]. For recent advancements, refer to [8,9,10,11,12,13]. In [1], a parallel algorithm was developed to find a common fixed point of G-nonexpansive maps in H and to solve signal recovery problems when the noise type is unknown. In [3], a parallel algorithm based on the inertial Mann iteration was presented, and its weak convergence to a solution of (3) in H was established. For other results related to problem (3), see [14,15,16,17,18,19]. Moreover, Antón-Sancho [20] investigated fixed points of automorphisms in the moduli space of vector bundles over compact Riemann surfaces, highlighting their effects on the space’s structure and symmetries.
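As a reminder of the two basic schemes named above, their textbook forms for a single inclusion $0 \in Xu + Yu$ can be sketched as follows. The notation is assumed for illustration: $X$ is single-valued, monotone, and $L$-Lipschitz, $Y$ is maximally monotone, and $\lambda > 0$ is a step size.

```latex
\begin{align*}
\text{Forward--backward:} \quad & u_{n+1} = (I + \lambda Y)^{-1}(u_n - \lambda X u_n), \\
\text{Tseng (forward--backward--forward):} \quad & v_n = (I + \lambda Y)^{-1}(u_n - \lambda X u_n), \\
& u_{n+1} = v_n - \lambda (X v_n - X u_n), \qquad \lambda \in (0, 1/L).
\end{align*}
```

The extra forward step in Tseng's scheme is what allows $X$ to be merely monotone and Lipschitz, whereas the classical forward–backward analysis typically requires a stronger (cocoercivity) assumption on $X$.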

The aforementioned problems have been recognized as valuable tools in mathematical models, including variational inequality problems and split feasibility problems. These models are applied in many areas, such as signal and image processing, economics, engineering, and especially machine learning (see [21,22,23,24,25], for example). In particular, a generalized time iteration (GTI) method, introduced in [26], effectively solves dynamic optimization problems with occasionally binding constraints, significantly advancing macroeconomic, financial, and labor economics modeling (see also the references cited therein). In recent years, environmental concerns, particularly PM2.5 pollution, have become pressing issues. In [27], the authors employed machine learning algorithms to estimate ground-level PM2.5 concentrations using micro-satellite image processing. Machine learning has emerged as a powerful tool for PM2.5 prediction due to its ability to analyze vast datasets and utilize complex algorithms (see [28,29,30]). It can effectively address the PM2.5 problem and thereby help to improve air quality management.

2. Preliminaries

In this section, we introduce essential notations, definitions, and preliminary results that will be utilized in the subsequent sections. Here, ⇀ and → denote weak convergence and strong convergence, respectively.

Proposition 1.

For ,

Proposition 2.

Let , and let , such that . Then, for any ,

Lemma 1

([31]). Let and be sequences in , such that , and let be a sequence in , such that . Suppose that for any , where is a sequence in with Then, the sequence converges to zero.

Lemma 2

([32]). Let be a sequence in , such that there exists a subsequence with for all . Then, the following hold.

- (i)

- The sequence given byis nondecreasing and

- (ii)

- For all , and

Definition 1.

A mapping is called L-Lipschitz (or Lipschitz when there is no ambiguity) if exists satisfying

It is called L-contractive when and nonexpansive when

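Let C be a nonempty, closed, and convex subset of H. For $u \in H$, define the metric projection $P_C u$ of $u$ onto C by $P_C u = \arg\min_{v \in C} \|u - v\|$. The inequality

$$\langle u - P_C u, \, v - P_C u \rangle \le 0$$

is known to characterize the metric projection $P_C u$ for any $u \in H$ and $v \in C$.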
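The projection characterization can be checked numerically. The sketch below assumes the standard form of the inequality, $\langle u - P_C u, v - P_C u \rangle \le 0$ for all $v \in C$, and takes C to be the closed unit ball of $\mathbb{R}^3$; the helper names `project_ball` and `projection_gap` are hypothetical and chosen only for this illustration.

```python
import math
import random


def project_ball(u, radius=1.0):
    """Metric projection of u onto the closed Euclidean ball of given radius centered at 0."""
    norm = math.sqrt(sum(x * x for x in u))
    if norm <= radius:
        return list(u)
    return [radius * x / norm for x in u]


def inner(a, b):
    """Euclidean inner product of two vectors given as lists."""
    return sum(x * y for x, y in zip(a, b))


def projection_gap(u, v, radius=1.0):
    """Return <u - P_C u, v - P_C u>; this should be <= 0 whenever v lies in C."""
    p = project_ball(u, radius)
    return inner([ui - pi for ui, pi in zip(u, p)],
                 [vi - pi for vi, pi in zip(v, p)])


rng = random.Random(0)
worst = -math.inf
for _ in range(1000):
    u = [rng.uniform(-3.0, 3.0) for _ in range(3)]
    # Projecting a random point yields an arbitrary element of C.
    v = project_ball([rng.uniform(-3.0, 3.0) for _ in range(3)])
    worst = max(worst, projection_gap(u, v))

# Largest observed value of the inner product (nonpositive up to rounding).
print(worst <= 1e-12)
```

For a point already in C the projection is the point itself, so the inner product vanishes; for a point outside the ball the inequality reduces to the Cauchy–Schwarz inequality.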

Next, we recall some background and notation from fixed-point theory in the context of our setting.

Definition 2.

Let be a map with Then,

- (i)

- [33] Z is μ-demicontractive if there exists , such that for all and for all ,

- (ii)

- [34] Z is demiclosed at zero if for any ,

Definition 3

([35]). Let be a map. Then, Y is called monotone if for any pair in the graph of Y (denoted by Moreover, it is called maximal if its graph is not a proper subgraph of the graph of another monotone map.

Equivalently, Y is maximally monotone if and only if for each , whenever for all
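In symbols, the two notions of Definition 3 are usually stated as follows. This is the standard textbook formulation, with $\operatorname{gra}(Y) = \{(u, x) \in H \times H : x \in Yu\}$ as an assumed notation for the graph of a set-valued map $Y$.

```latex
\begin{align*}
& Y \text{ is monotone} \iff \langle u - v, \, x - y \rangle \ge 0 \quad \text{for all } (u, x), \, (v, y) \in \operatorname{gra}(Y); \\
& \text{a monotone } Y \text{ is maximally monotone} \iff \text{for each } (u, x) \in H \times H: \\
& \qquad \Big[ \langle u - v, \, x - y \rangle \ge 0 \ \text{ for all } (v, y) \in \operatorname{gra}(Y) \Big] \ \Longrightarrow \ x \in Yu.
\end{align*}
```

A familiar example is the subdifferential of a proper, lower semicontinuous, convex function, which is maximally monotone; this is the case used later for the $\ell_1$ regularizer in signal recovery.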

Lemma 3

([36]). Let be a mapping, and let be a maximally monotone mapping. If and , then .

Lemma 4

([37]). Let be a Lipschitz and monotone mapping, and let be a maximally monotone mapping. Then, is maximally monotone.

Lemma 5

([38]). Suppose that is a μ-demicontractive mapping with . Let where . Then

- (i)

- for all and all ;

- (ii)

- is closed and convex.
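A small numerical illustration may help with the relaxation in Lemma 5. It assumes the standard definition of a μ-demicontractive map, $\|Zu - p\|^2 \le \|u - p\|^2 + \mu \|u - Zu\|^2$ for $p \in \operatorname{Fix}(Z)$ with $\mu \in [0, 1)$, and the usual estimate $\|Z_\lambda u - p\|^2 \le \|u - p\|^2 - \lambda (1 - \mu - \lambda) \|u - Zu\|^2$ for $Z_\lambda = (1 - \lambda) I + \lambda Z$ and $\lambda \in (0, 1 - \mu)$; whether this matches the exact statement of part (i) is an assumption. The example map $Z(u) = -2u$ on the real line is hypothetical: it is not nonexpansive, but it is (1/3)-demicontractive with the single fixed point 0.

```python
import random

MU = 1.0 / 3.0  # Z(u) = -2u satisfies the demicontractive inequality with mu = 1/3


def Z(u):
    """Example map on the real line with Fix(Z) = {0}; expansive, yet demicontractive."""
    return -2.0 * u


def relaxed(u, lam):
    """Relaxed map Z_lam = (1 - lam) * I + lam * Z."""
    return (1.0 - lam) * u + lam * Z(u)


def demicontractive_gap(u, p=0.0, mu=MU):
    """|Zu - p|^2 - (|u - p|^2 + mu |u - Zu|^2); nonpositive for a mu-demicontractive map."""
    return (Z(u) - p) ** 2 - ((u - p) ** 2 + mu * (u - Z(u)) ** 2)


def relaxation_gap(u, lam, p=0.0, mu=MU):
    """|Z_lam u - p|^2 - (|u - p|^2 - lam (1 - mu - lam) |u - Zu|^2); nonpositive expected."""
    bound = (u - p) ** 2 - lam * (1.0 - mu - lam) * (u - Z(u)) ** 2
    return (relaxed(u, lam) - p) ** 2 - bound


rng = random.Random(1)
samples = [(rng.uniform(-5.0, 5.0), rng.uniform(0.01, 1.0 - MU - 0.01))
           for _ in range(1000)]
print(all(demicontractive_gap(u) <= 1e-9 for u, _ in samples))
print(all(relaxation_gap(u, lam) <= 1e-9 for u, lam in samples))

# Iterating Z itself diverges, while the relaxed map drives the iterate to the fixed point.
u = 1.0
for _ in range(50):
    u = relaxed(u, 0.5)
print(abs(u) < 1e-12)
```

For this particular map both inequalities hold with equality, so the tolerance only absorbs rounding error. The final loop shows why the relaxation matters: with $\lambda = 0.5$ the relaxed map is $u \mapsto -0.5u$, a contraction, even though $Z$ doubles the distance to its fixed point.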

3. Main Result

We first list all conditions required for our proposed algorithm below.

- (C1)

- Each is -Lipschitz and monotone, and each is maximally monotone.

- (C2)

- is a sequence in such that and .

- (C3)

- Each is -demicontractive, and the map is differentiable.

- (C4)

- is nonempty.

- (C5)

- is a sequence in , and for some .

- (C6)

- is demiclosed at zero and for all .

- (C7)

- for some , , and is -contractive.

We now proceed to present our algorithm, namely Algorithm 1.

Lemma 6.

Assume that conditions (C1) and (C2) are satisfied. Then, Algorithm 1 generates a sequence converging to within the range where .

Proof.

We first note that since is -Lipschitz for all Then,

Following the method outlined in the proof of Lemma 3.1 in [39], we can conclude that

□

Remark 1.

By the proof of Lemma 6, the mapping must satisfy the Lipschitz condition, which is a necessary condition for establishing the strong convergence of Algorithm 1, as demonstrated in Theorem 1.

Lemma 7.

Assume that conditions (C1) and (C2) are satisfied. If for all in Algorithm 1, then

Proof.

The Lipschitz condition of and implies that for all ,

This means that for all . Hence, for all and so . From , we obtain that, for all ,

By Lemma 3, . Therefore, . □

Algorithm 1. Modified parallel algorithm.

Lemma 8.

Assume that conditions (C1)–(C5) are satisfied. If , then for each ,

and

where .

Proof.

Let From the definitions of and , we obtain that

By (4) and (12) with the conditions of and ,

By the property of , we have that

This implies that there is such that

Since is maximally monotone,

Consequently,

Then, by (13),

From Lemma 5 , we have that for all ,

Finally, by the triangle inequality and (12), we obtain the inequality (11). □

Lemma 9.

Assume that conditions (C1)–(C5) are satisfied. If there exists a subsequence of , such that and for all , then .

Proof.

As and , we have that From (11) and ,

Then, for all . It follows from and (C6) that .

Next, we show that . Let for all . Thus for all . By the definition of ,

for all . By the condition of , for all ,

Thus, for each ,

By the Lipschitz continuity of , and By Lemma 6, we have that for all By the maximal monotonicity of , we obtain that for all which means that and thus . □

Lemma 10.

If is maximally monotone and is Lipschitz and monotone, then the set is closed and convex.

Proof.

Firstly, let be a sequence in converging to v. Then, . By Lemma 4, we obtain that the mapping is maximally monotone, and so

for all . It follows that

for all . Similarly, by the maximal monotonicity of , which means that . Therefore, is closed. Finally, let and . Then, . We subsequently have that

for all . Thus

for all . It follows that . Therefore, the set is convex. □

Now, we are ready to present our strong convergence result.

Theorem 1.

Assume that conditions (C1)–(C7) are satisfied. Then, the sequence , which is generated by Algorithm 1, strongly converges to .

Proof.

Let . As there exists , such that for all and all , where . By (10), (C5), the definition of and , we have that, for all and all ,

for some . Thus,

for all .

Next, set , and for all . Then

It follows from (14) that

for all . By the -contractivity of and (14), we have the following calculation:

for all . Thus, for all . Consequently, the sequences and are bounded. Lemmas 5 and 10 then imply that there is a unique , such that . It follows from (9) that for any ,

Now, for each , set

Applying (14), we obtain that for all ,

for some . Let . By using the same technique as (16), we have that, for all ,

From the property of , (5), (6), (15), and (19), we have the following:

Then, from (7) and (18), we obtain that for all ,

This implies that, for all ,

for some .

Next, we shall consider the two possible cases.

Case I. There exists , such that for all , holds. By the boundedness of , the sequence is convergent. By (C7) and (21),

Then, we have that

Also, by the triangle inequality with (22) and (23),

From the property of

for all . By the inequality (10), we have, for all ,

for some . Combining (24) with (C5) and , we obtain that for all ,

From the definition of , and (22), we have

By the boundedness of , there is , such that as for some subsequence of . Then, by (23), as . Lemma 9 together with (25) implies that . From (17), we immediately have that

Combining this with (26), we obtain that

From (20) and Lemma 1, we finally have that .

Case II. There exists a subsequence of , such that for all . It follows from Lemma 2 that for all for some . Employing similar arguments as in Case I, it follows that

and

Finally, from and (20), for all , we obtain that

Consequently,

It follows that and so . By Lemma 2,

Therefore, as . □

4. Signal Restoration from Blurred Observations in the Context of Image Processing

In this section, we assess the computational efficiency of the proposed algorithm. All computational analyses were conducted utilizing MATLAB R2021a, executed on an iMac computer equipped with an Apple M1 processor and 16 gigabytes of random access memory (RAM). Let be the signal space equipped with the Euclidean norm . We denote the original signal as and the observed signal corrupted by noise as . Additionally, we define () as the filter matrix for each . The mathematical formulation of the problem is as follows:

for all . Next, we consider the following problem:

for all . According to Proposition 3.1 (iii) of [40], this problem can be regarded as the problem (3) with the following parameter settings: , and , where , for all . It is known that is -Lipschitz and monotone and is maximally monotone. Since is nonexpansive for , it is then 0-demicontractive.
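To make the operator setting concrete, the following is a minimal sketch of the classical Tseng forward–backward–forward iteration for a single pair of operators. It is not Algorithm 1 (there is no inertial term, no parallel step, and no fixed-point mapping). It assumes the forward operator is the gradient $M^{\top}(Mx - b)$ of $\tfrac{1}{2}\|Mx - b\|_2^2$ and the backward operator is the subdifferential of $\tau \|x\|_1$, whose resolvent is soft thresholding. The names `M`, `b`, `tau`, and `tseng_lasso` are hypothetical.

```python
def matvec(M, x):
    """Compute M x for a matrix stored as a list of rows."""
    return [sum(m * xj for m, xj in zip(row, x)) for row in M]


def rmatvec(M, y):
    """Compute M^T y."""
    return [sum(M[i][j] * y[i] for i in range(len(M))) for j in range(len(M[0]))]


def soft_threshold(x, t):
    """Resolvent of the subdifferential of t * ||.||_1 (componentwise shrinkage)."""
    return [max(abs(xi) - t, 0.0) * (1.0 if xi > 0 else -1.0) for xi in x]


def grad(M, b, x):
    """Forward operator: gradient of 0.5 * ||M x - b||^2, i.e. M^T (M x - b)."""
    residual = [ri - bi for ri, bi in zip(matvec(M, x), b)]
    return rmatvec(M, residual)


def tseng_lasso(M, b, tau, iters=500):
    """Tseng's forward-backward-forward iteration with a fixed step size."""
    n = len(M[0])
    # The squared Frobenius norm bounds the Lipschitz constant ||M||^2 from above,
    # so this step size stays inside the admissible interval (0, 1/L).
    frob_sq = sum(m * m for row in M for m in row)
    lam = 0.9 / frob_sq
    u = [0.0] * n
    for _ in range(iters):
        gu = grad(M, b, u)
        # Forward-backward step.
        v = soft_threshold([ui - lam * gi for ui, gi in zip(u, gu)], lam * tau)
        # Correcting forward step.
        gv = grad(M, b, v)
        u = [vi - lam * (gvi - gui) for vi, gvi, gui in zip(v, gv, gu)]
    return u


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
observed = [3.0, -0.5, 1.5]
print(tseng_lasso(identity, observed, tau=1.0))
```

With the identity matrix the minimizer is known in closed form (the soft-thresholded observation), so the printed iterate should approach `[2, 0, 0.5]`; this gives a quick sanity check before moving to Gaussian matrices.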

To evaluate the performance of the proposed algorithm, we conduct a numerical comparison of Algorithm 1 with Algorithm 2.2 from [1] and Algorithm 3 from [3]. The experimental setup is detailed as follows.

- -

- The signal size is set to and .

- -

- The original signal x is generated from a uniform distribution in with m nonzero elements.

- -

- The Gaussian matrix is generated using the command .

- -

- The observation is generated by adding white Gaussian noise with a signal-to-noise ratio (SNR) of 40.

- -

- The initial points are random vectors, and for all .
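The data-generation steps listed above can be sketched as follows. The sizes, the interval $[-2, 2]$ for the nonzero entries, and the reading of the SNR value as 40 dB are assumptions made for this illustration, since the setup does not fix them here; the function names are hypothetical, and a single filter matrix is generated rather than a family.

```python
import math
import random


def make_problem(n=128, k=64, m=8, snr_db=40.0, seed=0):
    """Generate a sparse signal, a Gaussian matrix, and a noisy observation.

    n: signal length, k: number of observations, m: number of nonzero entries.
    All default values are illustrative assumptions.
    """
    rng = random.Random(seed)
    x = [0.0] * n
    for j in rng.sample(range(n), m):          # m distinct nonzero positions
        x[j] = rng.uniform(-2.0, 2.0)          # assumed interval for the amplitudes
    M = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(k)]
    clean = [sum(a * xj for a, xj in zip(row, x)) for row in M]
    # Choose the noise variance so that signal power / noise power = 10^(snr_db / 10).
    signal_power = sum(c * c for c in clean) / k
    noise_std = math.sqrt(signal_power / 10.0 ** (snr_db / 10.0))
    b = [c + rng.gauss(0.0, noise_std) for c in clean]
    return x, M, b


def measured_snr_db(M, x, b):
    """Empirical SNR in dB of the observation b relative to the clean signal M x."""
    clean = [sum(a * xj for a, xj in zip(row, x)) for row in M]
    noise_energy = sum((bi - ci) ** 2 for bi, ci in zip(b, clean))
    return 10.0 * math.log10(sum(c * c for c in clean) / noise_energy)


x_true, M, b = make_problem()
print(len(x_true), len(M), len(b), sum(1 for v in x_true if v != 0.0))
print(measured_snr_db(M, x_true, b))
```

The empirical SNR fluctuates around the target because the noise is a finite random sample; fixing the seed keeps runs reproducible when algorithms are compared.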

The restoration accuracy is quantitatively assessed using the mean-squared error (MSE) metric,

The control parameters are listed below.

- (i)

- Algorithm 2.2: .

- (ii)

- Algorithm 3: and

- (iii)

- Algorithm 1: and

We present the obtained results below.

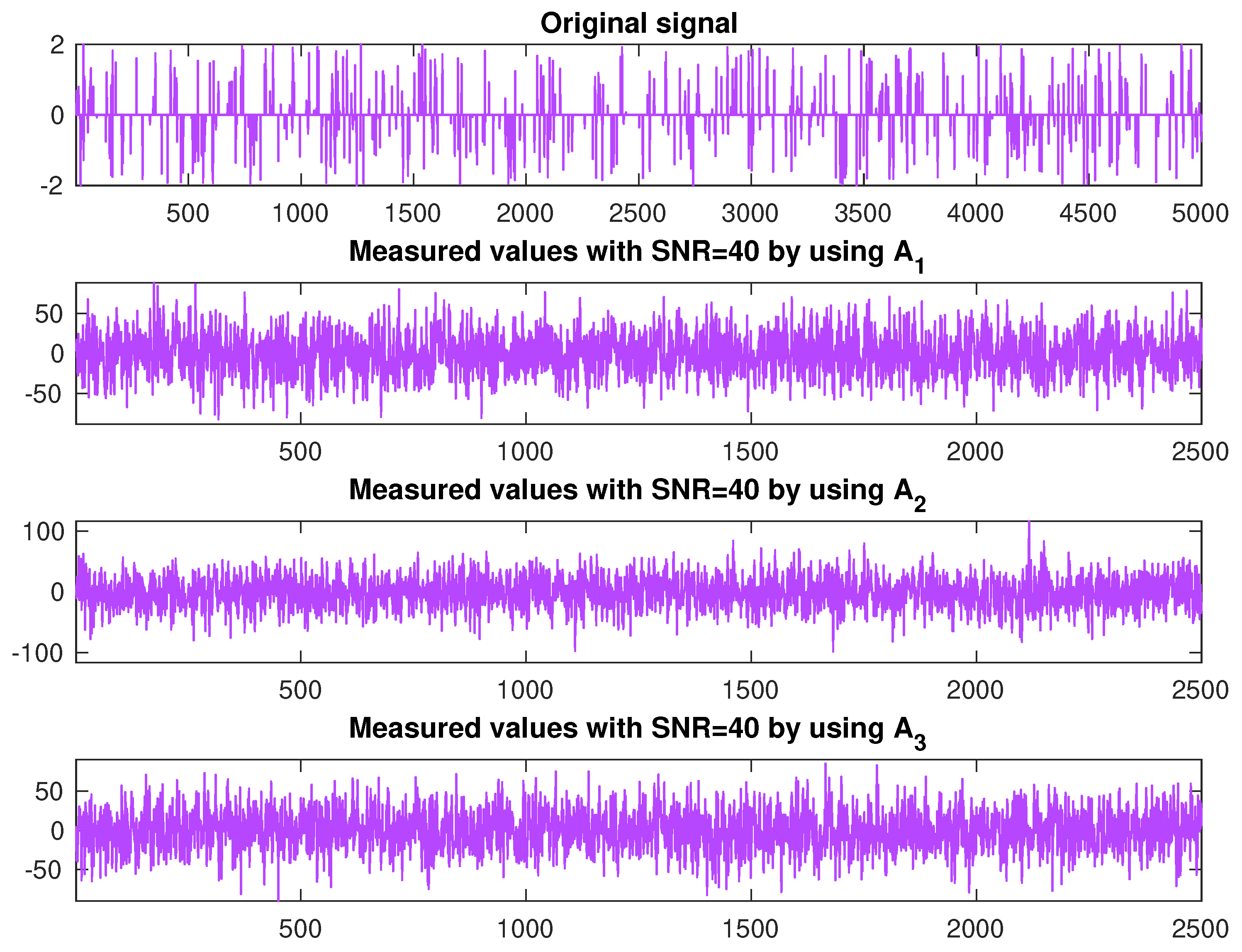

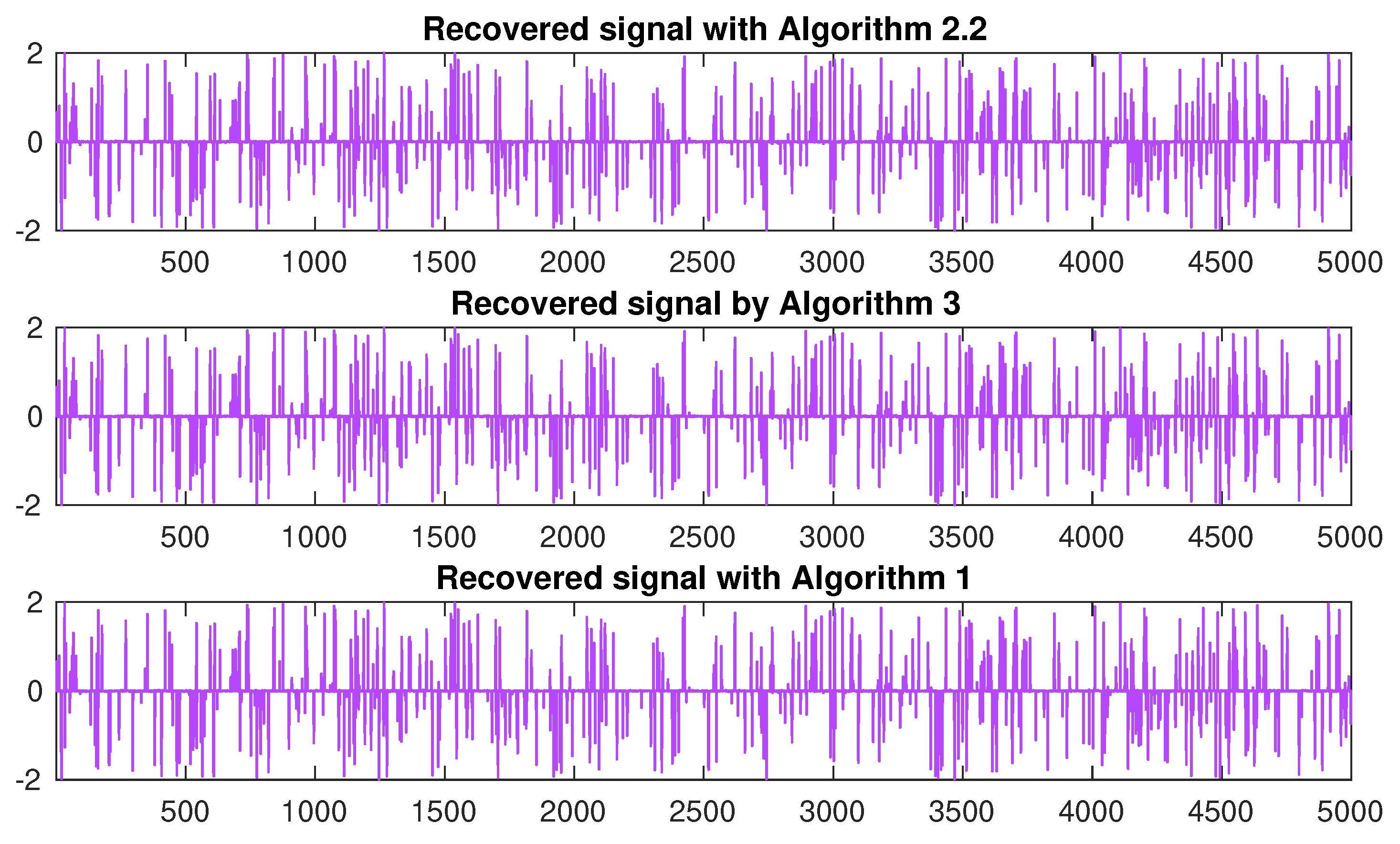

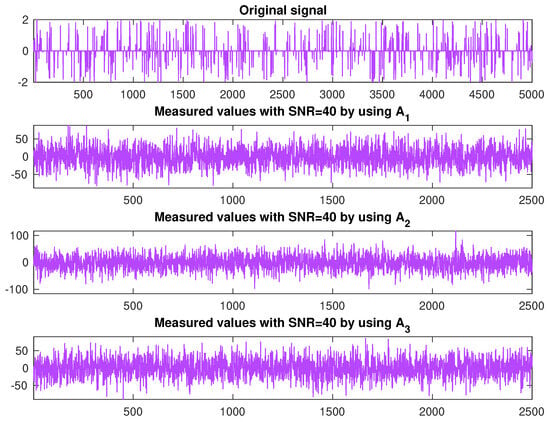

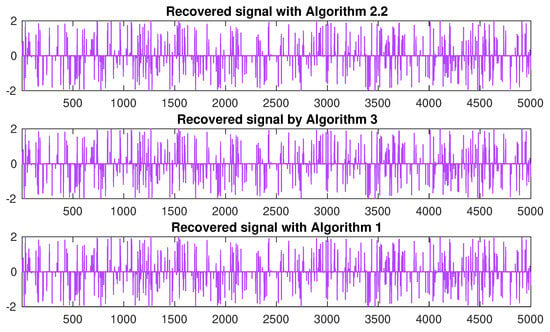

In Table 1, varying numbers of nonzero elements are employed in the numerical experiments: , and 500. The elapsed time and iteration count for each algorithm are recorded. Algorithm 1 consistently outperforms the others in terms of both time and iterations. Additionally, the recovery signal results for are presented in Figure 1 and Figure 2.

Table 1.

Numerical comparison of three algorithms.

Figure 1.

From top to bottom: the original signal and the measurement by using , and , respectively, with .

Figure 2.

Reconstructed signals obtained using three different algorithms for are displayed from top to bottom. Algorithm 2.2 from [1] and Algorithm 3 from [3].

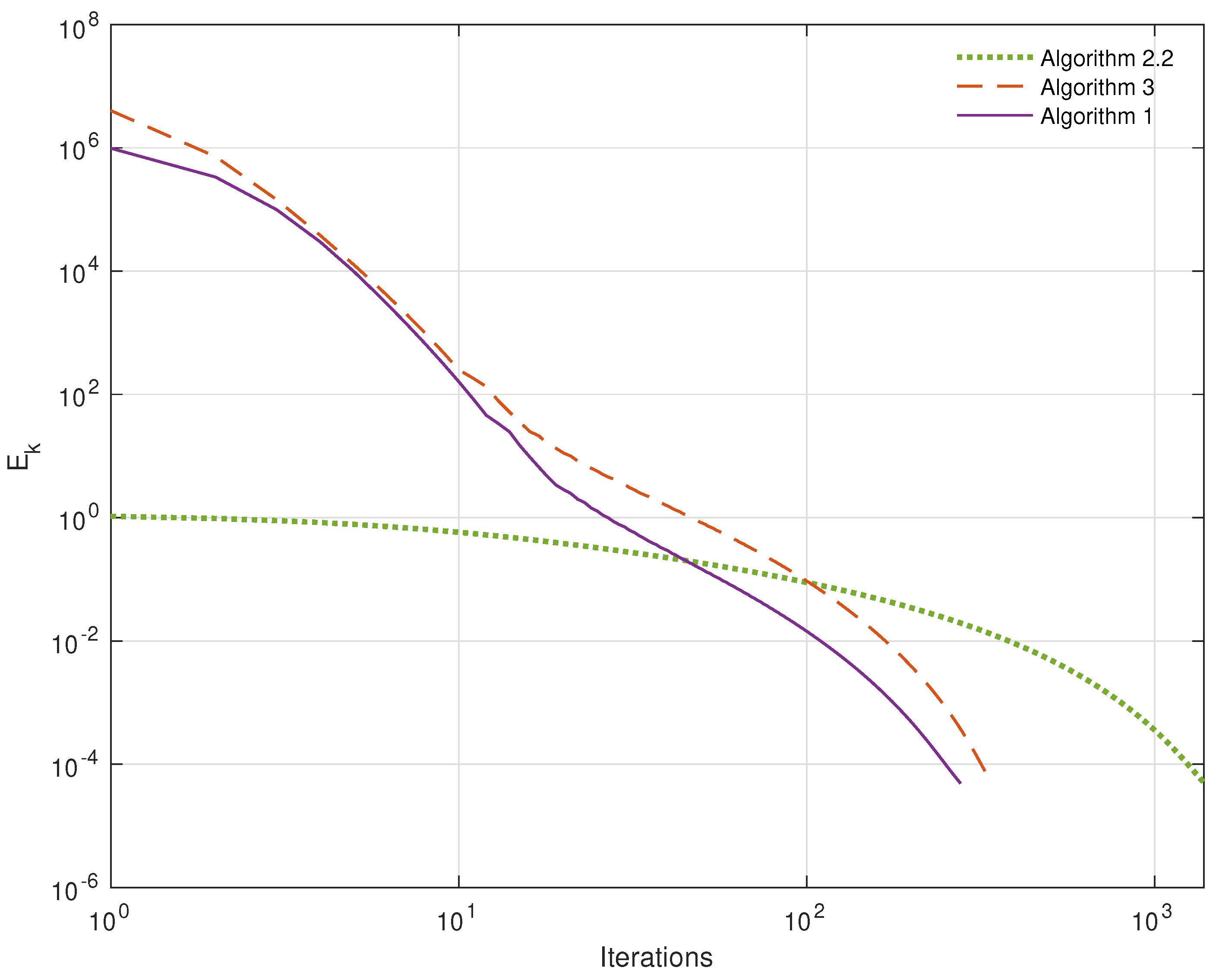

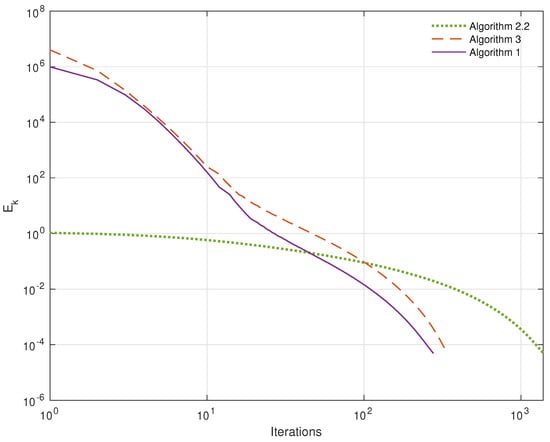

In these scenarios, it is evident that iteration improves the numerical outcomes. To quantify the differences between these results, we calculate the mean-squared errors of each reconstructed signal in Figure 3.

Figure 3.

Plots of over iterations when . Algorithm 2.2 from [1] and Algorithm 3 from [3].

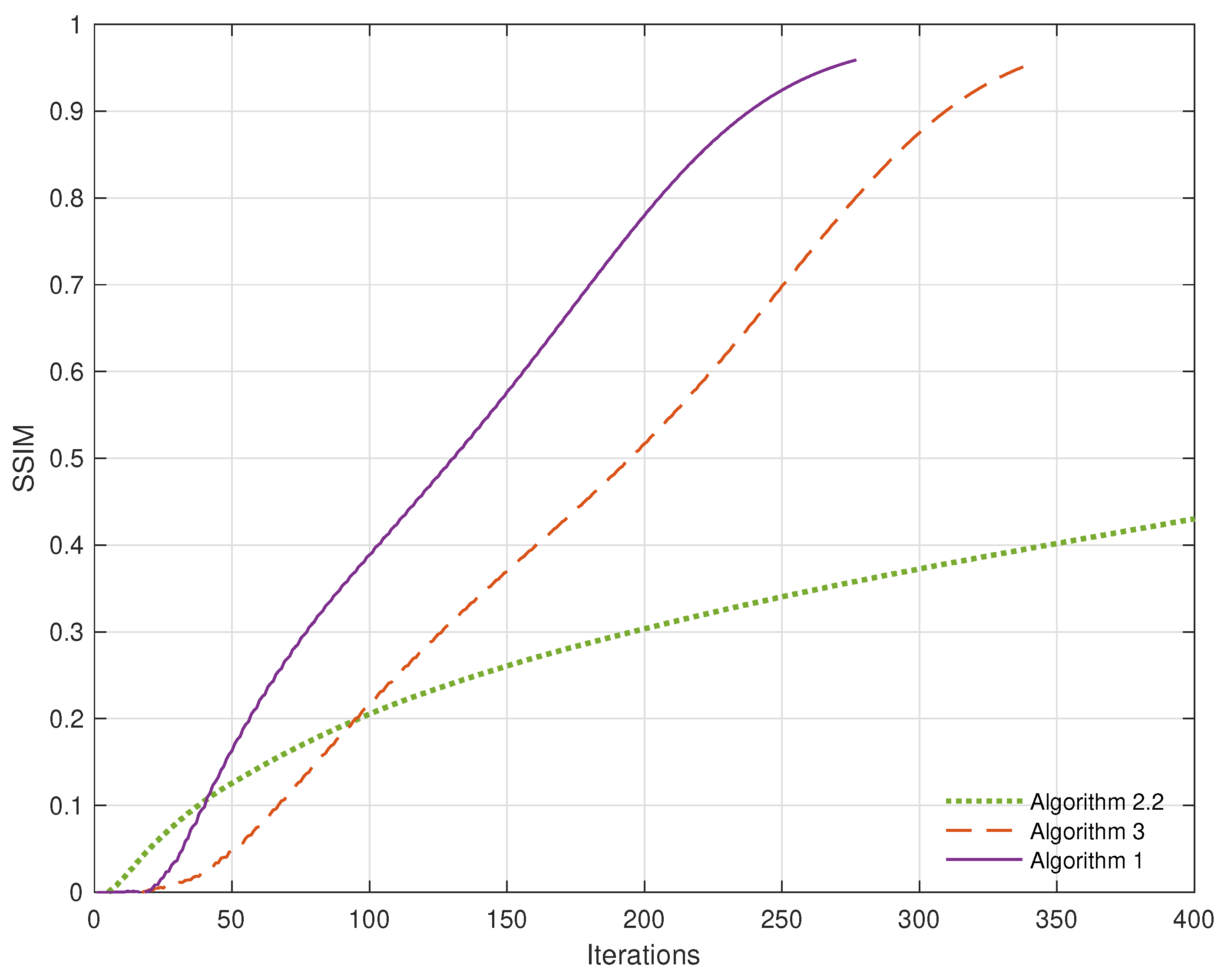

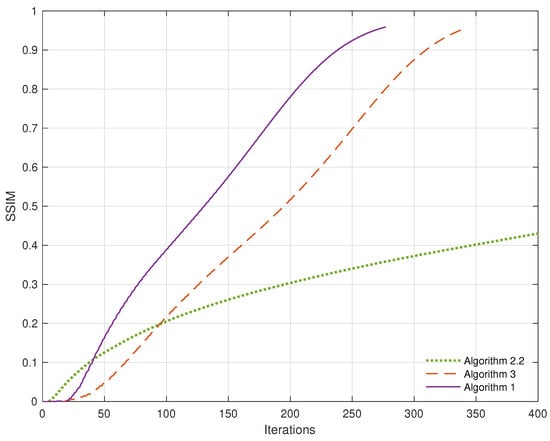

Figure 4 presents the plot of the structural similarity index measure (SSIM), developed by Wang et al. [41], across iterations. The SSIM value does not exceed 1 and typically lies between 0 and 1, where a value of 1 indicates complete signal recovery.

Figure 4.

Plots of SSIM over iterations when . Algorithm 2.2 from [1] and Algorithm 3 from [3].
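The two quality measures used in this section can be sketched as follows. The MSE is taken to be the average squared difference between the reconstruction and the original signal, which is an assumption about the exact normalization. The SSIM function is a simplified single-window (global) variant of the index of Wang et al. [41], using the customary constants $K_1 = 0.01$ and $K_2 = 0.03$; the windowed version used in practice may give different values. The function names are hypothetical.

```python
def mse(x_rec, x_true):
    """Mean-squared error between a reconstructed signal and the original."""
    return sum((a - b) * (a - b) for a, b in zip(x_rec, x_true)) / len(x_true)


def global_ssim(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """Single-window SSIM of two equally long 1-D signals (simplified, global statistics)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    var_x = sum((a - mean_x) * (a - mean_x) for a in x) / n
    var_y = sum((b - mean_y) * (b - mean_y) for b in y) / n
    cov_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / n
    c1 = (k1 * data_range) * (k1 * data_range)   # stabilizes the luminance term
    c2 = (k2 * data_range) * (k2 * data_range)   # stabilizes the contrast/structure term
    numerator = (2.0 * mean_x * mean_y + c1) * (2.0 * cov_xy + c2)
    denominator = (mean_x * mean_x + mean_y * mean_y + c1) * (var_x + var_y + c2)
    return numerator / denominator


original = [0.0, 1.0, 0.0, -1.0, 0.0, 2.0]
reconstruction = [0.1, 0.9, 0.0, -1.1, 0.0, 1.9]
print(mse(reconstruction, original))
print(global_ssim(reconstruction, original, data_range=3.0))
```

A perfect reconstruction gives an MSE of 0 and an SSIM of 1. In this global variant a sign-flipped signal yields a negative value, which is why the index is best described as bounded above by 1.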

5. Conclusions

We develop a novel parallel algorithm for solving the common variational inclusion and common fixed-point (CVI-CFP) problem by combining inertial and Tseng’s techniques with fixed-point methods. A strong convergence theorem is established under conditions involving demicontractive and Lipschitz mappings, which generalizes and improves upon existing results. Numerical experiments on signal recovery problems with various blurred filters demonstrate the accuracy and efficiency of the algorithm compared with existing methods. The algorithm thus provides a useful tool for solving real-world problems in signal recovery.

Author Contributions

Conceptualization, C.T. and R.S.; Methodology, R.S.; Formal analysis, C.T., T.C. and K.C.; Writing—original draft, K.C.; Writing—review & editing, T.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially supported by (1) Fundamental Fund 2025, Chiang Mai University, Chiang Mai, Thailand; (2) Chiang Mai University, Chiang Mai, Thailand; (3) Faculty of Science, Chiang Mai University, Chiang Mai, Thailand; and (4) Centre of Excellence in Mathematics, MHESI, Bangkok, Thailand.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Suantai, S.; Kankam, K.; Cholamjiak, P.; Cholamjiak, W. A parallel monotone hybrid algorithm for a finite family of G-nonexpansive mappings in Hilbert spaces endowed with a graph applicable in signal recovery. Comput. Appl. Math. 2021, 40, 145.

- Suparatulatorn, R.; Chaichana, K. A strongly convergent algorithm for solving common variational inclusion with application to image recovery problems. Appl. Numer. Math. 2022, 173, 239–248.

- Mouktonglang, T.; Poochinapan, K.; Suparatulatorn, R. A parallel method for common variational inclusion and common fixed point problems with applications. Carpathian J. Math. 2023, 39, 189–200.

- Lions, P.L.; Mercier, B. Splitting algorithms for the sum of two nonlinear operators. SIAM J. Numer. Anal. 1979, 16, 964–979.

- Passty, G.B. Ergodic convergence to a zero of the sum of monotone operators in Hilbert space. J. Math. Anal. Appl. 1979, 72, 383–390.

- Moudafi, A.; Oliny, M. Convergence of a splitting inertial proximal method for monotone operators. J. Comput. Appl. Math. 2003, 55, 447–454.

- Tseng, P. A modified forward-backward splitting method for maximal monotone mappings. SIAM J. Control. Optim. 2000, 38, 431–446.

- Lorenz, D.A.; Pock, T. An inertial forward-backward algorithm for monotone inclusions. J. Math. Imaging Vis. 2015, 51, 311–325.

- Cholamjiak, W.; Cholamjiak, P.; Suantai, S. An inertial forward-backward splitting method for solving inclusion problems in Hilbert spaces. J. Fixed Point Theory Appl. 2018, 30, 42.

- Gibali, A.; Thong, D.V. Tseng type methods for solving inclusion problems and its applications. Calcolo 2018, 55, 49.

- Abubakar, J.; Kumam, P.; Garba, A.I.; Abdullahi, M.S.; Ibrahim, A.H.; Sitthithakerngkiet, K. An inertial iterative scheme for solving variational inclusion with application to Nash-Cournot equilibrium and image restoration problems. Carpathian J. Math. 2021, 37, 361–380.

- Cholamjiak, P.; Hieu, D.V.; Cho, Y.J. Relaxed forward-backward splitting methods for solving variational inclusions and applications. J. Sci. Comput. 2021, 88, 85.

- Suparatulatorn, R.; Cholamjiak, W.; Gibali, A.; Mouktonglang, T. A parallel Tseng’s splitting method for solving common variational inclusion applied to signal recovery problems. Adv. Differ. Equ. 2021, 2021, 492.

- Suantai, S.; Cholamjiak, W.; Cholamjiak, P. Strong convergence theorems of a finite family of quasi-nonexpansive and Lipschitz multi-valued mappings. Afr. Mat. 2015, 26, 345–355.

- Anh, P.K.; Hieu, D.V. Parallel and sequential hybrid methods for a finite family of asymptotically quasi ϕ-nonexpansive mappings. J. Appl. Math. Comput. 2015, 48, 241–263.

- Cholamjiak, W.; Khan, S.A.; Yambangwai, D.; Kazmi, K.R. Strong convergence analysis of common variational inclusion problems involving an inertial parallel monotone hybrid method for a novel application to image restoration. Rev. Real Acad. Cienc. Exactas Fís. Nat. Ser. A Mat. 2020, 114, 99.

- Eslamian, M. Strong convergence theorem for common zero points of inverse strongly monotone mappings and common fixed points of generalized demimetric mappings. Optimization 2021, 71, 4265–4287.

- Eslamian, M.; Kamandi, A. A novel algorithm for approximating common solution of a system of monotone inclusion problems and common fixed point problem. J. Ind. Manag. Optim. 2021, 19, 868–889.

- Wairojjana, N.; Younis, M.; Rehman, H.U.; Pakkaranang, N.; Pholasa, N. Modified viscosity subgradient extragradient-like algorithms for solving monotone variational inequalities problems. Axioms 2020, 9, 118.

- Antón-Sancho, Á. Fixed points of automorphisms of the vector bundle moduli space over a compact Riemann surface. Mediterr. J. Math. 2024, 21, 20.

- Beck, A.; Teboulle, M. A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2009, 2, 183–202.

- Shehu, Y.; Cholamjiak, P. Iterative method with inertial for variational inequalities in Hilbert spaces. Calcolo 2019, 56, 4.

- Mouktonglang, T.; Suparatulatorn, R. Inertial hybrid projection methods with selection techniques for split common fixed point problems in Hilbert spaces. Univ. Politeh. Buchar. Sci. Bull. Ser. A 2022, 54, 47–54.

- Kesornprom, S.; Cholamjiak, P. A modified inertial proximal gradient method for minimization problems and applications. AIMS Math. 2022, 7, 8147–8161.

- Peeyada, P.; Suparatulatorn, R.; Cholamjiak, W. An inertial Mann forward-backward splitting algorithm of variational inclusion problems and its applications. Chaos Solitons Fractals 2022, 158, 112048.

- Kabukçuoğlu, A.; Martínez-García, E. A Generalized Time Iteration Method for Solving Dynamic Optimization Problems with Occasionally Binding Constraints. Comput. Econ. 2021, 58, 435–460.

- Zheng, T.; Bergin, M.H.; Hu, S.; Carlson, D.E. Estimating ground-level PM2.5 using micro-satellite images by a convolutional neural network and random forest approach. Atmos. Environ. 2020, 230, 117451.

- Doreswamy, H.; Harishkumar, K.S.; Yogesh, K.M.; Ibrahim, G. Forecasting Air Pollution Particulate Matter (PM2.5) Using Machine Learning Regression Models. Procedia Comput. Sci. 2020, 171, 2057–2066.

- Gokul, P.R.; Mathew, A.; Bhosale, A.; Nair, A.T. Spatio-temporal air quality analysis and PM2.5 prediction over Hyderabad City, India using artificial intelligence techniques. Ecol. Inform. 2023, 76, 102067.

- Mathew, A.; Gokul, P.R.; Raja Shekar, P.; Arunab, K.S.; Ghassan Abdo, H.; Almohamad, H.; Abdullah Al Dughairi, A. Air quality analysis and PM2.5 modelling using machine learning techniques: A study of Hyderabad city in India. Cogent Eng. 2023, 10, 2243743.

- Xu, H.K. Iterative algorithms for nonlinear operators. J. Lond. Math. Soc. 2002, 66, 240–256.

- Maingé, P.E. Strong convergence of projected subgradient methods for nonsmooth and nonstrictly convex minimization. Set-Valued Anal. 2008, 16, 899–912.

- Goebel, K.; Kirk, W. Topics in Metric Fixed Point Theory; Cambridge Studies in Advanced Mathematics; Cambridge University Press: Cambridge, UK, 1990; Volume 28.

- Marino, G.; Xu, H.K. Weak and strong convergence theorems for strict pseudo-contractions in Hilbert spaces. J. Math. Anal. Appl. 2007, 329, 336–346.

- Browder, F.E. Nonlinear maximal monotone operators in Banach space. Math. Ann. 1968, 175, 89–113.

- Bauschke, H.H.; Combettes, P.L. Convex Analysis and Monotone Operator Theory in Hilbert Spaces; CMS Books in Mathematics: New York, NY, USA, 2011.

- Brézis, H. Opérateurs Maximaux Monotones et Semi-groupes de Contractions dans les Espaces de Hilbert; Mathematical Studies: Amsterdam, The Netherlands, 1973; Volume 5.

- Tan, B.; Zhou, Z.; Li, S. Viscosity-type inertial extragradient algorithms for solving variational inequality problems and fixed point problems. J. Appl. Math. Comput. 2022, 68, 1387–1411.

- Liu, H.; Yang, J. Weak convergence of iterative methods for solving quasimonotone variational inequalities. Comput. Optim. Appl. 2020, 77, 491–508.

- Combettes, P.L.; Wajs, V.R. Signal recovery by proximal forward-backward splitting. Multiscale Model. Simul. 2005, 4, 1168–1200.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).