Remarks on the Mathematical Modeling of Gene and Neuronal Networks by Ordinary Differential Equations

Abstract

1. Introduction

2. Problem Formulation

3. Preliminary Results

3.1. Invariant Set

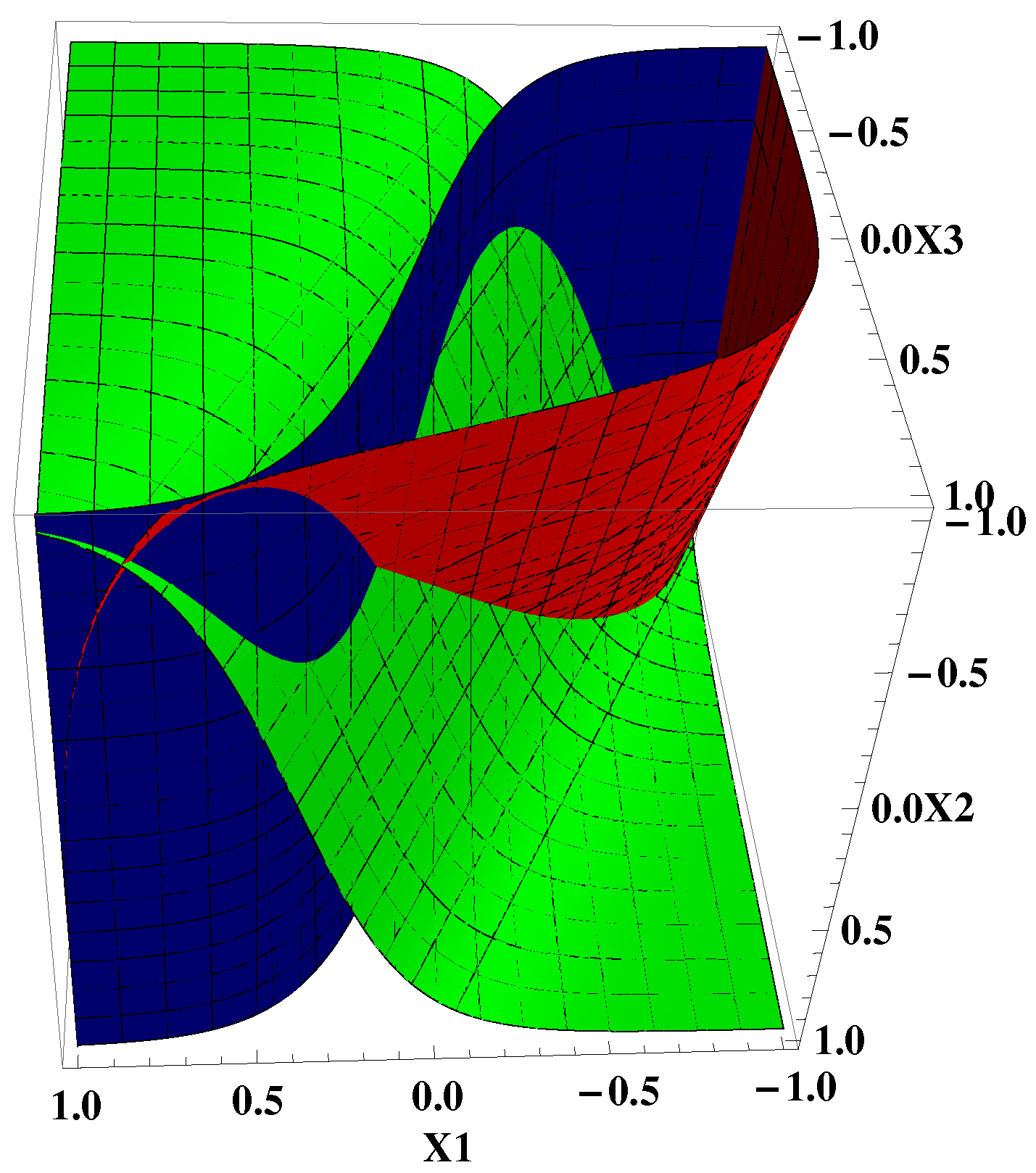

3.2. Nullclines

3.3. Critical Points

3.4. Linearization at a Critical Point

3.5. Regulatory Matrices With Zero Diagonal Elements

4. Focus Type Critical Points

5. Inhibition-Activation

6. The Case of Triangular Regulatory Matrix

6.1. Critical Points

6.2. Linearized System

7. Systems with Stable Periodic Solutions: Andronov–Hopf Type Bifurcations

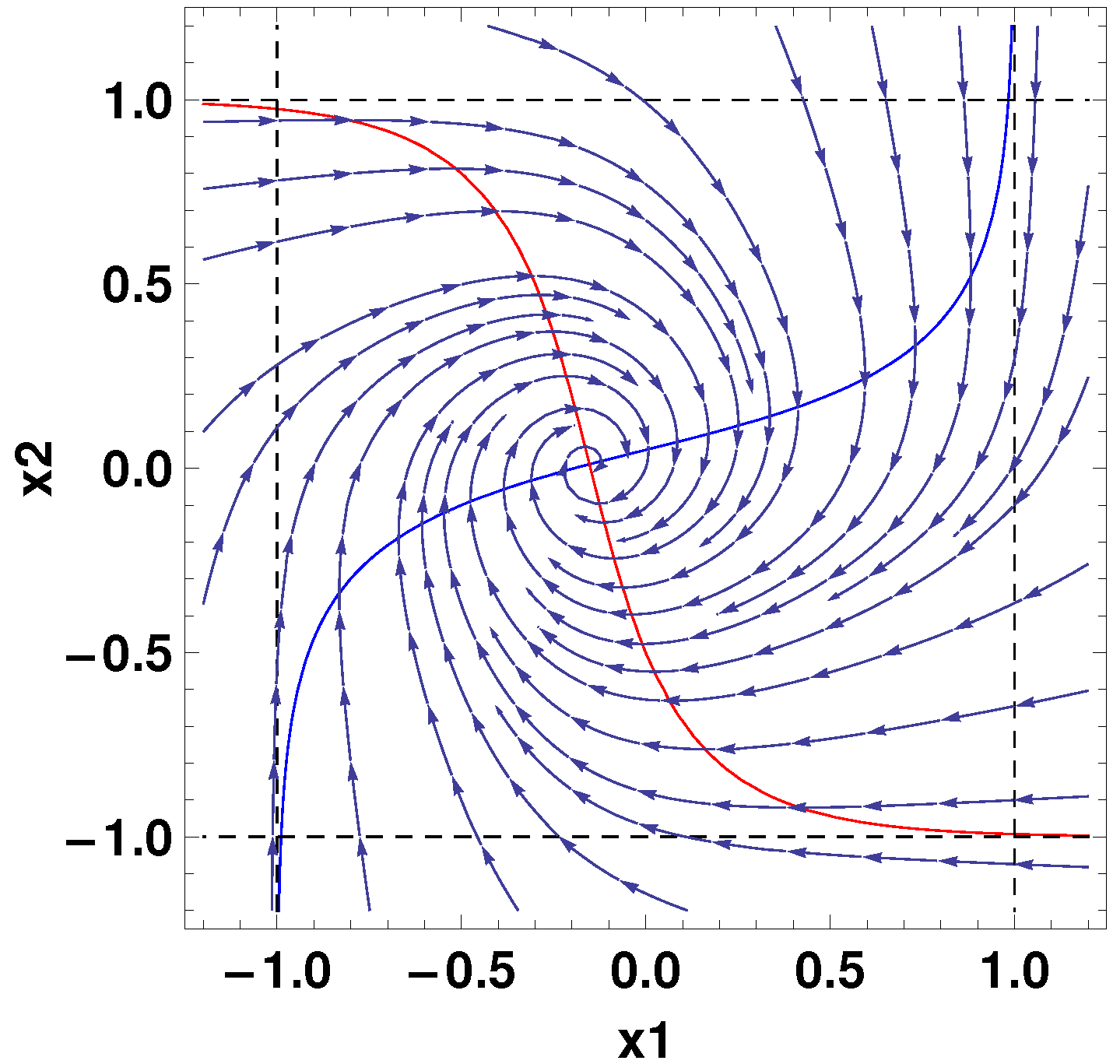

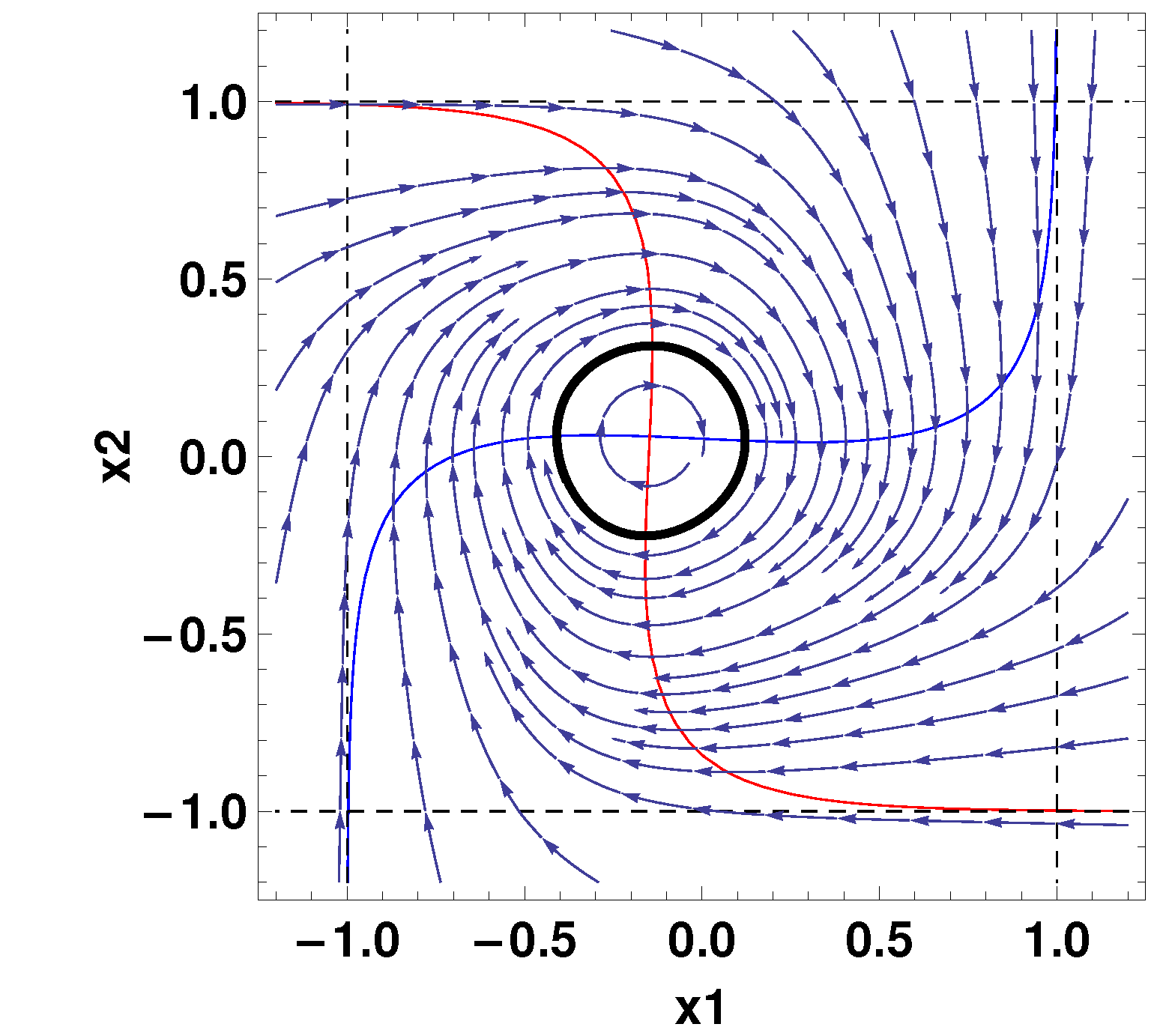

7.1. 2D Case

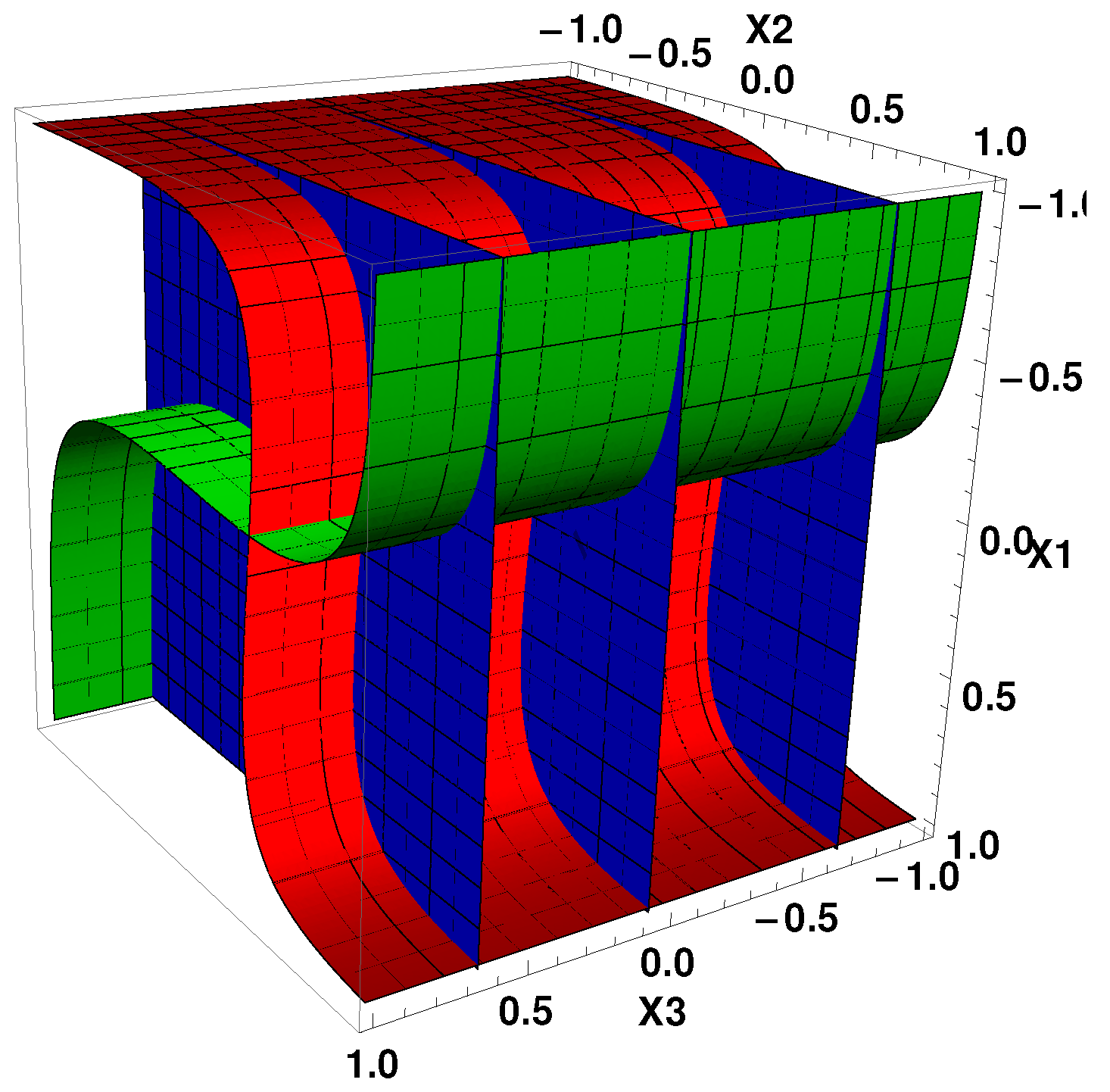

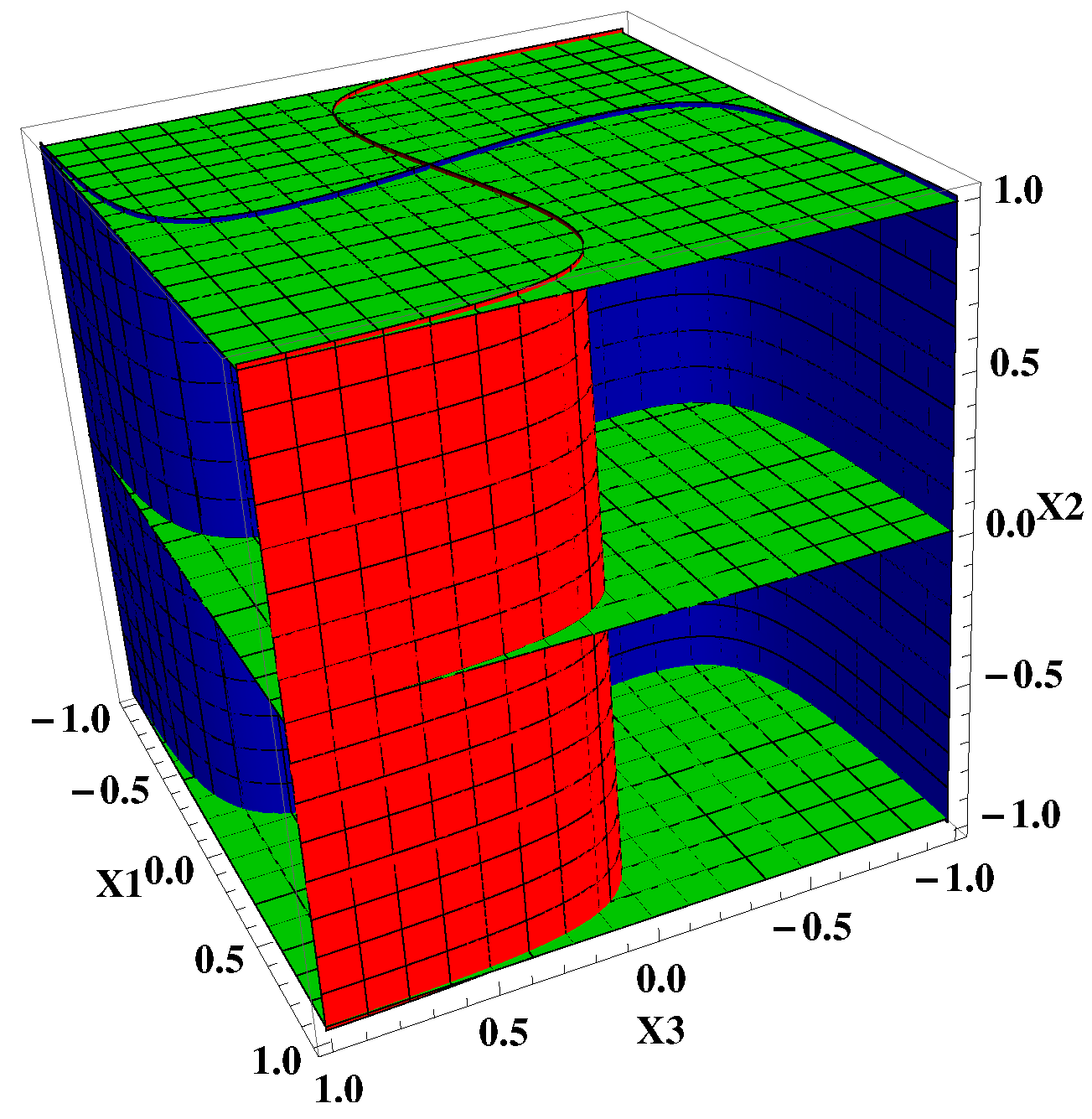

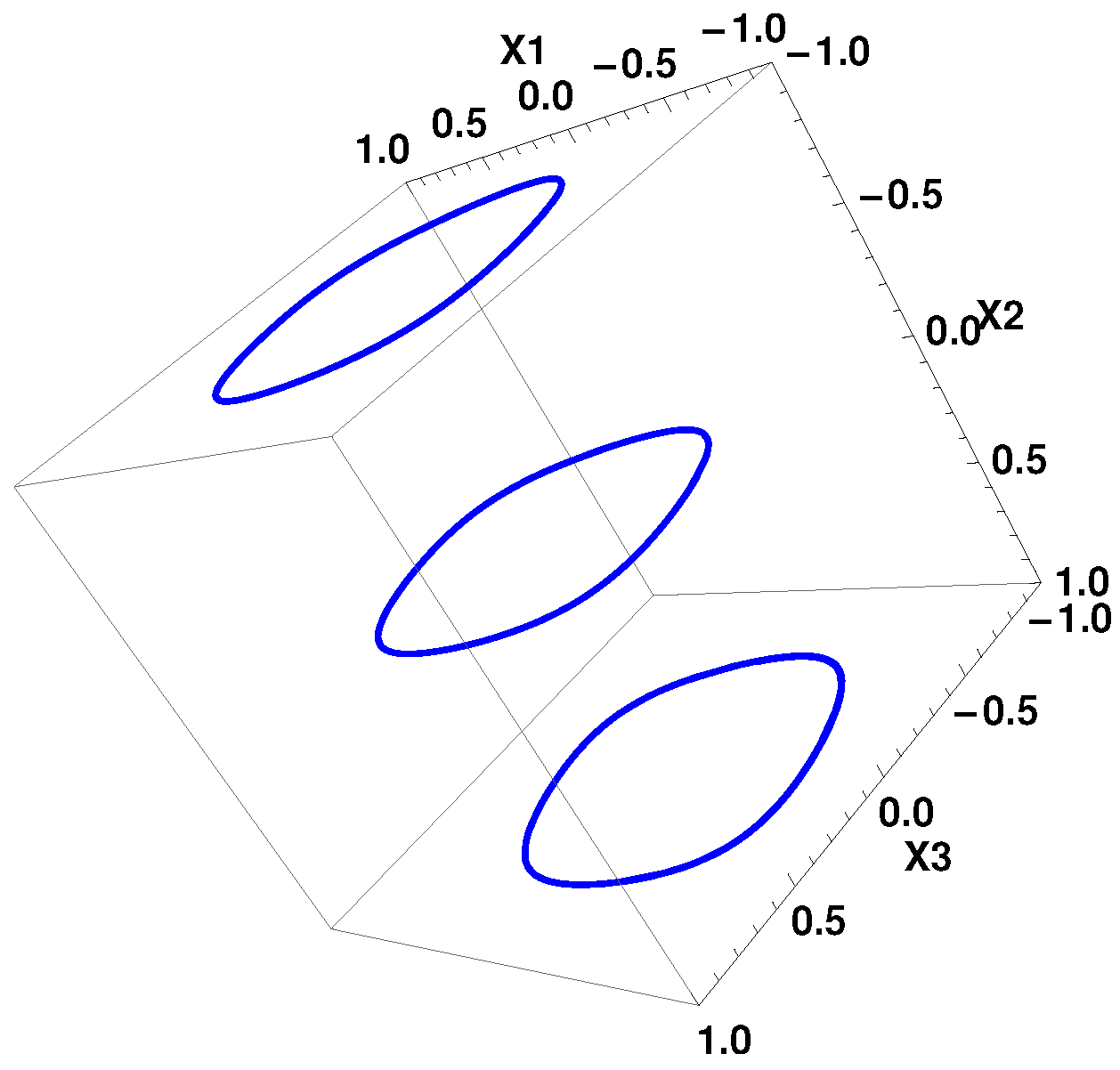

7.2. 3D Case

8. Control and Management of ANN

9. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| | | |
|---|---|---|
| −0.9268 | −1.0366 − 0.6101 i | −1.0366 + 0.6101 i |
| 1.1972 | −2.0986 − 0.8406 i | −2.0986 + 0.8406 i |
| −0.9821 | −1.0090 − 0.2189 i | −1.0090 + 0.2189 i |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ogorelova, D.; Sadyrbaev, F. Remarks on the Mathematical Modeling of Gene and Neuronal Networks by Ordinary Differential Equations. Axioms 2024, 13, 61. https://doi.org/10.3390/axioms13010061
