Abstract

In this paper, we propose a parameterized variable metric three-operator algorithm for finding a zero of the sum of three monotone operators in a real Hilbert space. Under some appropriate conditions, we prove the strong convergence of the proposed algorithm. Furthermore, we propose a parameterized variable metric three-operator algorithm with a multi-step inertial term and prove its strong convergence. Finally, we illustrate the effectiveness of the proposed algorithm with numerical examples.

Keywords:

variable metric; monotone inclusion; three-operator algorithm; multi-step inertial algorithm

MSC:

47H07; 49J45; 65J22; 90C25

1. Introduction

Let H be a real Hilbert space with inner product $\langle \cdot, \cdot \rangle$ and induced norm $\|\cdot\|$. We consider the following monotone inclusion problem for the sum of three operators: find $x \in H$ such that

$$0 \in Ax + Bx + Cx, \tag{1}$$

where $A, B : H \to 2^H$ are maximally monotone operators and $C : H \to H$ is a $\beta$-cocoercive operator, $\beta > 0$. There have been numerous algorithms for solving the problem (1) when because of the wide applications of this problem in compressed sensing, image recovery, sparse optimization, machine learning, etc.; see [1,2,3,4,5,6,7], to name a few. Although in theory these algorithms can be used to solve the problem (1) by bundling as with , is hard to compute in practice. For the past few years, problem (1) has received a lot of attention, and several algorithms have been constructed for solving it.

In 2017, Davis and Yin [8] proposed the following three-operator algorithm:

Here , , , . Expression (2) also has the form

where the averaged operator T is defined by

Then [8] proved that the sequence generated by (2) converges weakly to a fixed point of T under some suitable conditions by utilizing the averageness of T. In turn, solves problem (1).
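As a point of reference, the three-operator iteration of [8] can be sketched in a few lines of Python. The sketch uses the standard form of the Davis–Yin scheme from the literature (a resolvent step on B, a resolvent step on A applied to a reflected forward step, then a relaxed update); the function name `davis_yin`, the toy problem and the parameter values are illustrative assumptions and are not taken from the paper.

```python
import numpy as np

def davis_yin(res_A, res_B, C, z0, gamma, lam=1.0, iters=200):
    """Standard Davis-Yin iteration (a sketch, not the paper's exact notation).

    res_A, res_B : callables (v, gamma) -> J_{gamma A}(v), J_{gamma B}(v)
    C            : callable, the single-valued cocoercive operator
    """
    z = np.asarray(z0, dtype=float)
    for _ in range(iters):
        x_B = res_B(z, gamma)                               # backward step on B
        x_A = res_A(2.0 * x_B - z - gamma * C(x_B), gamma)  # backward step on A after a reflected forward step
        z = z + lam * (x_A - x_B)                           # relaxed fixed-point update
    return res_B(z, gamma)

# Toy problem (assumed): minimize 0.5*||x - b||^2 subject to 0 <= x <= 1.
# A = normal cone of {x >= 0}, B = normal cone of {x <= 1}, C(x) = x - b (1-cocoercive).
b = np.array([2.0, -1.0, 0.5])
proj_nonneg = lambda v, gamma: np.maximum(v, 0.0)
proj_upper = lambda v, gamma: np.minimum(v, 1.0)
x_star = davis_yin(proj_nonneg, proj_upper, lambda x: x - b, np.zeros(3), gamma=1.0)
```

For this toy problem the solution is the componentwise clipping of b to the interval [0, 1], which gives a simple way to sanity-check the iteration.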

Soon after, Cui, Tang and Yang [9] put forward the inertial version of the algorithm (2):

to improve the convergence speed of the algorithm (2). For the problem (1) in which , B is monotone and L-Lipschitz continuous, Malitsky and Tam [10] proposed the forward-reflected-backward algorithm to solve problem (1), which consists of iterating

Under some appropriate conditions, they proved the convergence of (6). Furthermore, Zong et al. [11] introduced the inertial semi-forward-reflected-backward splitting algorithm to address (1):

and proved the weak convergence of the algorithm. Zhang and Chen [12] proposed the parameterized three-operator splitting algorithm:

which generalizes the parameterized Douglas–Rachford algorithm [13]. They proved that the sequence generated by the regularization of (8) converges to the least-norm solution of problem (1). Other algorithms for solving (1) have also been investigated in recent years; see [14,15,16].

In order to speed up the convergence of iterative algorithms, scholars often use acceleration techniques. The inertial extrapolation technique and the variable metric technique are two popular acceleration methods. The inertial extrapolation technique, or heavy ball method [17], has been widely studied in past decades; see [18,19,20,21,22,23] and the references therein. The variable metric technique improves the convergence speed of an algorithm by changing the metric, and thereby the effective step size, at each iteration. This method has been widely used in various optimization problems in the past decades. To solve the monotone inclusion problem for the sum of two operators, Chen and Rockafellar [24] first combined the variable metric strategy with the forward-backward algorithm in 1997. For the same problem, Combettes and Vũ [25] proposed the following variable metric forward-backward splitting algorithm

where and are absolutely summable sequences in H, is a sequence of bounded linear self-adjoint positive operators defined on H, and proved its strong convergence. Bonettini et al. [26] proposed an inexact inertial variable metric proximal gradient algorithm. In [27], the author studied the variable metric forward-backward splitting algorithm for convex minimization problems without the Lipschitz continuity assumption on the gradient and proved the weak convergence of the iteration. Further, in [28], Audrey and Yves proposed a variable metric forward-backward-based algorithm for minimizing the sum of two nonconvex functions. In [29], the authors addressed the weak convergence of a nonlinearly preconditioned forward-backward splitting method for the sum of a maximally hypermonotone operator A and a hypercocoercive operator B. For other applications of variable metric techniques, see [30,31,32] and the references therein.
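To make the variable metric idea concrete, the following Python sketch runs a forward-backward iteration in which the metric changes from step to step. It is a simplified illustration under stated assumptions: the metric is a diagonal scaling `u` chosen by hand, the error sequences of the general scheme in [25] are set to zero, and the test problem (an identity data matrix with an ℓ1 penalty) is hypothetical.

```python
import numpy as np

def soft_threshold(v, t):
    """Componentwise soft-thresholding; t may be a scalar or a vector of thresholds."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def vm_forward_backward(b, mu, iters=200):
    """Variable metric forward-backward for min 0.5*||x - b||^2 + mu*||x||_1.

    The scaling u_n is diagonal (an assumption made for simplicity) and
    no error terms are included.
    """
    x = np.zeros_like(b)
    for n in range(iters):
        u = (0.5 + 0.4 / (n + 1) ** 2) * np.ones_like(b)  # diagonal scaling, varies with n
        y = x - u * (x - b)                                # scaled forward (gradient) step
        x = soft_threshold(y, u * mu)                      # backward step in the same scaling
    return x

b = np.array([3.0, -0.2, -2.0])
x_vm = vm_forward_backward(b, mu=1.0)
```

Whatever admissible scaling is used, the limit is the minimizer itself, here the soft-thresholding of b at level mu; the metric only changes the path taken to reach it.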

Inspired by the above work, we propose a parameterized variable metric three-operator algorithm and a multi-step inertial parameterized variable metric three-operator algorithm. Furthermore, instead of requiring the averageness or nonexpansiveness of the operator, we directly analyze the convergence of the proposed iterative algorithm.

The rest of this paper is organized as follows. In Section 2, we recall some basic definitions and important lemmas. In Section 3, we propose the parameterized variable metric three-operator algorithm and prove its strong convergence. Then we introduce a multi-step inertial parameterized variable metric three-operator algorithm and prove a strong convergence result of it. In Section 4, we show the performance of the proposed iterative algorithm under different parameters and illustrate the feasibility of the algorithm with numerical examples.

2. Preliminaries

In this section, we list the necessary symbols, notations, definitions and lemmas and state some results used in this paper. Let be the space of bounded linear operators from H to H. Set , where denotes the adjoint of L, and , . Given , define the M-inner product on H by for all . So the corresponding M-norm on H is defined as for all . The strong convergence of a sequence is denoted by → and the symbol ⇀ means weak convergence. denotes the identity operator. denotes the set of sequences in such that . Let be an operator. We denote the fixed point set of T by , that is, . Let be a set-valued operator. We denote its graph and domain by and , respectively. In this paper, let S be the solution set of problem (1) and we always assume that .
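In finite dimensions the M-inner product and the M-norm are easy to compute. The Python sketch below assumes H is a Euclidean space and M is a symmetric positive definite matrix; the helper names `m_inner` and `m_norm` and the example matrix are hypothetical.

```python
import numpy as np

def m_inner(x, y, M):
    """M-inner product <x, y>_M = <M x, y> for a symmetric positive definite M."""
    return float(np.dot(M @ x, y))

def m_norm(x, M):
    """M-norm ||x||_M = sqrt(<M x, x>)."""
    return float(np.sqrt(m_inner(x, x, M)))

M = np.array([[2.0, 0.0], [0.0, 8.0]])  # example metric (assumed), eigenvalues 2 and 8
x = np.array([1.0, 1.0])
y = np.array([3.0, -1.0])
```

Since the eigenvalues of this M lie in [2, 8], the M-norm is equivalent to the Euclidean norm with constants √2 and √8, which is the kind of two-sided bound used when passing between metrics.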

Definition 1.

Let be an operator. T is said to be

([33]) τ-cocoercive, if

([34]) demiregular at if with and as , it follows that as .
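Cocoercivity can be checked numerically on a simple example. The Python sketch below uses the gradient of a least-squares function, which is cocoercive with constant 1/‖K‖² by the Baillon–Haddad theorem, and evaluates the gap in the standard inequality ⟨Tx − Ty, x − y⟩ ≥ τ‖Tx − Ty‖² at random points. The matrix K, the vector b and the function names are assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
K = rng.standard_normal((5, 3))
b = rng.standard_normal(5)

def grad(x):
    """Gradient of x -> 0.5 * ||K x - b||^2."""
    return K.T @ (K @ x - b)

L = np.linalg.norm(K, 2) ** 2  # Lipschitz constant of grad (squared spectral norm)
tau = 1.0 / L                  # cocoercivity constant by the Baillon-Haddad theorem

def cocoercivity_gap(x, y):
    """<Tx - Ty, x - y> - tau * ||Tx - Ty||^2; nonnegative when T is tau-cocoercive."""
    d = grad(x) - grad(y)
    return float(np.dot(d, x - y) - tau * np.dot(d, d))

gaps = [cocoercivity_gap(rng.standard_normal(3), rng.standard_normal(3)) for _ in range(100)]
```

Up to rounding error, every entry of `gaps` is nonnegative.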

Definition 2

([33]). Let be an operator. A is said to be

monotone if

strictly monotone if

β-strongly monotone if

maximally monotone if

uniformly monotone with modulus if ϕ is increasing and vanishes only at 0 and

Definition 3

([33]). Let D be a nonempty subset of H. Let be a sequence in H. is called Fejér monotone with respect to D if

Lemma 1

([33]). Let be a maximally monotone operator and let be a linear bounded self-adjoint and strongly monotone operator. Then

is a maximally monotone operator.

, is firmly nonexpansive with respect to M-norm, that is,

.
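The firm nonexpansiveness in Lemma 1 can be illustrated numerically. The Python sketch below assumes a finite-dimensional setting with a linear monotone operator A (a positive semidefinite part plus a skew-symmetric part) and a symmetric positive definite M, so that the resolvent of M⁻¹A reduces to a linear solve. It checks the inequality ‖Jx − Jy‖²_M ≤ ⟨Jx − Jy, x − y⟩_M, one of the equivalent characterizations of firm nonexpansiveness; all matrices are randomly generated and hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 4
S = rng.standard_normal((n, n))
W = rng.standard_normal((n, n))
A = S @ S.T + (W - W.T)      # monotone linear operator: PSD part plus skew part
Q = rng.standard_normal((n, n))
M = Q @ Q.T + n * np.eye(n)  # symmetric, strongly positive metric

def resolvent(x):
    """J_{M^{-1} A} x = (I + M^{-1} A)^{-1} x, computed as the solution p of (M + A) p = M x."""
    return np.linalg.solve(M + A, M @ x)

def firm_gap(x, y):
    """<Jx - Jy, x - y>_M - ||Jx - Jy||_M^2; nonnegative for a firmly nonexpansive J."""
    d = resolvent(x) - resolvent(y)
    return float(np.dot(M @ d, x - y) - np.dot(M @ d, d))

gaps = [firm_gap(rng.standard_normal(n), rng.standard_normal(n)) for _ in range(100)]
```

In this linear case the gap equals ⟨d, Ad⟩ with d = Jx − Jy, so its nonnegativity is exactly the monotonicity of A.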

Lemma 2

([35]). Let , , , we have

Lemma 3

([33]). Let Ω be a nonempty subset of H. Let be a sequence in H. If the following conditions hold:

, exists;

every weak sequential cluster point of is in Ω.

Then the sequence converges weakly to a point in Ω.

Lemma 4

([36]). Let be a sequence in , , such that

Then converges.

3. Iterative Algorithms and Convergence Analyses

In this section, we propose the parameterized variable metric three-operator algorithm and the multi-step inertial parameterized variable metric three-operator algorithm to approximate a solution of problem (1). Weak and strong convergence results are obtained. Both algorithms require the following assumptions:

Assumption 1.

are maximally monotone operators, is a β-cocoercive operator, .

Assumption 2.

and the solution set of is nonempty.

The following proposition is the main tool for proving the convergence of the proposed algorithms.

Proposition 1.

Let be maximally monotone operators, be a β-cocoercive operator and . Let , and . Denote by

Then and , where . Moreover,

Proof of Proposition 1.

Let . We have

In turn, if , there exists such that . Each of the above steps can be worked backward. It follows that .

That is a trivial result.

Next, we show that (10) holds.

The proof is completed. □

Proposition 2.

Let be maximally monotone operators, be an operator. Let , , , . Given , denote by

Then we have

Further, if A or B is uniformly monotone with modulus ϕ, then the above inequality holds with 0 on the left replaced by or .

Proof of Proposition 2.

Adding the above two inequalities, we obtain

Notice that

and that

By , we have,

Further, if A or B is uniformly monotone, we just need to replace 0 with or from (12). □

3.1. Parameterized Variable Metric Three-Operator Algorithm

In this subsection, we study the following parameterized variable metric three-operator algorithm and its convergence.

Pick any ,

where , , . .

Theorem 1.

Let be generated by Algorithm (13). Suppose that the following conditions hold:

(C1) , ;

(C2) , ;

(C3) , .

Then we have

1. is bounded;

2. , , , , , where , is defined as in Proposition 1;

3. Suppose that one of the following holds:

(a) A is uniformly monotone on every nonempty bounded subset of ;

(b) B is uniformly monotone on every nonempty bounded subset of ;

(c) , C is demiregular at x,

then .

Proof of Theorem 1.

1. Set . By (13), we have

Let . Then there exist and , respectively, such that and in view of Proposition 1, where . By taking , , and noting that , , in Proposition 2, we obtain

Multiplying the first two terms on the right of (15) by , we obtain

Since C is a -cocoercive operator, it follows from Young’s inequality that

Furthermore, the last term of (15) can be expressed by utilizing Young’s inequality again as

where the positive constant satisfies

which implies that .

Now, substituting (16)–(18) into (15), we derive

Thereby,

It yields

since by (19). Hence, we obtain from and Proposition 1 that and

Note that because . Thus we have exists by Lemma 4. As a result, is bounded.

In addition, we have by applying Lemma 1

So is a bounded sequence. Similarly, is bounded.

2. In Proposition 2, we take , , , , then , , and . Thus,

Similar to (17) and (18), we obtain the following estimations of the third and the last terms of the right side in (21), respectively,

and

where is given by (19).

Multiplying (21) by 2 and substituting (22) and (23) into (21), we conclude that

From , we have

since by (19). Let

We have by virtue of the fact that and , , are bounded sequences. Therefore, converges from Lemma 4.

Next, we show that converges to 0 as n goes to infinity.

Rearranging terms in (20), we have

where due to the boundedness of and (C2), (C3).

Summing on both sides of (24) from 0 to k, we obtain as k goes to infinity

By and (19), we have . Thus, in view of (25). Hence, , that is, as .

For simplicity, denote by . From 1., , , and are bounded sequences. Assume that is a weak limit point of the sequence . Then there exists its subsequence such that .

Define by

Then F is a maximally monotone operator (see [33] (Example 20.35, Corollary 25.5(i))). From (14), we obtain

Noting that , , by , and that is sequentially closed in , we deduce

In other words,

Thus . This gives according to Lemma 3. Furthermore, by Proposition 1. Since is the unique weak limit point of , and are the unique weak limit points of , , respectively. So, , . By , we have .

Taking , , and in Proposition 2 and applying (17) and (18), we have

Then,

That is, as .

3. As seen in 2., there exists such that as .

(a) Set . Obviously, O is a bounded subset of . Since A is uniformly monotone on O, there exists an increasing function satisfying if and only if . Setting , , , in Proposition 2, we can therefore write its result as

Hence, we have by using (18) and the results of 2.

where is a positive constant satisfying (19). follows from and the definition of . Moreover, converges strongly to because .

(b) The proof is the same as (a).

(c) From 2., we have and . The demiregularity of C at guarantees as . □

Remark 1.

The operator T defined in Proposition 1 is similar in form to the operators that appeared in [8] (Proposition 2.1) and [12] (Lemma 3.4), but it is no longer an averaged operator. We prove the weak and strong convergence results of the proposed algorithm in this more general case.

3.2. Multi-Step Inertial Parameterized Variable Metric Three-Operator Algorithm

In this subsection, we present the multi-step inertial parameterized variable metric three-operator algorithm, which combines Algorithm (13) and the multi-step inertial technique. Meanwhile, we show the convergence of the proposed algorithm.

Let and . Let . Choose , , and set

where , .
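The multi-step inertial idea can be sketched independently of the rest of the algorithm. The Python snippet below assumes the extrapolation form commonly used in the multi-step inertial literature, in which the current iterate is shifted by a weighted sum of the most recent successive differences; whether this matches the first line of (26) term by term is an assumption, and the function name and the weights are hypothetical.

```python
import numpy as np

def multi_step_extrapolate(history, coeffs):
    """Return x_n + sum_i a_i * (x_{n-i} - x_{n-i-1}).

    history : list [x_n, x_{n-1}, ..., x_{n-s}] of the s+1 most recent iterates
    coeffs  : list [a_0, ..., a_{s-1}] of inertial weights (hypothetical values)
    """
    if len(history) != len(coeffs) + 1:
        raise ValueError("need exactly one more iterate than inertial coefficients")
    y = np.array(history[0], dtype=float)  # copy of the current iterate
    for i, a in enumerate(coeffs):
        y += a * (np.asarray(history[i]) - np.asarray(history[i + 1]))
    return y

history = [np.array([3.0]), np.array([2.0]), np.array([0.0])]  # x_n, x_{n-1}, x_{n-2}
y_n = multi_step_extrapolate(history, coeffs=[0.5, 0.25])
```

With a single coefficient this reduces to the usual one-step (heavy ball type) extrapolation.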

Theorem 2.

Let be generated by Algorithm (26) and let be defined as in Proposition 1. Set , and suppose that the following conditions hold:

(C3) , ;

(C4) such that ;

(C5) , , and

Then the following assertions hold:

1. For every , exists;

2. , converge weakly to the same point of , , converge weakly to the same point of ;

3. Suppose that one of the following holds:

(a) A is uniformly monotone on every nonempty bounded subset of ;

(b) B is uniformly monotone on every nonempty bounded subset of ;

(c) , C is demiregular at x,

then , converge strongly to the same point of .

Proof of Theorem 2.

1. Given , arbitrarily, there exists such that , and for this , there exists such that according to Proposition 1. We choose , , in Proposition 2. Then we have , , , and

Applying Lemma 2, we have

As in (17) and (18), we obtain

where .

Combining (28)–(31), we have

from which it follows that

Since , we have

which implies

By (C4), (C5) and Lemma 4, we have exists and is bounded. Hence, , and are bounded.

2. Since and (27) holds, for all , we have

Particularly,

Further, we have

By (32) and (33), we have

which indicates that .

Since , we have .

Set . Let be a weak limit point of the sequence . Then there exists a subsequence such that . Without loss of generality, we assume that such that . In addition, we have as by (C4).

Define a maximally monotone operator by

By (26), we have

Therefore, from (34), we obtain

Since is sequentially closed in ,

it yields that

Hence, . Using Lemma 3, . Meanwhile, by . Furthermore, by Proposition 1. From , and , we conclude that , . Using , we obtain .

By (30) and (31) and Proposition 2 with , , , , we have

Hence, .

3. Taking , , , and using similar arguments in the proof of 3. in Theorem 1, we obtain and , where . □

Remark 2.

If , (27) reduces to

(35) can be implemented by the following simple online updating rule

where , and are summable sequences for each . For example, one can choose

4. Numerical Results

We consider the following LASSO problem with a nonnegative constraint:

where , , . Let be the indicator function of the closed convex set C, that is,

Set , and . Obviously, , is -Lipschitz continuous and the i-th component of is

where . Similarly, we have

Problem (36) is equivalent to the problem of finding such that
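The building blocks of this three-operator formulation are inexpensive to evaluate. The Python sketch below assumes the usual form of the nonnegative LASSO (a least-squares term ½‖Kx − b‖², an ℓ1 penalty and the constraint x ≥ 0) and the identity metric; with a non-identity metric the resolvents would have to be adapted. The function names and the toy data are hypothetical.

```python
import numpy as np

def project_nonneg(v):
    """Projection onto the nonnegative orthant (resolvent of its normal cone)."""
    return np.maximum(v, 0.0)

def soft_threshold(v, t):
    """Proximal map of t * ||.||_1, applied componentwise."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def least_squares_grad(K, b, x):
    """Gradient of 0.5 * ||K x - b||^2."""
    return K.T @ (K @ x - b)

def lipschitz_constant(K):
    """Lipschitz constant ||K||^2 of the gradient above (squared spectral norm)."""
    return float(np.linalg.norm(K, 2) ** 2)

K = np.array([[1.0, 0.0], [0.0, 2.0]])  # toy data, assumed for illustration
b = np.array([1.0, 2.0])
v = np.array([3.0, -0.5])
```

Each map costs at most one pass over the vector or one pair of matrix-vector products, which is what makes splitting methods attractive for this problem.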

Select any initial value , , , . Set , , , , , , and take eps as the stopping criterion. In the following, we denote Algorithm (13) as PVMTO and Algorithm (26) as MIPVMTO.

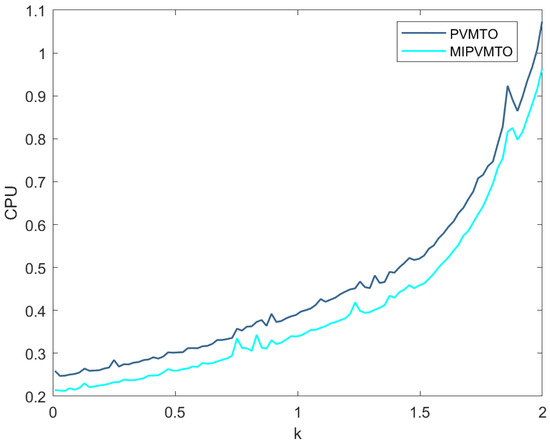

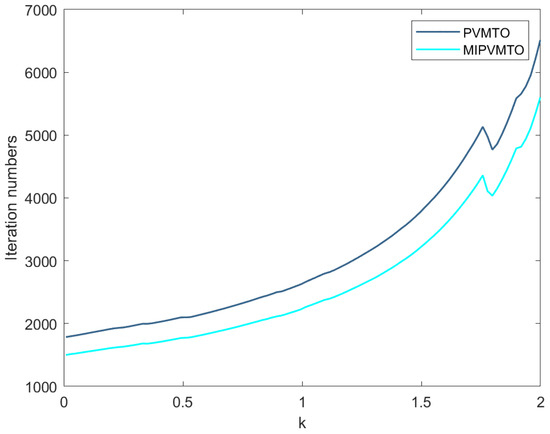

In Algorithms (13) and (26) take , , and take , , eps . Figures 1 and 2 show the effect of the parameter D on the CPU time and the number of iterations of PVMTO and MIPVMTO. As the figures show, MIPVMTO requires considerably fewer iterations and less CPU time than PVMTO. Furthermore, Table 1 lists the CPU time of PVMTO and MIPVMTO for different stopping criteria and different D.

Figure 1.

The effect of on CPU (s).

Figure 2.

The effect of on iteration numbers.

Table 1.

Numerical results of the PVMTO (Algorithm (13)) and the MIPVMTO (Algorithm (26)).

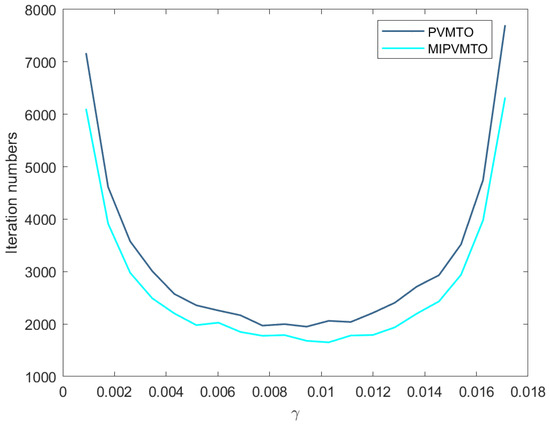

In Algorithms (13) and (26) take , , , and eps . Figure 3 shows the effect of choosing different on the number of iterations of the two algorithms. When , both proposed algorithms need the fewest iterations, and MIPVMTO needs fewer iterations than PVMTO.

Figure 3.

Different with .

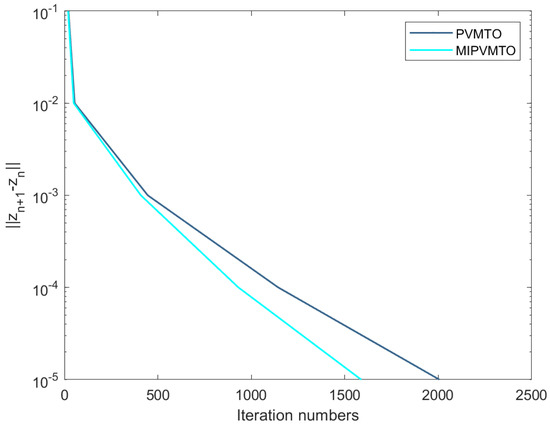

In Algorithms (13) and (26) take , and , . Figure 4 compares the number of iterations of PVMTO and MIPVMTO under different stopping criteria; the algorithm with the inertial term (MIPVMTO) performs better.

Figure 4.

Numerical results with different stopping criteria.

5. Conclusions

In this paper, we propose a parameterized variable metric three-operator algorithm to solve the monotone inclusion problem involving the sum of three operators and prove the strong convergence of the algorithm under some appropriate conditions. To speed up this algorithm, we also propose a multi-step inertial parameterized variable metric three-operator algorithm and analyze its strong convergence. To a certain extent, the proposed algorithm can be seen as a generalization of the parameterized three-operator algorithm [12]. The numerical examples illustrate the efficiency of the proposed algorithms and the effect of the choice of the parameter operator D on running time. In future work, one could investigate whether a regularized version of the parameterized variable metric three-operator algorithm converges to the least-norm solution of the monotone inclusion for the sum of three operators, as shown in [12] for the parameterized three-operator algorithm, and explore practical applications of the algorithms. Another direction is to study self-adaptive versions of the proposed algorithms.

Author Contributions

All authors contributed equally to this article. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Fundamental Research Funds for the Central Universities under Grant No. 3122019185.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Acknowledgments

The authors sincerely thank the reviewers and the editors for their valuable comments and suggestions, which have made this paper more readable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Qin, X.; An, N.T. Smoothing algorithms for computing the projection onto a Minkowski sum of convex sets. Comput. Optim. Appl. 2019, 74, 821–850.

2. Combettes, P.L.; Wajs, V.R. Signal recovery by proximal forward-backward splitting. Multiscale Model. Simul. 2005, 4, 1168–1200.

3. Izuchukwu, C.; Reich, S.; Shehu, Y. Strong convergence of forward–reflected–backward splitting methods for solving monotone inclusions with applications to image restoration and optimal control. J. Sci. Comput. 2023, 94, 73.

4. Briceño-Arias, L.M.; Combettes, P.L. Monotone operator methods for Nash equilibria in non-potential games. Comput. Anal. Math. 2013, 50, 143–159.

5. An, N.T.; Nam, N.M.; Qin, X. Solving k-center problems involving sets based on optimization techniques. J. Glob. Optim. 2020, 76, 189–209.

6. Nemirovski, A.; Juditsky, A.B.; Lan, G.; Shapiro, A. Robust stochastic approximation approach to stochastic programming. SIAM J. Optim. 2009, 19, 1574–1609.

7. Tang, Y.; Wen, M.; Zeng, T. Preconditioned three-operator splitting algorithm with applications to image restoration. J. Sci. Comput. 2022, 92, 106.

8. Davis, D.; Yin, W. A three-operator splitting scheme and its optimization applications. Set-Valued Var. Anal. 2017, 25, 829–858.

9. Cui, F.; Tang, Y.; Yang, Y. An inertial three-operator splitting algorithm with applications to image inpainting. arXiv 2019, arXiv:1904.11684.

10. Malitsky, Y.; Tam, M.K. A forward-backward splitting method for monotone inclusions without cocoercivity. SIAM J. Optim. 2020, 30, 1451–1472.

11. Zong, C.; Tang, Y.; Zhang, G. An inertial semi-forward-reflected-backward splitting and its application. Acta Math. Sin. Engl. Ser. 2022, 38, 443–464.

12. Zhang, C.; Chen, J. A parameterized three-operator splitting algorithm and its expansion. J. Nonlinear Var. Anal. 2021, 5, 211–226.

13. Wang, D.; Wang, X. A parameterized Douglas-Rachford algorithm. Comput. Optim. Appl. 2021, 164, 263–284.

14. Ryu, E.K.; Vũ, B.C. Finding the forward-Douglas–Rachford-forward method. J. Optim. Theory Appl. 2020, 184, 858–876.

15. Yan, M. A primal-dual three-operator splitting scheme. arXiv 2016, arXiv:1611.09805v1.

16. Briceño-Arias, L.M.; Davis, D. Forward-backward-half forward algorithm for solving monotone inclusions. SIAM J. Optim. 2018, 28, 2839–2871.

17. Polyak, B.T. Some methods of speeding up the convergence of iteration methods. USSR Comput. Math. Math. Phys. 1964, 4, 1–17.

18. Chen, C.; Chan, R.H.; Ma, S.; Yang, J. Inertial proximal ADMM for linearly constrained separable convex optimization. SIAM J. Imaging Sci. 2015, 8, 2239–2267.

19. Combettes, P.L.; Glaudin, L.E. Quasi-nonexpansive iterations on the affine hull of orbits: From Mann’s mean value algorithm to inertial methods. SIAM J. Optim. 2017, 27, 2356–2380.

20. Qin, X.; Wang, L.; Yao, J.C. Inertial splitting method for maximal monotone mappings. J. Nonlinear Convex. Anal. 2020, 21, 2325–2333.

21. Dey, S. A hybrid inertial and contraction proximal point algorithm for monotone variational inclusions. Numer. Algorithms 2023, 93, 1–25.

22. Ochs, P.; Chen, Y.; Brox, T.; Pock, T. iPiano: Inertial proximal algorithm for nonconvex optimization. SIAM J. Imaging Sci. 2014, 7, 1388–1419.

23. Dong, Q.L.; Lu, Y.Y.; Yang, J.F. The extragradient algorithm with inertial effects for solving the variational inequality. Optimization 2016, 65, 2217–2226.

24. Chen, G.H.G.; Rockafellar, R.T. Convergence rates in forward-backward splitting. SIAM J. Optim. 1997, 7, 421–444.

25. Combettes, P.L.; Vũ, B.C. Variable metric forward-backward splitting with applications to monotone inclusions in duality. Optimization 2014, 63, 1289–1318.

26. Bonettini, S.; Porta, F.; Ruggiero, V. A variable metric forward-backward method with extrapolation. SIAM J. Sci. Comput. 2016, 38, A2558–A2584.

27. Salzo, S. The variable metric forward-backward splitting algorithm under mild differentiability assumptions. SIAM J. Optim. 2017, 27, 2153–2181.

28. Audrey, R.; Yves, W. Variable metric forward-backward algorithm for composite minimization problems. SIAM J. Optim. 2021, 31, 1215–1241.

29. Vũ, B.C.; Papadimitriou, D. A nonlinearly preconditioned forward-backward splitting method and applications. Numer. Funct. Anal. Optim. 2022, 42, 1880–1895.

30. Bonettini, S.; Rebegoldi, S.; Ruggiero, V. Inertial variable metric techniques for the inexact forward-backward algorithm. SIAM J. Sci. Comput. 2018, 40, A3180–A3210.

31. Lorenz, D.; Pock, T. An inertial forward-backward algorithm for monotone inclusions. J. Math. Imaging Vis. 2015, 51, 311–325.

32. Cui, F.; Tang, Y.; Zhu, C. Convergence analysis of a variable metric forward-backward splitting algorithm with applications. J. Inequal. Appl. 2019, 141, 1–27.

33. Bauschke, H.H.; Combettes, P.L. CMS books in mathematics. In Convex Analysis and Monotone Operator Theory in Hilbert Spaces, 2nd ed.; Springer: Berlin/Heidelberg, Germany, 2017.

34. Aragón-Artacho, F.J.; Torregrosa-Belén, D. A Direct Proof of Convergence of Davis-Yin Splitting Algorithm Allowing Larger Stepsizes. Set-Valued Var. Anal. 2022, 30, 1011–1029.

35. Marino, G.; Xu, H.K. Convergence of generalized proximal point algorithms. Commun. Pure Appl. Anal. 2004, 3, 791–808.

36. Combettes, P.L.; Vũ, B.C. Variable metric quasi-Fejér monotonicity. Nonlinear Anal. 2012, 78, 17–31.


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).