Abstract

In this paper, a novel method for the automatic classification of coronary stenosis based on a feature selection strategy driven by a hybrid evolutionary algorithm is proposed. The main contribution is the characterization of the coronary stenosis anomaly based on the automatic selection of an efficient feature subset. The initial feature set consists of 49 features involving intensity, texture and morphology. Since the feature selection search space was $O(2^n)$, with $n = 49$, it was treated as a high-dimensional combinatorial problem. For this reason, different single and hybrid evolutionary algorithms were compared, where the hybrid method based on the Boltzmann univariate marginal distribution algorithm (BUMDA) and simulated annealing (SA) achieved the best performance using a training set of X-ray coronary angiograms. Moreover, two different databases with 500 and 2700 stenosis images, respectively, were used for training and testing of the proposed method. In the experimental results, the proposed method for feature selection obtained a subset of 11 features, achieving a feature reduction rate of and a classification accuracy of using the training set. In the testing step, the proposed method was compared with different state-of-the-art classification methods in both databases, obtaining a classification accuracy and Jaccard coefficient of and in the first one, and and in the second one, respectively. In addition, based on the proposed method’s execution time for testing images (0.02 s per image), it can be highly suitable for use as part of a clinical decision support system.

1. Introduction

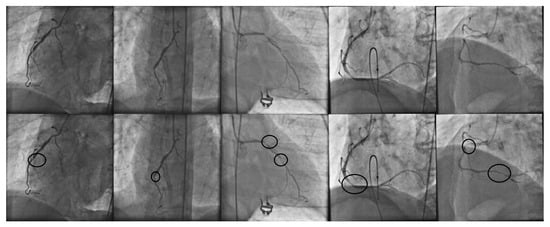

Coronary heart disease is the main cause of morbidity all over the world [1]. Consequently, it is highly important for coronary stenosis to be detected and diagnosed by cardiologists and addressed in computational science. Nowadays, X-ray coronary angiography is the main source of decision making in stenosis diagnosis. In order to detect coronary stenosis, a specialist performs an exhaustive visual examination of the entire angiogram, and based on their knowledge, the stenosis regions are labeled. In order to illustrate the challenging and laborious task carried out by the specialist in terms of the visual examination of coronary angiograms, in Figure 1, a set of X-ray angiograms along with manually detected stenosis regions is presented.

Figure 1.

(First row): set of X-ray coronary angiograms and the corresponding stenosis regions manually detected by cardiologist (second row).

The main disadvantages of working with X-ray coronary angiograms are the high noise levels and low-contrast regions, which make automatic vessel identification, measurement and classification tasks difficult. Several approaches to the automatic stenosis classification problem have been reported. The method proposed by Saad [2] detects the presence of atherosclerosis in a coronary artery image using a vessel-width variation measure. The measurements are computed from a previously segmented image containing only vessel pixels and its corresponding skeleton in order to determine the vessel center line, from which the orthogonal line length of a fixed-size window is computed, moving through the image. Kishore and Jayanthi [3] applied a manually fixed-size window from an enhanced image. The vessel pixels were measured, adding them to intensity values in order to obtain a coronary stenosis grading measure. Other approaches make use of the Hessian matrix properties to enhance or extract vessel trees at the first stage. For instance, the works of Wan et al. [4], Sameh et al. [5], and Cervantes-Sanchez et al. [6] applied the Hessian matrix properties in order to enhance vessel pixels in coronary angiograms. The response image allows for the measurement and extraction of features related to vessel shapes that are used for the automatic classification and grading of coronary stenosis.

Classification techniques and search metaheuristics have also been used to address vessel disease problems. Cervantes-Sanchez et al. [7] proposed a Bayesian-based method using a feature vector that was extracted from the image histogram in order to classify stenosis cases. Taki et al. [8] achieved a competitive result in the categorization of calcified and noncalcified coronary artery plaques using a Bayesian-based classifier. The proposal of Welikala et al. [9] works with retinal vessels, applying a genetic algorithm to reduce the number of features needed to correctly classify proliferative diabetic retinopathy cases. Sreng et al. [10] proposed a hybrid simulated annealing method to select relevant features that are then used with an ensemble bagging classifier in order to produce a suitable screening of the eye. The method of Chen et al. [11] works with a feature vector related to the morphology of bifurcated vessels in order to detect coronary artery disease. A fuzzy criterion was used by Giannoglou et al. [12] in order to select features in the characterization of atherosclerotic plaques, and Wosiak and Zakrzewska [13] proposed an automatic feature selection method by integrating correlation and clustering strategies in cardiovascular disease diagnosis.

On the other hand, the emergence of deep learning techniques such as the convolutional neural network (CNN) has made it possible to apply them to the coronary artery disease problem [14]. A CNN contains a set of layers (convolutional layers) focused on the automatic segmentation of the image in order to keep only the data that allow the CNN to achieve correct classification rates [15,16,17]. Antczak and Liberadzki [18] proposed a method that is able to generate synthetic coronary stenosis and nonstenosis patches in order to improve the CNN training rates. The strategy proposed by Ovalle et al. [19] makes use of transfer learning [20,21,22] in order to achieve correct training and classification rates with complex CNN architectures. One of the main drawbacks of CNNs is the need for databases with a large number of instances in order to achieve correct training rates. Data augmentation techniques [23,24,25,26,27] are commonly used as a way to generate large amounts of instances that are used in the training and testing of the CNN. In addition, it is difficult to identify which features a CNN finds really useful for a correct classification and what they represent [28,29].

In the present paper, a novel method for the automatic classification of coronary stenosis based on feature selection and a hybrid evolutionary algorithm in X-ray angiograms is presented. The proposed method uses evolutionary computation to address the high-dimensional problem of selecting an efficient subset of features from a bank of 49 features, where the search space has a computational complexity of $O(2^n)$, with $n = 49$. To select the best evolutionary technique, different population-based strategies were compared in terms of feature reduction and classification accuracy using a training set of coronary stenosis images. From the comparative analysis, the Boltzmann univariate marginal distribution algorithm (BUMDA) and simulated annealing (SA) were selected for further analysis. In the experiments, two different databases were used. The first database was provided by the Mexican Social Security Institute (IMSS), and it contains 500 images. The second database is that of Antczak [18], which is publicly available and contains 2700 patches. Finally, the proposed method was compared with different state-of-the-art classification methods in terms of classification accuracy and Jaccard coefficient, working with both databases in order to show the classification robustness achieved by the subset of 11 features, which were obtained from the feature selection step using the hybrid BUMDA-SA evolutionary technique.

The remainder of this paper is organized as follows. In Section 2, the background methodology is described. Section 3 presents the proposed method and the hybrid approach that performs the automatic feature selection task. In Section 4, the experiment details and results are described, and finally, conclusions are given in Section 5.

2. Methods

In this section, the strategies and techniques related to the proposed method are described in detail. Section 2.1 describes feature extraction techniques from the literature for distinct feature types, such as texture, intensity and morphology. Subsequently, in Section 2.2, the Boltzmann univariate marginal distribution algorithm and the simulated annealing strategies are described, since they comprise the hybrid evolutionary approach used in the automatic feature selection stage. Finally, in Section 2.3, the support vector machine technique is described, because it is used as the classifier in order to determine if a given instance, which is composed of a feature vector, is classified as positive (stenosis case) or negative (nonstenosis case).

2.1. Feature Extraction

In digital image processing, feature extraction is an important task, because it allows properties or interest objects of an image (global features) to be described, and it is also possible to extract features from specific regions (local features) [30,31]. Different feature types can be extracted from an image, as reported in [32,33,34]. Based on their type, features can be classified as being related to texture, intensity or morphology.

2.1.1. Texture Features

Texture features have had high relevance in different cardiovascular problems [9,35,36,37,38,39]. The most widely used approach in texture feature extraction for grayscale images is the gray-level co-occurrence matrix (GLCM) [40,41,42,43]. The GLCM measures how often pairs of gray levels co-occur at a given relative position in the image. It is represented as a matrix whose rows and columns correspond to the pixel intensities of the entire image or of a region of it. The co-occurrence frequencies are computed based on a specific spatial relationship (offset) denoted by $(\Delta x, \Delta y)$ between a pixel with intensity i and another pixel with intensity j, as follows:

$$C_{\Delta x, \Delta y}(i, j) = \sum_{x=1}^{n} \sum_{y=1}^{m} \begin{cases} 1, & \text{if } I(x, y) = i \text{ and } I(x + \Delta x, y + \Delta y) = j \\ 0, & \text{otherwise} \end{cases}$$

where $C_{\Delta x, \Delta y}(i, j)$ is the frequency at which two pixels with intensities i and j occur at a specific offset $(\Delta x, \Delta y)$; n and m represent the height and width of the image, respectively; and $I(x, y)$ and $I(x + \Delta x, y + \Delta y)$ are the pixel values in image I.
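The co-occurrence counting described above can be illustrated with a minimal pure-Python sketch. The function name `glcm`, the `levels` argument and the tiny 3×3 patch are assumptions made for illustration only; pairs whose neighbor falls outside the image are simply skipped, which is one common convention and not necessarily the one used in the experiments.

```python
def glcm(image, dx, dy, levels):
    """Count how often gray level i co-occurs with gray level j at offset (dx, dy)."""
    n = len(image)       # image height (rows)
    m = len(image[0])    # image width (columns)
    counts = [[0] * levels for _ in range(levels)]
    for x in range(n):
        for y in range(m):
            xn, yn = x + dx, y + dy
            # Skip pairs whose neighbor falls outside the image (assumed convention).
            if 0 <= xn < n and 0 <= yn < m:
                counts[image[x][y]][image[xn][yn]] += 1
    return counts


# Hypothetical 3x3 patch with three gray levels (0, 1, 2).
patch = [
    [0, 0, 1],
    [1, 2, 2],
    [0, 1, 2],
]
# Offset (0, 1): each pixel is paired with its right-hand neighbor.
horizontal = glcm(patch, dx=0, dy=1, levels=3)
print(horizontal)
```

Texture descriptors such as contrast or energy are then computed from the normalized version of this matrix.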

In addition, the Radon transform is also used for texture analysis in medical image processing and feature extraction [44,45,46]. The Radon transform is an alternative way to represent an image. Instead of the original spatial domain of the image, the Radon transform is the projection of the image intensity along a radial line oriented at a specific angle. It can be computed as follows:

$$R(\rho, \theta) = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} f(x, y)\, \delta(\rho - x\cos\theta - y\sin\theta)\, dx\, dy$$

where $R(\rho, \theta)$ is the Radon transform of a function $f(x, y)$ at an angle $\theta$; $\delta(r)$ is the Dirac delta function, which is zero except when $r = 0$, and in the definition of the Radon transform it forces the integration of $f(x, y)$ along the line $\rho - x\cos\theta - y\sin\theta = 0$.

2.1.2. Shape Features

Shape-based features allow measurable information to be extracted about different aspects related to the shape of the arteries, such as the length of a segment, its tortuosity, the number of bifurcations of a segment, the vessel width, etc. However, in order to obtain correct data from shape-based features, a previous segmentation of the original image is required to discard irrelevant information such as noise and background. In the present work, the Frangi method [47] was used in order to extract vessel information. The Frangi method works with the Hessian matrix, which is obtained by convolving the original image with the second-order derivatives of a Gaussian kernel. The Gaussian kernel is represented as follows:

$$G(x, y) = -\exp\left(-\frac{x^2}{2\sigma^2}\right), \quad |y| \le \frac{L}{2}$$

where $\sigma$ is the spread of the Gaussian profile and L is the length of the vessel segment.

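The resultant Hessian matrix is expressed as follows:

$$H = \begin{bmatrix} H_{xx} & H_{xy} \\ H_{yx} & H_{yy} \end{bmatrix}$$

where $H_{xx}$, $H_{xy}$, $H_{yx}$ and $H_{yy}$ are the different convolution responses of the original image with each second-order partial derivative of the Gaussian kernel.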

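The segmentation function defined by Frangi for 2D vessel detection is as follows:

$$f(x, y) = \begin{cases} 0, & \text{if } \lambda_2 > 0 \\ \exp\left(-\dfrac{R_b^2}{2\alpha^2}\right)\left(1 - \exp\left(-\dfrac{S^2}{2\beta^2}\right)\right), & \text{otherwise} \end{cases}$$

The parameter $\alpha$ is used with $R_b$ to control the shape discrimination. The parameter $\beta$ is used with $S^2$ for noise elimination. $R_b$ and $S^2$ are calculated as follows:

$$R_b = \frac{\lambda_1}{\lambda_2}, \qquad S^2 = \lambda_1^2 + \lambda_2^2$$

where $\lambda_1$ and $\lambda_2$ are the eigenvalues of the Hessian matrix, ordered such that $|\lambda_1| \le |\lambda_2|$.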

Since the filter response of the Frangi method can be represented as a grayscale image, an automatic thresholding strategy has to be applied in order to classify vessel and nonvessel pixels. In the Otsu method [48], the threshold value is computed automatically from the pixel intensities by evaluating a weighted sum of the variances of the two classes. The weighted sum is computed as follows:

$$\sigma_w^2(t) = \omega_0(t)\,\sigma_0^2(t) + \omega_1(t)\,\sigma_1^2(t)$$

where the weights $\omega_0$ and $\omega_1$ are the probabilities of the two classes separated by a threshold t, and $\sigma_0^2$ and $\sigma_1^2$ are the statistical variances of the two classes, respectively. The selected threshold is the value of t that minimizes this weighted sum.
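The thresholding idea described above, choosing the threshold that minimizes the weighted sum of the two class variances, can be sketched in a few lines of pure Python. This is only an illustrative exhaustive search under stated assumptions: the function name `otsu_threshold`, the convention that class 0 holds intensities strictly below `t`, and the synthetic two-level pixel list are not taken from the paper.

```python
def otsu_threshold(pixels, levels=256):
    """Return the threshold t that minimizes the weighted within-class variance."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    best_t, best_within = 0, float("inf")
    for t in range(1, levels):
        n0 = sum(hist[:t])      # pixels with intensity < t (class 0)
        n1 = total - n0         # pixels with intensity >= t (class 1)
        if n0 == 0 or n1 == 0:
            continue            # one class is empty: not a valid split
        mu0 = sum(i * hist[i] for i in range(t)) / n0
        mu1 = sum(i * hist[i] for i in range(t, levels)) / n1
        var0 = sum(hist[i] * (i - mu0) ** 2 for i in range(t)) / n0
        var1 = sum(hist[i] * (i - mu1) ** 2 for i in range(t, levels)) / n1
        # Class probabilities act as the weights of the two variances.
        within = (n0 / total) * var0 + (n1 / total) * var1
        if within < best_within:
            best_within, best_t = within, t
    return best_t


# Synthetic example: a dark class around 10 and a bright class around 200.
pixels = [10] * 50 + [200] * 50
t = otsu_threshold(pixels)
binary = [1 if p >= t else 0 for p in pixels]
```

Any threshold that separates the two groups gives zero within-class variance here, so the sketch returns the first such value it encounters.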

On the other hand, several vessel shape-based features are computed from the skeleton of the arteries. In order to extract the vessel skeleton from a previously enhanced image, the medial axis transform is widely used. It is commonly implemented using the Voronoi method, expressed as follows:

$$R_k = \{\, x \in X \mid d(x, P_k) \le d(x, P_j) \ \text{for all } j \ne k \,\}$$

where $R_k$ is the Voronoi region associated with the site $P_k$ (the sites form a tuple of nonempty subsets in space X), which contains all points in X whose distance to $P_k$ is not greater than their distance to the other sites $P_j$; j is any index different from k; and $d(x, P_k)$ is a closeness measure from point x to the site $P_k$. The Euclidean distance is the most commonly used closeness measure, which is defined as follows:

$$d(p, q) = \sqrt{\sum_{i=1}^{n} (q_i - p_i)^2}$$

where $d(p, q)$ is the Euclidean distance between points p and q, i indexes the coordinate of the points in each corresponding dimension and n is the number of dimensions in which p and q are represented.

2.2. Metaheuristics

Selecting features that are relevant for classification in a specific problem is a challenging task. The total number of different feature combinations that can occur is denoted by $2^n$, where n is the number of features involved in the studied problem. In this context, the use of search metaheuristics and evolutionary strategies is convenient for addressing the problem.
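As a worked illustration of the size of this search space for the bank of 49 features used in this work:

$$2^{49} = 562{,}949{,}953{,}421{,}312 \approx 5.6 \times 10^{14}$$

Under the purely hypothetical assumption of one million subset evaluations per second, an exhaustive search would need about $5.6 \times 10^{8}$ s, which is roughly 18 years. This rate is an illustrative figure and not a measurement from the experiments.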

2.2.1. Simulated Annealing

The simulated annealing (SA) algorithm is a stochastic optimization technique inspired by the annealing procedure in metallurgy and ceramics. The goal of annealing is to reduce defects in solid materials by performing controlled heating and cooling steps. In the annealing process, the material is exposed to high temperatures. When a determined temperature is reached, the material is exposed to a controlled cooling process, keeping its molecules in an optimal equilibrium at all times through their correct alignment. The heating and cooling procedures are decisive for the final structure; if the initial temperature is not high enough or the cooling process is too slow or too fast, the resultant material will present defects called metastable states. Kirkpatrick et al. [49] adapted the procedure to the computational field. It is useful for combinatorial and continuous problems where the search space is high-dimensional and difficult to explore exhaustively. The algorithm starts with an initial random solution. In each iteration, a new solution is generated by varying the old one, and it is accepted according to a probability that depends on the current temperature and the decreasing parameter, based on the objective function. The probability is computed by applying the Boltzmann distribution as follows:

$$P(s, s', T) = \exp\left(-\frac{f(s') - f(s)}{T}\right)$$

where $P(s, s', T)$ is the probability based on the Boltzmann distribution; $f(s')$ is the response of the objective function evaluating the current solution denoted by $s'$; $f(s)$ is the value of the objective function evaluated with the previous solution (denoted by s); and T is the current temperature.

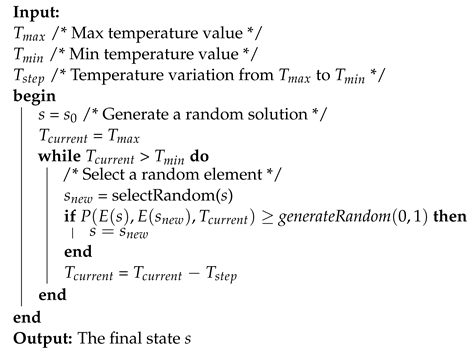

The simulated annealing pseudocode is described in Algorithm 1.

Algorithm 1: Simulated annealing pseudocode.

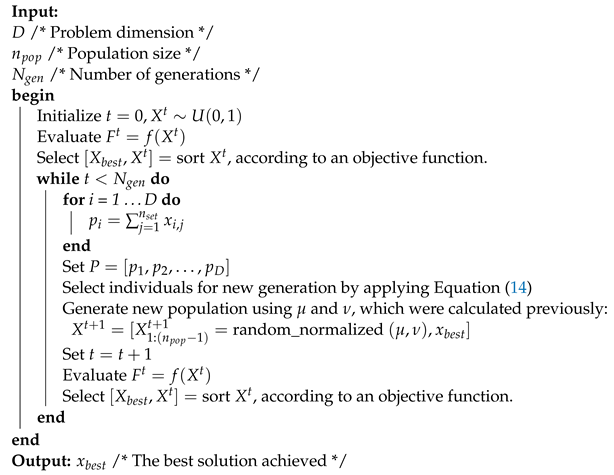
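A minimal sketch of simulated annealing over binary feature masks is given below, under several assumptions that are not taken from the paper: the parameter names `t_max`, `t_min` and `t_step`, the linear cooling schedule, the single-bit-flip neighborhood and the toy cost function are all hypothetical. The sketch minimizes a cost, so a worse candidate is accepted with the Boltzmann probability exp(-delta / T), where delta is the cost increase.

```python
import math
import random


def simulated_annealing(cost, n_bits, t_max=1.0, t_min=0.01, t_step=0.01, seed=0):
    """Minimize `cost` over binary masks of length `n_bits` (illustrative sketch)."""
    rng = random.Random(seed)
    current = [rng.randint(0, 1) for _ in range(n_bits)]  # initial random solution
    current_cost = cost(current)
    best, best_cost = current[:], current_cost
    t = t_max
    while t > t_min:
        # Neighbor: flip one randomly chosen bit of the current mask.
        candidate = current[:]
        candidate[rng.randrange(n_bits)] ^= 1
        candidate_cost = cost(candidate)
        delta = candidate_cost - current_cost
        # Always accept improvements; accept worse moves with Boltzmann probability.
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            current, current_cost = candidate, candidate_cost
            if current_cost < best_cost:
                best, best_cost = current[:], current_cost
        t -= t_step  # linear cooling (assumed schedule)
    return best, best_cost


def toy_cost(mask):
    """Hypothetical cost: penalize missing 'useful' features and extra ones."""
    useful = {0, 3, 5}
    missed = sum(1 for i in useful if mask[i] == 0)
    extra = sum(1 for i, bit in enumerate(mask) if bit and i not in useful)
    return missed + 0.1 * extra


best_mask, best_cost = simulated_annealing(toy_cost, n_bits=8)
```

In a feature selection setting, the cost would instead be derived from the classifier performance obtained with the features marked by the mask.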

2.2.2. Boltzmann Univariate Marginal Distribution Algorithm

The Boltzmann univariate marginal distribution algorithm (BUMDA) [50] is an evolutionary computation technique from the family of estimation of distribution algorithms (EDAs) [51]. In EDAs, new populations are generated based on the probability distribution over the search space of the current generation [52]. BUMDA uses the Boltzmann probability distribution, which makes use of the mean and variance as follows:

$$\mu \approx \frac{\sum_{i} g(x_i)\, x_i}{\sum_{i} g(x_i)}, \qquad v \approx \frac{\sum_{i} g(x_i)\,(x_i - \mu)^2}{1 + \sum_{i} g(x_i)}$$

where $\mu$ and $v$ are the mean and variance obtained from the population, weighted by the objective function, respectively, and $g(x_i)$ is the value of the objective function obtained by the individual $x_i$, which belongs to population X. Consequently, similar to UMDA [53], a fraction of the best individuals is considered to generate the new population. However, in BUMDA, the selection rate for the new population is computed according to a selection threshold $\theta$, as follows:

where npop is the population size.

Only those individuals whose objective function value is greater than or equal to the selection threshold $\theta$ will be considered for the Boltzmann distribution and the generation of the new population. The BUMDA pseudocode is described in Algorithm 2.

Algorithm 2: BUMDA pseudocode.

BUMDA presents several advantages with respect to other population-based metaheuristics. For instance, in BUMDA, only the population size and the maximum number of generations are required, because the selection rate is computed automatically. In addition, the use of the Boltzmann distribution helps to generate populations with widely dispersed individuals, which decreases the risk of becoming trapped in a local optimum.
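The following is a simplified sketch of a BUMDA-style loop for maximizing an objective over real-valued vectors. It is not the authors' implementation: the shifted weights (fitness minus the worst selected fitness plus one), the threshold update based on the median fitness, the elitism step and the toy objective are assumptions made for illustration. For feature selection, each real vector could be decoded into a binary mask, for example by thresholding at 0.5, which is also an assumption.

```python
import random


def bumda(objective, n_dims, n_pop=30, n_gen=40, low=0.0, high=1.0, seed=0):
    """Maximize `objective` with a simplified BUMDA-style estimation loop."""
    rng = random.Random(seed)
    pop = [[rng.uniform(low, high) for _ in range(n_dims)] for _ in range(n_pop)]
    theta = float("-inf")                 # selection threshold
    best, best_fit = None, float("-inf")
    for _ in range(n_gen):
        fits = [objective(ind) for ind in pop]
        ranked = sorted(zip(fits, pop), key=lambda pair: pair[0], reverse=True)
        if ranked[0][0] > best_fit:
            best_fit, best = ranked[0][0], ranked[0][1][:]
        # Assumed truncation rule: the threshold never decreases.
        theta = max(theta, ranked[n_pop // 2][0])
        selected = [(f, x) for f, x in ranked if f >= theta]
        worst = selected[-1][0]
        # Shift fitness values so that every weight is positive (assumed).
        weights = [f - worst + 1.0 for f, _ in selected]
        total = sum(weights)
        mean = [sum(w * x[d] for w, (_, x) in zip(weights, selected)) / total
                for d in range(n_dims)]
        var = [sum(w * (x[d] - mean[d]) ** 2 for w, (_, x) in zip(weights, selected))
               / (1.0 + total) for d in range(n_dims)]
        # Sample the next population from independent Gaussians, one per dimension.
        pop = [[rng.gauss(mean[d], var[d] ** 0.5) for d in range(n_dims)]
               for _ in range(n_pop - 1)]
        pop.append(best[:])               # elitism: keep the best individual found
    return best, best_fit


def toy_objective(x):
    """Hypothetical objective with its maximum (0) at x = (0.5, ..., 0.5)."""
    return -sum((xi - 0.5) ** 2 for xi in x)


solution, fitness_value = bumda(toy_objective, n_dims=5)
```

Only the population size and the number of generations need to be chosen by the user here, which mirrors the advantage noted above.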

2.3. Support Vector Machines

Support vector machines (SVMs) are supervised learning strategies originally designed as linear separators for binary classification [54,55]. When the instances are not linearly separable (classifiable) in their original representation space, the SVM projects the instances from their original representation space to a higher-dimensional space, where the linear classification can be made [56]. In order to perform the projections, the SVM makes use of those instances lying on both sides of the separation line (2-D), plane (3-D) or hyperplane (4-D or higher). The hyperplane depends only on the support vectors and not on any other observations. The projection of the training instances in a space $X$ to a higher-dimensional feature space $F$ is performed via a Mercer kernel operator $K$. For given training data $x_1, \ldots, x_n$ that are vectors in some space $X \subseteq \mathbb{R}^d$, the support vectors can be considered as a set of classifiers expressed as follows [57]:

$$f(x) = \sum_{i=1}^{n} \alpha_i K(x_i, x)$$

When K satisfies the Mercer condition [58], it can be expressed as follows:

$$K(u, v) = \Phi(u) \cdot \Phi(v)$$

where $\Phi : X \rightarrow F$ and “·” denotes an inner product. With this assumption, f can be rewritten as follows:

$$f(x) = w \cdot \Phi(x), \quad \text{where } w = \sum_{i=1}^{n} \alpha_i \Phi(x_i)$$
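To make the kernel form of the classifier concrete, the sketch below evaluates a decision value as a weighted sum of kernel evaluations between stored support vectors and a new instance. The radial basis function kernel, the `gamma` value, the signed coefficients (labels folded into the coefficients, no bias term) and the two toy support vectors are assumptions for illustration, not the configuration used in the experiments.

```python
import math


def rbf_kernel(u, v, gamma=0.5):
    """Gaussian (RBF) kernel, one common choice that satisfies the Mercer condition."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(u, v)))


def decision_value(x, support_vectors, alphas, kernel=rbf_kernel):
    """Weighted sum of kernel evaluations between each support vector and x."""
    return sum(a * kernel(sv, x) for a, sv in zip(alphas, support_vectors))


# Hypothetical model: one positive and one negative support vector.
support_vectors = [(0.0, 0.0), (2.0, 2.0)]
alphas = [1.0, -1.0]  # signed coefficients (assumed to absorb the class labels)

near_positive = decision_value((0.1, 0.0), support_vectors, alphas)
near_negative = decision_value((2.0, 1.9), support_vectors, alphas)
midpoint = decision_value((1.0, 1.0), support_vectors, alphas)
```

The sign of the decision value gives the predicted class, and a point equidistant from both support vectors lies on the decision boundary in this toy model.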

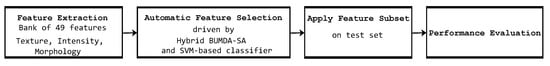

3. Proposed Method

The proposed method consists of four steps: feature extraction, automatic feature selection, feature subset testing and performance evaluation. The first stage is focused on the extraction of 49 features from the image database by considering texture, intensity and morphology feature types. The extracted texture features were those proposed by Haralick [59], and the morphological features were based on Welikala [9]. The bank of 49 features is described below.

- 1. The minimum pixel intensity present in the patch.

- 2. The maximum pixel intensity present in the patch.

- 3. The mean pixel intensity in the patch.

- 4. The standard deviation of the pixel intensities in the patch.

- 5–18. Features 5 to 18 are composed of the Haralick features: angular second moment (energy), contrast, correlation, variance, inverse difference moment (homogeneity), sum average, sum variance, sum entropy, entropy, difference variance, difference entropy, information measure of correlation 1, information measure of correlation 2, maximum correlation coefficient.

- 19–32. The Haralick features applied to the Radon transform response of the patch: angular second moment (energy), contrast, correlation, variance, inverse difference moment (homogeneity), sum average, sum variance, sum entropy, entropy, difference variance, difference entropy, information measure of correlation 1, information measure of correlation 2, maximum correlation coefficient.

- 33. The Radon ratio-X measure.

- 34. The Radon ratio-Y measure.

- 35. The mean of pixel intensities from the Radon transform response of the patch.

- 36. The standard deviation of the pixel intensities from the Radon transform response of the patch.

- 37. The vessel pixel count in the patch.

- 38. The vessel segment count in the patch.

- 39. Vessel density. The rate of vessel pixels in the patch.

- 40. Tortuosity 1. The tortuosity of each segment is calculated using the true length (measured with the chain code) divided by the Euclidean length. The mean tortuosity is calculated from all the segments within the patch.

- 41. Sum of vessel lengths.

- 42. Number of bifurcation points. The number of bifurcation points within the patch when vessel segments were extracted.

- 43. Gray-level coefficient of variation. The ratio of the standard deviation to the mean of the gray level of all segment pixels within the patch.

- 44. Gradient mean. The mean gradient magnitude along all segment pixels within the subwindow, calculated using the Sobel gradient operator applied on the preprocessed image.

- 45. Gradient coefficient of variation. The ratio of the standard deviation to the mean of the gradient of all segment pixels within the subwindow.

- 46. Mean vessel width. Skeletonization correlates to vessel center lines. The distance from the segment pixel to the closest boundary point of the vessel using the vessel map prior to skeletonization. This gives the half-width at that point, which is then multiplied by 2 to achieve the full vessel width. The mean is calculated for all segment pixels within the subwindow.

- 47. The minimum standard deviation of the vessel length, based on the vessel segments present in the patch. The segments are obtained by the tortuosity points along the vessel.

- 48. The maximum standard deviation of the vessel length, based on the vessel segments present in the patch. The segments are obtained by the tortuosity points along the vessel.

- 49. The mean of the standard deviations of the vessel length, based on the vessel segments present in the patch. The segments are obtained by the tortuosity points along the vessel.

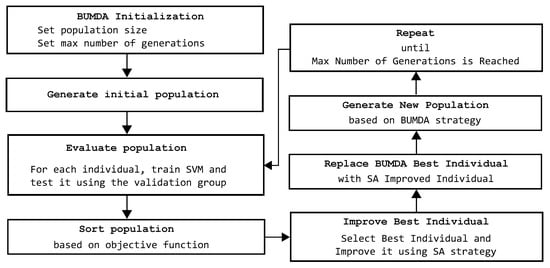

In Figure 2, the overall steps of the proposed hybrid evolutionary method are illustrated.

Figure 2.

Overall steps of the proposed method based on feature selection to classify coronary stenosis.

In the second step, the automatic feature selection task is performed. It is driven by the proposed hybrid evolutionary strategy involving the BUMDA and SA techniques. In this stage, BUMDA is initialized and iterated until the maximum number of generations is reached. In the third step, the selected feature subset is tested using testing cases, and finally, the obtained classification results are measured based on the accuracy and Jaccard coefficient metrics in order to evaluate their performance.

In Figure 3, the hybrid evolutionary strategy is described in detail. This stage of the proposed method is focused on the automatic feature selection task. It starts with the BUMDA initialization, requiring only the max number of generations and the population size. With BUMDA being a population-based technique, it produces a set of solutions on each iteration. Each solution indicates which features will be used and which will be discarded. Consequently, for each solution, a particular SVM is trained using only the feature subset expressed in the solution. On each BUMDA generation, different SVMs are trained according to each feature vector, which is represented by each individual in the BUMDA population. Based on the SVM training accuracy and the number of selected features, the best individual in each generation is selected. In the next step, the previously selected individual is improved by the SA strategy. Since SA is a single-solution technique, it is useful to improve the best solution produced by the BUMDA. If the SA-obtained result is higher than the best result obtained by BUMDA, its best individual is replaced by the individual improved by the SA. When the max number of BUMDA generations is reached, the individual with the highest fitness value over all generations is selected as the best solution achieved. This solution contains the selected feature subset, which will be directly applied on the test set of coronary stenosis images. In this stage, the use of a hybrid evolutionary strategy based on the BUMDA and SA techniques is relevant, because SA helps to further reduce the number of features represented in the best solution achieved in each BUMDA generation, at the same time keeping the training accuracy rate, or even improving it.

Figure 3.

Steps of the proposed hybrid evolutionary method focused on the automatic feature selection task in order to determine the best tradeoff between number of features and classification rate.
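The per-generation refinement described above (select the best individual, refine it with a single-solution search, and keep the refinement only if it scores higher) can be sketched as follows. Everything in this block is hypothetical: the paper does not state the fitness formula, so the accuracy-minus-penalty tradeoff, the `penalty` weight, the stand-in accuracy function and the `drop_last_selected` refinement (used here in place of simulated annealing) are assumptions for illustration only.

```python
def select_columns(row, mask):
    """Keep only the feature values whose mask bit is 1."""
    return [value for value, bit in zip(row, mask) if bit]


def fitness(mask, accuracy_fn, penalty=0.1):
    """Assumed tradeoff: reward accuracy, penalize the fraction of features kept."""
    if not any(mask):
        return 0.0  # an empty subset cannot train a classifier
    return accuracy_fn(mask) - penalty * sum(mask) / len(mask)


def refine_best(population, score, refine):
    """Refine the best individual; keep the refinement only if it scores higher."""
    best = max(population, key=score)
    improved = refine(best)
    return improved if score(improved) > score(best) else best


def toy_accuracy(mask):
    """Stand-in for the training accuracy of a classifier (hypothetical)."""
    return 0.9 if mask[0] and mask[2] else 0.5


def drop_last_selected(mask):
    """Stand-in for the SA refinement: switch off the last selected feature."""
    out = mask[:]
    for i in range(len(out) - 1, -1, -1):
        if out[i]:
            out[i] = 0
            break
    return out


population = [[1, 1, 1, 1], [1, 0, 1, 1], [0, 1, 0, 1]]
score = lambda mask: fitness(mask, toy_accuracy)
result = refine_best(population, score, drop_last_selected)
reduced_row = select_columns([5.0, 7.0, 9.0, 11.0], result)
```

In this toy run the refinement removes one redundant feature without changing the stand-in accuracy, so the refined mask replaces the generation's best individual.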

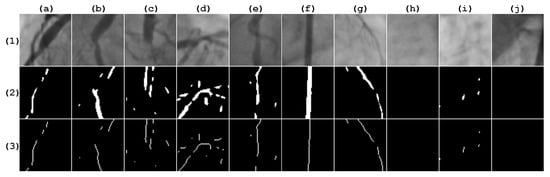

For the experiments, two different image databases were used. The first database was provided by the Mexican Social Security Institute (IMSS) and approved by a local committee under reference R-2019-1001-078. It contains 500 coronary image patches, balanced between positive and negative stenosis cases. From this database, 400 instances were used for the automatic feature selection stage and the remaining 100 instances were used for testing after this stage ended. All patch sizes were pixels and were validated by a cardiologist. Figure 4 illustrates sample patches of the IMSS database with their respective vessel segmentation response and skeleton, according to the Frangi method, from which the morphology-based feature extraction task was performed.

Figure 4.

Patches taken from the IMSS database. Row (1) contains the original image. Row (2) contains the corresponding Frangi response, which is binarized, applying the Otsu method. Row (3) contains the corresponding vessel skeleton. Columns (a–e) are positive stenosis samples. Columns (f–j) are negative stenosis samples.

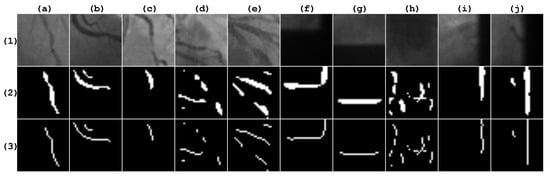

The second database was provided by Antczak [18] and is publicly available. It contains 2700 instances, which are also balanced for positive and negative stenosis cases. From this database, 2160 instances were used for training and the remaining 540 instances were used for testing. Figure 5 illustrates sample patches of the Antczak database with their respective vessel segmentation response and skeleton, according to the Frangi method.

Figure 5.

Patches taken from the Antczak database. Row (1) contains the original image. Row (2) contains the corresponding Frangi response, which is binarized with the Otsu method. Row (3) contains the corresponding vessel skeleton. Columns (a–e) are positive stenosis samples. Columns (f–j) are negative stenosis samples.

In order to evaluate the performance of the proposed method, the accuracy metric (Acc) and the Jaccard similarity coefficient (JC) were adopted. The accuracy metric considers the fraction of cases correctly classified as positive or negative by defining four necessary measures: true-positive cases (TP), true-negative cases (TN), false-positive cases (FP) and false-negative cases (FN). The TP value is the fraction of positive cases classified correctly. The TN value is the fraction of negative cases classified correctly. The FP value is the fraction of negative cases classified as positive. The FN value is the fraction of positive cases classified as negative. Based on this, the accuracy is computed as follows:

$$Acc = \frac{TP + TN}{TP + TN + FP + FN}$$

The JC measures the similarity of two element sets. Applying this principle, it is possible to measure the accuracy of a classifier using only positive instances, as follows:

$$JC = \frac{TP}{TP + FP + FN}$$
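Both metrics can be computed directly from the four confusion-matrix counts, as in the short sketch below. The standard definitions are used (correct cases over all cases for accuracy, and true positives over true positives plus both error types for the Jaccard coefficient). The counts in the example are hypothetical and are not taken from the confusion matrices reported in this paper.

```python
def accuracy(tp, tn, fp, fn):
    """Fraction of all cases that were classified correctly."""
    return (tp + tn) / (tp + tn + fp + fn)


def jaccard(tp, fp, fn):
    """Jaccard coefficient: true positives over all cases involving the positive class."""
    return tp / (tp + fp + fn)


# Hypothetical counts for a test set of 100 balanced cases.
tp, tn, fp, fn = 40, 45, 5, 10
acc = accuracy(tp, tn, fp, fn)
jc = jaccard(tp, fp, fn)
print(f"Acc = {acc:.2f}, JC = {jc:.2f}")
```

Because the Jaccard coefficient ignores true negatives, it is never larger than the accuracy when the same counts are used, which makes it the stricter of the two measures.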

It is important to mention that only the IMSS database was used for the automatic feature selection stage. With the Antczak database, only the feature subset obtained by the proposed method was used in order to assess the method’s effectiveness. Additionally, classic search techniques from the literature, such as tabu search (TS) [60] and iterated local search (ILS) [61], were also included in the experimentation. For the hybrid approaches, the simulated annealing strategy was used in all experiments in order to improve the best solution achieved by each particular technique.

4. Results and Discussion

In this section, the proposed method for feature selection and automatic classification is compared with different state-of-the-art methods using two databases of X-ray angiograms. All the experiments were performed using MATLAB version 2018 on a computer with an Intel Core i7 processor and 8 GB of RAM.

Table 1 describes the parameter settings of the compared methods used in the automatic feature selection stage, considering the same conditions for all of them in order to avoid biased measurements.

Table 1.

Main parameter settings of compared methods in the automatic feature selection stage.

The SA strategy was configured with , and . In order to ensure the obtained results, the proposed method was performed with 30 independent trials. For the SVM, 1000 max iterations were established using a cross-validation with . The parameter values for all techniques described previously were set taking into account the tradeoff between the classification accuracy and the execution time required to achieve a solution.

In Table 2, a comparative analysis related to the best results obtained by different strategies during the automatic feature selection stage is presented.

Table 2.

Comparative analysis of 30 runs between different evolutionary and path-based metaheuristics in terms of accuracy using the training set of the IMSS database. The SVM method was set as the classifier in the experiments.

Based on the results described in Table 2, the SVM training efficiency was improved in almost all cases when only a feature subset was used instead of the full set with 49 features. This behavior is because of the difficulty in projecting a high amount of overlapped data to dimensional orders higher than 49-D. Consequently, the proposed method achieved the best result since only 11 of 49 features were selected. This means that of the initial feature set was discriminated, achieving a training rate efficiency of at the same time in terms of the accuracy metric. In addition, some of the compared methods presented important variations on the best solution achieved according to the standard deviation accuracy, which gives some evidence of possible local-optima falls in some of the trials. In contrast, the standard deviation for the accuracy of the proposed method was lower, and considering the tradeoff between all measured factors, such as number of selected features, max training accuracy, mean training accuracy and standard deviation accuracy, the proposed method achieved the highest score.
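As a worked check of the reduction implied by selecting 11 of the 49 features, the fraction of discarded features is:

$$\frac{49 - 11}{49} = \frac{38}{49} \approx 0.776$$

That is, roughly 78% of the initial feature bank is discarded. This value is derived here only from the feature counts stated in the text.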

After the automatic feature selection process was performed, in the next stage, the best feature subset, which was achieved by the hybrid BUMDA-SA method, was tested using the test cases from the IMSS and the Antczak databases, separately. Table 3 contains the corresponding confusion matrix, from which the accuracy and Jaccard coefficient metrics are computed.

Table 3.

Confusion matrix using 100 test cases from the IMSS database using the proposed method.

In Table 4, a comparative analysis between the proposed method and different state-of-the-art methods is presented, using the test set of 100 images of the IMSS database. The results of the proposed method are described based on the confusion matrix presented in Table 3.

Table 4.

Comparison of stenosis classification performance between the proposed method and different methods of the state of the art, using the test set of the IMSS database in terms of the accuracy and Jaccard coefficient.

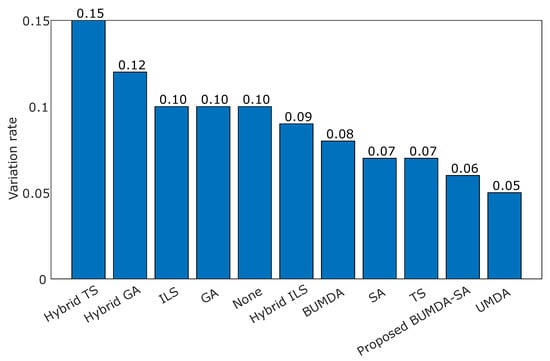

According to the data presented in Table 4, the proposed method achieved the highest classification rate in terms of both the accuracy and the Jaccard coefficient. Contrasting the accuracy obtained in the training and testing stages reveals how much the performance of each compared strategy varies between the two. Figure 6 illustrates these differences in accuracy between the training and testing stages for the contrasted strategies.

Figure 6.

Variation rate computed as the difference in accuracy between training and testing phases for the applied strategies.

The accuracy rates show how competitive each feature subset was at classifying stenosis cases. Some feature subsets show evidence of possible overfitting during training, such as that of the Hybrid-TS, whose training accuracy was higher than the accuracy obtained with the testing cases. In contrast, the proposed method showed a smaller difference in performance between the training and testing phases than the Hybrid-TS and the Hybrid-GA techniques, indicating that the subset of 11 features is highly suitable for the classification task.
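The variation rate used in this comparison is simply the training accuracy minus the testing accuracy. A minimal sketch follows, using hypothetical method names and accuracy values (not the results reported here), that ranks strategies from the smallest to the largest gap:

```python
def train_test_gap(train_acc: float, test_acc: float) -> float:
    """Difference in accuracy between training and testing phases."""
    return train_acc - test_acc


def rank_by_gap(results: dict[str, tuple[float, float]]) -> list[tuple[str, float]]:
    """Sort methods by their train/test gap, smallest gap first."""
    gaps = [(name, train_test_gap(train, test)) for name, (train, test) in results.items()]
    return sorted(gaps, key=lambda item: item[1])


if __name__ == "__main__":
    # Hypothetical (training accuracy, testing accuracy) pairs.
    results = {"method_a": (0.95, 0.80), "method_b": (0.90, 0.88)}
    for name, gap in rank_by_gap(results):
        print(f"{name}: gap = {gap:.2f}")
```

A large positive gap is a common symptom of overfitting, whereas a small gap suggests that the selected subset generalizes to unseen cases.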

In order to evaluate the subset of 11 features achieved by the proposed hybrid BUMDA-SA method in the automatic feature selection stage, the Antczak database was used. In Table 5, the confusion matrix obtained from the proposed method using the Antczak database is presented.

Table 5.

Confusion matrix of 700 testing cases, which corresponds to the Antczak database, using the subset of 11 features obtained by the proposed method.

On the other hand, in Table 6, the results obtained by the proposed method and different strategies in the testing stage using the Antczak database are presented.

Table 6.

Automatic classification testing rates achieved by the proposed method and different strategies using the Antczak database in terms of accuracy and Jaccard coefficient.

Based on the results presented in Table 6, the highest accuracy and Jaccard coefficient were achieved by the proposed method. This result shows that the feature subset found by the proposed hybrid BUMDA-SA method is suitable. According to the results presented in Table 4 and Table 6, the GA and UMDA techniques achieved accuracy rates very close to that of the proposed method. However, the proposed method outperformed the other methods in terms of the reduction rate, which is related to the number of selected features. In addition, the proposed method also achieved the highest Jaccard coefficient. These findings show the importance of using a hybrid strategy in this multiobjective problem, where it is required to maintain or improve a high accuracy rate in the classification task while using a minimum number of features.
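One way to reason about this two-objective tradeoff (maximize accuracy, minimize the number of features) is Pareto dominance. The sketch below is only an illustration of that idea and is not the selection criterion used by the proposed method; the candidate names and values are hypothetical.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    accuracy: float   # objective to maximize
    n_features: int   # objective to minimize


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if a is no worse than b in both objectives and strictly better in one."""
    no_worse = a.accuracy >= b.accuracy and a.n_features <= b.n_features
    strictly_better = a.accuracy > b.accuracy or a.n_features < b.n_features
    return no_worse and strictly_better


def pareto_front(candidates: list[Candidate]) -> list[Candidate]:
    """Candidates that are not dominated by any other candidate."""
    return [c for c in candidates if not any(dominates(o, c) for o in candidates)]


if __name__ == "__main__":
    # Hypothetical feature subsets (values are illustrative only).
    candidates = [
        Candidate("subset_a", 0.90, 11),
        Candidate("subset_b", 0.90, 20),
        Candidate("subset_c", 0.92, 25),
        Candidate("subset_d", 0.85, 30),
    ]
    print([c.name for c in pareto_front(candidates)])
```

In this toy example, a subset with similar accuracy but fewer features dominates a larger one, which mirrors the argument that a close accuracy alone does not make two methods equivalent.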

Finally, in Table 7, the set of 11 features obtained by the proposed method is described, along with the frequency selection rate obtained from the statistical analysis of the 30 independent runs.

Table 7.

Frequency rate and description of the set of 11 features obtained from the proposed hybrid BUMDA-SA method.

According to the results presented in Table 7, the Min, Sum Average and Sum Variance features, which correspond to intensity and texture, have the highest frequency selection rates, followed by the Bifurcation Points and Radon-Sum features, which correspond to morphology and Radon-based texture, respectively. This analysis is relevant because it highlights the importance of performing the automatic feature selection process with a large number of features involving different feature types such as texture, intensity and morphology.
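The frequency selection rate of a feature can be understood as the fraction of independent runs in which that feature appears in the best subset. The following sketch assumes that each run produces a binary mask over the feature bank; the masks and the reduced feature list are hypothetical and serve only to illustrate the computation.

```python
from collections import Counter


def selection_frequency(runs: list[list[int]], feature_names: list[str]) -> dict[str, float]:
    """Fraction of runs in which each feature was selected (mask bit set to 1)."""
    if not runs:
        raise ValueError("at least one run is required")
    counts: Counter[str] = Counter()
    for mask in runs:
        if len(mask) != len(feature_names):
            raise ValueError("mask length must match the number of features")
        counts.update(name for name, bit in zip(feature_names, mask) if bit)
    return {name: counts[name] / len(runs) for name in feature_names}


if __name__ == "__main__":
    # Hypothetical masks from 4 runs over 3 features (the study used 30 runs and 49 features).
    names = ["Min", "Sum Average", "Bifurcation Points"]
    runs = [[1, 1, 0], [1, 0, 1], [1, 1, 0], [0, 1, 0]]
    for name, rate in selection_frequency(runs, names).items():
        print(f"{name}: {rate:.2f}")
```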

5. Conclusions

In this paper, a novel method for the automatic classification of coronary stenosis in X-ray angiograms was introduced. The method is based on feature selection using a hybrid evolutionary algorithm and a support vector machine for classification. The hybrid method was used to explore the high-dimensional search space, O(2^n) with n = 49, of a bank of 49 features involving properties of intensity, texture, and morphology. To determine the best evolutionary method, a comparative analysis in terms of feature reduction rate and classification accuracy was performed using a training set of X-ray images. From the analysis, the method using BUMDA and SA achieved the best performance, selecting a subset of 11 features, which corresponds to discarding 38 of the 49 initial features (approximately 78%). Moreover, two different databases of coronary stenosis were used: the first one, provided by the Mexican Social Security Institute (IMSS), contains 500 images, and the second one is publicly available and contains 2700 patches. In the experimental results, the proposed method, using the set of 11 selected features, was compared with different state-of-the-art classification methods and achieved the highest accuracy and Jaccard coefficient in both databases. Finally, considering the execution time of the proposed method when testing images (0.02 s per image), it can be useful in assisting cardiologists in clinical practice or as part of a computer-aided diagnostic system.

Author Contributions

Conceptualization, M.-A.G.-R., C.C. and I.C.-A.; methodology, M.-A.G.-R., C.C., I.C.-A. and J.-M.L.-H.; project administration, I.C.-A., C.C. and J.-M.L.-H.; software, M.-A.G.-R., I.C.-A., J.-M.L.-H. and C.C.; supervision, I.C.-A., S.-E.S.-M. and M.-A.H.-G.; validation, S.-E.S.-M., M.-A.H.-G. and I.C.-A.; visualization, J.-M.L.-H., M.-A.H.-G. and S.-E.S.-M.; writing—original draft, M.-A.G.-R., C.C., I.C.-A., J.-M.L.-H., M.-A.H.-G. and S.-E.S.-M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

This work was supported by CONACyT under Project IxM-CONACyT No. 3150-3097.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
| --- | --- |
| BUMDA | Boltzmann Univariate Marginal Distribution Algorithm |
| CNN | Convolutional Neural Network |
| EDA | Estimation of Distribution Algorithm |
| GA | Genetic Algorithm |
| ILS | Iterated Local Search |
| SA | Simulated Annealing |
| SVM | Support Vector Machine |
| TS | Tabu Search |
| UMDA | Univariate Marginal Distribution Algorithm |

References

- Tsao, C. Heart Disease and Stroke Statistics—2022 Update: A Report From the American Heart Association. Circulation 2022, 145, 153–639.

- Saad, I.A. Segmentation of Coronary Artery Images and Detection of Atherosclerosis. J. Eng. Appl. Sci. 2018, 13, 7381–7387.

- Kishore, A.N.; Jayanthi, V. Automatic stenosis grading system for diagnosing coronary artery disease using coronary angiogram. Int. J. Biomed. Eng. Technol. 2019, 31, 260–277.

- Wan, T.; Feng, H.; Tong, C.; Li, D.; Qin, Z. Automated identification and grading of coronary artery stenoses with X-ray angiography. Comput. Methods Programs Biomed. 2018, 167, 13–22.

- Sameh, S.; Azim, M.A.; AbdelRaouf, A. Narrowed Coronary Artery Detection and Classification using Angiographic Scans. In Proceedings of the 2017 12th International Conference on Computer Engineering and Systems (ICCES), Cairo, Egypt, 9–20 December 2017; pp. 73–79.

- Cervantes-Sanchez, F.; Cruz-Aceves, I.; Hernandez-Aguirre, A. Automatic detection of coronary artery stenosis in X-ray angiograms using Gaussian filters and genetic algorithms. AIP Conf. Proc. 2016, 1747, 020005.

- Cervantes-Sanchez, F.; Cruz-Aceves, I.; Hernandez-Aguirre, A.; Hernandez-Gonzalez, M.A.; Solorio-Meza, S.E. Automatic Segmentation of Coronary Arteries in X-ray Angiograms using Multiscale Analysis and Artificial Neural Networks. MDPI Appl. Sci. 2019, 9, 5507.

- Taki, A.; Roodaki, A.; Setahredan, S.K.; Zoroofi, R.A.; Konig, A.; Navab, N. Automatic segmentation of calcified plaques and vessel borders in IVUS images. Int. J. Comput. Assist. Radiol. Surg. 2008, 2008, 347–354.

- Welikala, R.; Fraz, M.; Dehmeshki, J.; Hoppe, A.; Tah, V.; Mann, S.; Williamson, T.; Barman, S. Genetic algorithm based feature selection combined with dual classification for the automated detection of proliferative diabetic retinopathy. Comput. Med. Imaging Graph. 2015, 43, 64–77.

- Sreng, S.; Maneerat, N.; Hamamoto, K.; Panjaphongse, R. Automated Diabetic Retinopathy Screening System Using Hybrid Simulated Annealing and Ensemble Bagging Classifier. Appl. Sci. 2018, 8, 1198.

- Chen, X.; Fu, Y.; Lin, J.; Ji, Y.; Fang, Y.; Wu, J. Coronary Artery Disease Detection by Machine Learning with Coronary Bifurcation Features. Appl. Sci. 2020, 10, 7656.

- Giannoglou, V.G.; Stavrakoudis, D.G.; Theocharis, J.B. IVUS-based characterization of atherosclerotic plaques using feature selection and SVM classification. In Proceedings of the 2012 IEEE 12th International Conference on Bioinformatics & Bioengineering (BIBE), Larnaca, Cyprus, 11–13 November 2012; pp. 715–720.

- Wosiak, A.; Zakrzewska, D. Integrating Correlation-Based Feature Selection and Clustering for Improved Cardiovascular Disease Diagnosis. Complexity 2021, 2018, 2520706.

- Gudigar, A.; Nayak, S.; Samanth, J.; Raghavendra, U.; A J, A.; Barua, P.D.; Hasan, M.N.; Ciaccio, E.J.; Tan, R.S.; Rajendra Acharya, U. Recent Trends in Artificial Intelligence-Assisted Coronary Atherosclerotic Plaque Characterization. Int. J. Environ. Res. Public Health 2021, 18, 10003.

- Raghavendra, U.; Bhat, N.S.; Gudijar, A. Automated system for the detection of thoracolumbar fractures using a CNN architecture. Future Gener. Comput. Syst. 2018, 85, 184–189.

- Raghavendra, U.; Fujita, H.; Bhandary, S.V.; Gudigar, A.; Hong, T.J.; Acharya, U.R. Deep convolution neural network for accurate diagnosis of glaucoma using digital fundus images. Inf. Sci. 2018, 441, 41–49.

- Ibarra-Vazquez, G.; Olague, G.; Chan-Ley, M.; Puente, C.; Soubervielle-Montalvo, C. Brain programming is immune to adversarial attacks: Towards accurate and robust image classification using symbolic learning. Swarm Evol. Comput. 2022, 71, 101059.

- Antczak, K.; Liberadzki, Ł. Stenosis Detection with Deep Convolutional Neural Networks. Proc. MATEC Web Conf. 2018, 210, 04001.

- Ovalle-Magallanes, E.; Avina-Cervantes, J.G.; Cruz-Aceves, I.; Ruiz-Pinales, J. Transfer Learning for Stenosis Detection in X-ray Coronary Angiography. Mathematics 2020, 8, 1510.

- Azizpour, H.; Sharif Razavian, A.; Sullivan, J.; Maki, A.; Carlsson, S. From Generic to Specific Deep Representations for Visual Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Boston, MA, USA, 7–12 June 2015; pp. 36–45.

- Xu, S.; Wu, H.; Bie, R. CXNet-m1: Anomaly Detection on Chest X-rays with Image-Based Deep Learning. IEEE Access 2018, 7, 4466–4477.

- Yadav, S.S.; Jadhav, S.M. Deep convolutional neural network based medical image classification for disease diagnosis. J. Big Data 2019, 6, 113.

- Ding, J.; Chen, B.; Liu, H.; Huang, M. Convolutional Neural Network With Data Augmentation for SAR Target Recognition. IEEE Geosci. Remote Sens. Lett. 2016, 13, 364–368.

- Chlap, P.; Min, H.; Vandenberg, N.; Dowling, J.; Holloway, L.; Haworth, A. A review of medical image data augmentation techniques for deep learning applications. Med. Imaging Radiat. Oncol. 2021, 65, 545–563.

- Garcea, F.; Serra, A.; Lamberti, F.; Morra, L. Data augmentation for medical imaging: A systematic literature review. Comput. Biol. Med. 2023, 152, 106391.

- Goceri, E. Medical image data augmentation: Techniques, comparisons and interpretations. Artif. Intell. Rev. 2023.

- Kebaili, A.; Lapuyade-Lahorgue, J.; Ruan, S. Deep Learning Approaches for Data Augmentation in Medical Imaging: A Review. J. Imaging 2023, 9, 81.

- Knapič, S.; Malhi, A.; Saluja, R.; Främling, K. Explainable Artificial Intelligence for Human Decision Support System in the Medical Domain. Mach. Learn. Knowl. Extr. 2021, 3, 37.

- Van der Velden, B.H.; Kuijf, H.J.; Gilhuijs, K.G.; Viergever, M.A. Explainable artificial intelligence (XAI) in deep learning-based medical image analysis. Med. Image Anal. 2022, 79, 102470.

- Trujillo, L.; Olague, G. Automated Design of Image Operators that Detect Interest Points. Evol. Comput. 2008, 16, 483–507.

- Li, Y.; Wang, S.; Tian, Q.; Ding, X. A survey of recent advances in visual feature detection. Neurocomputing 2015, 149, 736–751.

- Tessmann, M.; Vega-Higuera, F.; Fritz, D.; Scheuering, M.; Greiner, G. Multi-scale feature extraction for learning-based classification of coronary artery stenosis. In Proceedings of the Medical Imaging 2009: Computer-Aided Diagnosis; Karssemeijer, N., Giger, M.L., Eds.; International Society for Optics and Photonics, SPIE: Orlando, FL, USA, 2009; Volume 7260, p. 726002.

- Olague, G.; Trujillo, L. Interest point detection through multiobjective genetic programming. Appl. Soft Comput. 2012, 12, 2566–2582.

- Fazlali, H.R.; Karimi, N.; Soroushmehr, S.M.R.; Sinha, S.; Samavi, S.; Nallamothu, B.; Najarian, K. Vessel region detection in coronary X-ray angiograms. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec, QC, Canada, 27–30 September 2015; pp. 1493–1497.

- Acharya, U.R.; Sree, S.V.; Krishnan, M.M.R.; Molinari, F.; Saba, L.; Ho, S.Y.S.; Ahuja, A.T.; Ho, S.C.; Nicolaides, A.; Suri, J.S. Atherosclerotic Risk Stratification Strategy for Carotid Arteries Using Texture-Based Features. Ultrasound Med. Biol. 2022, 38, 899–915.

- Pathak, B.; Barooah, D. Texture Analysis based on the Gray-Level Co-Ocurrence Matrix considering possible orientations. Int. J. Adv. Res. Electr. Electron. Instrum. Eng. 2013, 2, 4206–4212.

- Faust, O.; Acharya, R.; Sudarshan, V.K.; Tan, R.S.; Yeong, C.H.; Molinari, F.; Ng, K.H. Computer aided diagnosis of Coronary Artery Disease, Myocardial Infarction and carotid atherosclerosis using ultrasound images: A review. Phys. Medica 2017, 33, 1–15.

- Mitchell, C.C.; Korcarz, C.E.; Gepner, A.D.; Nie, R.; Young, R.L.; Matsuzaki, M.; Post, W.S.; Kaufman, J.D.; McClelland, R.L.; Stein, J.H. Retinal vascular tree morphology: A semi-automatic quantification. J. Am. Heart Assoc. 2019, 8, 912–917.

- Ricciardi, C.; Valente, A.S.; Edmunds, K.; Cantoni, V.; Green, R.; Fiorillo, A.; Picone, I.; Santini, S.; Cesarelli, M. Linear discriminant analysis and principal component analysis to predict coronary artery disease. Health Inform. J. 2020, 26, 2181–2192.

- Hernández, B.; Olague, G.; Hammoud, R.; Trujillo, L.; Romero, E. Visual learning of texture descriptors for facial expression recognition in thermal imagery. Comput. Vis. Image Underst. 2007, 106, 258–269.

- Barburiceanu, S.; Terebes, R.; Meza, S. 3D Texture Feature Extraction and Classification Using GLCM and LBP-Based Descriptors. Appl. Sci. 2021, 11, 2332.

- Cheng, K.; Lin, A.; Yuvaraj, J.; Nicholls, S.J.; Wong, D.T. Cardiac Computed Tomography Radiomics for the Non-Invasive Assessment of Coronary Inflammation. Cells 2021, 10, 879.

- Ayx, I.; Tharmaseelan, H.; Hertel, A.; Nörenberg, D.; Overhoff, D.; Rotkopf, L.T.; Riffel, P.; Schoenberg, S.O.; Froelich, M.F. Myocardial Radiomics Texture Features Associated with Increased Coronary Calcium Score-First Results of a Photon-Counting CT. Diagnostics 2022, 12, 1663.

- Murphy, L.M. Linear feature detection and enhancement in noisy images via the Radon transform. Pattern Recognit. Lett. 1986, 4, 279–284.

- Mallat, S. A Wavelet Tour of Signal Processing, 3rd ed.; Elsevier: Amsterdam, The Netherlands, 2009; Chapter 13; pp. 699–752.

- Timothy-G, F. The Radon Transform. In The Mathematics of Medical Imaging; Technical University of Denmark: Lyngby, Denmark, 2015; pp. 13–37.

- Frangi, A.; Nielsen, W.; Vincken, K.; Viergever, M. Multiscale vessel enhancement filtering. In Medical Image Computing and Computer-Assisted Intervention (MICCAI’98); Springer: Berlin/Heidelberg, Germany, 1998; pp. 130–137.

- Otsu, N. A Threshold Selection Method from Gray-Level Histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Kirkpatrick, S.; Gelatt, C.D.; Vecchi, M.P. Optimization by simulated annealing. Science 1983, 220, 671–680.

- Valdez-Peña, S.I.; Hernández, A.; Botello, S. A Boltzmann based estimation of distribution algorithm. Inf. Sci. 2013, 236, 126–137.

- Gu, W.; Wu, Y.; Zhang, G. A hybrid Univariate Marginal Distribution Algorithm for dynamic economic dispatch of units considering valve-point effects and ramp rates. Int. Trans. Electr. Energy Syst. 2015, 25, 374–392.

- Dang, D.C.; Lehre, P.K.; Nguyen, P.T.H. Level-Based Analysis of the Univariate Marginal Distribution Algorithm. Algorithmica 2017, 81, 668–702.

- Hashemi, M.; Reza-Meybodi, M. Univariate Marginal Distribution Algorithm in Combination with Extremal Optimization (EO, GEO). In International Conference on Neural Information Processing; Springer: Berlin/Heidelberg, Germany, 2011; pp. 220–227.

- Cortes, C.; Vapnik, V. Support-Vector Networks. Mach. Learn. 1995, 20, 273–297.

- Cristianini, N.; Shawe-Taylor, J. An Introduction to Support Vector Machines and Other Kernel-Based Learning Methods; Cambridge University Press: Cambridge, UK, 2000.

- Noble, W.S. What is a support vector machine? Nat. Biotechnol. 2006, 24, 1565–1567.

- Tong, S.; Chang, E. Support Vector Machine Active Learning for Image Retrieval. In Proceedings of the Ninth ACM International Conference on Multimedia; Association for Computing Machinery: New York, NY, USA, 2001; pp. 107–118.

- Burges, C.J. A Tutorial on Support Vector Machines for Pattern Recognition. Data Min. Knowl. Discov. 1998, 2, 121–167.

- Haralick, R.; Shanmugam, K.; Dinstein, I. Textural Features for Image Classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.

- Johnson, D.S.; Papadimitriou, C.H.; Yannakakis, M. How easy is Local Search? J. Comput. Syst. Sci. 1988, 37, 79–100.

- Gil-Rios, M.A.; Cruz-Aceves, I.; Cervantes-Sánchez, F.; Guryev, I.; López-Hernández, J.M. Automatic enhancement of coronary arteries using convolutional gray-level templates and path-based metaheuristics. Recent Trends Comput. Intell. Enabled Res. 2021, 1, 129–152.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. arXiv 2014, arXiv:1409.4842.

- Harouni, A.; Karargyris, A.; Negahdar, M.; Beymer, D.; Syeda-Mahmood, T. Universal multi-modal deep network for classification and segmentation of medical images. In Proceedings of the 2018 IEEE 15th International Symposium on Biomedical Imaging (ISBI 2018), Washington, DC, USA, 4–7 April 2018; pp. 872–876.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).