Abstract

In this paper, we solve a stochastic linear quadratic tracking problem. The controlled dynamical system is modeled by a system of linear Itô differential equations subject to jump Markov perturbations. We consider the case when there are two decision-makers and each of them wants to minimize the deviation of a preferential output of the controlled dynamical system from a given reference signal. We assume that the two decision-makers do not cooperate. Under these conditions, we state the considered tracking problem as a problem of finding a Nash equilibrium strategy for a stochastic differential game. Explicit formulae for a Nash equilibrium strategy are provided. To this end, we use the solutions of two terminal value problems (TVPs). The first TVP is associated with a hybrid system formed by two backward nonlinear differential equations coupled with two nonlinear algebraic equations. The second TVP is associated with a hybrid system formed by two backward linear differential equations coupled with two linear algebraic equations.

MSC:

91A15; 49N10

1. Introduction

Tracking problems arise in many applications and have received considerable attention from the research community over the past few decades [1,2,3,4,5]. In the stochastic context, this problem was studied in [6,7] as well as in [8,9]. For stochastic systems with time delay, the linear quadratic tracking problem has been studied in [10,11]. Applications of tracking problems may be found in economic policy control [12], process control [13], networked control systems [14], control of mobile robots [15], spacecraft hovering [16], etc. Usually, a linear quadratic tracking problem requires the minimization of the $L^2$-norm of the deviation of a signal generated by a controlled linear system from a reference signal. When there is more than one decision-maker and each of them wants to minimize the deviation of a preferential signal from a given reference signal, the optimal tracking problem may be stated as a problem of finding a Nash equilibrium strategy for a linear quadratic differential game. If the controlled system whose outputs have to track the given reference signals is described by linear stochastic differential equations, one obtains the problem of finding a Nash equilibrium strategy for a stochastic differential game. Recently, stochastic differential games have attracted increasing research interest; see, e.g., [17,18,19,20]. For Nash tracking game problems for continuous-time systems over finite intervals, see [21] and the references therein.

In the present work, we consider the case when the controlled system is described by a system of Itô differential equations whose coefficients are affected by a standard homogeneous Markov process with a finite number of states. We assume that there are at least two decision-makers. The aim of the k-th decision-maker is to minimize the deviation of an output of the controlled system from a given reference signal. The class of admissible strategies consists of stochastic processes in an affine state feedback form.

In the derivation of the main results we consider two cases:

(a) the case with only one decision-maker;

(b) the case of two decision-makers.

The result derived from case (a) is used in the case with more than one decision-maker in order to obtain an optimal strategy. In case (b), we study the game theoretic model for two players, where each player wants to find the optimal admissible strategy minimizing the deviation of the controlled signal from the given reference.

We assume that the players do not cooperate. This lack of cooperation may be due to individual motivations or to physical reasons. We provide explicit formulae for a Nash equilibrium strategy. To this end, we use the solutions of two TVPs. The first TVP is associated with a hybrid system formed by two backward nonlinear differential equations coupled with two nonlinear algebraic equations. The second TVP is associated with a hybrid system formed by two backward linear differential equations coupled with two linear algebraic equations.

The paper is organized as follows. Section 2 describes the mathematical model and formulates the tracking problem as a problem of finding a Nash equilibrium strategy for a stochastic differential game. The main results are derived in Section 3. First, in Section 3.1, we consider the case with one decision-maker. Then, in Section 3.2, we obtain explicit formulae for the optimal strategies in the case of two decision-makers. In Section 4, we briefly discuss two special cases:

(i) the case when the diffusion part of the controlled system does not contain control-dependent terms;

(ii) the case when the aim of the decision-makers is to minimize the deviation of the controlled signals from a given final target, without restrictions on the behavior of the transient states.

In Section 5, we provide a numerical example showing that the proposed procedure is feasible. Finally, in Section 6, we provide some conclusions and future research directions.

2. The Problem

Consider the controlled system having the state space representation described by

where is the state vector at time t and are the vectors of control parameters. In (1), is a one-dimensional standard Wiener process defined on a given probability space and is a standard right-continuous Markov process taking values in a finite set and having the transition semigroup The elements of the generator matrix satisfy

for all . For more details regarding the properties of a Wiener process, one can see [22] or [23], whereas for more properties of a Markov process, we refer, for example, to [24,25].
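To make the role of the generator matrix concrete, the following Python sketch does two things. First, it checks the two standard generator conditions: nonnegative off-diagonal entries and zero row sums. Second, it samples a right-continuous path of a finite-state Markov chain using exponential holding times. The states are labeled 0, ..., N-1 (Python indexing), and the generator is assumed to be stored as a list of rows `Q`. The helper names `check_generator` and `simulate_ctmc` are hypothetical and are not taken from the paper.

```python
import random


def check_generator(Q, tol=1e-12):
    """Return True if Q satisfies the generator conditions:
    nonnegative off-diagonal entries and zero row sums."""
    n = len(Q)
    for i in range(n):
        if abs(sum(Q[i])) > tol:
            return False
        if any(Q[i][j] < 0.0 for j in range(n) if j != i):
            return False
    return True


def simulate_ctmc(Q, i0, T, rng):
    """Sample a right-continuous path of a Markov chain with generator Q on [0, T].

    Returns (times, states): the chain is in states[k] on [times[k], times[k+1]),
    and in states[-1] from times[-1] up to T."""
    times, states = [0.0], [i0]
    t, i = 0.0, i0
    while True:
        rate = -Q[i][i]                  # total exit rate of the current state
        if rate <= 0.0:                  # absorbing state: no further jumps
            break
        t += rng.expovariate(rate)       # exponential holding time
        if t >= T:
            break
        # pick the next state j != i with probability Q[i][j] / rate
        u, acc, nxt = rng.random() * rate, 0.0, None
        for j in range(len(Q)):
            if j != i and Q[i][j] > 0.0:
                nxt = j                  # also serves as a fallback against rounding
                acc += Q[i][j]
                if u < acc:
                    break
        i = nxt
        times.append(t)
        states.append(i)
    return times, states


# Usage: a two-mode chain that leaves mode 0 at rate 1 and mode 1 at rate 2
Q = [[-1.0, 1.0], [2.0, -2.0]]
times, states = simulate_ctmc(Q, 0, 5.0, random.Random(0))
```

The jump-chain construction (exponential holding time, then a jump proportional to the off-diagonal rates) is exact for a homogeneous chain. It is therefore preferable to a fixed-step approximation when only the mode path is needed.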

Throughout the paper, we assume that and are independent stochastic processes. The dependence of the coefficients of the system (1) upon the Markov process highlights the fact that this system may be considered the mathematical model of some phenomena in which abrupt structural changes are likely to occur. Such variations may be due, for instance, to changes between different operation points, sensor or actuator failures, temporary loss of communication, and so on. For the readers’ convenience, we refer to [9,26,27,28] for extensive discussions on the subject. As usual, we shall write instead of and so on, whenever Assume that are continuous matrix-valued functions. The set of the admissible controls available to the decision-makers consists of the stochastic processes in an affine state feedback form, i.e.,

where and are arbitrary continuous functions. Applying Theorem 1.1 in Chapter 5 from [22] (see also Section 1.12 from [9]) we obtain:

Corollary 1.

For each and for each the initial value problem (IVP) (1a) has a unique solution which is a stochastic process with the properties

- (a)

- is a.s. continuous in every

- (b)

- for each is measurable, where is the σ-algebra generated by the random variables

- (c)

- for all

- (d)
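The solution described in Corollary 1 can be approximated on a time grid. Below is a minimal Euler-Maruyama sketch of the closed-loop system under two affine feedback strategies of type (3). It assumes a scalar state and a scalar control for each player, entering both the drift and the diffusion: $dx=(a_i x+b_{1,i}u_1+b_{2,i}u_2)\,dt+(c_i x+d_{1,i}u_1+d_{2,i}u_2)\,dw$, where $i$ is the current mode. The mode is updated with the first-order rule "jump from $i$ to $j$ with probability $q_{ij}h$" on each step. This rule is only reasonable when $h$ times every exit rate is small. All names and the scalar structure are illustrative assumptions.

```python
import math
import random


def simulate_closed_loop(a, b1, b2, c, d1, d2, F1, g1, F2, g2,
                         Q, x0, i0, T, n_steps, rng):
    """Euler-Maruyama sketch of the (assumed) scalar Markov-jump Ito system
        dx = (a_i x + b1_i u1 + b2_i u2) dt + (c_i x + d1_i u1 + d2_i u2) dw
    under the affine feedback u_k = F_k(t, i) x + g_k(t, i).

    a, b1, b2, c, d1, d2 are lists indexed by the mode i; F1, g1, F2, g2 are
    callables (t, i) -> float. Returns the state and the mode on the time grid."""
    h = T / n_steps
    x, i = x0, i0
    xs, modes = [x0], [i0]
    for n in range(n_steps):
        t = n * h
        u1 = F1(t, i) * x + g1(t, i)
        u2 = F2(t, i) * x + g2(t, i)
        dw = rng.gauss(0.0, math.sqrt(h))           # Wiener increment ~ N(0, h)
        drift = a[i] * x + b1[i] * u1 + b2[i] * u2
        diffusion = c[i] * x + d1[i] * u1 + d2[i] * u2
        x = x + drift * h + diffusion * dw
        # first-order mode update: move from i to j != i with probability Q[i][j] * h
        r, acc = rng.random(), 0.0
        for j in range(len(Q)):
            if j != i:
                acc += Q[i][j] * h
                if r < acc:
                    i = j
                    break
        xs.append(x)
        modes.append(i)
    return xs, modes


def zero(t, i):
    return 0.0


# one mode, no noise, no control, a = -1: the scheme gives x_N = (1 - h)^N x0
xs, modes = simulate_closed_loop([-1.0], [1.0], [1.0], [0.0], [0.0], [0.0],
                                 zero, zero, zero, zero, [[0.0]], 1.0, 0, 1.0, 100,
                                 random.Random(1))
```

The Euler-Maruyama scheme has strong order 1/2 for this kind of system. Refining the grid (or using the exact jump-chain sampler for the mode) improves the accuracy.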

Throughout this work, stands for the mathematical expectation. Roughly speaking, the aim of the decision-maker is to find a control law (or an admissible strategy) of type (3) that minimizes the deviation of the signal from a given reference signal, while the other decision-maker wants to minimize the deviation of the signal from another reference signal

Since this problem is an optimization problem with two objective functions, its solution can be viewed as an equilibrium strategy for a non-cooperative differential game with two players. For a rigorous mathematical setting of this optimization problem, let us introduce the following cost functions, which model the performance criterion of each player:

Here is the reference which must be tracked by the signal and is the target of the final value The weight matrices involved in (4) satisfy the following assumption:

Hypothesis 1.

- (a)

- are continuous matrix-valued functions;

- (b)

- for all

Here and in the sequel, denotes the subspace of symmetric matrices of size
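Each criterion in (4) is the expectation of a running tracking cost plus a terminal cost. A simple way to evaluate it for a given pair of strategies is therefore to average a discretized cost over simulated sample paths. The sketch below assumes a scalar output $y$, scalar weights $m$, $\rho$, $g$, a scalar target $\xi$ and a penalty on the player's own control only. These names are hypothetical, since the symbols of (4) are not reproduced here. The left-endpoint Riemann sum is first-order accurate in the step size.

```python
def tracking_cost(ts, ys, us, r, m, rho, g, xi):
    """Left-endpoint Riemann-sum approximation of one sample of the (assumed) cost
        int_0^T [m (y(t) - r(t))^2 + rho u(t)^2] dt + g (y(T) - xi)^2.

    ts: grid t_0 < ... < t_N; ys: output values on the grid (N + 1 values);
    us: control values at t_0, ..., t_{N-1} (N values); r: reference, a callable."""
    if len(ys) != len(ts) or len(us) != len(ts) - 1:
        raise ValueError("expected len(ys) == len(ts) == len(us) + 1")
    running = 0.0
    for n in range(len(us)):
        dt = ts[n + 1] - ts[n]
        running += (m * (ys[n] - r(ts[n])) ** 2 + rho * us[n] ** 2) * dt
    return running + g * (ys[-1] - xi) ** 2


def monte_carlo_cost(samples):
    """Sample mean of per-path costs, i.e. an estimate of the expectation."""
    return sum(samples) / len(samples)
```

For a Monte Carlo estimate, apply `tracking_cost` to each simulated path and average the results with `monte_carlo_cost`. The statistical error decreases like the inverse square root of the number of paths.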

Definition 1.

We say that the pair of admissible strategies achieves a Nash equilibrium for the differential game described by the controlled system (1), the performance criterion (4), and the admissible strategies of type (3) if

and

In the next section, we shall derive explicit formulae of a Nash equilibrium strategy for the linear quadratic differential game described by (1), (3), and (4).

Remark 1.

- (a)

- We shall see that for the computation of the gain matrices of a Nash equilibrium strategy we need to know a priori the whole reference signal

- (b)

- When for all then (4) reduces to (7). The performance criterion (4) could be replaced by one of the form (7) when the decision-maker is interested only in minimizing the deviation of the final value from the target The control term, which appears in both (4) and (7), must be viewed as a penalization of the control effort.

3. The Main Results

3.1. The Case with Only One Decision Maker

To derive, in an elegant way, the state space representation of a pair of the form (3) satisfying (5) and (6), respectively, we first study the problem of tracking a reference signal in the case where there is only one decision-maker.

We consider the optimal control problem described by the controlled system:

and the performance criterion

Here, the stochastic processes and have the same properties as in the case of system (1). In (8) and (9), is the state vector at time t and is the vector of control parameters.

In this subsection, the set of admissible controls consists of stochastic processes of the form

where are arbitrary continuous functions. The optimal control problem which we want to solve in this subsection consists of finding a control from that minimizes the cost function (9) along the trajectories of the system (8) determined by all admissible controls of the form (10).

Regarding the coefficients of (8) and (9), we suppose:

Hypothesis 2.

- (a)

- are continuous matrix-valued functions;

- (b)

- for all

Let us consider the function defined by

where are continuous and differentiable functions. Applying the Itô formula (see, for example, Theorem 1.10.2 in [9]) to the function (11) and to the stochastic process satisfying (8a), we obtain

for all where

Taking the expectation in (12) and adding the result to (9), we obtain

where

Let

be the solutions of the following terminal value problem (TVP)

being the components of the solution of the TVP (16),

being the components of the solution of the TVPs (16) and (17), respectively. The main properties of the solutions of the TVPs (16)–(18) are summarized in the following lemma.

Lemma 1.

Under the assumption (H2) the following hold:

- (i)

- the unique solution of the TVP (16) is defined on the whole interval Moreover, for all

- (ii)

- the TVPs (17) and (18) have unique solutions and

Proof.

- (i)

- This follows immediately by applying Corollary 5.2.3 from [9] to the TVP (16).

- (ii)

- The TVP (17) is associated with a linear nonhomogeneous differential equation with time-varying coefficients. Hence, its solution is defined on the whole interval on which its coefficients are defined. According to part (i), the coefficients of the differential Equation (17a) are defined on the whole interval Hence, its solution is also defined on the whole interval The conclusion regarding the definition of the solution of TVP (18) on the interval is obtained in the same way.

□
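The TVPs (16)–(18) are solved backward in time from their terminal values. As a rough illustration of how this can be done numerically, the sketch below applies an explicit backward Euler scheme to a scalar analogue. This analogue was derived by completing the square under assumptions of our own choosing; it is not a transcription of (16)–(18). The assumptions are:

- one scalar control;
- dynamics $dx=(a_i x+b_i u)\,dt+(c_i x+d_i u)\,dw$ in mode $i$;
- cost $\mathbb{E}\big[\int_0^T (m_i(c_{z,i}x-r(t))^2+\rho_i u^2)\,dt+g_i(x(T)-\xi)^2\big]$;
- a value function of the form $p_i x^2-2s_i x+e_i$.

The resulting Riccati-type equation for $p_i$, linear equation for $s_i$ and equation for $e_i$ are written in the docstring. The modes are coupled only through the generator terms $\sum_j q_{ij}(\cdot)_j$. The function name and parameters are hypothetical.

```python
def solve_tracking_tvps(a, b, c, d, m, cz, rho, g, xi, Q, r, T, n_steps):
    """Backward explicit Euler for the assumed scalar Markov-jump TVPs
      p_i' + (2 a_i + c_i^2) p_i + sum_j q_ij p_j + m_i cz_i^2
           - (b_i + c_i d_i)^2 p_i^2 / (rho_i + d_i^2 p_i) = 0,        p_i(T) = g_i
      s_i' + (a_i - b_i K_i) s_i + sum_j q_ij s_j + m_i cz_i r(t) = 0,  s_i(T) = g_i xi
      e_i' + sum_j q_ij e_j + m_i r(t)^2
           - b_i^2 s_i^2 / (rho_i + d_i^2 p_i) = 0,                     e_i(T) = g_i xi^2
    with K_i = (b_i + c_i d_i) p_i / (rho_i + d_i^2 p_i).
    Coefficient lists are indexed by the mode, Q is the generator, r is callable.
    Returns p, s, e: for each grid point t_k = k T / n_steps, a list over the modes."""
    N = len(a)
    dt = T / n_steps
    p = [[0.0] * N for _ in range(n_steps + 1)]
    s = [[0.0] * N for _ in range(n_steps + 1)]
    e = [[0.0] * N for _ in range(n_steps + 1)]
    p[n_steps] = [g[i] for i in range(N)]
    s[n_steps] = [g[i] * xi for i in range(N)]
    e[n_steps] = [g[i] * xi ** 2 for i in range(N)]
    for k in range(n_steps, 0, -1):
        t = k * dt
        for i in range(N):
            alpha = rho[i] + d[i] ** 2 * p[k][i]
            K = (b[i] + c[i] * d[i]) * p[k][i] / alpha
            jp = sum(Q[i][j] * p[k][j] for j in range(N))   # coupling through the generator
            js = sum(Q[i][j] * s[k][j] for j in range(N))
            je = sum(Q[i][j] * e[k][j] for j in range(N))
            # each equation reads y' = -rhs(y), so one backward step is y(t - dt) = y(t) + dt * rhs
            rhs_p = ((2 * a[i] + c[i] ** 2) * p[k][i] + jp + m[i] * cz[i] ** 2
                     - (b[i] + c[i] * d[i]) * K * p[k][i])
            rhs_s = (a[i] - b[i] * K) * s[k][i] + js + m[i] * cz[i] * r(t)
            rhs_e = je + m[i] * r(t) ** 2 - b[i] ** 2 * s[k][i] ** 2 / alpha
            p[k - 1][i] = p[k][i] + dt * rhs_p
            s[k - 1][i] = s[k][i] + dt * rhs_s
            e[k - 1][i] = e[k][i] + dt * rhs_e
    return p, s, e


# Usage: one mode, a = c = d = m = 0, b = rho = g = 1, so p' = p^2, p(1) = 1,
# whose exact solution is p(t) = 1 / (2 - t), i.e. p(0) = 0.5
p, s, e = solve_tracking_tvps([0.0], [1.0], [0.0], [0.0], [0.0], [1.0], [1.0], [1.0],
                              0.0, [[0.0]], lambda t: 0.0, 1.0, 10000)
```

Explicit Euler is only first-order accurate. For stiff data or long horizons, an implicit or higher-order integrator would be a more robust choice.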

Further, we consider the case when (11) is defined using the solutions of the TVPs (16)–(18). In this case, (13), (15), (16)–(18) allow us to reduce (14) to

for all of type (10), where are computed as in (15f), is computed as in (17b) based on the solution of TVP (16), whereas

for all

Now we are in position to state and prove the main result of this subsection.

Theorem 1.

Assume that (H2) is fulfilled. We consider the control law

where and are computed via (17b) and (20), respectively, based on the solution and of TVPs (16) and (17) and is the solution of the closed-loop system obtained when coupling the control (21) to (8a). Under these conditions, the control (21) satisfies the following minimality condition

The minimal value of the cost function (9) in the class of the controls of type (10) is given by

Proof.

From (15f) and Lemma 1, we deduce that under (H2) we have for all The conclusion then follows immediately from (19). □
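The minimality statement of Theorem 1 can be checked numerically in a single-mode version of the assumed scalar model used for the backward sketch of the TVPs. The sketch below proceeds in three steps:

1. It integrates the Riccati-type equation and the linear equations backward.
2. It forms the optimal affine control $u^*=-K(t)x+k(t)$ with $K=(b+cd)p/(\rho+d^2p)$ and $k=b\,s/(\rho+d^2p)$.
3. It evaluates the expected cost through the deterministic equations for $\mu=\mathbb{E}[x]$ and $S=\mathbb{E}[x^2]$. These are exact for a linear closed loop, so no Monte Carlo noise enters.

Under these assumptions, $p(0)x_0^2-2s(0)x_0+e(0)$ should match the cost of $u^*$ up to discretization error. Any other affine control should cost more. All coefficients are made up and the function names are hypothetical.

```python
def riccati_and_feedforward(a, b, c, d, m, cz, rho, g, xi, r, T, n):
    """Single-mode backward Euler for the assumed scalar p, s, e equations."""
    dt = T / n
    p, s, e = [0.0] * (n + 1), [0.0] * (n + 1), [0.0] * (n + 1)
    p[n], s[n], e[n] = g, g * xi, g * xi ** 2
    for k in range(n, 0, -1):
        t = k * dt
        alpha = rho + d * d * p[k]
        K = (b + c * d) * p[k] / alpha
        p[k - 1] = p[k] + dt * ((2 * a + c * c) * p[k] + m * cz * cz - (b + c * d) * K * p[k])
        s[k - 1] = s[k] + dt * ((a - b * K) * s[k] + m * cz * r(t))
        e[k - 1] = e[k] + dt * (m * r(t) ** 2 - b * b * s[k] ** 2 / alpha)
    return p, s, e


def expected_cost(F, f, a, b, c, d, m, cz, rho, g, xi, r, x0, T, n):
    """Expected cost of u = F[k] x + f[k] on [t_k, t_{k+1}), computed from the
    Euler-discretized equations for mu = E[x] and S = E[x^2]."""
    dt = T / n
    mu, S, J = x0, x0 * x0, 0.0
    for k in range(n):
        t = k * dt
        # E[m (cz x - r)^2 + rho u^2] expressed through mu and S
        J += dt * (m * (cz * cz * S - 2 * cz * r(t) * mu + r(t) ** 2)
                   + rho * (F[k] ** 2 * S + 2 * F[k] * f[k] * mu + f[k] ** 2))
        A, B = a + b * F[k], b * f[k]      # closed-loop drift  A x + B
        C, D = c + d * F[k], d * f[k]      # closed-loop diffusion  C x + D
        mu, S = (mu + dt * (A * mu + B),
                 S + dt * (2 * (A * S + B * mu) + C * C * S + 2 * C * D * mu + D * D))
    return J + g * (S - 2 * xi * mu + xi ** 2)


# Usage with illustrative coefficients
prm = dict(a=0.5, b=1.0, c=0.3, d=0.2, m=1.0, cz=1.0, rho=1.0, g=1.0, xi=1.0,
           r=lambda t: 0.5)
T, n, x0 = 1.0, 20000, 1.0
p, s, e = riccati_and_feedforward(T=T, n=n, **prm)
alpha = [prm["rho"] + prm["d"] ** 2 * p[k] for k in range(n)]
F_opt = [-(prm["b"] + prm["c"] * prm["d"]) * p[k] / alpha[k] for k in range(n)]
f_opt = [prm["b"] * s[k] / alpha[k] for k in range(n)]
V0 = p[0] * x0 ** 2 - 2 * s[0] * x0 + e[0]          # predicted minimal cost
J_opt = expected_cost(F_opt, f_opt, x0=x0, T=T, n=n, **prm)
J_other = expected_cost(F_opt, [fk + 0.5 for fk in f_opt], x0=x0, T=T, n=n, **prm)
```

By completing the square, the cost of $u^*+\delta$ exceeds the minimum by $\mathbb{E}\int_0^T(\rho+d^2p)\delta^2\,dt$ in this scalar model. This is why `J_other` should exceed `J_opt` by at least $\rho\,\delta^2 T = 0.25$.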

3.2. The Case of Two Decision-Makers

In this subsection, we shall use the result derived in Theorem 1 to obtain the state space representation of an equilibrium strategy of type (3) which satisfies (5). Let be fixed such that Let

be a candidate for a Nash equilibrium strategy. Taking we rewrite (1) and (4) as

From Definition 1, it follows that is a Nash equilibrium strategy for the dynamic game described by (1), (3), and (4) if minimizes the cost (23) along the trajectories of the system (22) determined by the controls of type

with arbitrary continuous functions.

In order to obtain the explicit formula for with these properties, we apply Theorem 1 to the optimal tracking problem described by the system (22) and the performance criterion (23). To this end, we rewrite TVPs (16)–(18) with the updates

Thus, TVP (16) becomes

The analogue of the feedback gains associated with the solution of TVP (16) via (17b) becomes, in the case of TVP (25),

In the case of the tracking problem described by (22) and (24), the TVPs (17) and (18) take the form

for all In this context (20) becomes

for all

Remark 2.

Although the TVP (25) is defined by a Riccati differential equation of type (16), we cannot guarantee that its solution is defined on the whole interval because the domain of definition of its coefficients depends on the domain of definition of the gain matrices

In the following, we shall regard (25) and (26) as a TVP associated with a hybrid system of nonlinear differential equations and nonlinear algebraic equations
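The algebraic part of this hybrid system couples the two feedback gains. When the diffusion part depends on both controls, the best-response gain of each player depends on the gain of the other. The following scalar sketch makes this coupling concrete. It assumes the scalar model $dx=(ax+b_1u_1+b_2u_2)\,dt+(cx+d_1u_1+d_2u_2)\,dw$ with $u_k=F_kx+g_k$. For fixed values $p_1$ and $p_2$ of the Riccati-type variables, the two gain equations are then linear in $(F_1,F_2)$ and can be solved by Cramer's rule. The equations and names are illustrative assumptions, not a transcription of (30).

```python
def coupled_gains(p1, p2, b1, b2, c, d1, d2, rho1, rho2):
    """Solve, for fixed p1 and p2, the assumed scalar gain equations
        (rho1 + d1^2 p1) F1 + d1 d2 p1 F2 = -(b1 + c d1) p1
        d1 d2 p2 F1 + (rho2 + d2^2 p2) F2 = -(b2 + c d2) p2
    by Cramer's rule."""
    a11, a12 = rho1 + d1 * d1 * p1, d1 * d2 * p1
    a21, a22 = d1 * d2 * p2, rho2 + d2 * d2 * p2
    r1, r2 = -(b1 + c * d1) * p1, -(b2 + c * d2) * p2
    det = a11 * a22 - a12 * a21      # equals rho1 rho2 + rho1 d2^2 p2 + rho2 d1^2 p1
    if abs(det) < 1e-14:
        raise ValueError("the gain equations are singular for these p1, p2")
    F1 = (r1 * a22 - a12 * r2) / det
    F2 = (a11 * r2 - a21 * r1) / det
    return F1, F2


# Usage with illustrative values
F1, F2 = coupled_gains(1.2, 0.7, 1.0, 0.5, 0.3, 0.4, 0.6, 1.0, 2.0)
# F1 is the best response to F2: player 1 sees the diffusion coefficient c + d2 F2
best1 = -(1.0 + (0.3 + 0.6 * F2) * 0.4) * 1.2 / (1.0 + 0.4 ** 2 * 1.2)
```

In this scalar sketch, the determinant equals $\rho_1\rho_2+\rho_1d_2^2p_2+\rho_2d_1^2p_1$. It is therefore positive whenever $p_1,p_2\ge 0$. This simple positivity argument is specific to the scalar illustration and does not settle the general matrix case discussed in Remark 2.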

At the same time, (27) and (29) can be viewed as a TVP associated with a hybrid system formed by two backward linear differential equations and two algebraic linear equations, as

where we denoted

In (31) and (32), is a solution of the TVP (30). Applying Theorem 1 in the case of the optimal tracking problems described by system (22) and the performance criterion (23) for and we obtain

Theorem 2.

Assume:

- (a)

- the assumption (H1) is fulfilled;

- (b)

- the solutions and of the TVPs (30) and (31), respectively, are defined on the whole interval

We set

being the solution of the IVP obtained by substituting (33) into (1). Under these conditions, is an equilibrium strategy for the differential game described by the controlled system (1), the performance criteria (4), and the family of the admissible strategies of type (3). The optimal values of the performance criteria are given by

4. Several Special Cases

4.1. The Case without Control-Dependent Noise of the Diffusion Part of the Controlled System

We assume that the controlled system (1) is in the special form

In this case the TVPs (30) and (31), respectively, reduce to

In (35) and (36) we have denoted

The TVP (28) becomes

In (37), is the solution of the TVP (36). Applying the result derived in Theorem 2, we obtain

Corollary 2.

Assume:

- (a)

- the assumption (H1) is fulfilled;

- (b)

- the solution of the TVP (35a)–(35c) is defined on the whole interval

We set

being the solution of the IVP obtained by substituting (38) into (34). Under these conditions, is an equilibrium strategy for the differential game described by the controlled system (34), the performance criterion (4), and the admissible strategies of type (3). The optimal values of the performance criterion (4) are given by

being the solutions of the TVPs (36) and (37), respectively.
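To illustrate the structure of Corollary 2, the sketch below works in the scalar, single-mode case, with no control-dependent noise. It integrates backward the coupled Riccati-type equations and the coupled linear equations obtained by applying the one-player result to each player in turn, while the other player's affine feedback is held fixed. Because the diffusion term does not contain the controls, the gains are explicit: $F_k=-b_kp_k/\rho_k$ and $v_k=b_ks_k/\rho_k$. The model, the scalar equations in the docstring and all names are assumptions made for illustration. They are not a transcription of (35)–(37).

```python
def nash_tvps(a, c, b, m, cz, rho, g, xi, r, T, n):
    """Backward Euler sketch for an assumed scalar, single-mode two-player model
        dx = (a x + b[0] u_0 + b[1] u_1) dt + c x dw   (no control-dependent noise),
    where player k in {0, 1} tracks r[k](t) with the output cz[k] x (weight m[k],
    control weight rho[k]) and the target xi[k] for x(T) (weight g[k]).
    With l = 1 - k, F_k = -b_k p_k / rho_k and v_k = b_k s_k / rho_k:
      p_k' + (2 (a + b_l F_l) + c^2) p_k + m_k cz_k^2 - b_k^2 p_k^2 / rho_k = 0,  p_k(T) = g_k
      s_k' + (a + b_0 F_0 + b_1 F_1) s_k + m_k cz_k r_k(t) - p_k b_l v_l = 0,    s_k(T) = g_k xi_k
    Returns p and s on the grid t_i = i T / n as lists [player 0, player 1]."""
    dt = T / n
    p = [[0.0, 0.0] for _ in range(n + 1)]
    s = [[0.0, 0.0] for _ in range(n + 1)]
    p[n] = [g[0], g[1]]
    s[n] = [g[0] * xi[0], g[1] * xi[1]]
    for k in range(n, 0, -1):
        t = k * dt
        F = [-b[j] * p[k][j] / rho[j] for j in (0, 1)]     # feedback gains at t_k
        v = [b[j] * s[k][j] / rho[j] for j in (0, 1)]      # feedforward terms at t_k
        a_cl = a + b[0] * F[0] + b[1] * F[1]
        for j in (0, 1):
            o = 1 - j                                       # index of the other player
            rhs_p = ((2 * (a + b[o] * F[o]) + c * c) * p[k][j] + m[j] * cz[j] ** 2
                     - b[j] ** 2 * p[k][j] ** 2 / rho[j])
            rhs_s = a_cl * s[k][j] + m[j] * cz[j] * r[j](t) - p[k][j] * b[o] * v[o]
            p[k - 1][j] = p[k][j] + dt * rhs_p
            s[k - 1][j] = s[k][j] + dt * rhs_s
    return p, s


def zero(t):
    return 0.0


# Usage: player 1 has no control (b[1] = 0), so player 0 solves p' = p^2, p(1) = 1
p, s = nash_tvps(0.0, 0.0, [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [1.0, 1.0], [1.0, 1.0],
                 [0.0, 0.0], [zero, zero], 1.0, 10000)
```

As a sanity check, when one player has no control authority the scheme reduces to the one-player Riccati equation. Symmetric data also produce identical solutions for both players.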

4.2. The Case when the Performance Criterion (4) Is Replaced by Performance Criterion of Type (7)

In this case, the aim of the decision-makers is to minimize the mean square of the deviation of the final value of the output from the target Then, the equilibrium strategy is obtained by solving the TVPs (30) and (31), respectively, when the controlled system is of type (1), or the TVPs (35) and (36), respectively, when the controlled system is of type (34). In both cases

5. A Numerical Experiment

For the numerical experiment, we considered the time-invariant case of system (34) with performance criterion (4), without Markovian jumps. We rewrite Equations (35a) and (35b) in the form:

In this case, we have .

The matrix coefficients of the controlled system (1) are:

The weight matrices for the performance criteria are of the form:

Moreover, and the targets are , and . The initial point is chosen to be .

To compute we can use the Euler discretization method as follows:

with

We consider the following algorithm to compute the behavior of the controlled signals .

- Step 1.

- The aim of this step is to compute the gain matrices and

- Step 2.

- The aim is to compute for . We have:

Consider two cases:

- (A).

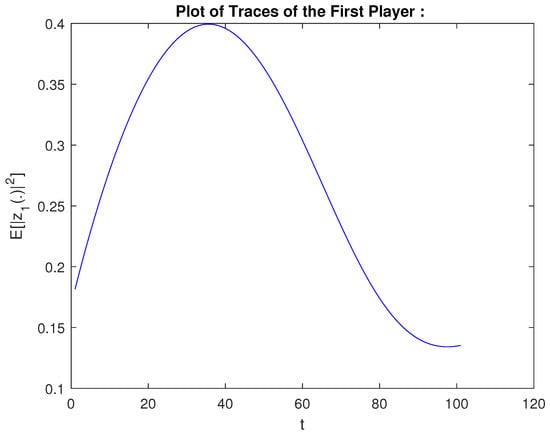

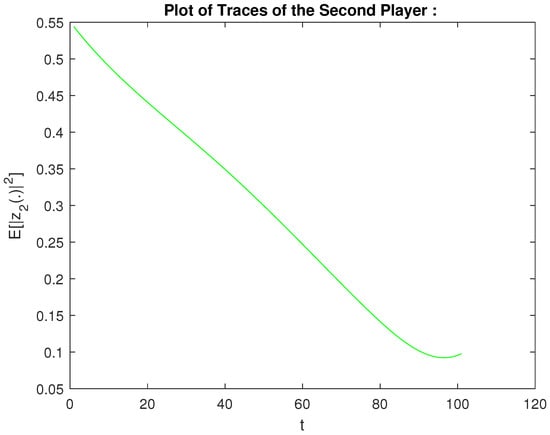

- The base variant using the above matrix coefficients. We have executed Step 1 and Step 2. The computed values for the signals of the players are given on Figure 1 and Figure 2 for the first player and the second player, respectively. Moreover, we have obtained the following values of for the players , i.e.,

Figure 1. Plot of traces of the first player: .

Figure 1. Plot of traces of the first player: . Figure 2. Plot of traces of the second player: .

Figure 2. Plot of traces of the second player: . - (B).

- We want to compute the output of the closed-loop system by using a control law (other than the optimal one) with . For this, we take and different from the optimal cases. We use the same matrix coefficients. After Step 1, we obtain the optimal values of and . Then, we compute the different values as follows ():The computations continue with Step 2 with . The computed values of areOne sees from (39) and (40) that the values of the obtained deviation from the target provided by the optimal control are better than the ones provided by another control.
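Case (B) compares the optimal strategy with another affine control law. A similar comparison can be sketched under an assumed scalar model with one mode and no control-dependent noise, in the spirit of system (34) without jumps. The sketch computes the gains by backward Euler and evaluates both players' expected costs from the equations for $\mu=\mathbb{E}[x]$ and $S=\mathbb{E}[x^2]$. It then lets one player deviate unilaterally. If the gains form a Nash equilibrium, the deviating player's own cost should increase. For a constant shift $\delta$ of its feedforward term, completing the square suggests an increase of roughly $\rho\,\delta^2T$. The data are made up and unrelated to the example above, and all names are hypothetical.

```python
def nash_gains(a, c, b, m, cz, rho, g, xi, r, T, n):
    """Backward Euler for the assumed scalar two-player equations (single mode, no
    control-dependent noise); returns Fs[k] = [F_0, F_1] and Gs[k] = [v_0, v_1]."""
    dt = T / n
    p = [[0.0, 0.0] for _ in range(n + 1)]
    s = [[0.0, 0.0] for _ in range(n + 1)]
    p[n], s[n] = [g[0], g[1]], [g[0] * xi[0], g[1] * xi[1]]
    for k in range(n, 0, -1):
        t = k * dt
        F = [-b[j] * p[k][j] / rho[j] for j in (0, 1)]
        G = [b[j] * s[k][j] / rho[j] for j in (0, 1)]
        a_cl = a + b[0] * F[0] + b[1] * F[1]
        for j in (0, 1):
            o = 1 - j                                   # the other player
            p[k - 1][j] = p[k][j] + dt * ((2 * (a + b[o] * F[o]) + c * c) * p[k][j]
                                          + m[j] * cz[j] ** 2 - b[j] ** 2 * p[k][j] ** 2 / rho[j])
            s[k - 1][j] = s[k][j] + dt * (a_cl * s[k][j] + m[j] * cz[j] * r[j](t)
                                          - p[k][j] * b[o] * G[o])
    Fs = [[-b[j] * p[k][j] / rho[j] for j in (0, 1)] for k in range(n)]
    Gs = [[b[j] * s[k][j] / rho[j] for j in (0, 1)] for k in range(n)]
    return Fs, Gs


def player_costs(Fs, Gs, a, c, b, m, cz, rho, g, xi, r, x0, T, n):
    """Expected costs of both players under u_j = Fs[k][j] x + Gs[k][j], computed
    from the Euler-discretized equations for mu = E[x] and S = E[x^2]."""
    dt = T / n
    mu, S, J = x0, x0 * x0, [0.0, 0.0]
    for k in range(n):
        t = k * dt
        for j in (0, 1):
            fj, gj, rt = Fs[k][j], Gs[k][j], r[j](t)
            J[j] += dt * (m[j] * (cz[j] ** 2 * S - 2 * cz[j] * rt * mu + rt ** 2)
                          + rho[j] * (fj * fj * S + 2 * fj * gj * mu + gj * gj))
        A = a + b[0] * Fs[k][0] + b[1] * Fs[k][1]       # closed-loop drift A x + B
        B = b[0] * Gs[k][0] + b[1] * Gs[k][1]
        mu, S = mu + dt * (A * mu + B), S + dt * (2 * (A * S + B * mu) + c * c * S)
    return [J[j] + g[j] * (S - 2 * xi[j] * mu + xi[j] ** 2) for j in (0, 1)]


# Usage with illustrative data
prm = dict(a=0.3, c=0.2, b=[1.0, 0.8], m=[1.0, 2.0], cz=[1.0, 1.0], rho=[1.0, 1.5],
           g=[1.0, 1.0], xi=[1.0, -1.0], r=[lambda t: 1.0, lambda t: -0.5])
T, n, x0 = 1.0, 20000, 0.5
Fs, Gs = nash_gains(T=T, n=n, **prm)
J_nash = player_costs(Fs, Gs, x0=x0, T=T, n=n, **prm)
# unilateral deviation of the first player: shift its feedforward term by 0.5
J_dev = player_costs(Fs, [[gk[0] + 0.5, gk[1]] for gk in Gs], x0=x0, T=T, n=n, **prm)
```

Only unilateral deviations are meaningful here. The Nash property says nothing about joint deviations, and a joint deviation may well lower both costs.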

6. Conclusions

In this work, we studied the problem of minimizing the deviation of some outputs of a controlled dynamical system from given reference signals. We considered the case where the dynamical system is controlled by two decision-makers who do not cooperate. One of the two decision-makers wants to minimize the deviation of a preferential output of the dynamical system from a given reference signal, whereas the other decision-maker wants to minimize the deviation of another output of the same controlled dynamical system from another reference signal. This problem was viewed as a problem of designing a Nash equilibrium strategy for an affine quadratic differential game with two players. Since the controlled dynamical system is assumed to be subject to multiplicative white noise perturbations and Markovian jumps, we must find a Nash equilibrium strategy for a stochastic affine quadratic differential game. We have obtained explicit formulae for the equilibrium strategy. To this end, the solutions of two TVPs were involved. The first TVP, namely, the TVP (30), is associated with a hybrid system formed by two nonlinear backward differential equations and two nonlinear algebraic equations. The second TVP, that is, the TVP (31), is associated with a hybrid system formed by two backward linear differential equations coupled with two affine matrix algebraic equations. The first TVP is the same as the one involved in the description of the Nash equilibrium strategy for an LQ differential game. The second TVP takes into account the reference signals together with the final targets.

There are a few directions that can be considered for future research:

- Direct extensions from this article can be considered as follows: the case when two or more players (with different cost functionals) are willing to cooperate or the case when for the tracking problem associated with a controlled system of type (1).

- Another direction of future research is the tracking problem with preview in the case when the controlled dynamical system is affected by state-multiplicative and/or control-multiplicative white noise perturbations. To our knowledge, this case has not yet been considered in the existing literature. Some results in this direction have been reported, for example, in [2,6,7] for the case of only one decision-maker and in [29,30] for the case with more than one decision-maker.

- Finally, another direction is the linear quadratic tracking problem with a delay component (for one or more players) for Itô stochastic systems. Some results in this direction have been reported, for example, in [10,11].

Author Contributions

Conceptualization, V.D., I.G.I. and I.-L.P.; methodology, V.D., I.G.I. and I.-L.P.; investigation, V.D., I.G.I. and I.-L.P.; writing—original draft preparation, V.D., I.G.I. and I.-L.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Axelband, E. The structure of the optimal tracking problem for distributed-parameter systems. IEEE Trans. Autom. Control 1968, 13, 50–56.

- Cohen, A.; Shaked, U. Linear discrete-time H∞-optimal tracking with preview. IEEE Trans. Autom. Control 1997, 42, 270–276.

- Emami-Naeini, A.; Franklin, G. Deadbeat control and tracking of discrete-time systems. IEEE Trans. Autom. Control 1982, 27, 176–181.

- Liu, D.; Liu, X. Optimal and minimum-energy optimal tracking of discrete linear time-varying systems. Automatica 1995, 31, 1407–1419.

- Shaked, U.; de Souza, C.E. Continuous-time tracking problems in an H∞ setting: A game theory approach. IEEE Trans. Autom. Control 1995, 40, 841–852.

- Gershon, E.; Shaked, U.; Yaesh, I. H∞ tracking of linear systems with stochastic uncertainties and preview. IFAC Proc. Vol. 2002, 35, 407–412.

- Gershon, E.; Limebeer, D.J.N.; Shaked, U.; Yaesh, I. Stochastic H∞ tracking with preview for state-multiplicative systems. IEEE Trans. Autom. Control 2004, 49, 2061–2068.

- Dragan, V.; Morozan, T. Discrete-time Riccati type equations and the tracking problem. ICIC Express Lett. 2008, 2, 109–116.

- Dragan, V.; Morozan, T.; Stoica, A.M. Mathematical Methods in Robust Control of Linear Stochastic Systems; Springer: New York, NY, USA, 2013.

- Han, C.; Wang, W. Optimal LQ tracking control for continuous-time systems with pointwise time-varying input delay. Int. J. Control. Autom. Syst. 2017, 15, 2243–2252.

- Jin, N.; Liu, S.; Zhang, H. Tracking Problem for Itô Stochastic System with Input Delay. In Proceedings of the Chinese Control Conference (CCC), Guangzhou, China, 27–30 July 2019; pp. 1370–1374.

- Pindyck, R. An application of the linear quadratic tracking problem to economic stabilization policy. IEEE Trans. Autom. Control 1972, 17, 287–300.

- Alba-Flores, R.; Barbieri, E. Real-time infinite horizon linear quadratic tracking controller for vibration quenching in flexible beams. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics, Taipei, Taiwan, 8–11 October 2006; pp. 38–43.

- Wang, Y.-L.; Yang, G.-H. Robust H∞ model reference tracking control for networked control systems with communication constraints. Int. J. Control. Autom. Syst. 2009, 7, 992–1000.

- Ou, M.; Li, S.; Wang, C. Finite-time tracking control for multiple non-holonomic mobile robots based on visual servoing. Int. J. Control 2013, 86, 2175–2188.

- Fu, Y.-M.; Lu, Y.; Zhang, T.; Zhang, M.-R.; Li, C.-J. Trajectory tracking problem for Markov jump systems with Itô stochastic disturbance and its application in orbit manoeuvring. IMA J. Math. Control. Inf. 2018, 35, 1201–1216.

- Basar, T. Nash equilibria of risk-sensitive nonlinear stochastic differential games. J. Optim. Theory Appl. 1999, 100, 479–498.

- Buckdahn, R.; Cardaliaguet, P.; Rainer, C. Nash equilibrium payoffs for nonzero-sum stochastic differential games. SIAM J. Control. Optim. 2004, 43, 624–642.

- Sun, H.Y.; Li, M.; Zhang, W.H. Linear quadratic stochastic differential game: Infinite time case. ICIC Express Lett. 2010, 5, 1449–1454.

- Sun, H.; Yan, L.; Li, L. Linear quadratic stochastic differential games with Markov jumps and multiplicative noise: Infinite time case. Int. J. Innov. Comput. Inf. Control 2015, 11, 348–361.

- Nakura, G. Nash tracking game with preview by state feedback for linear continuous-time systems. In Proceedings of the 50th ISCIE International Symposium on Stochastic Systems Theory and Its Applications, Kyoto, Japan, 1–2 November 2018; pp. 49–55.

- Friedman, A. Stochastic Differential Equations and Applications; Academic Press: New York, NY, USA, 1975; Volume I.

- Oksendal, B. Stochastic Differential Equations; Springer: Berlin/Heidelberg, Germany, 2003.

- Chung, K.L. Markov Chains with Stationary Transition Probabilities; Springer: Berlin/Heidelberg, Germany, 1967.

- Doob, J.L. Stochastic Processes; Wiley: New York, NY, USA, 1967.

- Boukas, E.K. Stochastic Switching Systems: Analysis and Design; Birkhäuser: Boston, MA, USA, 2005.

- Costa, O.L.V.; Fragoso, M.D.; Marques, R.P. Discrete-Time Markov Jump Linear Systems; Series: Probability and Its Applications; Springer: London, UK, 2005.

- Costa, O.L.V.; Fragoso, M.D.; Todorov, M.G. Continuous-Time Markov Jump Linear Systems; Series: Probability and Its Applications; Springer: Berlin/Heidelberg, Germany, 2013.

- Nakura, G. Soft-Constrained Nash Tracking Game with Preview by State Feedback for Linear Continuous-Time Markovian Jump Systems. In Proceedings of the 2018 57th Annual Conference of the Society of Instrument and Control Engineers of Japan (SICE), Nara, Japan, 11–14 September 2018; pp. 450–455.

- Nakura, G. Nash Tracking Game with Preview by State Feedback for Linear Continuous-Time Markovian Jump Systems. In Proceedings of the 50th ISCIE International Symposium on Stochastic Systems Theory and Its Applications, Kyoto, Japan, 1–2 November 2018; pp. 56–63.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).