Transformer-Driven Algal Target Detection in Real Water Samples: From Dataset Construction and Augmentation to Model Optimization

Abstract

1. Introduction

- First, we constructed a comprehensive dataset of microscopic images of various algae, collected from different real water environments, to improve the generalization and practical applicability of the trained model.

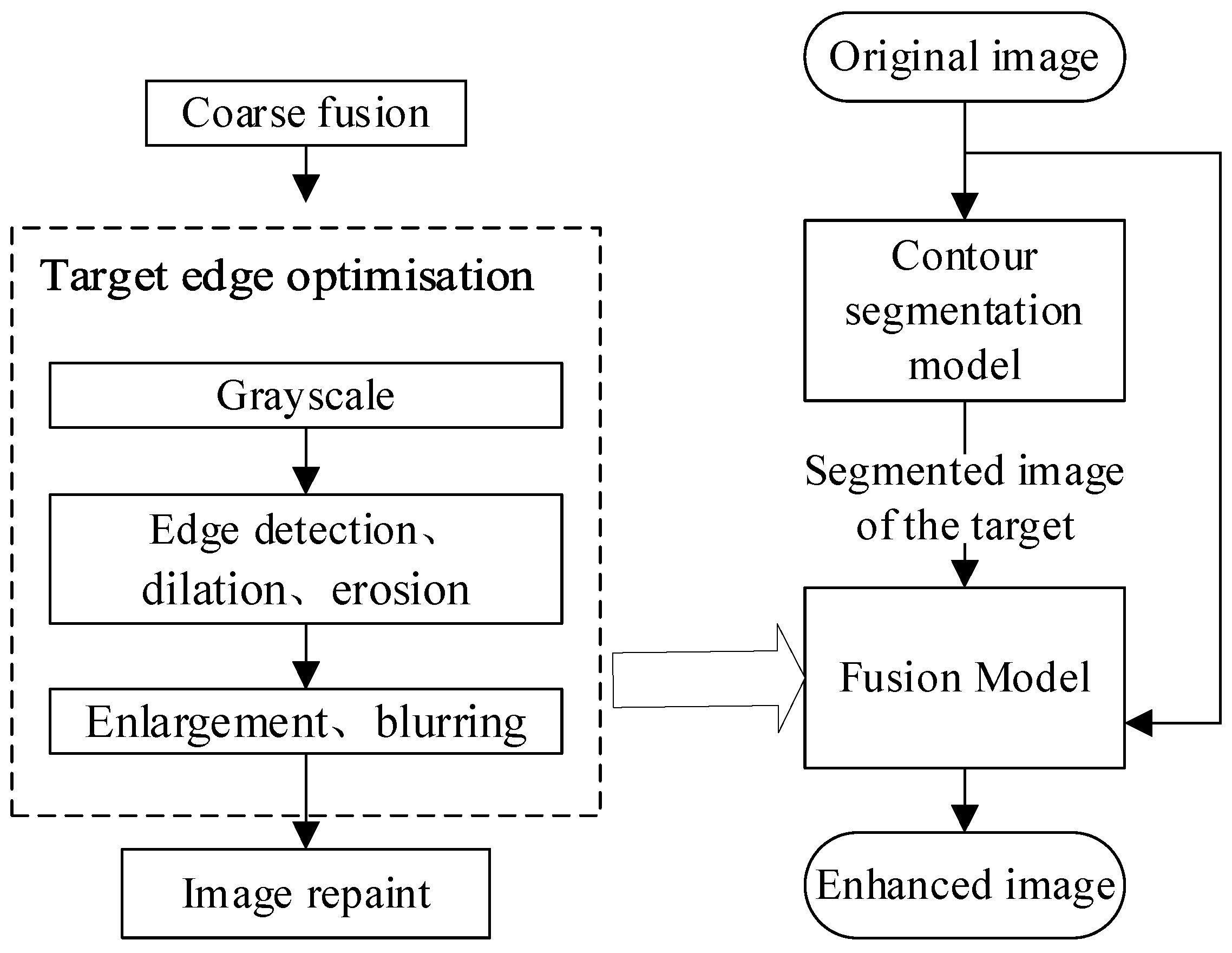

- Second, to address the under-representation of disadvantaged algal species in the dataset, we proposed an automated data augmentation method based on segmentation and fusion.

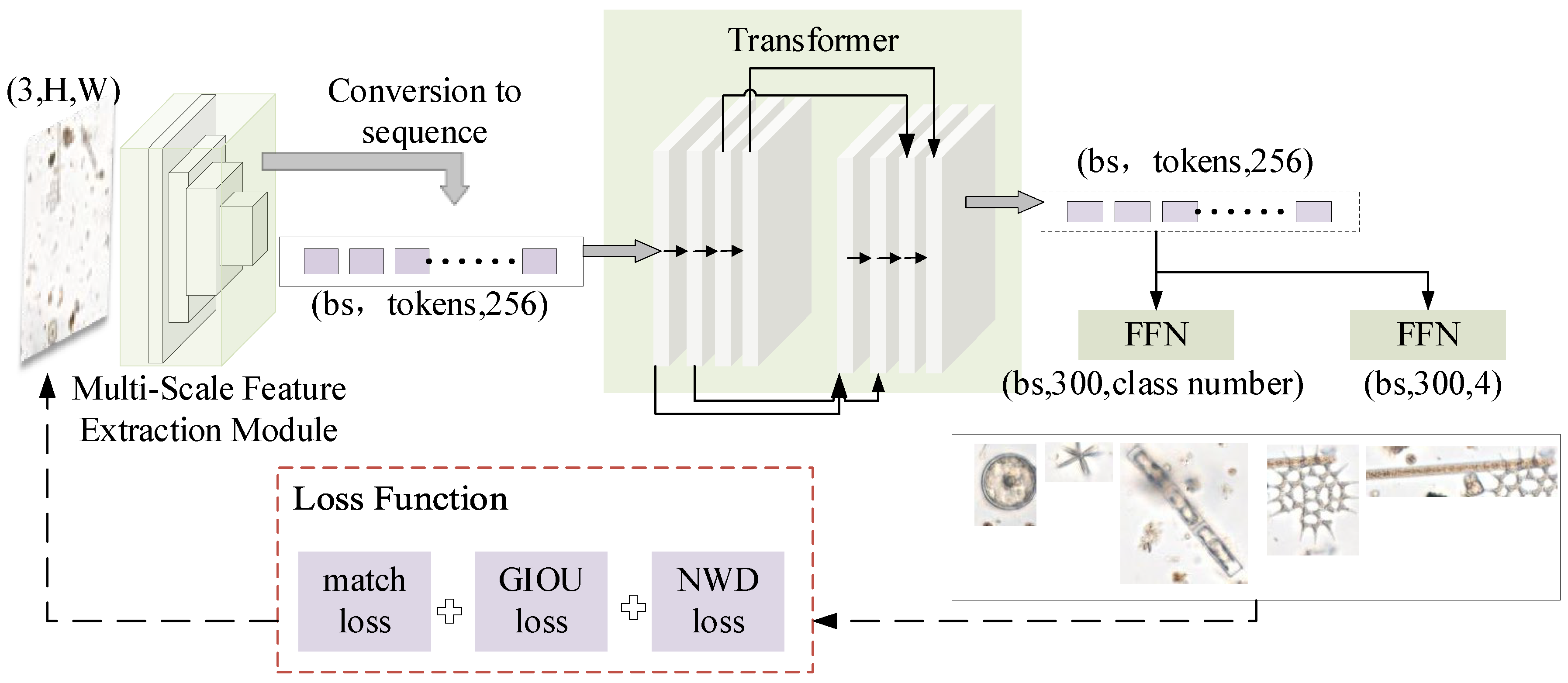
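The segmentation-fusion idea can be illustrated with a minimal sketch: an instance that has already been segmented from one image is pasted onto another background, and a bounding box for the pasted copy is recorded. This is only an illustration under stated assumptions, not the authors' pipeline. The function name `paste_instance`, the nested-list grayscale images, and the binary mask format are all hypothetical.

```python
from copy import deepcopy


def paste_instance(background, patch, mask, top, left):
    """Paste the masked pixels of `patch` onto a copy of `background`.

    Hypothetical helper: images are 2D lists of grayscale values and
    `mask` is a 2D list of 0/1 flags with the same shape as `patch`.
    Returns the new image and the pasted box as
    (x_min, y_min, x_max, y_max), with exclusive maxima.
    """
    out = deepcopy(background)  # leave the original background untouched
    h, w = len(patch), len(patch[0])
    if top < 0 or left < 0 or top + h > len(out) or left + w > len(out[0]):
        raise ValueError("patch does not fit inside the background")
    for r in range(h):
        for c in range(w):
            if mask[r][c]:  # copy only pixels that belong to the organism
                out[top + r][left + c] = patch[r][c]
    return out, (left, top, left + w, top + h)


background = [[0] * 4 for _ in range(4)]
patch = [[9, 9], [9, 9]]
mask = [[1, 0], [1, 1]]
augmented, bbox = paste_instance(background, patch, mask, top=1, left=2)
```

A practical pipeline would also need blending at the patch boundary and a check that the pasted instance does not cover existing annotations. Both are omitted here.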

- Third, for target detection, we adopted the Transformer-based Deformable DETR model and optimized it with the normalized Wasserstein distance (NWD) loss function to better suit the algae dataset.

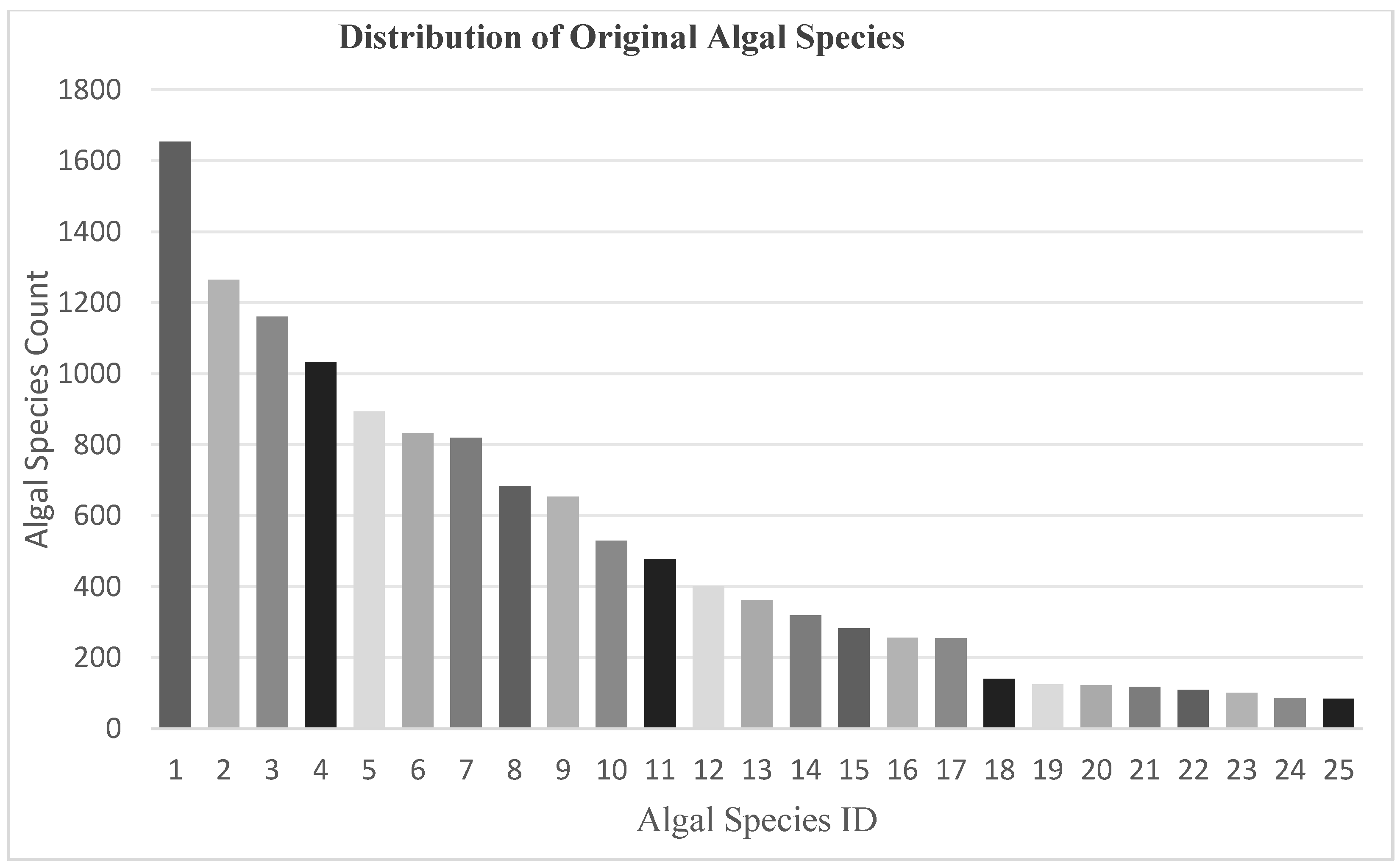
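As a rough illustration of the NWD idea, the sketch below follows the commonly published formulation in which each box (cx, cy, w, h) is modeled as a 2D Gaussian with mean (cx, cy) and covariance diag(w²/4, h²/4). The squared 2-Wasserstein distance between two such Gaussians then reduces to a squared Euclidean distance between the vectors (cx, cy, w/2, h/2). The normalizing constant `c` and its default value are assumptions chosen for illustration. How NWD is weighted inside the Deformable DETR loss is not shown here.

```python
import math


def nwd(box_a, box_b, c=12.8):
    """Normalized Wasserstein distance between two (cx, cy, w, h) boxes.

    `c` is a dataset-dependent constant; 12.8 is an assumed placeholder.
    """
    cxa, cya, wa, ha = box_a
    cxb, cyb, wb, hb = box_b
    # Squared 2-Wasserstein distance between the two box Gaussians.
    w2_sq = (
        (cxa - cxb) ** 2
        + (cya - cyb) ** 2
        + ((wa - wb) / 2) ** 2
        + ((ha - hb) / 2) ** 2
    )
    return math.exp(-math.sqrt(w2_sq) / c)


def nwd_loss(box_a, box_b, c=12.8):
    """Loss form: 0 for identical boxes, approaching 1 as boxes diverge."""
    return 1.0 - nwd(box_a, box_b, c)


# Two non-overlapping boxes have IoU = 0 but still a non-zero NWD.
similarity = nwd((0, 0, 2, 2), (3, 0, 2, 2), c=3.0)
```

Unlike IoU, the similarity stays smooth and non-zero when small boxes do not overlap, which is the usual motivation for using it on tiny targets.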

2. Dataset Construction

2.1. Dataset Collection

2.2. Dataset Augmentation

3. Improved Deformable DETR Detection Algorithm

3.1. Deformable DETR Network Architecture

3.2. Improved Loss Function for Deformable DETR

4. Experiments and Analysis

4.1. Evaluation Metrics

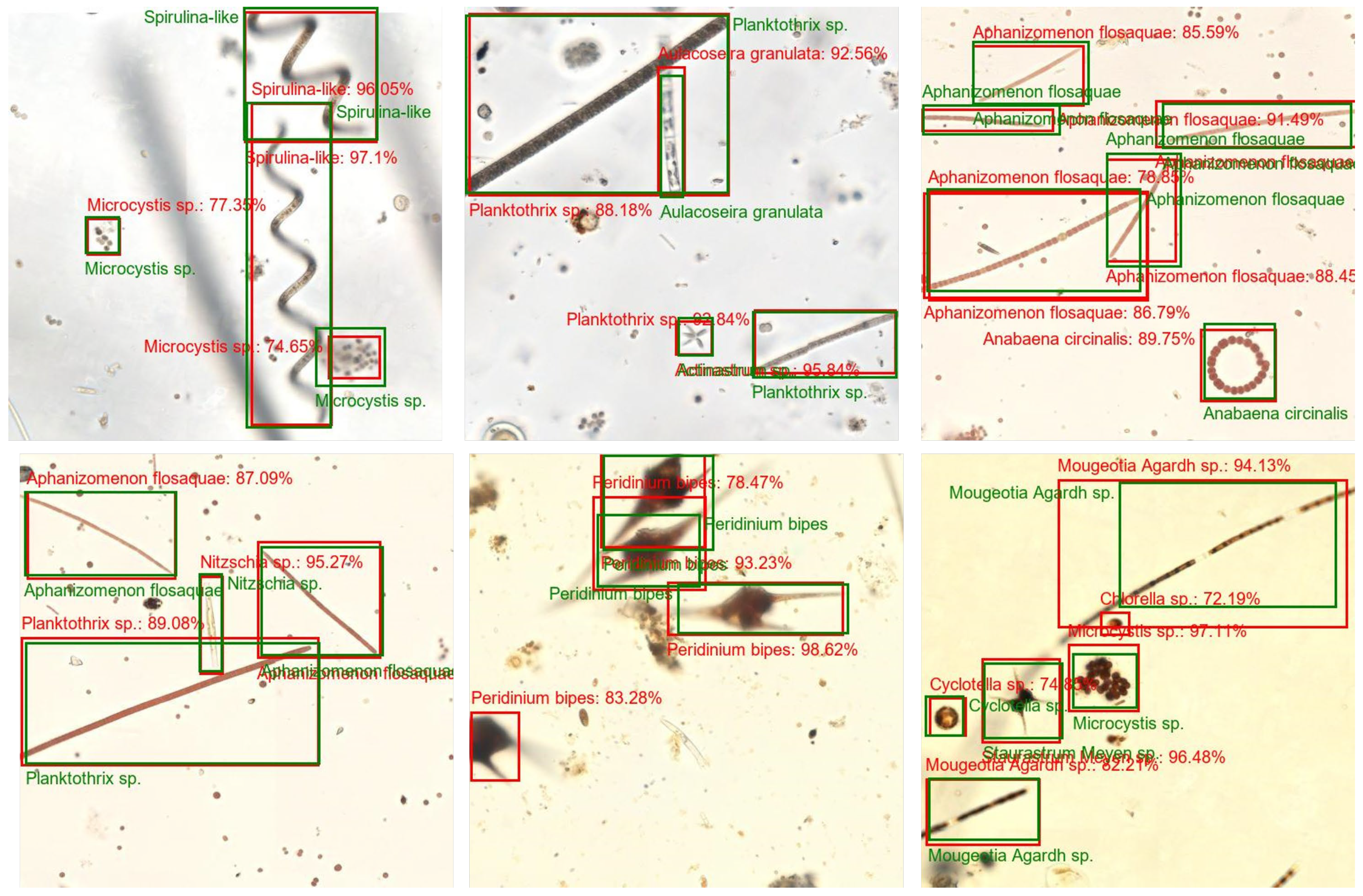
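The result tables report precision (P) and recall (R) at an IoU threshold of 0.65. The sketch below shows one common way such values are computed, assuming a single class, a single image, and greedy matching of predictions in descending score order. It is not the authors' evaluation code, and the function names are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds, gts, thr=0.65):
    """preds: list of (score, box); gts: list of boxes."""
    matched = set()  # ground-truth indices that are already claimed
    tp = 0
    for _, box in sorted(preds, key=lambda p: p[0], reverse=True):
        best_j, best_iou = -1, thr
        for j, gt in enumerate(gts):
            if j in matched:
                continue
            overlap = iou(box, gt)
            if overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j >= 0:  # true positive: claims one unmatched ground truth
            matched.add(best_j)
            tp += 1
    precision = tp / len(preds) if preds else 0.0
    recall = tp / len(gts) if gts else 0.0
    return precision, recall


gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0.9, (0, 0, 10, 10)), (0.8, (0, 0, 10, 5)), (0.7, (50, 50, 60, 60))]
p, r = precision_recall(preds, gts)
```

In this toy case only the first prediction counts as a true positive, so precision is 1/3 and recall is 1/2.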

4.2. Experimental Results and Discussion

4.2.1. Comparative Experiment on Dataset Augmentation Effectiveness

4.2.2. Comparative Experiments Based on the Improved Deformable DETR

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Ramanan, R.; Kim, B.-H.; Cho, D.-H.; Oh, H.-M.; Kim, H.-S. Algae–Bacteria Interactions: Evolution, Ecology and Emerging Applications. Biotechnol. Adv. 2016, 34, 14–29.

- Paerl, H.W.; Otten, T.G. Harmful Cyanobacterial Blooms: Causes, Consequences, and Controls. Microb. Ecol. 2013, 35, 995–1010.

- Reynolds, C.S. The Ecology of Phytoplankton; Ecology, Biodiversity and Conservation; Cambridge University Press: Cambridge, UK, 2006; ISBN 978-0-521-60519-9.

- Thessen, A. Adoption of Machine Learning Techniques in Ecology and Earth Science. One Ecosyst. 2016, 1, e8621.

- Sellner, K.G.; Doucette, G.J.; Kirkpatrick, G.J. Harmful Algal Blooms: Causes, Impacts and Detection. J. Ind. Microbiol. Biotechnol. 2003, 30, 383–406.

- Hou, T.; Chang, H.; Jiang, H.; Wang, P.; Li, N.; Song, Y.; Li, D. Smartphone Based Microfluidic Lab-on-Chip Device for Real-Time Detection, Counting and Sizing of Living Algae. Measurement 2022, 187, 110304.

- Lin, K.; Tang, Y.; Tang, J.; Huang, H.; Qin, Z. Algae Object Detection Algorithm Based on Improved YOLOv5. In Proceedings of the 2023 6th International Conference on Software Engineering and Computer Science (CSECS), Chengdu, China, 22–24 December 2023; pp. 1–5.

- Guo, Y.; Gao, L.; Li, X. A Deep Learning Model for Green Algae Detection on SAR Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4210914.

- Ruiz-Santaquiteria, J.; Bueno, G.; Deniz, O.; Vallez, N.; Cristobal, G. Semantic versus Instance Segmentation in Microscopic Algae Detection. Eng. Appl. Artif. Intell. 2020, 87, 103271.

- Liu, T. Research on Marine Microalgae Recognition Algorithm for Few-Shot Scenarios. Master’s Thesis, Dalian Ocean University, Dalian, China, 2024.

- Qian, P.; Zhao, Z.; Liu, H.; Wang, Y.; Peng, Y.; Hu, S.; Zhang, J.; Deng, Y.; Zeng, Z. Multi-Target Deep Learning for Algal Detection and Classification. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; pp. 1954–1957.

- Abdullah; Ali, S.; Khan, Z.; Hussain, A.; Athar, A.; Kim, H.-C. Computer Vision Based Deep Learning Approach for the Detection and Classification of Algae Species Using Microscopic Images. Water 2022, 14, 2219.

- Wu, Z.; Chen, M. Lightweight Detection Method for Microalgae Based on Improved YOLO v7. J. Dalian Ocean. Univ. 2023, 38, 129–139.

- Chu, Z.; Zhang, X.; Ying, G.; Jia, R.; Qi, Y.; Xu, M.; Hu, X.; Huang, P.; Ma, M.; Yang, R. Detection Algorithm of Planktonic Algae Based on Improved YOLOv3. Laser Optoelectron. Prog. 2023, 60, 257–264.

- Park, J.; Baek, J.; Kim, J.; You, K.; Kim, K. Deep Learning-Based Algal Detection Model Development Considering Field Application. Water 2022, 14, 1275.

- Shorten, C.; Khoshgoftaar, T.M. A Survey on Image Data Augmentation for Deep Learning. J. Big Data 2019, 6, 60.

- Yun, S.; Han, D.; Chun, S.; Oh, S.J.; Yoo, Y.; Choe, J. CutMix: Regularization Strategy to Train Strong Classifiers With Localizable Features. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6022–6031.

- Zhang, H.; Cisse, M.; Dauphin, Y.N.; Lopez-Paz, D. Mixup: Beyond Empirical Risk Minimization. arXiv 2018, arXiv:1710.09412.

- Kisantal, M.; Wojna, Z.; Murawski, J.; Naruniec, J.; Cho, K. Augmentation for Small Object Detection. arXiv 2019, arXiv:1902.07296.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable Transformers for End-to-End Object Detection. arXiv 2021, arXiv:2010.04159.

- Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable Convolutional Networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 764–773.

- Xu, C.; Wang, J.; Yang, W.; Yu, H.; Yu, L.; Xia, G.-S. Detecting Tiny Objects in Aerial Images: A Normalized Wasserstein Distance and a New Benchmark. ISPRS J. Photogramm. Remote Sens. 2022, 190, 79–93.

- Zhou, S.; Jiang, J.; Hong, X.; Fu, P.; Yan, H. Vision Meets Algae: A Novel Way for Microalgae Recognization and Health Monitor. Front. Mar. Sci. 2023, 10, 1105545.

- Salari, A.; Djavadifar, A.; Liu, X.; Najjaran, H. Object Recognition Datasets and Challenges: A Review. Neurocomputing 2022, 495, 129–152.

- Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object Detection in 20 Years: A Survey. Proc. IEEE 2023, 111, 257–276.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the Computer Vision—ECCV 2020, Glasgow, UK, 23–28 August 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 213–229.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2017, arXiv:1706.03762.

| Article | Dataset Size | Algal Species |
|---|---|---|
| Wu and Chen [13] | 1512 images of microalgae under microscope | Includes 14 species, such as Fibrocystis |
| Chu et al. [14] | 635 images of planktonic algae | Includes 5 species, such as Dunaliella salina |
| Qian et al. [11] | 1859 microscopic images of algae | 9 categories |
| Ruiz-Santaquiteria et al. [9] | 635 images of planktonic algae under microscope | Includes 5 species, such as Dunaliella salina |
| Abdullah et al. [12] | 400 images of microalgae under microscope | 4 species |
| Park et al. [15] | 437 images of microalgae under microscope | 30 species |

| Algal Species | ID | Common Morphology Display | Algal Species | ID | Common Morphology Display |
|---|---|---|---|---|---|
| Planktothrix sp. | 1 |  | Aulacoseira granulata | 2 |  |
| Aphanizomenon flosaquae | 3 |  | Microcystis sp. | 4 |  |
| Cyclotella sp. | 5 |  | Peridinium bipes | 6 |  |
| Nitzschia sp. | 7 |  | Chlorella sp. | 8 |  |
| Spirulina-like | 9 |  | Cryptomonas sp. | 10 |  |
| Pediastrum sp. | 11 |  | Scenedesmus quadricauda | 12 |  |
| Anabaena circinalis | 13 |  | Mougeotia sp. | 14 |  |
| Actinastrum sp. | 15 |  | Anabaena sp. | 16 |  |
| Chlamydomonas sp. | 17 |  | Planctonema lauterbornii | 18 |  |
| Cosmarium Corda | 19 |  | Scenedesmus acuminatus | 20 |  |
| Ulothrix sp. | 21 |  | Staurastrum sp. | 22 |  |
| Spirogyra sp. | 23 |  | Dolichospermum spiroides | 24 |  |
| Euglena sp. | 25 |  |  |  |  |

| Component | Specification |
|---|---|
| Operating system | Windows 11 |
| CPU | Intel(R) Core(TM) i7-10700K CPU @ 3.80 GHz 3.70 GHz |
| GPU | NVIDIA GeForce RTX 2070 |
| RAM | 32 GB |

| Model | ∆P (IoU = 0.65) | ∆R (IoU = 0.65) | ∆mAP (0.5) | ∆mAP (0.5–0.95) |
|---|---|---|---|---|
| YOLOv5 | 7.1% | −1.2% | 1.8% | 1.5% |
| Faster R-CNN | 4.7% | −0.1% | 0.7% | 1.3% |
| Deformable_DETR_NWD(4) | 0.6% | 0.9% | 0 | 0.1% |

| Disadvantaged Algal Species | ∆P (IoU = 0.65) (%) |
|---|---|
| Chlamydomonas sp. | 3.8 |
| Cosmarium Corda | 0.9 |
| Scenedesmus acuminatus | 2.5 |
| Staurastrum sp. | 5.1 |
| Spirogyra sp. | 27.6 |
| Euglena sp. | 14.7 |

| Model | P (IoU = 0.65) | R (IoU = 0.65) | mAP (0.5) | mAP (0.5–0.95) |
|---|---|---|---|---|
| YOLOv5 | 0.695 | 0.659 | 0.684 | 0.397 |
| Faster R-CNN | 0.656 | 0.735 | 0.731 | 0.352 |
| Deformable_DETR | 0.800 | 0.880 | 0.790 | 0.486 |
| Deformable_DETR_NWD(4) | 0.810 | 0.907 | 0.799 | 0.488 |
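To make the effect of the NWD loss in the comparison table easier to read, the short sketch below computes the absolute gain of Deformable_DETR_NWD(4) over the baseline Deformable_DETR for each metric. The numbers are copied from the table. The dictionary keys are hypothetical labels chosen for this example.

```python
# Metric values copied from the model comparison table.
baseline = {"P@0.65": 0.800, "R@0.65": 0.880, "mAP@0.5": 0.790, "mAP@0.5:0.95": 0.486}
with_nwd = {"P@0.65": 0.810, "R@0.65": 0.907, "mAP@0.5": 0.799, "mAP@0.5:0.95": 0.488}

# Absolute gain per metric, rounded to the table's three decimal places.
gains = {name: round(with_nwd[name] - baseline[name], 3) for name in baseline}

for name, gain in gains.items():
    print(f"{name}: {gain:+.3f}")
```

On these figures the largest absolute gain is in recall (+0.027), while mAP (0.5–0.95) changes by only +0.002.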

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, L.; Liang, Z.; Liu, T.; Lu, C.; Yu, Q.; Qiao, Y. Transformer-Driven Algal Target Detection in Real Water Samples: From Dataset Construction and Augmentation to Model Optimization. Water 2025, 17, 430. https://doi.org/10.3390/w17030430
