An Accuracy Assessment of the ESTARFM Data-Fusion Model in Monitoring Lake Dynamics

Abstract

1. Introduction

2. Materials

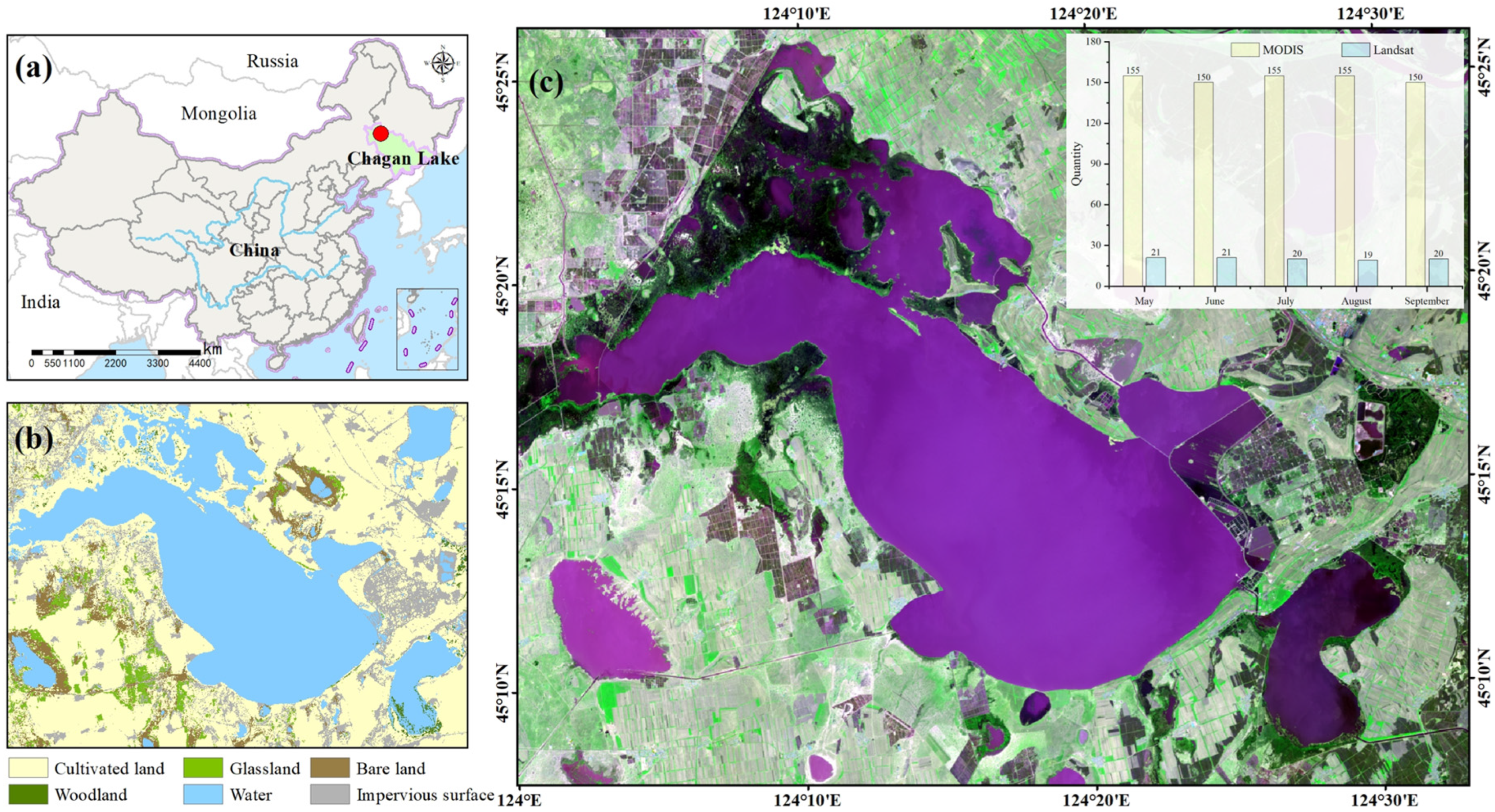

2.1. Study Area

2.2. Data Sources

2.3. Data Processing

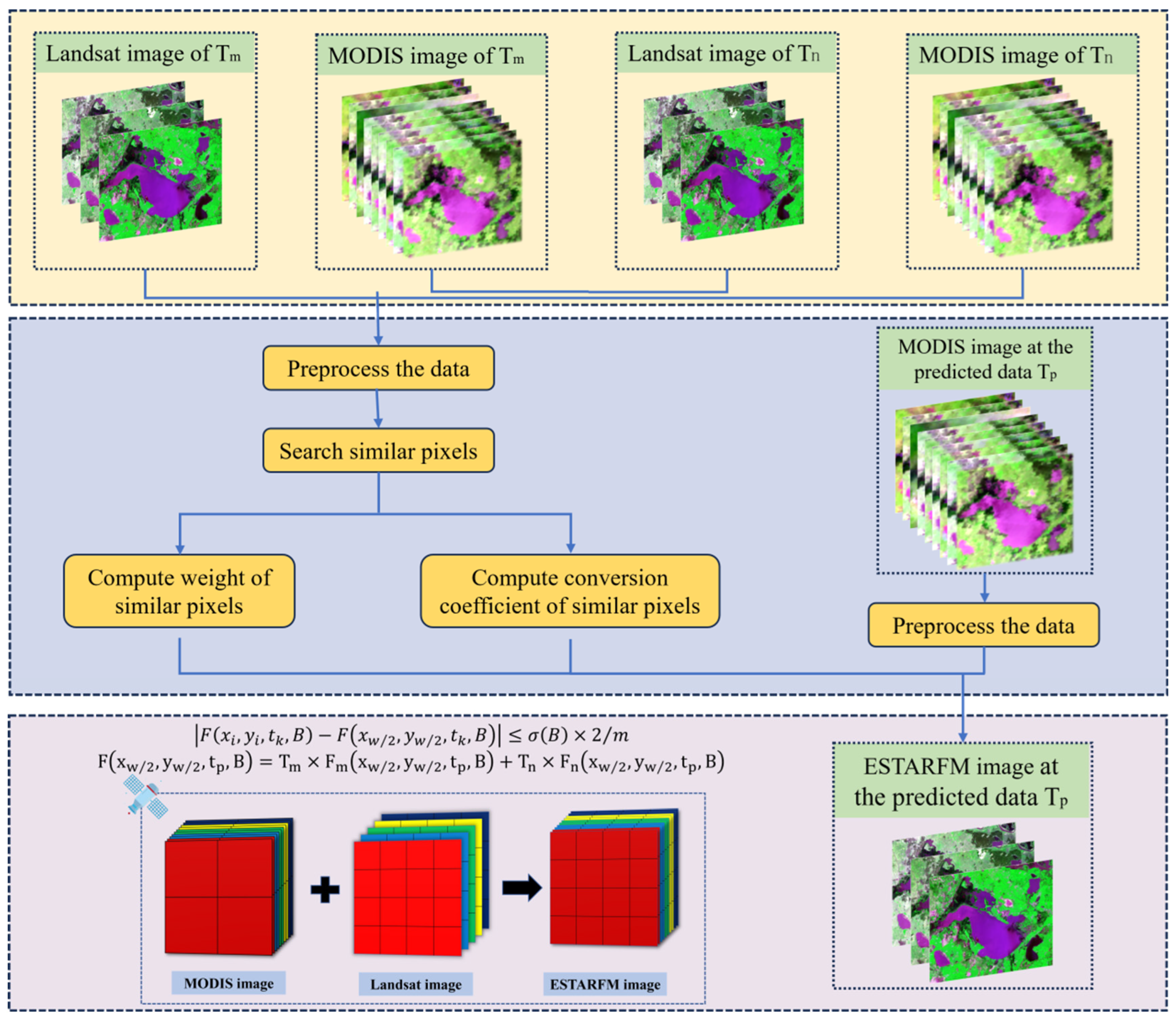

3. Method

3.1. Analysis of the ESTARFM Algorithm

3.2. Fusion Result Accuracy Evaluation Index

4. Results

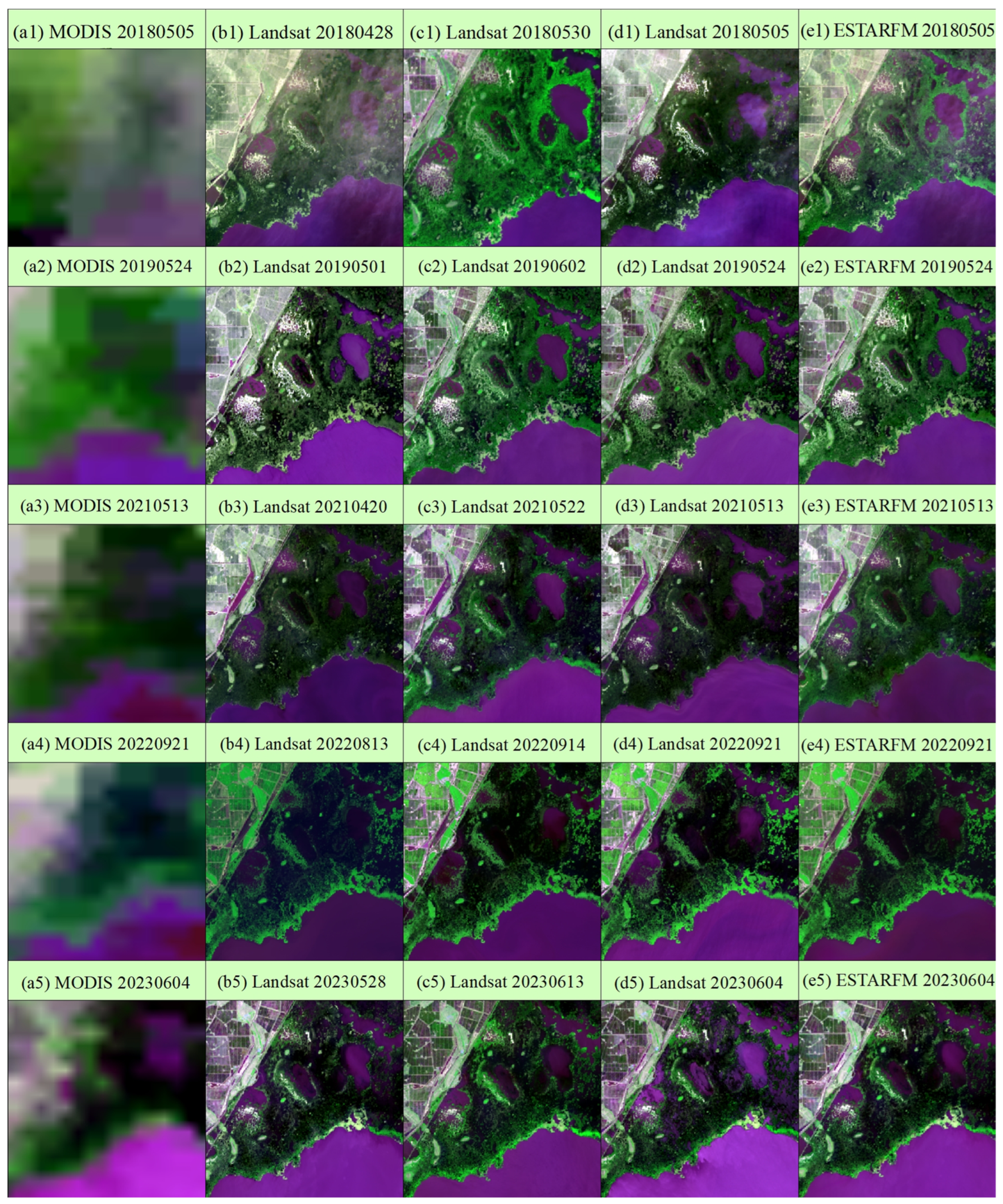

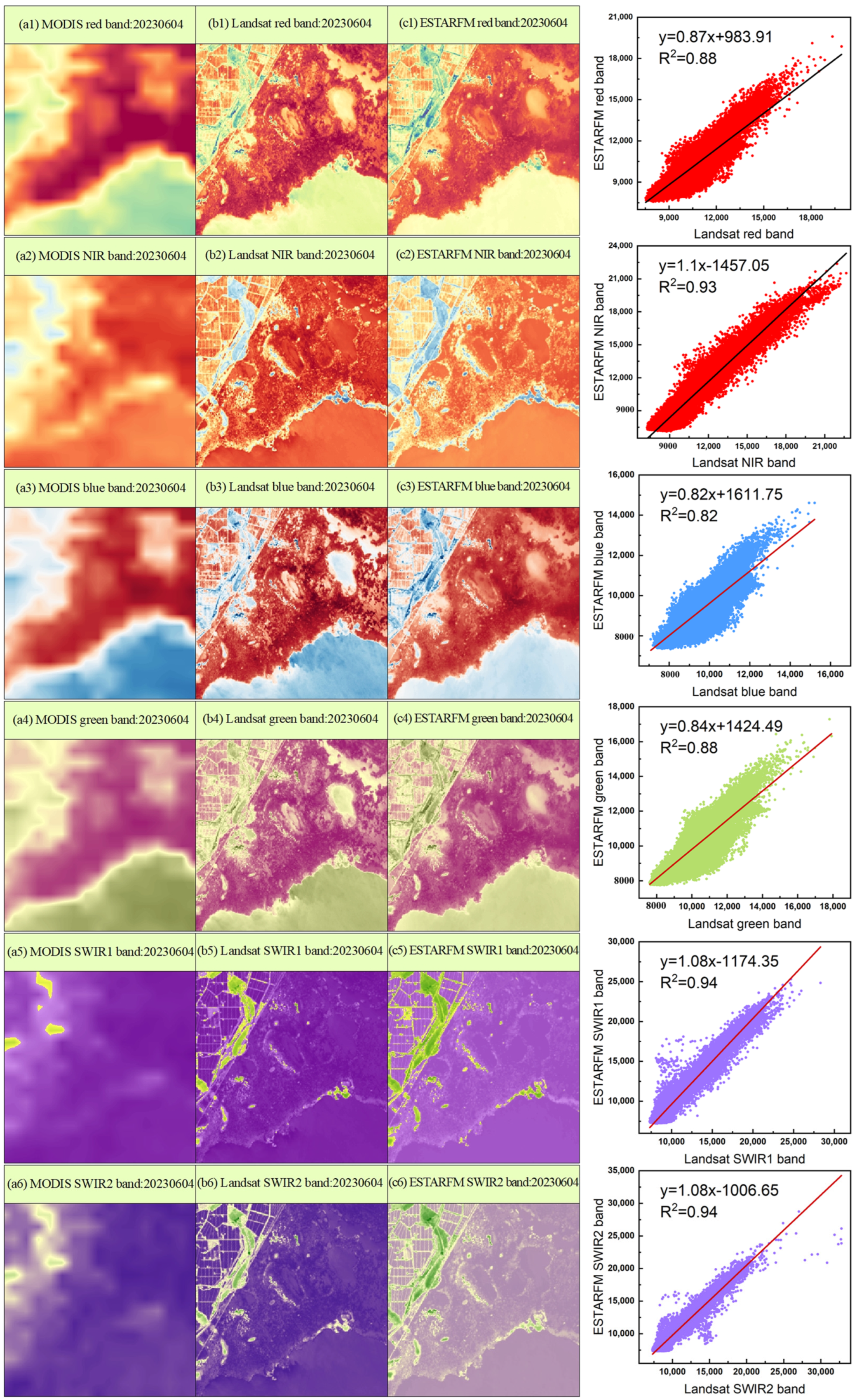

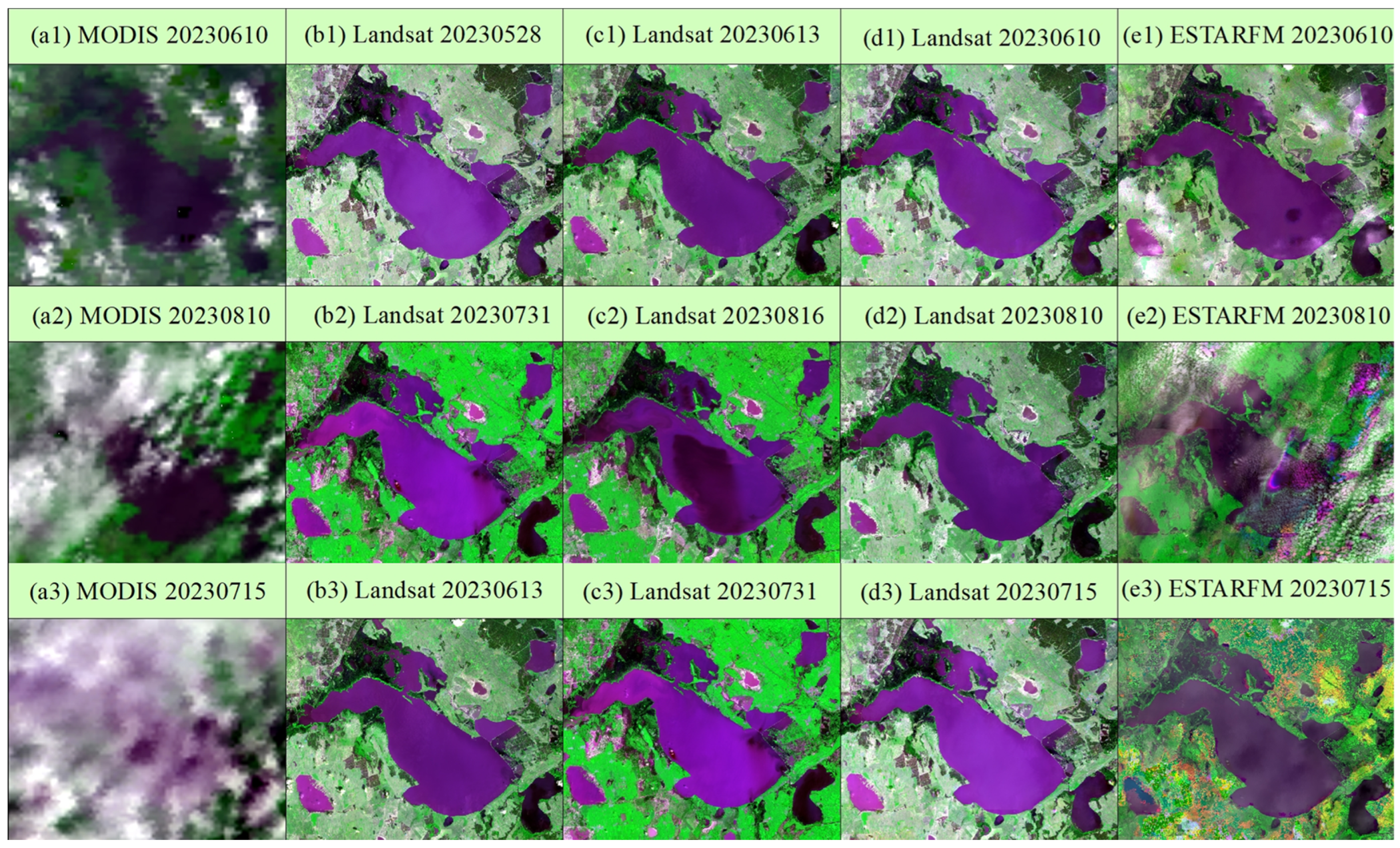

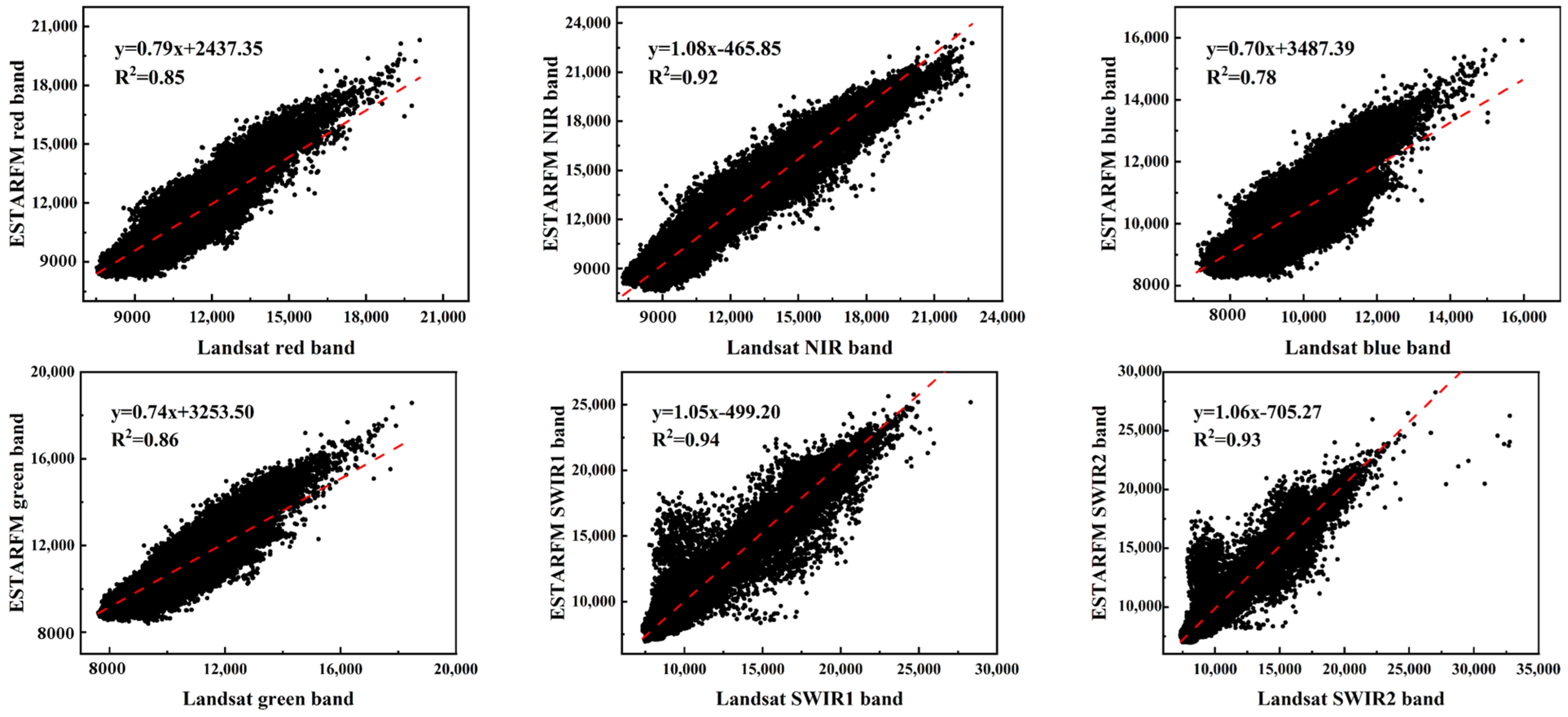

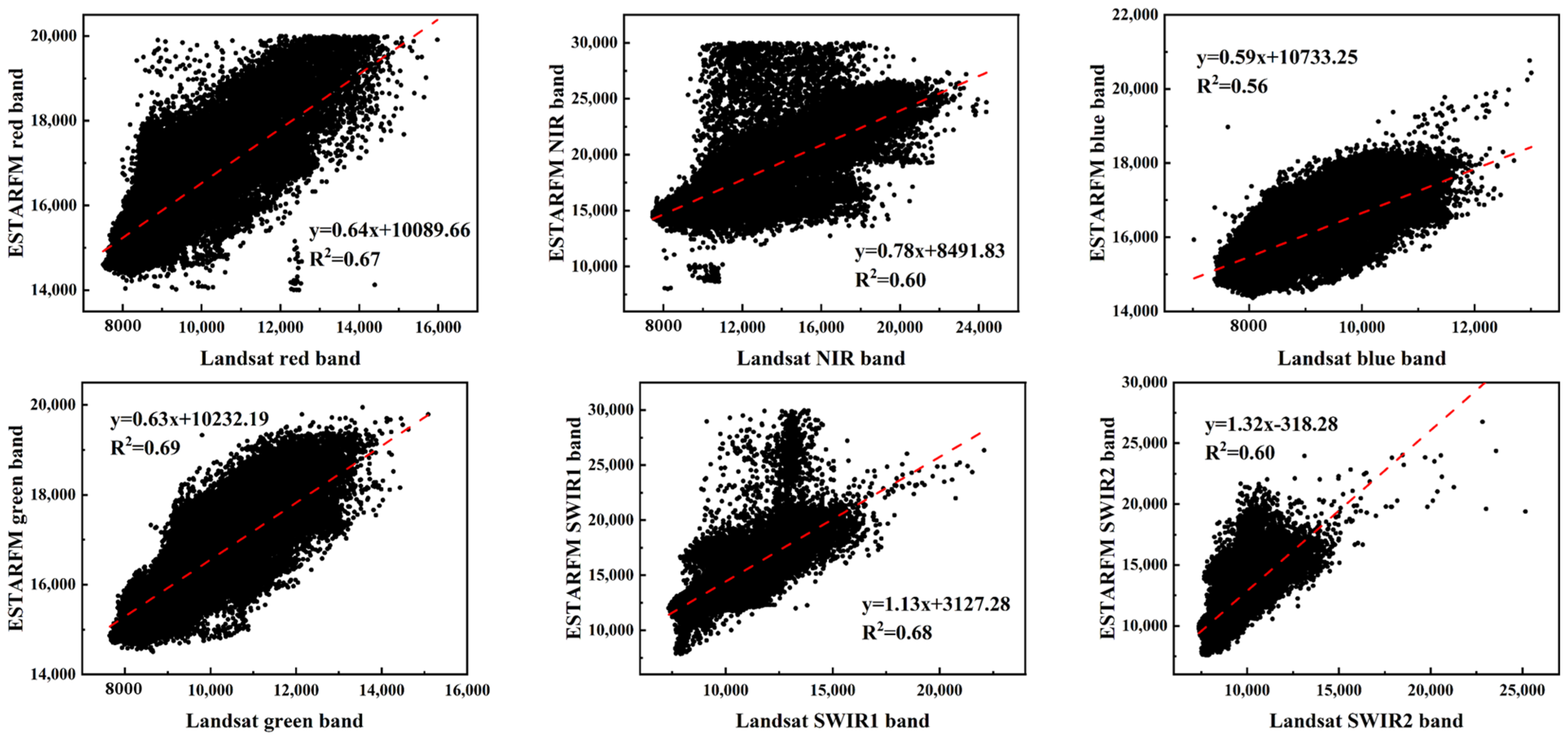

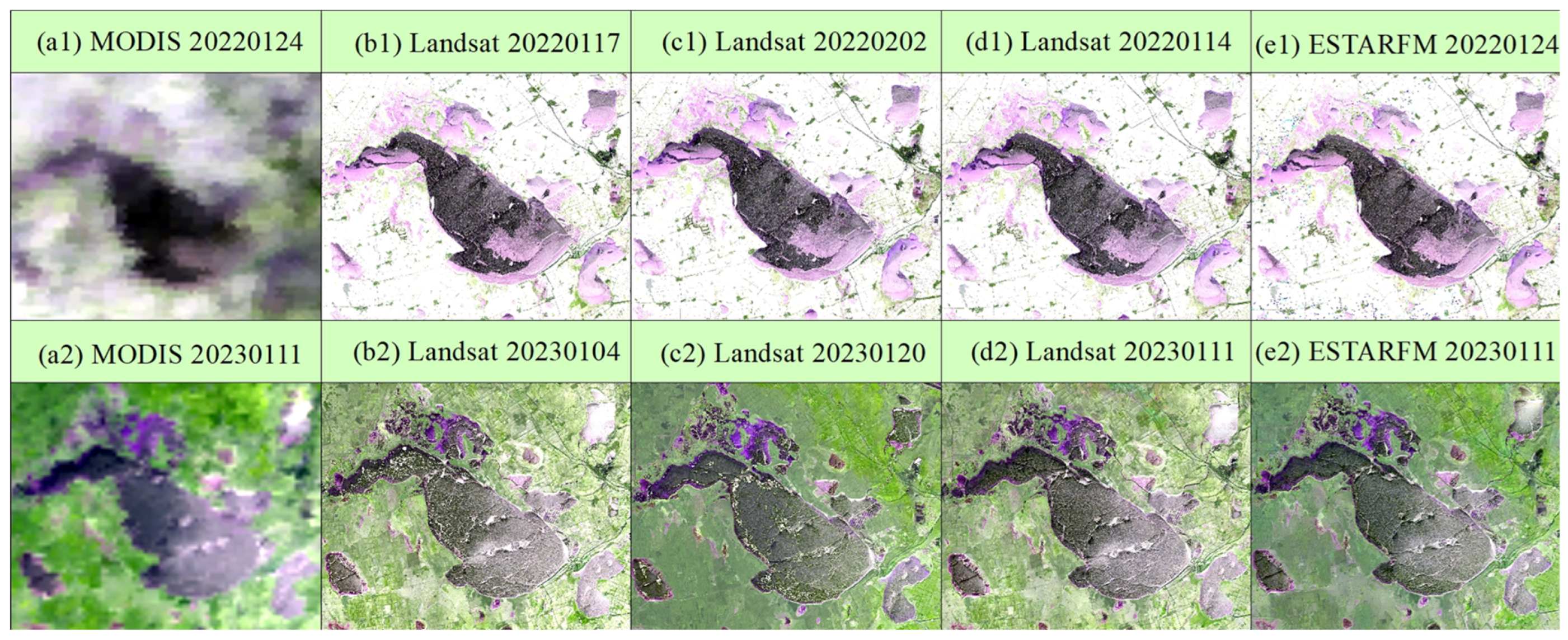

4.1. Accuracy Evaluation of Data Fusion

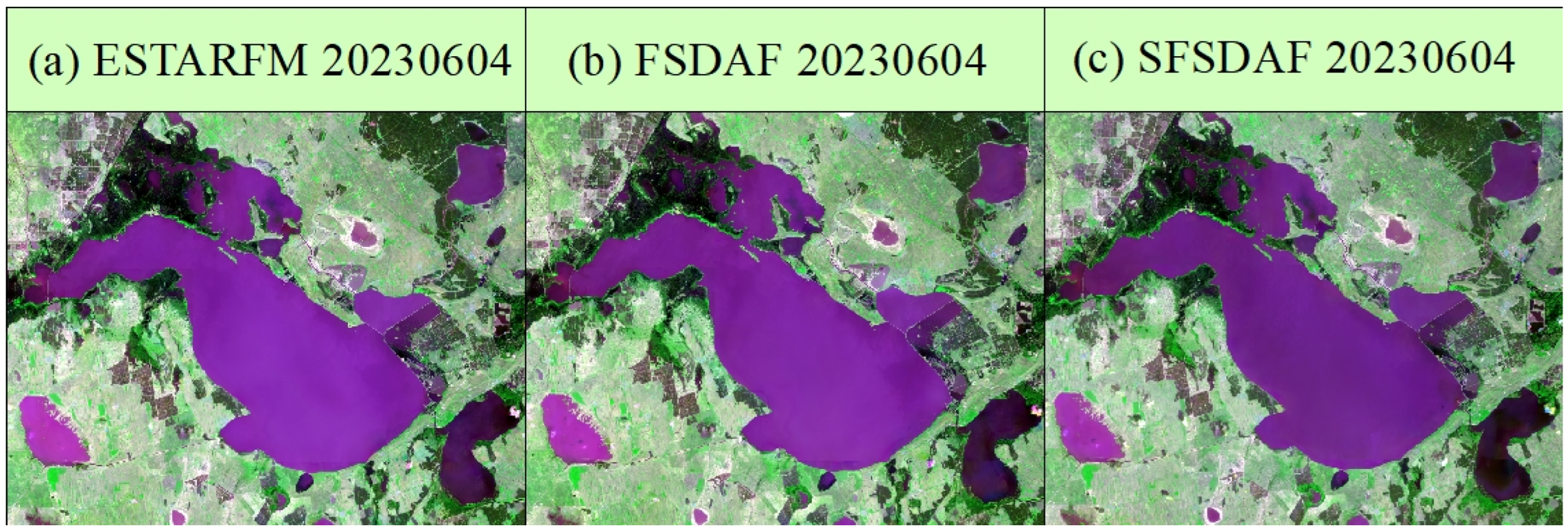

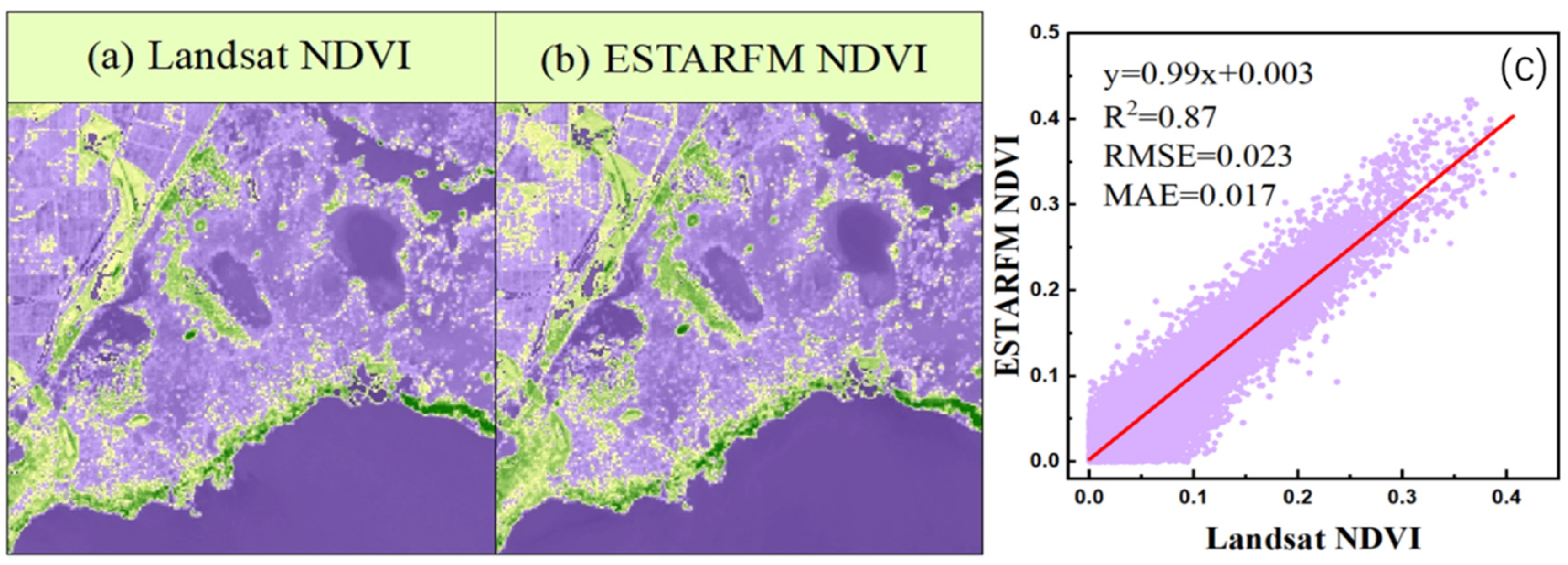

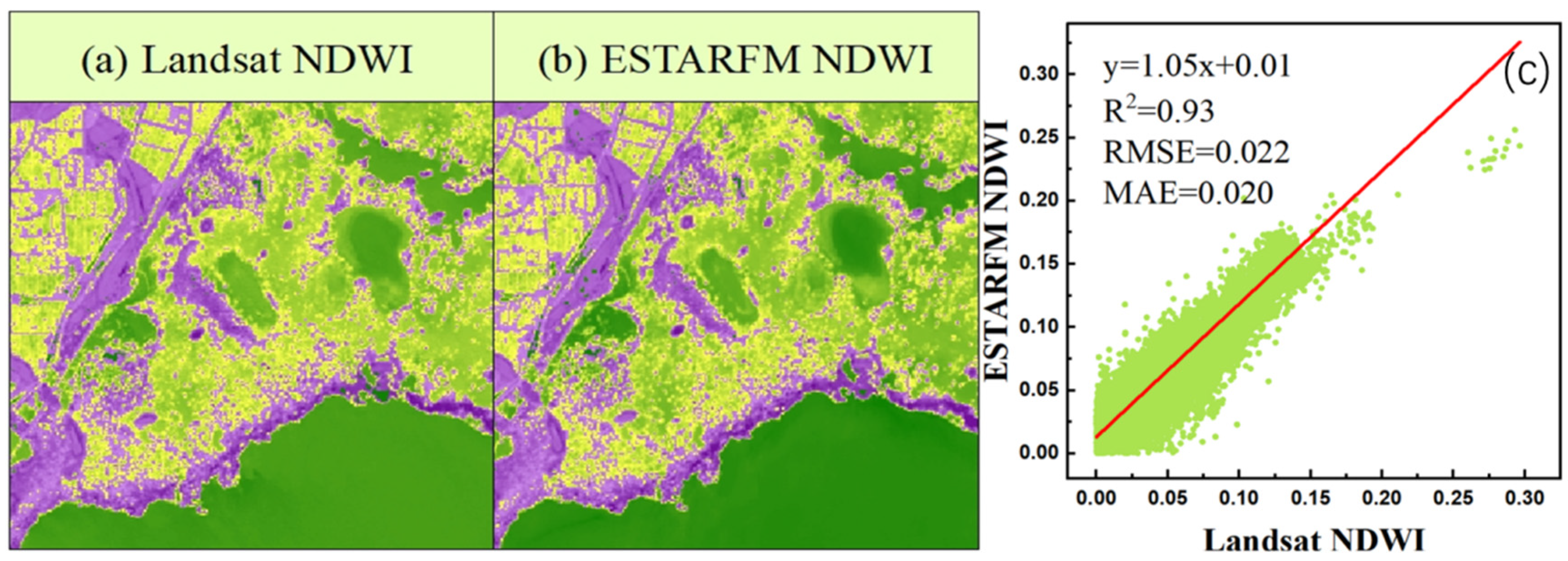

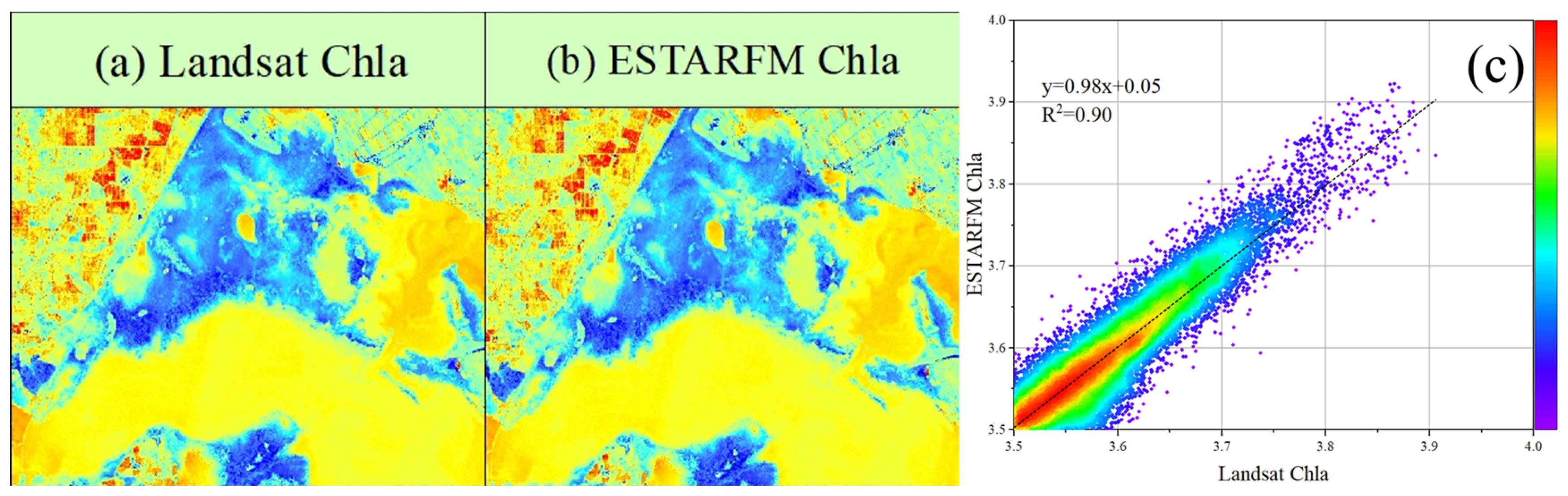

4.2. Evaluation of the Effectiveness of Post-Fusion Imaging Applications

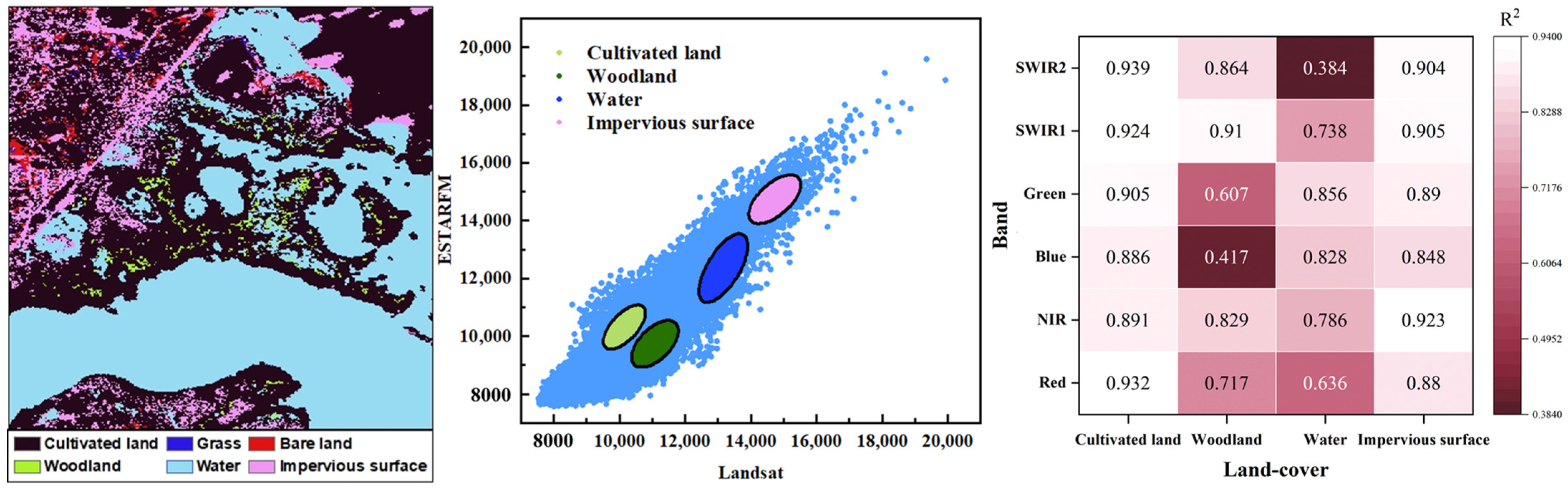

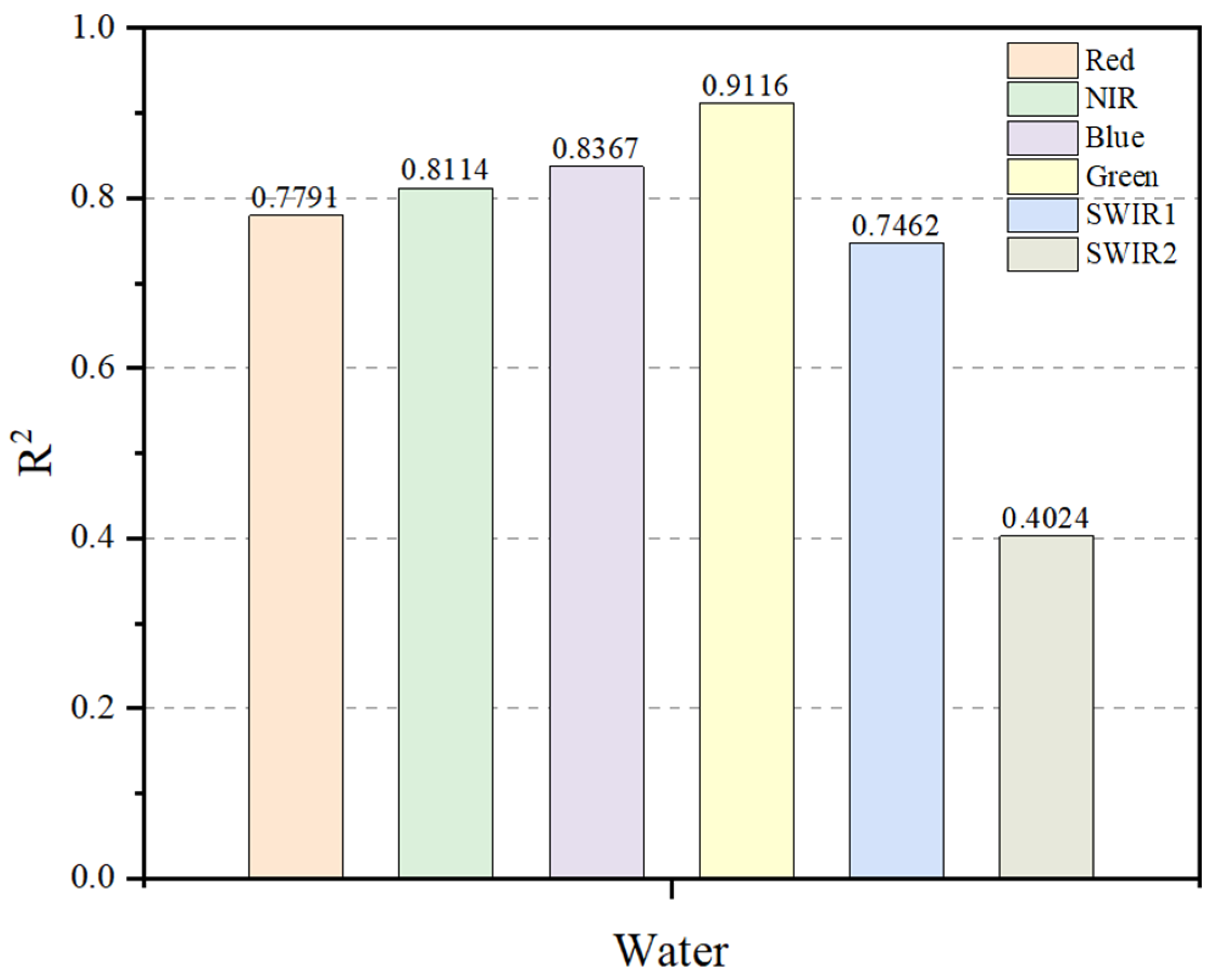

4.3. Evaluation of the Accuracy of Different Land-Cover Categories

5. Discussion

5.1. The Effect of Clouds on the Fusion Effect

5.2. Reflective Properties of Water and the Use of ESTARFM

5.3. Advantages and Limitations of ESTARFM

6. Conclusions

- ESTARFM achieves high-precision spectral fusion across the visible to thermal infrared bands, particularly in the near-infrared and shortwave infrared bands. This provides a reliable data basis for retrieving water parameters.

- Because ESTARFM fusion data preserve the high accuracy of NDVI and NDWI while enhancing spatial resolution, they clearly depict micro-variations in the vegetation–water transition zone. This supplies high-precision spatiotemporal observations for examining wetland ecological boundaries and is especially useful for tracking ecological responses to water-level changes.

- ESTARFM stably processes various types of surface data, efficiently generates water-quality monitoring data with high spatial and temporal resolution, and provides reliable data support for high-frequency monitoring of lake water quality.
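
The two indices named in the second conclusion can be illustrated with a minimal sketch. It assumes the standard definitions, NDVI = (NIR − Red)/(NIR + Red) and NDWI = (Green − NIR)/(Green + NIR). The function names and the three-pixel reflectance values are hypothetical and are not taken from the study.

```python
def normalized_difference(a, b):
    """Return (a - b) / (a + b) per pixel; None where the denominator is zero."""
    result = []
    for x, y in zip(a, b):
        denom = x + y
        result.append((x - y) / denom if denom != 0 else None)
    return result


def ndvi(nir, red):
    """Normalized difference vegetation index from NIR and red reflectance."""
    return normalized_difference(nir, red)


def ndwi(green, nir):
    """Normalized difference water index from green and NIR reflectance."""
    return normalized_difference(green, nir)


# Hypothetical surface reflectances for three pixels:
# dense vegetation, sparse shoreline cover, open water.
green = [0.08, 0.10, 0.06]
red = [0.05, 0.08, 0.04]
nir = [0.40, 0.12, 0.02]

ndvi_vals = ndvi(nir, red)
ndwi_vals = ndwi(green, nir)

for label, v, w in zip(["vegetation", "shoreline", "water"], ndvi_vals, ndwi_vals):
    print(f"{label:10s} NDVI={v:+.3f} NDWI={w:+.3f}")
```

In this toy example the vegetation pixel has positive NDVI and negative NDWI, and the water pixel shows the opposite signs, which is the contrast that makes the two indices useful for delineating a vegetation–water transition zone.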

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Image ID | Date | Image ID | Date |
|---|---|---|---|
| 1 | 5 May 2018 | 8 | 2 September 2021 |
| 2 | 21 May 2018 | 9 | 16 May 2022 |
| 3 | 6 June 2018 | 10 | 17 June 2022 |
| 4 | 9 August 2018 | 11 | 20 August 2022 |
| 5 | 10 September 2018 | 12 | 5 September 2022 |
| 6 | 24 May 2019 | 13 | 21 September 2022 |
| 7 | 13 May 2021 | 14 | 4 June 2023 |

| Band | Landsat | Bandwidth (µm) | MODIS | Bandwidth (µm) |
|---|---|---|---|---|
| Red | Band 1 | 0.630–0.680 | Band 1 | 0.620–0.670 |
| Near Infrared (NIR) | Band 2 | 0.845–0.885 | Band 2 | 0.841–0.876 |
| Blue | Band 3 | 0.450–0.515 | Band 3 | 0.459–0.479 |
| Green | Band 4 | 0.525–0.600 | Band 4 | 0.545–0.565 |
| Shortwave Infrared 1 (SWIR1) | Band 6 | 1.560–1.660 | Band 6 | 1.628–1.652 |
| Shortwave Infrared 2 (SWIR2) | Band 7 | 2.100–2.300 | Band 7 | 2.105–2.155 |
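
As a rough illustration of how closely the paired bands in this table correspond, the sketch below computes the share of the narrower band that lies inside the other sensor's band. The wavelength limits are copied from the table and assumed to be in micrometres. The `overlap_fraction` helper and the overlap measure itself are illustrative choices, not part of the ESTARFM procedure.

```python
# (low, high) wavelength limits copied from the band table, assumed to be in micrometres.
BAND_PAIRS = {
    "Red": ((0.630, 0.680), (0.620, 0.670)),
    "NIR": ((0.845, 0.885), (0.841, 0.876)),
    "Blue": ((0.450, 0.515), (0.459, 0.479)),
    "Green": ((0.525, 0.600), (0.545, 0.565)),
    "SWIR1": ((1.560, 1.660), (1.628, 1.652)),
    "SWIR2": ((2.100, 2.300), (2.105, 2.155)),
}


def overlap_fraction(a, b):
    """Share of the narrower interval that is covered by the intersection of a and b."""
    low = max(a[0], b[0])
    high = min(a[1], b[1])
    intersection = max(0.0, high - low)
    narrower = min(a[1] - a[0], b[1] - b[0])
    return intersection / narrower


# Map each band name to the overlap between its Landsat and MODIS ranges.
overlap = {
    name: overlap_fraction(landsat, modis)
    for name, (landsat, modis) in BAND_PAIRS.items()
}

for name, value in overlap.items():
    print(f"{name:6s} overlap of narrower band: {value:.2f}")
```

A value of 1 means the narrower band sits entirely within the wider one, as happens here for the blue, green, and shortwave infrared pairs.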

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Peng, C.; Liu, Y.; Chen, L.; Wu, Y.; Sun, J.; Sun, Y.; Zhang, G.; Zhang, Y.; Wang, Y.; Du, M.; Qi, P. An Accuracy Assessment of the ESTARFM Data-Fusion Model in Monitoring Lake Dynamics. Water 2025, 17, 2057. https://doi.org/10.3390/w17142057