Variational Quantum Regression Application in Modeling Monthly River Discharge

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Region and Data Series

2.2. Basic Concept of Variational Quantum Regressor and VQR Structure

- Feature encoding, which transforms the input data into quantum states;

- The variational quantum circuit (VQC) that uses parameterized quantum gates to perform quantum state transformations;

- Measurement and optimization, which extract output results via quantum measurement and optimize the circuit parameters using classical methods to achieve model convergence.

- Quantum feature encoding: classical input data must be transformed into quantum states for quantum computation. This encoding is typically performed using parameterized quantum gates (e.g., single-qubit rotation gates such as $R_y$ and ZZ-interaction gates). For an input $x$, the encoding can be represented as follows:

  $$|\psi(x)\rangle = U_{\phi}(x)\,|0\rangle,$$

  where $U_{\phi}(x)$ is the encoding circuit and $|0\rangle$ is the initial state. In multi-qubit systems, quantum entanglement (e.g., ZZ interaction) can be utilized to enhance feature representation.

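- Quantum processing: a VQC is applied to transform the encoded quantum state. The VQC serves as the core of the VQR and consists of parameterized quantum gates and entangling gates, akin to the hidden layers in classical neural networks. The VQC learns feature representations from the input by optimizing the trainable parameters $\theta$ of a layered unitary of the form [58]:

  $$U(\theta) = \prod_{l=1}^{L} \Big[ U_{\mathrm{ent}} \bigotimes_{i=1}^{n} R(\theta_{l,i}) \Big],$$

  where $R(\theta_{l,i})$ is a parameterized single-qubit rotation on qubit $i$ in layer $l$, $U_{\mathrm{ent}}$ is a layer of CNOT gates that introduces quantum entanglement, and the $L$ circuit layers enable the modeling of complex nonlinear mappings.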

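- Measurement and regression computation: since the results of quantum computation are stored in quantum states, quantum measurement is required to extract information. VQR applies a Pauli-Z measurement and uses the expectation value as the prediction:

  $$\hat{y}(x;\theta) = \langle \psi(x,\theta) |\, Z \,| \psi(x,\theta) \rangle,$$

  where $|\psi(x,\theta)\rangle$ is the state produced by the encoding and variational circuits. Due to the probabilistic nature of quantum measurements, shot-based sampling (repeated executions of the circuit) is typically employed to reduce measurement errors and improve result stability.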

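- Loss computation using a standard loss function, for example the mean squared error over the $n$ training samples:

  $$L(\theta) = \frac{1}{n}\sum_{i=1}^{n}\big(y_i - \hat{y}_i(\theta)\big)^2.$$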

- Parameter optimization: classical algorithms (e.g., L-BFGS-B [59,60], COBYLA [61,62], and Adam [63]) are used to optimize the parameters of the quantum circuit. For a gradient-based update, the rule is

  $$\theta_{t+1} = \theta_t - \eta\,\nabla_{\theta} L(\theta_t),$$

  where $L$ is the loss function and $\eta$ is the learning rate. The parameter $\theta$ is optimized through iterative training to minimize the regression error and obtain the final model.
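The five steps above can be sketched for the simplest case, a single qubit with one $R_y$ encoding gate and one $R_y$ variational gate. This is a minimal illustration in plain Python, not the authors' implementation: the helper names (`ry`, `apply_gate`, `predict`, `train`), the synthetic data, the learning rate, and the use of the parameter-shift rule with plain gradient descent are all assumptions made for the example. The expectation value is computed exactly from the state vector, without shot-based sampling.

```python
import math

def ry(angle):
    """2x2 matrix of the single-qubit RY(angle) rotation (real-valued)."""
    c, s = math.cos(angle / 2), math.sin(angle / 2)
    return [[c, -s], [s, c]]

def apply_gate(gate, state):
    """Multiply a 2x2 gate matrix by a 2-component state vector."""
    return [gate[0][0] * state[0] + gate[0][1] * state[1],
            gate[1][0] * state[0] + gate[1][1] * state[1]]

def predict(x, theta):
    """Encode x, apply the variational gate, and return the Pauli-Z expectation."""
    state = [1.0, 0.0]                      # initial state |0>
    state = apply_gate(ry(x), state)        # step 1: feature encoding
    state = apply_gate(ry(theta), state)    # step 2: variational circuit
    return state[0] ** 2 - state[1] ** 2    # step 3: <Z> = P(0) - P(1)

def mse_loss(theta, xs, ys):
    """Step 4: mean squared error between predictions and targets."""
    return sum((predict(x, theta) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def mse_gradient(theta, xs, ys):
    """Gradient of the loss; d<Z>/dtheta comes from the parameter-shift rule."""
    shift = math.pi / 2
    total = 0.0
    for x, y in zip(xs, ys):
        d_pred = (predict(x, theta + shift) - predict(x, theta - shift)) / 2
        total += 2 * (predict(x, theta) - y) * d_pred
    return total / len(xs)

def train(xs, ys, theta=0.0, eta=0.1, steps=200):
    """Step 5: gradient-descent update theta <- theta - eta * grad."""
    for _ in range(steps):
        theta -= eta * mse_gradient(theta, xs, ys)
    return theta

# Synthetic data generated by the same circuit with a known parameter.
true_theta = 0.7
xs = [i / 10 for i in range(-10, 11)]
ys = [predict(x, true_theta) for x in xs]

theta_hat = train(xs, ys)
print(f"recovered theta = {theta_hat:.4f}, loss = {mse_loss(theta_hat, xs, ys):.2e}")
```

Replacing the hand-written update loop with a quasi-Newton optimizer such as L-BFGS-B changes only step 5; the encoding, circuit, measurement, and loss stay the same.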

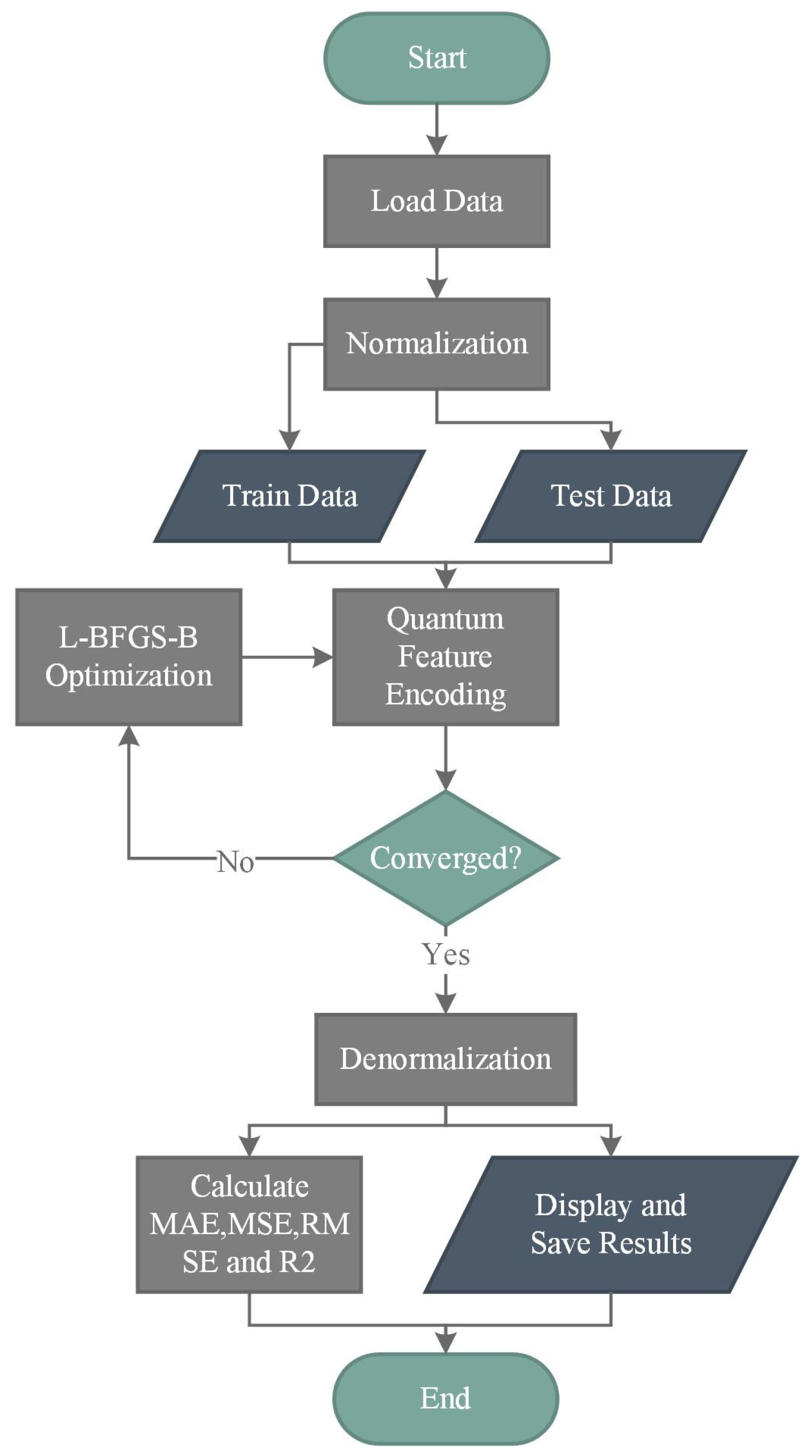

2.3. Modeling Stages

1. Load data and preprocessing:

   - Raw data was imported, checked for missing values and outliers, and normalized. No cleaning was necessary given the series origin and accuracy.

   - The normalized series were split into training and test sets.

2. Quantum feature encoding and circuit design:

   - Training data are encoded via a single-qubit feature map.

   - A variational ansatz circuit is constructed.

3. Optimization loop:

   - The L-BFGS-B optimizer iteratively updates circuit parameters.

   - A convergence check directs the loop until stopping criteria are met.

4. Post-processing and evaluation:

   - Optimized outputs are de-normalized.

   - Standard goodness-of-fit metrics (MAE, MSE, RMSE, R2) are calculated.

   - Results are displayed and saved for further analysis.
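The classical parts of these stages (normalization, splitting, de-normalization, and the goodness-of-fit metrics) can be sketched as follows. This is an illustrative outline only. The text does not state which normalization was used, so min-max scaling is an assumption, as are the function names, the synthetic discharge values, and the reading of MSE as the mean of squared errors. The model itself is replaced by a stand-in that shifts the true values by a constant.

```python
import math

def fit_min_max(values):
    """Return the (min, max) pair used for min-max scaling (assumed normalization)."""
    return min(values), max(values)

def normalize(values, lo, hi):
    """Map values to [0, 1]."""
    return [(v - lo) / (hi - lo) for v in values]

def denormalize(values, lo, hi):
    """Map normalized values back to the original units."""
    return [v * (hi - lo) + lo for v in values]

def chronological_split(series, n_train):
    """Split a series into training and test parts, keeping the time order."""
    return series[:n_train], series[n_train:]

def goodness_of_fit(observed, predicted):
    """Compute MAE, MSE, RMSE, and R2 for paired observed and predicted values."""
    n = len(observed)
    residuals = [o - p for o, p in zip(observed, predicted)]
    mae = sum(abs(r) for r in residuals) / n
    mse = sum(r * r for r in residuals) / n
    mean_obs = sum(observed) / n
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    r2 = 1.0 - (mse * n) / ss_tot
    return {"MAE": mae, "MSE": mse, "RMSE": math.sqrt(mse), "R2": r2}

# Synthetic monthly values, for illustration only (not the study data).
discharge = [12.0, 18.5, 30.2, 25.1, 14.3, 9.8, 11.4, 16.9]

# Stage 1: normalize the series, then split it.
lo, hi = fit_min_max(discharge)
series_norm = normalize(discharge, lo, hi)
train_norm, test_norm = chronological_split(series_norm, n_train=6)

# Stages 2-3 would train the model on train_norm; a constant offset stands in here.
pred_norm = [v + 0.05 for v in test_norm]

# Stage 4: de-normalize the outputs and evaluate in the original units.
_, test = chronological_split(discharge, n_train=6)
pred = denormalize(pred_norm, lo, hi)
scores = goodness_of_fit(test, pred)
print(scores)
```

Computing the metrics after de-normalization keeps MAE, MSE, and RMSE in the units of the original series, which makes them comparable across models trained on differently scaled subseries.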

3. Results

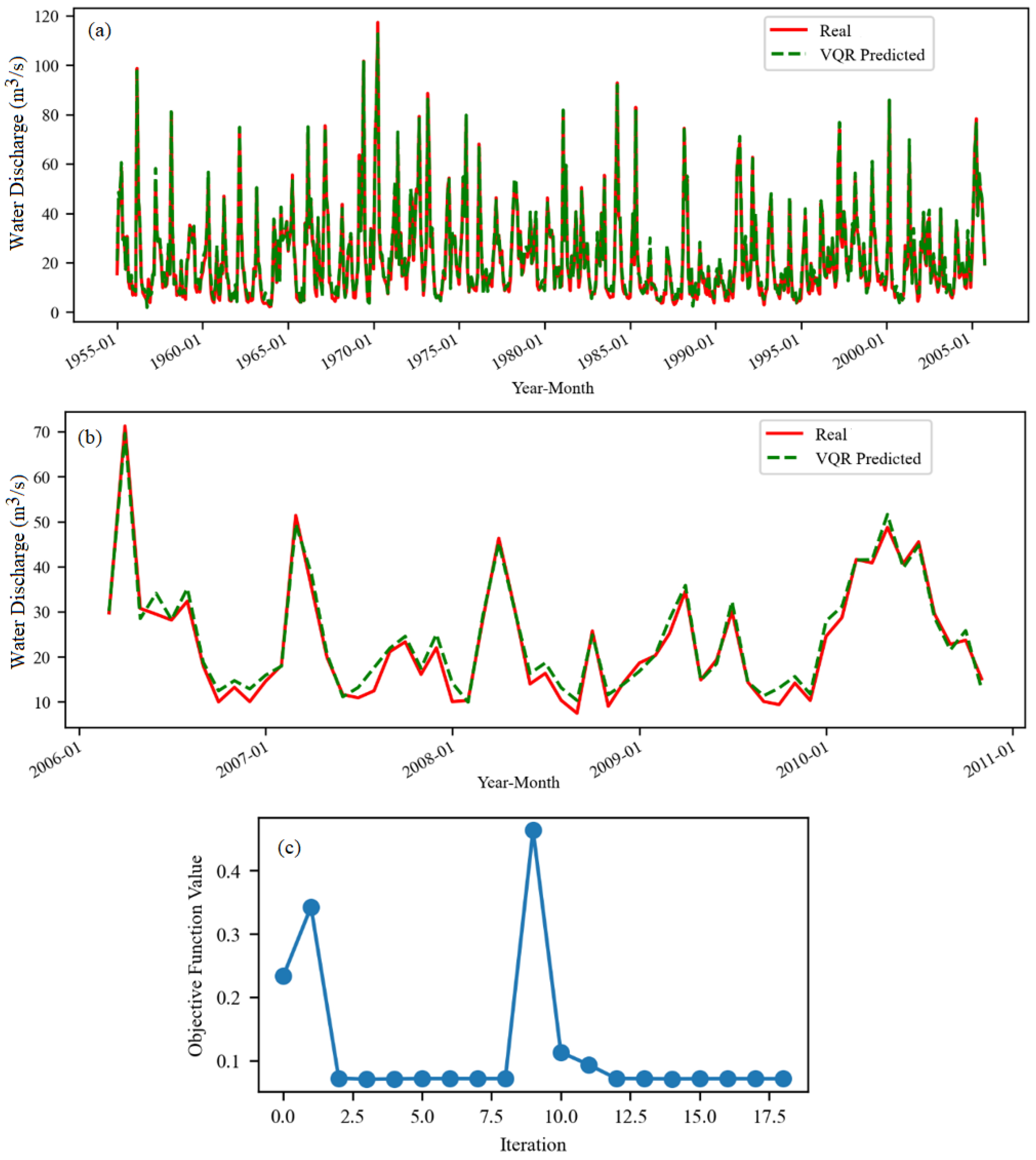

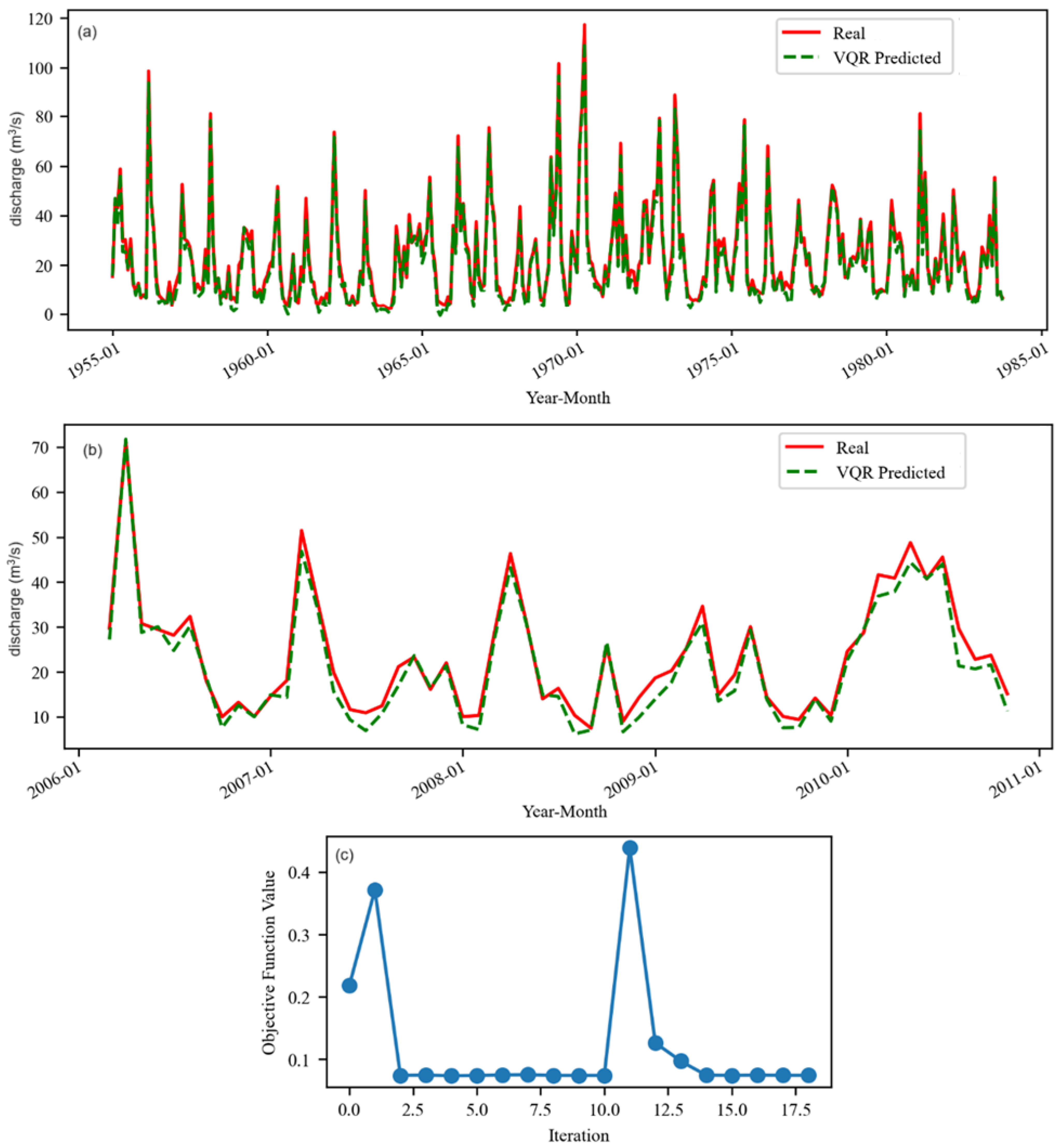

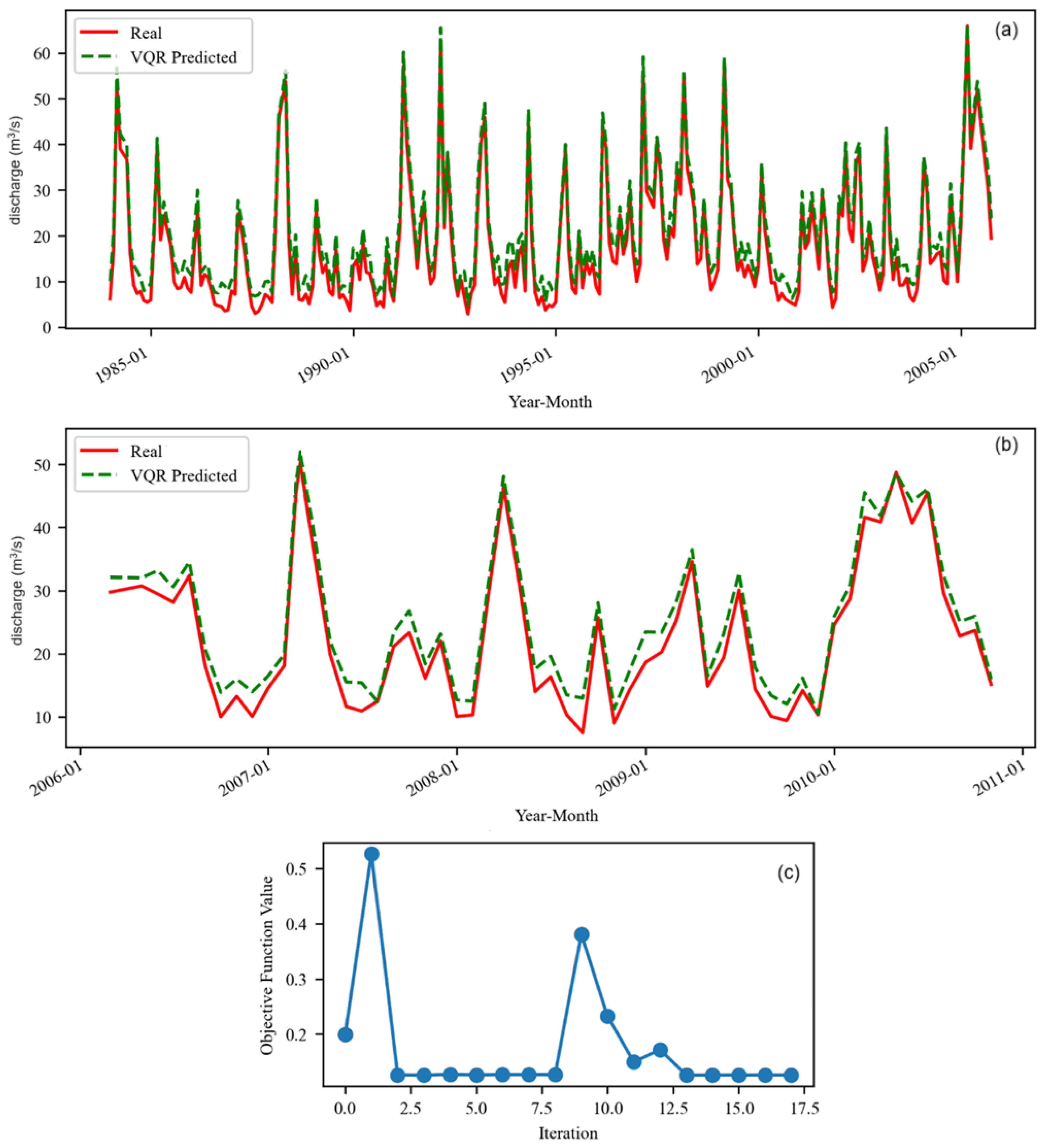

3.1. Models for Initial Datasets

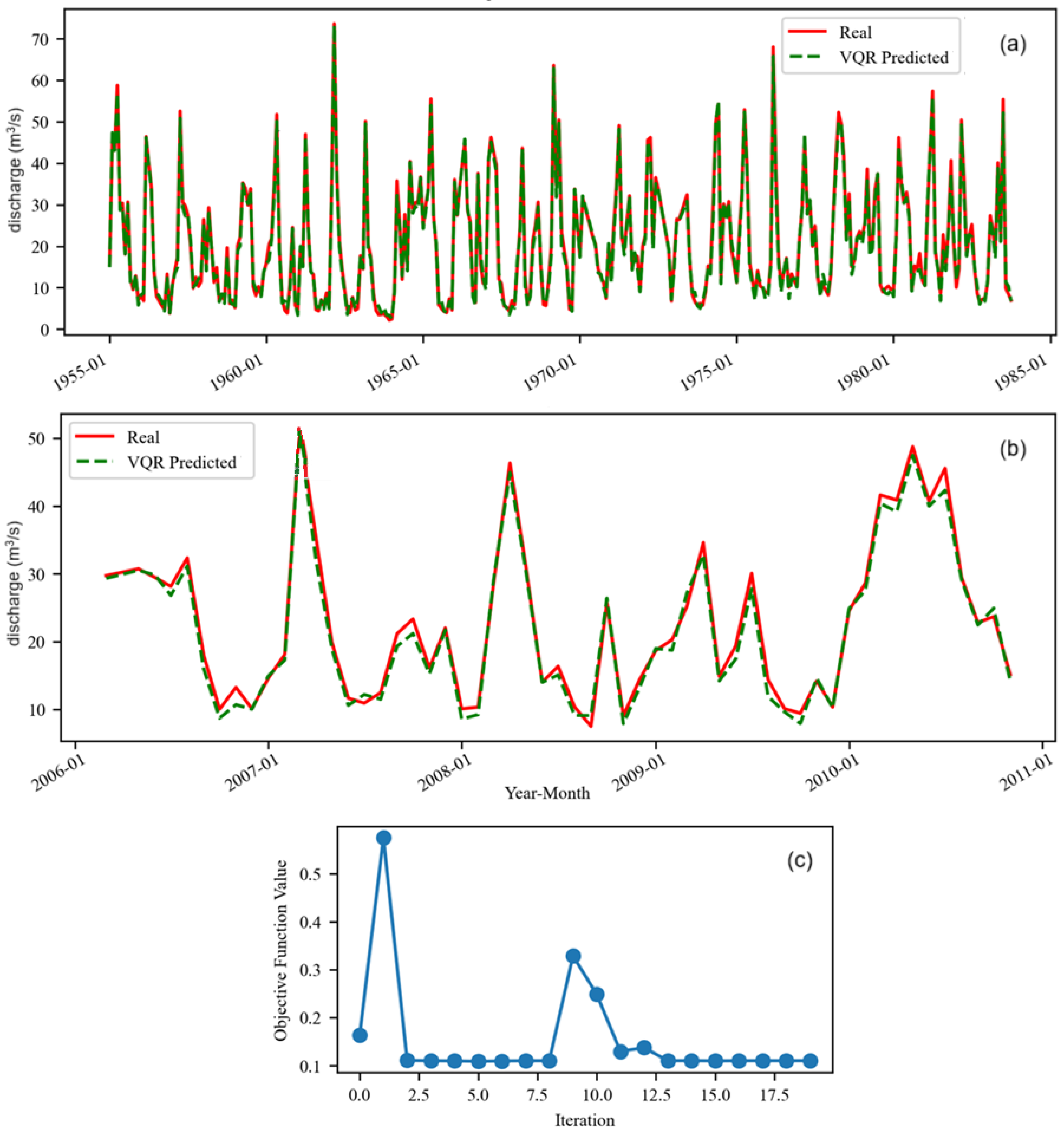

3.2. Models for the Series Without Aberrant Values

4. Discussion

4.1. Discussions on Modeling Results

- The data series comprises discharge values recorded over two distinct periods: before and after the dam’s construction. Prior to January 1984, the series features numerous high-peak floods that significantly elevated the monthly average discharge. Following January 1984, a marked reduction in both the frequency and intensity of floods was observed, leading to decreased variability in average discharge. Previous studies [51,52,53] have demonstrated that the subseries corresponding to the pre- and post-dam periods exhibit different statistical behaviors.

- In M2, both the training and test sets belong to the post-1984 period. As a result, the model was expected to generalize well, applying learned patterns effectively within the same hydrological regime.

- M1 was trained on data from the pre-1984 period and tested on data from the post-1984 period. Despite the distinct differences in flow patterns between the two sub-periods, it achieved a lower test MAE than M2 (2.1569 vs. 2.2678), with comparable MSE and R2. This suggests that the richer variability and more dynamic patterns in the pre-1984 data may have enabled the model to learn more robust or generalizable features, even when applied to a different hydrological regime. This finding contrasts with the results obtained with other kinds of neural networks and hybrid models [52,53,54].

- M was trained on a subseries that spans both the pre-1984 and 1984–2005 periods, allowing it to learn patterns from both the unregulated and regulated flow regimes. It was then applied to a post-2005 subseries, which exclusively reflects the regulated flow conditions. The richer temporal coverage and greater variability of its training data appear to have enabled M to generalize better to post-2005 conditions.

- All classical neural network models built on the same data series with the same training and test sets demonstrated better performance on more homogeneous time series. Therefore, it was expected that M2o would perform better than M2, but this was not the case.

- τ = number of iterations, set by maxiter in the optimizer

- B = mini-batch size (in this case it was equal to the data size = n)

- P = circuit depth (the number of gate layers that must be executed sequentially)

- Tc′ = time per circuit when using the StatevectorEstimator.

4.2. Assessment of Fitting Quality of Aberrant Values

- In M: MAE = 1.6851, MSE = 2.1199, R2 = 0.9814

- In M1: MAE = 4.0440, MSE = 4.2410, R2 = 0.9917

- In M2: MAE = 3.7306, MSE = 4.083, R2 = 0.9824.

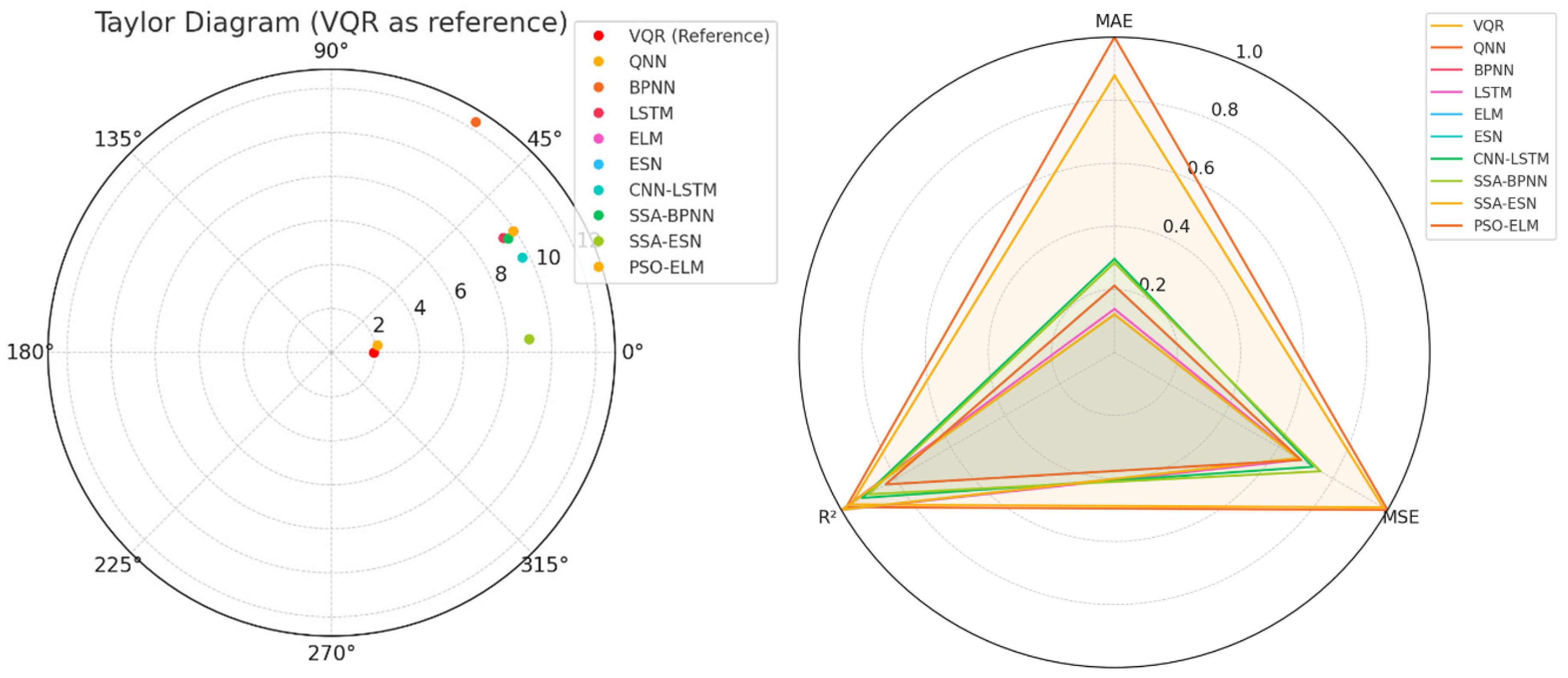

4.3. Comparisons of the VQR Models’ Performance with Those of Classical Artificial Neural Networks and Quantum Neural Networks

- When using QNN, the number of epochs to reach the objective function optimum was the lowest (9 epochs for M, 11 for Mo, 10 for M1 models, and 8 epochs for M2, M1o, and M2o models);

- When applying VQR, the smallest runtime was recorded on M1 (5.168 s) and M2o (4.3097 s) models, while running QNN resulted in shorter runtimes on M (10.8472 s), M2 (4.6218 s), Mo (12.1807 s), and M1o (5.5552 s) models.

- VQR achieved the best performance on the M model training set, while QNN produced the best results on the test set, with MAE = 1.1937, MSE = 2.3815, and R2 = 0.9858;

- VQR showed superior performance compared to QNN on M1, M2, Mo, and M2o, whereas QNN outperformed VQR on M and M1o.

- QNN performed better than ESN and SSA-ESN on the series without aberrant values in terms of MSE and MAE. On Mo and M2o, ESN was the best with respect to R2 on the test set. Moreover, the time necessary to run the algorithms was significantly lower for ESN and SSA-ESN.

- VQR, QNN, and SSA-ESN are the closest to the reference point, indicating the best performance.

- BPNN stands out with a high standard deviation and low correlation, showing its weak performance.

- ESN and SSA-ESN show high correlation and reasonable variance, performing very well.

- QNN, VQR, and SSA-ESN show strong performance across all metrics.

- BPNN performs the worst, especially on MSE and R2.

- LSTM and CNN-LSTM also perform well, particularly on R2.

4.4. Limitations of the Current Study

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AI | Artificial Intelligence |
| ANN | Artificial Neural Networks |
| BPNN | Backpropagation Neural Networks |
| CNN | Convolutional Neural Network |
| CNN-LSTM | Convolutional Neural Network–Long Short-Term Memory |
| ELM | Extreme Learning Machine |
| ESN | Echo State Network |
| h. b. | Hydrographic basin |
| LSTM | Long Short-Term Memory |
| MAE | Mean absolute error |
| ML | Machine learning |
| MSE | Mean squared error |
| MLP | Multilayer Perceptron |
| NLS | Nonlinear system |
| NN | Neural networks |
| PBM | Physics-based model |
| PSO-ELM | Particle Swarm Optimization with Extreme Learning Machines |
| QNN | Quantum neural network |
| R2 | Coefficient of determination |
| SSA-ESN | Sparrow Search Algorithm–Echo State Network |
| VQA | Variational quantum algorithm |
| VQC | Variational quantum circuits |
| VQE | Variational quantum eigensolver |
| VQR | Variational quantum regression |

References

1. Cristian, A.; Zuzeac, M.; Ciocan, G.; Iorga, G.; Antonescu, B. A thunderstorm climatology of Romania (1941–2022). Rom. Rep. Phys. 2024, 76, 710.

2. Popescu, N.C.; Bărbulescu, A. On the flash flood susceptibility and accessibility in the Vărbilău catchment (Romania). Rom. J. Phys. 2022, 67, 811.

3. Birsan, M.-V.; Nita, I.-A.; Amihăesei, V.-A. Influence of large-scale atmospheric circulation on Romanian snowpack duration. Rom. Rep. Phys. 2024, 76, 708.

4. Ene, A.; Moraru, D.I.; Pintilie, V.; Iticescu, C.; Georgescu, L.P. Metals and Natural Radioactivity Investigation of Danube River Water in the Lower Sector. Rom. J. Phys. 2024, 69, 802.

5. Bărbulescu, A.; Băutu, E. Mathematical models of climate evolution in Dobrudja. Theor. Appl. Clim. 2010, 100, 29–44.

6. Cui, H.; Ji, J.; Hürlimann, M.; Medina, V. Probabilistic and physically-based modelling of rainfall-induced landslide susceptibility using integrated GIS-FORM algorithm. Landslides 2024, 21, 1461–1481.

7. Sannino, G.; Bordoni, M.; Bittelli, M.; Meisina, C.; Tomei, F.; Valentino, R. Deterministic Physically Based Distributed Models for Rainfall-Induced Shallow Landslides. Geosciences 2024, 14, 255.

8. Saliba, Y.; Bărbulescu, A. A comparative evaluation of spatial interpolation techniques for maximum temperature series in the Montreal region, Canada. Rom. Rep. Phys. 2024, 76, 701.

9. Amisha, M.P.; Pathania, M.; Rathaur, V.K. Overview of artificial intelligence in medicine. J. Family Med. Prim Care 2019, 8, 2328–2331.

10. Bărbulescu, A.; Dumitriu, C.S.; Dragomir, F.-L. Detecting Aberrant Values and Their Influence on the Time Series Forecast. In Proceedings of the 2021 International Conference on Electrical, Computer, Communications and Mechatronics Engineering (ICECCME), Mauritius, 7–8 October 2021.

11. Bell, M. The Impact of AI and Generative Technologies on the Engineering Profession. Institution of Engineers Australia. 2025. Available online: https://www.engineersaustralia.org.au/sites/default/files/2025-01/impact-ai-generative-technologies-engineering-profession_0.pdf (accessed on 2 May 2025).

12. Berber, S.; Brückner, M.; Maurer, N.; Huwer, J. Artificial Intelligence in Chemistry Research—Implications for Teaching and Learning. J. Chem. Educ. 2025, 102, 1445–1456.

13. Dragomir, F.-L. Thinking patterns in decision-making in information systems. New Trends Psych. 2025, 7, 89–98.

14. Dragomir, F.-L. Information System for Macroprudential Policies. Acta Univ. Danubius Œconomica 2025, 21, 48–57.

15. Ni, L.; Wang, D.; Singh, V.P.; Wu, J.; Wang, Y.; Tao, Y.; Zhang, J. Streamflow and rainfall forecasting by two long short-term memory-based models. J. Hydrol. 2019, 583, 124296.

16. Xu, H.; Song, S.; Li, J.; Guo, T. Hybrid model for daily runoff interval predictions based on Bayesian inference. Hydrol. Sci. J. 2022, 68, 62–75.

17. Bărbulescu, A.; Mohammed, N. Study of the river discharge alteration. Water 2024, 16, 808.

18. Liang, W.; Chen, Y.; Fang, G.; Kaldybayev, A. Machine learning method is an alternative for the hydrological model in an alpine catchment in the Tianshan region, Central Asia. J. Hydrol. Reg. Stud. 2023, 49, 101492.

19. Mosaffa, H.; Sadeghi, M.; Mallakpour, I.; Jahromi, M.N.; Pourghasemi, H.R. Chapter 43—Application of machine learning algorithms in hydrology. In Computers in Earth and Environmental Sciences; Pourghasemi, H.R., Ed.; Elsevier: Amsterdam, The Netherlands, 2022; pp. 585–591.

20. Rozos, E. Assessing Hydrological Simulations with Machine Learning and Statistical Models. Hydrology 2023, 10, 49.

21. Mushtaq, H.; Akhtar, T.; Hashmi, M.Z.u.R.; Masood, A. Hydrologic interpretation of machine learning models for 10-daily streamflow simulation in climate sensitive upper Indus catchments. Theor. Appl. Climatol. 2024, 155, 5525–5542.

22. Carleo, G.; Cirac, J.I.; Gull, S.; Martin-Delgado, M.A.; Troyer, M. Solving the quantum many-body problem with artificial neural networks. Science 2017, 355, 602–606.

23. McClean, J.R.; Romero, J.; Babbush, R.; Aspuru-Guzik, A. The theory of variational hybrid quantum—Classical algorithms. New J. Phys. 2016, 18, 023023.

24. Kölle, M.; Feist, A.; Stein, J.; Wölckert, S.; Linnhoff-Popien, C. Evaluating Parameter-Based Training Performance of Neural Networks and Variational Quantum Circuits. arXiv 2025.

25. Schuld, M.; Killoran, N. Quantum machine learning in feature Hilbert spaces. Phys. Rev. Lett. 2019, 122, 040504.

26. Schuld, M.; Bocharov, A.; Svore, K.M.; Wiebe, N. Circuit-centric quantum classifiers. Phys. Rev. A 2020, 101, 032308.

27. Mitarai, K.; Negoro, M.; Kitagawa, M.; Fujii, K. Quantum circuit learning. Phys. Rev. A 2018, 98, 032309.

28. Du, Y.; Hsieh, M.H.; Liu, T.; Tao, D. Expressive power of parametrized quantum circuits. Phys. Rev. Res. 2020, 2, 033125.

29. Preskill, J. Quantum computing in the NISQ era and beyond. Quantum 2018, 2, 79.

30. Zhou, J. Quantum Finance: Exploring the Implications of Quantum Computing on Financial Models. Comput. Econ. 2025.

31. Ullah, U.; Garcia-Zapirain, B. Quantum Machine Learning Revolution in Healthcare: A Systematic Review of Emerging Perspectives and Applications. IEEE Access 2024, 12, 11423–11450.

32. Batra, K.; Zorn, K.M.; Foil, D.H.; Minerali, E.; Gawriljuk, V.O.; Lane, T.R.; Ekins, S. Quantum Machine Learning Algorithms for Drug Discovery Applications. J. Chem. Inf. Model. 2021, 61, 2641–2647.

33. Ajagekar, A.; You, F. Molecular design with automated quantum computing-based deep learning and optimization. npj Comput Mater 2023, 9, 143.

34. Tao, Y.; Zeng, X.; Fan, Y.; Liu, J.; Li, Z.; Yang, J. Exploring Accurate Potential Energy Surfaces via Integrating Variational Quantum Eigensolver with Machine Learning. J. Phys. Chem. Lett. 2022, 13, 6420–6426.

35. Hailu, N.A. Predicting Materials’ with Machine Learning. Available online: https://trepo.tuni.fi/bitstream/handle/10024/119253/HailuNahomAymere.pdf?sequence=2 (accessed on 1 May 2025).

36. Blanco, C.; Santos-Olmo, A.; Sánchez, L.E. QISS: Quantum-Enhanced Sustainable Security Incident Handling in the IoT. Information 2024, 15, 181.

37. Mohamed, Y.; Elghadban, A.; Lei, H.I.; Shih, A.A.; Lee, P.H. Quantum machine learning regression optimisation for full-scale sewage sludge anaerobic digestion. npj Clean Water 2025, 8, 17.

38. Yan, F.; Huang, H.; Pedrycz, W.; Hirota, K. Review of medical image processing using quantum-enabled algorithms. Artif. Intell. Rev. 2024, 57, 300.

39. Faccio, D. The future of quantum technologies for brain imaging. PLoS Biol. 2024, 22, e3002824.

40. Nammouchi, A.; Kassler, A.; Theocharis, A. Quantum Machine Learning in Climate Change and Sustainability: A Short Review. Proc. AAAI Symp. Ser. 2024, 2, 107–114.

41. Berger, C.; Di Paolo, A.; Forrest, T.; Hadfield, S.; Sawaya, N.; Stęchły, M.; Thibault, K. Quantum Technologies for Climate Change: Preliminary Assessment. Available online: https://arxiv.org/pdf/2107.05362 (accessed on 2 May 2025).

42. Ahmad, N.; Jas, S. Quantum-inspired neural networks for time-series air pollution prediction and control of the most polluted region in the world. Quantum Mach. Intell. 2025, 7, 9.

43. Grzesiak, M.; Thakkar, P. Flood Prediction using Classical and Quantum Machine Learning Models. Int. J. Comp. Sci. Mob. Appl. 2024, 12, 84–98.

44. Dutta, S.; Basarab, A.; Kouamé, D.; Georgeot, B. Quantum Algorithm for Signal Denoising. IEEE Signal Proc. Lett. 2024, 31, 31–156.

45. Ujvari, I. Geography of Romanian Waters; Editura Științifică: București, Romania, 1972. (In Romanian)

46. Grecu, F.; Benabbas, C.; Teodor, M.; Yakhlefoune, M.; Săndulache, I.; Manchar, N.; Kharchi, T.E.; Vișan, G. Risk of Dynamics of the River Stream in Tectonic Areas. Case studies: Curvature Carpathian-Romania and Maghrebian Chain—Algeria. Forum Geogr. 2021, XX, 5–22.

47. Costache, R. The identification of suitable areas for afforestation in order to reduce the potential for surface runoff in the upper and middle sectors of Buzău catchment. Cinq Cont. 2015, 5, 93–103.

48. Popa, M.C.; Peptenatu, D.; Drăghici, C.C.; Diaconu, D.C. Flood Hazard Mapping Using the Flood and Flash-Flood Potential Index in the Buzău River Catchment, Romania. Water 2019, 11, 2116.

49. Zumpano, V.; Hussin, H.; Reichenbach, P.; Bălteanu, D.; Micu, M.; Sterlacchini, S. A landslide susceptibility analysis for Buzau County, Romania. Rev. Roum. Géogr./Rom. Journ. Geogr. 2014, 58, 9–16.

50. Posea, G.; Popescu, N.; Ielenicz, M. The Relief of Romania; Editura Științifică: București, Romania, 1974. (In Romanian)

51. Posea, G. Geomorphology of Romania; Editura Fundaţiei România de Mâine: Bucureşti, Romania, 2005. (In Romanian)

52. Bărbulescu, A.; Zhen, L. Forecasting the River Water Discharge by Artificial Intelligence Methods. Water 2024, 16, 1248.

53. Zhen, L.; Bărbulescu, A. Comparative Analysis of Convolutional Neural Network-Long Short-Term Memory, Sparrow Search Algorithm-Backpropagation Neural Network, and Particle Swarm Optimization-Extreme Learning Machine for the Water Discharge of the Buzău River, Romania. Water 2024, 16, 289.

54. Zhen, L.; Bărbulescu, A. Echo State Network and Sparrow Search: Echo State Network for Modeling the Monthly River Discharge of the Biggest River in Buzău County, Romania. Water 2024, 16, 2916.

55. Costache, R.; Pal, S.C.; Pande, C.B.; Islam, A.R.M.T.; Alshehri, F.; Abdo, H.G. Flood mapping based on novel ensemble modeling involving the deep learning, Harris Hawk optimization algorithm and stacking based machine learning. Appl. Water Sci. 2024, 14, 78.

56. Gherghe, A.; Dobre, R.R.; Apotrosoaei, V.; Briceag, A.; Melinte-Dobrinescu, M. The Bâsca Rozilei river drainage model, Romanian Carpathian belt. Geo-Eco-Marina 2021, 27, 37–54.

57. Grecu, F.; Zaharia, L.; Ioana-Toroimac, G.; Armas, I. Floods and Flash-Floods Related to River Channel Dynamics. In Landform Dynamics and Evolution in Romania; Radoane, M., Vespremeanu-Stroe, A., Eds.; Springer Geography; Springer: Cham, Switzerland, 2017; pp. 821–844.

58. Gong, C.; Guan, W.; Gani, A.; Han, Q. Network attack detection scheme based on variational quantum neural network. J. Supercomput. 2022, 78, 16876–16897.

59. Chen, H.; Wu, H.-C.; Chan, S.-C.; Lam, W.-H. A Stochastic Quasi-Newton Method for Large-Scale Nonconvex Optimization with Applications. IEEE T. Neur. Net. Lear. 2020, 31, 4776–4790.

60. L_BFGS_B. Available online: https://qiskit-community.github.io/qiskit-algorithms/stubs/qiskit_algorithms.optimizers.L_BFGS_B.html (accessed on 3 May 2025).

61. Powell, M.J.D. A direct search optimization method that models the objective and constraint functions by linear interpolation. In Advances in Optimization and Numerical Analysis; Gomez, S.S., Hennart, J.-P., Eds.; Kluwer Academic: Dordrecht, The Netherlands, 1994; pp. 51–67.

62. COBYLA. Available online: https://qiskit-community.github.io/qiskit-algorithms/stubs/qiskit_algorithms.optimizers.COBYLA.html (accessed on 15 February 2025).

63. Kingma, D.P.; Ba, J.L. Adam: A method for stochastic optimization. arXiv 2017.

64. Liu, Z.; Bărbulescu, A. Quantum Neural Networks Approach for Water Discharge Forecast. Appl. Sci. 2025, 15, 4119.

| Hyperparameter | Description |
|---|---|
| Number of qubits | 1 |
| Quantum register | 1 qubit |
| Classical register | 0 bits |
| Feature map | QuantumCircuit(1, name="fm"), with an ry(param_x) rotation on qubit 0 |
| Variational circuit (ansatz) | QuantumCircuit(1, name="vf"), with an ry(param_y) rotation on qubit 0 |
| Optimizer | L_BFGS_B(maxiter=50) |
| Callback | callback_graph, used to record the objective function value at each training iteration |
| Estimator | EstimatorQNN(circuit=qc, estimator=estimator): StatevectorEstimator |
| Loss function | MSE |
| Training call | vqr.fit(X_norm, y_norm), fitting the model on normalized inputs and targets |
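For the single-qubit configuration listed above, the model output has a simple closed form, which may help in interpreting the results. The derivation below is a sketch under two assumptions: the feature map and the ansatz are applied in sequence to the initial state $|0\rangle$, and the measured observable is Pauli-Z, as described in Section 2.2. Here $x$ and $\theta$ stand for param_x and param_y, and $R_y(a) = e^{-i a Y/2}$.

$$
\begin{aligned}
R_y(\theta)\,R_y(x) &= R_y(x+\theta), \\
R_y(x+\theta)\,|0\rangle &= \cos\frac{x+\theta}{2}\,|0\rangle + \sin\frac{x+\theta}{2}\,|1\rangle, \\
\hat{y}(x;\theta) = \langle Z \rangle &= \cos^2\frac{x+\theta}{2} - \sin^2\frac{x+\theta}{2} = \cos(x+\theta).
\end{aligned}
$$

Under these assumptions the circuit has one trainable parameter and its raw output lies in $[-1, 1]$, so fitting amounts to choosing the phase shift $\theta$ of a cosine of the normalized input. This is consistent with the table's note that both inputs and targets are normalized before training.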

| Model | Set | MAE | MSE | R2 |
|---|---|---|---|---|
| M | Training | 1.5158 | 3.6647 | 0.9886 |
| M | Test | 1.7197 | 4.335 | 0.9742 |
| M1 | Training | 2.3706 | 8.3552 | 0.9767 |
| M1 | Test | 2.1569 | 7.3694 | 0.9561 |
| M2 | Training | 2.4182 | 7.6084 | 0.9728 |
| M2 | Test | 2.2678 | 6.8897 | 0.9589 |

| Model | Set | MAE | MSE | R2 |
|---|---|---|---|---|
| Mo | Training | 0.9559 | 1.4420 | 0.9919 |
| Mo | Test | 1.0103 | 1.5313 | 0.9881 |
| M1o | Training | 1.0585 | 1.8321 | 0.9906 |
| M1o | Test | 1.1503 | 1.9007 | 0.9853 |
| M2o | Training | 2.8241 | 9.0615 | 0.9454 |
| M2o | Test | 2.5055 | 7.5413 | 0.9416 |

| Model | Time (s) | Epochs | Model | Time (s) | Epochs |
|---|---|---|---|---|---|
| M | 21.8606 | 30 | Mo | 14.3117 | 25 |
| M1 | 5.168 | 18 | M1o | 6.8727 | 19 |
| M2 | 4.6312 | 17 | M2o | 4.3097 | 17 |

| Model | Set | MAE (Model) | MSE (Model) | R2 (Model) |
|---|---|---|---|---|
| M | Training | 5.7250 (SSA-BP) | 80.5765 (ESN) | 0.9976 (SSA-ESN) |
| M | Test | 4.2351 (CNN-LSTM) | 32.4993 (SSA-BP) | 0.9983 (LSTM) |
| M1 | Training | 6.5177 (CNN-LSTM) | 102.9393 (ESN) | 0.9899 (LSTM) |
| M1 | Test | 4.4784 (CNN-LSTM) | 39.7982 (CNN-LSTM) | 0.9917 (LSTM) |
| M2 | Training | 4.7433 (CNN-LSTM) | 57.3421 (SSA-ESN) | 0.9992 (LSTM) |
| M2 | Test | 3.5245 (CNN-LSTM) | 29.8323 (CNN-LSTM) | 0.9970 (LSTM) |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhen, L.; Bărbulescu, A. Variational Quantum Regression Application in Modeling Monthly River Discharge. Water 2025, 17, 1836. https://doi.org/10.3390/w17121836
