1. Introduction

The efficient management of water distribution systems (WDSs) is a cornerstone of urban infrastructure, fundamental to public health, economic stability, and environmental sustainability. However, the management of these vast and complex networks poses significant challenges, particularly in leak detection and network partitioning [

1]. Hydraulic modeling has emerged as a pivotal tool, simulating the dynamic behaviors of WDSs to predict, analyze, and optimize their performance [

2]. Developing hydraulic models of WDSs is a vital step in the information era, providing comprehensive insights into the components of nodes and links. The nodes include junctions, reservoirs, towers, and tanks. Hydraulic models have traditionally provided insights into network flow dynamics, aiding in the prediction of system behavior under various operational scenarios [

3,

4]. However, these models could not analyze leak detection, and network partitioning is often hampered by the complexity of WDSs [

5]. There is a growing recognition of the need to enhance these models with more sophisticated analytical methods.

Partitioning a WDS into district-metered areas (DMAs) has become an important tool for water utilities to improve leakage control, water quality monitoring, and valve system operability [

6,

7]. DMAs are created by closing selected boundary pipes to separate the network into individual pressure zones that can be continuously monitored. Developing optimal DMA boundaries is a complex optimization problem that considers competing objectives, such as minimizing the number of boundary pipes while maintaining adequate system pressures and water quality [

8,

9]. A variety of methods have been proposed for the DMA partitioning problem. Perelman presented a clustering method based on network flow directions to identify DMAs [

10]. Zheng et al. decomposed a network graph into subnetworks and then used an evolutionary algorithm to optimize boundary pipes [

6]. More recently, community detection techniques from complex network analysis have shown promise for finding inherent clusters in water distribution topologies [

11,

12]. Community detection, for instance, uses modularity to evaluate the effectiveness of division between network pressure differences using graph theory. Ciaponi et al. introduced a practical DMA design method combining modularity containing minimum pressure and pipe size constraints [

13]. They applied the Tabu search to determine near-optimal boundaries after partitioning a WDS using community detection. This hybrid approach allows leveraging the strengths of both graph partitioning techniques and multi-objective optimizations, which produced a high-quality solution meeting operational requirements in a case study. Perelman et al. worked on detecting leaks and ensuring the integrity of water networks in transient modeling, which leverages the K-nearest neighbors modularity for classifying transient pressure data parallels. These contribute to the efficient and accurate identification of leakages, reducing water loss and enhancing the efficiency of water distribution systems [

14]. Zhang et al. developed an integrated framework using multiscale community detection and multi-objective optimization for water network partitioning, which introduced random walk-based modularity to overcome the limitations of traditional modularity metrics in identifying partitions [

15]. The extended modularity integrates nodal pressure information to partition the network according to both topology and hydraulic conditions. Then, boundaries are further optimized by minimizing the number of boundary links and maximizing pressure uniformity using the BORG evolutionary algorithm. A real WDS demonstrated that their approach can effectively decompose the network into zones with balanced pressures. While these methods provide an effective integrated approach to WDS partitioning, they do have some limitations. Specifically, pressure sensitivities are used to partition, but the spatial status of nodes—their locations and connectivity—which should always be accessible or reliable, are not simultaneously considered [

16].

Recent advances in network embedding and deep learning provide alternative data-driven techniques to address these weaknesses. Network embedding methods such as Deepwalk, Graph Sample and AggreGatE (GraphSAGE), Node to Vector (N2V), and large-scale information network embedding (LINE) [

17,

18] can encode topological and attribute information from massive networks in a low-dimensional representation that preserves structural relationships. Deep learning graph partitioning models can then detect patterns and cluster these embeddings to partition the network in a scalable unsupervised fashion without relying on hydraulic simulations [

18,

19,

20]. Specifically, LINE explicitly captures first-order and second-order proximities between nodes in the network through optimized objective functions. The resulting node embeddings summarize not only node attributes but also connectivity patterns and community structure. Then, some cluster models such as k-means could automatically learn features from the graph embeddings to group nodes into clusters for pressure or water quality detection objectives [

21,

22]. In the cluster models, network nodes are grouped in a partition based on certain properties or behaviors. In the LINE model, nodes within the same partition have similar embeddings with each other compared to nodes in a different partition, which is useful for understanding the structure and hydraulic dynamics of networks.

After partitioning, effective leakage detection is critical for water utilities to reduce non-revenue water loss and ensure adequate water supply. However, locating pipe leaks in complex pipe networks poses significant challenges. Conventional acoustic sounding and logging surveys are time-consuming and lack efficiency at the city-wide system level due to their reliance on labor-intensive procedures. Further, acoustic methods may not always accurately detect issues in complex network structures, requiring multiple surveys and interpretations. Logging survey methods may require massive leakage data as references that are scarce and difficult to obtain. Data-driven approaches using hydraulic models have shown promise for leakage detection. For example, Wu et al. developed a pressure-dependent leakage detection (PDLD) methodology integrating leak simulation within hydraulic model calibration [

23]. However, the methodology’s performance suffers from computational complexity when applied to multiple partitioning networks, which have exponentially expanding solution search spaces, though it can successfully identify leakage. To enhance the scalability of calibration methods, Zhang et al. proposed a leakage zone identification framework using multiclass support vector machines (SVM) [

24]. The approach divides the network into zones using k-means clustering. Leakage simulation data train the SVM classifier model to predict leak events from nodal pressure sensitivity, which demonstrated effectiveness in a real case study. However, the method has some limitations. Manual intervention is required to select the optimal hyperparameters of the model and to re-train it when there are some changes in the network topology. Inaccuracy may arise in pressure-sensitivity-based leak detection if the actual pressure readings are in a state of instability over a period.

Deep learning provides an intriguing technology pathway to overcome these challenges through highly flexible neural network architectures. Deep learning includes deep brief networks (DBNs), convolutional neural networks (CNNs), and recurrent neural networks (RNNs), which have been widely applied in numerous areas. Deep neural networks can learn abstract representations and patterns in massive, high-dimensional datasets like sensor measurements in complex urban environments [

25]. Deep learning leakage detection frameworks can capitalize on physics knowledge while adapting models to operational data distributions. For instance, Guo et al. developed a vision-based CNN for image recognition of leak acoustic signals [

26]. Basnet et al. explored the efficacy of supervised machine learning models, specifically multilayer perceptron (MLP) and CNN for leak detection in each node of WDSs. They emphasized the models’ capability to address the complexities inherent in real-world leak characteristics, which marks a significant advancement in recognizing leaks without the need for leak data collection through labor-intensive methods [

27]. However, topological networks often comprise hundreds or even thousands of nodes, making it impractical to determine the pressure states of all of them. This limitation can diminish the accuracy of leak detection. To address this issue, it is advisable to focus on detecting specific leakage zones that contain a subset of nodes. Concentrating on such integrated frameworks provides a promising direction for efficient, robust, and scalable leak detection across entire WDSs.

Therefore, in this study, we propose a partition and leak detection method with a deep learning framework using embedding and pressure sensitivity. Our contributions include the following: (1) a three-order embedding method is presented based on LINE, (2) a WDS partition method is presented with integrated k-means clustering and graph embedding, and (3) a deep learning framework is applied to analyze pressure sensitivity for leakage detection. This model’s novelties include the following: (1) the ability to locate leaks in a specific leakage partition containing a subset of nodes; (2) generating a leak dataset by increasing water demands, which obviates the need for manual data collection; (3) considering pressure sensitivity and topology simultaneously, allowing for more accurate identification of leak locations.

The rest of this paper is organized as follows:

Section 2 illustrates the methodology of the partition and leak detection model.

Section 3 focuses on case studies for this method, and compares the results with those of another model. We present the advantages and weaknesses of this method in

Section 4. Finally, in

Section 5, the conclusions are drawn.

2. Methods

2.1. Pressure Sensitivity Matrix

Pressure sensitivity analysis is an essential component, primarily focusing on understanding how changes in pressure affect water flow and network stability. Pressure sensitivity could be expressed as Equation (1)

where

is the absolute difference in values of pressure between abnormal and normal at node

i, with node

j regarded as a leakage point;

is abnormal pressure, as node

j is regarded as a leakage point;

is normal pressure.

Leakage can cause sudden changes in water demand and pressure at the nodes of a pipe network, especially in the case of pipe bursts. In the pipe network topology model, the occurrence of leakage can be simulated by increasing the leakage demand. To obtain the pressure sensitivity of each node, an extended period simulation (EPS) is conducted. Firstly, one node is assigned a leakage demand

. Subsequently, the hydraulic model is executed to compute pressure variations at both normal and abnormal conditions in all nodes to simulate the leakage event using Equation (1). Finally, an N × N pressure sensitivity matrix can be obtained, as expressed by Equation (2).

where

is the number of nodes in a WDS.

Due to the great variation in water base demands across various nodes, the pressure sensitivity can differ by orders of magnitude [

28]. Thus, standardization should be implemented. The average values of the pressure differences are set to zero, and the standard deviation is scaled to one after applying the standardization process, which can be expressed as Equation (3) [

29].

where

is the standardization pressure sensitivity of node

i, as the leakage appeared in node

j;

is the average value of pressure sensitivity, as node

j is regarded as a leakage node, which is expressed as

;

is the standard deviation of pressure sensitivity of all nodes as node

j is experiencing leakage, which is expressed as:

Furthermore, the standardized values are normalized to a 0 to 1 real-valued range across all nodes. This normalization transformation shifts and scales the distribution of standardized pressure sensitivity to span from 0 to 1, thereby balancing the data contribution and range across all network nodes for the subsequent analysis. The normalization process converts the standardized values using Equation (5), as follows:

where

is the normalization pressure sensitivity of node I, as the leakage appeared in node

j;

are determined from the bounds of the standardized dataset.

Through standardization and normalization, the pressure differences are transformed to have a mean of zero and a standard deviation of one with the same orders of magnitude. This allows the data to be analyzed on a common, normalized scale regardless of the raw data distribution across nodes.

2.2. Improvement Using Three-Order Embedding

There are numerous water demand nodes in a WDS. Detecting leakage at each node would require installing a large number of SCADA sensors, significantly increasing costs. Additionally, processing massive data would consume considerable time, and storing it would require extensive storage space in the server. Dividing the network into zones, detecting only the leak node’s partition, and then further localizing the leakage within that area, can significantly reduce costs and computational efforts. The most effective method for partitioning a network through clustering algorithms involves considering the interconnections between nodes in the graph and integrating the characteristics of adjacent nodes to reflect their spatial topological relationships. Embedding using LINE could serve as a pivotal method for transforming complex network structures into a more interpretable, low-dimensional space. This process is crucial for mapping the extensive and intricate networks of pipes and nodes within a WDS. By embedding these elements into a vector space, it becomes possible to analyze relationships and interactions within a WDS more effectively, such as the clustering in terms of water pressure sensitivity.

In this study, we improve the LINE model via a three-order proximity objective function to apply to the partitioning of WDSs. During the LINE model step, random embeddings could be generated for each node in the WDS. Then, three-order proximity objection functions are applied to optimize the embeddings.

The first-order proximity objective function uses Kullback–Leibler divergence to optimize the embeddings via a direct hydraulic relationship between two connected nodes [

30]. Given two nodes

i and

j with an edge between them, their embeddings are

ui = [

u1,

u2,…,

uk] and

vj = [

v1,

v2,…,

vk]. The objective function can be formulated as Equation (6), which adjusts the embeddings of the nodes so that directly connected nodes are close to each other. For instance, for three nodes A, B, and C in WDSs, node A is directly connected to node B, and node B is directly connected to node C. The direct hydraulic relationship between node A and node B would be analyzed, focusing on the weights of the pipe connecting them, obtaining

. Node B is directly connected to node B, and the hydraulic relationship with node C would be analyzed in a similar way, obtaining

. Finally,

and

would be added to accurately represent the overall hydraulic interactions between directly connected nodes in the WDS.

where

is the value of the first-order proximity objective function and

is the weight of the edge between nodes

i and

j. If

i is directly connected with

j, the value is

. If there is no direct connection between them, the value is zero.

is the sigmoid function.

For the weight of the edge between two nodes, the average of pressure sensitivities is solved, expressed as Equation (7).

Second-order proximity focuses on preserving the neighborhood structure, which shares the connections of nodes, even if they are not directly connected. The objective function aims to preserve the context of nodes, where the context is defined by the node’s neighborhood expressed as Equation (8). For instance, taking nodes A, B, and C again, node A and node C are not directly connected, but both have a direct connection to node B. Second-order proximity analyzes this indirect relationship by examining the similarity in their connections through B. This could involve assessing how a change in pressure at node B affects both A and C, or how A and C might have similar pressure profiles due to their shared connection with B, despite no direct pipe between them.

where

is the value of the second-order proximity objective function and

is the ratio of

and the out-degree in node

i. The details of this objective function can be found in reference [

17].

Third-order proximity focuses on the spatial distribution of nodes. The network is divided into different partitions. Some outliers might exist in each partition, especially in complex networks, which would give rise to partition discontinuity [

1]. To ensure node connectivity in each partition, connectivity optimization for all nodes should be determined, as expressed in Equation (9).

where

is the value of the third-order proximity objective function;

dij is the distance between node

i and

j;

represents the spatial coordinates of node

i, encapsulated by the vector [

xi,

yi,

zi];

xi, and

yi are the horizontal coordinates within a two-dimensional plane, reflecting the geographical positioning of the node; and

zi represents the elevation data at the location of node

i.

To effectively embed nodes with three orders, this model separately preserves the three-order proximity functions during optimization. Firstly, the topological network is preprocessed to convert it to an adjacency matrix, establishing a structured representation for subsequent operations. Secondly, the dimensionality of the embeddings is determined, and embeddings are generated randomly. Higher dimensions capture more information but take longer to train. Thirdly, the first-, second-, and third-order proximity are calculated using Equations (6)–(9). Fourthly, the stochastic gradient descent algorithm is employed to minimize the first-order proximity function. Following the optimization of the first-order proximity, the second- and third-order proximities are minimized in sequence, employing a similar approach. This ensures that not just direct connections but also neighborhood similarities and spatial distribution relationships are accurately reflected in the embeddings. Fifth, the embeddings are updated to reflect the minimized proximity functions, gradually improving the representation of the network’s structure in the low-dimensional space. Sixth, a maximum epoch is set and steps 3–5 are repeated until the model converges or the predefined maximum number of epochs is reached, ensuring the optimization process is thorough yet bounded.

For each node, we concatenate the embeddings obtained from these three functions, thereby capturing the comprehensive relational dynamics within the network. The flow chart of the partition method is illustrated in

Figure 1a.

2.3. Network Partition

Partitioning a network in WDSs is key for efficient management and operation, as it enables more precise leak detection, reduces response time for repairs, and enhances system reliability and safety by isolating problems to prevent widespread impact. By integrating the pressure sensitivity matrix with the LINE embedding and clustered topological network, we can simulate various scenarios, such as pipe bursts or leakages, and predict their impact on the network. The k-means algorithm is introduced for dividing a network into different leakage partitions. This clustering method groups nodes into K number of clusters, where each point belongs to the cluster with the nearest mean value. Initially, cluster centers are chosen randomly. Each node in the network is then assigned to the nearest cluster center based on Euclidean distance, which is calculated using Equation (10).

where

D is the distance between two nodes,

i and

j.

The centroid of each cluster is recalculated after all nodes have been assigned. The mean position of all the points in each cluster is solved. It involves calculating the average of all the dimensions for the points in each cluster. The equation for the new centroid for a cluster is given by Equation (11).

where

is the centroid of the

kth partition.

This recalculated centroid then becomes the new center for the respective cluster in the next iteration and the process repeats until the centroids stabilize, ensuring the network is divided into distinct and optimized leakage partitions. Finally, pressure sensors would be installed at the nodes nearest to each centroid within the partitions.

2.4. Leak Detection

To locate the areas with leakage nodes, pressure sensitivity can be used for analysis. After partitioning, a pressure sensor is installed at the point nearest to center in each zone. The Monte Carlo simulation is employed to stochastically generate instances of leak events via increasing the demand of nodes, where each event is solved through the EPS process. For the specific implementation steps, the Monte Carlo method is first utilized to randomly select one or two nodes and assign random leakage demands to them in each partition, respectively. If there are K partitions in the pipe network, and leakage occurs at nodes in the kth partition, the pressure at the sensor points might change. The hydraulic model is executed to compute pressure variations for both normal and abnormal conditions at each sensor for every leakage event. The pressure sensitivity of the sensor can be calculated using Equation (1). Subsequently, the Monte Carlo process is repeated for the next leakage event until all events have been simulated. The number of leakage events is

, where

N is the number of nodes. Finally, the inputs of training samples are composed and expressed as Equation (12). Meanwhile, the outputs of training samples are the labels of partitions.

where

P is the array of input data in LSTM and

L is the number of leakage events

;

RNN models are a type of recursive neural network that processes sequential inputs and are among the most crucial algorithms in deep learning. Long short-term memory (LSTM) networks, the most popular RNN models, are capable of learning long-term dependencies. In the context of leak detection, an LSTM has the ability to handle complex, non-linear relationships in data, which can lead to more accurate detection of leaks under varying conditions. Additionally, LSTMs can adapt to changes in the network’s behavior over time, maintaining effectiveness even as the system leaks.

Therefore, this study established a three-layer LSTM model for leak detection [

31,

32]. For the input layer, the preprocessed dataset serves as the input variable. The second layer is the LSTM layer shown in

Figure 1b. The LSTM has three gates. The first is a forget gate with a sigmoid activation function

, producing numbers between 0 and 1. A value of 1 retains the last cell state, while 0 forgets it. The second is the input gate, updating the cell state using hyperbolic tangent and sigmoid activation functions. The third is the output gate, where the tangent activation function is used. Finally, the output layer is connected to a fully connected layer, which serves as the third layer. The output layer consists of M neuron cells indicating the partition of leaks. Both models employ the Softmax activation function [

33] in their output layers. In addition, the cross-entropy loss function is used during the backpropagation to refine the classification accuracy between the model’s predicted and the actual partition labels, expressed as Equation (13). This loss function quantifies the difference between the predicted probability distributions and the actual labels across the dataset. The main advantage of using cross-entropy is its ability to handle probabilities in a way that penalizes incorrect classifications more severely, guiding the model towards more accurate predictions. This optimization process is crucial for enhancing the model’s ability to accurately distinguish between the presence of leaks and their absence in WDSs, by minimizing the prediction error [

34,

35].

where

is an indicator of whether class

k is the correct leak partition. If correct, the

is one, or zero. The

is the predicted probability that a leak appears in partition

k.

The framework of this state-of-the-art model is illustrated in

Figure 1c.

3. Case Studies

Two cases, A and B, are used to verify this methodology. The Open Water Analytics (OWA) toolbox is employed for hydraulic calculations [

36], which is an EPANET classlib of MATLAB developed by the KIOS Research Center for Intelligent Systems and Networks, University of Cyprus.

3.1. Network A

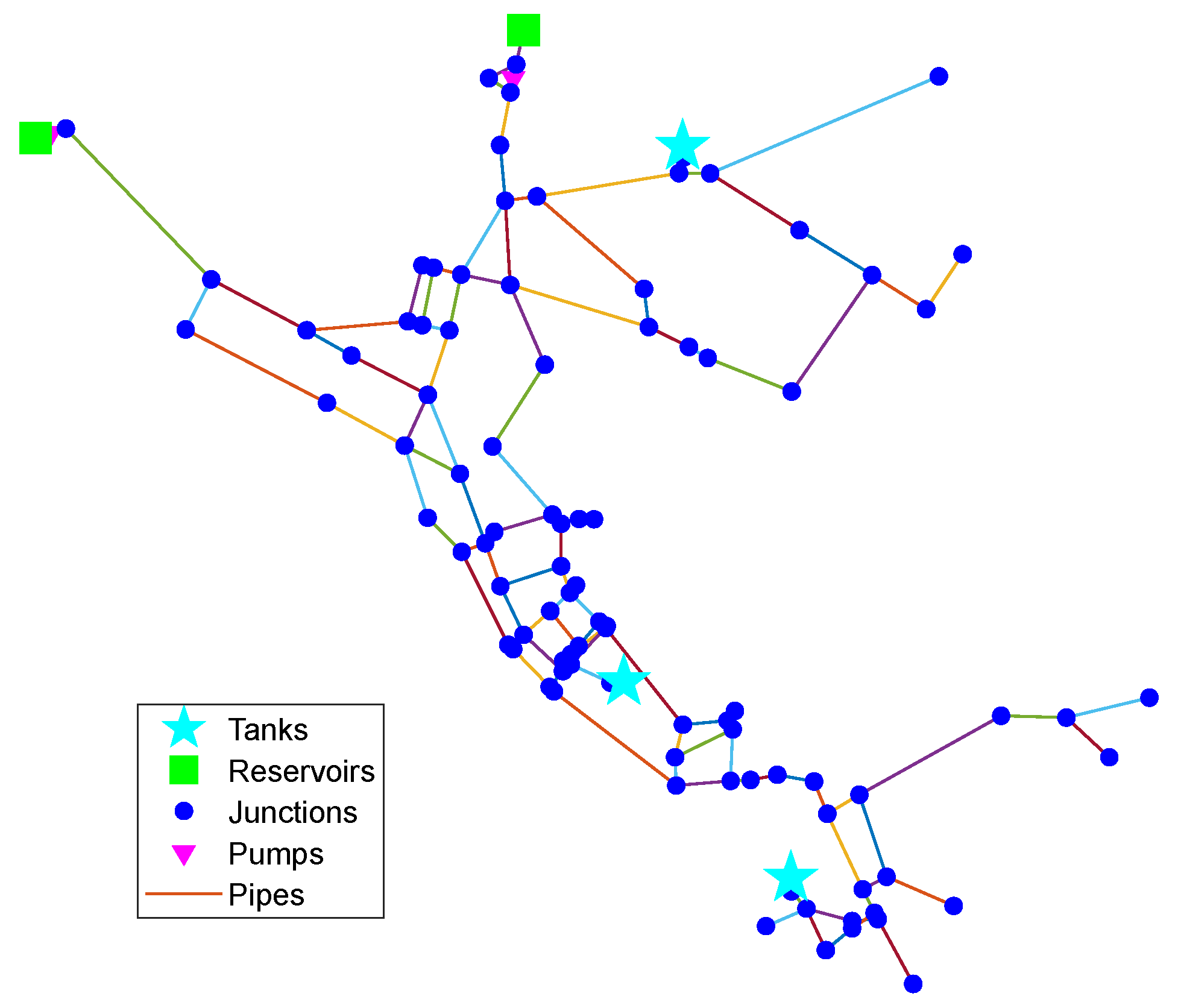

Network A has 92 junctions, 117 pipes, two pumps, two reservoirs, and three tanks, as shown in

Figure 2 [

19,

37]. The average water demand is 2450.03 m

3/h. The time interval of hydraulic calculation is set to one hour during the EPS process via the EPANET OWA toolbox. The maximum time of EPS is set to 24 h.

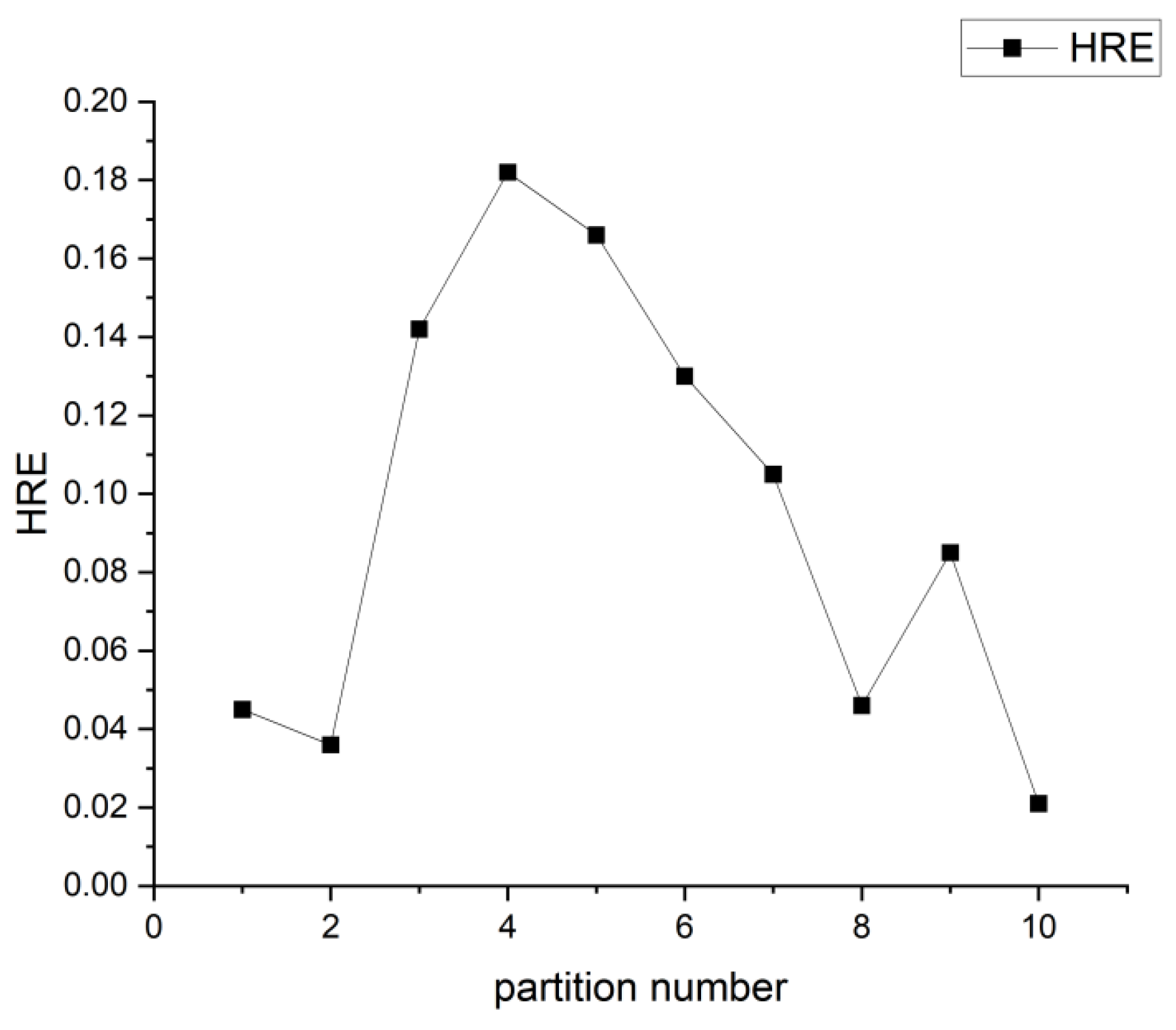

Firstly, the network partition is processed. In order to select an effective number of partitions, the hydraulic reliability estimation (HRE) method is employed as the objective function [

38], which represents the probability of leakage occurring. The water demands are set as an independent variable in HRE, where water demands are randomly allocated to the nodes of the WDS following a normal distribution. Then, coefficients including 0.1, 0.2, 0.4, 0.6, 0.8, and 1.0 are multiplied with the demands to represent five different scenarios. The details can be found in the

Supplementary Materials. In the process of partitioning, the three-order embedding model is used. The embedding length is determined for enhancing calculating efficiency and removing outliers. The embedding lengths ranging from 2 to 6 are tested, as illustrated in

Table S3, where the number of partitions is set at ten, clustered by the k-means algorithm. When the length is three, the number of outliers is seven, and stability is maintained with the increase in the length. Thus, the embedding length is set to three, where the dimension of pressure sensitivity would be reduced (92 to 3). Then, the k-means algorithm is applied to cluster the nodes to different partitions. The network is divided into different partitions ranging from 2 to 10. Valves would be installed in the boundary pipes after partitioning. The states of valves (open or closed) are selected to obtain the best HRE solution. An exhaustive search method is employed to identify the optimal states of the boundary valves for the best HRE outcome. The exhaustive search method involves systematically evaluating all possible combinations of valve states to identify the configuration that obtains the highest HRE. The HRE solutions are solved, as illustrated in

Figure 3. Five partitions yield the largest HRE, which indicates that the leakage will occur with a lower probability. There are 13 valves installed in the boundary pipes of the network, as shown in

Figure 4. The pipe indexes are 19, 39, 57, 59, 63, 64, 73, 89, 102, 103, 106, 112, and 113. The states of these valves are [0, 1, 1, 0, 1, 0, 0, 0, 1, 0, 1, 1, 1], where 0 represents closed and 1 is open.

The best strategy of partition is shown in

Figure 4. The nearest center node would be regarded as the sensor for each partition. The sensors’ indexes are 12, 24, 46, 66, and 79.

After that, the EPS is conducted to calculate the normal pressures at the locations of five sensors. Following this, a leakage equivalent to 3% of demand is introduced at one or two specific nodes, and the EPS is conducted once more to calculate the abnormal pressures at the sensors. The number of = 12,834 potential leak events would be generated, leveraging combinations of leaks at different nodes. For each event, both the normal and abnormal pressures at each sensor are calculated over a 24 h period using Equation (1). Finally, the samples are integrated according to Equation (12), forming samples where the inputs comprise the pressure sensitivities detected by sensors, and the outputs denote the partition labels indicative of leak presence. The output employs a one-hop structure with the Softmax activation function, consisting of five neurons, each representing a different partition, such as [0, 0, 0, 0, 0] and [1, 0, 1, 0, 0], indicating no leak and leaks in the first and third partitions, respectively.

As the datasets are composed, the LSTM model is applied to detect the leaks. The dataset is divided into a training set and a testing set. The proportion of the two sets is 4:1. After the model training is completed, the test data are input into the trained model to determine the leakage partition numbers. These are then compared with the leakage area labels to calculate the leak detection rate. The leak detection rate is formulated as Equation (14). The results of leak locations in the five test datasets are shown in

Table 1.

where

is the number of leakage nodes correctly identified by the partition label;

is the number of leakage nodes that the partition label failed to detect correctly.

The leakage detection rate reaches its maximum at 95.65%, and the lowest detection rate is 92.39%. The detection rates in different time periods all exceed 90%, indicating a relatively high performance. The standard deviation could be solved. There is a small standard deviation of only 1.24 in the five tests. Thus, the model is stable and reliable, with minimal fluctuation in its ability to predict water leakage scenarios in five different test samples. Therefore, it can effectively detect leaks in network A.

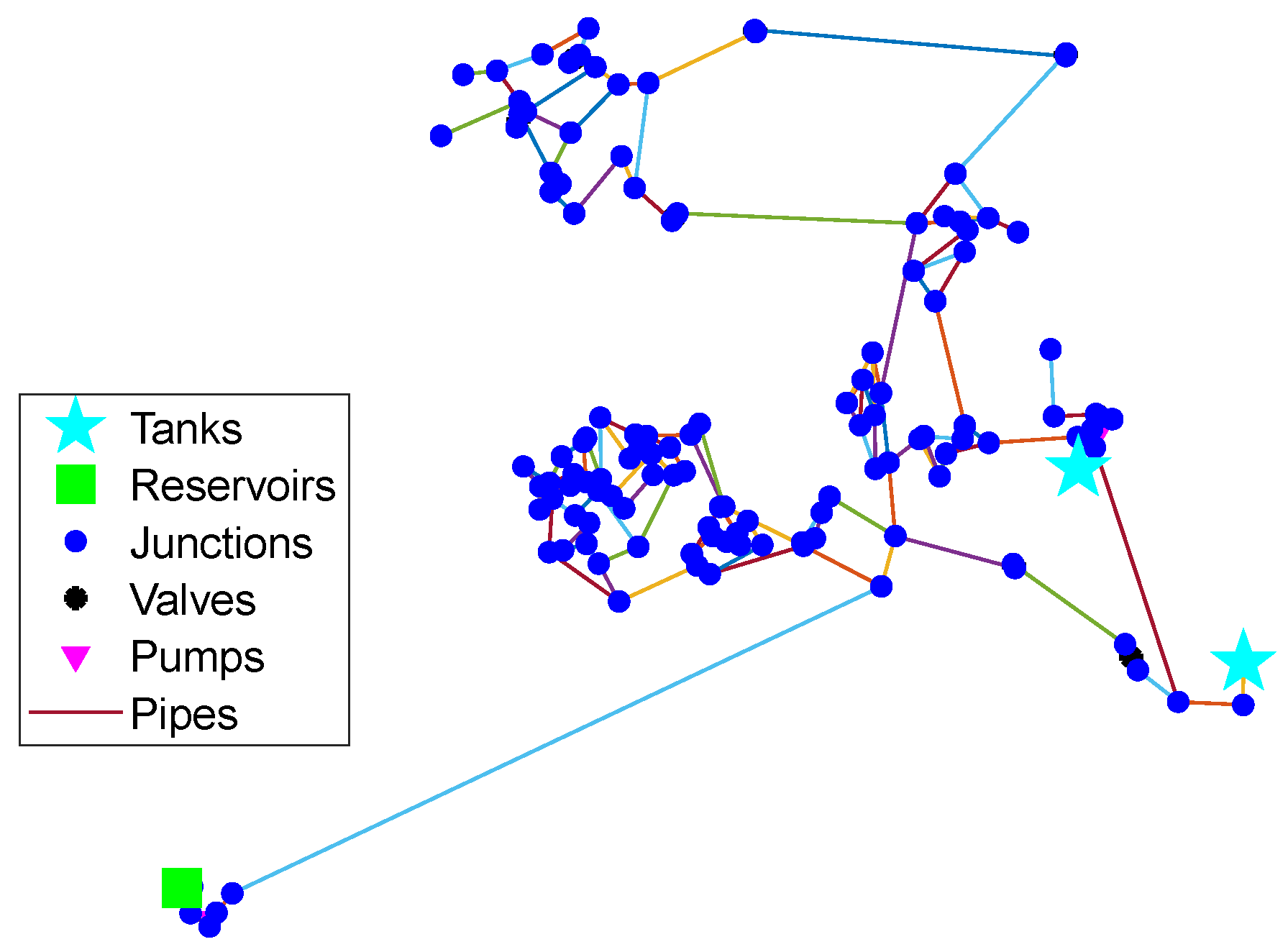

3.2. Network B

Network B has 126 junctions, 168 pipes, two pumps, one reservoir, and two tanks [

39], as shown in

Figure 5. The average water demand is 211.81 m

3/h. The maximum time of EPS is set to 24 h.

The network partition is processed. The embedding lengths ranging from 2 to 6 are tested, as illustrated in

Table S4. The k-means algorithm is used to cluster the node into ten partitions. The length of embedding is set to three, having an optimized number of outliers, where the dimension of pressure sensitivity would be reduced (126 to 3). Then, the k-means algorithm is applied to cluster the nodes to different partitions. The network is divided into different partitions ranging from 2 to 10. Valves would be installed in the boundary pipes after partitioning. The states of valves are selected to obtain the best HRE solution with an exhaustive search. The HREs are solved, as illustrated in

Figure 6. Four partitions exhibit the largest HRE, which indicates that the leakage will occur with a lower probability. There are five valves in the network and the boundary pipe indexes are 1, 36, 42, 46, and 170. The states of these valves are [1, 0, 0, 1, 1].

The best strategy of partition is shown in

Figure 7. The nearest center nodes would be regarded as the sensors for each partition. The sensors’ indexes are 5, 43, 62, and 110. The details can be found in the

Supplementary Materials.

The 3% leakage demands are added to the nodes. The normal and abnormal pressures are solved by the EPS process. Then, the number of

= 24,003 leakage events are generated. After the EPS process, the pressure sensitivities are solved. Then, the samples are imported to the LSTM model. The dataset is divided into a training set and a testing set with a proportion of 4:1. The average values of the leak detection rate for the partitions are calculated, as shown in

Table 2.

The leakage detection rate reaches its maximum at 91.27%, and the lowest detection rate is 87.30%. The detection rates in different time periods all exceed 85%, indicating a relatively high performance. Meanwhile, there is a small standard deviation of 1.59 in the five tests. So, the model is also stable and reliable, with minimal fluctuation in its ability to predict water leakage scenarios in five different test samples. Our results demonstrate that this model is effective at predicting leaks.

3.3. Comparison

To prove the efficiency and reliability of the method for leak detection in this study, a multiple support vector machine (M-SVM) approach could be adopted in the abovementioned two cases. The pressure sensitivities of sensors are used directly as input data for the M-SVM models, with details provided in reference [

24]. The inputs are the water pressure sensitivity matrix, and the outputs are the leak partition labels. Finally, we evaluate the efficiency and reliability of the proposed model for leakage detection on the simulated validation dataset using standard classification metrics, including leak detection rates, accuracy, precision, recall, and F1 score. The equations are expressed as follows:

where ACC, PREC, REC, and F are the accuracy, precision, recall, and F1 score of leak detection, respectively. TP is the true positive where the model correctly identified leakage partitions, TN is the true negative that correctly identified no leakage partitions, FP is the false positive that incorrectly identified leakage partitions, and FN is the false negative that failed to identify an actual leakage partition.

The results of these evaluations are shown in

Table 3. In cases A and B, the leak detection rates, accuracy, precision, recall, and F1 score of this study surpass those of M-SVM. With its enhanced accuracy and precision, the model demonstrates an ability to detect leaks with a reduced number of FPs, facilitating more efficient management for leakage. The high recall indicates fewer FNs, confirming the model’s reliability without missing leakage incidents. Moreover, higher F1 scores imply that it is capable of performing consistently under a variety of pressure sensitivities. Additionally, a higher average leak detection rate indicates the model is effective in detecting leakages. Therefore, the model can efficiently process data and provide reliability for leakage detection.