Extraction and Classification of Flood-Affected Areas Based on MRF and Deep Learning

Abstract

1. Introduction

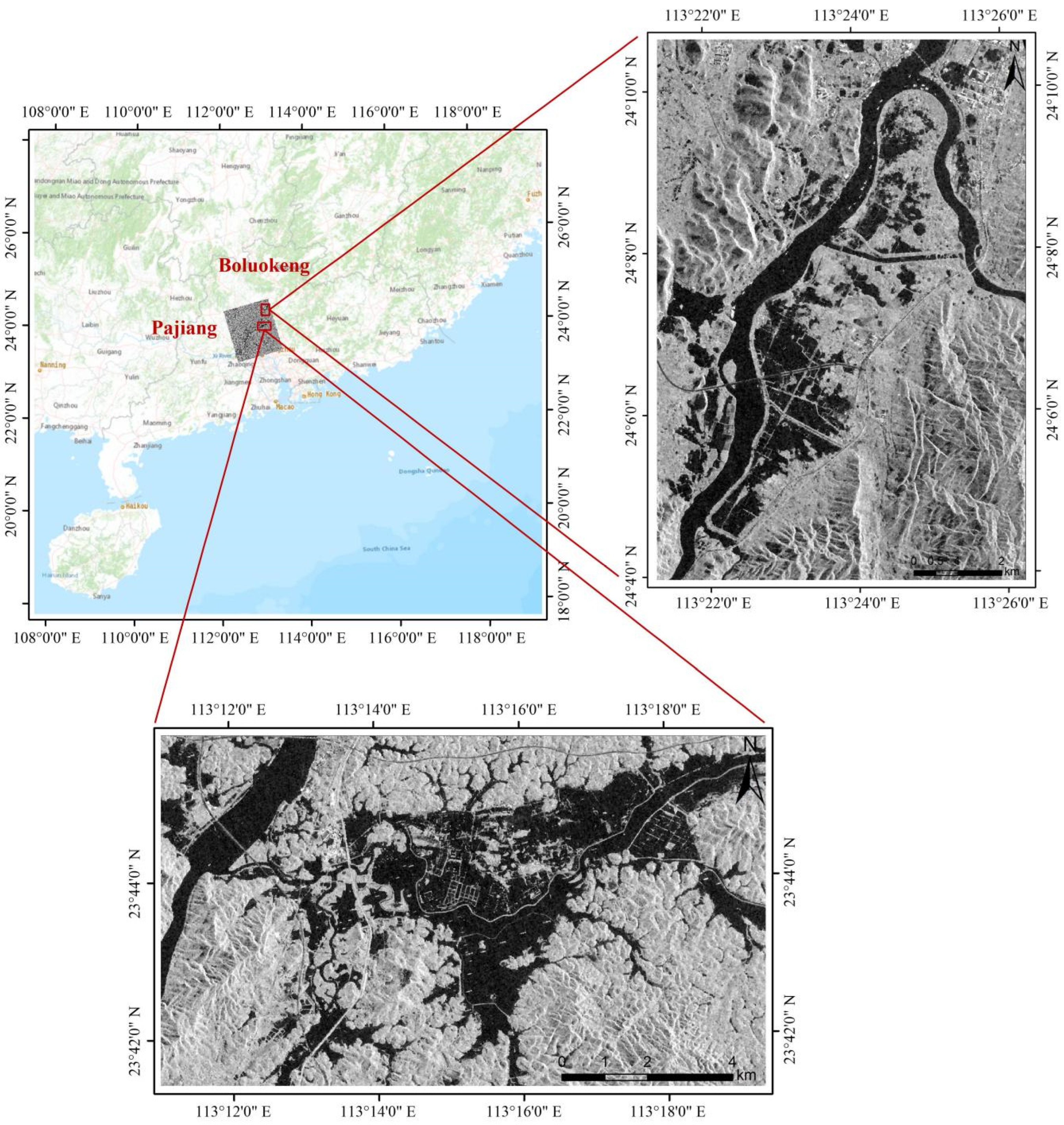

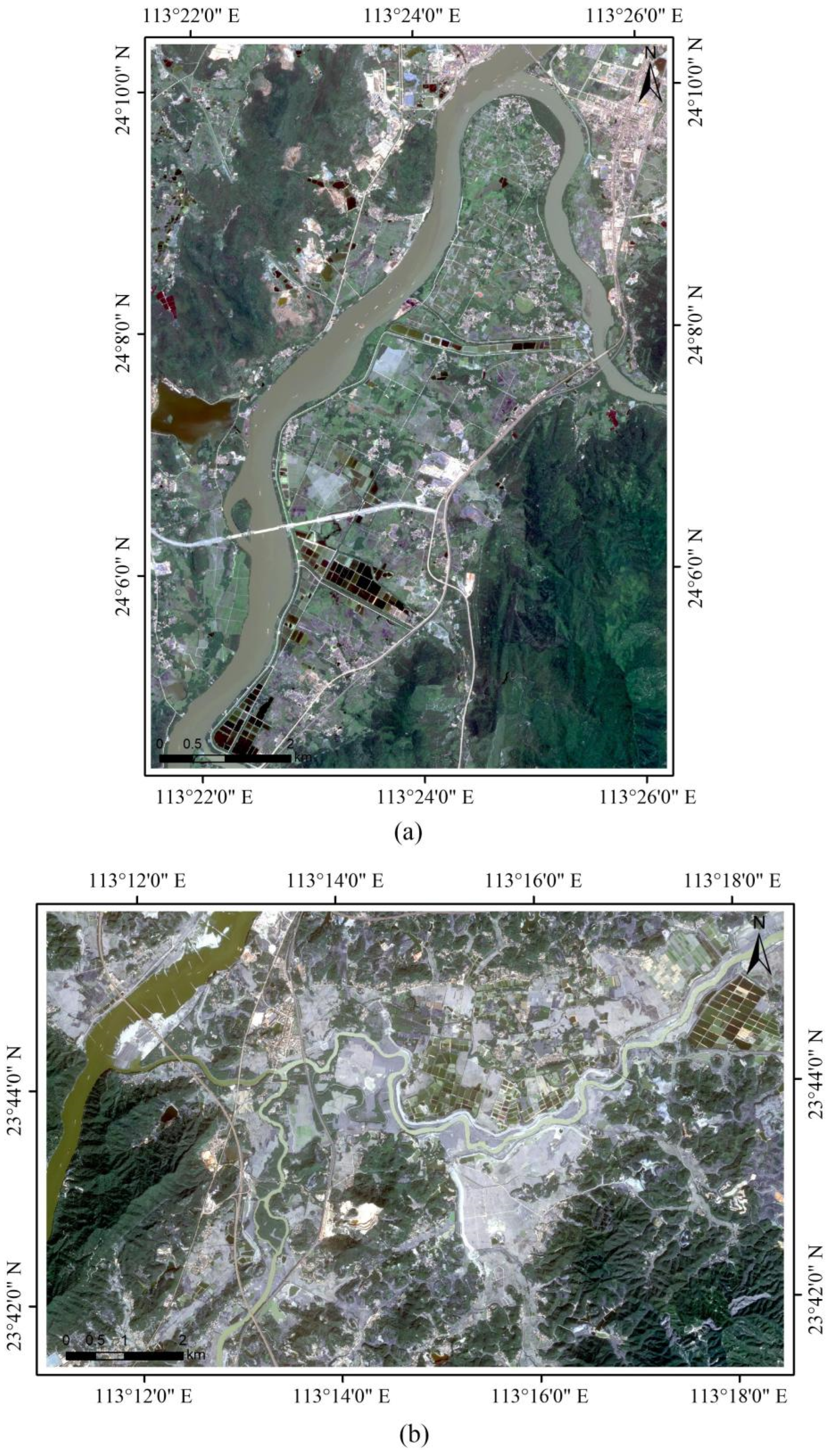

2. Test Case and Dataset

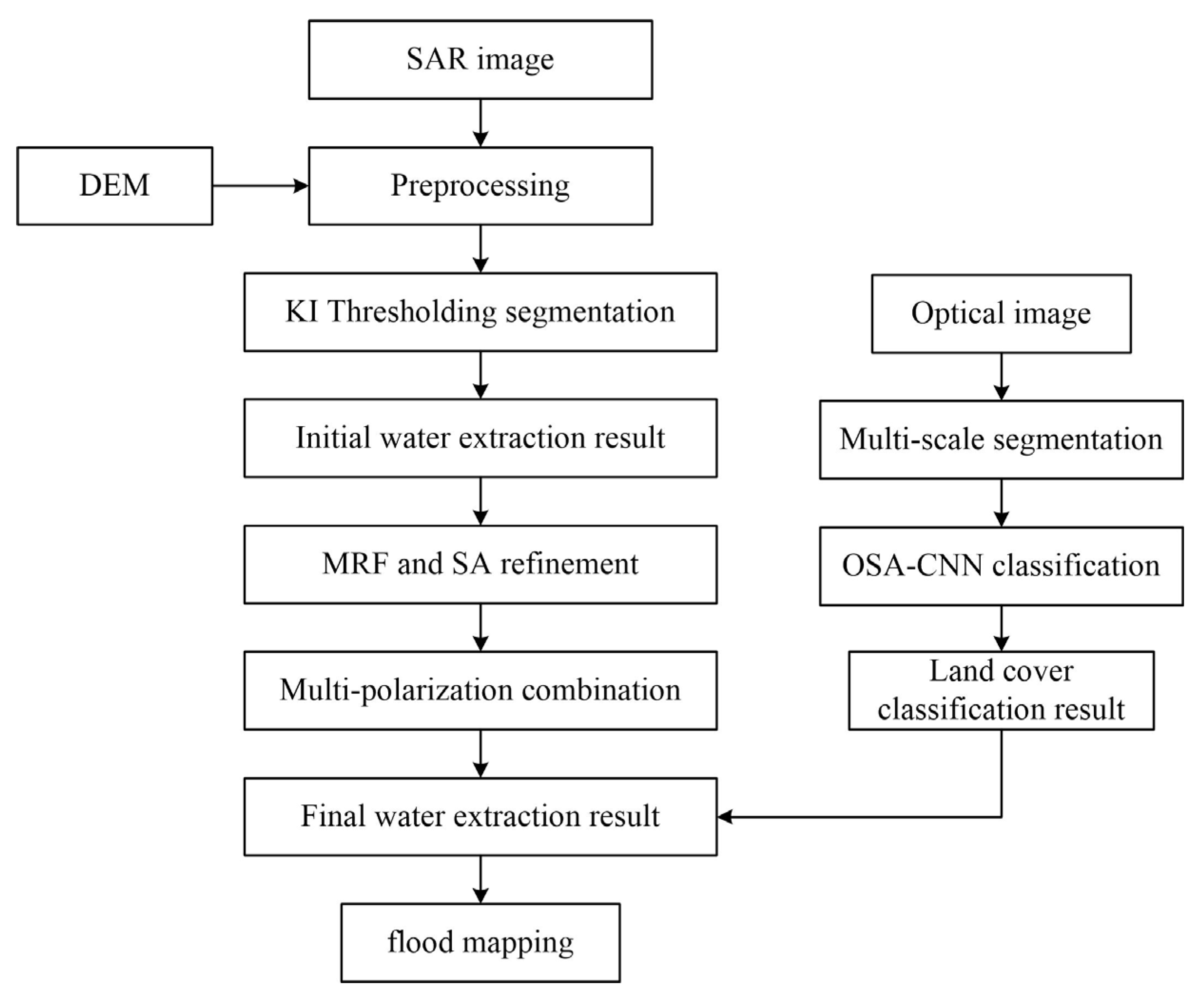

3. Methodology

3.1. Image Preprocessing

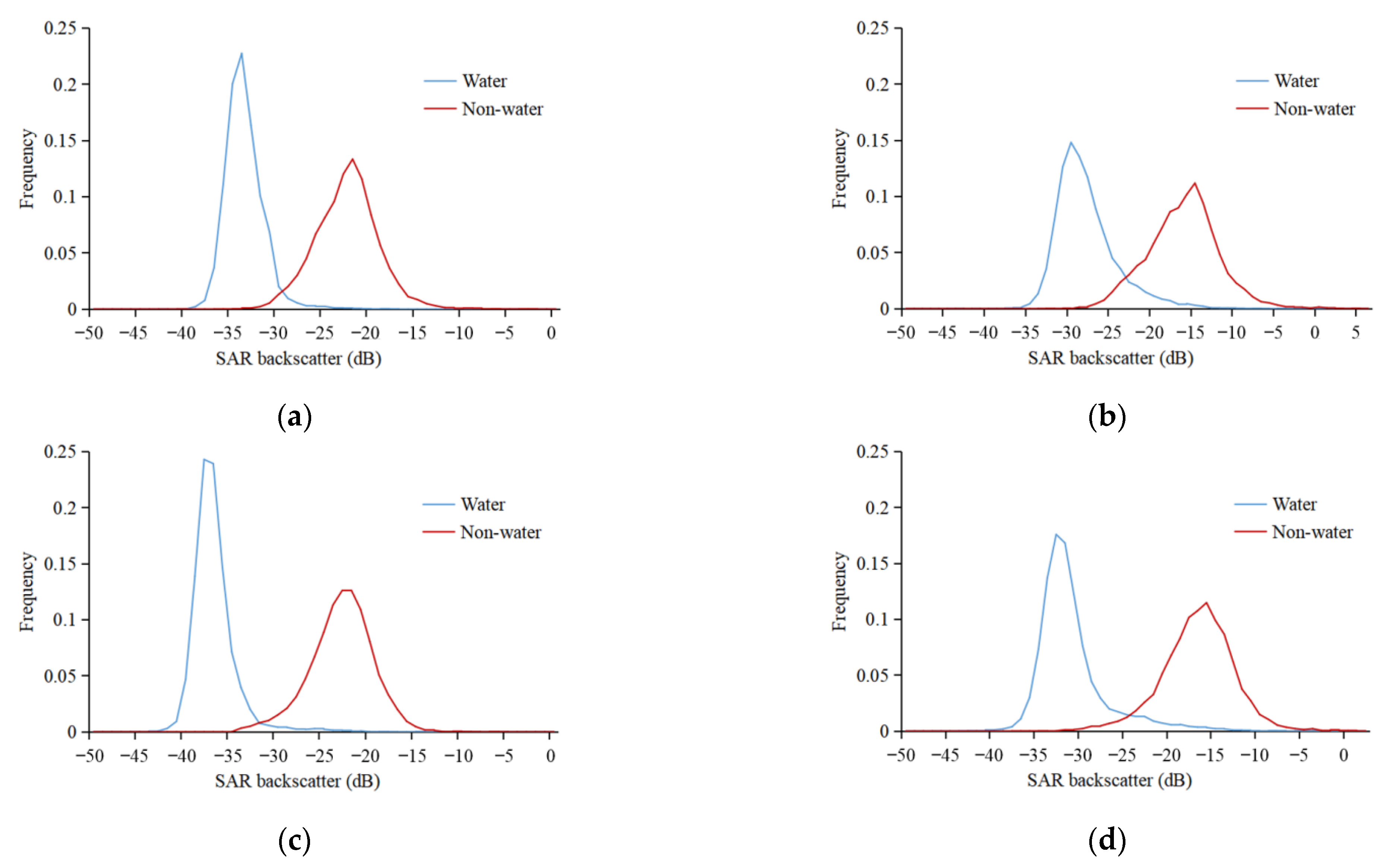

3.2. Thresholding Segmentation

3.3. Refinement of the Water Extraction Method

3.4. Classification of Flooded Area

3.5. Accuracy Evaluation

4. Results

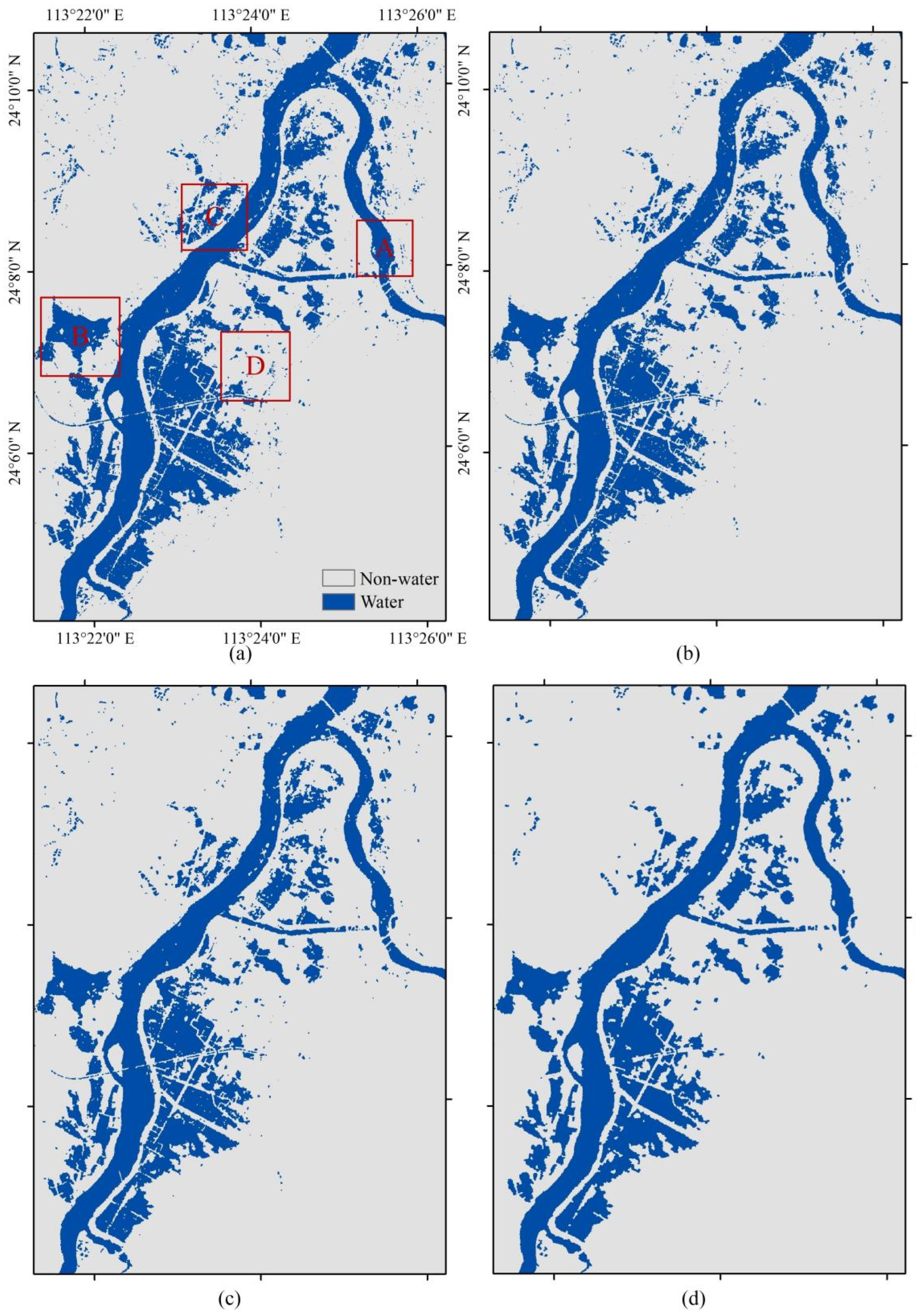

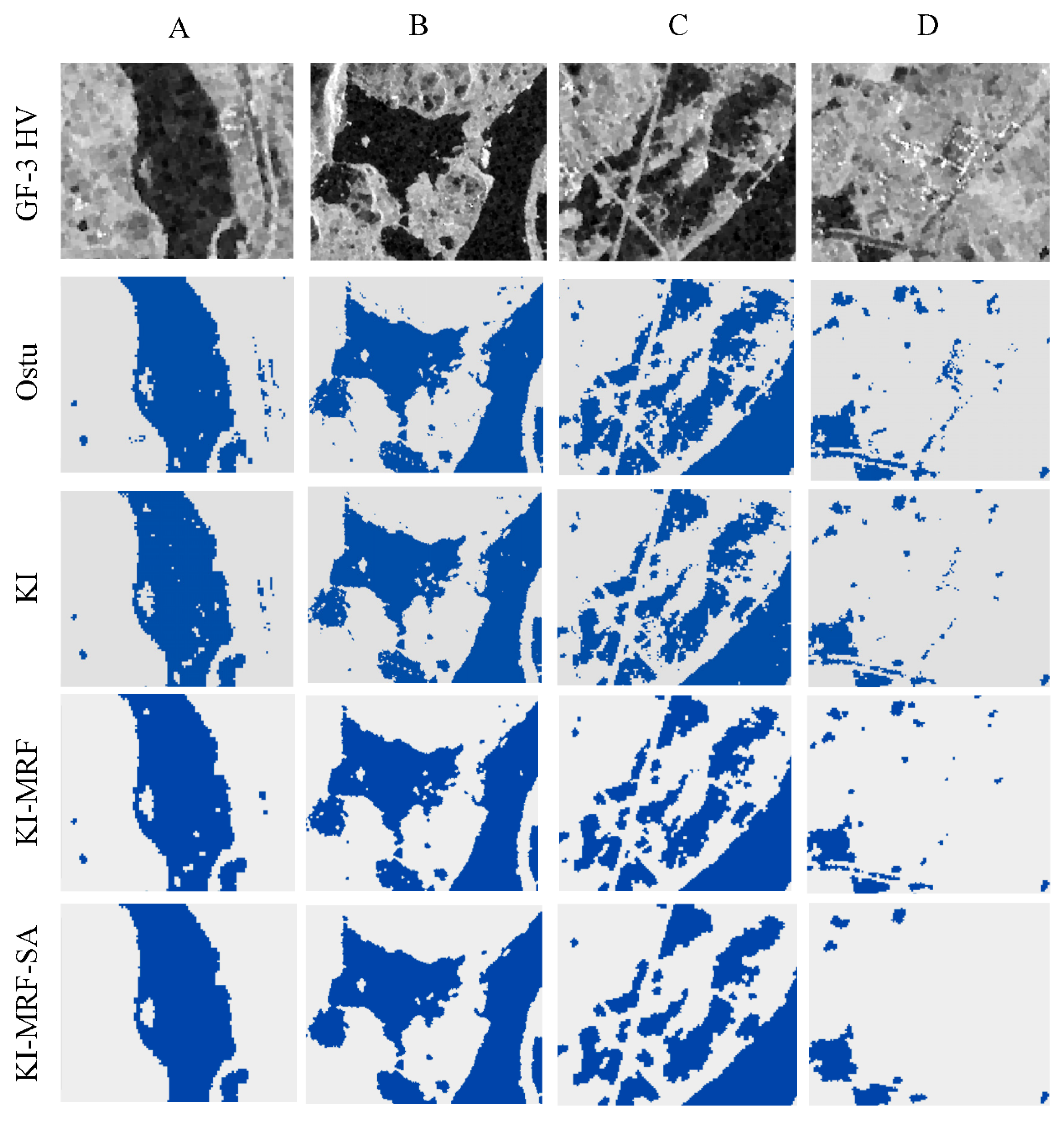

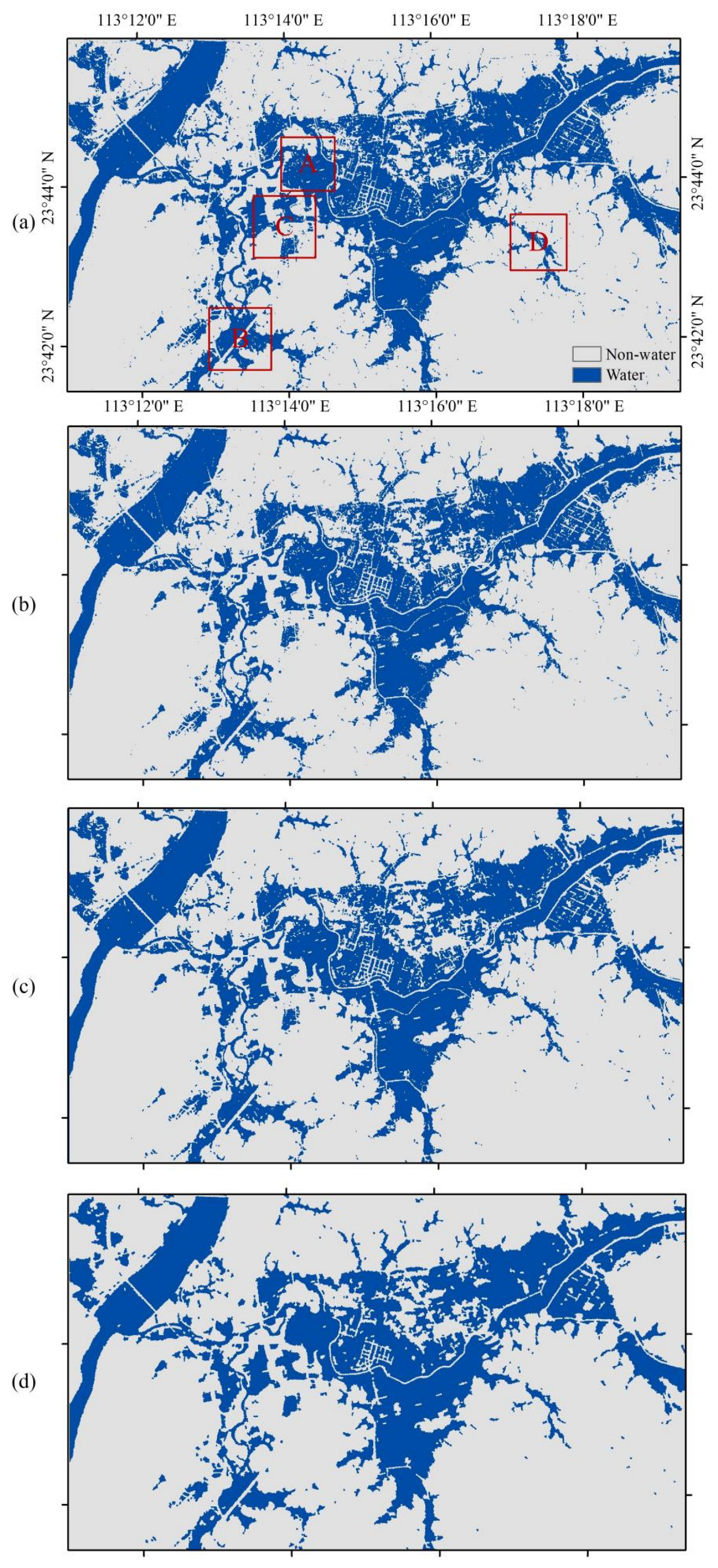

4.1. Water Extraction

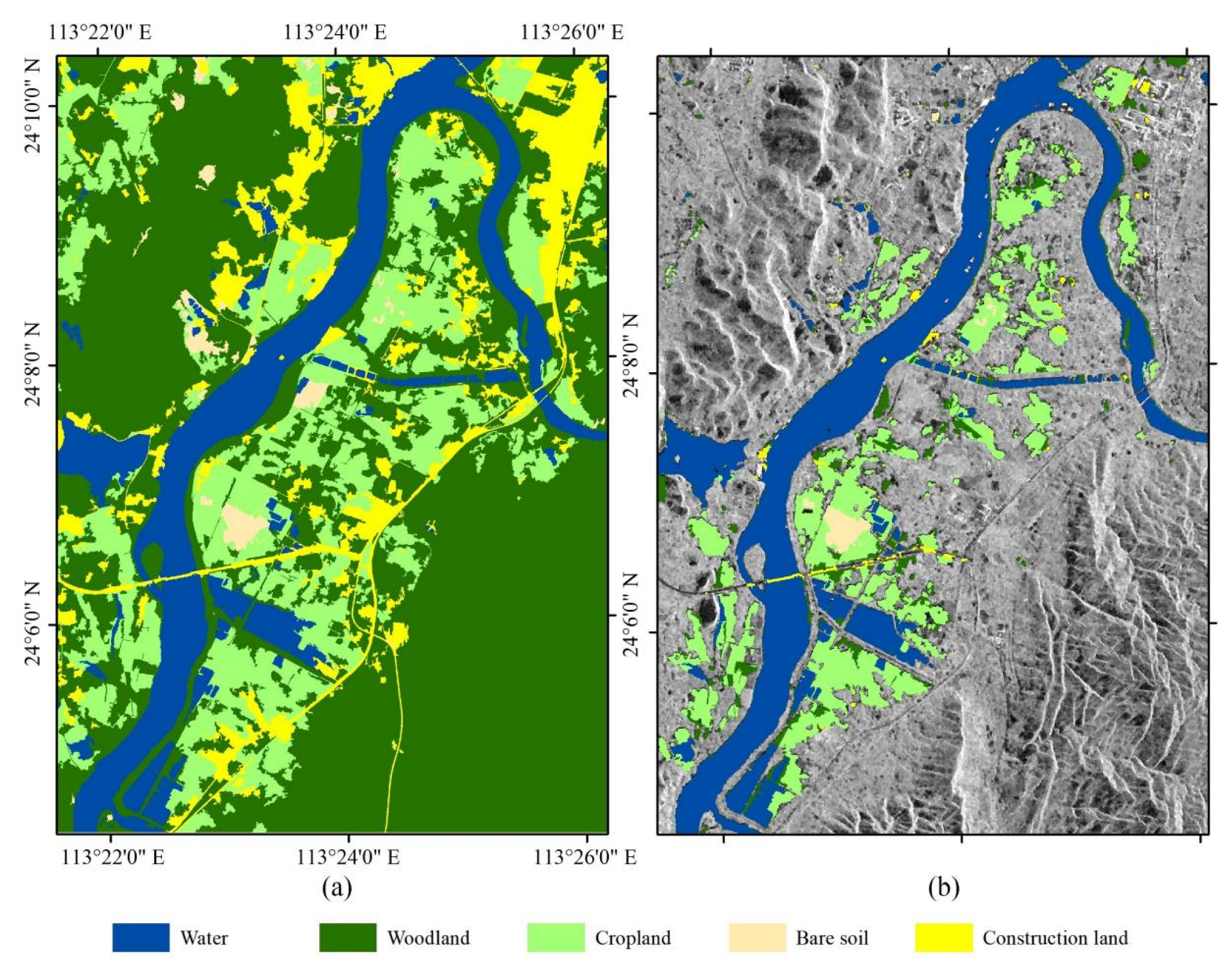

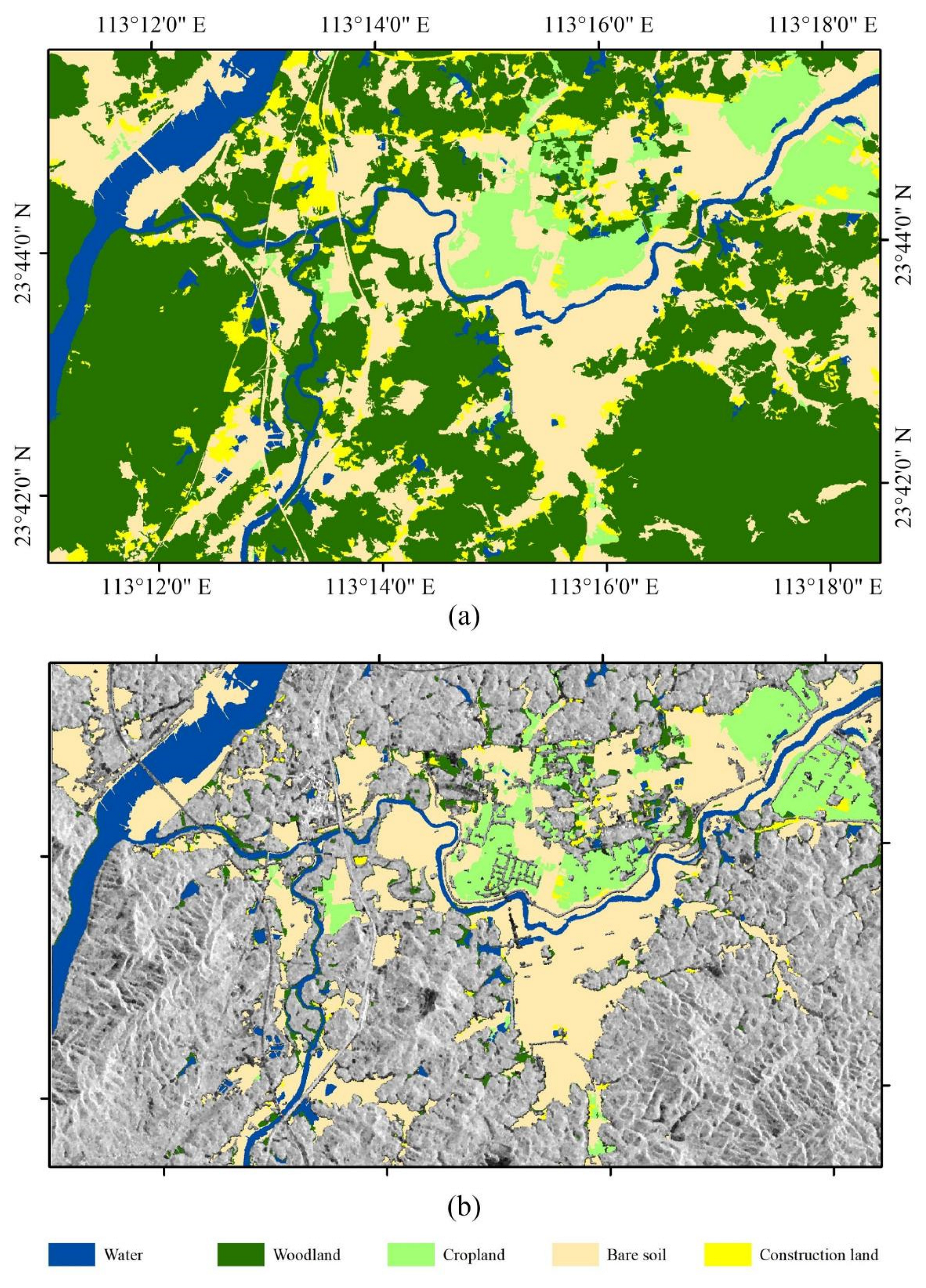

4.2. Classification of Inundated Areas

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Image | Location | Remote Sensor | Acquisition Date | Size (Pixel) | Resolution (m) |
|---|---|---|---|---|---|
| Boluokeng | 113°23′51″ E, 24°7′23″ N | GF-3 (FSII) | 24 June 2022 | 1045 × 1411 | 10 × 10 |
| | | GF-2 | 11 November 2021 | 1972 × 2768 | 4 × 4 |
| Pajiang | 113°14′56″ E, 23°43′31″ N | GF-3 (FSII) | 24 June 2022 | 2099 × 1319 | 10 × 10 |
| | | GF-2 | 11 November 2021 | 3160 × 1953 | 4 × 4 |
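
The Resolution column fixes the ground area of one pixel. A 10 m × 10 m GF-3 pixel covers 100 m² (10⁻⁴ km²), and a 4 m × 4 m GF-2 pixel covers 16 m². Pixel counts in a flood map therefore convert directly to area. The Python sketch below tallies flooded area per land-cover class. It assumes a boolean flood mask and an integer land-cover map that are already co-registered on one grid. The function `flooded_area_by_class` and the integer codes in `CLASS_NAMES` are hypothetical; only the class names are taken from the accuracy assessment.

```python
import numpy as np

# Hypothetical integer codes for the land-cover map; only the names come from the paper.
CLASS_NAMES = {0: "water", 1: "cropland", 2: "bare soil", 3: "woodland", 4: "construction land"}


def flooded_area_by_class(flood_mask, landcover, pixel_size_m):
    """Flooded area in km^2 per land-cover class, for rasters on one shared grid."""
    flood_mask = np.asarray(flood_mask, dtype=bool)
    landcover = np.asarray(landcover)
    if flood_mask.shape != landcover.shape:
        raise ValueError("flood mask and land-cover map must share one grid")
    pixel_area_km2 = pixel_size_m ** 2 / 1e6      # 10 m pixel -> 100 m^2 -> 1e-4 km^2
    codes, counts = np.unique(landcover[flood_mask], return_counts=True)
    return {CLASS_NAMES.get(int(c), str(c)): float(n * pixel_area_km2)
            for c, n in zip(codes, counts)}


# Toy 2 x 3 example on a 10 m grid (values are illustrative only).
flood = np.array([[True, True, False], [True, False, False]])
landcover = np.array([[1, 1, 2], [3, 2, 4]])
areas = flooded_area_by_class(flood, landcover, pixel_size_m=10.0)
for name, km2 in areas.items():
    print(f"{name}: {km2:.4f} km^2")
```

For rasters at different resolutions (for example, 10 m SAR and 4 m optical), one of them would first have to be resampled to the other's grid. This sketch deliberately leaves that step out.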

| Method | UA Water (%) | UA Non-Water (%) | PA Water (%) | PA Non-Water (%) | OA (%) | Kappa |
|---|---|---|---|---|---|---|
| Otsu | 84.90 | 92.87 | 88.35 | 90.61 | 88.60 | 0.77 |
| KI | 86.51 | 92.62 | 88.31 | 91.42 | 90.50 | 0.80 |
| KI-MRF | 87.41 | 94.20 | 90.84 | 91.91 | 91.50 | 0.82 |
| KI-MRF-SA | 89.82 | 95.38 | 92.65 | 93.53 | 93.10 | 0.85 |

| Classified (row) / Reference (column) | Water | Cropland | Bare Soil | Woodland | Construction Land | Sum |
|---|---|---|---|---|---|---|
| Water | 185 | 10 | 6 | 2 | 0 | 203 |
| Cropland | 10 | 257 | 9 | 5 | 2 | 283 |
| Bare Soil | 0 | 8 | 382 | 1 | 0 | 391 |
| Woodland | 3 | 2 | 6 | 75 | 3 | 89 |
| Construction Land | 0 | 1 | 2 | 3 | 28 | 34 |
| Sum | 198 | 278 | 405 | 86 | 33 | |
| PA (%) | 93.4 | 92.4 | 94.3 | 87.2 | 84.8 | |
| UA (%) | 91.1 | 90.8 | 97.7 | 84.3 | 82.4 | |
| OA (%) | 92.7 | | | | | |
| Kappa | 0.90 | | | | | |
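
The accuracy figures in this confusion matrix can be recomputed from its counts. The sketch below treats rows as classified labels and columns as reference labels, which is the convention the PA and UA rows imply. PA divides each diagonal count by its column sum and UA divides it by its row sum. Cohen's kappa corrects OA for the chance agreement implied by the row and column marginals. Run on the tabulated counts, it reproduces the listed PA and UA values, OA = 92.7% and kappa = 0.90.

```python
import numpy as np

# Counts from the confusion matrix above. Rows: classified (user) class;
# columns: reference class; order: water, cropland, bare soil, woodland,
# construction land.
CLASSES = ["water", "cropland", "bare soil", "woodland", "construction land"]
CM = np.array([
    [185,  10,   6,  2,  0],
    [ 10, 257,   9,  5,  2],
    [  0,   8, 382,  1,  0],
    [  3,   2,   6, 75,  3],
    [  0,   1,   2,  3, 28],
])


def accuracy_metrics(cm):
    """Producer's, user's and overall accuracy plus Cohen's kappa."""
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()
    diag = np.diag(cm)
    pa = diag / cm.sum(axis=0)           # correct / reference (column) total
    ua = diag / cm.sum(axis=1)           # correct / classified (row) total
    oa = diag.sum() / n
    pe = (cm.sum(axis=0) * cm.sum(axis=1)).sum() / n ** 2   # chance agreement
    kappa = (oa - pe) / (1.0 - pe)
    return pa, ua, oa, kappa


pa, ua, oa, kappa = accuracy_metrics(CM)
for name, p, u in zip(CLASSES, pa, ua):
    print(f"{name:<18} PA {100 * p:5.1f}%  UA {100 * u:5.1f}%")
print(f"OA {100 * oa:.1f}%  kappa {kappa:.2f}")
```

The same function applies unchanged to the two-class (water / non-water) evaluation, given a 2 × 2 matrix.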

| Image / Polarization | Visual Interpretation Threshold (dB) | Otsu Threshold (dB) | KI Threshold (dB) |
|---|---|---|---|
| Boluokeng HV | −29 | −28.02 | −29.20 |
| Boluokeng HH | −23 | −21.12 | −22.80 |
| Pajiang HV | −32 | −30.56 | −31.90 |
| Pajiang HH | −24 | −22.31 | −23.64 |
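
Otsu and KI both derive a single threshold from the backscatter histogram of one polarization in dB (σ⁰_dB = 10 log₁₀ σ⁰). Otsu's method selects the threshold that maximizes the between-class variance. The Kittler-Illingworth (KI) minimum-error method models each side as a Gaussian and minimizes J(T) = 1 + 2[P₁ ln σ₁ + P₂ ln σ₂] − 2[P₁ ln P₁ + P₂ ln P₂]. The NumPy sketch below applies both to a synthetic two-class sample. The class means, spreads, sample sizes and the 256-bin histogram are illustrative assumptions rather than the paper's data, so it does not reproduce the tabulated thresholds.

```python
import numpy as np


def norm_hist(values, bins=256):
    """Normalized histogram p and bin centres x of a 1-D array of dB values."""
    counts, edges = np.histogram(values, bins=bins)
    return counts / counts.sum(), 0.5 * (edges[:-1] + edges[1:])


def otsu_threshold(values, bins=256):
    """Otsu (1979): maximize between-class variance w0*w1*(m0 - m1)^2."""
    p, x = norm_hist(values, bins)
    w0 = np.cumsum(p)                  # probability of the low-backscatter class
    w1 = 1.0 - w0
    mu = np.cumsum(p * x)              # cumulative first moment up to each bin
    valid = (w0 > 1e-12) & (w1 > 1e-12)
    between = np.full(x.shape, -np.inf)
    between[valid] = (mu[-1] * w0[valid] - mu[valid]) ** 2 / (w0[valid] * w1[valid])
    return x[np.argmax(between)]


def ki_threshold(values, bins=256):
    """Kittler-Illingworth (1986) minimum-error threshold for two Gaussian classes."""
    p, x = norm_hist(values, bins)
    best_t, best_j = x[0], np.inf
    for t in range(1, len(x) - 1):
        lo, hi = slice(0, t + 1), slice(t + 1, None)
        p1, p2 = p[lo].sum(), p[hi].sum()
        if p1 < 1e-12 or p2 < 1e-12:
            continue
        m1, m2 = (p[lo] * x[lo]).sum() / p1, (p[hi] * x[hi]).sum() / p2
        v1 = (p[lo] * (x[lo] - m1) ** 2).sum() / p1
        v2 = (p[hi] * (x[hi] - m2) ** 2).sum() / p2
        if v1 <= 0 or v2 <= 0:
            continue
        # J(T) = 1 + 2[P1 ln s1 + P2 ln s2] - 2[P1 ln P1 + P2 ln P2], using 2 ln s = ln v
        j = 1 + p1 * np.log(v1) + p2 * np.log(v2) - 2 * (p1 * np.log(p1) + p2 * np.log(p2))
        if j < best_j:
            best_t, best_j = x[t], j
    return best_t


# Synthetic dB samples: hypothetical water and non-water backscatter distributions.
rng = np.random.default_rng(0)
db = np.concatenate([rng.normal(-32.0, 2.0, 20_000), rng.normal(-18.0, 3.0, 60_000)])
truth = np.r_[np.ones(20_000, dtype=bool), np.zeros(60_000, dtype=bool)]
t_otsu, t_ki = otsu_threshold(db), ki_threshold(db)
water = db <= t_ki                     # pixels at or below the threshold are water
print(f"Otsu {t_otsu:.2f} dB, KI {t_ki:.2f} dB, KI accuracy {np.mean(water == truth):.3f}")
```

Each polarization (HV, HH) would be thresholded separately, as the rows of the table suggest. The returned value is a bin centre, so its precision is limited by the bin width.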

| k | Energy (s = 0) | Energy (s = 0.005) | Energy (s = 0.01) | Energy (s = 0.02) |
|---|---|---|---|---|
| 0 | −7198 | −7198 | −7198 | −7198 |
| 1 | −7231 | −6838 | −4339 | −1522 |
| 2 | −7233 | −7216 | −6569 | −4236 |
| 3 | −7233 | −7240 | −7167 | −5933 |
| 4 | −7233 | −7246 | −7229 | −6876 |
| 5 | −7233 | −7246 | −7248 | −7146 |
| 6 | −7233 | −7246 | −7250 | −7211 |
| 7 | −7233 | −7247 | −7251 | −7231 |
| 8 | −7233 | −7247 | −7251 | −7243 |
| 9 | −7233 | −7247 | −7251 | −7250 |
| 10 | −7233 | −7247 | −7251 | −7251 |
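
This table tracks an MRF labeling energy over k for several values of s. The sketch below is not the authors' implementation. It assumes a binary water/non-water MRF whose energy is a Gaussian negative log-likelihood data term plus a Potts smoothness term β Σ 1[x_i ≠ x_j] over 4-neighbours. The energy is minimized by simulated annealing with Metropolis acceptance, a geometric cooling schedule, and checkerboard updates, so that no two pixels updated together are neighbours. The parameters `beta`, `t0`, `cooling` and `sweeps` are hypothetical and are not the table's `s`. The run starts from the pixel-wise maximum-likelihood labeling, which equals a single global threshold when the two class spreads are equal.

```python
import numpy as np


def unary(img, means, stds):
    """Gaussian negative log-likelihood of every pixel under each class -> (2, H, W)."""
    m = np.asarray(means, dtype=float)[:, None, None]
    s = np.asarray(stds, dtype=float)[:, None, None]
    return 0.5 * ((img[None] - m) / s) ** 2 + np.log(s)


def disagreements(labels, value):
    """Count 4-neighbours whose label differs from `value` (image borders ignored)."""
    p = np.pad(labels, 1, constant_values=-1)
    neighbours = (p[:-2, 1:-1], p[2:, 1:-1], p[1:-1, :-2], p[1:-1, 2:])
    return sum(((n != value) & (n >= 0)).astype(float) for n in neighbours)


def energy(labels, u, beta):
    """Total energy: data term plus beta times the number of unlike neighbour pairs."""
    data = np.take_along_axis(u, labels[None], axis=0).sum()
    unlike = (labels[1:] != labels[:-1]).sum() + (labels[:, 1:] != labels[:, :-1]).sum()
    return float(data + beta * unlike)


def anneal(img, means, stds, beta=1.0, t0=2.0, cooling=0.9, sweeps=30, seed=0):
    """Simulated annealing (Metropolis, checkerboard updates) on a binary Potts MRF."""
    rng = np.random.default_rng(seed)
    u = unary(img, means, stds)
    labels = np.argmin(u, axis=0)               # pixel-wise maximum-likelihood start
    rows, cols = np.indices(img.shape)
    history = [energy(labels, u, beta)]
    t = t0
    for _ in range(sweeps):
        for parity in (0, 1):                   # sites of one colour are never adjacent
            site = (rows + cols) % 2 == parity
            flipped = 1 - labels
            d_data = (np.take_along_axis(u, flipped[None], axis=0)[0]
                      - np.take_along_axis(u, labels[None], axis=0)[0])
            d_smooth = beta * (disagreements(labels, flipped) - disagreements(labels, labels))
            delta = d_data + d_smooth
            # Metropolis rule: always accept downhill moves, uphill with exp(-delta / t).
            accept = rng.random(img.shape) < np.exp(-np.maximum(delta, 0.0) / t)
            labels = np.where(site & accept, flipped, labels)
        t *= cooling                            # geometric cooling schedule
        history.append(energy(labels, u, beta))
    return labels, history


# Hypothetical scene: a water block (label 0) inside non-water (label 1), noisy dB values.
rng = np.random.default_rng(1)
truth = np.ones((64, 64), dtype=int)
truth[16:48, 20:44] = 0
img = np.where(truth == 0, -30.0, -18.0) + rng.normal(0.0, 4.0, truth.shape)
labels, history = anneal(img, means=(-30.0, -18.0), stds=(4.0, 4.0))
initial = np.argmin(unary(img, (-30.0, -18.0), (4.0, 4.0)), axis=0)
print(f"energy {history[0]:.1f} -> {history[-1]:.1f}")
print(f"pixel accuracy {np.mean(initial == truth):.3f} -> {np.mean(labels == truth):.3f}")
```

At a high initial temperature the energy can rise before it falls, because uphill moves are accepted more often. This is the qualitative pattern visible in the columns with larger s. As the temperature decreases, the update approaches a greedy, ICM-like descent.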

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, J.; Huang, B.; Wang, F. Extraction and Classification of Flood-Affected Areas Based on MRF and Deep Learning. Water 2023, 15, 1288. https://doi.org/10.3390/w15071288


Chicago/Turabian Style: Wang, Jie, Bensheng Huang, and Fuming Wang. 2023. "Extraction and Classification of Flood-Affected Areas Based on MRF and Deep Learning" Water 15, no. 7: 1288. https://doi.org/10.3390/w15071288