Comparison of Machine Learning Algorithms for Discharge Prediction of Multipurpose Dam

Abstract

1. Introduction

2. Materials and Methods

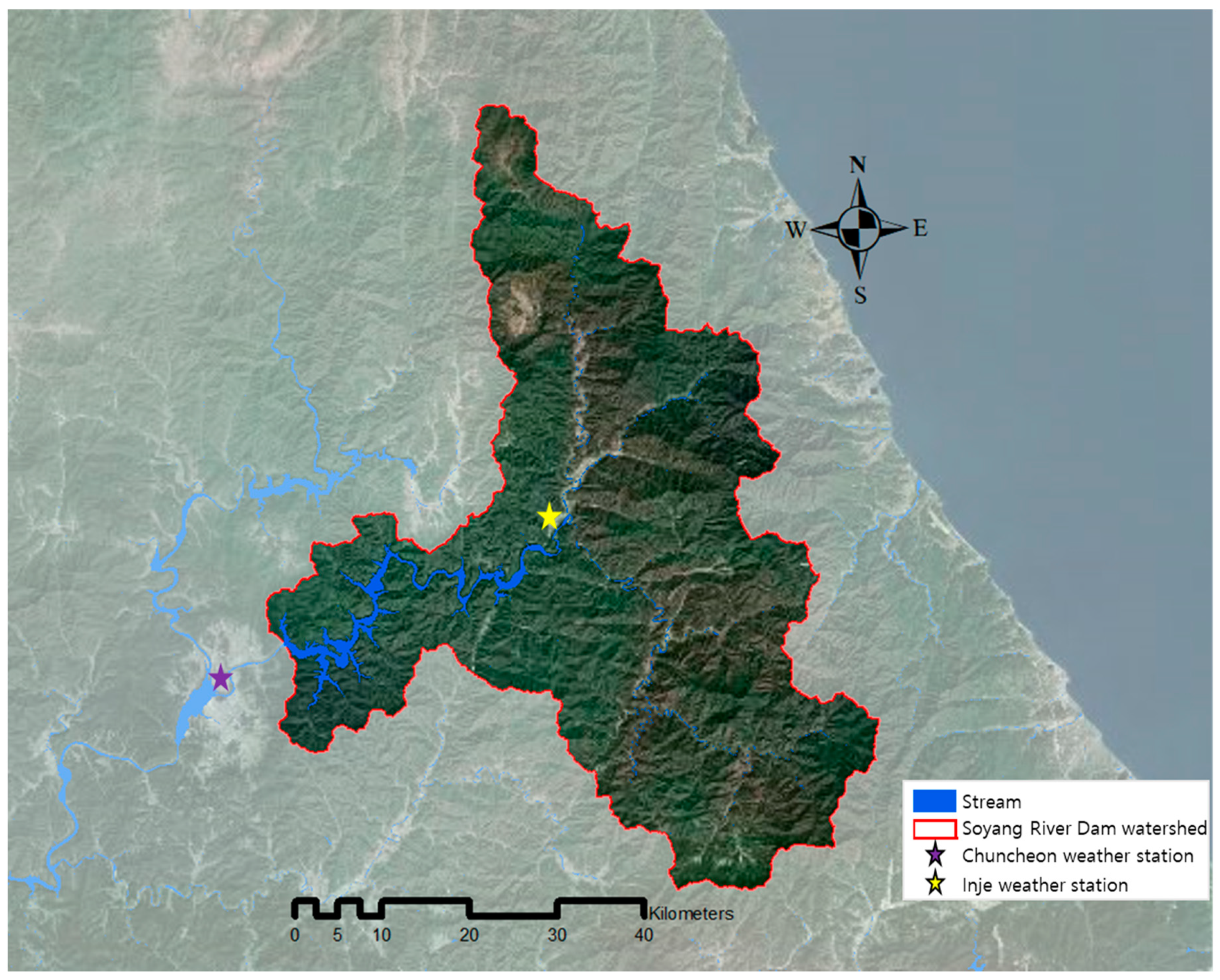

2.1. Study Area

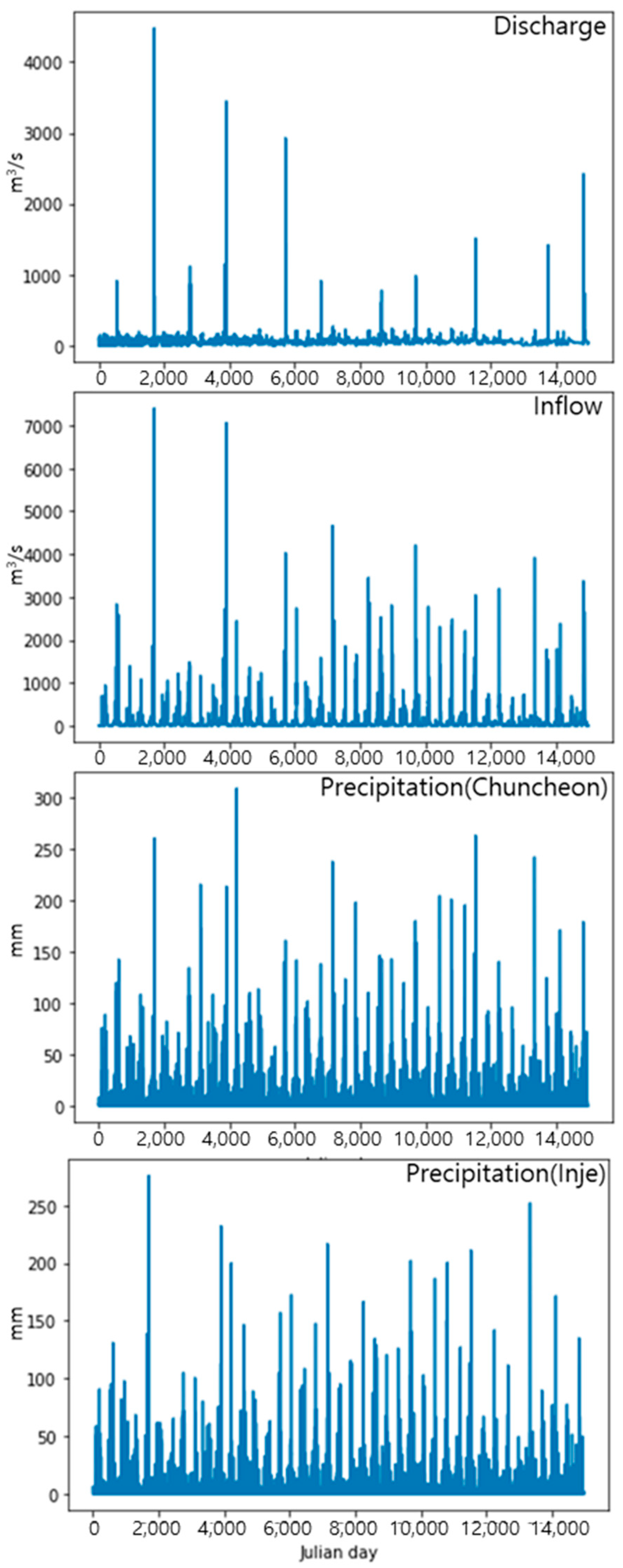

2.2. Data Descriptions

2.3. Machine Learning Algorithms

2.3.1. Decision Tree

2.3.2. Multilayer Perceptron

2.3.3. Random Forest

2.3.4. Gradient Boosting

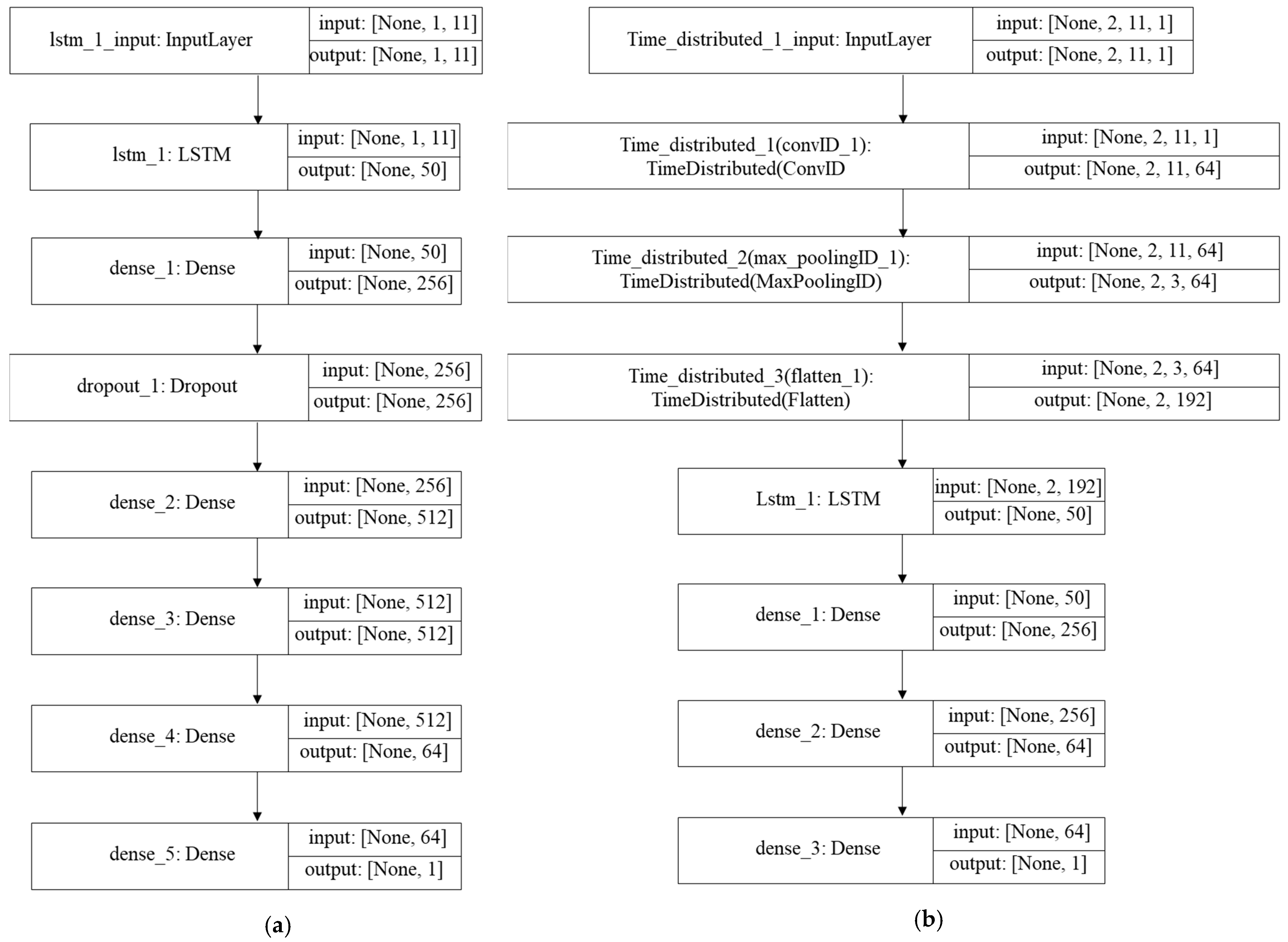

2.3.5. LSTM

2.3.6. CNN-LSTM

2.4. Model Training and Testing

3. Results and Discussion

3.1. Heatmap Analysis

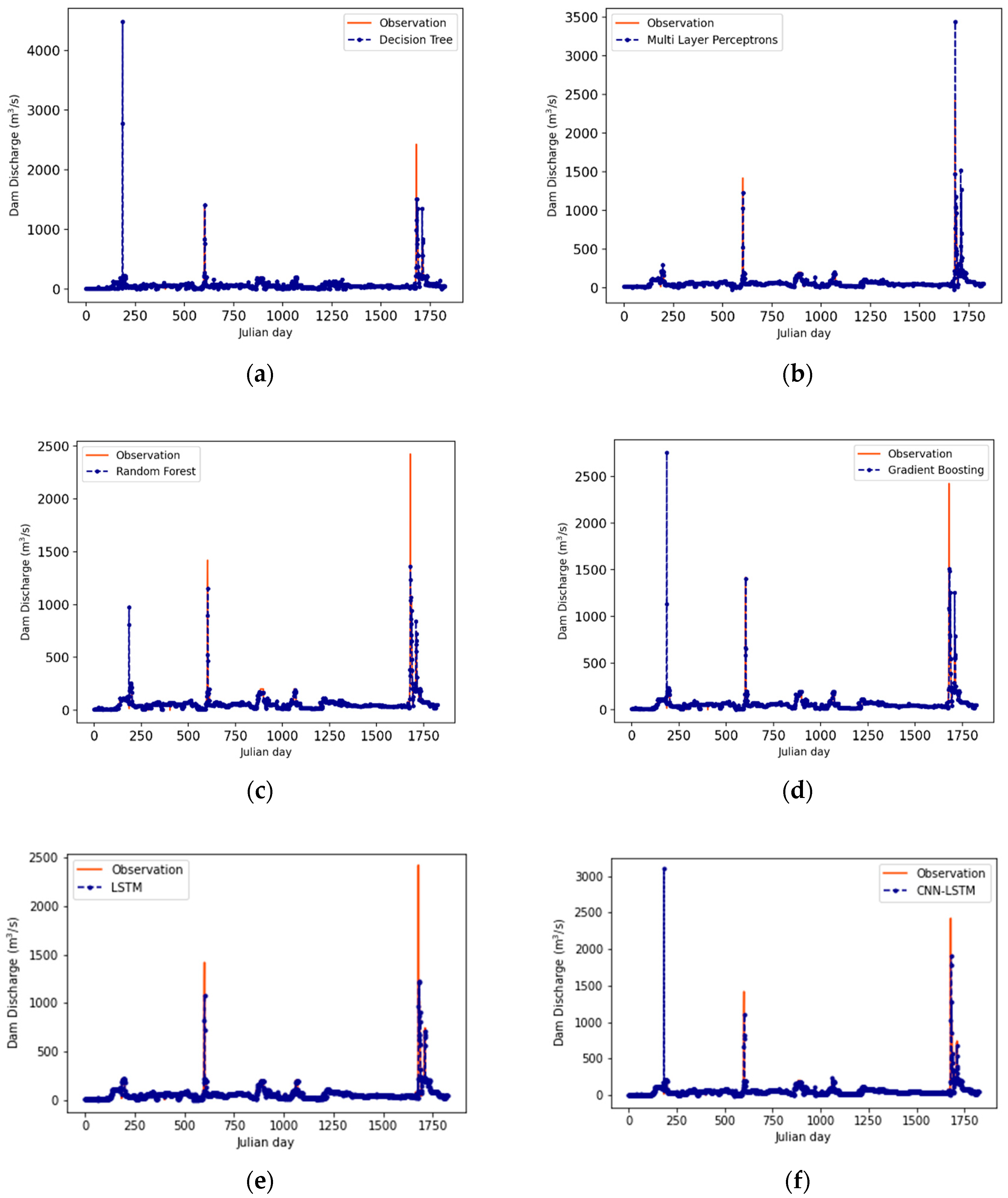

3.2. Simulation Results Using Machine Learning Algorithms

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Input Variable Group | Input Variables | Output Variable |
|---|---|---|
| Precipitation and dam data of 2 days ago | inflow(d−2), discharge(d−2), precip(CC)(d−2), precip(IJ)(d−2) | Discharge of the day (d) |
| Precipitation and dam data of 1 day ago | inflow(d−1), discharge(d−1), precip(CC)(d−1), precip(IJ)(d−1) | |
| Precipitation and dam data of the day (forecasted) | precip(CC)(d), precip(IJ)(d) | |
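The lag structure in the input-variable table can be expressed as a small feature builder that turns daily series into one 10-feature row per target day. This is a minimal sketch, assuming four equal-length daily series indexed by day; the function name `build_samples` and its argument names are hypothetical, and the observed precipitation on day d stands in for the forecasted value the table refers to.

```python
from typing import List, Sequence, Tuple


def build_samples(
    inflow: Sequence[float],
    discharge: Sequence[float],
    precip_cc: Sequence[float],
    precip_ij: Sequence[float],
) -> Tuple[List[List[float]], List[float]]:
    """Build (X, y) pairs: features from days d-2, d-1 and d; target is discharge(d)."""
    n = len(discharge)
    if not (len(inflow) == len(precip_cc) == len(precip_ij) == n):
        raise ValueError("all series must have the same length")
    X: List[List[float]] = []
    y: List[float] = []
    for d in range(2, n):  # the first two days lack a full 2-day history
        row: List[float] = []
        for lag in (2, 1):
            t = d - lag
            # dam data and precipitation at both stations for day d-lag
            row += [inflow[t], discharge[t], precip_cc[t], precip_ij[t]]
        # Assumption: observed precipitation on day d replaces the forecast value
        row += [precip_cc[d], precip_ij[d]]
        X.append(row)
        y.append(discharge[d])
    return X, y
```

Ordering the features oldest-first keeps each row aligned with the table, so the same rows could later be reshaped into short sequences for a recurrent model.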

| Machine Learning Models | Module | Function | Notation |
|---|---|---|---|
| Decision tree | sklearn.tree | DecisionTreeRegressor | DT |
| Multilayer perceptron | sklearn.neural_network | MLPRegressor | MLP |
| Random forest | sklearn.ensemble | RandomForestRegressor | RF |
| Gradient boosting | sklearn.ensemble | GradientBoostingRegressor | GB |
| RNN-LSTM | keras.models.Sequential | LSTM, Dense, Dropout | LSTM |
| CNN-LSTM | keras.models.Sequential | LSTM, Dense, Dropout, Conv1D, MaxPooling1D | CNN-LSTM |

| Decision Tree Regressor: Hyperparameter | Value | MLP Regressor: Hyperparameter | Value |
|---|---|---|---|
| criterion | Entropy | hidden_layer_sizes | (50, 50, 50) |
| min_samples_leaf | 1 | solver | adam |
| min_impurity_decrease | 0 | learning_rate_init | 0.001 |
| splitter | best | max_iter | 200 |
| min_samples_split | 2 | momentum | 0.9 |
| random_state | 0 | beta_1 | 0.9 |
| | | epsilon | 1 × 10⁻⁸ |
| | | activation | relu |

| Random Forest Regressor: Hyperparameter | Value | Gradient Boosting Regressor: Hyperparameter | Value |
|---|---|---|---|
| n_estimators | 52 | loss | ls |
| min_samples_split | 2 | n_estimators | 100 |
| min_weight_fraction_leaf | 0 | criterion | friedman_mse |
| min_impurity_decrease | 0 | min_samples_leaf | 1 |
| verbose | 0 | max_depth | 10 |
| criterion | mse | alpha | 0.9 |
| min_samples_leaf | 1 | presort | auto |
| max_features | auto | tol | 1 × 10⁻⁴ |
| bootstrap | True | learning_rate | 0.1 |
| | | subsample | 1.0 |
| | | min_samples_split | 2 |
| | | validation_fraction | 0.1 |

| Method | NSE | RMSE (m³/s) | MAE (m³/s) | R | R² |
|---|---|---|---|---|---|
| Decision Tree | −0.609 | 137.578 | 18.103 | 0.530 | 0.281 |
| MLP | 0.480 | 78.202 | 14.248 | 0.784 | 0.614 |
| Random Forest | 0.765 | 52.558 | 11.096 | 0.875 | 0.765 |
| Gradient Boosting | 0.317 | 89.601 | 13.005 | 0.700 | 0.490 |
| LSTM | 0.796 | 48.996 | 10.024 | 0.898 | 0.807 |
| CNN-LSTM | 0.221 | 95.730 | 13.372 | 0.655 | 0.428 |
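The five metrics in the results table can be computed from paired observed and simulated discharges. This is a minimal sketch; the function names are hypothetical. The reported R² values agree, to within rounding, with the square of R (for example 0.875² ≈ 0.766 for Random Forest), so the sketch computes R² that way. That is a different quantity from scikit-learn's `r2_score`, which uses the same formula as NSE and, like NSE, can be negative (as for the decision tree).

```python
import math
from typing import Sequence


def _mean(values: Sequence[float]) -> float:
    return sum(values) / len(values)


def nse(obs: Sequence[float], sim: Sequence[float]) -> float:
    """Nash-Sutcliffe efficiency: 1 - SSE / sum of squared deviations of obs."""
    mean_obs = _mean(obs)
    sse = sum((o - s) ** 2 for o, s in zip(obs, sim))
    sst = sum((o - mean_obs) ** 2 for o in obs)
    return 1.0 - sse / sst


def rmse(obs: Sequence[float], sim: Sequence[float]) -> float:
    """Root-mean-square error, in the units of the data (m³/s here)."""
    return math.sqrt(sum((o - s) ** 2 for o, s in zip(obs, sim)) / len(obs))


def mae(obs: Sequence[float], sim: Sequence[float]) -> float:
    """Mean absolute error, in the units of the data."""
    return sum(abs(o - s) for o, s in zip(obs, sim)) / len(obs)


def pearson_r(obs: Sequence[float], sim: Sequence[float]) -> float:
    """Pearson correlation coefficient R between observed and simulated values."""
    mo, ms = _mean(obs), _mean(sim)
    cov = sum((o - mo) * (s - ms) for o, s in zip(obs, sim))
    so = math.sqrt(sum((o - mo) ** 2 for o in obs))
    ss = math.sqrt(sum((s - ms) ** 2 for s in sim))
    return cov / (so * ss)


def r_squared(obs: Sequence[float], sim: Sequence[float]) -> float:
    """Coefficient of determination taken as the square of Pearson's R."""
    return pearson_r(obs, sim) ** 2
```

For example, with `obs = [1, 2, 3]` and `sim = [1, 3, 2]`, NSE is 0 while R is 0.5 and R² is 0.25, which shows how the two "goodness of fit" columns can diverge.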

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hong, J.; Lee, S.; Lee, G.; Yang, D.; Bae, J.H.; Kim, J.; Kim, K.; Lim, K.J. Comparison of Machine Learning Algorithms for Discharge Prediction of Multipurpose Dam. Water 2021, 13, 3369. https://doi.org/10.3390/w13233369
