A Model Tree Generator (MTG) Framework for Simulating Hydrologic Systems: Application to Reservoir Routing

Abstract

1. Introduction

2. Materials and Methods

2.1. Background and Scope

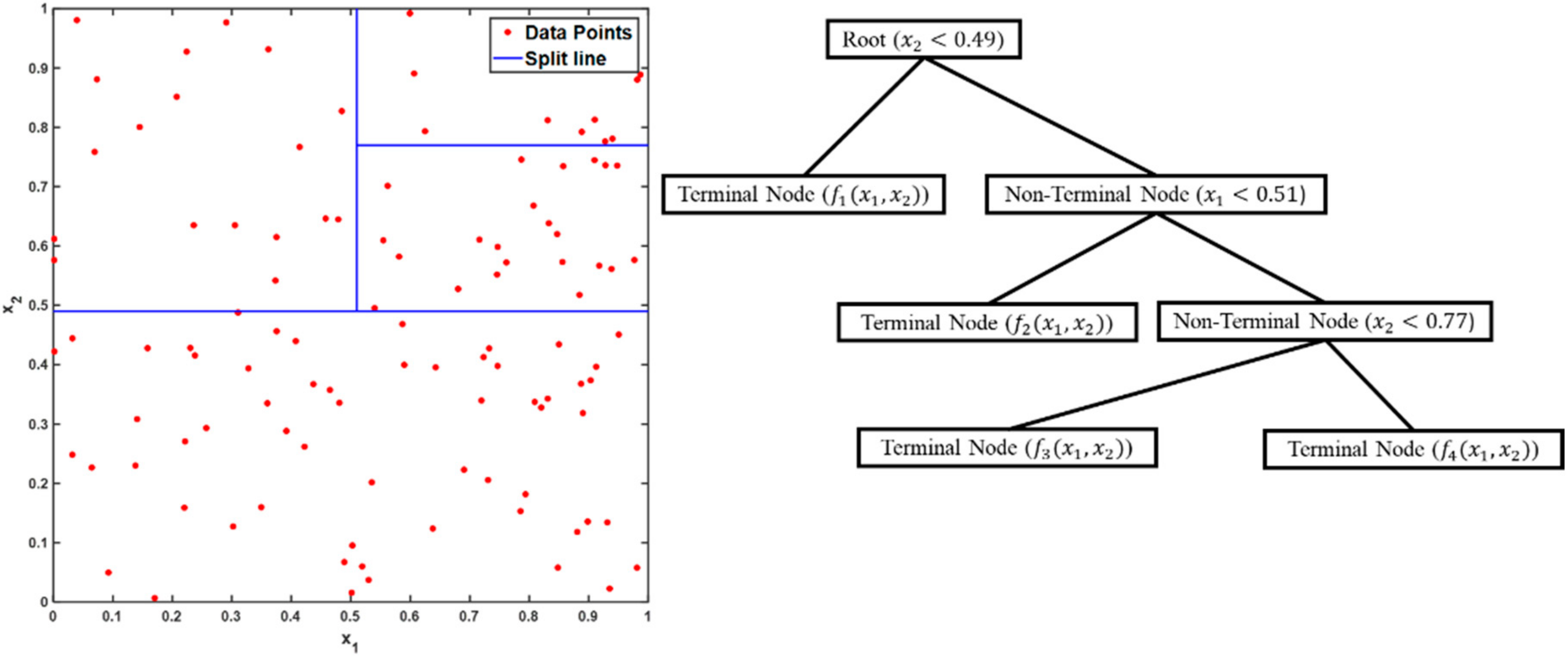

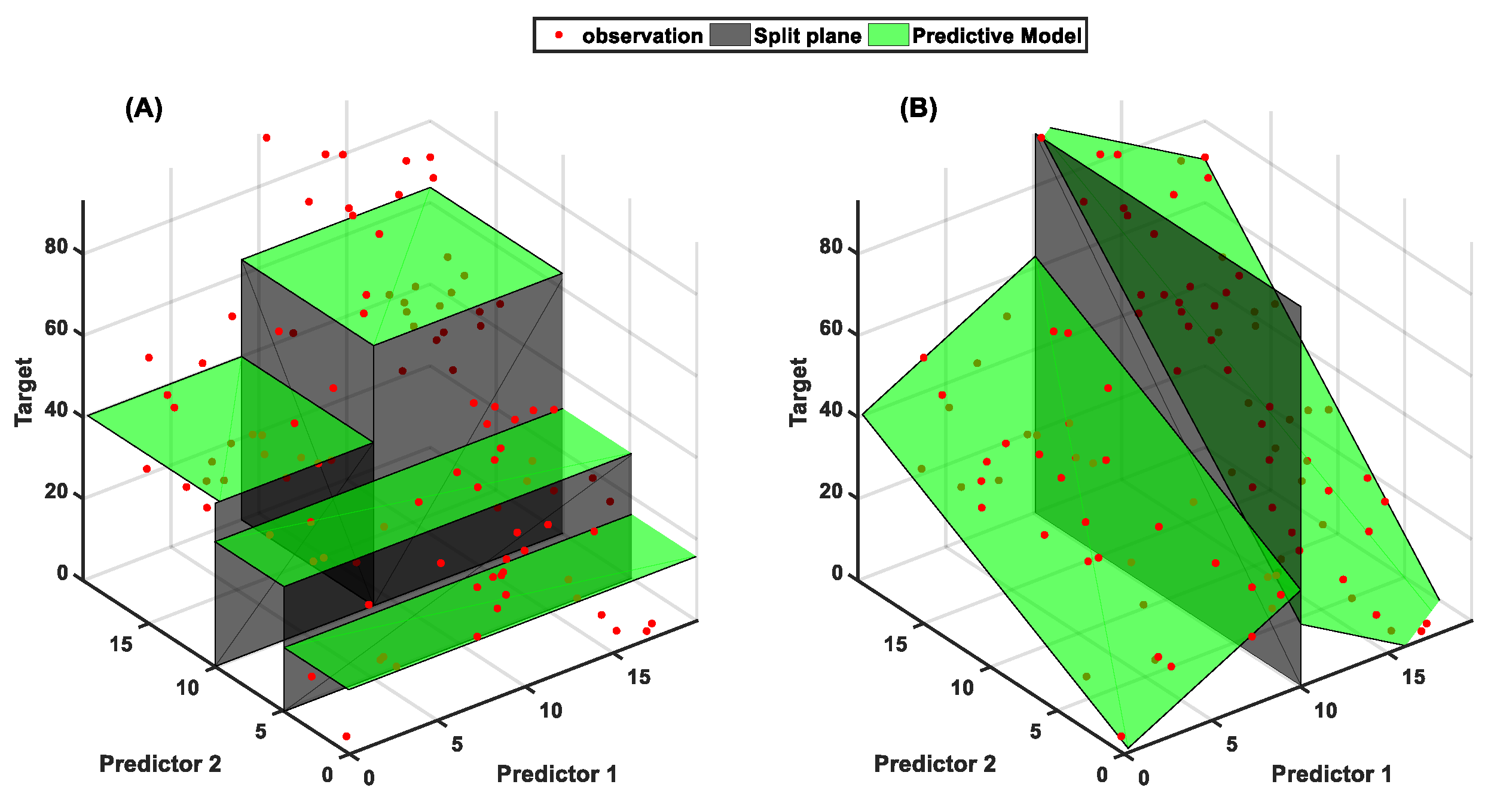

2.2. Model Tree Generator Scheme

3. Case Study

3.1. Machine Learning Test Cases

3.2. Settings of the MTG Framework for Machine Learning Datasets

3.3. Reservoir Routing Simulation

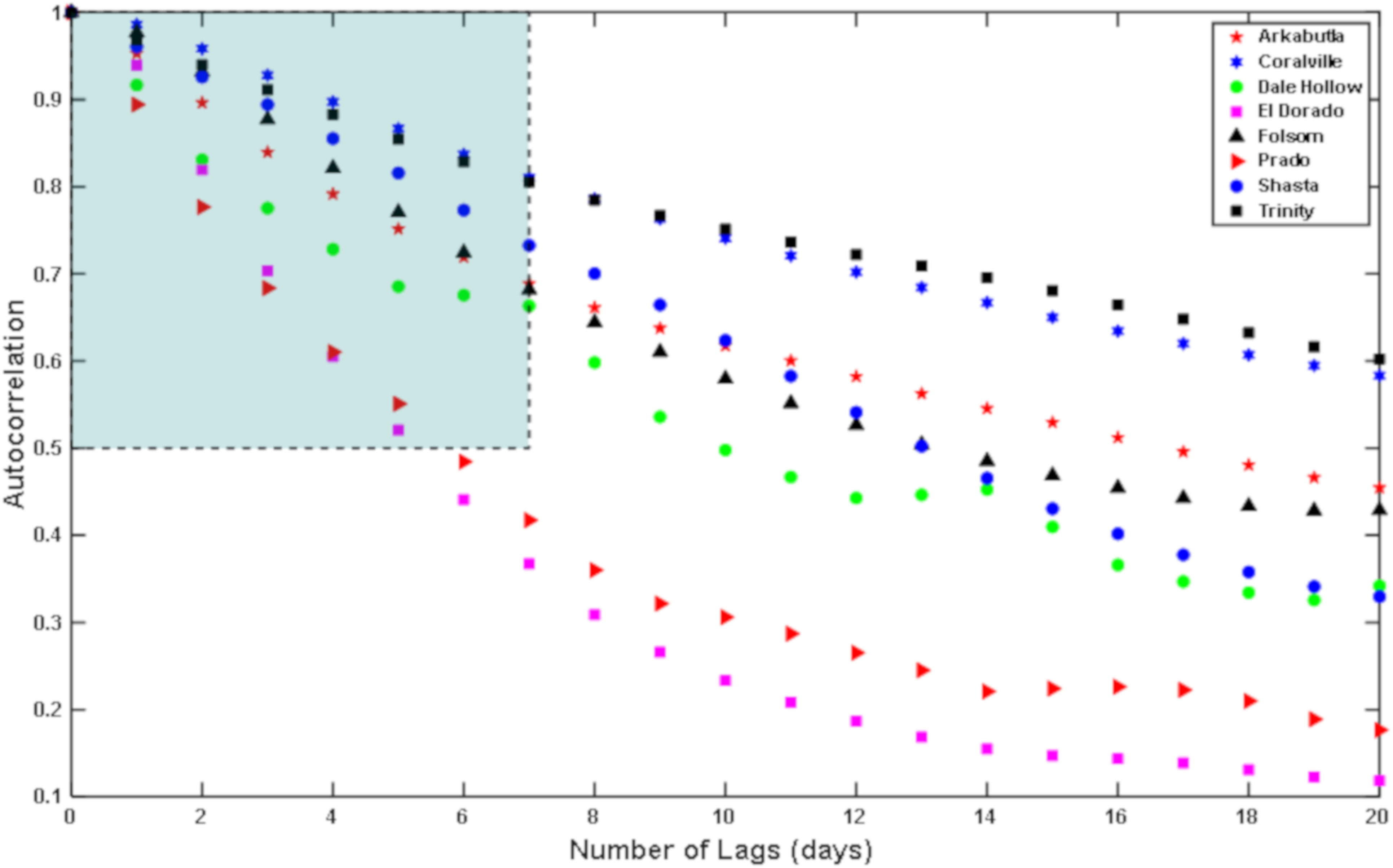

3.4. Settings of the MTG Framework for Reservoir Routing

4. Results

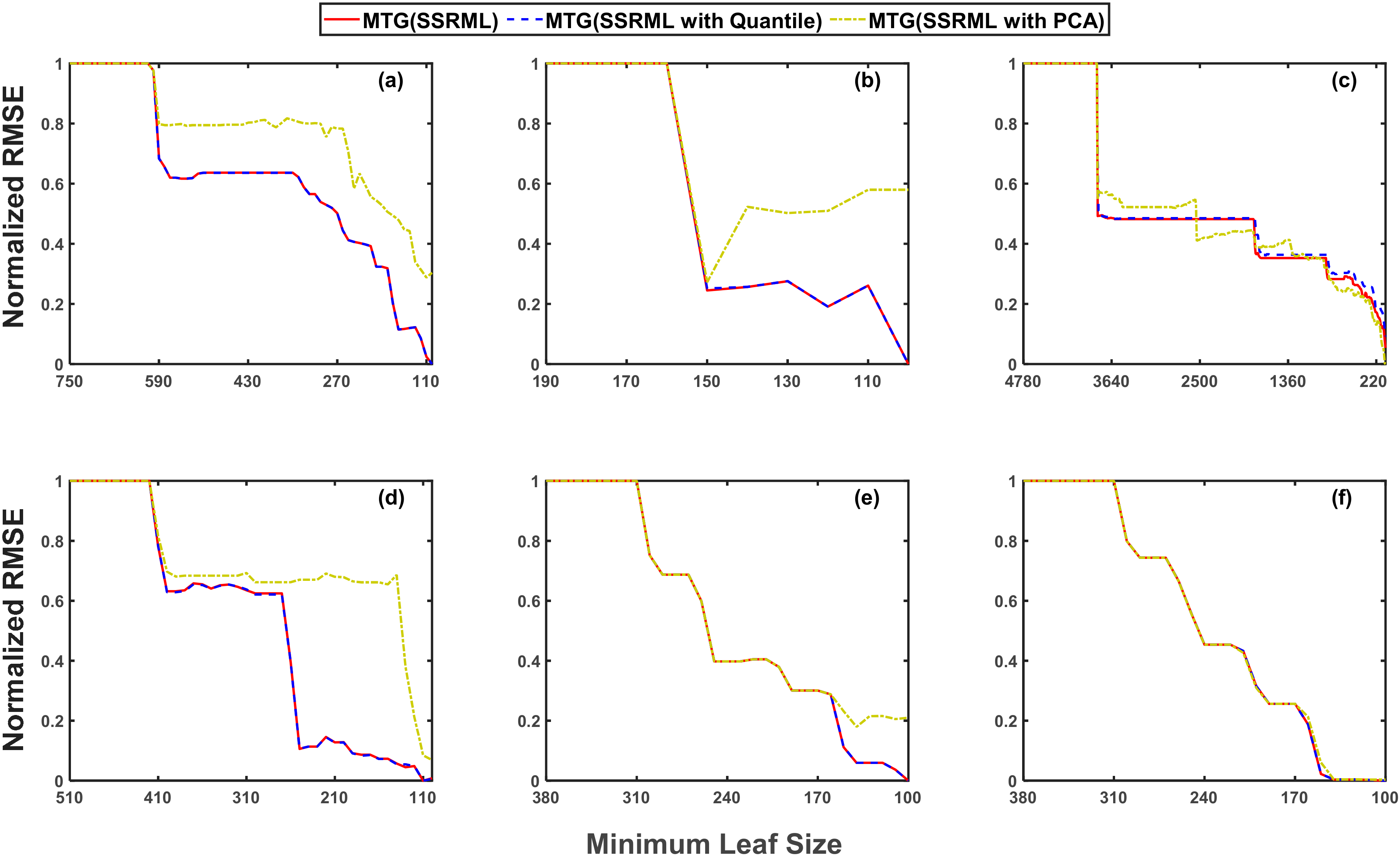

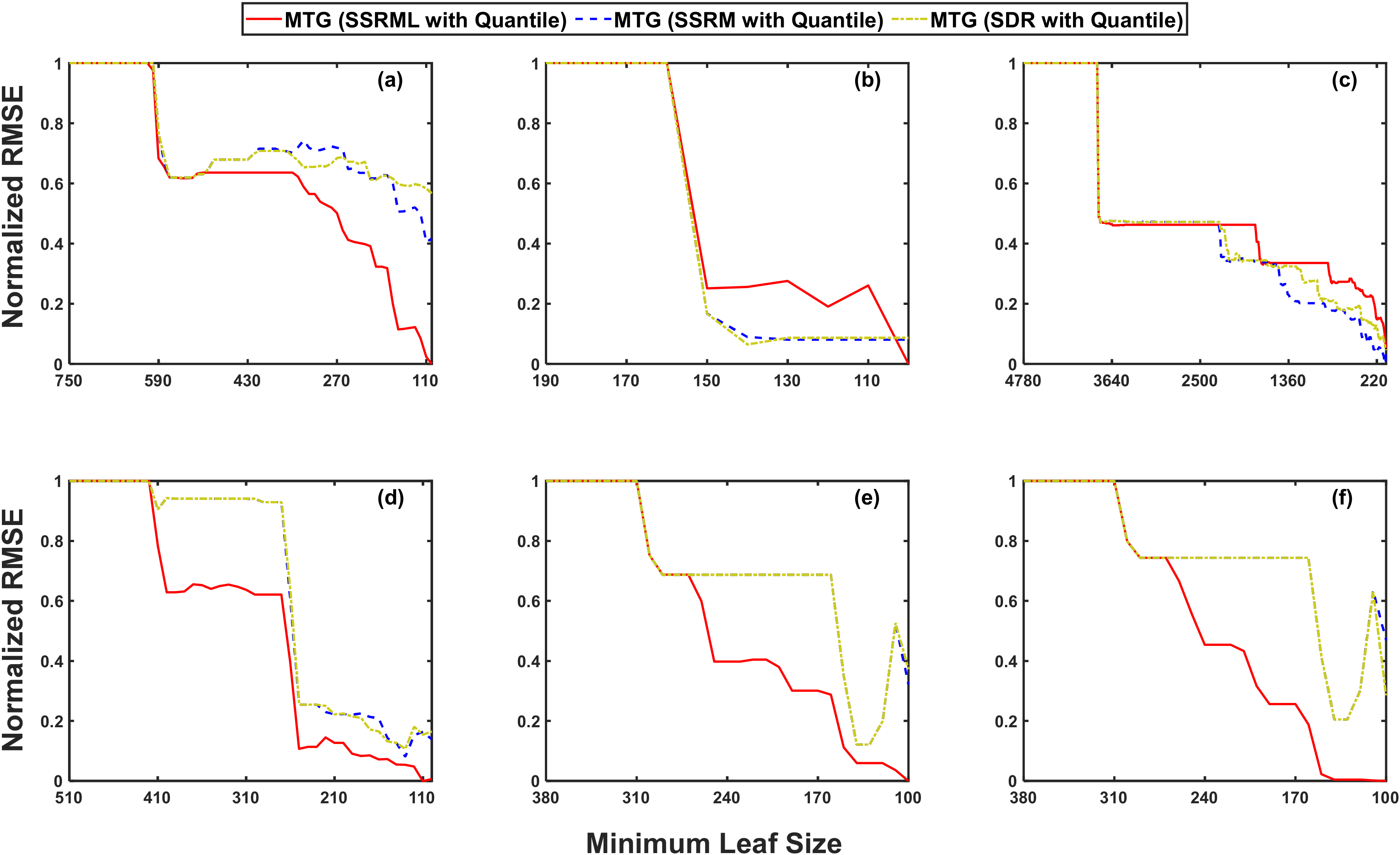

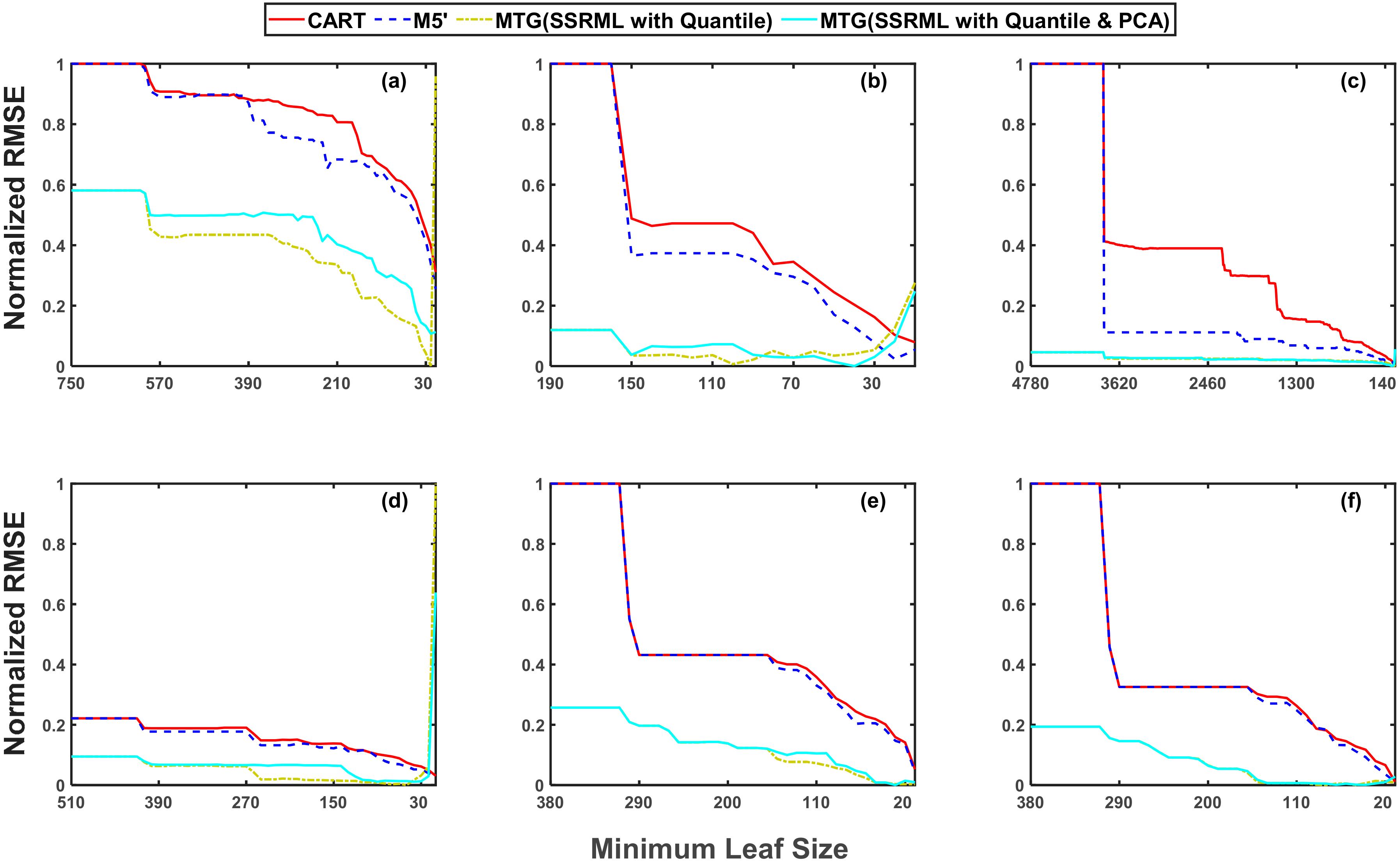

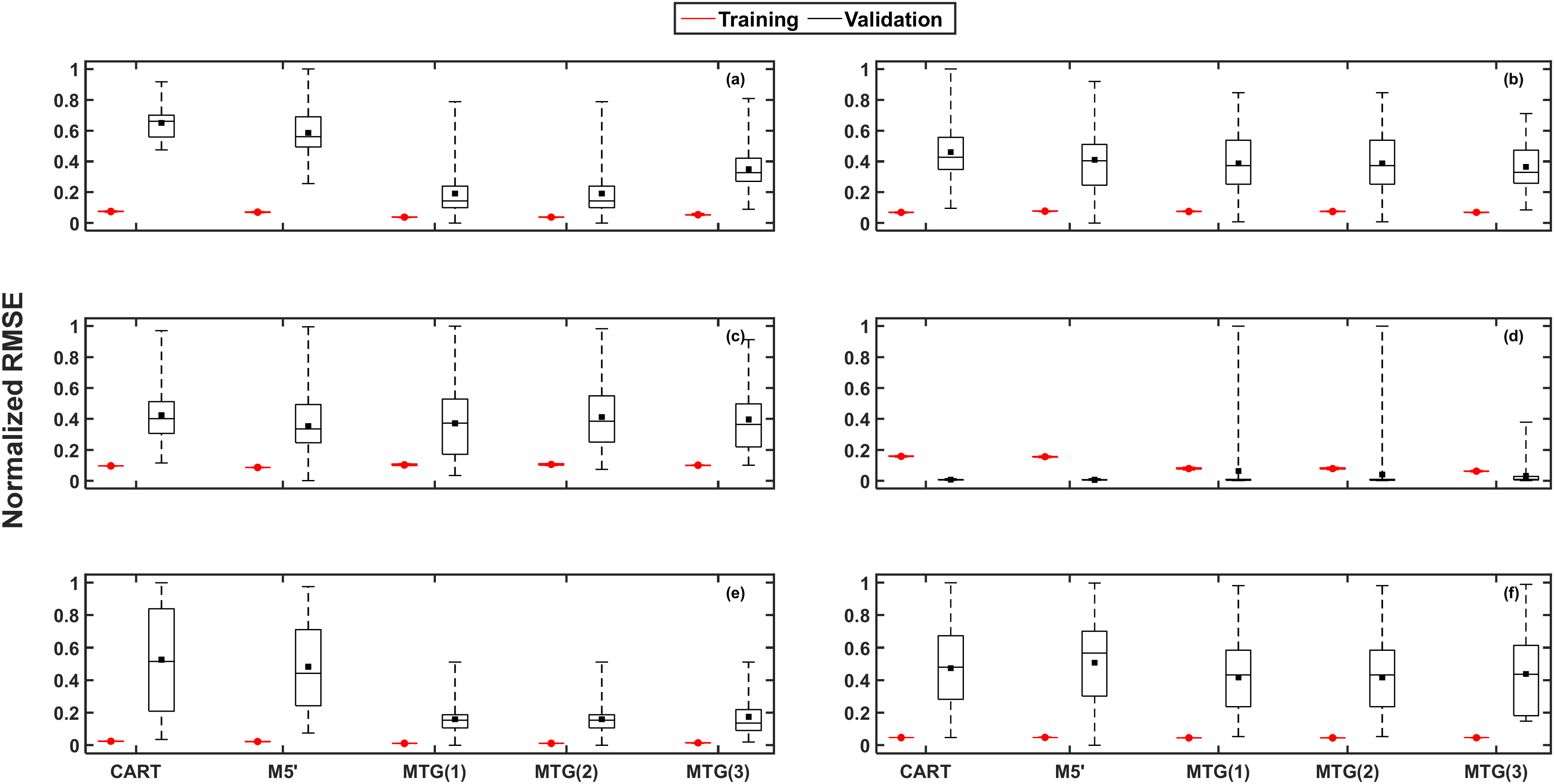

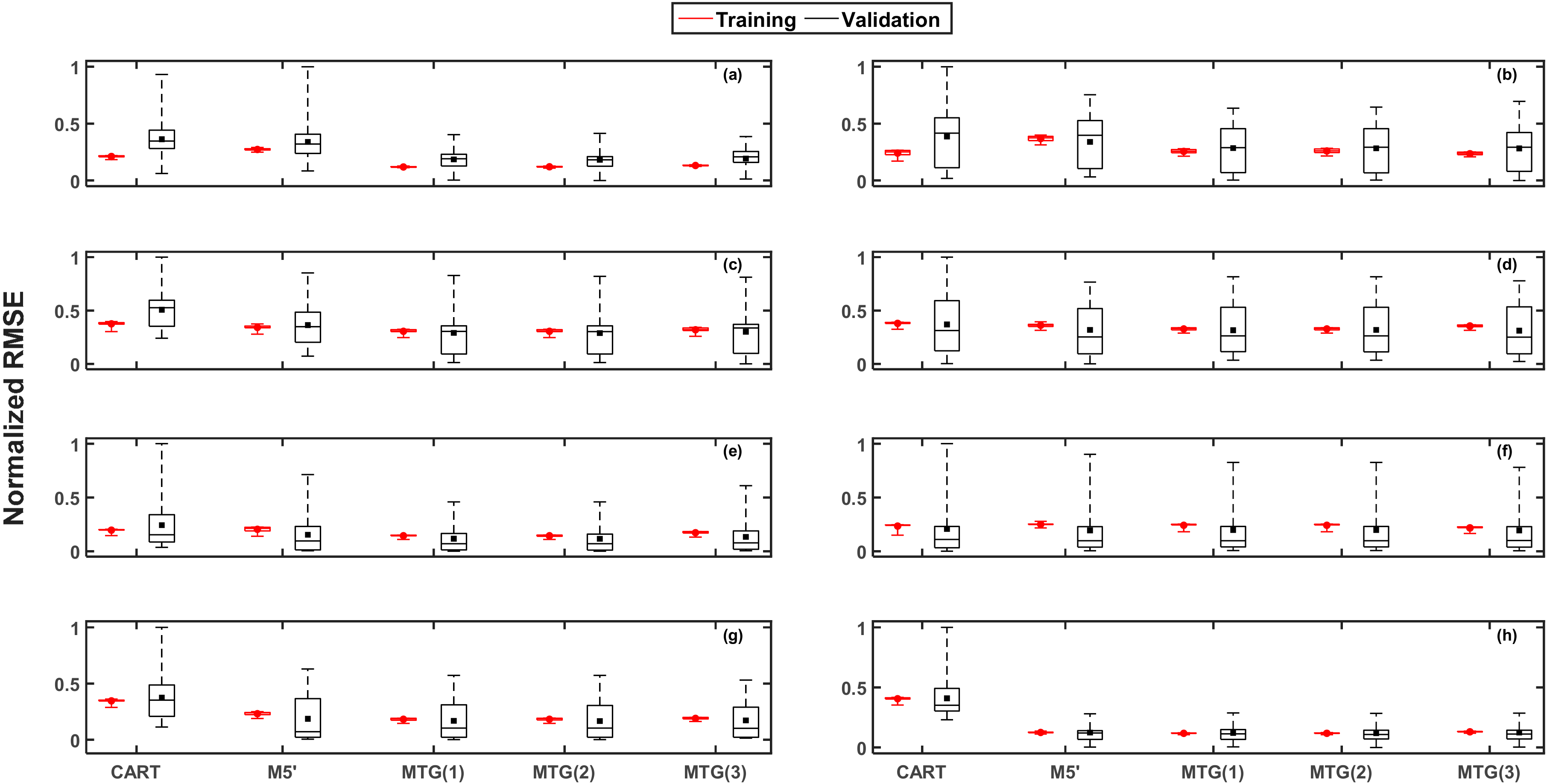

4.1. Machine Learning Datasets

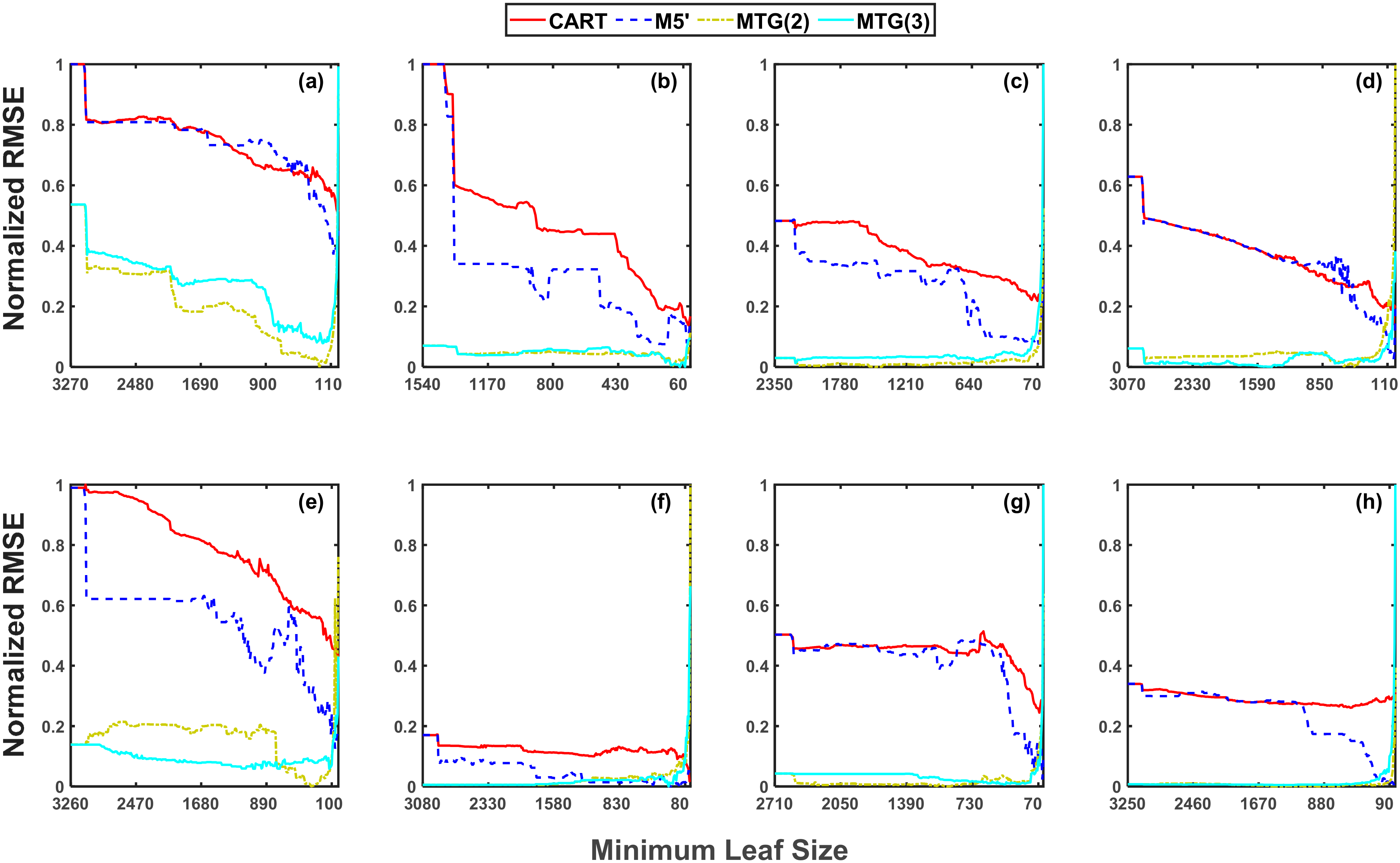

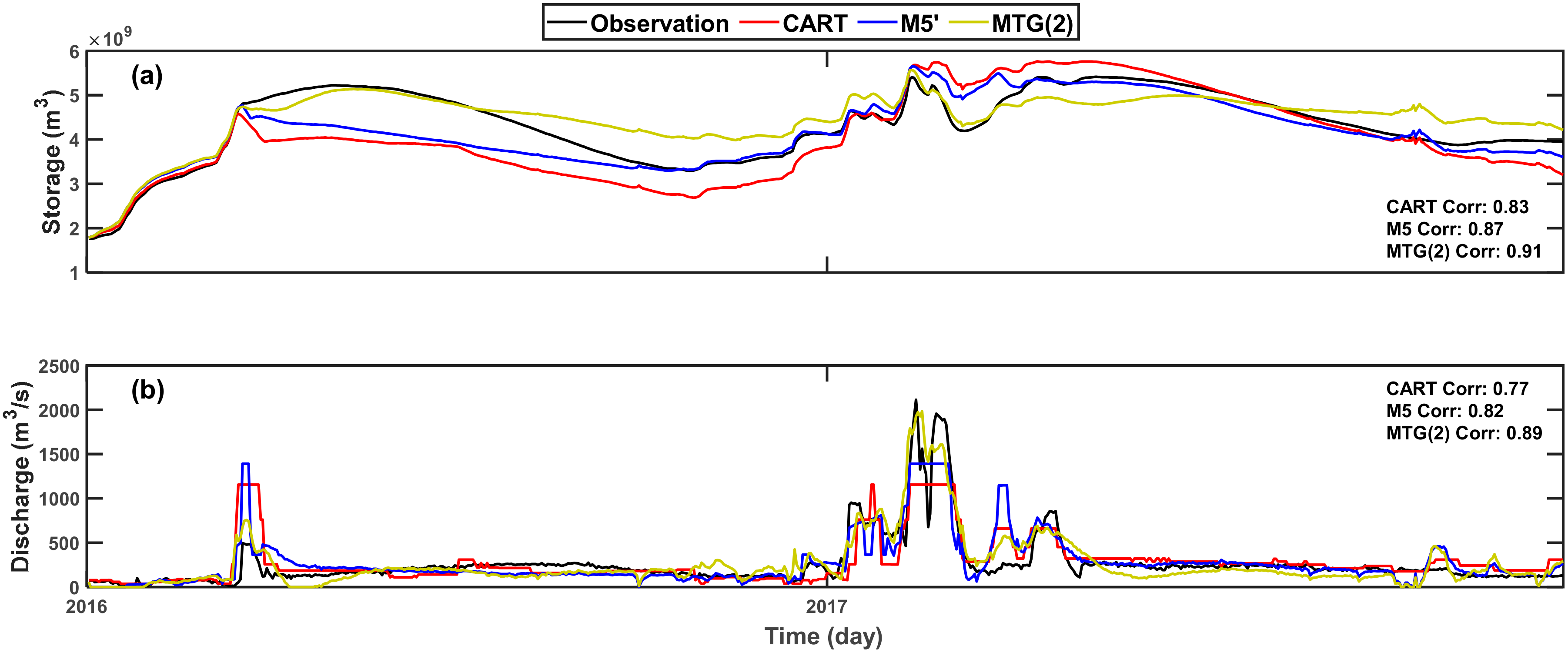

4.2. Reservoir Routing

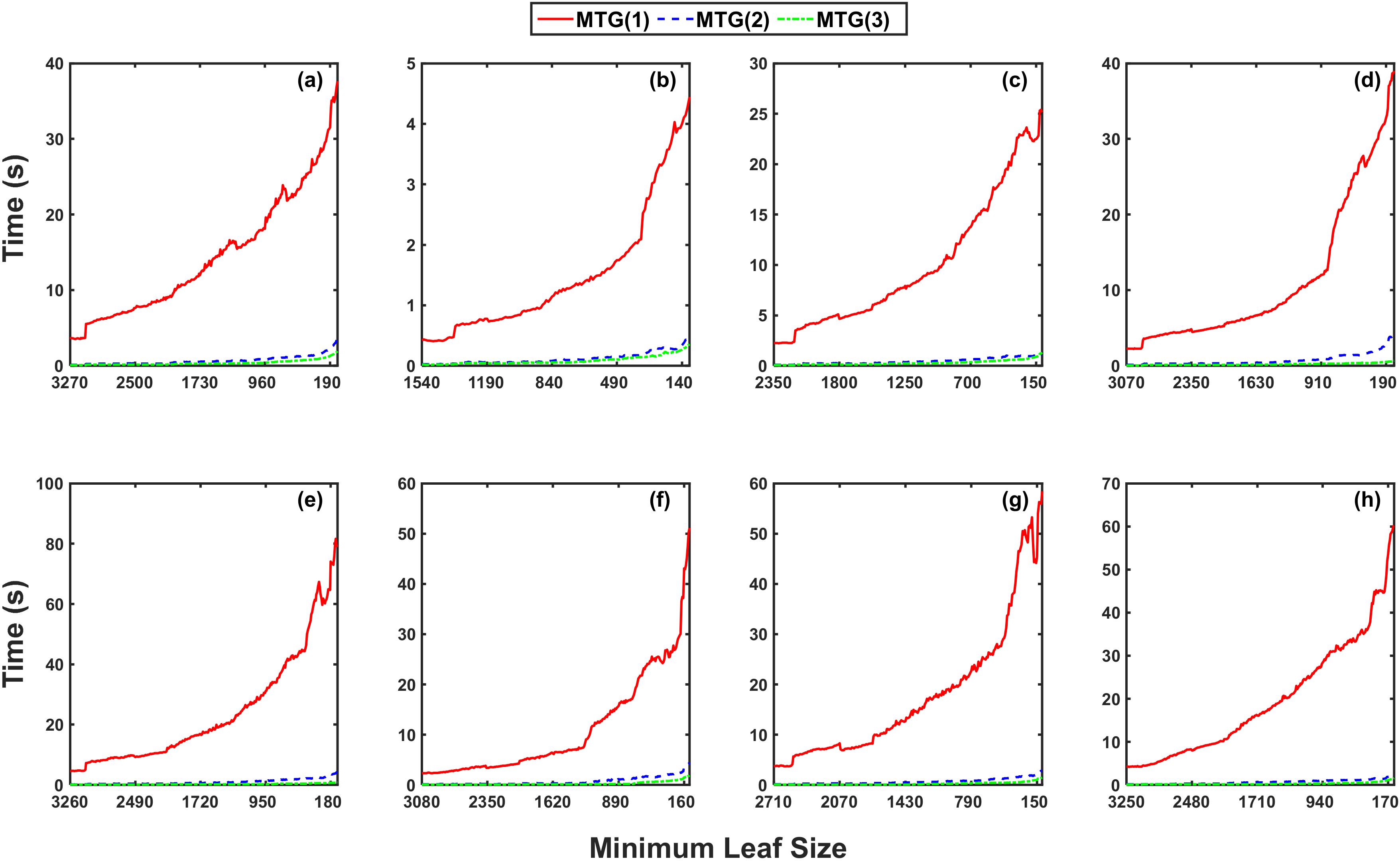

4.3. Induction Speed of the MTG Framework

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Case | Dataset Name | No. of Attributes | No. of Instances |
|---|---|---|---|
| a | Airfoil self-noise | 5 | 1503 |
| b | Auto MPG | 7 | 398 |
| c | Combined cycle power plant | 4 | 9568 |
| d | Concrete compressive strength | 8 | 1030 |
| e | Energy efficiency (heating load) | 8 | 768 |
| f | Energy efficiency (cooling load) | 8 | 768 |

| Case | Dataset Name | CART | M5′ | MTG (SSRML with Quantile) | MTG (SSRML with Quantile and PCA) |
|---|---|---|---|---|---|
| a | Airfoil self-noise | 10 | 10 | 20 | 20 |
| b | Auto MPG | 10 | 20 | 100 | 40 |
| c | Combined cycle power plant | 20 | 10 | 20 | 50 |
| d | Concrete compressive strength | 10 | 10 | 50 | 90 |
| e | Energy efficiency (heating load) | 10 | 10 | 20 | 30 |
| f | Energy efficiency (cooling load) | 10 | 10 | 50 | 70 |

| Setting | SC | Quantile Sampling | PCA |
|---|---|---|---|
| MTG(1) | SSRML | | |
| MTG(2) | SSRML | × | |
| MTG(3) | SSRML | × | × |

| Case | Reservoir | State | City | Services | Study Period | Hydrologic Variables |
|---|---|---|---|---|---|---|
| a | Arkabutla | MS | Tunica | Flood Control, Recreation | 1999–2016 | Inflow, Pool Elevation, Discharge |
| b | Coralville | IA | Iowa City | Flood Control, Recreation | 2005–2013 | Inflow, Discharge |
| c | Dale Hollow | TN | Celina | Hydropower, Flood Control, Recreation | 2000–2012 | Inflow, Pool Elevation, Discharge |
| d | El Dorado | KS | El Dorado | Flood Control, Water Supply, Recreation | 2000–2016 | Inflow, Storage Volume, Discharge |
| e | Folsom | CA | Folsom | Hydropower, Flood Control, Water Supply, Recreation | 2001–2018 | Inflow, Storage Volume, Discharge |
| f | Prado | CA | Corona | Flood Control | 2000–2016 | Inflow, Storage Volume, Discharge |
| g | Shasta | CA | Shasta Lake | Hydropower, Flood Control, Water Supply, Recreation | 2004–2018 | Inflow, Storage Volume, Discharge |
| h | Trinity | CA | Lewiston | Hydropower, Flood Control, Water Supply, Recreation | 2000–2018 | Inflow, Storage Volume, Discharge |

| Case | Reservoir | CART | M5′ | MTG(2) | MTG(3) |
|---|---|---|---|---|---|
| a | Arkabutla | 30 | 80 | 250 | 210 |
| b | Coralville | 10 | 160 | 130 | 80 |
| c | Dale Hollow | 70 | 60 | 1480 | 2170 |
| d | El Dorado | 40 | 40 | 490 | 1430 |
| e | Folsom | 20 | 60 | 350 | 1030 |
| f | Prado | 20 | 250 | 1660 | 270 |
| g | Shasta | 60 | 30 | 990 | 400 |
| h | Trinity | 560 | 40 | 920 | 1470 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Rahnamay Naeini, M.; Yang, T.; Tavakoly, A.; Analui, B.; AghaKouchak, A.; Hsu, K.-l.; Sorooshian, S. A Model Tree Generator (MTG) Framework for Simulating Hydrologic Systems: Application to Reservoir Routing. Water 2020, 12, 2373. https://doi.org/10.3390/w12092373
