Multi-Model Approaches for Improving Seasonal Ensemble Streamflow Prediction Scheme with Various Statistical Post-Processing Techniques in the Canadian Prairie Region

Abstract

1. Introduction

2. Materials and Methods

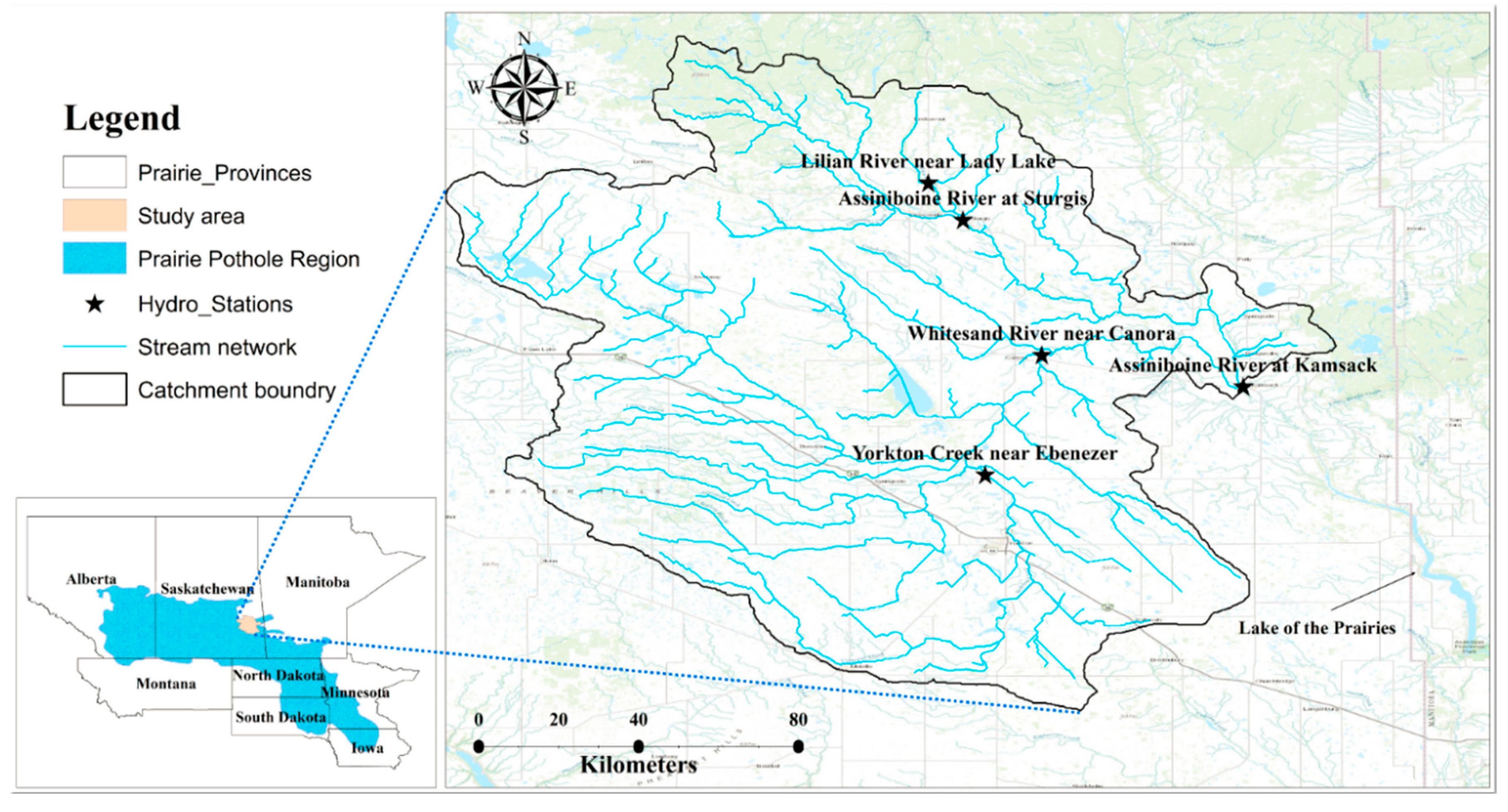

2.1. Study Area

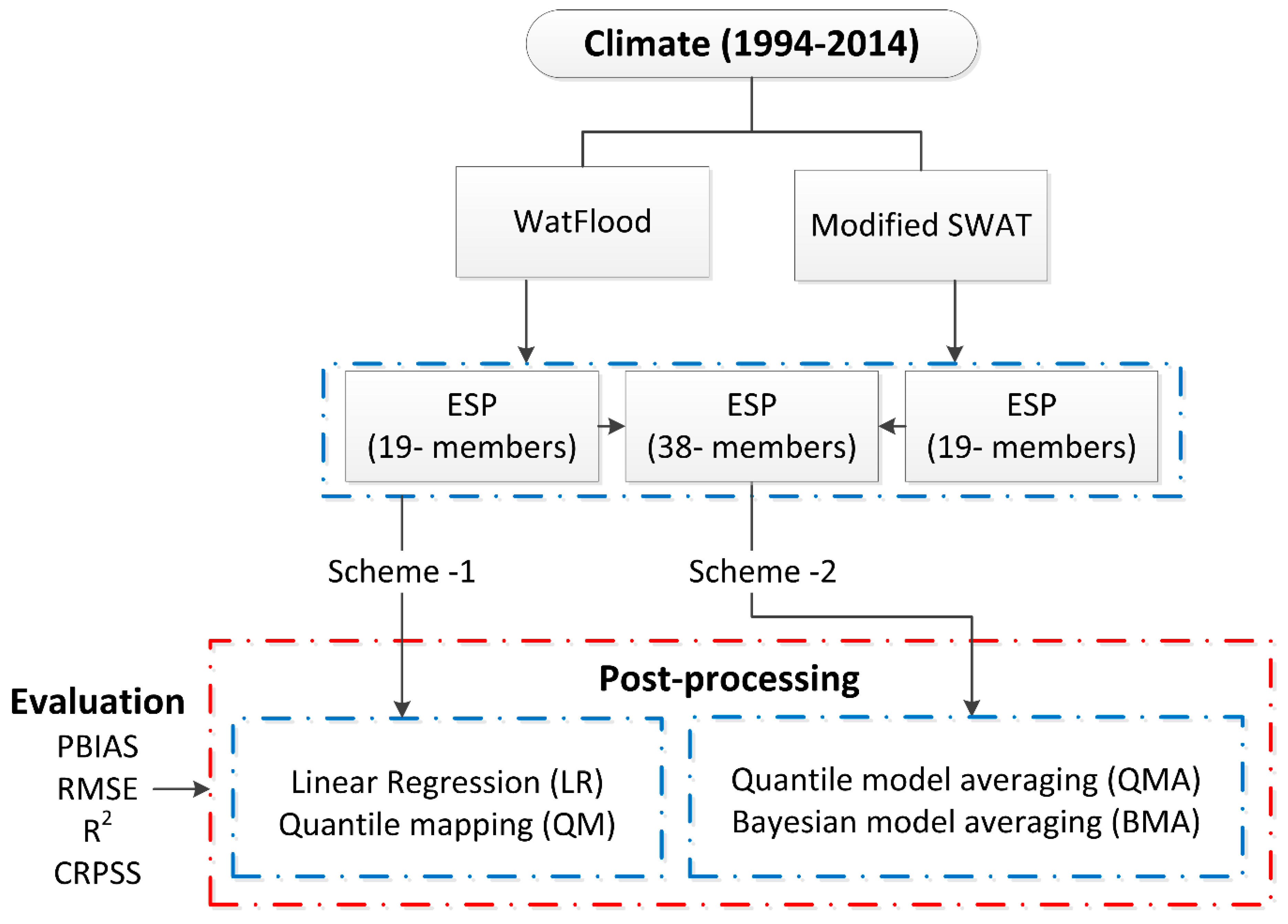

2.2. Hydrological Model

2.2.1. WATFLOOD Model

2.2.2. SWAT Model

2.3. Statistical Post-Processing

2.4. Forecast Generation

2.5. Performance Metrics

3. Results and Discussion

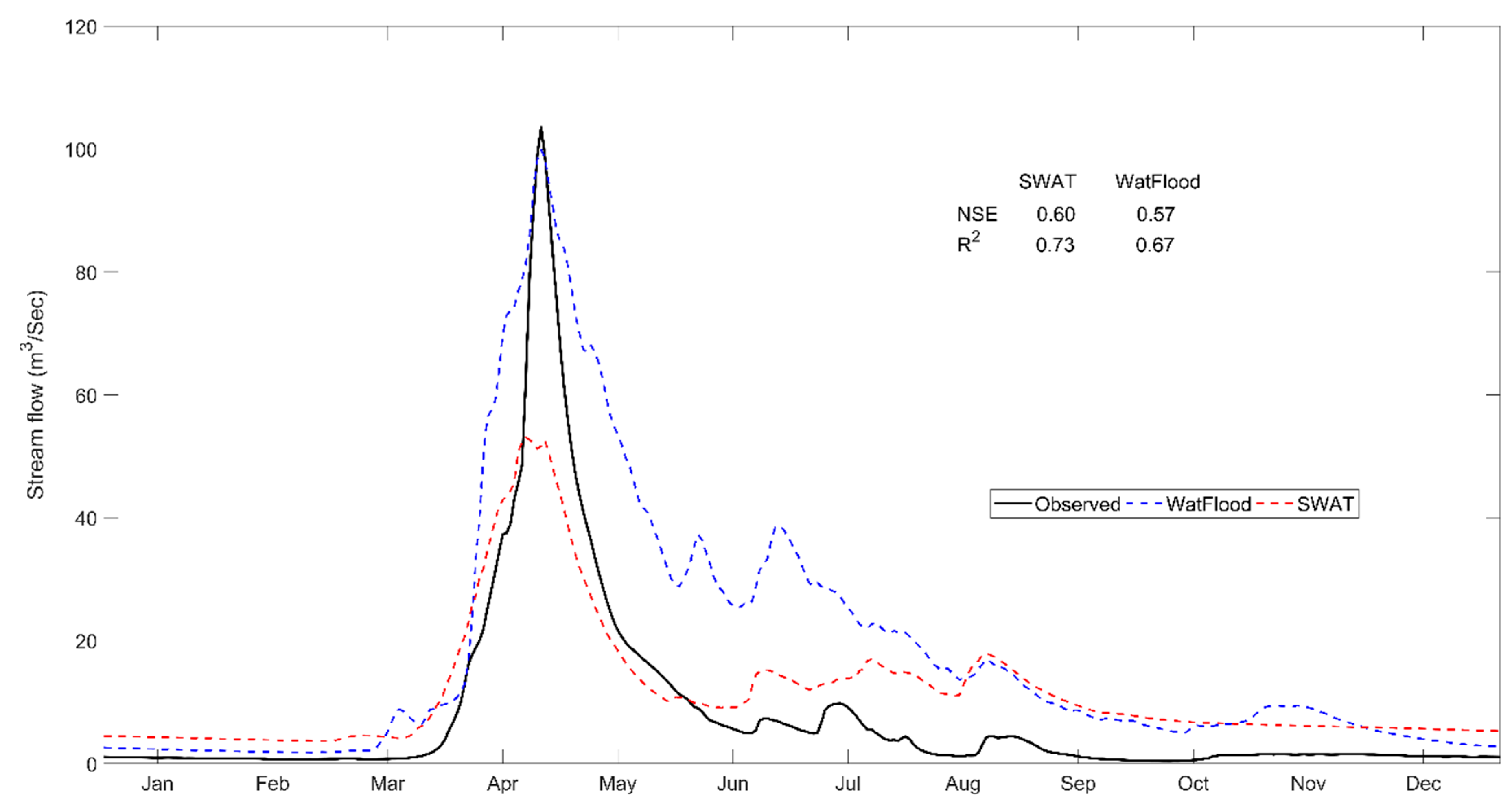

3.1. Hydrologic Model Evaluation

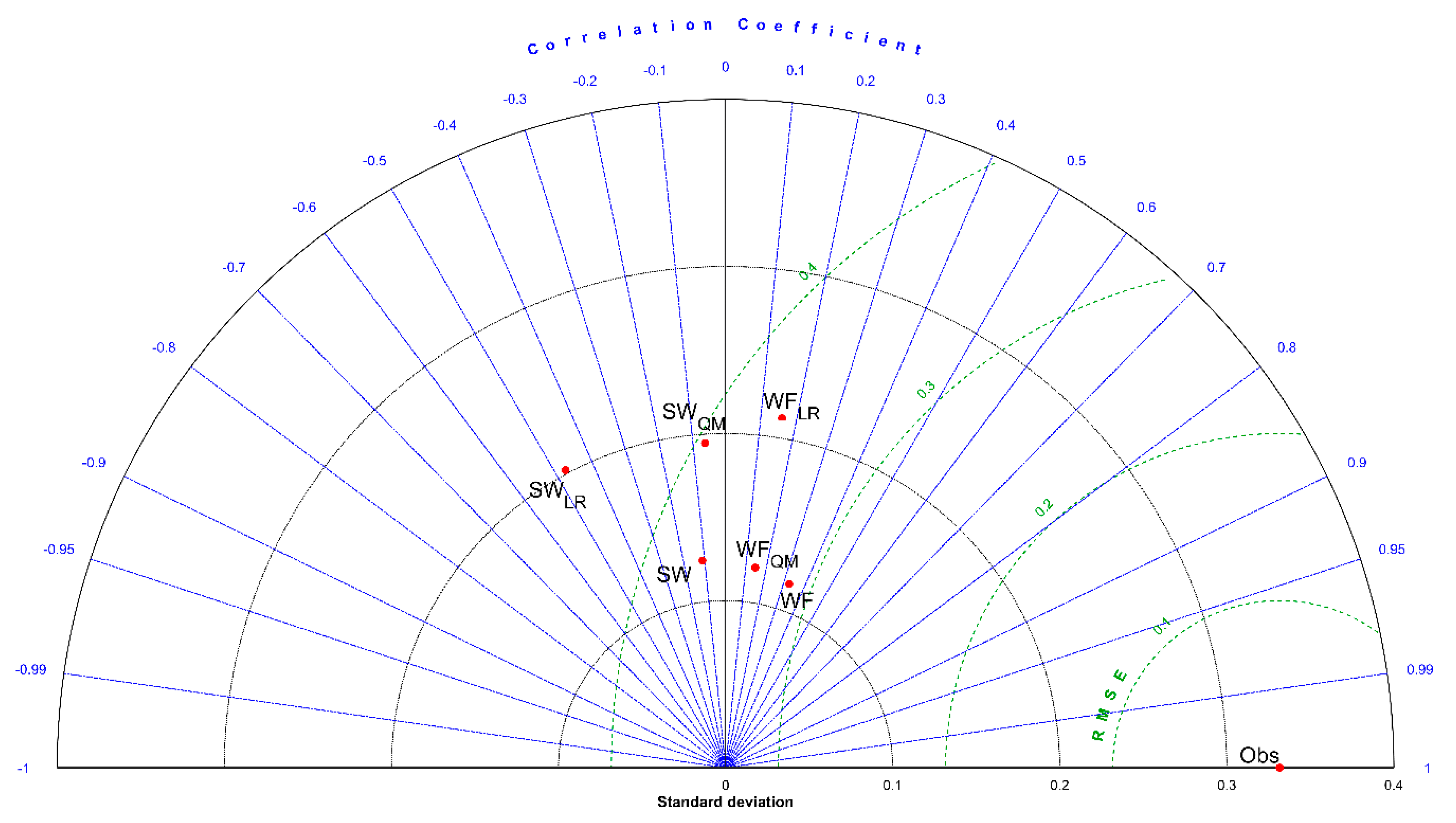

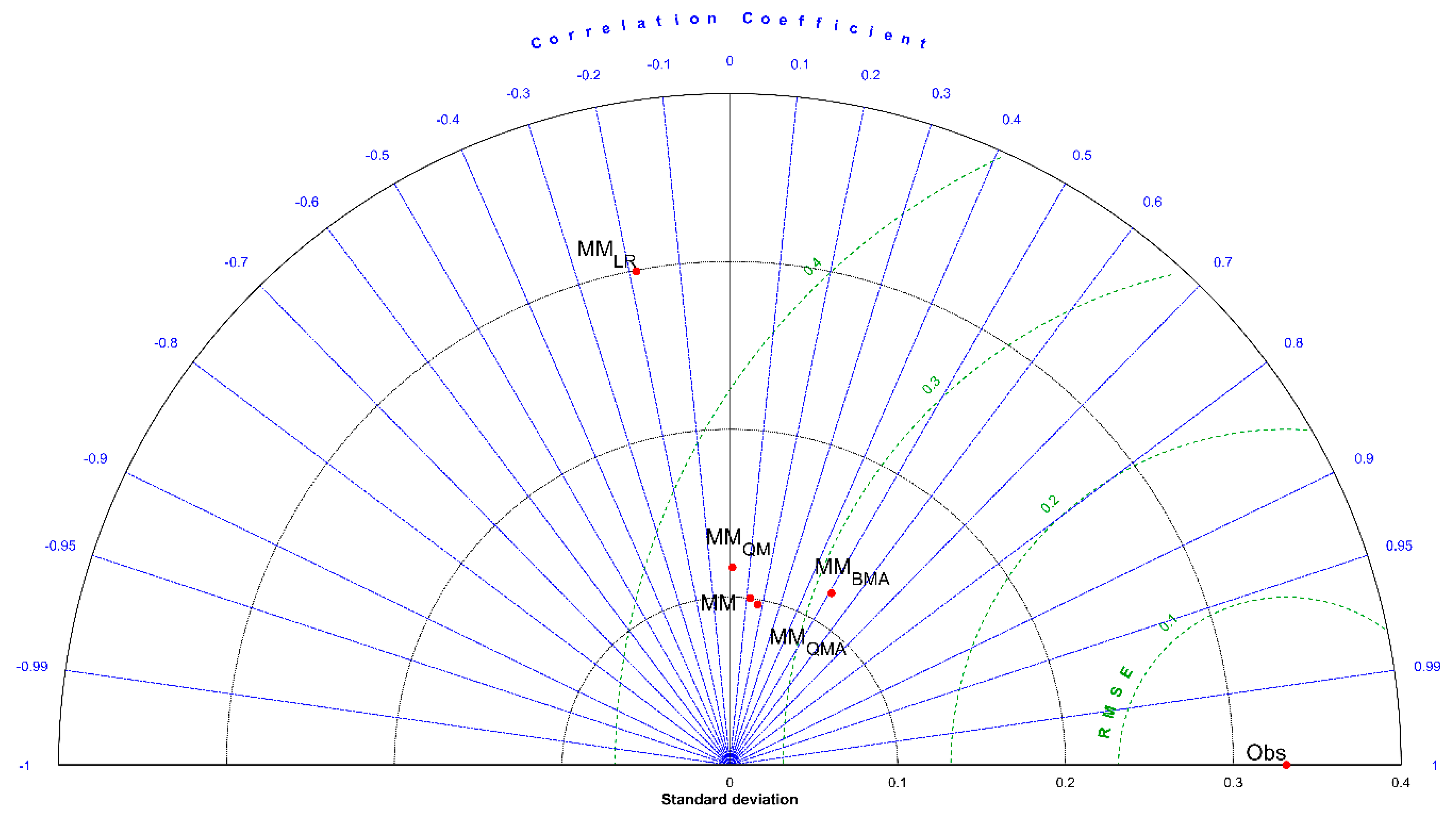

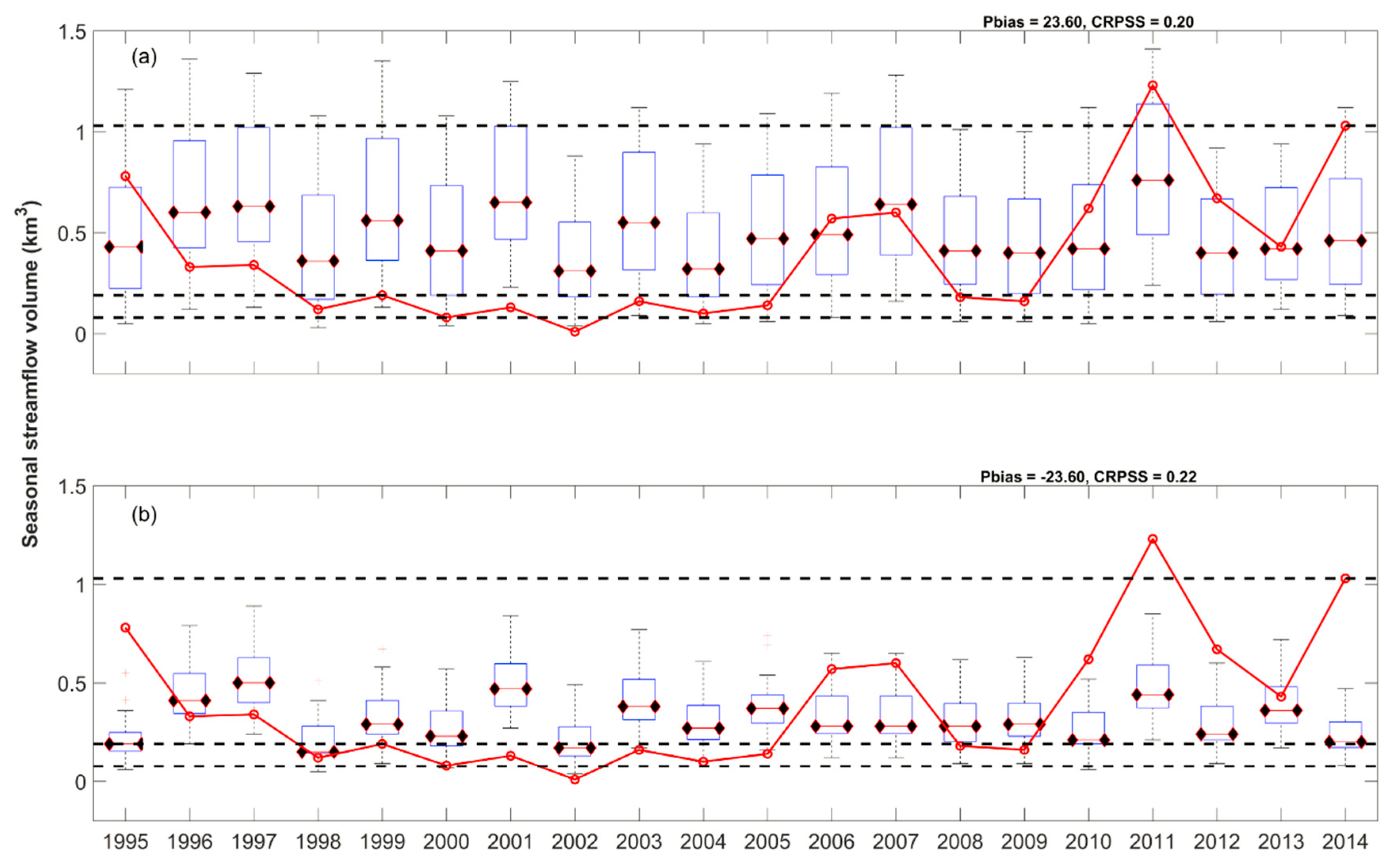

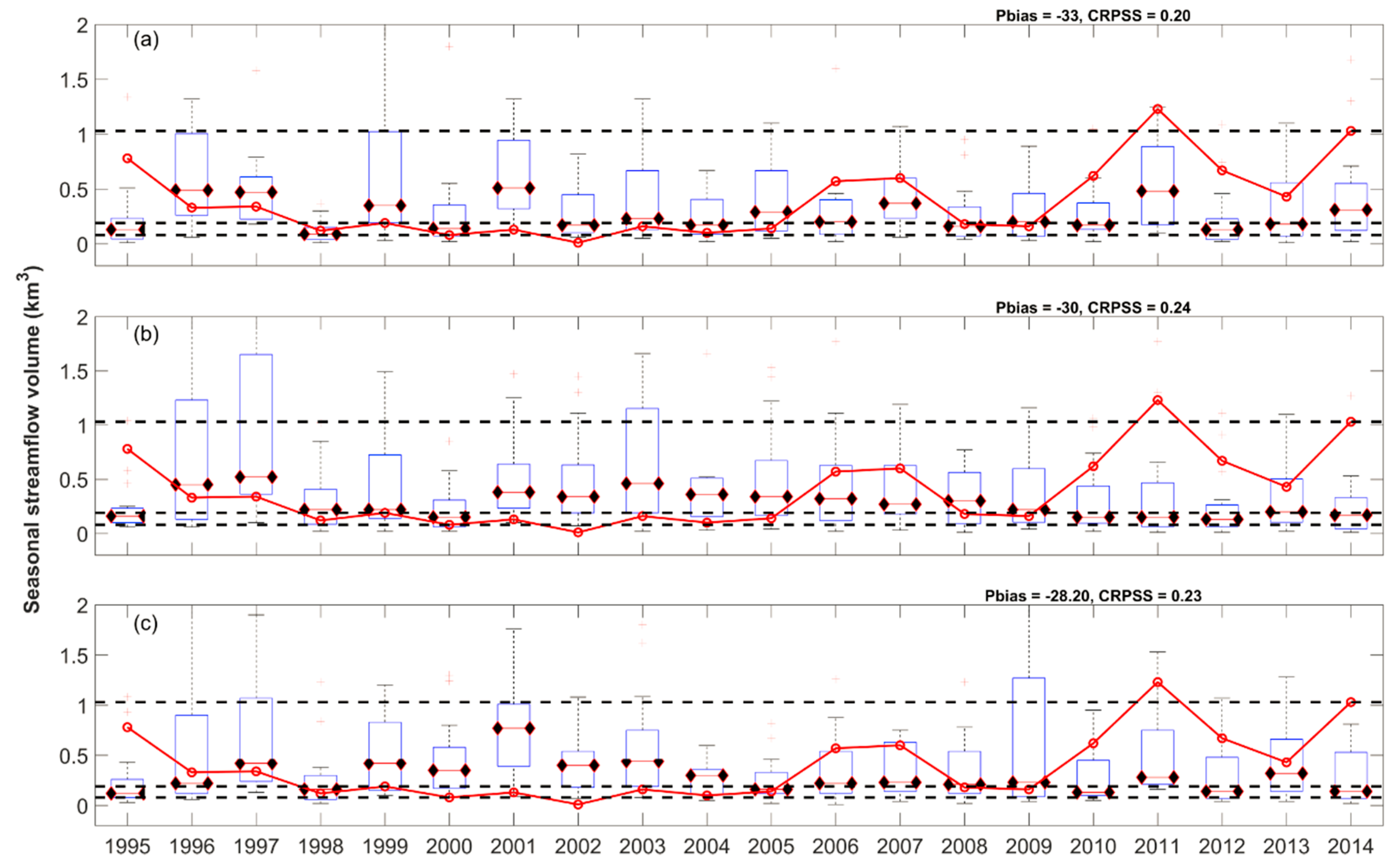

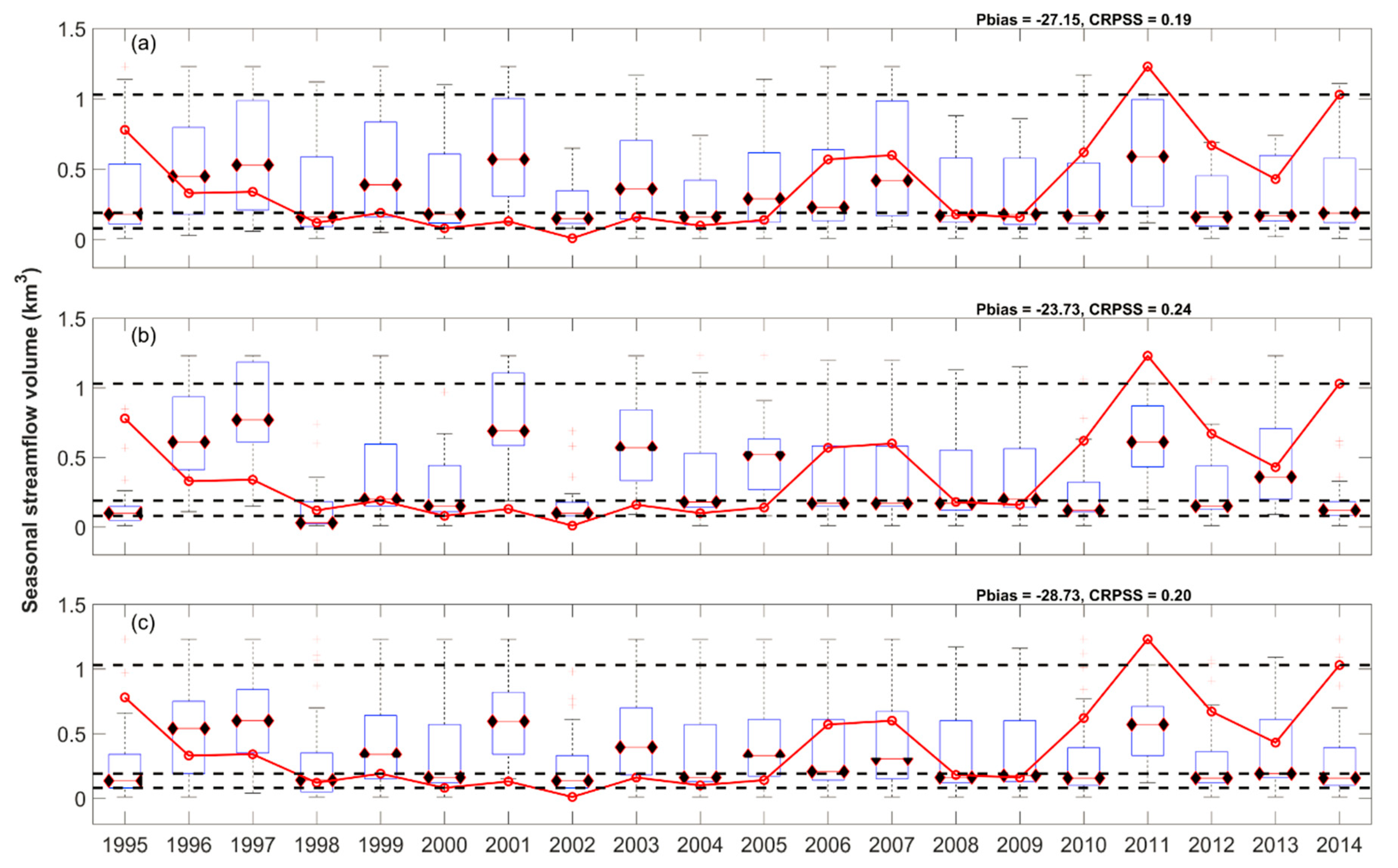

3.2. Deterministic Evaluation of the Benchmark and Post-Processed ESPs

3.3. Probabilistic Evaluation of the Benchmark and Post-Processed ESPs

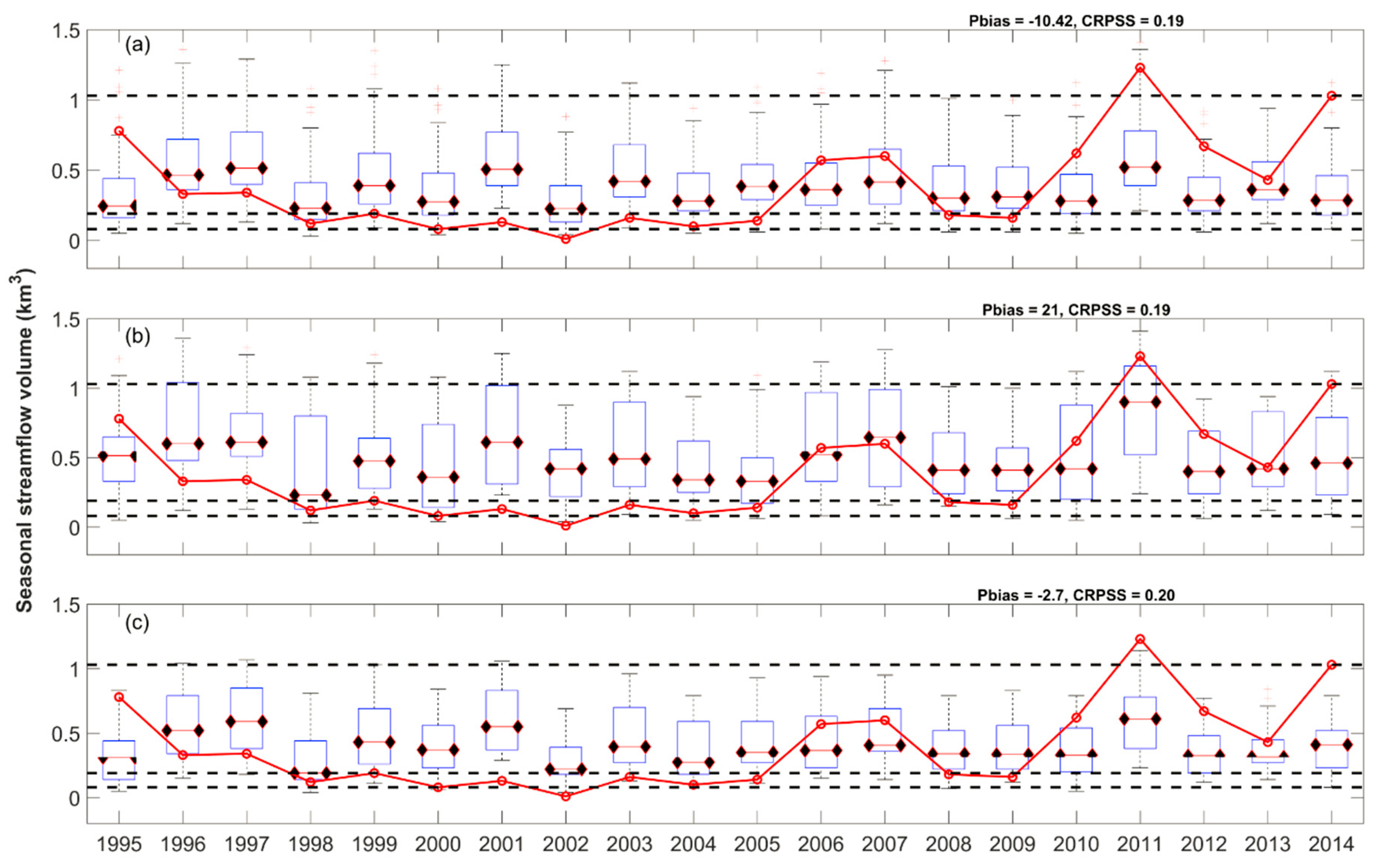

3.4. Evaluation of Weighted MM ESPs

3.5. Post-Processing Effectiveness for Operational Prediction

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Method | WATFLOOD (CRPSS) | Stat. Sig: H(p) | SWAT (CRPSS) | Stat. Sig: H(p) | Multi-Model (CRPSS) | Stat. Sig: H(p) |
|---|---|---|---|---|---|---|
| Scheme-1 | | | | | | |
| LR | 0.20 | 1 (0.0232) | 0.24 | 1 (0.0082) | 0.23 | 0 (0.4973) |
| QM | 0.19 | 1 (0.0026) | 0.24 | 0 (0.4973) | 0.20 | 0 (0.0591) |
| Scheme-2 | | | | | | |
| BMA | 0.19 | 1 (0.0232) | | | | |
| QMA | 0.20 | 0 (0.7710) | | | | |
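The CRPSS values in the table can be read against the standard definition of the continuous ranked probability skill score, CRPSS = 1 − CRPS_forecast / CRPS_reference. The sketch below is only illustrative: it assumes the common kernel (energy) estimator of the ensemble CRPS, and the function names `ensemble_crps` and `crpss` and all numbers are hypothetical. The estimator and reference forecast actually used in the study are not stated in this section.

```python
import numpy as np


def ensemble_crps(members, obs):
    """CRPS of one ensemble forecast against a scalar observation.

    Assumed estimator (kernel form): CRPS = E|X - y| - 0.5 * E|X - X'|,
    with both expectations taken over the ensemble members.
    """
    x = np.asarray(members, dtype=float)
    # Mean absolute distance between members and the observation.
    term_obs = np.mean(np.abs(x - obs))
    # Half the mean absolute distance between all member pairs (spread).
    term_spread = 0.5 * np.mean(np.abs(x[:, None] - x[None, :]))
    return term_obs - term_spread


def crpss(forecast_crps, reference_crps):
    """Skill score: 1 is perfect, 0 matches the reference, < 0 is worse."""
    return 1.0 - np.mean(forecast_crps) / np.mean(reference_crps)


# Hypothetical numbers, not taken from the study.
post_processed = [ensemble_crps([1.0, 2.0, 3.0], 2.0)]
benchmark = [ensemble_crps([0.0, 2.0, 4.0], 2.0)]
skill = crpss(post_processed, benchmark)
```

Under this definition, a CRPSS of 0.20 means the mean CRPS of the post-processed forecast is 20% lower than that of the reference forecast.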

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Muhammad, A.; Stadnyk, T.A.; Unduche, F.; Coulibaly, P. Multi-Model Approaches for Improving Seasonal Ensemble Streamflow Prediction Scheme with Various Statistical Post-Processing Techniques in the Canadian Prairie Region. Water 2018, 10, 1604. https://doi.org/10.3390/w10111604