Reducing Computational Costs of Automatic Calibration of Rainfall-Runoff Models: Meta-Models or High-Performance Computers?

Abstract

1. Introduction

2. Material and Methods

2.1. The Hydrologic Modeling System

2.2. Particle Swarm Optimization

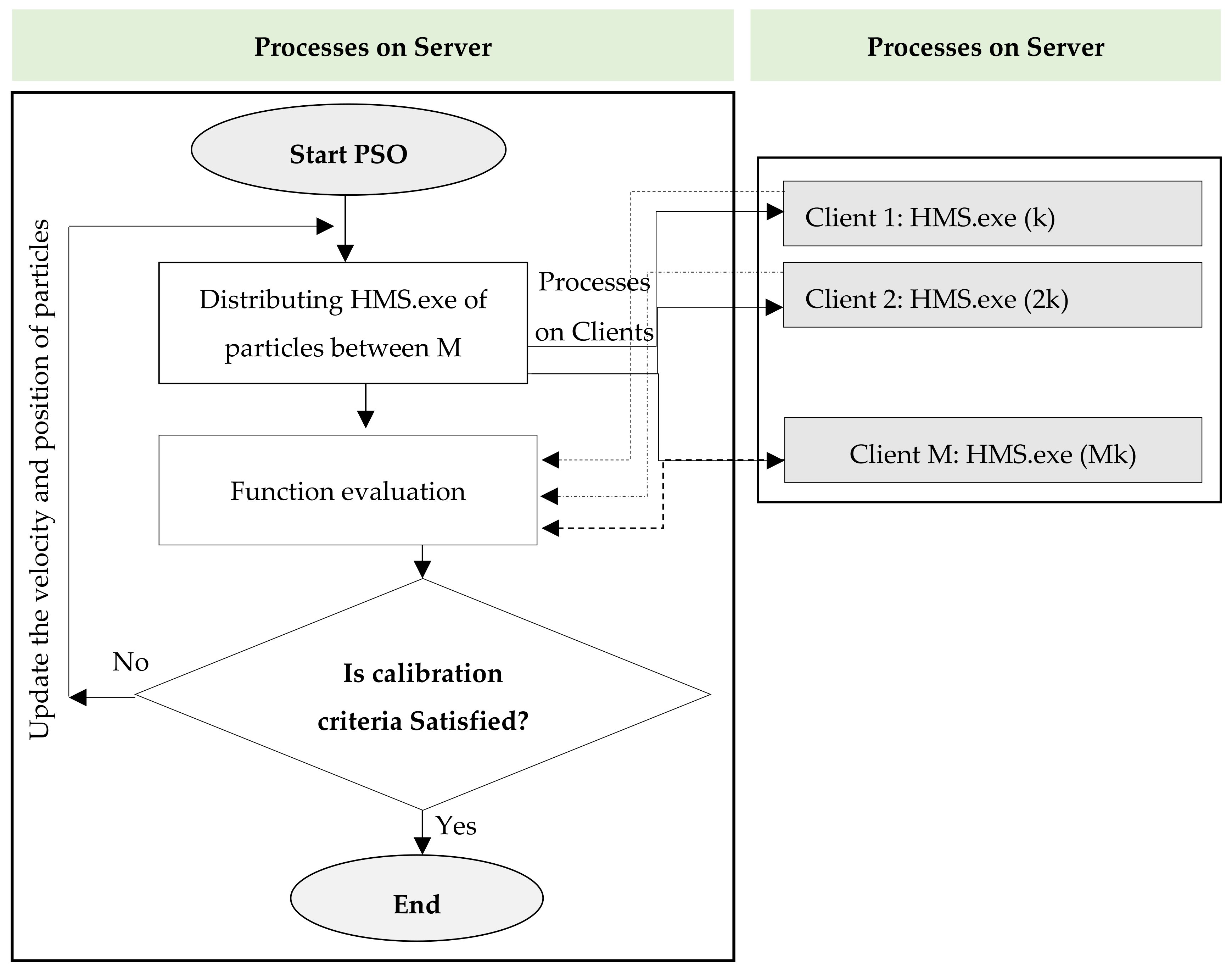
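As an illustration of how PSO searches the calibration space, here is a minimal global-best PSO sketch with an inertia weight and bound clamping. It assumes the 17 parameters and search ranges given in the parameter table (CN1–CN7, cons1–cons7, Xm1–Xm3). The helper name `pso_minimize` is hypothetical, and the values of `w`, `c1`, `c2`, the swarm size and the iteration count are illustrative assumptions, not the settings used in this study.

```python
import random
from typing import Callable, List, Sequence, Tuple

# Search ranges from the parameter table: CN1-CN7, cons1-cons7, Xm1-Xm3 (17 parameters).
CN_LOWER = [60, 61, 58, 60, 50, 70, 70]
CN_UPPER = [91, 91, 87, 85, 84, 91, 91]
LOWER = CN_LOWER + [0.2] * 7 + [0.2] * 3
UPPER = CN_UPPER + [0.65] * 7 + [0.5] * 3


def pso_minimize(
    objective: Callable[[List[float]], float],
    lower: Sequence[float],
    upper: Sequence[float],
    n_particles: int = 20,
    n_iters: int = 100,
    w: float = 0.7,   # inertia weight (assumed value)
    c1: float = 1.5,  # cognitive coefficient (assumed value)
    c2: float = 1.5,  # social coefficient (assumed value)
    seed: int = 0,
) -> Tuple[List[float], float]:
    """Minimize `objective` inside the box [lower, upper] with global-best PSO."""
    rng = random.Random(seed)
    dim = len(lower)
    # Random initial positions inside the bounds, zero initial velocities.
    pos = [[rng.uniform(lower[d], upper[d]) for d in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]

    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Inertia + pull toward personal best + pull toward global best.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                # Move the particle and clamp it to the feasible range.
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lower[d]), upper[d])
            val = objective(pos[i])  # one model run per particle per iteration
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val
```

In a calibration run, `objective` would wrap one HEC-HMS simulation and return an error measure against observed flows; that wrapper is not shown. Each call is a full model run, so the n_particles × (n_iters + 1) evaluations dominate the cost. That cost is what surrogate models and parallel processing aim to reduce.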

2.3. Parallel Processing Technique
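Within one PSO iteration the particles are independent, so their model runs can be dispatched concurrently. A multi-PC set-up exploits exactly this property. The single-machine sketch below is only an assumption-level analogue of that idea, not the authors' distributed set-up. `evaluate_swarm` and `slow_model` are hypothetical names. The sketch uses `concurrent.futures.ThreadPoolExecutor`, which suits objectives that launch an external model executable, because the waiting then happens outside the Python interpreter. A pure-Python, CPU-bound objective would need `ProcessPoolExecutor` instead.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence


def evaluate_swarm(
    objective: Callable[[Sequence[float]], float],
    positions: Sequence[Sequence[float]],
    max_workers: int = 3,
) -> List[float]:
    """Evaluate every particle concurrently; results come back in particle order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(objective, positions))


def slow_model(x: Sequence[float]) -> float:
    """Hypothetical stand-in for one expensive simulation: waits, then returns a score."""
    time.sleep(0.1)
    return float(sum(x))


if __name__ == "__main__":
    positions = [[i, i] for i in range(6)]
    start = time.perf_counter()
    scores = evaluate_swarm(slow_model, positions, max_workers=3)
    print(scores, f"{time.perf_counter() - start:.2f} s")
```

`pool.map` preserves input order, so score i still belongs to particle i when the PSO loop updates personal and global bests. With three workers, the six 0.1 s runs complete in about two rounds instead of six.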

2.4. Artificial Neural Network (ANN)

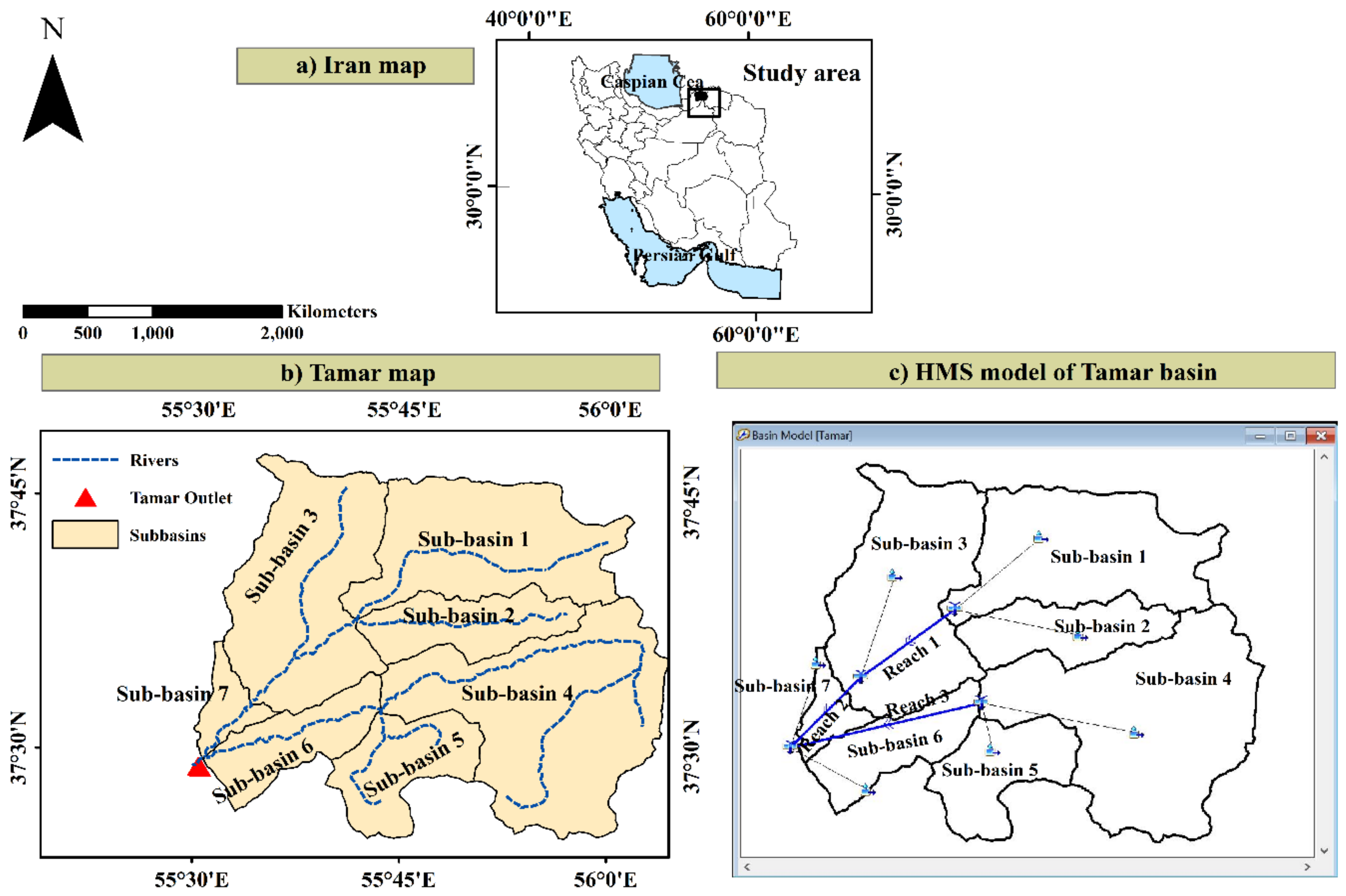

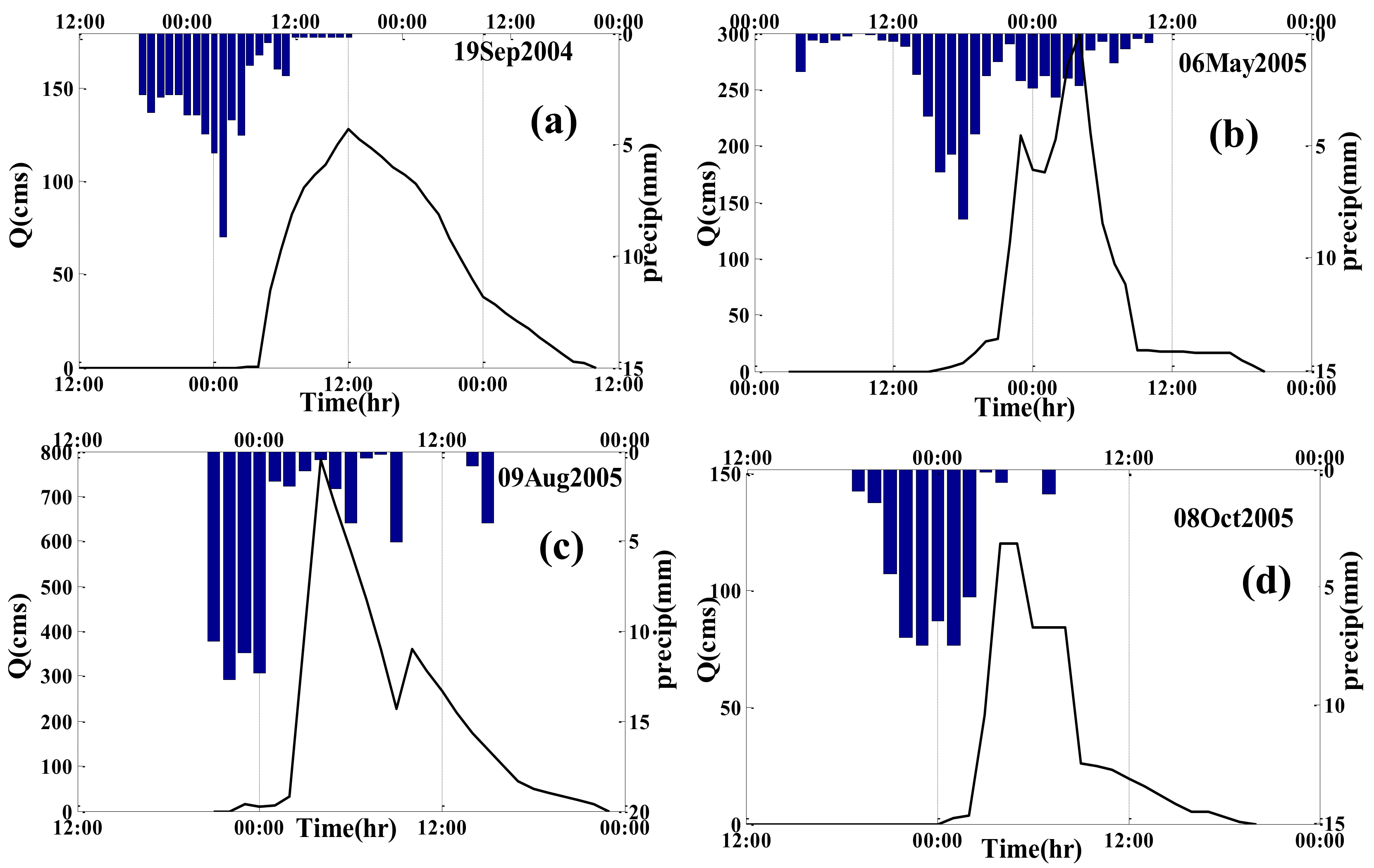
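To show how an ANN can act as a surrogate (meta-model) for HEC-HMS runs, the sketch below trains a one-hidden-layer sigmoid network on input–output pairs. Inputs are min-max scaled to [0, 1] so that parameters with very different ranges contribute comparably, for example curve numbers of 50 to 91 versus coefficients of 0.2 to 0.65. For brevity it uses plain full-batch gradient descent on MSE. The network size, learning rate, training algorithm, and the names `scale_to_unit` and `MLPSurrogate` are assumptions, not the configuration reported in this study.

```python
import numpy as np


def scale_to_unit(X, lower, upper):
    """Min-max scale each column of X from [lower, upper] to [0, 1]."""
    lower, upper = np.asarray(lower, float), np.asarray(upper, float)
    return (np.asarray(X, float) - lower) / (upper - lower)


class MLPSurrogate:
    """One hidden sigmoid layer, linear output, trained by gradient descent on MSE."""

    def __init__(self, n_in: int, n_hidden: int = 10, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.W1 = rng.normal(0.0, 1.0 / np.sqrt(n_in), (n_in, n_hidden))
        self.b1 = np.zeros(n_hidden)
        self.W2 = rng.normal(0.0, 1.0 / np.sqrt(n_hidden), (n_hidden, 1))
        self.b2 = np.zeros(1)

    def _forward(self, X):
        H = 1.0 / (1.0 + np.exp(-(X @ self.W1 + self.b1)))  # hidden activations
        return H, H @ self.W2 + self.b2                      # linear output

    def fit(self, X, y, lr: float = 0.1, epochs: int = 5000):
        X = np.asarray(X, float)
        y = np.asarray(y, float).reshape(-1, 1)
        n = len(X)
        for _ in range(epochs):
            H, out = self._forward(X)
            d_out = 2.0 * (out - y) / n                   # dMSE/d(output)
            d_hid = (d_out @ self.W2.T) * H * (1.0 - H)  # back-propagate through sigmoid
            # Compute all gradients before any weight is updated.
            gW2, gb2 = H.T @ d_out, d_out.sum(axis=0)
            gW1, gb1 = X.T @ d_hid, d_hid.sum(axis=0)
            self.W2 -= lr * gW2
            self.b2 -= lr * gb2
            self.W1 -= lr * gW1
            self.b1 -= lr * gb1
        return self

    def predict(self, X):
        return self._forward(np.asarray(X, float))[1].ravel()
```

Once trained on a sample of parameter sets and their simulated errors, `predict` is cheap enough to replace most model runs inside the optimizer. The trade-off is that the surrogate's own approximation error carries into the calibration result.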

3. Study Area and Model Set-Up

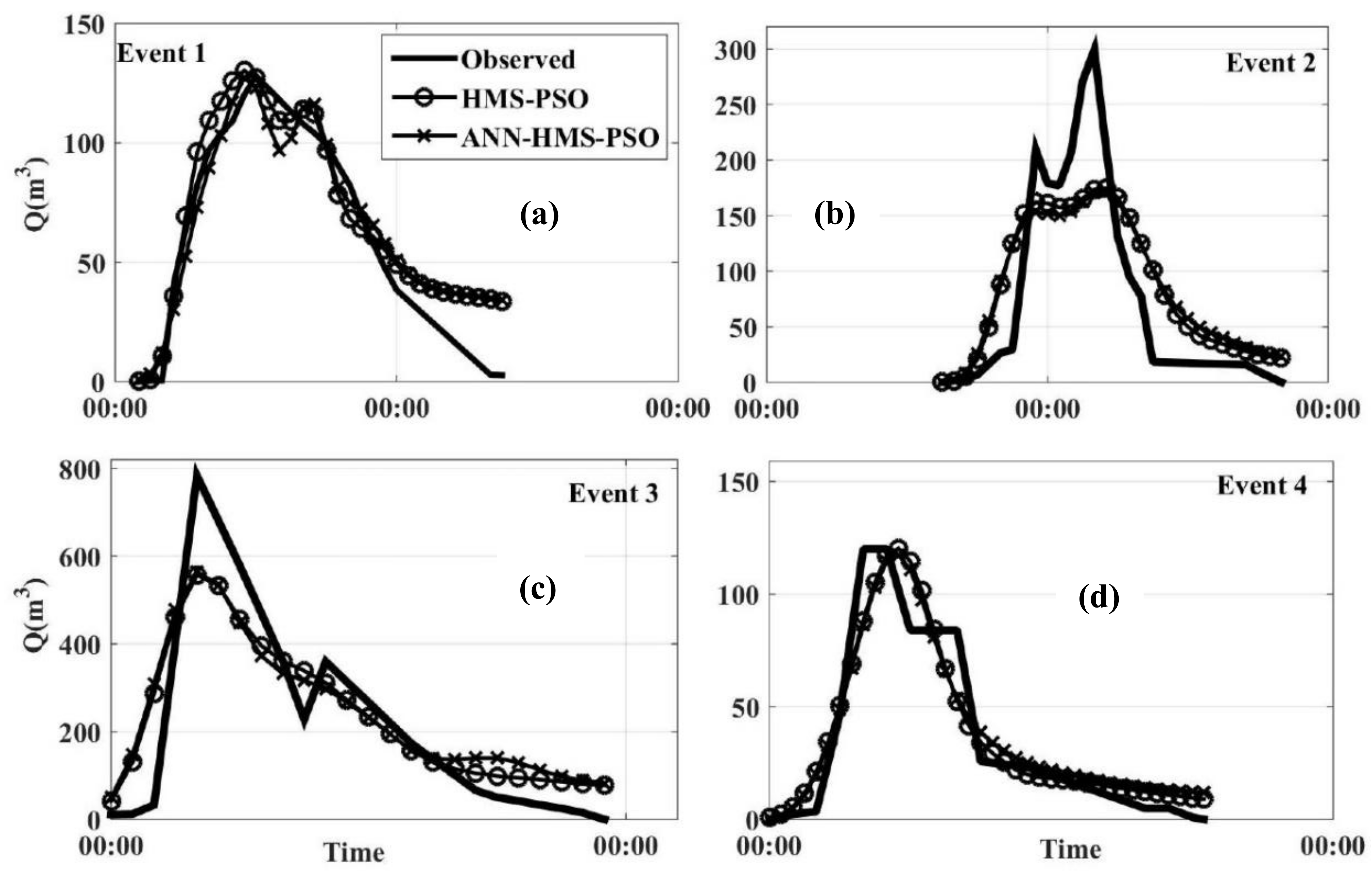

4. Results

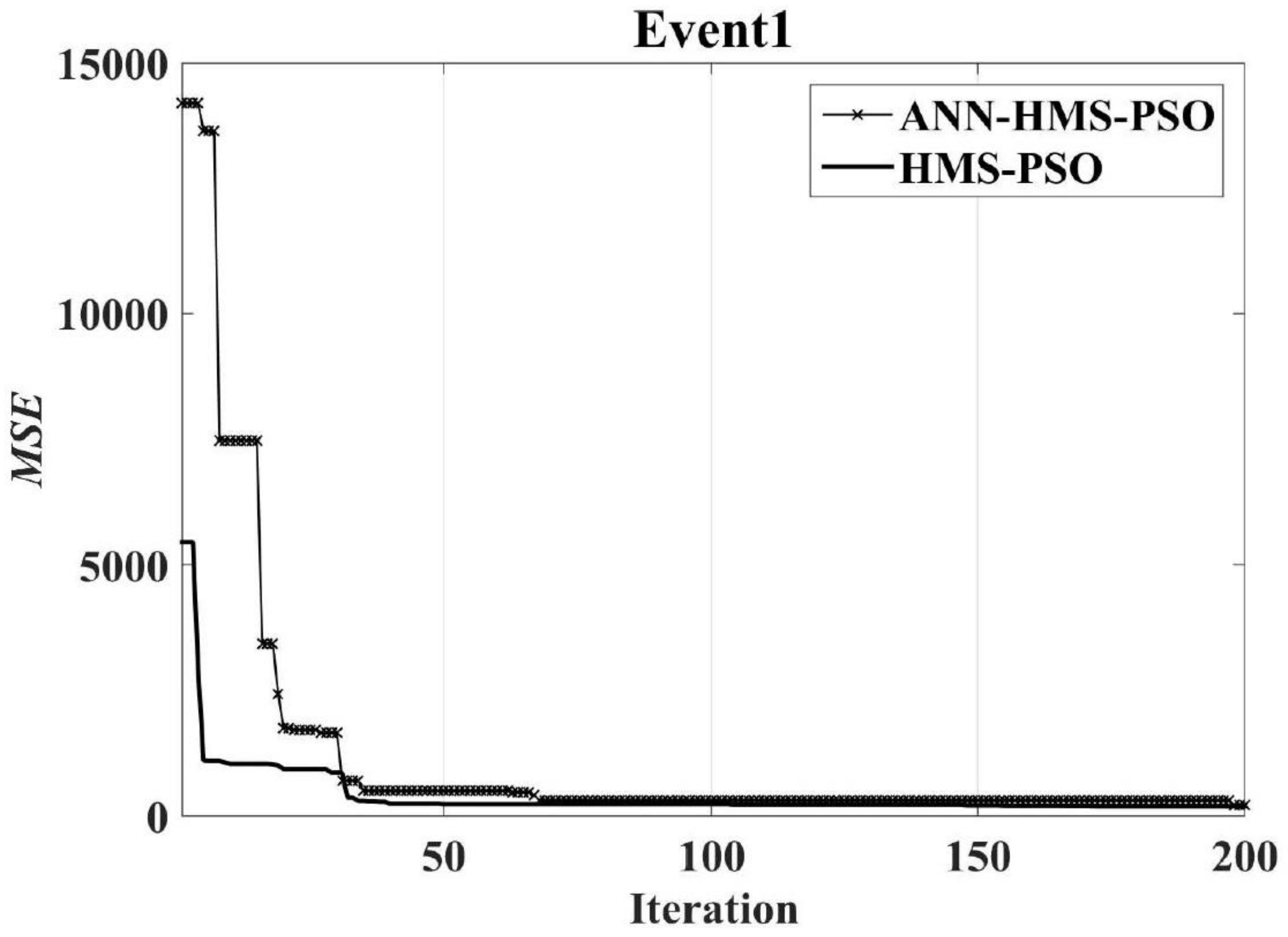

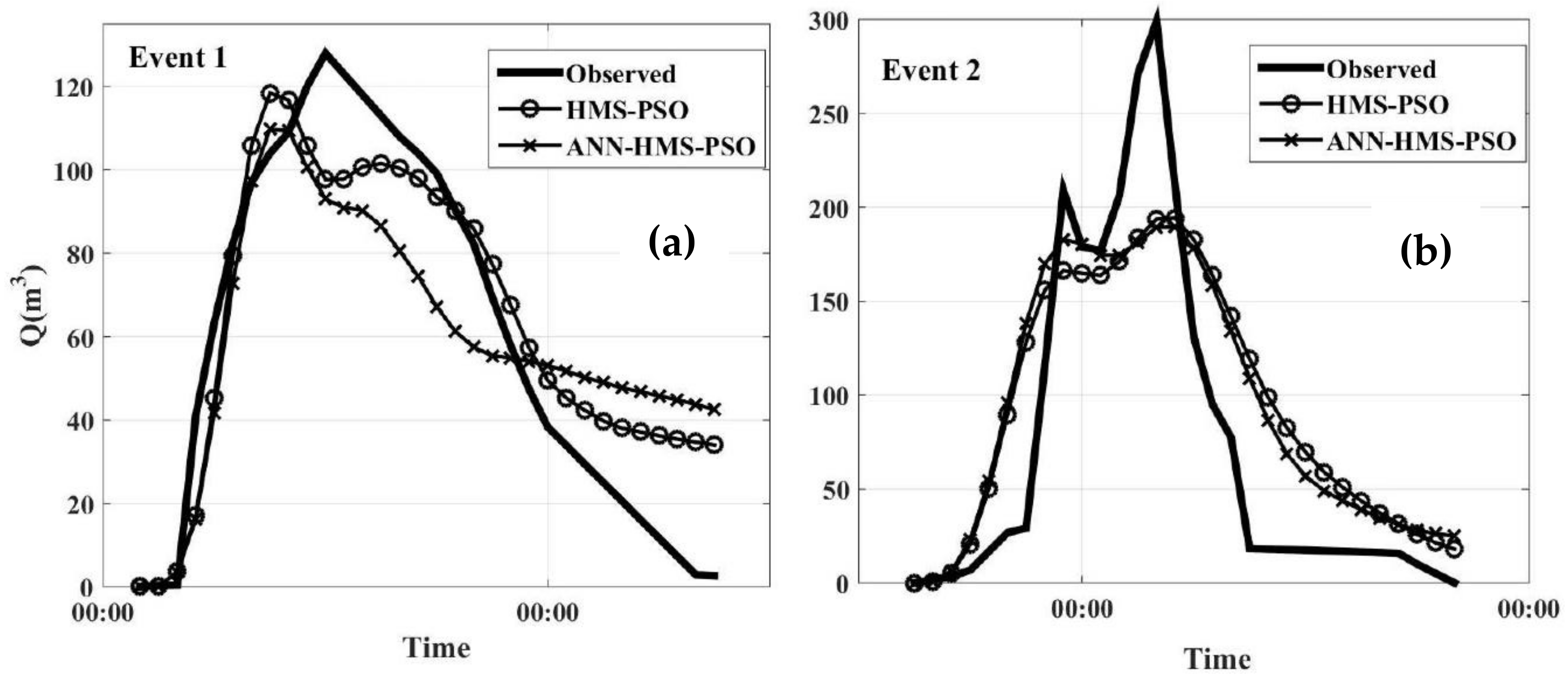

4.1. Reducing Computational Costs Using Surrogate Model

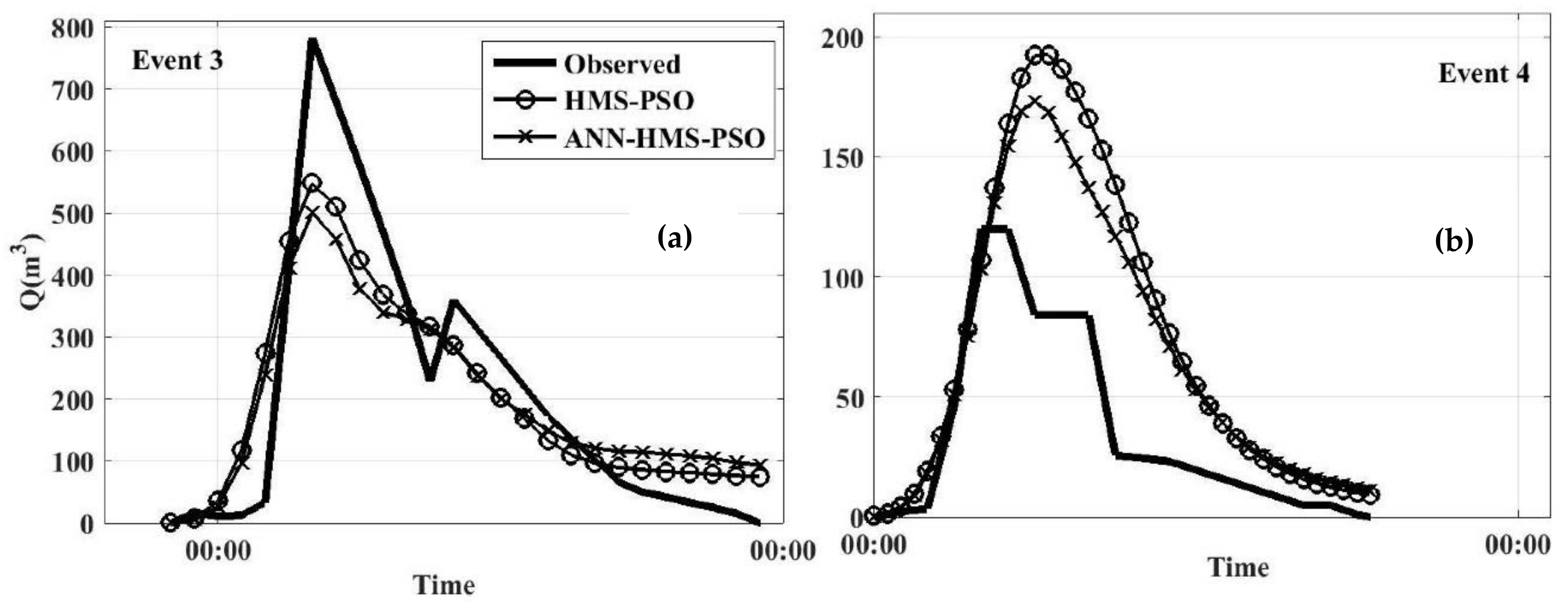

4.2. Reducing Computational Costs by Parallel Processing

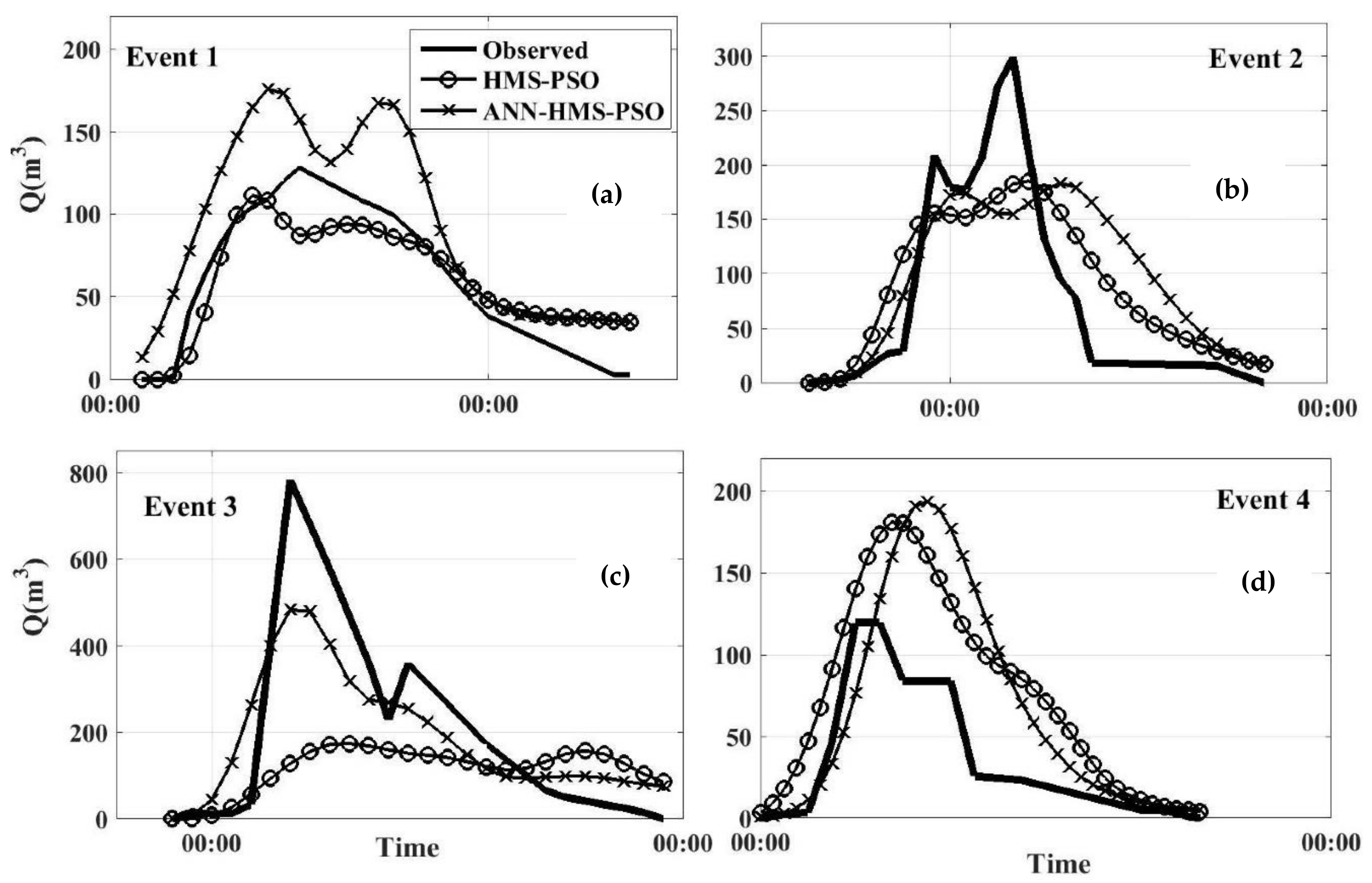

4.3. Comparing the Performance of Surrogate Models and Parallel Processing

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Sub-Basins | Area (km²) | Slope (%) |
|---|---|---|
| Sub-Basin 1 | 307.7 | 20.99 |
| Sub-Basin 2 | 129.9 | 31.61 |
| Sub-Basin 3 | 341.1 | 13.85 |
| Sub-Basin 4 | 455.7 | 79.52 |
| Sub-Basin 5 | 135.2 | 24.8 |
| Sub-Basin 6 | 117.4 | 18.4 |
| Sub-Basin 7 | 43.6 | 2.9 |

| Parameter Number | Parameters | Sub-Basin | Upper Limit | Lower Limit |
|---|---|---|---|---|
| 1–7 | Curve number (CN1–CN7) | Sub-Basin-1 | 91 | 60 |
| | | Sub-Basin-2 | 91 | 61 |
| | | Sub-Basin-3 | 87 | 58 |
| | | Sub-Basin-4 | 85 | 60 |
| | | Sub-Basin-5 | 84 | 50 |
| | | Sub-Basin-6 | 91 | 70 |
| | | Sub-Basin-7 | 91 | 70 |
| 8–14 | cons (cons1–cons7) | All 7 sub-basins | 0.65 | 0.2 |
| 15–17 | Xm (Xm1–Xm3) | 3 reaches | 0.5 | 0.2 |
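The curve numbers CN1–CN7 control how much rainfall becomes direct runoff. The sketch below shows how a CN value maps to cumulative excess rainfall. It assumes they enter HEC-HMS through the standard SCS curve number loss method, with initial abstraction Ia = 0.2S (the usual default) and depths in mm. The function name `scs_cn_excess` and the 60 mm storm depth are hypothetical.

```python
def scs_cn_excess(p_mm: float, cn: float, ia_ratio: float = 0.2) -> float:
    """Cumulative excess (runoff) depth in mm for cumulative rainfall p_mm and curve number cn."""
    if not 0 < cn <= 100:
        raise ValueError("curve number must be in (0, 100]")
    s = 25400.0 / cn - 254.0  # potential maximum retention S (mm)
    ia = ia_ratio * s         # initial abstraction Ia (mm)
    if p_mm <= ia:
        return 0.0            # all rainfall is abstracted before runoff starts
    return (p_mm - ia) ** 2 / (p_mm - ia + s)


# Sub-Basin-5 has the widest CN range in the table (50 to 84).
for cn in (50, 84):
    print(cn, round(scs_cn_excess(60.0, cn), 1))  # prints "50 0.3" then "84 25.7"
```

Raising CN shrinks both S and Ia, so the same storm produces more runoff. The calibration bounds therefore span very different runoff responses, from almost none to a substantial fraction of the rainfall.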

| Scenario | Event | Single PC | Three Parallel PCs | Six Parallel PCs | Nine Parallel PCs |
|---|---|---|---|---|---|
| Single event | Event-1 | 4197 | 1579 | 943 | 770 |
| | Event-2 | 3910 | 1673 | 1103 | 732 |
| | Event-3 | 4034 | 1578 | 969 | 760 |
| | Event-4 | 4156 | 1836 | 959 | 763 |
| Joint events | JEvent 1,2 | 8737 | 2645 | 2282 | 1507 |
| | JEvent 3,4 | 8653 | 2861 | 2309 | 1523 |
| | JEvent 1–3 | 12,815 | 4175 | 2834 | 2259 |
| | JEvent 1–4 | 18,697 | 5422 | 3870 | 3041 |
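The parallel-processing speed-up percentages in the summary table appear to match the percentage reduction in run time relative to a single PC, 100 × (1 − T_p/T_1), computed from the run times above. For example, JEvent 1,2 on three PCs gives 100 × (1 − 2645/8737) ≈ 69.7, reported as 70. This reading is an inference from the numbers, not a definition stated in the source. The sketch below recomputes that measure together with the conventional speed-up ratio T_1/T_p and the parallel efficiency (ratio divided by the number of PCs).

```python
# Run times from the table above: single PC, then 3, 6 and 9 parallel PCs (units as reported).
RUN_TIMES = {
    "Event-1": (4197, 1579, 943, 770),
    "Event-2": (3910, 1673, 1103, 732),
    "Event-3": (4034, 1578, 969, 760),
    "Event-4": (4156, 1836, 959, 763),
    "JEvent 1,2": (8737, 2645, 2282, 1507),
    "JEvent 3,4": (8653, 2861, 2309, 1523),
    "JEvent 1-3": (12815, 4175, 2834, 2259),
    "JEvent 1-4": (18697, 5422, 3870, 3041),
}
N_PCS = (3, 6, 9)


def time_reduction_pct(t_serial: float, t_parallel: float) -> float:
    """Percentage of run time saved relative to the single-PC run."""
    return 100.0 * (1.0 - t_parallel / t_serial)


def speedup_ratio(t_serial: float, t_parallel: float) -> float:
    """Classical speed-up S = T1 / Tp."""
    return t_serial / t_parallel


for name, (t1, *tp) in RUN_TIMES.items():
    cells = []
    for p, t in zip(N_PCS, tp):
        s = speedup_ratio(t1, t)
        cells.append(f"{p} PCs: {time_reduction_pct(t1, t):5.1f}% saved, S={s:4.2f}, E={s / p:4.2f}")
    print(f"{name:11s} | " + " | ".join(cells))
```

For JEvent 1–4 on nine PCs this gives S = 18697/3041 ≈ 6.15 and an efficiency of about 0.68. Each additional PC therefore contributes less than a proportional share of the saving.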

| Scenario | Event set | Event 1 | Event 2 | Event 3 | Event 4 | Sum |
|---|---|---|---|---|---|---|
| HMS-PSO | JEvent 1,2 | 269 | 2651 | - | - | 2920 |
| | JEvent 3,4 | - | - | 9075 | 2914 | 11,989 |
| | JEvent 1–3 | 1665 | 4939 | 11,114 | - | 17,749 |
| | JEvent 1–4 | 360 | 2522 | 48,572 | 2341 | 53,797 |
| ANN-HMS-PSO | JEvent 1,2 | 585 | 2478 | - | - | 3062 |
| | JEvent 3,4 | - | - | 10,641 | 1863 | 12,504 |
| | JEvent 1–3 | 1573 | 5307 | 11,571 | - | 17,800 |
| | JEvent 1–4 | 1423 | 4236 | 12,288 | 2840 | 21,189 |

| Method | Scenario | Number of PCs | Speed-Up (%) | Improvement in MSE (%) |
|---|---|---|---|---|
| Parallel processing | Single event | 3 | 60 | Same in parallelized and non-parallelized runs |
| | | 6 | 76 | |
| | JEvent 1,2 | 3 | 70 | |
| | | 6 | 73 | |
| | JEvent 3,4 | 3 | 67 | |
| | | 6 | 74 | |
| | JEvent 1–3 | 3 | 67 | |
| | | 6 | 78 | |
| | JEvent 1–4 | 3 | 70 | |
| | | 6 | 80 | |
| Surrogate models | Single event | – | 86.6 | −9.5 |
| | JEvent 1,2 | – | 70–75 | −4.8 |
| | JEvent 3,4 | – | 70–75 | −4.3 |
| | JEvent 1–3 | – | 60–70 | −3.9 |
| | JEvent 1–4 | – | 60–65 | +42 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Semiromi, M.T.; Omidvar, S.; Kamali, B. Reducing Computational Costs of Automatic Calibration of Rainfall-Runoff Models: Meta-Models or High-Performance Computers? Water 2018, 10, 1440. https://doi.org/10.3390/w10101440
