Automatic Colorectal Cancer Screening Using Deep Learning in Spatial Light Interference Microscopy Data

Abstract

1. Introduction

2. Results and Methods

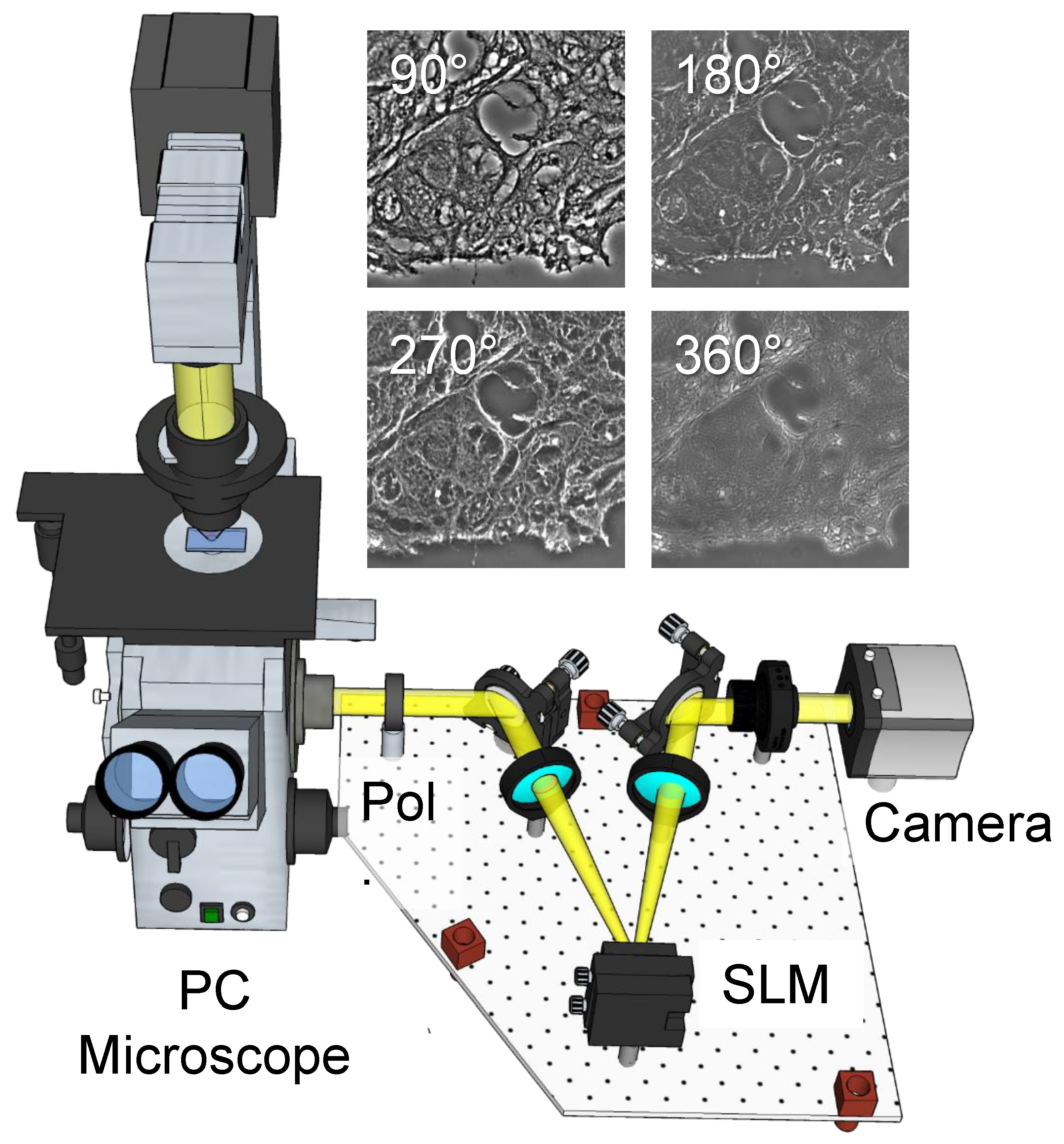

2.1. Label-Free Tissue Scanner

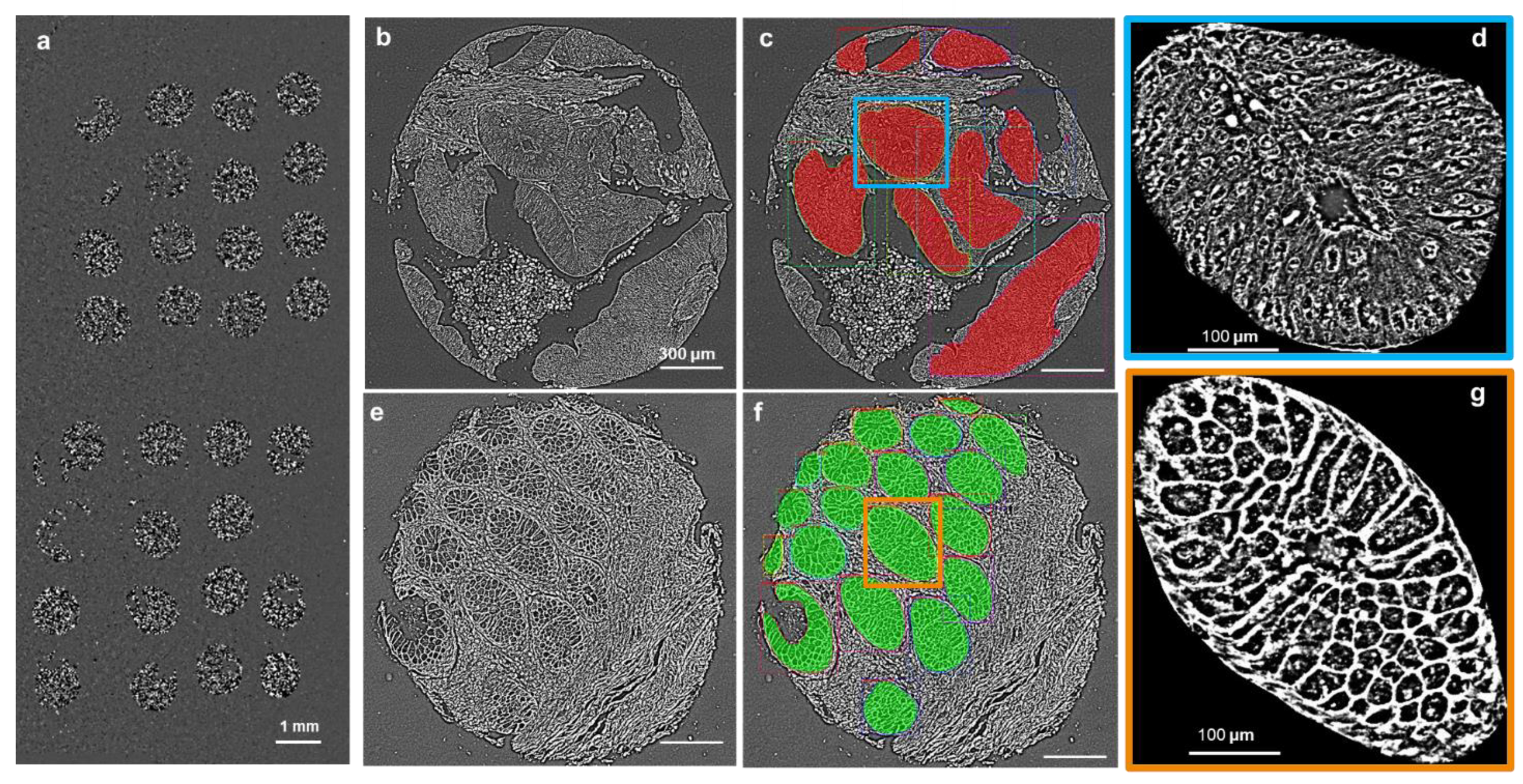

2.2. Tissue Imaging

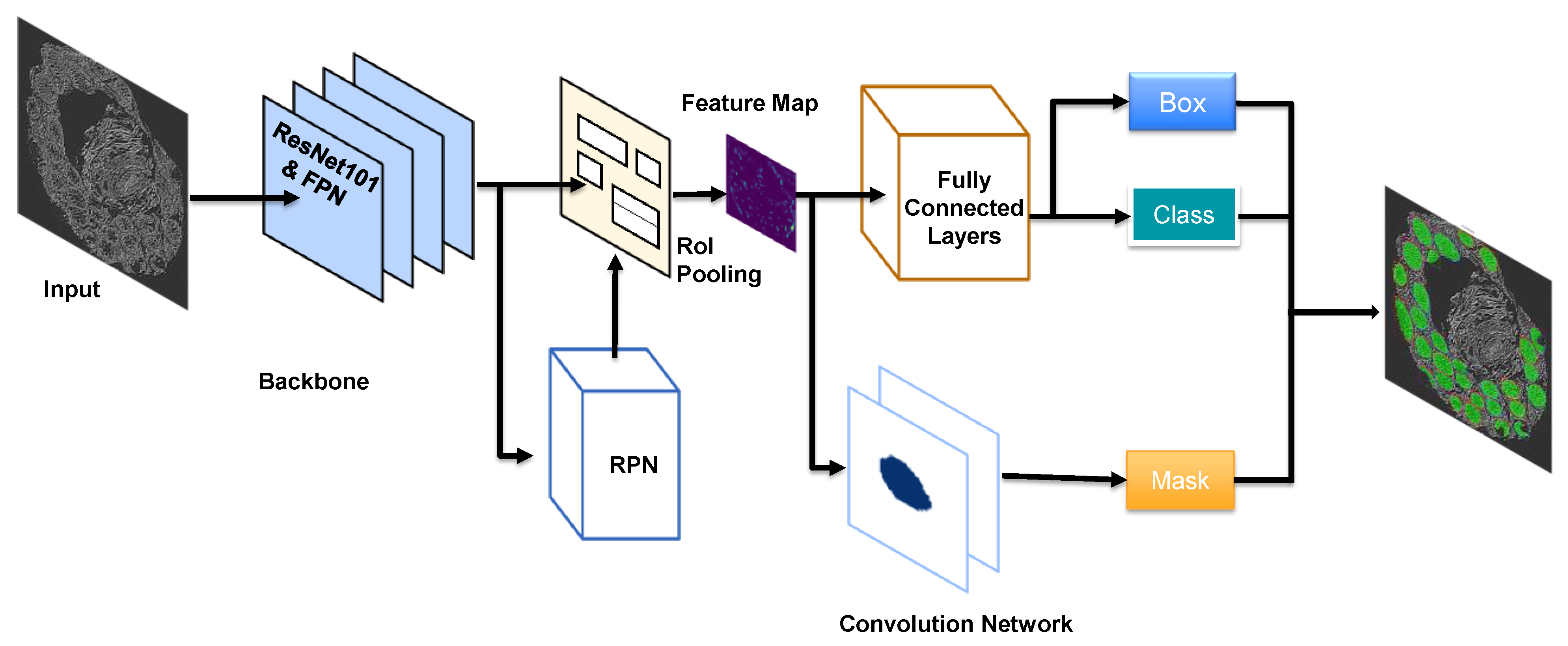

2.3. Deep Learning Network

2.4. Whole Core Segmentation and Classification

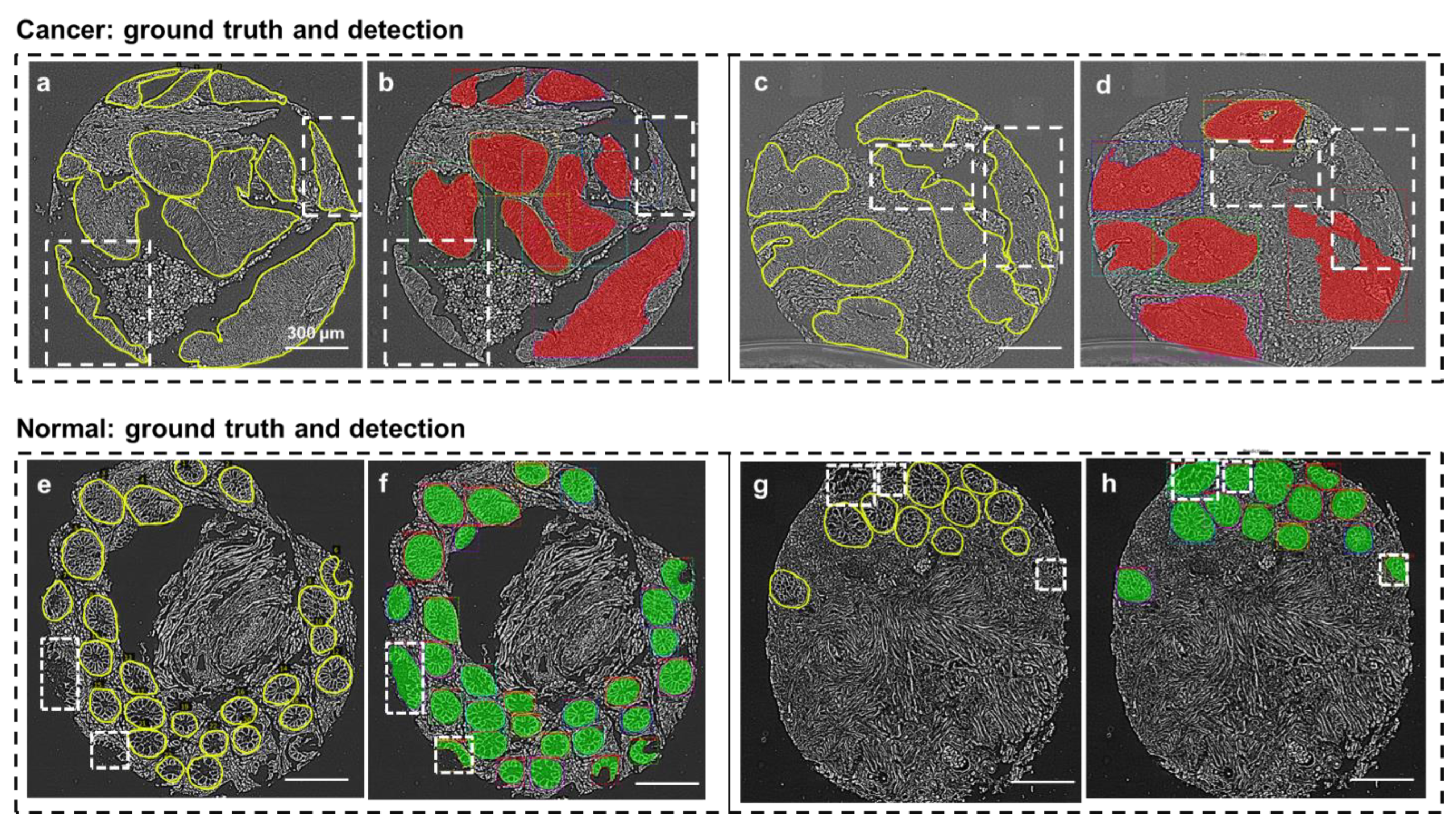

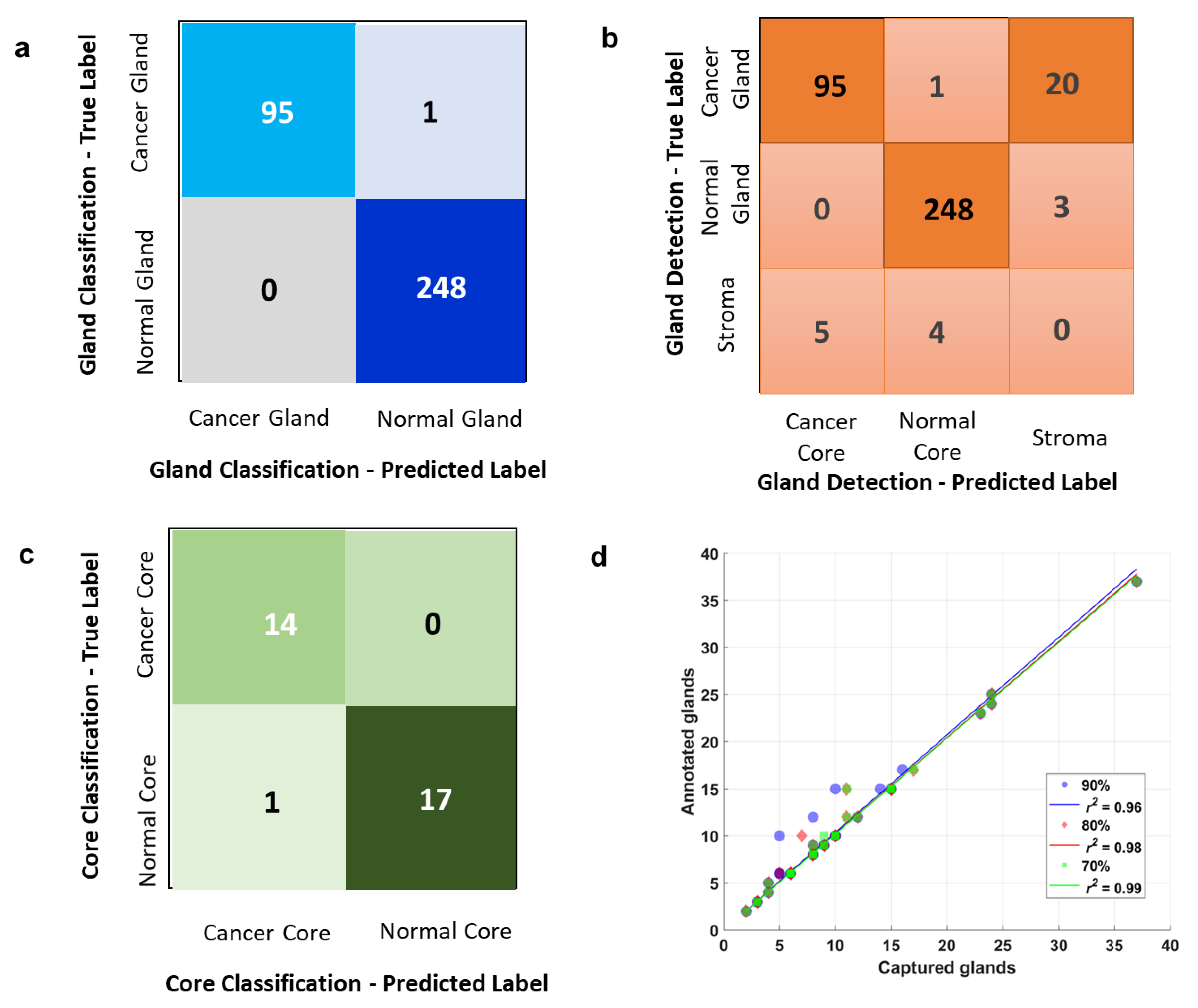

2.5. Overall Gland Classification and Detection

2.6. Overall Core Classification

2.7. Detection Performance at Three Different Confidence Scores

2.8. Accuracy Reports in Classification, Detecting and Diagnosis

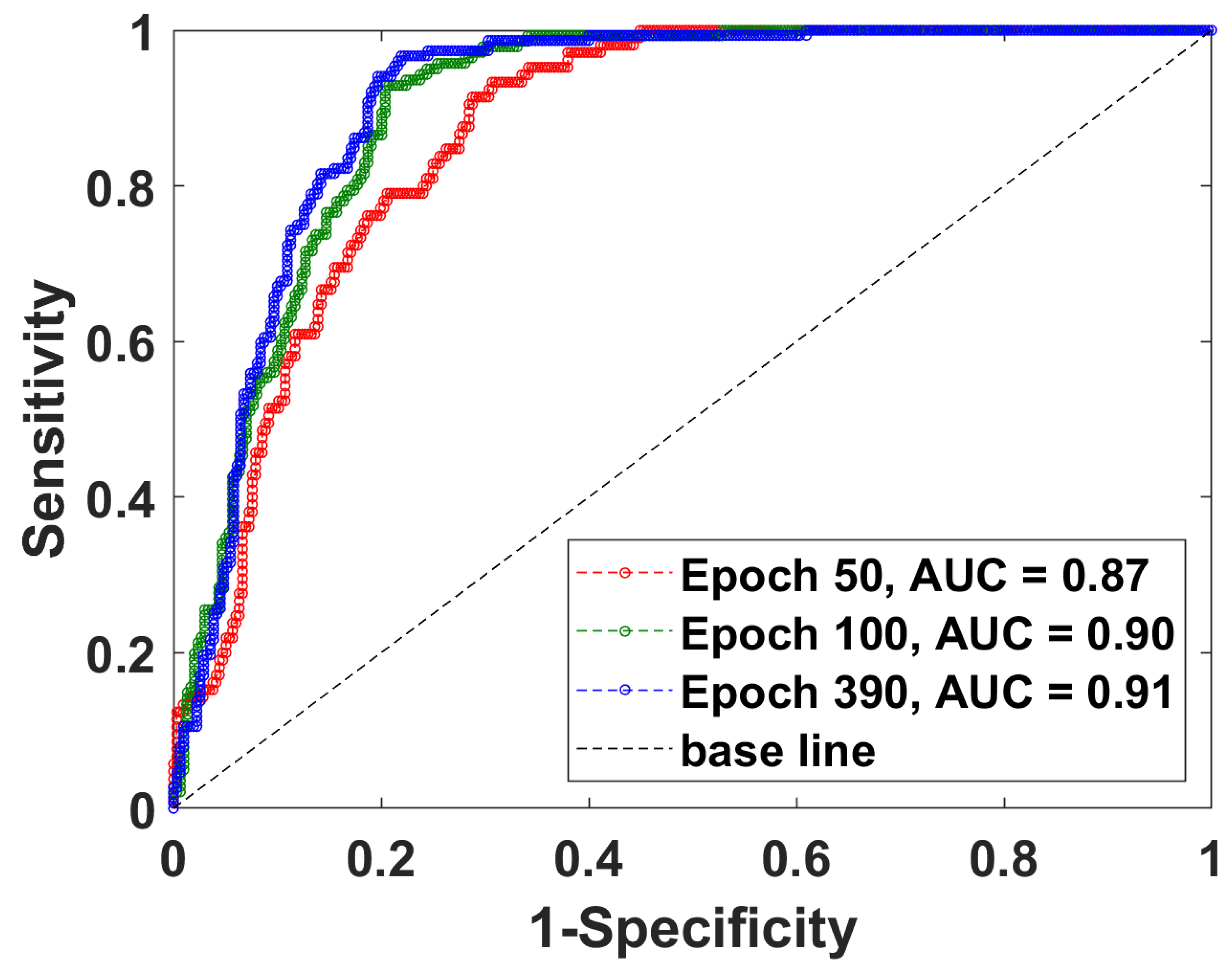

2.9. Detection Performance at Three Different Epochs

3. Summary and Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | Total Images | Cancer Images | Normal Images | Percentage of Total |
|---|---|---|---|---|
| Train | 196 | 98 | 98 | 76% |
| Validation | 30 | 15 | 15 | 12% |
| Test | 32 | 14 | 18 | 12% |
| Total | 258 | 127 | 131 | N/A |
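
The percentages in the table above are each split's share of the 258 images. A minimal sketch of that arithmetic follows; the helper name `split_percentages` is illustrative and does not come from the article.

```python
# Image counts per split, copied from the table above:
# (total images, cancer images, normal images)
splits = {
    "Train": (196, 98, 98),
    "Validation": (30, 15, 15),
    "Test": (32, 14, 18),
}


def split_percentages(counts):
    """Return each split's share of all images, rounded to a whole percent."""
    grand_total = sum(total for total, _, _ in counts.values())
    return {
        name: round(100 * total / grand_total)
        for name, (total, _, _) in counts.items()
    }


# Sanity check: cancer and normal counts add up to each split's total.
for name, (total, cancer, normal) in splits.items():
    assert cancer + normal == total, name

percentages = split_percentages(splits)
print(percentages)  # {'Train': 76, 'Validation': 12, 'Test': 12}
```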

| | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| Cancer Gland | 0.95 | 0.82 | 0.88 | 116 |
| Normal Gland | 0.98 | 0.99 | 0.98 | 251 |
| Stroma | 0.00 | 0.00 | 0.00 | 9 |
| Accuracy | | | 0.91 | 376 |
| Macro Avg | 0.64 | 0.60 | 0.62 | 376 |
| Weighted Avg | 0.95 | 0.91 | 0.93 | 376 |
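
The Macro Avg and Weighted Avg rows follow from the per-class rows by simple arithmetic. The sketch below assumes the usual definitions (an unweighted mean across classes for the macro average, a support-weighted mean for the weighted average) and recomputes both from the values in the table. The helper names are illustrative and are not taken from the article.

```python
# Per-class rows copied from the table above:
# (precision, recall, f1, support)
rows = {
    "Cancer Gland": (0.95, 0.82, 0.88, 116),
    "Normal Gland": (0.98, 0.99, 0.98, 251),
    "Stroma": (0.00, 0.00, 0.00, 9),
}


def macro_avg(rows):
    """Unweighted mean of precision, recall and F1 across classes."""
    n = len(rows)
    return tuple(
        round(sum(r[i] for r in rows.values()) / n, 2) for i in range(3)
    )


def weighted_avg(rows):
    """Mean of precision, recall and F1, weighted by class support."""
    total = sum(r[3] for r in rows.values())
    return tuple(
        round(sum(r[i] * r[3] for r in rows.values()) / total, 2)
        for i in range(3)
    )


print(macro_avg(rows))     # (0.64, 0.6, 0.62)
print(weighted_avg(rows))  # (0.95, 0.91, 0.93)
```

Because the Stroma class scores zero on all three metrics, it pulls the macro average down to about 0.6, while its small support (9 of 376) leaves the weighted average almost unchanged.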

| | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| Cancer Gland | 1.00 | 0.99 | 0.99 | 96 |
| Normal Gland | 1.00 | 1.00 | 1.00 | 248 |
| Accuracy | | | 1.00 | 344 |
| Macro Avg | 1.00 | 0.99 | 1.00 | 344 |
| Weighted Avg | 1.00 | 1.00 | 1.00 | 344 |

| | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| Cancer | 0.93 | 1.00 | 0.97 | 14 |
| Normal | 1.00 | 0.94 | 0.97 | 18 |
| Accuracy | | | 0.97 | 32 |
| Macro Avg | 0.97 | 0.97 | 0.97 | 32 |
| Weighted Avg | 0.97 | 0.97 | 0.97 | 32 |
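
The article text available here does not give the confusion matrix behind this 32-sample table. As an illustration only, the sketch below computes precision, recall, F1 and accuracy from a confusion matrix and applies them to one hypothetical matrix (all 14 cancer samples called cancer, 1 of 18 normal samples called cancer). That matrix is an inference from the rounded values and supports above, not a reported result, and the function name `report` is likewise an assumption.

```python
def report(confusion, labels):
    """Per-class (precision, recall, F1, support) and overall accuracy.

    confusion[i][j] is the number of samples whose true class is i
    and whose predicted class is j.
    """
    n = len(labels)
    total = sum(sum(row) for row in confusion)
    per_class = {}
    for k, label in enumerate(labels):
        tp = confusion[k][k]
        predicted = sum(confusion[i][k] for i in range(n))  # column sum
        actual = sum(confusion[k])                          # row sum
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        per_class[label] = (
            round(precision, 2), round(recall, 2), round(f1, 2), actual
        )
    accuracy = round(sum(confusion[k][k] for k in range(n)) / total, 2)
    return per_class, accuracy


# Hypothetical matrix, inferred from the table (NOT reported in the source):
# rows are true Cancer / Normal, columns are predicted Cancer / Normal.
hypothetical = [[14, 0],
                [1, 17]]

per_class, accuracy = report(hypothetical, ["Cancer", "Normal"])
print(per_class)  # {'Cancer': (0.93, 1.0, 0.97, 14), 'Normal': (1.0, 0.94, 0.97, 18)}
print(accuracy)   # 0.97
```

Under this assumed matrix, the single error is a normal sample predicted as cancer, which matches the pattern of perfect cancer recall and slightly reduced cancer precision in the table.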

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, J.K.; Fanous, M.; Sobh, N.; Kajdacsy-Balla, A.; Popescu, G. Automatic Colorectal Cancer Screening Using Deep Learning in Spatial Light Interference Microscopy Data. Cells 2022, 11, 716. https://doi.org/10.3390/cells11040716