Abstract

Efficient and accurate weed detection in wheat fields is critical for precision agriculture, helping to optimize crop yield and minimize herbicide usage. A dataset for weed detection in wheat fields was created, comprising 5967 images across eight well-balanced weed categories and covering the entire growth cycle of spring wheat together with the weed species observed throughout this period. Based on this dataset, PMDNet, an improved object detection model built upon the YOLOv8 architecture, was developed and optimized for wheat field weed detection. PMDNet incorporates the Poly Kernel Inception Network (PKINet) as the backbone, the self-designed Multi-Scale Feature Pyramid Network (MSFPN) for multi-scale feature fusion, and Dynamic Head (DyHead) as the detection head. Compared to the baseline YOLOv8n model, PMDNet increased mAP@0.5 from 83.6% to 85.8% (+2.2 percentage points) and mAP@0.50:0.95 from 65.7% to 69.6% (+3.9 percentage points, a relative gain of 5.9%). Furthermore, PMDNet outperformed several classical single-stage and two-stage object detection models, achieving the highest precision (94.5%, 14.1% higher than Faster-RCNN) and mAP@0.5 (85.8%, 5.4% higher than RT-DETR-L). Under the stricter mAP@0.50:0.95 metric, PMDNet reached 69.6%, surpassing Faster-RCNN by 16.7% and RetinaNet by 13.1%. Real-world video detection tests further validated PMDNet’s practicality: the model ran at 87.7 FPS and detected weeds precisely against complex backgrounds and at small target sizes. These advancements highlight PMDNet’s potential for practical applications in precision agriculture, providing a robust solution for weed management and contributing to the development of sustainable farming practices.

1. Introduction

With the continuous growth of the global population, food security is becoming an increasingly pressing issue. By 2050, the global population is expected to exceed 9 billion, which would require a 70% to 100% increase in food production to meet the rising demand [1]. As one of the most important staple crops worldwide, wheat plays a crucial role in the global food system. According to a report by the Food and Agriculture Organization (FAO), wheat is the most widely cultivated cereal globally and provides a primary food source for billions of people each year [2]. However, the increase in wheat yields is confronted by several challenges, among which weed infestation is a significant factor.

Weeds compete with wheat for resources such as water, nutrients, and sunlight, significantly affecting the growth and yield of wheat. For instance, the study by Anwar et al. [3] (2021) demonstrates that the presence of weeds significantly reduces yield, especially in the context of climate change. Colbach et al. [4] (2014) further point out that weed competition severely reduces wheat yield, with the intensity of the competition directly correlated to the weed biomass. Additionally, Jalli et al. [5] (2021) emphasize that weeds compete for resources, potentially reducing crop yield, and that diverse crop rotations can help mitigate the severity of plant diseases and pest occurrences.

Moreover, Javaid et al. [6] (2022) observed that the uncontrolled growth of weeds significantly reduces wheat productivity by affecting the photosynthetic activity and overall physiological performance of the crop. Similarly, Usman et al. [7] (2010) conducted research in Northwestern Pakistan and demonstrated that insufficient weed control drastically lowers wheat yields. Their study found that the use of reduced and zero tillage systems, in combination with appropriate herbicides such as Affinity, significantly improved weed control efficiency (up to 94.1%) and resulted in higher wheat productivity compared to conventional tillage. Therefore, the implementation of effective weed management strategies is crucial for maintaining wheat health and productivity.

Traditional weed management methods in agriculture, particularly in wheat fields, such as manual weeding and herbicide use, face significant limitations. Manual weeding, while effective in precisely removing weeds, is labor-intensive and time-consuming. It requires significant manpower, which is often expensive and scarce, especially in large-scale agricultural operations. Furthermore, manual weeding is not always feasible due to its dependency on specific timing during the growing season. Shamshiri et al. [8] (2024) pointed out that although manual weeding is considered efficient in selective weed removal, its high labor costs and limited scalability make it impractical for modern agricultural systems.

On the other hand, herbicide use, while effective for large-scale weed control, has led to increasing issues of herbicide-resistant weed species and environmental contamination. Recent research has shown that the overuse of herbicides accelerates the development of resistant weed populations, complicating long-term management. For example, Reed et al. [9] (2024) noted that herbicide resistance in barnyardgrass continues to increase, leading farmers to apply higher doses or a mix of chemicals, which not only raises production costs but also exacerbates environmental concerns related to soil and water contamination.

Given the challenges associated with traditional weed management, effective weed identification is crucial in wheat fields. Meesaragandla et al. [10] (2023) emphasize this point for modern agriculture in general and for wheat fields in particular, and their study highlights how advanced detection techniques improve the precision and effectiveness of weed control strategies.

In recent years, with the rapid development of computer vision and deep learning technologies, automated image recognition has become increasingly prevalent in agriculture. Significant progress in object detection enables weed recognition through machine vision, enhancing agricultural precision while reducing herbicide use and promoting sustainable farming.

For example, Punithavathi et al. [11] (2023) proposed a model combining computer vision and deep learning that significantly improves weed detection in precision agriculture. Additionally, Razfar et al. [12] (2022) developed a lightweight deep learning model successfully applied to weed detection in soybean fields, achieving higher efficiency with reduced computational costs.

At the same time, Rakhmatulin et al. [13] (2021) provided a comprehensive review of various deep learning models applied to real-time weed detection in agricultural settings, discussing current challenges and future directions. These studies demonstrate that deep learning-based weed recognition technologies not only enhance agricultural productivity but also drive the advancement of smart farming.

The YOLO (You Only Look Once) series of models [14] represents cutting-edge technology in the field of object detection. Known for their real-time efficiency and high detection accuracy, YOLO models are widely applied across object detection tasks. The core advantage of YOLO lies in its ability to complete both object localization and classification in a single network pass, which makes detection considerably faster than in multi-stage detection pipelines.

Due to its end-to-end architecture, high detection speed, and accuracy, YOLO has become a major focus in precision agriculture, especially for real-time applications such as weed detection using drone monitoring systems and smart farming equipment. As noted by Gallo et al. [15] (2023), the YOLOv7 model excels in weed detection in precision agriculture, offering fast and precise detection through UAV images.

Compared to other deep learning models, the YOLO model’s lightweight structure allows it to operate efficiently on resource-constrained devices. The proliferation of the Internet of Things (IoT) in agriculture has further expanded YOLO’s potential for application in low-power embedded systems. Zhang et al. [16] (2024) successfully deployed the GVC-YOLO model on the Nvidia Jetson Xavier NX edge computing device, utilizing real-time video captured by field cameras to detect and process aphid damage on cotton leaves. This application not only reduces the need for manual intervention and labor costs but also promotes the development of intelligent agriculture.

The standard YOLO model faces significant challenges in complex agricultural environments, particularly in detecting weeds and wheat under conditions of occlusion and overlap. Wheat fields often feature complex backgrounds, variable lighting (e.g., strong sunlight, shadows), and changing weather conditions (e.g., sunny, cloudy, foggy), all of which degrade detection performance. Additionally, morphological similarities between weed species and wheat, as well as among weed species themselves, further complicate classification, increasing false positives and missed detections. These limitations hinder the model’s reliability in real-world agricultural applications, highlighting the need for optimization to enhance adaptability and accuracy.

To address these issues, this study proposes PMDNet, an improved model built upon the YOLOv8 [17] framework, introducing targeted enhancements specifically tailored to the unique scenarios and challenges of wheat fields. These improvements are designed to significantly enhance both detection accuracy and efficiency in weed identification.

2. Related Work

2.1. Traditional Methods for Weed Identification in Wheat Fields

In traditional agricultural practices, weed identification predominantly depends on the farmers’ experience and visual observation skills. This manual approach requires substantial field expertise, as farmers distinguish weeds from crops based on visible characteristics such as leaf shape, color, and stem structure. Although effective when distinguishing clearly different species, this method is labor-intensive and varies in accuracy, contingent upon the individual farmer’s expertise.

In certain cases, farmers employ simple tools like magnifying glasses to enhance their observation of plant characteristics. However, these traditional techniques remain manual and susceptible to human error. As agricultural practices expand in scale, the limitations in efficiency and precision underscore the necessity for more advanced and systematic methods for weed identification.

2.2. Application of Deep Learning in Weed Identification

Recent advancements in deep learning have significantly impacted the agricultural sector, particularly in weed identification. Traditional weed management methods, relying on manual identification or excessive herbicide use, are time-consuming and environmentally harmful. Deep learning and computer vision technologies now enable automated weed identification systems, leveraging models like Convolutional Neural Networks and YOLO to accurately classify and identify weeds and crops, offering critical support for precision agriculture.

For instance, Upadhyay et al. [18] (2024) developed a machine vision and deep learning-based smart sprayer system aimed at site-specific weed management in row crops. The study utilized edge computing technology to enable real-time identification of multiple weed species and precise spraying, thereby reducing unnecessary chemical usage and enhancing the sustainability of crop production.

In another study, Ali et al. [19] (2024) introduced a comprehensive dataset for weed detection in rice fields in Bangladesh, using deep learning for identification. The dataset, consisting of 3632 high-resolution images of 11 common weed species, provides a foundational resource for developing and evaluating advanced machine learning algorithms, enhancing the practical application of weed detection technology in agriculture.

Additionally, Coleman et al. [20] (2024) presented a multi-growth stage plant recognition system for Palmer amaranth (Amaranthus palmeri) in cotton (Gossypium hirsutum), utilizing various YOLO architectures. Among these, YOLOv8-X achieved the highest detection accuracy (mean average precision, mAP@[0.5:0.95]) of 47.34% across eight growth stages, and YOLOv7-Original attained 67.05% for single-class detection, demonstrating the significant potential of YOLO models for phenotyping and weed management applications.

The application of deep learning in weed identification enables precision agriculture by reducing herbicide usage, improving weed recognition accuracy, and ultimately minimizing the environmental impact of agricultural activities.

2.3. Overview of YOLO Model

The development of the YOLO model began in 2016, when Redmon et al. [14] (2016) introduced the initial version. This pioneering model reframed object detection as a single-stage regression task, achieving remarkable speed and positioning YOLO as a crucial model for real-time applications. Building on this foundation, YOLOv2 [21] incorporated strategies such as Batch Normalization, Anchor Boxes, and multi-scale training, leading to notable improvements in accuracy and generalizability. In 2018, YOLOv3 [22] further refined the network structure, employing a Feature Pyramid Network (FPN) to facilitate multi-scale feature detection and thereby significantly improving detection performance for small objects.

In 2020, YOLOv4, led by Bochkovskiy et al. [23] (2020), integrated several advancements, such as CSPNet, Mish activation, and CIoU loss, achieving a balanced trade-off between detection precision and speed, solidifying its position as a standard in the field. Ultralytics then released YOLOv5 [24], focusing on a lightweight design that enabled efficient inference even on mobile and resource-constrained devices. As YOLO continued to gain prominence in diverse applications, further iterations were introduced, including YOLOv6 [25] and YOLOv7 [26], which employed depthwise separable convolutions and modules inspired by EfficientNet [27] to optimize both model efficiency and inference speed. YOLOv8 builds on these foundations, delivering enhanced performance and robustness in multi-object detection tasks.

2.4. Current Research on Weed Identification Based on Improved YOLO Models

In recent years, YOLO models have been widely applied to agricultural weed identification, with researchers improving accuracy and real-time performance through various optimizations. This section reviews current advancements in YOLO-based weed detection and analyzes their limitations, identifying areas for further improvement.

(1) Enhancements in Precision and Detection Capability

Recent advancements in object detection algorithms have significantly improved precision in agricultural weed detection. In a study by Sportelli et al. [28] (2023), various YOLO object detectors, including YOLOv5, YOLOv6, YOLOv7, and YOLOv8, were evaluated for detecting weeds in turfgrass under varying conditions. YOLOv8l demonstrated the highest performance, achieving a precision of 94.76%, mAP_0.5 of 97.95%, and mAP_0.5:0.95 of 81.23%. However, challenges remain, as the results on additional datasets revealed limitations in robustness when dealing with diverse and complex backgrounds. The study highlighted the potential for further enhancements, such as integrating advanced annotation techniques and incorporating diverse vegetative indices to better address challenges like overlapping weeds and inconsistent lighting conditions.

(2) Lightweight Design and Real-Time Optimization

To improve detection speed and accuracy, several studies have focused on lightweight versions of the YOLO model. Zhu et al. [29] (2024) proposed a YOLOx model enhanced with a lightweight attention module and a deconvolution layer, significantly reducing computational load and improving real-time detection and small feature extraction. However, the model’s precision still faces challenges in complex agricultural environments where weed occlusion is frequent, indicating a need for further optimization in robustness and feature extraction efficiency.

(3) Multi-Class Identification and Classification

In cotton production, Dang et al. [30] (2022) developed the YOLOWeeds model and created the CottonWeedDet12 dataset for multi-class weed detection. Their approach successfully classified various types of weeds in cotton fields, achieving high accuracy with data augmentation for improved model adaptability. However, the study primarily focused on cotton-specific environments, and the model’s transferability to other crops or environments with greater weed diversity was not explored in detail, suggesting a potential area for further research.

(4) Incorporating Attention Mechanisms

Some studies have enhanced YOLO models with attention mechanisms to improve focus and precision in weed detection tasks. For example, Chen et al. [31] (2022) introduced the YOLO-sesame model, which integrates an attention mechanism, local importance pooling in the SPP layer, and an adaptive spatial feature fusion structure to address variations in weed size and shape. The model achieved superior detection performance in sesame fields, with a mAP of 96.16% and F1 scores of 0.91 and 0.92 for sesame crops and weeds, respectively. Although the YOLO-sesame model showed promising results, further research is needed to test its adaptability in diverse agricultural environments.

While effective in specific contexts, improved YOLO models face limitations in robustness and accuracy under diverse conditions, such as varying lighting and complex backgrounds. Additionally, their performance is often crop-specific, lacking the generalizability required for broader agricultural applications.

3. Dataset Construction and Preprocessing

3.1. Dataset Construction

The dataset was collected in Anding District, Dingxi City, Gansu Province, China, with geographical coordinates at 104°39′3.05″ E longitude and 35°34′45.4″ N latitude. It covers the entire growth cycle of spring wheat, with the collection period spanning from 8 April 2024 to 20 July 2024. The dataset includes key phenological stages such as seedling emergence, tillering, stem elongation, heading, grain filling, and maturation.

The data were gathered using a smartphone with a 40-megapixel primary camera. All photos were captured at a resolution of 2736 × 2736 pixels. Images were taken at a distance of approximately 30 cm to 100 cm from the ground, ensuring that the weeds were clearly visible while maintaining the natural context of the wheat field. Considering the training effectiveness of the YOLOv8 model, each image contains between 1 and 5 bounding boxes of target weeds, reflecting the natural distribution and density of the weeds in the field.

To ensure environmental diversity, the dataset includes images taken under various weather conditions, such as sunny, cloudy, and rainy days. Multiple shooting angles were employed, including vertical shots, frontal views, and 45-degree angles, enhancing the comprehensiveness of the dataset. A total of 4274 images were collected, covering the eight most common weed species in the collection area.

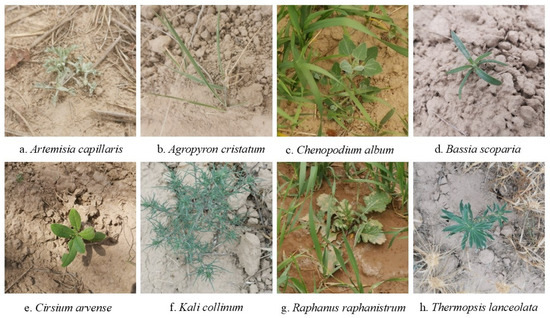

The targeted weed species, as shown in Figure 1, include Artemisia capillaris, Agropyron cristatum, Chenopodium album, Bassia scoparia, Cirsium arvense, Kali collinum, Raphanus raphanistrum, and Thermopsis lanceolata.

Figure 1. Example of weeds.

3.2. Data Annotation

Data annotation was performed using LabelMe software. Trained personnel labeled each image in detail, consulting local wheat field farmers to ensure accurate identification of weed species, and each weed species was labeled using its Latin scientific name. Each image contained 1 to 5 annotation boxes, resulting in a total of 10,127 initial annotation boxes. After the initial labeling, weed specialists reviewed and verified all annotations, paying particular attention to overlapping or occluded weeds, to guarantee the precision and consistency of the labels.
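Because LabelMe stores boxes as pixel coordinates while YOLO-style training expects normalized center/size rows, a conversion step is usually needed between annotation and training. The sketch below illustrates one way to do this. It assumes the boxes were drawn as LabelMe rectangle shapes (two corner points) and that class indices are assigned by a lookup table; the function name `labelme_rect_to_yolo` and the class index used in the example are hypothetical, and the source does not describe the conversion actually used.

```python
def labelme_rect_to_yolo(shape, image_width, image_height, class_ids):
    """Convert one LabelMe rectangle to a YOLO row (cls, cx, cy, w, h).

    `shape` is one entry of the "shapes" list in a LabelMe JSON file and is
    assumed to hold two corner points in pixel coordinates.
    """
    (x1, y1), (x2, y2) = shape["points"]
    x_min, x_max = sorted((x1, x2))
    y_min, y_max = sorted((y1, y2))
    cx = (x_min + x_max) / 2 / image_width    # normalized box center (x)
    cy = (y_min + y_max) / 2 / image_height   # normalized box center (y)
    w = (x_max - x_min) / image_width         # normalized box width
    h = (y_max - y_min) / image_height        # normalized box height
    return (class_ids[shape["label"]], cx, cy, w, h)


# Illustrative annotation on a 2736 x 2736 image (values are made up).
example_shape = {
    "label": "Chenopodium album",
    "shape_type": "rectangle",
    "points": [[684.0, 1368.0], [1368.0, 2052.0]],
}
class_ids = {"Chenopodium album": 2}  # hypothetical index assignment
row = labelme_rect_to_yolo(example_shape, 2736, 2736, class_ids)
print(row)
```

Sorting the corner coordinates makes the conversion independent of the direction in which the annotator dragged the rectangle.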

3.3. Data Augmentation and Data Splitting

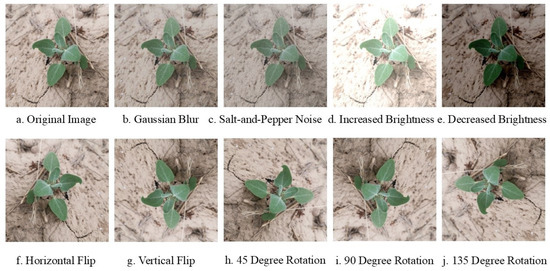

To address the initial imbalance in the collection of images across different categories, several data augmentation techniques were implemented to supplement and balance the dataset, improving its robustness, as discussed in Xu et al. [32] (2023). This approach is illustrated in Figure 2, demonstrating how these techniques result in a more representative and varied dataset. These techniques included adjusting brightness levels to simulate various lighting conditions in real-world environments, ensuring the model can effectively recognize weed species under different illumination, as seen in subfigures (d) Increased Brightness and (e) Decreased Brightness. Gaussian blur was applied to mimic varying focus conditions, as depicted in subfigure (b) Gaussian Blur, helping the model identify weeds even when images are not perfectly in focus. Additionally, salt-and-pepper noise was introduced to replicate disturbances in real-world imaging scenarios, enhancing the model’s resilience to noise, as illustrated in subfigure (c) Salt-and-Pepper Noise.

Figure 2. Example of data augmentation.

Furthermore, horizontal and vertical flips were performed to capture the weed species from different orientations, represented in subfigures (f) Horizontal Flip and (g) Vertical Flip. Finally, several rotational transformations were applied, including 45 degrees, 90 degrees, and 135 degrees, to ensure the model can recognize the weeds from multiple perspectives, as indicated in subfigures (h), (i), and (j), respectively. This comprehensive approach to data augmentation helps create a more balanced and effective training dataset, ultimately enhancing the model’s performance in identifying weed species.
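The augmentations described above can be sketched with NumPy alone. This is a minimal illustration, not the authors' pipeline: the function names, the brightness factors, the blur width, and the noise amount are all assumptions, since the source does not report its parameter values. The 45-degree and 135-degree rotations are omitted because they need interpolation and box re-fitting; only the 90-degree case is shown. Boxes are assumed to be normalized YOLO rows `(cls, cx, cy, w, h)`.

```python
import numpy as np


def adjust_brightness(img, factor):
    """Scale pixel intensities; factor > 1 brightens, factor < 1 darkens."""
    return np.clip(img.astype(np.float32) * factor, 0, 255).astype(np.uint8)


def gaussian_blur(img, sigma):
    """Separable Gaussian blur with edge padding on an (H, W, C) image."""
    radius = max(1, int(3 * sigma))
    offsets = np.arange(-radius, radius + 1)
    kernel = np.exp(-(offsets ** 2) / (2.0 * sigma ** 2))
    kernel /= kernel.sum()
    out = img.astype(np.float32)
    for axis in (0, 1):  # blur along rows, then along columns
        pad = [(0, 0)] * out.ndim
        pad[axis] = (radius, radius)
        padded = np.pad(out, pad, mode="edge")
        n = out.shape[axis]
        out = sum(w * np.take(padded, np.arange(i, i + n), axis=axis)
                  for i, w in enumerate(kernel))
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)


def salt_and_pepper(img, amount, rng):
    """Set roughly `amount` of the pixels to pure black or pure white."""
    out = img.copy()
    draw = rng.random(img.shape[:2])
    out[draw < amount / 2] = 0          # pepper
    out[draw > 1 - amount / 2] = 255    # salt
    return out


def hflip(img, boxes):
    """Horizontal flip: mirror the image and the box centers in x."""
    return img[:, ::-1], [(c, 1.0 - cx, cy, w, h) for c, cx, cy, w, h in boxes]


def vflip(img, boxes):
    """Vertical flip: mirror the image and the box centers in y."""
    return img[::-1], [(c, cx, 1.0 - cy, w, h) for c, cx, cy, w, h in boxes]


def rot90_ccw(img, boxes):
    """Rotate 90 degrees counterclockwise; box width and height swap."""
    return np.rot90(img), [(c, cy, 1.0 - cx, h, w) for c, cx, cy, w, h in boxes]


rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)
boxes = [(0, 0.25, 0.5, 0.1, 0.2)]
brighter = adjust_brightness(image, 1.3)
blurred = gaussian_blur(image, sigma=1.0)
noisy = salt_and_pepper(image, amount=0.02, rng=rng)
flipped_image, flipped_boxes = hflip(image, boxes)
```

The photometric transforms (brightness, blur, noise) leave the labels untouched, whereas the geometric transforms must update the boxes together with the pixels, which is why the flip and rotation helpers return both.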

In terms of data splitting, the original dataset comprised 4274 images, and data augmentation increased the total to 5967 images with 12,866 labeled boxes. From these, 600 images were randomly selected for the validation set and 1167 images for the test set, with the remaining 4200 images forming the training set. The proportions of the training, validation, and test sets are therefore approximately 7:1:2.
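The split sizes above can be reproduced with a seeded shuffle, as in the following sketch. The function name `split_dataset`, the seed, and the placeholder image identifiers are assumptions for illustration; the source states only that the validation and test images were selected at random.

```python
import random


def split_dataset(items, n_val, n_test, seed=0):
    """Shuffle once with a fixed seed, then cut into train/val/test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    val = shuffled[:n_val]
    test = shuffled[n_val:n_val + n_test]
    train = shuffled[n_val + n_test:]
    return train, val, test


image_ids = [f"img_{i:04d}" for i in range(5967)]  # placeholder identifiers
train, val, test = split_dataset(image_ids, n_val=600, n_test=1167)
shares = [round(len(part) / len(image_ids), 3) for part in (train, val, test)]
print(len(train), len(val), len(test), shares)
```

Fixing the seed keeps the three subsets reproducible across runs. The source does not say whether augmented variants of one photograph were kept within a single subset; grouping by source image before shuffling would be one way to enforce that.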

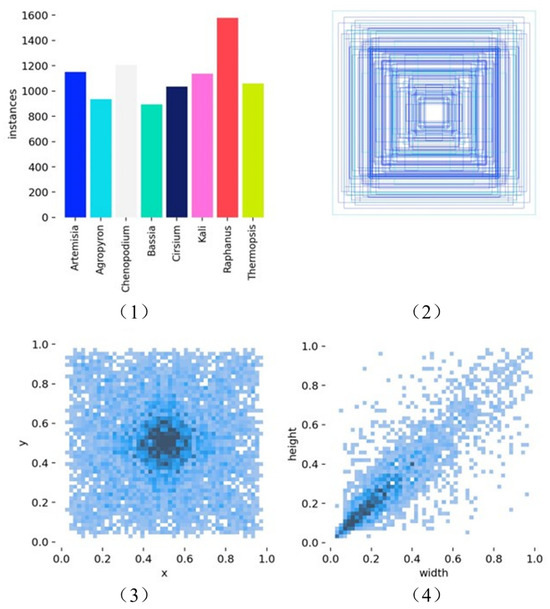

Figure 3 provides a comprehensive view of the training set’s label distribution and bounding box characteristics after data augmentation:

Figure 3. Training set label distribution: (1) category instances; (2) bounding box shapes; (3) bounding box position (x vs. y); (4) bounding box dimensions (width vs. height).

(1) Top Left (Category Instances): The bar chart shows the instance count for each weed species category in the training set. Post data augmentation, the sample distribution across categories has been balanced, ensuring that each class is represented by a relatively equal number of instances.

(2) Top Right (Bounding Box Shapes): This plot demonstrates the variety of bounding box shapes by overlaying multiple boxes. The boxes exhibit consistent proportions, supporting a balanced representation of object shapes across categories.

(3) Bottom Left (Bounding Box Position—x vs. y): The heatmap displays the distribution of bounding box center coordinates within the image space. A high density at the center of the plot indicates that many bounding boxes are centrally located in the images, though there is some spread across other regions as well, allowing the model to learn positional variations.

(4) Bottom Right (Bounding Box Dimensions—width vs. height): This scatter plot depicts the relationship between the width and height of bounding boxes. The diagonal trend indicates that bounding boxes maintain consistent aspect ratios, promoting uniform object representation across categories and aiding the model in recognizing similar objects with varying dimensions.

4. Model Optimization and Improvements

4.1. Introduction to YOLOv8 Model

The YOLOv8 model architecture consists of three main components: the backbone network, the feature fusion layer, and the detection head. Each component plays a critical role in enhancing the model’s performance across various detection tasks, including small object detection, multi-scale feature fusion, and flexible adaptation to diverse object shapes and sizes.

(1) Backbone Network: The backbone uses Cross-Stage Partial Network (CSPNet) [33] style blocks to extract features at multiple scales, followed by a Spatial Pyramid Pooling (SPP) [34] based module that enlarges the receptive field and aggregates multi-scale context, which benefits small object detection.

(2) Feature Fusion Layer: This layer employs a Feature Pyramid Network (FPN) [36] + Path Aggregation Network (PAN) [35] structure to merge multi-scale feature maps, improving feature propagation and integration and thereby the detection of objects of different sizes.

(3) Detection Head: YOLOv8 features an anchor-free detection head, which simplifies training and enhances flexibility, allowing the model to adapt better to various object shapes and sizes, resulting in improved localization and accuracy.

4.2. Model Optimization

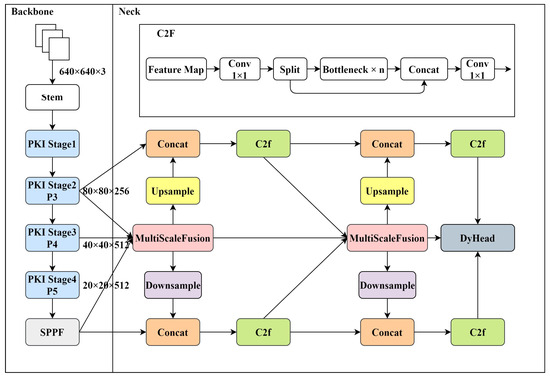

To develop a better model for detecting weeds in wheat fields, we based our work on the YOLOv8 model and made improvements to its three components: the backbone network, the feature fusion layer, and the detection head, as shown in Figure 4. These enhancements resulted in a new and optimized detection model named PMDNet, designed specifically to address the challenges of weed detection in agricultural scenarios.

Figure 4. Network structure diagram of the PMDNet model. Note: Stem is the initial preprocessing module for feature extraction; SPPF is a spatial pyramid pooling module for enhancing multi-scale contextual information. Concat merges feature maps from different layers to preserve multi-scale details; C2f is a feature fusion component derived from YOLOv8, designed to optimize feature representation. Upsampling increases spatial resolution for fine-grained localization. Downsampling reduces spatial resolution to capture high-level semantic features effectively. P3–P5 represent outputs of different layer feature maps. PKI Stage is the core module of the PKINet backbone; MultiScaleFusion is the core module of the self-designed feature fusion layer MSFPN, and DyHead serves as the detection head. These three components are described in detail in the subsequent sections.

First, we introduce the Poly Kernel Inception Network (PKINet) [37] to enhance the backbone of YOLOv8. PKINet extracts features of objects at different scales using multi-scale convolutional kernels and incorporates a Context Anchor Attention (CAA) module to capture long-range contextual information, which is crucial for detecting spatial and contextual details in weed detection tasks.

Next, we design a Multi-Scale Feature Pyramid Network (MSFPN) tailored for PKINet as the feature fusion layer to further enhance YOLOv8’s feature fusion capabilities. MSFPN flexibly adjusts the structure of the feature pyramid to better meet the needs of weed detection in wheat fields, allowing the model to capture subtle differences between weeds and crops in agricultural environments. This custom structure facilitates better feature propagation and retention across scales.

Finally, we introduce Dynamic Head (DyHead) [38], a dynamic detection head based on attention mechanisms, to improve YOLOv8’s detection head. DyHead strengthens the representation power of the detection head by combining three attention mechanisms: across feature levels for scale awareness, across spatial locations for spatial awareness, and across output channels for task awareness. Together these enable more precise localization and identification of weeds in wheat fields.

4.2.1. Backbone Network: Improved with the Poly Kernel Inception Network (PKINet)

PKINet is a backbone network designed for object detection in remote sensing images. It extracts multi-scale features using parallel convolution kernels, effectively balancing local and global contextual information. This capability is particularly valuable for weed detection in wheat fields, where weed distribution and size vary widely and traditional single-scale convolutions fall short.

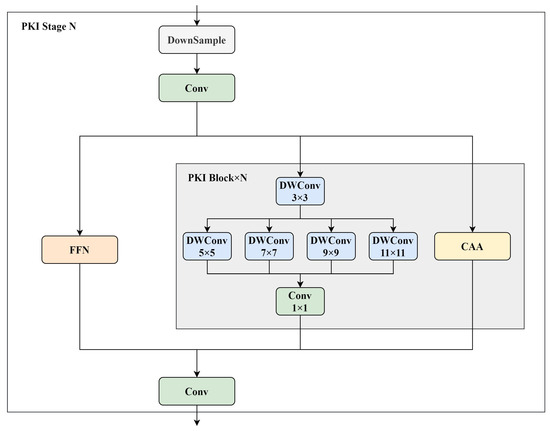

The backbone structure of PKINet is divided into four stages, each based on a CSP structure. As shown in Figure 5, the input features are split along the channel dimension, with one part processed by a Feed-Forward Network (FFN) and the other passing through PKI blocks to extract features. Each PKI block contains a PKI module and a Context Anchor Attention (CAA) module, enabling multi-scale feature extraction and long-range context modeling.

Figure 5. Structure diagram of PKI Stage. Note: FFN refers to the Feed-Forward Network, a fully connected layer used to process and transform feature representations. CAA refers to the Context Anchor Attention module, designed to capture long-range dependencies and enhance central feature regions, improving small object detection in complex backgrounds. Conv stands for a standard convolutional layer responsible for extracting local spatial features. DWConv refers to Depthwise Convolution, which reduces computation by applying spatial convolution independently to each channel and is often used to build lightweight neural networks.

The PKI module employs an Inception-style multi-scale convolution structure, using parallel depth-wise convolution kernels of varying sizes (e.g., 3 × 3, 5 × 5, 7 × 7). This design expands the receptive field without dilated convolutions, maintaining feature density and improving adaptability to varying scales, which is crucial for distinguishing small weeds from larger-scale wheat backgrounds.

The CAA module enhances long-range context modeling by capturing relationships between distant pixels with global average pooling and strip convolutions. This improves feature clarity and ensures small targets are not obscured by complex backgrounds, making PKINet highly effective for weed detection in wheat fields.

4.2.2. Feature Fusion Layer: Improved with Multi-Scale Feature Pyramid Network (MSFPN)

To enhance the YOLOv8 model for weed detection in wheat fields, we introduce MSFPN, a specialized feature fusion layer tailored for the PKINet backbone. MSFPN optimizes multi-scale feature integration to address the complex visual patterns in agricultural environments, enabling robust feature extraction and detailed resolution in detection tasks.

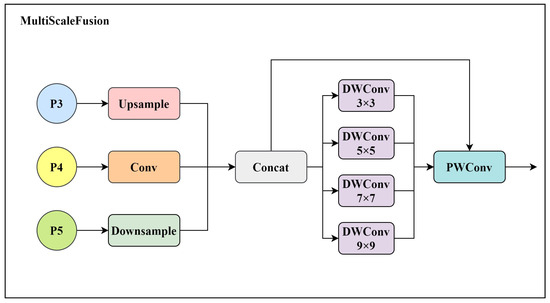

The core of MSFPN is the MultiScaleFusion module, which processes multi-scale feature maps (P3, P4, and P5) from the PKINet backbone. As shown in Figure 6, the higher-resolution P3 is downsampled, P4 remains unchanged, and the lower-resolution P5 is upsampled, so that all three maps share the spatial size of P4 before concatenation. This ensures a dense integration of hierarchical information, which is critical for detecting fine details in densely vegetated wheat fields.

Figure 6. Structure diagram of MultiScaleFusion. Note: PWConv refers to Pointwise Convolution, a 1 × 1 convolution used to adjust the channel dimensions of feature maps, enabling efficient feature transformation and information fusion with minimal computational cost.

The concatenated multi-scale feature map is processed through Depthwise Convolution (DWConv) layers with kernel sizes of 5, 7, 9, and 11. This multi-kernel approach allows MSFPN to capture spatial information at varying scales, improving its ability to detect small, occluded weeds. The outputs are summed and refined using a pointwise convolution (PWConv) for efficient feature representation. Finally, the refined features are combined with the original concatenated map in a residual manner, preserving spatial information and enhancing robustness.
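The data flow of MultiScaleFusion can be sketched in NumPy as follows. This is a forward-pass illustration under several assumptions that the source does not specify: feature maps are laid out as `(C, H, W)`, scale alignment uses nearest-neighbour resampling to the size of P4, the pointwise convolution keeps the channel count unchanged so that the residual sum is well defined, and all weights are random placeholders. The helper names are hypothetical.

```python
import numpy as np


def resize_nearest(x, size):
    """Nearest-neighbour resize of a (C, H, W) map to (C, size[0], size[1])."""
    _, h, w = x.shape
    rows = np.arange(size[0]) * h // size[0]
    cols = np.arange(size[1]) * w // size[1]
    return x[:, rows][:, :, cols]


def depthwise_conv(x, kernels):
    """Zero-padded depthwise conv: x is (C, H, W), kernels is (C, k, k)."""
    _, h, w = x.shape
    k = kernels.shape[-1]
    p = k // 2
    padded = np.pad(x, ((0, 0), (p, p), (p, p)))
    out = np.zeros_like(x)
    for i in range(k):
        for j in range(k):
            # Each channel is filtered only by its own kernel.
            out += kernels[:, i, j, None, None] * padded[:, i:i + h, j:j + w]
    return out


def multi_scale_fusion(p3, p4, p5, dw_kernels, pw_weight):
    """Align scales, concatenate, apply multi-kernel DWConv, PWConv, residual."""
    size = p4.shape[1:]
    fused = np.concatenate(
        [resize_nearest(p3, size), p4, resize_nearest(p5, size)], axis=0)
    summed = sum(depthwise_conv(fused, k) for k in dw_kernels)
    refined = np.einsum("oc,chw->ohw", pw_weight, summed)  # 1 x 1 convolution
    return fused + refined  # residual connection to the concatenated map


rng = np.random.default_rng(0)
c = 4  # channels per pyramid level (illustrative)
p3, p4, p5 = (rng.standard_normal((c, s, s)) for s in (16, 8, 4))
dw_kernels = [rng.standard_normal((3 * c, k, k)) * 0.01 for k in (5, 7, 9, 11)]
pw_weight = rng.standard_normal((3 * c, 3 * c)) * 0.01
out = multi_scale_fusion(p3, p4, p5, dw_kernels, pw_weight)
print(out.shape)  # (12, 8, 8)
```

Because each depthwise kernel touches one channel at a time, the four large kernels add spatial context at modest cost, and the single pointwise convolution is the only step that mixes information across channels.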

This dense, multi-scale integration improves YOLOv8’s sensitivity to small, intricate features in complex agricultural backgrounds, boosting its performance for accurate weed detection.
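The fusion steps described in this subsection can be sketched in PyTorch as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the class name `MultiScaleFusionSketch`, the channel arguments, the nearest-neighbor resampling to the size of P4, and the choice to keep the channel count unchanged in the pointwise convolution are all hypothetical.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class MultiScaleFusionSketch(nn.Module):
    """Hypothetical sketch of the described fusion steps (not the authors' code)."""

    def __init__(self, c3, c4, c5, kernel_sizes=(5, 7, 9, 11)):
        super().__init__()
        channels = c3 + c4 + c5  # channel count after concatenation
        # One depthwise convolution per kernel size (groups == channels).
        self.dw_convs = nn.ModuleList(
            [nn.Conv2d(channels, channels, k, padding=k // 2, groups=channels)
             for k in kernel_sizes]
        )
        # Pointwise (1 x 1) convolution that refines the summed responses.
        self.pw_conv = nn.Conv2d(channels, channels, kernel_size=1)

    def forward(self, p3, p4, p5):
        # Assumption: align P3 and P5 to the spatial size of P4.
        size = p4.shape[-2:]
        p3 = F.interpolate(p3, size=size, mode="nearest")
        p5 = F.interpolate(p5, size=size, mode="nearest")
        fused = torch.cat([p3, p4, p5], dim=1)
        # Sum the depthwise responses obtained with kernels 5, 7, 9, and 11.
        multi_kernel = sum(conv(fused) for conv in self.dw_convs)
        # Residual connection back to the concatenated map.
        return fused + self.pw_conv(multi_kernel)


# Toy inputs with made-up channel counts and resolutions.
p3 = torch.randn(1, 8, 16, 16)
p4 = torch.randn(1, 16, 8, 8)
p5 = torch.randn(1, 32, 4, 4)
out = MultiScaleFusionSketch(8, 16, 32)(p3, p4, p5)
print(out.shape)  # torch.Size([1, 56, 8, 8])
```

Because each depthwise convolution uses `padding=k // 2`, all four branches keep the spatial size of the concatenated map, which is what allows them to be summed and then added back residually.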

4.2.3. Detection Head: Improved with Dynamic Head (DyHead)

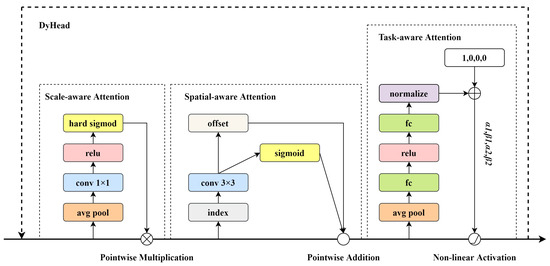

To improve the detection capability of the YOLOv8 model for the intricate task of wheat field weed detection, we replaced the original detection head with DyHead, the Dynamic Head proposed by Microsoft. DyHead enhances feature adaptivity through a sequence of three attention mechanisms: Scale-aware Attention, Spatial-aware Attention, and Task-aware Attention. Figure 7 illustrates the structure of DyHead, including its modular attention design and the data flow between modules.

Figure 7.

Structure diagram of DyHead. Note: hard sigmoid refers to an approximation of the sigmoid function to constrain values within [0, 1]; relu denotes the activation function; avg pool represents global average pooling; offset refers to self-learned offsets in deformable convolution; index indicates the corresponding channel or spatial index. Sigmoid represents the standard sigmoid function; fc stands for fully connected layers; normalize is the normalization operation applied to inputs. Symbols α1, α2, β1, and β2 represent parameters controlling channel activations and offsets.

(1) Scale-aware Attention

The Scale-aware Attention module aggregates spatial information from input features via global average pooling, generating a compact global feature representation. A 1 × 1 convolution then produces an attention weight for each scale, and a hard sigmoid activation constrains the weights to [0, 1]. The weights are applied to the corresponding feature maps through element-wise multiplication, so that each scale is emphasized according to its importance. This adaptive weighting enhances the model’s ability to detect objects of different sizes, improving accuracy in weed detection.

(2) Spatial-aware Attention

The Spatial-aware Attention module uses dynamic convolutions with learned offsets and masks to adapt the receptive field to spatial variations. Offsets adjust sampling positions dynamically, while masks emphasize important regions and suppress background noise. This mechanism ensures consistent spatial refinement across multiple feature scales, enabling the cohesive fusion of spatially enhanced features. The result is a robust ability to capture crucial spatial details in complex agricultural environments, improving detection performance.

(3) Task-aware Attention

The Task-aware Attention module refines task-specific features by applying non-linear transformations, such as the ReLU [39] activation function, to enhance regions and textures relevant to identifying weed species. This module processes features from the Spatial-aware Attention module, completing the feature transformation process. The final output retains multi-scale and spatial information while optimizing distinctive features critical for accurate weed detection in complex scenarios.

By incorporating DyHead into the detection head, YOLOv8 significantly improves its ability to detect small and densely distributed objects, addressing the challenges posed by weed distributions in wheat fields.
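The scale-aware weighting described above (global average pooling, a 1 × 1 convolution, a hard sigmoid, and element-wise multiplication) can be illustrated with a small pure-Python sketch. It is an assumption-laden toy, not the DyHead implementation: feature maps are nested lists of shape [C][H][W], the function names are hypothetical, the convolution weights are fixed by hand rather than learned, and the hard sigmoid uses the form max(0, min(1, (x + 1) / 2)) as one common choice.

```python
def hard_sigmoid(x):
    """Piecewise-linear sigmoid approximation, clipped to [0, 1]."""
    return max(0.0, min(1.0, (x + 1.0) / 2.0))


def global_average_pool(feature):
    """Average each channel of a [C][H][W] nested list to a single number."""
    return [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature
    ]


def scale_attention_weight(feature, weights, bias):
    """Return one attention weight in [0, 1] for a whole pyramid level."""
    pooled = global_average_pool(feature)
    # A 1 x 1 convolution applied to a pooled 1 x 1 map is a dot product
    # over channels plus a bias.
    score = sum(w * p for w, p in zip(weights, pooled)) + bias
    return hard_sigmoid(score)


def apply_scale_attention(levels, weights, bias):
    """Multiply every value of each level by that level's attention weight."""
    out = []
    for feature in levels:
        a = scale_attention_weight(feature, weights, bias)
        out.append([[[a * v for v in row] for row in ch] for ch in feature])
    return out


# Two toy pyramid levels, each with 2 channels of size 2 x 2.
level_a = [[[1.0, 1.0], [1.0, 1.0]], [[1.0, 1.0], [1.0, 1.0]]]
level_b = [[[3.0, 3.0], [3.0, 3.0]], [[3.0, 3.0], [3.0, 3.0]]]
weights, bias = [0.5, 0.5], -1.0  # hand-picked, not learned

print(scale_attention_weight(level_a, weights, bias))  # 0.5
print(scale_attention_weight(level_b, weights, bias))  # 1.0
```

In this toy setting the second level receives the full weight of 1.0 while the first is scaled by 0.5, which is the kind of per-scale emphasis the module is meant to learn.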

5. Experiment Design and Result Analysis

5.1. Experimental Setup

In this study, the experimental setup employed a high-performance computing system to evaluate the model’s performance in wheat field weed detection. The hardware configuration included an NVIDIA RTX 4090 GPU with 24 GB of VRAM, paired with an Intel Xeon Platinum 8352V CPU featuring 16 virtual cores at 2.10 GHz and 90 GB of RAM. This setup ensured efficient processing and training of the model.

The software environment was based on Python 3.8 and PyTorch version 2.0.0, running on Ubuntu 20.04 with CUDA version 11.8 for optimized GPU performance. The training was configured to run for a maximum of 300 epochs using Stochastic Gradient Descent (SGD) as the optimization algorithm, with an initial learning rate of 0.001, determined to facilitate effective model convergence through preliminary experiments. The batch size was set to 32, a decision influenced by the available GPU memory, allowing for balanced memory usage and training efficiency.
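For reference, the training hyperparameters stated above can be collected in a small configuration dictionary. The key names (`epochs`, `batch`, `optimizer`, `lr0`) follow the training arguments of the Ultralytics package, which is an assumption about the interface used; the file names in the commented usage line are placeholders, not files from this study.

```python
# Hyperparameters stated in the text; all other settings are left unspecified.
train_config = {
    "epochs": 300,       # maximum number of training epochs
    "batch": 32,         # batch size, chosen to fit 24 GB of GPU memory
    "optimizer": "SGD",  # stochastic gradient descent
    "lr0": 0.001,        # initial learning rate
}

# Hypothetical usage with Ultralytics (model and data files are placeholders):
# from ultralytics import YOLO
# YOLO("pmdnet.yaml").train(data="wheat_weeds.yaml", **train_config)

for name, value in train_config.items():
    print(f"{name}: {value}")
```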

5.2. Performance Evaluation Metrics

Following Padilla et al. [40], this study assesses the wheat field weed detection model using Precision (P), Recall (R), F1 score, and mean Average Precision (mAP), the latter both at an Intersection over Union (IoU) threshold of 0.50 (mAP@0.50) and averaged over IoU thresholds from 0.50 to 0.95 (mAP@0.50:0.95).

(1) Precision (P): Precision quantifies the accuracy of the positive predictions made by the model. It is defined as the ratio of true positive predictions to the total predicted positives, as shown in Equation (1):

$$P = \frac{TP}{TP + FP} \tag{1}$$

where TP denotes the number of true positives and FP denotes the number of false positives. A high precision value indicates a low rate of false positives, which is essential in applications where false alarms can result in significant consequences.

(2) Recall (R): Recall, or sensitivity, measures the model’s capability to identify all relevant instances within the dataset. It is calculated as shown in Equation (2):

$$R = \frac{TP}{TP + FN} \tag{2}$$

where FN represents the number of false negatives. A high recall value indicates that the model successfully detects a significant proportion of true instances, which is particularly critical in weed detection to minimize missed detections.

(3) Mean Average Precision (mAP): mAP is a critical evaluation metric in object detection, summarizing model performance by balancing precision and recall across various IoU thresholds. It quantifies how effectively a model identifies and localizes objects within an image, providing a comprehensive measure of detection quality.

mAP is reported at two settings: mAP@0.50 and mAP@0.50:0.95. mAP@0.50 is the mean average precision at a fixed IoU threshold of 0.50, which is relatively lenient and allows for moderate localization errors. This metric is often used as a baseline to gauge a model’s ability to detect objects, even if the bounding boxes are not perfectly aligned. In contrast, mAP@0.50:0.95 averages the result over ten IoU thresholds from 0.50 to 0.95 in increments of 0.05. This more stringent metric assesses both detection and localization accuracy, offering a robust measure of the model’s consistency and precision in handling varying levels of overlap.

mAP@0.50 highlights detection capability, while mAP@0.50:0.95 provides a deeper evaluation of localization performance. A higher mAP value across these thresholds indicates superior detection and localization effectiveness.

(4) F1 Score: The F1 score serves as a combined metric that balances precision and recall. As shown in Equation (3), it is defined as the harmonic mean of precision and recall:

$$F1 = \frac{2 \times P \times R}{P + R} \tag{3}$$

This score is particularly useful in scenarios with uneven class distributions, because it is high only when both false positives and false negatives are kept low.
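The metrics defined in this subsection can be made concrete with a short sketch. The function names and the example counts and boxes are hypothetical. The precision and recall values passed to `f1_score` in the last lines are the ones reported for YOLOv8n and PMDNet in Section 5. The snippet lists the ten IoU thresholds behind mAP@0.50:0.95 but does not compute average precision itself.

```python
def precision(tp, fp):
    """Equation (1): share of predicted positives that are correct."""
    return tp / (tp + fp)


def recall(tp, fn):
    """Equation (2): share of ground-truth objects that are found."""
    return tp / (tp + fn)


def f1_score(p, r):
    """Equation (3): harmonic mean of precision and recall."""
    return 2 * p * r / (p + r)


def iou(box_a, box_b):
    """Intersection over Union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    return inter / (area_a + area_b - inter)


# The ten IoU thresholds behind mAP@0.50:0.95 (0.50, 0.55, ..., 0.95).
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]

# Hypothetical counts, chosen only for illustration.
p = precision(tp=90, fp=10)
r = recall(tp=90, fn=30)
print(round(f1_score(p, r), 3))  # 0.818

# A hypothetical prediction that overlaps its ground-truth box with IoU 0.8
# counts as a true positive at 7 of the 10 thresholds.
overlap = iou((0, 0, 10, 8), (0, 0, 10, 10))
matched = sum(1 for t in IOU_THRESHOLDS if overlap >= t)
print(overlap, matched)  # 0.8 7

# F1 recomputed from the reported precision and recall (in %).
print(round(f1_score(92.0, 76.4), 1))  # 83.5 (YOLOv8n)
print(round(f1_score(94.5, 76.0), 1))  # 84.2 (PMDNet)
```

The IoU example shows why mAP@0.50:0.95 is the stricter metric: a box that is a clear hit at IoU 0.50 stops counting as a match once the threshold exceeds its overlap.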

5.3. Model Performance Comparison

To enhance the performance of YOLOv8 for weed detection in wheat fields, we replaced the original YOLOv8 backbone network with several alternatives: EfficientViT [41], UniRepLKNet [42], ConvNeXt V2 [43], VanillaNet [44], FasterNet [45], and PKINet. We evaluated each network on Precision (P), Recall (R), mAP@0.5, mAP@0.50:0.95, and F1 score. PKINet achieved the best overall performance across these metrics, so we selected it to replace the original YOLOv8 backbone network.

As shown in Table 1, PKINet achieved the highest mAP@0.5 (84.8%) and mAP@0.50:0.95 (67.7%) among all tested backbones, indicating both high detection accuracy and improved localization precision. Additionally, PKINet’s Recall (76.3%) and F1 score (83.7%) are on par with or exceed the baseline YOLOv8, further highlighting its balanced performance in both identifying and correctly localizing weed instances. While FasterNet showed slightly higher Precision (93.3%), PKINet’s performance across all other key metrics makes it the most robust and consistent choice for enhancing YOLOv8 in this application.

Table 1.

Training Results of Different Backbone Networks.

Our results demonstrate that integrating PKINet into YOLOv8 yields the greatest improvement in weed detection performance, establishing it as the best choice among the tested backbones.

Table 2 presents the training results of various object detection models for wheat field weed detection. Among these models, PMDNet, the model proposed in this study, achieves the best performance across multiple metrics. Compared to the baseline YOLOv8n model, PMDNet improves mAP@0.5 by 2.2 percentage points and mAP@0.50:0.95 by 3.9 percentage points (a relative gain of about 5.9%).

Table 2.

Training Results of Different Object Detection Models.

The baseline model YOLOv8n achieves a Precision of 92.0%, Recall of 76.4%, mAP@0.5 of 83.6%, mAP@0.50:0.95 of 65.7%, and F1-score of 83.5%. PMDNet surpasses the baseline with a Precision of 94.5%, mAP@0.5 of 85.8%, and mAP@0.50:0.95 of 69.6%, indicating the effectiveness of the proposed improvements.

Compared with other models, such as YOLOv5n [24], YOLOv10n [46], TOOD [47], Faster-RCNN [48], RetinaNet [49], ATSS [50], EfficientDet [51], and RT-DETR-L [52], PMDNet consistently demonstrates superior performance. Specifically, YOLOv10n achieves a mAP@0.50:0.95 of 64.7%, which is lower than PMDNet’s 69.6%. TOOD and Faster-RCNN perform relatively poorly, with TOOD achieving a mAP@0.50:0.95 of 55.3% and an F1-score of 73.6%, while Faster-RCNN achieves a mAP@0.50:0.95 of 52.9% and an F1-score of 72.3%, significantly below PMDNet’s performance.

Other models, such as RetinaNet and ATSS, perform adequately in certain metrics but still fall short of PMDNet overall. RetinaNet achieves a mAP@0.5 of 84.4%, slightly lower than PMDNet’s 85.8%, and a mAP@0.50:0.95 of only 56.5%, which is considerably lower than PMDNet’s 69.6%. Similarly, ATSS achieves a mAP@0.50:0.95 of 58.2% and an F1-score of 72.3%, both of which are inferior to PMDNet’s performance.

PMDNet outperformed several classical single-stage and two-stage object detection models, achieving the highest precision (94.5%), 14.1 percentage points above the lowest precision, observed for Faster-RCNN (80.4%). For mAP@0.5, PMDNet achieved 85.8%, 5.4 percentage points above the lowest score of 80.4% from RT-DETR-L. Under the stricter mAP@0.50:0.95 metric, PMDNet reached 69.6%, surpassing Faster-RCNN (52.9%) by 16.7 percentage points and RetinaNet (56.5%) by 13.1 percentage points. These results highlight PMDNet’s robustness and its consistent superiority over both single-stage and two-stage object detection models.
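The gaps quoted in this subsection can be checked with a few lines of arithmetic. The sketch below uses only values stated in the text; the helper names `point_gap` and `relative_gain` are hypothetical. It separates absolute differences (percentage points) from relative gains, since the two readings give different numbers for the same pair of scores.

```python
def point_gap(a, b):
    """Absolute difference between two scores, in percentage points."""
    return round(a - b, 1)


def relative_gain(new, old):
    """Relative change of `new` over `old`, in percent."""
    return round((new - old) / old * 100, 1)


# Scores (in %) quoted in the text of this subsection.
pmdnet = {"precision": 94.5, "map50": 85.8, "map50_95": 69.6}
yolov8n = {"precision": 92.0, "map50": 83.6, "map50_95": 65.7}

# PMDNet versus the other detectors (absolute gaps).
print(point_gap(pmdnet["precision"], 80.4))  # Faster-RCNN precision: 14.1
print(point_gap(pmdnet["map50"], 80.4))      # RT-DETR-L mAP@0.5: 5.4
print(point_gap(pmdnet["map50_95"], 52.9))   # Faster-RCNN mAP@0.50:0.95: 16.7
print(point_gap(pmdnet["map50_95"], 56.5))   # RetinaNet mAP@0.50:0.95: 13.1

# PMDNet versus the YOLOv8n baseline (absolute and relative).
print(point_gap(pmdnet["map50"], yolov8n["map50"]))            # 2.2
print(point_gap(pmdnet["map50_95"], yolov8n["map50_95"]))      # 3.9
print(relative_gain(pmdnet["map50_95"], yolov8n["map50_95"]))  # 5.9
```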

5.4. Ablation Study

To understand the contribution of each component in enhancing YOLOv8’s performance for weed detection, we conducted a series of ablation experiments by selectively incorporating PKINet, MSFPN, and DyHead. Each experiment systematically evaluates the effects of including or excluding these components on key performance metrics: Precision (P), Recall (R), mAP@0.5, mAP@0.50:0.95, and F1 score. The results are summarized in Table 3.

Table 3.

Ablation experiments.

Experiment 1 serves as the baseline model, where none of the enhancements (PKINet, MSFPN, DyHead) are applied. This baseline achieves a precision of 92.0%, recall of 76.4%, mAP@0.5 of 83.6%, mAP@0.50:0.95 of 65.7%, and an F1 score of 83.5%.

Introducing PKINet alone (Experiment 2) improves mAP@0.5 to 84.8% and mAP@0.50:0.95 to 67.7%, with a slight increase in the F1 score to 83.7%, while recall remains essentially unchanged (76.3% versus 76.4% for the baseline). These results suggest that PKINet contributes to both detection accuracy and localization precision.

In Experiment 3, only MSFPN is introduced, enhancing the model’s multi-scale detection capability. MSFPN increases precision to 93.7% and mAP@0.50:0.95 to 68.0%, with an F1 score of 83.7%, demonstrating that MSFPN is particularly beneficial for precision improvement, as it allows the model to capture features at various scales.

When only DyHead is applied in Experiment 4, recall improves to 76.7% and mAP@0.50:0.95 to 67.6%, which highlights DyHead’s effectiveness in refining the detection head. However, the F1 score of 83.4% is lower than that obtained with PKINet alone or MSFPN alone (83.7% each).

Experiment 5 combines PKINet and MSFPN, achieving further enhancements with a precision of 91.9%, recall of 77.0%, mAP@0.5 of 85.0%, and mAP@0.50:0.95 of 69.2%. The F1 score also increases to 83.8%, indicating the synergistic effect of PKINet and MSFPN in capturing multi-scale features while maintaining accuracy.

Experiment 6 combines MSFPN and DyHead without PKINet. This configuration achieves a mAP@0.50:0.95 of 69.9%, the highest among setups without PKINet, showing that MSFPN and DyHead together significantly enhance localization precision. However, the F1 score decreases slightly to 83.3%.

Finally, Experiment 7 incorporates all three components (PKINet, MSFPN, DyHead), achieving the highest scores across most metrics, with a precision of 94.5%, mAP@0.5 of 85.8%, mAP@0.50:0.95 of 69.6%, and an F1 score of 84.2%. This configuration validates that the combination of PKINet, MSFPN, and DyHead delivers the best performance, maximizing the model’s capability to detect and localize weeds accurately in wheat fields.

These ablation results confirm that each component contributes uniquely to model performance, and their combination results in the most balanced improvement across detection and localization metrics.

5.5. Model Visualization Results

5.5.1. Training Process Visualization

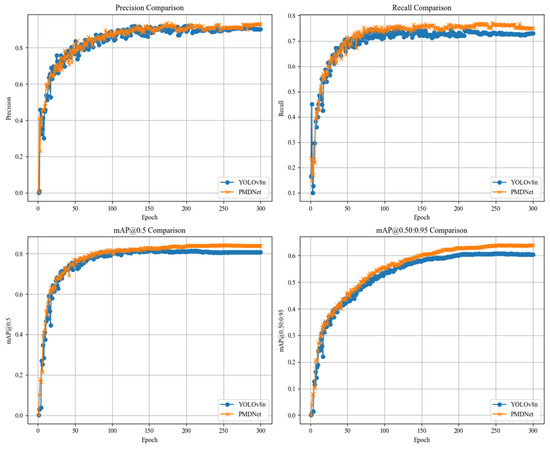

Figure 8 shows the comparison of training performance between the PMDNet model and the YOLOv8n model across four key metrics: Precision, Recall, mAP@0.5, and mAP@0.50:0.95. Each subplot represents one metric, with the horizontal axis indicating the number of training epochs and the vertical axis showing the corresponding metric values. Overall, PMDNet consistently outperforms YOLOv8n across all four metrics, highlighting the advantages of the proposed model in detection accuracy and stability during training.

Figure 8.

Comparison of Training Results Between PMDNet and YOLOv8n Models.

Precision shows a consistent advantage for PMDNet over YOLOv8n across most epochs. PMDNet demonstrates a more stable improvement in detection accuracy, particularly in the earlier epochs, where it achieves higher precision values. Recall values for both models follow a similar trend, with PMDNet achieving slightly higher recall in the later epochs, indicating a better balance between detecting true positives and minimizing false negatives. For mAP@0.5, PMDNet consistently outperforms YOLOv8n throughout the training process, maintaining higher values even as training progresses. Under the stricter evaluation metric, mAP@0.50:0.95, PMDNet achieves significantly better results than YOLOv8n, underscoring its robustness and improved capability to accurately detect objects under challenging IoU thresholds.

After 300 epochs, all metrics have stabilized, indicating that both models have converged. This suggests that the training parameters, such as learning rate and batch size, were suitably configured for both models.

It is worth noting, as shown in Figure 8, that although PMDNet achieves a slightly higher recall than the YOLOv8n model during training on the training dataset, its recall on the test dataset (76.0%) is slightly lower than that of YOLOv8n (76.4%). This discrepancy can be attributed to the distribution characteristics of the test dataset. PMDNet focuses more on improving overall detection performance and robustness, consistently outperforming YOLOv8n across other metrics. This superiority is evident in both the training process on the training dataset and the validation results on the test dataset, demonstrating the consistency of PMDNet’s performance.

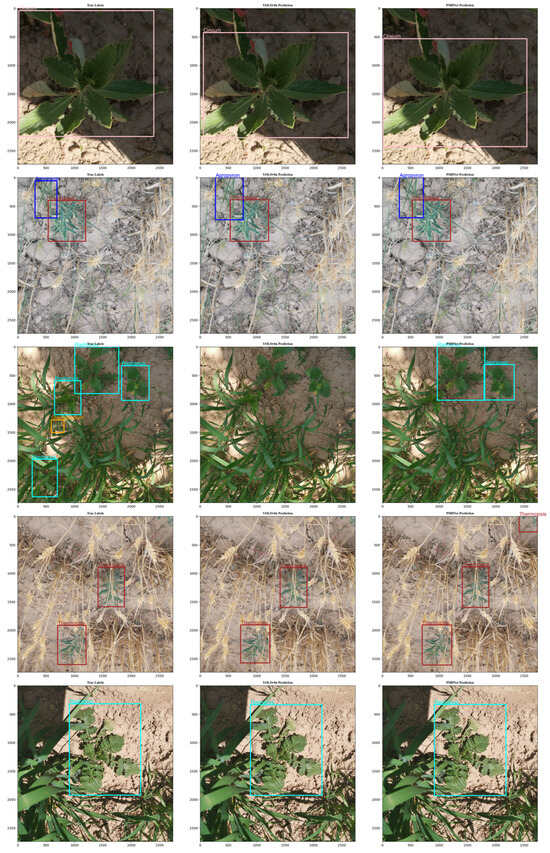

5.5.2. Visualization of Prediction Results

Figure 9 illustrates the comparison of prediction results between the YOLOv8n model and the PMDNet model. To demonstrate the visualized prediction results of the models, five images were randomly selected from the test set. Each image was evaluated using both YOLOv8n and PMDNet. The three columns in Figure 9 represent, from left to right, the ground truth annotations, the predictions by YOLOv8n, and the predictions by PMDNet.

Figure 9.

Comparison of Model Prediction Results. Note: The three columns in this figure represent, from left to right, the ground truth annotations, the predictions by YOLOv8n, and the predictions by PMDNet.

Both models showed relatively good prediction performance, effectively detecting and classifying objects in most cases. However, PMDNet demonstrated superior performance overall. In particular, the third row of images highlights a challenging scenario with highly similar weeds and backgrounds (complex backgrounds and nearly identical colors). In this case, PMDNet demonstrated better prediction performance, successfully detecting weeds in complex backgrounds. Although not all weeds were detected, PMDNet performed significantly better in handling complex scenarios compared to YOLOv8n, which failed to predict any bounding boxes in this case.

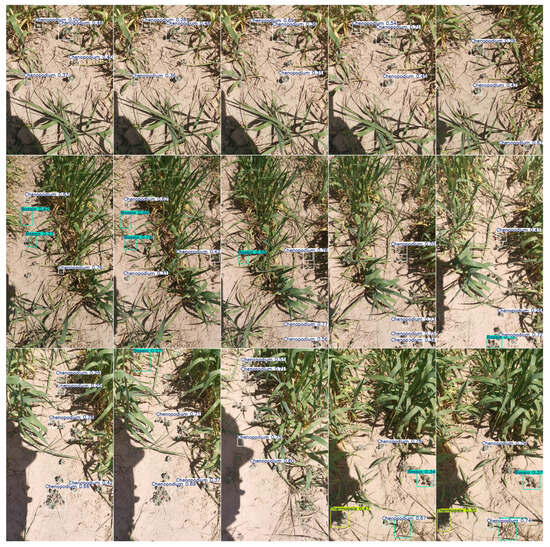

5.6. Testing on Wheat Field Video Sequence

In the final step of this study, a 30 s video was recorded in the wheat field used for dataset collection, using the same equipment as for dataset creation (a smartphone with a 40-megapixel primary camera). The captured video was processed using the PMDNet model for tracking and detection, and the detection results are shown in Figure 10. A frame was selected every 2 s from the video, with a total of 15 images displayed. The experimental results demonstrate that the PMDNet model performs well in weed detection on the video, effectively locating and identifying weeds, especially small target weeds and those in complex backgrounds. The detection boxes accurately marked the positions of weeds, with minimal false positives and false negatives observed in the video. The FPS of the PMDNet model during video detection was 87.7, which meets the real-time monitoring requirements. This experiment validates the application potential of the PMDNet model for weed detection in real-world wheat fields, confirming its effectiveness in agricultural weed detection.

Figure 10.

Field detection results of the PMDNet model in wheat fields.
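The figures quoted for the video test follow from simple arithmetic, sketched below. The function names are hypothetical, and two details are assumptions rather than statements from the text: that sampling starts at t = 0 s, and that the camera stream runs at 30 frames per second for the budget comparison.

```python
def sample_timestamps(duration_s, step_s):
    """Timestamps (in seconds) of the sampled frames, assuming a start at t = 0."""
    return list(range(0, duration_s, step_s))


def frame_latency_ms(fps):
    """Average processing time per frame implied by a throughput in FPS."""
    return 1000.0 / fps


timestamps = sample_timestamps(duration_s=30, step_s=2)
print(len(timestamps))  # 15

# Per-frame latency at the reported 87.7 FPS.
print(round(frame_latency_ms(87.7), 1))  # 11.4

# Per-frame time budget of an assumed 30 FPS camera stream.
print(round(frame_latency_ms(30), 1))  # 33.3
```

Under the 30 FPS assumption, a per-frame latency of about 11.4 ms is well inside the 33.3 ms budget, which is consistent with the real-time claim.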

6. Discussion

In this study, we propose PMDNet, an improved model built upon the YOLOv8 architecture, specifically designed to enhance its detection performance for wheat field weed detection tasks. By systematically replacing the backbone, feature fusion layer, and detection head of YOLOv8 with PKINet, MSFPN, and DyHead, respectively, we have demonstrated significant improvements in detection accuracy. The results provide valuable insights into the benefits of advanced model architecture customization for agricultural object detection tasks.

6.1. Performance Analysis

The experimental results reveal that PMDNet outperforms the baseline YOLOv8n model in terms of both mAP@0.5 and mAP@0.50:0.95. Specifically, mAP@0.5 increased from 83.6% to 85.8%, an improvement of 2.2 percentage points, and mAP@0.50:0.95 rose from 65.7% to 69.6%, an improvement of 3.9 percentage points (a relative gain of about 5.9%). These advancements suggest that PMDNet effectively captures multi-scale features and adapts to complex scenes in wheat fields with improved generalization capabilities. The improvement in the comprehensive metric (mAP@0.50:0.95) highlights the robustness of PMDNet across varying IoU thresholds.

6.2. Impact of Individual Components

Each of the architectural modifications contributed uniquely to the performance gains. PKINet, as the backbone, provides stronger feature extraction capabilities, leveraging its hierarchical and multi-scale design to enhance the representation of the fine-grained features that distinguish weeds from wheat. MSFPN optimizes the flow of multi-scale features through an advanced pyramid structure, ensuring effective information propagation and feature alignment. DyHead enhances the detection head by dynamically attending to contextual information, enabling better localization and classification of small or occluded objects.

6.3. Significance for Agricultural Applications

The proposed PMDNet demonstrates that integrating advanced deep learning modules can significantly improve the precision of weed detection in agricultural settings. Accurate detection of weeds in wheat fields is critical for ensuring optimal crop yield and minimizing herbicide usage. Beyond the quantitative improvements achieved in controlled experiments, PMDNet was also successfully applied to real-world wheat field video detection tasks, showcasing its capability to perform with both high precision and real-time efficiency. This practical validation underscores PMDNet’s robustness and suitability for deployment in dynamic, real-world scenarios. By improving detection accuracy and robustness and maintaining real-time processing, PMDNet provides a viable solution for precision agriculture applications. These advancements could lead to cost savings, reduced environmental impact, and higher efficiency in weed management practices, making it a valuable tool in sustainable farming.

6.4. Limitations and Future Work

While PMDNet achieves superior accuracy, several challenges remain that require further exploration. The model’s computational complexity, resulting from the integration of PKINet, MSFPN, and DyHead, limits its deployment on resource-constrained devices such as those commonly used in agricultural settings. To address this, future research will focus on developing lightweight versions of these components. For instance, pruning techniques, quantization, and knowledge distillation will be explored to balance performance and efficiency. This could enable real-time deployment of PMDNet on low-power edge devices, making it more practical for field applications.

Additionally, the dataset used in this study includes eight categories of wheat field weeds, which, while sufficient for the current scope, do not capture the full diversity of weed species encountered in real-world agricultural environments. Future work will prioritize expanding the dataset to include a broader spectrum of weed species, particularly those common in other climatic zones and agricultural systems.

The geographical and biological limitations of the dataset also warrant attention. The dataset was collected exclusively in a single region, which restricts the model’s generalizability to other agricultural conditions involving varying soil types, climates, and weed-crop interactions. In addition to geographic expansion, future efforts will focus on creating synthetic datasets or leveraging domain adaptation techniques to simulate diverse environmental conditions. This will reduce the dependence on extensive data collection and improve the model’s adaptability to new regions.

Furthermore, weeds exhibit distinct growth cycles, with visual appearances varying significantly across stages such as seedling, vegetative, and flowering. These intra-species variations can lead to misclassifications or missed detections by PMDNet, especially in later growth stages where weeds may closely resemble wheat. To mitigate this, future research will focus on creating stage-specific datasets and training the model using temporal feature extraction techniques to account for these growth-related changes. Techniques such as temporal attention mechanisms or recurrent neural network-based modules will be explored to enhance robustness to temporal variations in weed appearances.

Another limitation lies in PMDNet’s performance with particularly small or slender weed targets, such as Agropyron cristatum, which results in lower recall rates. To address this, targeted optimizations will be explored, including augmenting the training dataset with synthetic samples of such targets and employing advanced detection strategies, such as anchor-free methods or multi-scale feature refinement, to improve the detection of small and slender weeds.

Finally, future work will investigate alternative training strategies to improve generalization capabilities. Semi-supervised learning approaches, which leverage unlabeled data, and domain adaptation techniques, which address domain shifts between training and deployment environments, will be prioritized. These strategies, combined with efforts to optimize PMDNet for low-power devices and enhance its adaptability to diverse agricultural conditions, will ensure that PMDNet evolves into a more robust and practical tool for precision agriculture.

7. Conclusions

In conclusion, this study developed a wheat field weed detection dataset consisting of 5967 images, representing eight categories of weeds with balanced distribution across classes, supported by data augmentation techniques. Experimental results confirm that this dataset enables effective model training and serves as a reliable benchmark for evaluating object detection models.

Building upon this dataset, we proposed PMDNet, an improved object detection model specifically optimized for wheat field weed detection tasks. PMDNet integrates several architectural innovations: the backbone network was replaced with PKINet, the self-designed MSFPN was employed for multi-scale feature fusion, and DyHead was utilized as the detection head. These modifications significantly enhanced detection performance. Compared to the baseline YOLOv8n model, PMDNet improved mAP@0.5 by 2.2 percentage points, from 83.6% to 85.8%, and mAP@0.50:0.95 by 3.9 percentage points (a relative gain of about 5.9%), from 65.7% to 69.6%. Moreover, PMDNet demonstrated superior performance over classical detection models such as Faster-RCNN and RetinaNet, with a mAP@0.50:0.95 that is 16.7 percentage points higher than Faster-RCNN (52.9%) and 13.1 percentage points higher than RetinaNet (56.5%).

The training process analysis further demonstrated PMDNet’s stability and robustness. Throughout 300 epochs, PMDNet consistently outperformed YOLOv8n in precision, recall, and mAP metrics during training. Visualization of prediction results highlighted PMDNet’s advantage in handling challenging scenarios, such as complex backgrounds and small targets, where it consistently performed better than YOLOv8n.

Extensive ablation studies were conducted to validate the effectiveness of each proposed module, confirming their individual contributions to the model’s overall performance. In real-world video detection tests, PMDNet achieved an FPS of 87.7, meeting real-time monitoring requirements while maintaining high detection precision. These experiments demonstrated the model’s practical applicability, effectively detecting weeds in challenging environments such as dense vegetation and complex lighting conditions.

This study not only highlights the potential of PMDNet for addressing complex agricultural detection tasks but also provides a foundation for future research in precision agriculture. The advancements demonstrated in this work pave the way for more sustainable and effective weed management solutions, fostering the integration of AI technologies into modern farming practices.

Author Contributions

Conceptualization, Z.Q. and J.W.; methodology, Z.Q.; software, Z.Q.; validation, Z.Q.; formal analysis, Z.Q.; investigation, Z.Q.; resources, J.W.; data curation, Z.Q.; writing—original draft preparation, Z.Q.; writing—review and editing, J.W.; visualization, Z.Q.; supervision, J.W.; project administration, J.W.; funding acquisition, J.W. All authors have read and agreed to the published version of the manuscript.

Funding

Supported by The National Natural Science Foundation of China (32360438); The Support Fund for Young Graduate Guidance Teachers at Gansu Agricultural University (GAU-QDFC-2022-18); 2024 Central Guidance for Local Science and Technology Development Special Project (24ZYQA023); Gansu Province Top-notch Leading Talent Project (GSBJLJ-2023-09).

Data Availability Statement

The data presented in this study are available on request from the corresponding author due to restrictions related to limited-purpose collection.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

| Abbreviation | Full Form |
| --- | --- |
| MSFPN | Multi-Scale Feature Pyramid Network |
| YOLO | You Only Look Once |
| FPN | Feature Pyramid Network |
| mAP | mean Average Precision |
| CSPNet | Cross-Stage Partial Network |
| SPP | Spatial Pyramid Pooling |
| PAN | Path Aggregation Network |
| PKINet | Poly Kernel Inception Network |
| CAA | Context Anchor Attention |
| FFN | Feed-Forward Network |
| DWConv | Depthwise Convolution |
| PWConv | Pointwise Convolution |
| DyHead | Dynamic Head |
| SGD | Stochastic Gradient Descent |
| P | Precision |
| R | Recall |
| IoU | Intersection over Union |

References

- Godfray, H.C.J.; Beddington, J.R.; Crute, I.R.; Haddad, L.; Lawrence, D.; Muir, J.F.; Pretty, J.; Robinson, S.; Thomas, S.M.; Toulmin, C. Food security: The challenge of feeding 9 billion people. Science 2010, 327, 812–818. [Google Scholar] [CrossRef]

- Food and Agriculture Organization of the United Nations. The Future of Food and Agriculture: Trends and Challenges; FAO: Rome, Italy, 2017. [Google Scholar]

- Anwar, M.P.; Islam, A.K.M.M.; Yeasmin, S.; Rashid, M.H.; Juraimi, A.S.; Ahmed, S.; Shrestha, A. Weeds and Their Responses to Management Efforts in A Changing Climate. Agronomy 2021, 11, 1921. [Google Scholar] [CrossRef]

- Colbach, N.; Collard, A.; Guyot, S.H.M.; Mézière, D.; Munier-Jolain, N. Assessing innovative sowing patterns for integrated weed management with a 3D crop:weed competition model. Eur. J. Agron. 2014, 53, 74–89. [Google Scholar] [CrossRef]

- Jalli, M.; Huusela, E.; Jalli, H.; Kauppi, K.; Niemi, M.; Himanen, S.; Jauhiainen, L. Effects of Crop Rotation on Spring Wheat Yield and Pest Occurrence in Different Tillage Systems: A Multi-Year Experiment in Finnish Growing Conditions. Front. Sustain. Food Syst. 2021, 5, 647335. [Google Scholar] [CrossRef]

- Javaid, M.M.; Mahmood, A.; Bhatti, M.I.N.; Waheed, H.; Attia, K.; Aziz, A.; Nadeem, M.A.; Khan, N.; Al-Doss, A.A.; Fiaz, S.; et al. Efficacy of Metribuzin Doses on Physiological, Growth, and Yield Characteristics of Wheat and Its Associated Weeds. Front. Plant Sci. 2022, 13, 866793. [Google Scholar] [CrossRef] [PubMed]

- Usman, K.; Khalil, S.K.; Khan, A.Z.; Khalil, I.H.; Khan, M.A.; Amanullah. Tillage and herbicides impact on weed control and wheat yield under rice–wheat cropping system in Northwestern Pakistan. Soil Tillage Res. 2010, 110, 101–107.

- Shamshiri, R.R.; Rad, A.K.; Behjati, M.; Balasundram, S.K. Sensing and Perception in Robotic Weeding: Innovations and Limitations for Digital Agriculture. Sensors 2024, 24, 6743.

- Reed, N.H.; Butts, T.R.; Norsworthy, J.K.; Hardke, J.T.; Barber, L.T.; Bond, J.A.; Bowman, H.D.; Bateman, N.R.; Poncet, A.M.; Kouame, K.B.J. Ecological implications of row width and cultivar selection on rice (Oryza sativa) and barnyardgrass (Echinochloa crus-galli). Sci. Rep. 2024, 14, 24844.

- Meesaragandla, S.; Jagtap, M.P.; Khatri, N.; Madan, H.; Vadduri, A.A. Herbicide spraying and weed identification using drone technology in modern farms: A comprehensive review. Results Eng. 2024, 21, 101870.

- Rasappan, P.; Delphin, A.; Rani, C.; Sughashini, K.; Kurangi, C.; Nirmala, M.; Farhana, H.; Ahmed, T.; Balamurugan, S.P. Computer Vision and Deep Learning-enabled Weed Detection Model for Precision Agriculture. Comput. Syst. Sci. Eng. 2022, 44, 2759–2774.

- Razfar, N.; True, J.; Bassiouny, R.; Venkatesh, V.; Kashef, R. Weed detection in soybean crops using custom lightweight deep learning models. J. Agric. Food Res. 2022, 8, 100308.

- Rakhmatulin, I.; Kamilaris, A.; Andreasen, C. Deep Neural Networks to Detect Weeds from Crops in Agricultural Environments in Real-Time: A Review. Remote Sens. 2021, 13, 4486.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Gallo, I.; Rehman, A.U.; Dehkordi, R.H.; Landro, N.; La Grassa, R.; Boschetti, M. Deep Object Detection of Crop Weeds: Performance of YOLOv7 on a Real Case Dataset from UAV Images. Remote Sens. 2023, 15, 539.

- Zhang, Z.; Yang, Y.; Xu, X.; Liu, L.; Yue, J.; Ding, R.; Lu, Y.; Liu, J.; Qiao, H. GVC-YOLO: A Lightweight Real-Time Detection Method for Cotton Aphid-Damaged Leaves Based on Edge Computing. Remote Sens. 2024, 16, 3046.

- Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLOv8, 8.0.0; Ultralytics: Frederick, MD, USA, 2023.

- Upadhyay, A.; Sunil, G.C.; Zhang, Y.; Koparan, C.; Sun, X. Development and evaluation of a machine vision and deep learning-based smart sprayer system for site-specific weed management in row crops: An edge computing approach. J. Agric. Food Res. 2024, 18, 101331.

- Ali, M.S.; Rashid, M.R.A.; Hossain, T.; Kabir, M.A.; Kamrul, M.; Aumy, S.H.B.; Mridha, M.H.; Sajeeb, I.H.; Islam, M.M.; Jabid, T. A comprehensive dataset of rice field weed detection from Bangladesh. Data Brief 2024, 57, 110981.

- Coleman, G.R.Y.; Kutugata, M.; Walsh, M.J.; Bagavathiannan, M.V. Multi-growth stage plant recognition: A case study of Palmer amaranth (Amaranthus palmeri) in cotton (Gossypium hirsutum). Comput. Electron. Agric. 2024, 217, 108622.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Jocher, G. YOLOv5 by Ultralytics. License = AGPL-3.0. 2020. Available online: https://zenodo.org/records/7347926 (accessed on 8 October 2024).

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976.

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 10–15 June 2019; pp. 6105–6114.

- Sportelli, M.; Apolo-Apolo, O.E.; Fontanelli, M.; Frasconi, C.; Raffaelli, M.; Peruzzi, A.; Perez-Ruiz, M. Evaluation of YOLO Object Detectors for Weed Detection in Different Turfgrass Scenarios. Appl. Sci. 2023, 13, 8502.

- Zhu, H.; Zhang, Y.; Mu, D.; Bai, L.; Wu, X.; Zhuang, H.; Li, H. Research on improved YOLOx weed detection based on lightweight attention module. Crop Prot. 2024, 177, 106563.

- Dang, F.; Chen, D.; Lu, Y.; Li, Z. YOLOWeeds: A novel benchmark of YOLO object detectors for multi-class weed detection in cotton production systems. Comput. Electron. Agric. 2023, 205, 107655.

- Chen, J.; Wang, H.; Zhang, H.; Luo, T.; Wei, D.; Long, T.; Wang, Z. Weed detection in sesame fields using a YOLO model with an enhanced attention mechanism and feature fusion. Comput. Electron. Agric. 2022, 202, 107412.

- Xu, M.; Yoon, S.; Fuentes, A.; Park, D.S. A Comprehensive Survey of Image Augmentation Techniques for Deep Learning. Pattern Recognit. 2023, 137, 109347.

- Wang, C.-Y.; Liao, H.-Y.M.; Wu, Y.-H.; Chen, P.-Y.; Hsieh, J.-W.; Yeh, I.-H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 390–391.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Cai, X.; Lai, Q.; Wang, Y.; Wang, W.; Sun, Z.; Yao, Y. Poly kernel inception network for remote sensing detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 27706–27716.

- Dai, X.; Chen, Y.; Xiao, B.; Chen, D.; Liu, M.; Yuan, L.; Zhang, L. Dynamic head: Unifying object detection heads with attentions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7373–7382.

- Nair, V.; Hinton, G.E. Rectified linear units improve restricted Boltzmann machines. In Proceedings of the 27th International Conference on Machine Learning (ICML-10), Haifa, Israel, 21–24 June 2010; pp. 807–814.

- Padilla, R.; Netto, S.L.; Silva, E.A.B.d. A Survey on Performance Metrics for Object-Detection Algorithms. In Proceedings of the 2020 International Conference on Systems, Signals and Image Processing (IWSSIP), Niterói, Brazil, 1–3 July 2020; pp. 237–242.

- Liu, X.; Peng, H.; Zheng, N.; Yang, Y.; Hu, H.; Yuan, Y. EfficientViT: Memory efficient vision transformer with cascaded group attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 14420–14430.

- Ding, X.; Zhang, Y.; Ge, Y.; Zhao, S.; Song, L.; Yue, X.; Shan, Y. UniRepLKNet: A Universal Perception Large-Kernel ConvNet for Audio Video Point Cloud Time-Series and Image Recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 5513–5524.

- Woo, S.; Debnath, S.; Hu, R.; Chen, X.; Liu, Z.; Kweon, I.S.; Xie, S. ConvNeXt V2: Co-designing and scaling ConvNets with masked autoencoders. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 16133–16142.

- Chen, H.; Wang, Y.; Guo, J.; Tao, D. VanillaNet: The power of minimalism in deep learning. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023; Volume 36.

- Chen, J.; Kao, S.-H.; He, H.; Zhuo, W.; Wen, S.; Lee, C.-H.; Chan, S.-H.G. Run, don’t walk: Chasing higher FLOPS for faster neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 12021–12031.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-time end-to-end object detection. arXiv 2024, arXiv:2405.14458.

- Feng, C.; Zhong, Y.; Gao, Y.; Scott, M.R.; Huang, W. TOOD: Task-aligned one-stage object detection. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 3490–3499.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. arXiv 2017, arXiv:1708.02002.

- Zhang, S.; Chi, C.; Yao, Y.; Lei, Z.; Li, S.Z. Bridging the gap between anchor-based and anchor-free detection via adaptive training sample selection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 9759–9768.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 10781–10790.

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs beat YOLOs on real-time object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).