Evaluation of Sugarcane Crop Growth Monitoring Using Vegetation Indices Derived from RGB-Based UAV Images and Machine Learning Models

Abstract

1. Introduction

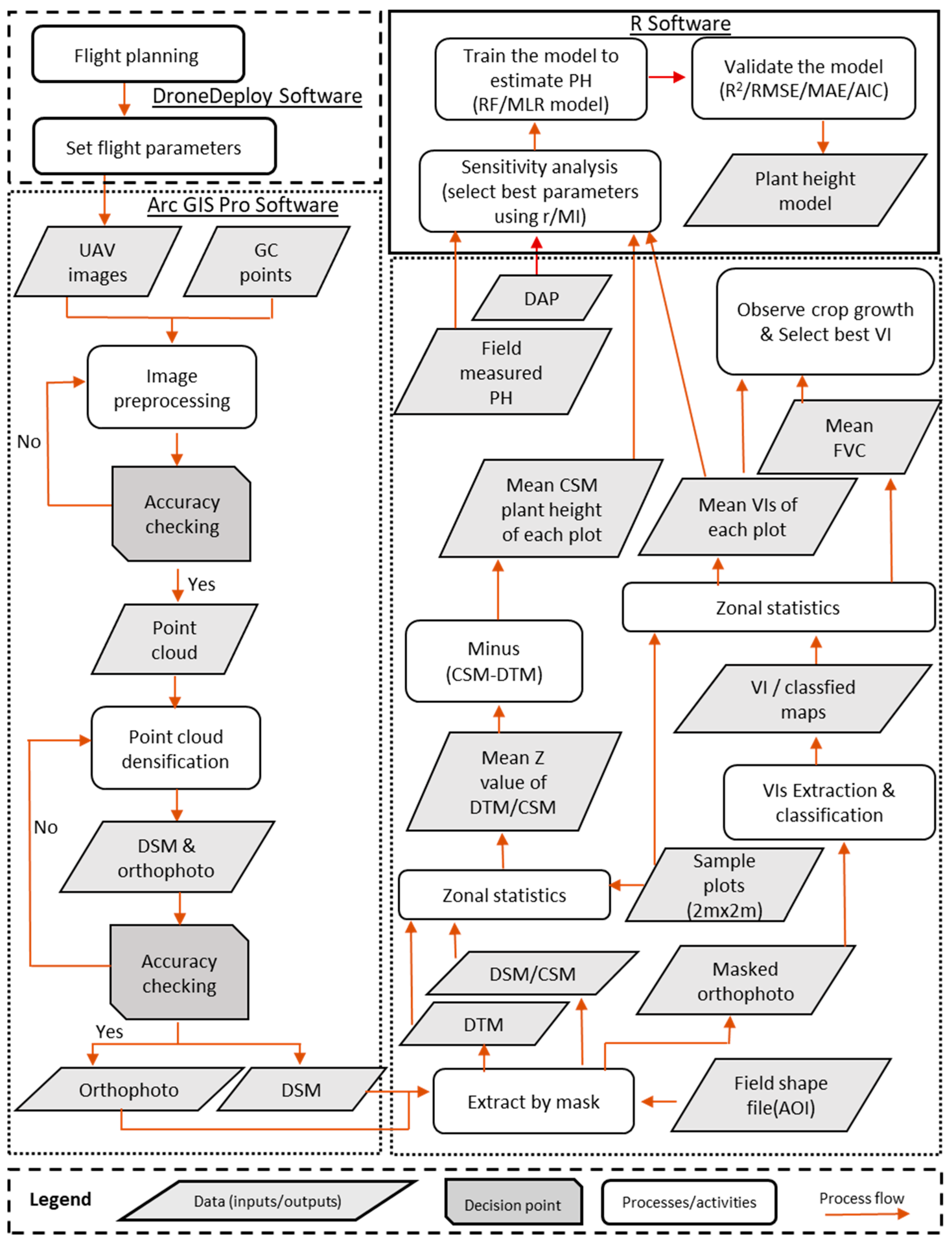

2. Materials and Methods

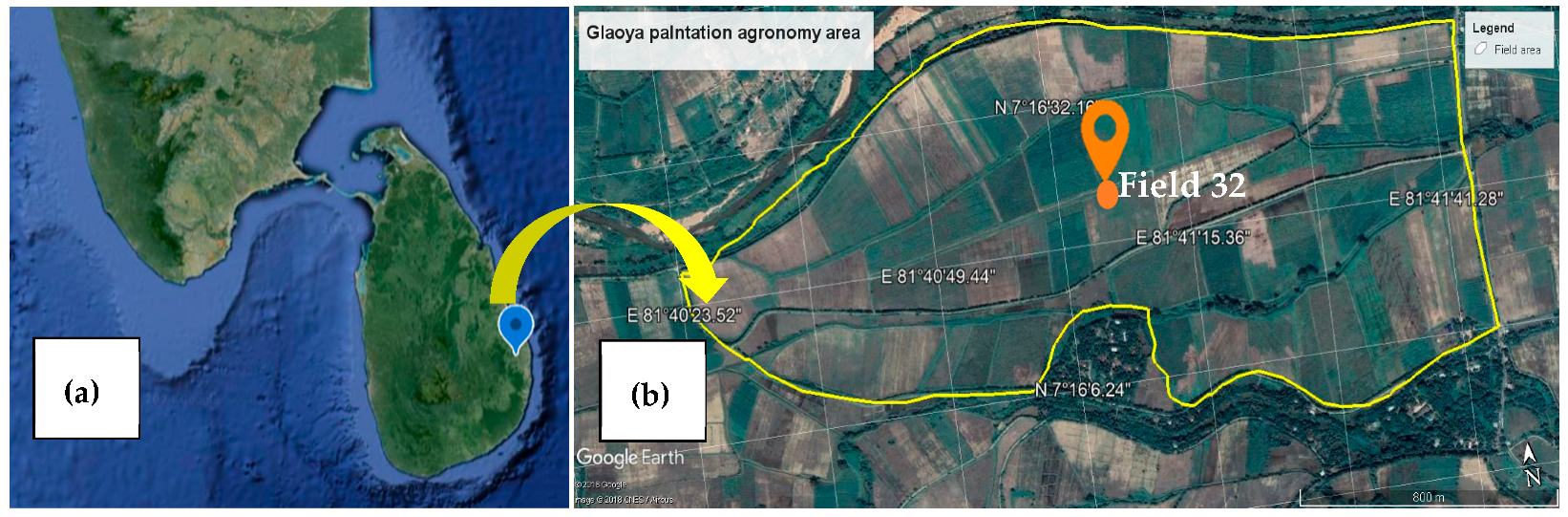

2.1. Study Area

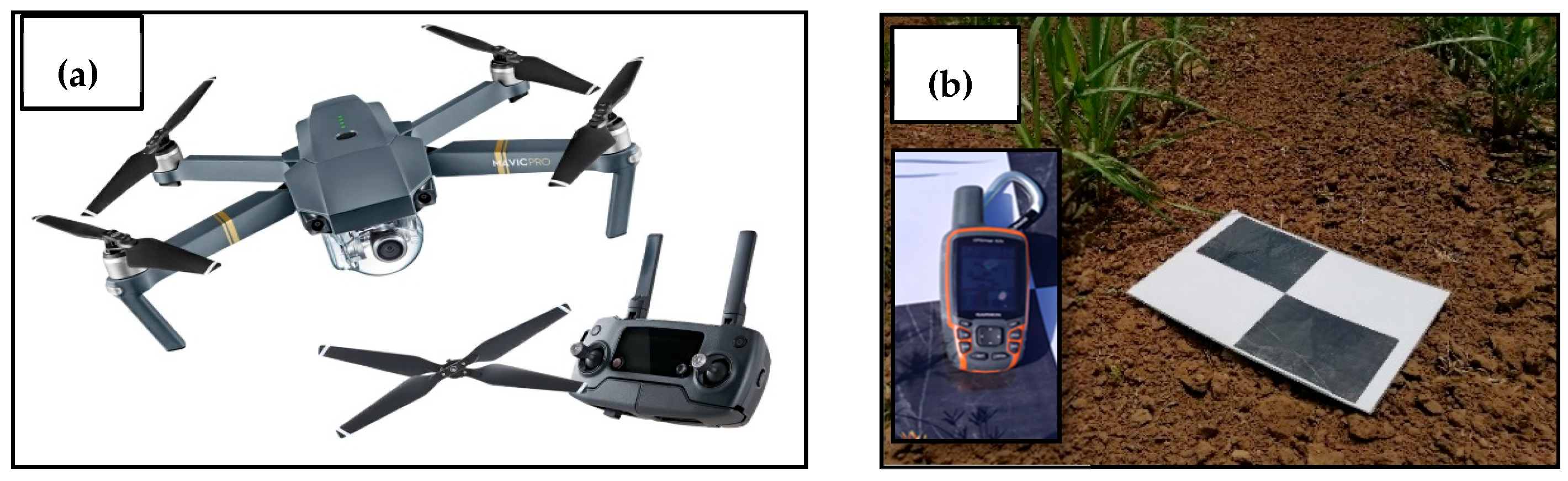

2.2. Description of the UAV

2.3. Flight Planning and Drone Image Acquisition

2.4. Ground Truth Data Collection

2.5. Feature Extraction from the UAV Image Data

2.5.1. UAV-Based Plant Height Generation from Crop Surface Models (CSM_PH)
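
Plant height from a crop surface model (CSM) is commonly obtained by subtracting a bare-ground digital terrain model (DTM) from the digital surface model (DSM) of the canopy. The sketch below assumes two co-registered rasters already loaded as NumPy arrays in metres. The helper names, the clipping of negative values to zero, and the use of the 90th percentile as the per-plot height are illustrative assumptions, not necessarily the procedure used to derive CSM_PH in this study.

```python
import numpy as np


def csm_plant_height(dsm: np.ndarray, dtm: np.ndarray) -> np.ndarray:
    """Per-pixel plant height (m) as DSM minus DTM (hypothetical helper)."""
    if dsm.shape != dtm.shape:
        raise ValueError("DSM and DTM must be co-registered rasters of the same shape")
    height = dsm.astype(np.float64) - dtm.astype(np.float64)
    # Assumption: small negative heights are photogrammetric noise over bare soil.
    return np.clip(height, 0.0, None)


def plot_height(height: np.ndarray, plot_mask: np.ndarray, percentile: float = 90.0) -> float:
    """Summarise the valid pixels inside one plot by a high percentile (assumed 90th)."""
    values = height[plot_mask & np.isfinite(height)]
    if values.size == 0:
        raise ValueError("plot mask selects no valid pixels")
    return float(np.percentile(values, percentile))


# Toy 2 x 2 rasters (elevations in m above an arbitrary datum).
dsm = np.array([[101.5, 102.0], [100.2, 99.9]])
dtm = np.full((2, 2), 100.0)
height = csm_plant_height(dsm, dtm)
print(height)
print(plot_height(height, np.ones((2, 2), dtype=bool)))
```

A high percentile rather than the maximum is a common way to damp isolated spikes in photogrammetric point clouds; the percentile is left as a parameter because the appropriate value depends on canopy structure.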

2.5.2. Computation of Vegetation Indices

2.5.3. Analysis of Crop Growth by Fractional Vegetation Cover (FVC) and Image Classification

2.6. Estimation of Plant Height Models for Crop Growth Monitoring by Machine Learning (ML)

2.6.1. Selection of Predictor Variables

2.6.2. Machine Learning Plant Height Prediction Models and Statistical Analysis

3. Results

3.1. Visual Inspection of Crop Growth Monitoring at Different Growth Stages in Orthomosaic Images

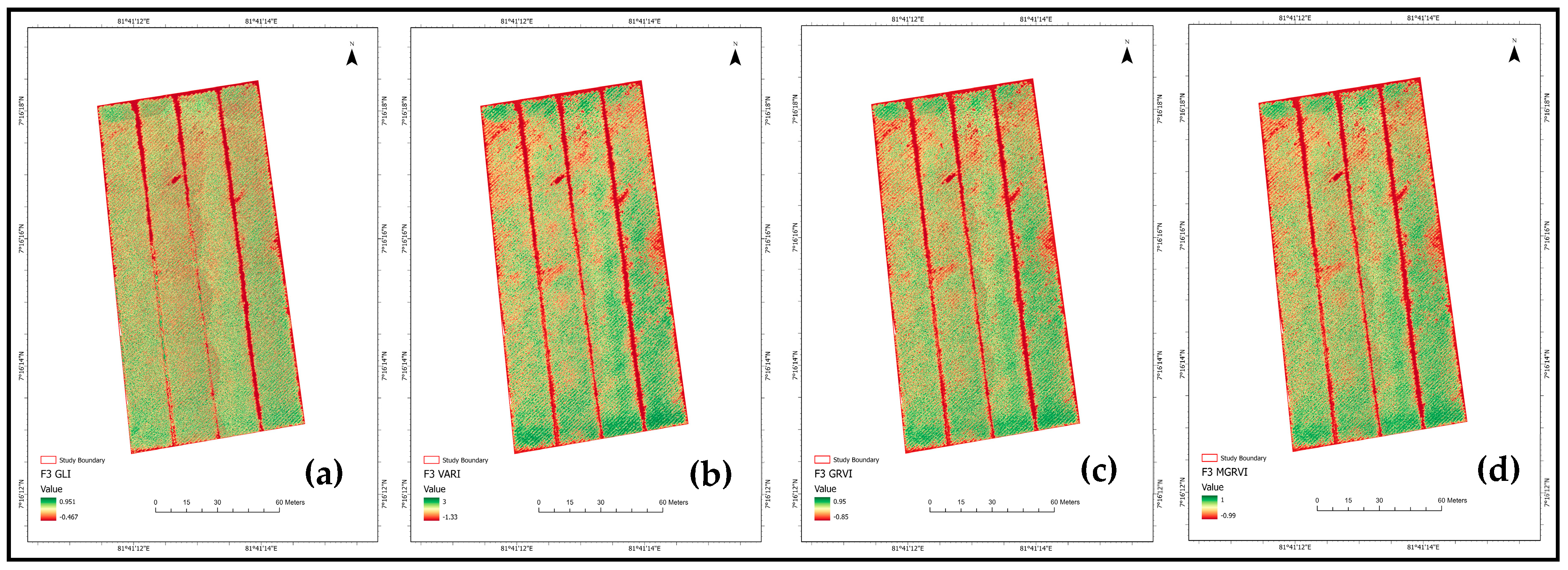

3.2. Vegetation Indices and Vegetation Cover Analysis at Different Growth Stages

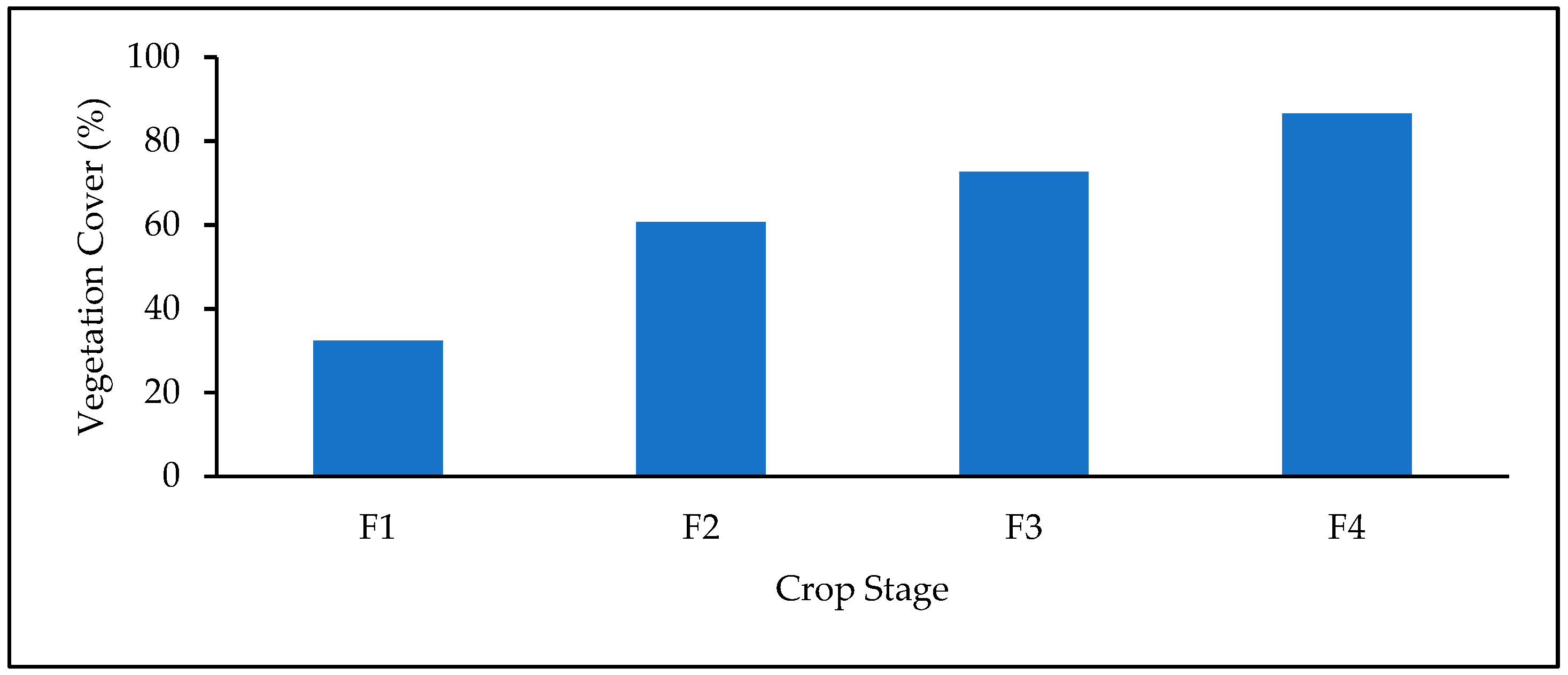

3.3. Image Classification and Fractional Vegetation Cover Analysis

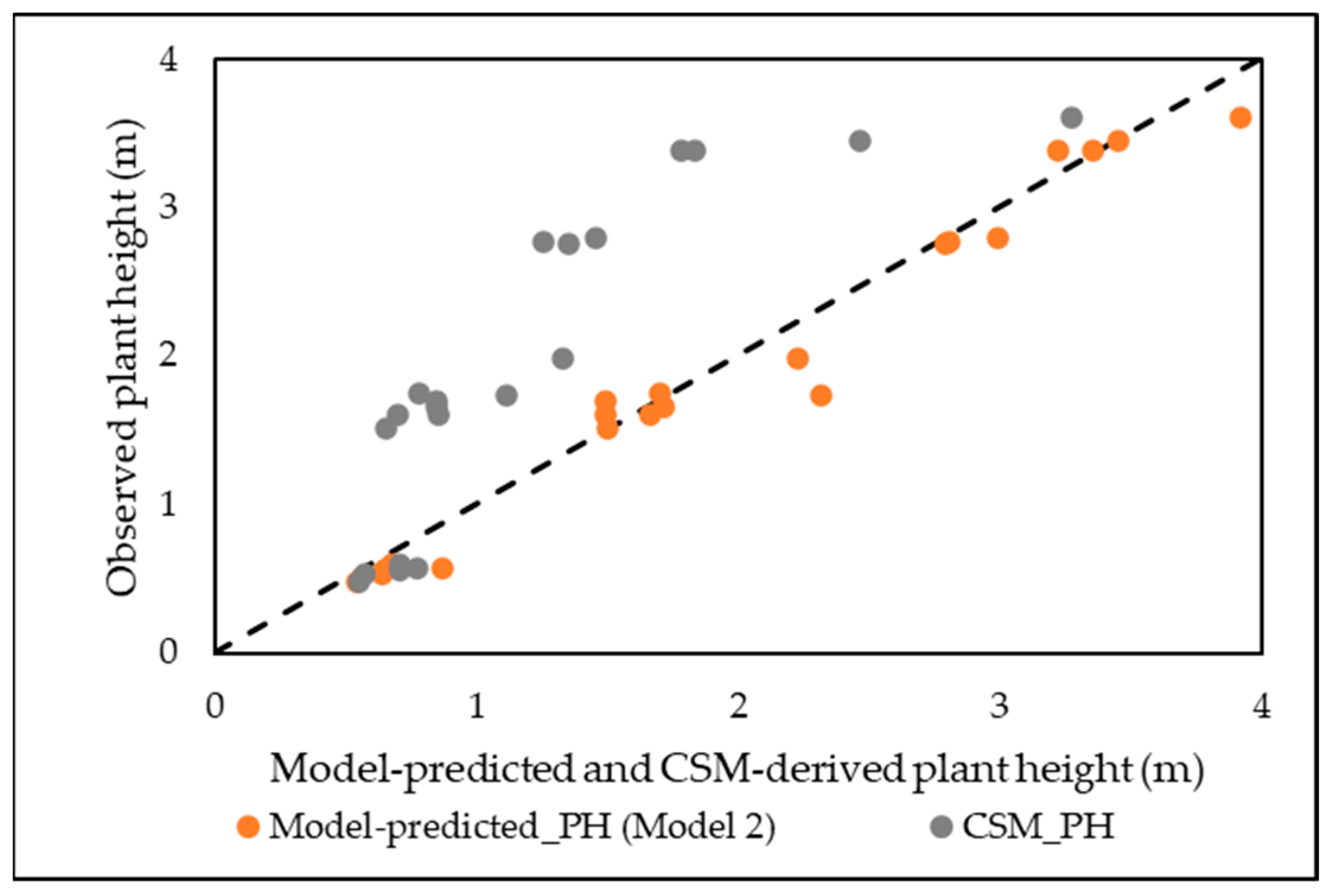

3.4. Plant Height Prediction from CSM

3.4.1. Selection of Predictor Variables by Sensitivity Analysis

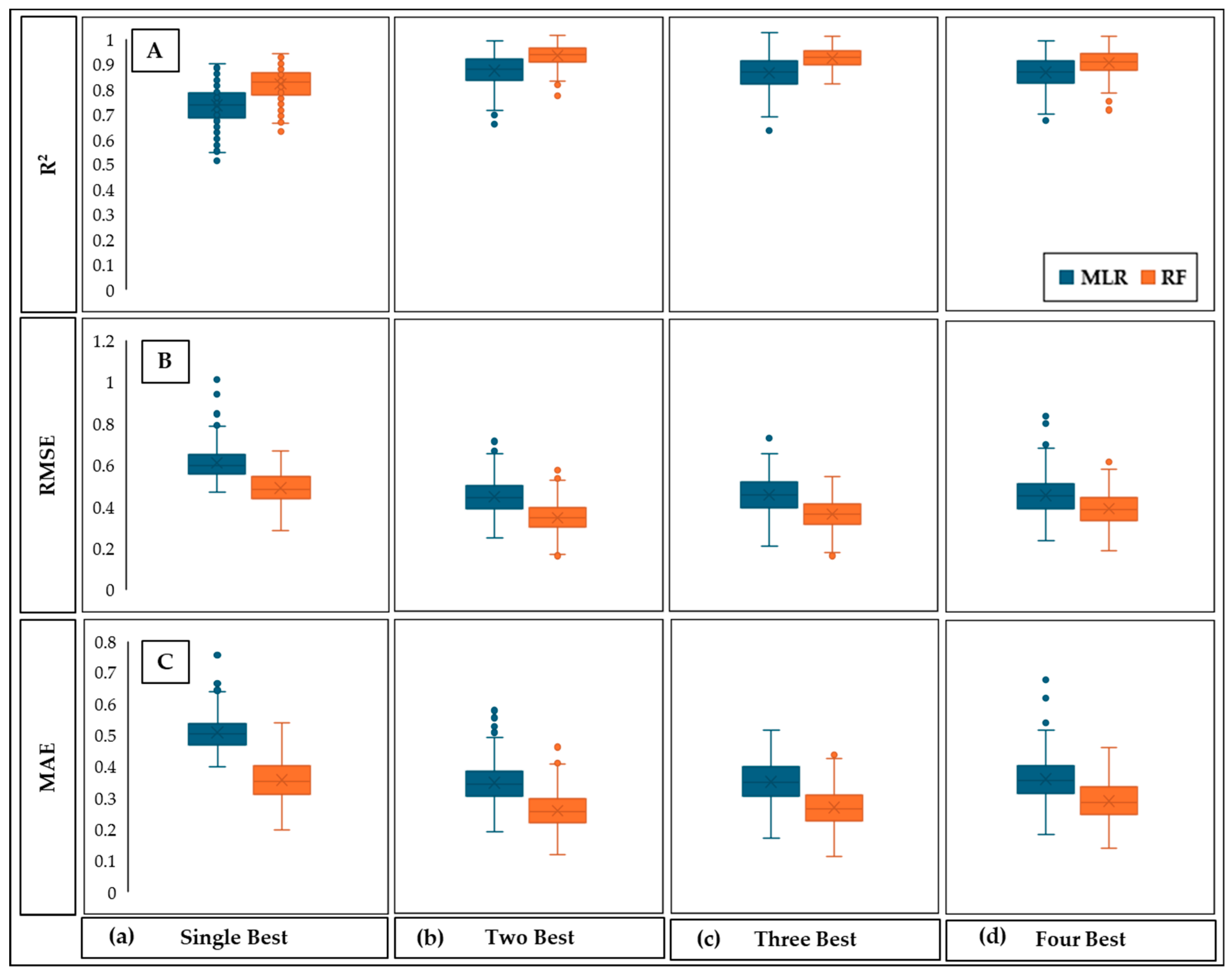

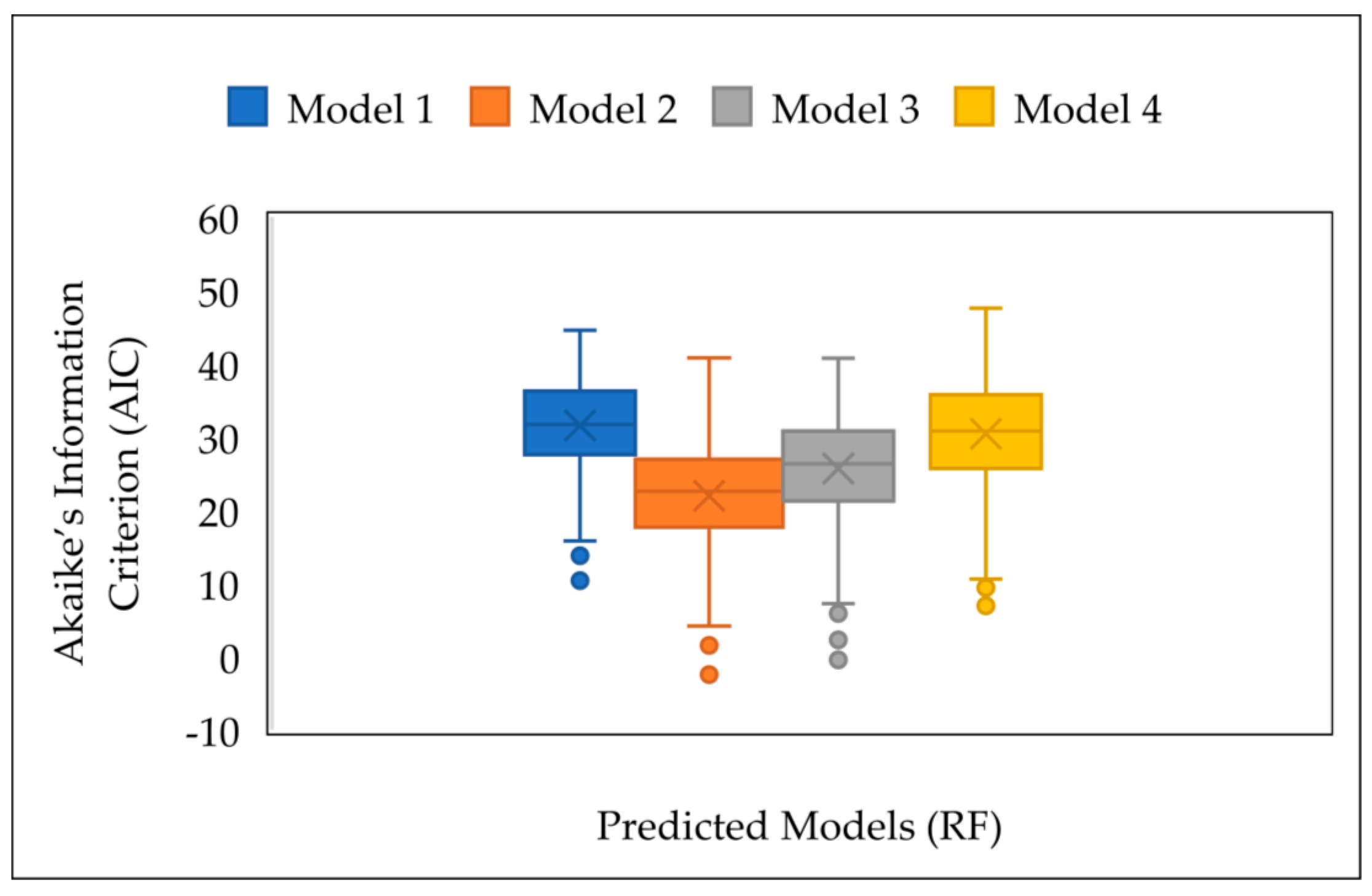

3.4.2. Prediction of Plant Height Using MLR and RF

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Fróna, D.; Szenderák, J.; Harangi-Rákos, M. The Challenge of Feeding the World. Sustainability 2019, 11, 5816.

2. Röös, E.; Bajželj, B.; Smith, P.; Patel, M.; Little, D.; Garnett, T. Greedy or needy? Land use and climate impacts of food in 2050 under different livestock futures. Glob. Environ. Chang. 2017, 47, 1–12.

3. Valin, H.; Sands, R.D.; Van der Mensbrugghe, D.; Nelson, G.C.; Ahammad, H.; Blanc, E.; Bodirsky, B.; Fujimori, S.; Hasegawa, T.; Havlik, P.; et al. The Future of Food Demand: Understanding Differences in Global Economic Models. Agric. Econ. 2014, 45, 51–67.

4. Turner, N.C.; Molyneux, N.; Yang, S.; Xiong, Y.C.; Siddique, K.H. Climate Change in South-West Australia and North-West China: Challenges and Opportunities for Crop Production. Crop Pasture Sci. 2011, 62, 445–456.

5. Siervo, M.; Montagnese, C.; Mathers, J.C.; Soroka, K.R.; Stephan, B.C.; Wells, J.C. Sugar Consumption and Global Prevalence of Obesity and Hypertension: An Ecological Analysis. Public Health Nutr. 2014, 17, 587–596.

6. Central Bank of Sri Lanka. Chapter 2: National Output, Expenditure, and Income (Annual Report 2019). Available online: https://www.cbsl.gov.lk/en/publications/economic-and-financial-reports/annual-reports/annual-report-2019 (accessed on 2 August 2021).

7. Department of Development Finance, Ministry of Finance. Development Policy for Sugar Industry in Sri Lanka. 2016. Available online: https://sugarres.lk/wp-content/uploads/2020/05/Policy-Paper-Sugar-Industry-Final.pdf (accessed on 2 August 2021).

8. FAO. Food Outlook—Biannual Report on Global Food Markets: November 2019; Food & Agriculture Organization: Rome, Italy, 2019; Available online: https://openknowledge.fao.org/server/api/core/bitstreams/5b53665b-3767-4681-9cad-ebf60d5d1dbe/content (accessed on 2 August 2021).

9. Molijn, R.A.; Iannini, L.; Vieira Rocha, J.; Hanssen, R.F. Sugarcane Productivity Mapping through C-Band and L-Band SAR and Optical Satellite Imagery. Remote Sens. 2019, 11, 1109.

10. Susantoro, T.M.; Wikantika, K.; Saepuloh, A.; Harsolumakso, A.H. Selection of Vegetation Indices for Mapping the Sugarcane Condition Around the Oil and Gas Field of North West Java Basin, Indonesia. IOP Conf. Ser. Earth Environ. Sci. 2018, 149, 012001.

11. Sanghera, G.S.; Malhotra, P.K.; Singh, H.; Bhatt, R. Climate Change Impact in Sugarcane Agriculture and Mitigation Strategies. In Harnessing Plant Biotechnology and Physiology to Stimulate Agricultural Growth; Agrobios: Jodhpur, India, 2019; pp. 99–115.

12. Bizzo, W.A.; Lenço, P.C.; Carvalho, D.J.; Veiga, J.P.S. The Generation of Residual Biomass during the Production of Bioethanol from Sugarcane, Its Characterization and Its Use in Energy Production. Renew. Sustain. Energy Rev. 2014, 29, 589–603.

13. Keerthipala, A.P. Development of sugar industry in Sri Lanka. Sugar Tech 2016, 18, 612–626.

14. Luna, I.; Lobo, A. Mapping Crop Planting Quality in Sugarcane from UAV Imagery: A Pilot Study in Nicaragua. Remote Sens. 2016, 8, 500.

15. Ji-Hua, M.; Bing-Fang, W. Study on the crop condition monitoring methods with remote sensing. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 37, 945–950.

16. Ji-Hua, M.; Bing-Fang, W.; Qiang-Zi, L. A Global Crop Growth Monitoring System Based on Remote Sensing. In Proceedings of the 2006 IEEE International Symposium on Geoscience and Remote Sensing, Denver, CO, USA, 31 July–4 August 2006; pp. 2277–2280.

17. Shafi, U.; Mumtaz, R.; García-Nieto, J.; Hassan, S.A.; Zaidi, S.A.R.; Iqbal, N. Precision Agriculture Techniques and Practices: From Considerations to Applications. Sensors 2019, 19, 3796.

18. Sishodia, R.P.; Ray, R.L.; Singh, S.K. Applications of Remote Sensing in Precision Agriculture: A Review. Remote Sens. 2020, 12, 3136.

19. Li, W.; Niu, Z.; Chen, H.; Li, D.; Wu, M.; Zhao, W. Remote Estimation of Canopy Height and Aboveground Biomass of Maize Using High-Resolution Stereo Images from a Low-Cost Unmanned Aerial Vehicle System. Ecol. Indic. 2016, 67, 637–648.

20. Chen, Y.; Feng, L.; Mo, J.; Mo, W.; Ding, M.; Liu, Z. Identification of Sugarcane with NDVI Time Series Based on HJ-1 CCD and MODIS Fusion. J. Indian Soc. Remote Sens. 2020, 48, 249–262.

21. Wan, L.; Li, Y.; Cen, H.; Zhu, J.; Yin, W.; Wu, W.; Zhu, H.; Sun, D.; Zhou, W.; He, Y. Combining UAV-Based Vegetation Indices and Image Classification to Estimate Flower Number in Oilseed Rape. Remote Sens. 2018, 10, 1484.

22. Yang, C. High Resolution Satellite Imaging Sensors for Precision Agriculture. Front. Agric. Sci. Eng. 2018, 5, 393–405.

23. Atzberger, C. Advances in Remote Sensing of Agriculture: Context Description, Existing Operational Monitoring Systems and Major Information Needs. Remote Sens. 2013, 5, 949–981.

24. Khanal, S.; KC, K.; Fulton, J.P.; Shearer, S.; Ozkan, E. Remote Sensing in Agriculture—Accomplishments, Limitations, and Opportunities. Remote Sens. 2020, 12, 3783.

25. Xiang, H.; Tian, L. Development of a Low-Cost Agricultural Remote Sensing System Based on an Autonomous Unmanned Aerial Vehicle (UAV). Biosyst. Eng. 2011, 108, 174–190.

26. Whitehead, K.; Hugenholtz, C.H. Remote Sensing of the Environment with Small Unmanned Aircraft Systems (UASs), Part 1: A Review of Progress and Challenges. J. Unmanned Vehicle Syst. 2014, 2, 69–85.

27. Tsouros, D.C.; Bibi, S.; Sarigiannidis, P.G. A Review on UAV-Based Applications for Precision Agriculture. Information 2019, 10, 349.

28. Strong, C.J.; Burnside, N.G.; Llewellyn, D. The Potential of Small Unmanned Aircraft Systems for the Rapid Detection of Threatened Unimproved Grassland Communities Using an Enhanced Normalized Difference Vegetation Index. PLoS ONE 2017, 12, e0186193.

29. Khan, Z.; Rahimi-Eichi, V.; Haefele, S.; Garnett, T.; Miklavcic, S.J. Estimation of Vegetation Indices for High-Throughput Phenotyping of Wheat Using Aerial Imaging. Plant Methods 2018, 14, 20.

30. Cucho-Padin, G.; Loayza, H.; Palacios, S.; Balcazar, M.; Carbajal, M.; Quiroz, R. Development of Low-Cost Remote Sensing Tools and Methods for Supporting Smallholder Agriculture. Appl. Geomat. 2020, 12, 247–263.

31. Shakhatreh, H.; Sawalmeh, A.H.; Al-Fuqaha, A.; Dou, Z.; Almaita, E.; Khalil, I.; Othman, N.S.; Khreishah, A.; Guizani, M. Unmanned Aerial Vehicles (UAVs): A Survey on Civil Applications and Key Research Challenges. IEEE Access 2019, 7, 48572–48634.

32. Freeman, P.K.; Freeland, R.S. Agricultural UAVs in the US: Potential, Policy, and Hype. Remote Sens. Appl. Soc. Environ. 2015, 2, 35–43.

33. Zarco-Tejada, P.J.; González-Dugo, V.; Berni, J.A. Fluorescence, Temperature and Narrow-Band Indices Acquired from a UAV Platform for Water Stress Detection Using a Micro-Hyperspectral Imager and a Thermal Camera. Remote Sens. Environ. 2012, 117, 322–337.

34. Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-Based Plant Height from Crop Surface Models, Visible, and Near Infrared Vegetation Indices for Biomass Monitoring in Barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.

35. Yuan, H.; Yang, G.; Li, C.; Wang, Y.; Liu, J.; Yu, H.; Feng, H.; Xu, B.; Zhao, X.; Yang, X. Retrieving Soybean Leaf Area Index from Unmanned Aerial Vehicle Hyperspectral Remote Sensing: Analysis of RF, ANN, and SVM Regression Models. Remote Sens. 2017, 9, 309.

36. Tumlisan, G.Y. Monitoring Growth Development and Yield Estimation of Maize Using Very High-Resolution UAV-Images in Gronau, Germany. Master’s Thesis, University of Twente, Enschede, The Netherlands, February 2017.

37. Wang, X.; Zhang, R.; Song, W.; Han, L.; Liu, X.; Sun, X.; Luo, X.; Chen, K.; Zhang, Y.; Yang, G.; et al. Dynamic Plant Height QTL Revealed in Maize Through Remote Sensing Phenotyping Using a High-Throughput Unmanned Aerial Vehicle (UAV). Sci. Rep. 2019, 9, 3458.

38. Panday, U.S.; Shrestha, N.; Maharjan, S.; Pratihast, A.K.; Shahnawaz; Shrestha, K.L.; Aryal, J. Correlating the Plant Height of Wheat with Above-Ground Biomass and Crop Yield Using Drone Imagery and Crop Surface Model, A Case Study from Nepal. Drones 2020, 4, 28.

39. Fu, Z.; Jiang, J.; Gao, Y.; Krienke, B.; Wang, M.; Zhong, K.; Cao, Q.; Tian, Y.; Zhu, Y.; Cao, W.; et al. Wheat Growth Monitoring and Yield Estimation based on Multi-Rotor Unmanned Aerial Vehicle. Remote Sens. 2020, 12, 508.

40. Somard, J.; Hossain, M.D.; Ninsawat, S.; Veerachitt, V. Pre-Harvest Sugarcane Yield Estimation Using UAV-Based RGB Images and Ground Observation. Sugar Tech 2018, 20, 645–657.

41. Basso, B.; Cammarano, D.; De Vita, P. Remotely Sensed Vegetation Indices: Theory and Applications for Crop Management. Ital. J. Agrometeorol. 2004, 53, 36–53.

42. Ni, J.; Yao, L.; Zhang, J.; Cao, W.; Zhu, Y.; Tai, X. Development of an Unmanned Aerial Vehicle-Borne Crop-Growth Monitoring System. Sensors 2017, 17, 502.

43. Stanton, C.; Starek, M.J.; Elliott, N.; Brewer, M.; Maeda, M.M.; Chu, T. Unmanned Aircraft System-Derived Crop Height and Normalized Difference Vegetation Index Metrics for Sorghum Yield and Aphid Stress Assessment. J. Appl. Remote Sens. 2017, 11, 026035.

44. De Swaef, T.; Maes, W.H.; Aper, J.; Baert, J.; Cougnon, M.; Reheul, D.; Steppe, K.; Roldán-Ruiz, I.; Lootens, P. Applying RGB- and Thermal-Based Vegetation Indices from UAVs for High-Throughput Field Phenotyping of Drought Tolerance in Forage Grasses. Remote Sens. 2021, 13, 147.

45. Kazemi, F.; Parmehr, E.G. Evaluation of RGB Vegetation Indices Derived from UAV Images for Rice Crop Growth Monitoring. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2023, 10, 385–390.

46. Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel Algorithms for Remote Estimation of Vegetation Fraction. Remote Sens. Environ. 2002, 80, 76–87.

47. Trevisan, L.R.; Brichi, L.; Gomes, T.M.; Rossi, F. Estimating Black Oat Biomass Using Digital Surface Models and a Vegetation Index Derived from RGB-Based Aerial Images. Remote Sens. 2023, 15, 1363.

48. Han, L.; Yang, G.; Yang, H.; Xu, B.; Li, Z.; Yang, X. Clustering Field-Based Maize Phenotyping of Plant-Height Growth and Canopy Spectral Dynamics Using a UAV Remote-Sensing Approach. Front. Plant Sci. 2018, 9, 1638.

49. Sumesh, K.C.; Ninsawat, S.; Som-Ard, J. Integration of RGB-Based Vegetation Index, Crop Surface Model and Object-Based Image Analysis Approach for Sugarcane Yield Estimation Using Unmanned Aerial Vehicle. Comput. Electron. Agric. 2021, 180, 105903.

50. Yu, D.; Zha, Y.; Shi, L.; Jin, X.; Hu, S.; Yang, Q.; Huang, K.; Zeng, W. Improvement of Sugarcane Yield Estimation by Assimilating UAV-Derived Plant Height Observations. Eur. J. Agron. 2020, 121, 126159.

51. Han, X.; Thomasson, J.A.; Bagnall, G.C.; Pugh, N.A.; Horne, D.W.; Rooney, W.L.; Jung, J.; Chang, A.; Malambo, L.; Popescu, S.C.; et al. Measurement and Calibration of Plant-Height from Fixed-Wing UAV Images. Sensors 2018, 18, 4092.

52. Lu, N.; Zhou, J.; Han, Z.; Li, D.; Cao, Q.; Yao, X.; Tian, Y.; Zhu, Y.; Cao, W.; Cheng, T. Improved Estimation of Aboveground Biomass in Wheat from RGB Imagery and Point Cloud Data Acquired with a Low-Cost Unmanned Aerial Vehicle System. Plant Methods 2019, 15, 17.

53. Abdullakasim, W.; Kasemjit, A.; Satirametekul, T. Estimation of Sugarcane Growth Using Vegetation Indices Derived from Aerial Images. In Proceedings of the 1st International Conference on Innovation for Resilient Agriculture, Bangkok, Thailand, 6–8 October 2022; pp. 174–181.

54. Vasconcelos, J.C.S.; Speranza, E.A.; Antunes, J.F.G.; Barbosa, L.A.F.; Christofoletti, D.; Severino, F.J.; de Almeida Cançado, G.M. Development and Validation of a Model Based on Vegetation Indices for the Prediction of Sugarcane Yield. AgriEngineering 2023, 5, 698–719.

55. Ruwanpathirana, P.P.; Madushanka, P.L.A.; Jayasinghe, G.Y.; Wijekoon, W.M.C.J.; Priyankara, A.C.P.; Kazuhitho, S. Assessment of the Optimal Flight Time of RGB Image Based Unmanned Aerial Vehicles for Crop Monitoring. Rajarata Univ. J. 2021, 6, 765.

56. Turner, D.; Lucieer, A.; Watson, C. An Automated Technique for Generating Georectified Mosaics from Ultra-High Resolution Unmanned Aerial Vehicle (UAV) Imagery, Based on Structure from Motion (SfM) Point Clouds. Remote Sens. 2012, 4, 1392–1410.

57. Bendig, J.; Bolten, A.; Bennertz, S.; Broscheit, J.; Eichfuss, S.; Bareth, G. Estimating Biomass of Barley Using Crop Surface Models (CSMs) Derived from UAV-Based RGB Imaging. Remote Sens. 2014, 6, 10395–10412.

58. Pepe, M.; Costantino, D.; Alfio, V.S.; Vozza, G.; Cartellino, E. A Novel Method Based on Deep Learning, GIS and Geomatics Software for Building a 3D City Model from VHR Satellite Stereo Imagery. ISPRS Int. J. Geo-Inf. 2021, 10, 697.

59. Tucker, C.J. Red and Photographic Infrared Linear Combinations for Monitoring Vegetation. Remote Sens. Environ. 1979, 8, 127–150.

60. Louhaichi, M.; Borman, M.M.; Johnson, D.E. Spatially Located Platform and Aerial Photography for Documentation of Grazing Impacts on Wheat. Geocarto Int. 2001, 16, 65–70.

61. Gao, L.; Wang, X.; Johnson, B.A.; Tian, Q.; Wang, Y.; Verrelst, J.; Mu, X.; Gu, X. Remote Sensing Algorithms for Estimation of Fractional Vegetation Cover Using Pure Vegetation Index Values: A Review. ISPRS J. Photogramm. Remote Sens. 2020, 159, 364–377.

62. Wolff, F.; Kolari, T.H.; Villoslada, M.; Tahvanainen, T.; Korpelainen, P.; Zamboni, P.A.; Kumpula, T. RGB vs. Multispectral Imagery: Mapping Aapa Mire Plant Communities with UAVs. Ecol. Indic. 2023, 148, 110140.

63. Bascon, M.V.; Nakata, T.; Shibata, S.; Takata, I.; Kobayashi, N.; Kato, Y.; Inoue, S.; Doi, K.; Murase, J.; Nishiuchi, S. Estimating Yield-Related Traits Using UAV-Derived Multispectral Images to Improve Rice Grain Yield Prediction. Agriculture 2022, 12, 1141.

64. Han, L.; Yang, G.; Dai, H.; Xu, B.; Yang, H.; Feng, H.; Li, Z.; Yang, X. Modeling Maize Above-Ground Biomass Based on Machine Learning Approaches Using UAV Remote-Sensing Data. Plant Methods 2019, 15, 10.

65. Barbosa, B.D.S.; Ferraz, G.A.S.; Costa, L.; Ampatzidis, Y.; Vijayakumar, V.; dos Santos, L.M. UAV-Based Coffee Yield Prediction Utilizing Feature Selection and Deep Learning. Smart Agric. Technol. 2021, 1, 100010.

66. Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

67. de Oliveira, R.P.; Barbosa Júnior, M.R.; Pinto, A.A.; Oliveira, J.L.P.; Zerbato, C.; Furlani, C.E.A. Predicting Sugarcane Biometric Parameters by UAV Multispectral Images and Machine Learning. Agronomy 2022, 12, 1992.

68. Akaike, H. A New Look at the Statistical Model Identification. IEEE Trans. Autom. Control 1974, 19, 716–723.

69. Xu, J.-X.; Ma, J.; Tang, Y.-N.; Wu, W.-X.; Shao, J.-H.; Wu, W.-B.; Wei, S.-Y.; Liu, Y.-F.; Wang, Y.-C.; Guo, H.-Q. Estimation of Sugarcane Yield Using a Machine Learning Approach Based on UAV-LiDAR Data. Remote Sens. 2020, 12, 2823.

70. Niu, Y.; Zhang, L.; Zhang, H.; Han, W.; Peng, X. Estimating Above-Ground Biomass of Maize Using Features Derived from UAV-Based RGB Imagery. Remote Sens. 2019, 11, 1261.

71. Montibeller, M.; da Silveira, H.L.F.; Sanches, I.D.A.; Körting, T.S.; Fonseca, L.M.G. Identification of gaps in sugarcane plantations using UAV images. In Proceedings of the Brazilian Symposium on Remote Sensing, Santos, Brazil, 28–31 May 2017; pp. 1169–1176.

72. Du, M.; Noguchi, N. Monitoring of Wheat Growth Status and Mapping of Wheat Yield’s Within-Field Spatial Variations Using Color Images Acquired from UAV-Camera System. Remote Sens. 2017, 9, 289.

73. Zhao, H.; Lee, J. The Feasibility of Consumer RGB Camera Drones in Evaluating Multitemporal Vegetation Status of a Selected Area: A Technical Note. Papers Appl. Geogr. 2020, 6, 480–488.

74. Sanches, G.M.; Duft, D.G.; Kölln, O.T.; Luciano, A.C.D.S.; De Castro, S.G.Q.; Okuno, F.M.; Franco, H.C.J. The Potential for RGB Images Obtained Using Unmanned Aerial Vehicle to Assess and Predict Yield in Sugarcane Fields. Int. J. Remote Sens. 2018, 39, 5402–5414.

75. Li, H.; Yan, X.; Su, P.; Su, Y.; Li, J.; Xu, Z.; Gao, C.; Zhao, Y.; Feng, M.; Shafiq, F.; et al. Estimation of Winter Wheat LAI Based on Color Indices and Texture Features of RGB Images Taken by UAV. J. Sci. Food Agric. 2024, 104, 13807.

76. Felix, F.C.; Cândido, B.M.; de Moraes, J.F.L. How Suitable Are Vegetation Indices for Estimating the (R) USLE C-Factor for Croplands? A Case Study from Southeast Brazil. ISPRS Open J. Photogramm. Remote Sens. 2023, 10, 100050.

77. Poudyal, C.; Sandhu, H.; Ampatzidis, Y.; Odero, D.C.; Arbelo, O.C.; Cherry, R.H.; Costa, L.F. Prediction of Morpho-Physiological Traits in Sugarcane Using Aerial Imagery and Machine Learning. Smart Agric. Technol. 2023, 3, 100104.

78. de Souza, C.H.W.; Lamparelli, R.A.C.; Rocha, J.V.; Magalhães, P.S.G. Mapping Skips in Sugarcane Fields Using Object-Based Analysis of Unmanned Aerial Vehicle (UAV) Images. Comput. Electron. Agric. 2017, 143, 49–56.

79. Niu, Y.; Han, W.; Zhang, H.; Zhang, L.; Chen, H. Estimating Fractional Vegetation Cover of Maize under Water Stress from UAV Multispectral Imagery Using Machine Learning Algorithms. Comput. Electron. Agric. 2021, 189, 106414.

80. Kawamura, K.; Asai, H.; Yasuda, T.; Khanthavong, P.; Soisouvanh, P.; Phongchanmixay, S. Field Phenotyping of Plant Height in an Upland Rice Field in Laos Using Low-Cost Small Unmanned Aerial Vehicles (UAVs). Plant Prod. Sci. 2020, 23, 452–465.

81. Aasen, H.; Burkart, A.; Bolten, A.; Bareth, G. Generating 3D hyperspectral information with lightweight UAV snapshot cameras for vegetation monitoring: From camera calibration to quality assurance. ISPRS J. Photogramm. Remote Sens. 2015, 108, 245–259.

82. Ramos, A.P.M.; Osco, L.P.; Furuya, D.E.G.; Gonçalves, W.N.; Santana, D.C.; Teodoro, L.P.R.; da Silva Junior, C.A.; Capristo-Silva, G.F.; Li, J.; Baio, F.H.R.; et al. A Random Forest Ranking Approach to Predict Yield in Maize with UAV-Based Vegetation Spectral Indices. Comput. Electron. Agric. 2020, 178, 105791.

83. Ranđelović, P.; Đorđević, V.; Milić, S.; Balešević-Tubić, S.; Petrović, K.; Miladinović, J.; Đukić, V. Prediction of Soybean Plant Density Using a Machine Learning Model and Vegetation Indices Extracted from RGB Images Taken with a UAV. Agronomy 2020, 10, 1108.

84. Teodoro, P.E.; Teodoro, L.P.; Baio, F.H.; da Silva Junior, C.A.; dos Santos, R.G.; Ramos, A.P.; Pinheiro, M.M.; Osco, L.P.; Gonçalves, W.N.; Carneiro, A.M.; et al. Predicting Days to Maturity, Plant Height, and Grain Yield in Soybean: A Machine and Deep Learning Approach Using Multispectral Data. Remote Sens. 2021, 13, 4632.

| Flight No | Days after Planting (DAP) | Growth Stage | Images Collected |
|---|---|---|---|
| 1 (F1) | 81 | Tillering | 69 |
| 2 (F2) | 141 | Grand growth | 69 |
| 3 (F3) | 201 | Grand growth | 69 |
| 4 (F4) | 251 | Ripening | 69 |

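| VI | Name | Formula | Reference |
|---|---|---|---|
| GRVI | Green–Red Vegetation Index | (G − R)/(G + R) | [34] |
| VARI | Visible Atmospherically Resistant Index | (G − R)/(G + R − B) | [46] |
| GLI | Green Leaf Index | (2G − R − B)/(2G + R + B) | [60] |
| MGRVI | Modified Green–Red Vegetation Index | (G² − R²)/(G² + R²) | [34] |

R, G and B denote the red, green and blue band values of each pixel.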
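
The four RGB indices listed in the vegetation-index table can be computed per pixel from the orthomosaic bands. A minimal NumPy sketch, assuming an H × W × 3 array in R, G, B order and the standard definitions of GRVI and MGRVI [34], VARI [46] and GLI [60]; the helper name and the zero-denominator guard are implementation choices for illustration, not something specified in the source.

```python
import numpy as np


def rgb_vegetation_indices(rgb: np.ndarray, eps: float = 1e-9) -> dict:
    """Compute GRVI, VARI, GLI and MGRVI for an (H, W, 3) array in R, G, B order."""
    # Cast to float so 8-bit inputs cannot overflow when squared or summed.
    r, g, b = (rgb[..., i].astype(np.float64) for i in range(3))

    def ratio(num: np.ndarray, den: np.ndarray) -> np.ndarray:
        # Return 0 where the denominator is (near) zero instead of inf/NaN.
        ok = np.abs(den) > eps
        return np.where(ok, num / np.where(ok, den, 1.0), 0.0)

    return {
        "GRVI": ratio(g - r, g + r),
        "VARI": ratio(g - r, g + r - b),
        "GLI": ratio(2 * g - r - b, 2 * g + r + b),
        "MGRVI": ratio(g**2 - r**2, g**2 + r**2),
    }


# One 8-bit pixel with R = 100, G = 150, B = 50.
pixel = np.array([[[100, 150, 50]]], dtype=np.uint8)
for name, value in rgb_vegetation_indices(pixel).items():
    print(name, round(float(value[0, 0]), 3))
```

Each index is a ratio of terms of equal degree, so the result does not change if the bands are first rescaled per pixel (for example, divided by R + G + B). For the example pixel, GRVI = 50/250 = 0.2 and VARI = 50/200 = 0.25.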

| Flight No | GLI Mean | GLI SD | VARI Mean | VARI SD | GRVI Mean | GRVI SD | MGRVI Mean | MGRVI SD |
|---|---|---|---|---|---|---|---|---|
| F1 | 0.037 a | 0.021 | −0.038 a | 0.042 | −0.022 a | 0.026 | −0.044 a | 0.051 |
| F2 | 0.111 b | 0.018 | 0.130 b | 0.053 | 0.057 b | 0.023 | 0.114 b | 0.047 |
| F3 | 0.135 b | 0.047 | 0.098 b | 0.070 | 0.072 b | 0.049 | 0.138 b | 0.095 |
| F4 | 0.129 b | 0.021 | 0.086 b | 0.069 | 0.056 b | 0.038 | 0.108 b | 0.073 |

| Flight No | Classified FVC (%) | GLI FVC (%) | GLI Accuracy (%) | VARI FVC (%) | VARI Accuracy (%) | GRVI FVC (%) | GRVI Accuracy (%) | MGRVI FVC (%) | MGRVI Accuracy (%) |
|---|---|---|---|---|---|---|---|---|---|
| F1 | 32.37 | 30.55 | 98.18 | 30.25 | 97.88 | 29.95 | 97.59 | 31.25 | 98.89 |
| F2 | 60.67 | 56.54 | 95.85 | 57.65 | 96.96 | 55.37 | 94.67 | 58.21 | 97.52 |
| F3 | 72.56 | 71.13 | 98.56 | 70.33 | 97.76 | 69.95 | 97.39 | 71.65 | 99.09 |
| F4 | 86.47 | 71.23 | 85.09 | 70.64 | 84.17 | 71.70 | 85.23 | 81.05 | 94.58 |
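
In the fractional vegetation cover (FVC) table, most Accuracy entries can be reproduced, to within a few hundredths of a percentage point, as 100 minus the absolute difference between the classified FVC and the index-based FVC (for F1 and GLI, 100 − |32.37 − 30.55| = 98.18). The sketch below uses that inferred relation together with a simple threshold rule for the index-based FVC. Both the relation and the threshold value are assumptions for illustration rather than the authors' documented method, and the helper names are hypothetical.

```python
import numpy as np


def fvc_from_index(index: np.ndarray, threshold: float) -> float:
    """Percentage of valid pixels whose index value exceeds `threshold`."""
    valid = np.isfinite(index)
    n_valid = int(np.count_nonzero(valid))
    if n_valid == 0:
        raise ValueError("index raster contains no valid pixels")
    # Pixels above the (assumed) threshold are counted as vegetation.
    n_veg = int(np.count_nonzero(index[valid] > threshold))
    return 100.0 * n_veg / n_valid


def fvc_agreement(fvc_classified: float, fvc_index: float) -> float:
    """Agreement (%) as 100 minus the absolute FVC difference in percentage points."""
    return 100.0 - abs(fvc_classified - fvc_index)


# Hypothetical GLI raster with one NaN (e.g. a masked pixel) and a threshold of 0.
gli = np.array([[-0.10, 0.05], [0.20, np.nan]])
print(fvc_from_index(gli, threshold=0.0))
print(round(fvc_agreement(32.37, 30.55), 2))
```

Counting pixels above a threshold is only one way to turn an index image into FVC; pixel-unmixing approaches based on pure soil and pure vegetation index values [61] are a common alternative.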

| Statistic | F1 | F2 | F3 | F4 |
|---|---|---|---|---|
| Mean | 0.64 | 0.83 | 1.49 | 2.29 |
| Median | 0.61 | 0.82 | 1.43 | 2.19 |
| Max | 1.00 | 1.33 | 2.12 | 3.56 |
| Min | 0.40 | 0.65 | 0.80 | 1.36 |
| SD | 0.13 | 0.17 | 0.34 | 0.59 |
| CV % | 20.31 | 20.48 | 22.82 | 25.76 |
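
The CV % row of the plant-height summary table equals the SD divided by the mean, times 100 (for F4, 0.59/2.29 × 100 ≈ 25.76). A short sketch of the same summary for one flight using Python's `statistics` module; whether the study used the sample (n − 1) or the population SD is not stated, so the sample SD here is an assumption, and the input values are hypothetical.

```python
import statistics


def describe_heights(heights: list) -> dict:
    """Summary statistics for one flight, mirroring the rows of the table."""
    mean = statistics.mean(heights)
    sd = statistics.stdev(heights)  # sample SD (n - 1); an assumption
    return {
        "Mean": mean,
        "Median": statistics.median(heights),
        "Max": max(heights),
        "Min": min(heights),
        "SD": sd,
        "CV %": 100.0 * sd / mean,
    }


# Hypothetical plot-level heights (m) for a single flight.
summary = describe_heights([0.9, 1.2, 1.5, 1.8, 2.1])
for key, value in summary.items():
    print(f"{key}: {value:.2f}")
```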

| Variable | Rank | Pearson’s Correlation Coefficient | Mutual Information |
|---|---|---|---|
| CSM_PH | 1 | 0.850 | 0.706 |
| GLI | 2 | 0.736 | 0.502 |
| GRVI | 3 | 0.563 | 0.397 |
| MGRVI | 4 | 0.558 | 0.390 |
| VARI | 5 | 0.466 | 0.367 |
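
The predictor-ranking table scores each candidate variable against plant height with Pearson's correlation coefficient and mutual information. A sketch with NumPy and scikit-learn's `mutual_info_regression`, run on synthetic data; ranking by the absolute Pearson coefficient, the helper name, the synthetic variables and the random seeds are assumptions for illustration, and the mutual-information values also depend on the estimator's nearest-neighbour settings.

```python
import numpy as np
from sklearn.feature_selection import mutual_info_regression


def rank_predictors(X: np.ndarray, y: np.ndarray, names: list, seed: int = 0) -> list:
    """Return (name, rank, Pearson r, mutual information) tuples sorted by |r|."""
    pearson = [float(np.corrcoef(X[:, j], y)[0, 1]) for j in range(X.shape[1])]
    mi = mutual_info_regression(X, y, random_state=seed)
    order = np.argsort(-np.abs(pearson))  # strongest linear association first
    return [(names[j], rank + 1, pearson[j], float(mi[j])) for rank, j in enumerate(order)]


# Synthetic example: one strong, one moderate and one uninformative predictor.
rng = np.random.default_rng(42)
height = rng.uniform(0.4, 3.5, size=200)
X = np.column_stack([
    height + rng.normal(0, 0.2, 200),          # stands in for CSM_PH
    0.05 * height + rng.normal(0, 0.03, 200),  # stands in for GLI
    rng.normal(0, 0.05, 200),                  # stands in for an uninformative index
])
for row in rank_predictors(X, height, ["CSM_PH", "GLI", "NOISE"]):
    print(row)
```

Reporting both measures is useful because Pearson's r only captures linear association, whereas mutual information also responds to non-linear dependence; in the table above the two measures happen to give the same order.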

| Model Number | Input Variables | MLR R² | MLR RMSE (m) | MLR MAE (m) | RF R² | RF RMSE (m) | RF MAE (m) |
|---|---|---|---|---|---|---|---|
| 1 | Single best | 0.73 | 0.601 | 0.50 | 0.82 | 0.49 | 0.36 |
| 2 | Two best | 0.84 | 0.46 | 0.35 | 0.90 | 0.37 | 0.27 |
| 3 | Three best | 0.83 | 0.47 | 0.36 | 0.89 | 0.38 | 0.28 |
| 4 | Four best | 0.83 | 0.47 | 0.37 | 0.87 | 0.41 | 0.30 |
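
The model-comparison table evaluates multiple linear regression (MLR) and random forest (RF) as predictors are added in rank order (single best, two best, and so on). A sketch of that comparison with scikit-learn on synthetic data; the 70/30 hold-out split, the number of trees, the helper name and the synthetic variables are assumptions, and the sketch is not expected to reproduce the reported metrics.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score
from sklearn.model_selection import train_test_split


def compare_models(X: np.ndarray, y: np.ndarray, seed: int = 0) -> dict:
    """Fit MLR and RF on a hold-out split and return R2, RMSE and MAE for each."""
    X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, random_state=seed)
    models = {
        "MLR": LinearRegression(),
        "RF": RandomForestRegressor(n_estimators=200, random_state=seed),
    }
    scores = {}
    for name, model in models.items():
        pred = model.fit(X_tr, y_tr).predict(X_te)
        scores[name] = {
            "R2": r2_score(y_te, pred),
            "RMSE": float(np.sqrt(mean_squared_error(y_te, pred))),
            "MAE": mean_absolute_error(y_te, pred),
        }
    return scores


# Synthetic predictors, columns already ordered by rank (best first).
rng = np.random.default_rng(7)
height = rng.uniform(0.4, 3.5, size=200)
X = np.column_stack([
    height + rng.normal(0, 0.3, 200),
    0.05 * height + rng.normal(0, 0.03, 200),
    rng.normal(0, 0.05, 200),
])
for k in range(1, X.shape[1] + 1):
    # Model k uses the k best-ranked predictors, as in the table.
    print(k, compare_models(X[:, :k], height))
```

RMSE is computed as the square root of `mean_squared_error` rather than through a keyword argument, which keeps the sketch compatible across scikit-learn versions.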

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ruwanpathirana, P.P.; Sakai, K.; Jayasinghe, G.Y.; Nakandakari, T.; Yuge, K.; Wijekoon, W.M.C.J.; Priyankara, A.C.P.; Samaraweera, M.D.S.; Madushanka, P.L.A. Evaluation of Sugarcane Crop Growth Monitoring Using Vegetation Indices Derived from RGB-Based UAV Images and Machine Learning Models. Agronomy 2024, 14, 2059. https://doi.org/10.3390/agronomy14092059
