Abstract

Breeding technology is a necessary means of agricultural development, and the automatic identification of poor seeds has become a trend in modern breeding. China is one of the main producers of buckwheat, and the cultivation of Hongshan buckwheat plays an important role in agricultural production. Because seed quality affects the final yield, improving buckwheat breeding technology is particularly important. To quickly and accurately identify broken Hongshan buckwheat seeds, an identification algorithm based on an improved YOLOv5s model is proposed. First, the Ghost module was added to the YOLOv5s model, which improved the model’s inference speed. Second, the bidirectional feature pyramid network (BiFPN) was introduced into the neck of the YOLOv5s model, which facilitates the multi-scale fusion of Hongshan buckwheat seed features. Finally, the Ghost module and BiFPN were combined to form the YOLOv5s+Ghost+BiFPN model for identifying broken Hongshan buckwheat seeds. The results show that the precision of the YOLOv5s+Ghost+BiFPN model is 99.7%, which is 11.7% higher than that of the YOLOv5s model, 1.3% higher than that of the YOLOv5s+Ghost model, and 0.7% higher than that of the YOLOv5s+BiFPN model. We then compared the FLOPs, model size, and confidence. Compared to the YOLOv5s model, the FLOPs decreased by 6.8 G and the model size decreased by 5.2 MB. Compared to the YOLOv5s+BiFPN model, the FLOPs decreased by 8.1 G and the model size decreased by 7.3 MB. Compared to the YOLOv5s+Ghost model, the FLOPs increased by only 0.9 G and the model size increased by only 1.4 MB. The confidence scores of the YOLOv5s+Ghost+BiFPN model are also more concentrated. The model is therefore capable of the fast and accurate recognition of broken Hongshan buckwheat seeds while meeting the requirements of lightweight applications. Finally, based on the improved YOLOv5s model, a system for recognizing broken Hongshan buckwheat seeds was designed. The results demonstrate that the system can effectively recognize seed features and provide technical support for the intelligent selection of Hongshan buckwheat seeds.

1. Introduction

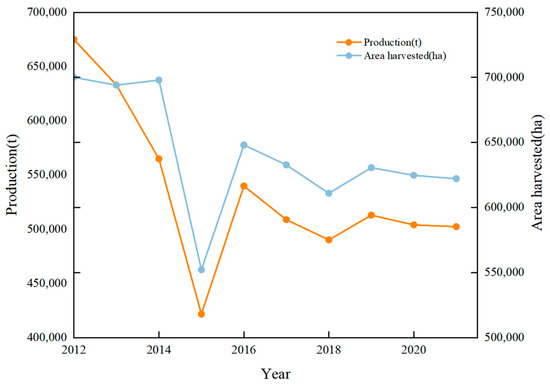

China is the origin of buckwheat and has the richest buckwheat germplasm resources in the world. Buckwheat, as a dual-use crop for food and medicine, has been an important food crop in many regions for thousands of years due to its fast growth, wide adaptability, and rich nutritional value [1]. China is a major buckwheat producer, with cultivation mainly concentrated in the northern and southwestern regions [2]. According to data from the Food and Agriculture Organization of the United Nations, China ranked second in both the harvested area and production volume of buckwheat in the world from 2012 to 2021, as shown in Figure 1. A series of nutritional and health foods have been developed using buckwheat, and its unique secondary metabolites, such as flavonoids, can also be used as high-quality forage to promote the development of animal husbandry [3].

Figure 1.

The trend of changes in the harvested area and production of buckwheat in China from 2012 to 2021.

Hongshan buckwheat is a type of buckwheat. As an important coarse grain, it has strong resistance to disease and drought and is rich in nutrients, making it the main food for people in the major local producing areas. Excellent seed sources are the foundation for a high-quality and stable yield of Hongshan buckwheat, as well as the basis for large-scale cultivation. Currently, the detection of buckwheat seeds mainly relies on manual identification by technicians [4], which is labor-intensive and low in production efficiency, resulting in the slow development of breeding technology for buckwheat. Therefore, designing a fast and accurate algorithm to replace manual detection for use in buckwheat breeding work can help improve buckwheat yield and expand its planting area.

With the rapid development of spectral imaging technology [5], machine vision [6], and artificial intelligence [7], a foundation has been laid for crop breeding research. Ambrose et al. [8] used hyperspectral imaging (HSI) to distinguish vigorous and non-vigorous maize seeds and established a partial least-squares discriminant analysis (PLS-DA) to classify the hyperspectral images. Jia et al. [9] utilized near-infrared spectroscopy to quickly determine the variety of maize-coated seeds and established four maize variety discrimination models using SVM, SIMCA, and BPR. Mukasa et al. [10] used Fourier transform infrared spectroscopy to distinguish between viable and nonviable Hinoki cypress seeds collected within a certain range, and this method achieved the non-destructive detection of seed purity. However, the high cost of spectrometers makes it difficult to widely use them in practical breeding work. To ensure production quality and reduce breeding costs, machine vision and deep learning provide a solution.

In agricultural breeding work, many seeds are not only very small but also similar in appearance, which makes seed detection a challenge and increases the difficulty of breeding work [11]. Tu et al. [12] developed new software called AIseed, based on machine vision technology, that can perform a high-throughput phenotypic analysis of 54 characteristics of individual plant seeds. With the emergence of deep learning algorithms, accuracy in traditional target recognition tasks has improved significantly, and these algorithms have been applied to crop breeding tasks because of their powerful computing and information classification capabilities [13]. Wang et al. [14] collected hyperspectral images of sweet corn seeds and evaluated the classification performance of varieties using four machine learning methods and six deep learning algorithms. The results showed that the deep learning algorithms had better performance. Singh Thakur et al. [15] obtained laser backscatter images of soybean seeds through a designed photon sensor and processed the images based on a convolutional neural network (CNN) and various deep learning models. Among them, InceptionV3 achieved a precision of 98.31% and improved classification efficiency. Luo et al. [16] proposed a detection system for the intelligent classification of 47 weed seeds, comparing six deep learning models, where AlexNet offered strong classification accuracy together with high efficiency, while GoogLeNet achieved the highest classification accuracy. The YOLO model, proposed by Redmon et al. [17], is a regression network that uses a single CNN to predict bounding boxes and class probabilities in a single evaluation, with advanced performance in object detection. Ouf et al. [18] collected 11 legume plant seeds of different colors, sizes, and shapes to increase research diversity and compared the detection performance of Faster RCNN and YOLOv4, showing that YOLOv4 had significantly higher accuracy, detection ability, and speed than Faster RCNN. Zhao et al. [19] proposed a rotation-aware model called YOLO-rot for automatically measuring the quantity and size of seeds.

To address the challenge of recognizing small and broken Hongshan buckwheat seeds, this study proposes a lightweight improved model based on YOLOv5s. The improved YOLOv5s+GhostNet+BiFPN model achieves fast detection speed, meets the requirements for lightweight applications, and enables the rapid and accurate identification of broken Hongshan buckwheat seeds. The main contributions of this study are as follows:

1. The GhostNet module is introduced to reduce the size and parameters of the model. While ensuring precision, this lightweight design improves detection speed, making it suitable for deployment on mobile or embedded devices.

2. The BiFPN module is introduced to realize multi-scale feature fusion in the model. Given the small and similar shapes of Hongshan buckwheat seeds, this module enables the model to focus more on subtle broken features, preventing the loss of target information and improving detection precision.

3. Ablation experiments evaluate the effects of the GhostNet and BiFPN modules on the improved model. The compatibility between different modules is analyzed, verifying the advantages of the YOLOv5s+GhostNet+BiFPN model.

4. A Hongshan buckwheat seed recognition system is designed based on the YOLOv5s+GhostNet+BiFPN model. The system can identify seeds through both image and video detection. In testing, the system demonstrates good accuracy and speed and accurately identifies broken seeds.

2. Materials and Methods

2.1. Data Acquisition Systems and Experiment Samples

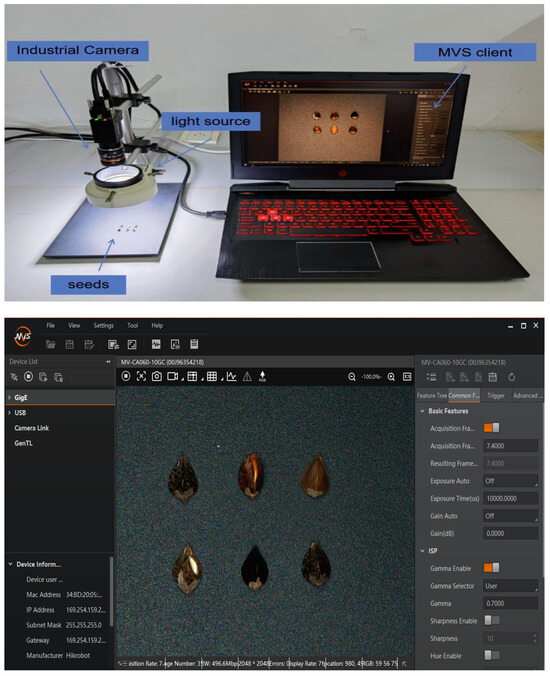

The image acquisition system used in this study includes a computer, a camera, and a light source. The camera is a Hikvision Gigabit Ethernet industrial line scan camera, positioned parallel to the desktop at a height of 14 cm. The copyright of the camera belongs to Hangzhou Hikang Robot Technology Co., Ltd. (China) or its affiliated companies. A circular LED light with adjustable brightness is fixed below the camera on a support at a height of 7 cm and set to constant brightness to ensure uniform lighting for high-quality seed images. Seeds are placed beneath the system, the camera is operated under computer control, and the images are uploaded to the MVS client in real time. The acquisition system, which serves as the supporting system for the camera, is shown in Figure 2.

Figure 2.

Data collection system.

The Hongshan buckwheat seeds used in this study originated from Shuozhou City, Shanxi Province, China. The seed characteristics vary randomly, and not all seeds are complete with clear stripes.

To meet breeding requirements, it is necessary to ensure the purity of Hongshan buckwheat seeds. Therefore, the seeds are classified into four categories, as shown in Figure 3, including complete and with clear texture; complete but with unclear texture; complete without texture; and broken, which were, respectively, represented by WL, WLBMX, WWL, and PS in the experimental results. The uneven distribution of the original buckwheat seeds can lead to errors in training. To ensure the diversity of the dataset and prevent overfitting caused by an imbalanced sample distribution, it is necessary to preprocess the original data. Data augmentation was carried out in this study using the following process, as shown in Figure 4.

Figure 3.

The collected image samples in order from left to right are WL, WLBMX, WWL, and PS.

Figure 4.

Data augmentation process. The first image is the original data image, and the rest are all images after data augmentation.

- Random rotation and flipping: simulating different shooting angles in various recognition environments.

- Brightness adjustment: simulating different lighting conditions in various environments.

- Adding salt-and-pepper noise: training the model to resist random perturbations, which helps improve its generalization performance.
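The three augmentation steps listed above can be illustrated with a minimal pure-Python sketch on a tiny grayscale image stored as a list of lists. The function names, the fixed 90-degree rotation, the brightness factor, and the noise amount are illustrative assumptions, not the settings used in this study.

```python
import random

def flip_horizontal(img):
    """Mirror each row of a 2-D grayscale image (list of lists)."""
    return [row[::-1] for row in img]

def rotate_90(img):
    """Rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*img[::-1])]

def adjust_brightness(img, factor):
    """Scale pixel values by `factor`, clipping to the 0-255 range."""
    return [[max(0, min(255, round(p * factor))) for p in row] for row in img]

def add_salt_pepper(img, amount, rng):
    """Set roughly a fraction `amount` of pixels to 0 (pepper) or 255 (salt)."""
    out = [row[:] for row in img]
    for r in range(len(out)):
        for c in range(len(out[r])):
            if rng.random() < amount:
                out[r][c] = rng.choice((0, 255))
    return out

# Hypothetical 2 x 3 image used only for illustration.
image = [[10, 20, 30],
         [40, 50, 60]]
rng = random.Random(0)  # fixed seed so the noise is reproducible

flipped = flip_horizontal(image)
rotated = rotate_90(image)
brighter = adjust_brightness(image, 1.5)
noisy = add_salt_pepper(image, 0.05, rng)
```

In practice, the bounding-box annotations must be transformed together with the image whenever a geometric operation such as rotation or flipping is applied.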

Before training, a manual annotation method was adopted, and the labeling tool LabelImg was used to mark the position of each object on the image for each category, and their category was labeled. After data augmentation, there were a total of 4000 images of buckwheat data, divided into a training set, a validation set, and a test set in an 8:1:1 ratio. The specific quantities in the dataset are shown in Table 1.
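As a quick check of the 8:1:1 split, the sketch below shuffles a list of image names and divides it into three subsets. The file names, the random seed, and the helper `split_dataset` are hypothetical; only the total of 4000 images and the ratio come from the text.

```python
import random

def split_dataset(items, ratios=(8, 1, 1), seed=0):
    """Shuffle `items` and split them into train/val/test by integer ratios."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    total = sum(ratios)
    n_train = len(shuffled) * ratios[0] // total
    n_val = len(shuffled) * ratios[1] // total
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # remainder goes to the test set
    return train, val, test

# Hypothetical file names standing in for the 4000 augmented images.
names = [f"seed_{i:04d}.jpg" for i in range(4000)]
train, val, test = split_dataset(names)
print(len(train), len(val), len(test))  # 3200 400 400
```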

Table 1.

Number of seeds in each category.

2.2. Methods

The YOLOv5 network model has a small volume and a fast training speed [20]. Based on the differences in network depth and feature map width, it can be divided into YOLOv5s, YOLOv5m, YOLOv5l, and YOLOv5x. In order to meet the requirements of lightweight models, reduce storage space, and improve recognition speed, this study used the YOLOv5s detection model with lower complexity. As the Hongshan buckwheat seeds are small and have similar characteristics between broken and intact seeds, the redundant feature maps may increase the difficulty of recognition. Therefore, improvements were made to the backbone and neck parts of the YOLOv5s model.

2.2.1. YOLOv5s Network Structure

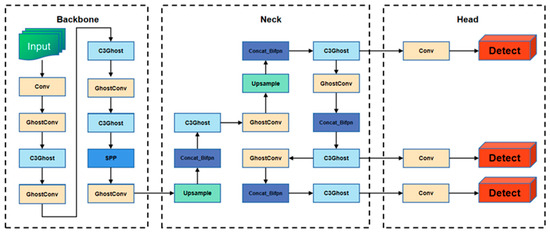

YOLOv5s is mainly composed of a backbone, neck, and head, and the network structure is shown in Figure 5.

Figure 5.

The network structure of YOLOv5. (a) The Focus structure slices the image into smaller feature maps. (b) The SPP module performs pooling operations of different sizes on input feature maps. (c,d) The CSP1_x is used to represent the C3 layer in the backbone, while the CSP2_x is used to represent the C3 layer in the neck.

The backbone is the main network of YOLOv5 [21], responsible for extracting features from input images and converting an original input image into multiple feature maps to complete subsequent object detection tasks. Its main structure includes the Focus structure, Conv module, C3 module, and SPP module. The key to the Focus structure is slicing the image into smaller feature maps, as shown in Figure 5a. The Conv module is a basic module commonly used in convolutional neural networks, mainly composed of convolutional layers, BN layers, and activation functions. The BN layer is added after the convolutional layer for normalization, which accelerates the training process, improves the model’s generalization ability, and reduces the model’s dependence on initialization. The activation function is a type of nonlinear function that introduces nonlinear transformation capability to neural networks and can adapt to different types of data distributions.
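The slicing step of the Focus structure can be sketched on a single-channel map in plain Python. This is an illustrative sketch that assumes even height and width and the slice ordering used in common YOLOv5 implementations; the function name `focus_slice` is hypothetical.

```python
def focus_slice(img):
    """Split an H x W map (even H and W) into four H/2 x W/2 maps
    by taking every second pixel, as in a Focus-style slice."""
    even_rows_even_cols = [row[0::2] for row in img[0::2]]
    odd_rows_even_cols = [row[0::2] for row in img[1::2]]
    even_rows_odd_cols = [row[1::2] for row in img[0::2]]
    odd_rows_odd_cols = [row[1::2] for row in img[1::2]]
    # The four slices are stacked along the channel axis, so the spatial
    # size halves while the channel count grows fourfold.
    return [even_rows_even_cols, odd_rows_even_cols,
            even_rows_odd_cols, odd_rows_odd_cols]

# Hypothetical 4 x 4 single-channel input.
image = [[0, 1, 2, 3],
         [4, 5, 6, 7],
         [8, 9, 10, 11],
         [12, 13, 14, 15]]
channels = focus_slice(image)
```

No pixel is discarded: the information is moved from the spatial dimensions into the channel dimension before the first convolution.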

The C3 layer, also known as the Cross-Stage Partial (CSP) layer, mainly increases the depth and receptive field of the network, making it more attentive to global information about objects and improving its feature extraction capability. CSP1_x is used to represent the C3 layer in the backbone, while CSP2_x is used to represent the C3 layer in the neck. Compared to CSP1_x, CSP2_x replaces the Resunit with 2× CBL modules. The CSP structure divides the input into two branches, one passing through a CBL module and several residual structures to perform convolution operations, while the other performs a direct convolution operation. The two branches are then concatenated, passed through the BN layer, and finally passed through another CBL module, as shown in Figure 5c,d.

The SPP module [22] is a pooling module commonly used in convolutional neural networks to achieve the spatial and positional invariance of input data, thus improving the network’s recognition ability. Its main idea is to apply different sizes of receptive fields to the same image to capture features of different scales. In the SPP module, the input feature map is subjected to pooling of different sizes to obtain a set of feature maps of different sizes. These feature maps are concatenated together, and their dimensionality is reduced through a fully connected layer to obtain a fixed-size feature vector, as shown in Figure 5b.
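The pooling-and-concatenation idea can be sketched in plain Python. The kernel sizes (5, 9, 13), the stride of 1, and the border handling that keeps every pooled map the same size as the input are assumptions borrowed from common YOLOv5 configurations rather than details given here, and the dimensionality-reduction step after concatenation is omitted.

```python
def max_pool_same(fmap, k):
    """Stride-1 max pooling with a k x k window; border windows are clipped
    so the output keeps the input's height and width."""
    h, w = len(fmap), len(fmap[0])
    r = k // 2
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            window = [fmap[y][x]
                      for y in range(max(0, i - r), min(h, i + r + 1))
                      for x in range(max(0, j - r), min(w, j + r + 1))]
            row.append(max(window))
        out.append(row)
    return out

def spp(fmap, kernel_sizes=(5, 9, 13)):
    """Return the input map plus one pooled map per kernel size,
    stacked as a list of channels (the concatenation step)."""
    return [fmap] + [max_pool_same(fmap, k) for k in kernel_sizes]

# Hypothetical 3 x 3 single-channel feature map.
feature_map = [[1, 2, 3],
               [4, 5, 6],
               [7, 8, 9]]
pooled_3 = max_pool_same(feature_map, 3)
stacked = spp(feature_map)
```

Larger windows summarize a wider neighborhood, so the stacked output mixes local and near-global context at every position.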

The neck module is responsible for the multi-scale feature fusion of feature maps to improve the accuracy of object detection. In YOLOv5, the FPN+PAN structure is used to fuse feature maps from different levels through upsampling and downsampling operations to generate a multi-scale feature pyramid. The top–down part mainly merges features of different levels by upsampling and fusing them with coarser feature maps, while the bottom–up part uses a convolutional layer to merge feature maps from different levels, filling and reinforcing localization information, as shown in Figure 6.

Figure 6.

Figure (I) shows that FPN first generates feature maps of all levels by a bottom-up path, and then generates feature pyramids by a top-down path. The FPN structure merges features from P6 to P3 through a top–down path. Figure (II) shows that PANet adds a bottom–up merging method from N3 to N6 on the basis of the FPN structure.

The head is responsible for the final regression prediction. In YOLOv5, the main component of the head is three Detect detectors, which perform object detection on feature maps of different scales using grid-based anchors, as shown in Figure 5.
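As a rough illustration of grid-based detection at three scales, the sketch below computes the output grid size and channel count of each detector. The 640 × 640 input size, the strides of 8, 16, and 32, and the three anchors per grid cell are assumed values typical of YOLOv5 and are not stated in this paper; the four classes correspond to WL, WLBMX, WWL, and PS.

```python
def detect_output_shapes(img_size, num_classes, strides=(8, 16, 32), anchors_per_scale=3):
    """Return (grid_h, grid_w, channels) for each detection scale."""
    # Each anchor predicts 4 box terms, 1 objectness score, and the class scores.
    channels = anchors_per_scale * (5 + num_classes)
    return [(img_size // s, img_size // s, channels) for s in strides]

shapes = detect_output_shapes(img_size=640, num_classes=4)
print(shapes)  # [(80, 80, 27), (40, 40, 27), (20, 20, 27)]
```

The finest grid is the one best suited to small targets such as individual seeds, because each of its cells covers the smallest image region.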

2.2.2. Improved Model

A structure diagram of the improved YOLOv5 model is shown in Figure 7. The model adopts the lightweight GhostNet algorithm and introduces the weighted bidirectional feature pyramid network module (BiFPN) to achieve the automatic recognition of Hongshan buckwheat seeds.

Figure 7.

Improved network structure.

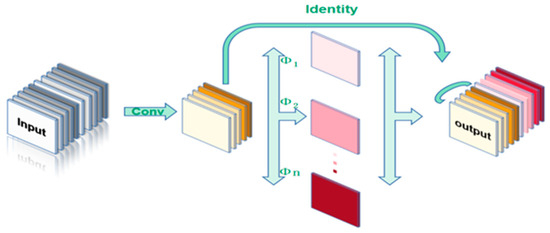

GhostNet

To reduce the size and parameter count of the model, improve running speed, and effectively save computational resources, this study uses GhostNet, a lightweight neural network, to replace the YOLOv5s’ backbone network and perform simple linear operations on the features to generate more similar feature maps. This greatly reduces the model’s parameters and computational complexity, avoiding the redundancy of Hongshan buckwheat seed feature maps and speeding up the automatic identification of damaged seeds. GhostNet is constructed by stacking Ghost modules to obtain Ghost bottlenecks [23]. It replaces traditional convolutions with ghost modules. First, a regular 1 × 1 convolution is used to compress the number of channels in the input image, followed by a depthwise separable convolution to obtain more feature maps. Then, different feature maps are combined into a new output by connecting them together, as shown in Figure 8.

Figure 8.

The Ghost module can be divided into three parts. The first part is a normal convolution operation. The second part is a grouped convolution operation, which generates the portion of the feature maps shown in the lower part of the output feature map. The third part is an identity mapping that concatenates, along the channel dimension, the feature maps obtained from the first part with those obtained from the grouped convolution in the second part. Φn is a linear transformation in the module.

In GhostNet, Φn is a linear transformation. The transformation method of Φn is not fixed and can be a 3 × 3 linear kernel or a 5 × 5 linear kernel. Additionally, different sizes of convolution kernels can be used for linear transformation operations. Usually, to consider the inference situation of the CPU or GPU, all 3 × 3 or all 5 × 5 convolution kernels are used. The size of the convolution kernel is determined based on the recognized object, and while not changing the output feature map’s size, it can effectively reduce the computational complexity and improve the running speed and accuracy, making it highly versatile.
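To make the savings concrete, the sketch below compares the multiply-accumulate count of an ordinary convolution with that of a Ghost module, following the cost analysis in the GhostNet paper [23]. The layer dimensions and the ratio `s = 2` are hypothetical values chosen for illustration, and the function names are not from the original work.

```python
def conv_cost(c_in, n_out, k, h_out, w_out):
    """Multiply-accumulate count of an ordinary k x k convolution."""
    return n_out * h_out * w_out * c_in * k * k

def ghost_cost(c_in, n_out, k, d, s, h_out, w_out):
    """Cost of a Ghost module: a primary convolution producing n_out / s
    intrinsic maps, plus (s - 1) cheap d x d linear operations per intrinsic map."""
    intrinsic = n_out // s
    primary = intrinsic * h_out * w_out * c_in * k * k
    cheap = (s - 1) * intrinsic * h_out * w_out * d * d
    return primary + cheap

# Hypothetical layer: 64 input channels, 128 output channels, 40 x 40 output map.
ordinary = conv_cost(c_in=64, n_out=128, k=3, h_out=40, w_out=40)
ghost = ghost_cost(c_in=64, n_out=128, k=3, d=3, s=2, h_out=40, w_out=40)
ratio = ordinary / ghost  # close to s when c_in is much larger than s
```

When the cheap kernel size `d` equals `k`, the ratio simplifies to `s * c_in / (s + c_in - 1)`, which approaches `s` for wide layers; this is why the module roughly halves the computation when `s = 2`.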

The Ghost bottleneck is built on the advantages of the Ghost module and consists of two stacked Ghost modules. When the stride is 1, the first Ghost module acts as an expansion layer that increases the number of channels, while the second Ghost module reduces the number of channels to match the residual connection. The residual connects the input of the first Ghost module to the output of the second, and batch normalization and ReLU non-linearity are applied after each layer. When the stride is 2, the main body consists of two Ghost modules and a depthwise convolutional layer. The first Ghost module increases the number of channels, and the depthwise convolution then downsamples in the high-dimensional space to compress the width and height of the feature map. The second Ghost module compresses the number of channels. On the residual branch, the input feature map is first compressed in width and height with a depthwise convolution, and the channels are then adjusted with a 1 × 1 convolution so that its size matches the output of the main branch, as shown in Figure 9.

Figure 9.

Structure of the Ghost bottleneck. ReLU is an activation function.

The Weighted Bidirectional Feature Pyramid Module (BiFPN)

Hongshan buckwheat seeds are small, similar in shape, and dark in color, and their stripe information is difficult to recognize. As a result, target information is difficult to extract and may be lost, which hinders the automatic recognition and detection of Hongshan buckwheat seeds and prevents the breeding requirements for seed purity from being met. The weighted bidirectional feature pyramid network module efficiently implements bidirectional cross-scale connections and weighted feature map fusion, improving the recognition accuracy for small and damaged seeds. In YOLOv5s, the neck adopts the FPN+PAN structure, whose relatively simple fusion extracts complex and small target features incompletely, resulting in lower recognition accuracy for damaged Hongshan buckwheat seeds.

Previous feature fusion methods, such as the FPN structure, mostly treat all input features equally [24]. However, different features have different resolutions, and their contributions to feature fusion are unequal. The BiFPN module therefore adds a learnable weight to each input during feature fusion, letting the network learn the importance of each input and achieve higher-level feature fusion. This helps to identify and distinguish targets with similar features, which is in line with the requirements of this study, as shown in Figure 10.

Figure 10.

BiFPN’s bidirectional cross-scale connections.
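The weighted fusion used by BiFPN can be sketched as follows. This minimal example assumes the fast normalized fusion rule described for BiFPN in [24], in which each input is scaled by a non-negative weight divided by the sum of all weights plus a small constant; the feature values, the weights, and the function name are hypothetical, and in a real network the weights are learned during training.

```python
def fast_normalized_fusion(features, weights, eps=1e-4):
    """Fuse same-shaped feature vectors with non-negative, normalized weights."""
    clipped = [max(0.0, w) for w in weights]  # ReLU keeps every weight >= 0
    total = sum(clipped) + eps                # eps avoids division by zero
    fused = [0.0] * len(features[0])
    for feat, w in zip(features, clipped):
        for i, value in enumerate(feat):
            fused[i] += (w / total) * value
    return fused

# Two hypothetical feature vectors already resized to the same resolution.
p_low = [1.0, 2.0]
p_high = [3.0, 4.0]
equal = fast_normalized_fusion([p_low, p_high], [1.0, 1.0])
suppressed = fast_normalized_fusion([p_low, p_high], [1.0, -5.0])
```

With equal weights the result is close to a plain average, while a negative weight is clipped to zero so that the corresponding input no longer contributes.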

2.3. Model Training and Result Analysis

2.3.1. Model Training

The environment for these experiments is a laptop computer with an AMD Ryzen R9 5900HX CPU, 32 GB of memory, and an RTX3080 GPU. The operating system is Windows 11, the CUDA version is 11.6, and the development language used is Python.

2.3.2. Evaluation Metrics

The value of the IoU can be understood as the degree of overlap between the predicted box generated by the system and the marked box in the original image. If the calculated IoU is greater than the preset threshold, the target is considered correctly detected; otherwise, it is considered not correctly detected. The detection results can be classified into four categories: true positive (TP), false positive (FP), false negative (FN), and true negative (TN).
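The IoU computation and the threshold decision can be sketched in a few lines of Python. The corner-based box format `(x1, y1, x2, y2)`, the default threshold of 0.5, and the function names are assumptions made for illustration.

```python
def iou(box_a, box_b):
    """IoU of two boxes given as (x1, y1, x2, y2) with x1 < x2 and y1 < y2."""
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    # Width and height of the overlap are zero when the boxes do not intersect.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def is_true_positive(pred_box, gt_box, threshold=0.5):
    """A prediction counts as correct when its IoU exceeds the threshold."""
    return iou(pred_box, gt_box) > threshold

overlap = iou((0, 0, 2, 2), (1, 1, 3, 3))  # intersection 1, union 7
```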

To evaluate the impact of the improved YOLOv5s model on the experimental results, precision, recall, and the mean average precision (mAP) are introduced to assess the performance of object detection. Detection speed and model volume are assessed using the number of floating-point operations (FLOPs) and the model size. Precision is the percentage of correctly predicted positive samples out of all samples predicted as positive, while recall is the ratio of correctly identified objects to the total number of objects. The formulas are defined as follows:
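Assuming the standard definitions, which match the verbal descriptions above, precision and recall can be written in terms of the TP, FP, and FN counts as:

```latex
\begin{align}
\text{Precision} &= \frac{TP}{TP + FP} \\
\text{Recall} &= \frac{TP}{TP + FN}
\end{align}
```

For example, 90 correct detections with 10 false detections and 30 missed objects would give a precision of 90/100 = 0.90 and a recall of 90/120 = 0.75; these counts are hypothetical and serve only to illustrate the arithmetic.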

The mean average precision (mAP) is the mean of the average precision (AP) over all categories. mAP@0.5 refers to the mAP value when the IoU threshold is set to 0.5. mAP@0.5:0.95 refers to the mAP value averaged over IoU thresholds ranging from 0.5 to 0.95. The formulas are defined as follows:
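Under the usual definitions, AP is the area under the precision-recall curve of one category and mAP is its mean over the N categories (N = 4 here). The step of 0.05 between IoU thresholds in the last expression is an assumption based on the common COCO-style convention, since only the range is given in the text:

```latex
\begin{align}
AP &= \int_{0}^{1} P(R)\, dR \\
mAP &= \frac{1}{N} \sum_{i=1}^{N} AP_i \\
mAP@0.5{:}0.95 &= \frac{1}{10} \sum_{t \in \{0.50,\, 0.55,\, \ldots,\, 0.95\}} mAP@t
\end{align}
```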

FLOPs represent the number of floating-point operations required by the model and are used to measure its computational complexity.

Confidence is the score that the model assigns to each detection, indicating how certain it is about the predicted object.

The model size is used to evaluate the storage space occupied by a trained model in a system. The smaller the size of the algorithm model, the easier it is to deploy on mobile platforms and apply in practical scenarios.

3. Results

3.1. Network Model Comparison

To validate the experimental results of the improved YOLOv5s model, ablation experiments were conducted using metrics such as precision, recall, and mAP, and the detection time was also compared. In Table 2, “√” means that the corresponding method is used to improve the model, and “-” means that it is not used. Both the added GhostNet and BiFPN modules contribute to the performance of the model. The original model exhibits relatively low precision in identifying broken and blurry buckwheat seeds. Moreover, there is a significant disparity in recognition precision between different categories, with a higher precision in identifying seeds without textures but a lower recall rate.

Table 2.

Experimental results of different networks.

For broken buckwheat seeds, the experimental data of the YOLOv5s+GhostNet+BiFPN model outperform other models. It enhances the focus on broken seeds with the highest detection precision of 99.7% and the fastest detection speed of 0.114 s per frame. Comparing the fusion of the GhostNet and BiFPN models with the YOLOv5s model, there is an improvement of 11.7% in precision. In comparison to YOLOv5s+GhostNet, there is a 1.3% increase in precision and a 0.5% improvement in mAP@.5:.95. Compared to YOLOv5s+BiFPN, there is a 0.7% increase in precision and a 0.7% improvement in mAP@.5:.95. These ablation experiments demonstrate that the fusion of the GhostNet and BiFPN models enhances the recognition ability of the model for broken Hongshan buckwheat seeds.

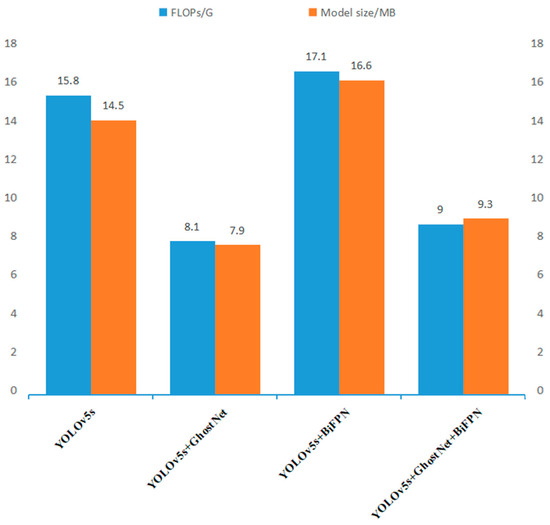

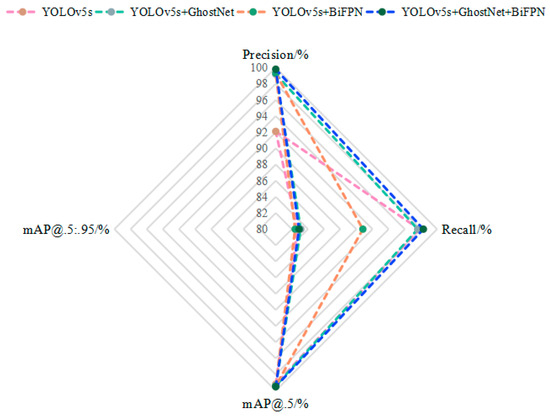

3.2. Lightweight Comparison

To validate how lightweight the models are, the FLOPs and model sizes of the different models were compared, as shown in Figure 11. Compared to YOLOv5s, YOLOv5s+GhostNet+BiFPN reduces the FLOPs by 6.8 G and decreases the model size by 5.2 MB. Compared to YOLOv5s+BiFPN, it lowers the FLOPs by 8.1 G and decreases the model size by 7.3 MB. Compared to YOLOv5s+GhostNet, the values increase only slightly. The training results for all the seed data from the three models are shown in Figure 12. The YOLOv5s+GhostNet+BiFPN model demonstrates better precision, recall rate, and mAP compared to the other models, meeting the requirements of lightweight models while ensuring higher detection precision in seed detection.

Figure 11.

FLOPs and model sizes of different networks.

Figure 12.

Performance comparison of different detection algorithms.

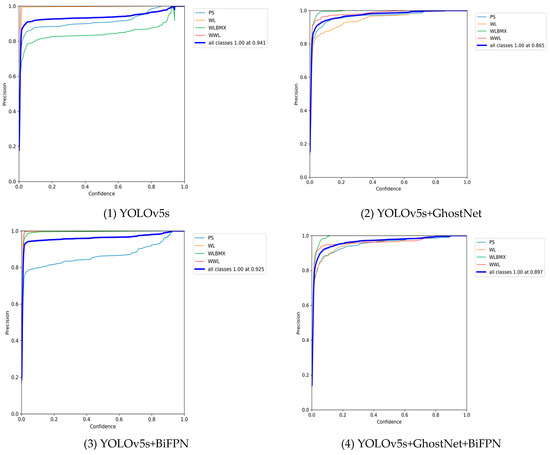

3.3. Confidence Comparison

To have a clearer view of the precision of detected objects across different classes, a confidence relationship graph for four classes is shown in Figure 13. From the graph, it can be observed that the YOLOv5s+GhostNet+BiFPN model has more concentrated and denser confidence scores, performing the best among the four classes. Compared to the original model, all three models show improvements in confidence scores. The fusion of the Ghost module and the BiFPN module can improve the performance of identifying broken Hongshan buckwheat seeds through mutual stacking and complementation. Therefore, we conclude that there is interaction between the fused modules, which contributes to the higher stability and reliability of the YOLOv5s+GhostNet+BiFPN model.

Figure 13.

The precision and confidence relationship for the four categories of Hongshan buckwheat seeds.

4. Identification System

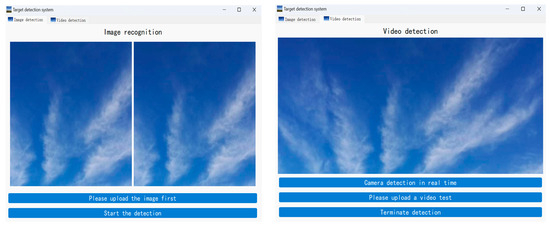

Based on the comparison and data analyses of multiple recognition models, combined with the experimental results and theoretical knowledge of the previous sections, a broken Hongshan buckwheat seed recognition system based on the improved YOLOv5s model was designed and built. The system uses a PyQt5 interface; before the interface is created, the model path is changed so that it points to the trained model. The interface design includes two parts: image detection and video detection. Image detection requires uploading photos of buckwheat seeds that have been taken and then clicking on “Start Detection” to identify them. Video detection includes real-time camera detection and uploaded video detection. Real-time camera detection can automatically recognize the seeds by calling the computer’s camera, while uploaded video detection can detect recorded seed videos. The composition and workflow of the system are shown in Figure 14.

Figure 14.

System composition and workflow.

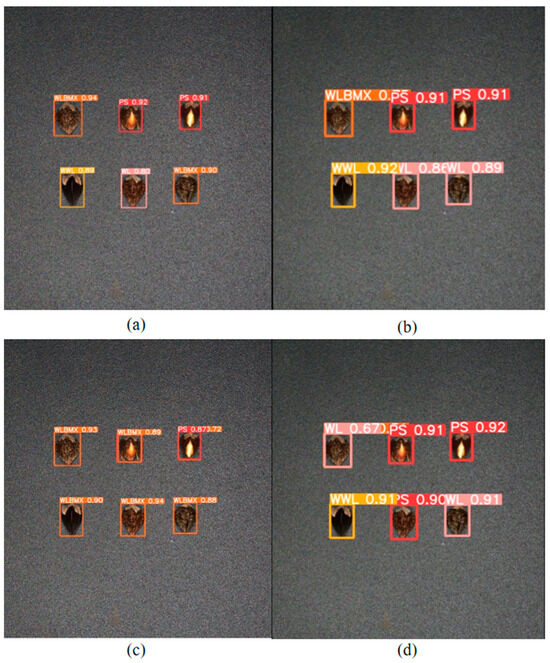

The interface of the recognition system is shown in Figure 15. To evaluate the detection performance of the YOLOv5s+GhostNet+BiFPN model on broken Hongshan buckwheat seeds, we compared the image and video detection results of the YOLOv5s and YOLOv5s+GhostNet+BiFPN models using the validation set. The detection results are shown in Figure 16, where Figure 16a,b represent the image and video detection results of the YOLOv5s+GhostNet+BiFPN model, and Figure 16c,d represent the image and video detection results of the YOLOv5s model. According to the results, it can be observed that the YOLOv5s model has relatively lower precision and is prone to recognition errors. On the other hand, the YOLOv5s+GhostNet+BiFPN model exhibits superior detection capability and higher precision. Through experimental testing, we can conclude that the core recognition algorithm model of this system, the optimized YOLOv5s model, has a stronger advantage in identifying Hongshan buckwheat seeds.

Figure 15.

Image detection interface and video detection interface.

Figure 16.

System identification result.

5. Discussion

Good seeds are crucial for crop breeding. Damaged seeds of Hongshan buckwheat can affect the germination rate and cause uneven growth or even a seedling shortage, thus impacting the quality of crop breeding. To address these issues, this paper proposes the YOLOv5s+GhostNet+BiFPN model, which achieves the accurate identification of broken Hongshan buckwheat seeds, laying a foundation for Hongshan buckwheat breeding work. Liu et al. [25] improved the YOLOv3-tiny model to detect broken corn on the conveyor belt of a corn harvester, providing real-time detection for mechanized harvesting. Xiang et al. [26] proposed the YOLOX-based YOLO POD model for the rapid and accurate counting of soybean pods, enabling precise yield estimation. In the same spirit, the method proposed in this paper suggests the following directions for further research:

1. The improved model can be deployed on mobile devices such as the Jetson platform, enabling the automatic detection and screening of damaged seeds during Hongshan buckwheat seed production and planting processes.

2. The YOLOv5s+GhostNet+BiFPN model can provide a research foundation for predicting yield. By eliminating damaged seeds and analyzing the relationship between seed quality and yield, yield prediction and analysis can be conducted.

3. With the development trend of agricultural mechanization and automation, the recognition system proposed in this paper can be applied to agricultural machinery such as seeders, enabling automatic screening and automated planting.

6. Conclusions

This paper focuses on the recognition of broken Hongshan buckwheat seeds based on an improved YOLOv5s algorithm. This algorithm effectively identifies different texture features and broken buckwheat, making it suitable for small object detection. The YOLOv5s+Ghost+BiFPN model can quickly and accurately recognize broken Hongshan buckwheat seeds while carrying out lightweight operations, with a precision of 99.7%, an mAP@.5 of 99.5%, and an mAP@.5:.95 of 75.7%. The model size is 9.3 MB. Based on the results obtained from this experiment, we can draw the following conclusions:

- The YOLOv5s+Ghost+BiFPN model outperforms the YOLOv5s model, the YOLOv5s+Ghost model, and the YOLOv5s+BiFPN model. This is evidenced by the precision values, as it can better recognize broken seed features and improve the robustness of the detection algorithm at multiple scales.

- The YOLOv5s+BiFPN model and the YOLOv5s+Ghost model have higher detection precision than the YOLOv5s model. Specifically, in the recognition of WLBMX, the precision improvement of the YOLOv5s+BiFPN model and the YOLOv5s+Ghost model is significant.

- The YOLOv5s+BiFPN model and the YOLOv5s+Ghost model have similar recognition precision, but the YOLOv5s+Ghost model has a smaller size, reduced by 7.7 MB, and a decrease of 9 G in FLOPs. Therefore, the YOLOv5s+Ghost model is more suitable for lightweight requirements.

- Through comparative verification, all three improvements contribute to the improvement of the model’s performance. There is a certain degree of mutual compatibility between the three models.

- A Hongshan buckwheat seed recognition system was designed based on the improved YOLOv5s model, and the results showed that the system can effectively recognize seed features. We hope this system can provide technical support for improving the quality and yield of Hongshan buckwheat.
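The precision and mAP figures quoted in these conclusions follow the usual object-detection conventions. As a minimal sketch, assuming boxes in (x1, y1, x2, y2) format, the quantities behind these metrics can be written as follows. The function names are illustrative and are not taken from the authors' code.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    # Corners of the overlapping rectangle (may be empty).
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision(true_positives, false_positives):
    """Fraction of predicted boxes that are correct detections."""
    total = true_positives + false_positives
    return true_positives / total if total > 0 else 0.0


def map_iou_thresholds():
    """IoU thresholds averaged over in mAP@.5:.95 (0.50 to 0.95, step 0.05)."""
    return [round(0.5 + 0.05 * i, 2) for i in range(10)]
```

Under mAP@.5, a prediction counts as a true positive when its IoU with a ground-truth box of the same class is at least 0.5, whereas mAP@.5:.95 averages the result over the ten stricter thresholds returned by `map_iou_thresholds()`. This stricter averaging is why the second figure is typically lower than the first.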

Because it is both lightweight and highly precise, the YOLOv5s+Ghost+BiFPN model can effectively address the problem of broken seed recognition. It also provides a new method for intelligent object recognition and supports the modernization of agriculture.

Author Contributions

Conceptualization, X.L.; methodology, X.L. and W.N.; writing—original draft, X.L.; writing—review & editing, W.N., Y.Y., S.M., J.H., Y.W., R.C. and H.S.; investigation, Y.Y.; formal analysis, S.M.; software, J.H. and Y.W.; validation, R.C.; supervision, H.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Key Research and Development Program of China (Project No. 2021YFD1600602-09).

Data Availability Statement

The data are available from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).