A Prediction Method of Seedling Transplanting Time with DCNN-LSTM Based on the Attention Mechanism

Abstract

1. Introduction

2. Materials and Methods

2.1. General Situation of the Test Area

2.2. Experiment Design

3. Data Collection and Processing

3.1. Data Collection

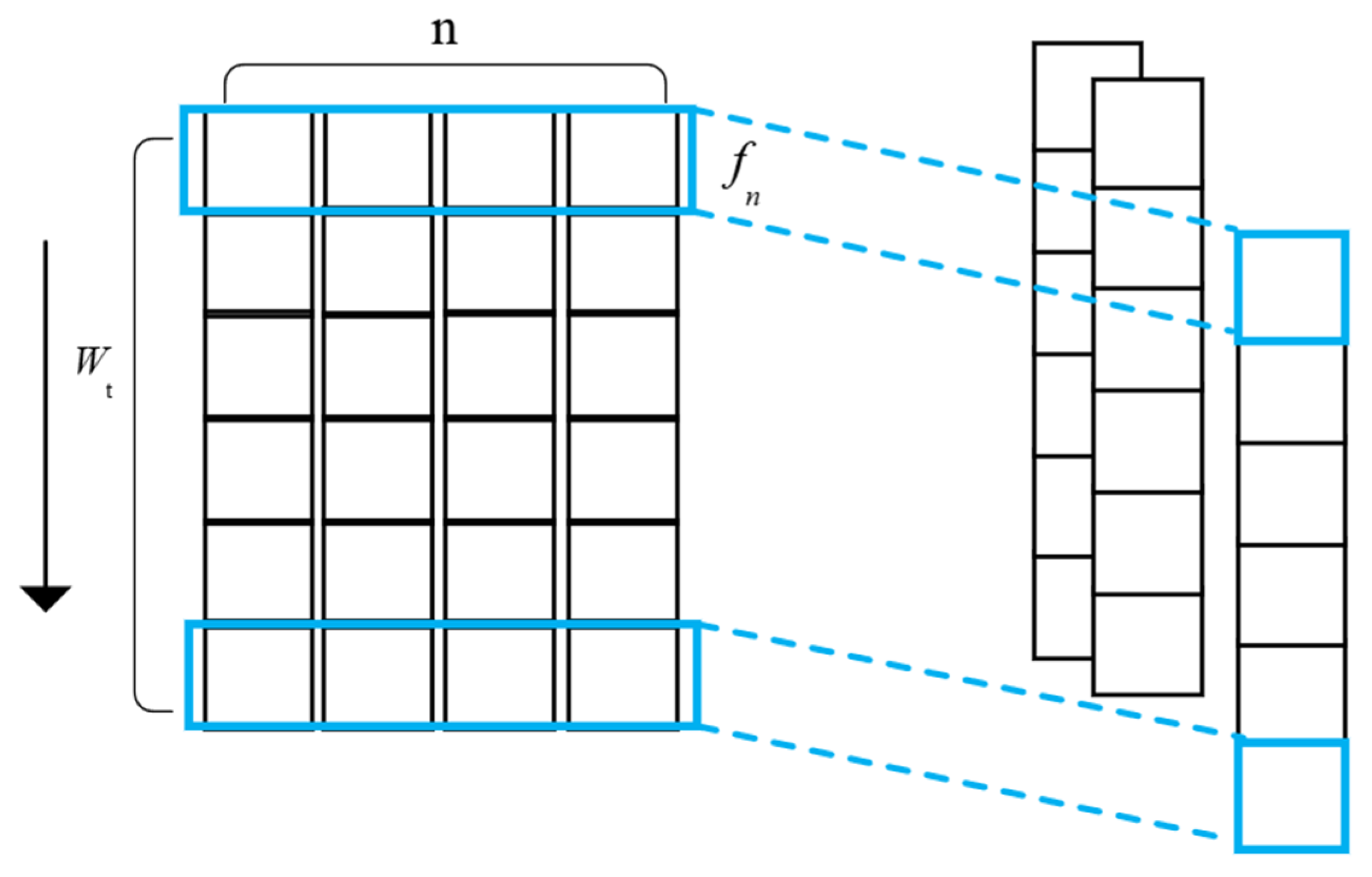

3.2. Data Preprocessing

4. Predictive Model Building

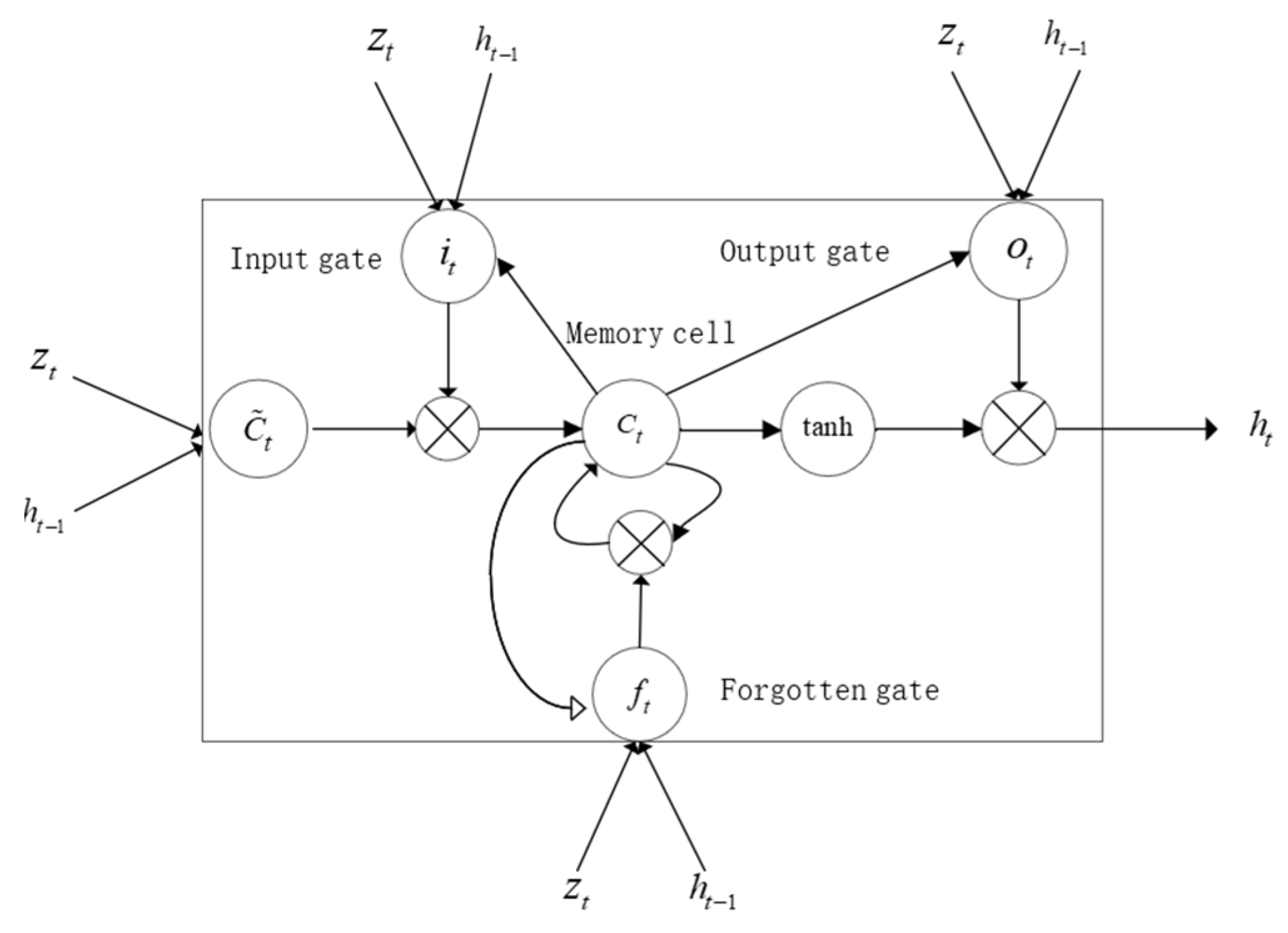

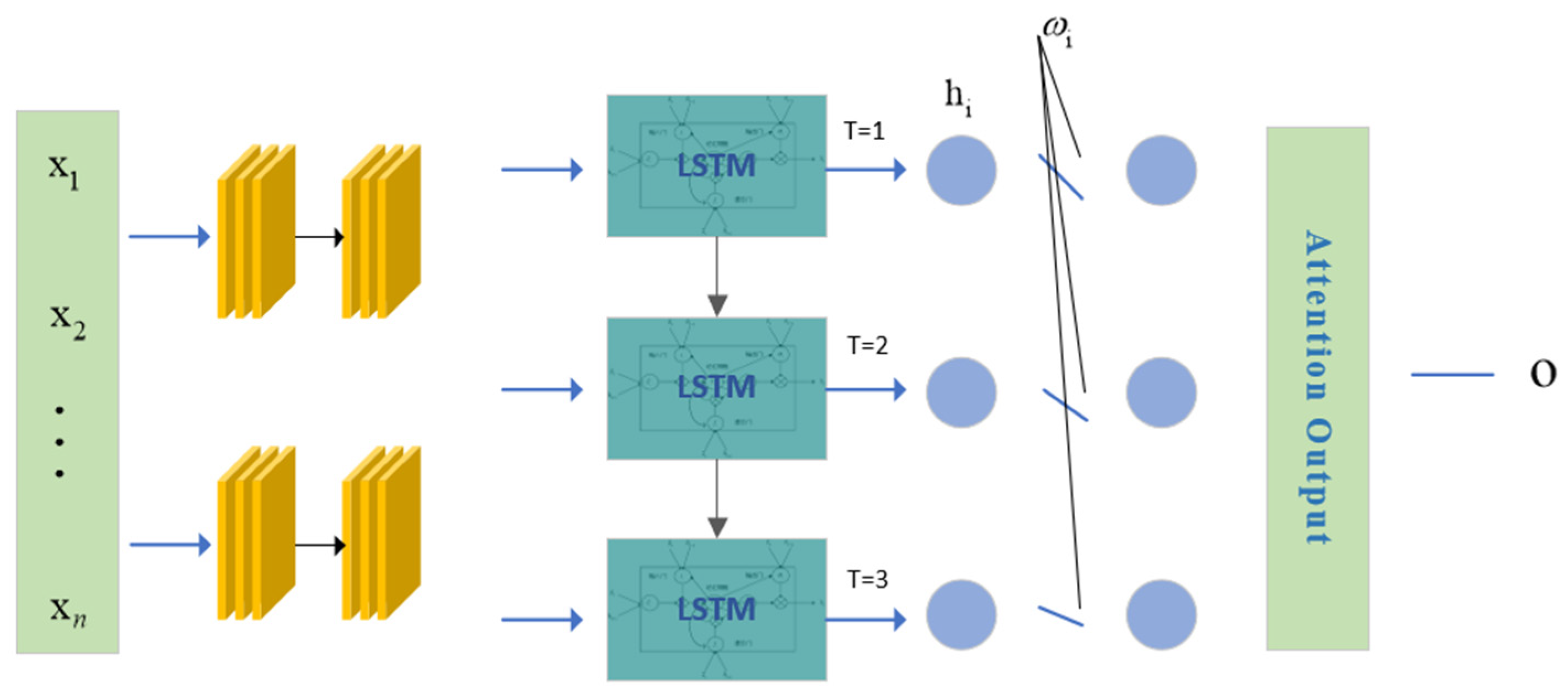

4.1. DCNN-LSTM

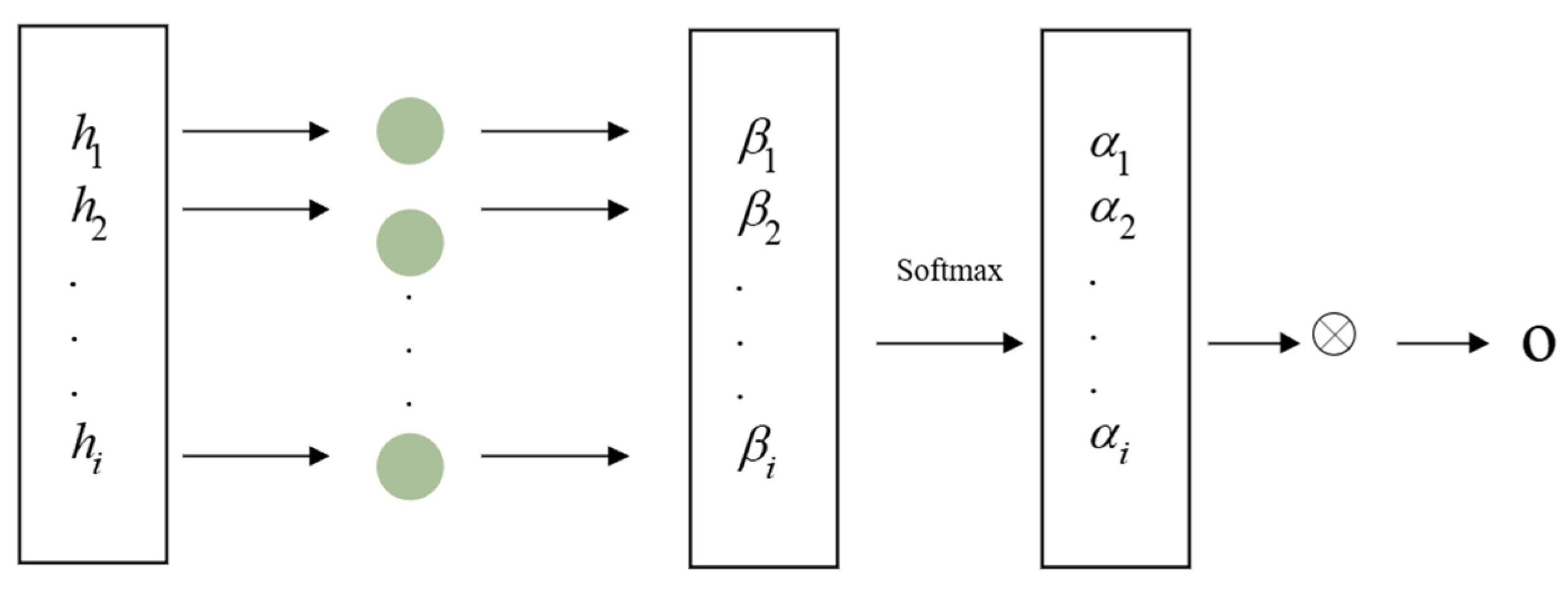

4.2. Attention Mechanism

4.3. Attention-Based DCNN-LSTM Prediction Framework

5. Experimental Results and Analysis

5.1. Experimental Setup

5.2. Prediction Result

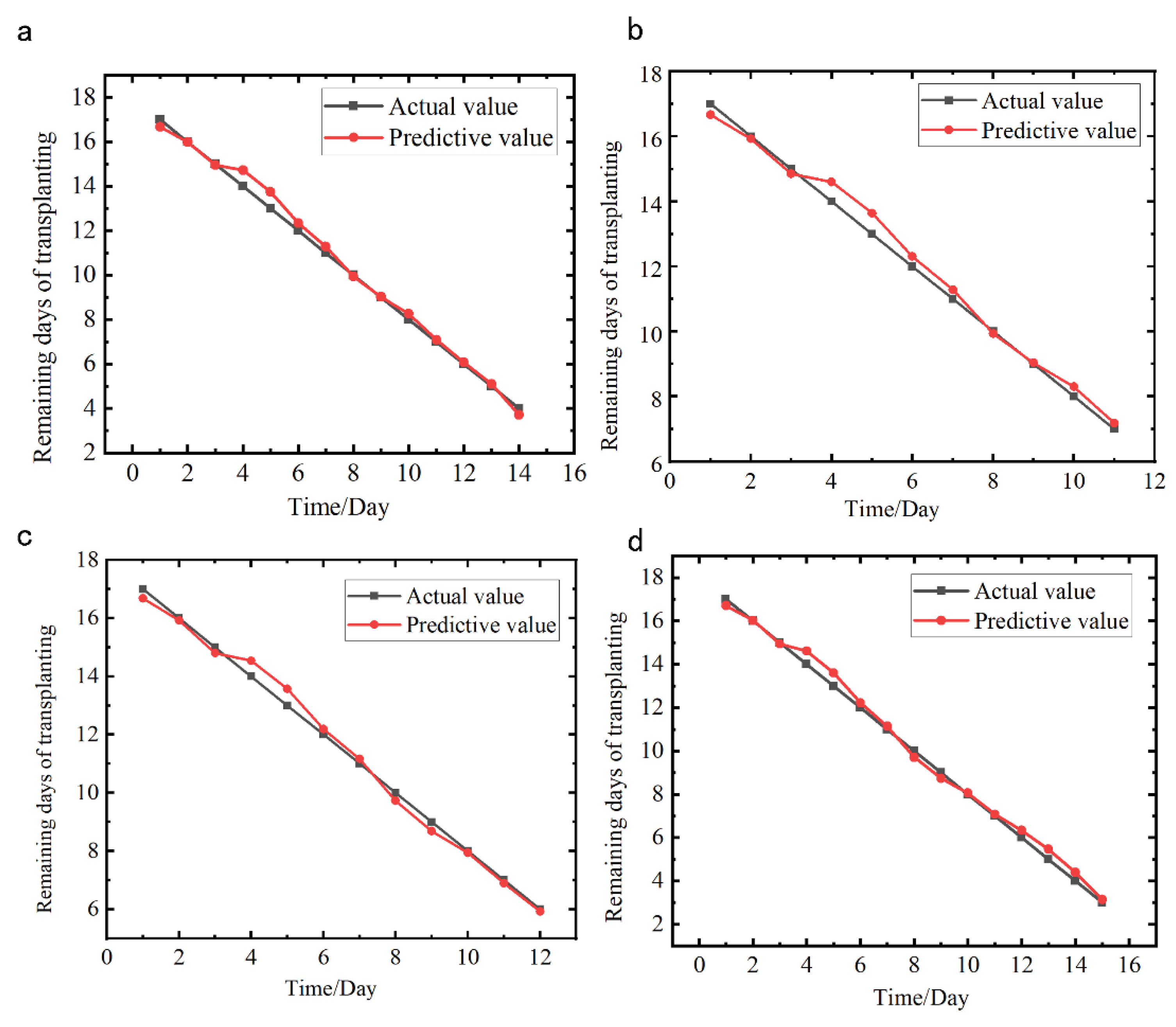

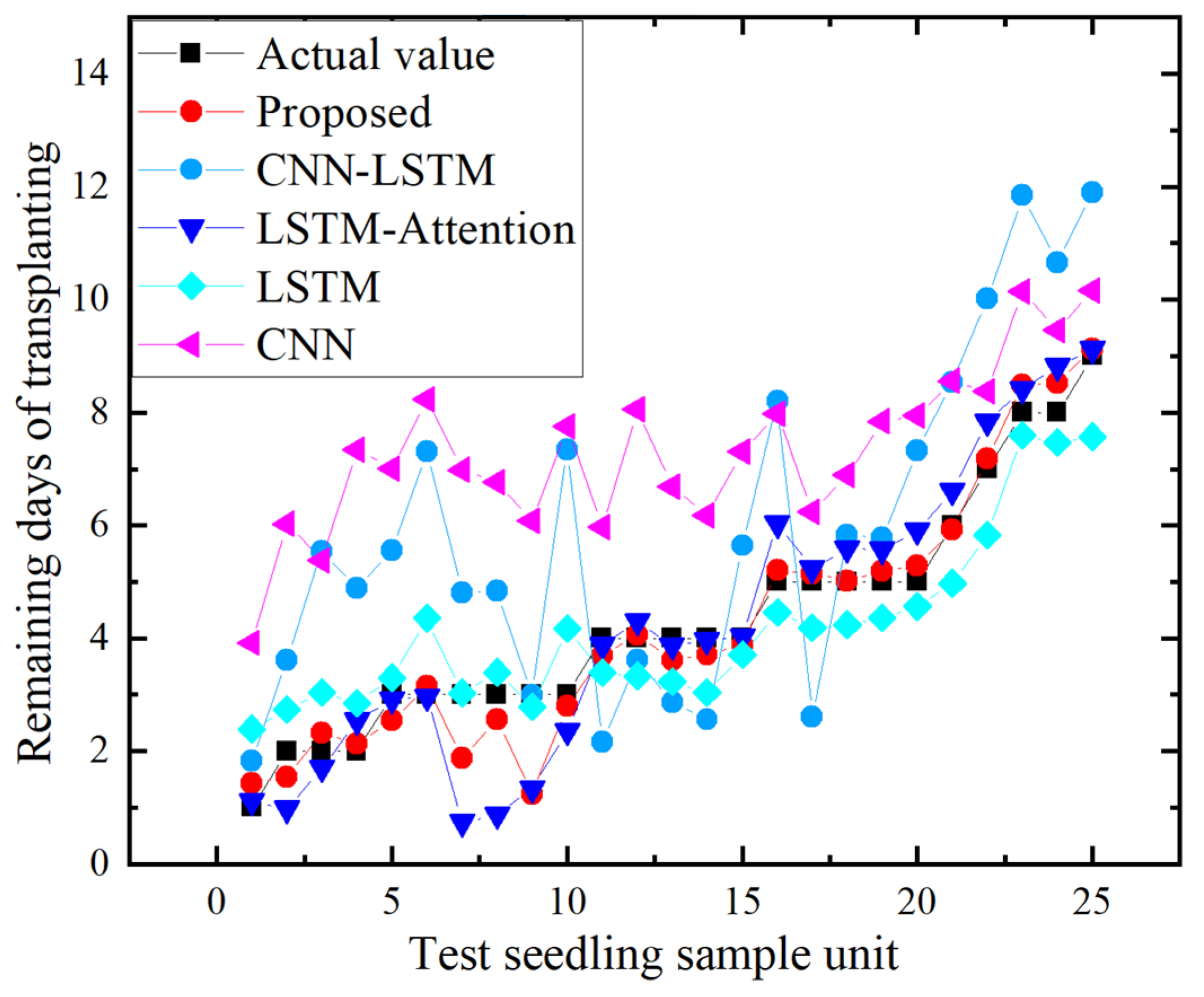

5.2.1. Performance Analysis of Predictive Models

5.2.2. Effect of the Attention Mechanism on Prediction Performance

5.2.3. Effect of the Attention Mechanism on Prediction Performance

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Subdataset | Variety | Number of Seeding Samples | Number of Features | Sample Size |
|---|---|---|---|---|
| Training set | Zhonggan No. 21 | 25 | 15 | 676 |
| Test set | purple cabbage | 25 | 15 | 676 |

| Number of Neurons | MAE | RMSE | MAPE | SMAPE |
|---|---|---|---|---|
| 50 | 0.455 | 0.556 | 0.160 | 0.099 |
| 100 | 0.356 | 0.507 | 0.157 | 0.082 |
| 150 | 1.418 | 1.594 | 0.692 | 0.284 |
| 200 | 0.404 | 0.673 | 0.286 | 0.097 |
| 250 | 0.473 | 0.606 | 0.183 | 0.109 |
| 300 | 0.484 | 0.706 | 0.277 | 0.127 |

| Model | MAE | RMSE | MAPE | SMAPE |
|---|---|---|---|---|
| CNN | 3.012 | 3.230 | 0.431 | 0.448 |
| LSTM | 0.770 | 0.880 | 0.247 | 0.143 |
| LSTM-Attention | 0.621 | 0.870 | 0.387 | 0.138 |
| CNN-LSTM | 2.187 | 2.473 | 0.402 | 0.327 |
| Proposed | 0.356 | 0.507 | 0.157 | 0.082 |
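
The four error measures reported in the tables above (MAE, RMSE, MAPE, SMAPE) can be illustrated with a minimal sketch. The function name `forecast_errors` and the sample values are hypothetical. The exact formulas used in the study are not given here, so the definitions below are assumptions: MAPE and SMAPE are returned as fractions rather than percentages, and SMAPE uses the common variant that divides each absolute error by the mean of the absolute actual and predicted values.

```python
import math


def forecast_errors(actual, predicted):
    """Return MAE, RMSE, MAPE and SMAPE for two equal-length sequences."""
    if len(actual) != len(predicted) or len(actual) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(actual)
    abs_err = [abs(a - p) for a, p in zip(actual, predicted)]

    # Mean absolute error: average size of the errors.
    mae = sum(abs_err) / n
    # Root mean squared error: penalises large errors more strongly.
    rmse = math.sqrt(sum(e * e for e in abs_err) / n)
    # MAPE is undefined when an actual value is zero (assumed not to occur here).
    mape = sum(e / abs(a) for e, a in zip(abs_err, actual)) / n
    # Assumed SMAPE variant: error relative to the mean of |actual| and |predicted|.
    smape = sum(
        2 * e / (abs(a) + abs(p)) for e, a, p in zip(abs_err, actual, predicted)
    ) / n

    return {"MAE": mae, "RMSE": rmse, "MAPE": mape, "SMAPE": smape}


# Hypothetical values, not data from the study.
observed = [10.0, 20.0]
forecast = [12.0, 18.0]
print(forecast_errors(observed, forecast))
```

Because SMAPE has several competing definitions, values computed with this variant may not match the SMAPE column exactly.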

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhu, H.; Liu, C.; Wu, H. A Prediction Method of Seedling Transplanting Time with DCNN-LSTM Based on the Attention Mechanism. Agronomy 2022, 12, 1504. https://doi.org/10.3390/agronomy12071504
