Toward Field Soil Surveys: Identifying and Delineating Soil Diagnostic Horizons Based on Deep Learning and RGB Image

Abstract

1. Introduction

2. Materials and Methods

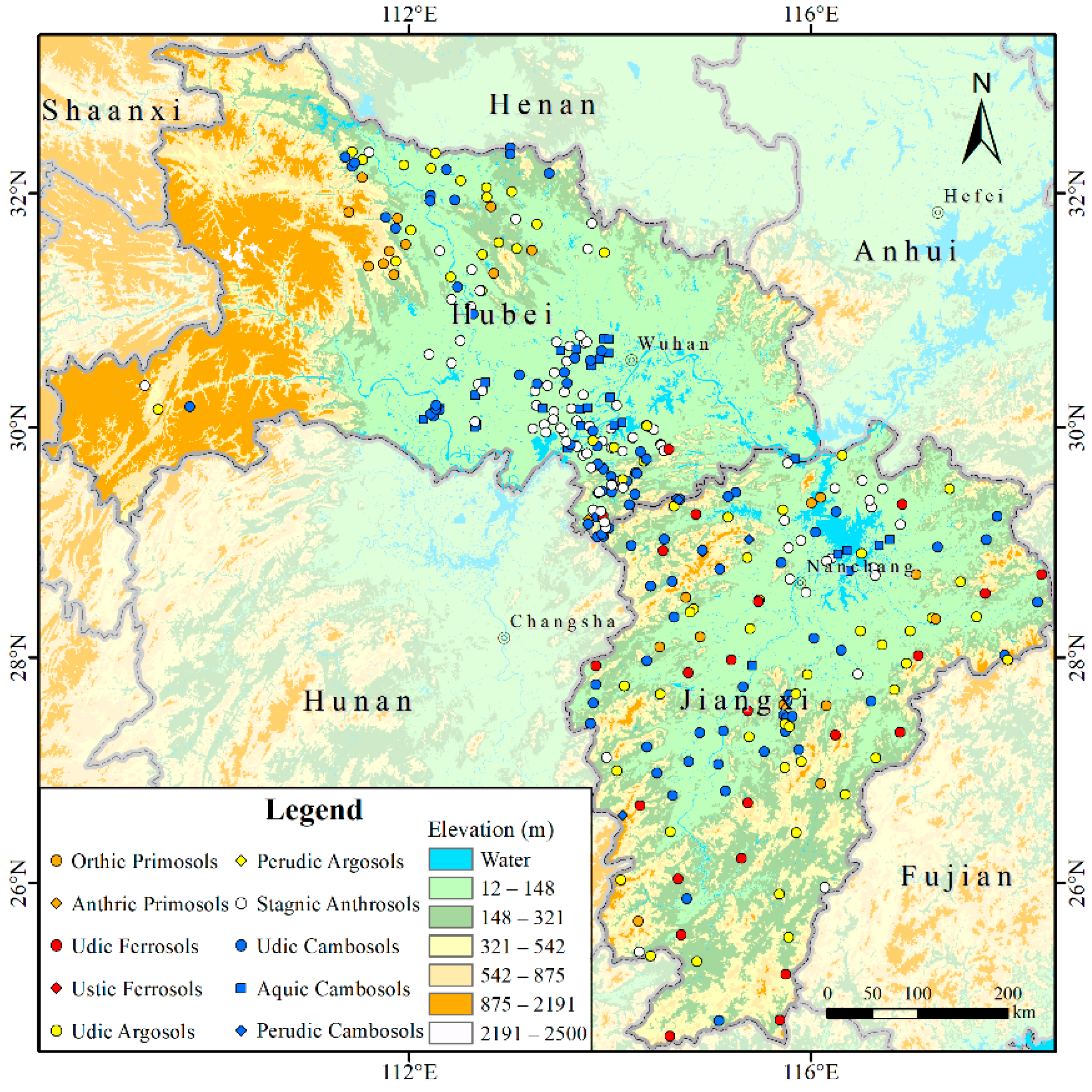

2.1. Study Area and Soil Profiles

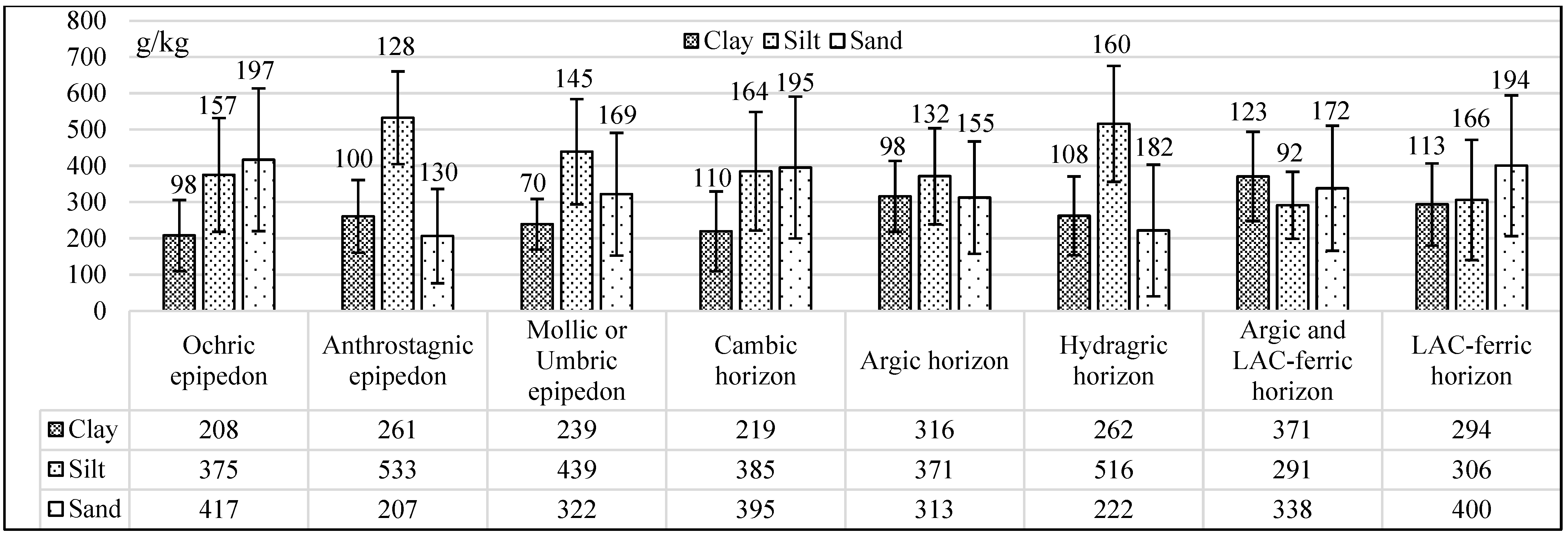

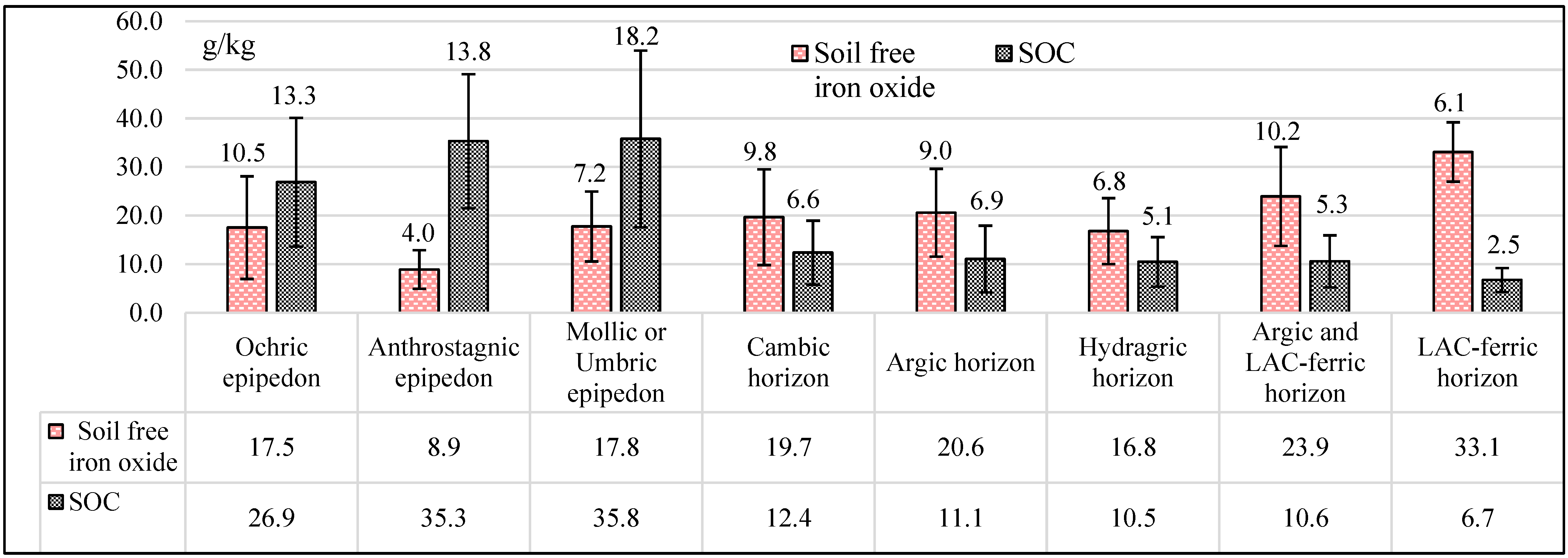

2.2. Categories Distribution, Morphological Attributes, and Physico-Chemical Properties of Soil Diagnostic Horizons

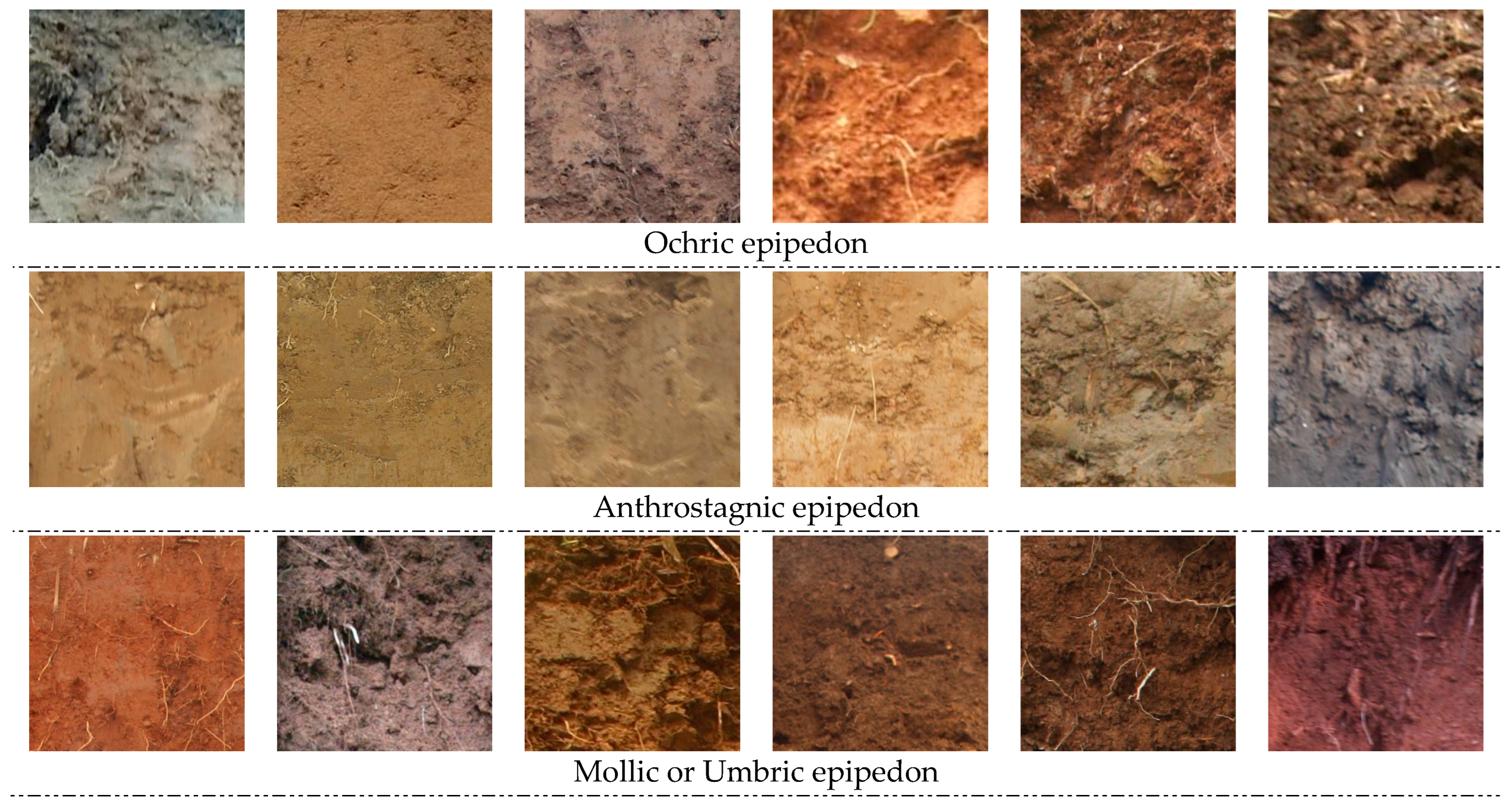

2.3. Image Dataset of Soil Diagnostic Horizons

- (1) Anthrostagnic epipedons tend to be yellowish or grayish and show visible signs of gleying, because they are disturbed by rice farming activities.

- (2) The Hydragric horizon is similar in color to the Anthrostagnic epipedon but shows distinct redox features, dominated by rust streaks and rust spots.

- (3) The Mollic or Umbric epipedon is generally darker and, owing to its higher organic carbon content, contains more roots.

- (4) The LAC-ferric horizon and the Argic and LAC-ferric horizon tend to be reddish.

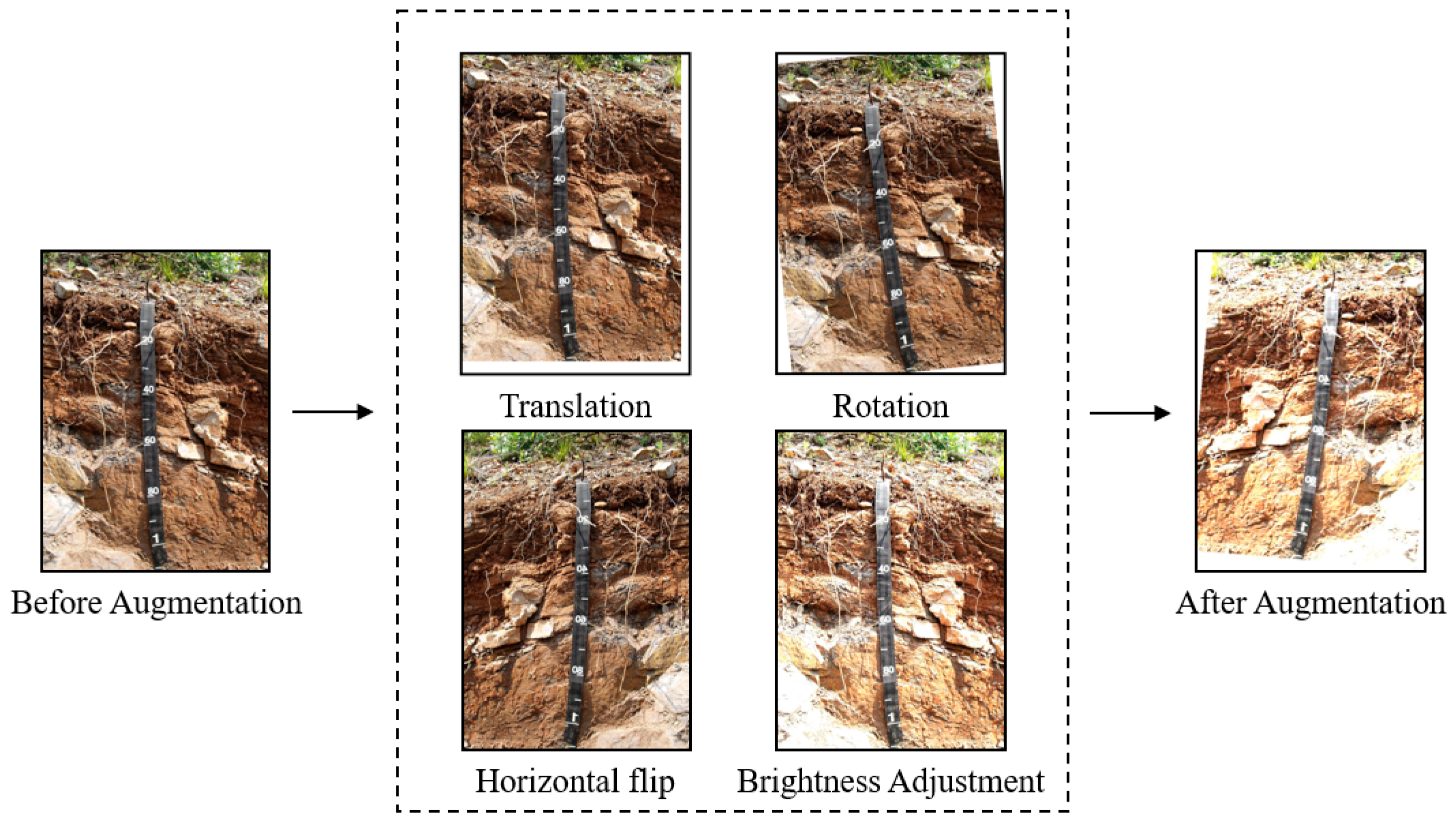

2.3.1. Data Augmentation

- (1) Translation and rotation: Because it is difficult to hold the camera perfectly upright in the field and hand shake is common, augmentation was limited to percentage shifts of less than 5% and rotation angles of less than 5°.

- (2) Flip: All profile images were taken with the surface vegetation at the top and the profile running from the top down, so only horizontal flips were considered.

- (3) Brightness adjustment: Because lighting conditions varied between shots, brightness was adjusted to simulate different sunlight intensities.
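The three augmentation steps above can be sketched with NumPy as follows. This is only an illustrative sketch, not the authors' pipeline: the function names, the nearest-neighbour warp, the brightness range of 0.8 to 1.2, the 50% flip probability, and the fill label 0 for pixels shifted in from outside the frame are all assumptions. The 5% shift and 5° rotation limits come from the text. The image and its label mask receive the same geometric transform so that horizon boundaries stay aligned.

```python
import numpy as np

def warp(arr, dx, dy, angle_deg, fill=0):
    """Shift (fractions of width/height) and rotate about the centre, nearest-neighbour."""
    h, w = arr.shape[:2]
    theta = np.deg2rad(angle_deg)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.mgrid[0:h, 0:w]
    # Inverse mapping: for every output pixel, look up the source pixel.
    x0 = xs - cx - dx * w
    y0 = ys - cy - dy * h
    sx = np.rint(np.cos(theta) * x0 + np.sin(theta) * y0 + cx).astype(int)
    sy = np.rint(-np.sin(theta) * x0 + np.cos(theta) * y0 + cy).astype(int)
    valid = (sx >= 0) & (sx < w) & (sy >= 0) & (sy < h)
    out = np.full_like(arr, fill)  # pixels with no source keep the fill value
    out[valid] = arr[sy[valid], sx[valid]]
    return out

def augment(image, mask, rng, max_shift=0.05, max_angle=5.0,
            brightness_range=(0.8, 1.2)):
    """Apply one random augmentation to an RGB image (H, W, 3) and its mask (H, W)."""
    # (1) Translation and rotation, limited to 5% and 5 degrees as in the text.
    dx, dy = rng.uniform(-max_shift, max_shift, size=2)
    angle = rng.uniform(-max_angle, max_angle)
    image = warp(image, dx, dy, angle)
    mask = warp(mask, dx, dy, angle)
    # (2) Horizontal flip only; a vertical flip would put the subsoil on top.
    if rng.random() < 0.5:
        image, mask = image[:, ::-1], mask[:, ::-1]
    # (3) Brightness scaling (assumed range); the mask is left unchanged.
    factor = rng.uniform(*brightness_range)
    image = np.clip(image.astype(np.float32) * factor, 0, 255).astype(np.uint8)
    return np.ascontiguousarray(image), np.ascontiguousarray(mask)
```

Nearest-neighbour sampling is used so that the mask never receives interpolated, non-existent class labels; a production pipeline would more likely use bilinear interpolation for the image itself.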

2.3.2. Image Labeling

2.4. Semantic Segmentation Network Model-UNet++

2.5. Model Validation Indicators

2.6. Model Training

3. Results

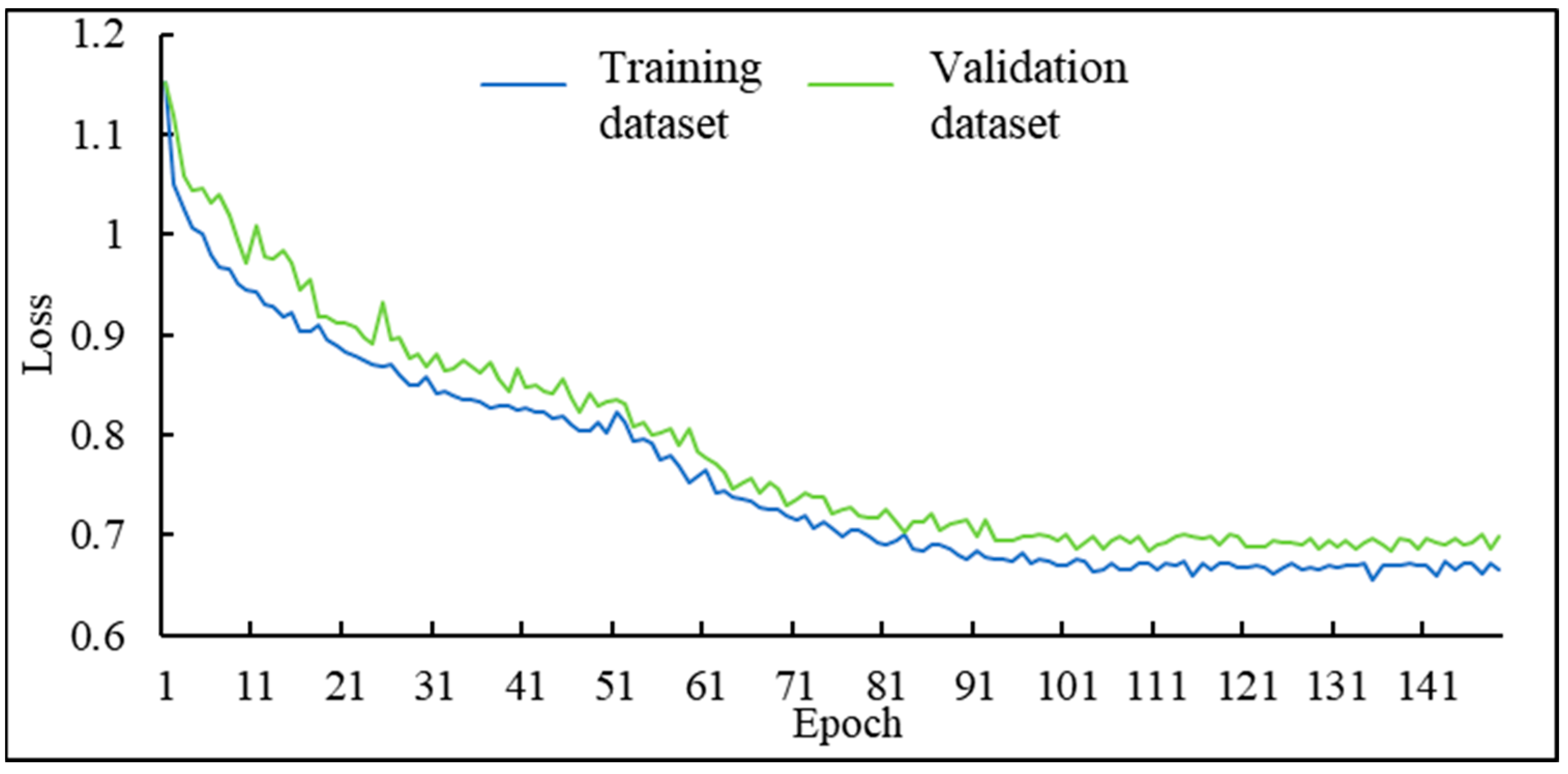

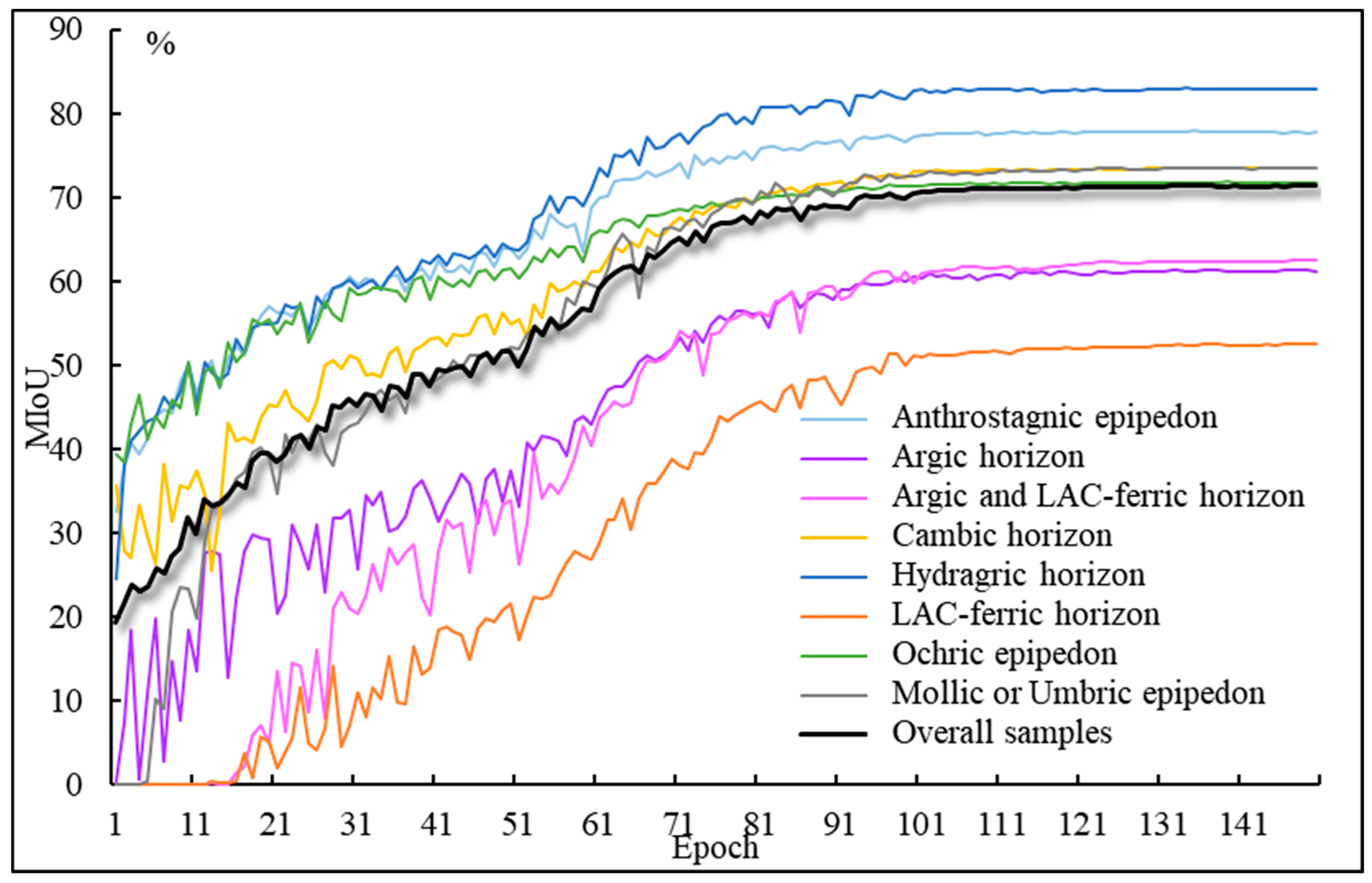

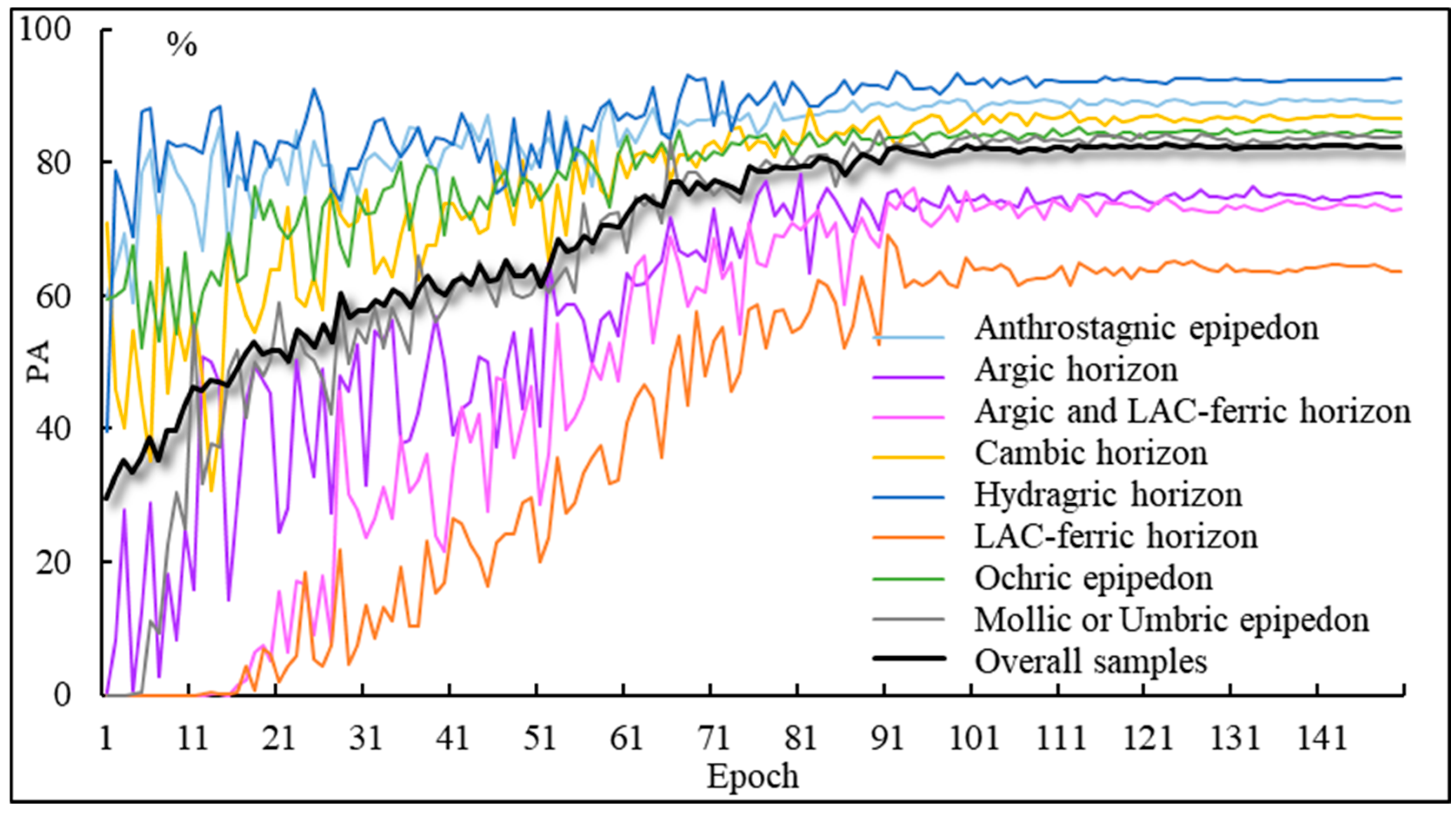

3.1. Training Process

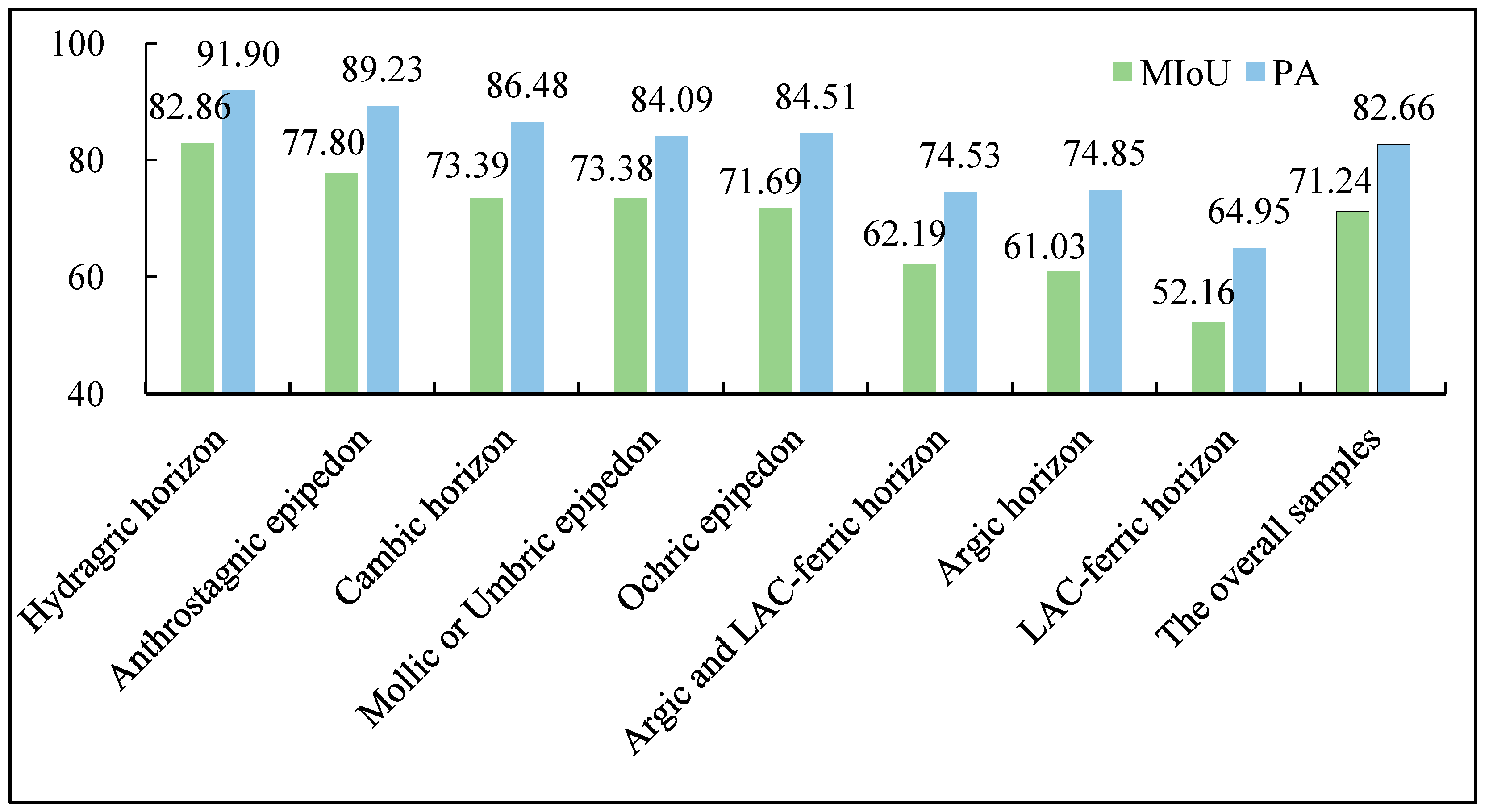

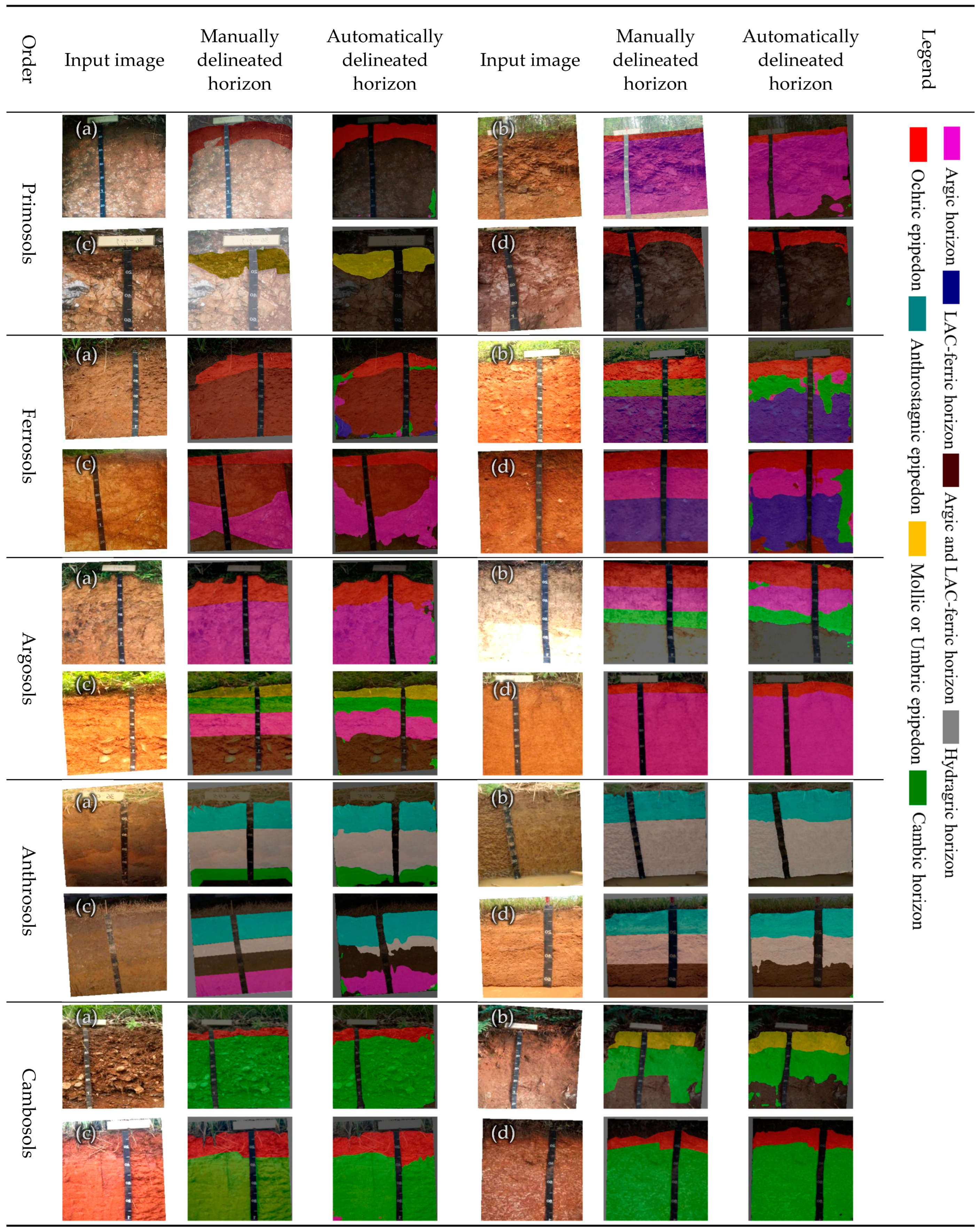

3.2. Model Validation

4. Discussion

4.1. Analysis of Misclassifications

4.2. Applicability Analysis to Other Taxonomic Systems

4.3. Comparison to Existing Studies

4.4. Limitations and Prospects

- (1) Only soil profile images from Hubei and Jiangxi Provinces were used in this study, which greatly limits the range of identifiable categories. In addition, the sample sizes of the categories were severely imbalanced. Adjusting the loss function partly suppressed the vanishing of gradients but did not fully resolve the problem, and categories with few samples, such as the LAC-ferric horizon, were still affected.

- (2) Soil diagnostic horizons were labeled only by manual delineation, which required a large amount of data preprocessing. As emphasized repeatedly in this study, not all boundaries between soil diagnostic horizons are clear, which affects model training. Future research could therefore label images automatically through image clustering to reduce the preprocessing workload.

- (3) RGB images alone cannot sufficiently reflect the physical and chemical properties of the soil. Where the diagnostic criteria include requirements for elemental content (e.g., the LAC-ferric horizon), identifying and delineating soil diagnostic horizons may still require the combined support of pXRF or vis-NIR spectroscopy to quantify the variation of soil chemical properties and elemental distributions within the profile.
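Item (1) above refers to adjusting the loss function to cope with class imbalance, without restating which loss was used. As one common option, the sketch below shows a class-weighted focal loss in NumPy. The choice of focal loss, the inverse-frequency weighting, the function names, and the default `gamma=2.0` are assumptions made for illustration and should not be read as the study's actual configuration.

```python
import numpy as np

def inverse_frequency_weights(labels, num_classes):
    """Weight each class by the inverse of its pixel frequency (assumed scheme)."""
    counts = np.bincount(labels, minlength=num_classes).astype(float)
    # Classes absent from the sample get a count of 1 to avoid division by zero.
    return counts.sum() / (num_classes * np.maximum(counts, 1.0))

def focal_loss(probs, labels, class_weights, gamma=2.0, eps=1e-7):
    """Mean class-weighted focal loss.

    probs:  (N, C) softmax probabilities, one row per pixel.
    labels: (N,) integer class index per pixel.
    """
    # Probability assigned to the true class of each pixel.
    p_t = np.clip(probs[np.arange(labels.size), labels], eps, 1.0)
    weights = class_weights[labels]
    # (1 - p_t)^gamma down-weights pixels that are already classified well,
    # so rare, hard classes contribute relatively more to the gradient.
    return float(np.mean(-weights * (1.0 - p_t) ** gamma * np.log(p_t)))
```

With `gamma=0` and unit weights this reduces to ordinary cross-entropy, which makes it easy to compare the two settings on the same data.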

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Order | Suborder | Number of Profiles | Total |
|---|---|---|---|
| Primosols | Orthic Primosols | 22 | 23 |
| | Anthric Primosols | 1 | |
| Ferrosols | Udic Ferrosols | 24 | 25 |
| | Ustic Ferrosols | 1 | |
| Argosols | Udic Argosols | 64 | 65 |
| | Perudic Argosols | 1 | |
| Anthrosols | Stagnic Anthrosols | 89 | 89 |
| Cambosols | Udic Cambosols | 96 | 129 |
| | Aquic Cambosols | 29 | |
| | Perudic Cambosols | 4 | |

| Diagnostic Horizon | Mean Thickness (cm) | Boundary Distinctness | % | Boundary Topography | % | Density of Roots (/dm²) | % | Thickness of Roots (mm) | % |
|---|---|---|---|---|---|---|---|---|---|
| Ochric epipedon | 23 | Abrupt | 4% | Smooth | 66% | 0 | 3% | 0 | 3% |
| | | Clear | 44% | Wavy | 27% | 1–20 | 13% | <0.5 | 9% |
| | | Gradual | 38% | Irregular | 6% | 20–50 | 40% | 0.5–2 | 70% |
| | | Diffuse | 14% | Broken | 1% | 50–200 | 35% | 2–5 | 16% |
| | | | | | | ≥200 | 9% | 5–10 | 2% |
| Anthrostagnic epipedon | 31 | Abrupt | 23% | Smooth | 89% | 0 | 6% | 0 | 6% |
| | | Clear | 42% | Wavy | 10% | 1–20 | 5% | <0.5 | 9% |
| | | Gradual | 30% | Irregular | 1% | 20–50 | 25% | 0.5–2 | 85% |
| | | Diffuse | 5% | Broken | 0% | 50–200 | 50% | 2–5 | 0% |
| | | | | | | ≥200 | 14% | 5–10 | 0% |
| Mollic or Umbric epipedon | 26 | Abrupt | 0% | Smooth | 55% | 0 | 9% | 0 | 9% |
| | | Clear | 45% | Wavy | 45% | 1–20 | 0% | <0.5 | 9% |
| | | Gradual | 55% | Irregular | 0% | 20–50 | 46% | 0.5–2 | 64% |
| | | Diffuse | 0% | Broken | 0% | 50–200 | 18% | 2–5 | 9% |
| | | | | | | ≥200 | 27% | 5–10 | 9% |
| Cambic horizon | 67 | Abrupt | 8% | Smooth | 65% | 0 | 21% | 0 | 21% |
| | | Clear | 27% | Wavy | 28% | 1–20 | 33% | <0.5 | 18% |
| | | Gradual | 42% | Irregular | 4% | 20–50 | 35% | 0.5–2 | 53% |
| | | Diffuse | 23% | Broken | 3% | 50–200 | 10% | 2–5 | 5% |
| | | | | | | ≥200 | 1% | 5–10 | 3% |
| Argic horizon | 62 | Abrupt | 3% | Smooth | 58% | 0 | 14% | 0 | 14% |
| | | Clear | 28% | Wavy | 30% | 1–20 | 40% | <0.5 | 28% |
| | | Gradual | 45% | Irregular | 12% | 20–50 | 33% | 0.5–2 | 48% |
| | | Diffuse | 24% | Broken | 0% | 50–200 | 11% | 2–5 | 6% |
| | | | | | | ≥200 | 2% | 5–10 | 4% |
| Hydragric horizon | 74 | Abrupt | 19% | Smooth | 86% | 0 | 44% | 0 | 44% |
| | | Clear | 29% | Wavy | 10% | 1–20 | 25% | <0.5 | 12% |
| | | Gradual | 34% | Irregular | 4% | 20–50 | 21% | 0.5–2 | 44% |
| | | Diffuse | 18% | Broken | 0% | 50–200 | 9% | 2–5 | 0% |
| | | | | | | ≥200 | 1% | 5–10 | 0% |
| Argic and LAC-ferric horizon | 48 | Abrupt | 0% | Smooth | 62% | 0 | 6% | 0 | 6% |
| | | Clear | 44% | Wavy | 19% | 1–20 | 38% | <0.5 | 38% |
| | | Gradual | 31% | Irregular | 19% | 20–50 | 38% | 0.5–2 | 37% |
| | | Diffuse | 25% | Broken | 0% | 50–200 | 18% | 2–5 | 13% |
| | | | | | | ≥200 | 0% | 5–10 | 6% |
| LAC-ferric horizon | 54 | Abrupt | 0% | Smooth | 68% | 0 | 12% | 0 | 12% |
| | | Clear | 26% | Wavy | 11% | 1–20 | 11% | <0.5 | 23% |
| | | Gradual | 48% | Irregular | 11% | 20–50 | 44% | 0.5–2 | 43% |
| | | Diffuse | 26% | Broken | 0% | 50–200 | 22% | 2–5 | 22% |
| | | | | | | ≥200 | 11% | 5–10 | 0% |

| Hue (Moist) | 5R | 7.5R | 10R | 2.5YR | 5YR | 7.5YR | 10YR | 2.5Y | 5Y | 7.5Y | 10G | 10BG | 5PB | Total | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Diagnostic Horizons | |||||||||||||||

| Ochric epipedon | 1 | 1 | 3 | 27 | 47 | 85 | 41 | 2 | 1 | 1 | 209 | ||||

| Anthrostagnic epipedon | 1 | 9 | 18 | 45 | 3 | 3 | 1 | 80 | |||||||

| Mollic or Umbric epipedon | 2 | 3 | 4 | 2 | 11 | ||||||||||

| Cambic horizon | 5 | 22 | 25 | 56 | 31 | 3 | 1 | 143 | |||||||

| Argic horizon | 2 | 1 | 19 | 28 | 24 | 10 | 84 | ||||||||

| Hydragric horizon | 3 | 7 | 18 | 40 | 2 | 3 | 2 | 2 | 77 | ||||||

| Argic and LAC-ferric horizon | 6 | 7 | 2 | 1 | 16 | ||||||||||

| LAC-ferric horizon | 1 | 2 | 5 | 1 | 9 | ||||||||||

| Total | 1 | 3 | 10 | 82 | 131 | 207 | 171 | 10 | 7 | 1 | 2 | 3 | 1 | 629 | |

| Diagnostic Horizon | MIoU (%), 123rd epoch | PA (%), 123rd epoch | MIoU (%), 148th epoch | PA (%), 148th epoch |
|---|---|---|---|---|
| Ochric epipedon | 71.69 | 84.51 | 71.78 | 84.68 |
| Cambic horizon | 73.39 | 86.48 | 73.45 | 86.57 |
| Mollic or Umbric epipedon | 73.38 | 84.09 | 73.49 | 83.64 |
| LAC-ferric horizon | 52.16 | 64.95 | 52.49 | 64.01 |
| Argic horizon | 61.03 | 74.85 | 61.29 | 75.25 |
| Anthrostagnic epipedon | 77.80 | 89.23 | 77.74 | 89.08 |
| Hydragric horizon | 82.86 | 91.90 | 82.88 | 92.33 |
| Argic and LAC-ferric horizon | 62.19 | 74.53 | 62.43 | 73.15 |
| Standard deviation | 9.41 | 8.48 | 9.29 | 8.86 |
| The overall samples | 71.24 | 82.66 | 71.36 | 82.38 |
| The sum of standard deviations | 17.89 | | 18.15 | |
| MIoU + PA | 153.9 | | 153.7 | |
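The summary rows of the table above can be recomputed from the per-class values. The sketch below does so with Python's standard library, assuming the "Standard deviation" row is the population standard deviation of the eight per-class scores; the formula is not stated here, so that assumption and the helper name `summarize` are illustrative only.

```python
from statistics import pstdev

# Per-class scores copied from the table:
# ((MIoU %, PA %) at the 123rd epoch, (MIoU %, PA %) at the 148th epoch)
scores = {
    "Ochric epipedon": ((71.69, 84.51), (71.78, 84.68)),
    "Cambic horizon": ((73.39, 86.48), (73.45, 86.57)),
    "Mollic or Umbric epipedon": ((73.38, 84.09), (73.49, 83.64)),
    "LAC-ferric horizon": ((52.16, 64.95), (52.49, 64.01)),
    "Argic horizon": ((61.03, 74.85), (61.29, 75.25)),
    "Anthrostagnic epipedon": ((77.80, 89.23), (77.74, 89.08)),
    "Hydragric horizon": ((82.86, 91.90), (82.88, 92.33)),
    "Argic and LAC-ferric horizon": ((62.19, 74.53), (62.43, 73.15)),
}

def summarize(epoch_index):
    """Spread of per-class MIoU and PA for one epoch (0 = 123rd, 1 = 148th)."""
    miou = [pair[epoch_index][0] for pair in scores.values()]
    pa = [pair[epoch_index][1] for pair in scores.values()]
    sd_miou, sd_pa = pstdev(miou), pstdev(pa)  # population standard deviation
    return {"sd_miou": sd_miou, "sd_pa": sd_pa, "sd_sum": sd_miou + sd_pa}

summary_123 = summarize(0)
summary_148 = summarize(1)
```

Under this assumption, a smaller `sd_sum` indicates that accuracy is spread more evenly across the eight horizon categories, which is the quantity the "sum of standard deviations" row compares between the two epochs.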

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yang, R.; Chen, J.; Wang, J.; Liu, S. Toward Field Soil Surveys: Identifying and Delineating Soil Diagnostic Horizons Based on Deep Learning and RGB Image. Agronomy 2022, 12, 2664. https://doi.org/10.3390/agronomy12112664