Fatigue Life Prediction of CFRP-FBG Sensor-Reinforced RC Beams Enabled by LSTM-Based Deep Learning

Abstract

1. Introduction

2. Experimental Methodology and Data Acquisition

2.1. Structural Configuration of CFRP-FBG Sensors

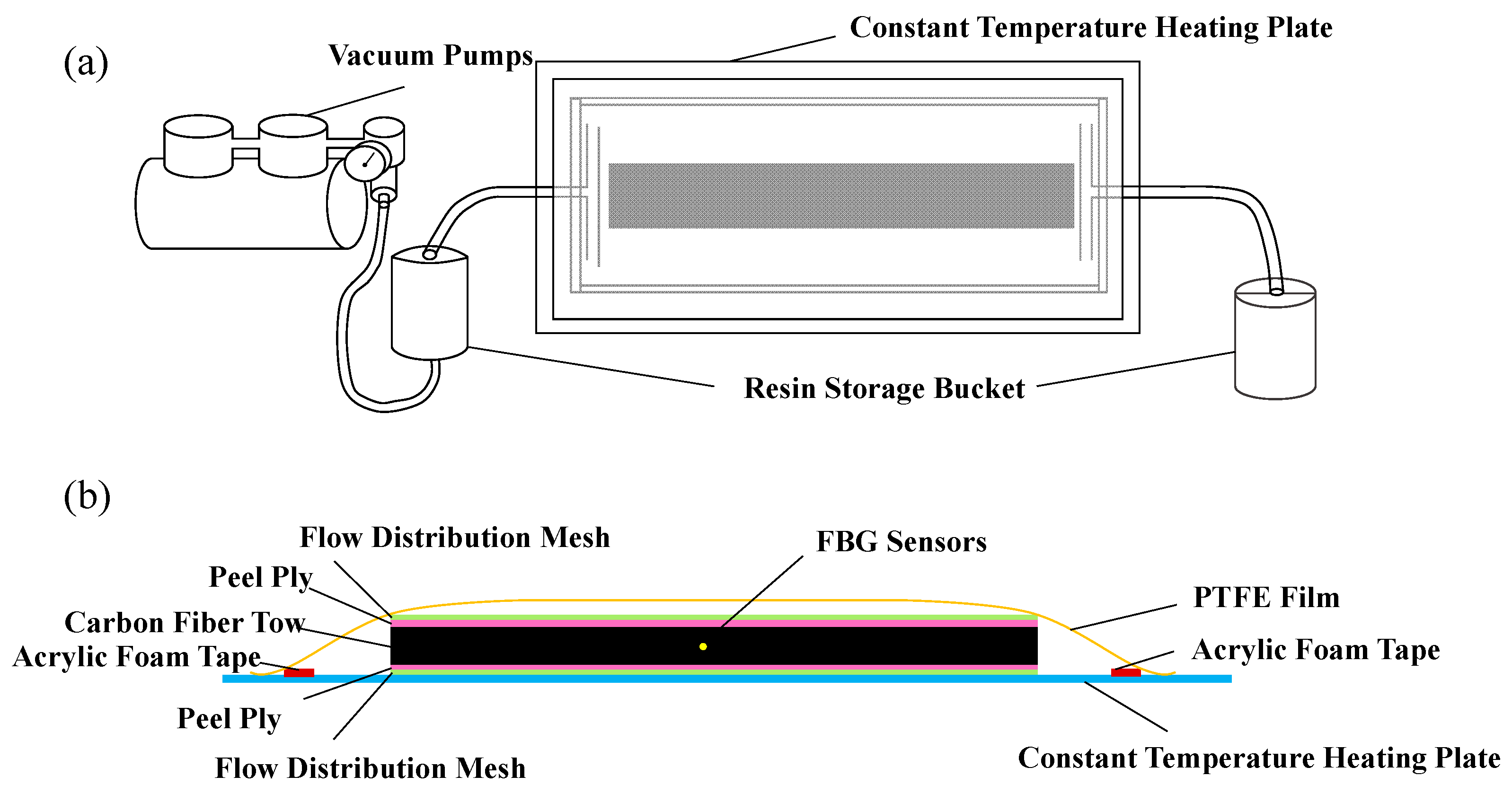

2.2. Specimen Preparation

2.3. Dynamic Cyclic Loading Experiments and Sensor Data Collection

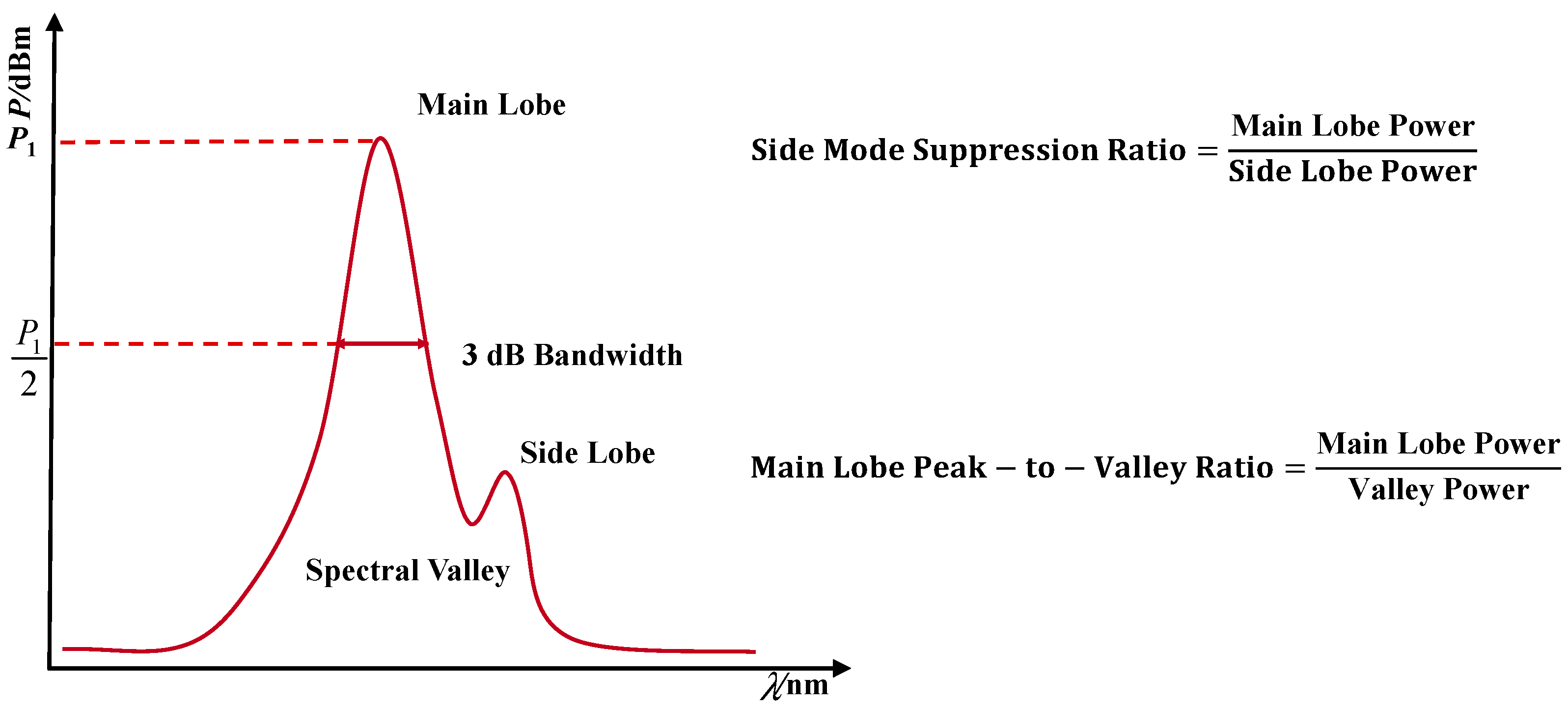

3. Feature Extraction and Data Preprocessing

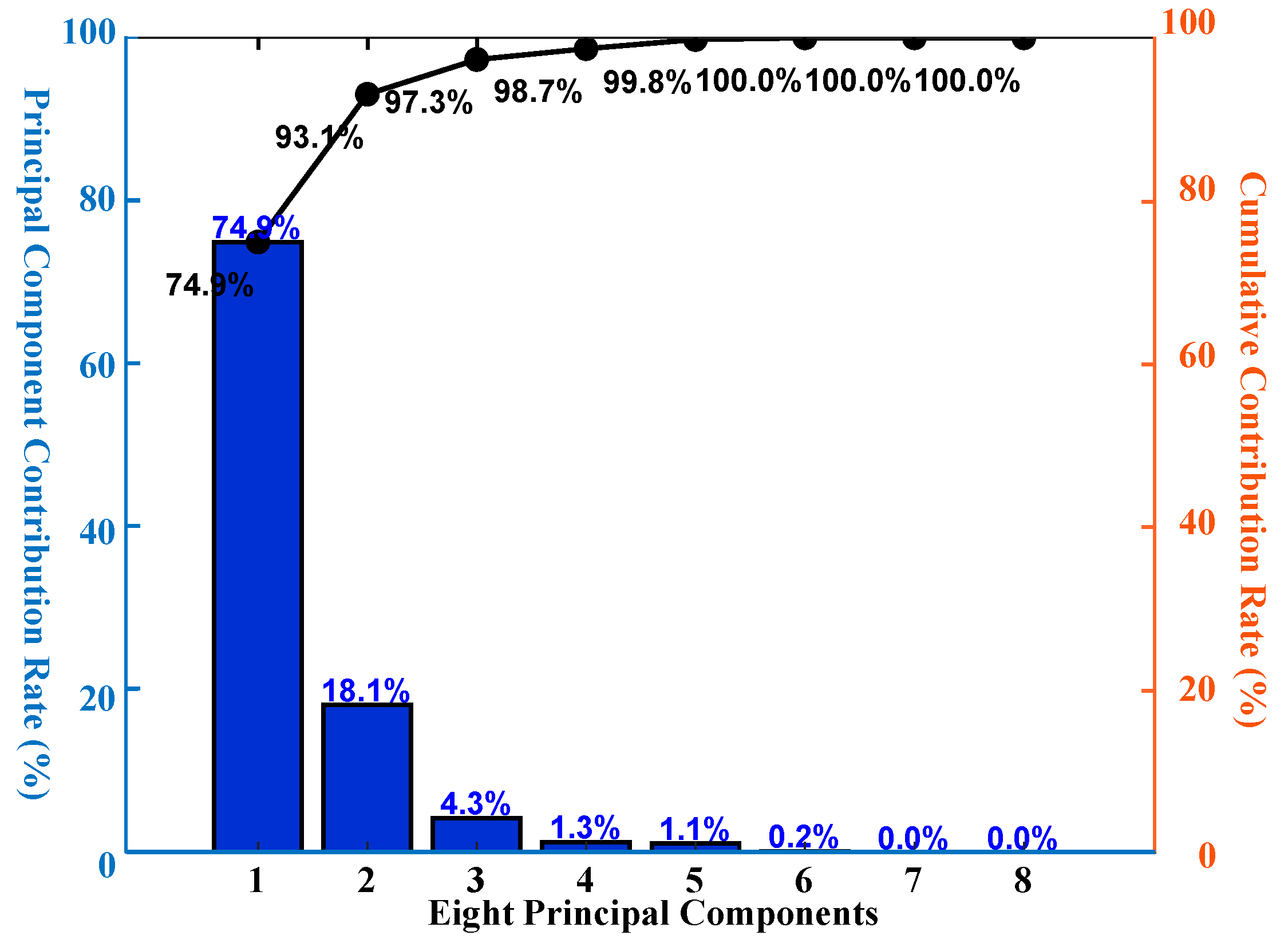

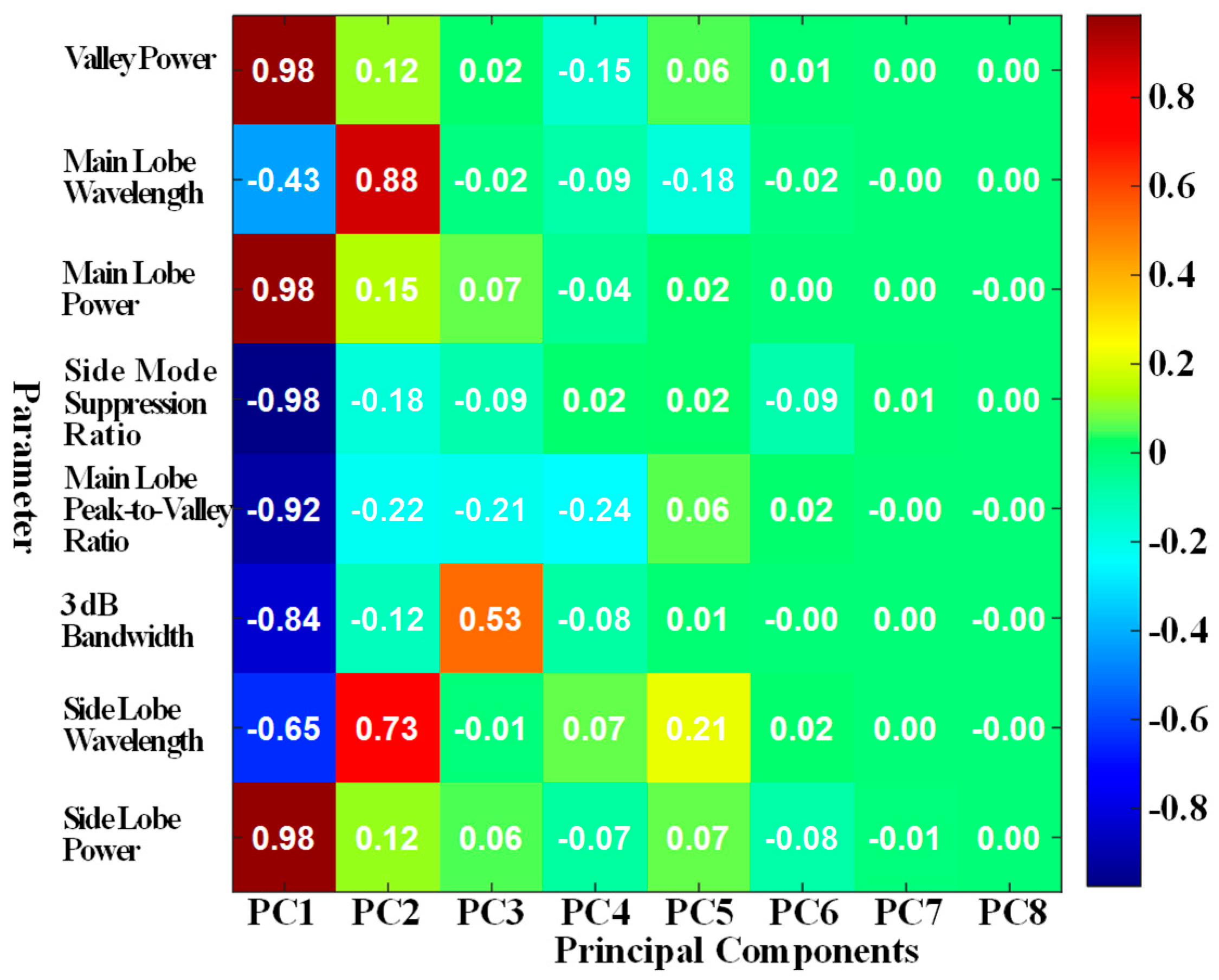

3.1. Dimensionality Reduction in Feature Space

- (1) Standardization of the original dataset.
- (2) Calculation of the correlation matrix R among variables.
- (3) Determination of eigenvalues and eigenvectors of the correlation matrix R.
- (4) Construction of principal components.
- (5) Computation of composite scores.
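The five steps listed above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' code. Two choices are our assumptions because the section does not specify them: a cumulative-contribution threshold of 85% for selecting the number of components k, and a composite score formed by weighting each retained component score by its share of the retained variance.

```python
import numpy as np


def pca_composite_score(X, threshold=0.85):
    """Sketch of steps (1)-(5) for a feature matrix X (samples x variables).

    `threshold` (cumulative contribution used to choose k) is an assumed value.
    """
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    # (1) Standardize each variable to zero mean and unit (sample) variance.
    Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    # (2) Correlation matrix R of the variables (covariance of the standardized data).
    R = (Z.T @ Z) / (n - 1)
    # (3) Eigen-decomposition of the symmetric matrix R, sorted by descending eigenvalue.
    eigvals, eigvecs = np.linalg.eigh(R)
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    # (4) Keep the smallest k whose cumulative contribution reaches the threshold,
    #     then project the standardized data onto those eigenvectors.
    contrib = eigvals / eigvals.sum()
    k = min(int(np.searchsorted(np.cumsum(contrib), threshold)) + 1, len(eigvals))
    scores = Z @ eigvecs[:, :k]  # principal-component scores F_1..F_k
    # (5) Composite score: component scores weighted by their share of retained variance.
    #     Note that eigenvector signs are arbitrary, so score signs may flip.
    weights = eigvals[:k] / eigvals[:k].sum()
    composite = scores @ weights
    return scores, composite, eigvals, k
```

Because R is a correlation matrix, its eigenvalues sum to the number of variables, and the retained component scores are mutually uncorrelated.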

3.2. Normalization Methodology

3.3. Evaluation Metrics for Prediction Performance
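The comparison tables report RMSE, MAE and MSE. Assuming their standard definitions, with $y_i$ the measured value, $\hat{y}_i$ the predicted value and $n$ the number of samples:

$$
\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2, \qquad
\mathrm{RMSE} = \sqrt{\mathrm{MSE}}, \qquad
\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|
$$

Under these definitions RMSE is the square root of MSE, so the two always rank models identically, while MAE weights large errors less heavily.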

4. Construction of Predictive Models and Prognostic Evaluation

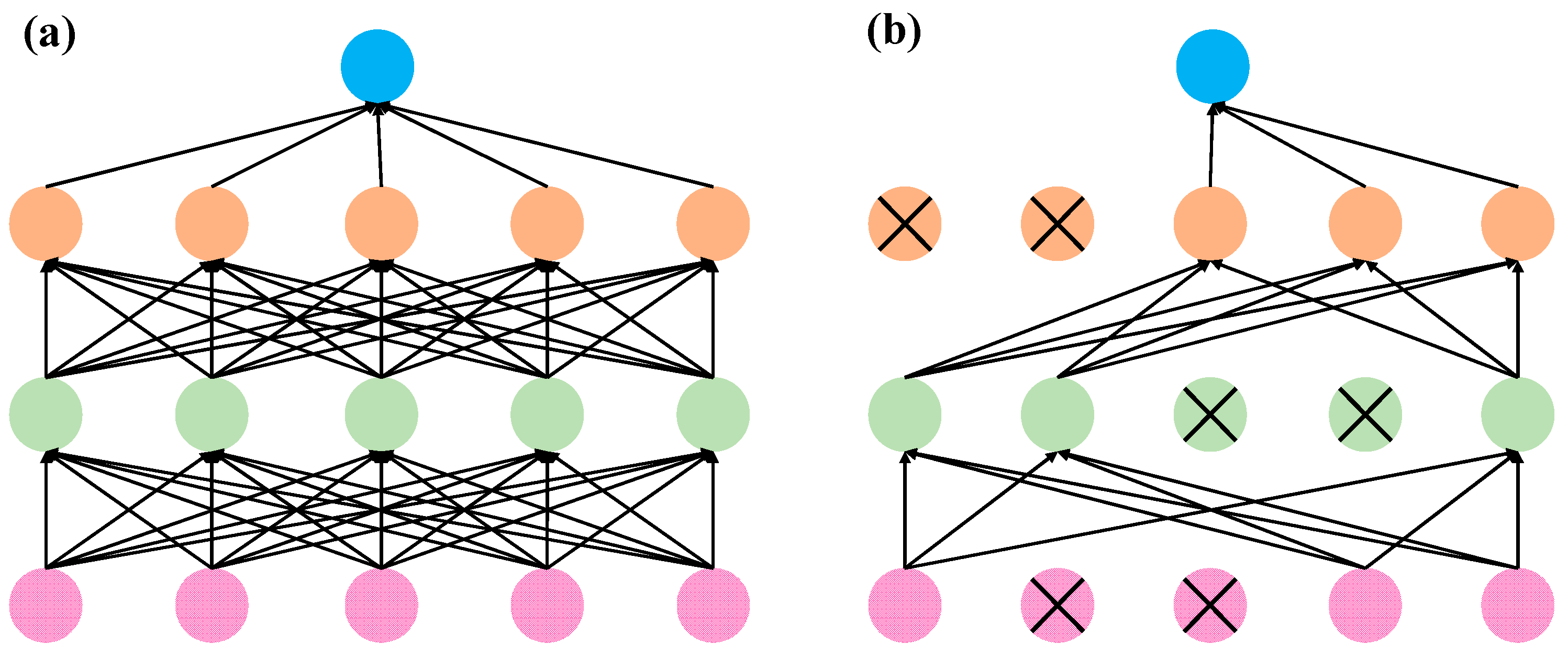

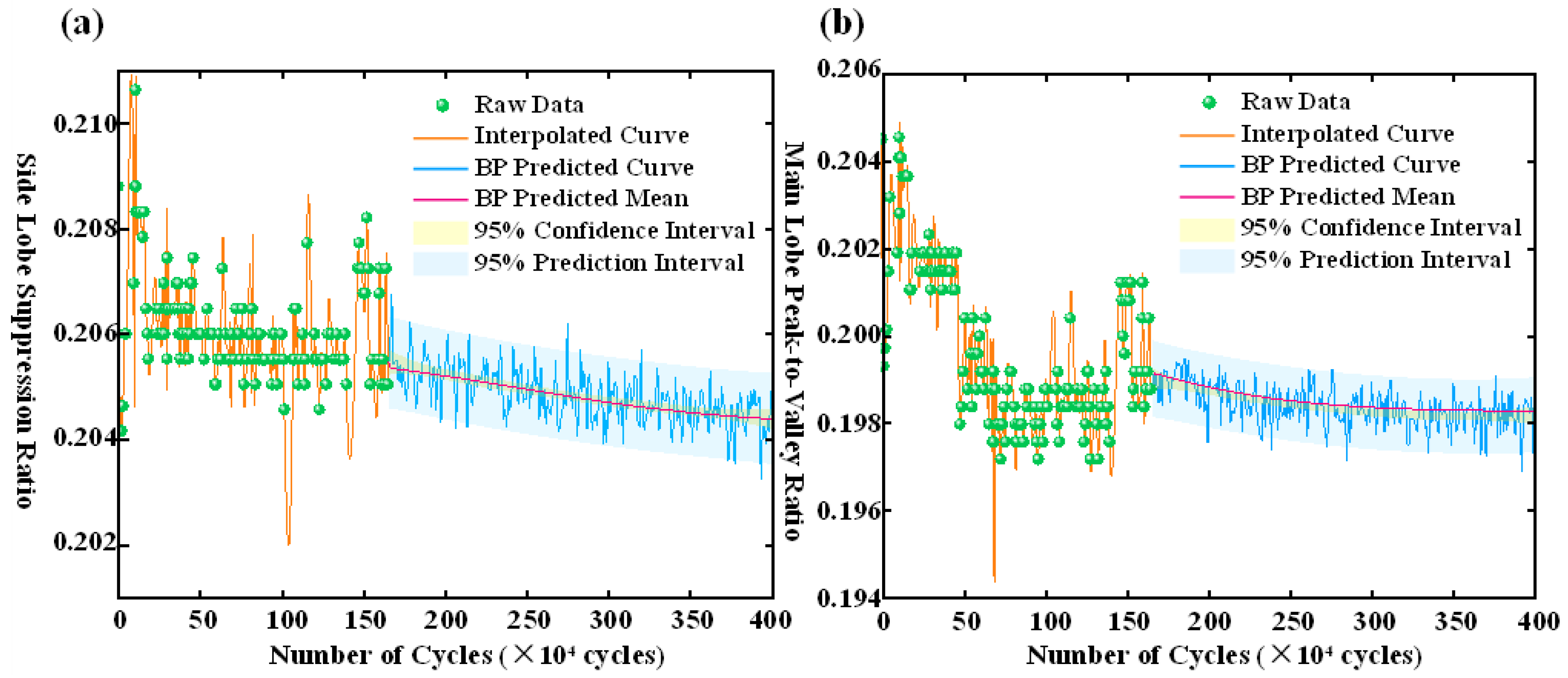

4.1. Benchmark Neural Network Model Based on Back-Propagation (BP)

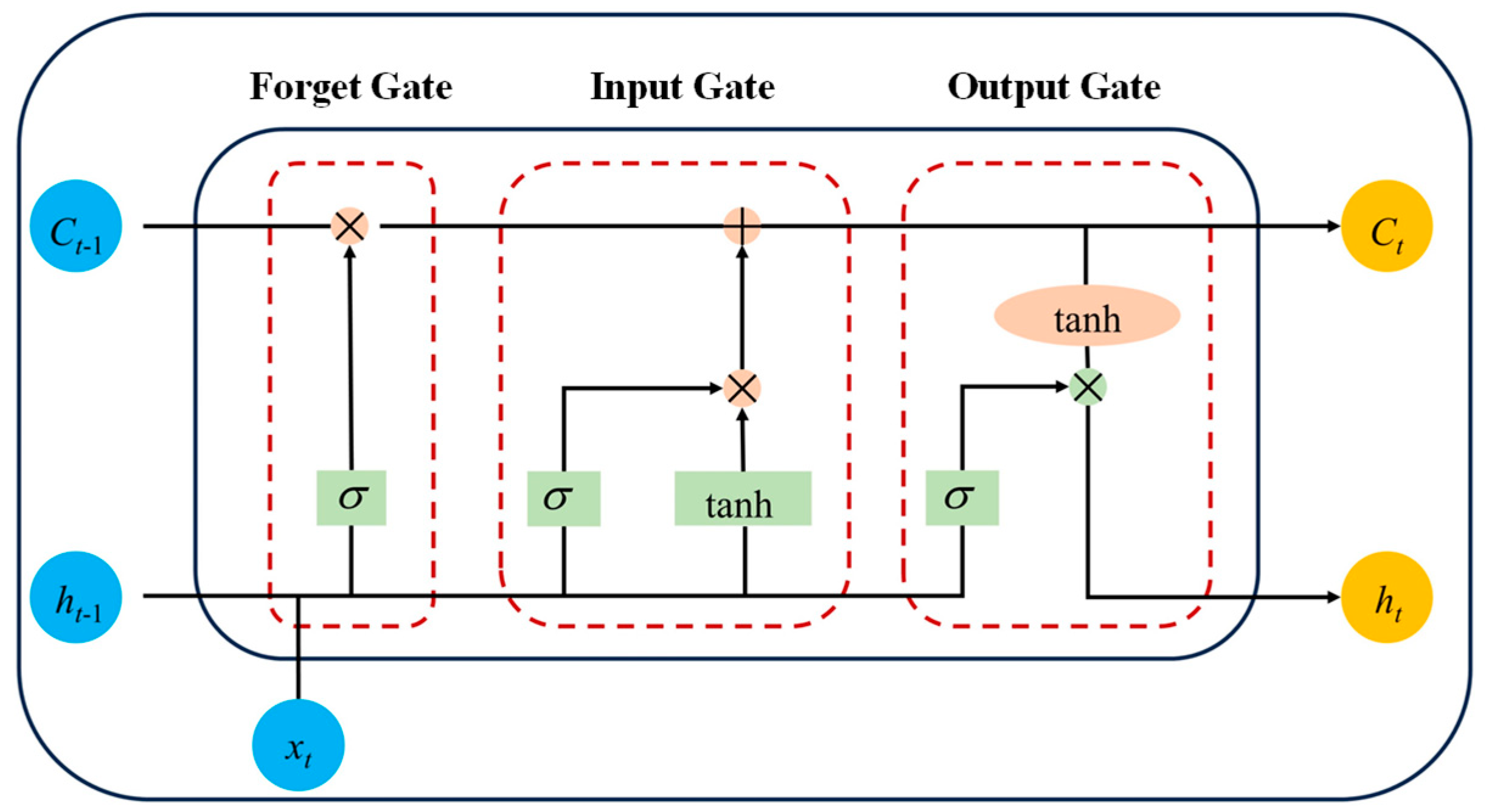
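A BP network is a feed-forward network whose weights are trained by propagating the loss gradient backward through its layers. The NumPy sketch below illustrates the idea. It is not the paper's baseline: the single tanh hidden layer of 10 units, the linear output, the MSE loss, plain gradient descent and the learning rate and epoch count are all our assumptions.

```python
import numpy as np


def train_bp(X, y, hidden=10, lr=0.05, epochs=2000, seed=0):
    """Minimal one-hidden-layer back-propagation (BP) regressor.

    Layer size, activation, learning rate and epoch count are illustrative assumptions.
    Returns the trained parameters and the training-loss (MSE) history.
    """
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float).reshape(-1, 1)
    n, d = X.shape
    W1 = rng.normal(scale=0.5, size=(d, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(scale=0.5, size=(hidden, 1))
    b2 = np.zeros(1)
    losses = []
    for _ in range(epochs):
        # Forward pass: tanh hidden layer, linear output.
        H = np.tanh(X @ W1 + b1)
        err = (H @ W2 + b2) - y
        losses.append(float(np.mean(err ** 2)))
        # Backward pass: gradients of the MSE loss, propagated layer by layer.
        g_out = 2.0 * err / n
        gW2, gb2 = H.T @ g_out, g_out.sum(axis=0)
        g_hid = (g_out @ W2.T) * (1.0 - H ** 2)  # tanh'(u) = 1 - tanh(u)^2
        gW1, gb1 = X.T @ g_hid, g_hid.sum(axis=0)
        # Plain gradient-descent update.
        W1 -= lr * gW1
        b1 -= lr * gb1
        W2 -= lr * gW2
        b2 -= lr * gb2
    return (W1, b1, W2, b2), losses


def predict_bp(params, X):
    """Forward pass with trained parameters; returns a 1-D array of predictions."""
    W1, b1, W2, b2 = params
    return (np.tanh(np.asarray(X, dtype=float) @ W1 + b1) @ W2 + b2).ravel()
```

Because a BP network of this kind maps one input vector to one output without any memory of earlier samples, it makes a natural baseline for judging what the recurrent LSTM model gains from temporal context.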

4.2. Structural Design and Optimization Methodology for the LSTM Neural Network
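For reference, the following NumPy sketch shows one forward step of a conventional LSTM cell with input, forget and output gates, and a loop that runs it over a sequence. It is illustrative only and not the authors' implementation: the gate ordering inside the stacked weight matrices, the zero initial states and the omission of peephole connections are our assumptions.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One step of a standard LSTM cell with a forget gate.

    W: (4H, D) input weights, U: (4H, H) recurrent weights, b: (4H,) biases,
    stacked in the assumed order input, forget, candidate, output.
    """
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0:H])          # input gate
    f = sigmoid(z[H:2 * H])      # forget gate
    g = np.tanh(z[2 * H:3 * H])  # candidate cell state
    o = sigmoid(z[3 * H:4 * H])  # output gate
    c_t = f * c_prev + i * g     # keep part of the old memory, add new content
    h_t = o * np.tanh(c_t)       # hidden state passed to the next step / layer
    return h_t, c_t


def lstm_forward(X, W, U, b):
    """Run the cell over a sequence X of shape (T, D); return hidden states (T, H)."""
    H = U.shape[1]
    h, c = np.zeros(H), np.zeros(H)
    outputs = []
    for x_t in X:
        h, c = lstm_step(x_t, h, c, W, U, b)
        outputs.append(h)
    return np.stack(outputs)
```

The additive cell-state update `c_t = f * c_prev + i * g` is what lets the network carry information across many loading cycles, which is the property exploited for fatigue-life sequences.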

- (1) Dropout Regularization
- (2) Adam Optimization Algorithm
  - (a) First-Order Moment Estimation
  - (b) Second-Order Moment Estimation
  - (c) Bias-Corrected Estimates
  - (d) Parameter Update Rule
- (3) Model Hyperparameter Configuration
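Items (1) and (2) can be illustrated with a short NumPy sketch: inverted dropout, and an Adam optimizer whose `step` method carries out sub-steps (a) to (d) in order. The default coefficients (learning rate 0.001, β1 = 0.9, β2 = 0.999, ε = 1e-8) are those suggested by Kingma and Ba; we do not assume they are the values used in this study, and the mapping of the hyperparameter table's symbols onto them is not stated here.

```python
import numpy as np


def dropout(a, rate, rng, training=True):
    """Inverted dropout: zero each unit with probability `rate` during training and
    rescale the survivors by 1 / (1 - rate) so the expected activation is unchanged."""
    if not training or rate == 0.0:
        return a
    mask = rng.random(a.shape) >= rate
    return a * mask / (1.0 - rate)


class Adam:
    """Adam update following sub-steps (a)-(d); defaults follow Kingma and Ba."""

    def __init__(self, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = None  # first-moment estimate
        self.v = None  # second-moment estimate
        self.t = 0     # time step

    def step(self, theta, grad):
        if self.m is None:
            self.m = np.zeros_like(theta)
            self.v = np.zeros_like(theta)
        self.t += 1
        # (a) First-order moment: exponential moving average of gradients.
        self.m = self.beta1 * self.m + (1 - self.beta1) * grad
        # (b) Second-order moment: exponential moving average of squared gradients.
        self.v = self.beta2 * self.v + (1 - self.beta2) * grad ** 2
        # (c) Bias correction for the zero initialisation of m and v.
        m_hat = self.m / (1 - self.beta1 ** self.t)
        v_hat = self.v / (1 - self.beta2 ** self.t)
        # (d) Parameter update with a per-parameter adaptive step size.
        return theta - self.lr * m_hat / (np.sqrt(v_hat) + self.eps)
```

As a usage example, minimizing f(θ) = Σ(θ − 3)² by calling `opt.step(theta, 2 * (theta - 3.0))` a few hundred times with `Adam(lr=0.1)` drives θ to 3. Dropout is applied only while training; at inference the layer passes activations through unchanged, which is why the survivors are rescaled during training.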

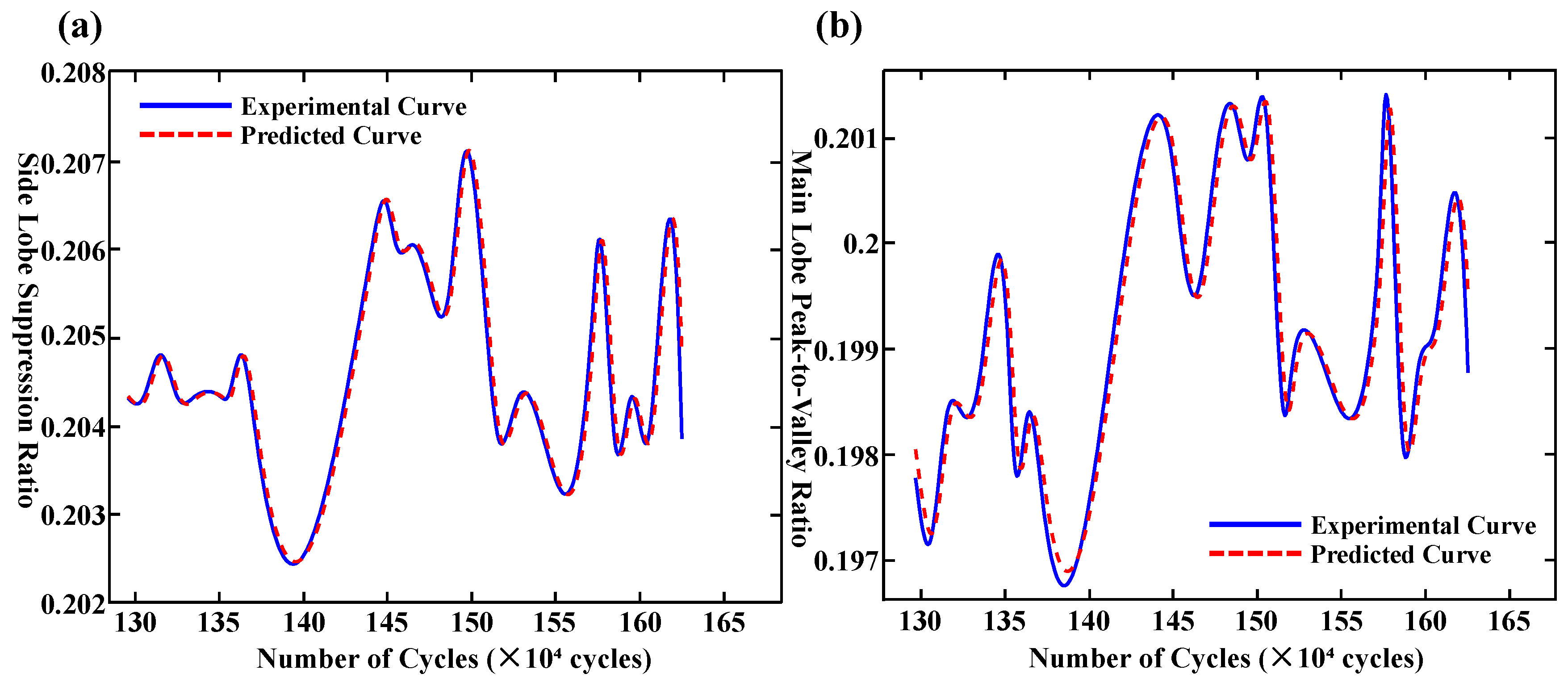

4.3. Prediction Results and Performance Evaluation of the LSTM Model

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

| Parameter | Specification |
|---|---|
| Central Wavelength (nm) | 1530–1570 |
| Grating Length (mm) | 10 |
| Reflectivity | ≥80% |
| Fiber Core Diameter (µm) | 8 |
| Cladding Diameter (µm) | 125 |
| Fiber Outer Diameter (µm) | 250 |
| Heat Treatment Temperature (°C) | 130 |

| Parameter | m | D | e | G | R | V |
|---|---|---|---|---|---|---|
| Value | 1000 | 0.2 | 500 | 1 | 0.001 | 0 or 1 |

| Metric | BP Neural Network | LSTM Neural Network | Relative Change (LSTM vs. BP) |
|---|---|---|---|
| RMSE | 0.2487 | 0.1616 | −34.99% |
| MAE | 0.1346 | 0.1150 | −14.6% |
| MSE | 0.068 | 0.0261 | −61.62% |

| Metric | BP Neural Network | LSTM Neural Network | Relative Change (LSTM vs. BP) |
|---|---|---|---|
| RMSE | 0.2698 | 0.2126 | −21.2% |
| MAE | 0.2158 | 0.1620 | −24.9% |
| MSE | 0.0728 | 0.0452 | −37.9% |
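The relative-change column in the comparison tables can be reproduced as (LSTM − BP) / BP × 100%, so negative values mean the LSTM error is lower. The sketch below checks this against the second table and also implements the three metrics under their standard definitions; the function names are our own, hypothetical helpers rather than anything from the paper.

```python
import numpy as np


def regression_metrics(y_true, y_pred):
    """Return MSE, RMSE and MAE using their standard definitions."""
    err = np.asarray(y_pred, dtype=float) - np.asarray(y_true, dtype=float)
    mse = float(np.mean(err ** 2))
    return {"MSE": mse, "RMSE": float(np.sqrt(mse)), "MAE": float(np.mean(np.abs(err)))}


def relative_change(bp, lstm):
    """Percentage change of the LSTM metric relative to the BP baseline."""
    return 100.0 * (lstm - bp) / bp


# (BP, LSTM) values taken from the second comparison table.
second_table = {"RMSE": (0.2698, 0.2126), "MAE": (0.2158, 0.1620), "MSE": (0.0728, 0.0452)}
for name, (bp, lstm) in second_table.items():
    print(f"{name}: {relative_change(bp, lstm):.1f}%")
# RMSE: -21.2%
# MAE: -24.9%
# MSE: -37.9%
```

Because the change is expressed relative to the BP baseline, an MSE reduction is always larger in percentage terms than the corresponding RMSE reduction, since MSE is the square of RMSE.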

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jia, M.; Zhou, C.; Pei, X.; Xu, Z.; Xu, W.; Wan, Z. Fatigue Life Prediction of CFRP-FBG Sensor-Reinforced RC Beams Enabled by LSTM-Based Deep Learning. Polymers 2025, 17, 2112. https://doi.org/10.3390/polym17152112

Jia M, Zhou C, Pei X, Xu Z, Xu W, Wan Z. Fatigue Life Prediction of CFRP-FBG Sensor-Reinforced RC Beams Enabled by LSTM-Based Deep Learning. Polymers. 2025; 17(15):2112. https://doi.org/10.3390/polym17152112

Chicago/Turabian Style: Jia, Minrui, Chenxia Zhou, Xiaoyuan Pei, Zhiwei Xu, Wen Xu, and Zhenkai Wan. 2025. "Fatigue Life Prediction of CFRP-FBG Sensor-Reinforced RC Beams Enabled by LSTM-Based Deep Learning" Polymers 17, no. 15: 2112. https://doi.org/10.3390/polym17152112

APA Style: Jia, M., Zhou, C., Pei, X., Xu, Z., Xu, W., & Wan, Z. (2025). Fatigue Life Prediction of CFRP-FBG Sensor-Reinforced RC Beams Enabled by LSTM-Based Deep Learning. Polymers, 17(15), 2112. https://doi.org/10.3390/polym17152112