Real Time Predictions of VGF-GaAs Growth Dynamics by LSTM Neural Networks

Abstract

1. Introduction

2. Models and Methodology

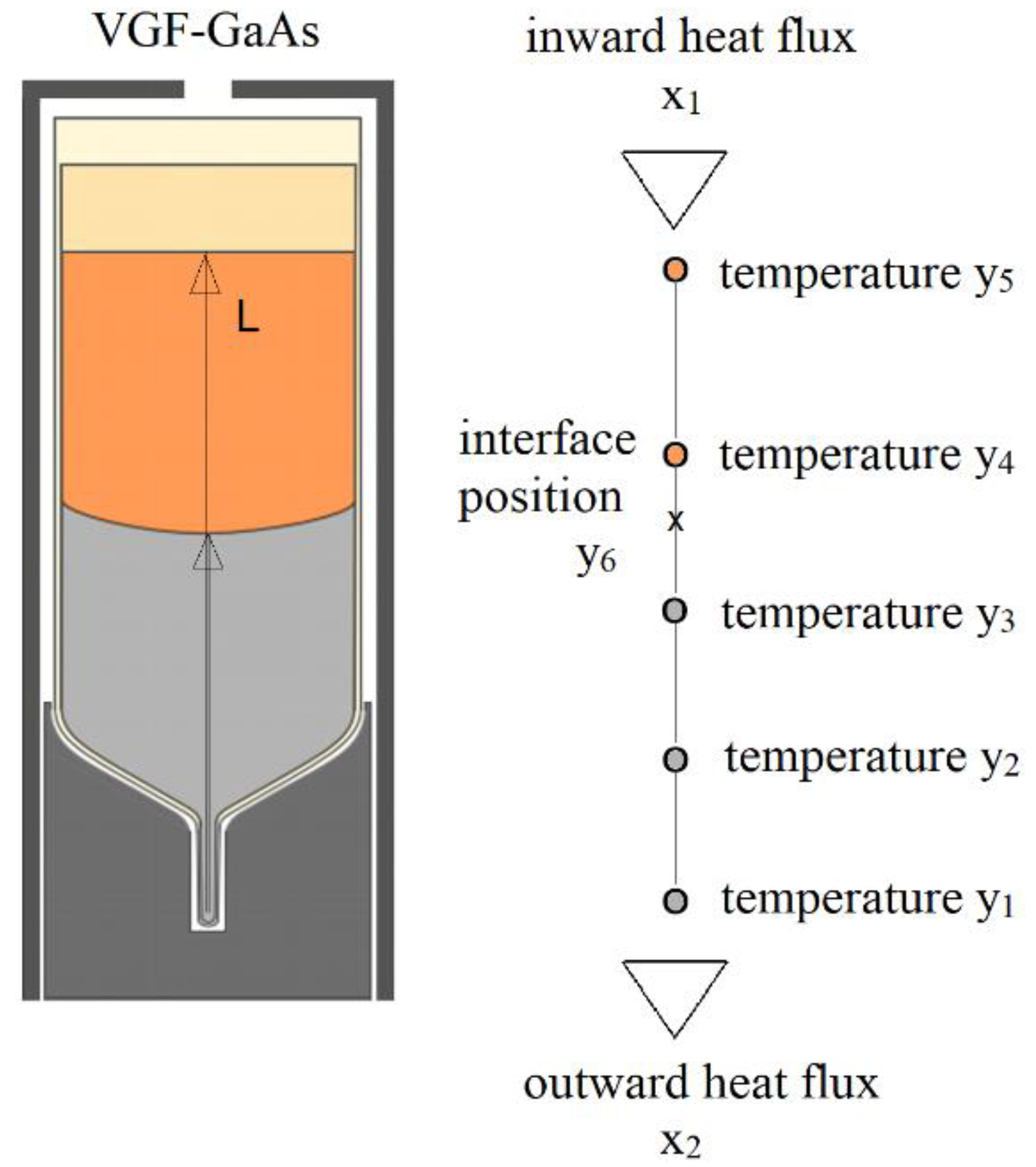

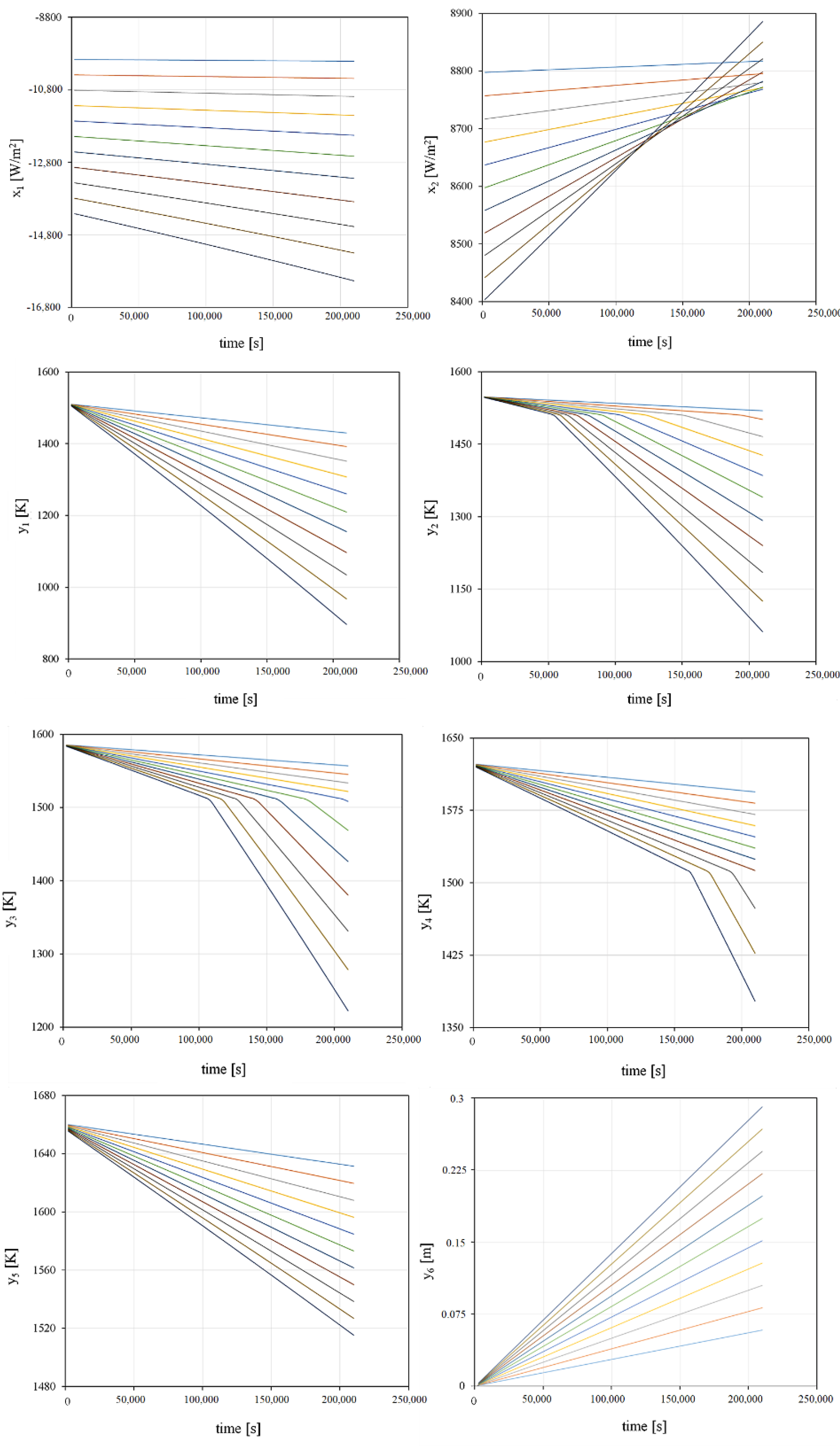

2.1. Generation of Training Data

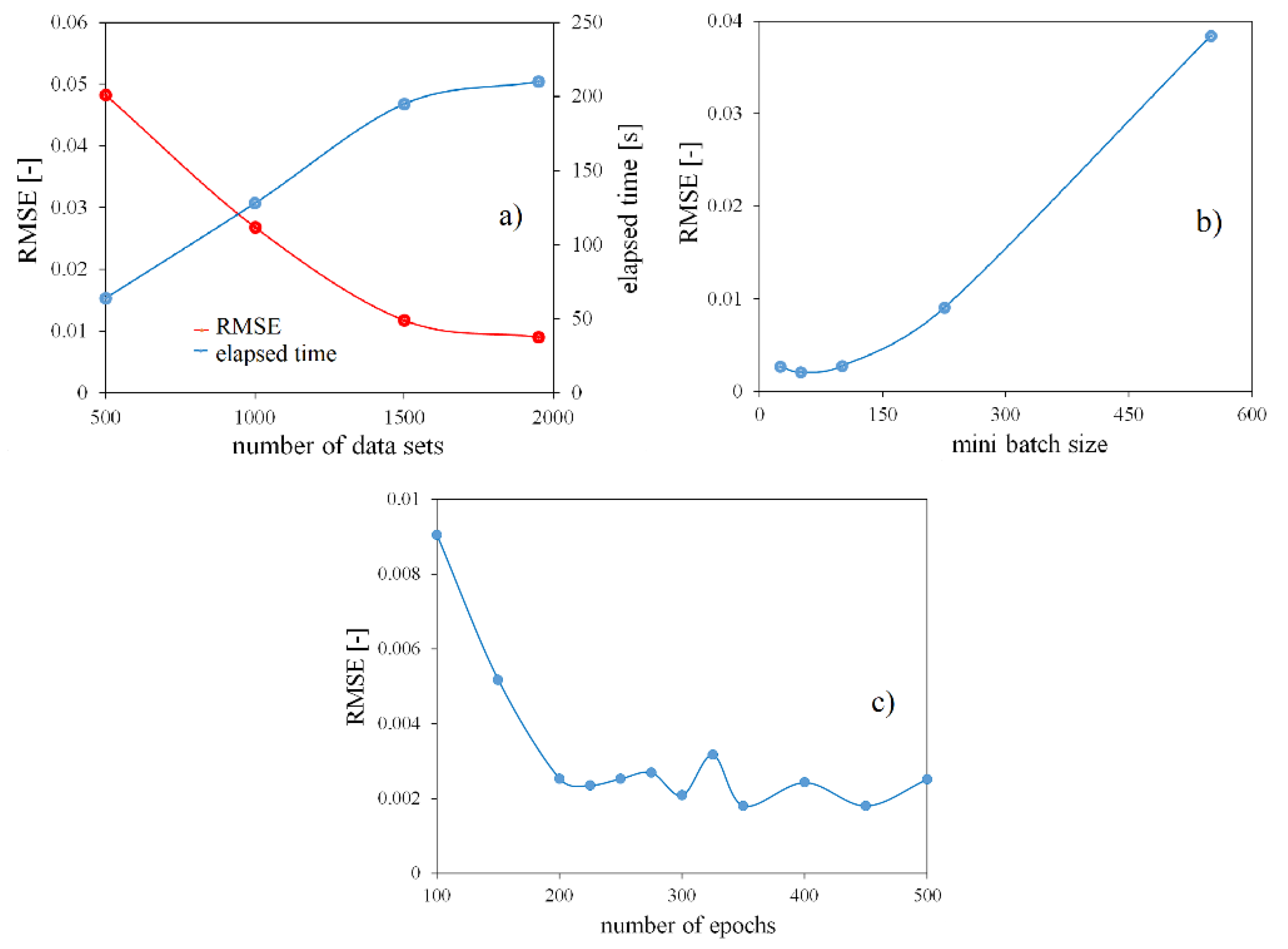

2.2. LSTM Neural Network Modeling

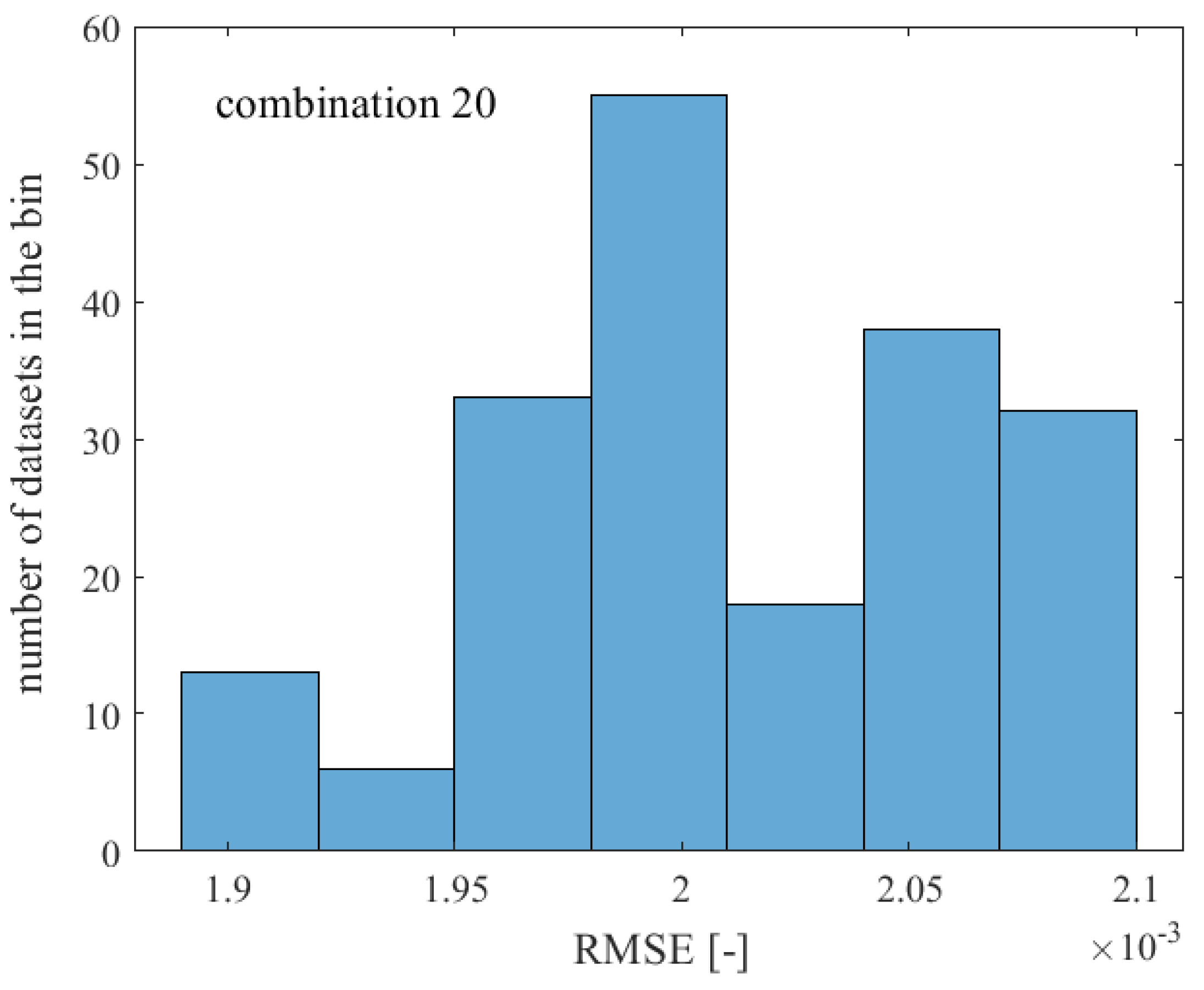

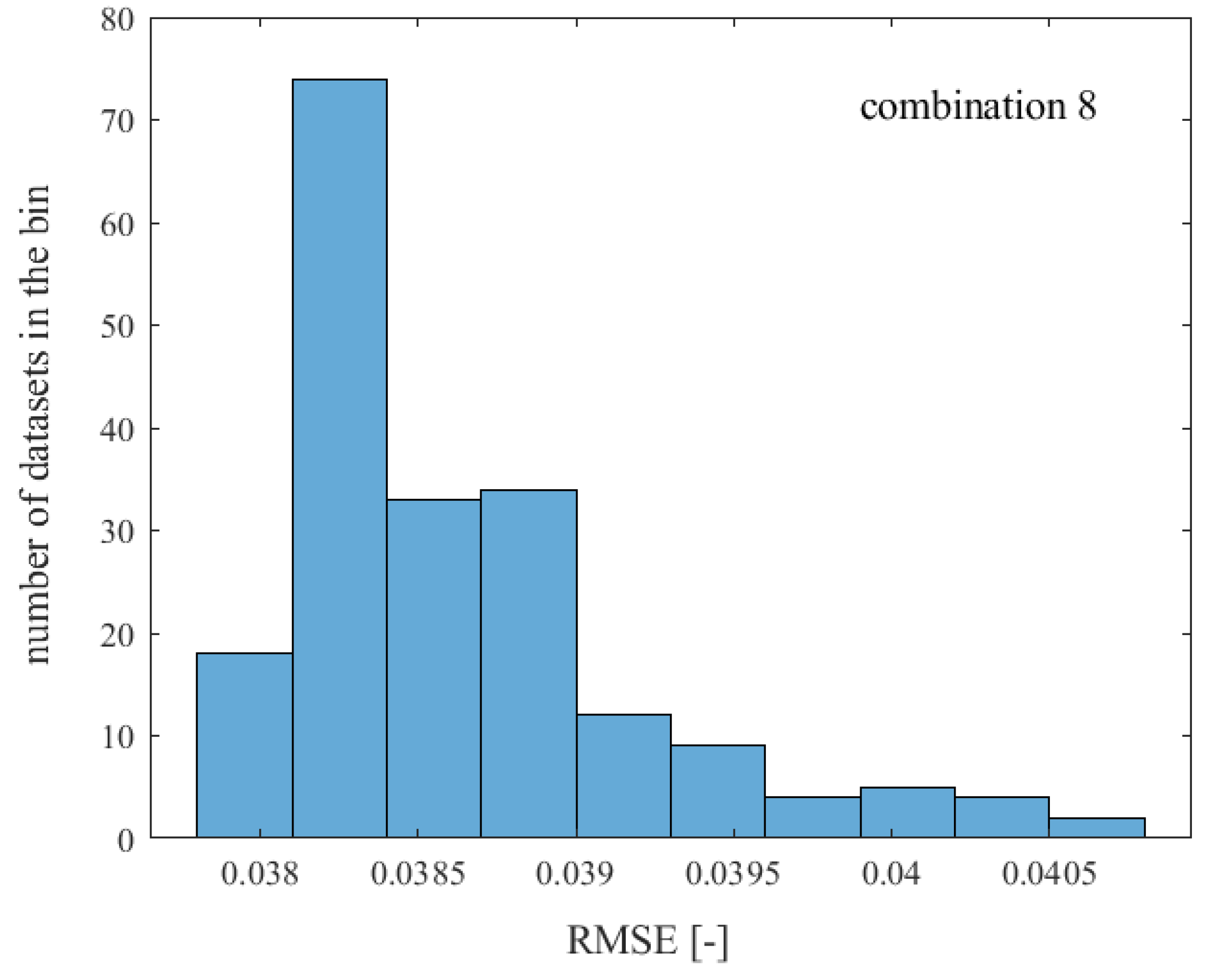

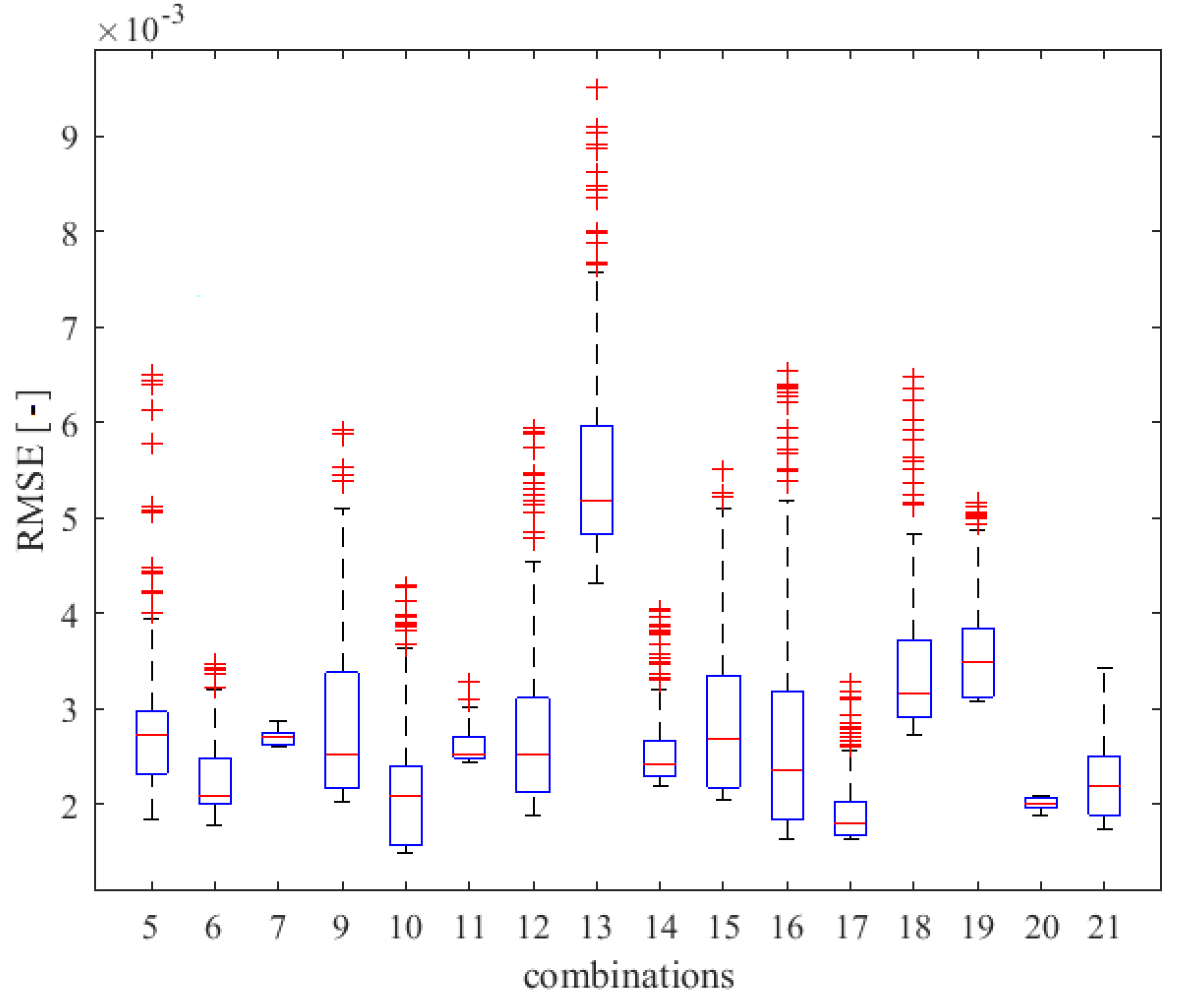

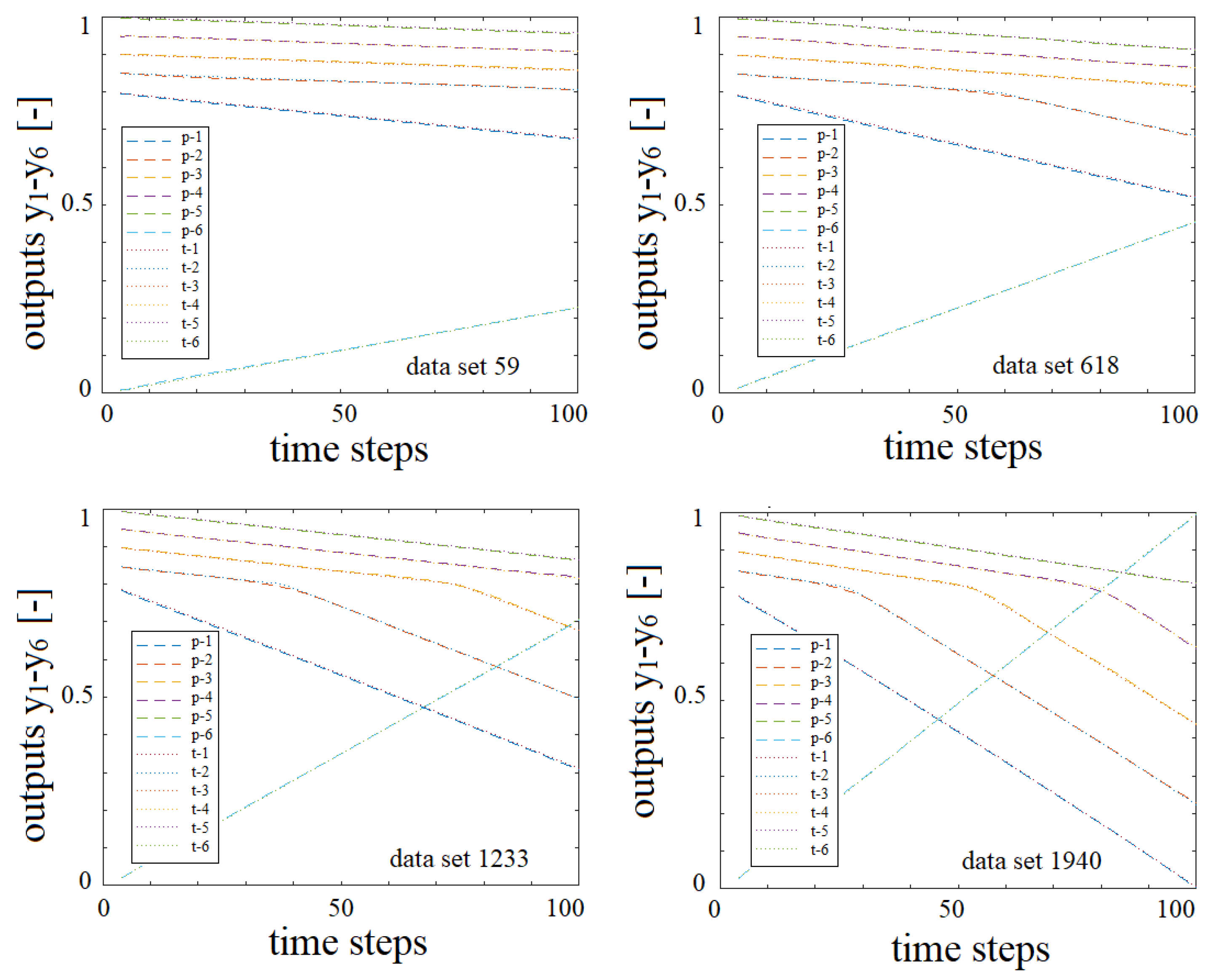

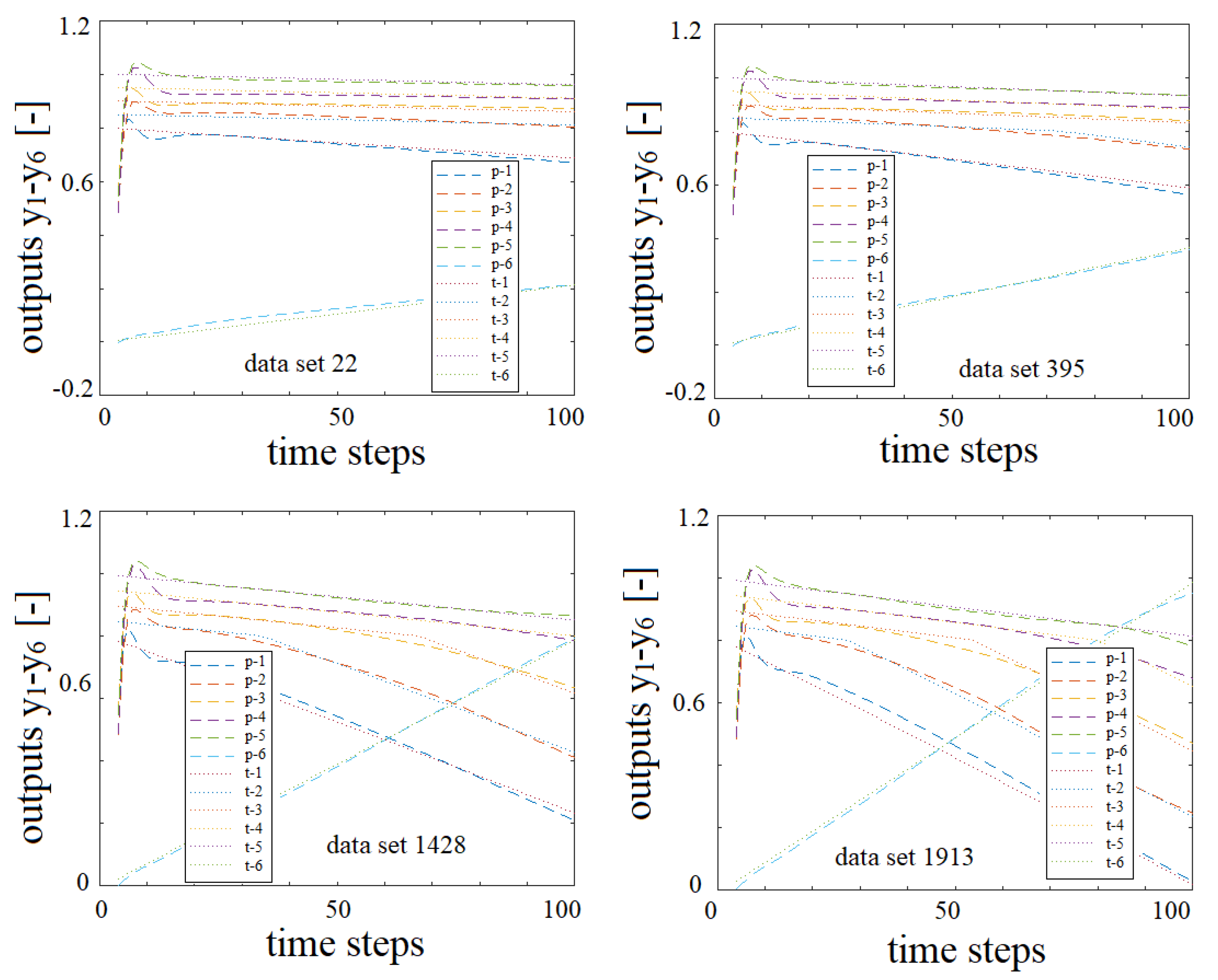

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Nomenclature

| Symbol | Meaning [unit] |
|---|---|
| $\Delta H_{S,L}$ | latent heat of solidification [J/m³] |
| $L$ | crystal length [m] |
| $T_m$ | melting temperature [K] |
| $q$ | heat flux [W/m³] |
| $t$ | time [s] |
| $z$ | axial coordinate [m] |
| $\alpha$ | thermal diffusivity [m²/s] |
| $\lambda$ | thermal conductivity [W/(m·K)] |
| $\rho$ | density [kg/m³] |

| Hyperparameter Combination | No. of Data | Max. Epochs | Mini-Batch Size | Validation Frequency |
|---|---|---|---|---|
| 1 | 1950 | 100 | 225 | 50 |
| 2 | 500 | 100 | 225 | 50 |
| 3 | 1000 | 100 | 225 | 50 |
| 4 | 1500 | 100 | 225 | 50 |
| 5 | 1950 | 100 | 100 | 50 |
| 6 | 1950 | 100 | 50 | 50 |
| 7 | 1950 | 100 | 25 | 50 |
| 8 | 1950 | 100 | 550 | 50 |
| 9 | 1950 | 200 | 225 | 50 |
| 10 | 1950 | 300 | 225 | 50 |
| 11 | 1950 | 500 | 225 | 50 |
| 12 | 1950 | 250 | 225 | 50 |
| 13 | 1950 | 150 | 225 | 50 |
| 14 | 1950 | 400 | 225 | 50 |
| 15 | 1950 | 275 | 225 | 50 |
| 16 | 1950 | 225 | 225 | 50 |
| 17 | 1950 | 350 | 225 | 50 |
| 18 | 1950 | 325 | 225 | 50 |
| 19 | 1950 | 450 | 225 | 50 |
| 20 | 1950 | 450 | 50 | 50 |
| 21 | 1950 | 450 | 225 | 100 |
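The hyperparameter combinations listed in the table are easier to compare when held as structured data. The following is a minimal sketch, not the authors' code: the class `HyperParams`, the helper `changed_fields`, and the choice of combination 1 as the reference point are assumptions made for illustration only. The numeric values are copied from the table.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class HyperParams:
    """One row of the hyperparameter table (field names are illustrative)."""
    combination: int
    n_data: int
    max_epochs: int
    mini_batch: int
    validation_frequency: int


# Values copied row by row from the hyperparameter table.
_ROWS = [
    (1, 1950, 100, 225, 50), (2, 500, 100, 225, 50), (3, 1000, 100, 225, 50),
    (4, 1500, 100, 225, 50), (5, 1950, 100, 100, 50), (6, 1950, 100, 50, 50),
    (7, 1950, 100, 25, 50), (8, 1950, 100, 550, 50), (9, 1950, 200, 225, 50),
    (10, 1950, 300, 225, 50), (11, 1950, 500, 225, 50), (12, 1950, 250, 225, 50),
    (13, 1950, 150, 225, 50), (14, 1950, 400, 225, 50), (15, 1950, 275, 225, 50),
    (16, 1950, 225, 225, 50), (17, 1950, 350, 225, 50), (18, 1950, 325, 225, 50),
    (19, 1950, 450, 225, 50), (20, 1950, 450, 50, 50), (21, 1950, 450, 225, 100),
]
COMBINATIONS = [HyperParams(*row) for row in _ROWS]

# Assumption: combination 1 is used as the reference for comparisons.
BASELINE = COMBINATIONS[0]


def changed_fields(hp, baseline=BASELINE):
    """Return {field: (baseline value, new value)} for settings that differ."""
    return {
        f.name: (getattr(baseline, f.name), getattr(hp, f.name))
        for f in fields(hp)
        if f.name != "combination"
        and getattr(hp, f.name) != getattr(baseline, f.name)
    }


if __name__ == "__main__":
    # Print, for each combination, which settings depart from the reference.
    for hp in COMBINATIONS[1:]:
        print(hp.combination, changed_fields(hp))
```

Read this way, combinations 2 to 19 each differ from combination 1 in a single setting, while combinations 20 and 21 differ in two.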

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Dropka, N.; Ecklebe, S.; Holena, M. Real Time Predictions of VGF-GaAs Growth Dynamics by LSTM Neural Networks. Crystals 2021, 11, 138. https://doi.org/10.3390/cryst11020138
