1. Introduction

Nash equilibrium has emerged as the central solution concept in game theory, in large part due to the pioneering PhD thesis of John Nash proving that one always exists in finite games [1]. For two-player zero-sum games (i.e., competitive games where the winnings of one player equal the losses of the other player), the solution concept is particularly compelling, as it coincides with the concept of minimax/maximin strategies developed earlier by John von Neumann [2]. In that work, von Neumann proved that playing such a strategy guarantees a value of the game for the player in the worst case (in expectation) and that the value is the best worst-case guarantee out of all strategies. Essentially, this means that a player can guarantee winning (or at least tying) in the worst case if he/she follows such a strategy and alternates the roles of player 1 and 2. Therefore, for two-player zero-sum games, Nash equilibrium enjoys this “unbeatability” property. This has made it quite a compelling solution concept, and in fact agents based on approximating Nash equilibrium have been very successful and have even been able to defeat the strongest humans in the world in the popular large-scale game of two-player no-limit Texas hold ’em poker [3,4]. Nash equilibrium is additionally compelling for two-player zero-sum games because it can be computed in polynomial time [5].

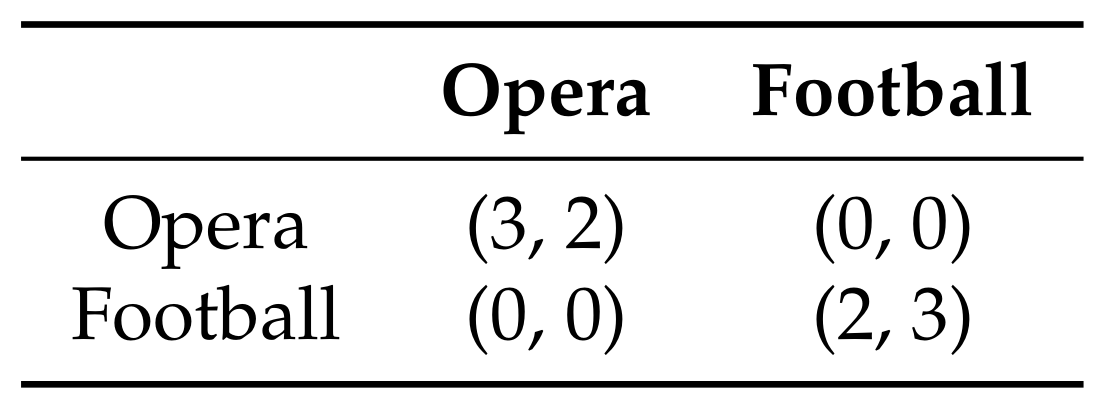

For non-zero-sum games and games with more than two players, while Nash equilibrium is still guaranteed to exist due to Nash’s result, none of these additional properties hold, as highlighted by the classic Battle of the Sexes Game depicted in Figure 1. This game has three Nash equilibrium strategy profiles: when both players select Opera (i.e., (Opera, Opera)), when both players select Football (Football, Football) and where both select their preferred option with probability

. Clearly, in this game the success of playing a Nash equilibrium depends heavily on the strategy chosen by the other player. For example, if the wife follows her strategy from the first Nash equilibrium and plays Opera, but the husband follows his strategy from the second Nash equilibrium and plays Football, the wife will receive the worst possible payoff of zero despite following a Nash equilibrium. While this example is just for a two-player game, the same phenomenon can occur in games with more than two players (though as described above, it cannot occur in two-player zero-sum games). Even three-player zero-sum games are not special, as any two-player general-sum game can be converted into a three-player zero-sum game by adding a “dummy” third player whose payoff equals the negative of the sum of the other two players’ payoffs. Furthermore, even if we wanted to compute a Nash equilibrium, doing so has been proven to be PPAD-complete, and it is widely conjectured that no efficient algorithm exists [6], though several heuristic approaches have been developed for strategic-form games (i.e., matrix games such as Battle of the Sexes) with varying degrees of success in different settings [7,8,9,10,11]. There have also been techniques developed that approximate the Nash equilibrium to a provably very small degree of approximation error in a three-player imperfect-information game [12,13].
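The pure-strategy equilibria and the dummy-player construction described above can be illustrated with a short sketch. The payoff numbers below are hypothetical stand-ins chosen only so that miscoordination yields zero for both players, as in the text; they are not taken from Figure 1, and the helper names `is_pure_nash` and `add_dummy_player` are introduced here purely for illustration.

```python
from itertools import product

ACTIONS = ["Opera", "Football"]

# Hypothetical payoffs (wife, husband); the values in Figure 1 may differ.
PAYOFF = {
    ("Opera", "Opera"): (3, 2),
    ("Opera", "Football"): (0, 0),
    ("Football", "Opera"): (0, 0),
    ("Football", "Football"): (2, 3),
}


def is_pure_nash(profile):
    """Return True if no single player gains by unilaterally deviating."""
    for player in range(2):
        for alternative in ACTIONS:
            deviated = list(profile)
            deviated[player] = alternative
            if PAYOFF[tuple(deviated)][player] > PAYOFF[profile][player]:
                return False
    return True


def add_dummy_player(payoff):
    """Append a third player whose payoff is minus the sum of the other two."""
    return {prof: (u1, u2, -(u1 + u2)) for prof, (u1, u2) in payoff.items()}


pure_equilibria = [p for p in product(ACTIONS, repeat=2) if is_pure_nash(p)]
zero_sum_3p = add_dummy_player(PAYOFF)

print(pure_equilibria)
print(all(sum(u) == 0 for u in zero_sum_3p.values()))
```

Under these assumed payoffs, the wife playing Opera from the first equilibrium against the husband playing Football from the second gives the profile ("Opera", "Football"), whose payoff to her is zero. The dummy player has no actions, so the strategic structure of the original game is unchanged even though the three payoffs now sum to zero.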

Thus, the question of how to create strong agents for non-zero-sum and multiplayer games, and in particular whether Nash equilibrium strategies are successful, remains open, and it is perhaps the most important open problem at the intersection of artificial intelligence and game theory. Of course, the most successful approach would not simply follow a solution concept, but would also attempt to learn and exploit the weaknesses of the opponents [14,15] (note that this would potentially be very helpful for two-player zero-sum games as well, as Nash equilibrium may not exploit the mistakes of suboptimal opponents as fully as successful exploitative agents, even in that setting). However, successfully performing opponent exploitation is very difficult, particularly in very large games where the number of game iterations and observations of the opponents’ play is small compared to the number of game states. Furthermore, such approaches are susceptible to being deceived and counterexploited by sophisticated opponents. It is clear that pure exploitation approaches are insufficient to perform well against a mix of opponents of unknown skill level and that a strong strategy rooted in game-theoretic foundations is required.

The strongest existing agents for large multiplayer games have been based on approaches that attempt to approximate Nash equilibrium strategies [16,17]. In particular, they apply the counterfactual regret minimization algorithm [18], which has also been used for two-player zero-sum games and has resulted in super-human level play for both limit Texas hold ’em [19] and no-limit Texas hold ’em [3,4]. These agents have performed well in the three-player limit Texas hold ’em division of the Annual Computer Poker Competition, which is held each year at the AI conferences AAAI or IJCAI [20]. Counterfactual regret minimization (CFR) is an iterative self-play algorithm that is proven to converge to a Nash equilibrium in the limit for two-player zero-sum games. It can be integrated with various forms of Monte Carlo sampling in order to improve performance both theoretically and in practice. For multiplayer and non-zero-sum games the algorithm can also be run, though the strategies computed are not guaranteed to form a Nash equilibrium. It was demonstrated that it does in fact converge to an ε-Nash equilibrium (a strategy profile in which no agent can gain more than ε by deviating) in the small game of three-player Kuhn poker, while it does not converge to equilibrium in Leduc hold ’em [16]. It was subsequently proven that it guarantees converging to a strategy that is not dominated and does not put any weight on iteratively weakly-dominated actions [17]. While for some small games this guarantee can be very useful (e.g., for two-player Kuhn poker a high fraction of the actions are iteratively-weakly-dominated), in many large games (such as full Texas hold ’em) only a very small fraction of actions are dominated, and the guarantee is not useful. Other approaches based on integrating the fictitious play algorithm with algorithms for finding optimal policies in Markov decision processes, such as policy iteration, have been demonstrated experimentally to converge to ε-equilibrium for very small ε in a no-limit Texas hold ’em poker tournament endgame [12,13]. It has been proven that if these algorithms converge, then the resulting strategy profile constitutes a Nash equilibrium (while CFR does not have such a guarantee); however, the algorithms are not proven to converge in general, despite the fact that they did for the game that was experimented on.
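To make the notions of iterative self-play and ε-Nash equilibrium concrete, the following is a minimal sketch of regret matching, the per-decision update that CFR builds on, applied to a two-player zero-sum matrix game. It is not CFR itself and not the implementation used by any of the cited agents: the choice of rock-paper-scissors, the function names, the non-uniform starting regrets and the iteration count are all assumptions made for illustration.

```python
def regret_matching(regrets):
    """Play each action in proportion to its positive cumulative regret."""
    positive = [max(r, 0.0) for r in regrets]
    total = sum(positive)
    n = len(regrets)
    return [p / total for p in positive] if total > 0 else [1.0 / n] * n


# Rock-paper-scissors payoffs for the row player; the column player gets the negative.
RPS = [[0, -1, 1], [1, 0, -1], [-1, 1, 0]]


def self_play(A, iterations):
    """Run regret matching for both players and return their average strategies."""
    n, m = len(A), len(A[0])
    reg_row = [1.0] + [0.0] * (n - 1)  # assumed non-uniform start so play is not trivial
    reg_col = [0.0] * m
    sum_row, sum_col = [0.0] * n, [0.0] * m
    for _ in range(iterations):
        x, y = regret_matching(reg_row), regret_matching(reg_col)
        # Expected utility of each pure action against the opponent's current mix.
        u_row = [sum(A[i][j] * y[j] for j in range(m)) for i in range(n)]
        u_col = [-sum(A[i][j] * x[i] for i in range(n)) for j in range(m)]
        v_row = sum(x[i] * u_row[i] for i in range(n))
        v_col = sum(y[j] * u_col[j] for j in range(m))
        for i in range(n):
            reg_row[i] += u_row[i] - v_row
            sum_row[i] += x[i]
        for j in range(m):
            reg_col[j] += u_col[j] - v_col
            sum_col[j] += y[j]
    return [s / iterations for s in sum_row], [s / iterations for s in sum_col]


def epsilon(A, x, y):
    """Largest amount either player can gain by deviating from (x, y)."""
    n, m = len(A), len(A[0])
    value = sum(A[i][j] * x[i] * y[j] for i in range(n) for j in range(m))
    best_row = max(sum(A[i][j] * y[j] for j in range(m)) for i in range(n))
    best_col = max(-sum(A[i][j] * x[i] for i in range(n)) for j in range(m))
    return max(best_row - value, best_col + value)


avg_row, avg_col = self_play(RPS, 10000)
eps = epsilon(RPS, avg_row, avg_col)
print(avg_row, avg_col, eps)
```

In the two-player zero-sum case, the average strategies form an ε-Nash equilibrium with ε shrinking as the number of iterations grows. With three or more players the same update can still be run and ε measured in the same way, but, as noted above, there is no guarantee that ε goes to zero.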

The empirical success of the three-player limit Texas hold ’em agents in the Annual Computer Poker Competition suggests that CFR-based approaches that are attempting to approximate a Nash equilibrium are promising for multiplayer games. However, the takeaway is not very clear. First, the algorithms are not guaranteed to converge to equilibrium for this game, and there is no guarantee on whether the strategies used by the agents constitute a Nash equilibrium or are even remotely close to one. Furthermore, there were only a small number of opposing agents submitted to the competition who may have a questionable skill level, so it is not clear whether the CFR-based approaches actually produce high-quality strategies or whether they just produced strategies that happened to outperform mediocre opponents and would have done very poorly against strong ones. While these CFR-based approaches are clearly the best so far and seem to be promising, they do not conclusively address the question of whether Nash equilibrium strategies can be successful in practice in interesting multiplayer games against realistic opponents.

In this paper, we create an agent based on an exact Nash equilibrium strategy for the game of three-player Kuhn poker. While this game is relatively small, and in particular quite small compared to three-player limit Texas hold ’em, it is far from trivial to analyze and has been used as a challenge problem at the Annual Computer Poker Competition for the past several years [20]. A benefit of experimenting on a small problem is that exact Nash equilibrium strategies can be computed analytically [21]. That paper computed an infinite family of Nash equilibrium strategies, though it did not perform experiments to see how they performed in practice against realistic opponents. The poker competition also did not publish any details of the agents that participated, so it is unclear what approaches were used by the successful agents. We ran experiments with our equilibrium agent against 10 agents that were created recently as part of a class project. These agents were created using a wide range of approaches, which included deep learning, opponent modeling, rule-based approaches, as well as game-theoretic approaches. We show that an approach based on using a natural Nash equilibrium strategy is able to outperform all of the agents from the class. This suggests that agents based on using Nash equilibrium strategies can in fact be successful in multiplayer games, despite the fact that they do not have a worst-case theoretical guarantee. Of course, since we experimented on just one specific game, there is no guarantee that this conclusion applies to other games, and more extensive experiments are needed to determine whether it generalizes.

2. Three-Player Kuhn Poker

Three-player Kuhn poker is a simplified form of limit poker that has been used as a testbed game in the AAAI Annual Computer Poker Competition for several years [20]. There is a single round of betting. Each player first antes a single chip and is dealt a card from a four-card deck that contains one Jack (J), one Queen (Q), one King (K) and one Ace (A). The first player has the option to bet a fixed amount of one additional chip (by contrast, in no-limit games, players can bet arbitrary amounts of chips) or to check (remain in the hand, but not bet an additional chip). When facing a bet, a player can call (i.e., match the bet) or fold (forfeit the hand). No additional bets or raises beyond the additional bet are allowed (while they are allowed in other common poker variants such as Texas hold ’em, both for the limit and no-limit variants). If all players but one have folded, then the player who has not folded wins the pot, which consists of all chips in the middle. If more than one player has not folded by the end, there is a showdown, in which the players reveal their private card and the player with the highest card wins the entire pot (which consists of the initial antes plus all additional bets and calls). The ace is the highest card, followed by the king, queen and jack. As one example of a play of the game, suppose the players are dealt queen, king and ace respectively, and Player 1 checks, then Player 2 checks, then Player 3 bets, then Player 1 folds, then Player 2 calls; then Player 3 would win a pot of five, for a profit of three from the amount with which he/she started the hand.
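The payoff rules above can be checked with a short sketch that scores a completed hand. The single-letter action encoding ("k" check, "b" bet, "c" call, "f" fold) and the function name `kuhn3_profits` are conventions introduced here, not notation from the paper, and the function assumes it is given a legal, complete betting sequence rather than validating one.

```python
RANK = {"J": 0, "Q": 1, "K": 2, "A": 3}


def kuhn3_profits(cards, history):
    """Net chips won or lost by each player at the end of a hand.

    cards   -- the three private cards in seat order, e.g. ["Q", "K", "A"]
    history -- actions in the order taken; players act in rotation 1, 2, 3, 1, 2
    """
    contributed = [1, 1, 1]  # every player antes one chip
    folded = [False, False, False]
    for turn, action in enumerate(history):
        player = turn % 3
        if action in ("b", "c"):  # a bet or a call costs one additional chip
            contributed[player] += 1
        elif action == "f":
            folded[player] = True
        # "k" (check) adds nothing to the pot
    pot = sum(contributed)
    remaining = [p for p in range(3) if not folded[p]]
    # A lone remaining player wins outright; otherwise the highest card wins.
    winner = max(remaining, key=lambda p: RANK[cards[p]])
    return [pot - contributed[p] if p == winner else -contributed[p]
            for p in range(3)]


# The example from the text: check, check, bet, fold, call with Q, K, A.
print(kuhn3_profits(["Q", "K", "A"], "kkbfc"))  # [-1, -2, 3]
```

The pot here is five chips (three antes, one bet and one call), of which Player 3 contributed two, which gives the profit of three stated in the text.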

Note that although three-player Kuhn poker is only a synthetic simplified form of poker and is not actually played competitively, it is still far from trivial to analyze and contains many of the interesting complexities of popular forms of poker such as Texas hold ’em. First, it is a game of imperfect information, as players are dealt a private card that the other agents do not have access to, which makes the game more complex than a game with perfect information that has the same number of states. Despite the small size of the game, it is not trivial to compute a Nash equilibrium analytically, though recently an infinite family of Nash equilibria has been computed [21]. The equilibrium strategies exhibit the phenomena of bluffing (i.e., sometimes betting with weak hands such as a jack or queen) and slow-playing (also known as trapping) (i.e., sometimes checking with strong hands such as a king or ace in order to induce a bet from a weaker hand). To see why, suppose an agent X played a simple strategy that only bet with an ace or sometimes a king. Then the other agents would only call the bet if they had an ace, since otherwise they would know they are beaten (since there is only one king in the deck, if they held a king, they would know that Player X held an ace). But now if the other agents are only calling with an ace, it is unprofitable for Player X to bet with a king, since he/she will lose an additional chip whenever another player holds an ace and will not get a call from a worse hand; it would be better to check and then potentially call with the hope that the other player is bluffing (or to fold if Player X thinks the other player is bluffing too infrequently). A better strategy may be to bet with an ace and to sometimes bet with a jack as a bluff, to put the other players in a challenging situation when holding a queen or king. However, Player X may also want to sometimes check with an ace so that he/she can still have some strong hands after checking, which makes the other players more wary of betting into him/her after a check.
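The claim that betting a king is unprofitable when opponents call only with an ace can be checked with a small enumeration. This is a deliberately simplified sketch and not an analysis from the paper: it assumes Player X acts first holding the king, that opponents call a bet only with an ace and fold otherwise, and that if Player X checks then everyone else checks too and the hand goes straight to showdown. The function name `ev_with_king` is introduced here for illustration.

```python
from fractions import Fraction
from itertools import permutations


def ev_with_king(bet):
    """Expected profit for Player X holding K under the stated assumptions."""
    # The two opponents hold two of the three remaining cards, in either order.
    deals = list(permutations(["J", "Q", "A"], 2))
    total = Fraction(0)
    for deal in deals:
        ace_out = "A" in deal
        if bet:
            # Called (and beaten) only by the ace: lose ante + bet.
            # Otherwise both opponents fold: win the two other antes.
            total += -2 if ace_out else 2
        else:
            # Assumed check-through: lose the ante to an ace, else win two antes.
            total += -1 if ace_out else 2
    return total / len(deals)


print(ev_with_king(bet=True))   # -2/3
print(ev_with_king(bet=False))  # 0
```

Under these assumptions an opponent holds the ace in four of the six deals, so betting costs one extra chip in exactly those cases and wins nothing extra in the others. A full analysis would also have to account for opponents betting after a check, which is where the calling and folding considerations in the text come in.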

An infinite family of Nash equilibria for this game has been computed and can be seen in the tables from a recent article by Szafron et al. [21]. The family of equilibria is based on several parameter values, which once selected determine the probabilities for the other portions of the strategies. One can see from the tables that randomization and including some probability of trapping and bluffing are essential in order to have a strong and unpredictable strategy. Thus, while this game may appear quite simple at first glance, the analysis is still very far from simple, and the game exhibits many of the complexities of far larger games that are played competitively by humans for large amounts of money.

3. Nash Equilibrium-Based Agent

One may wonder why it is worthwhile to create agents and experiment on three-player Kuhn poker, given that the game has been “solved,” as described in the preceding section. First, as described there are infinitely many Nash equilibria in this game (and furthermore, there may be others beyond those in the family computed in the prior work). Therefore, even if we wanted to create an agent that employed a Nash equilibrium “solution,” it would not be clear which one to pick, and the performance would depend heavily on the strategies selected by the other agents (who may not even be playing a Nash equilibrium at all). This is similar to the phenomenon described for the Battle of the Sexes Game in the Introduction, where even though the wife may be aware of all the equilibria, if she attends the Opera as part of the (O, O) equilibrium while the husband does Football as part of the (F, F) equilibrium, both players obtain very low payoff despite both following an equilibrium. A second reason is that, as also described in the Introduction, Nash equilibrium has no theoretical benefits in three-player games, and it is possible that a non-equilibrium strategy (particularly one that integrates opponent modeling and exploitation) would perform better, even if we expected the opponents may be following a Nash equilibrium strategy, but particularly if we expect them to be playing predictably and/or making mistakes.

Therefore, despite the fact that exact Nash equilibrium strategies have been computed for this game, it is still very unclear what a good approach is for creating a strong agent against a pool of unknown opponents.

For our agent, we have decided to use a Nash equilibrium strategy that has been singled out as being more robust than the others in prior work and that obtains the best worst-case payoff assuming that the other agents are following one of the strategies given by the computed infinite equilibrium family [21]. We depict this strategy in Table 1. This table assigns values for the 21 free parameters in the infinite family of Nash equilibrium strategies. To define these parameters,

,

and

denote the action probabilities for players

,

and

, respectively, when holding card

j and taking an aggressive action (Bet (B) or Call (C)) in situation k, where the betting situations are defined in Table 2. Prior work has actually singled out a range of strategies that receive the best worst-case payoff; above, we have described the lower bound of this space, and we also experiment using the strategy that falls at the upper bound (Table 3). We call the first Nash equilibrium agent NE1 and the second NE2.