Abstract

This paper analyzes the dynamic stability of moral codes in a two-population Trust game. Guided by a moral code, members of one population, the Trustors, are willing to punish members of the other population, the Trustees, who defect. Under replicator dynamics, adherence to the moral code oscillates unstably around an interior Nash Equilibrium (NE), but under smoothed best response dynamics we obtain convergence to a Quantal Response Equilibrium (QRE).

JEL classifications:

C72; C73

1. Introduction

In the lab, as in everyday life, we often observe trusting behavior even when it is unwarranted according to simple models of rational play. One possible explanation is that agents are willing and able to punish violations of trust. That explanation gains credence when the punishment is supported by a moral code and not just by arbitrary social preferences.

The purpose of the present paper is to explore that explanation by constructing and analyzing a simple evolutionary game model. In the context of a simple Trust game (aka sequential Prisoner’s Dilemma), we show how to (i) specify a moral code in an analytically tractable way, and (ii) analyze the stability of the equilibria under alternative dynamic adaptation processes. The approach originates in [1], but we extend it considerably by introducing and formalizing the idea of a moral code. Some of the ideas in the present paper are described (with attribution) in Chapter 13 of [2].

Loose verbal definitions of moral codes can be gleaned from writers ranging from Aristotle down to [3] and beyond. Here we take a moral code to be an understanding, shared within a cohesive group of humans, of how to live and work together. The shared understanding can be summarized in a set of rules, which can be explicit or implicit [4]. Typically group members share, via gossip, information on code compliance, helping them avoid exploitation and reducing (and sharing) the cost of punishing noncompliers [5].

To build analytical structures capturing aspects of this verbal description, we focus on a very specific but revealing strategic interaction, a simple Trust game adapted from [1]. In that context, we introduce a moral code that enables Trustors to reduce interactions with, and to share the cost of punishing, untrustworthy partners. Our approach is in some ways reminiscent of the trustworthiness-detection approach of [6,7] and in other ways reminiscent of assortative matching protocols based on observables (e.g., see [8,9]). In our case, sorting is imperfect (gossip is not completely reliable), so some abuse of trust remains and the cost of punishing the abusers is shared within the group.

Our point of departure, [1], assumes that vengeful players derive utility from punishing trust violators. These vengeful players can be thought of as altruists, since they bear a personal material cost to punish while contributing to social efficiency: the fear of punishment leads to more trustworthy behavior. Nevertheless, the model has a long-run equilibrium in which vengeful players survive and trusting and trustworthy behaviors are quite common. Other known ways to support substantial altruistic cooperation include repeated interaction (for a helpful survey, see [10]), genetic relatedness (for nice summaries, see [11,12]) and the presence of types that favor cooperation in games of incomplete information (e.g., see [13,14]). The current paper adds moral codes to the list.

Our model of a moral code was inspired by reading accounts of hunter-gatherer cultures, but it could be reinterpreted as a model of corporate culture. The papers [15,16] argue that the Trust game is fundamental to corporate culture, and that firms exist to overcome distrust via social learning. The paper [17] finds that teamwork (captured in an assortative matching protocol) can explain trusting behavior in a Trust game with only one population.

This single-population approach is quite common in the related literature. We simplify the analysis by working with a two-population game in which the two roles evolve independently. We have in mind learning and adaptation dynamics, but the genetic analogue to our independence assumption would be that behavior in the Trustor role and behavior in the Trustee role are controlled by genes on different chromosomes, so that there is no linkage. Absent such independence, stability properties may differ in games with one versus two populations (e.g., see [18]).

We begin in Section 2 with an exposition of the Trust game with and without the moral code. The moral code affects the behavior of Trustors and of Trustees and creates a new mixed Nash Equilibrium (NE). We also compute the expected payoffs needed to study the dynamics presented in the following sections. Section 3 reviews replicator dynamics ([19,20]) and then shows that the mixed NE is not evolutionarily stable under two-population replicator dynamics (see [21]). Section 4 reviews smoothed best response dynamics (aka logit dynamics, see [22,23]) and applies them to the current model; we obtain convergence to a quantal response equilibrium near the mixed NE. In both sections, the stability of each dynamic system is studied by analyzing the Jacobian matrix and the accompanying phase diagrams. Finally, Section 5 discusses implications of our results.

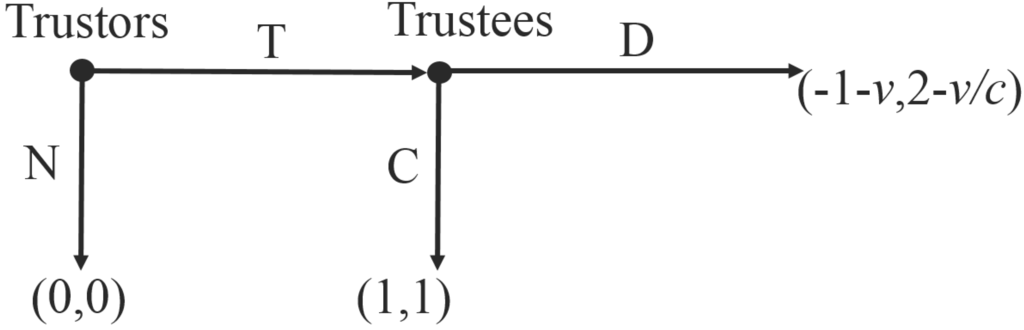

2. The Game

The basic Trust game can be thought of as a sequential, two-population version of the Prisoner’s Dilemma. Each Trustor (labelled 1 in what follows) can choose either not to play (N), ensuring zero payoff for herself and her Trustee partner (labelled 2), or else choose to trust (T). In that case, the Trustee partner decides whether to cooperate (C) or defect (D), with respective payoffs (1, 1) or (–1, 2). Of course, the Trustee’s best response is D; anticipating this, the Trustor’s best response is N, so cooperation unravels in this basic game.
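The unraveling argument is a two-step backward induction. The short Python sketch below encodes the payoffs just described (the constant and function names are illustrative, not taken from the paper) and recovers the subgame perfect outcome, in which the Trustee would defect and the Trustor therefore does not play.

```python
# Payoffs (Trustor, Trustee) of the basic Trust game described above.
PAYOFF_NOT_PLAY = (0, 0)
PAYOFF_AFTER_TRUST = {"C": (1, 1), "D": (-1, 2)}

def subgame_perfect_outcome():
    """Solve the basic Trust game by backward induction."""
    # Stage 2: after trust, the Trustee picks the reply with the higher own payoff.
    reply = max(PAYOFF_AFTER_TRUST, key=lambda a: PAYOFF_AFTER_TRUST[a][1])
    # Stage 1: the Trustor compares trusting (given that reply) with not playing.
    trust_value = PAYOFF_AFTER_TRUST[reply][0]
    move = "T" if trust_value > PAYOFF_NOT_PLAY[0] else "N"
    return move, reply

print(subgame_perfect_outcome())  # ('N', 'D')
```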

Now suppose that Trustors have the ability, at personal cost $ch$, to inflict a chosen amount $h$ of harm on Trustees, where $c > 0$ can be thought of as a punishment technology parameter, and that Trustors may have a vengeful disposition. Specifically, suppose that in an angry state triggered by Trustees choosing D, Trustors get a utility bonus of $v \ln h$ from inflicting harm $h$, while in other emotional states, Trustors’ utility is just the material payoff. The motivation (vengeance) parameter $v \ge 0$ captures the individual’s temperament and evolves only according to the material payoffs.

Thus, following a choice of D by a Trustee, the Trustor maximizes $-1 - ch + v \ln h$. The unique solution is $h^* = v/c$, and the Trustor’s material payoff in this case is $-1 - ch^* = -1 - v$. The material (and also utility) payoff for the Trustee then becomes $2 - h^* = 2 - v/c$, as in Figure 1. If $v > c$, then this payoff is less than 1 and choosing D is no longer a best response. As noted in [1], the threat of vengeance thus rationalizes Trustees’ cooperation and justifies Trustors’ initial trust, and so the first-order free rider problem is solved.
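As a numerical check on the closed-form solution, the sketch below maximizes the angry Trustor's utility over the harm level $h$. It assumes the angry-state utility takes the form $-1 - ch + v \ln h$ (material payoff, minus the linear cost $ch$, plus a logarithmic vengeance bonus); the function names and the values $v = 2$, $c = 0.5$ are illustrative. Because this utility is strictly concave in $h$ for $v > 0$, a simple ternary search finds the maximizer.

```python
import math

def angry_utility(h, v, c):
    """Assumed angry-state utility of a Trustor who inflicts harm h > 0."""
    return -1.0 - c * h + v * math.log(h)

def optimal_harm(v, c, hi=100.0, iters=200):
    """Ternary search for the maximizer of a strictly concave function on (0, hi]."""
    lo = 1e-12
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if angry_utility(m1, v, c) < angry_utility(m2, v, c):
            lo = m1  # the maximizer lies to the right of m1
        else:
            hi = m2  # the maximizer lies to the left of m2
    return (lo + hi) / 2

v, c = 2.0, 0.5                  # illustrative values with v > c
h_star = optimal_harm(v, c)
print(round(h_star, 6))          # 4.0, i.e. v / c
print(round(-1 - c * h_star, 6)) # Trustor's material payoff -1 - v = -3.0
print(round(2 - h_star, 6))      # Trustee's payoff 2 - v/c = -2.0
```

Here $v/c = 4 > 1$, so the Trustee's payoff from D falls below the payoff 1 from C, as stated above.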

Figure 1.

Trust Game in Extensive Form.

However, this happy conclusion is based on the implausible assumption that the Trustees can see that indeed $v > c$, or in other words that Trustors have a transparent and quite vengeful disposition. Further, it is in the interest of a non-vengeful ($v = 0$) Trustor to mimic the outward appearance of a vengeful type, but without incurring the cost of actually inflicting harm. Assuming opaque dispositions, we obtain the population game payoff matrix shown in Table 1.

Table 1.

Trustors’ (row) and Trustees’ (column) Material Payoffs.

| Trustors / Trustees | C [p] | D [1−p] |
|---|---|---|
| Vengeful [q] | 1, 1 | $-1-v$, $2-v/c$ |
| Non-vengeful [1−q] | 1, 1 | −1, 2 |

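The vengeful Trustor type receives the material payoff given in the first row.1 Facing a share $p$ of Cooperators and a share $1-p$ of Defectors, the expected (material) payoff for a vengeful Trustor is then $\pi_V = p + (1-p)(-1-v) = 2p - 1 - (1-p)v$. A non-vengeful type of Trustor, with payoffs given in the second row of Table 1, obtains an expected payoff of $\pi_N = p - (1-p) = 2p - 1$. The Trustor population mean payoff therefore is $\bar{\pi}_1 = q\pi_V + (1-q)\pi_N = 2p - 1 - q(1-p)v$. The payoff to the Trustees, when cooperative, is $\pi_C = 1$, and when defecting is $\pi_D = q(2 - v/c) + 2(1-q) = 2 - qv/c$. The Trustee population mean payoff thus is $\bar{\pi}_2 = p\pi_C + (1-p)\pi_D = p + (1-p)(2 - qv/c)$. The game has two pure strategy NE, $(p, q) = (0, 0)$ and $(1, 1)$, and a family of mixed NE such that $p = 1$ and $c/v \le q < 1$.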
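The expected payoffs and equilibrium conditions of the basic game can be tabulated directly. The sketch below assumes the Table 1 entries in which a vengeful Trustor matched with a Defector earns $-1 - v$ and the Defector earns $2 - v/c$; the function names and the values $v = 2$, $c = 1$ (so that $c/v = 0.5$) are illustrative. A state is checked as a NE by requiring that no strategy in use earns strictly less than its alternative.

```python
def trustor_payoffs(p, v):
    """Expected material payoffs of vengeful (V) and non-vengeful (N) Trustors."""
    pi_v = p * 1 + (1 - p) * (-1 - v)
    pi_n = p * 1 + (1 - p) * (-1)
    return pi_v, pi_n

def trustee_payoffs(q, v, c):
    """Expected payoffs of cooperating (C) and defecting (D) Trustees."""
    pi_c = 1.0
    pi_d = q * (2 - v / c) + (1 - q) * 2
    return pi_c, pi_d

def is_nash(p, q, v, c, tol=1e-12):
    """True if no strategy in use earns less than the alternative strategy."""
    pi_v, pi_n = trustor_payoffs(p, v)
    pi_c, pi_d = trustee_payoffs(q, v, c)
    ok_trustors = (q == 0 or pi_v >= pi_n - tol) and (q == 1 or pi_n >= pi_v - tol)
    ok_trustees = (p == 0 or pi_c >= pi_d - tol) and (p == 1 or pi_d >= pi_c - tol)
    return ok_trustors and ok_trustees

v, c = 2.0, 1.0
print(is_nash(0, 0, v, c))     # True: the subgame perfect NE
print(is_nash(1, 0.75, v, c))  # True: on the segment p = 1, q >= c/v
print(is_nash(1, 0.25, v, c))  # False: q < c/v, so Trustees prefer D
```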

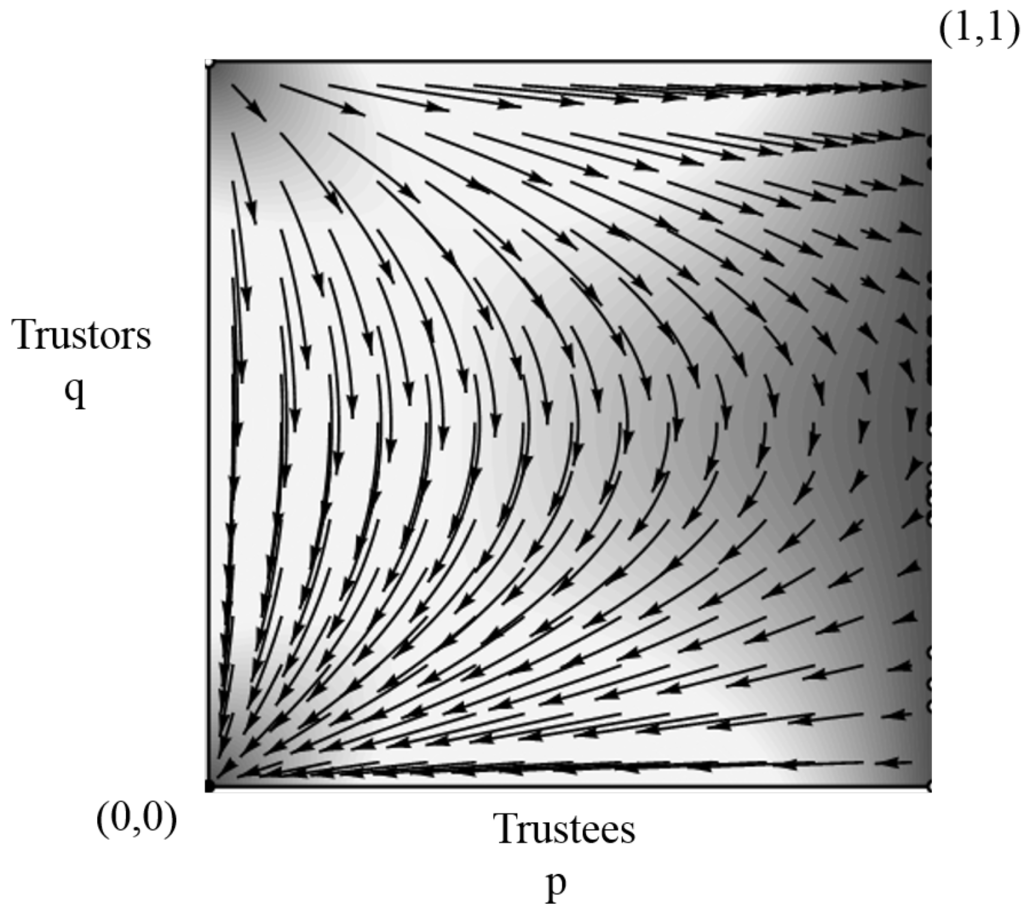

Evolutionary forces for Trustors are driven by the payoff differential between vengeful and non-vengeful types, $-(1-p)v$. As for Trustees, the payoff differential between cooperating and defecting is $qv/c - 1$. Figure 2 illustrates the phase diagram assuming  under replicator dynamics.2

Figure 2.

Replicator dynamics of the simple game ().

Clearly the subgame perfect pure NE $(p, q) = (0, 0)$ has a large basin of attraction. The NE segment consists of neutrally stable points, but in a long-run sense the entire segment is unstable and the ultimate destination is $(0, 0)$ from every initial condition. The idea is that small perturbations from $p = 1$ converge back to the segment at smaller values of $q$ because they entail costly punishment by vengeful Trustors. Thus $q$ tends to drift to lower values until it crosses the boundary $q = c/v$, below which the point $(1, q)$ is unstable (a source) rather than neutrally stable. Replicator dynamics then lead directly to $(0, 0)$. We do not formalize this intuitive argument here, because it would clash with the very similar (but not quite identical) formulation of trembles $e$ in the next model; instead we refer the reader to [26] for the formal analysis of a closely related model. The upshot is that in the present model, neither vengeance nor trust is evolutionarily viable.
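To get a feel for the basin of attraction of $(0, 0)$, one can integrate the replicator equations of the basic game, $\dot p = p(1-p)(qv/c - 1)$ and $\dot q = -q(1-q)(1-p)v$, from a grid of initial states. These equations follow from the payoff differentials above, assuming the Table 1 entries $-1 - v$ and $2 - v/c$. The sketch uses forward Euler with hypothetical values $v = 2$, $c = 1$; it only approximates the flow and omits the perturbations discussed in the text, so some trajectories settle on the NE segment instead of reaching $(0, 0)$.

```python
def replicator_rhs(p, q, v, c):
    """Replicator dynamics of the basic game (share p of C, share q of vengeful)."""
    dp = p * (1 - p) * (q * v / c - 1)  # Trustees: payoff of C minus payoff of D
    dq = -q * (1 - q) * (1 - p) * v     # Trustors: vengeful minus non-vengeful
    return dp, dq

def final_state(p, q, v=2.0, c=1.0, dt=0.02, t_end=60.0):
    """Forward-Euler integration from (p, q); returns the state at t_end."""
    for _ in range(int(t_end / dt)):
        dp, dq = replicator_rhs(p, q, v, c)
        p, q = p + dt * dp, q + dt * dq
    return p, q

def share_ending_near_origin(n=10, v=2.0, c=1.0):
    """Fraction of an n-by-n grid of interior starts whose cooperation share p dies out."""
    grid = [(i + 0.5) / n for i in range(n)]
    ends = [final_state(p0, q0, v, c) for p0 in grid for q0 in grid]
    return sum(1 for p, _ in ends if p < 0.5) / len(ends)

print(final_state(0.5, 0.2))    # p and q both close to 0
print(final_state(0.9, 0.95))   # p close to 1: a point on the NE segment
print(share_ending_near_origin())
```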

Now suppose that vengeance in our model is backed by a moral code which calls on each Trustor (a) to share equally the cost of punishing all Trustees who defect, and (b) to share information via gossip on which Trustees defect so that they can be avoided in the future.

One scenario on cost sharing is that a random person detects the defector and is called on to bear the entire cost of punishment, e.g., by destroying some of the defector’s personal property. Another scenario is that gossip immediately makes everyone aware of the transgression and everyone bears an equal share of the punishment cost, e.g., by shunning the culprit for a month. In a large population, either extreme scenario works, as do intermediate scenarios that involve larger cost shares for individuals who happen to be nearer the scene of the crime, so to speak. It is the expected cost that matters in our matrix game, and our assumption is that the expected cost is shared equally among all individuals who comply fully with the moral code.
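The claim that only the expected cost matters can be checked with a small simulation. The sketch below compares the two extreme scenarios for a single punishment event of cost $v$ among $n$ compliers: a randomly chosen complier bears all of $v$, or every complier pays $v/n$. Both give each complier an expected cost of $v/n$. The values of $n$, $v$, the seed and the number of trials are hypothetical.

```python
import random

def expected_cost_random_bearer(n, v, trials, rng):
    """Scenario 1: one randomly chosen complier bears the whole cost v."""
    total_for_complier_0 = 0.0
    for _ in range(trials):
        if rng.randrange(n) == 0:  # track complier 0's cost in each event
            total_for_complier_0 += v
    return total_for_complier_0 / trials

def expected_cost_equal_split(n, v):
    """Scenario 2: gossip spreads the news and every complier pays v / n."""
    return v / n

rng = random.Random(1)
n, v = 50, 2.0
print(expected_cost_random_bearer(n, v, 200_000, rng))  # close to 0.04
print(expected_cost_equal_split(n, v))                  # 0.04
```

Only the variance of an individual's cost differs between the scenarios, which is why intermediate arrangements also leave the matrix game unchanged.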

Clearly it is personally advantageous to free ride, i.e., to receive information as in (b) but not to share the costs of punishing third parties as in (a). We have in mind a cohesive group of Trustors who interact frequently, and who therefore can discipline free riders. As argued by [4,5] and numerous other authors, without such discipline a moral code will soon disappear. Thus (a) and (b) are a package adopted by some Trustors, whom we refer to as code compliers (K). The other Trustors, called non-compliers (N), avoid the costs of punishing third parties but have no access to gossip.

Payoffs are derived as follows. Trustors adopting strategy K gossip among themselves, but the information is imperfect, as captured in the error rate parameter $e$: K-Trustors mistakenly avoid Trustees playing C with probability $e$, and with the same probability $e$ they fail to avoid (and so encounter) Trustees playing D. The K-Trustors also share equally the cost $v$ of punishing the Defectors they encounter to the utility-maximizing degree $h^* = v/c$. This cost $v$ is incurred at rate  and spread over population share $q$ of compliers, so the pro-rata punishment cost is  for all compliers. Compliers also get the sucker payoff (−1) with probability $e$ when matched with Defectors, and with the same probability those Defectors receive the temptation payoff (2) less the total punishment ($h^* = v/c$). Compliers matched with Cooperators get the reward payoff (1) with probability $1 - e$, as do the Cooperators. Applying the pro-rata punishment cost, we get the payoffs shown in the top row of Table 2. The bottom row, for the fraction of non-compliers, is the same as in Table 1.
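The matching protocol for a code complier can be sketched as follows. The functions compute the expected payoffs implied by the verbal description: a complier facing cooperation share $p$ earns 1 with probability $p(1-e)$ and −1 with probability $(1-p)e$, while a Trustee matched with a complier earns $1 - e$ from C and $e(2 - v/c)$ from D. The pro-rata punishment cost is passed in as an argument (`pro_rata_cost`, a hypothetical name) rather than computed, and the sketch assumes it is charged to every complier whatever the current match; all parameter values are illustrative.

```python
def complier_payoff(p, e, pro_rata_cost):
    """Expected material payoff of a code-complying (K) Trustor.

    Gossip wrongly steers a complier away from a Cooperator with probability e,
    and fails to steer her away from a Defector with the same probability e.
    """
    reward = p * (1 - e) * 1.0      # reward payoff 1 from Cooperators actually met
    sucker = (1 - p) * e * (-1.0)   # sucker payoff -1 from Defectors actually met
    return reward + sucker - pro_rata_cost

def trustee_payoffs_vs_complier(e, v, c):
    """Expected payoffs of a Cooperator and a Defector matched with a complier."""
    cooperate = 1 - e               # reward 1, unless wrongly avoided
    defect = e * (2 - v / c)        # temptation 2 less punishment h* = v/c
    return cooperate, defect

print(complier_payoff(p=0.8, e=0.1, pro_rata_cost=0.05))  # approximately 0.65
print(trustee_payoffs_vs_complier(e=0.1, v=2.0, c=1.0))   # (0.9, 0.0)
```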

Table 2.

Payoffs Under the Moral Code.

| Trustors / Trustees | C [p] | D [1−p] |
|---|---|---|
| Compliers, K [q] | | |
| Non-compliers, N [1−q] | 1, 1 | −1, 2 |

The expected payoff for non-compliers (N) coincides with that of the non-vengeful Trustor in the previous model, $2p - 1$. Cost sharing boosts K’s expected payoff when D is relatively rare and K is relatively common. Further, the reduction of D encounters due to the adoption of a moral code helps increase compliers’ expected payoff. The payoff for a compliant Trustor is then  The presence of a moral code also changes the payoff for Trustees; the cooperative expected payoff is now  and the defecting expected payoff is

Besides the trivial NE , we also obtain an interior NE in this game at the intersection of the loci and , where and For example, let . Then the interior NE is with a high degree of trustworthiness among Trustees and a slight majority of Trustors complying with the moral code.

Is this equilibrium dynamically stable with a large basin of attraction? Does the answer depend on the assumed family of dynamics? We answer these questions in the following sections.

3. Replicator Dynamics

We begin our analysis with replicator dynamics. First developed by [19] to describe the biological evolution of phenotypes, these dynamics were later shown to describe certain sorts of imitation processes ([27,28]). Under replicator dynamics, the growth rate of each strategy’s share (e.g., $\dot p / p$) is equal to its payoff relative to the population average payoff. Recall that our game has two populations (Trustees and Trustors), whose behavior determines the payoffs. Writing $\pi_C$ and $\pi_D$ for the Trustees’ expected payoffs from C and D, the population average payoff for Trustees is $\bar{\pi}_2 = p\pi_C + (1-p)\pi_D$, so we can write the relative payoff for cooperation as $\pi_C - \bar{\pi}_2 = (1-p)(\pi_C - \pi_D)$. Similar algebra applies to Trustors, with $\pi_K$ and $\pi_N$ denoting the expected payoffs of compliers and non-compliers. Thus, for the two-population Trust game in Table 2, replicator dynamics are given by the following system of ordinary differential equations in the state variables $(p, q)$:

$$\dot p = p(1-p)(\pi_C - \pi_D), \qquad \dot q = q(1-q)(\pi_K - \pi_N).$$

In order to determine the local stability of an interior equilibrium under replicator dynamics, we form the Jacobian matrix which results in

Evaluating these expressions at and , we obtain The characteristic equation is with approximate roots (eigenvalues)
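The stability test in this section reduces to a 2 × 2 eigenvalue computation. The sketch below is a generic helper, not the computation used in the paper: it approximates the Jacobian of any planar vector field by central differences, solves the characteristic equation $\lambda^2 - \operatorname{tr}(J)\lambda + \det(J) = 0$, and classifies the rest point. The test field is a hypothetical linear system with eigenvalues $0.01 \pm i$, which, like the interior equilibrium under replicator dynamics, is a slowly expanding spiral.

```python
import cmath

def jacobian(f, x, y, h=1e-6):
    """Central-difference Jacobian of a planar vector field f(x, y) -> (dx, dy)."""
    fxp, fxm = f(x + h, y), f(x - h, y)
    fyp, fym = f(x, y + h), f(x, y - h)
    return [[(fxp[0] - fxm[0]) / (2 * h), (fyp[0] - fym[0]) / (2 * h)],
            [(fxp[1] - fxm[1]) / (2 * h), (fyp[1] - fym[1]) / (2 * h)]]

def eigenvalues(J):
    """Roots of the characteristic equation lambda^2 - tr*lambda + det = 0."""
    tr = J[0][0] + J[1][1]
    det = J[0][0] * J[1][1] - J[0][1] * J[1][0]
    disc = cmath.sqrt(tr * tr - 4 * det)
    return (tr + disc) / 2, (tr - disc) / 2

def classify(eigs, tol=1e-9):
    """Label a rest point by the signs of the real parts and presence of rotation."""
    re_max = max(lam.real for lam in eigs)
    kind = "spiral" if abs(eigs[0].imag) > tol else "node/saddle"
    if re_max > tol:
        return "unstable " + kind
    if re_max < -tol:
        return "stable " + kind
    return "non-hyperbolic"

def field(x, y, a=0.01, b=1.0):
    """Hypothetical linear test field with eigenvalues a +/- b*i."""
    return a * x - b * y, b * x + a * y

eigs = eigenvalues(jacobian(field, 0.0, 0.0))
print(eigs)            # approximately ((0.01+1j), (0.01-1j))
print(classify(eigs))  # unstable spiral
```

To apply it to the model, one would pass the replicator right-hand side for Table 2 and evaluate at the interior NE.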

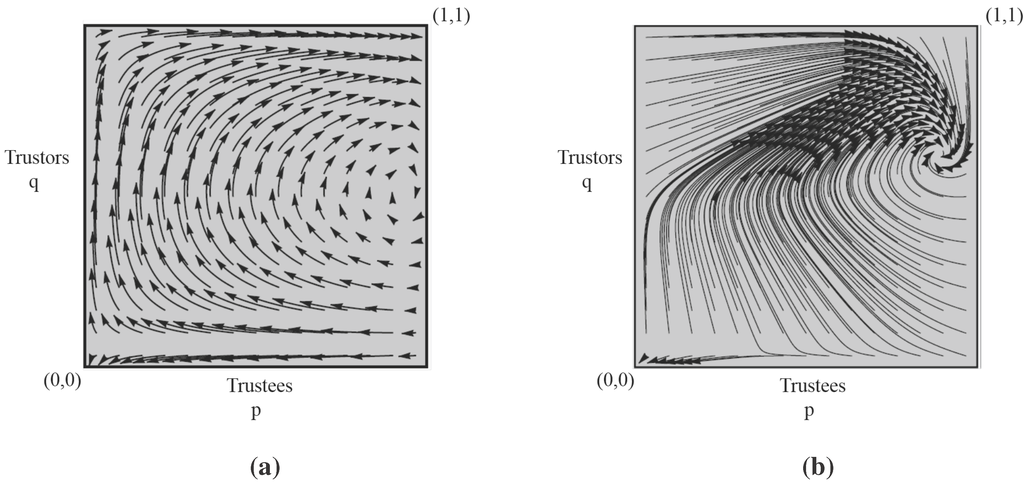

The implication is that, under replicator dynamics, the interior equilibrium is locally unstable and trajectories near it (depicted in Figure 3a) spiral slowly outward in a clockwise direction.3 Figure 3a also shows that the trivial corner NE is evolutionarily stable, but its basin of attraction is narrow, including only initial states where Trustors’ compliance rate q is very low.

Figure 3.

Evolutionary dynamics of the game under moral codes (, ). (a) Replicator; (b) Logit ()

4. Logit Dynamics

An alternative to replicator dynamics, often favored by economists (e.g., see [29]), is smoothed best response dynamics, or more specifically, logit dynamics (e.g., [22,23]). Logit dynamics have elements of both belief-based learning (since they are derived from a form of perturbation of the best-reply dynamics) and reinforcement learning (since players seek to maximize instantaneous payoffs at each revision opportunity). In particular, logit dynamics predict that players choose a best reply to the distribution of strategies existing in the population with a probability given by the logistic distribution.

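The ODE system under logit dynamics of the state variables (p, q) is defined as

$$\dot p = \frac{1}{1 + \exp[-\lambda(\pi_C - \pi_D)]} - p, \qquad \dot q = \frac{1}{1 + \exp[-\lambda(\pi_K - \pi_N)]} - q, \tag{6}$$

where $\pi_C - \pi_D$ and $\pi_K - \pi_N$ are the Trustees’ and Trustors’ payoff differentials under the moral code, and $\lambda \ge 0$ is the precision parameter. It is well known that the rest point of (6), called the quantal response equilibrium (QRE), exists but differs from the mixed NE except in the infinitely precise limit $\lambda \to \infty$ (e.g., [30]). For  and the other parameters used earlier, the QRE is , fairly close to the NE .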
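The logit dynamics and their rest point, the QRE, can be sketched generically. Each population share moves toward the logit choice probability $1/(1 + \exp(-\lambda\Delta))$, where $\Delta$ is the payoff advantage of the first strategy. The payoff-differential functions below are hypothetical stand-ins with the same sign pattern as a spiral (cooperation pays more when compliers are common, compliance pays more when cooperation is rare), chosen so that the QRE is $(1/2, 1/2)$ by symmetry; they are not the moral-code payoffs, which one would substitute to study the model itself.

```python
import math

def logit_choice(delta, lam):
    """Logit probability of the first strategy given its payoff advantage delta."""
    return 1.0 / (1.0 + math.exp(-lam * delta))

def logit_rhs(p, q, diff_trustee, diff_trustor, lam):
    """Logit dynamics: each share moves toward its logit choice probability."""
    dp = logit_choice(diff_trustee(p, q), lam) - p
    dq = logit_choice(diff_trustor(p, q), lam) - q
    return dp, dq

def approximate_qre(p, q, diff_trustee, diff_trustor, lam, dt=0.01, t_end=40.0):
    """Integrate the logit dynamics by forward Euler; a stable rest point is a QRE."""
    for _ in range(int(t_end / dt)):
        dp, dq = logit_rhs(p, q, diff_trustee, diff_trustor, lam)
        p, q = p + dt * dp, q + dt * dq
    return p, q

# Hypothetical payoff differentials (not the moral-code payoffs):
def diff_trustee(p, q):
    return 2 * q - 1  # cooperating pays more when q is high

def diff_trustor(p, q):
    return 1 - 2 * p  # complying pays more when p is low

p_hat, q_hat = approximate_qre(0.9, 0.2, diff_trustee, diff_trustor, lam=5.0)
print(round(p_hat, 4), round(q_hat, 4))  # 0.5 0.5
```

At $(1/2, 1/2)$ the Jacobian of this example has eigenvalues $-1 \pm 2.5i$, so trajectories spiral into the QRE, qualitatively like the convergence reported below.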

In order to determine the local stability of under logit dynamics, we form the Jacobian matrix for (6) and evaluate it at that equilibrium point using baseline parameters. We obtain The characteristic equation is with approximate roots (eigenvalues)

Thus the interior equilibrium is locally stable under logit dynamics. As depicted in Figure 3b, logit dynamics quickly take the state to a medium-sized neighborhood of the equilibrium, and then spiral slowly into . The trivial NE remains evolutionarily stable within its narrow basin of attraction.

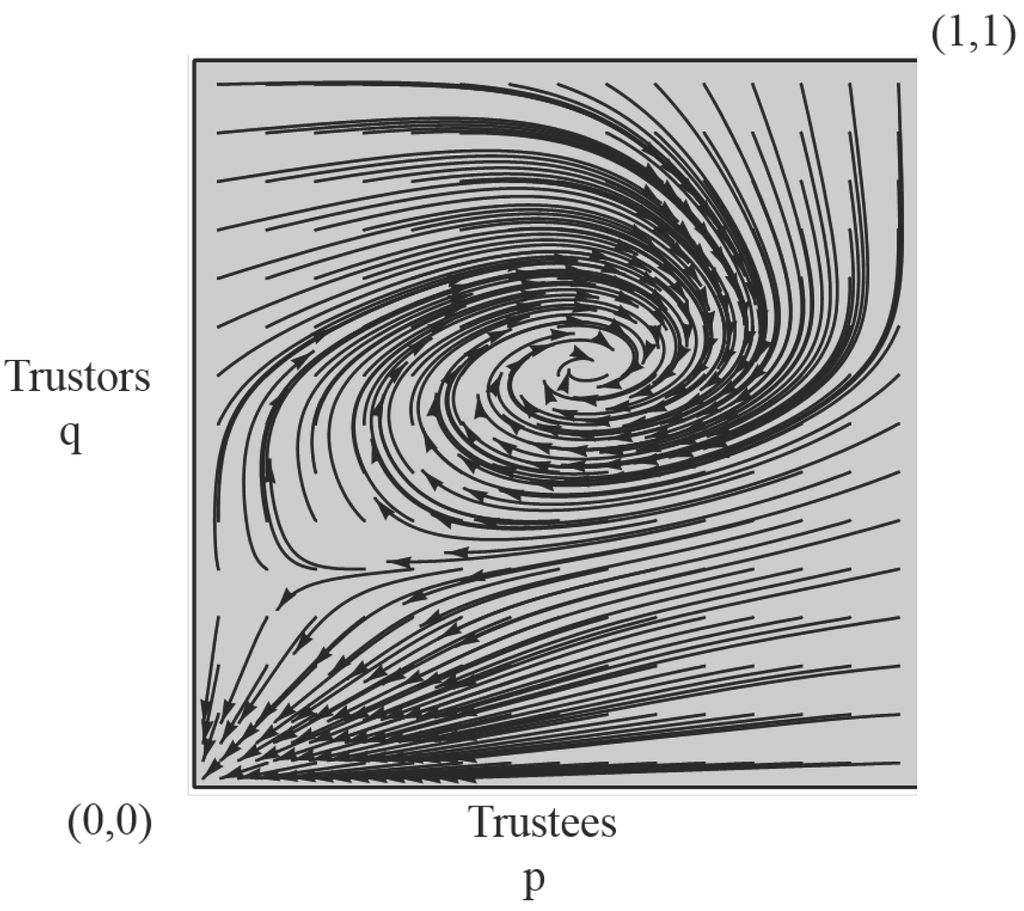

Stability under logit dynamics depends on parameter values, but is rather robust. In the baseline scenario when , stability holds over the range  of the probability e that a compliant Trustor meets a defecting Trustee. Indeed, when , the QRE becomes , which is not too far from the NE .4 The eigenvalues of the corresponding Jacobian are , which imply that the QRE remains evolutionarily stable. However, as shown in Figure 4, its basin of attraction is much smaller and, conversely, the trivial NE has a much larger basin. This makes intuitive sense, since very unreliable gossip prevents Trustors from properly identifying Defectors, and reduces assortativity.

The impact of the other parameters, c and v, on the stability of the interior steady state and its basin of attraction is minor. For example, assuming parameter values of and , the dynamics remain qualitatively unchanged (although, of course, the steady state values change). This suggests that gossip allows cooperation in populations with lower vengeance or higher punishment costs.

Figure 4.

Logit dynamics of the game under moral codes (, , ).

5. Conclusions

In this paper we illustrate how moral codes can help sustain trust and trustworthiness. The idea is that gossip permits the identification (with some error) of untrustworthy players, and that the cost of punishing such players is shared among norm-compliant Trustors.

The model assumes two large populations, whose behavior is analyzed using two alternative forms of evolutionary dynamics. Replicator dynamics lead to behavior that oscillates around the interior NE. An intuition behind replicator dynamics is that players imitate the strategy of other players in the same role only to the extent that the other’s strategy currently earns a higher payoff. Arguably, logit dynamics are more economically meaningful because they involve a smoothed best response to the current state, and thus incorporate a greater degree of rationality that is compatible with both belief-based and reinforcement learning.

Under reasonable parameters, we find that the steady state under logit dynamics is not far from the interior NE. The system converges to this interior equilibrium, and the size of the basin of attraction then mainly depends on the effectiveness of moral codes, especially the reliability of gossip. Less reliable gossip implies a smaller basin of attraction around the interior NE. Drawing on the results presented above, Chapter 13 of [2] will note that results are rather insensitive to parameters representing the cost of punishment c, and the morally sanctioned degree of punishment v.

One can imagine more technically advanced versions of the model. For example, the exogenous inaccuracy rate e for gossip could be endogenized, depending on the share of code compliers and on how they discipline free riders. Alternatively, it would be interesting to study whether moral codes are robust to random perturbations in the spirit of [31] or [32]. The effect of allowing an individual’s play in one role to be correlated with play in another role could be investigated using a deterministic one-population model with a higher-dimensional state space. One could also investigate the extent to which our model supports the ideas of [33,34], whose agents may possess a virtuous disposition, i.e., may be a type of individual with an underlying desire to act as morality requires, together with a particular belief about what morality requires.

The modeling approach presented in this paper surely can be extended to broad classes of games and moral codes. Which sorts of moral code will be most effective in promoting pro-social behavior? Which sorts of games are most amenable to moral policing? We hope that our simple examples will inspire other researchers to seek general answers to these questions.

Acknowledgments

For very helpful comments, we are indebted to Dann Arce and three anonymous referees of this journal.

Author Contributions

Both authors contributed equally to this article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Friedman, D.; Singh, N. Equilibrium vengeance. Games Econ. Behav. 2009, 66, 813–829.

- Friedman, D.; Sinervo, B. Evolutionary Games in Nature, Computers and Society; Oxford University Press: Oxford, UK, 2015.

- Hauser, M.D. Moral Minds; Harper-Collins: New York, NY, USA, 2006.

- Friedman, D. Morals and Markets: An Evolutionary Account; Palgrave Macmillan: New York, NY, USA, 2008.

- Cosmides, L.; Tooby, J. The psychological foundations of culture. In The Adapted Mind: Evolutionary Psychology and the Generation of Culture; Barkow, J.H., Cosmides, L., Tooby, J., Eds.; Oxford University Press: Oxford, UK, 1992; pp. 19–136.

- Ahn, T.K.; Esarey, J. A dynamic model of generalized social trust. J. Theor. Polit. 2008, 20, 157–180.

- Güth, W.; Kliemt, H.; Peleg, B. Co-evolution of preferences and information in simple games of trust. German Econ. Rev. 2000, 1, 83–110.

- Eshel, I.; Cavalli-Sforza, L.L. Assortment of encounters and evolution of cooperativeness. Proc. Natl. Acad. Sci. USA 1982, 79, 1331–1335.

- Wilson, D.S.; Dugatkin, L.A. Group selection and assortative interactions. Am. Nat. 1997, 149, 336–351.

- Sethi, R.; Somanathan, E. Understanding reciprocity. J. Econ. Behav. Organ. 2003, 50, 1–27.

- Bergstrom, T.C. Evolution of social behavior: Individual and group selection. J. Econ. Perspect. 2002, 16, 67–88.

- Robson, A.J. Evolution and human nature. J. Econ. Perspect. 2002, 16, 89–106.

- Bicchieri, C.; Duffy, J.; Tolle, G. Trust among strangers. Philos. Sci. 2004, 71, 1–34.

- Courtois, P.; Tazdaït, T. Learning to trust strangers: An evolutionary perspective. J. Evol. Econ. 2012, 22, 367–383.

- Kreps, D.M. Corporate culture and economic theory. In Perspectives on Positive Political Economy; Alt, J.E., Shepsle, K.A., Eds.; Cambridge University Press: Cambridge, UK, 1990; pp. 90–143.

- Kreps, D.M. Corporate culture and economic theory. In Firms, Organizations and Contracts; Buckley, P.J., Michie, J., Eds.; Oxford University Press: Oxford, UK, 1996; pp. 221–275.

- Arce, D.G. Taking corporate culture seriously: Group effects in the trust game. South. Econ. J. 2006, 73, 27–36.

- Gaunersdorfer, A.; Hofbauer, J. Fictitious play, Shapley polygons, and the replicator equation. Games Econ. Behav. 1995, 11, 279–303.

- Taylor, P.D.; Jonker, L.B. Evolutionarily stable strategies and game dynamics. Math. Biosci. 1978, 40, 145–156.

- Hofbauer, J.; Sigmund, K. The Theory of Evolution and Dynamical Systems; Cambridge University Press: Cambridge, UK, 1988.

- Weibull, J.W. Evolutionary Game Theory; MIT Press: Cambridge, MA, USA, 1997.

- Blume, L.E. The statistical mechanics of strategic interaction. Games Econ. Behav. 1993, 5, 387–424.

- Fudenberg, D.; Levine, D. The Theory of Learning in Games; MIT Press: Cambridge, MA, USA, 1998.

- Güth, W.; Yaari, M. Explaining reciprocal behavior in simple strategic games: An evolutionary approach. In Explaining Process and Change: Approaches to Evolutionary Economics; Witt, U., Ed.; University of Michigan Press: Ann Arbor, MI, USA, 1992; pp. 23–34.

- Sandholm, W.H.; Dokumaci, E.; Franchetti, F. Dynamo: Diagrams for Evolutionary Game Dynamics. Available online: http://www.ssc.wisc.edu/~whs/dynamo/ (accessed on 5 January 2015).

- Gale, J.; Binmore, K.; Samuelson, L. Learning to be imperfect: The ultimatum game. Games Econ. Behav. 1995, 8, 56–90.

- Bjornerstedt, J.; Weibull, J. Nash equilibrium and evolution by imitation. In The Rational Foundations of Economic Behaviour; Arrow, K., Colombatto, E., Eds.; Macmillan: London, UK, 1996.

- Schlag, K.H. Which one should I imitate? J. Math. Econ. 1999, 31, 493–522.

- Sandholm, W.H. Population Games and Evolutionary Dynamics; MIT Press: Cambridge, MA, USA, 2010.

- McKelvey, R.D.; Palfrey, T.R. Quantal response equilibria for normal form games. Games Econ. Behav. 1995, 10, 6–38.

- Kandori, M.; Mailath, G.J.; Rob, R. Learning, mutation, and long run equilibria in games. Econometrica 1993, 61, 29–56.

- Young, H.P. The evolution of conventions. Econometrica 1993, 61, 57–84.

- Brennan, G.; Hamlin, A. Democratic Devices and Desires; Cambridge University Press: Cambridge, UK, 2000.

- Brennan, G.; Pettit, P. The Economy of Esteem: An Essay on Civil and Political Society; Oxford University Press: Oxford, UK, 2004.

- 1 We follow here the standard approach known as indirect evolution [24], in which utility payoffs govern the behavior of each type, while material payoffs govern the evolution of the type shares. Therefore, the subsequent discussion of evolution focuses on material payoffs.

- 2 All our phase diagrams are made using Dynamo [25]. The system converges either to the subgame perfect NE $(p, q) = (0, 0)$ or to a point on the NE segment $\{p = 1,\ c/v \le q \le 1\}$.

- 3 It is well known (e.g., [21], Ch. 5) that interior NE are neutrally stable (or are saddle points) under replicator dynamics when there are no own-population effects. Here cost-sharing by code compliers creates a small own-population effect that breaks neutral stability in favor of dynamic instability.

- 4 This example is admittedly extreme. The availability of the alternative strategy N in Figure 1 imposes a non-negativity constraint on the payoffs of all Trustor strategies. For values of , the payoff under the candidate QRE is negative. We ignore this non-negativity constraint in our main examples for simplicity and because it does not bind there.

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).