Abstract

This paper examines a general model of contract in multi-period settings with both external and self-enforcement. In the model, players alternately engage in contract negotiation and take individual actions. A notion of contractual equilibrium, which combines a bargaining solution and individual incentive constraints, is proposed and analyzed. The modeling framework helps identify the relation between the manner in which players negotiate and the outcome of the long-term contractual relationship. In particular, the model shows the importance of accounting for the self-enforced component of contract in the negotiation process. Examples and guidance for applications are provided, along with existence results and a result on a monotone relation between “activeness of contracting” and contractual equilibrium values.

1. Introduction

Many economic relationships are contractual, in that the parties negotiate and agree on matters of mutual interest and intend for their agreement to be enforced. Enforcement comes from two sources: external agents who are not party to the contract at hand (external enforcement) and the contracting parties themselves (self-enforcement). Most contracts involve some externally enforced component and some self-enforced component. When parties negotiate an agreement, they are generally coordinating on both of these dimensions.

In this paper, I further a game-theoretic framework for analyzing contractual settings with both externally enforced and self-enforced components. I model a long-term contractual relationship in which, alternately over time, the parties engage in contracting (or recontracting) and they take individual productive actions. I discuss various alternatives in the analysis of contract negotiation and I define a notion of contractual equilibrium that combines a bargaining solution with incentive conditions on the individual actions. Existence results, examples, and notes for some applications are also provided.

The framework developed herein is designed to clarify the relation between the self-enforced and externally enforced components of contract, and to demonstrate the advantages of explicitly modeling the self-enforced component in applied research. The framework emphasizes the roles of history, social convention, and bargaining power in determining the outcome of contract negotiation and renegotiation. It also helps one to compare the implications of various theories of negotiation and the meaning of disagreement.

The main points of this paper are as follows. First, self-enforced and externally enforced components of contract may be conditioned on different sets of events, due to differences between the parties’ information and that of an external enforcer. Second, the selection of self-enforced and externally enforced components of contract entails the same basic ingredients, in particular the notion of a disagreement point and relative bargaining power. Third, these ingredients are more nuanced for self-enforcement than for external enforcement, because the implications for self-enforcement depend on how statements made during bargaining affect the way in which the players coordinate on individual actions later. Furthermore, because there may be multiple ways of coordinating future behavior even in the event that the parties do not reach an agreement, there are generally multiple disagreement points. The manner of coordination under disagreement has important implications for the agreements that the players will reach.

Fourth, the connection between statements made during bargaining and future coordination can be represented by a notion of activeness of contracting. Active contracting means that the players are able, through bargaining, to jointly control the manner in which they will coordinate in the future. Non-active contracting means that history and social convention are more powerful in determining the way in which the players will coordinate in the future. Fifth, under some technical assumptions, there is a negative relationship between activeness of contracting and the maximum attainable joint value (sum of payoffs).

1.1. MW Example

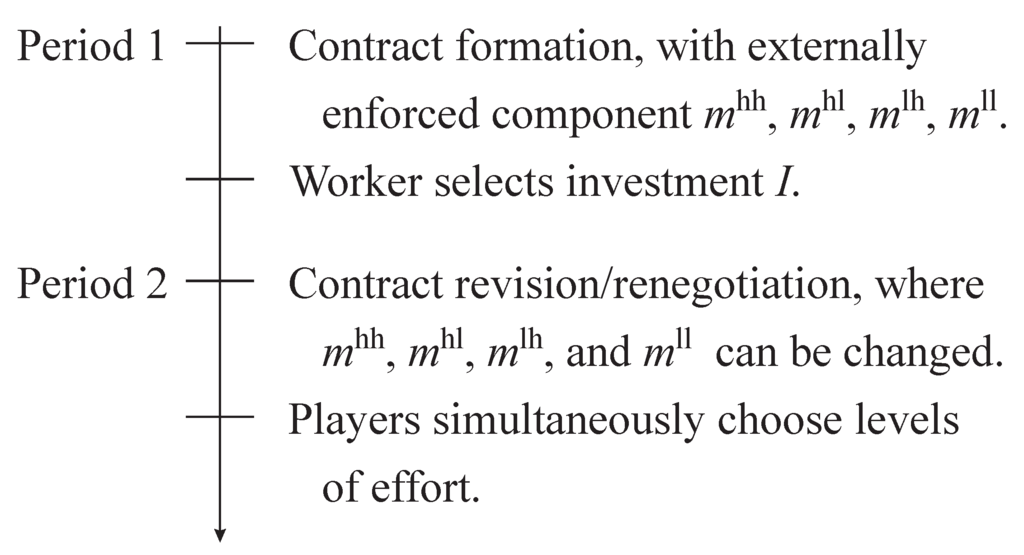

Here is an example that illustrates some of the issues in modeling both the self- and externally enforced components of contract. Suppose that a manager (player 1) and a worker (player 2) interact over two periods of time, as shown in Figure 1.

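At the beginning of the first period, the parties engage in contract negotiation. The contract includes vectors $m^{HH}$, $m^{HL}$, $m^{LH}$, and $m^{LL}$, which specify externally enforced monetary transfers between the manager and the worker contingent on actions they take in the second period (the first superscript refers to the manager’s effort, high or low, and the second to the worker’s). Each vector is of the form $m = (m_1, m_2)$, where $m_1$ is the monetary transfer to player 1 and $m_2$ is the transfer to player 2. Transfers can be negative and I assume that $m_1 + m_2 \le 0$, meaning that the parties cannot create money. I also assume that the transfers are “relatively balanced” in that $m_1 + m_2$ is bounded below, so that only a limited amount of money can be discarded.1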

Figure 1.

Time line for the MW example.


Later in the first period, the worker selects an investment level I. The investment yields an immediate benefit to the manager and an immediate cost to the worker. The manager observes I, but this investment cannot be verified by the external enforcer.

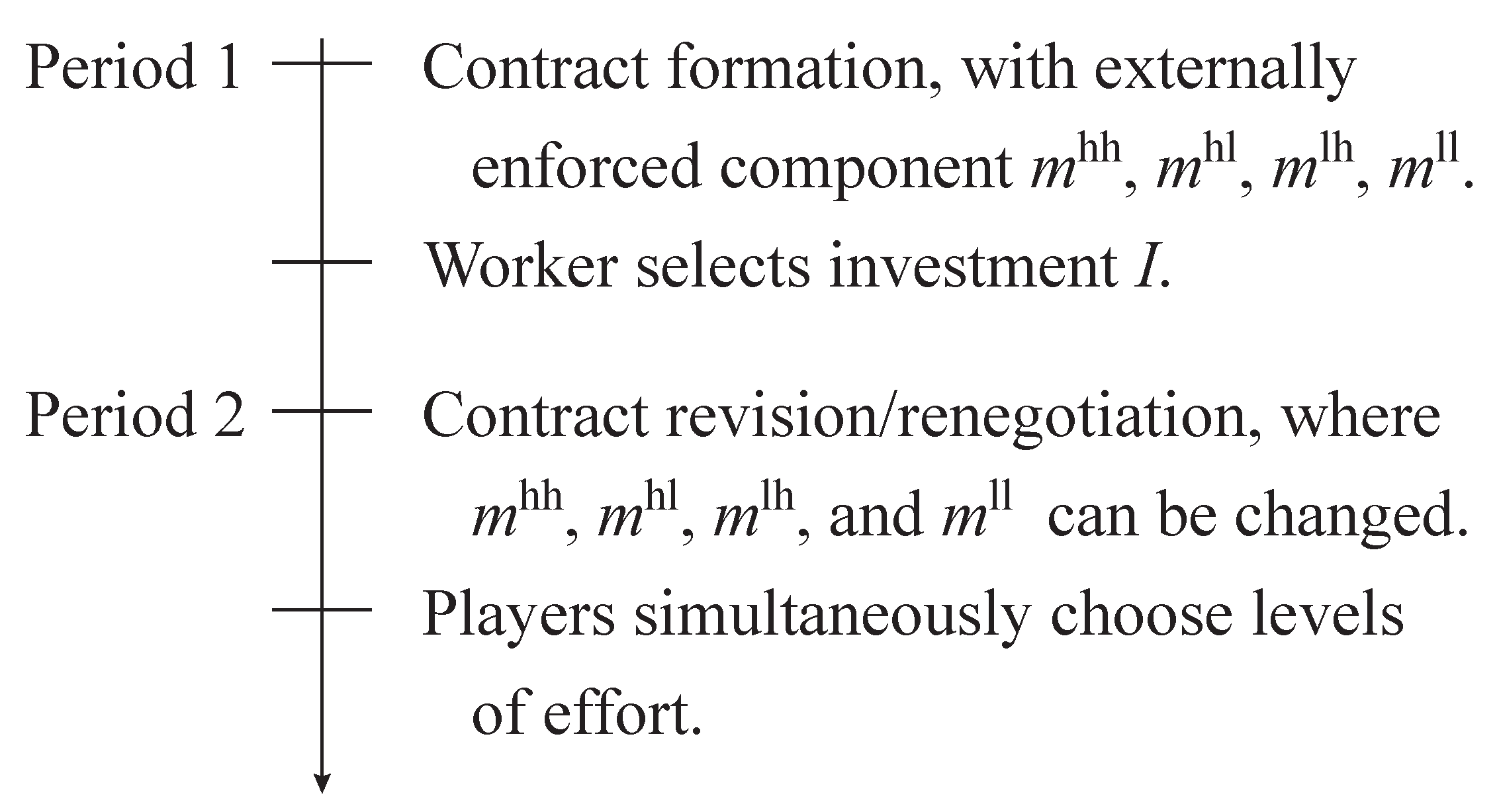

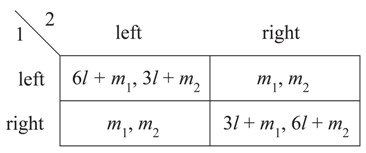

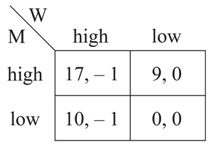

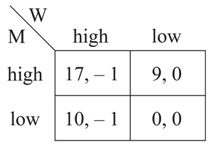

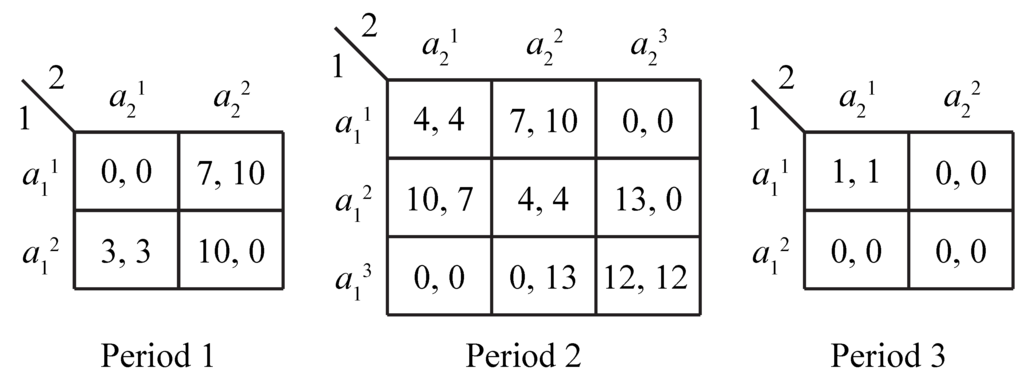

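At the beginning of the second period there is another round of contracting, at which point the specification of transfers may be changed. Then the parties simultaneously select individual effort levels, “high” (H) or “low” (L), and they receive monetary gains according to the following matrix, in which the manager chooses the row, the worker chooses the column, and $m^{ab} = (m_1^{ab}, m_2^{ab})$ is the transfer vector for the cell in which the manager chooses $a$ and the worker chooses $b$:

|  | Worker: H | Worker: L |
|---|---|---|
| Manager: H | $17 + m_1^{HH}$, $m_2^{HH} - 1$ | $9 + m_1^{HL}$, $m_2^{HL}$ |
| Manager: L | $10 + m_1^{LH}$, $m_2^{LH} - 1$ | $m_1^{LL}$, $m_2^{LL}$ |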

The table reflects the fact that the parties’ efforts generate revenue, which is 18 if both exert high effort, 10 if exactly one of them exerts high effort, and zero otherwise. Each party’s cost of high effort is 1. The manager obtains the revenue, minus his effort cost, plus the transfer (which can be negative). The worker receives the monetary transfer minus his effort cost. That there is a different vector m for each cell of the table means that the external enforcer can verify the second-period effort choices of both players.

Assume that each player’s payoff for the entire relationship is the sum of first- and second-period monetary gains. Thus, an efficient specification of productive actions is one that maximizes the players’ joint value (the sum of their payoffs). Clearly, efficiency requires the worker to choose, in the first period, the investment level that maximizes the manager’s benefit minus the worker’s cost, and requires the players to select (H, H), high effort by both, in the second period, which yields the maximal second-period joint value of 16. Note, however, that the parties would not have the individual incentives to select (H, H) in the second period unless $17 + m_1^{HH} \ge 10 + m_1^{LH}$ and $m_2^{HH} - 1 \ge m_2^{HL}$; that is, (H, H) must be a Nash equilibrium of the matrix shown above. Furthermore, the worker has the strict incentive to choose I = 0 if the first period is viewed in isolation. Whether efficiency, or even a positive investment, can be achieved depends on the details of the contracting process.
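The induced second-period game and the incentive conditions for (H, H) can be checked mechanically. The Python sketch below is illustrative only: the function names, the dictionary encoding of transfers keyed by (manager effort, worker effort), and the sample transfers are hypothetical choices rather than notation from the paper. It builds the payoff matrix from the revenue and cost figures above, confirms that (H, H) maximizes joint value at 16, and tests the two no-deviation conditions for (H, H).

```python
from itertools import product

EFFORTS = ("H", "L")  # H = high effort, L = low effort
EFFORT_COST = 1       # each party's cost of high effort


def revenue(e1: str, e2: str) -> int:
    """Revenue: 18 if both exert high effort, 10 if exactly one does, else 0."""
    return {2: 18, 1: 10, 0: 0}[(e1 == "H") + (e2 == "H")]


def induced_payoffs(transfers):
    """Map each cell (manager effort, worker effort) to (u1, u2).

    transfers[(e1, e2)] = (m1, m2) is the externally enforced transfer
    vector for that cell (a hypothetical encoding of m^{e1 e2}).
    """
    game = {}
    for e1, e2 in product(EFFORTS, EFFORTS):
        m1, m2 = transfers[(e1, e2)]
        u1 = revenue(e1, e2) - EFFORT_COST * (e1 == "H") + m1
        u2 = m2 - EFFORT_COST * (e2 == "H")
        game[(e1, e2)] = (u1, u2)
    return game


def pure_nash(game):
    """Cells at which neither player gains from a unilateral deviation."""
    flip = {"H": "L", "L": "H"}
    return [
        (e1, e2)
        for (e1, e2), (u1, u2) in game.items()
        if u1 >= game[(flip[e1], e2)][0] and u2 >= game[(e1, flip[e2])][1]
    ]


def hh_is_nash(transfers) -> bool:
    """The two no-deviation conditions under which (H, H) is a Nash equilibrium."""
    m1_hh, m2_hh = transfers[("H", "H")]
    manager_ok = 17 + m1_hh >= 10 + transfers[("L", "H")][0]
    worker_ok = m2_hh - 1 >= transfers[("H", "L")][1]
    return manager_ok and worker_ok


# With no transfers at all, joint value peaks at (H, H), but the worker shirks.
no_transfers = {cell: (0, 0) for cell in product(EFFORTS, EFFORTS)}
game = induced_payoffs(no_transfers)
best = max(game, key=lambda cell: sum(game[cell]))
print(best, sum(game[best]))   # ('H', 'H') 16
print(pure_nash(game))         # [('H', 'L')]

# A hypothetical balanced bonus of 2 to the worker in cell HH restores incentives.
bonus = {**no_transfers, ("H", "H"): (-2, 2)}
print(pure_nash(induced_payoffs(bonus)), hh_is_nash(bonus))  # [('H', 'H')] True
```

The direct inequality check in `hh_is_nash` and the brute-force search in `pure_nash` agree here, which is a useful sanity check when experimenting with other transfer specifications.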

Before considering contractual alternatives, consider the parties’ incentives regarding their effort choices in the second period. Any of the four effort profiles can be made a Nash equilibrium in dominant strategies with the appropriate selection of externally enforced transfers. This is true even if one restricts attention to balanced transfers (where no money is discarded). For example, to make “low” a dominant action for both players, one could specify the following transfers: , , and . This is an example of forcing transfers, because the players are given the incentive to play a specific action profile regardless of what happened earlier in the game.
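A minimal sketch for checking whether a given set of transfers forces (L, L) in strictly dominant strategies. The transfer values below are a hypothetical balanced example chosen for illustration (every cell satisfies $m_1 + m_2 = 0$); they are one workable choice, not necessarily the specific transfers the example has in mind. The helper names are likewise hypothetical.

```python
EFFORTS = ("H", "L")  # H = high effort, L = low effort


def payoffs(cell, transfers):
    """Second-period payoffs (manager, worker) for cell = (e1, e2)."""
    e1, e2 = cell
    revenue = {2: 18, 1: 10, 0: 0}[(e1 == "H") + (e2 == "H")]
    m1, m2 = transfers[cell]
    return revenue - (e1 == "H") + m1, m2 - (e2 == "H")


def strictly_dominant(player, action, transfers):
    """True if `action` beats the other effort level for `player`
    (0 = manager, 1 = worker) against every action of the opponent."""
    other = "L" if action == "H" else "H"
    for opp in EFFORTS:
        own = (action, opp) if player == 0 else (opp, action)
        dev = (other, opp) if player == 0 else (opp, other)
        if payoffs(own, transfers)[player] <= payoffs(dev, transfers)[player]:
            return False
    return True


# Hypothetical balanced forcing transfers: m1 + m2 = 0 in every cell.
FORCE_LOW = {("H", "H"): (-8, 8), ("H", "L"): (-10, 10),
             ("L", "H"): (0, 0), ("L", "L"): (0, 0)}

assert all(m1 + m2 == 0 for m1, m2 in FORCE_LOW.values())
print(strictly_dominant(0, "L", FORCE_LOW),   # manager: True
      strictly_dominant(1, "L", FORCE_LOW),   # worker: True
      payoffs(("L", "L"), FORCE_LOW))         # (0, 0)
```

Because (L, L) yields (0, 0) under these transfers, they are also compatible with the zero second-period payoffs that the first two contractual alternatives below assume.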

I next sketch three contractual alternatives. While these are presented informally and do not cover the full range of possibilities, they illustrate the contractual components and issues that are formally addressed in this paper.

- Alternative 1: The players make the following agreement at the beginning of the first period. They choose a specification of transfers that satisfies $m^{LL} = (0, 0)$ and that forces (L, L) to be played in the second period. With these transfers, the players would each obtain zero in the second period. The players also agree that if the worker makes the efficient investment then, at the beginning of period 2, they will recontract to specify transfers that force (H, H) to be played and $m^{HH} = (-17, 17)$. For any other investment I, the players expect not to change the specification of transfers. If this contractual agreement holds, then it is rational for the worker to select the efficient investment in the first period. If he makes this choice, he bears the investment cost in the first period and, anticipating recontracting to force (H, H), he gets 16 in the second period. If he chooses any other investment level in the first period, the worker obtains no more than zero. An efficient outcome results.

- Alternative 2: In first-period negotiation, the players choose a specification of transfers that forces (L, L) with $m^{LL} = (0, 0)$, as in Alternative 1. In the second period, the players renegotiate to pick transfers that force (H, H) with $m^{HH} = (-9, 9)$, and they do so regardless of the worker’s first-period investment level. In other words, the outcome of second-period renegotiation is the ex post efficient point in which the players obtain equal shares of the bargaining surplus relative to their initial contract (each gets 8). Anticipating that his second-period gains do not depend on his investment choice, the worker optimally selects I = 0.

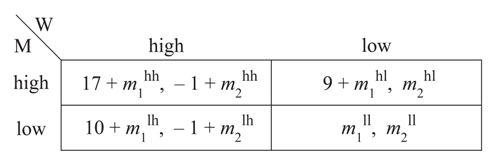

- Alternative 3: The players’ initial contract specifies , , and , which yields the following matrix of second-period payoffs:

Note that, for this matrix, , , and are the Nash equilibria, and these are ex post inefficient.2 The players agree that, in the event that they fail to renegotiate in the second period, the equilibrium on which they coordinate will be conditioned on the worker’s investment choice. Specifically, if the worker chose then they coordinate on equilibrium , whereas if then they coordinate on equilibrium . That is, the disagreement point for second-period negotiation depends on the worker’s action in the first period. Assume that the players do renegotiate in the second period so that the efficient profile is achieved. The joint surplus of renegotiation is . Suppose that player 1 has all of the bargaining power and thus negotiates a transfer that gives him the entire surplus. The worker therefore gets 9 if is the disagreement point, whereas he gets 0 if is the disagreement point. Clearly, with this contractual arrangement, the worker optimally selects in the first period.

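The second-period renegotiation in the three alternatives can be summarized as a split of the surplus above a disagreement point. The sketch below assumes, for illustration only, that renegotiation always reaches (H, H), with joint value 16, and that the surplus is divided in fixed proportions given by a hypothetical bargaining weight; this proportional rule stands in for the more general bargaining solutions developed later in the paper. It also recovers the transfer $m^{HH}$ that delivers a target payoff when (H, H) is played.

```python
EFFICIENT_JOINT_VALUE = 16  # value of (H, H): revenue 18 minus two effort costs


def renegotiate(d, weight1):
    """Proportional split of the surplus above disagreement point d.

    weight1 is a hypothetical bargaining weight in [0, 1]: player 1
    (manager) gets that share of the surplus, player 2 (worker) the rest.
    """
    surplus = EFFICIENT_JOINT_VALUE - d[0] - d[1]
    return d[0] + weight1 * surplus, d[1] + (1 - weight1) * surplus


def hh_transfer_for(y):
    """Transfer m^HH giving payoff y at (H, H): u1 = 17 + m1, u2 = m2 - 1."""
    return y[0] - 17, y[1] + 1


alt1 = renegotiate((0, 0), 0)     # Alternative 1: worker gets all surplus
alt2 = renegotiate((0, 0), 0.5)   # Alternative 2: equal shares
print(alt1, hh_transfer_for(alt1))  # (0, 16) (-17, 17)
print(alt2, hh_transfer_for(alt2))  # (8.0, 8.0) (-9.0, 9.0)

# Alternative 3: the manager has all bargaining power, so the worker keeps
# exactly his disagreement payoff (here a hypothetical point with d2 = 9).
print(renegotiate((3, 9), 1)[1])    # 9
```

The last line makes the mechanism of Alternative 3 explicit: when player 1 captures the whole surplus, the worker's renegotiated payoff is pinned down by the disagreement point, which is why conditioning the disagreement point on the investment choice creates investment incentives.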

External enforcement is represented by the way in which the contract directly affects the players’ payoffs (through vectors $m^{HH}$, $m^{HL}$, $m^{LH}$, and $m^{LL}$ in the example). Self-enforcement relates to the way in which the players coordinate on their individual actions, including the effort levels that they will choose in the second period. Self-enforcement requires that these effort levels constitute a Nash equilibrium of the “induced game” that the externally enforced transfers create.

Obviously, the self- and externally enforced components of contract interact, because, at minimum, the externally enforced transfers influence which second-period action profiles are Nash equilibria. In Alternatives 1 and 2, the specification of transfers forces a unique action profile in the second period. In Alternative 3, the initial specification of transfers creates a situation in which there are three Nash equilibria. The self-enforced component of contract specifies how the players will select among these equilibria.

Whether a given contractual alternative is viable depends on what is assumed about second-period contracting. Alternative 1 is viable only if renegotiation accommodates an ex post inefficient outcome conditional on the worker failing to invest. In other words, Alternative 1 relies on fairly inactive contracting, where the players are sometimes unable to maximize their joint value. Alternative 1 also assumes that, if the worker invests efficiently, then the parties will bargain in a way that gives the entire renegotiation surplus to the worker.

Alternative 2 presumes that ex post efficiency is always reached in the renegotiation process, so there is active contracting here. In Alternative 3, the second-period disagreement point is made a function of first-period play, via the self-enforced component of contract. Note that this manner of conditioning could not be achieved through the externally enforced part, because the external enforcer does not observe the worker’s investment. Alternative 3 has active contracting in the sense that the players always renegotiate to get the highest joint value in the second period, but history still plays a role in determining the self-enforced component of the disagreement point.

The example shows that, to determine what the players can achieve in a contractual relationship, it is important to model the negotiation process, to differentiate between self-enforced and externally enforced components of contract, and to posit a level of contractual activeness. The modeling framework developed in this paper helps to sort out these elements.

1.2. Relation to the Literature

By differentiating between the two components of contract, this paper furthers the research agenda that seeks to (i) discover how the technological details of contractual settings influence outcomes; (ii) demonstrate the importance of carefully modeling these details; and (iii) provide a flexible framework that facilitates the analysis of various applications. By “technological details,” I mean the nature of productive actions, the actions available to external enforcers, the manner in which agents communicate and negotiate with one another, and the exact timing of these various elements in a given contractual relationship. In other papers, my coauthors and I focus on the nature of productive actions [1,2,3], the mechanics of evidence production [4,5], and contract writing and renegotiation costs [6,7]. These papers and the present one show that the technological details can matter significantly.

This paper builds on the large and varied contract-theory literature. In many of the literature’s standard models (such as the basic principal-agent problem with moral hazard), the self-enforced aspects of contracting are trivial. However, there are also quite a few studies of contractual relations in which self-enforcement plays a more prominent role. Finitely repeated games constitute an abstract benchmark setting in which there is no external enforcement. For this setting, Benoit and Krishna (1993) [8] provide an analysis of renegotiation. The basic idea is that, at the beginning of the repeated game, the players agree on a subgame-perfect Nash equilibrium for the entire game, but they jointly reevaluate the selection in each subgame. Benoit and Krishna assume that, in each period, the parties select an equilibrium on the Pareto frontier of the set of feasible equilibria, where feasibility incorporates the implications of negotiation in future periods.

Many papers look at the interaction of self-enforced and externally enforced components of contract in applied settings. Prominent entries include Telser (1980) [9], Bull (1987) [10], MacLeod and Malcomson (1989) [11], Schmidt and Schnitzer (1995) [12], Roth (1996) [13], Bernheim and Whinston (1998) [14], Pearce and Stacchetti (1998) [15], Ramey and Watson (2001, 2002) [16,17], Baker, Gibbons, and Murphy (2002) [18], and Levin (2003) [19]. In each of these papers, a contract specifies an externally enforced element that affects the parties’ payoffs in each stage of their game. The parties interact over time and may sustain cooperative behavior by playing repeated-game-style equilibria. Some of these papers address the issue of negotiation (and renegotiation) over the self-enforced component of contract; this is typically done with assumptions on equilibrium selection, such as Pareto perfection, rather than by explicitly modeling how contracts are formed. Of these papers, the most relevant to the work reported here is Bernheim and Whinston (1998) [14]. These authors show that, even when external enforcement can be structured to control individual actions, parties may prefer relying on self-enforcement because it can generally be conditioned on more of the history than can externally enforced elements. This is exactly the feature exhibited in the comparison of Alternatives 2 and 3 in the example discussed above.

The distinction of the modeling exercise herein, relative to the existing literature, is the depth of modeling about how parties determine the self-enforced aspects of contract, as well as the range of assumptions accommodated by the theory. Here, notions of bargaining power and disagreement points are addressed in the context of self-enforcement. The detailed examination of negotiation yields a foundation for “Pareto perfection” as well as the concepts used in some of my other work (Ramey and Watson 2002 [17], Klimenko, Ramey, and Watson 2008 [20], Watson 2005, 2007 [2,3]). Furthermore, it helps one understand the implications of various assumptions about the negotiation process, which offer a guide for future applied work.

Part of the framework developed here was previously sketched in Watson (2002) [17], which contains some of the basic definitions and concepts without the formalities. My analysis of negotiation over self-enforced elements of contract draws heavily from—and extends in a straightforward way—the literature on cheap talk, most notably Farrell (1987) [21], Rabin (1994) [22], Arvan, Cabral, and Santos (1999) [23], and Santos (2000) [24].

The present paper is a precursor to Miller and Watson (2013) [25], which develops a notion of contractual equilibrium for infinitely repeated games without external enforcement. The two papers are complementary because they focus on different settings and they treat various elements at different levels. Herein, I concentrate on environments with finite horizons, where backward induction can be used in a straightforward way to define and construct contractual equilibria. In contrast, [25] examines settings with infinite horizons, where a distinct set of conceptual issues arises and the analysis requires a recursive structure. The present paper analyzes a rich class of settings with both self- and external enforcement, whereas [25] looks at a stationary environment with no external enforcement. The present paper allows for a wide range of bargaining theories and explores the notion of activeness of contracting. Miller and Watson (2013) [25] focuses on a particular notion of disagreement and examines only bargaining theories that predict efficient outcomes. Miller and Watson (2013) [25] contains broader results on the relation between non-cooperative models of bargaining and cooperative solution concepts used in hybrid models.

1.3. Outline of the Paper

The following section begins the theoretical exercise by describing single contracting problems that include self-enforced and externally enforced components. Section 3 addresses how contract negotiation can be modeled. This section first describes bargaining protocols to be analyzed using standard non-cooperative theory. Assumptions on the meaning of language are described. Then non-cooperative bargaining theories are translated into a convenient cooperative-theory form. The notion of activeness is discussed and it is used to define a partial ordering of bargaining solutions. Three benchmark bargaining solutions are defined.

In Section 4, I develop a framework for analyzing long-term contractual relationships in which the parties contract and take individual actions in successive periods of time. I analyze contractual relationships in terms of the sets of continuation values from each period in various contingencies. The notion of contractual equilibrium is defined and partly characterized. Section 5 contains the formal analysis of the MW example and a repeated-game example. Section 6 provides a result on the relation between contractual activeness and maximal joint value. Section 7 offers concluding remarks and some notes for applications.

The appendices present technical details and existence results. Appendix A contains the details of an example discussed in Section 3. Appendix B defines three classes of contractual relationships that have some finite elements; existence of contractual equilibrium is proved for these classes. Appendix C contains the proofs of the lemma and theorems that appear in the body of the paper.

2. Contractual Elements in an Ongoing Relationship

Suppose that there are two players in an ongoing relationship and that at a particular time—call it a negotiation phase—they have the opportunity to establish a contract or revisit a previously established contract. At this point, the players have a history (describing how they interacted previously) and they look forward to a continuation (describing what is to come). At the negotiation phase, the players form a contract that has two components: an externally enforced part and a self-enforced part.

The externally enforced component of their agreement is a tangible joint action x, which is an element of some set X. For example, x can represent details of a document that the players are registering with the court, which later will intervene in the relationship; x could be an immediate monetary transfer; or x might represent a choice of production technology. There is a special element of X, called the default joint action and denoted $\underline{x}$, that is compelled if the players fail to reach an agreement.

The self-enforced component of the players’ joint decision is an agreement between them on how to coordinate their future behavior. For example, if the players anticipate interacting in a subgame later in their relationship, then, at the present negotiation phase, they can agree on how each of them will behave in the subgame. The feasible ways in which the players can coordinate on future behavior are exactly those that can be self-enforced, as identified by incentive conditions. Coordination on future behavior yields a continuation value following the negotiation phase.

To describe the set of feasible contracts, it is useful to start by representing the players’ alternatives in terms of feasible payoff vectors. Let $Y(x) \subset \mathbb{R}^2$ be the set of payoff vectors (continuation values) on which the players can coordinate, conditional on selecting tangible joint action x. The set $Y(x)$ is assumed to embody a solution concept for future play. Defining $Y(\cdot): X \to 2^{\mathbb{R}^2}$, the contracting problem is represented as $\langle X, Y(\cdot), \underline{x} \rangle$, where $\underline{x}$ is the default joint action. Define

$$Y \equiv \bigcup_{x \in X} Y(x) \qquad \text{and} \qquad D \equiv Y(\underline{x}).$$

In payoff terms, the contracting problem can be thought of as a joint selection of a vector in Y, with disagreement leading to a vector in D.

Some of the analysis uses the following assumption.

Assumption 1: The set D is compact. For every $x \in X$, the set $Y(x)$ is nonempty. Furthermore, the set Y is closed and satisfies $Y \subseteq \{ y \in \mathbb{R}^2 : a_1 y_1 + a_2 y_2 \le b \}$ for some numbers $a_1 > 0$, $a_2 > 0$, and $b$.

The second part of this assumption is that Y is bounded above (separated by a negatively-sloped line in ), assuring that arbitrarily large utility vectors are not available to the players.

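It will sometimes be necessary to focus on one player’s payoffs from sets of payoff vectors such as Y. For any set $Z \subseteq \mathbb{R}^2$, let $Z_i$ denote the projection onto player i’s axis. That is, $Z_i \equiv \{ a \in \mathbb{R} : y_i = a \text{ for some } y \in Z \}$.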
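For finite examples, the objects just defined can be represented directly. The sketch below is a hypothetical encoding, not taken from the paper: a dictionary maps each tangible joint action to the finite set of continuation values attainable after it; from this we form the union of all attainable values, the set of values available under the default joint action, and the projection onto one player's axis. The toy numbers are made up for illustration, and players 1 and 2 are indexed 0 and 1.

```python
from typing import Dict, Hashable, Set, Tuple

Payoff = Tuple[float, float]


def all_values(values_after: Dict[Hashable, Set[Payoff]]) -> Set[Payoff]:
    """Union over tangible actions x of the values attainable after x."""
    union: Set[Payoff] = set()
    for values in values_after.values():
        union |= values
    return union


def disagreement_values(values_after, default) -> Set[Payoff]:
    """Values attainable when the default joint action is compelled."""
    return set(values_after[default])


def projection(values: Set[Payoff], i: int) -> Set[float]:
    """Player i's coordinates of a set of payoff vectors (i = 0 or 1)."""
    return {y[i] for y in values}


# Toy contracting problem with two tangible actions (hypothetical numbers).
values_after = {"default": {(0, 0), (1, 1)}, "deal": {(3, 1), (1, 3)}}
Y = all_values(values_after)
print(sorted(Y))                                              # [(0, 0), (1, 1), (1, 3), (3, 1)]
print(sorted(disagreement_values(values_after, "default")))  # [(0, 0), (1, 1)]
print(sorted(projection(Y, 1)))                               # [0, 1, 3]
```

Finite sets sidestep the closedness and boundedness requirements of Assumption 1, which only bite in continuous settings such as the examples that follow.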

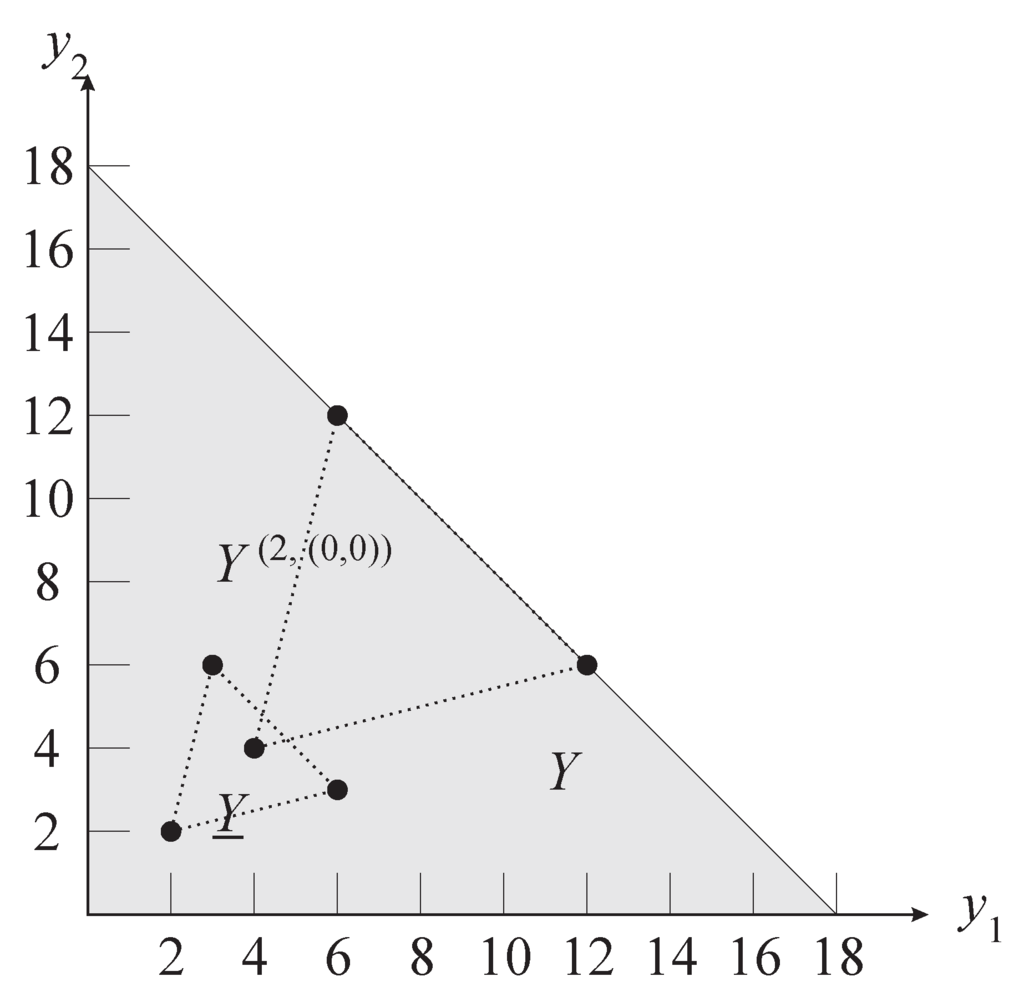

2.1. BoS Example

Here is a simple example that illustrates the determination of Y. There are two players who interact over two phases of time. The first is a negotiation phase, where the players negotiate and jointly select tangible action $x = (l, m)$. The component l is a “level of interaction” that can be either 1 or 2, whereas m is a vector of monetary transfers from the set

$$M \equiv \{ m \in \mathbb{R}^2 : m_1 + m_2 \le 0 \}.$$

Note that the players can transfer money between them and can throw away money. We have $X = \{1, 2\} \times M$. In the second phase, the players simultaneously take individual actions, with each player choosing between “left” and “right.” The players then receive payoffs given by the following battle-of-the-sexes matrix:

Assume that the default joint action is , where no money is transferred and .

Self-enforcement of behavior in the second phase is modeled using Nash equilibrium. There are three Nash equilibria of the battle-of-the-sexes game. For the matrix shown above, the two pure-strategy equilibria yield payoff vectors and , whereas the mixed-strategy equilibrium yields . Thus, in this example, we have for any :

and

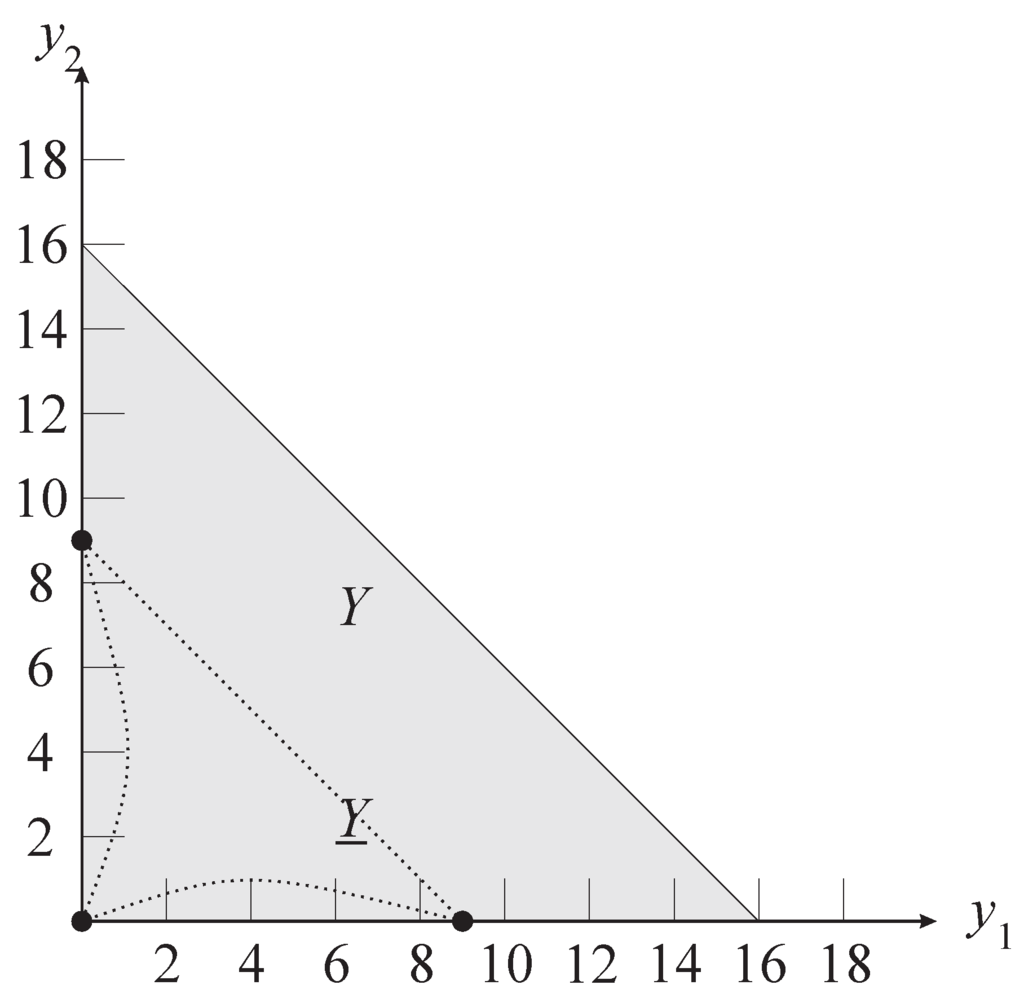

The set Y is given by the shaded region in Figure 2, which shows the intersection with the positive quadrant.

The figure also shows the three points composing and the three points composing .

Figure 2.

Y for the BoS example.

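The mixed-strategy equilibrium of a 2×2 battle-of-the-sexes game follows from the usual indifference conditions: each player mixes so as to make the other indifferent. The sketch below uses a hypothetical payoff matrix (2, 1) and (1, 2) on the matching cells and zeros elsewhere, not the example's own matrix; with these numbers the mixed equilibrium gives each player 2/3, below what either player gets in either pure-strategy equilibrium. Exact fractions avoid rounding noise.

```python
from fractions import Fraction


def mixed_equilibrium_2x2(A, B):
    """Fully mixed equilibrium of a 2x2 game with no dominant strategies.

    A[r][c], B[r][c]: row and column player's payoffs. Returns (p, q, u1, u2),
    where p = Pr(row plays row 0) and q = Pr(column plays column 0).
    """
    # q makes the row player indifferent between the two rows.
    q = Fraction(A[1][1] - A[0][1], A[0][0] - A[0][1] - A[1][0] + A[1][1])
    # p makes the column player indifferent between the two columns.
    p = Fraction(B[1][1] - B[1][0], B[0][0] - B[1][0] - B[0][1] + B[1][1])
    u1 = q * A[0][0] + (1 - q) * A[0][1]   # row player's expected payoff
    u2 = p * B[0][0] + (1 - p) * B[1][0]   # column player's expected payoff
    return p, q, u1, u2


# Hypothetical battle-of-the-sexes payoffs: both prefer to match actions,
# but player 1 prefers (left, left) and player 2 prefers (right, right).
A = [[2, 0], [0, 1]]  # player 1 (rows: left, right)
B = [[1, 0], [0, 2]]  # player 2 (columns: left, right)
p, q, u1, u2 = mixed_equilibrium_2x2(A, B)
print(p, q, u1, u2)  # 2/3 1/3 2/3 2/3
```

The formulas divide by zero if either player has a dominant strategy, which is why the docstring restricts attention to games without one.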

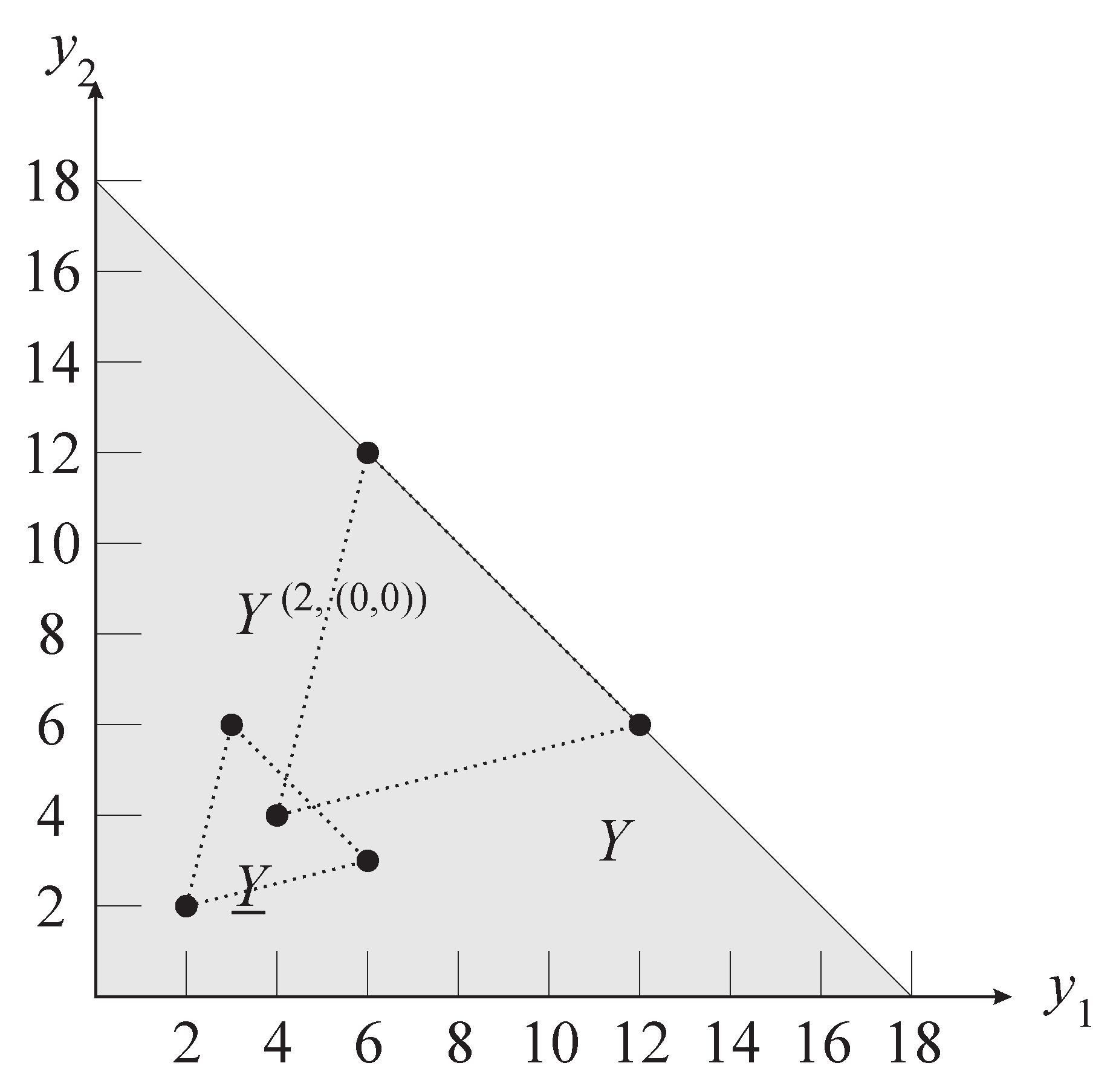

2.2. More on the MW Example

For another illustration of how to construct , consider the manager-worker example from the Introduction. Imagine that the parties are negotiating at the beginning of the second period, in the contingency in which they had selected , , and in the first period (recall the description of Alternative 3 in the Introduction).

The parties’ tangible joint action at the beginning of the second period is a new selection of transfers to supersede those chosen in the first period. Call the second-period selections , , , and . The default action specifies , , and . Recall that the matrix induced by these default transfers is:

Thus, noting the three Nash equilibria of this matrix, we have

As for the set Y, note that the greatest joint payoff attainable in the second period is 16. Further, for any vector with , there is a specification of transfers such that is a Nash equilibrium, , and . This implies that

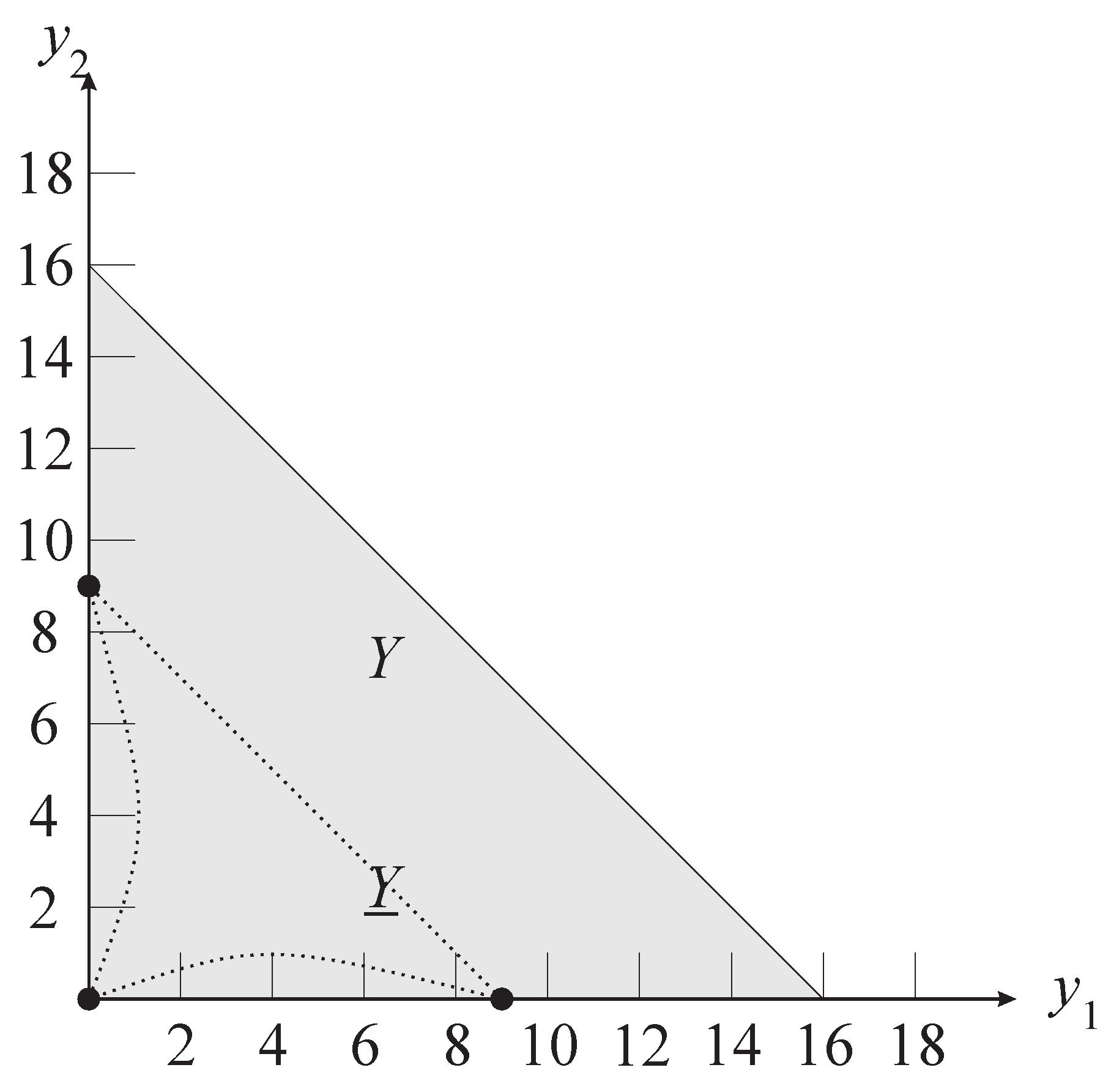
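The claim that, with the greatest attainable joint payoff equal to 16, any second-period payoff vector whose components sum to at most 16 can be reached at a Nash equilibrium (H, H) by choosing transfers can be checked with an explicit construction. The off-path transfers below are one hypothetical choice (money is discarded only in the (H, H) cell, and only when $y_1 + y_2 < 16$); the sketch does not model the “relatively balanced” lower bound on $m_1 + m_2$.

```python
def payoffs(cell, m):
    """Second-period payoffs (manager, worker) in the MW example."""
    e1, e2 = cell
    revenue = {2: 18, 1: 10, 0: 0}[(e1 == "H") + (e2 == "H")]
    return revenue - (e1 == "H") + m[cell][0], m[cell][1] - (e2 == "H")


def is_nash(cell, m):
    """Neither player gains by switching effort level unilaterally."""
    flip = {"H": "L", "L": "H"}
    e1, e2 = cell
    u1, u2 = payoffs(cell, m)
    return (u1 >= payoffs((flip[e1], e2), m)[0]
            and u2 >= payoffs((e1, flip[e2]), m)[1])


def implement_hh(y1, y2):
    """Hypothetical transfers making (H, H) a Nash equilibrium with payoff
    (y1, y2); no cell creates money as long as y1 + y2 <= 16."""
    return {
        ("H", "H"): (y1 - 17, y2 + 1),   # on path: manager y1, worker y2
        ("L", "H"): (y1 - 10, 10 - y1),  # manager shirks: still only y1
        ("H", "L"): (-y2, y2),           # worker shirks: still only y2
        ("L", "L"): (0, 0),
    }


for y in [(8, 8), (0, 16), (-3, 12), (20, -10)]:
    m = implement_hh(*y)
    ok = payoffs(("H", "H"), m) == y and is_nash(("H", "H"), m)
    no_money_created = all(m1 + m2 <= 0 for m1, m2 in m.values())
    print(y, ok, no_money_created)  # each line ends with: True True
```

Deviations are made exactly unprofitable (the deviator gets the same payoff), which is enough for a weak Nash equilibrium; a strict equilibrium would need slightly harsher off-path transfers.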

The sets and Y, intersected with the positive quadrant, are pictured in Figure 3.

Figure 3.

Y for the MW example in a second-period contingency.


3. Modeling Contract Negotiation and Activeness

Interaction in the negotiation phase leads to some contractual agreement between the players, which we can represent by a joint action x and a continuation value y. The negotiation process can be modeled using a non-cooperative specification that explicitly accounts for its details. For example, we could assume that negotiation follows a specific offer-counteroffer or simultaneous-demand protocol. This approach basically “inserts” into the time line of the players’ relationship a standard non-cooperative bargaining game.

For an illustration, consider the ultimatum-offer bargaining protocol whereby player 1 makes an offer to player 2 and then player 2 decides whether to accept or reject it, ending the negotiation phase. Because the players are negotiating over both the tangible action x and the intangible coordination of their future behavior, player 1’s “offer” comprises both of these elements. Because the sets of attainable continuation values represent (in payoff terms) the feasible ways in which the players can coordinate future behavior, we can describe an offer by a tuple (x, y), with $x \in X$ and $y \in \mathbb{R}^2$. Translated into plain English, (x, y) means “I suggest that we take tangible action x now and then coordinate our future behavior to get payoff vector y.”

If an offer is accepted by player 2, then x is externally enforced. Note, however, that “y” is simply cheap talk. When player 1 suggests coordinating to obtain payoff vector y and player 2 says “I accept,” there is no externally enforced commitment to play accordingly. In fact, the players could then coordinate to achieve some other payoff vector. Furthermore, there is no requirement that y be feasible given x (that is, one of the continuation values attainable after x); a player can say whatever he wants to say.

Because y is cheap talk, there are always equilibria of the negotiation phase in which this intangible component of the offer is ignored; in other words, the players’ future behavior is not conditioned on what they say to each other in the negotiation phase (other than through the externally enforced x).3 In such equilibria, there is a sense in which the players are not actively negotiating over how to coordinate their future behavior. Rather, some social convention or other institution is doing so. To model more active negotiation, we can proceed by combining standard equilibrium conditions with additional assumptions on the meaning of language. These additional requirements can be expressed as constraints on future behavior conditional on statements that the players make in the negotiation phase. Farrell (1987) [21], Rabin (1994) [22], Arvan, Cabral, and Santos (1999) [23], and Santos (2000) [24] conduct such an exercise for settings without external enforcement.4 This section builds on their work and offers the straightforward extension to settings with external enforcement.

3.1. Bargaining Protocols

To formalize ideas, I begin with some general definitions. I assume that the negotiation phase is modeled using standard noncooperative game theory.

Definition 1: A bargaining protocol Γ consists of (i) a game tree that has all of the standard elements except payoffs; (ii) a mapping ψ from the set of terminal nodes N of the game tree to the set X; (iii) a partition $\{N^A, N^D\}$ of N; and (iv) a function $\nu: N^A \to \mathbb{R}^2$.

The mapping ψ identifies, for each path through the tree, a tangible action from X that the players have selected. Reaching terminal node n implies that $\psi(n)$ will then be externally enforced. Although no payoff vector is specified for terminal nodes, it is understood that if players reach terminal node n in the negotiation phase, then their behavior in the continuation leads to some payoff in $Y(\psi(n))$. Note that a well-defined extensive-form game can be delineated by assigning a specific payoff vector to each terminal node of Γ.

The additional structure provided by items (iii) and (iv) in the definition is helpful in distinguishing between “agreement” and “disagreement” outcomes of the bargaining protocol. The idea is that we can label some outcomes as ones where agreements are presumed to have been made. The others are labeled as disagreement outcomes (an impasse in bargaining).

The set $N^A$ comprises the terminal nodes at which the players have reached an agreement, whereas $N^D$ comprises the terminal nodes at which the players have not reached an agreement. Nodes in $N^D$ must specify the default joint action $\bar{x}$, because agreement is required for the players to select any other joint action. Thus, $\psi(n) = \bar{x}$ for all $n \in N^D$.

The function ν associates with each terminal node in $N^A$ the continuation payoff vector that was verbally stated in the players’ agreement. That is, if bargaining ends at terminal node $n \in N^A$, then we say that the offer of $(\psi(n), \nu(n))$ was made and accepted. Note that $\nu(n)$ is essentially cheap talk.

Consider, as an example, the ultimatum-offer protocol in which player 1 makes an offer $(x, y)$, with $x \in X$ and $y \in \mathbb{R}^2$, and player 2 accepts or rejects it. For this game tree, each terminal node represents a path consisting of player 1’s offer and player 2’s response (“accept” or “reject”). We have $\psi((x, y), \text{accept}) = x$ and $\psi((x, y), \text{reject}) = \bar{x}$ for every $x \in X$ and $y \in \mathbb{R}^2$. That is, if player 2 accepts then external enforcement of x is triggered; otherwise, the default action occurs. Furthermore,

$$N^A = \{((x, y), \text{accept}) \mid x \in X,\ y \in \mathbb{R}^2\}, \qquad N^D = \{((x, y), \text{reject}) \mid x \in X,\ y \in \mathbb{R}^2\},$$

and $\nu((x, y), \text{accept}) = y$. That is, if player 1’s offer of $(x, y)$ is accepted by player 2, then the players have identified the continuation payoff y. Remember, though, that y is not necessarily a feasible payoff vector in the continuation.
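As a minimal sketch of how items (ii) to (iv) of Definition 1 fit together for the ultimatum-offer protocol, the Python fragment below encodes a terminal node as player 1’s offer plus player 2’s response. The class `TerminalNode`, the functions `psi`, `is_agreement`, and `nu`, and the placeholder label `DEFAULT` for the default joint action are hypothetical names chosen for illustration; joint actions are treated as arbitrary hashable labels.

```python
from dataclasses import dataclass
from typing import Hashable, Tuple

Payoff = Tuple[float, float]

# Hypothetical label standing in for the default joint action x-bar.
DEFAULT: Hashable = "x_bar"


@dataclass(frozen=True)
class TerminalNode:
    """Terminal node of the ultimatum-offer protocol: player 1's offer
    (x, y) followed by player 2's response."""
    x: Hashable      # proposed joint action
    y: Payoff        # continuation value stated in the offer (cheap talk)
    accepted: bool   # True if player 2 accepts


def psi(n: TerminalNode) -> Hashable:
    """Joint action that the external enforcer compels at node n."""
    return n.x if n.accepted else DEFAULT


def is_agreement(n: TerminalNode) -> bool:
    """Partition of terminal nodes: accepted offers are agreement nodes."""
    return n.accepted


def nu(n: TerminalNode) -> Payoff:
    """Stated continuation value; defined only on agreement nodes."""
    if not is_agreement(n):
        raise ValueError("nu is defined only on agreement nodes")
    return n.y
```

Nothing in this sketch checks whether the stated value y is feasible given x; as the text stresses, it need not be.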

3.2. Equilibrium Conditions and Activeness Refinements

An equilibrium in the negotiation phase is a specification of behavior that is rational given some feasible selection of future behavior.

Definition 2: Take a bargaining protocol Γ and let s be a strategy profile (mixed or pure) for this extensive form. Call s an equilibrium of the negotiation phase if there is a mapping $w: N \to \mathbb{R}^2$ such that (i) $w(n) \in Y(\psi(n))$ for every $n \in N$; and (ii) s is a subgame-perfect equilibrium in the extensive-form game defined by Γ with the payoff at each terminal node n given by $w(n)$.5 Call a vector in $\mathbb{R}^2$ an equilibrium negotiation value if it is the payoff vector for some equilibrium of the negotiation phase.

Implied by the domain of w is that the behavior in the negotiation phase becomes common knowledge before the players begin interacting in the continuation. Clearly, studying equilibrium in the negotiation phase is equivalent to examining a subgame-perfect equilibrium in the entire game (negotiation phase plus continuation); here, I am simply representing post-negotiation interaction by its continuation value.

Assumptions about the meaning of language can be viewed as constraints on the function w in the definition of equilibrium of the negotiation phase. It may be natural to assume that if a player accepts an offer $(x, y)$ and if $y \in Y(x)$ (that is, y is consistent with x), then the continuation value y becomes focal and the players coordinate their future behavior to achieve it. This is expressed by the

Agreement Condition: For every $n \in N^A$, if $\nu(n) \in Y(\psi(n))$, then $w(n) = \nu(n)$.

We could also make an assumption about the continuation value realized when the players fail to agree. One such condition is that the players share responsibility for disagreement in the sense that the continuation value does not depend on how the players arrived at the impasse:

Disagreement Condition: There is a vector $d \in Y(\bar{x})$ such that $w(n) = d$ for all $n \in N^D$.

Miller and Watson (2013) call this “no fault disagreement" and base their construction of contractual equilibrium on this assumption about disagreement.

The Agreement and Disagreement Conditions are added to the conditions on the mapping in Definition 2. To refer to the resulting equilibrium definitions, I use the language “equilibrium negotiation value under [the Agreement Condition, the Disagreement Condition, or both].”
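The Agreement and Disagreement Conditions are restrictions on the mapping from terminal nodes to continuation values in Definition 2. The sketch below, which assumes finitely many terminal nodes and finite feasible sets, checks both conditions for a candidate mapping. The names `w`, `psi`, `nu`, `is_agreement`, `Y`, and `Y_default` are hypothetical stand-ins for the objects in the text, passed in as plain Python callables and sets.

```python
from typing import Callable, Hashable, Iterable, Set, Tuple

Payoff = Tuple[float, float]


def satisfies_agreement(nodes: Iterable, is_agreement: Callable, psi: Callable,
                        nu: Callable, w: Callable,
                        Y: Callable[[Hashable], Set[Payoff]]) -> bool:
    """Agreement Condition: at each agreement node whose stated value is
    feasible for the compelled joint action, w(n) equals that stated value."""
    return all(w(n) == nu(n) for n in nodes
               if is_agreement(n) and nu(n) in Y(psi(n)))


def satisfies_disagreement(nodes: Iterable, is_agreement: Callable,
                           w: Callable, Y_default: Set[Payoff]) -> bool:
    """Disagreement Condition: one vector d in Y(x-bar) is the continuation
    value at every disagreement node (vacuously true if there are none)."""
    values = {w(n) for n in nodes if not is_agreement(n)}
    return len(values) <= 1 and values <= Y_default
```

Accepted offers with infeasible stated values are skipped by the first check, mirroring the “if” clause of the Agreement Condition.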

Even with the Agreement and Disagreement Conditions, the disagreement value (the common continuation value at disagreement nodes) is an arbitrary element of $Y(\bar{x})$ that can depend on the players’ history of interaction. One can imagine that this vector is selected by a previous agreement between the players or by some social norm; either way, it is taken as given by the players in the current negotiation phase. In contrast, one can imagine a more active form of negotiation in which communication in the current negotiation phase determines how the players coordinate their future behavior when they disagree.6

Here is a story for how the disagreement value d may be decided in the current negotiation phase. In the event that bargaining ends at an impasse, there is one more round of communication in which “Nature” selects one of the players to make a final declaration about how they should coordinate their future behavior. Assume that Nature chooses player 1 with probability $\pi_1$ and player 2 with probability $\pi_2 = 1 - \pi_1$. Also suppose, along the lines of the Agreement Condition, that the declaration becomes focal, so that the players actually coordinate appropriately. Then, when player i gets to make the final declaration, he will choose the continuation value $d^i \in Y(\bar{x})$ satisfying $d^i_i = \max_{y \in Y(\bar{x})} y_i$. (Recall that $y_i$ is the projection of y onto player i’s axis.) For simplicity, assume that, for $j \neq i$,

$$d^i_j = \max \{ y_j \mid y \in Y(\bar{x}),\ y_i = d^i_i \},$$

so that player i is generous where it does not cost him. The disagreement value is thus given by the

Random-Proposer Disagreement Condition: $d = \pi_1 d^1 + \pi_2 d^2$.

The vectors $d^1$ and $d^2$ exist by Assumption 1.
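To make the random-proposer construction concrete, the sketch below computes each player’s final declaration and the resulting disagreement value when the disagreement set is finite, so that the maxima exist trivially. Indices 0 and 1 stand for players 1 and 2; the function names are hypothetical.

```python
from typing import Sequence, Tuple

Payoff = Tuple[float, float]


def declaration(Y_default: Sequence[Payoff], i: int) -> Payoff:
    """Player i's final declaration (i = 0 for player 1, 1 for player 2):
    a vector in Y(x-bar) maximizing player i's payoff, with ties broken in
    favor of the other player."""
    j = 1 - i
    best_i = max(y[i] for y in Y_default)
    return max((y for y in Y_default if y[i] == best_i), key=lambda y: y[j])


def random_proposer_disagreement(Y_default: Sequence[Payoff], pi1: float) -> Payoff:
    """Expected disagreement value d = pi1 * d^1 + pi2 * d^2, with pi2 = 1 - pi1."""
    d1 = declaration(Y_default, 0)
    d2 = declaration(Y_default, 1)
    pi2 = 1.0 - pi1
    return (pi1 * d1[0] + pi2 * d2[0], pi1 * d1[1] + pi2 * d2[1])
```

For instance, with the hypothetical disagreement set {(3, 1), (1, 3), (3, 0), (0, 0)} and π1 = 1/2, player 1 declares (3, 1) (breaking the tie with (3, 0) in player 2’s favor), player 2 declares (1, 3), and the disagreement value is (2, 2).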

3.3. Example: BoS with the Ultimatum-Offer Protocol

To illustrate the implications of the Agreement, Disagreement, and Random-Proposer Disagreement Conditions, I consider as an example (i) the BoS contracting problem described in Section 2.1; and (ii) the ultimatum-offer protocol described at the end of Section 3.1. Recall that Figure 2 shows sets Y and $Y(\bar{x})$ for the BoS contracting problem. What follows are brief descriptions of the sets of equilibrium negotiation values with and without these conditions imposed. See Appendix A for more details.

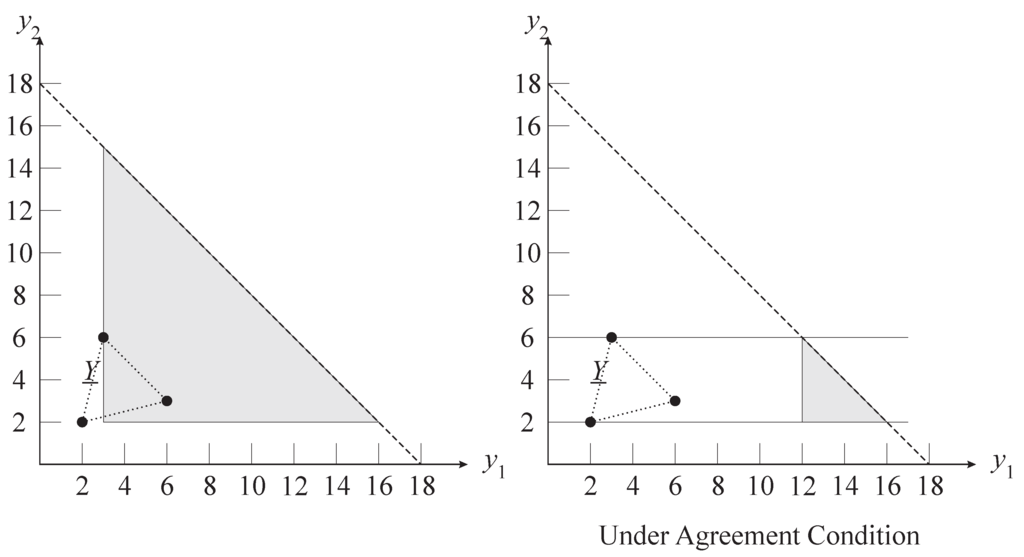

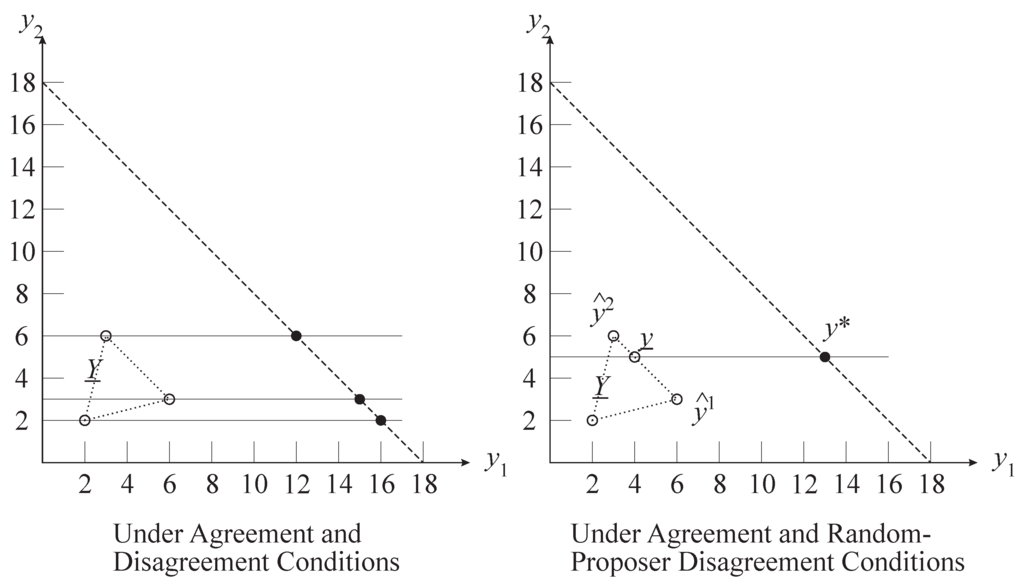

The set of equilibrium negotiation values (without the Agreement, Disagreement, and Random-Proposer Disagreement Conditions) is given by the shaded region in the left picture of Figure 4.

The points with are not equilibrium values because, by making an offer of with and , player 1 guarantees himself a payoff of at least 3. This is because (i) if player 2 accepts such an offer, then, regardless of which continuation value they coordinate on, player 1 gets at least

and (ii) player 2 could rationally reject such an offer only if he anticipates getting at least himself, but the two such points in yield at least 3 to player 1. The asymmetry of the set of equilibrium negotiation values follows from the asymmetry of the bargaining protocol—that player 1 makes the ultimatum offer.

Figure 4.

Equilibrium negotiation values in the BoS example.


The set of equilibrium negotiation values is reduced under the Agreement Condition, as shown in the right picture of Figure 4. Here, player 1 can guarantee himself a value of by offering and for any small ; such an offer must be accepted by player 2 in equilibrium. Points in the shaded region can be supported with appropriately chosen disagreement values.

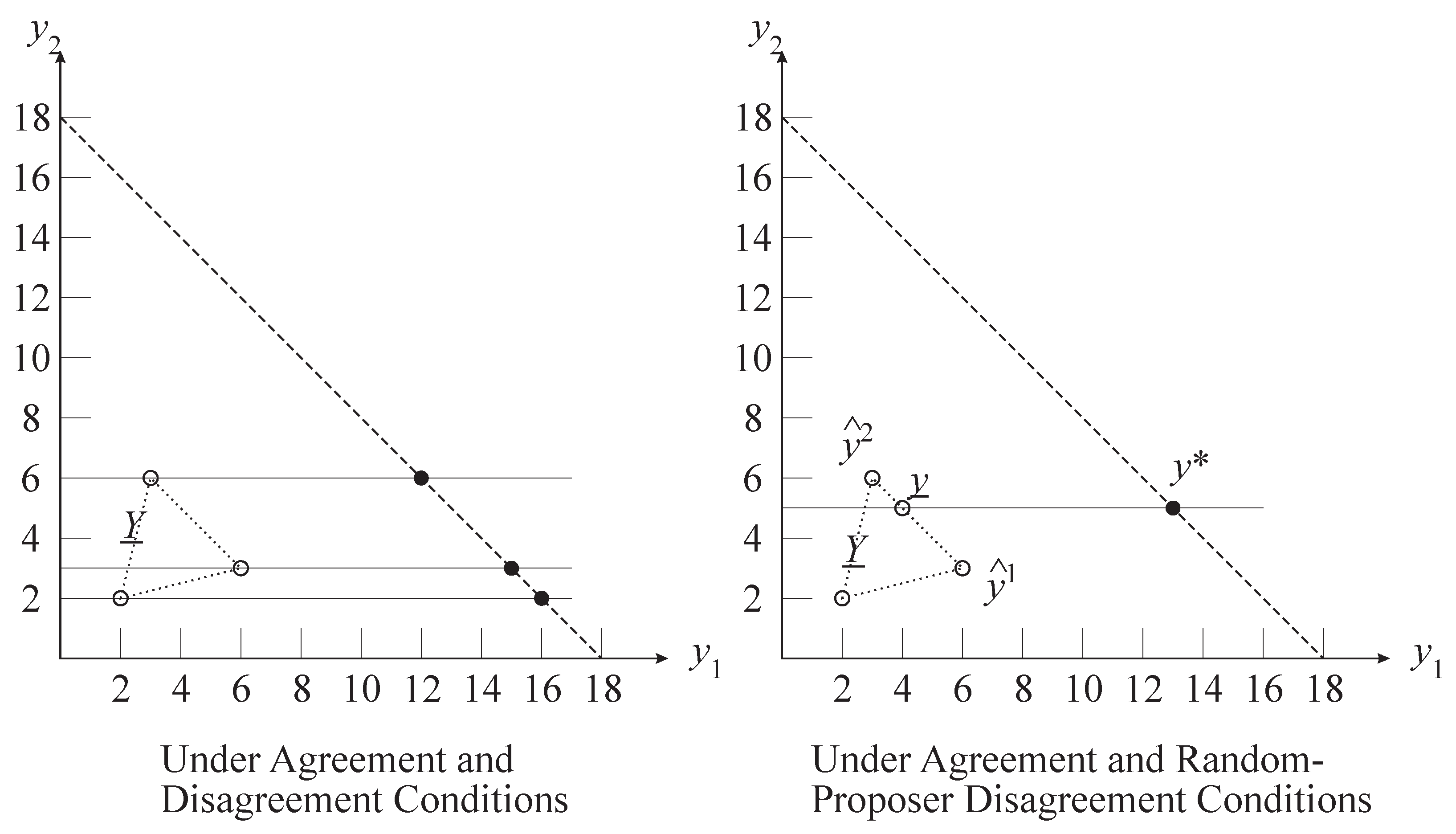

The set of equilibrium negotiation values is further reduced under both the Agreement and Disagreement Conditions, as shown in Figure 5.

Figure 5.

More equilibrium negotiation values in the BoS example.


With these conditions imposed, we have the standard outcome of the ultimatum-offer bargaining game, as though all aspects of the continuation value were externally enforced and the disagreement value were some fixed element of $Y(\bar{x})$. In the left picture of Figure 5, the set of equilibrium negotiation values (for various disagreement values in $Y(\bar{x})$) comprises the three points indicated on the efficient boundary of Y. The picture on the right shows the single equilibrium negotiation value that results when the Random-Proposer Disagreement Condition is added; the case pictured has .

3.4. Cooperative-Theory Representation

Whatever the assumed bargaining protocol and equilibrium conditions are, their implications can be given a cooperative-theory representation in terms of a bargaining solution S that maps contracting problems into sets of equilibrium negotiation values. That is, for each contracting problem, S gives the set of equilibrium negotiation values for the given bargaining protocol and equilibrium conditions. It can be helpful to specify a bargaining solution S directly (with the understanding of what it represents) because this allows the researcher to focus on other aspects of the strategic situation without getting bogged down in the analysis of negotiation. One can then compare the effects of imposing various conditions regarding negotiation by altering the function S.

On the technical side, note that the set that S assigns to a contracting problem is a subset of the convex hull of Y. For some applications, this set will be contained in Y. Points in the convex hull of Y that are not in Y are possible in settings in which the bargaining protocol involves moves of Nature or in which players randomize in equilibrium. One can also use the definition of S to model situations in which the players select lotteries over joint actions and continuation values. In these cases, an equilibrium negotiation value may be a nontrivial convex combination of points in Y.

3.5. Representation of Contractual Activeness

When a bargaining solution produces more than one negotiation value (a nontrivial set) for a given contracting problem, then there is a role for social convention and history to play in the selection of the outcome. The selection may also be determined by a previous contract that the players formed. A larger set of negotiation values implies greater scope for social convention, history, and prior agreements, and so contracting is “less active." In more active contracting, contract selection depends mainly on the current technology of the relationship (including the bargaining protocol and the future productive alternatives) rather than on historical distinctions that are not payoff relevant from the current time. More active contracting is associated with a smaller set of negotiation values.

One can thus compare the relative activeness of two different bargaining solutions in terms of set inclusion.

Definition 3: Let S and $S'$ be bargaining solutions that are defined on the set of contracting problems that satisfy Assumption 1. Bargaining solution $S'$ is said to represent more active contracting than does S if, for every contracting problem C that satisfies Assumption 1, $S'(C) \subseteq S(C)$.

In this case, S imposes fewer constraints on the outcome of the negotiation process and allows a greater role for social convention, history, and prior agreements in the selection of the outcome. For example, if the players would arrive at a particular contracting problem in two or more different contingencies (from two different histories), then S affords wider scope for differentiating between the histories than does $S'$.
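Definition 3 quantifies over all contracting problems, so no finite computation can establish it; a finite check can only refute it or support it on a sample. The sketch below runs such a check under a simplification made only for this illustration: a contracting problem is represented just by a finite set of payoff vectors, and a bargaining solution by a function from such sets to subsets. The two sample solutions, `keep_all` and `undominated`, are hypothetical.

```python
from typing import Callable, FrozenSet, Iterable, Set, Tuple

Payoff = Tuple[float, float]
Solution = Callable[[FrozenSet[Payoff]], Set[Payoff]]


def more_active_on(S_prime: Solution, S: Solution,
                   problems: Iterable[FrozenSet[Payoff]]) -> bool:
    """True if S_prime(C) is a subset of S(C) for every problem C supplied."""
    return all(set(S_prime(C)) <= set(S(C)) for C in problems)


def keep_all(C: FrozenSet[Payoff]) -> Set[Payoff]:
    """A maximally inactive solution: every feasible vector is a negotiation value."""
    return set(C)


def undominated(C: FrozenSet[Payoff]) -> Set[Payoff]:
    """Keep only vectors that no other vector weakly dominates with a strict gain."""
    return {y for y in C
            if not any(z != y and z[0] >= y[0] and z[1] >= y[1] for z in C)}
```

On any sample containing a dominated vector, `undominated` passes as more active than `keep_all` but not the reverse, matching the idea that a smaller set of negotiation values means more active contracting.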

Note that activeness of contracting relates to assumptions about the meaning of verbal statements that the players make during bargaining, such as the Agreement and Disagreement Conditions described in Section 3.2. Stronger assumptions about the relation between language and expectations imply more active contracting. Recall that this is particularly relevant for the self-enforced component of contract, because, on this dimension, a verbal statement of agreement does not force the parties to subsequently act accordingly (as external enforcement can do) and there are various possibilities for the link between the parties’ verbal agreement and the way they actually coordinate. Language is more meaningful, and contracting more active, when statements of agreement—at least those that are consistent with individual incentives—induce the parties to comply.

3.6. Benchmark Solutions

Various bargaining protocols and equilibrium conditions can be compared on the bases of (i) the extent to which they support outcomes that are inefficient from the negotiation phase; and (ii) the extent to which they represent the players’ active exercise of bargaining power. In this subsection, I describe three benchmark solutions that collectively represent a range of possibilities on these two dimensions.

The first benchmark solution is defined by the set of equilibrium negotiation values for the Nash-demand protocol without the conditions stated in Section 3.2. In the Nash-demand protocol, the players simultaneously make demands $(x^1, y^1)$ and $(x^2, y^2)$, and this vector of demands defines the terminal node of the bargaining protocol. Equal demands are labeled as agreements; otherwise the outcome is disagreement. If the demands are equal, so $x^1 = x^2 = x$ and $y^1 = y^2 = y$, then x is compelled by the external enforcer and the stated continuation value is y. Otherwise, the default joint action $\bar{x}$ is compelled. It is easy to verify that the equilibrium negotiation values in this case are given by

The superscript “ND" here denotes “Nash demand."
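The terminal-node structure of the Nash-demand protocol can be sketched in a few lines. The function name `nash_demand_outcome`, the tuple encoding of demands, and the label used for the default joint action are hypothetical; the function returns the compelled joint action, whether the node is an agreement node, and the stated continuation value (None at disagreement nodes, where ν is undefined).

```python
from typing import Hashable, Optional, Tuple

Payoff = Tuple[float, float]
Demand = Tuple[Hashable, Payoff]  # (joint action x, stated continuation value y)


def nash_demand_outcome(demand1: Demand, demand2: Demand, default: Hashable
                        ) -> Tuple[Hashable, bool, Optional[Payoff]]:
    """Map a pair of simultaneous demands to (psi, agreement flag, nu)."""
    if demand1 == demand2:          # equal demands are agreements
        x, y = demand1
        return x, True, y
    return default, False, None     # otherwise the default is compelled
```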

The second and third benchmark solutions refer to the set of equilibrium negotiation values for a standard K-round, alternating-offer bargaining protocol under the Agreement and Disagreement Conditions. I assume no discounting during the bargaining protocol, but there is random termination.

In any odd-numbered round, player 1 makes an offer to player 2, who then decides whether to accept or reject it. If player 2 accepts, then the negotiation phase ends. If player 2 rejects player 1’s offer, then a random draw determines whether the negotiation phase ends or continues into the next round; the latter occurs with probability . In an even-numbered round, the players’ roles are reversed and is the probability that the following round is reached in the event that player 1 rejects player 2’s offer. I assume that the values and are nonnegative and sum to one, and I write . The negotiation phase ends for sure after round K. Let denote this bargaining protocol.7

Functions ψ and ν are defined as in the first benchmark solution. For any agreement outcome, ψ and ν give the agreed-upon values of x and y. At any disagreement node n, where bargaining ended without a player accepting an offer, we have $\psi(n) = \bar{x}$.

It is easy to verify that, under Assumption 1, there is an equilibrium of the negotiation phase that satisfies the Agreement and Disagreement Conditions and that has agreement in the first round.8 For any disagreement value , let be defined as the limit superior of the set of implied equilibrium negotiation values as . Define the limit in terms of the Hausdorff metric, so that if and only if there is a sequence of vectors such that (i) is an equilibrium negotiation value for under the Agreement and Disagreement Conditions; and (ii) has a subsequence that converges to .

Consider the limit superior of as the “time between offers" Δ converges to zero:

is implied by Assumption 1 and by the construction of . I define the second benchmark bargaining solution to be the set that results by looking at all feasible disagreement values:

For the third benchmark solution, I add the Random-Proposer Disagreement Condition to focus on the “active disagreement" vector:

By taking advantage of Binmore, Rubinstein, and Wolinsky’s (1986) [27] analysis, we can show that, assuming Y is convex, is the generalized Nash bargaining solution evaluated on bargaining set Y, with disagreement value , and with the players’ bargaining weights given by π.
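The generalized Nash bargaining solution maximizes the weighted Nash product $(y_1 - d_1)^{\pi_1}(y_2 - d_2)^{\pi_2}$ over the bargaining set. As a numerical sketch, assume (for illustration only) that the relevant part of the boundary of Y is the graph of a concave, strictly decreasing function f, that $f(d_1) > d_2$, and that the caller supplies `hi` with $f(\text{hi}) = d_2$. The sketch maximizes the logarithm of the Nash product by ternary search; the function name and parameters are hypothetical.

```python
import math
from typing import Callable, Tuple


def generalized_nash(f: Callable[[float], float], d: Tuple[float, float],
                     pi1: float, hi: float, iters: int = 200) -> Tuple[float, float]:
    """Approximate the generalized Nash bargaining solution on the frontier y2 = f(y1).

    Searches y1 in the open interval (d[0], hi), where both gains are positive,
    maximizing pi1*log(y1 - d1) + pi2*log(f(y1) - d2). Assumes 0 < pi1 < 1,
    f concave and strictly decreasing, f(d[0]) > d[1], and f(hi) == d[1].
    """
    pi2 = 1.0 - pi1

    def log_nash_product(y1: float) -> float:
        return pi1 * math.log(y1 - d[0]) + pi2 * math.log(f(y1) - d[1])

    a, b = d[0], hi
    for _ in range(iters):
        m1 = a + (b - a) / 3.0
        m2 = b - (b - a) / 3.0
        # The objective is strictly concave, so the maximizer lies outside the discarded third.
        if log_nash_product(m1) < log_nash_product(m2):
            a = m1
        else:
            b = m2
    y1 = (a + b) / 2.0
    return y1, f(y1)
```

For a transferable-utility frontier $y_1 + y_2 = c$, the result should match the closed form $y_i = d_i + \pi_i (c - d_1 - d_2)$; for example, with c = 10, d = (2, 1), and π1 = 1/4, the search returns approximately (3.75, 6.25).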

Lemma 1: If Y is convex then .

Here, denotes the strong Pareto boundary of the set Y. Note, therefore, that if Y is convex then bargaining solution is a subset of, and bargaining solution is a point on, the strong Pareto boundary of Y.

4. Contractual Relationships

In this section, I develop a framework for examining multi-period contractual relationships. Each period consists of two phases: the negotiation phase and the individual-action phase. In the negotiation phase, the players make a joint contracting decision. Interaction in the negotiation phase is modeled as described in the preceding sections. In the individual-action phase, the players make independent decisions that are modeled as non-cooperative interaction. I restrict attention to finite-horizon settings in which, in each negotiation phase, all payoff-relevant information about future interaction is commonly known.

4.1. Formal Description of the Game with Joint Actions

Suppose that two players interact over a finite number of discrete periods. At the beginning of each period, a state variable z represents the payoff-relevant aspects of the players’ history as well as any events on which the players are assumed to condition their behavior. With common knowledge of z, the players first select a joint action x. Then, simultaneously and independently, the players choose individual actions and (for players 1 and 2, respectively) and an exogenous random draw is realized. Write as the individual-action profile. At the end of the period, the players receive payoffs given by a vector . The state is then updated and interaction continues in the next period or the game terminates.

To be more precise, fundamentals of the game include a set of states Z, a set of joint actions , and a set of individual-action profiles . There is a correspondence and a function such that gives the set of joint actions available to the players in a period that begins in state z, and denotes the default joint action in this state. Further, denotes the set of feasible individual actions for player i in a period in which z is the state and x is the joint action that the players selected. The feasible individual-action profiles are given by

where . Assume that Nature selects according to some probability distribution in each period. Note that Nature’s actions and probability distribution may be influenced by the state and joint action of the current period.

There is a transition function that defines the state in the following period as a function of the current period’s state and actions.9 Specifically, if x and a are the actions taken in a period that begins in state z, then is the state in the next period. There is an initial state of the relationship in effect at the beginning of the first period. There is also a nonempty set of terminal states that mark the end of the game. A feasible path of play is a sequence such that

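- (i) the path begins in the initial state;

- (ii) in every period, the joint action is available in that period’s state, the individual-action profile is feasible given that state and joint action, and the next period’s state is the one given by the transition function; and

- (iii) the path ends in a terminal state.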

Assumption 2: There is a positive integer τ such that every feasible path of play lasts at most τ periods and at least one feasible path lasts exactly τ periods.
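Assumption 2 can be checked mechanically when the state system is finite and small. The sketch below takes hypothetical callables `X(z)`, `A(z, x)`, `transition(z, x, a)`, and `is_terminal(z)` in place of the primitives described above (with `A` assumed to enumerate profiles that already include Nature’s draw), enumerates feasible paths of play depth-first, and returns the length of the longest one. A return value of None signals that some path did not terminate within `max_depth` periods, in which case the assumption fails or the cap is too small.

```python
from typing import Callable, Hashable, Iterable, Optional


def longest_path(z0: Hashable,
                 X: Callable[[Hashable], Iterable[Hashable]],
                 A: Callable[[Hashable, Hashable], Iterable[Hashable]],
                 transition: Callable[[Hashable, Hashable, Hashable], Hashable],
                 is_terminal: Callable[[Hashable], bool],
                 max_depth: int = 100) -> Optional[int]:
    """Number of periods on the longest feasible path of play from z0,
    or None if some path is still running after max_depth periods."""
    def longest(z: Hashable, depth: int) -> Optional[int]:
        if is_terminal(z):
            return 0
        if depth >= max_depth:
            return None
        best = 0
        for x in X(z):
            for a in A(z, x):
                sub = longest(transition(z, x, a), depth + 1)
                if sub is None:
                    return None
                best = max(best, 1 + sub)
        return best
    return longest(z0, 0)
```

When the result is a positive integer, Assumption 2 holds with τ equal to that integer, since the longest path attains the bound.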

This assumption is maintained hereinafter. It implies that Z can be partitioned into sets with the following properties. First, and . Second, for each , each , and actions and , we have

Partitioning Z in this way facilitates backward-induction analysis.

Finally, there is a payoff function . For any given path of play , the payoff vector for the entire game is the sum of per-period payoffs:

One can incorporate discounting by defining u and the state system appropriately.

4.2. Contractual Equilibrium

To analyze the contractual relationships described in the previous subsection, I combine a bargaining solution S with individual incentive conditions. The former models how players select among joint actions in the negotiation phase, whereas the latter identify Nash equilibria for the individual-action phase in each period. The analysis is most conveniently conducted using a recursive formulation in which we focus on within-period payoffs and continuation values.

In the recursive formulation, we posit a value correspondence V (a mapping from states to subsets of $\mathbb{R}^2$) with the interpretation that, for each $z \in Z$, $V(z)$ is a set of continuation-value vectors for the players at the start of a period in state z. For V to be consistent with the bargaining solution S, it must be that $V(z)$ is the set that S assigns to the contracting problem that the players face at the beginning of the period in state z.

In turn, should correctly describe the feasible continuation values over the joint actions that are available to the players. For a specific , every feasible continuation value is supported by some (possibly mixed) action profile that is a Nash equilibrium in the individual-action phase of the current period. The Nash equilibrium condition is

for all and for . In this expression, is player i’s continuation payoff from the beginning of the current period and denotes the expectation taken over Nature’s probability distribution and whatever randomizing the players do. The function v selects continuation-value vectors for the start of the next period. These should be consistent with the behavioral theory, meaning that . Thus, for every , we have

In this expression, denotes the set of uncorrelated probability distributions over .
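The equilibrium condition can be illustrated for the simplest case. The sketch below assumes pure strategies, no random draw by Nature, a fixed joint action (so it is suppressed), and payoffs stored in dictionaries keyed by action profiles; it checks whether a profile is a Nash equilibrium of the individual-action phase when each player’s payoff is the within-period payoff plus the continuation value selected by v. The function name and data layout are hypothetical.

```python
from typing import Dict, Iterable, Tuple

Payoff = Tuple[float, float]
Profile = Tuple[str, str]


def is_pure_nash(profile: Profile, actions1: Iterable[str], actions2: Iterable[str],
                 u: Dict[Profile, Payoff], v: Dict[Profile, Payoff]) -> bool:
    """True if no player gains by a unilateral deviation when payoffs are
    u(a) + v(a): within-period payoff plus next-period continuation value."""
    def total(a: Profile, i: int) -> float:
        return u[a][i] + v[a][i]

    a1, a2 = profile
    if any(total((b1, a2), 0) > total(profile, 0) for b1 in actions1):
        return False
    if any(total((a1, b2), 1) > total(profile, 1) for b2 in actions2):
        return False
    return True
```

With hypothetical prisoners’-dilemma payoffs u(C,C) = (3,3), u(C,D) = (0,4), u(D,C) = (4,0), u(D,D) = (1,1), and v equal to (2, 2) after mutual cooperation and (1, 1) after any other profile, mutual cooperation passes the check (3 + 2 is not less than 4 + 1 for either player), whereas a constant v leaves only mutual defection.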

These elements compose the notion of rational behavior.

Definition 4: Let S be a given bargaining solution. A value correspondence is said to represent contractual equilibrium if

- (i)

- for every and

- (ii)

Theorem 1: Take as given a contractual relationship and a bargaining solution S. If Assumption 2 is satisfied and contractual equilibrium exists, then there is a unique value correspondence that represents contractual equilibrium.

This result is a straightforward consequence of the backward-induction construction. The result is proved in Appendix C; existence results for three classes of contractual relationships are presented in Appendix B.

I conclude this section with notes on the meaning and interpretation of contractual equilibrium. Suppose that, consistent with contractual equilibrium, the players actively select a continuation value y in some state z. Note that y incorporates contractual equilibrium play in future states. For instance, if $z'$ is a state that may follow from z, then continuation value y incorporates the expectation that the players would obtain some value $y'$ in state $z'$, where $y'$ is itself consistent with contractual equilibrium. Furthermore, $y'$ could arise specifically because the players would coordinate on disagreement point $d'$ in state $z'$. Therefore, by selecting y in state z, the players are coordinating on specific disagreement points for future states. In other words, an agreement in one state entails a specification of the disagreement points for all possible future states, along with the expectation of how the players will make agreements subject to these disagreement points. Thus, much is wrapped up in the joint selection of a continuation value y.

Considering the nature of an agreement, there is a philosophical issue about the essential role of social norms in the equilibrium context. As just discussed, the disagreement point that the players would coordinate on in some state z must have been determined by the contract that the players made in the previous period. But if z is the initial state, then there is no previous period. So the question is: What is the disagreement point in the first period? Clearly, if future play would be consistent with contractual equilibrium, then the first-period disagreement point must be an element of the set of continuation values that are feasible, given contractual equilibrium play in later periods, when the players take the default joint action in the initial state. The point in this set that the players coordinate on must be determined exogenously, by some sort of social norm or other institution. Furthermore, since the first-period disagreement point relates to disagreement points in later periods, this institution coordinates the players’ expectations from every history in which the players had never reached an agreement.10

5. Analysis of Examples

This section contains analysis of the MW example, an example of a repeated game with contracting, and an example of contracting with spot transfers. These examples illustrate the procedure for characterizing contractual equilibrium and they demonstrate how the activeness of contracting influences the outcome.

5.1. MW Example

In this subsection, I formally elaborate the MW example from the Introduction. A state is denoted $(h, \mu)$, where h is the history of individual actions up to a particular period and μ is a function that tells the external enforcer what monetary transfer to make contingent on the history; that is, following history h, the external enforcer compels transfer $\mu(h) = (\mu_1(h), \mu_2(h))$, where $\mu_i(h)$ is the amount given to player i. Let , where

- is the set containing the initial (null) history of actions ,

- is the set of individual-action profiles in the first period (investment levels for the worker and a null, trivial action “ϕ" for the manager), and

- is the set of histories of individual actions through the end of the second period.

Note that includes the worker’s first-period investment choice and both players’ second-period effort choices.

The transfer function μ maps H to $\mathbb{R}^2$, subject to the following constraints. First, recall that, in the story, the external enforcer observes second-period effort choices but not the worker’s first-period investment. This is represented by assuming that the transfer function is measurable with respect to the partition

of H. The story also indicates that the transfer function must be relatively balanced. The interpretation I offer for this is that the transfer is actually the sum of (i) an immediate, voluntary transfer that the players make as they reach agreement in the negotiation phase, and (ii) a contingent transfer compelled by the external enforcer after individual actions are taken in a given period. Furthermore, the external enforcer only compels balanced transfers that sum to zero. I make the additional technical assumption that transfers are contained in some set , where β is arbitrarily large. To represent this idea, define to be the subset of transfer functions of the form that are measurable with respect to and have the following property. For and , .

The initial state is defined as , where denotes the constant transfer function that always specifies zero transfers. At the beginning of any period in state , the set of feasible joint actions is and the default joint action is . Regarding individual actions, there is no random draw. The set of feasible individual-action profiles in the first period is given by . The set of individual-action profiles in the second period is for all . The transition of the state works in the obvious way. From state , where , if μ is the joint action (transfer function selected) and a is the individual action profile in the current period, then the state in the following period is where is the history formed by appending a to h. Every state of the form for is a terminal state.

On payoffs, in the first period for any and , we have . Regarding the second period, take any where and , the vector is given by plus the vector in the cell of the matrix

that is relevant for action profile a.

Minor modifications represented by this formal description of the game, relative to the description in the Introduction, are (i) that the monetary transfers are restricted to be in some compact set and (ii) that an externally enforced transfer can be specified for the first period in addition to those specified for the second period. Item (i) allows an existence result from Appendix B to apply (in particular, Theorem 4); item (ii) was left out of the description in the Introduction because it was not central to the discussion.

For convenience, given any transfer function μ, I shall let be the vector specified for each in which is the individual-action played in the second period, I let be the vector specified for each in which is the individual-action played in the second period, and so on. This is in accord with the notation used in the presentation of the example in the Introduction.

I next characterize contractual equilibrium and find the maximum joint value that the players can attain, for each of the benchmark bargaining solutions , , and defined in Section 3.6. By Theorem 4 in Appendix B, contractual equilibrium exists in the cases of and ; existence will be obvious for the case of . Write , , and for the value correspondences that represent contractual equilibrium for these three cases. The following analysis establishes that

and

Thus, in this example, more active contracting implies a lower attainable joint value.

First consider . Let denote a transfer function that forces to be played in the second period and has . This is the transfer function from Alternative 1 in the Introduction. Take any and let be any state at the start of the second period where is the current transfer function. Note that because, unless the transfer function is renegotiated, the players will have the incentive to play at the end of the period. Also note that the players can renegotiate to pick a transfer function that forces and arbitrarily divide or throw away the value. This means that

By the definition of , we therefore have

In the first period, the players can select and agree on a second-period continuation value of if the worker chooses and a value of if the worker chooses any . That is, the players agree to coordinate on these history-dependent continuation values in the negotiation phase at the start of the second period.

When the players anticipate coordinating in this way, player 2 has the incentive to choose in the first period and therefore (if no transfer is specified for period 1) the payoff from the beginning of the game is . All or part of the joint value of 32 can be transferred to player 2 by way of an additional constant transfer (in period 1, for instance). The bottom line is that

In addition, it is not difficult to check that

By the definition of , we conclude that

Next consider with bargaining weights given by , as described in the Introduction. Let denote the transfer function associated with Alternative 3. That is, , , and . Take any and let be any state at the start of the second period where is the current transfer function. Noting that

and (as with the previous case), and using the definition of , we have

In the first period, the players can select and agree on a second-period continuation value of if the worker chooses and a value of if the worker chooses any . That is, the players agree to coordinate on these history-dependent continuation values in the negotiation phase at the start of the second period. Importantly, it is the disagreement point for their second-period negotiation that achieves history dependence.

When the players anticipate coordinating in this way, player 2 has the incentive to choose in the first period and therefore (if no transfer is specified for period 1) the payoff from the beginning of the game is . One can easily verify that no contract achieves a higher joint value than 31. To do so would require a specification of second-period transfers such that (i) there are two equilibria in the individual-action phase; and (ii) player 2’s payoffs in these two equilibria differ by strictly more than 9. It is not difficult to calculate that, because the transfer function is constrained to be relatively balanced (so ), the maximum difference in player 2’s payoffs in multiple equilibria of the individual-action phase is 9.

Continuing with the case of , one can easily calculate that . Combining this with the analysis of the preceding paragraph, we conclude that .

Finally, consider , with bargaining weights given by . In this case, is a singleton for every . Furthermore, on , is invariant in the “h" part of the state. Thus, whatever contract is formed in the first period, player 2’s continuation value from the beginning of the second period does not depend on his investment choice. He optimally selects . The players can do no better than agree to the contract of Alternative 2 in the Introduction. Player 1’s bargaining power gets him the entire joint value, so .

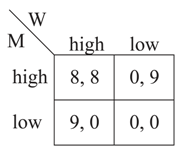

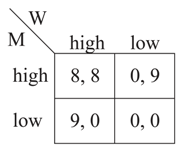

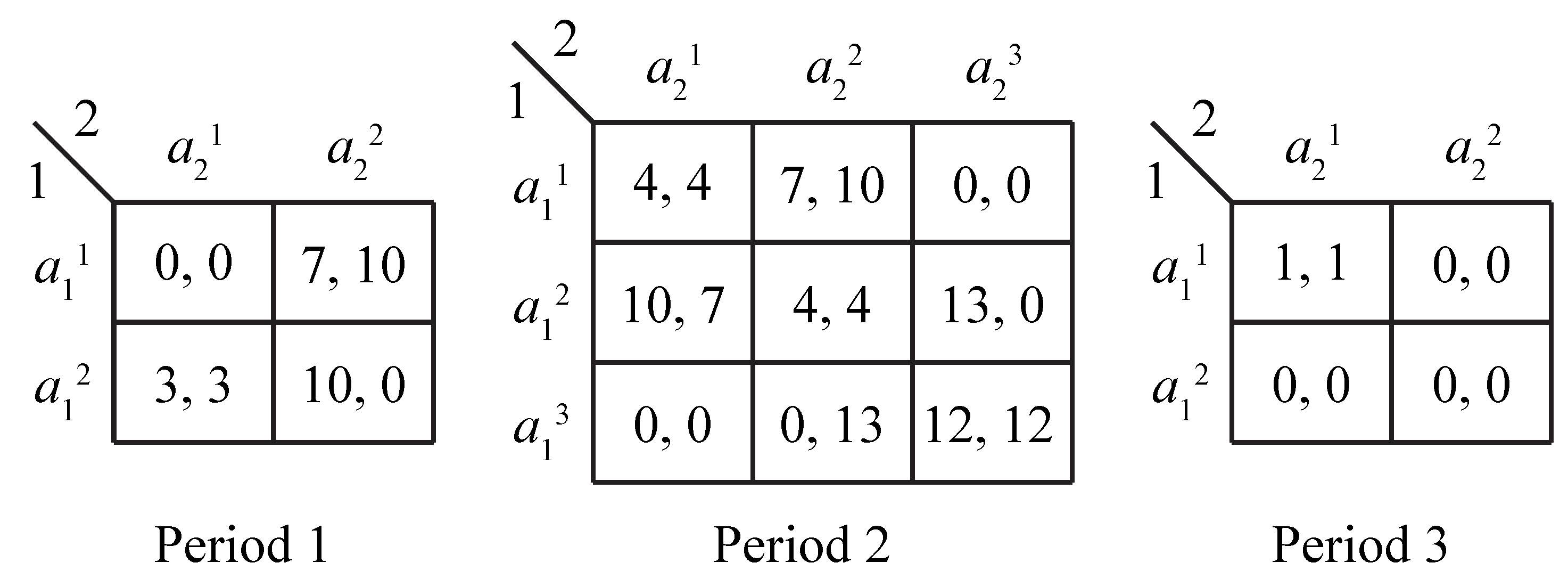

5.2. A Sequence of Stage Games with Contracting

The next example is a simple three-period sequence of stage games with no transfers and no external enforcement. There are no joint actions. Contracting thus relates only to the self-enforced activity. The example demonstrates that activeness of contracting is, in general, not monotonically related to the maximal attainable joint value.

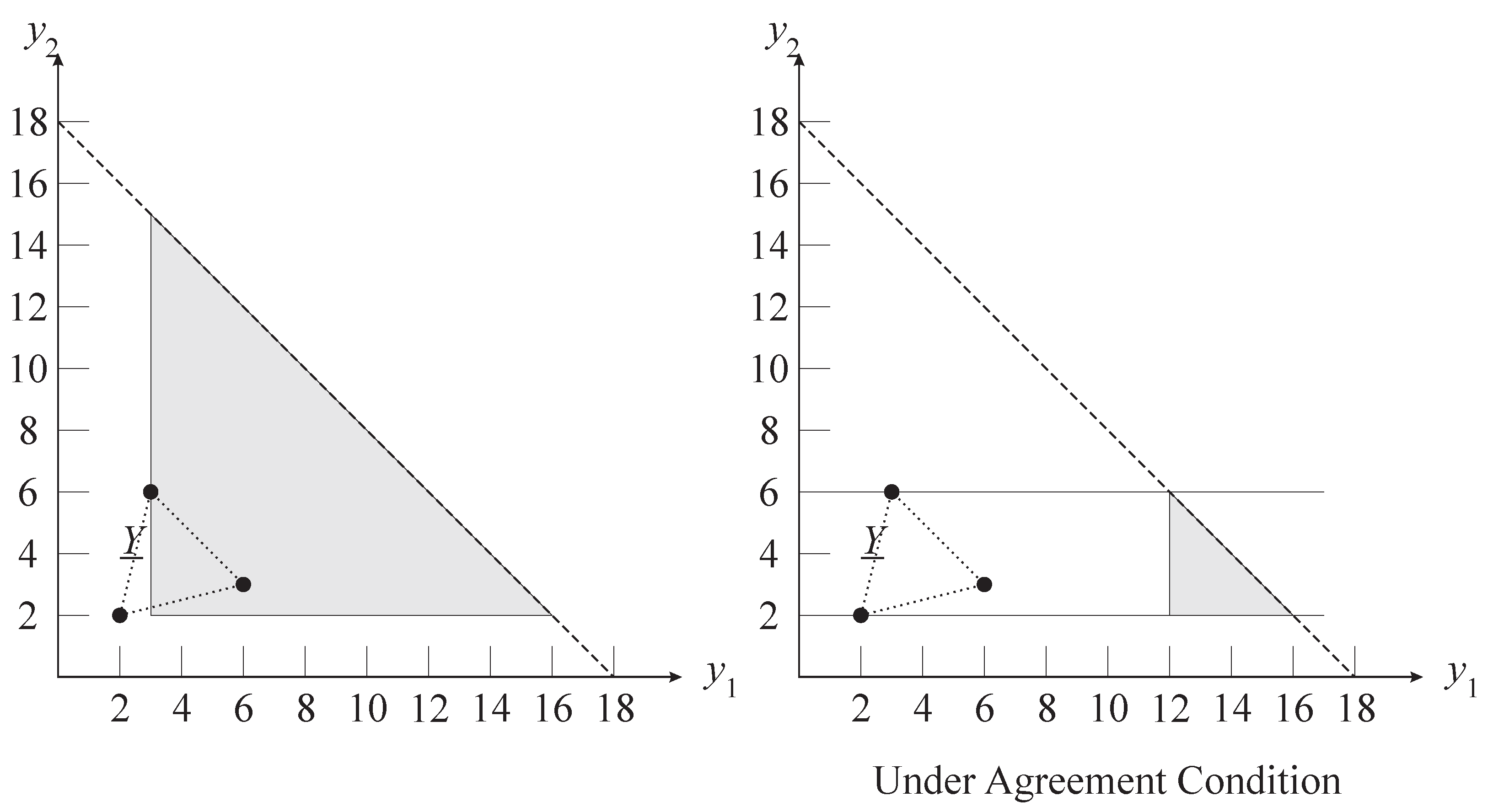

Suppose that the individual actions and payoffs are given by the three stage-game matrices shown in Figure 6.

Figure 6.

Stage games for second example.


The first stage game is played in period 1, the second in period 2, and the third in period 3. I next describe this contractual relationship formally. Because there are no joint actions, we can leave x out of the picture. First note that is the space of individual action profiles in periods 1 and 3, whereas is the space of individual action profiles in period 2. Next note that the state is simply the history of action profiles, so the set of states can be defined as follows: ,

and . State transitions are given by for each , where “" denotes the history formed by appending a to z. The definition of should be obvious. The payoff function u is defined according to the matrices shown in Figure 6.

Consider the class of bargaining solutions , parameterized by δ and defined as follows:

where “SPB” denotes the strong Pareto boundary. That is, for a contracting problem with feasible set Y, the solution with parameter δ selects the vectors that weakly dominate a disagreement value and are within δ of the strong Pareto boundary of Y. If δ = 0, then this is simply the strong Pareto boundary weakly above the disagreement set and, in the context of the multistage game, will yield “strong Pareto perfection.” If δ > 0, then inefficient points are included. This class of bargaining solutions is ordered by activeness: for δ < δ′, the solution with parameter δ represents more active contracting than does the solution with parameter δ′.
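The δ-solutions and the backward-induction construction used in this example can be sketched in code for the pure-strategy case. Several assumptions are made purely for illustration and are not taken from the text: distance to the strong Pareto boundary is measured in the sup norm; only pure-strategy stage-game equilibria are considered (the analysis that follows also uses a mixed equilibrium); off-path continuation values are chosen to punish the deviating player, which is possible because continuation values may depend on the realized action profile; and, since there are no joint actions, the disagreement set coincides with the feasible set, so the requirement of weakly dominating a disagreement value is met automatically. All function names are hypothetical, and the stage games in the note below are not those of Figure 6.

```python
from itertools import product
from typing import Dict, List, Set, Tuple

Payoff = Tuple[float, float]
StageGame = Dict[Tuple[str, str], Payoff]  # (a1, a2) -> stage payoff


def strong_pareto(Y: Set[Payoff]) -> Set[Payoff]:
    """Vectors in Y that no other vector in Y weakly dominates with a strict gain."""
    return {y for y in Y
            if not any(z != y and z[0] >= y[0] and z[1] >= y[1] for z in Y)}


def delta_solution(Y: Set[Payoff], delta: float) -> Set[Payoff]:
    """Vectors in Y within sup-norm distance delta of the strong Pareto boundary."""
    spb = strong_pareto(Y)

    def dist(y: Payoff) -> float:
        return min(max(abs(y[0] - z[0]), abs(y[1] - z[1])) for z in spb)

    return {y for y in Y if dist(y) <= delta}


def value_sets(games: List[StageGame], delta: float) -> List[Set[Payoff]]:
    """Backward induction over a finite sequence of stage games with no joint
    actions; returns the value set at the start of each period."""
    V_next: Set[Payoff] = {(0.0, 0.0)}          # value after the last period
    values: List[Set[Payoff]] = []
    for game in reversed(games):
        acts1 = {a1 for a1, _ in game}
        acts2 = {a2 for _, a2 in game}
        worst1 = min(v[0] for v in V_next)      # harshest punishment for player 1
        worst2 = min(v[1] for v in V_next)      # harshest punishment for player 2
        Y: Set[Payoff] = set()
        for (a1, a2), v in product(game, V_next):
            u = game[(a1, a2)]
            ok1 = all(game[(b1, a2)][0] + worst1 <= u[0] + v[0] for b1 in acts1)
            ok2 = all(game[(a1, b2)][1] + worst2 <= u[1] + v[1] for b2 in acts2)
            if ok1 and ok2:
                Y.add((u[0] + v[0], u[1] + v[1]))
        if not Y:
            raise ValueError("no pure-strategy equilibrium in some period")
        V_next = delta_solution(Y, delta)
        values.append(V_next)
    return list(reversed(values))
```

With a hypothetical prisoners’-dilemma stage game in period 1 and a coordination game in period 2, δ = 0 leaves a single continuation value for period 2, so only mutual defection can be supported in period 1 (maximal joint value 6), while δ = 1 keeps the inefficient period-2 equilibrium available as a punishment and supports mutual cooperation (maximal joint value 10).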

By Theorem 3 in Appendix B, contractual equilibrium exists. Note that, because the set of joint actions is trivial, for every z. Note also that, in this relationship, the actions in a period have no direct effect on the set of feasible actions or the payoffs in future periods. Therefore, for any two histories for some t, we must have and .

I next calculate the value correspondences for the case of bargaining solution (where δ = 0) and bargaining solution (where δ = 1). Let and describe the value correspondences for these two cases, respectively, and let and be the feasible continuation values from period t in these two cases.

Consider first the case of δ = 0, and let us construct V by backward induction, starting with the third period. The stage game in period 3 has two Nash equilibria, with payoffs and . Thus, we have . Because bargaining solution selects the Pareto dominant vector, we obtain . Since there is just one continuation value from the start of period 3, the only action profiles that can be achieved in period 2 are the Nash equilibria of the period 2 stage game (two in pure strategies, plus one mixed). These equilibria yield payoff vectors , , and . Adding the continuation value yields

Bargaining solution selects only the two vectors on the Pareto boundary, so we have
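The backward-induction step used here (find the stage-game Nash equilibria, then add the continuation value that the next period's bargaining outcome fixes) can be sketched as follows. The payoff table is a hypothetical coordination game, not one of the Figure 6 matrices, and the sketch finds pure-strategy equilibria only, so the mixed equilibrium mentioned above would need separate treatment.

```python
from itertools import product
from typing import Dict, List, Tuple

Profile = Tuple[str, str]
Game = Dict[Profile, Tuple[float, float]]


def pure_nash(game: Game) -> List[Profile]:
    """Pure-strategy Nash equilibria of a two-player stage game given as a payoff table."""
    rows = sorted({a1 for a1, _ in game})
    cols = sorted({a2 for _, a2 in game})
    equilibria = []
    for a1, a2 in product(rows, cols):
        u1, u2 = game[(a1, a2)]
        # Each player's action must be a best response to the other's action.
        if all(game[(b1, a2)][0] <= u1 for b1 in rows) and all(
            game[(a1, b2)][1] <= u2 for b2 in cols
        ):
            equilibria.append((a1, a2))
    return equilibria


def add_continuation(values: List[Tuple[float, float]], w: Tuple[float, float]):
    """Add a fixed continuation value w to each stage payoff vector."""
    return [(v1 + w[0], v2 + w[1]) for v1, v2 in values]


# Hypothetical coordination game (not one of the Figure 6 matrices).
coord: Game = {("A", "A"): (1, 1), ("A", "B"): (0, 0), ("B", "A"): (0, 0), ("B", "B"): (2, 2)}
eq = pure_nash(coord)
print(eq)  # [('A', 'A'), ('B', 'B')]
print(add_continuation([coord[p] for p in eq], (2, 2)))  # [(3, 3), (4, 4)]
```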

Finally, let us examine the players’ incentives regarding individual actions in period 1. Note that it is rational for the players to select in period 1 if they would then coordinate on continuation value following this action profile but switch to continuation value if player 1 were to deviate. This leads to continuation value from the beginning of the game. By contrast, if the players were to coordinate in another way from the start of period 2, then only can be achieved in period 1. Therefore, we obtain

and the bargaining solution selects .
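The incentive argument for period 1 compares each player's stage payoff plus the reward continuation value with the best deviation payoff plus the punishment continuation value. A minimal sketch of that one-shot deviation check, assuming two players with pure actions and a hypothetical prisoners' dilemma in place of the Figure 6 game; `supportable` and its arguments are illustrative names.

```python
from typing import Dict, Tuple

Profile = Tuple[str, str]
Game = Dict[Profile, Tuple[float, float]]
Vec = Tuple[float, float]


def supportable(game: Game, profile: Profile, reward: Vec, punish: Tuple[Vec, Vec]) -> bool:
    """Check one-shot deviation incentives in a two-player stage game.

    reward    -- continuation value the players coordinate on if `profile` is played
    punish[i] -- continuation value they switch to if player i alone deviates
    """
    rows = {a1 for a1, _ in game}
    cols = {a2 for _, a2 in game}
    a1, a2 = profile
    on_path = (game[profile][0] + reward[0], game[profile][1] + reward[1])
    # Player 1: no unilateral deviation may beat the on-path total.
    for b1 in rows - {a1}:
        if game[(b1, a2)][0] + punish[0][0] > on_path[0]:
            return False
    # Player 2: same check.
    for b2 in cols - {a2}:
        if game[(a1, b2)][1] + punish[1][1] > on_path[1]:
            return False
    return True


# Hypothetical prisoners' dilemma stage game (not from Figure 6).
pd: Game = {("C", "C"): (2, 2), ("C", "D"): (0, 3), ("D", "C"): (3, 0), ("D", "D"): (1, 1)}
print(supportable(pd, ("C", "C"), (2, 2), ((1, 1), (1, 1))))  # True
print(supportable(pd, ("C", "C"), (1, 1), ((1, 1), (1, 1))))  # False
```

When the reward and punishment values coincide, the check reduces to the stage-game Nash condition. This is why a single continuation value from the start of the next period leaves only stage-game Nash equilibria available in the current one.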

The story is different in the case of δ = 1. For period 3, we have as before, but now both values are selected by the bargaining solution because is a distance of 1 from , given the metric used in the bargaining solution. Thus, we have . In period 2, can be supported in equilibrium. This is achieved by the players coordinating on continuation value if this profile is played, and coordinating on continuation value if is not played. This specification leads to continuation value from period 2. The other values that can be achieved all utilize stage-game Nash equilibria in period 2, so the set of feasible continuation values is

Only is selected by the bargaining solution, because none of the other values is within unit distance of this point. Thus, . This further implies that, in period 1, only the stage-game Nash equilibrium can be played. So we obtain .

In this example, represents more active contracting than does , yet dominates . From the beginning of the game, the players fare strictly better if describes their negotiation behavior than if does. Intuitively, the problem with is that cannot be supported in the first period because there is no way to punish deviators with the single continuation value from the start of period 2. If the players could make spot transfers, then more continuation values would be available based on the disagreement points in period 2; settings with spot transfers are the subject of the next section.

6. Activeness and Monotonicity

The second example demonstrates that there is no general monotonicity relation between activeness of contracting and the maximal values attainable in contractual equilibrium. Monotonicity fails in the second example because the players are unable to transfer utility at the Pareto frontier. In settings where individual and joint actions have long-term effects (influencing the available actions and payoffs in future periods), monotonicity generally fails even with transfers. This section describes a simple class of contractual relationships, along the lines of the second example but with spot transfers, for which a monotonicity result holds.

Definition 5: A contractual relationship is said to be a sequence of stage games with spot transfers if it has the following structure. Interaction occurs over T periods, for some positive integer T. The joint action in each period is a transfer . For each , the set of individual action profiles in period t is given by some product set , where is the space of random draws. Payoffs in period t are given by a function . The set of states is , where:

- (i) ,
- (ii) for , , and
- (iii) .

Regarding state transitions, for , , and , the state at the start of period is , where “" denotes the vector formed by appending to z. Regarding feasible actions and payoffs, for any , , , and , define , , , and .
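The state and transition structure of Definition 5 can be sketched as a small container. This assumes two players, finite stage games given as payoff tables, and period payoffs equal to the stage payoff plus the period's transfer; it omits the random draws, and the class and method names are hypothetical. Following the convention for Miller and Watson's transfers described in this section, disagreement is represented as a zero transfer.

```python
from typing import Dict, List, Optional, Tuple

Profile = Tuple[str, str]
History = Tuple[Profile, ...]
Game = Dict[Profile, Tuple[float, float]]


class SpotTransferSequence:
    """Hypothetical container for a T-period sequence of stage games with spot transfers."""

    def __init__(self, stage_games: List[Game]):
        self.stage_games = stage_games  # stage_games[t - 1] is the period-t stage game
        self.T = len(stage_games)

    def period(self, z: History) -> int:
        # The state is the history of action profiles, so its length pins down the period.
        return len(z) + 1

    def transition(self, z: History, a: Profile) -> History:
        # The next state appends the current action profile to the history.
        return z + (a,)

    def payoff(self, z: History, a: Profile, m: Optional[Tuple[float, float]] = None):
        # m is the spot transfer agreed in this period; None stands for disagreement,
        # which implies zero transfers in the current period.
        transfer = (0.0, 0.0) if m is None else m
        u1, u2 = self.stage_games[self.period(z) - 1][a]
        return (u1 + transfer[0], u2 + transfer[1])


# Hypothetical two-period example (stage games are illustrative only).
pd: Game = {("C", "C"): (2, 2), ("C", "D"): (0, 3), ("D", "C"): (3, 0), ("D", "D"): (1, 1)}
coord: Game = {("A", "A"): (1, 1), ("A", "B"): (0, 0), ("B", "A"): (0, 0), ("B", "B"): (2, 2)}
rel = SpotTransferSequence([pd, coord])
z2 = rel.transition((), ("C", "C"))
print(z2, rel.period(z2))  # (('C', 'C'),) 2
print(rel.payoff((), ("D", "C")))  # (3.0, 0.0)
print(rel.payoff((), ("D", "C"), (-1.0, 1.0)))  # (2.0, 1.0)
```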

This class of contractual relationships is a slightly generalized version of the repeated-game setting that Miller and Watson (2013) study, but with a finite horizon. The spot transfers are exactly as specified by Miller and Watson, including that disagreement implies zero transfers in the current period. The slight generalization is that here I allow for different stage games across periods, whereas Miller and Watson focus on settings with a fixed stage game over an infinite number of periods.

As was the case for the example in Section 5.2, contractual equilibrium values depend only on the period number. That is, for any two histories for some t, we have . The same is true for the feasible continuation values and disagreement values: and . Note, however, that generally , because transfers are zero in disagreement.

To present the monotonicity result, I use an additional definition and two assumptions about bargaining solutions. For any vector and any set , define the sum as . Also, let “" be defined as the collection of sets .

Definition 6: Contracting problem is said to be linear-comprehensive if for some .

A linear-comprehensive problem has a linear Pareto boundary with every point below it feasible, as is the case when the players can make spot transfers.
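When utility is transferable one-for-one, the bargaining set is a half-space bounded by a hyperplane. A minimal sketch, assuming a linear-comprehensive set has the form {y : y_1 + ... + y_n ≤ level} for a scalar level (the paper's exact notation is not reproduced here); the class name is hypothetical. The `shift` method also illustrates the vector-plus-set sum defined in this section: translating such a set by v yields another linear-comprehensive set whose level rises by the sum of the coordinates of v.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class LinearComprehensiveSet:
    """Assumed form of a linear-comprehensive bargaining set: {y : y_1 + ... + y_n <= level}."""

    level: float

    def contains(self, y: Sequence[float]) -> bool:
        return sum(y) <= self.level

    def on_boundary(self, y: Sequence[float], tol: float = 1e-9) -> bool:
        # The Pareto boundary is the hyperplane where the coordinates sum to `level`.
        return abs(sum(y) - self.level) <= tol

    def shift(self, v: Sequence[float]) -> "LinearComprehensiveSet":
        # v + Y = {v + y : sum(y) <= level} = {x : sum(x) <= level + sum(v)}
        return LinearComprehensiveSet(self.level + sum(v))


Y = LinearComprehensiveSet(4.0)
print(Y.contains((1, 3)), Y.on_boundary((1, 3)))  # True True
print(Y.on_boundary((2, 2)))  # True: a transfer of 1 moves (1, 3) to (2, 2)
print(Y.contains((3, 3)))  # False
print(Y.shift((1, -2)).level)  # 3.0
```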

Definition 7: A bargaining solution S is said to be monotone in the disagreement set if, for any contracting problems and that satisfy Assumption 1, , and , the bargaining solution satisfies . The bargaining solution is said to be shift-monotone if, for any contracting problems and that are linear-comprehensive, satisfy Assumption 1, and have the property that and , the bargaining solution satisfies for some .

Definition 8: A bargaining solution S is said to be invariant to additive constants if, for any contracting problem and vector , the bargaining solution satisfies .

“Monotone in the disagreement set” and “invariance to additive constants” are standard conditions. “Shift-monotone” says, roughly, that if the Pareto boundary of the bargaining set shifts out and the set of disagreement points expands, then the set of negotiation values expands subject to a normalizing shift. It is not difficult to confirm that bargaining solutions , , and described in Section 3.6 and Section 5.2 all have these properties (but does not).
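Invariance to additive constants can be spot-checked numerically: shifting the bargaining and disagreement sets by a vector v should shift the selected set by v. A minimal sketch, assuming finite sets and using the δ = 0 member of the Section 5.2 class (strong Pareto boundary weakly above the disagreement set); the function names and data are hypothetical, and a passing check on one example is evidence, not a proof.

```python
from typing import Callable, List, Sequence, Tuple

Vector = Tuple[float, ...]
Solution = Callable[[List[Vector], List[Vector]], List[Vector]]


def shift(points: List[Vector], v: Sequence[float]) -> List[Vector]:
    """Translate every point by the vector v."""
    return [tuple(p_i + v_i for p_i, v_i in zip(p, v)) for p in points]


def spb_solution(Y: List[Vector], D: List[Vector]) -> List[Vector]:
    """delta = 0 member: strongly efficient points of Y that weakly dominate some d in D."""

    def geq(a, b):
        return all(x >= y for x, y in zip(a, b))

    efficient = [y for y in Y if not any(z != y and geq(z, y) for z in Y)]
    return [y for y in efficient if any(geq(y, d) for d in D)]


def threshold_rule(Y: List[Vector], D: List[Vector]) -> List[Vector]:
    """A rule with a fixed joint-value threshold, used as a non-invariant contrast."""
    return [y for y in Y if sum(y) >= 5]


def invariant_on_example(S: Solution, Y, D, v) -> bool:
    """Spot-check S(v + Y, v + D) == v + S(Y, D) on one finite example."""
    return sorted(S(shift(Y, v), shift(D, v))) == sorted(shift(S(Y, D), v))


Y = [(3, 3), (2, 3), (0, 0)]
D = [(0, 0)]
print(invariant_on_example(spb_solution, Y, D, (1, -2)))  # True
print(invariant_on_example(threshold_rule, Y, D, (-2, -2)))  # False
```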

Here is the monotonicity result.

Theorem 2: Consider a contractual relationship given by a sequence of stage games with spot transfers. Let S and be bargaining solutions that are shift-monotone and invariant to additive constants, and suppose that represents more active contracting than does S. Assume that contractual equilibrium exists for both S and . Let be the contractual equilibrium values for , and let be the contractual equilibrium values for S. Then for each , there exists a vector such that .

An immediate implication of Theorem 2 is that, for each t, the set V is Pareto superior to the set in that, for every point there is a point that weakly Pareto dominates v. In words, less active contracting leads to higher maximal joint values in all states. A proof of Theorem 2 may be found in Appendix C.
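The Pareto-superiority relation in this corollary can be checked mechanically on finite sets. A minimal sketch, assuming the value sets are given as finite lists of payoff vectors (the paper's sets need not be finite); the function names and data are hypothetical. The last function illustrates the step from Pareto superiority to maximal joint values: if every point of W is weakly dominated by a point of V, then the largest coordinate sum over V is at least the largest over W.

```python
from typing import List, Sequence, Tuple

Vector = Tuple[float, ...]


def weakly_pareto_dominates(v: Sequence[float], w: Sequence[float]) -> bool:
    """True if v is at least as large as w in every coordinate."""
    return all(vi >= wi for vi, wi in zip(v, w))


def pareto_superior(V: List[Vector], W: List[Vector]) -> bool:
    """True if every point of W is weakly Pareto dominated by some point of V."""
    return all(any(weakly_pareto_dominates(v, w) for v in V) for w in W)


def max_joint_value(V: List[Vector]) -> float:
    """Largest sum of coordinates over the set."""
    return max(sum(v) for v in V)


# Hypothetical value sets, not derived from the paper.
V = [(3, 3), (4, 2)]
W = [(2, 2), (4, 1)]
print(pareto_superior(V, W), pareto_superior(W, V))  # True False
print(max_joint_value(V), max_joint_value(W))  # 6 5
```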

7. Conclusions

The modeling exercise reported here has two objectives. First, it seeks to elaborate on the existing contract-theory literature by clarifying some basic concepts and, in so doing, encouraging research that explicitly considers both the externally enforced and self-enforced aspects of contract enforcement. The framework I have developed brings together elements of the contract-theory literature (which, although not exclusively, tends to focus on renegotiation of externally enforced contracts), the repeated-game literature (which focuses on renegotiation of self-enforced contracts), and the cheap-talk literature (which focuses on how verbal statements may influence subsequent behavior in equilibria of non-cooperative games).

The second objective of this modeling exercise is to introduce the idea of activeness of contracting (including the key element of a disagreement point for self-enforced components) and to show that the relation between activeness and the predicted outcomes of a contractual relationship is subtle and perhaps interesting. I do not take a position on whether people in real contractual settings are more or less active in their contract negotiation, for this is an empirical issue. Rather, the model helps define activeness and explore its implications, which is a prerequisite for any empirical evaluation.

The modeling framework developed herein lends itself to a wide variety of applications. Repeated-game models are one special case. For example, using the terminology developed here, the analysis of Benoit and Krishna (1993) [8] identifies contractual equilibrium associated with the bargaining solution that selects weakly efficient continuation values. The point of the present modeling exercise is not to reinvent the renegotiation-in-repeated-games wheel, of course, but to extend the theory by generalizing the bargaining component and incorporating external enforcement. For instance, the framework can be used to analyze more complicated and realistic settings than are commonly studied in the literature (for example, settings with monetary transfers and external enforcement, and where players take actions that directly affect the structure of the game in future periods).

Another special case is the setting of relationships with unverifiable investment and hold-up (Hart and Moore 1988 [28], Maskin and Moore 1999 [29], and the ensuing body of work). In this category, the framework facilitates the careful analysis of individual trade actions (Watson 2007 [3]) and the examination of settings with more complicated dynamics than have been studied to date (such as Watson 2005 [2]). On the abstract side, the framework developed here may be useful in analyzing problems of “design” with all sorts of real constraints (on, say, liquidity, the timing and alienability of actions, and external enforcement) that are currently not extensively studied.

There are some obvious directions for further research, including the relation between activeness and maximal joint values. Also, contracts along the lines of Alternative 3 in the MW example represent a nuanced view of Bernheim and Whinston’s (1998) [14] “strategic ambiguity,” where players take full advantage of external enforcement to create situations with multiple equilibria and then select among these equilibria as a function of the history. To create the multiplicity of equilibrium, players may want to use “less complete” contracts rather than “more complete” ones. (Bernheim and Whinston restrict attention to forcing contracts, where multiplicity is negatively related to a notion of completeness.)

Additional links to the literature are worth pointing out. Watson (2002) [30] puts forth an informal version of the contractual equilibrium concept, called negotiation equilibrium, whose definition can be made more precise here. A negotiation equilibrium is the instance of contractual equilibrium in which the parties negotiate actively over only the externally enforced component of contract. Specifically, a continuation value is selected and fixed for each individual joint action, and then the bargaining set is defined as the set of these values, yielding a conventional bargaining problem (with a single disagreement point) to which a bargaining solution is applied. Ramey and Watson (2002) [17] and Klimenko, Ramey, and Watson (2008) [20] examine another variant, which the latter authors call a recurrent agreement; it is built on the theoretical foundation developed here but is based on the idea that, in a repeated game, players revert to a Nash equilibrium of the stage game when they fail to reach an agreement on how to play in the continuation.

Acknowledgements

University of California, San Diego. Internet: http://weber.ucsd.edu/∼jwatson/. The research reported herein was supported by NSF grants SES-0095207 and SES-1227527.

Conflict of Interest

The author declares no conflict of interest.

- 1.I elaborate on this assumption in Section 5, where the example is described more formally.

- 2.The determination of efficiency includes consideration of transfers; the profile is inefficient because, with the appropriate transfers, both players can be made better off if they select . That the Nash equilibria are weak is of little consequence for the point of the example; for simplicity of exposition, this example was constructed to avoid having to examine mixed strategy equilibria.

- 3.For example, the parties may say to each other that, at some later time, they will coordinate on the individual-action profile a that leads to value y, whereas they both anticipate actually coordinating on .

- 4.See also Farrell and Rabin (1996) [26].

- 5.One could use something other than subgame perfection for condition (ii), but subgame perfection is suitable for my purposes herein.

- 6.There is an important philosophical issue regarding the essential role of a social norm even with the most active of contracting, which I discuss in Section 4.

- 7.One could have , but the case of a finite K has perhaps a more straightforward interpretation whereby the continuation of the relationship must start at units of time from when negotiation begins.

- 8.For some nonconvex examples of Y, one can find mixed equilibria and/or equilibria in which delay occurs. I ignore these.

- 9.By making z the full history of the relationship, we have a setting of almost perfect information. Assumptions about what the players condition their behavior on can then be imposed by restricting Z. Imperfect information can be accommodated by specifying that the state does not fully record all of the players’ past actions; in this case, attention is limited to settings in which the events that are not common knowledge are also not payoff relevant for future interaction, and we are then assuming public equilibrium.