Modern video content distribution must satisfy at least two requirements: the transmission needs to use little bandwidth and have low latency.

Usually, a lower bound on latency is imposed by the underlying network transmission medium, but the software used to send and receive the media content can also introduce extra delay. This software may add latency through the processing it does (e.g., coding/decoding, compression/decompression).

The minimum bandwidth requirement is strictly a software issue: while the transmission medium has an upper limit, it does not impose a lower bound, as it does in the case of latency. The bandwidth problem can be mitigated by implementing proper algorithms for coding and compression before sending the media data to the clients.

1.1. Introduction to Similar Systems

A video streaming service must be able to offer low latency, high quality and extensions such as ease of navigation, subtitles and chapters. In order to manage the complexity of the system, it must be split into layers [1,2]; our attention is aimed at the application layer, which handles the coding and decoding of the data transmitted through the transport layer.

The application layer consists of the video container, the codec and the program that renders the results. The video container is a meta-file containing information, like aspect ratio, frame rate, key-frames and duration, with reference to the coded data. Popular containers are AVI, MP4, MOV and FLV, with MP4 and MOV evolving to be technically the same and AVI being more limited than the rest.

The video codec is responsible for packing the video data in as little information as possible and recovering the original data while rendering. It is not mandatory for the compression to be light-weight, because it only takes place once, on a dedicated machine (though portable video cameras can benefit from a low complexity compression). On the other hand, the decompressing takes place on all of the systems on which the video must be displayed; thus, even low power devices must be able to cope with the complexity of the algorithm.

The most used video codec today is H.264 (e.g., on YouTube), an improved version of MPEG. It uses three types of frames: I (intra-coded, holding all of the information in an image), P (predicted, holding the difference between the predicted and the actual frame) and B (bi-predicted, constructed from both a preceding and a following reference frame). Each frame is composed of 8 × 8 pixel tiles, which are compressed with the discrete cosine transform and are tracked inside larger 16 × 16 pixel macro-blocks, in order to estimate the movement and to improve the P and B frames. The High Efficiency Video Coding standard (HEVC/H.265) uses tiles of variable size (1–64 pixels), intra-frame predictions (from neighboring pixels in a frame), more accurate motion estimation and deblocking for diminishing compression artifacts, along with numerous extensions, like 3D depth data.

The H.264 extension Scalable Video Coding (SVC) splits the video data into a base video and multiple residues, with the intention of adding an adaptive quality feature to the codec. It includes temporal (dropping frames), spatial (reducing resolution, obtained through layers) and quality scalability.

The drawbacks of this mechanism are its complexity and the single-frame image quality. To exemplify: when surveillance cameras transitioned from Motion JPEG to MPEG2, the recording device was observed to drop frames and, in exchange for maintaining a high-quality recording of the static scenes, the important individual frames lacked the detail needed to distinguish a subject. Anyone who wants to freeze an H.264 picture for forensic investigation purposes is best served by stepping to the closest I frame, possibly missing the important event. Furthermore, more advanced network cameras and high-performance monitoring stations are required to meet the current demands of 30 fps and high-resolution acquisition, which cannot be easily achieved with wireless devices.

However, this problem has been partially handled by the profile settings: the baseline profile uses simpler algorithms and no B frames, resulting in a lower-quality video at the same bandwidth; the high profile uses high-performance, high-complexity algorithms.

One of the big problems that affect MPEG encoders is rapid motion, as motion estimation cannot correctly determine the positions of the blocks. This has been improved in H.264 by using more reference frames and, thus, smaller distances between fast-moving frames, but at the cost of processing power (every reference frame has to be tested for block movements) and file size. These costs are unacceptable in a system where only a minute percentage of the frames contain the desired information. MPEG variants are designed to give a sense of quality during playback, through prediction, but when a person's face needs to be observed, no single frame contains enough information, because of the high compression.

Motion JPEG and MxPEG encode each frame using temporal coherence and the JPEG image codec in order to resolve the single-frame quality issue. Motion tracking does not take place, so fast-moving scenes do not represent a problem. Another advantage comes with Windows-based file systems like New Technology File System (NTFS) and File Allocation Table (FAT), because they are optimized to work with many small files rather than a single large file. As a file becomes larger, the system ends up fragmenting it, and performance decreases over time for devices based on a single, large file. However, all of these advantages come at the cost of bandwidth and memory, with typical videos being around 80% larger than their H.264 counterparts. That is why these codecs are seldom used at the needed speed of 30 fps. They are also unreliable on variable-bandwidth networks, because they can only be sent at the quality at which they exist on the disk.

1.2. Hypercube Base Algorithm

This paper continues and generalizes the research presented in [3], proposing a method for compressing a video sequence by grouping multiple frames in a cube and splitting it into multiple cube residues (of different resolutions), a “hypercube”. The work conducted in this paper introduces the finer hypercubes mentioned in the “future work” section of the previous article and includes the implementation and testing of the codec.

The idea is an extension of the Laplacian pyramid [4] for images to video. A frame cube is a 3D texture created from a sequence of frames taken from a video source. Frame cubes can be downsampled and re-upsampled multiple times (at different scales) in order to compute frame cube residues (which are the difference between the original and interpolated cubes).

As a quick overview, a “frame hypercube” is a series of incrementally smaller frame cube residues and a base frame cube. As seen in Figure 1, the reconstruction of the original frame cube is done by reversing the process, repeatedly upsampling the base cube and adding the subsequent residue level. The reason behind this representation is that the residue frame cubes contain very little information (the difference between scales), in the form of small values, centered at zero [5,6]. Thus, after a remapping of the value interval, they are better suited for entropic compression methods (e.g., Huffman coding).
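The construction and reconstruction described above can be sketched as follows. This is a minimal illustration and not the codec from [3]: it assumes cube dimensions that are powers of two, downsampling by averaging 2 × 2 × 2 blocks and nearest-neighbour upsampling, and all function names are hypothetical.

```python
def dims(cube):
    """Return (frames, height, width) of a nested-list frame cube."""
    return len(cube), len(cube[0]), len(cube[0][0])

def downsample(cube):
    """Halve every axis by averaging 2x2x2 blocks (assumed filter)."""
    t, h, w = dims(cube)
    return [[[sum(cube[2 * k + dk][2 * j + dj][2 * i + di]
                  for dk in (0, 1) for dj in (0, 1) for di in (0, 1)) / 8.0
              for i in range(w // 2)]
             for j in range(h // 2)]
            for k in range(t // 2)]

def upsample(cube):
    """Double every axis with nearest-neighbour interpolation (assumed filter)."""
    t, h, w = dims(cube)
    return [[[cube[k // 2][j // 2][i // 2] for i in range(2 * w)]
             for j in range(2 * h)]
            for k in range(2 * t)]

def combine(a, b, op):
    """Apply op element-wise to two cubes of equal size."""
    return [[[op(x, y) for x, y in zip(row_a, row_b)]
             for row_a, row_b in zip(frame_a, frame_b)]
            for frame_a, frame_b in zip(a, b)]

def build_hypercube(cube, levels):
    """Return (base, residues); residues[0] is the finest level."""
    residues = []
    for _ in range(levels):
        small = downsample(cube)
        # Residue = original minus the interpolated (down- then upsampled) cube.
        residues.append(combine(cube, upsample(small), lambda x, y: x - y))
        cube = small
    return cube, residues

def reconstruct(base, residues, received=None):
    """Upsample the base repeatedly, adding residues from coarse to fine.

    Only the `received` coarsest residue levels are added; missing finer
    levels are skipped, which yields a lower-quality cube of full size.
    """
    available = len(residues) if received is None else received
    cube = base
    for level, residue in enumerate(reversed(residues)):
        cube = upsample(cube)
        if level < available:
            cube = combine(cube, residue, lambda x, y: x + y)
    return cube
```

Calling `reconstruct(base, residues)` with every level returns the original cube, while a smaller `received` value gives the degraded cube a client could show when only part of the hypercube has arrived.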

The real advantage of this scheme is that it can be used for adaptive transmission and reception: transmitting the hypercube slices (i.e., frame cube residues at different scales) is done starting from lower sizes going to higher sizes. A consequence of this strategy is that, if a drop in bandwidth occurs, even though a client may receive an incomplete number of frame cube residues, the client software is still able to synthesize a lower-quality frame cube and use that to present the frames [7].

The method and results for this technique are presented in more detail in [3].

Figure 1. Algorithm to synthesize initial full-quality frame cube Ai. Residue cubes are marked R; the starting base frame cube is marked B.


1.3. Octree Base Algorithm

The main optimization, which is the topic of this paper, is splitting the initial frame cube using an octree algorithm. The reason for doing this is the need to increase the granularity of the transmitted data. In this sense, the octree splitting algorithm works in the following way.

Given the starting full-quality frame cube, it is no longer converted directly into a frame hypercube, but it can be split into eight sub-cubes, as depicted in Figure 2. The positions of the sub-cubes are fixed in order to remove complexity from the reconstruction algorithm. As such, the origin is in the top, left, front sub-cube, and we have:

Cube 0 in top, left, front

Cube 1 in top, right, front

Cube 2 in bottom, left, front

Cube 3 in bottom, right, front

Cube 4 in top, left, back

Cube 5 in top, right, back

Cube 6 in bottom, left, back

Cube 7 in bottom, right, back
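The fixed numbering above follows a simple bit pattern: bit 0 selects left/right, bit 1 selects top/bottom and bit 2 selects front/back. The sketch below makes this explicit. It assumes that "front" and "back" refer to the earlier and later halves of the time axis and that the cube dimensions are even; the function names are hypothetical.

```python
def sub_cube_origin(index, size):
    """Return the (t, y, x) offset of sub-cube `index` (0..7).

    `size` is the parent cube size as (frames, height, width).
    Assumption: front/back is the time axis, with front = earlier frames.
    """
    frames, height, width = size
    right = index & 1          # bit 0: 0 = left, 1 = right
    bottom = (index >> 1) & 1  # bit 1: 0 = top, 1 = bottom
    back = (index >> 2) & 1    # bit 2: 0 = front, 1 = back
    return (back * (frames // 2), bottom * (height // 2), right * (width // 2))

def describe(index):
    """Return the position label used in the list above, e.g. 'top, right, front'."""
    vertical = "bottom" if (index >> 1) & 1 else "top"
    horizontal = "right" if index & 1 else "left"
    depth = "back" if (index >> 2) & 1 else "front"
    return f"{vertical}, {horizontal}, {depth}"
```

Because the layout is fixed, the client needs only the index path from the root to locate a leaf-cube inside the original frame cube; no per-node coordinates have to be transmitted.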

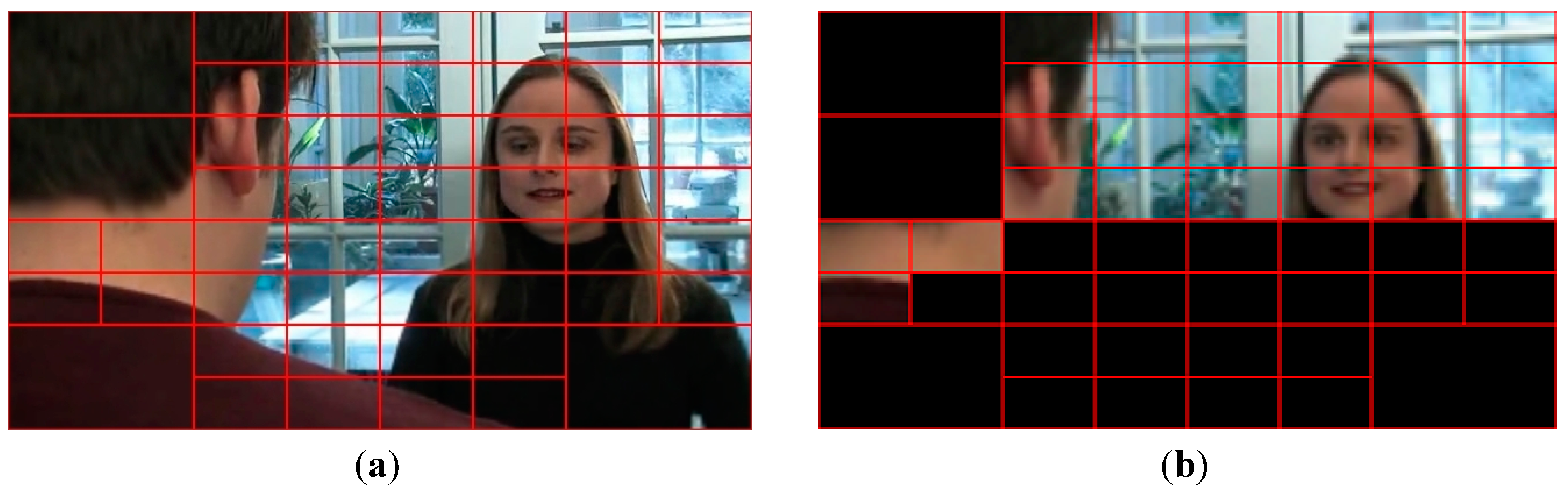

The splitting into sub-cubes is recursive, and the end-condition can be expressed as a function of the sub-cube size and a metric determined before each splitting. The metric chosen in the current implementation is based on the standard deviation. It is calculated as the mean of the standard deviations of each slice in the given frame cube. While there may exist better metrics, the reasoning behind choosing this particular metric is that it measures the “uniformity” of the pixel colors inside the given frame cube [8]. This means that the more uniform the color is, the less information will be wasted in the compression stage. Since this algorithm generates a tree structure (octree), the cubes that can no longer be split will be called leaf-cubes.
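A minimal sketch of the recursive split and its end-condition is given below. It assumes that a "slice" is one frame of the cube, that pixels are single grey values, and that the cube dimensions are powers of two; the `threshold` and `min_size` parameters and all function names are illustrative assumptions, not values taken from the implementation.

```python
from statistics import pstdev

def slice_std_mean(cube):
    """Mean of the per-frame (slice) standard deviations of the pixel values."""
    stds = [pstdev([p for row in frame for p in row]) for frame in cube]
    return sum(stds) / len(stds)

def crop(cube, t0, y0, x0, t, h, w):
    """Extract a t x h x w sub-cube starting at offset (t0, y0, x0)."""
    return [[row[x0:x0 + w] for row in frame[y0:y0 + h]]
            for frame in cube[t0:t0 + t]]

def split_octree(cube, origin=(0, 0, 0), threshold=5.0, min_size=2):
    """Return the leaf-cubes as a list of (origin, size) tuples.

    A cube becomes a leaf when it is uniform enough (metric <= threshold)
    or when halving it would go below `min_size` on some axis.
    """
    t, h, w = len(cube), len(cube[0]), len(cube[0][0])
    if min(t, h, w) // 2 < min_size or slice_std_mean(cube) <= threshold:
        return [(origin, (t, h, w))]
    ht, hh, hw = t // 2, h // 2, w // 2
    leaves = []
    for index in range(8):
        # Fixed layout: bit 0 = right, bit 1 = bottom, bit 2 = back.
        dt = ((index >> 2) & 1) * ht
        dy = ((index >> 1) & 1) * hh
        dx = (index & 1) * hw
        sub = crop(cube, dt, dy, dx, ht, hh, hw)
        sub_origin = (origin[0] + dt, origin[1] + dy, origin[2] + dx)
        leaves += split_octree(sub, sub_origin, threshold, min_size)
    return leaves
```

With this end-condition, uniform regions stay as large leaf-cubes while detailed regions are subdivided further, which is what gives the transmitted data its finer granularity.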

Figure 2. Octree fixed structure. Second sub-cube (highlighted) is recursively split.


The algorithm used for the construction of the octree generates a set of leaf-cubes. Each of these can now be fed through the same algorithm presented in [3], in order to create a hypercube of resolutions. Therefore, at the end of the octree construction, the software will have a list of leaf-cubes represented in hypercube form. In the original scheme, the transmission order was implicit (there was only one hypercube, so the base and residue layers were sent in succession); the remaining problem is that there are now multiple hypercubes of different sizes that need to be prioritized.

The chosen prioritization scheme is based on a round-robin transmission from a sorted set of hypercubes. The sorting is done in order of importance: the temporal depth and, afterwards, the actual 2D sizes of the frame images. Using this scheme, the server can use a severely limited bandwidth in order to get a minimum amount of information to the client, to the point that, for hypercubes that get to transmit no data, the client just replaces them with a blank frame cube or the most recent available cube.
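The prioritization can be illustrated with the sketch below. The text does not state the sort direction or how the bandwidth limit is enforced, so the following are assumptions: hypercubes with a larger temporal depth and then a larger 2D frame area go first, each slice has a known size in bytes, and a hypercube stops transmitting as soon as its next slice no longer fits in the remaining budget. The dictionary keys and the function name are hypothetical.

```python
from collections import deque

def schedule(hypercubes, budget):
    """Return the transmission order as a list of (id, slice_index).

    Each hypercube is a dict with keys 'id', 'depth', 'width', 'height'
    and 'slices', where 'slices' lists the byte size of every slice from
    the base (coarsest) to the finest residue.
    """
    # Assumed priority: larger temporal depth first, then larger 2D area.
    ordered = sorted(hypercubes,
                     key=lambda h: (-h["depth"], -h["width"] * h["height"]))
    queue = deque((h, 0) for h in ordered)
    sent = []
    while queue:
        cube, index = queue.popleft()
        size = cube["slices"][index]
        if size > budget:
            # Slices must arrive in order, so this hypercube is done.
            continue
        budget -= size
        sent.append((cube["id"], index))
        if index + 1 < len(cube["slices"]):
            # Round-robin: go to the back of the queue for the next slice.
            queue.append((cube, index + 1))
    return sent
```

Hypercubes that never appear in the returned list are the ones for which the client falls back to a blank frame cube or the most recent available cube.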

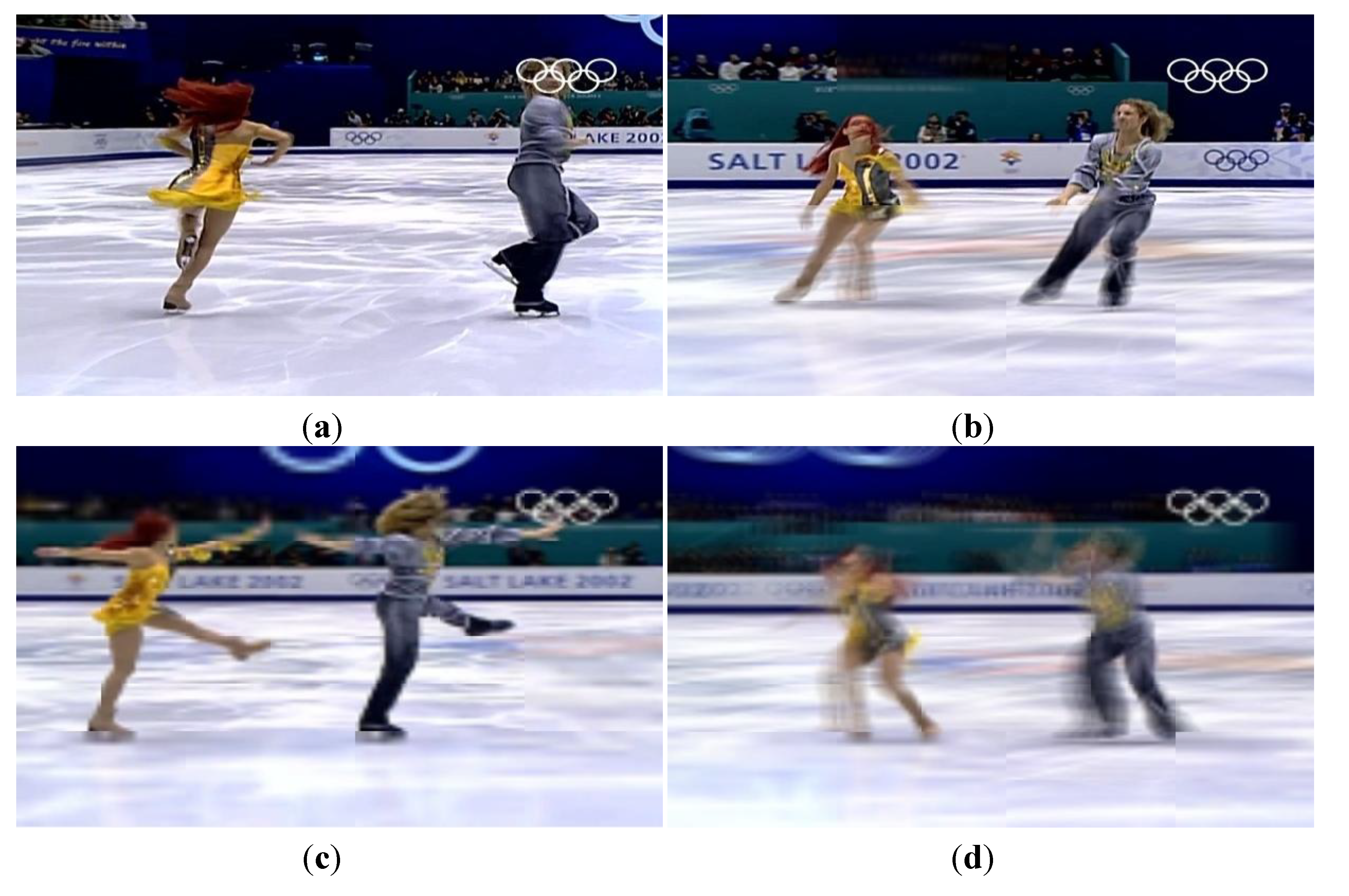

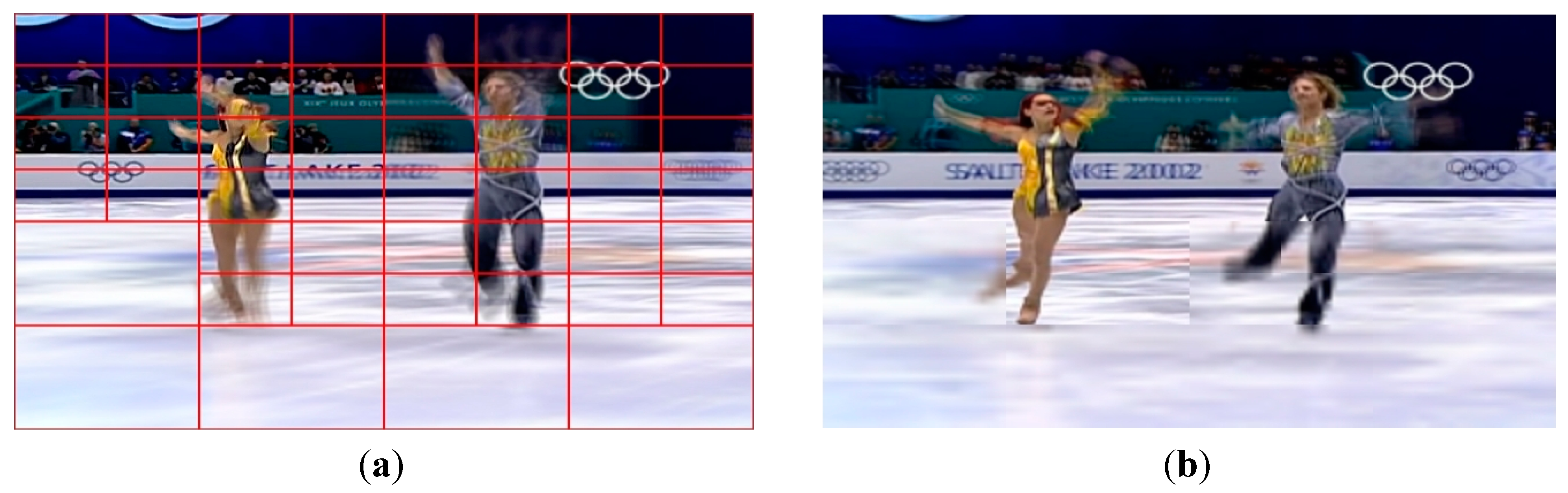

Figure 3. Degradation process in a dynamic scene: (a) 90%, loss of insensible detail (Peak Signal-to-Noise Ratio (PSNR) 36.9 dB); (b) 55%, uniform areas start losing temporal information, causing regional motion blur (PSNR 22.3 dB); (c) 30%, spatial information loss becomes noticeable (PSNR 14.0 dB); (d) 25%, example of temporal and spatial information loss (PSNR 18.0 dB).


The technique as presented so far does, however, have a drawback. If the frame hypercubes are not received in full resolution, there will be a noticeable border between them and the complete sub-sequences (Figure 3b). To correct this issue, a compensation mechanism is needed. In this project, this was done by artificially increasing the size of the leaf-cubes, thereby obtaining some overlapping data between them. This also raises the lower bandwidth limit. The overlapping of the hypercubes now allows the client software (which knows the relative positions of the octree nodes) to blend across the 3D edges between the frame cubes. This means that frame cubes of higher quality (computed as the ratio between the received number of hypercube slices and the full-quality number of slices) get to blend into the neighboring lower-quality cubes. If they have the same quality factor, the blending occurs simultaneously on both sides. The blending algorithm is quite straightforward, and it assumes that the blending is done (either linearly with equal weights or non-linearly with variable weights) on each of the three axes using Equation (1).

$$P(x) = w(x)\,C_1(x) + \bigl(1 - w(x)\bigr)\,C_2(x) \qquad (1)$$

where:

x is the distance from the edge on the respective axis/plane;

P(x) is the computed pixel color;

C1(x) and C2(x) are input colors from both cubes at distance x from the edge;

w(x) is the computed weight with respect to x. If used as a linear blending function: w(x) = 0.5.
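As an illustration of the definitions above, the sketch below blends two rows of overlapping samples along one axis. It assumes that Equation (1) is the usual weighted combination P(x) = w(x)·C1(x) + (1 − w(x))·C2(x); the ramp weight is a hypothetical example of a variable-weight function and is not taken from the implementation.

```python
def blend(c1, c2, weight):
    """Blend two sample rows across the overlap region.

    c1[x] and c2[x] are the colours from the two cubes at distance x
    from the edge; weight(x) returns w(x) in the range [0, 1].
    """
    return [weight(x) * a + (1.0 - weight(x)) * b
            for x, (a, b) in enumerate(zip(c1, c2))]

def constant_weight(x):
    """Equal weights, w(x) = 0.5, as stated for the linear case."""
    return 0.5

def make_ramp(overlap):
    """Hypothetical variable weight that rises across `overlap` samples."""
    def ramp(x):
        return (x + 0.5) / overlap
    return ramp
```

For example, `blend([100, 100], [0, 0], constant_weight)` averages the two cubes at every position, whereas `blend(c1, c2, make_ramp(len(c1)))` shifts gradually from one cube to the other across the overlap. The same function would be applied once per axis for the full 3D blend.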