Architecture and Knowledge-Driven Self-Adaptive Security in Smart Space

Abstract

1. Introduction

2. Background and Related Work

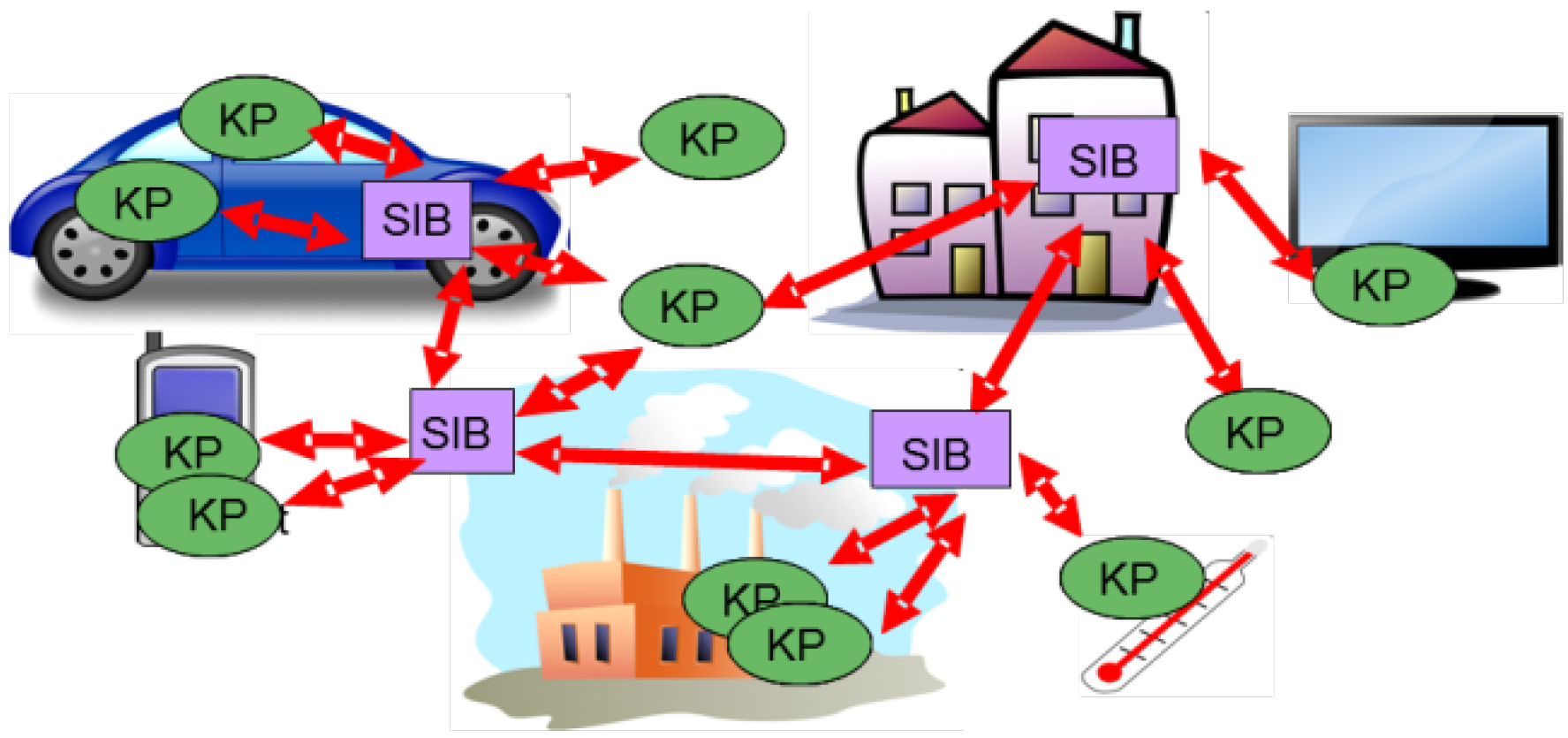

2.1. Smart Spaces

2.2. Security Adaptation

2.3. Adaptation Knowledge from Ontologies

2.4. Access Control over Semantic Information

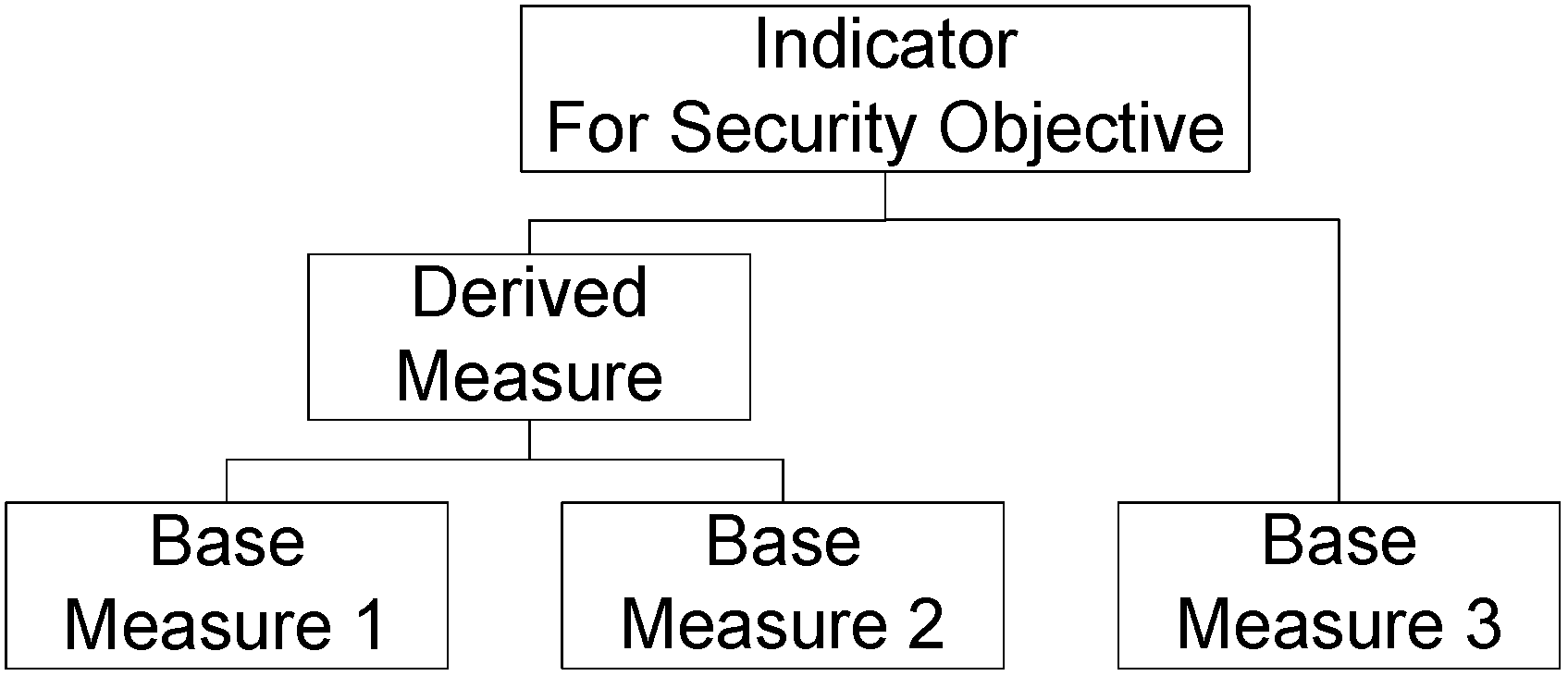

2.5. From Quality Variability to Quality Adaptation

3. The Concept for Adaptive Security

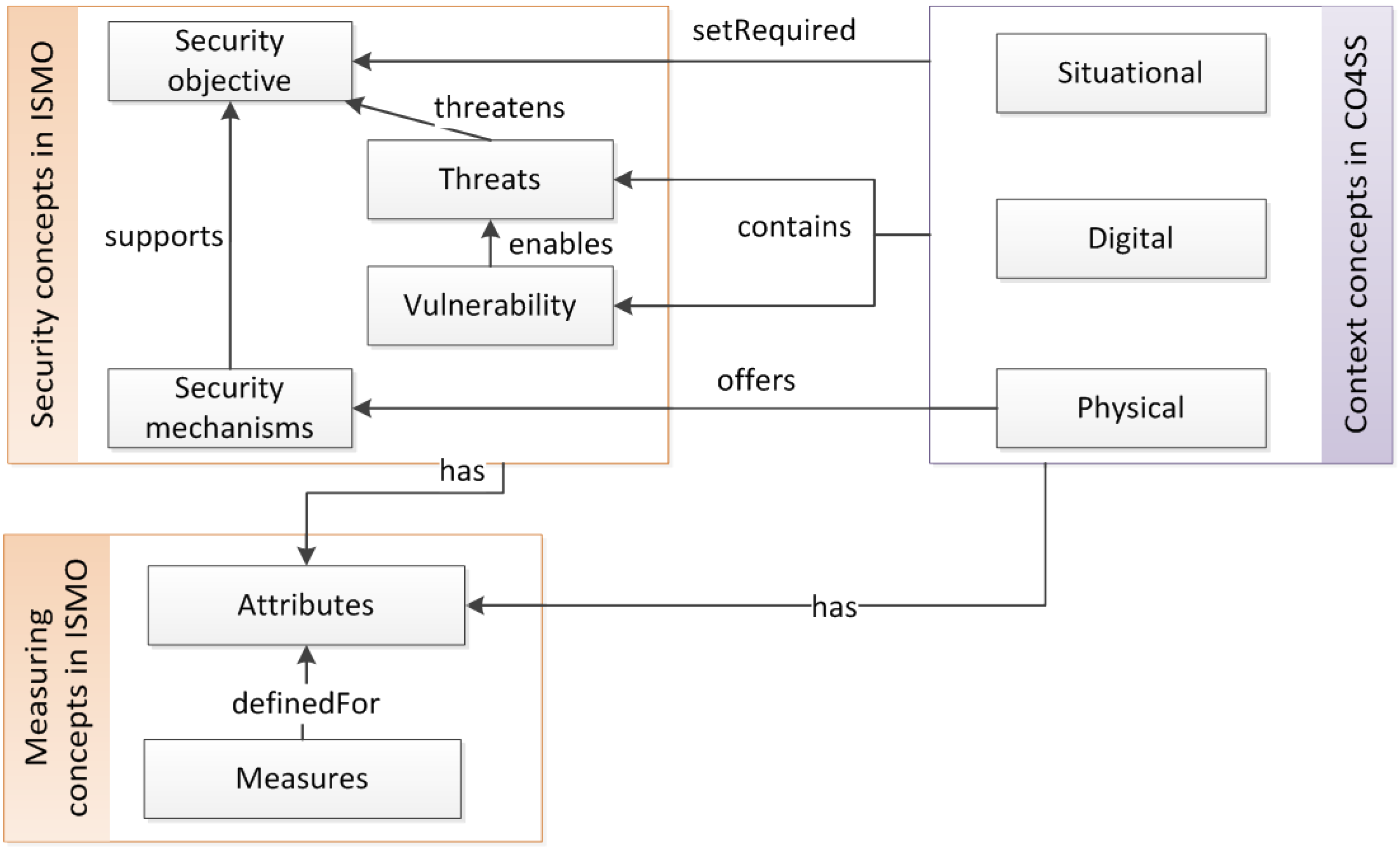

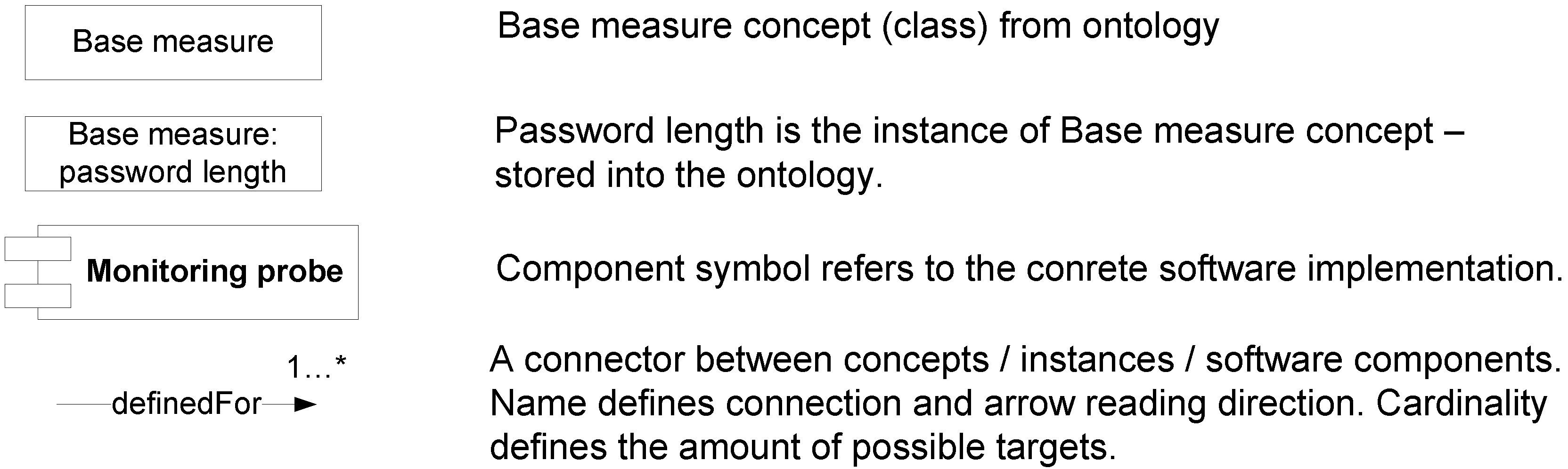

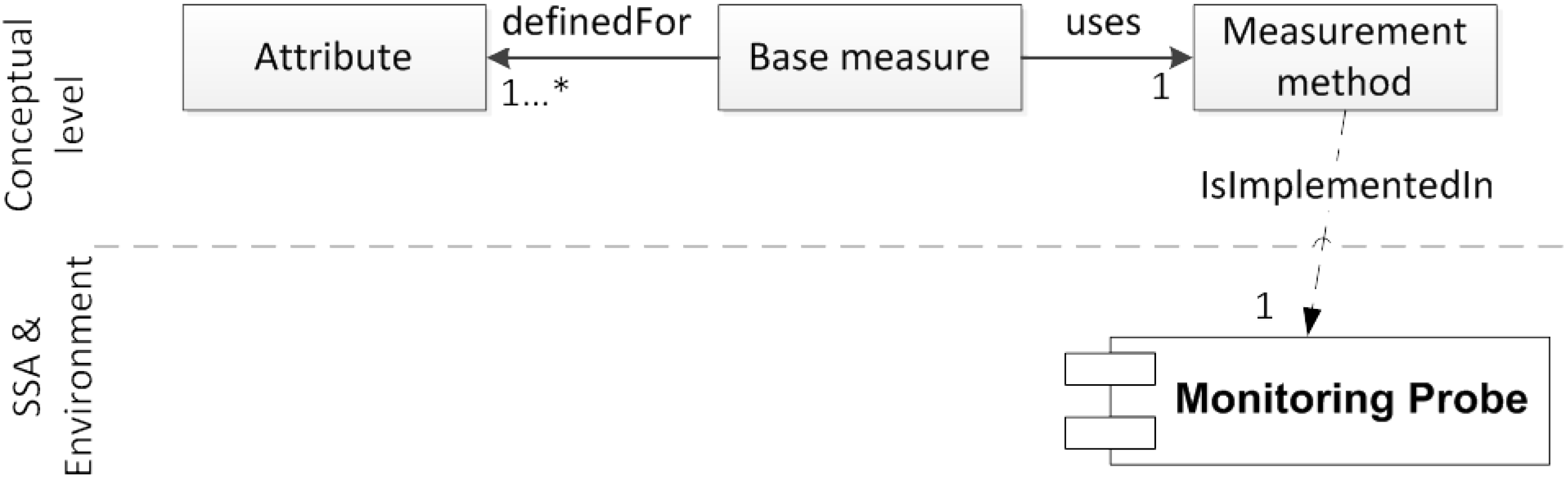

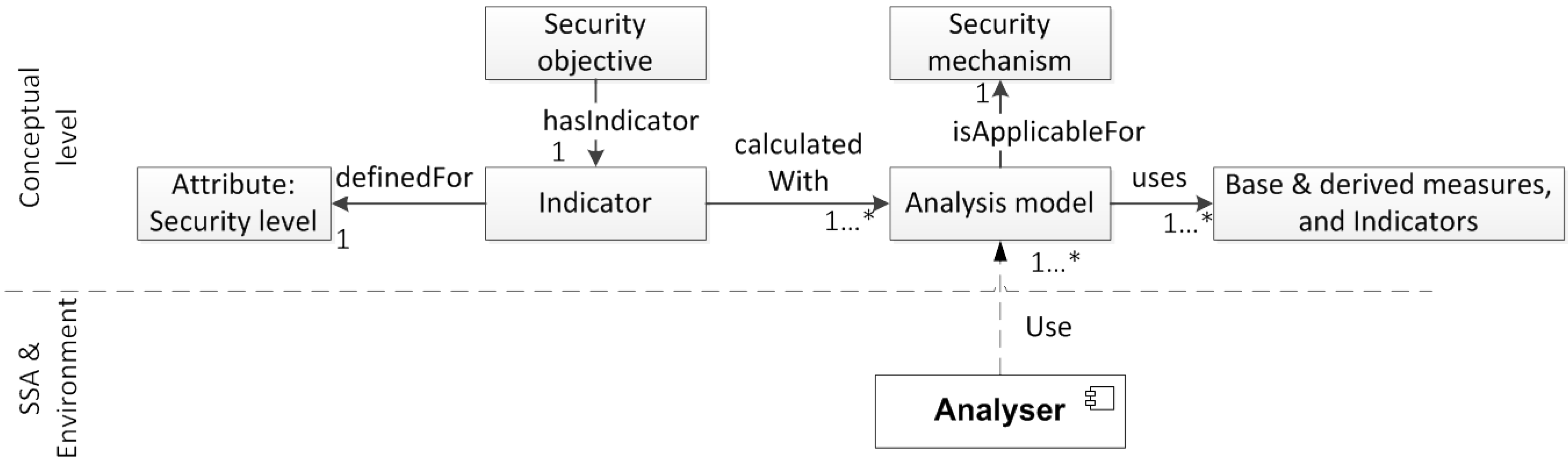

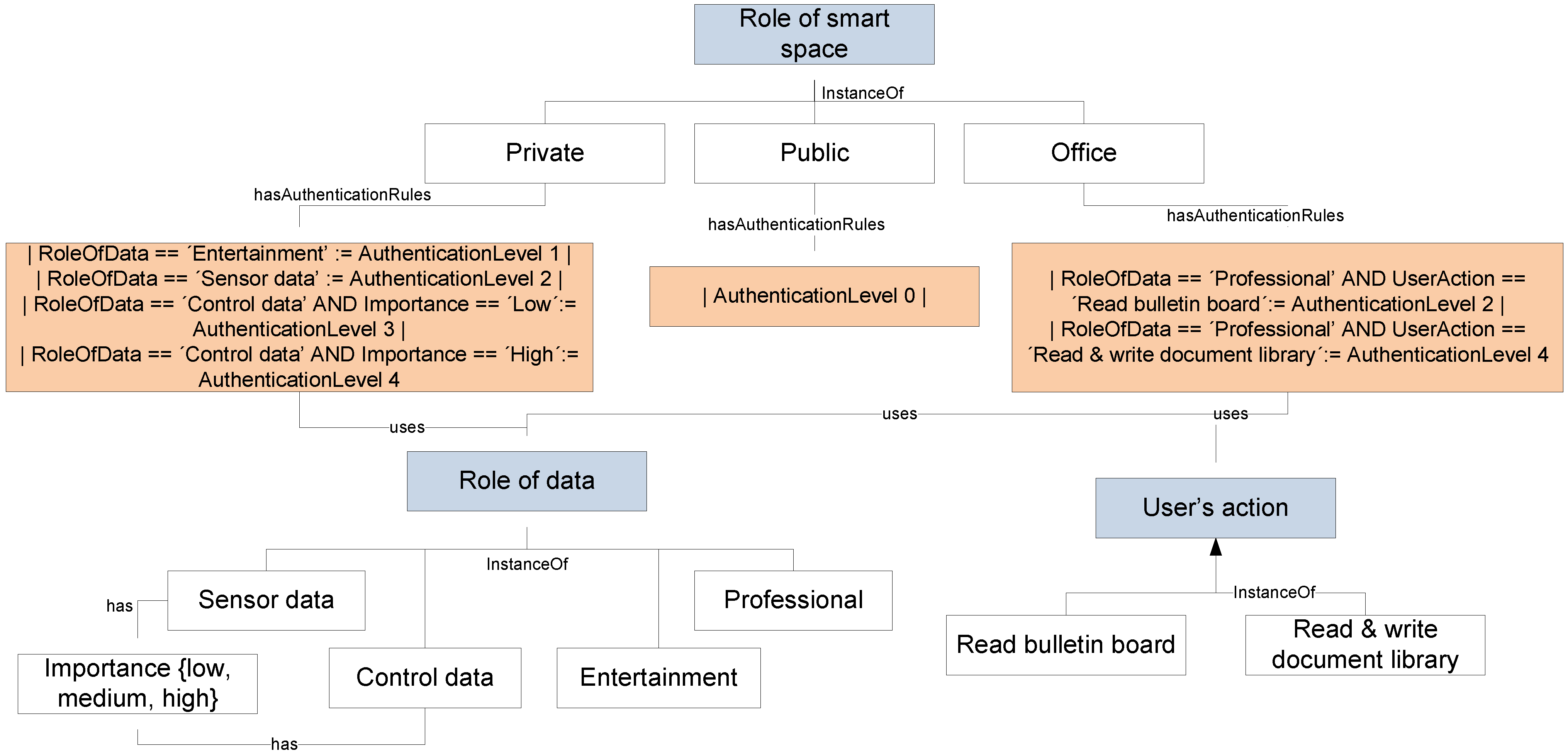

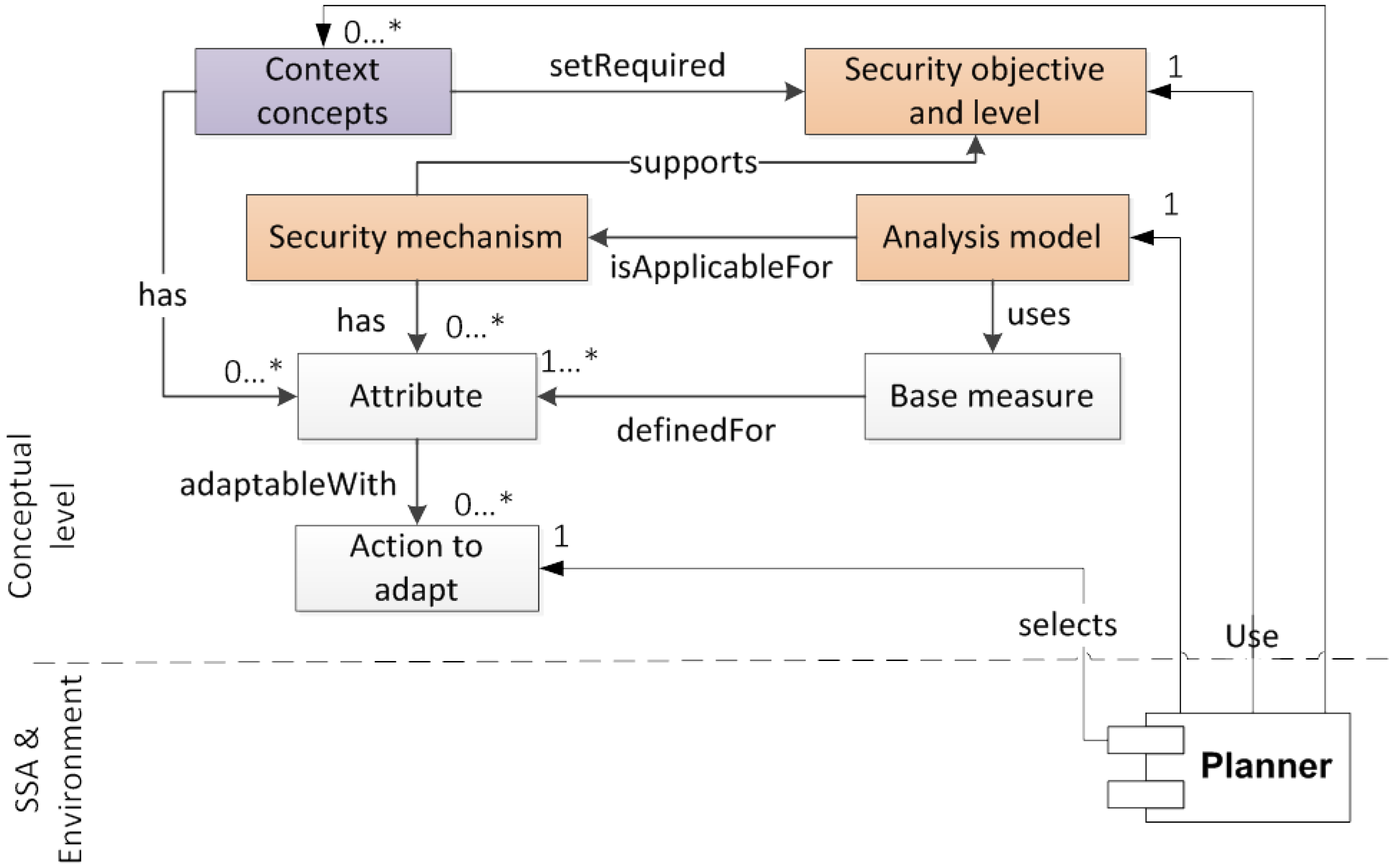

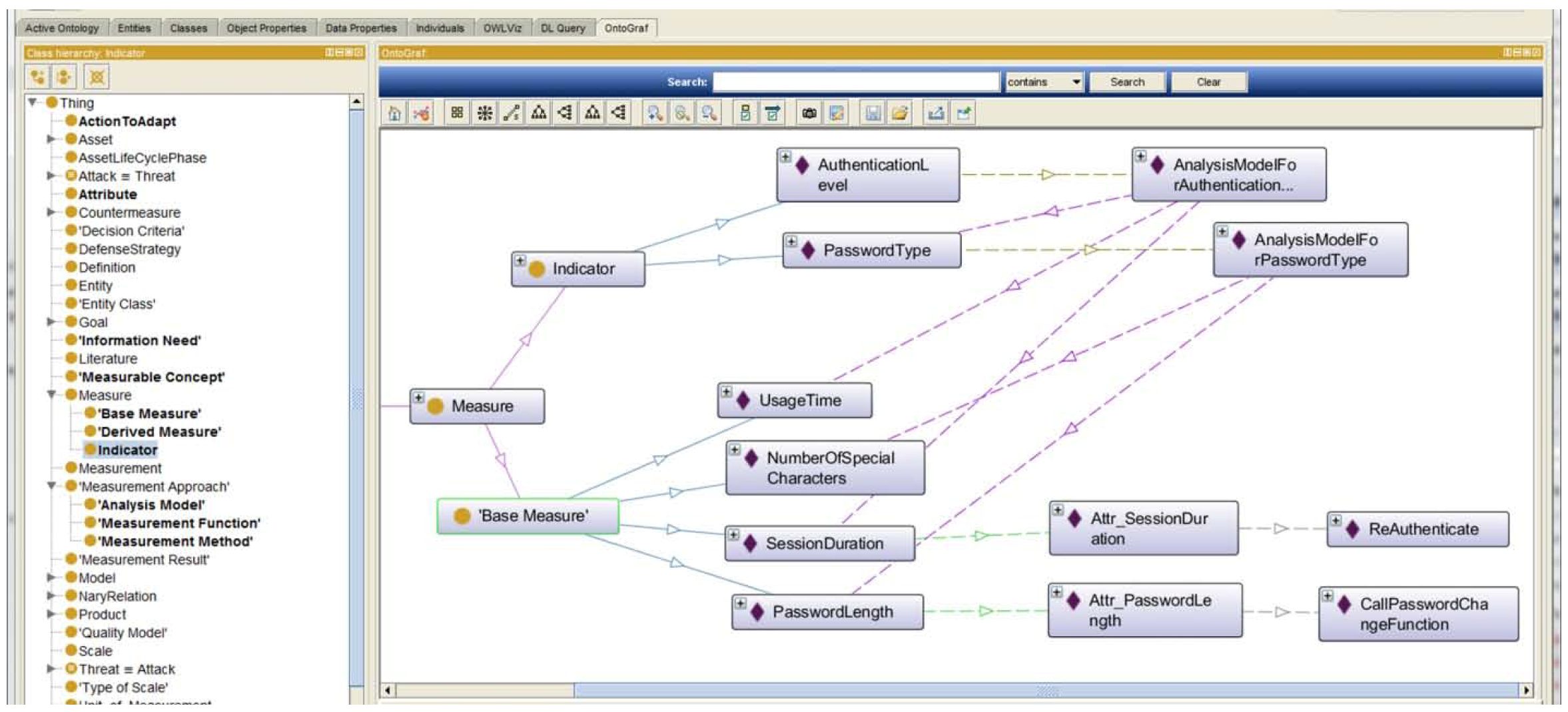

3.1. Security Adaptation Concepts from Ontologies

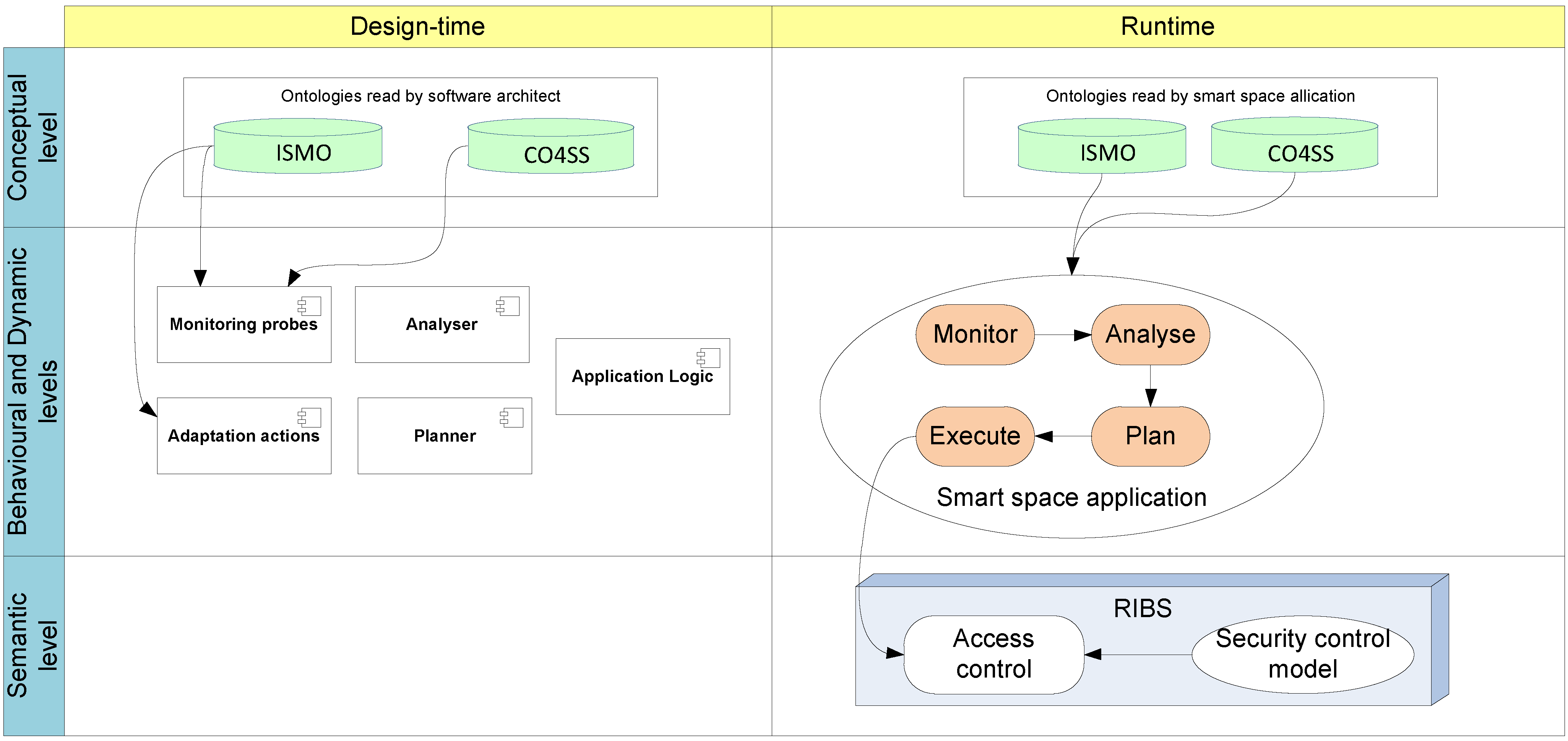

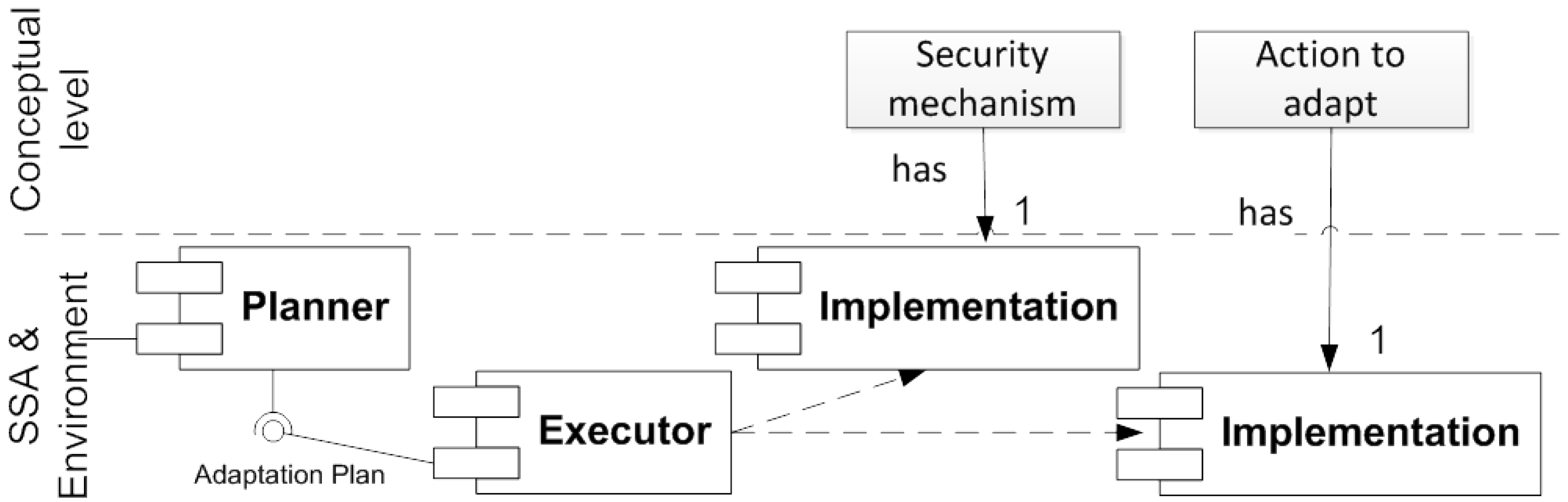

3.2. Architecture for Security Adaptation

3.2.1. Monitor

3.2.2. Analyze

3.2.3. Plan

- (1) SSA utilizes pre-defined configuration alternatives.

- (2) SSA searches for alternative security mechanisms or individual attributes to adapt, based on knowledge from the ISMO.

- (3) SSA asks the user how to proceed.
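The three planning options above can be read as a fallback chain. The following is a minimal sketch under that assumption; the source lists the options but does not state that they are tried in this order. The names `Plan`, `plan_adaptation`, `search_ismo` and `ask_user` are hypothetical and do not come from the SSA implementation.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Plan:
    source: str     # which planning option produced the plan
    mechanism: str  # security mechanism or attribute to adapt (illustrative)


def plan_adaptation(
    requirement: str,
    predefined: dict[str, str],
    search_ismo: Callable[[str], Optional[str]],
    ask_user: Callable[[str], str],
) -> Plan:
    """Try the three planning options in the order they are listed (assumed)."""
    # (1) Use a pre-defined configuration alternative when one exists.
    if requirement in predefined:
        return Plan("predefined", predefined[requirement])
    # (2) Otherwise search ontology knowledge for an alternative mechanism.
    found = search_ismo(requirement)
    if found is not None:
        return Plan("ismo", found)
    # (3) As a last resort, ask the user how to proceed.
    return Plan("user", ask_user(requirement))
```

Passing the ontology search and the user prompt as callables keeps the sketch independent of any particular ontology API or user interface.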

3.2.4. Execute

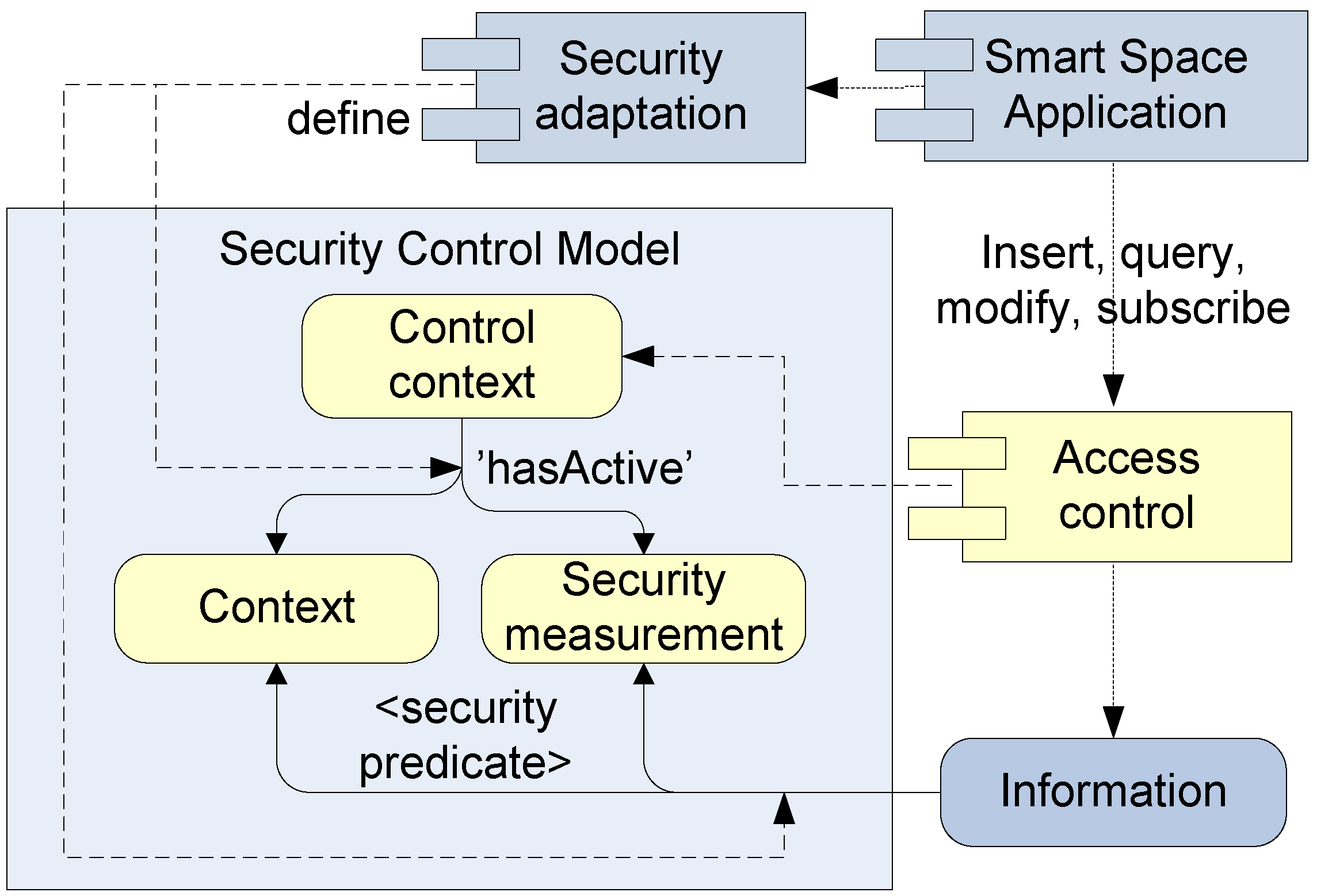

3.3. Runtime Security Control Model

3.3.1. Authorization Predicates

| Predicate | Description |
|---|---|
| GetAllowedFor | Authorizes reading a URI or literal value |
| SetAllowedFor | Authorizes modifying a URI or literal value |
| PropertyCreationAllowedFor | Authorizes adding a new URI or literal node under the URI node |
| PropertyRemovalAllowedFor | Authorizes the removal of a URI or literal node from the URI node |
| UseAsPropertyAllowedFor | Authorizes use of this node under other URI nodes |
| GetDisabledFor | Prevents reading a URI or literal value |
| SetDisabledFor | Prevents modifying a URI or literal value |
| PropertyCreationDisabledFor | Prevents adding a new URI or literal node under the URI node |
| PropertyRemovalDisabledFor | Prevents the removal of a URI or literal node from the URI node |
| UseAsPropertyDisabledFor | Prevents the use of this node under other URI nodes |
| IsAuthorisedBy | Sets a node under access control and specifies authority. There may be several authorities in one broker. |
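To illustrate how the authorization predicates might be evaluated, the sketch below checks a request against a small in-memory set of triples. It is not the RIBS implementation. Two points are assumptions: triples are written as `(node, predicate, requester)`, and an explicit `...DisabledFor` predicate overrides a matching `...AllowedFor` predicate. The rule that a node without `IsAuthorisedBy` is not under access control follows the predicate descriptions.

```python
from enum import Enum

Triple = tuple[str, str, str]


class Op(Enum):
    GET = "Get"
    SET = "Set"
    PROPERTY_CREATION = "PropertyCreation"
    PROPERTY_REMOVAL = "PropertyRemoval"
    USE_AS_PROPERTY = "UseAsProperty"


def is_allowed(triples: set[Triple], node: str, op: Op, requester: str) -> bool:
    """Decide whether `requester` may perform `op` on `node`."""
    # A node without IsAuthorisedBy is not under access control.
    controlled = any(s == node and p == "IsAuthorisedBy" for s, p, _ in triples)
    if not controlled:
        return True
    # Assumption: an explicit Disabled predicate wins over an Allowed one.
    if (node, f"{op.value}DisabledFor", requester) in triples:
        return False
    # Otherwise access requires an explicit Allowed predicate.
    return (node, f"{op.value}AllowedFor", requester) in triples
```

For example, with the triples `("door", "IsAuthorisedBy", "homeAuthority")` and `("door", "GetAllowedFor", "alice")`, a read by `alice` is granted and a read by any other requester is rejected.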

| Predicate | Description |
|---|---|
| HasBeenAuthoredBy | Identifies a resource’s author |
| HasAddedPredicate | Identifies authors who have added predicates under the resource |
| IsSignedWith | Link to a signature proving authenticity and the origin of the resource |
| HasSecurityContext | Link to any security measurement or context resource which was active when the data was stored (needed to verify, e.g., trustworthiness of data) |
| IsAuthorisedBy | Specifies the authority that controls security. If such relationship to a known security authority is missing, access can be directly authorized without any other checks. |
| CanBeMonitored | Allows or disallows logging (e.g. due to performance or privacy) |
| HasBeenReadBy | Identifies contexts (users) where data has been successfully queried |
| HadInvalidReadAttemptBy | Identifies contexts (users) with rejected read requests |
| HadInvalidWriteAttemptBy | Identifies contexts (users) who have made rejected write requests |
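The accounting predicates can be pictured as triples that a broker appends after each request. The following minimal sketch records the outcome of a read request. Representing `CanBeMonitored` as the literal `"false"` when logging is disallowed is an assumption made for illustration, as are the function name and the `(node, predicate, requester)` triple layout.

```python
Triple = tuple[str, str, str]


def record_read(triples: set[Triple], node: str, requester: str, granted: bool) -> None:
    """Add an accounting triple for a read request unless logging is disallowed."""
    # Assumption: a literal "false" under CanBeMonitored disables logging.
    if (node, "CanBeMonitored", "false") in triples:
        return
    # Successful reads and rejected reads use different predicates.
    predicate = "HasBeenReadBy" if granted else "HadInvalidReadAttemptBy"
    triples.add((node, predicate, requester))
```

Rejected write requests could be recorded in the same way with `HadInvalidWriteAttemptBy`.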

3.3.2. Accounting Predicates

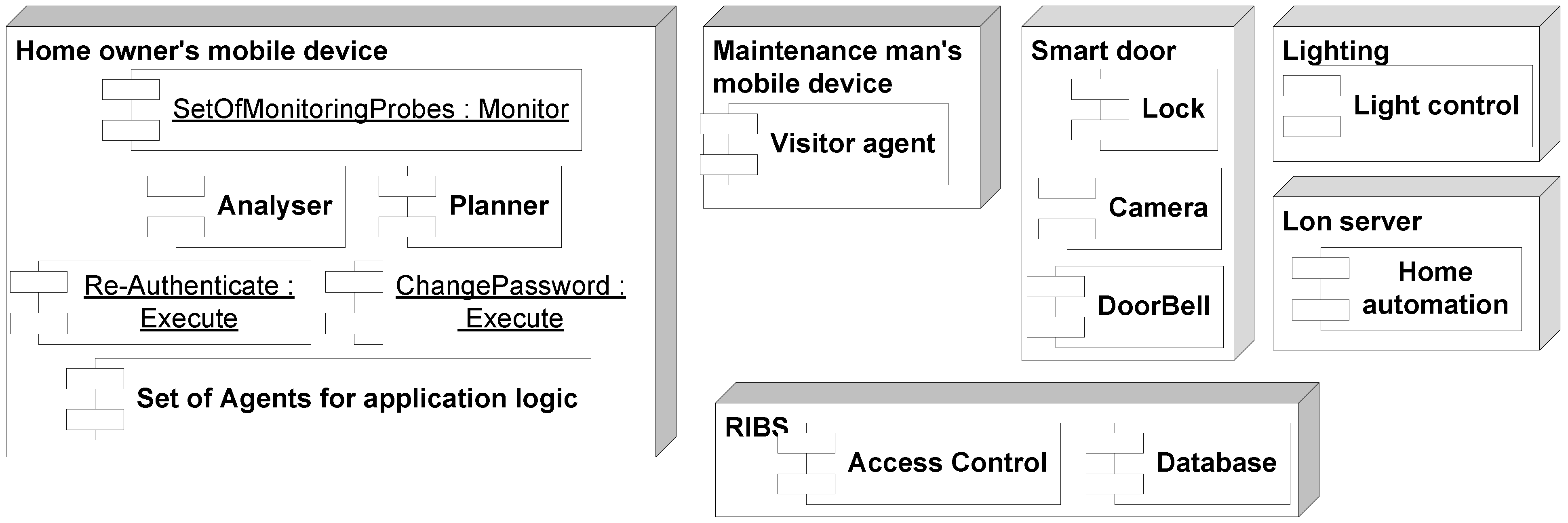

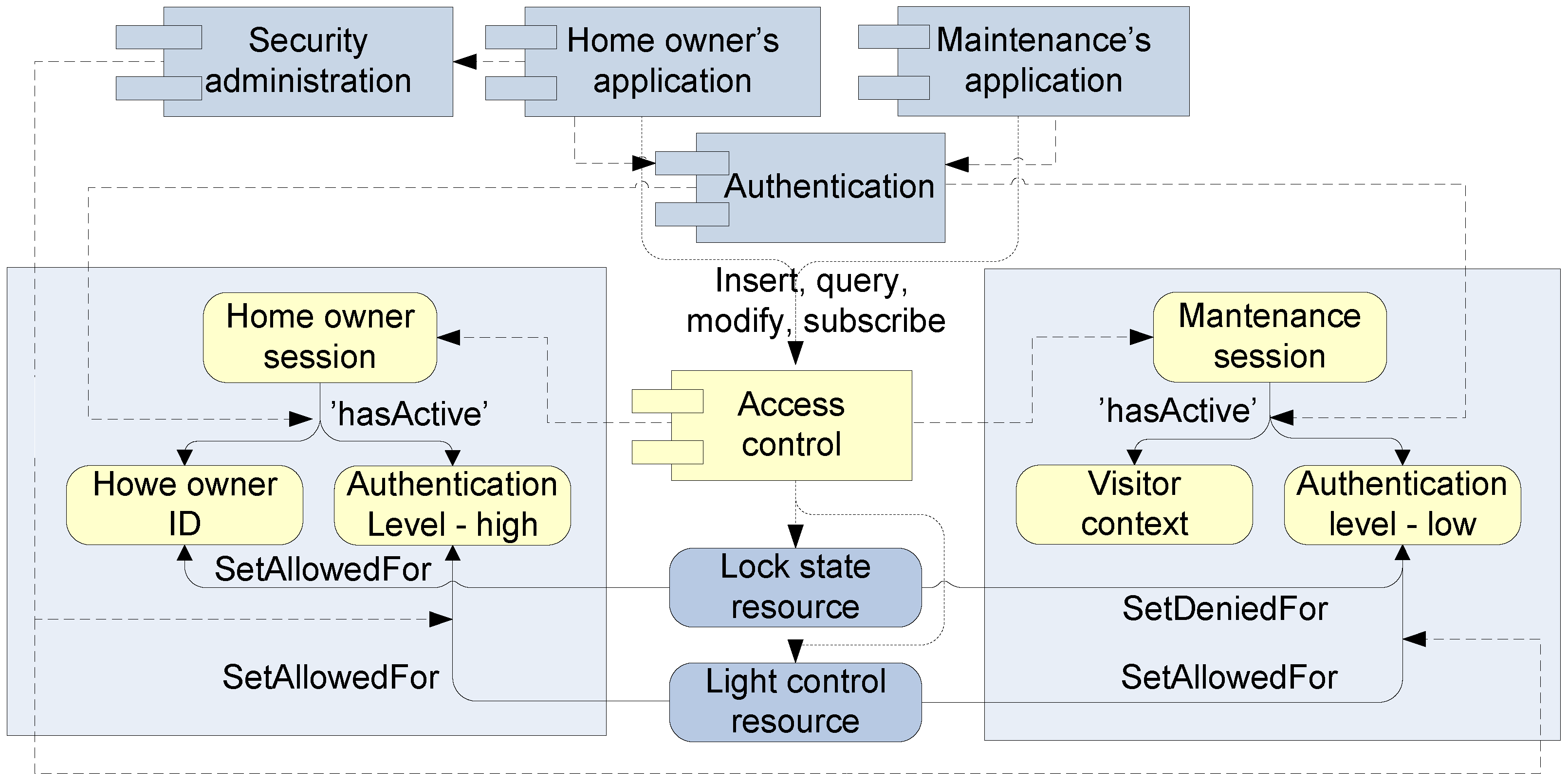

4. Implementation of Adaptive Security

4.1. Case Description

- The SSA running in an end user’s mobile phone utilizes the adaptation loop to ensure an appropriate authentication level in different situations.

- Security- and context-related knowledge is retrieved from ontologies.

- RIBS controls access over shared information by using the security control model.

4.2. Case Implementation

| Action | Situation and Input Information for the Analysis Model | Achieved Auth. Level | Required Auth. Level |
|---|---|---|---|
| 1. Check the lock status | Check the lock status. Normal password selected 708 h ago. Session duration: 2 h | 2 | 0 |
| 2. Open the front door | Remote opening. Normal password selected 710 h ago. Session duration: 4 h | 2 | 3 |
| 3. Lock the front door | Remote locking. Normal password selected 712 h ago. Session duration: 2 h | 2 | 1 |
| 4. Open the front door | Local opening. Normal password selected 719 h ago. Session duration: 7 h | 0 | 3 |
| 5. Modify lighting | Local modification. Normal password selected 719 h ago. Session duration: 0.02 h | 3 | 1 |
| 6. Modify home automation | Local modification. Normal password selected 721 h ago. Session duration: 2 h | 1 | 2 |
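The comparison that the table implies can be sketched as follows. The achieved and required levels are copied from the table. The rule that adaptation is needed whenever the achieved level is below the required level is an assumption about how the analysis result is used, and the names `Request` and `needs_adaptation` are illustrative. How the achieved level is derived from password age and session duration is not reproduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    step: int
    action: str
    achieved: int  # achieved authentication level from the table
    required: int  # required authentication level from the table


REQUESTS = [
    Request(1, "Check the lock status", 2, 0),
    Request(2, "Open the front door", 2, 3),
    Request(3, "Lock the front door", 2, 1),
    Request(4, "Open the front door", 0, 3),
    Request(5, "Modify lighting", 3, 1),
    Request(6, "Modify home automation", 1, 2),
]


def needs_adaptation(req: Request) -> bool:
    # Assumed rule: adapt when the achieved level falls short of the requirement.
    return req.achieved < req.required


steps = [r.step for r in REQUESTS if needs_adaptation(r)]
print(steps)  # prints [2, 4, 6]
```

Under this reading, actions 2, 4 and 6 are the ones where the achieved level is insufficient.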

4.3. Lessons Learned

5. Discussion

5.1. The Advantages of the Approach

5.2. The Challenges of the Approach and Future Research

5.3. The Maturity of the Approach

| Validation Case | Description |
|---|---|
| Risk-based security adaptation in a greenhouse [41,57]. | A greenhouse with a shopping area constitutes a public smart space. In the smart space, threats increase the risk levels and security mechanisms decrease risks. Hence, the monitoring concentrates on recognizing threats. In this case, confidentiality and integrity were considered. Furthermore, users were authenticated by means of gait information identified from the measurements of acceleration sensors inside the mobile phone. |
| Adaptive user authentication [38]. | The first case that utilized knowledge from the ISMO. It adapts user authentication by monitoring authentication-related measures: password length, age, variation of characters and session duration. Important information was available only when an acceptable authentication level was reached. The user’s re-authentication was requested when the session duration was exceeded. |
| Role- and popularity-based access-control simulations [51,59]. | Controlling access to information according to the user’s role or popularity of information. Popularity is a measure that indicates how many readers or how many authors an RDF resource has. These adaptation cases were simulated with the smodels logic solver. |
| Adding new knowledge into the ISMO [54]. | The paper and related case example showed how easily knowledge in the ISMO can be extended. Moreover, design steps to develop adaptive security were presented. |
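The popularity measure mentioned in the table can be illustrated with a short sketch that counts distinct readers or authors of a resource. Deriving the counts from `HasBeenReadBy` and `HasAddedPredicate` triples is an assumption made for illustration. The cited simulations used the smodels logic solver, not this code.

```python
Triple = tuple[str, str, str]

# Assumed mapping from the kind of popularity to an accounting predicate.
POPULARITY_PREDICATES = {
    "readers": "HasBeenReadBy",
    "authors": "HasAddedPredicate",
}


def popularity(triples: set[Triple], resource: str, by: str = "readers") -> int:
    """Count distinct readers or authors recorded for an RDF resource."""
    predicate = POPULARITY_PREDICATES[by]
    return len({o for s, p, o in triples if s == resource and p == predicate})
```

An access-control rule could then compare this count with a threshold, although the threshold and the resulting policy are left open here.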

6. Conclusions

Acknowledgments

Conflict of Interests

References

1. Conti, M.; Das, S.K.; Bisdikian, C.; Kumar, M.; Ni, L.M.; Passarella, A.; Roussos, G.; Tröster, G.; Tsudik, G.; Zambonelli, F. Looking ahead in pervasive computing: Challenges and opportunities in the era of cyber–physical convergence. Pervasive Mob. Comput. 2012, 8, 2–21.

2. Elkhodary, A.; Whittle, J. A Survey of Approaches to Adaptive Application Security. In Proceedings of the International Workshop on Software Engineering for Adaptive and Self-Managing Systems, Minneapolis, USA, 20–26 May 2007; pp. 16–23.

3. Yuan, E.; Malek, S. A taxonomy and survey of self-protecting software systems. In Proceedings of the IEEE Software Engineering for Adaptive and Self-Managing Systems, Zürich, Switzerland, 4–5 June 2012; pp. 109–118.

4. Cook, D.J.; Das, S.K. How smart are our environments? An updated look at the state of the art. Pervasive Mob. Comput. 2007, 3, 53–73.

5. Ovaska, E.; Salmon Cinotti, T.; Toninelli, A. Design principles and practices of interoperable smart spaces. In Advanced Design Approaches to Emerging Software Systems: Principles, Methodologies, and Tools; Liu, X., Li, Y., Eds.; IGI Global, 2011; pp. 18–47.

6. Pantsar-Syväniemi, S.; Purhonen, A.; Ovaska, E.; Kuusijärvi, J.; Evesti, A. Situation-Based and Self-Adaptive Applications for Smart Environment. J. Ambient Intelligence and Smart Environ. 2012, 4, 491–516.

7. Honkola, J.; Laine, H.; Brown, R.; Tyrkkö, O. Smart–M3 information sharing platform. In Proceedings of the IEEE Symposium on Computers and Communications, Riccione, Italy, 22–25 June 2010; pp. 1041–1046.

8. RDF Primer. 2004. Available online: http://www.w3.org/TR/rdf-primer/ (accessed on 23 November 2012).

9. SOFIA Smart Objects For Intelligent Applications. 2012. Available online: http://www.sofia-project.eu (accessed on 23 November 2012).

10. Suomalainen, J.; Hyttinen, P.; Tarvainen, P. Secure information sharing between heterogeneous embedded devices. In Proceedings of the 4th European Conference on Software Architecture: Companion Volume, Copenhagen, Denmark, 23–26 August 2010; ACM, 2010; pp. 205–212.

11. Kephart, J.O.; Chess, D.M. The vision of autonomic computing. Computer 2003, 36, 41–50.

12. Dobson, S.; Denazis, S.; Fernández, A.; Gaïti, D.; Gelenbe, E.; Massacci, F.; Nixon, P.; Saffre, F.; Schmidt, N.; Zambonelli, F. A survey of autonomic communications. ACM Trans. Auton. Adapt. Syst. 2006, 1, 223–259.

13. Salehie, M.; Tahvildari, L. Self-adaptive software: Landscape and research challenges. ACM Trans. Auton. Adapt. Syst. 2009, 4, 1–42.

14. Psaier, H.; Dustdar, S. A survey on self-healing systems: approaches and systems. Computing 2011, 91, 43–73.

15. Huebscher, M.C.; McCann, J.A. A survey of autonomic computing—degrees, models, and application. ACM Comput. Surv. 2008, 40, 1–28.

16. Matinlassi, M.; Niemelä, E. The impact of maintainability on component-based software systems. In Proceedings of the 29th Euromicro Conference, Belek-Antalya, Turkey, 3–5 September 2003; IEEE, 2003; pp. 25–32.

17. Hashii, B.; Malabarba, S.; Pandey, R.; Bishop, M. Supporting reconfigurable security policies for mobile programs. Computer Networks 2000, 33, 77–93.

18. Hu, W.; Hiser, J.; Williams, D.; Filipi, A.; Davidson, J.W.; Evans, D.; Knight, J.C.; Nguyen-Tuong, A.; Rowanhill, J. Secure and practical defense against code-injection attacks using software dynamic translation. In Proceedings of the 2nd international conference on Virtual execution environments, Ottawa, Canada, 14–16 June 2006; ACM, 2006; pp. 2–12.

19. Knight, J.C.; Strunk, E.A. Achieving critical system survivability through software architectures. In Architecting Dependable Systems II; Lemos, R., Gacek, C., Romanovsky, A., Eds.; Springer: Berlin Heidelberg, 2004; pp. 51–78.

20. Ryutov, T.; Zhou, L.; Neuman, C.; Leithead, T.; Seamons, K.E. Adaptive trust negotiation and access control. In Proceedings of the 10th ACM Symposium on Access Control Models and Technologies, Stockholm, Sweden, 1–3 June 2005; pp. 139–146.

21. Klenk, A.; Niedermayer, H.; Masekowsky, M.; Carle, G. An architecture for autonomic security adaptation. Ann. Telecommun. 2006, 61, 1066–1082.

22. Hulsebosch, R.; Bargh, M.; Lenzini, G.; Ebben, P.; Iacob, S. Context sensitive adaptive authentication. In Smart Sensing and Context; Kortuem, G., Finney, J., Lea, R., Sundramoorthy, V., Eds.; Springer: Berlin Heidelberg, 2007; pp. 93–109.

23. Abie, H.; Savola, R.M.; Bigham, J.; Dattani, I.; Rotondi, D.; Da Bormida, G. Self-Healing and Secure Adaptive Messaging Middleware for Business-Critical Systems. Int. J. Adv. Secur. 2010, 3, 34–51.

24. Savola, R.; Abie, H. Development of measurable security for a distributed messaging system. Int. J. Adv. Secur. 2009, 2, 358–380.

25. Wang, C.; Wulf, W.A. Towards a Framework for Security Measurement. In Proceedings of the 20th National Information Systems Security Conference, Baltimore, Maryland, USA, October 1997; pp. 522–533.

26. García, F.; Bertoa, M.F.; Calero, C.; Vallecillo, A.; Ruíz, F.; Piattini, M.; Genero, M. Towards a consistent terminology for software measurement. Inf. Softw. Technol. 2006, 48, 631–644.

27. Haley, C.B.; Laney, R.; Moffett, J.D.; Nuseibeh, B. Security Requirements Engineering: A Framework for Representation and Analysis. IEEE Trans. Softw. Eng. 2008, 34, 133–153.

28. Salehie, M.; Pasquale, L.; Omoronyia, I.; Ali, R.; Nuseibeh, B. Requirements-driven adaptive security: Protecting variable assets at runtime. In Proceedings of the 20th International Requirements Engineering Conference (RE), Chicago, USA, 24–28 September 2012; IEEE, 2012; pp. 111–120.

29. ISO/IEC 15408-1:2009 Standard, Common Criteria for Information Technology Security Evaluation – Part 1: Introduction and general model, International Organization of Standardization. 2009.

30. Sahinoglu, M. Security meter: a practical decision-tree model to quantify risk. Security Privacy 2005, 3, 18–24.

31. Zhou, J. Knowledge Dichotomy and Semantic Knowledge Management. In Proceedings of the 1st IFIP WG12.5 Working Conference on Industrial Applications of Semantic Web, Jyväskylä, Finland, 25–27 August 2005; Springer: US, 2005; pp. 305–316.

32. Blanco, C.; Lasheras, J.; Valencia-García, R.; Fernández-Medina, E.; Toval, A.; Piattini, M. A systematic review and comparison of security ontologies. In Proceedings of the 3rd International Conference on Availability, Security and Reliability, Barcelona, Spain, 4–7 March 2008; IEEE, 2008; pp. 813–820.

33. Evesti, A.; Ovaska, E.; Savola, R. From security modelling to run-time security monitoring. In Proceedings of the European Workshop on Security in Model Driven Architecture, CTIT Centre for Telematics and Information Technology, Enschede, Netherlands, 23–26 June 2009; pp. 33–41.

34. Kim, A.; Luo, J.; Kang, M. Security Ontology for annotating resources. In Proceedings of the On the Move to Meaningful Internet Systems 2005: CoopIS, DOA, and ODBASE, Agia Napa, Cyprus, 31 October–4 November 2005; Springer-Verlag: Berlin Heidelberg, 2005; pp. 1483–1499.

35. Denker, G.; Kagal, L.; Finin, T. Security in the Semantic Web using OWL. Inform. Sec. Tech. Rep. 2005, 10, 51–58.

36. Savolainen, P.; Niemelä, E.; Savola, R. A taxonomy of information security for service centric systems. In Proceedings of the 33rd EUROMICRO Conference on Software Engineering and Advanced Applications, Lübeck, Germany, 27–31 August 2007; IEEE, 2007; pp. 5–12.

37. Herzog, A.; Shahmehri, N.; Duma, C. An ontology of information security. J. Inform. Sec. Privacy 2007, 1, 1–23.

38. Evesti, A.; Savola, R.; Ovaska, E.; Kuusijärvi, J. The Design, Instantiation, and Usage of Information Security Measuring Ontology. In Proceedings of the 2nd International Conference on Models and Ontology-based Design of Protocols, Architectures and Services, Budapest, Hungary, 17–22 April 2011; IARIA, 2011; pp. 1–9.

39. Pantsar-Syväniemi, S.; Kuusijärvi, J.; Ovaska, E. Supporting Situation-awareness in Smart Spaces. In Proceedings of the International Workshops, S3E, HWTS, Doctoral Colloquium, Held in Conjunction with GPC 2011, Oulu, Finland, 11–13 May 2011; Springer-Verlag: Berlin Heidelberg, Germany, 2012; pp. 14–23.

40. Evesti, A.; Pantsar-Syväniemi, S. Towards micro architecture for security adaptation. In Proceedings of the 4th European Conference on Software Architecture: Companion Volume, Copenhagen, Denmark, 23–26 August 2010; ACM, 2010; pp. 181–188.

41. Evesti, A.; Ovaska, E. Ontology-Based Security Adaptation at Run-Time. In Proceedings of the 4th International Conference on Self-Adaptive and Self-Organizing Systems, Budapest, Hungary, 27 September–1 October 2010; IEEE, 2010; pp. 204–212.

42. Dietzold, S.; Auer, S. Access control on RDF triple stores from a semantic wiki perspective. In Proceedings of the Scripting for the Semantic Web Workshop at 3rd European Semantic Web Conference, Budva, Montenegro, 11–14 June 2006; CEUR Workshop, 2006; pp. 1–9.

43. D’Elia, A.; Honkola, J.; Manzaroli, D.; Salmon Cinotti, T. Access Control at Triple Level: Specification and Enforcement of a Simple RDF Model to Support Concurrent Applications in Smart Environments. In Proceedings of the 11th International Conference, NEW2AN 2011, and 4th Conference on Smart Spaces, ruSMART 2011, St. Petersburg, Russia, 22–25 August 2011; Springer: Berlin Heidelberg, 2011; pp. 63–74.

44. Reddivari, P.; Finin, T.; Joshi, A. Policy-based access control for an RDF store. In Proceedings of the Policy Management for the Web, Chiba, Japan, 10–14 May 2005; pp. 78–81.

45. Jain, A.; Farkas, C. Secure resource description framework: an access control model. In Proceedings of the 11th symposium on Access control models and technologies, Lake Tahoe, California, USA, 7–9 June 2006; ACM, 2006; pp. 121–129.

46. Flouris, G.; Fundulaki, I.; Michou, M.; Antoniou, G. Controlling access to RDF graphs. In Proceedings of the Future Internet - FIS 2010, Berlin, Germany, 20–22 September 2010; Springer: Berlin Heidelberg, 2010; pp. 107–117.

47. Kim, J.; Jung, K.; Park, S. An Introduction to Authorization Conflict Problem in RDF Access Control. In Proceedings of the Knowledge-Based Intelligent Information and Engineering Systems, Zagreb, Croatia, 3–5 September 2008; Springer: Berlin Heidelberg, 2008; pp. 583–592.

48. Cho, E.; Kim, Y.; Hong, M.; Cho, W. Fine-Grained View-Based Access Control for RDF Cloaking. In Proceedings of the 9th International Conference on Computer and Information Technology, Xiamen, China, 11–14 October 2009; IEEE, 2009; pp. 336–341.

49. Bock, J.; Haase, P.; Ji, Q.; Volz, R. Benchmarking OWL reasoners. In Proceedings of the Workshop on Advancing Reasoning on the Web: Scalability and Commonsense; CEUR Workshop Proceedings: Tenerife, Spain, 2008; pp. 1–15.

50. Dentler, K.; Cornet, R.; Ten Teije, A.; De Keizer, N. Comparison of reasoners for large ontologies in the OWL 2 EL profile. Semantic Web 2011, 2, 71–87.

51. Suomalainen, J.; Hyttinen, P. Security Solutions for Smart Spaces. In Proceedings of the 11th International Symposium on Applications and the Internet, Munich, Germany, 18–21 July 2011; IEEE, 2011; pp. 297–302.

52. Niemelä, E.; Evesti, A.; Savolainen, P. Modeling quality attribute variability. In Proceedings of the 3rd International Conference on Evaluation of Novel Approaches to Software Engineering, Funchal, Madeira, Portugal, 4–7 May 2008; pp. 169–176.

53. Ovaska, E.; Evesti, A.; Henttonen, K.; Palviainen, M.; Aho, P. Knowledge based quality-driven architecture design and evaluation. Inf. Softw. Technol. 2010, 52, 577–601.

54. Evesti, A.; Ovaska, E. Design Time Reliability Predictions for Supporting Runtime Security Measuring and Adaptation. In Proceedings of the 3rd International Conference on Emerging Network Intelligence, Lisbon, Portugal, 20–25 November 2011; IARIA, 2011; pp. 94–99.

55. Sofia Pilot Brochure. 2012. Available online: http://www.slideshare.net/sofiaproject/sofia-project-brochure-pilots-set (accessed on 23 November 2012).

56. Cam4Home Project. Cam4Home. Available online: http://www.cam4home-itea.org/ (accessed on 8 May 2012).

57. Evesti, A.; Eteläperä, M.; Kiljander, J.; Kuusijärvi, J.; Purhonen, A.; Stenudd, S. Semantic Information Interoperability in Smart Spaces. In Proceedings of the 8th International Conference on Mobile and Ubiquitous Multimedia, Cambridge, UK, 22–25 November 2009; ACM, 2009; pp. 158–159.

58. Dierks, T.; Rescorla, E. The Transport Layer Security (TLS) Protocol Version 1.2. 2008. Available online: http://www.ietf.org/rfc/rfc5246.txt (accessed on 23 November 2012).

59. Suomalainen, J. Flexible Security Deployment in Smart Spaces. In Proceedings of the International Workshops, S3E, HWTS, Doctoral Colloquium, Held in Conjunction with GPC 2011, Oulu, Finland, 11–13 May 2011; Springer: Berlin Heidelberg, Germany, 2012; pp. 34–43.

© 2013 by the authors; licensee MDPI, Basel, Switzerland. This article is an open-access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).


Evesti, A.; Suomalainen, J.; Ovaska, E. Architecture and Knowledge-Driven Self-Adaptive Security in Smart Space. Computers 2013, 2, 34-66. https://doi.org/10.3390/computers2010034
