Fake News Detection Using Machine Learning and Deep Learning Algorithms: A Comprehensive Review and Future Perspectives

Abstract

1. Introduction

2. Related Works

2.1. Machine Learning

2.2. Deep Learning

2.3. Machine Learning and Deep Learning

2.4. Optimization Techniques

3. Methodology

3.1. Research Questions

- RQ1: What is the accuracy of the primary techniques employed to detect fake news?

- RQ2: What datasets are used?

- RQ3: Do gaps affect model performance?

3.2. Search Process

3.2.1. Sources and Data Collection

3.2.2. Search Keywords

3.2.3. Expression of Research

3.2.4. Inclusion and Exclusion Standards

- Detecting fake news;

- Using machine learning to detect fake news;

- Using deep learning to detect fake news.

- It does not present the use of algorithms to detect fake news.

- No performance has been provided in identifying fake news.

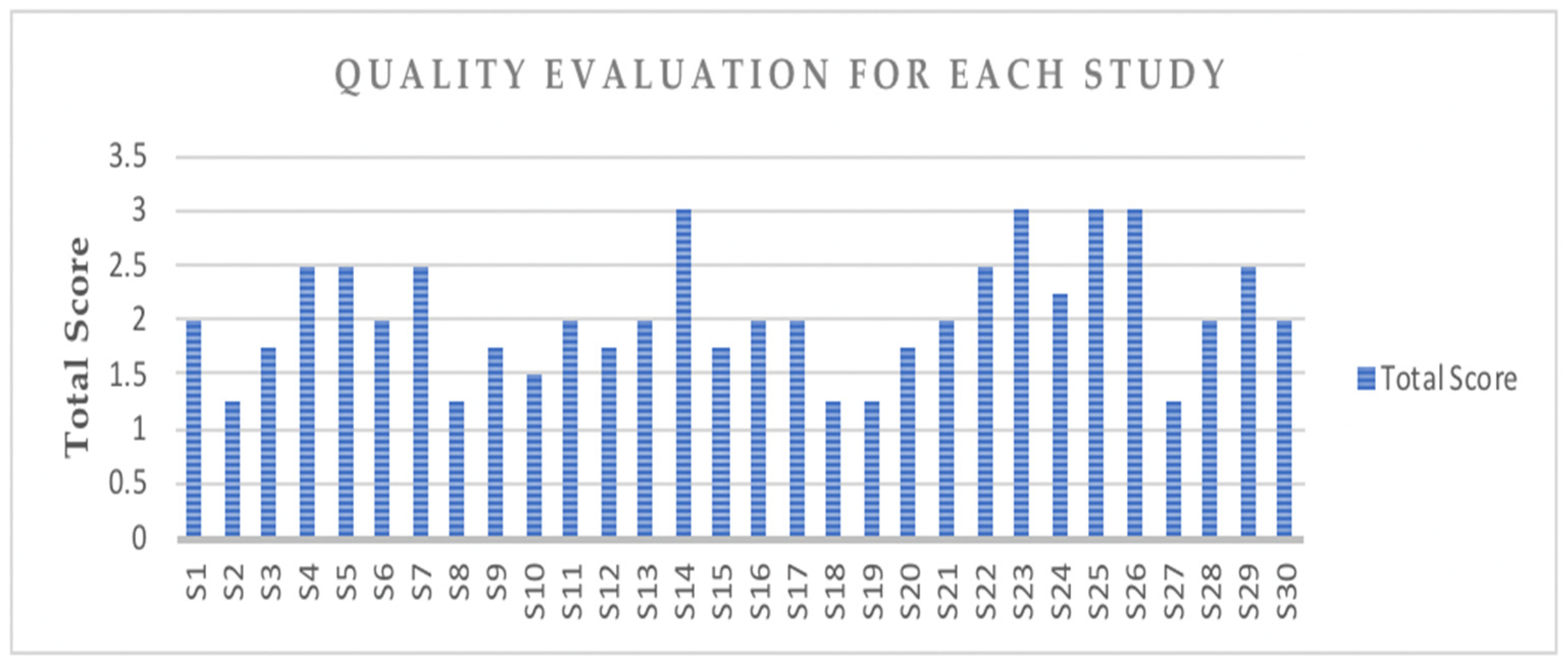

3.2.5. Quality Valuation

- QV1: Did the study demonstrate the use of machine learning and deep learning methods/algorithms together to detect fake news?

- QV2: Is the dataset used in the model sufficient to achieve high performance?

- QV3: Does the model demonstrate high performance?

- QA1 as described in QV1: Y (yes): the study demonstrated both machine learning and deep learning methods for detecting fake news. P (partially): the study demonstrated either machine learning or deep learning methods. N (no): the study did not demonstrate clear methods for detecting fake news.

- QA2 as described in QV2: Y (yes): the dataset is sufficient. P (partially): the dataset is partially sufficient. N (no): the study did not state a clear dataset.

- QA3 as described in QV3: Y (yes): the study showed a high performance of greater than or equal to 98%, with an RMSE of less than or equal to 0.75 and an MAE of less than 0.5. P (partially): the study showed a performance of less than 98% and greater than or equal to 95%, with an RMSE of greater than 0.75 and less than or equal to 1 and an MAE of greater than 0.5 and less than or equal to 0.75. LP (less than partially): the study showed a performance of less than 95%, with an RMSE of greater than 1 and less than or equal to 2 and an MAE of greater than 0.75 and less than or equal to 1.5.
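
The QA3 bands can be read as three small threshold lookups, one per metric. The following is a minimal sketch under stated assumptions, not the authors' tooling: the function names `rate_accuracy`, `rate_rmse`, and `rate_mae` are hypothetical, and each metric is rated on its own because the text does not say how to resolve a study whose metrics fall into different bands. Values outside the stated bands return `None`.

```python
from typing import Optional


def rate_accuracy(accuracy_pct: float) -> str:
    """Rate a performance value given in percent against the QA3 bands."""
    if accuracy_pct >= 98.0:
        return "Y"
    if accuracy_pct >= 95.0:
        return "P"
    return "LP"


def rate_rmse(rmse: float) -> Optional[str]:
    """Rate an RMSE value; None means the value is outside the stated bands."""
    if rmse <= 0.75:
        return "Y"
    if rmse <= 1.0:
        return "P"
    if rmse <= 2.0:
        return "LP"
    return None


def rate_mae(mae: float) -> Optional[str]:
    """Rate an MAE value; None means the value is outside the stated bands."""
    if mae < 0.5:
        return "Y"
    # The bands as written do not cover an MAE of exactly 0.5.
    if 0.5 < mae <= 0.75:
        return "P"
    if 0.75 < mae <= 1.5:
        return "LP"
    return None
```

Keeping the three lookups separate makes the boundary cases visible, for example that an MAE of exactly 0.5 is not assigned to any band by the criteria as stated.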

4. Results and Discussion

4.1. Machine and Deep Learning Algorithms

4.1.1. Logistic Regression Classification Algorithm

4.1.2. Decision Tree Algorithm

4.1.3. Random Forest Classification Algorithm

4.1.4. Boosting Classification Algorithm

4.1.5. K-Nearest Neighbor (KNN) Algorithm

4.1.6. Naïve Bayes Classification Algorithm

4.1.7. Support Vector Machine (SVM) Algorithm

4.1.8. Convolutional Neural Network (CNN) Algorithm

4.1.9. Recurrent Neural Network (RNN) Algorithm

4.1.10. Bidirectional Long Short-Term Memory (Bi-LSTM) Algorithm

4.1.11. Graph Neural Network (GNN) Algorithm

4.2. Feature Extraction

4.2.1. Term Frequency (TF)

4.2.2. Term Frequency–Inverse Document Frequency (TF-IDF)

4.2.3. Word2Vec Embedding

4.2.4. FastText

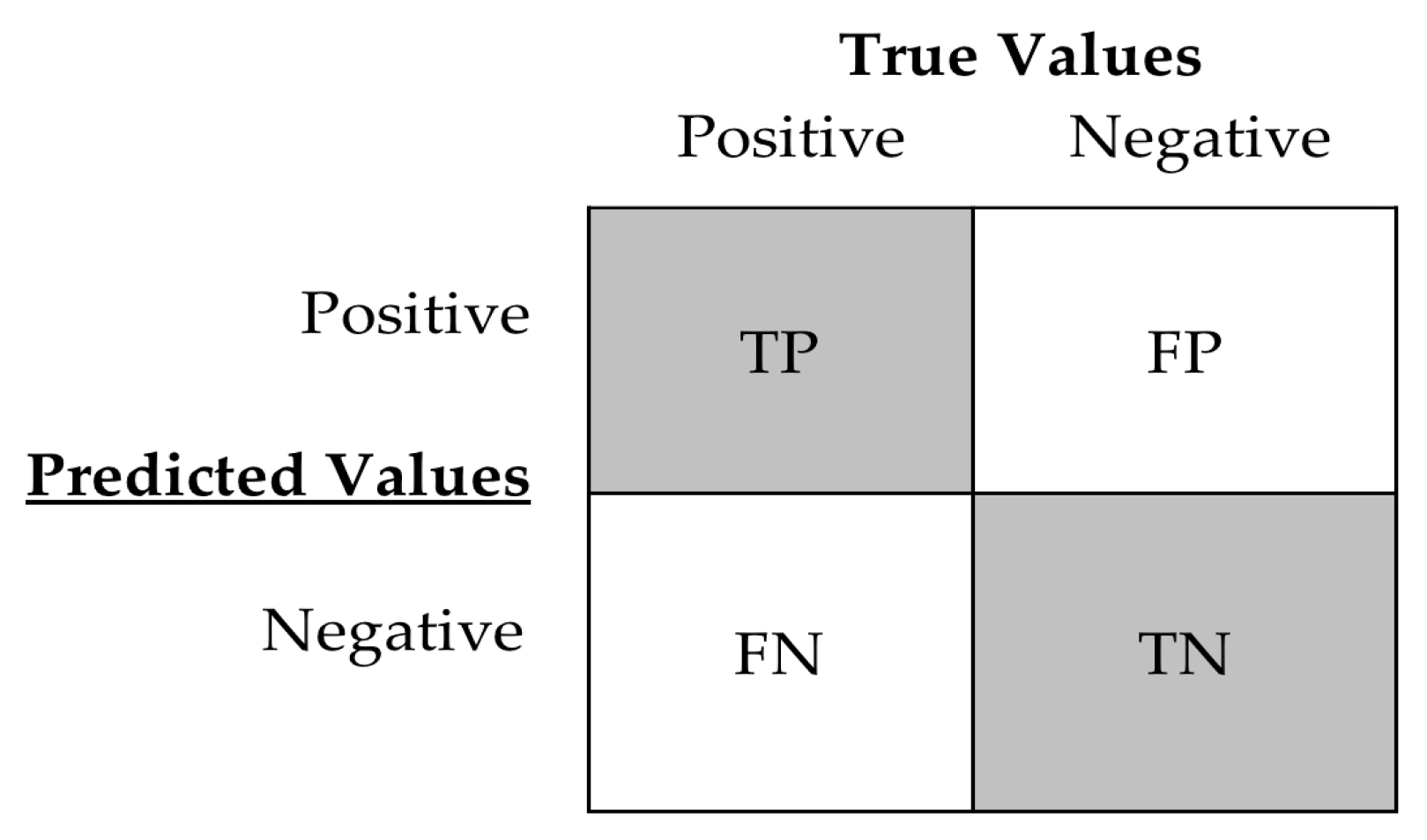

4.3. Performance

4.4. Current Challenges and Future Perspectives

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| Study | Author | Year | Dataset | Algorithms/Methods |
|---|---|---|---|---|
| S1 | Aphiwongsophon and Chongstitvatana [1] | 2018 | Twitter API | |
| S2 | Krishna and Kumar [2] | 2021 | Kaggle | |
| S3 | Ni et al. [3] | 2021 | Open-source FakeNewsNet; PolitiFact and GossipCop | |
| S4 | Singh et al. [4] | 2023 | Kaggle | |
| S5 | Jiang et al. [5] | 2021 | ISOT; KDnugget | |
| S6 | Gereme et al. [6] | 2021 | GPAC; ETH_FAKE; AMFTWE | |
| S7 | Pardamean and Pardede [7] | 2021 | Kaggle | |
| S8 | Kaliyar et al. [8] | 2019 | Multi-class | |
| S9 | Nagoudi et al. [25] | 2020 | Arabic TreeBank; AraNews | |
| S10 | Hamed et al. [26] | 2023 | Fakeddit news | |
| S11 | Verma et al. [27] | 2021 | WELFake articles | |
| S12 | Ivancova et al. [28] | 2021 | Articles from Slovak websites | |
| S13 | Albahr and Albahar [9] | 2020 | LIAR | |
| S14 | Goldani et al. [10] | 2021 | ISOT; LIAR | |
| S15 | Wang et al. [11] | 2021 | LUN (English); SLN (English); Weibo (Chinese); RCED (Chinese) | |
| S16 | Ozbay and Alatas [12] | 2019 | BuzzFeed political news; Random political news; LIAR | |
| S17 | Birunda and Devi [13] | 2021 | Kaggle | |
| S18 | Mugdha et al. [14] | 2020 | Bengali news | |
| S19 | Al-Ahmad et al. [15] | 2021 | Koirala | |
| S20 | Jardaneh et al. [16] | 2019 | Twitter API | |
| S21 | Tiwari and Jain [22] | 2024 | Articles | |
| S22 | Rampurkar and Thirupurasundari [23] | 2024 | ISOT | |
| S23 | Mouratidis et al. [33] | 2025 | | |
| S24 | Subramanian et al. [29] | 2025 | | |
| S25 | Jingyuan et al. [30] | 2025 | | |
| S26 | Al-Tarawneh et al. [34] | 2024 | | |
| S27 | Shen et al. [35] | 2025 | | |
| S28 | Tan and Bakir [31] | 2025 | | |
| S29 | Murti et al. [24] | 2025 | | |
| S30 | Alsuwat and Alsuwat [32] | 2025 | | |

| Study | Features/Attributes | Language |
|---|---|---|
| S1 | | Thai |
| S2 | | English |
| S3 | | English |
| S4 | | English |
| S5 | | English |
| S6 | | Amharic (African) |
| S7 | | English |
| S8 | | English |
| S9 | | Arabic |
| S10 | | English |
| S11 | | English |
| S12 | | Slovak |
| S13 | | English |
| S14 | | English |
| S15 | | English + Chinese |
| S16 | | English |
| S17 | | English |
| S18 | | Bengali |
| S19 | | English |
| S20 | | Arabic |
| S21 | | English |
| S22 | | English |
| S23 | | English |
| S24 | | Malayalam |
| S25 | | English |
| S26 | | |
| S27 | | English |
| S28 | | English |
| S29 | | English |
| S30 | | English |

| Study | Model | Performance |
|---|---|---|
| S1 | | |
| S2 | | |
| S3 | | |
| S4 | | |
| S5 | | |
| S6 | | |
| S7 | | |
| S8 | | |
| S9 | | |
| S10 | | |
| S11 | | |
| S12 | | |
| S13 | | |
| S14 | | |
| S15 | | |
| S16 | | |
| S17 | | |
| S18 | | |
| S19 | | |
| S20 | | |
| S21 | | |
| S22 | | |
| S23 | | |
| S24 | | |
| S25 | | |
| S26 | | |
| S27 | | |
| S28 | | |
| S29 | | |
| S30 | | |

References

- [1] Aphiwongsophon, S.; Chongstitvatana, P. Detecting Fake News with Machine Learning Method. In Proceedings of the 2018 15th International Conference on Electrical Engineering/Electronics, Computer, Telecommunications and Information Technology (ECTI-CON), Chiang Rai, Thailand, 18–21 July 2018; pp. 528–531.

- [2] Krishna, I.; Kumar, S. Fake News Detection using Naïve Bayes Classifier. Int. J. Creat. Res. Thought (IJCRT) 2021, 9, e757–e761. Available online: https://ijcrt.org/papers/IJCRT2106550.pdf (accessed on 26 May 2025).

- [3] Ni, B.; Guo, Z.; Li, J.; Jiang, M. Improving Generalizability of Fake News Detection Methods using Propensity Score Matching. arXiv 2020, arXiv:2002.00838.

- [4] Singh, D.; Khan, A.H.; Meena, S. Fake News Detection Using Ensemble Learning Models. In Proceedings of the Data Analytics and Management. ICDAM 2023; Lecture Notes in Networks and Systems. Swaroop, A., Polkowski, Z., Correia, S.D., Virdee, B., Eds.; Springer: Berlin/Heidelberg, Germany, 2023; Volume 78, pp. 55–63.

- [5] Jiang, T.; Li, J.P.; Haq, A.U.; Saboor, A.; Ali, A. A novel stacking approach for accurate detection of fake news. IEEE Access 2021, 9, 22626–22639.

- [6] Gereme, F.; Zhu, W.; Ayall, T.; Alemu, D. Combating fake news in “low-resource” languages: Amharic fake news detection accompanied by resource crafting. Information 2021, 12, 20.

- [7] Pardamean, A.; Pardede, H.F. Tuned bidirectional encoder representations from transformers for fake news detection. Indones. J. Electr. Eng. Comput. Sci. 2021, 22, 1667–1671.

- [8] Kaliyar, R.K.; Goswami, A.; Narang, P. Multiclass Fake News Detection using Ensemble Machine Learning. In Proceedings of the 2019 IEEE 9th International Conference on Advanced Computing (IACC), Tiruchirappalli, India, 13–14 December 2019; pp. 103–107.

- [9] Albahr, A.; Albahar, M. An Empirical Comparison of Fake News Detection using different Machine Learning Algorithms. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 146–152.

- [10] Goldani, M.H.; Momtazi, S.; Safabakhsh, R. Detecting fake news with capsule neural networks. Appl. Soft Comput. 2021, 101, 106991.

- [11] Wang, Y.; Wang, L.; Yang, Y.; Lian, T. Sem-Seq4FD: Integrating global semantic relationship and local sequential order to enhance text representation for fake news detection. Expert Syst. Appl. 2021, 166, 114090.

- [12] Ozbay, F.A.; Alatas, B. A novel approach for detection of fake news on social media using metaheuristic optimization algorithms. Elektron. Ir. Elektrotechnika 2019, 25, 62–67.

- [13] Birunda, S.S.; Devi, R.K. A Novel Score-Based Multi-Source Fake News Detection using Gradient Boosting Algorithm. In Proceedings of the 2021 International Conference on Artificial Intelligence and Smart Systems (ICAIS), Coimbatore, India, 25–27 March 2021; pp. 406–414.

- [14] Mugdha, S.B.S.; Ferdous, S.M.; Fahmin, A. Evaluating machine learning algorithms for Bengali fake news detection. In Proceedings of the 23rd International Conference on Computer and Information Technology (ICCIT), Dhaka, Bangladesh, 19–21 December 2020; pp. 1–6.

- [15] Al-Ahmad, B.; Al-Zoubi, A.M.; Abu Khurma, R.; Aljarah, I. An evolutionary fake news detection method for COVID-19 pandemic information. Symmetry 2021, 13, 1091.

- [16] Jardaneh, G.; Abdelhaq, H.; Buzz, M.; Johnson, D. Classifying Arabic tweets based on credibility using content and user features. In Proceedings of the 2019 IEEE Jordan International Joint Conference on Electrical Engineering and Information Technology (JEEIT), Amman, Jordan, 9–11 April 2019; pp. 596–601.

- [17] Alshuwaier, F.; Areshey, A.; Poon, J. Applications and Enhancement of Document-Based Sentiment Analysis in Deep learning Methods: Systematic Literature Review. Intell. Syst. Appl. 2022, 15, 200090.

- [18] Battal, B.; Yıldırım, B.; Dinçaslan, Ö.F.; Cicek, G. Fake News Detection with Machine Learning Algorithms. Celal Bayar Univ. J. Sci. 2024, 20, 65–83.

- [19] Kitchenham, B.; Brereton, O.; Budgen, D.; Turner, M.; Bailey, J.; Linkman, S. Systematic Literature Reviews in Software Engineering-A Systematic Literature Review; Elsevier: Amsterdam, The Netherlands, 2009; Volume 51, pp. 7–15.

- [20] Do, H.H.; Prasad, P.; Maag, A.; Alsadoon, A. Deep learning for aspect-based sentiment analysis: A comparative review. Expert Syst. Appl. 2019, 118, 272–299.

- [21] Toyer, S.; Thiebaux, S.; Trevizan, F.; Xie, L. Asnets: Deep learning for generalised planning. J. Artif. Intell. Res. (JAIR) 2020, 68, 1–68.

- [22] Tiwari, S.; Jain, S. Fake News Detection Using Machine Learning Algorithms. In Proceedings of the KILBY 100 7th International Conference on Computing Sciences 2023 (ICCS 2023), Phagwara, India, 5 May 2024.

- [23] Rampurkar, M.V.; Thirupurasundari, D.D. An Approach towards Fake News Detection using Machine Learning Techniques. Int. J. Intell. Syst. Appl. Eng. 2024, 12, 2868–2874.

- [24] Murti, H.; Sulastri, S.; Santoso, D.B.; Diartono, D.A.; Nugroho, K. Design of Intelligent Model for Text-Based Fake News Detection Using K-Nearest Neighbor Method. Sinkron 2025, 9, 1–7.

- [25] Nagoudi, E.M.; Elmadany, A.; Abdul-Mageed, M.; Alhindi, T.; Cavusoglu, H. Machine Generation and Detection of Arabic Manipulated and Fake News. In Workshop on Arabic Natural Language Processing; Association for Computational Linguistics: Stroudsburg, PA, USA, 2020.

- [26] Hamed, S.K.; Ab Aziz, M.J.; Yaakub, M.R. Fake News Detection Model on Social Media by Leveraging Sentiment Analysis of News Content and Emotion Analysis of Users’ Comments. Sensors 2023, 23, 1748.

- [27] Verma, P.K.; Agrawal, P.; Amorim, I.; Prodan, R. WELFake: Word Embedding Over Linguistic Features for Fake News Detection. IEEE Trans. Comput. Soc. Syst. 2021, 8, 881–893.

- [28] Ivancova, K.; Sarnovsky, M.; Krešňáková, V. Fake news detection in Slovak language using deep learning techniques. In Proceedings of the SAMI 2021, IEEE 19th World Symposium on Applied Machine Intelligence and Informatics, Herl’any, Slovakia, 21–23 January 2021; pp. 000255–000260.

- [29] Subramanian, M.; Premjith, B.; Shanmugavadivel, K.; Pandiyan, S.; Palani, B.; Chakravarthi, B. Overview of the Shared Task on Fake News Detection in Dravidian Languages-DravidianLangTech@NAACL 2025. In Proceedings of the Fifth Workshop on Speech, Vision, and Language Technologies for Dravidian Languages, Acoma, The Albuquerque Convention Center, Albuquerque, NM, USA, 3 May 2025; Association for Computational Linguistics (ACL): Stroudsburg, PA, USA, 2025; pp. 759–767.

- [30] Jingyuan, Y.; Zeqiu, X.; Tianyi, H.; Peiyang, Y. Challenges and Innovations in LLM-Powered Fake News Detection: A Synthesis of Approaches and Future Directions. Comput. Lang. 2025, 87–93.

- [31] Tan, M.; Bakır, H. Fake News Detection Using BERT and Bi-LSTM with Grid Search Hyperparameter Optimization. Bilişim Teknolojileri Dergisi 2025, 18, 11–28.

- [32] Alsuwat, E.; Alsuwat, H. An improved multi-modal framework for fake news detection using NLP and Bi-LSTM. J. Supercomput. 2025, 81, 177.

- [33] Mouratidis, D.; Kanavos, A.; Kermanidis, K. From Misinformation to Insight: Machine Learning Strategies for Fake News Detection. Information 2025, 16, 189.

- [34] Al-Tarawneh, M.A.B.; Al-Irr, O.; Al-Maaitah, K.S.; Kanj, H.; Aly, W.H.F. Enhancing Fake News Detection with Word Embedding: A Machine Learning and Deep Learning Approach. Computers 2024, 13, 239.

- [35] Shen, L.; Long, Y.; Cai, X.; Razzak, I.; Chen, G.; Liu, K.; Jameel, S. GAMED: Knowledge Adaptive Multi-Experts Decoupling for Multimodal Fake News Detection. In Proceedings of the Eighteenth ACM International Conference on Web Search and Data Mining (WSDM ’25), Hannover, Germany, 10–14 March 2025; pp. 586–595.

- [36] Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- [37] Aizawa, A. An information-theoretic perspective of tf–idf measures. Inf. Process. Manag. 2003, 39, 45–65.

- [38] Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient Estimation of Word Representations in Vector Space. arXiv 2013, arXiv:1301.3781.

- [39] Confusion Matrix. Available online: https://h2o.ai/wiki/confusion-matrix/ (accessed on 26 May 2025).

| Category | Machine Learning | | | |
|---|---|---|---|---|
| Study | Algorithms/Methods | Dataset | Features/Attributes | Performance |
| S1 | | | | |
| S2 | | | | |
| S3 | | | | |
| S8 | | | | |
| S13 | | | | |
| S14 | | | | |
| S17 | | | | |
| S18 | | | | |
| S20 | | | | |
| S21 | | | | |
| S4 | | | | |
| S22 | | | | |
| S29 | | | | |

| Category | Deep Learning | | | |
|---|---|---|---|---|
| Study | Algorithms/Methods | Dataset | Features/Attributes | Performance |
| S12 | | | | |
| S15 | | | | |
| S24 | | | | |
| S25 | | | | |
| S30 DL | | | | |
| S6 | | | | |
| S9 | | | | |
| S10 | | | | |
| S11 | | | | |
| S28 DL | | | | |

| Category | Both ML and DL | | | |
|---|---|---|---|---|
| Study | Algorithms/Methods | Dataset | Features/Attributes | Performance |
| S26 | | | | |
| S27 | | | | |
| S5 | | | | |
| S7 | | | | |
| S23 | | | | |

| Category | Optimization Techniques | | | |
|---|---|---|---|---|
| Study | Algorithms/Methods | Dataset | Features/Attributes | Performance |
| S16 | | | | |
| S19 | | | | |

| Category | Twitter/X API | | | |
|---|---|---|---|---|
| Study | Dataset | Algorithms/Methods | Features/Attributes | Performance |
| S1 | | | | |
| S20 | | | | |
| S28 | | | | |
| S26 | | | | |

| Category | Kaggle | | | |
|---|---|---|---|---|
| Study | Dataset | Algorithms/Methods | Features/Attributes | Performance |
| S17 | | | | |
| S2 | | | | |
| S7 | | | | |
| S23 | | | | |
| S4 | | | | |
| S3 | | | | |
| S29 | | | | |
| S25 | | | | |

| Category | LIAR and ISOT | | | |
|---|---|---|---|---|
| Study | Dataset | Algorithms/Methods | Features/Attributes | Performance |
| S13 ML | | | | |
| S14 | | | | |
| S22 | | | | |
| S30 | | | | |
| S5 | | | | |
| S16 | | | | |

| Category | Different Datasets | | | |
|---|---|---|---|---|
| Study | Dataset | Algorithms/Methods | Features/Attributes | Performance |
| S18 | | | | |
| S19 | | | | |
| | | | | |
| S12 | | | | |
| S15 | | | | |
| S24 | | | | |
| S6 | | | | |
| S8 | | | | |
| S9 | | | | |
| S10 | | | | |
| S11 | | | | |
| S21 | | | | |
| S27 | | | | |

| Study | Gap |
|---|---|
| S1 | The findings have limited applicability to real-life news. The authors recommend widening data collection and applying the algorithm more broadly in future work. |
| S2 | The gap can be bridged by using more training data; in machine learning problems, more data often significantly improves the efficiency of the algorithm. |
| S3 | As the researchers note, the PSM model only considers biases resulting from observed variables and does not consider unobserved variables. |
| S4 | When the AdaBoost algorithm is run for too many iterations, the model overfits the training data. |
| S5 | Decision trees, support vector machines, logistic regression, RNN, GRU, and LSTM performed poorly on small datasets. |
| S6 | Alternative word embedding algorithms such as BERT (Bidirectional Encoder Representations from Transformers) may train word embeddings better than AMFTWE, but BERT requires a large amount of data, and creating an Amharic fake news dataset and providing transcripts will be a significant challenge. |
| S7 | BERT is computationally expensive, so its computational load needs to be reduced. |
| S8 | The accuracy of classifying fake news into multiple classes is not high, reaching 86%; more training is needed. |
| S9 | The model is applicable to only one language and needs to be extended to languages other than Arabic. The model also has difficulty processing texts. |
| S10 | The model has difficulty dealing with an unbalanced dataset. |
| S11 | The WELFake model does not take knowledge graph factors into account. |
| S12 | The model needs to be improved by collecting more and larger datasets; researchers need to create more datasets based on specific topics. |
| S13 | The gap lies in the dataset and class set. The model requires a number of fine-tuning operations on different datasets during testing. |
| S14 | Accuracy in the location and pose of objects in the image is lost when the image is not fully classified. |
| S15 | Most text structure information still needs to be extracted, and text modeling methods require further improvements in accuracy. |
| S16 | Most of the supervised algorithms applied in fake news detection are black-box approaches. |
| S17 | To increase accuracy, other deep learning techniques must be used, with a focus on expanding datasets to include more articles. |
| S18 | The dataset needs to be enlarged so that more features can be extracted. |
| S19 | Achieving high performance requires larger datasets. |
| S20 | The model needs to leverage Twitter responses to improve its overall precision. |
| S21 | The model needs more datasets to improve its accuracy. |
| S22 | The model must include complex correlation management to increase its accuracy. |
| S23 | The model can be further improved by adding labels and making use of transfer learning techniques. |
| S24 | The model’s NLP components need improvement to enhance accuracy. |
| S25 | Current models cannot adapt to the dynamic trends of social media. Some models may provide inaccurate information, and they are difficult to scale to cover all types of fake news. |
| S26 | The choice of word embedding technique significantly affects the model’s accuracy in detecting fake news. |
| S27 | The model does not cover fake news in all media, such as audio or video, which a systematic and comprehensive analysis would require. |
| S28 | A more comprehensive study is needed to strengthen the model’s resilience to fake news on social media. |
| S29 | The model does not include a deep learning algorithm evaluated on different social media datasets to detect fake news. |
| S30 | More datasets need to be added. |

| Study | Names | Institutions | Country | Citations, Access |
|---|---|---|---|---|
| S1 | Supanya Aphiwongsophon | Chulalongkorn Uni. | Thailand | 220, Open Access |
| | Prabhas Chongstitvatana | Chulalongkorn Uni. | Thailand | |
| S2 | I.M.V. Krishna | PVP Siddhartha Ins. | India | N/A, Open Access |
| | Dr. S. Sai Kumar | PVP Siddhartha Ins. | India | |
| S3 | Bo Ni | Uni. of Notre Dame | USA | 17, Open Access |
| | Zhichun Guo | Uni. of Notre Dame | USA | |
| | Jianing Li | Uni. of Notre Dame | USA | |
| | Meng Jiang | Uni. of Notre Dame | USA | |
| S4 | Devanshi Singh | Delhi Tech. Uni. | India | 238, Closed Access |
| | Ahmad Habib Khan | Delhi Tech. Uni. | India | |
| | Shweta Meena | Delhi Tech. Uni. | India | |
| S5 | Tao Jiang | UESTC | China | 210, Open Access |
| | Jian Ping Li | UESTC | China | |
| | Amin Ul Haq | UESTC | China | |
| | Abdus Saboor | UESTC | China | |
| | Amjad Ali | University of Swat | Pakistan | |
| S6 | Fantahun Gereme | UESTC | China | 52, Open Access |
| | William Zhu | UESTC | China | |
| | Tewodros Ayall | UESTC | China | |
| | Dagmawi Alemu | UESTC | China | |
| S7 | Amsal Pardamean | STMIK Nusa Mand. | Indonesia | 12, Open Access |
| | Hilman F. Pardede | Indo. Ins. of Sci. | Indonesia | |
| S8 | Rohit Kumar Kaliyar | Bennett University | India | 75, Closed Access |
| | Anurag Goswami | Bennett University | India | |
| | Pratik Narang | BITS Pilani | India | |
| S9 | El Moatez Billah Nagoudi | The Uni. of Brit. Colu. | Canada | 66, Open Access |
| | AbdelRahim Elmadany | The Uni. of Brit. Colu. | Canada | |
| | Muhammad Abdul-Mageed | The Uni. of Brit. Colu. | Canada | |
| | Tariq Alhindi | Columbia University | USA | |
| | Hasan Cavusoglu | The Uni. of Brit. Colu. | Canada | |
| S10 | Suhaib Kh. Hamed | UKM | Malaysia | 66, Open Access |
| | Mohd Juzaiddin Ab Aziz | UKM | Malaysia | |
| | Mohd Ridzwan Yaakub | UKM | Malaysia | |
| S11 | Pawan Kumar Verma | GLA University | India | 270, Open Access |
| | Prateek Agrawal | Lovely Prof. Uni. | Austria | |
| | Ivone Amorim | University of Porto | Portugal | |
| | Radu Prodan | Uni. of Klagenfurt | Austria | |
| S12 | Klaudia Ivancová | Tech. Uni. Košice | Slovakia | 24, Closed Access |
| | Martin Sarnovský | Tech. Uni. Košice | Slovakia | |
| | Viera Maslej-Krešňáková | Tech. Uni. Košice | Slovakia | |
| S13 | Abdulaziz Albahr | KSAUHS | Saudi Arabia | 47, Closed Access |
| | Marwan Albahar | Umm Al Qura Uni. | Saudi Arabia | |
| S14 | Mohammad Hadi Goldani | Amirkabir Uni. of Tec. | Iran | 170, Closed Access |
| | Saeedeh Momtazi | Amirkabir Uni. of Tec. | Iran | |
| | Reza Safabakhsh | Amirkabir Uni. of Tec. | Iran | |
| S15 | Yuhang Wang | Taiyuan Uni. of Tec. | China | 73, Closed Access |
| | Li Wang | Taiyuan Uni. of Tec. | China | |
| | Yanjie Yang | Taiyuan Uni. of Tec. | China | |
| | Tao Lian | Taiyuan Uni. of Tec. | China | |
| S16 | Feyza Altunbey Ozbay | Firat University | Turkey | 73, Closed Access |
| | Bilal Alatas | Firat University | Turkey | |
| S17 | S. Selva Birunda | Kalasalingam ARE | India | 31, Closed Access |
| | Dr. R. Kanniga Devi | Kalasalingam ARE | India | |
| S18 | Shafayat Shabbir Mugdha | United Intern. Uni. | Bangladesh | 47, Closed Access |
| | Saayeda Muntaha Ferdous | United Intern. Uni. | | |
| | Ahmed Fahmin | United Intern. Uni. | | |
| S19 | Bilal Al-Ahmad | The Uni. of Jordan | Jordan | 108, Open Access |
| | Ala’ M. Al-Zoubi | The Uni. of Jordan | Jordan | |
| | Ruba Abu Khurma | The Uni. of Jordan | Jordan | |
| | Ibrahim Aljarah | The Uni. of Jordan | Jordan | |
| S20 | Ghaith Jardaneh | An-Najah Nati. Uni. | Palestine | 65, Closed Access |
| | Hamed Abdelhaq | An-Najah Nati. Uni. | Palestine | |
| | Momen Buzz | An-Najah Nati. Uni. | Palestine | |
| | Douglas Johnson | Uni. of Colorado | USA | |
| S21 | Shreya Tiwari | Amity University | India | 100, Open Access |
| | Sarika Jain | Amity University | India | |
| S22 | Mr. Vyankatesh Rampurkar | BIHER | India | 23, Open Access |
| | Dr. Thirupurasundari D.R. | BIHER | India | |
| S23 | Despoina Mouratidis | Ionian University | Greece | 1, Open Access |
| | Andreas Kanavos | Ionian University | Greece | |
| | Katia Kermanidis | Ionian University | Greece | |
| S24 | Malliga Subramanian | Kongu Eng. College | India | 13, Open Access |
| | Premjith B | Amrita School of AI | India | |
| | K. Shanmugavadivel | Kongu Eng. College | India | |
| | Santhiya Pandiyan | Kongu Eng. College | India | |
| | Balasubramanian Palani | Indian Inst. of IT. | India | |
| | Bharathi Raja Chakravarthi | Uni. of Galway | Ireland | |
| S25 | Jingyuan Yi | Carnegie Mellon Uni. | USA | 27, Open Access |
| | Zeqiu Xu | Carnegie Mellon Uni. | USA | |
| | Tianyi Huang | Uni. of California | USA | |
| | Peiyang Yu | Carnegie Mellon Uni. | USA | |
| S26 | Mutaz A. B. Al-Tarawneh | Am. Uni. of the ME | Kuwait | 8, Open Access |
| | Omar Al-irr | Am. Uni. of the ME | Kuwait | |
| | Khaled S. Al-Maaitah | Mutah University | Jordan | |
| | Hassan Kanj | Am. Uni. of the ME | Kuwait | |
| | Wael Hosny Fouad Aly | Am. Uni. of the ME | Kuwait | |
| S27 | Lingzhi Shen | Uni. of Southampt. | UK | 7, Open Access |
| | Yunfei Long | University of Essex | UK | |
| | Xiaohao Cai | Uni. of Southampt. | UK | |
| | Imran Razzak | M. bin Z. Uni. of AI | UAE | |
| | Guanming Chen | Uni. of Southampt. | UK | |
| | Kang Liu | Uni. of Southampt. | UK | |
| | Shoaib Jameel | Uni. of Southampt. | UK | |
| S28 | Muhammet TAN | Sivas Uni. of S.&T. | Turkey | N/A, Open Access |
| | Halit BAKIR | Sivas Uni. of S.&T. | Turkey | |
| S29 | Hari Murti | Uni. Stikubank | Indonesia | 1, Open Access |
| | Sulastri | Uni. Stikubank | Indonesia | |
| | Dwi Budi Santoso | Uni. Stikubank | Indonesia | |
| | Dwi Agus Diartono | Uni. Stikubank | Indonesia | |
| | Kristiawan Nugroho | Uni. Stikubank | Indonesia | |
| S30 | Emad Alsuwat | Taif University | Saudi Arabia | 674, Closed Access |
| | Hatim Alsuwat | Umm Al-Qura Uni. | Saudi Arabia | |

| Study | Study Type | QA1 | QA2 | QA3 | Total Score |
|---|---|---|---|---|---|
| S1 | Experiment | P | P | Y | 2 |
| S2 | Experiment | P | P | LP | 1.25 |
| S3 | Experiment | P | Y | LP | 1.75 |
| S4 | Experiment | P | Y | Y | 2.5 |
| S5 | Experiment | Y | P | Y | 2.5 |
| S6 | Experiment | P | P | Y | 2 |
| S7 | Experiment | Y | Y | P | 2.5 |
| S8 | Experiment | P | P | LP | 1.25 |
| S9 | Experiment | P | Y | LP | 1.75 |
| S10 | Experiment | P | P | P | 1.5 |
| S11 | Experiment | P | Y | P | 2 |
| S12 | Experiment | Y | P | LP | 1.75 |
| S13 | Experiment | P | P | Y | 2 |
| S14 | Experiment | Y | Y | Y | 3 |
| S15 | Experiment | P | Y | LP | 1.75 |
| S16 | Experiment | P | Y | P | 2 |
| S17 | Experiment | P | P | Y | 2 |
| S18 | Experiment | P | P | LP | 1.25 |
| S19 | Experiment | P | P | LP | 1.25 |
| S20 | Experiment | P | Y | LP | 1.75 |
| S21 | Experiment | P | P | Y | 2 |
| S22 | Experiment | P | Y | Y | 2.5 |
| S23 | Experiment | Y | Y | Y | 3 |
| S24 | Experiment | Y | Y | LP | 2.25 |
| S25 | Experiment | Y | Y | Y | 3 |
| S26 | Experiment | Y | Y | Y | 3 |
| S27 | Experiment | P | P | LP | 1.25 |
| S28 | Experiment | P | P | Y | 2 |
| S29 | Experiment | Y | P | Y | 2.5 |
| S30 | Experiment | Y | P | P | 2 |
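
The Total Score column is consistent with a simple additive weighting of the three labels. The sketch below assumes Y = 1, P = 0.5, and LP = 0.25; these weights are inferred from the rows of the table and are not stated in the text. The weight N = 0 and the name `total_score` are further assumptions made only for illustration.

```python
# Label weights inferred from the table rows (an assumption, not stated in the text).
# N = 0.0 is a guess: no row in the table uses the N label.
WEIGHTS = {"Y": 1.0, "P": 0.5, "LP": 0.25, "N": 0.0}


def total_score(qa1: str, qa2: str, qa3: str) -> float:
    """Sum the weights of the three quality-assessment labels."""
    return sum(WEIGHTS[label] for label in (qa1, qa2, qa3))


print(total_score("P", "P", "Y"))   # S1  -> 2.0
print(total_score("Y", "Y", "LP"))  # S24 -> 2.25
```

Under these weights every row of the table reproduces its listed total, for example S2 (P, P, LP) gives 0.5 + 0.5 + 0.25 = 1.25.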

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alshuwaier, F.A.; Alsulaiman, F.A. Fake News Detection Using Machine Learning and Deep Learning Algorithms: A Comprehensive Review and Future Perspectives. Computers 2025, 14, 394. https://doi.org/10.3390/computers14090394
